Submitted: 29 August 2025

Posted: 02 September 2025


Abstract

Keywords:

Introduction

The Foundational Question: One True Effect?

- Under a fixed-effect view, we would insist that the true temperature is always 37.0 °C. Any deviation measured in practice—36.8 °C, 37.3 °C—would be dismissed as random error around this single correct value.

- Under a random-effects view, we recognize that normal body temperature is not identical for everyone. Some individuals average 36.5 °C, while others average 37.2 °C, and variations also occur with time of day, measurement method, or physiological state. There is still a meaningful average around 37 °C, but it represents a summary of genuine diversity rather than an immutable truth.

Fixed-Effect: Clarity with a Cost

Random-Effects: Embracing Variability

Methodological Choices within the Random-Effects Framework

- DerSimonian–Laird (DL): the classic method, fast and simple, but it tends to underestimate the between-study variance (τ², i.e., how much the true effects differ across studies) when there are few studies. This underestimation can produce overly narrow confidence intervals and thereby increase the risk of false-positive findings, especially in meta-analyses with a small number of studies [4]. The method persists largely because it has long been the default in many software packages.

- Paule–Mandel (PM): another robust option, often recommended today as an alternative to DL, particularly when heterogeneity is moderate. It has been endorsed in the Cochrane Handbook and supported by comparative evaluations [6].

- Wald CI: the traditional, straightforward approach; it often produces intervals that look reassuringly precise but can be too narrow, especially when there are few studies or some heterogeneity [2].

- Hartung–Knapp–Sidik–Jonkman (HKSJ): a modern method that produces wider and generally more reliable intervals. It is now considered the standard when heterogeneity is present. With very few studies, it can sometimes yield excessively wide (over-conservative) intervals; however, it remains the better option overall, as cautious inference is safer than overconfident conclusions [7,8].

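- Modified or truncated HKSJ (mHK): a refinement of HKSJ that truncates the HKSJ variance scaling factor at 1, so that the resulting interval is never narrower than the standard (Wald-type) random-effects interval. Plain HKSJ can occasionally produce such counterintuitively narrow intervals when the observed heterogeneity is lower than chance alone would predict, typically when the number of studies is very small (a common scenario in clinical research) or when the between-study variance is estimated close to zero [9].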
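
The estimators and interval methods listed above can be sketched in standard-library Python. This is a minimal illustration under stated assumptions, not a validated implementation: the function names (`t_quantile`, `generalized_q`, `tau2_dl`, `tau2_pm`, `pooled_cis`) are hypothetical, the Student-t quantile is computed numerically because the standard library lacks one, and the effect sizes and variances in the usage lines are invented. Published analyses should rely on dedicated meta-analysis software.

```python
import math
from statistics import NormalDist


def t_quantile(p, df, n=1000):
    """Upper Student-t quantile (p > 0.5), stdlib only: bisection on a Simpson-rule CDF."""
    c = math.exp(math.lgamma((df + 1) / 2) - math.lgamma(df / 2)) / math.sqrt(df * math.pi)

    def pdf(x):
        return c * (1 + x * x / df) ** (-(df + 1) / 2)

    def cdf(x):
        h = x / n  # n must be even for Simpson's rule
        s = pdf(0.0) + pdf(x) + sum((4 if i % 2 else 2) * pdf(i * h) for i in range(1, n))
        return 0.5 + s * h / 3  # symmetric density: P(T <= x) = 0.5 + integral from 0 to x

    lo, hi = 0.0, 1000.0
    for _ in range(60):
        mid = (lo + hi) / 2
        if cdf(mid) < p:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def generalized_q(y, v, tau2):
    """Weighted squared deviations from the pooled mean, weights 1 / (v_i + tau2)."""
    w = [1 / (vi + tau2) for vi in v]
    mu = sum(wi * yi for wi, yi in zip(w, y)) / sum(w)
    return sum(wi * (yi - mu) ** 2 for wi, yi in zip(w, y))


def tau2_dl(y, v):
    """DerSimonian-Laird moment estimator of tau^2, truncated at zero."""
    w = [1 / vi for vi in v]
    c = sum(w) - sum(wi * wi for wi in w) / sum(w)
    return max(0.0, (generalized_q(y, v, 0.0) - (len(y) - 1)) / c)


def tau2_pm(y, v, tol=1e-10):
    """Paule-Mandel: the tau^2 at which the generalized Q equals k - 1 (bisection)."""
    df = len(y) - 1
    if generalized_q(y, v, 0.0) <= df:
        return 0.0
    lo, hi = 0.0, 1.0
    while generalized_q(y, v, hi) > df:  # Q decreases as tau^2 grows; widen until bracketed
        hi *= 2
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if generalized_q(y, v, mid) > df:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def pooled_cis(y, v, tau2, level=0.95):
    """Random-effects mean with Wald, HKSJ and modified (truncated) HKSJ intervals."""
    k = len(y)
    w = [1 / (vi + tau2) for vi in v]
    mu = sum(wi * yi for wi, yi in zip(w, y)) / sum(w)
    se = math.sqrt(1 / sum(w))
    z = NormalDist().inv_cdf(0.5 + level / 2)
    t = t_quantile(0.5 + level / 2, k - 1)
    q = generalized_q(y, v, tau2) / (k - 1)  # HKSJ variance scaling factor
    se_hk = se * math.sqrt(q)
    se_mhk = se * math.sqrt(max(1.0, q))  # mHK: the SE never drops below the Wald SE
    return {
        "mu": mu,
        "wald": (mu - z * se, mu + z * se),
        "hksj": (mu - t * se_hk, mu + t * se_hk),
        "mhk": (mu - t * se_mhk, mu + t * se_mhk),
    }


# Hypothetical data: five log odds ratios and their within-study variances
y = [0.10, 0.35, 0.62, -0.05, 0.48]
v = [0.04, 0.09, 0.06, 0.05, 0.12]
for label, tau2 in (("DL", tau2_dl(y, v)), ("PM", tau2_pm(y, v))):
    print(label, round(tau2, 4), pooled_cis(y, v, tau2))

# Hypothetical near-identical estimates: q falls far below 1
print(pooled_cis([0.30, 0.31, 0.29, 0.30, 0.32], v, 0.0))
```

In the last usage line the hypothetical studies agree almost perfectly, so the HKSJ scaling factor falls far below 1 and the plain HKSJ interval becomes narrower than the Wald interval; the `max(1, q)` truncation in mHK is what prevents this.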

Heterogeneity as the Compass for Model Choice

What Heterogeneity Means

Clinical vs. Statistical Heterogeneity

- Clinical heterogeneity: This is the real-world variability we expect when studies are not identical in who they include, what they do, or where they are done. Patients may differ in age, comorbidities, or disease severity; interventions may vary in dose, surgical technique, or how strictly protocols are followed; and settings may range from highly specialized hospitals to resource-limited clinics. These differences are not errors but part of normal clinical diversity—and they often explain why study results do not all look the same.

- Statistical heterogeneity: this is heterogeneity “put into numbers.” It describes how much the results of the included studies differ once we account for normal random fluctuations due to sample size. Every study will vary slightly, simply due to chance—this is known as sampling error. However, when the differences are greater than what chance alone would explain, we refer to it as statistical heterogeneity. Indices like Q, I², and τ² are simply ways of expressing that variability in numbers.
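
The distinction between sampling error and statistical heterogeneity can be made concrete with a small simulation. The sketch below is hypothetical throughout (the helpers `cochran_q` and `mean_q`, the true mean of 0.5, the common standard error of 0.2 and the number of studies are all invented for illustration). When every study shares one true effect, Cochran's Q averages about k − 1, the value expected from chance alone; when the true effects genuinely differ (τ > 0), Q is pushed upward. For equal within-study variances v, its expected value is (k − 1)(1 + τ²/v).

```python
import random
from statistics import fmean


def cochran_q(y, v):
    """Cochran's Q: inverse-variance weighted squared deviations from the pooled mean."""
    w = [1 / vi for vi in v]
    mu = sum(wi * yi for wi, yi in zip(w, y)) / sum(w)
    return sum(wi * (yi - mu) ** 2 for wi, yi in zip(w, y))


def mean_q(k, se, tau, theta=0.5, reps=4000, seed=1):
    """Average Q over simulated meta-analyses of k studies (hypothetical settings).

    Each study's true effect is drawn from N(theta, tau^2) and its estimate adds
    sampling error from N(0, se^2); tau = 0 means a single common true effect.
    """
    rng = random.Random(seed)
    v = [se * se] * k
    qs = []
    for _ in range(reps):
        y = [rng.gauss(theta, tau) + rng.gauss(0.0, se) for _ in range(k)]
        qs.append(cochran_q(y, v))
    return fmean(qs)


print(round(mean_q(10, 0.2, 0.0), 1))  # sampling error only
print(round(mean_q(10, 0.2, 0.2), 1))  # plus real between-study variation (tau = se)
```

With these settings the homogeneous scenario should average close to 9 and the heterogeneous one close to 18. Any single meta-analysis can nonetheless land far from either mean, which is one reason a non-significant Q is weak evidence of homogeneity.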

Model Choice Should Come First (and I² Should Not Be Used to Make This Choice)

Measuring Statistical Heterogeneity

- Cochran’s Q: a test that asks whether the differences between studies are greater than expected by chance [10]. Its main limitation is that it strongly depends on the number of studies: with few, it often misses real differences; with many, it flags even trivial ones. A non-significant Q should therefore never be taken as proof of homogeneity.

- I²: the percentage of total variation explained by real heterogeneity rather than chance. Values of 25%, 50%, and 75% are often described as low, moderate, and high heterogeneity, though thresholds are arbitrary [11,12]. Moreover, I² itself is only an estimate and carries considerable uncertainty, particularly when the number of studies is small. It is also strongly influenced by the precision of the included studies: meta-analyses with large sample sizes can yield high I² values even when the absolute differences in effects are clinically trivial. These limitations further reinforce why model choice should be made conceptually rather than dictated by I².

- τ² (between-study variance): measures how much the true effects differ across studies. Because it is a variance, it is expressed in the squared units of the effect measure (e.g., squared log risk ratio); its square root, τ, is on the same scale as the effect size (e.g., the log scale for risk ratios) and is usually easier to interpret. A true τ² of 0 means the true effects are identical across studies [2,6], whereas an estimated τ² of 0 indicates only that the data provide no evidence of between-study variance beyond what would be expected by chance, not that heterogeneity is absent. τ² matters because it drives the weights in a random-effects model and is essential for calculating prediction intervals [2,6].
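
The three quantities above can be computed directly from study estimates y_i and within-study variances v_i, using Q = Σ w_i (y_i − ȳ_w)² with w_i = 1/v_i, I² = max(0, (Q − (k − 1))/Q), and the DerSimonian–Laird moment estimator for τ². The sketch below is a minimal illustration: the function name `heterogeneity` and the data are hypothetical. It applies the same invented log risk ratios (RR from 1.00 to about 1.08) twice, once with small trials and once with very large ones, to illustrate the point made above about I² and precision.

```python
import math


def heterogeneity(y, v):
    """Return Cochran's Q, I^2 (as a proportion), DerSimonian-Laird tau^2 and tau."""
    k = len(y)
    w = [1 / vi for vi in v]
    sw = sum(w)
    mu = sum(wi * yi for wi, yi in zip(w, y)) / sw
    q = sum(wi * (yi - mu) ** 2 for wi, yi in zip(w, y))
    i2 = max(0.0, (q - (k - 1)) / q) if q > 0 else 0.0
    c = sw - sum(wi * wi for wi in w) / sw
    tau2 = max(0.0, (q - (k - 1)) / c)
    return {"Q": q, "I2": i2, "tau2": tau2, "tau": math.sqrt(tau2)}


# Hypothetical observed log risk ratios (RR 1.00 to about 1.08)
y = [0.00, 0.02, 0.04, 0.06, 0.08]
small_trials = heterogeneity(y, [0.1 ** 2] * 5)   # SE 0.10 in every study
large_trials = heterogeneity(y, [0.01 ** 2] * 5)  # SE 0.01 in every study
print(small_trials)
print(large_trials)
```

With standard errors of 0.10, Q is 0.4 and both I² and τ² are 0; with standard errors of 0.01, Q becomes 40, I² rises to 90% and τ is about 0.03 on the log scale, even though the spread of estimates is unchanged and arguably clinically trivial.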

Putting it Together

The Guiding Role of Heterogeneity

Prediction Intervals: Looking Beyond Confidence Intervals
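
A prediction interval estimates where the true effect in a new, comparable study or setting is likely to lie, so it adds τ² to the uncertainty of the pooled mean. A commonly used approximate form (described, for example, by Riley et al. [3]) is μ̂ ± t_{k−2} · √(τ̂² + SE(μ̂)²), with a Student-t quantile on k − 2 degrees of freedom. The sketch below implements that formula under stated assumptions: `prediction_interval` and `t_quantile` are hypothetical helper names, the t quantile is computed numerically with the standard library, and the pooled values in the usage line are invented.

```python
import math


def t_quantile(p, df, n=1000):
    """Upper Student-t quantile (p > 0.5) by bisection on a Simpson-rule CDF."""
    c = math.exp(math.lgamma((df + 1) / 2) - math.lgamma(df / 2)) / math.sqrt(df * math.pi)

    def pdf(x):
        return c * (1 + x * x / df) ** (-(df + 1) / 2)

    def cdf(x):
        h = x / n
        s = pdf(0.0) + pdf(x) + sum((4 if i % 2 else 2) * pdf(i * h) for i in range(1, n))
        return 0.5 + s * h / 3

    lo, hi = 0.0, 1000.0
    for _ in range(60):
        mid = (lo + hi) / 2
        if cdf(mid) < p:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def prediction_interval(mu, se_mu, tau2, k, level=0.95):
    """Approximate prediction interval: mu +/- t_{k-2} * sqrt(tau2 + se_mu^2)."""
    if k < 3:
        raise ValueError("a prediction interval needs at least 3 studies")
    t = t_quantile(0.5 + level / 2, k - 2)
    half = t * math.sqrt(tau2 + se_mu ** 2)
    return mu - half, mu + half


# Hypothetical pooled result: log RR -0.20 (SE 0.08), tau^2 = 0.04, 10 studies
lo, hi = prediction_interval(-0.20, 0.08, 0.04, 10)
print(round(lo, 3), round(hi, 3), round(math.exp(lo), 2), round(math.exp(hi), 2))
```

For these hypothetical inputs the 95% confidence interval is roughly −0.36 to −0.04 (RR about 0.70 to 0.96), whereas the prediction interval is roughly −0.70 to 0.30 (RR about 0.50 to 1.35): the average effect lies clearly below 1, yet a new setting could plausibly show no benefit.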

So, Which Model Should I Choose?

Key Principles for Model Choice

What Cochrane Recommends

Practical Guidance for Clinicians

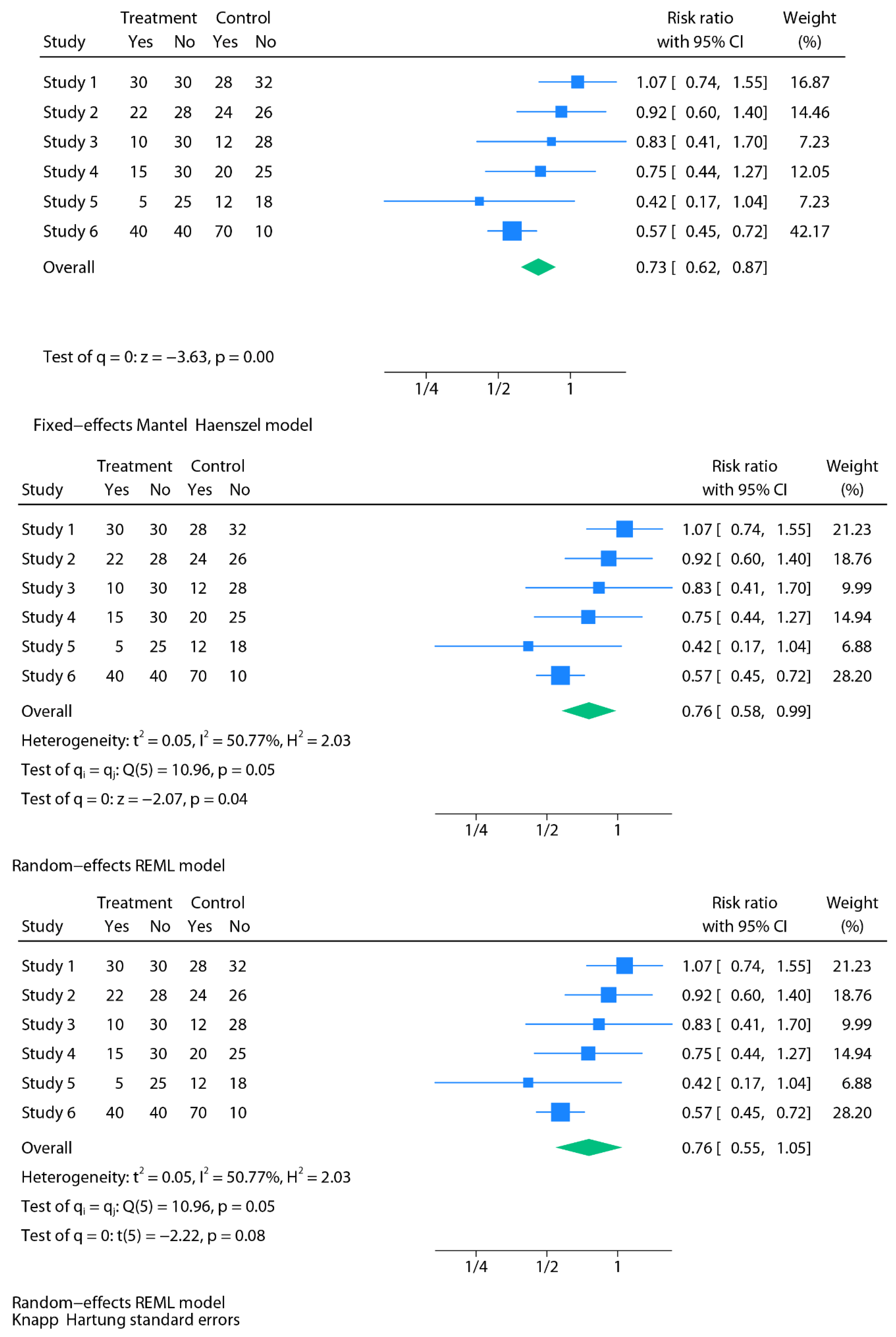

Making it Visual: Fixed vs Random at a Glance

How to Report a Meta-Analysis

Methods

Results

Real-World Case Studies: How Fixed vs Random-Effects Alter Conclusions

A Final Nuance: Diagnostic Test Accuracy Studies

Conclusions

Original work

Ethical Statement

Informed consent

AI Use Disclosure

Data Availability Statement

Conflict of interest

References

1. Riley RD, Gates S, Neilson J, Alfirevic Z. Statistical methods can be improved within Cochrane pregnancy and childbirth reviews. J Clin Epidemiol. 2011 Jun;64(6):608-18. Epub 2010 Dec 13.

2. Higgins JPT, Thomas J, Chandler J, Cumpston M, Li T, Page MJ, Welch VA (eds.). Cochrane Handbook for Systematic Reviews of Interventions. Version 6.5 (updated March 2023). Cochrane, 2023.

3. Riley RD, Higgins JP, Deeks JJ. Interpretation of random effects meta-analyses. BMJ. 2011;342:d549.

4. DerSimonian R, Laird N. Meta-analysis in clinical trials. Control Clin Trials. 1986;7:177–88.

5. Viechtbauer W. Bias and efficiency of meta-analytic variance estimators in the random-effects model. J Educ Behav Stat. 2005;30(3):261-93.

6. Veroniki AA, Jackson D, Viechtbauer W, Bender R, Bowden J, Knapp G, Kuss O, Higgins JP, Langan D, Salanti G. Methods to estimate the between-study variance and its uncertainty in meta-analysis. Res Synth Methods. 2016 Mar;7(1):55-79. Epub 2015 Sep 2. PMID: 26332144; PMCID: PMC4950030.

7. IntHout J, Ioannidis JP, Borm GF. The Hartung-Knapp-Sidik-Jonkman method for random effects meta-analysis is straightforward and considerably outperforms the standard DerSimonian-Laird method. BMC Med Res Methodol. 2014;14:25.

8. Hartung J, Knapp G. A refined method for the meta-analysis of controlled clinical trials with binary outcome. Stat Med. 2001 Dec 30;20(24):3875-89.

9. Röver C, Knapp G, Friede T. Hartung-Knapp-Sidik-Jonkman approach and its modification for random-effects meta-analysis with few studies. BMC Med Res Methodol. 2015 Nov 14;15:99. PMID: 26573817; PMCID: PMC4647507.

10. Cochran WG. The combination of estimates from different experiments. Biometrics. 1954;10:101–29.

11. Higgins JP, Thompson SG. Quantifying heterogeneity in a meta-analysis. Stat Med. 2002;21:1539–58.

12. Higgins JP, Thompson SG, Deeks JJ, Altman DG. Measuring inconsistency in meta-analyses. BMJ. 2003;327:557–60.

13. Arredondo Montero J. Understanding Heterogeneity in Meta-Analysis: A Structured Methodological Tutorial. Preprints 2025, 2025081527.

14. IntHout J, Ioannidis JP, Rovers MM, Goeman JJ. Plea for routinely presenting prediction intervals in meta-analysis. BMJ Open. 2016 Jul 12;6(7):e010247. PMID: 27406637; PMCID: PMC4947751.

15. Nagashima K, Noma H, Furukawa TA. Prediction intervals for random-effects meta-analysis: A confidence distribution approach. Stat Methods Med Res. 2019 Jun;28(6):1689-1702. Epub 2018 May 10. PMID: 29745296.

16. Siemens W, Meerpohl JJ, Rohe MS, Buroh S, Schwarzer G, Becker G. Reevaluation of statistically significant meta-analyses in advanced cancer patients using the Hartung-Knapp method and prediction intervals-A methodological study. Res Synth Methods. 2022 May;13(3):330-341. Epub 2022 Jan 6.

17. Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, Shamseer L, Tetzlaff JM, Akl EA, Brennan SE, Chou R, Glanville J, Grimshaw JM, Hróbjartsson A, Lalu MM, Li T, Loder EW, Mayo-Wilson E, McDonald S, McGuinness LA, Stewart LA, Thomas J, Tricco AC, Welch VA, Whiting P, Moher D. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ. 2021 Mar 29;372:n71. PMCID: PMC8005924.

18. Molina-Madueño M, Rodríguez-Cañamero S, Carmona-Torres JM. Urination Stimulation Techniques for Collecting Clean Urine Samples in Infants Under One Year: Systematic Review and Meta-Analysis. Acta Paediatr. 2025.

19. Arredondo Montero J. Meta-Analytical Choices Matter: How a Significant Result Becomes Non-Significant Under Appropriate Modelling. Acta Paediatr. 2025 Jul 28. Epub ahead of print.

20. Azizoglu M, Perez Bertolez S, Kamci TO, Arslan S, Okur MH, Escolino M, Esposito C, Erdem Sit T, Karakas E, Mutanen A, Muensterer O, Lacher M. Musculoskeletal outcomes following thoracoscopic versus conventional open repair of esophageal atresia: A systematic review and meta-analysis from pediatric surgery meta-analysis (PESMA) study group. J Pediatr Surg. 2025 Jun 27;60(9):162431. Epub ahead of print.

21. Arredondo Montero J. Letter to the editor: Rethinking the use of fixed-effect models in pediatric surgery meta-analyses. J Pediatr Surg. 2025 Aug 8:162509. Epub ahead of print.

22. Schmidt FL, Oh IS, Hayes TL. Fixed- versus random-effects models in meta-analysis: model properties and an empirical comparison of differences in results. Br J Math Stat Psychol. 2009 Feb;62(Pt 1):97-128. Epub 2007 Nov 13.

23. Shuster JJ, Jones LS, Salmon DA. Fixed vs random effects meta-analysis in rare event studies: the rosiglitazone link with myocardial infarction and cardiac death. Stat Med. 2007 Oct 30;26(24):4375-85.

24. Woods KL, Abrams K. The importance of effect mechanism in the design and interpretation of clinical trials: the role of magnesium in acute myocardial infarction. Prog Cardiovasc Dis. 2002 Jan-Feb;44(4):267-74.

25. Deeks JJ, Bossuyt PM, Gatsonis C (eds.). Cochrane Handbook for Systematic Reviews of Diagnostic Test Accuracy. Version 2.0. Cochrane, 2023.

26. Reitsma JB, Glas AS, Rutjes AW, Scholten RJ, Bossuyt PM, Zwinderman AH. Bivariate analysis of sensitivity and specificity produces informative summary measures in diagnostic reviews. J Clin Epidemiol. 2005 Oct;58(10):982-90.

27. Rutter CM, Gatsonis CA. A hierarchical regression approach to meta-analysis of diagnostic test accuracy evaluations. Stat Med. 2001 Oct 15;20(19):2865-84.

| Clinical Scenario | What assuming a fixed effect means | What assuming random effects means |
|---|---|---|
| In non-operative management of uncomplicated appendicitis (antibiotics alone), is the success rate the same across all hospitals? | Assumes that non-operative antibiotic management yields the same success rate everywhere, with any between-hospital differences attributed only to chance. | Recognizes that true success rates differ (e.g., ~90% in some centers and ~70% in others) owing to patient selection, imaging protocols, antibiotic regimens, criteria for failure/crossover, and local care pathways. |
| Do ACE inhibitors lower blood pressure by the same amount in every patient? | Assumes that all patients experience an identical reduction (e.g., 10 mmHg), with observed deviations dismissed as random noise. | Recognizes that true responses vary according to patient- and context-specific factors, such as race (e.g., Black patients often respond differently to ACE inhibitors), comorbidities, baseline blood pressure, and treatment adherence. |
| Does screening colonoscopy reduce colorectal cancer mortality equally in all health systems? | Assumes that screening colonoscopy provides the same mortality reduction regardless of context. | Recognizes that the benefit of screening colonoscopy varies according to program- and practice-level factors. For example, mortality reduction is greater in robust, high-coverage programs and smaller in under-resourced systems; likewise, differences in endoscopist quality (such as adenoma detection rates) also influence the magnitude of effect. |
| Does prone positioning reduce mortality in ARDS patients to the same extent across ICUs? | Assumes a uniform mortality reduction across all settings (e.g., 15%). | Recognizes that the benefit varies according to multiple factors. For example, outcomes may be better in experienced centers with established protocols, adequate nurse-to-patient ratios, and optimal ventilatory management than in smaller units with less prepared staff. These are illustrative factors that influence the true effect, rather than random noise. |
| Do COVID-19 vaccines protect against infection equally in all groups? | Assumes identical vaccine effectiveness across all groups, regardless of age, comorbidity, or circulating variants. | Recognizes that effectiveness genuinely varies, with higher protection in some groups and lower in others; the pooled estimate reflects an average across these conditions. |
| Does a smoking cessation intervention increase quit rates equally across settings? | Assumes that this intervention produces the same improvement in quit rates everywhere, with any observed variation across studies explained only by chance. | Recognizes that effectiveness depends on contextual and patient-level factors (for example, behavioral support intensity, pharmacotherapy access, or patient characteristics), so that observed differences reflect genuine variability rather than random noise. |

| Criterion | Fixed-effect (common-effect) model | Random-effects model |
|---|---|---|
| Underlying assumption | Assumes a single true effect applies to all studies; observed differences are due only to chance. | Assumes true effects vary across studies; the pooled estimate represents the average of a distribution. |
| Clinical diversity | Suitable only when studies are essentially identical in population, intervention, and setting. | Preferred when studies differ in patients, protocols, or healthcare contexts. |
| Number of studies | Appears stable with very few studies, but the precision is often misleading. | Safer with few studies; use HKSJ to better reflect uncertainty. HKSJ may be over-conservative with very few studies, yet it can also be narrower than the standard interval when observed heterogeneity is low; the modified/truncated HKSJ (mHK) prevents the latter. |
| Statistical heterogeneity | Ignores between-study variability; heterogeneity is treated as sampling error. | Explicitly incorporates between-study variability into the analysis. |
| Precision vs realism | Produces narrower confidence intervals that may overstate certainty. | Produces wider intervals that better reflect real-world uncertainty. |
| Generalizability | Limited; results apply only to the specific studies included. | Broader; results are more applicable across diverse contexts. |
| Role in practice | Occasionally useful for sensitivity analyses or narrowly defined questions. | Default choice in most clinical meta-analyses. |


| Section | What should be reported | Why it matters |
|---|---|---|
| Methods | | Transparency; reproducibility; avoids selective reporting. |
| Results | | Ensures clarity; communicates both precision (CI) and expected variability across contexts (PI); helps readers judge the robustness of findings. |

| Case study | Clinical question | Original model & result | Re-analysed model & result | Key lesson |
|---|---|---|---|---|
| 1. Urination stimulation in infants | Non-invasive stimulation to collect urine samples | FE Mantel–Haenszel: OR 3.88 (95% CI 2.28–6.60), p<0.01; I²=72% → strongly positive | RE REML: OR 3.44 (1.20–9.88), p=0.02; HKSJ: OR 3.44 (0.34–34.91), p=0.15 → wide, inconclusive | FE overstates precision; RE + HKSJ highlight the underlying uncertainty. With very few studies, confidence intervals become hard to interpret (too narrow under FE, excessively wide under HKSJ), underscoring the inherent difficulty of sparse-data scenarios. |
| 2. Esophageal atresia repair | Musculoskeletal sequelae after thoracoscopic vs open repair | FE Mantel–Haenszel: RR 0.35 (0.14–0.84), p=0.02 → significant reduction | RE REML: RR 0.35 (0.09–1.36), p=0.13; HKSJ: RR 0.35 (0.05–2.36), p=0.18 → loss of significance | Certainty collapses when heterogeneity is acknowledged; RE prevents false confidence |
| 3. Psychological Bulletin re-analysis | 68 psychology meta-analyses re-examined | FE gave narrow, often “significant” CIs; apparent robustness | RE widened CIs, significance often disappeared; FE defensible in ~3% only | Large-scale evidence that FE systematically exaggerates certainty |
| 4. Rosiglitazone & CV risk | Myocardial infarction & cardiac death with rosiglitazone | FE: MI RR 1.43 (1.03–1.98), p=0.03 (↑ risk); cardiac death RR 1.64 (0.98–2.74), p=0.06 (NS) | RE (rare-event): MI RR 1.51 (0.91–2.48), p=0.11 (NS); cardiac death RR 2.37 (1.38–4.07), p=0.0017 (↑ risk) | FE masked a real risk signal; RE exposed a clinically important harm |
| 5. Magnesium in acute MI | IV Mg²⁺ for AMI | FE: OR 1.02 (0.96–1.08) → null; extreme heterogeneity (p<0.0001) | RE: OR 0.61 (0.43–0.87), p=0.006 → protective | FE obscured mechanistic truth; RE aligned with biological plausibility (timing of administration) |
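
Because the FE and RE (REML) results in the case-study table are reported as normal-based intervals that are symmetric on the log scale, their p-values can be roughly cross-checked from the confidence limits alone: the log-scale estimate is the midpoint of the log limits, and SE = (ln upper − ln lower)/(2 × 1.96). The helper below (`from_ratio_ci`, a hypothetical name) sketches this check for case study 2. Reported limits are rounded, so the recovered values are approximate; the HKSJ results cannot be checked this way because they use a t distribution whose degrees of freedom depend on the number of studies.

```python
import math
from statistics import NormalDist


def from_ratio_ci(lower, upper, level=0.95):
    """Recover log-scale estimate, SE, z and two-sided p from a normal-based ratio CI."""
    z_crit = NormalDist().inv_cdf(0.5 + level / 2)
    log_lo, log_hi = math.log(lower), math.log(upper)
    est = (log_lo + log_hi) / 2  # a Wald-type CI is symmetric on the log scale
    se = (log_hi - log_lo) / (2 * z_crit)
    z = est / se
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return {"ratio": math.exp(est), "se": se, "z": z, "p": p}


# Case study 2 (esophageal atresia), limits as reported in the table
fe = from_ratio_ci(0.14, 0.84)    # FE Mantel-Haenszel, reported p = 0.02
reml = from_ratio_ci(0.09, 1.36)  # RE REML, reported p = 0.13
print(round(fe["ratio"], 2), round(fe["se"], 3), round(fe["p"], 3))
print(round(reml["ratio"], 2), round(reml["se"], 3), round(reml["p"], 3))
```

The recovered p-values come out close to the reported 0.02 and 0.13, and the point estimate is essentially unchanged between models, so the loss of significance comes entirely from the larger random-effects standard error.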


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).