1. Introduction

In recent years, the study of soundscapes has emerged as a powerful tool for ecological monitoring and environmental assessment. A soundscape is defined as the collection of biophonic, geophonic, and anthropophonic sounds that characterize a given environment [

1]. Through passive acoustic monitoring, researchers can gather continuous, non-invasive, and cost-effective information about ecosystems, including biological activity, species richness, and anthropogenic disturbance [

2]. Unlike traditional biodiversity surveys that are often limited by spatial or temporal constraints, acoustic methods enable long-term sampling across large areas and can reveal patterns that would otherwise remain undetected. These advantages have contributed to the growing adoption of soundscape analysis in conservation programs, landscape-scale monitoring efforts, and biodiversity assessments [

3]. As acoustic technologies and computational tools continue to improve, soundscapes offer increasing potential for understanding the dynamics and health of ecosystems on spatial and temporal scales.

Recent developments in machine learning have significantly improved the ability to analyze ecoacoustic data [

2,

4], particularly in tasks involving species detection and classification. Supervised learning techniques, especially those based on deep neural networks, have enabled the automatic identification of animal vocalizations from large volumes of acoustic recordings [

4,

5]. Notably, tools such as BirdNET [

6], which use convolutional neural networks trained on expert-labeled datasets, have achieved high accuracy in the identification of numerous species of birds in different environments. These models have facilitated large scale biodiversity monitoring and made it possible to study specie specific patterns with high temporal resolution [

7,

8]. Despite these advances, many existing approaches focus primarily on taxonomic classification, often ignoring the broader acoustic structure of the landscape and the contextual information embedded in non-biological or unclassified sounds. This narrow focus limits the ecological interpretation of soundscapes and restricts the ability to assess ecosystem-level properties. However, the acoustic environment encodes more than just the presence of species or vocal activity. Soundscapes reflect the structure and function of ecosystems as a whole, including spatial patterns, temporal dynamics, and environmental stressors [

9,

10,

11]. Attributes such as habitat connectivity, land-use heterogeneity, and ecosystem degradation can be inferred from the composition and variability of acoustic signals over time and space. These broader patterns are essential for understanding ecological processes, especially in landscapes undergoing rapid change. Yet, studies that treat the soundscape as a complex and integrated ecological signal remain relatively scarce. Most existing research has prioritized species-level outcomes, leaving a gap in our understanding of how acoustic patterns relate to landscape structure and ecosystem health. In this study, we address this gap by comparing multiple methodological pipelines that combine dimensionality reduction and unsupervised clustering for large-scale soundscape characterization. Our goal is to explore how these approaches reveal spatial organization in the acoustic environment and to provide tools for interpreting the composition and distribution of clusters from an ecological perspective.

Our work is based on and motivated by recent efforts to explore soundscape patterns through unsupervised analysis. For example, [

12] used acoustic indices to perform spatial exploration of soundscapes within the same study area examined here. Their work emphasized the value of unsupervised learning and evaluated clustering outputs through comparisons with species detections, spectrograms, and the spatial distribution of acoustic indices, highlighting how soundscape-level structure can emerge without relying on taxonomic annotation. Similarly, [

13] proposed an unsupervised framework that leverages passive acoustic monitoring data and network inference to examine acoustic heterogeneity across landscapes. By characterizing biophonic patterns through the use of sonotypes they constructed site level profiles and applied graphical models to infer ecological interactions. Their graph-based approach allowed them to represent similarities among sites and capture acoustic diversity in heterogeneous environments.

On the other hand, although autoencoders have been widely adopted in other fields such as bioinformatics [

14], cybersecurity [

15], anomaly detection [

16], and even remote sensing and landscape monitoring applications [

17,

18], they remain relatively underused in ecoacoustics. Notable exceptions include the work by [

19], who proposed a vector-quantized autoencoder for generating synthetic audio of underrepresented species, and [

20], one of the earliest studies to explore autoencoders as an alternative to acoustic indices for clustering short audio recordings. Additionally, in our previous work [

21], we evaluated unsupervised learning using a variational autoencoder in comparison with cepstral coefficients and a convolutional architecture known as KiwiNet, highlighting the potential of deep unsupervised representations for soundscape analysis. However, our current work distinguishes itself from the studies mentioned above and from our previous research in several key aspects: (i) we conduct an in-depth evaluation of the dimensionality reduction and clustering stages, emphasizing the importance of parameter selection through both quantitative metrics and qualitative analyses; (ii) we thoroughly assess the relationship between the discovered patterns and ecological attributes derived from metadata, particularly emphasizing spatial structure; and (iii) we propose a novel methodology to identify acoustically connected geographic locations based on the similarity of their recordings, an aspect that, to our knowledge, has not been explicitly addressed in previous ecoacoustic studies.

3. Results and Discussion

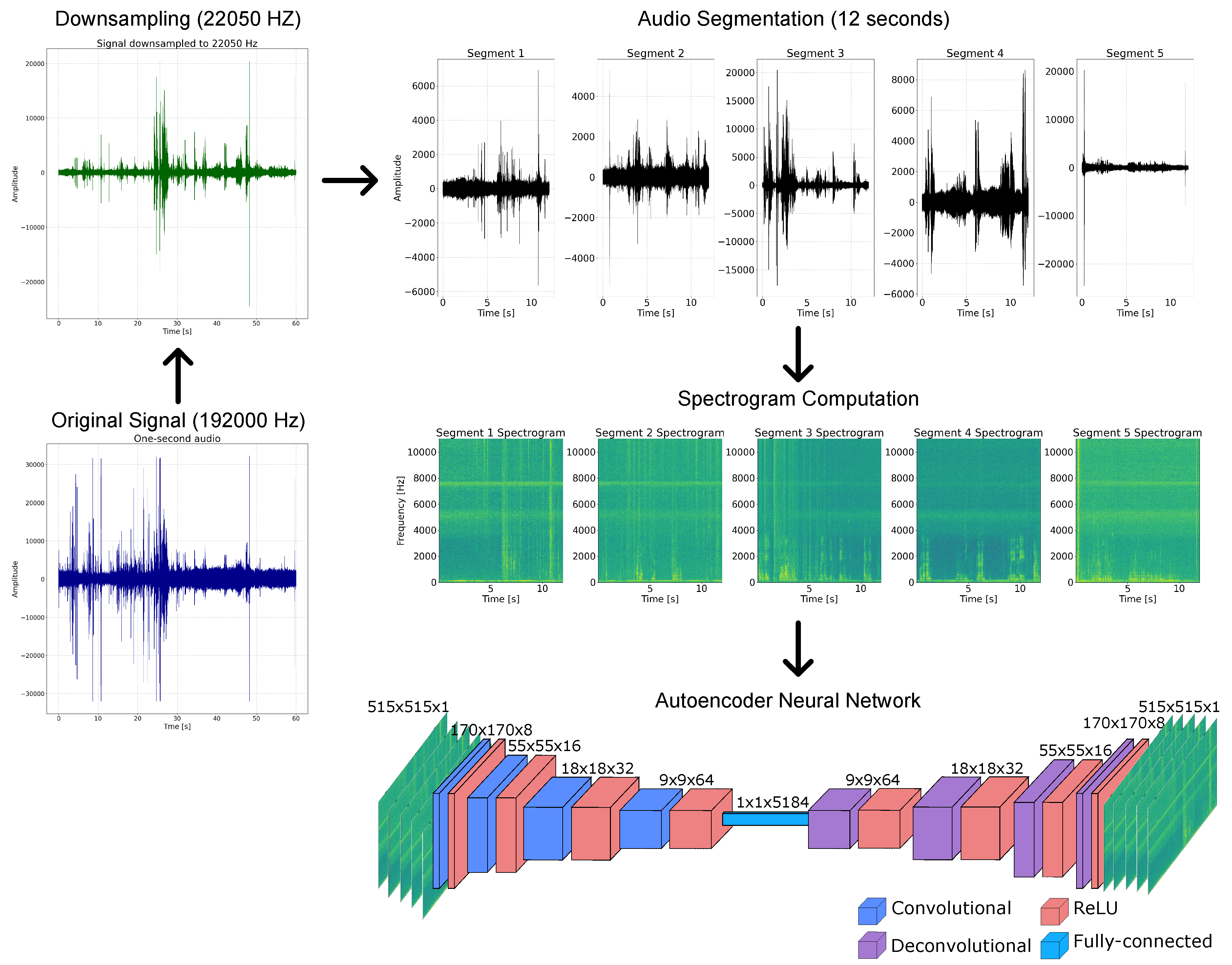

For experiments, we processed the dataset using only recordings without rainfall. Noisy data and recordings with significant rain content were removed using the methodology described in [

31]. This pre-processing step ensured that subsequent analyses focused only on biologically and ecologically informative acoustic content, avoiding to find patterns and clusters biased by noisy data. After removing rainfall data, as part of the pre-processing pipeline, we implemented a custom data loader that resamples the original recordings from 192,000 to 22,050 Hz. This sampling rate was chosen because it encompasses the range of human-audible frequencies and retains most of the ecologically relevant acoustic information present in typical soundscapes. Each recording was then segmented into five non-overlapping 12-second clips. For each segment, a spectrogram was computed following the procedure illustrated in

Figure 2.

We performed feature extraction using the baseline methods: VGGish embeddings and acoustic indices, according to the methodology described in

Section 2.2. These two approaches served as standardized representations for capturing the spectral and temporal properties of the soundscape.

For autoencoder feature extraction, we trained a vanilla convolutional autoencoder using 20% of the dataset among ten epochs. The architecture, illustrated in

Figure 2, consists of an encoder with four convolutional layers interleaved with Rectified Linear Unit (ReLU) activations, followed symmetrically by a decoder comprising four transposed convolutional (deconvolutional) layers, also with ReLU activations, except for the final layer, which uses a sigmoid activation to produce the reconstructed output.

To evaluate the performance and generalizability of the model, we monitored the Mean Squared Error (MSE) on a subset of tested held out during training and visually inspected the reconstructed spectrograms. The embedding space was obtained by flattening the output of the final convolutional layer in the encoder, producing a representation of 5184 dimensions (), where 64 corresponds to the number of filters and to the spatial resolution after the encoding stages. This low-dimensional representation served as the input for subsequent clustering and projection analyses.

The analysis of the experimentation and results was structured into the following components: feature projection, clustering, acoustic component identification through indices, spatial pattern analysis, and finally, evaluation of data connectivity. While the primary focus of this work is unsupervised exploration, the reliability of such analysis fundamentally depends on the ability of the model to extract meaningful and interpretable features. To assess the representational quality of the extracted features, we conducted a supervised learning evaluation using habitat cover type labels as a reference standard, allowing a comparative assessment of the described feature extraction methods.

Multiclass Classification Using Cover Type and Time Metadata as Labels

For the Rey Zamuro dataset, three habitat cover classes were defined: forest (19.4%), pasture (57.7%), and savanna (22.7%). This classification task presents two main challenges: the multiclass nature of the problem and the class imbalance, particularly due to the overrepresentation of pasture samples. Although forest and savanna are relatively balanced with respect to each other, the dominance of pasture introduces bias in the learning process.

To assess the discriminative power of the feature representations extracted from each method, we conducted a supervised classification task using a Random Forest (RF) classifier. The dataset was partitioned into two non-overlapping subsets: 80% of the total samples were allocated for training, and the remaining 20% were reserved exclusively for testing. This partitioning was performed using stratified sampling to preserve the class distribution across both sets. The training set was used to fit the classifier and assess performance during model development, while the test set was held out entirely during training and only used for final evaluation to measure generalization capability.

The Random Forest classifier was configured with a fixed maximum tree depth of 16 and a random seed of 0 to guarantee reproducibility and consistency across experiments. Classification performance was then quantified using standard evaluation metrics including accuracy, macro-averaged F1-score, and recall, allowing us to systematically compare how well each representation captured ecologically meaningful distinctions between landscape types.

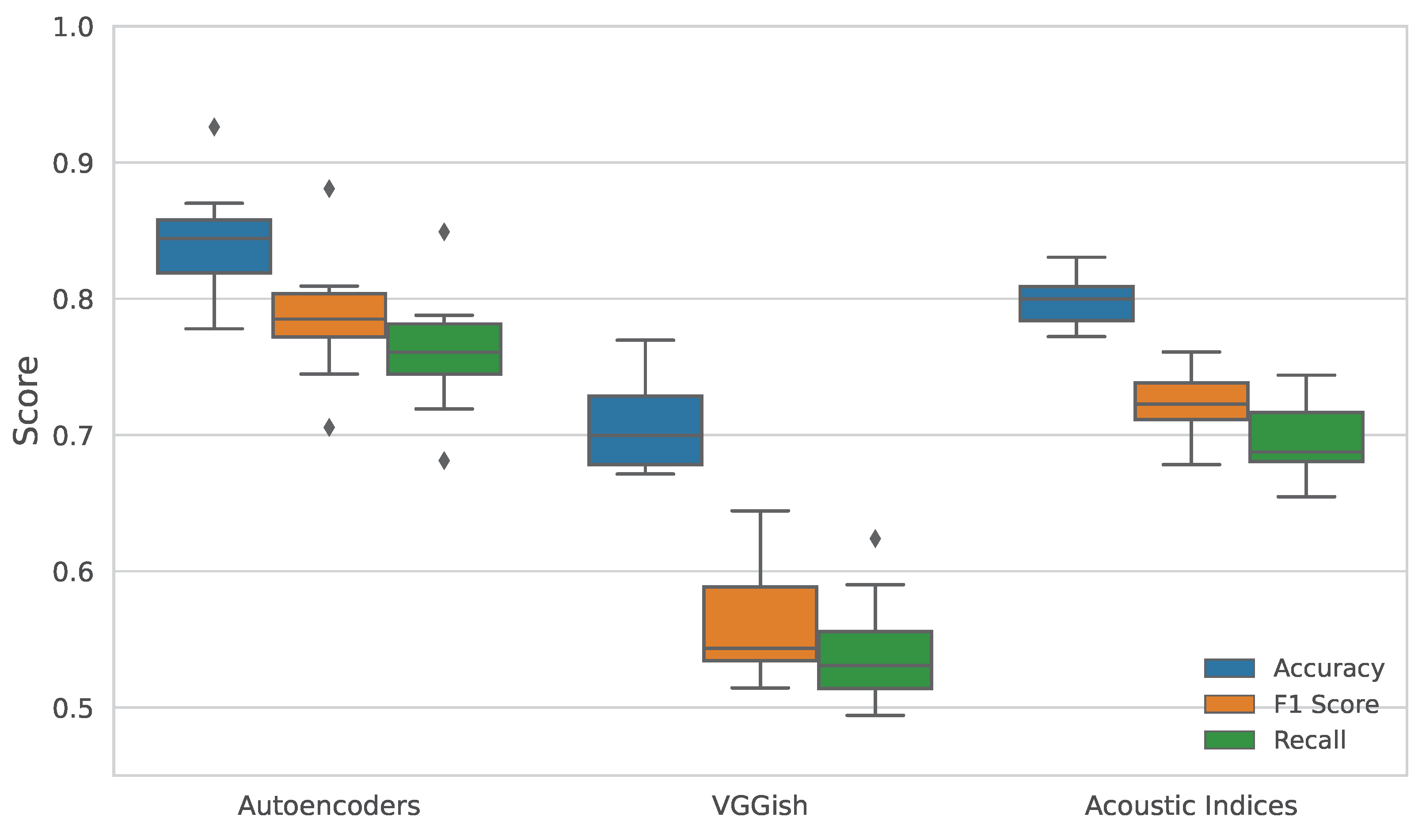

Initially, the classification was performed on the entire dataset of 53.275 samples. Although this approach is not computationally demanding, it lacks statistical robustness to assess generalization. To address this, we implemented an alternative evaluation strategy by partitioning the dataset according to the day on which each sample was recorded. This resulted in thirteen independent subsets, enabling day-wise classification and allowing for the assessment of metric variability across temporal segments. However, for each day-dataset, we conserved 80% of the data for training and 20% for evaluation. The results, summarized in the box plot presented in

Figure 3, show that the features extracted using the autoencoder consistently achieved the highest scores in all evaluation metrics, thereby demonstrating superior representational capacity and robustness.

To quantitatively evaluate the differences in classification performance among the feature extraction approaches, we conducted non-parametric statistical tests on three evaluation metrics: accuracy, F1 score, and recall. The Friedman test was used to assess whether there were significant differences across the three methods—autoencoders (AE), VGGish (VGG), and acoustic indices (AI). When significant differences were detected (

), pairwise comparisons were further examined using the Wilcoxon signed-rank test.

Table 1 summarizes the results of these tests. The Friedman test revealed statistically significant differences across methods for all three metrics (

). Post hoc Wilcoxon comparisons indicated that the autoencoder-based features significantly outperformed both VGGish and acoustic indices in most cases, particularly in comparisons involving AE vs VGG and AE vs AI. These results support the conclusion that the features extracted via autoencoders encode more discriminative information relevant to the classification of habitat cover types.

Similarly, we investigated the use of temporal metadata as classification labels, given that several studies have demonstrated significant variations in soundscape composition across different times of day. Temporal dynamics in acoustic environments are crucial for understanding species behavior, activity patterns, and ecosystem processes [

32,

33]. For instance, diurnal and nocturnal shifts in vocal activity influence the acoustic community structure, which can be effectively captured and analyzed through time-resolved soundscape data [

34]. Incorporating time-of-day information enables a more detailed characterization of ecological patterns and enhances the interpretability of unsupervised clustering and feature extraction methods.

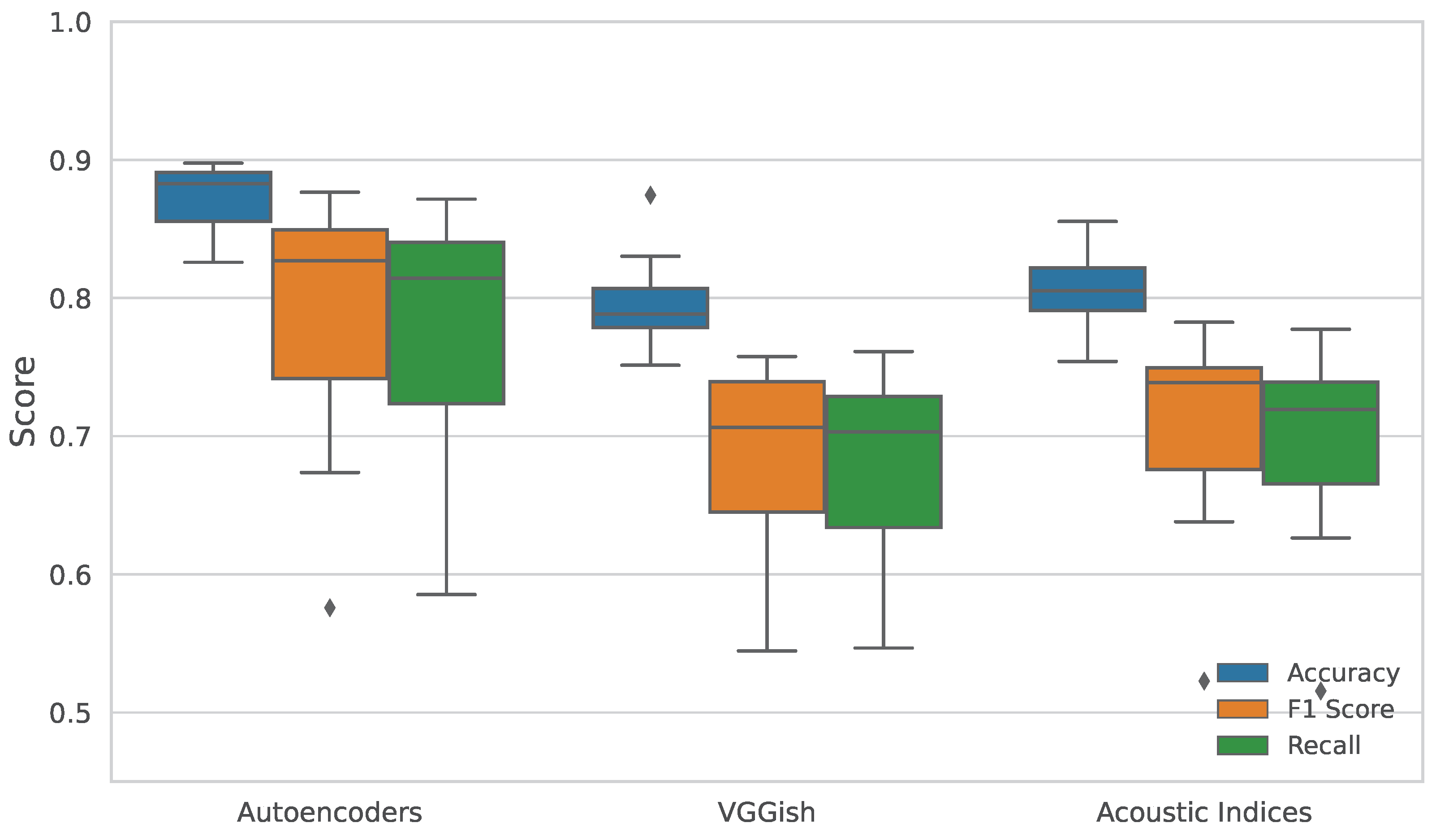

Figure 4 presents boxplots summarizing the classification performance across thirteen sampling days, segmented into three distinct time-of-day intervals: dawn (05:00–08:00), day (08:00–17:00), and night (17:00–05:00). This temporal segmentation aligns with recent studies in Colombia [

1,

35], that consider the equatorial location, which results in minimal seasonal variation in sunrise and sunset times. This division captures relevant diel patterns in acoustic activity and aligns with ecological processes and animal behavior commonly observed in neotropical soundscapes [

34].

A similar statistical evaluation was conducted for the classification performance across the three temporal segments: dawn, day, and night. The results, summarized in

Table 2, show a consistent pattern with the habitat cover classification. The Friedman test again revealed statistically significant differences among the feature extraction methods for all metrics (

). Subsequent Wilcoxon signed-rank tests confirmed that autoencoder features significantly outperformed both VGGish and acoustic indices across accuracy, F1 score, and recall. Of particular note is the AE vs AI comparison, which yielded a Wilcoxon statistic of 1.000 and a p-value of 0.0020, indicating a nearly systematic advantage of the autoencoder.

Low Dimensional Feature Embedding and Clustering

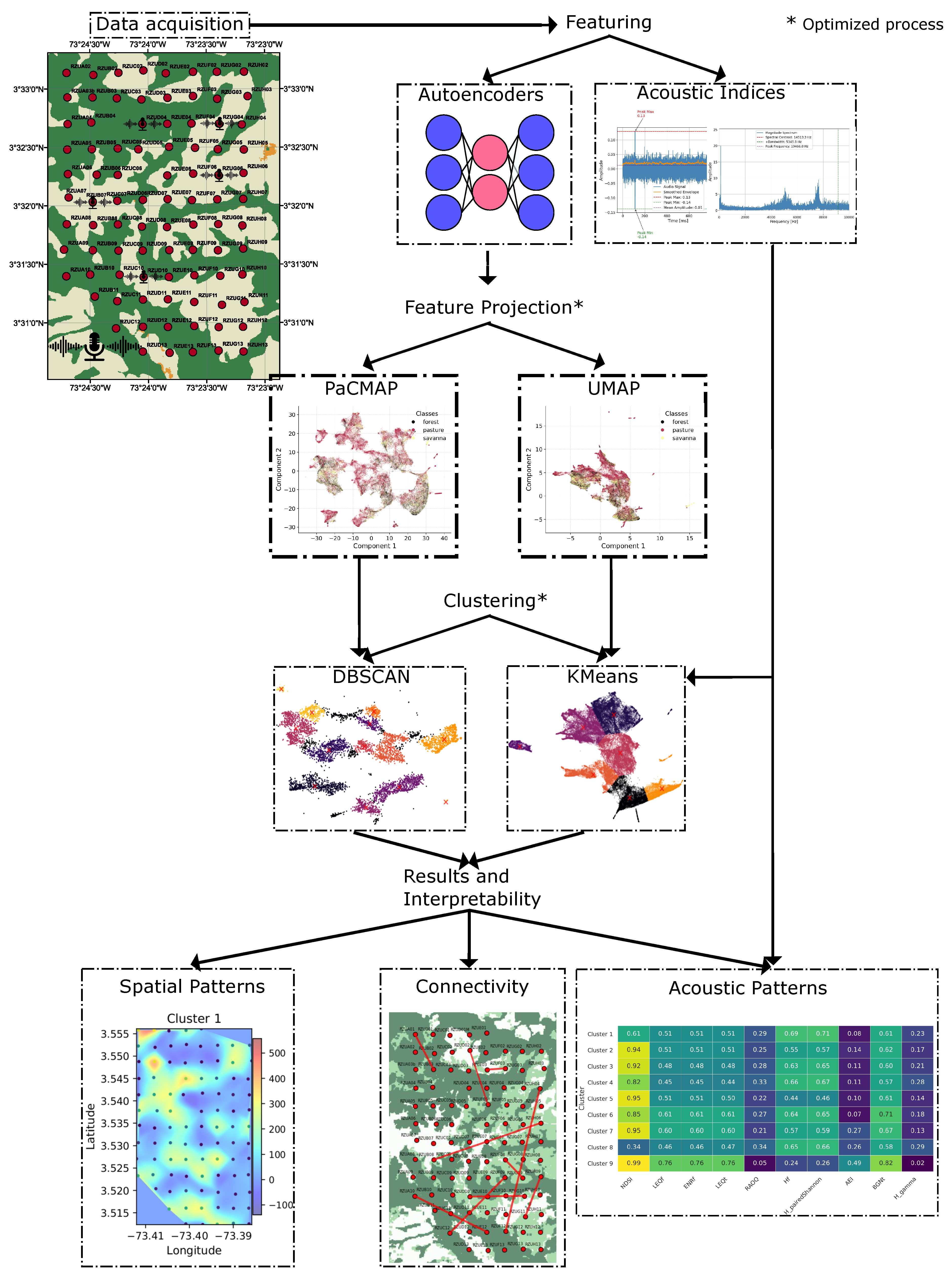

In this section, we detail the entire unsupervised procedure. As illustrated in

Figure 5, the process begins with data characterization using acoustic indices and embeddings which are then projected into a low-dimensional space using state of the art methods that have demonstrated strong performance across diverse data types. Clustering is subsequently performed to uncover patterns across multiple dimensions and ecological aspects of the landscape. Finally, we analyze the results through multiple strategies: first, by examining the spatial structure of each cluster via interpolation of features at each sampling point; second, by interpreting the most relevant acoustic patterns using acoustic indices; and third, by proposing a method to estimate connectivity between locations, using the connectivity of individual recordings as a proxy. Each component of the process is described in detail below.

To investigate the underlying structure of the acoustic landscape, we employed two non-linear dimensionality reduction techniques such as Uniform Manifold Approximation and Projection (UMAP) and Pairwise Controlled Manifold Approximation Projection (PaCMAP). These methods have demonstrated robust performance in preserving both local and global topological structures in high-dimensional data [

28,

36], making them particularly suitable for ecoacoustic applications where temporal, spectral, and spatiotemporal patterns coexist in complex ways. We did not perform analyses directly in the original feature space for several reasons: (1) recent studies emphasize the value of low-dimensional visualizations for enhancing interpretability and facilitating expert-driven ecological insights [

37,

38]; (2) processing in the original 5184-dimensional space significantly increases computational demands, reducing the feasibility of applying the method in practical or large-scale ecological contexts; and (3) as demonstrated in our previous work [

24], the difference in pattern detection performance between using the original space and its low-dimensional projection is marginal, further supporting the use of dimensionality reduction as a reliable and efficient alternative.

We placed particular emphasis on a thorough exploration of the parameter space for both dimensionality reduction and clustering, aiming to enhance the reliability and interpretability of the low-dimensional embeddings. Unlike previous studies in ecoacoustics and bioacoustics, which often apply default or minimally adjusted parameters in dimensionality reduction and clustering techniques (e.g., [

30,

39]), we performed a detailed grid search to systematically assess how hyperparameter choices affect the structure and separability of the resulting data representations. This is a critical yet frequently overlooked aspect, as recent work has shown that parameter sensitivity in methods like UMAP or PaCMAP can significantly influence the topology of the low-dimensional space and, consequently, the ecological interpretations drawn from these embeddings [

40].

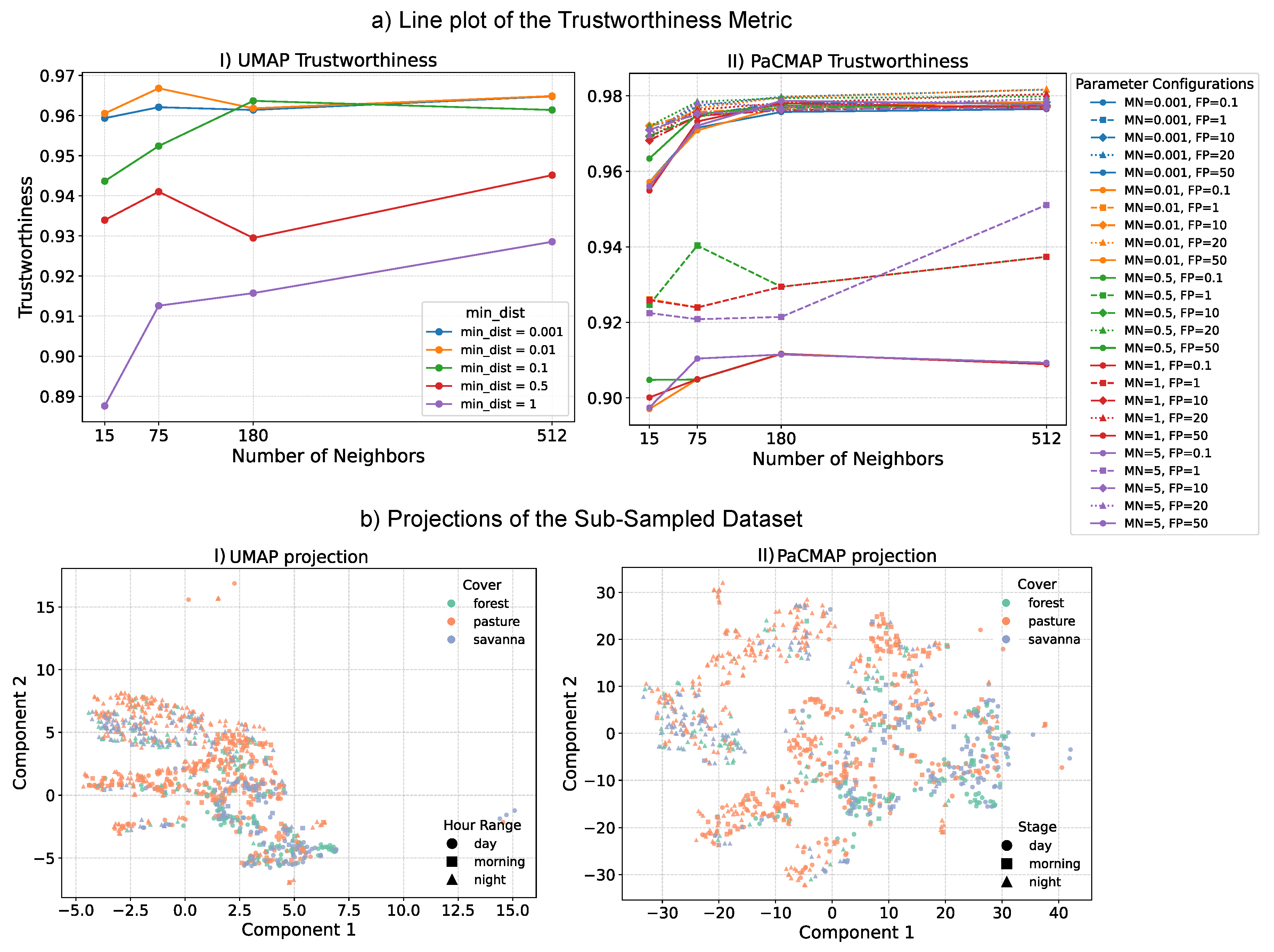

For quantitative evaluation,

Figure 6(a).I and

Figure 6(a).II present the quality assessment of low-dimensional embeddings generated by UMAP and PaCMAP, respectively. We employed the trustworthiness metric, which evaluates the consistency between high-dimensional neighborhoods and their representations in the reduced space without relying on class labels or prior clustering. This makes it particularly appropriate for ecoacoustic datasets, where annotated ground truth is typically unavailable or limited. For UMAP (

Figure 6(a).I), the configuration with the highest trustworthiness score used a neighborhood size of 75 and a minimum distance of 0.01. For PaCMAP (

Figure 6(a).II), the optimal configuration used a neighborhood size of 75, a mid-scale neighbor ratio of 0.5, and a far neighbor ratio of 20.

To analyze latent structures in the low-dimensional embeddings, we applied two commonly used unsupervised clustering algorithms, KMeans and DBSCAN, which offer complementary perspectives. KMeans assumes spherical and evenly spaced clusters, optimizing intra-cluster compactness, whereas DBSCAN identifies clusters based on local density, allowing it to detect arbitrarily shaped groupings and exclude noise. Based on the geometric characteristics of the embeddings, we paired UMAP with KMeans and PaCMAP with DBSCAN. UMAP tends to produce globally coherent layouts that align with the centroid-based partitioning of KMeans, facilitating the separation of data into compact and uniformly distributed clusters. In contrast, PaCMAP often results in high-density, tightly grouped regions with flexible spacing, which aligns well with DBSCAN’s density-based detection mechanism. This strategic pairing allowed us to better exploit the strengths of each clustering algorithm in accordance with the topological properties induced by the respective projection method, yielding more interpretable and ecologically meaningful groupings in the ecoacoustic dataset.

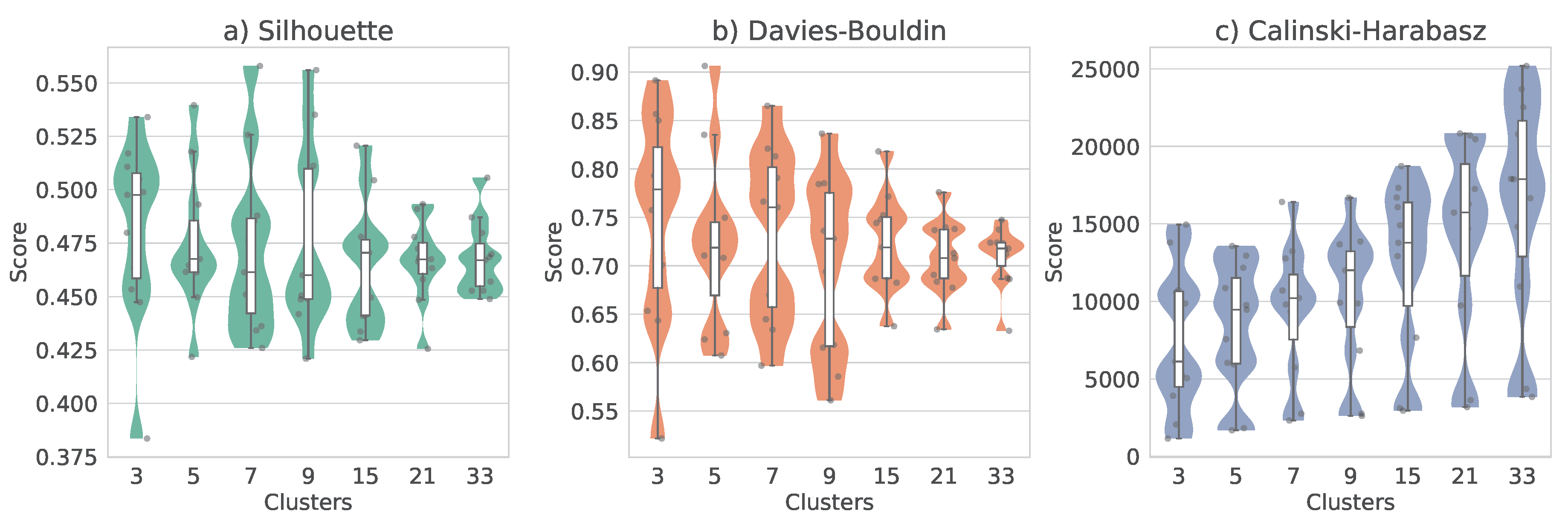

To evaluate the clustering results, we used three internal validation metrics for the KMeans and UMAP combination, i.e., Silhouette Coefficient, Davies–Bouldin Index, and Calinski–Harabasz index. Following an initial global evaluation to identify promising parameter configurations, we performed a per-day analysis to examine the consistency and reproducibility of the clustering structures across different temporal subsets. This approach ensured that the selected combinations of the dimensionality reduction and clustering methods yielded stable and interpretable groups throughout the entire dataset. However, after a deeper analysis of the results, we found that the Silhouette Coefficient and Davies–Bouldin Index did not consistently favor a specific cluster configuration throughout the study days when observing temporal trends of the metrics versus the number of clusters. To address this variability, we employed a boxplot-based visualization (

Figure 7) to summarize the distribution of scores for each metric on all days. This visualization revealed a pronounced trend in the Calinski-Harabasz index, which favored a larger number of clusters, while the Silhouette Coefficient and Davies–Bouldin Index exhibited less consistent behavior, although these metrics displayed a local optimum around 9 clusters, indicated by a local maximum in the Silhouette score and a local minimum in the Davies-Bouldin index (where lower values reflect better-defined clusters). Notably, the Calinski–Harabasz index also exhibited a sharp inflection after 9 clusters, suggesting this value as a feasible choice for the number of clusters. This result was unexpected, as it suggests a higher degree of ecological and acoustic heterogeneity compared to our previous assumption. Although ecoacoustic features are known to reflect various spatiotemporal dynamics and frequency-dependent behaviors associated with species composition and environmental structure (e.g., [

7,

39]), the emergence of 9 distinct clusters suggests that the learned acoustic representations are capturing latent ecological patterns that transcend superficial spatial, temporal, or spectral distinctions. This underscores the potential of unsupervised clustering on low-dimensional embeddings as a powerful tool for revealing nuanced ecoacoustic structure within complex soundscapes.

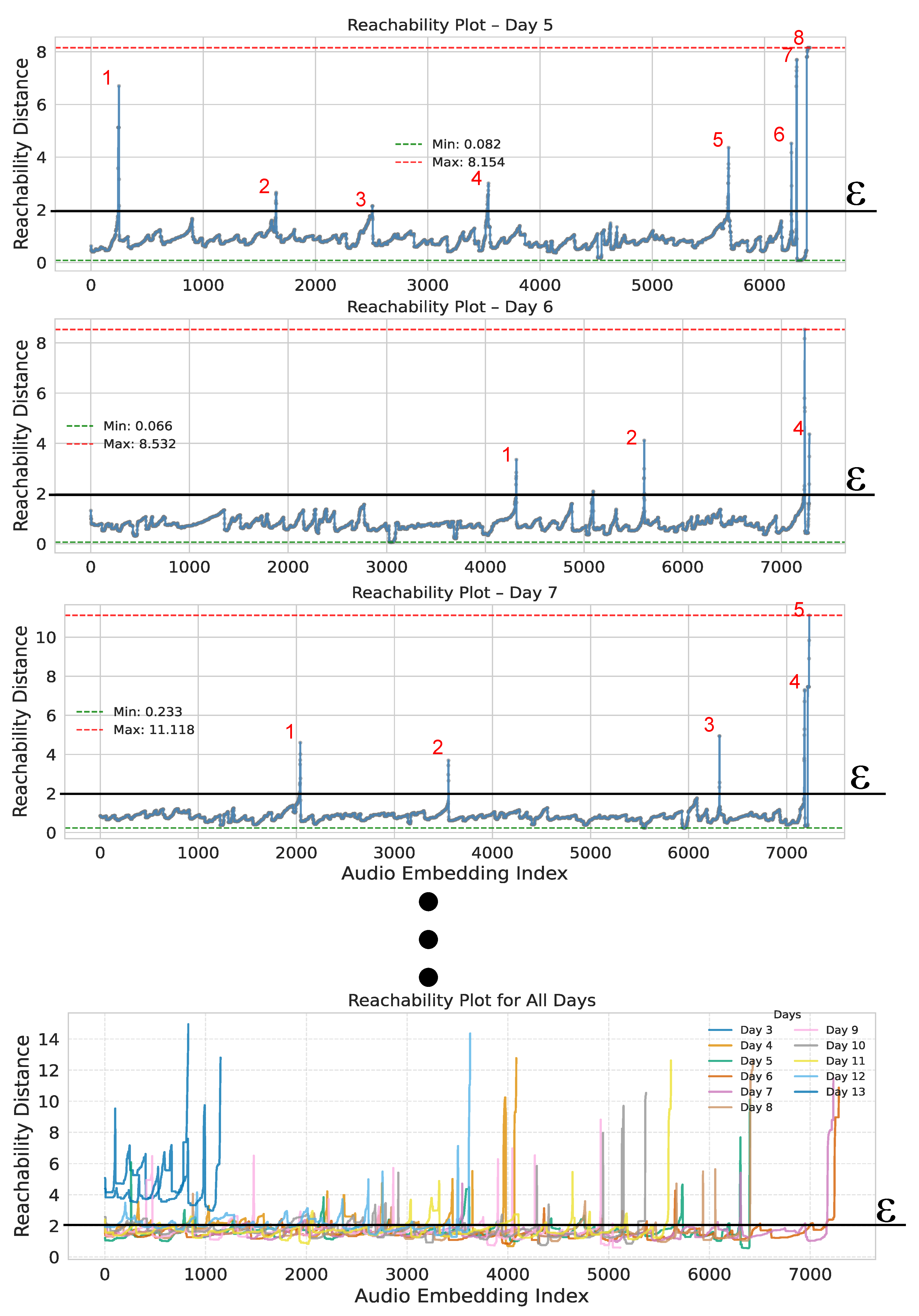

For the evaluation of parameter configurations using DBSCAN with PaCMAP, we extended the analysis beyond the internal metrics previously described by incorporating two additional methods specifically designed for density-based clustering algorithms. The first was the reachability plot, a visual tool commonly used with the OPTICS algorithm. This plot represents the reachability distances of points in the order they are processed, allowing the identification of cluster structures as valleys or drops in the curve, while flat regions typically indicate noise or transitions between clusters. Its main advantage lies in offering a flexible exploratory view of the data’s density structure without relying on a fixed density threshold.

In addition, we developed a custom procedure inspired by the principles of density peak clustering, which we refer to as Density Peak-based Validation of Cluster (DPVC). This method begins by estimating the local density of each point using the average distance to its k nearest neighbors. Then, for each cluster, the density peak is identified as the point with the highest local density (i.e., the smallest average distance to its neighbors). The DPVC score for a given cluster is computed as the mean distance of all points in the cluster to this density peak. The final DPVC value is obtained as the average of these scores across all non-noise clusters. This metric provides an indication of within-cluster compactness and proved particularly useful for validating DBSCAN results in non-linear spaces like those produced by PaCMAP, where traditional centroid-based metrics may fail to accurately capture cluster organization.

As shown in

Figure 8, the reachability plots for days 5, 6, and 7 show deep and well-separated valleys, indicating the presence of well-structured groups. This is an important condition for the application of density-based clustering methods such as DBSCAN, as such valleys reflect dense regions that are clearly separated by lower-density transitions. Additionally, for the selection of the

parameter based on reachability distance, it can be observed that the most prominent valleys consistently appear above a distance of 2, suggesting this as a feasible value. Consequently, we selected

for DBSCAN in our experiments. To support this choice, we also applied the DPVC (Density Peak-based Validation of Clusters) metric, which confirmed that the selected configuration of

and

produced compact and coherent clusters in the PaCMAP-embedded space. This validation is consistent with recent advances in adaptive density-based clustering, which highlight the importance of tuning parameters such as

and

in datasets with heterogeneous density structures [

41]. Furthermore, modern reviews of density peak clustering methods support the use of local density-based validation criteria such as DPVC to evaluate cluster cohesion [

42].

The figure also shows the reachability plots for all sampling days. A consistent clustering structure is visible on most days, with a clear anomaly in days 3 and 13. These deviations can be explained by incomplete sampling, as these days correspond to the deployment or removal of recording devices in the field. As a result, fewer audio samples were available, leading to sparser representations in the PaCMAP space and fewer detectable clusters. This highlights the importance of the completeness of the data when applying this methodology to spatial or temporal subsets. In such cases, a reduced sampling rate can significantly alter the low-dimensional representation and, consequently, the clustering outcomes.

Soundscape Spatial Pattern Analysis

From this point onward, we present the results analysis based on the previously described strategies, using their respective optimal parameter configurations. The analysis is structured into three main components. First, we conduct a spatial analysis based on the acoustic similarity revealed by the clustering results, allowing us to examine how soundscape patterns are distributed geographically. Second, in order to discern the specific acoustic patterns captured by each proposed method, we perform an index-based analysis. This step identifies the dominant acoustic indices contributing to the clustering structure and examines their ecological relevance. Lastly, we introduce an approach to explore geographic connectivity by evaluating the acoustic similarity among locations, thus highlighting potential ecological links or discontinuities in the acoustic landscape. This analytical framework enables a multifaceted examination of soundscape structure and facilitates the interpretation of the proposed methodology in relation to ecologically meaningful attributes. While the supervised classification experiments demonstrate high discriminative power using available labels such as habitat cover and time-of-day ranges, the core objective of the unsupervised framework is to uncover latent acoustic patterns beyond predefined class labels. Nevertheless, in the final stage of the analysis (focused on acoustic connectivity) we incorporate the habitat cover classes to interpret the similarity between geographically distinct sites. This integration allows us to validate the ecological relevance of the uncovered acoustic structures while preserving the exploratory nature of the unsupervised approach.

The clustering and projection analyses presented earlier were conducted with the objective of supporting the subsequent study of soundscape patterns while reducing the uncertainty associated with the configuration and parameterization of the methods. As demonstrated previously, these parameters significantly influence the outcome and therefore the interpretation of the acoustic landscape. In this section, we focus on spatial analysis, aiming to investigate whether the identified acoustic clusters are geographically concentrated in specific areas or if they share similar soundscape features across the landscape. This analysis leverages the design of the sampling protocol in the Zamuro Natural Reserve, which was based on a structured grid layout. This design enables spatial reasoning and interpretation by providing systematic coverage of the study area.

For spatial pattern analysis, we used the cluster assignments obtained from both proposed methodologies, i.e., UMAP combined with KMeans, and PaCMAP combined with DBSCAN. For each of the 93 recording sites, we computed the number of audio samples belonging to each cluster. This allowed us to quantify the degree of association between each site and each acoustic group, revealing the extent to which certain soundscape patterns dominate specific areas. Cluster-site associations serve as the foundation for exploring spatial acoustic structure in the reserve. These proportions of cluster membership, based on the number of audio samples assigned to each cluster per site, were used to represent the sampling points geographically and to perform an interpolation procedure. This allowed us to visualize: (1) how the clusters are spatially distributed across the landscape, and (2) the degree of similarity among locations based on their acoustic characteristics. In addition to the two clustering approaches previously described, this interpolation analysis was also performed using the characterization based on acoustic indices. The inclusion of this third approach aims to enhance interpretability, as acoustic indices capture relevant temporal and spectral dynamics of the recordings [

43,

44], thus providing a meaningful reference framework for the interpretation of emerging spatial patterns, as noted in previous studies [

45,

46].

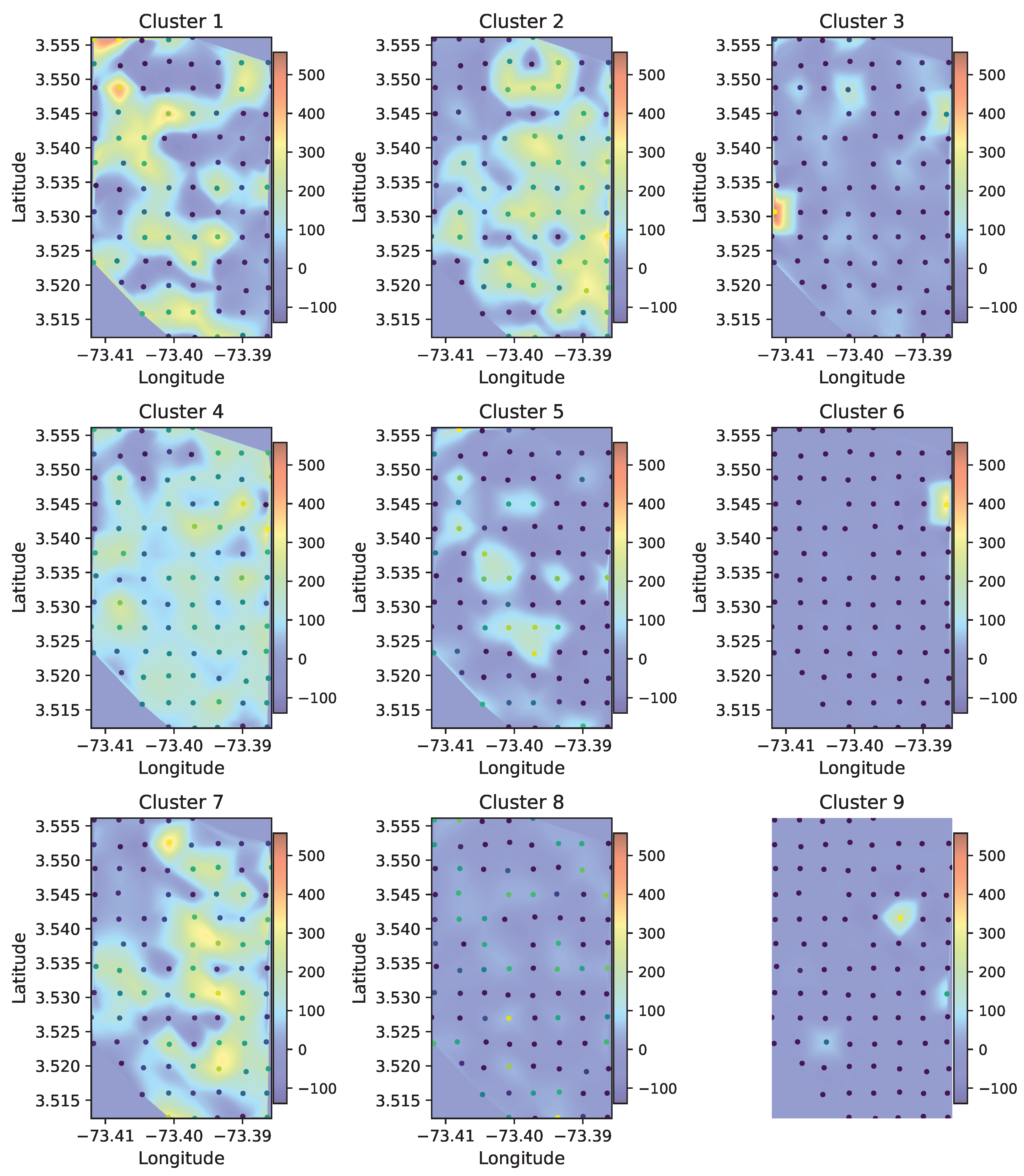

Figure 9 shows the resulting spatial distribution of the clusters using the PaCMAP projection combined with DBSCAN clustering, we computed and represented the information as a heat map. In the heat map, it is possible to observe both broadly distributed patterns across the landscape, such as those represented by clusters 2 and 4, and clusters that are more concentrated in specific areas, such as clusters 3, 6, and 9. These results suggest that certain acoustic patterns are widespread and occur under a variety of environmental or habitat conditions, while others are limited to particular zones, potentially linked to localized ecological features. This spatial contrast supports the idea that the clustering approach captures both general and site-specific soundscape structures, providing valuable insights into the acoustic organization of the study area. In addition, lateral patterns can be observed in certain clusters, such as cluster 1, which is concentrated toward the left side of the sampling area, and cluster 7, which appears more frequently on the right side. In contrast, no evident trends appear along the vertical (north–south) axis of the grid. As mentioned previously, the sampling design was implemented using a spatial grid, making this type of analysis appropriate for interpreting how soundscape variation occurs longitudinally or latitudinally. In this case, the observed patterns suggest stronger acoustic differentiation along the longitudinal gradient of the landscape.

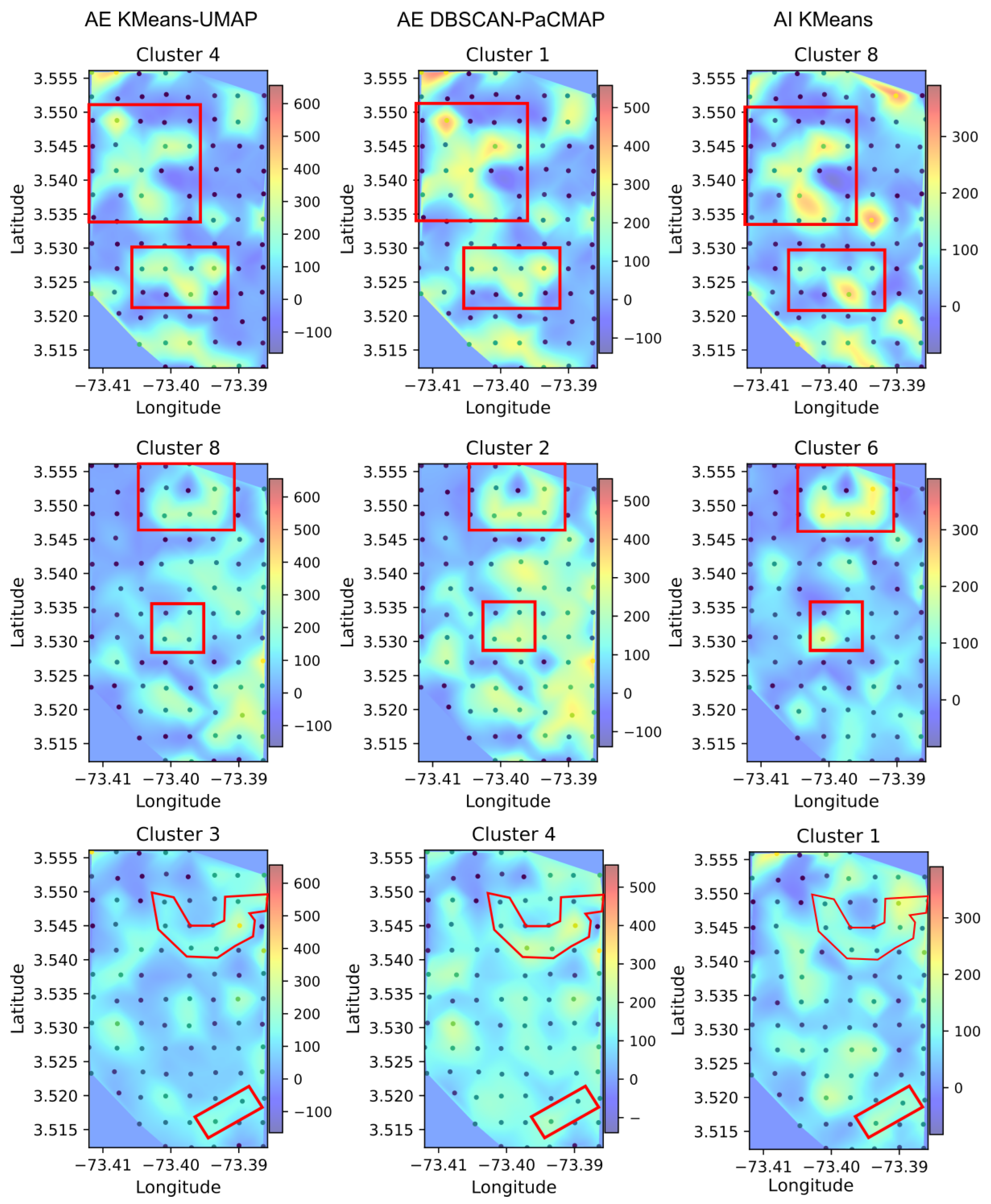

Similarly, we extracted heat maps for the clustering methodology using UMAP and KMeans, as well as for the characterization based on acoustic indices. As a result, we identified several spatial patterns that were shared between the deep embedding-based methods (i.e., Soundscape-Net representations) and the clustering derived from acoustic indices. This finding is relevant in two main ways. First, it confirms that the deep neural network embeddings effectively capture landscape-level patterns that are also perceptible through classical ecoacoustic approaches. Second, this alignment contributes to enhancing the interpretability of the results, thereby supporting interdisciplinary collaboration with biologists and ecologists by bridging data-driven acoustic representations with ecologically meaningful indicators.

Figure 10 shows a direct comparison of spatial heat maps derived from the clustering outputs of the three evaluated methods. The columns correspond to the three approaches: autoencoder embeddings with KMeans–UMAP, autoencoder embeddings with DBSCAN–PaCMAP, and acoustic indices with KMeans, while each row displays a representative cluster selected from each method. Several clusters exhibit similar spatial patterns across the methods. For example, in the first row, a cross-shaped pattern appears in the upper left part of the grid (covering rows 3 to 6 of the recorder layout) and is consistently detected by both autoencoder-based methods and the acoustic index-based approach. Moreover, the lower central region of the grid shows activity for the same clusters, with a higher degree of similarity between the two deep learning-based methods, although the pattern is still observable in the acoustic indices. Similarly, in the second and third rows of

Figure 10, shared spatial patterns can also be observed. The second row displays a more consistent cluster distribution across the three methods, indicating a stable acoustic pattern that emerges regardless of the feature extraction or clustering technique applied. In contrast, the third row exhibits clusters with high spatial variability, which shows less agreement between methods. This variability may reflect more complex or localized acoustic dynamics, making the clusters in this case more sensitive to the characteristics of the embedding space or the clustering algorithm used.

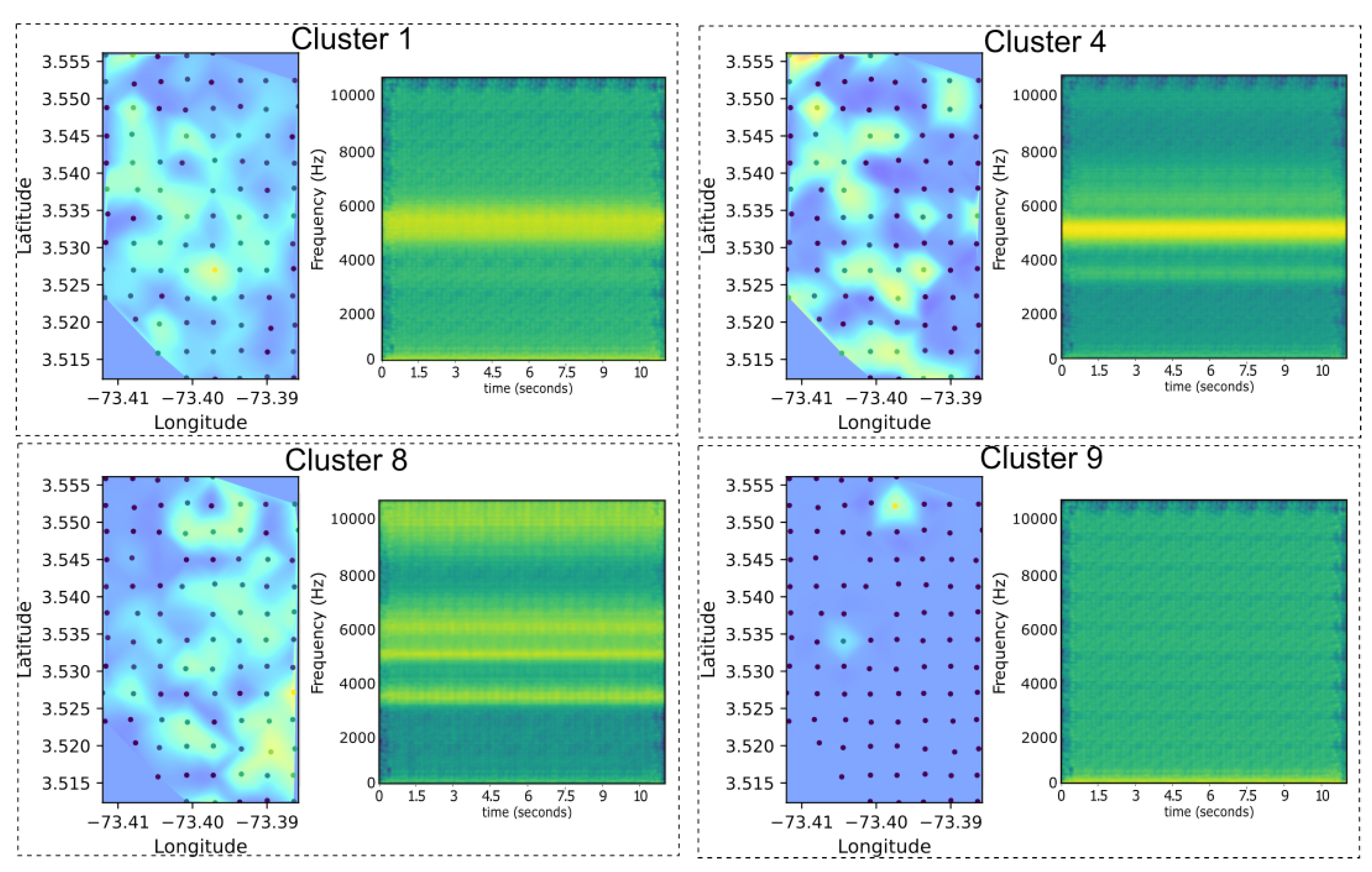

One key advantage of using UMAP in combination with autoencoders is that UMAP provides a transformation that allows inverse mapping from the low-dimensional embedding back to the original feature space, unlike PaCMAP which does not have this functionality. This reversibility function enables the use of the decoder component of the autoencoder to reconstruct representative spectrograms for each cluster based on the corresponding embeddings, as can be seen in

Figure 11. In the context of ecoacoustic analysis, this capability is particularly valuable as it allows us to identify the dominant frequency patterns associated with specific clusters and map them spatially. This offers a direct link between abstract latent representations and their interpretable acoustic content, enhancing both the explanatory power of the model and its ecological relevance.

Acoustic Indices Distribution Among Clusters

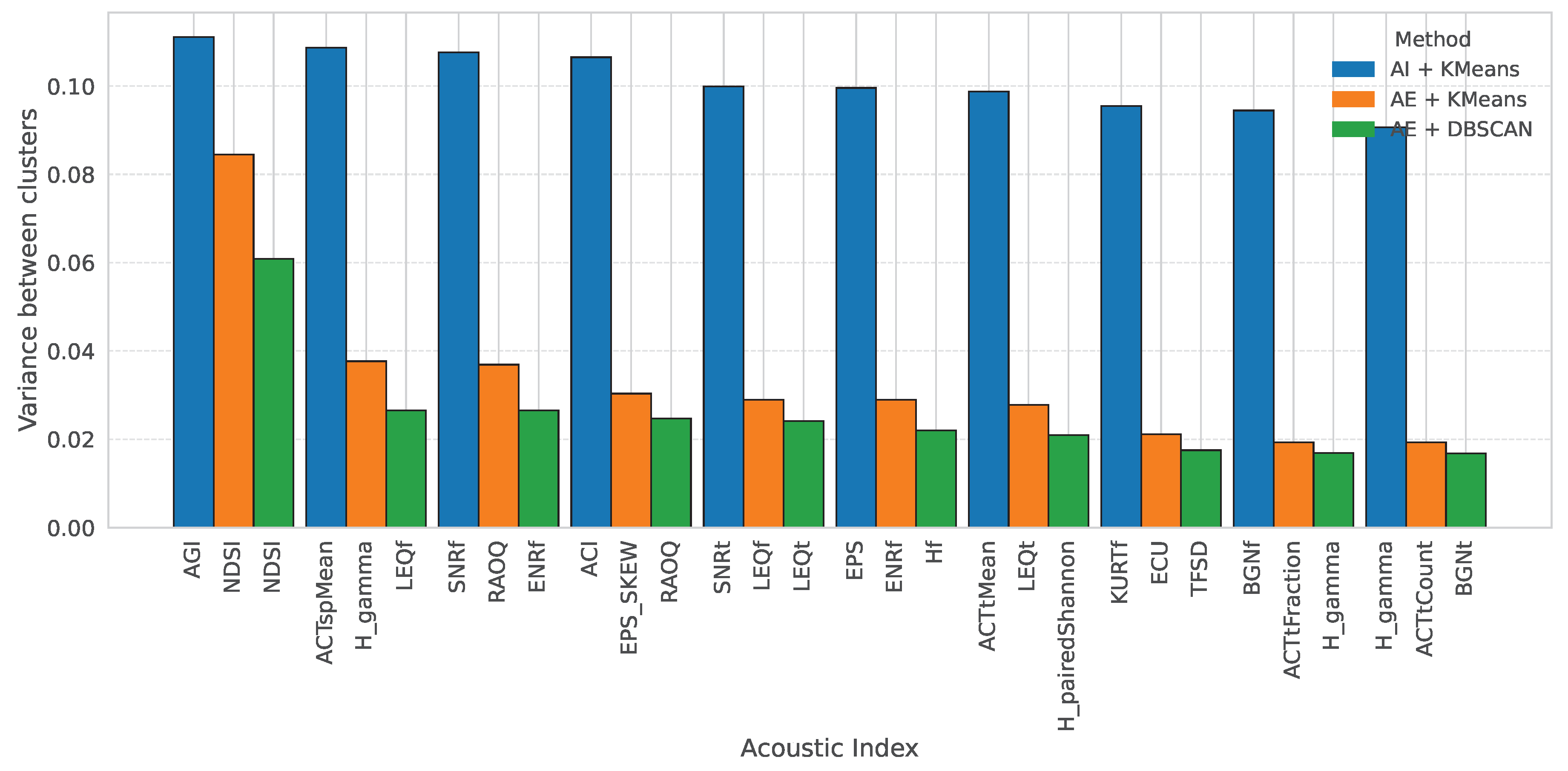

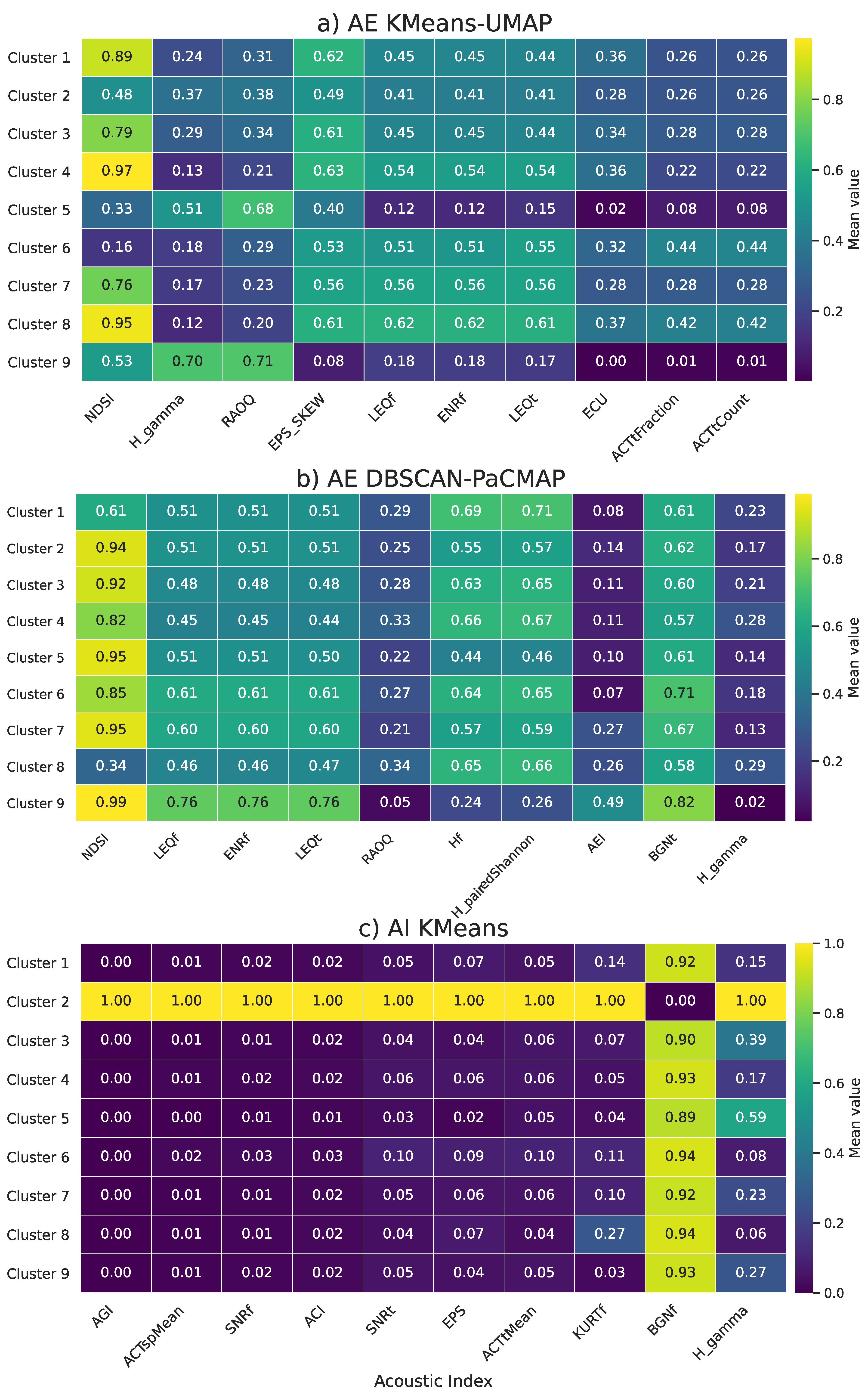

To gain deeper insight into the ecological patterns captured by each method, we computed acoustic indices for all audio samples within each cluster. We then calculated the variance of each index among clusters to identify which indices have more variability between the groups. This allowed us to determine which acoustic indices are most relevant for characterizing and discriminating between soundscape clusters.

Figure 12 shows the acoustic indices with the highest variance for both autoencoder-based methods and the baseline method using purely acoustic indices, facilitating a direct comparison. For autoencoder based methods, the Normalized Difference Soundscape Index (NDSI) showed the highest inter-cluster variance. The NDSI quantifies the balance between biological and anthropogenic acoustic activity by comparing the energy in biophonic frequency bands (typically 2–8 kHz) to that in anthropophonic bands (1–2 kHz) [

47,

48]. Higher values indicate a dominance of natural sound sources over human-made noise, making it a strong indicator of ecological integrity. In contrast, for the baseline clustering derived from acoustic indices, the Acoustic General Index (AGI) exhibited the highest variance across clusters. The AGI is a composite metric that integrates spectral entropy, signal-to-noise ratio, and frequency content to describe the overall complexity and richness of the soundscape [

49]. These results highlight how different features—whether derived from deep embeddings or direct signal-based descriptors—emphasize the distinct ecological dimensions of the acoustic environment.

Another index that consistently appeared among the highest variance features across all three approaches was the Gamma Spectral Entropy (). This metric captures spectral entropy by modeling the energy distribution of the signal using a gamma function, effectively reflecting the complexity and irregularity of the frequency spectrum. Similar to the Normalized Difference Soundscape Index (NDSI), which measures the proportion of biophonic to anthropophonic activity, is sensitive to acoustic heterogeneity, and higher values typically indicate a richer, more diverse soundscape. The presence of this index in both deep learning–based and traditional approaches suggests that it plays a key role in capturing ecologically meaningful variations in soundscape composition.

Additionally, we observed specifically in the autoencoder-based clustering using DBSCAN that there is an influence of highly correlated features. For example, pairs such as the Low-Frequency Equivalent Level (LEQf) and the Total Equivalent Level (LEQt), which both measure sound energy at different temporal or spectral resolutions, or entropy-based indices like the Frequency Entropy () and Paired Shannon Entropy (), often contributed simultaneously to inter-cluster variability. This redundancy may affect the sensitivity of density-based clustering methods, as DBSCAN is influenced by local density estimates that can be biased by overlapping information in the feature space. These findings underscore the importance of considering feature redundancy and correlation when combining handcrafted ecoacoustic metrics with unsupervised learning techniques.

Moreover,

Figure 13 shows a heatmap of the mean values of the top ten acoustic indices for each cluster and method. While the previous analysis identified the indices with the highest contribution to the clustering process, this visualization helps to understand how these indices vary across clusters and methods, providing more context for interpreting cluster composition. For example, the Normalized Difference Soundscape Index (NDSI) shows high values in most clusters, especially under the AE DBSCAN-PaCMAP method. This suggests that many of these clusters represent soundscapes with a high proportion of biophonic activity relative to anthropophonic noise. In contrast, the AI KMeans method shows generally low values for most indices across all clusters, except for Cluster 2, where all indices reach high values. This pattern indicates that Cluster 2 is acoustically distinct and may represent a particular environmental condition.

Another relevant observation is related to the Background Noise Floor (BGNf), which remains high in most clusters but is notably low in Cluster 2. This suggests a significant difference in ambient noise levels for this cluster compared to the rest, which could be linked to differences in habitat type or human presence (principal indices description is shown in the Appendix A, Table A1). In general, these heat maps complement the variance-based analysis by showing how each index behaves in clusters, helping to interpret the ecological meaning of each group more clearly. Together, these heat maps offer an ecologically interpretable perspective on cluster composition, facilitating the identification of acoustic signatures that characterize each group and strengthening the utility of unsupervised learning in landscape-scale eco-acoustic monitoring.

Soundscape Connectivity Based on Audio Features

In this work, we approach the concept of connectivity from an engineering perspective, identifying links between recording sites based on the similarity of their acoustic profiles. Locations with comparable acoustic behavior are assumed to share key ecological and environmental characteristics, such as vegetation structure, species assemblages, or proximity to hydrological elements detected acoustically through geophonic signatures. Although landscape connectivity is traditionally defined as the degree to which the landscape facilitates or impedes the movement of organisms among resource patches [

46,

50,

51], our interpretation focuses on spatial consistency and propagation of acoustic patterns, rather than species dispersal.

Recent studies have highlighted the ecological relevance of acoustic environments as indicators of landscape integrity [

46,

52], and have shown that soundscapes can encode meaningful ecological information, including structural attributes, biological diversity, and environmental processes [

41,

53]. From this perspective, the acoustic similarity between sites can reflect not only shared biological or physical sources of sound but also deeper characteristics in the ecological configuration. This approach complements classical notions of connectivity and enhances the potential of acoustic monitoring by exposing spatial patterns within the biophonic, geophonic, and anthropophonic components of the soundscape [

45,

54,

55].

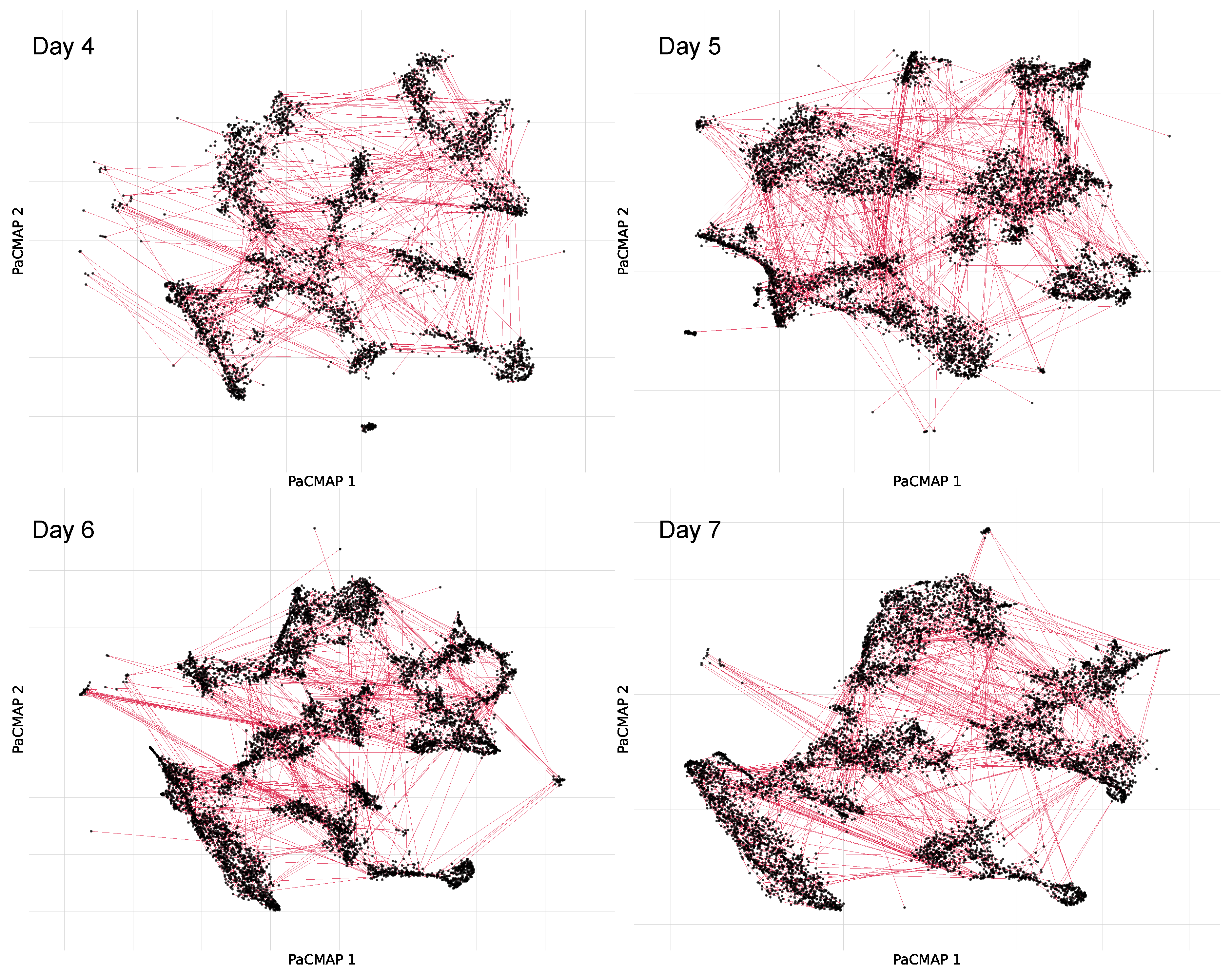

For the analysis of acoustic pattern similarity using connectivity, we leveraged the high-dimensional embedding space generated by the autoencoder. In contrast to previous approaches that built graphs directly in low-dimensional space, here we constructed connectivity graphs based on nearest neighbors in the original feature space to preserve the intrinsic structure of the learned acoustic representations. Using this high-dimensional representation, we constructed an undirected graph by connecting each node to its nearest neighbor using a k-nearest neighbor graph (), effectively capturing the most acoustically similar recordings. This process was performed both for each individual day and for the entire dataset, allowing us to assess connectivity patterns at multiple temporal scales.

To visualize these relationships, we applied PaCMAP with the parameter configuration previously described, projecting the embeddings into a two-dimensional space. The resulting graph layout retained the connectivity structure derived from the original high-dimensional space while enabling spatial interpretation of similarity patterns across samples. This strategy allows us to explore acoustic connectivity with greater fidelity, as the graph is informed by the full representation learned by the neural network, while the PaCMAP projection provides an interpretable spatial embedding for visualization and pattern recognition.

Figure 14 shows the connectivity graphs for a selection of sample days, illustrating how acoustically similar recordings are linked based on their proximity in the original autoencoder feature space.

However, since interpreting the connections directly in the feature space can be difficult and would require inspecting individual samples to understand their links, we used these connections as a proxy to explore how acoustic similarity is reflected in the physical space of the Rey Zamuro reserve.

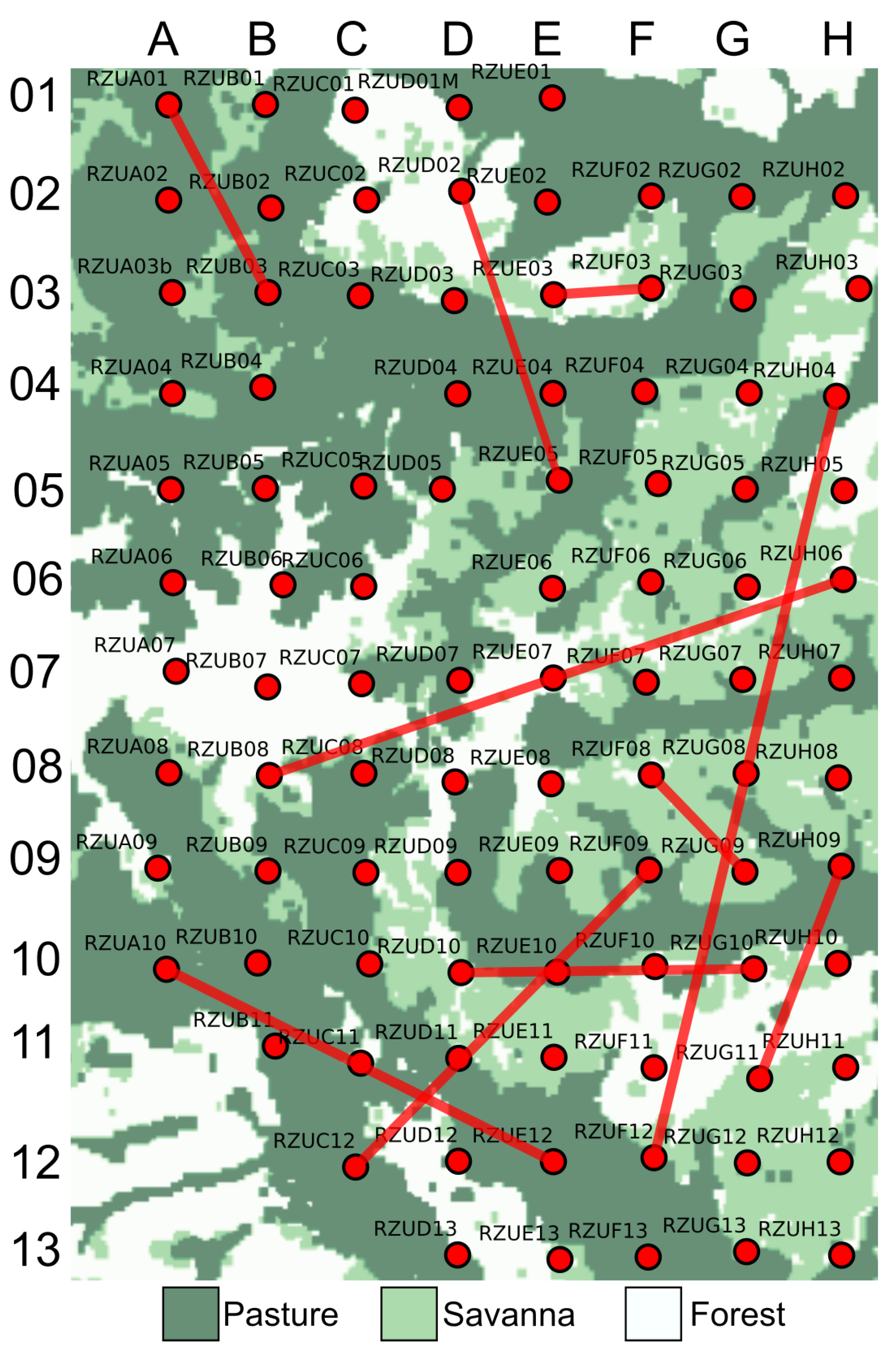

Figure 15 shows the resulting graph, where recorder locations are represented as nodes and edges indicate acoustic similarity derived from the high-dimensional autoencoder space. The background includes the land cover classification, showing forest, pasture, and savanna, to provide ecological context.

As expected, many connections appear between nearby sites with similar land cover types, confirming the method’s ability to capture ecologically consistent acoustic patterns. However, we also observed several long-range connections between sites with the same cover type, suggesting that this graph-based representation is particularly effective in identifying spatial relationships that are not constrained by geographic proximity. This offers a complementary perspective to the interpolation-based approach presented earlier, which, while useful for highlighting general spatial trends, may introduce interpretive bias by emphasizing local continuity. In contrast, the connectivity analysis reveals both local and distant associations, providing a more direct view of the underlying acoustic structure. Furthermore, connections between dissimilar land cover types (such as the link between RZUA10 and RZUE12, and RZUH04 and RZUF12) illustrate that acoustically similar conditions can emerge across heterogeneous environments, underscoring the capacity of this method to uncover nuanced ecological dynamics across the landscape.

4. Conclusions

This work presents an unsupervised framework for soundscape analysis that integrates deep representation learning, dimensionality reduction, clustering, and spatial exploration. One of the key aspects of our approach was the careful and systematic optimization of parameters for both projection and clustering methods. Rather than relying on default values, we performed an extensive grid search to evaluate the impact of hyperparameters on the structure and interpretability of the results, an often overlooked step in ecoacoustic studies.

A particularly striking result was the emergence of nine clusters across both unsupervised pipelines (UMAP + KMeans and PaCMAP + DBSCAN), despite their methodological differences. This consistency suggests that the acoustic landscape in our study area is structured around diverse and well-defined patterns. Moreover, the number of clusters exceeds what could be expected from an analysis based solely on spatial, temporal, or spectral properties, indicating that our method captures a combination of multiple ecological and acoustic dimensions. We also proposed the use of spatial interpolation to map the distribution of clusters across the landscape. Although interpolation in soundscape studies can be controversial, our use of a grid-based sampling design provided the spatial consistency needed to support this technique and interpret longitudinal and latitudinal trends in acoustic variation. The inclusion of acoustic indices further enhanced our ability to interpret the clusters, showing that macro-scale landscape patterns are associated with ecologically relevant features. Notably, indices such as the Normalized Difference Soundscape Index (NDSI) and the H-Gamma appeared consistently across methods and are linked to biophonic richness and biodiversity gradients.

On the other hand, we recognize the value that more detailed metadata or even species-level annotations would bring to the interpretation and validation of our results. However, the datasets available for this study do not include such granular ecological labels. This limitation is, in fact, a common challenge in ecoacoustic research and one of the key motivations for developing and evaluating methodologies that leverage general metadata (such as time, location, and land cover) since they are consistently available on ecoacoustic datasets. This approach also promotes broader applicability and reproducibility on different ecological contexts. Nonetheless, the proposed methodology and processing pipeline are openly available and designed to be adaptable. We encourage researchers working with more detailed ecological datasets to build upon our framework. Such collaborations may enhance the applicability and validation of the approach, contributing to the development of more robust ecoacoustic analysis methods across diverse environmental contexts.

Finally, we introduced a novel method for analyzing acoustic connectivity, transitioning from similarity graphs in a high-dimensional feature space to interpretable spatial connections among physical recording sites. This allowed us to detect not only local relationships but also long-range acoustic similarities that might reflect ecological structure or shared sound sources. By bridging engineering-based techniques with ecological interpretation, this approach opens opportunities for interdisciplinary analysis and supports the development of scalable tools for landscape monitoring.

Overall, our findings demonstrate that unsupervised deep learning, when combined with thoughtful design and multi-layered analysis, can offer powerful insights into the organization of complex soundscapes. This methodology contributes to the growing need for data-driven, label-free approaches in ecoacoustics and provides a foundation for future work in biodiversity assessment, habitat monitoring, and conservation planning.

Author Contributions

Conceptualization, D.A.N.-M., J.D.M.-V., and L.D.-M.; methodology, D.A.N.-M., J.D.M.-V., and L.D.-M.; software, D.A.N.-M.; validation, D.A.N.-M., J.D.M.-V., and L.D.-M.; formal analysis, D.A.N.-M., J.D.M.-V., and L.D.-M.; investigation, D.A.N.-M., and L.D.-M.; resources, D.A.N.-M, J.D.M.-V., and L.D.-M.; writing—original draft preparation, D.A.N.-M. and L.D.-M.; writing—review and editing, D.A.N.-M.; visualization, D.A.N.-M; supervision, J.D.M.-V., and L.D.-M.; project administration, J.D.M.-V., and L.D.-M. All authors have read and agreed to the published version of the manuscript.

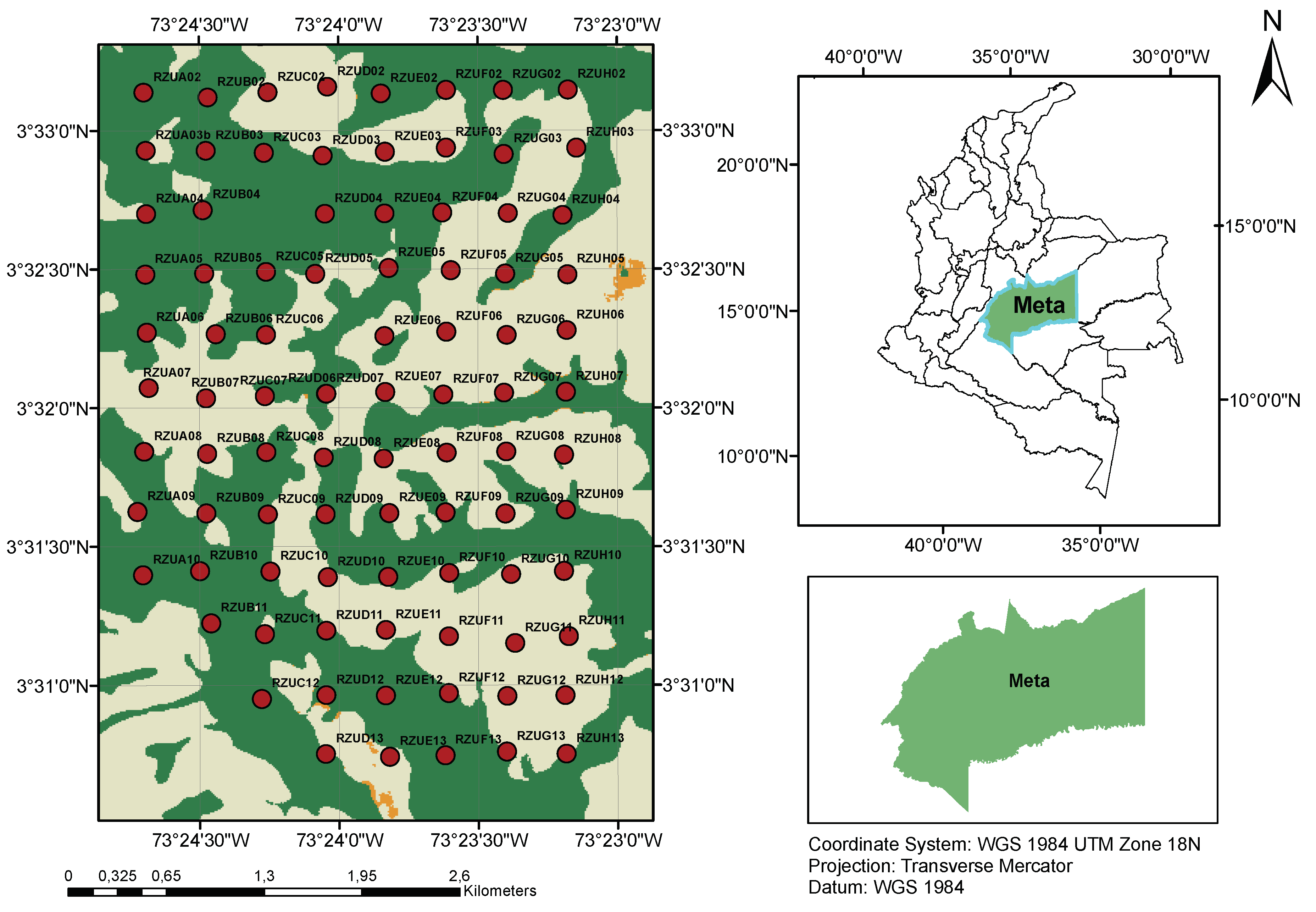

Figure 1.

Geographic location of the Rey Zamuro and Matarredonda Private Nature Reserve showing the distribution of 94 AudioMoth recording devices across different habitat types. The map illustrates the sampling grid with 200m spacing between devices across forest, savanna, and pasture areas.

Figure 1.

Geographic location of the Rey Zamuro and Matarredonda Private Nature Reserve showing the distribution of 94 AudioMoth recording devices across different habitat types. The map illustrates the sampling grid with 200m spacing between devices across forest, savanna, and pasture areas.

Figure 2.

Audio processing pipeline before feature extraction. The original audio recordings are downsampled from 192,000 Hz to 22,050 Hz. Each recording is then segmented into five 12-second segments. For each segment, a spectrogram is computed. Batches of these spectrograms are subsequently fed into the autoencoder network.

Figure 2.

Audio processing pipeline before feature extraction. The original audio recordings are downsampled from 192,000 Hz to 22,050 Hz. Each recording is then segmented into five 12-second segments. For each segment, a spectrogram is computed. Batches of these spectrograms are subsequently fed into the autoencoder network.

Figure 3.

Performance comparison of feature extraction methods on habitat classification across thirteen sampling days. The boxplots represent the distribution of (a) accuracy, (b) F1-score, and (c) recall obtained using Random Forest classifiers trained on features extracted by acoustic indices, VGGish, and autoencoder embeddings. The autoencoder consistently outperforms the other methods, demonstrating higher median scores and lower variability, indicating improved representational quality and robustness.

Figure 3.

Performance comparison of feature extraction methods on habitat classification across thirteen sampling days. The boxplots represent the distribution of (a) accuracy, (b) F1-score, and (c) recall obtained using Random Forest classifiers trained on features extracted by acoustic indices, VGGish, and autoencoder embeddings. The autoencoder consistently outperforms the other methods, demonstrating higher median scores and lower variability, indicating improved representational quality and robustness.

Figure 4.

Performance comparison of feature extraction methods on time-of-day classification across thirteen sampling days. The classification was performed using three temporal segments: dawn, midday, and dusk. The boxplots represent the distribution of (a) accuracy, (b) F1-score, and (c) recall obtained using Random Forest classifiers trained on features extracted by acoustic indices, VGGish, and autoencoder embeddings.

Figure 4.

Performance comparison of feature extraction methods on time-of-day classification across thirteen sampling days. The classification was performed using three temporal segments: dawn, midday, and dusk. The boxplots represent the distribution of (a) accuracy, (b) F1-score, and (c) recall obtained using Random Forest classifiers trained on features extracted by acoustic indices, VGGish, and autoencoder embeddings.

Figure 5.

Overview of the proposed unsupervised methodology. Feature extraction is performed using autoencoders, with acoustic indices included as a comparative baseline. The resulting embedding spaces are projected using PaCMAP and UMAP. These projections are then clustered by combining DBSCAN with PaCMAP and KMeans with UMAP. Finally, the evaluation scheme comprises three main components: spatial pattern analysis, comparative analysis using acoustic indices, and a novel connectivity approach based on acoustic similarity.

Figure 5.

Overview of the proposed unsupervised methodology. Feature extraction is performed using autoencoders, with acoustic indices included as a comparative baseline. The resulting embedding spaces are projected using PaCMAP and UMAP. These projections are then clustered by combining DBSCAN with PaCMAP and KMeans with UMAP. Finally, the evaluation scheme comprises three main components: spatial pattern analysis, comparative analysis using acoustic indices, and a novel connectivity approach based on acoustic similarity.

Figure 6.

Evaluation and visualization of low-dimensional embeddings generated by UMAP and PaCMAP. (a) Trustworthiness scores computed for a grid of parameter configurations in each method. The highest score for UMAP was achieved with 75 neighbors and a minimum distance of 0.01. For PaCMAP, the optimal configuration was 75 neighbors, a mid-scale neighbor ratio of 1.5, and a far neighbor ratio of 20. (b) Final low-dimensional projections of the dataset (subsampled), using the selected hyperparameters.

Figure 6.

Evaluation and visualization of low-dimensional embeddings generated by UMAP and PaCMAP. (a) Trustworthiness scores computed for a grid of parameter configurations in each method. The highest score for UMAP was achieved with 75 neighbors and a minimum distance of 0.01. For PaCMAP, the optimal configuration was 75 neighbors, a mid-scale neighbor ratio of 1.5, and a far neighbor ratio of 20. (b) Final low-dimensional projections of the dataset (subsampled), using the selected hyperparameters.

Figure 7.

Summary of KMeans clustering performance across different numbers of clusters using internal validation metrics. Each subplot shows a boxplot distribution of scores among days for a given number of clusters. (a) Silhouette Coefficient, where higher values indicate more cohesive and well-separated clusters; (b) Davies–Bouldin Index, where lower values indicate better clustering quality; and (c) Calinski–Harabasz Index, where higher values suggest better-defined cluster structure.

Figure 7.

Summary of KMeans clustering performance across different numbers of clusters using internal validation metrics. Each subplot shows a boxplot distribution of scores among days for a given number of clusters. (a) Silhouette Coefficient, where higher values indicate more cohesive and well-separated clusters; (b) Davies–Bouldin Index, where lower values indicate better clustering quality; and (c) Calinski–Harabasz Index, where higher values suggest better-defined cluster structure.

Figure 8.

Reachability plots for PaCMAP-embedded data across different sampling days.

Figure 8.

Reachability plots for PaCMAP-embedded data across different sampling days.

Figure 9.

Spatial distribution of acoustic clusters across the Zamuro Natural Reserve using PaCMAP projection and DBSCAN clustering. Each panel represents a heat map corresponding to one of the nine clusters. Color intensity indicates the relative number of audio samples associated with that cluster at each recording site.

Figure 9.

Spatial distribution of acoustic clusters across the Zamuro Natural Reserve using PaCMAP projection and DBSCAN clustering. Each panel represents a heat map corresponding to one of the nine clusters. Color intensity indicates the relative number of audio samples associated with that cluster at each recording site.

Figure 10.

Comparison of spatial distributions between the different clustering pipelines. Red boxes highlight areas where spatial overlap or similarity is observed between methods.

Figure 10.

Comparison of spatial distributions between the different clustering pipelines. Red boxes highlight areas where spatial overlap or similarity is observed between methods.

Figure 11.

Spatial distributions and decoded spectrograms for four selected clusters derived from UMAP projections and autoencoder embeddings. Each panel shows the heat map of cluster presence across the sampling grid (left) and the corresponding representative spectrogram reconstructed from the autoencoder latent space (right). This decoding enables the identification of dominant frequency bands associated with each cluster, facilitating the interpretation of their ecological and acoustic significance.

Figure 11.

Spatial distributions and decoded spectrograms for four selected clusters derived from UMAP projections and autoencoder embeddings. Each panel shows the heat map of cluster presence across the sampling grid (left) and the corresponding representative spectrogram reconstructed from the autoencoder latent space (right). This decoding enables the identification of dominant frequency bands associated with each cluster, facilitating the interpretation of their ecological and acoustic significance.

Figure 12.

Comparison of the acoustic indices with the highest inter cluster variance for both autoencoder based clustering (left) and baseline clustering using acoustic indices (right).

Figure 12.

Comparison of the acoustic indices with the highest inter cluster variance for both autoencoder based clustering (left) and baseline clustering using acoustic indices (right).

Figure 13.

Mean values of the top 10 acoustic indices per cluster for each analysis methodology.

Figure 13.

Mean values of the top 10 acoustic indices per cluster for each analysis methodology.

Figure 14.

Examples of acoustic connectivity graphs for Days 4, 5, 6, and 7, constructed using PaCMAP projections and a threshold-based nearest neighbor approach.

Figure 14.

Examples of acoustic connectivity graphs for Days 4, 5, 6, and 7, constructed using PaCMAP projections and a threshold-based nearest neighbor approach.

Figure 15.

Acoustic connectivity graph projected onto the physical layout of the Rey Zamuro reserve. Nodes represent recorder locations, and edges indicate acoustic similarity based on high-dimensional autoencoder embeddings. The background layer displays land cover types (forest, pasture, and savanna), providing ecological context. While several connections occur between nearby sites with similar cover types, long-range links are also present, revealing spatial acoustic patterns that extend beyond geographic proximity. This graph-based approach complements interpolation methods by highlighting both local and distant acoustic relationships across the landscape.

Figure 15.

Acoustic connectivity graph projected onto the physical layout of the Rey Zamuro reserve. Nodes represent recorder locations, and edges indicate acoustic similarity based on high-dimensional autoencoder embeddings. The background layer displays land cover types (forest, pasture, and savanna), providing ecological context. While several connections occur between nearby sites with similar cover types, long-range links are also present, revealing spatial acoustic patterns that extend beyond geographic proximity. This graph-based approach complements interpolation methods by highlighting both local and distant acoustic relationships across the landscape.

Table 1.

Statistical test results for performance metrics using cover types as labels in a multiclass classification approach. Significant p-values are marked as * (), ** (), and *** ().

Table 1.

Statistical test results for performance metrics using cover types as labels in a multiclass classification approach. Significant p-values are marked as * (), ** (), and *** ().

| Metric |

Test |

Comparison |

Statistic |

p-value |

| Accuracy |

Friedman |

AE, VGG, AI |

20.182 |

0.00004***

|

| Accuracy |

Wilcoxon |

AE vs VGG |

0.000 |

0.0010***

|

| Accuracy |

Wilcoxon |

AE vs AI |

1.000 |

0.0020**

|

| Accuracy |

Wilcoxon |

VGG vs AI |

0.000 |

0.0010***

|

| F1 Score |

Friedman |

AE, VGG, AI |

20.182 |

0.00004***

|

| F1 Score |

Wilcoxon |

AE vs VGG |

0.000 |

0.0010***

|

| F1 Score |

Wilcoxon |

AE vs AI |

1.000 |

0.0020**

|

| F1 Score |

Wilcoxon |

VGG vs AI |

0.000 |

0.0010***

|

| Recall |

Friedman |

AE, VGG, AI |

20.182 |

0.00004***

|

| Recall |

Wilcoxon |

AE vs VGG |

0.000 |

0.0010***

|

| Recall |

Wilcoxon |

AE vs AI |

1.000 |

0.0020**

|

| Recall |

Wilcoxon |

VGG vs AI |

0.000 |

0.0010***

|

Table 2.

Statistical test results for performance metrics using three range of hours as labels. Significant p-values are marked as * (), ** (), and *** ().

Table 2.

Statistical test results for performance metrics using three range of hours as labels. Significant p-values are marked as * (), ** (), and *** ().

| Metric |

Test |

Comparison |

Statistic |

p-value |

| Accuracy |

Friedman |

AE, VGG, AI |

16.909 |

0.0002***

|

| Accuracy |

Wilcoxon |

AE vs VGG |

0.000 |

0.0010***

|

| Accuracy |

Wilcoxon |

AE vs AI |

0.000 |

0.0010***

|

| Accuracy |

Wilcoxon |

VGG vs AI |

20.000 |

0.2783 |

| F1 Score |

Friedman |

AE, VGG, AI |

15.273 |

0.0005***

|

| F1 Score |

Wilcoxon |

AE vs VGG |

0.000 |

0.0010***

|

| F1 Score |

Wilcoxon |

AE vs AI |

1.000 |

0.0020**

|

| F1 Score |

Wilcoxon |

VGG vs AI |

13.000 |

0.0830 |

| Recall |

Friedman |

AE, VGG, AI |

14.364 |

0.0008***

|

| Recall |

Wilcoxon |

AE vs VGG |

0.000 |

0.0010***

|

| Recall |

Wilcoxon |

AE vs AI |

1.000 |

0.0020**

|

| Recall |

Wilcoxon |

VGG vs AI |

18.000 |

0.2061 |