1. Introduction

The emergence of Generative Artificial Intelligence (GenAI) has created a pivotal moment in higher education, fundamentally challenging traditional teaching and assessment methods (Lee et al., 2024). Educators across disciplines face the critical task of incorporating GenAI into their teaching while ensuring students develop essential critical thinking and analytical skills (Ng et al., 2025). This work addresses that challenge by introducing the Cognitive-AI Synergy Framework (CASF), a structured approach that aligns GenAI integration with students' cognitive development stages.

The pedagogical landscape has shifted dramatically with GenAI tools like ChatGPT, Claude, and Gemini (Sharma, 2024). These technologies offer unprecedented capabilities in content generation, problem-solving assistance, and personalized learning support (Tajik, 2024). However, their integration into higher education requires careful consideration of how they affect learning processes, skill development, and assessment methods (Michel-Villarreal et al., 2023). Current approaches often lack systematic frameworks for incorporating these tools in ways that enhance rather than substitute critical thinking and deep learning (Wang et al., 2024).

Two existing frameworks offer valuable insights but reveal significant gaps in addressing the comprehensive needs of higher education. Julián De Zubiría's dialogic pedagogical model (de Zubiría Samper, 2006) provides a structured pathway for cognitive development, progressing from basic knowledge acquisition to metacognitive mastery. This model emphasizes the importance of balanced learning approaches that combine guided instruction with independent thinking (de Zubiría Samper, 2021). Meanwhile, the Artificial Intelligence Assessment Scale (AIAS), developed by Perkins et al. (2024), outlines various levels of AI involvement in educational assessment, ranging from no AI use to full AI collaboration. However, neither framework fully addresses the complex interplay between cognitive development and AI integration in higher education.

We propose the Cognitive-AI Synergy Framework (CASF) to bridge these gaps. This framework synthesizes De Zubiría's cognitive development stages with the AIAS assessment levels, creating a comprehensive approach for integrating GenAI in higher education. CASF provides educators with structured guidance for implementing GenAI tools while fostering students' cognitive growth and maintaining academic rigor. To facilitate practical implementation, we present the CASF Implementation Assistant, developed in n8n. The assistant features structured conversation flow management, contextual understanding, and consistent recommendations based on students' cognitive levels, giving educators tailored guidance for integrating GenAI based on their specific teaching contexts and students' needs.

GenAI has profoundly impacted higher education's pedagogical landscape, presenting challenges and opportunities. Lim et al. (2023) characterize this situation as a paradox, recognizing AI as both a potential disruptor and a facilitator of learning. Meanwhile, Eager and Brunton (2023) advocate for AI-augmented teaching and learning practices that complement rather than replace traditional methods. This concept is particularly important in education, where hands-on experience remains crucial despite technological advancements.

This paper makes several key contributions to educational technology and pedagogy. First, it presents a theoretically grounded framework for GenAI integration that prioritizes cognitive development. Second, it introduces a practical tool that helps educators implement this framework effectively, detailing its evolution from a simple assistant to a structured agent with improved capabilities. Third, it provides comprehensive recommendations for addressing potential challenges in implementation, including ethical considerations and assessment strategies aligned with the concerns raised by Crawford et al. (2023) regarding the responsible use of AI in education.

The following sections detail the conceptual foundations of CASF, explain its structure and implementation strategies, and demonstrate how the CASF Implementation Assistant supports educators in practice. We also address ethical considerations and outline directions for future research, aiming to establish a foundation for thoughtful and effective GenAI integration in higher education.

2. Conceptual Background

Integrating GenAI in higher education requires a solid theoretical foundation that acknowledges established learning theories and emerging technological frameworks. This section presents the key theoretical pillars that underpin our Cognitive-AI Synergy Framework (CASF), beginning with fundamental learning theories and progressing to contemporary AI assessment models.

2.1. Introducing De Zubiría's Dialogic Pedagogical Model and Its Theoretical Foundations

Our CASF framework is built upon Julián De Zubiría's dialogic pedagogical model, a comprehensive approach to understanding cognitive development in educational contexts. Before exploring De Zubiría's model in detail, it is vital to briefly examine the foundational learning theories that underpin this model, as they provide crucial context for understanding both De Zubiría's work and our proposed framework for integrating GenAI in higher education.

De Zubiría's model draws upon several influential learning theories. Vygotsky's sociocultural theory, particularly his concept of the Zone of Proximal Development (ZPD), informs De Zubiría's understanding of how learners progress through developmental stages with appropriate support (Vygotsky & Cole, 1978). The ZPD—the gap between what learners can achieve independently and with guidance—is especially relevant when considering how AI tools might serve as dynamic scaffolding agents in education. Similarly, Anderson and Krathwohl's revised Bloom's Taxonomy (2001) provides a hierarchical model of cognitive processes that complements De Zubiría's cognitive stages. Their framework of remembering, understanding, applying, analyzing, evaluating, and creating aligns with the progressive nature of learning that De Zubiría emphasizes.

Constructivist learning theory, particularly Bruner's (1976) emphasis on active knowledge construction rather than passive reception, forms another pillar of De Zubiría's approach. This constructivist foundation is particularly relevant when considering GenAI's potential to enhance or undermine students' active participation in knowledge construction. Finally, Zimmerman's (2002) work on self-regulated learning offers insights into the metacognitive processes that become increasingly important in De Zubiría's higher cognitive stages. These metacognitive skills are crucial for students navigating AI-enhanced learning environments and deciding when and how to use these tools.

With these foundational theories in mind, we can now explore De Zubiría's dialogic pedagogical model in greater detail, understanding how it synthesizes these perspectives into a comprehensive framework for cognitive development.

2.2. De Zubiría's Dialogic Pedagogical Model in Detail

De Zubiría's dialogic pedagogical model emerges as a sophisticated synthesis of constructivist thought and traditional guided instruction, offering profound insights into cognitive development. This model transcends the conventional dichotomy between teacher-centered and student-centered approaches, proposing a nuanced understanding of how learning progresses through distinct developmental stages (de Zubiría Samper, 2006).

At its core, the dialogic model balances two fundamental educational approaches: self-structuring and hetero-structuring. Self-structuring is the process through which students actively construct their knowledge, aligning with constructivist principles and emphasizing learner autonomy. In contrast, hetero-structuring acknowledges the educator's essential role in guiding and facilitating the learning process, particularly when students encounter complex concepts that require expert scaffolding. This balanced approach creates what De Zubiría terms a "dialogic pedagogy," where learning emerges from the dynamic interaction between student autonomy and guided instruction (de Zubiría Samper, 2021).

The model's sophistication lies in its detailed articulation of cognitive development stages, each building upon the previous while maintaining distinct characteristics.

Table 1 illustrates these stages and their practical manifestations in higher education.

A distinguishing feature of De Zubiría's model is its recognition of cognitive development as fluid and non-linear. Students might demonstrate advanced capabilities in one domain while requiring more basic support in others, necessitating flexible pedagogical approaches that can adapt to varying developmental needs (de Zubiría Samper, 2006). This non-linear progression particularly resonates in higher education, where students often engage simultaneously with multiple disciplines and varying levels of complexity. As students progress through these cognitive stages, the balance between self-structured and guided learning shifts, with educators gradually transitioning from direct instruction to facilitating independent inquiry. This progression aligns with the increasing complexity of academic work and the growing need for autonomous learning skills.

Through its comprehensive framework, De Zubiría's model provides educators with a sophisticated tool for understanding and supporting cognitive development. It acknowledges the need for structured guidance in early learning stages and the importance of fostering independence as students progress to more advanced cognitive levels. This balanced approach offers valuable insights for designing educational experiences that effectively support students' cognitive growth while maintaining appropriate levels of guidance and support.

2.3. The Artificial Intelligence Assessment Scale (AIAS)

As GenAI continues to reshape educational landscapes, the Artificial Intelligence Assessment Scale (AIAS), developed by Perkins et al. (2024), emerges as an important framework for understanding and implementing AI in educational assessment. AIAS provides a structured approach to integrating AI tools while maintaining academic integrity and educational quality. This framework proves particularly valuable as institutions grapple with questions about meaningfully incorporating AI technologies into their assessment practices.

Table 2 presents the comprehensive AIAS framework, illustrating its progressive approach to AI integration in assessment:

AIAS's significance extends beyond mere categorization of AI use. The framework emphasizes the importance of purposeful AI integration, where each level of implementation serves specific pedagogical objectives. Recent studies indicate that when properly implemented, AIAS-aligned assessments can strengthen students' critical thinking and analytical capabilities (Furze et al., 2024; Roe et al., 2024).

The framework's ethical dimensions prove particularly noteworthy. At each level of AI integration, AIAS prompts educators to consider fundamental issues such as equity of access, algorithmic bias, and academic integrity. These considerations become increasingly important as AI tools grow more sophisticated and their role in education more prominent. The framework provides specific guidelines for maintaining transparency in AI use while ensuring that assessment practices remain fair and meaningful.

2.4. The Need for Synthesis

While both De Zubiría's dialogic model and the AIAS framework provide valuable insights for education, each has limitations when considered in isolation, particularly in the context of GenAI integration in higher education.

De Zubiría's model, despite its comprehensive approach to cognitive development, predates the widespread availability of GenAI tools. Consequently, it does not address how these powerful technologies might be integrated at different cognitive levels or affect the balance between self-structuring and hetero-structuring learning approaches. The model provides excellent guidance on cognitive progression but offers no specific direction on technology integration or the unique challenges posed by AI in educational contexts.

Conversely, the AIAS framework addresses AI integration in assessment but presents significant implementation challenges for many educators. The framework implicitly assumes a sophisticated understanding of AI tools' capabilities and limitations—knowledge that many professors, despite their pedagogical expertise, may not possess (Ghimire et al., 2024). This technical knowledge gap can create barriers to effective implementation and potentially lead to reluctance to adopt AI-enhanced assessment strategies. Additionally, while AIAS guides assessments, it doesn't address the broader pedagogical contexts in which they occur or how they align with students' cognitive development.

These complementary limitations highlight the need for a synthesized framework that combines De Zubiría's pedagogical insights with AIAS's practical approach to AI integration. Such a synthesis offers several advantages:

First, it leverages educators' pedagogical knowledge as the primary driver for AI integration decisions. Rather than requiring extensive AI expertise, this approach allows professors to make informed decisions based on their professional strengths: understanding student needs, cognitive development stages, and learning processes. This approach ensures that technology serves learning objectives rather than dictating them.

Second, it provides guidance for AI integration that aligns with recognized cognitive development stages, making implementation more intuitive for educators. By mapping AIAS levels to De Zubiría's cognitive stages, educators can more easily determine appropriate AI integration strategies based on their students' developmental needs.

Finally, it focuses on augmenting, rather than replacing, proven pedagogical practices. The synthesis transforms the technical-centric approach of AIAS into one grounded in pedagogical principles, ensuring that AI integration enhances rather than disrupts effective teaching and learning processes.

The following section presents our Cognitive-AI Synergy Framework (CASF) as a response to these challenges. It demonstrates how it synthesizes pedagogical expertise with AI integration capabilities to create a practical, educator-centered approach to GenAI implementation in higher education.

3. The Cognitive-AI Synergy Framework (CASF)

The Cognitive-AI Synergy Framework (CASF) provides a structured approach for aligning AI integration with students' natural cognitive development journey. Its fundamental premise is that pedagogical expertise—not technological proficiency—should drive educational decisions. By recognizing where students are in their cognitive development, educators can strategically incorporate AI tools to enhance rather than disrupt the learning process. This approach places students' developmental needs at the center, with AI as a supporting element rather than the focus of advancement.

3.1. Framework Structure and Implementation Stages

CASF recognizes that students naturally progress through distinct cognitive development stages as defined in De Zubiría's model. The framework guides appropriate AI integration at each stage, ensuring technology supports this developmental journey. The carefully calibrated approach, detailed in Table 3, helps educators match AI integration strategies to their students' current cognitive needs.

3.1.1. Foundational AI Integration Stage

Students focus on basic concept memorization and comprehension at the notional level—equivalent to Bloom's remembering and understanding. During this foundational stage of learning, students need to develop a strong fundamental understanding through their cognitive efforts. Here, independent work without AI is encouraged, while AI-assisted idea generation is permitted only under supervision. Key activities involve defining terms, identifying process components, and describing basic characteristics—tasks that build essential knowledge structures.

As students naturally progress to the propositional level (application in Bloom's taxonomy), they begin applying knowledge to solve straightforward problems. Their cognitive development now supports more complex interactions with content, allowing for thoughtfully expanded AI integration. The framework acknowledges this development by permitting the independent use of AI for idea generation while maintaining supervision for editing activities. Faculty roles remain directive, providing guidance on appropriate AI tool use and validating outputs regularly.

3.1.2. Conceptual and Formal Cognitive Levels

As students' cognitive development advances to the conceptual level, their analytical capabilities expand significantly. At this stage of their learning journey, they analyze, compare, and contrast different elements—cognitive skills that reflect more profound understanding and more sophisticated thinking. Students can now independently use AI for idea generation and feedback, while supervised use extends to editing and task completion. Key activities include comparing theories, analyzing cause-effect relationships, and classifying information.

When students reach the formal level in their cognitive development, they begin evaluating and justifying decisions based on specific criteria. This phase represents a significant advancement in critical thinking, as students now assess, critique, and defend positions with reasoned arguments. Students can independently use AI for idea generation, feedback, and editing, while supervised use extends to task completion. Faculty roles evolve from direct instruction to facilitation, supporting students' emerging evaluative capabilities.

3.1.3. Categorial and Metacognitive Cognitive Levels

When students attain the categorial level in their cognitive development, they have developed sophisticated abilities to create and synthesize across domains. At this advanced stage, they generate new ideas, design original solutions, and integrate knowledge from multiple perspectives. Students can independently engage with AI across all integration levels, including task completion—leveraging these tools while maintaining intellectual autonomy.

The metacognitive level represents the pinnacle of students' cognitive development journey. Here, they reflect on their learning processes, planning strategies, monitoring progress, and adjusting approaches as needed. This sophisticated self-awareness allows for the most advanced relationship with AI tools, not because the tools are more advanced, but because students' cognitive development now supports full, autonomous integration. At this level, students can independently navigate the entire spectrum of AI integration, using technology as a collaborative partner that enhances rather than replaces their metacognitive processes. Faculty transition to strategic mentorship roles, guiding students in maximizing the symbiotic relationship between human cognition and artificial intelligence while ensuring the technology serves the students' learning objectives rather than defining them.
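The stage-by-stage permissions described in Sections 3.1.1 to 3.1.3 can be summarized as a lookup table. The following is a minimal sketch, not part of the CASF Implementation Assistant: the names `AIUse`, `CASF_PERMISSIONS`, and `permission` are hypothetical, and the label "unspecified" is our own placeholder for combinations the text above does not address.

```python
from enum import Enum


class AIUse(Enum):
    """Kinds of AI involvement named in Section 3.1 (labels are illustrative)."""
    IDEA_GENERATION = "idea generation"
    FEEDBACK = "feedback"
    EDITING = "editing"
    TASK_COMPLETION = "task completion"


ALL_USES = frozenset(AIUse)

# cognitive level -> (uses allowed independently, uses allowed under supervision),
# transcribed from the prose of Sections 3.1.1 to 3.1.3.
CASF_PERMISSIONS = {
    "notional": (frozenset(), frozenset({AIUse.IDEA_GENERATION})),
    "propositional": (frozenset({AIUse.IDEA_GENERATION}),
                      frozenset({AIUse.EDITING})),
    "conceptual": (frozenset({AIUse.IDEA_GENERATION, AIUse.FEEDBACK}),
                   frozenset({AIUse.EDITING, AIUse.TASK_COMPLETION})),
    "formal": (frozenset({AIUse.IDEA_GENERATION, AIUse.FEEDBACK, AIUse.EDITING}),
               frozenset({AIUse.TASK_COMPLETION})),
    "categorial": (ALL_USES, frozenset()),
    "metacognitive": (ALL_USES, frozenset()),
}


def permission(level: str, use: AIUse) -> str:
    """Return 'independent', 'supervised', or 'unspecified' for a level and use."""
    independent, supervised = CASF_PERMISSIONS[level.lower()]
    if use in independent:
        return "independent"
    if use in supervised:
        return "supervised"
    # The section does not state a rule for this combination.
    return "unspecified"
```

For instance, `permission("formal", AIUse.TASK_COMPLETION)` yields "supervised", matching the description of the formal level above. Encoding the mapping as data rather than branching logic keeps it easy to revise if the framework's calibration changes.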

3.2. Quality Assurance and Ethical Considerations

Quality assurance within CASF emerges through a deliberate fusion of pedagogical integrity and technological innovation. The framework extends the Artificial Intelligence Assessment Scale (AIAS) developed by Perkins et al. (2024), adapting its structured approach to ethical AI implementation while upholding rigorous academic standards. By aligning AIAS levels with cognitive development stages, CASF creates a comprehensive system where technology amplifies rather than diminishes the learning journey.

Transparency functions as a foundational principle throughout the framework. Each integration level requires appropriate documentation of AI involvement, reflecting AIAS's commitment to academic integrity and authentic assessment. The form of this documentation varies with cognitive level, ranging from a simple acknowledgment of AI assistance at early stages to more detailed attribution methods at higher stages. The ethical dimensions of CASF address the complex considerations of equity, accessibility, and educational justice highlighted by Crawford et al. (2023). The framework prompts educators to consider crucial questions:

Do all students have equitable access to AI tools?

Might algorithmic biases affect learning outcomes?

How can assessment maintain integrity while incorporating technological assistance?

Lodge et al. (2023) emphasize that AI integration must be purposeful rather than permissive—a principle CASF embodies through its calibrated approach to technology involvement. The framework establishes clear boundaries at each developmental stage, recognizing that appropriate AI integration should evolve alongside students' growing cognitive capabilities.

3.3. Framework Adaptability and Ongoing Development

CASF's adaptability stems from its foundation in cognitive development principles that transcend specific technologies or disciplinary boundaries. While AI tools will inevitably evolve, the stages of cognitive development remain relatively constant, providing a stable foundation for ongoing refinement. The framework flexes to accommodate diverse educational contexts and changing technological capabilities while preserving its essential focus on supporting natural learning progression.

The framework's evolution continues through ongoing dialogue between cognitive science, pedagogical practice, and technological advancement. Future directions point toward an enhanced understanding of how AI can be optimally integrated at each developmental stage to support rather than supplant critical thinking, creativity, and metacognition. This dynamic approach ensures CASF remains responsive to educational needs while maintaining its fundamental commitment to student-centered cognitive development.

Through this comprehensive yet flexible approach, CASF empowers educators to leverage AI in service of their students' natural cognitive growth. The framework's success lies in recognizing that effective AI integration stems not from technological sophistication but from a deep understanding of how students learn and develop. This foundation in developmental principles ensures that AI enhancement serves genuine educational objectives, supporting students' cognitive journey while preserving the authenticity and integrity of the learning experience.

4. CASF Implementation Assistant

4.1. Technical Design and Architecture

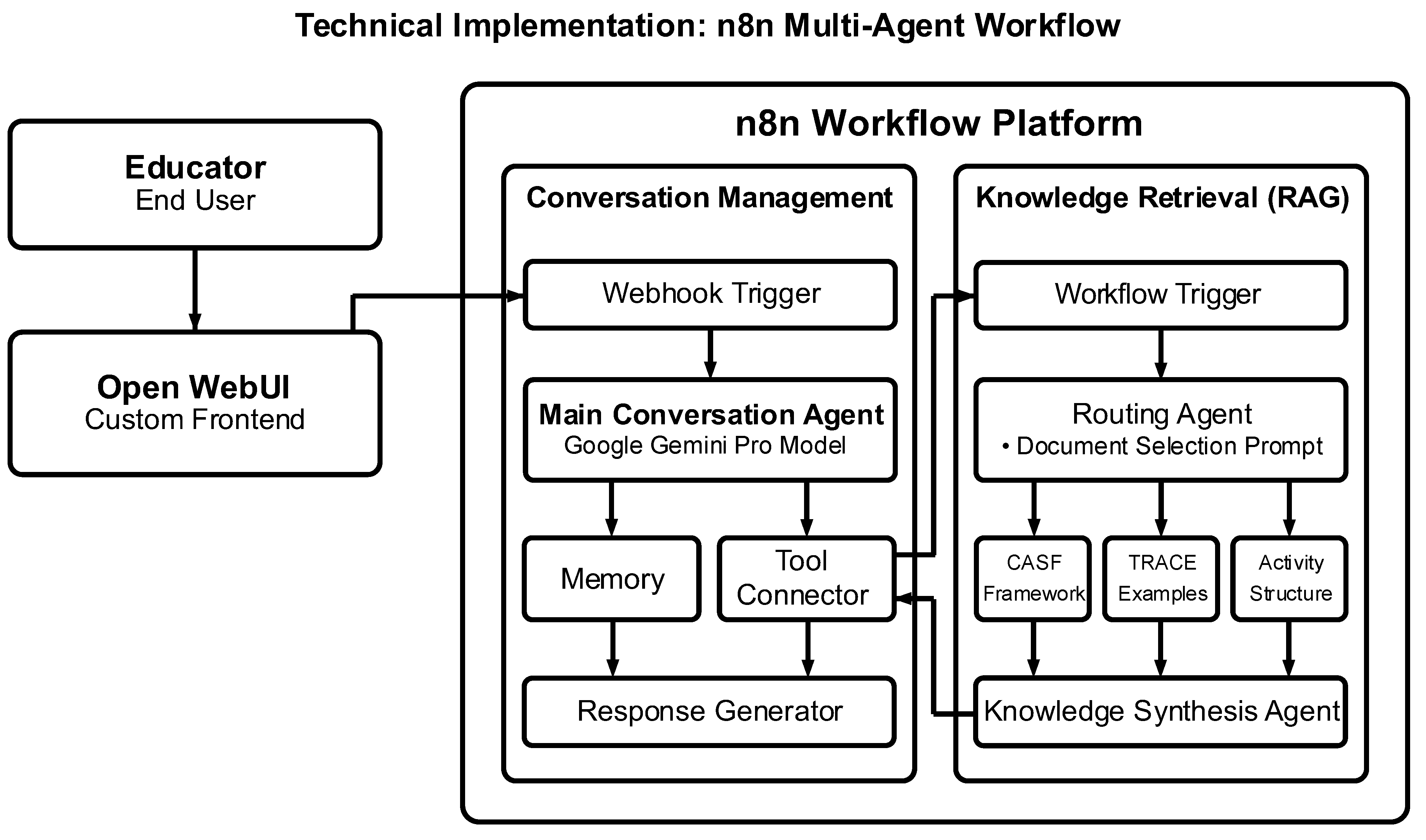

The CASF Implementation Assistant employs a multi-agent workflow architecture designed in n8n to guide educators through GenAI integration in their courses. Figure 1 illustrates this interconnected system, prioritizing practical usability and pedagogical alignment while maintaining academic rigor.

The architecture's primary component is the Conversation Management Workflow (Figure 1), which handles direct interaction with educators. Chat messages and webhook triggers initiate conversations through multiple entry points, connecting the system to a user-friendly interface based on a customized Open WebUI fork (see https://www.openwebui.com). The core AI agent manages the conversational flow using a comprehensive system prompt that encodes the CASF principles and defines the agent's role: helping professors implement CASF in their courses. The prompt provides detailed guidance on the framework's cognitive levels, structured conversation flow, and implementation phases, from initial language selection through detailed activity development.

The assistant now runs a dual-model stack: a lightweight Gemini 1.5-Flash instance (models/gemini-1.5-flash-latest) routes document requests inside the Knowledge-Retrieval workflow, whereas a higher-capacity Gemini 2.5-Pro model (models/gemini-2.5-pro-exp-03-25) orchestrates the dialogue and pedagogical reasoning. This separation keeps retrieval latency low while reserving the more capable model for synthesis and instructional guidance. A buffer-window memory component (memoryBufferWindow) keyed to the educator’s sessionId retains only the most recent turns, enabling context-rich multi-turn conversations without persisting long-term personal data.
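The behavior of a session-keyed buffer-window memory can be illustrated in a few lines. This sketch is a plain-Python analogue for exposition only, not the n8n memoryBufferWindow node itself; the class name `BufferWindowMemory` and the default window size of five turns are assumptions, since the window length used in the assistant is not stated here.

```python
from collections import defaultdict, deque


class BufferWindowMemory:
    """Keeps only the most recent turns per session (illustrative analogue)."""

    def __init__(self, window_size: int = 5):  # window size is an assumption
        self.window_size = window_size
        # Each session gets a bounded deque; old turns fall off automatically.
        self._sessions = defaultdict(lambda: deque(maxlen=window_size))

    def add_turn(self, session_id: str, role: str, text: str) -> None:
        """Append one conversational turn to the session's window."""
        self._sessions[session_id].append((role, text))

    def context(self, session_id: str) -> list:
        """Return the retained turns for a session, oldest first."""
        return list(self._sessions.get(session_id, ()))
```

Because each window is bounded and held only in memory, earlier turns are discarded as the conversation proceeds, which is the property that avoids long-term retention of personal data.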

When educators interact with the system, the agent guides them through a structured sequence that collects course information, determines appropriate AI integration levels, and generates tailored educational activities aligned with students' cognitive development stages. The Knowledge Retrieval Workflow (Figure 1) supports this primary interface by providing on-demand access to specialized framework information. The conversation agent invokes this workflow when it requires more detailed knowledge. A routing agent first determines which knowledge source is most relevant to the current query, drawing on CASF_framework.txt (framework specification), ejemplos_TRACE.txt (TRACE(SE) prompt library), and estructura_actividad.txt (activity template). By limiting the router to these three vetted Google Drive documents, the system ensures that every retrieval is aligned with the CASF framework and pedagogically coherent. The retrieved document undergoes text extraction and embedding generation via Gemini 1.5-Flash; the resulting vectors are placed in an in-memory vector store (vectorStoreInMemory) for low-latency semantic similarity search, and the content is processed into actionable knowledge before the workflow returns the most relevant snippets to the Conversation Management agent. This allows the system to provide educators with relevant framework guidance without overwhelming them with raw documentation.
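The retrieval step described above (embed, store in memory, rank by similarity) can be sketched as follows. This is an illustrative stand-in under stated assumptions: the `embed` function uses simple word counts in place of a learned embedding model, and `InMemoryVectorStore` is a hypothetical class, not the n8n node of the similar name.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding (assumption): lowercase word counts, not a real model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Holds (chunk, vector) pairs in a list and ranks them on demand."""

    def __init__(self):
        self._items = []

    def add(self, chunk: str) -> None:
        self._items.append((chunk, embed(chunk)))

    def search(self, query: str, k: int = 2) -> list:
        """Return the k chunks most similar to the query."""
        q = embed(query)
        ranked = sorted(self._items, key=lambda it: cosine(q, it[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]
```

With a learned embedding in place of `embed`, the same ranking logic would match queries to passages even when their wording differs, which is the point of semantic search in this workflow.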

This distributed system leverages the strengths of n8n's node-based workflow architecture, allowing for modular functionality, specialized agent roles, and dynamic access to constantly updated knowledge sources. The architecture enables sophisticated conversation paths while maintaining a cohesive user experience through coordinated agent interactions that support pedagogical decision-making.

Supplementary Information A contains the complete JSON files for the agent implementation and related documents.

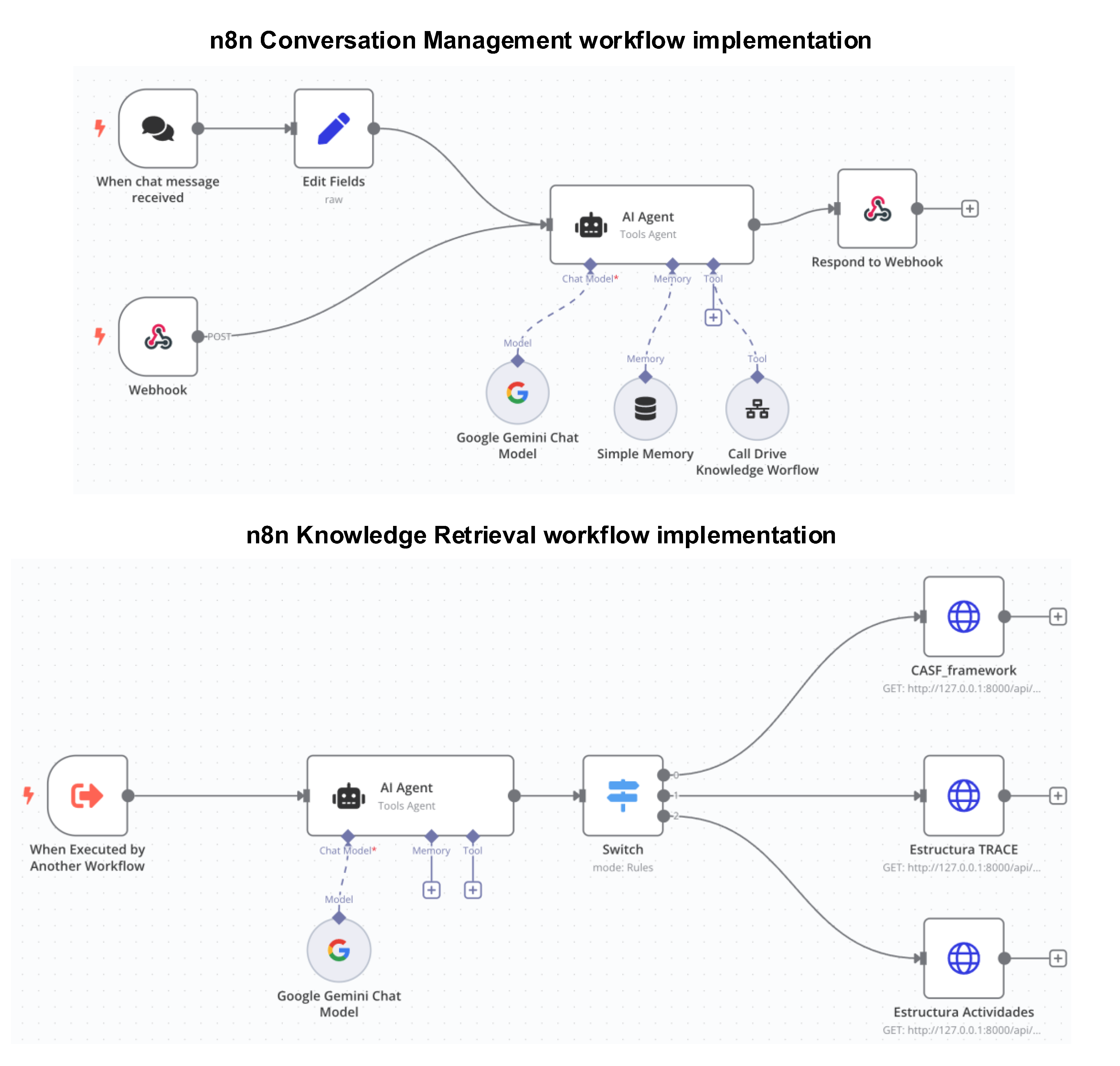

4.2. Development in n8n

The CASF Implementation Assistant was developed using n8n (https://n8n.io), an open-source automation platform released under a fair-code Sustainable Use License. n8n provides a low-code/no-code visual interface with robust capabilities for creating complex, interconnected systems through its node-based architecture while maintaining the flexibility required for academic implementations.

The implementation features three key technical innovations that enhance its educational value:

Multi-Agent Coordination: The system distributes tasks across specialized agents rather than relying on a single conversational agent. This architecture facilitates consulting different resources when developing teaching strategies, allowing for more targeted and efficient processing aligned with educational needs.

Dynamic Knowledge Retrieval: Unlike static prompt systems, the implementation selectively accesses specific framework documents based on conversation context. The system routes queries to the most relevant knowledge sources: CASF framework specifications, TRACE prompt libraries, and activity templates.

Vector-Based Semantic Search: Text embedding and vector storage enable the assistant to identify conceptually relevant information even when exact terminology varies. This capability is particularly valuable for supporting educators who may describe similar pedagogical concepts using different disciplinary language.
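The routing idea behind dynamic knowledge retrieval can be illustrated with a deliberately simple example. In the assistant, routing is performed by a language-model agent; the keyword overlap below is only a stand-in to show the input and output of that decision. The document names come from Section 4.1, whereas the keyword sets, the `route` function, and the fallback default are hypothetical.

```python
import re

# Document names are from Section 4.1; keyword sets are illustrative assumptions.
KNOWLEDGE_SOURCES = {
    "CASF_framework.txt": {"framework", "cognitive", "level", "stage", "casf", "aias"},
    "ejemplos_TRACE.txt": {"trace", "prompt", "prompts", "example"},
    "estructura_actividad.txt": {"activity", "template", "structure"},
}


def route(query: str, default: str = "CASF_framework.txt") -> str:
    """Pick the knowledge source whose keywords overlap most with the query.

    Ties resolve to the first source in dictionary order; queries with no
    overlap fall back to the default (an assumption of this sketch).
    """
    words = set(re.findall(r"[a-z]+", query.lower()))
    scores = {doc: len(words & kws) for doc, kws in KNOWLEDGE_SOURCES.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else default
```

Restricting the router's output to a fixed set of vetted documents, as here, is what keeps every retrieval within the framework's own materials.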

A critical implementation component is its integration with a custom frontend based on Open WebUI. This open-source interface was forked and adapted to create an accessible entry point for educators, abstracting the technical complexity of the multi-agent system behind a familiar chat interface. The webhook integration between n8n and the modified Open WebUI creates a seamless experience where educators can focus on pedagogical decisions rather than technical interactions.

The modular nature of n8n enabled an iterative development approach, with components being continuously refined based on testing feedback from education professionals. This flexibility proved particularly valuable as the framework itself evolved alongside its technical implementation, allowing technical and pedagogical developments to progress in tandem. Screenshots from the n8n interface for the two workflows can be found in Figure 2.

4.3. Key Features and Functionality

The assistant includes several sophisticated features specifically designed to support educational practice. The system's intelligent document consultation automatically determines which framework documents contain relevant information for a query, extracts the appropriate content, and synthesizes it into contextually relevant responses that maintain pedagogical coherence. This mimics how an expert educational consultant might draw on multiple resources when providing guidance. The conversation agent is designed to support multi-language interactions, making the CASF framework accessible to educators globally and supporting implementation across diverse educational contexts. This internationalization aspect is important for the framework's adoption in varied cultural and linguistic environments. Structured conversation flow guides educators through a well-designed interaction sequence, ensuring that all necessary pedagogical information is collected while maintaining a natural dialogue experience. This structured approach keeps the focus on crucial educational considerations that less systematic implementations might overlook.

The system performs sophisticated cognitive level analysis, assessing student readiness for different AI integration levels and recommending appropriate strategies that align with De Zubiría's model stages. This feature ensures that technology integration remains developmentally appropriate and pedagogically sound across different educational contexts.

TRACE(SE) prompt-generation capabilities create context-specific prompts that follow the full seven-element TRACE template (Task, Requirement, Action, Context, Example, Output Format, and Assessment); the “(SE)” suffix merely signals this bilingual TRACE adaptation rather than introducing extra elements. This uniform structure helps educators model effective AI-interaction strategies for their students.
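A uniform seven-element structure of this kind lends itself to a simple template. The sketch below is illustrative only: the element names follow the list above, but the class `TracePrompt`, its `render` method, and the "Label: value" layout are assumptions rather than the format stored in the assistant's prompt library.

```python
from dataclasses import dataclass, fields


@dataclass
class TracePrompt:
    """One field per TRACE(SE) element, in the order listed above."""
    task: str
    requirement: str
    action: str
    context: str
    example: str
    output_format: str
    assessment: str

    def render(self) -> str:
        """Render the prompt as one labeled line per element (assumed layout)."""
        lines = []
        for f in fields(self):
            label = f.name.replace("_", " ").title()
            lines.append(f"{label}: {getattr(self, f.name)}")
        return "\n".join(lines)
```

Making every element a required field means an incomplete prompt fails at construction time, which mirrors the consistency the template is intended to provide.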

For educators working with existing materials, the activity adaptation feature can analyze current approaches and recommend specific modifications to incorporate AI while preserving pedagogical integrity. This feature supports the evolution of established educational practices rather than requiring educators to start from scratch.

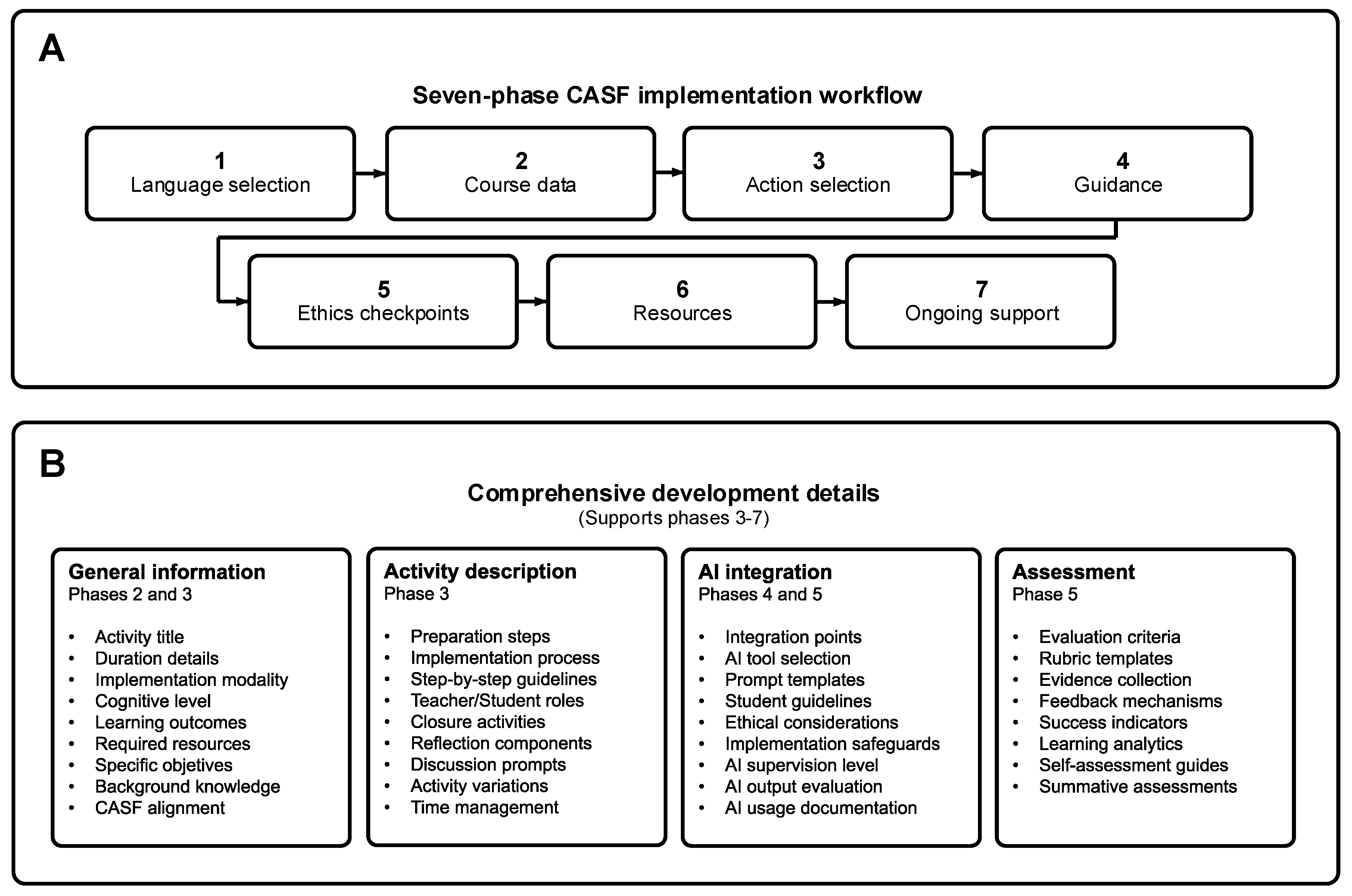

4.4. Implementation Process

The implementation follows a structured conversation flow with multiple phases to guide educators through the framework while maintaining pedagogical focus and academic rigor (Figure 3).

The process begins with language selection, where the system establishes the user's preferred language for interaction, supporting the international implementation of the framework. The educator then selects their primary goal from creating a new activity, adapting an existing one, learning about CASF, or understanding AI integration levels. This goal-oriented approach ensures the system provides relevant guidance rather than generic information. To develop activities, the system collects contextual information about the course, including the course name, academic level, student cognitive development stage based on De Zubiría's model, learning outcomes, specific topic focus, and teaching modality (face-to-face, hybrid, or virtual). This comprehensive understanding of the educational context is essential for appropriate framework application.

The system then provides framework-aligned recommendations based on the collected information. These recommendations include the appropriate AI integration level for the identified cognitive stage, TRACE prompts adapted to student capabilities, boundaries for AI use to maintain pedagogical integrity, and implementation strategies supporting the specified learning outcomes. This integration of pedagogical theory and practical application supports educators in making informed decisions.

In the detailed activity development phase, the system generates comprehensive activity plans or adaptation strategies with implementation guidance, including preparation steps, student instructions, assessment approaches, and ethical considerations. This level of detail facilitates the transition from theoretical understanding to classroom implementation. Educators can also request additional resources, including workshop content for faculty development, student prompts that model effective AI interaction, evaluation rubrics aligned with cognitive development levels, and implementation guides for different educational contexts.
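The phases described above can be summarized as a small state machine. The following Python sketch is an illustration under stated assumptions, not the system's implementation: the names `Phase`, `CourseContext`, `missing_fields`, and `next_phase` are hypothetical, and the rule that the flow stays in context collection until all six context items are provided is an assumption drawn from the description of the process.

```python
from dataclasses import dataclass, fields
from enum import Enum
from typing import List, Optional


class Phase(Enum):
    """Conversation phases, in the order described in the text."""
    LANGUAGE_SELECTION = 1
    GOAL_SELECTION = 2
    CONTEXT_COLLECTION = 3
    RECOMMENDATIONS = 4
    ACTIVITY_DEVELOPMENT = 5
    ADDITIONAL_RESOURCES = 6


@dataclass
class CourseContext:
    """The six context items the text says are collected (hypothetical names)."""
    course_name: Optional[str] = None
    academic_level: Optional[str] = None
    cognitive_stage: Optional[str] = None    # stage in De Zubiría's model
    learning_outcomes: Optional[str] = None
    topic: Optional[str] = None
    modality: Optional[str] = None           # face-to-face, hybrid, or virtual


def missing_fields(ctx: CourseContext) -> List[str]:
    """Return the names of context items that have not been provided yet."""
    return [f.name for f in fields(ctx) if getattr(ctx, f.name) is None]


def next_phase(phase: Phase, ctx: CourseContext) -> Phase:
    """Advance one phase, but remain in context collection until it is complete."""
    if phase is Phase.CONTEXT_COLLECTION and missing_fields(ctx):
        return Phase.CONTEXT_COLLECTION
    members = list(Phase)
    idx = members.index(phase)
    return members[min(idx + 1, len(members) - 1)]


ctx = CourseContext(course_name="Calculus II")
print(next_phase(Phase.CONTEXT_COLLECTION, ctx).name, missing_fields(ctx))
```

Gating the recommendations phase on a complete context mirrors the point made above that recommendations depend on a full picture of the educational setting.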

The system's multi-agent architecture enables continuous refinement as educators implement CASF-aligned activities and report their experiences. This creates a feedback loop that improves recommendations based on real-world classroom applications, allowing the framework to evolve based on practical experience while maintaining theoretical integrity.

This implementation goes beyond a conventional single-agent AI assistant by combining the pedagogical depth of CASF with the technical capabilities of a distributed, knowledge-aware, multi-agent architecture. Integrating a user-friendly Open WebUI-based frontend ensures that this technical sophistication remains accessible to educators regardless of their technical expertise.

4.5. Workshop Implementation and Evaluation

To validate the practical applicability of the CASF, we conducted a structured workshop with university professors to assess the framework's effectiveness in real educational settings. This validation approach was implemented to provide empirical evidence of CASF's utility in diverse educational contexts.

The workshop included nine university educators representing various disciplines within engineering education. Most participants (67%) applied CASF to courses at both undergraduate and postgraduate levels, while 22% focused solely on undergraduate courses and 11% solely on postgraduate courses. This diversity allowed us to evaluate CASF's adaptability across different instructional contexts and student cognitive development stages.

The workshop began with an introduction to the theoretical foundations of CASF, including De Zubiría's dialogic pedagogical model and the AIAS. Following this foundation, educators engaged with the CASF Implementation Assistant, using it to design AI-enhanced activities aligned with their specific courses' cognitive levels and learning objectives. Participants created concrete educational activities using the framework, applying it to their respective courses. Throughout the session, educators shared their experiences, discussing challenges and opportunities identified during implementation in a collaborative feedback environment.

Following the workshop, participants completed a comprehensive evaluation survey assessing multiple dimensions of the CASF framework's effectiveness. The survey employed a 5-point Likert scale (1=strongly disagree to 5=strongly agree) across 18 specific indicators grouped into five key categories. These categories included Activity Design, measuring CASF's effectiveness in supporting the development of educational activities; CASF Alignment, assessing the consistency between framework principles and implementation assistance; Usability, evaluating the framework's ease of use and implementation; AI Integration, measuring how effectively CASF helped integrate AI into educational practices; and Cognitive Development, assessing CASF's impact on supporting students' cognitive growth.

The survey also included open-ended questions to capture qualitative feedback on the most valuable aspects of the framework, areas for improvement, and general observations about implementing CASF in engineering education. This combination of quantitative ratings and qualitative responses provided a comprehensive assessment of the framework's practical utility in diverse engineering education contexts.

Survey responses were analyzed using both quantitative and qualitative approaches. For quantitative data, descriptive statistics, including means and percentages, were calculated for each indicator and category to assess overall satisfaction and identify strengths and areas for improvement. The qualitative responses to open-ended questions were thematically analyzed to identify recurring patterns, unique insights, and contextual factors influencing the implementation of CASF. This mixed-methods approach enabled a nuanced understanding of the framework's effectiveness and potential areas for refinement.
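The descriptive statistics mentioned above can be sketched in a few lines of Python. The ratings below are placeholder values, not the study's data, and the indicator names are hypothetical. Averaging indicator means to obtain a category mean is also an assumption, since the text does not state how category scores were aggregated.

```python
from statistics import mean
from typing import Dict, List, Tuple

# PLACEHOLDER ratings on the 1-5 Likert scale; these are NOT the workshop's responses.
responses: Dict[Tuple[str, str], List[int]] = {
    ("Usability", "indicator_1"): [5, 4, 4, 5, 4],
    ("Usability", "indicator_2"): [4, 4, 5, 5, 5],
    ("Cognitive Development", "indicator_3"): [4, 3, 4, 4, 5],
}


def percent_of_max(score: float, scale_max: int = 5) -> float:
    """Express a mean rating as a percentage of the scale maximum."""
    return 100 * score / scale_max


def category_means(data: Dict[Tuple[str, str], List[int]]) -> Dict[str, float]:
    """Average the per-indicator means within each category (assumed aggregation)."""
    by_category: Dict[str, List[float]] = {}
    for (category, _indicator), ratings in data.items():
        by_category.setdefault(category, []).append(mean(ratings))
    return {category: mean(vals) for category, vals in by_category.items()}


for category, score in category_means(responses).items():
    print(f"{category}: mean {score:.2f}, {percent_of_max(score):.0f}% of maximum")
```

Under this convention, a mean rating of 4.5 on a 5-point scale corresponds to 90% of the maximum.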

5. Application Examples in Education

5.1. General Examples of Application

To demonstrate the CASF Implementation Assistant's versatility across diverse academic disciplines, we present several examples showcasing authentic interactions between educators and the assistant. These dialogues illustrate the structured implementation process in various knowledge domains, from quantitative disciplines like calculus to humanities and professional programs. By including examples from multiple fields, we highlight how the assistant guides educators through the same pedagogically sound framework while adapting recommendations to discipline-specific needs and cognitive development levels. Each example captures the complete conversation flow, from initial course information gathering to comprehensive activity development, demonstrating how the assistant consistently aligns AI integration with students' cognitive development stages regardless of subject matter. Box 1 presents the calculus integration techniques implementation example. Additional implementation examples across diverse disciplines are available in Supplementary Information B.

5.2. Results from the Implementation Workshop

The workshop evaluation provided comprehensive insights into both the practical implementation and theoretical efficacy of CASF in authentic engineering education contexts. Survey responses from the nine participating educators revealed nuanced perceptions that illuminate the framework's strengths while identifying opportunities for refinement, particularly in how it supports cognitive development across De Zubiría's stages.

Box 1. Calculus integration techniques implementation example. This dialogue demonstrates how the CASF Implementation Assistant guides an instructor through designing an activity for a second-year Calculus II course at the Propositional cognitive level.

Instructor: Hola [Hello]

Bot: ¡Hola! Bienvenido/a. Soy tu asistente de IA para ayudarte a integrar IA generativa en tus cursos de ingeniería usando el Marco CASF. Para empezar, por favor, selecciona el idioma en el que prefieres que interactuemos: [Hello! Welcome. I am your AI assistant, here to help you integrate generative AI into your engineering courses using the CASF Framework. To begin, please select the language in which you would prefer to interact:] Español, English, Français, 中文, Deutsch, 日本語

Instructor: English

Bot: Great! We will proceed in English. Now, what would you like to do? Please choose one of the following options:

Create a new AI-enhanced activity: Develop a new learning activity incorporating generative AI.

Adapt an existing activity: Modify an activity you already use to include generative AI.

Learn more about the CASF Framework: Understand the Cognitive-Generative AI Synergy Framework (CASF) and its application.

Get information about AI integration levels: Learn about the different ways generative AI can be integrated according to the CASF.

Instructor: Create a new AI-enhanced activity

Bot: Okay, let's create a new AI-enhanced activity. To tailor it effectively, I need some information about your course and students. Please answer the following questions one by one:

Course Name and Level: What is the name and level of your course? Examples: "Introduction to Thermodynamics, first-year undergraduate", "Advanced Structural Design, postgraduate", "Electric Circuits, second-year undergraduate"

Instructor: Calculus II, second-year undergraduate

Bot: Got it. Calculus II for second-year undergraduates.

Cognitive Level of Students: At what cognitive level are your students currently operating in this course? Choose one:

Nocional (Remembering, Understanding): Learning basic terminology and fundamental concepts.

Proposicional (Applying): Applying concepts to solve structured problems.

Conceptual (Analyzing): Analyzing problems and identifying relationships between concepts.

Formal (Evaluating): Evaluating solutions and justifying conclusions with arguments.

Categorial (Creating): Creating original solutions and developing new approaches.

Metacognitive (Reflecting, Self-evaluating): Reflecting on their learning process and evaluating their progress.

Instructor: Proposicional

Bot: Okay, Proposicional (Applying) level. This means your students are mainly focused on applying learned concepts and procedures to solve structured problems. We'll aim for activities where AI assists them in this application process.

Learning Outcomes: What are the main learning outcomes for the current module or topic you are covering? Examples: "Students will be able to apply thermodynamic principles and use generative AI to analyze power cycles." "Students will design optimized digital circuits with generative AI assistance that meet given specifications." "Students will critically evaluate different wastewater treatment methods and use generative AI to compare potential solutions."

Instructor: Students will be able to apply integration techniques to solve real-world engineering problems and use generative AI to verify their solutions, analyze different approaches, and explore the conceptual connections between various integration methods.

Bot: Excellent learning outcome. It clearly states the skill (applying integration techniques to real-world problems) and how generative AI will be used (verify solutions, analyze approaches, explore connections).

Specific Topic: What specific topic or concept within Calculus II do you plan to teach using generative AI, focusing on integration techniques for real-world problems? Examples: "Calculating the volume of solids of revolution", "Finding the centroid of a planar region", "Solving work problems using integration", "Applications of improper integrals in probability"

Instructor: Applications of definite integrals to calculate the work required to pump water from a tank

Bot: Great topic! Calculating the work required to pump water from tanks is a classic application of definite integrals.

Teaching Modality: What teaching modality will you be using for this activity? (Face-to-face, Hybrid, Online)

Instructor: Face-to-face

Bot: Perfect, face-to-face modality. One last question before I suggest some activities:

Activity Duration: Approximately how long do you envision this activity taking during your class time? (e.g., 30 minutes, 1 hour, a full 2-hour session)

Instructor: 45 minutes

Bot: Understood, the activity needs to fit within a 45-minute timeframe. I suggest three distinct activity options suitable for this time constraint and your learning objectives:

Setup Verification & Conceptual Check: Students focus on setting up the integral correctly and use AI to check their reasoning before integration.

Comparative Analysis of Tank Shapes: Students set up one integral and use AI to understand how changing the tank shape (e.g., cylinder to cone) affects the setup.

Parameter Sensitivity "What-If" Analysis: Students solve a base problem and use AI to quickly explore how changing conditions (density, fill level, pumping height) impacts the integral.

All three are designed to be feasible within 45 minutes in a face-to-face setting, especially since the focus for the AI interaction is on the setup and conceptual understanding rather than lengthy calculations or complex prompt engineering. To help you decide, which of these activities would you like me to elaborate on with a potential minute-by-minute breakdown for a 45-minute class? Or did you have a different idea in mind? Please let me know if you saw the previous detailed descriptions of these three activities.

Instructor: Setup Verification & Conceptual Check

Bot: Okay, here is the detailed 45-minute plan for the Setup Verification & Conceptual Check activity again.

Activity Goal: Students will correctly set up the definite integral required to calculate the work done in pumping water from a tank, understanding the physical meaning of each component of the integral, and using AI for verification of their setup. The focus is on the setup, not the calculation.

Alignment with CASF (Proposicional Level): Students at this level are learning to apply known procedures and concepts to structured problems. This activity requires them to apply the formula/method for work integrals (Work = ∫ ρg * A(y) * D(y) dy). The AI acts as a verification tool, confirming if their application of the formula to the specific problem's geometry and conditions is correct, reinforcing the procedure without solving it for them.

Detailed Plan (45 Minutes):

Introduction & Concept Review (5 mins)

Teacher: Briefly recap work (Force x Distance) and the integral approach for variable forces/distances (summing work on small slices). Remind them of the key components needed for pumping problems: density (ρ), gravity (g), cross-sectional area of a slice (A(y)), distance the slice is pumped (D(y)), and limits of integration.

Objective: "Today, we'll focus only on setting up the correct definite integral for a pumping problem. We'll use AI to help check our thinking before we calculate."

Problem Presentation (5 mins)

Teacher: Present a clear, well-defined problem on the board or screen.

Example Problem: "A cylindrical tank with a radius of 3 meters and a height of 8 meters is completely filled with water (density = 1000 kg/m³, g = 9.8 m/s²). Set up the definite integral representing the work required to pump all the water to the top of the tank." Ensure all parameters are clearly stated.

Student Work: Integral Setup (15 mins)

Students: Work individually or in pairs. They must:

Draw a diagram, establishing a clear coordinate system (e.g., y=0 at the bottom or top).

Define a thin horizontal slice of water at an arbitrary height `y`.

Determine the volume of this slice: `dV = Area(y) * dy`. For the cylinder, `Area(y)` is constant: `π * (3)²`.

Determine the force on this slice: `dF = ρ * g * dV = 1000 * 9.8 * π(3)² * dy`.

Determine the distance this slice must be moved: `D(y)`. If y=0 is at the bottom, `D(y) = 8 - y`. If y=0 is at the top, `D(y) = y` (but dy would be negative or limits adjusted). Assume y=0 at the bottom for clarity here.

Identify the limits of integration based on where the water is: `0` to `8` since the tank is full.

Assemble the integral: `Work = ∫₀⁸ 1000 * 9.8 * π(3)² * (8 - y) dy`.

Teacher: Circulate, observe progress, ask guiding questions ("Where did you place your y=0?", "What does (8-y) represent physically?"), but do not confirm the setup's correctness yet. Encourage them to explicitly write down `Area(y)=...`, `D(y)=...`, limits=...

AI Verification & Correction (10 mins)

Students: Use a generative AI tool (on devices or shared stations). They need to formulate a prompt using the TRACE structure to check their specific setup.

TRACE Prompt Example (Student uses their values):

Task: Verify my definite integral setup for calculating the work to pump water out of a cylindrical tank (radius 3m, height 8m, full) to the top.

Requirement: Analyze my integral: ∫₀⁸ 1000 * 9.8 * π(3)² * (8 - y) dy. Check if my constant Area π(3)² and distance (8-y) are correct for a slice at height y (assuming y=0 at the bottom). Also check the limits 0 to 8.

Action: Explain if each component (ρg, Area, Distance, limits) correctly matches the problem description. Do not solve the integral.

Context: I'm a Calc II student setting up a work integral for pumping. Water is pumped from a full cylinder (R=3, H=8) to the very top. y=0 is at the bottom.

Format: Bullet points confirming or correcting each part of my setup.

Students: Interpret the AI's feedback. If errors are found, they attempt to correct their setup based on the explanation.

Class Discussion & Wrap-up (10 mins)

Teacher: Lead a brief discussion. "Did anyone's setup get flagged by the AI? What was the mistake?" or "What part did the AI confirm was correct?" Write the correct integral setup on the board, step-by-step, emphasizing the derivation of Area(y) and D(y) from the diagram and coordinate system. Reiterate that the goal was the setup, which is often the most challenging conceptual part. Evaluation of the integral is a separate procedural skill.

Phase 5: Additional considerations (Summary)

Ethical considerations: Emphasize process over answer, use structured prompts (like TRACE) focusing on verification, require students to explain corrections. Clearly state AI is for checking, not replacing, their work.

Assessment strategies: Use formative assessment during the activity, potentially collect setup attempts (before/after AI) for low-stakes feedback, assign follow-up problems requiring only the setup.

Continuous improvement: Use quick check-ins or minute papers to gather feedback on clarity and usefulness of the AI verification step. Observe student-AI interactions.

Now that you have the plan again, what specific aspect would you like to focus on next? For example: Refining the student prompt? Developing a handout? Creating a follow-up problem? Discussing implementation details?
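Although the activity in Box 1 deliberately stops at the setup, an instructor may wish to confirm independently that the integral in the example problem is consistent. The following Python sketch, which is not part of the assistant's output, compares a midpoint Riemann sum over thin slices with the closed form ρ·g·π·R²·H²/2, which follows from integrating (8 − y) from 0 to 8. The function name `work_midpoint` and the slice count are illustrative choices.

```python
import math

# Parameters from the example problem in Box 1.
RHO = 1000.0    # water density, kg/m^3
G = 9.8         # gravitational acceleration, m/s^2
RADIUS = 3.0    # tank radius, m
HEIGHT = 8.0    # tank height, m (tank is full; y = 0 at the bottom)


def work_midpoint(n_slices: int = 1000) -> float:
    """Sum the work on thin horizontal slices, each lifted to the top of the tank."""
    dy = HEIGHT / n_slices
    area = math.pi * RADIUS ** 2          # constant cross-section of the cylinder
    total = 0.0
    for i in range(n_slices):
        y = (i + 0.5) * dy                # midpoint height of the slice
        force = RHO * G * area * dy       # weight of the slice
        total += force * (HEIGHT - y)     # distance to the top is (8 - y)
    return total


# Closed form: rho * g * pi * R^2 * H^2 / 2, i.e. 2,822,400 * pi joules.
exact = RHO * G * math.pi * RADIUS ** 2 * HEIGHT ** 2 / 2

print(f"slice sum:   {work_midpoint():.1f} J")
print(f"closed form: {exact:.1f} J")
```

Both values are approximately 8.87 × 10⁶ J. Because the integrand is linear in y, the midpoint sum matches the closed form up to floating-point rounding, which makes this a convenient sanity check on the slice-based reasoning the activity asks students to articulate.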

5.2.1. Participant Demographics and Implementation Context

The workshop involved nine university educators from diverse engineering disciplines, providing a robust testing ground for the CASF framework across varied educational contexts. Participants implemented CASF across multiple educational levels: 67% applied it in both undergraduate and postgraduate courses, while 22% focused exclusively on undergraduate and 11% on postgraduate education. This distribution allowed for evaluation of the framework's effectiveness across different cognitive development stages in De Zubiría's model—from propositional to metacognitive levels.

The disciplinary diversity among participants enabled assessment of how effectively the framework's synthesis of dialogic pedagogy and AI integration functions across different knowledge domains within engineering education. This cross-disciplinary testing was particularly valuable, as both foundational models were designed to be discipline-agnostic, while CASF aims to operationalize their principles specifically within technical education contexts.

5.2.2. Quantitative Assessment of Framework Effectiveness

Quantitative evaluation revealed strong overall satisfaction with CASF (mean rating 4.5/5, representing 90% satisfaction), with meaningful variations across dimensions that provide insight into the framework's operational strengths and potential improvement areas.

The "Alignment with CASF" category received the highest rating (4.75/5), indicating successful operationalization of theoretical principles into practical guidance. Participants strongly affirmed that recommendations were consistent with the framework's conceptual foundations, demonstrating effective translation between De Zubiría's cognitive stages and appropriate AI integration levels. This strong alignment addresses a significant challenge in educational innovation: bridging the gap between theoretical frameworks and classroom implementation.

"Activity Design" emerged as the second-highest rated category (4.67/5), validating a core premise of CASF: that educators can leverage their pedagogical expertise as the primary driver for AI integration decisions without requiring extensive technical knowledge. These ratings suggest CASF successfully positions technology as supporting cognitive development rather than driving it—a key principle derived from De Zubiría's balance between self-structuring and hetero-structuring approaches. Similarly positive assessments for "AI Integration" (4.56/5) demonstrate that CASF provides effective guidance for incorporating AI technologies pedagogically. The statement "Permitted exploration of AI in classes" received the highest individual rating (4.78/5), suggesting the framework successfully enables thoughtful experimentation with AI tools while maintaining educational integrity.

While "Usability" received positive ratings (4.48/5), the most revealing insights came from the "Cognitive Development" category. This dimension, though positively rated (4.16/5), received the lowest average score, with "Promoted critical thinking" scoring lowest overall (3.75/5). This finding highlights a crucial area for framework refinement: while CASF effectively guides AI integration and activity design, it appears less successful in ensuring activities promote higher-order thinking. This tension reflects a fundamental challenge in educational technology: tools may enhance engagement without necessarily deepening cognitive processes.
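The category-level comparison above can be reproduced from the reported means. The short Python sketch below uses only the five category values stated in this section; the variable names are illustrative. The unweighted average of the category means is shown for orientation only, since the reported overall mean of 4.5 may have been computed across the 18 individual indicators instead (an assumption, as the aggregation method is not stated).

```python
# Category means as reported in this section (5-point Likert scale).
category_means = {
    "Alignment with CASF": 4.75,
    "Activity Design": 4.67,
    "AI Integration": 4.56,
    "Usability": 4.48,
    "Cognitive Development": 4.16,
}

# Rank categories from highest to lowest mean rating.
ranked = sorted(category_means.items(), key=lambda item: item[1], reverse=True)

# Unweighted average of the five category means (not necessarily the paper's method).
unweighted_mean = sum(category_means.values()) / len(category_means)

# Gap between the highest- and lowest-rated categories.
spread = ranked[0][1] - ranked[-1][1]

for name, score in ranked:
    print(f"{name}: {score:.2f}")
print(f"unweighted mean of categories: {unweighted_mean:.2f}")
print(f"spread (highest - lowest): {spread:.2f}")
```

The unweighted average is about 4.52, close to the reported overall mean, and the gap of roughly 0.59 points between "Alignment with CASF" and "Cognitive Development" quantifies the tension discussed above.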

5.2.3. Qualitative Insights on Implementation Experience

Qualitative feedback provided contextual understanding of educators' CASF implementation experiences, revealing several significant themes.

Participants consistently highlighted the efficiency of CASF in redesigning educational activities, with one educator noting that it "is a great help for redesigning activities and saves a lot of time by providing a structure for design." The framework's ability to align learning objectives with appropriate cognitive levels complemented this efficiency. The emphasis on time-saving aligns with research suggesting that adoption of educational innovations often depends on perceived implementation ease.

The framework's capacity to facilitate exploration of AI's potential educational roles was frequently mentioned, with participants valuing the opportunity to understand "how useful and dangerous AI can be in education." This balanced perspective reflects CASF's grounding in both pedagogical principles and ethical considerations. Several participants appreciated how CASF helped identify cognitive levels and connect them with suitable activities, with one noting the framework "helps to identify cognitive levels and link them with appropriate activities." This feedback validates CASF's central premise: that AI integration should be calibrated to students' cognitive development stages rather than implemented uniformly.

Constructive critique focused on several improvement areas. The most prominent suggestion concerned providing more granular guidance on which specific components of educational activities would benefit most from AI integration. Several participants requested greater flexibility in editing intermediate results, suggesting that the framework could better accommodate iterative design processes.

A noteworthy concern emerged regarding potential overreliance on AI, with one participant cautioning: "Care must be taken in how it is used so that it doesn't do all the student's work." This reflection underscores the importance of CASF's balanced approach to AI integration, which aims to enhance rather than replace critical thinking.

The juxtaposition of quantitative and qualitative findings reveals an important tension: while CASF successfully guides activity design and AI integration, its impact on cognitive development outcomes appears less robust. This suggests that future refinements should focus on strengthening the connection between AI-enhanced activities and higher-order thinking development, perhaps through more explicit guidance on designing activities that require students to critically engage with AI outputs.

6. Discussion

The findings from our implementation and evaluation of the Cognitive-AI Synergy Framework (CASF) illuminate both promising applications and significant challenges in integrating GenAI with cognitive development approaches in higher education. This section examines these findings in relation to existing educational frameworks, discusses practical implementation challenges, and offers recommendations for educators seeking to implement CASF in their teaching practice.

6.1. Limitations and Challenges

Despite the overall positive reception of CASF, several implementation challenges emerged that warrant careful consideration. These challenges reflect broader tensions in educational technology adoption that exist at the intersection of pedagogy, technology, and institutional contexts.

6.1.1. Cognitive Level Alignment Challenges

A significant challenge in implementing CASF lies in accurately aligning teaching methods with students' cognitive levels. The framework's effectiveness depends on educators' ability to correctly identify their students' developmental stages according to De Zubiría's model and select appropriate AI integration levels. However, many educators find it challenging to determine these cognitive stages precisely, particularly when student development varies across different domains within the same course. This aligns with what De Zubiría himself acknowledges as the non-linear and fluid nature of cognitive development (de Zubiría Samper, 2006).

The workshop evaluation revealed that while educators appreciated the framework's guidance in identifying cognitive levels, they sometimes struggled with its practical application when faced with heterogeneous student groups. This challenge echoes findings from other studies on implementing cognitive development frameworks, where the theoretical clarity of developmental stages becomes complicated in authentic learning environments (Zoller & Pushkin, 2007). Some workshop participants indicated a desire for more nuanced guidance on adapting CASF to mixed-ability groups, suggesting that the current framework may need refinement to address the reality of cognitive diversity within classrooms.

6.1.2. Risk of Over-Reliance on GenAI

A core tension identified in the workshop was balancing GenAI integration with the development of fundamental cognitive skills. As one participant aptly noted, "Care must be taken in how it is used so that it doesn't do all the student's work." This concern reflects what Jose et al. (2025) describe as the "cognitive paradox of AI in education": the potential for AI tools to simultaneously enhance and erode learning, depending on implementation.

The risk of cognitive offloading, where students transfer thinking responsibilities to AI tools rather than developing their own abilities, represents a significant challenge to CASF implementation. This situation is particularly concerning at earlier cognitive development stages (notional and propositional levels), where foundational skill building is essential. The CASF framework directly addresses this challenge by recommending no GenAI use at the notional level and carefully supervised use at the propositional level. Crucially, CASF establishes that all GenAI implementations must occur within the classroom environment under direct educator guidance, not as unsupervised activities. The educator's role is central to successful implementation; they must actively monitor, guide, and evaluate students' interactions with GenAI tools to ensure technology serves as scaffolding for learning rather than a replacement for cognitive effort. While CASF provides this calibrated approach to AI integration, the workshop findings suggest that additional safeguards and clearer boundaries may be necessary to reinforce the educator's supervisory responsibility and prevent student over-reliance on AI.
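The supervisory rules stated above can be expressed as a simple check. The Python sketch below is a hedged illustration rather than part of CASF or its assistant: the function name `genai_permitted` and its parameters are hypothetical, and the sketch encodes only the two constraints given in this section (no GenAI at the notional level; all other use in the classroom under educator guidance). It does not model the degree of AI integration at each level.

```python
# Cognitive levels of De Zubiría's model as listed in Box 1 (English spellings).
LEVELS = (
    "notional",
    "propositional",
    "conceptual",
    "formal",
    "categorial",
    "metacognitive",
)


def genai_permitted(level: str, in_classroom: bool, educator_guiding: bool) -> bool:
    """Return True if GenAI use satisfies the two constraints stated in the text."""
    level = level.lower()
    if level not in LEVELS:
        raise ValueError(f"unknown cognitive level: {level!r}")
    if level == "notional":
        # The text recommends no GenAI use at the notional level.
        return False
    # All GenAI use must occur in the classroom under direct educator guidance.
    return in_classroom and educator_guiding


print(genai_permitted("propositional", in_classroom=True, educator_guiding=True))
print(genai_permitted("formal", in_classroom=False, educator_guiding=True))
```

A check of this kind makes the educator's supervisory role an explicit precondition rather than an implicit expectation, which is the kind of clearer boundary the workshop findings call for.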

This challenge connects to broader discussions about AI's double-edged role in education. As Lim et al. (2023) frame it, GenAI presents education with a paradoxical choice between "Ragnarök" (potential destruction of traditional educational values) and "reformation" (transformative positive change). CASF aims to navigate this paradox by calibrating AI use to cognitive development, but implementation experiences suggest this balance remains precarious and requires the educator's continuous, active oversight throughout the learning process.

6.1.3. Technical and Institutional Barriers

Practical implementation of CASF also faces technical and institutional challenges. Many educational institutions have inconsistent policies regarding AI use, creating uncertainty for educators attempting to implement frameworks like CASF. Workshop participants noted concerns about institutional readiness, including issues of technology access, infrastructure support, and policy alignment.

While educators interact with CASF through a user-friendly Open WebUI frontend rather than directly accessing the n8n technical implementation, customization challenges remain. Despite positive usability ratings (4.48/5), some participants requested greater flexibility and additional features, suggesting that institutions may need dedicated development teams to adapt the CASF assistant to specific pedagogical or institutional requirements. This organizational requirement represents a potential adoption barrier, particularly for resource-limited institutions. The need for technical support aligns with the Technology Acceptance Model findings by Hazzan-Bishara et al. (2025), which indicate that perceived ease of use and organizational support significantly influence educators' willingness to adopt new educational technologies. Even with an intuitive frontend, the requirement for technical expertise to customize and maintain the system introduces an institutional dependency that may impact widespread adoption.

6.2. Recommendations for Implementation

Based on our findings and identified challenges, we offer comprehensive recommendations for educators implementing CASF in higher education environments. These recommendations address curriculum design, pedagogical approaches, assessment strategies, and ethical considerations.

6.2.1. Curriculum Redesign Strategies

Effective CASF implementation requires thoughtful curriculum redesign rather than simply adding AI to existing activities. We recommend:

Progressive AI Integration: Design curricula that gradually increase AI integration as students advance through cognitive levels, beginning with minimal AI support at notional levels and expanding to more collaborative integration at formal and categorial levels.

Dual-Path Activities: Develop learning activities with parallel paths (one with AI assistance and one without), allowing students to compare approaches and develop metacognitive awareness of when AI enhances or hinders their learning.

Skill Preservation Focus: Identify core skills that should remain AI-independent, and design dedicated activities that develop these abilities without technological assistance, particularly at foundational cognitive levels.

Cross-Cognitive Level Mapping: Create curriculum maps that explicitly connect learning objectives with both De Zubiría's cognitive levels and appropriate AI integration modalities, ensuring pedagogical alignment throughout the course.

6.2.2. Innovative Pedagogical Approaches

CASF implementation benefits from specific pedagogical strategies that maximize cognitive development while leveraging AI appropriately:

AI-Enhanced Dialogic Teaching: Implement structured dialogues where students critically engage with AI outputs rather than passively consuming them, promoting the active construction of knowledge through technology-mediated discussion.

Cognitive Apprenticeship with AI: Design activities where AI serves as a model for expert thinking that students progressively internalize, following the cognitive apprenticeship model described by Collins et al. (1991).

Metacognitive Scaffolding: Include explicit reflection activities where students analyze how AI affects their thinking processes, becoming more conscious of both its benefits and limitations.

Calibrated AI Prompting: Teach students to create increasingly sophisticated AI prompts that align with their cognitive development stage, moving from simple verification prompts at propositional levels to complex analytical prompts at formal and categorial levels.

6.2.3. Adaptive Assessment Strategies

Assessment approaches must evolve alongside AI integration to maintain academic integrity while evaluating authentic learning:

Process Documentation: Implement assessment approaches that emphasize documenting the thinking process rather than just final outputs, requiring students to explain their reasoning and AI interaction decisions.

Comparative Evaluation: Assess students' ability to critically evaluate AI outputs against established knowledge, encouraging them to identify limitations and potential biases in AI-generated content.

Staged Assessment Design: Create multi-phase assessments where initial phases are completed without AI to establish baseline understanding, followed by AI-enhanced phases that build upon this foundation.

AI-Verification Balance: Design assessments that require both AI-assisted components and human verification steps, ensuring students develop the ability to validate AI outputs rather than accepting them uncritically.
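
The Staged Assessment Design and AI-Verification Balance recommendations can be combined into a simple design check. The following sketch assumes an assessment is described as an ordered list of phases. The `Phase` class, its fields, and the `validate_stages` helper are hypothetical names introduced for illustration, and the two rules encoded are one possible reading of the recommendations above.

```python
from dataclasses import dataclass


@dataclass
class Phase:
    name: str
    ai_allowed: bool                     # may students use GenAI in this phase?
    requires_verification: bool = False  # must students validate AI output?


def validate_stages(phases):
    """Return a list of problems; an empty list means the design passes."""
    problems = []
    # Rule 1: the first phase establishes a baseline without AI.
    if not phases or phases[0].ai_allowed:
        problems.append("first phase should be completed without AI")
    # Rule 2: every AI-enhanced phase includes a human verification step.
    for p in phases:
        if p.ai_allowed and not p.requires_verification:
            problems.append(
                f"phase '{p.name}' uses AI but has no verification step")
    return problems


# Hypothetical three-phase assessment.
assessment = [
    Phase("Baseline problem set", ai_allowed=False),
    Phase("AI-assisted redesign", ai_allowed=True, requires_verification=True),
    Phase("AI-assisted report", ai_allowed=True),
]

issues = validate_stages(assessment)
for issue in issues:
    print(issue)
```

Here the third phase is flagged because it permits AI use without a verification step. An educator could then add such a step or justify the exception.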

6.2.4. Ethical Implementation Considerations

Responsible CASF implementation requires attention to several ethical dimensions:

Equity of Access: Ensure all students have equitable access to required AI tools, implementing alternative approaches when technological disparities exist.

Transparent AI Boundaries: Clearly communicate to students when and how AI should be used in their learning, establishing explicit expectations for appropriate use at different cognitive levels.

Data Privacy Protection: Implement protocols for protecting student data when using AI tools, particularly when these tools may store interactions or require account creation.

Bias Awareness Education: Include discussions of algorithmic bias and limitations in AI implementation, helping students develop critical awareness of how these technologies may reflect and amplify existing societal biases.

6.3. Implementation Workshop Analysis

The workshop evaluation provides insight into CASF's strengths and implementation dynamics in practical educational settings. The high rating for framework alignment (4.75/5) suggests that CASF translates its theoretical foundations into actionable guidance. This addresses a common gap in educational innovation: the difficulty of turning theoretical principles into practical implementation strategies (Cukurova et al., 2020).

The workshop ratings varied across dimensions, with cognitive development (4.16/5) scoring lower than the other categories. The critical thinking promotion rating (3.75/5) may initially appear concerning, but it should be interpreted in the context of implementation maturity: the workshop was participants' first engagement with the framework, the first step in what should be a continuous process of refinement and adaptation. As with any sophisticated pedagogical approach, mastery develops over multiple implementation cycles as educators become more adept at using the framework.

The ratings likely reflect the challenges inherent in the early adoption phase rather than fundamental limitations of the framework itself. Educators need time to fully internalize the framework's principles and develop facility with the OpenWebUI interface before they can effectively design activities that maximize cognitive development. This interpretation aligns with research on educational technology adoption, which consistently demonstrates that pedagogical integration deepens through iterative implementation (Michel-Villarreal et al., 2023).

The qualitative feedback highlighting CASF's efficiency in redesigning educational activities suggests that the framework reduces the cognitive load associated with AI integration, potentially lowering barriers to adoption. The high rating for activity design support (mean 4.67/5) is consistent with a core premise of CASF: that educators can use their pedagogical expertise as the primary driver of AI integration decisions without extensive technical knowledge. Participants' strong agreement in the AI Integration dimension (mean 4.56/5) indicates that CASF facilitates the thoughtful incorporation of AI technologies into engineering education activities. The educators particularly appreciated that the framework maintained their central role in the implementation process, a critical feature of CASF's design, which emphasizes that GenAI use must occur under direct educator guidance, especially at foundational cognitive levels.

The workshop also revealed opportunities for enhancement, including requests for more granular guidance on designing activities that specifically target higher-order thinking skills. These suggestions highlight the iterative nature of framework development and implementation. Rather than indicating deficiencies, they represent the natural evolution of a sophisticated pedagogical approach as it moves from initial conception to mature implementation.

The OpenWebUI frontend shielded educators from the technical complexity of the n8n implementation, which is consistent with the high usability ratings (4.48/5). However, participants identified interface refinements that would better support the iterative design process that effective educational implementation requires.

The workshop findings suggest that CASF provides a strong foundation for thoughtful GenAI integration while maintaining the educator's central role in guiding student learning. As educators gain experience with the framework through multiple implementation cycles, we anticipate continued growth in their ability to design activities that effectively promote cognitive development across De Zubiría's stages. This maturation process reflects the inherently iterative nature of pedagogical innovation, where initial implementation provides valuable insights that inform ongoing refinement and increasingly sophisticated application.

6.4. Future Development and Research Directions

The findings from our implementation and evaluation point to several promising directions for future CASF development and research.

6.4.1. Empirical Validation Studies

While the initial workshop evaluation provides valuable insights, comprehensive empirical validation is needed to assess CASF's long-term impact on learning outcomes. Future research should include:

Longitudinal Implementation Studies: Track the impact of CASF implementation across multiple semesters to assess changes in student cognitive development, technical skill acquisition, and engagement.

Comparative Framework Evaluation: Compare learning outcomes between CASF and other AI integration approaches to identify relative strengths and potential synergies.

Cognitive Development Measurement: Develop and validate assessment instruments specifically designed to measure the impact of AI-enhanced activities on cognitive development across De Zubiría's stages.

6.4.2. Framework Extensions and Adaptations

CASF can be extended in several directions to enhance its applicability and effectiveness:

Discipline-Specific Adaptations: Develop specialized versions of CASF tailored to specific disciplinary contexts, accounting for the unique cognitive demands and GenAI applications within different fields.

Cross-Cultural Framework Validation: Evaluate CASF's effectiveness across diverse cultural and educational contexts, identifying necessary adaptations for global implementation.

Integration with Other Pedagogical Models: Explore how CASF might be synthesized with additional pedagogical approaches such as problem-based learning, flipped classroom methodologies, or universal design for learning principles.

6.4.3. Technical Enhancements to the Implementation Assistant

Future development of the CASF Implementation Assistant should address the technical limitations identified in the workshop evaluation:

Enhanced User Interface: Develop more intuitive interfaces that reduce technical barriers while maintaining the assistant's pedagogical sophistication.

Advanced Activity Analytics: Incorporate features that analyze proposed activities for their cognitive engagement level, providing feedback on how effectively they promote higher-order thinking.

Expanded Resource Integration: Enable direct integration with institutional learning management systems and resource repositories to facilitate seamless implementation.

These research and development directions aim to address the identified limitations while building on CASF's demonstrated strengths, positioning the framework to make an increasingly significant contribution to educational practice in the rapidly evolving GenAI landscape.

7. Conclusions

This paper introduces the Cognitive-AI Synergy Framework (CASF), a novel approach for integrating GenAI in higher education that aligns technology use with students' cognitive development stages. CASF represents a significant theoretical contribution by synthesizing two established frameworks: De Zubiría's dialogic pedagogical model with its progressive cognitive development stages and the Artificial Intelligence Assessment Scale (AIAS) with its structured approach to AI integration in assessment. This synthesis addresses a critical gap in current educational practice by providing educators with a pedagogically grounded framework for AI integration that prioritizes cognitive development over technological novelty.

Our second major contribution is the development and evaluation of the CASF Implementation Assistant, which evolved from a simple AI tool to a sophisticated structured agent with enhanced conversation flow and contextual understanding. This implementation demonstrates how theoretical frameworks can be operationalized through appropriate technological tools, making complex pedagogical approaches more accessible to educators. The assistant's evolution represents a model for how AI can support rather than supplant pedagogical expertise, with technology serving as an implementation scaffold rather than driving educational decisions.

The workshop evaluation represents a third key contribution, providing empirical insights into CASF's practical implementation. The generally positive reception across the dimensions of framework alignment (4.75/5), activity design (4.67/5), and AI integration (4.56/5) supports CASF's core principles and usability. The workshop findings also revealed important areas for refinement, particularly in strengthening the framework's support for higher-order cognitive development through iterative implementation cycles. These findings indicate both CASF's immediate utility and its potential for ongoing enhancement through the feedback loop between theory and practice.

Collectively, these contributions advance our understanding of how GenAI can be thoughtfully integrated into higher education in ways that support rather than diminish cognitive development. By positioning pedagogical expertise as the driving force behind technology implementation decisions, CASF offers a promising alternative to technology-driven approaches that often fail to adequately consider educational objectives and developmental needs.

7.1. Implications for Engineering Education

The development and evaluation of CASF have several significant implications for engineering education. First, they demonstrate that GenAI integration need not follow an all-or-nothing approach. By calibrating AI use to cognitive development stages, engineering educators can strategically incorporate these powerful tools while preserving the development of fundamental skills and critical thinking abilities. This balanced approach is particularly important in STEM fields, where procedural knowledge and conceptual understanding provide essential foundations for higher-order problem-solving.

Second, CASF reinforces the central role of the educator in technology-enhanced learning environments. Unlike approaches that position AI as an autonomous educational agent, CASF emphasizes that GenAI use, particularly at earlier cognitive levels, must occur under direct educator guidance. This principle addresses concerns about cognitive offloading while acknowledging that appropriate scaffolding can enhance rather than diminish learning. For engineering education, where complex problem-solving often requires both technical knowledge and creative thinking, this balance is especially critical.

Third, the framework provides a structured approach for reimagining engineering education activities in light of GenAI capabilities. Rather than simply prohibiting AI use or leaving students to navigate these tools independently, CASF enables educators to design learning experiences that thoughtfully incorporate AI while maintaining pedagogical integrity. This structured approach is particularly valuable in engineering disciplines, where rapid technological change requires adaptive educational responses that maintain focus on fundamental principles while acknowledging evolving professional practices.

Finally, CASF addresses the growing need for engineering graduates who can effectively collaborate with AI systems. By providing structured opportunities for students to engage with these technologies across their educational journey, the framework helps develop both technical proficiency and critical awareness, skills that are increasingly essential in AI-enhanced professional environments. This preparation represents a significant contribution to engineering education's responsibility to develop professionals ready for emerging workplace realities.