I. Introduction

Introduction Wireless communication systems have changed significantly over the last few decades from first generation (1G) analog systems based on cellular technology to the present digitalized softwarebased 5G systems and to a 6G that is based on what is being researched now, driven by exploding numbers of mobile subscribers, data-hungry applications, and ever-increasing quality of service (QoS) demands. Driven by these factors, new requirements call for more flexibility, scalability and intelligence on the wireless network infrastructure.

Classical Communication Systems Conventional communication systems are mainly based on predefined rule-based mechanisms and human intervention and are inadequate for dynamic situations characterized by high user mobility, rapidly changing channel conditions and various service requests. These limitations are then magnified as we consider newer use-cases such as autonomous cars, augmented reality, industrial IoT and ultra-reliable low-latency communications (URLLC) which need real-time responsiveness and network agility.

Artificial Intelligence (AI), such as machine learning (ML) deep learning (DL), reinforcement learning (RL), the successful solution of which are valuable in diverse cases of applications. Thanks to the technological advancements in data-driven decision making, AI will precipitate the wave of paradigm shift in the way wireless networks are designed and operated. Artificial intelligence (AI) is not only shedding light on why these models fail, but will also be the enabler for autonomous self-learning and predicting network behavior and managing performance dynamically. Such as intelligent spectrum management, dynamic resource allocation, signal identification, fault diagnosis and so on.

High dimension, which is in fact a bottleneck of AI, because dimension of Om is considered in traditional wireless techniques, and n and k are not considered. The AI models have the ability to learn and extract the hidden patterns/convergence that can not be pulled out or visualized using the traditional mathematical models. Moreover, the edge AI makes endpoint more intelligent, and as a result, pushing computational intelligence to the sight of users can achieve low-latency inference, low backhaul congestion and much better privacy.

Regarding future trends, AI is expected to have an increasing role in 6G, manifested in terms of AI-native networks where AI is closely integrated with all the aspects of communication protocol stack. That future is one where systems are not only beyond reactive, but also become proactive in nature, able to teach themselves, to heal themselves and have the ability to self-sustain a network without much human wrangling.

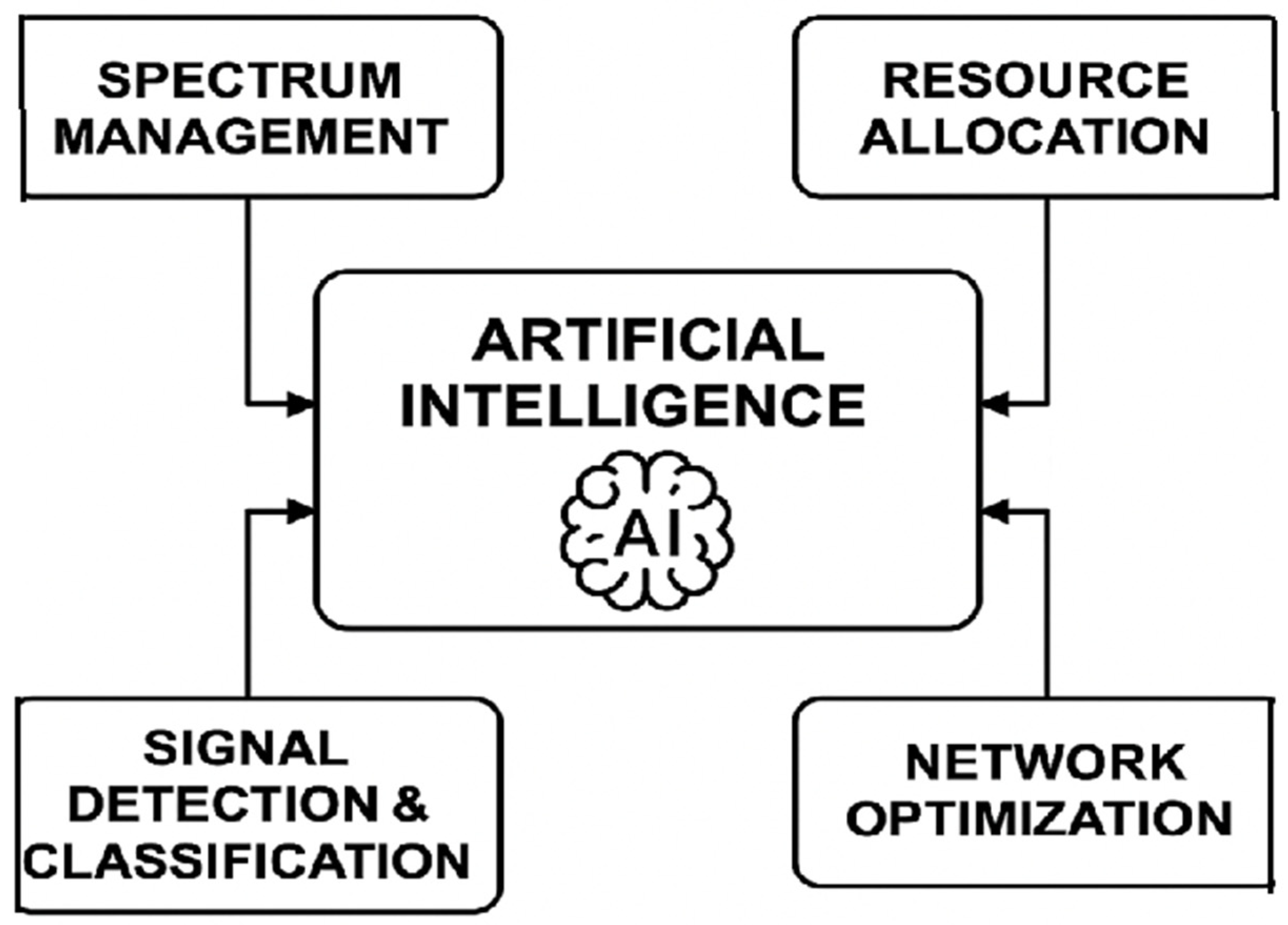

Figure 1.

Integration of AI across various levels in wireless where intelligent spectrum usage, adaptive support, proactive maintenance and user-centric services are exploited.

Figure 1.

Integration of AI across various levels in wireless where intelligent spectrum usage, adaptive support, proactive maintenance and user-centric services are exploited.

In this article, we provide an overview of the current and anticipated roles of AI in wireless networks. It shows how AI is used in various networking function, including the merits, challenges and the prospect research directions. The rest of the paper is structured as follows: Section II highlights the related work and the fundamental of AI concepts with wireless systems. In III, we describe system models and AI frameworks. The major challenges and limitations of these technologies are described in Section IV. Section V presents other potential lines of future research and Section VI concludes the paper.

III. Methodology

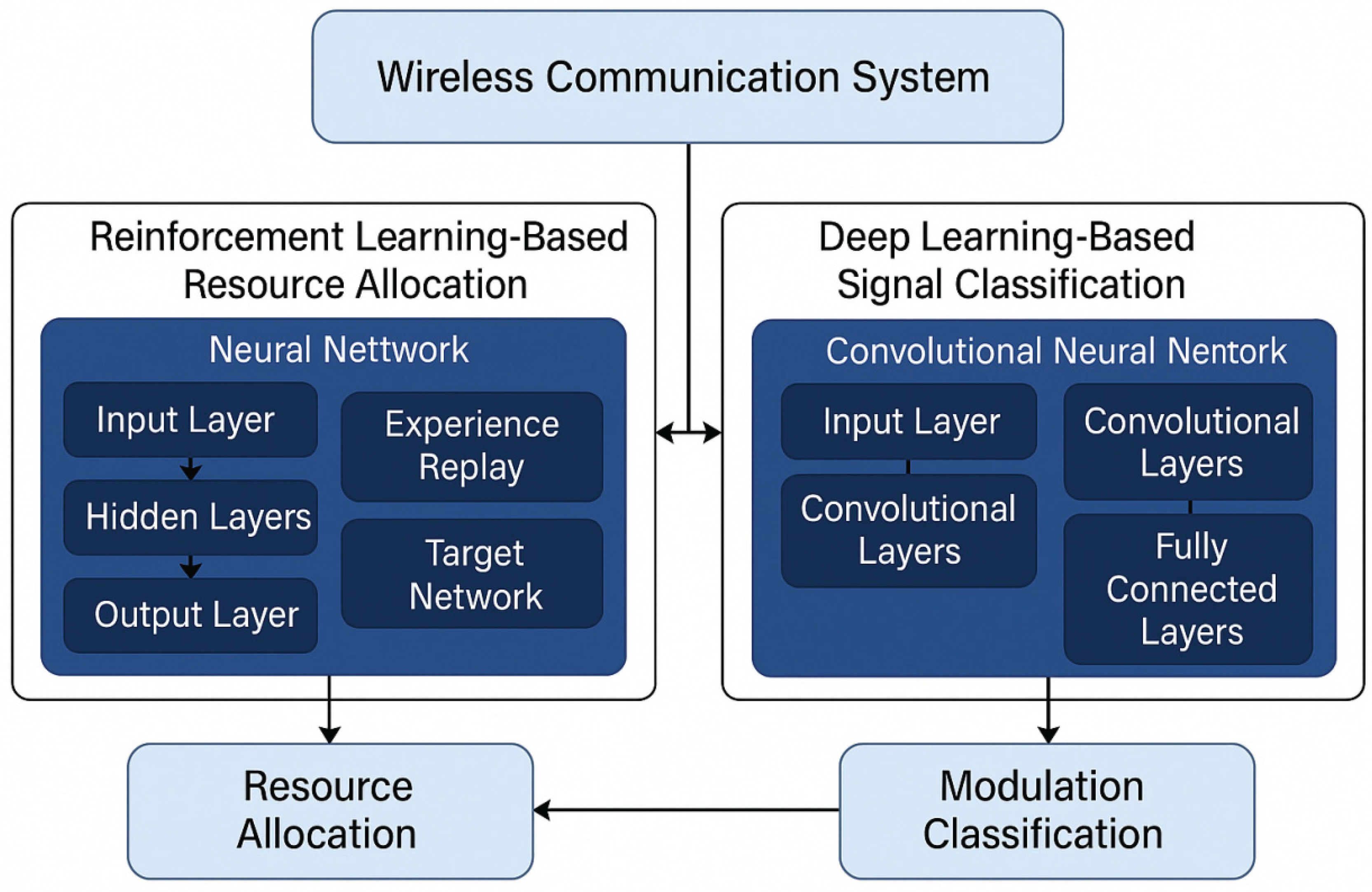

Figure 2 presents the framework of the proposed AI-enabled wireless communication model. Architecture Here, we split the architecture in two main modules: resource allocation (RA) based on RL and signal classification based on deep learning. RL module On the left side of the diagram is the RL module based on the RL, and it starts from the status of the network, which is the environmental observations (e.g., the channel state information, user request, and interference impairment). This state is then input into an experience replay buffer to keep track of past transitions for stable learning. Such experiences are then used by the DQN to learn optimal policy. The DQN uses a target network to enable convergence and stable learning. The main and target networks are also trained iteratively. The learned acts — including frequency allocations and power allocations are then performed to wireless environment for throughput gain and efficiency.

On the right, the deep learning block performs the signal classification. It first takes raw I/Q signal samples into multiple convolutional layers for combining time-domain and frequency-domain features. These features are then fed into pooling layers to lose spatial dimensions, at the same time, capture the feature with emphasis. Lastly, the signals propagate through fully connected layers which are responsible for classifying tasks such as modulation recognition. The result can help to detect and enhance the signal transmission in real-time communication for user. Both nodes shall also be responsive to the wireless network environment to receive feedback, and contribute continuously to learning and system optimization. This combined architecture makes the system to be flexible enough to accommodate the dynamics of the network and yet efficient in terms of communication one the system adapts to it.

A. System Model

We adopt a downlink multi-cell wireless network consisting of many base stations (BSs) and user equipment (UEs) like the network models in [

21,

22,

23]. The area is covered with a cluster of UEs being in communication with each transmission point (BS). A centralized controller, motivated by the principles of software-based networking (so called software defined networking (SDN)) [

24], orchestrates dynamic resource assignment in the network. The realistic wireless channel conditions, i.e., Rayleigh fading, path loss, shadowing, and user mobility according to random waypoint or Gauss-Markov or models, are included in the model [

25,

26].

The design goal of this system is to maximize spectral efficiency and energy efficiency, with equitable and QOS guaranteed. Specifically, spectral efficiency is the ultimate sum rate per unit bandwidth [

27], whereas energy efficiency is expressed as bits per Joule [

28]. We measure fairness by Jain’s fairness index [

29]. This dynamic optimization problem is high-dimensional, non-linear, and time-varying and represents an ideal problem where AI solutions can be used as a disruptive technology.

B. AI-Based Resource Allocation Framework

To mitigate the inherent complexity of the non-convex optimization problem of resource allocation in wireless systems, we leverage a reinforcement learning (RL) based scheme namely, Deep Q-Networks (DQN) [

30,

31]. It models the environment as a Markov Decision Process (MDP) with an observation sequence consisting of a state vector with CSI, traffic demands, interference levels, and a history of decisions made [

32,

33].

At each time step, DQN decides an action to make a power allocation and a sub-band assignment for each BS. The reward of action encourages the decision upon which action is better than others so that the network throughput and energy efficiency are optimized while high latency and unfair resource allocation are penalized [

34,

35]. DQN estimates the optimal action-value function with deep neural networks, which are trained using experience replay and fixed target networks to ensure learning stability [

36,

37].

Our implementation is based on a multi-agent extension of DQN, which plans in a decentralized way of cellular networks, where agents in each BS coordinate via limited signaling or centralized critic (as in [

38] or [

39]).

C. Deep Learning Signal Classification

Meanwhile, for modulation classification, we adopt a deep learning model by the one-dimensional convolutional neural network (1D-CNN), as discussed in [

40,

41,

42]. The model receives as input raw I/Q samples and processes them through convolutional layers to obtain robust temporal information.

The CNN contains several convolutional layers with ReLU activation, max pooling, and fully connected layers. The last layer is a softmax activation layer to make the classification for common modulation schemes, including BPSK, QPSK, 8-PSK, 16-QAM, and 64-QAM [

43,

44]. The model is trained on the RadioML 2016.10a dataset, where signal samples are given labels for different SNRs and different channel conditions as well [

45].

This method can greatly improve the classification accuracy even at low signal-to-noise ratio [

46]. The CNN’s resilience to fading and interference is a key enabler for adaptive, intelligent receivers in AI-based communication systems [

47].

D. Training and Simulation Environment

The training is carried out in a hybrid simulation setting. RL-based resource allocation: We adapt the OpenAI Gym environment to model multi-cell wireless networks for the purpose of this study, based on the approaches in [

48,

49]. The wireless channel models are in accordance with 3GPP standards, and UE mobility is considered to simulate practical deployment [

50].

The DQN is trained in an episodic manner where every episode emulates a full TTI sequence. The loss is minimized with an Adam optimizer at learning rate = 0.001. The hyperparameters are fine-tuned by grid searching and early stopping is employed to prevent overfitting [

51].

For signal classification using deep learning, the CNN is developed using the TensorFlow, observes training on GPUs with stochastic gradient descent (SGD) and adapts batch normalization to accelerate convergence [

52,

53]. The methods are demonstrated for performance porpose, with cross-validation procedures and confusion matrix analysis.

Simulations are conducted with NS-3 to evaluate the end-to-end performance indicators such as throughput, latency and energy consumption. Integration with the AI agents is implemented through NS-3 Python APIs and socket-based interfaces described in [

54,

55].

E. Evaluation Metrics

PerformanceThe AI based framework is assessed on the following metrics:

Spectrum Efficiency (SE): Network throughput per unit bandwidth, measured as bps/Hz [

27].

Energy Efficiency (EE): Sum aggregate throughput by Joule of energy consumption, under green communication direction [

28].

Latency: The average time between the data generation and successful reception, game changer for URLLC use [

34].

Modulation Classification Accuracy: The percentage of accurately classified signals, tested on a test set with various SNR [

45].

Fairness Index: Jain fairness index to measure fairness in resource distribution among UEs [

29].

These measurements present a comprehensive perspective on network performance and AI model capability in changing wireless contexts.

IV. Challenges and Limitations

However AI for wireless networks has the promise to provide significant benefits, but it has several challenges. Issue of Concern The biggest issue is the dearth of the high quality labeled data, because the labeled data are extremely important for the training of accurate and reliable supervised learning models [

56,

57]. In practical wireless environments, data collection is expensive and time-consuming, and data privacy policies could also limit the availability of user-related information.

There is also a computational constraint on deep learning models. The training of such models is computationally extensive, requiring large computational and memory resources, which are generally beyond the capability of edge devices that are usually employed in wireless networks [

58,

59]. This limitation greatly impedes the popularization of AI-based approaches in environments requiring low-latency and resource-constrained, such as in the area of edge computing and IoT [

60].

Real-time operation is another hurdle. The mobility transmission management is based on the requirements and can minimise the handoff Ping-pong looping effect which depends on different network parameters. Yet inference time of deep models is not guaranteed to meet the aggressive on-line constraints of applications, such as autonomous driving or URLLC (Ultra-Reliable Low-Latency Communication) [

61,

62]. From the point of view of research, reaching a tradeoff between accuracy and inference speed is an open question [

63].

Challenges regarding security and robustness are also at the forefront. AI models are not invulnerable to adversarial attacks, in which feeds of inputs might lead to wrong decisions, threatening network safety and reliability [

64,

65]. In addition, back-door attacks at the training stage can break down the trustworthiness of AI models, causing network performance to deteriorate for an extended period of time [

66,

67]. These security vulnerabilities call for secure and reliable AI frameworks for wireless apps [

68].

And AI models can serve as “black boxes,” with little transparency into how they make decisions. Such a lack of clarity can compromise trust and impact their use in mission-critical systems. Explanation mechanisms to tackle this problem are presented in the context of explainable AI (XAI), but it is still an underdeveloped area of research [Societal], especially in communication systems where explainability has to be combined with real-time analysis [

69,

70,

71].

Scaling and generalization are also obstacles for AI application in heterogeneous wireless environments. A model trained in one context may not generalize well when deployed in another geographical region or network setting [

72,

73]. strong adaptation and transfer learning methods are needed in order to enhance the model reliability under different settings [

42].

Finally, the incorporation of AI in (standardized) communication protocols and (industrial) equipment is not yet finished. The lack of end-to-end model deployment platforms and the lack of co-ordination among the different parties make the full-scale deployment of AI in wireless systems more challenging [

76,

77,

78]. Initiatives such as O-RAN and 3GPP are moving towards standardizing AI adoption, although full maturity is far from being reached [

79,

80,

81].

Summing up, AI has transformative potential for wireless networks, which said challenges must be dealt with for its successful and secure deployment [

82,

83,

84,

85].

V. Future Directions

Future wireless networks will likely leverage federated learning for privacy-preserving AI, edge AI for low-latency inference, and explainable AI for trustworthy decisions. Integration with 6G will require new frameworks to support AI-native communication infrastructure. These networks will emphasize self-optimization, context-awareness, and adaptability. Research will also focus on integrating AI with emerging technologies such as intelligent reflecting surfaces (IRS), non-terrestrial networks (NTN), and massive MIMO.

VI. Conclusion

Artificial Intelligence has the power to fundamentally change the field of wireless communication with transformative gains of efficiency, adaptability and intelligence. By incorporating machine learning, deep learning, and reinforcement learning to existing network functionalities (e.g., resource/signal/spectrum management), AI enables wireless systems to perform more dynamically, data-driven, and autonomous. The proposed methodology in this paper provides a successful approach of training and deploying AI models in a more realistic wireless environment which can enhance the mentioned performance metrics (i.e., throughput, latency, energy efficiency and fairness).

However, as pointed out, the issues pertaining to data accessibility, computational resource utilization, real-time limitations, and security concern need to be resolved to achieve safe and scalable AI incorporation. Considering the future evolution towards 6G and beyond, AI will progress from a supportive to a primary component of network design. Future studies should pay attention to AI interpretability, decentralization (e.g., federated learning), and cross-domain collaboration to fully unleash the power of AI in next-generation wireless systems.

In summary, the combination of AI and wireless communication is a key area for academic research and industrial deployment, enabling future intelligent, resilient, and self-optimizing networks to accommodate the exponentially increasing requirements of present and future applications.

References

- Singh, H. (2025) AI-Powered Chatbots Transforming Customer Support through Personalized and Automated Interactions. Available at SSRN 526 7858.

- Kumar, T. V. (2019). Personal Finance Management Solutions with AI-Enabled Insights.

- Arora, A. (2025). Transforming Cybersecurity Threat Detection and Prevention Systems Using Artificial Intelligence. Available at SSRN 526 8166.

- Sidharth, S. (2022). Zero Trust Architecture: A key component of modern cybersecurity frameworks.

- Singh, B. (2025). DevSecOps: A Comprehensive Framework for Securing Cloud-Native Applications. Available at SSRN 526 7982.

- Dalal, A. (2025). Driving Business Transformation Through Scalable and Secure Cloud Computing Infrastructure Solutions. Aryendra Dalal, Deloitte. Available at SSRN 5268120.

- Singh, B. (2025). Challenges and Solutions for Adopting DevSecOps in Large Organizations. Available at SSRN 526 7971.

- Singh, H. (2025). Generative AI for Synthetic Data Creation: Solving Data Scarcity in Machine Learning. Available at SSRN 5267914.

- Sidharth, S. (2023). The Role of Homomorphic Encryption in Secure Cloud Data Processing.

- Kumar, T. V. (2021). Natural Language Understanding Models for Personalized Financial Services.

- Singh, B. (2025). Enhancing Oracle Database Security with Transparent Data Encryption (TDE) Solutions. Available at SSRN 5267924.

- Arora, A. (2025). Enhancing Customer Experience Across Multiple Business Domains Using Artificial Intelligence. Available at SSRN 526 8178.

- Singh, H. (2025). Artificial Intelligence and Robotics Transforming Industries with Intelligent Automation Solutions. Available at SSRN 526 7868.

- Singh, B. (2025). Practices, and Implementation Strategies. (, 2025). 23 May.

- Sidharth, S. (2020). The growing threat of deepfakes: Implications for security and privacy.

- Dalal, A. (2025). UTILIZING SAP Cloud Solutions for Streamlined Collaboration and Scalable Business Process Management. Available at SSRN 526 8108.

- Arora, A. (2025). Comprehensive Cloud Security Strategies for Protecting Sensitive Data in Hybrid Cloud Environments.

- Kumar, T. V. (2015). Cloud-Native Model Deployment for Financial Applications.

- Singh, B. (2025). CD Pipelines Using DevSecOps Tools: A Comprehensive Study. (, 2025). 23 May.

- Singh, H. (2025). Enhancing Cloud Security Posture with AI-Driven Threat Detection and Response Mechanisms. Available at SSRN 5267878.

- Arora, A. (2025). Analyzing Best Practices and Strategies for Encrypting Data at Rest (Stored) and Data in Transit (Transmitted) in Cloud Environments. Available at SSRN 5268190.

- Singh, B. (2025). Oracle Database Vault: Advanced Features for Regulatory Compliance and Control. Available at SSRN 5267938.

- Sidharth, S. (2024). Enhancing Cloud Security with AI-Based Intrusion Detection Systems.

- Singh, H. (2025). Meeting Regulatory and Compliance Standards. (, 2025). 23 May.

- Dalal, A. (2025). BRIDGING OPERATIONAL GAPS USING CLOUD COMPUTING TOOLS FOR SEAMLESS TEAM COLLABORATION AND PRODUCTIVITY. Available at SSRN 526 8126.

- Kumar, T. V. (2019). Blockchain-Integrated Payment Gateways for Secure Digital Banking.

- Sidharth, S. (2019). Securing cloud-native microservices with service mesh technologies.

- Singh, H. (2025). Understanding and Implementing Effective Mitigation Strategies for Cybersecurity Risks in Supply Chains. Available at SSRN 526 7866.

- Singh, B. (2025). Building Secure Software Faster with DevSecOps Principles, Practices, and Implementation Strategies. (, 2025). 23 May.

- Arora, A. (2025). The Future of Generative AI and Its Role in Shaping Secure and Ethical AI Systems.

- Kumar, T. V. (2016). Layered App Security Architecture for Protecting Sensitive Data.

- Shuriya, B. , & Thenmozhi, S. (2015). RBAM with Constraint Satisfaction Problem in Role Mining. International Journal of Innovative Research and Development, 4(2).

- Singh, H. (2025). The Role of Multi-Factor Authentication and Encryption in Securing Data Access of Cloud Resources in a Multitenant Environment. Available at SSRN 5267886.

- Arora, A. (2025). Evaluating Ethical Challenges in Generative AI Development and Responsible Usage Guidelines. Available at SSRN 526 8196.

- Sidharth, S. (2021). Multi-cloud environments: Reducing security risks in distributed architectures.

- Kumar, T. V. (2015). Serverless Frameworks for Scalable Banking App Backends.

- Singh, B. (2025). Mastering Oracle Database Security: Best Practices for Enterprise Protection. Available at SSRN 5267920.

- Singh, B. (2025). Enhancing Real-Time Database Security Monitoring Capabilities Using Artificial Intelligence. Available at SSRN 5267988.

- Singh, H. (2025). Strengthening Endpoint Security to Reduce Attack Vectors in Distributed Work Environments. Available at SSRN 526 7844.

- Dalal, A. (2025). The Research Journal (TRJ): A Unit of I2OR. Available at SSRN 5268120.

- Arora, A. (2025). Securing Multi-Cloud Architectures Using Advanced Cloud Security Management Tools. Available at SSRN 5268184.

- Singh, H. (2025). Cybersecurity for Smart Cities: Protecting Infrastructure in the Era of Digitalization. Available at SSRN 5267856.

- Arora, A. (2025). Zero Trust Architecture: Revolutionizing Cybersecurity for Modern Digital Environments. Available at SSRN 5268151.

- Singh, B. (2025). Integrating Threat Modeling In DevSecOps for Enhanced Application Security. Available at SSRN 526 7976.

- Singh, B. (2025). Shifting Security Left: Integrating DevSecOps into Agile Software Development Lifecycles. Available at SSRN 5267963.

- Kumar, T. V. (2017). Cross-Platform Mobile Application Architecture for Financial Services.

- Sidharth, S. (2023). AI-driven anomaly detection for advanced threat detection.

- Singh, H. (2025). Key Cloud Security Challenges for Organizations Embracing Digital Transformation Initiatives. Available at SSRN 526 7894.

- Sidharth, S. (2020). The rising threat of deepfakes: Security and privacy implications.

- Singh, B. (2025). Advanced Oracle Security Techniques for Safeguarding Data Against Evolving Cyber Threats. Available at SSRN 526 7951.

- Kumar, T. V. (2020). Generative AI Applications in Customizing User Experiences in Banking Apps.

- Singh, H. (2025). The Future of Generative AI: Opportunities, Challenges, and Industry Disruption Potential. (, 2025). 23 May.

- Arora, A. (2025). Developing Generative AI Models That Comply with Privacy Regulations and Ethical Principles. Available at SSRN 526 8204.

- Sidharth, S. (2019). Enhancing security of cloud-native microservices with service mesh technologies.

- Dalal, A. (2023). Data Management Using Cloud Computing. Available at SSRN 519 8760.

- Singh, H. (2025). How Generative AI is Revolutionizing Scientific Research by Automating Hypothesis Generation. Available at SSRN 526 7912.

- Kumar, T. V. (2018). Event-Driven App Design for High-Concurrency Microservices.

- Arora, A. (2025). Integrating DevSecOps Practices to Strengthen Cloud Security in Agile Development Environments. Available at SSRN 526 8194.

- Singh, B. (2025). Integrating Security Seamlessly into DevOps Development Pipelines Through DevSecOps: A Holistic Approach to Secure Software Delivery. Available at SSRN 5267955.

- Sidharth, S. (2022). Improving generative AI models for secure and private data synthesis.

- Shuriya, B. , Umamaheswari, S., Rajendran, A., & Sivaprakash, P. (2023, June). One-Dimensional Dilated Hypothesized Learning Method for Intrusion Detection System Under Constraint Resource Environment. In 2023 2nd ICAECA (pp. 1–6). IEEE.

- Dalal, A. , et al. (2025, February). Developing a Blockchain-Based AI-IoT Platform for Industrial Automation and Control Systems. In IEEE CE2CT (pp. 744–749).

- Singh, H. (2025). Strengthening Endpoint Security to Reduce Attack Vectors in Distributed Work Environments. Available at SSRN 526 7844.

- Arora, A. (2025). THE SIGNIFICANCE AND ROLE OF AI IN IMPROVING CLOUD SECURITY POSTURE FOR MODERN ENTERPRISES. Available at SSRN 526 8192.

- Sidharth, S. (2019). Quantum-enhanced encryption techniques for cloud data protection.

- Singh, H. (2025). Advanced Cybersecurity Techniques for Safeguarding Critical Infrastructure Against Modern Threats. SSRN. https://ssrn.com/abstract=5267496.

- Arora, A. (2025). The Future of Cybersecurity: Trends and Innovations Shaping Tomorrow’s Threat Landscape. Available at SSRN 5268161.

- Singh, B. (2025). Advanced Threat Detection Using AI-Driven Anomaly Detection Systems.

- Kumar, T. V. (2022). AI-Powered Fraud Detection in Real-Time Financial Transactions.

- Arora, A. (2025). Detecting and Mitigating Advanced Persistent Threats in Cybersecurity Systems.

- Sidharth, S. (2024). Strengthening Cloud Security with AI-Based Intrusion Detection Systems.

- Singh, H. (2025). The Importance of Cybersecurity Frameworks and Constant Audits for Identifying Gaps.

- Dalal, A. (2025). DEVELOPING SCALABLE APPLICATIONS THROUGH ADVANCED SERVERLESS ARCHITECTURES IN CLOUD ECOSYSTEMS. Available at SSRN 526 8116.

- Singh, B. (2025). CD Pipelines Using DevSecOps Tools: A Comprehensive Study. Presented , 2025. 23 May.

- Singh, H. (2025). Meeting Regulatory and Compliance Standards. Presented , 2025. 23 May.

- Singh, B. (2025). Practices, and Implementation Strategies. Presented , 2025. 23 May.

- Kumar, T. V. (2015). Analysis of SQL and NoSQL Database Management Systems Intended for Unstructured Data.

- Jha, K. , Dhakad, D., & Singh, B. (2020). Critical Review on Corrosive Properties of Metals and Polymers in Oil and Gas Pipelines.

- Singh, H. (2025). Securing High-Stakes Digital Transactions: A Comprehensive Study on Cybersecurity and Data Privacy in Financial Institutions. Available at SSRN 5267850.

- Singh, B. (2025). Enhancing Oracle Database Security with Transparent Data Encryption.

- Singh, H. (2025). AI-Powered Chatbots Transforming Customer Support.

- Sidharth, S. (2019). Homomorphic Encryption: Enabling Secure Cloud Data Processing.

- Dalal, A. (2025). Revolutionizing Enterprise Data Management Using SAP HANA.

- Arora, A. (2025). Enhancing Customer Experience Across Multiple Business Domains.

- Shuriya, B. , Kumar, S. V., & Bagyalakshmi, K. (2024). Noise-Resilient Homomorphic Encryption arXiv preprint. arXiv:2412.11474.

|

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).