1. Introduction and Motivation

This section introduces the motivation for this paper. It also includes the background that informed our approach to this work.

Neural Tax Networks [1, 2] is building a proof-of-technology for a multimodal AI system. The first modality is based on automated theorem-proving for first-order logic systems. This is realized by using the logic programming language ErgoAI [3] for proving statements in tax law. The second modality is expected to use LLMs (Large Language Models) in two ways. The initial application of LLMs is to help users input information into the system and understand the system’s output or answer. This application of LLMs is the focus of our comparison of modalities of LLMs and first-order logical theorem-proving systems. The second application of LLMs will target the translation of tax law into ErgoAI code. Both of these applications of LLMs are challenging and have not yet been fully tried and tested.

This paper leverages logical model theory to better understand the limits of applying these two modalities together. In particular, this paper gives insight into the limits on combining LLMs and theorem-proving systems into a single system capable of both reading and analyzing U.S. tax law. We make a key assumption: the LLMs work with an uncountable number of words over all time. We argue this is not an unreasonable assumption since deeper specialization in new areas of the law or new areas of human understanding often requires either the creation of new words or the assignment of new, additional meanings to existing words. These new word meanings differ from previous word meanings. Moreover, (1) LLMs are based on very large amounts of data that are occasionally updated, (2) linguistic models such as Heaps’ law [4] allow partial estimates of the number of unique words in a document based on the document’s size and do not have an explicit bound, and (3) natural language usage by speakers adds new words, or creates additional meanings of words over time, while fewer words fall out of use or represent irrelevant concepts.

We will highlight a special case of the upward Löwenheim–Skolem Theorem that indicates if we have a countably infinite number of word meanings for our system, then we have an uncountable set of word meanings. Alternatively, we can take a limit over all time, providing an uncountable number of word meanings over an infinite number of years.

We argue that resolution theorem-proving systems, unlike LLMs, have domains that contain at most a countable number of words and word meanings. Particularly, these words and word meanings are established when the rules and facts are set. In addition, for applications of theorem-proving systems to legal reasoning, the rules are often set with specific word meanings in mind.

A key question we work on is: Suppose a theorem-proving system (such as a system designed to analyze U.S. tax law) does not add any new rules while new word meanings are added to the system’s domain by an LLM. This LLM essentially “feeds” the words represented by LLM tokens into the theorem-proving system. For example, suppose a theorem-proving system based on U.S. tax law must answer a user’s question that uses a word the LLM has tokenized with a certain meaning, but that word does not appear in the text of the tax law that would otherwise apply to the question. In this case, the theorem-proving system’s rules would work the same for the original domain (i.e., the domain of words contained in the tax law when it was enacted) while being required to deal with the new words that are tokenized from an LLM. To address this in our analysis we assume an uncountable domain of word meanings, a subset of which may be supplied to the theorem-proving system by the LLMs.

A novel application of the Löwenheim–Skolem (L-S) Theorems and elementary logical model equivalence, see Theorems 7 and 8, indicates that the same rules of the theorem-proving system can still work even when an uncountable number of word meanings are in the theorem-proving system’s domain. Another view is from the Lefschetz Principle of first-order logic [5]. This indicates the same first-order logic sentences can be true given specific models with different cardinalities. For instance, one model may have cardinality $\aleph_0$ and another may have cardinality $2^{\aleph_0}$.

1.1. Technical Background

ChatGPT was one of the first LLMs to be widely available to the public. ChatGPT and many other similar models are based on [6]. Surveys of LLMs include [7, 8].

This paper seeks insight on multimodal AI systems based on the foundations of computing and mathematics. To do so we analyze the effects of the combination of a first-order logic theorem proving system with LLMs.

LLMs have been augmented with logical reasoning [9]. Our work uses logical reasoning systems to augment LLMs. There has also been work on the formalization of AI based on the foundations of computing. For example, standard notions of learnability can neither be proven nor disproven [10]. This result leverages foundations of machine learning and a classical result of the independence of the continuum hypothesis. The Turing test can be viewed as a version of an interactive proof system, bringing the Turing test to key foundations of computational complexity [11].

1.2. Technical Approach

The domain of an LLM is the specific area of knowledge or expertise that the model is focused on. It’s essentially the context in which the LLM is trained and applied. Thus it determines the types of tasks it can perform and the kind of information it can process and generate.

Applying LLMs is the next phase of our proof-of-technology. We already have basic theorem proving working in ErgoAI with several tax rules. It is the practical combination of these modalities that will allow complex questions to be precisely answered. Ideally, given the capabilities of LLMs, the users will be able to easily work with the system.

Many theorem proving systems supply answers with explanations. In our case, these explanations are proofs from the logic-programming system ErgoAI. Of course, the Neural Tax Networks system assumes all facts entered by a user are true.

ErgoAI is an advanced multi-paradigm logic-programming system by Coherent Knowledge LLC [3, 12]. It is Prolog-based and includes non-monotonic logics, among other advanced logics and features. For instance, ErgoAI supports defeasible reasoning. Defeasible reasoning allows a logical conclusion to be defeated by new information. This is sometimes how law is interpreted: If a new law is passed, then any older conclusions that the new law contradicts are disallowed.

The idea that proofs are syntactic and model theory is semantic goes back to, at least, the start of logical model theory. Of course, the semantics of model theory or LLMs may not align with the linguistic or philosophical semantics of language.

Table 1. List of symbols and their definitions

| Symbol | Definition |
|---|---|
| $\vdash E$ | Root of a proof tree |
| $G$ | A context-free grammar |
| $\aleph_0$ | Countable infinite cardinality |
| $2^{\aleph_0}$ | Uncountable infinite cardinality |
| | Logical model of first-order theorem-proving system with originalism |
| | Logical model of LLMs |
| $\mathbb{N}$ | The set of natural numbers |
| $\mathbb{Q}$ | Field of rational numbers |
| $\overline{\mathbb{Q}}$ | Field of all solutions to polynomial equations with coefficients from $\mathbb{Q}$ |
| $\mathbb{R}$ | The set of real numbers |
| $\mathbb{C}$ | The set of complex numbers |

1.3. Structure of This Paper

Section 2 discusses select previous work on multimodality and the law. It briefly reviews expert systems and approaches where LLMs can perform some level of legal reasoning.

Section 3 gives a logic background illustrated by select ErgoAI statements. This section shows ErgoAI or Prolog have at most a countably infinite number of atoms that may be substituted for variables. We argue each of these atoms has a finite number of meanings.

Section 4 introduces logical model theory and gives a high-level view of how our system works.

Section 5 discusses the upward and downward Löwenheim-Skolem theorems as well as elementary equivalence from logical model theory. We give results based on these foundations to show (1) LLMs have uncountable models under certain circumstances, and (2) Models of both LLMs and the first-order logic theorem proving systems cannot be distinguished by first-order logic expressions.

3. First-Order Logic Systems

This section reviews first-order logic. It discusses aspects of legal reasoning as it may be implemented in ErgoAI.

First-order logic expressions have binary logical connectives such as $\wedge$, $\vee$, and $\rightarrow$, and a unary symbol ¬. They also have variables and the quantifiers $\forall$ and $\exists$. These quantifiers only apply to the variables. We also discuss functions and predicates in first-order logic. It is the variables, functions, and predicates that differentiate first-order logic from more basic logics. Functions and predicates represent relationships. The logical connectives, functions, and predicates all can apply to the variables. We assume all logic expressions are well-formed, finite, and in first-order logic.

Proofs are syntactic structures. A proof of a logic formula may be concisely expressed as a directed acyclic graph. First-order logic proofs can be laid out as trees. If the proof’s goal is to establish that an expression $E$ is provable, then the proof tree has $\vdash E$ as its root. The vertices of a proof graph are facts, axioms, and expressions. The directed edges connect nodes by an application of an inference rule. The inference rule for Prolog-like theorem-proving systems is generally selective linear definite clause (SLD) resolution. Applying such inference rules may require substitution or unification. If a logical formula is provable, then finding a proof often reduces to trial and error or exhaustive search.

ErgoAI has frame-based syntax which adds structure and includes object oriented features. The ErgoAI or Prolog expression H:-B is a rule. This rule indicates that if the body B is true then conclude the head H is true.

Listing 1 is an ErgoAI rule for determining if an expenditure is a deduction. The notation ?X is that of a variable. In Listing 1, ?X is an expenditure that has Boolean typed properties ordinary, necessary, and forBusiness.

Listing 1. A rule in ErgoAI in frame-based syntax

    ?X:Deduction :-
        ?X:Expenditure,
        ?X[ordinary => \boolean],
        ?X[necessary => \boolean],
        ?X[forBusiness => \boolean].

The rule in Listing 1 has a body indicating that if there is an ?X that is an expenditure with true properties ordinary, necessary, and forBusiness, then ?X is a deduction. This rule is taken as an axiom.

The ErgoAI code in Listing 2 has three forBusiness expenses. It also has two donations that are not forBusiness. Since these two donations are not explicitly forBusiness, by negation-as-failure ErgoAI and Prolog systems assume they are not forBusiness. The facts are the first five lines. There is a rule on the last line. Together the facts and rules form the database of axioms for an ErgoAI frame-based program.

Listing 2. Axioms in ErgoAI in frame-based syntax

    employeeCompensation:forBusiness.
    rent:forBusiness.
    robot:forBusiness.
    foodbank:donation.
    politicalParty:donation.
    ?X:liability :- ?X:forBusiness.

A program in the form of a query of the database in Listing 2 is in Listing 3. This listing shows three matches for the variable ?X.

Listing 3. A program in ErgoAI in frame-based syntax.

    ErgoAI> ?X:forBusiness.
    >> employeeCompensation
    >> rent
    >> robot

Hence an ErgoAI system can prove employeeCompensation, rent, and robot all are forBusiness. We can also query the liabilities, which gives the same output as the bottom three lines of Listing 3.
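The query behavior described above can be mimicked outside ErgoAI. The following is a minimal Python sketch, not ErgoAI code. It assumes class membership can be modeled as a set of (object, class) pairs, and the names `FACTS`, `apply_liability_rule`, `members`, and `holds` are hypothetical helpers introduced only for illustration. The rule `?X:liability :- ?X:forBusiness` is applied by one pass of forward chaining, and negation-as-failure is modeled by treating any membership that cannot be derived as false.

```python
# Facts from the axioms listing, modeled as (object, class) pairs.
# This encoding is an assumption for illustration, not ErgoAI's internals.
FACTS = {
    ("employeeCompensation", "forBusiness"),
    ("rent", "forBusiness"),
    ("robot", "forBusiness"),
    ("foodbank", "donation"),
    ("politicalParty", "donation"),
}


def apply_liability_rule(facts):
    """Apply ?X:liability :- ?X:forBusiness once (forward chaining)."""
    derived = {(obj, "liability") for obj, cls in facts if cls == "forBusiness"}
    return facts | derived


def members(facts, cls):
    """Answer the query ?X:cls by listing every matching object."""
    return sorted(obj for obj, c in facts if c == cls)


def holds(facts, obj, cls):
    """Negation-as-failure: a membership that cannot be derived is false."""
    return (obj, cls) in facts


DATABASE = apply_liability_rule(FACTS)

if __name__ == "__main__":
    print(members(DATABASE, "forBusiness"))
    print(members(DATABASE, "liability"))
    print(holds(DATABASE, "foodbank", "forBusiness"))
```

In this sketch the liability query returns the same three objects as the forBusiness query, and the two donations are not forBusiness only because no fact or rule derives that membership.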

It is interesting that some modern principles of tax law are not all that different from ancient principles of tax law. The principles underpinning tax law evolve slowly. Certain current tax principles are analogous to principles found in the Roman Empire some 1,900 or more years ago [32, 33]. While the meaning of words evolves, ideally the semantics of tax law remains the same over time. Modern tax law is of course much more complex than ancient tax law, in large part because both commercial laws and bookkeeping have improved and become more complex (and more precise) than in ancient times. Modern tax law has evolved as financial transactions have evolved, and it has even allowed the creation of more complex financial transactions.

Under the legal theory of Originalism, the words in the tax law should be given the meaning those words had at the time the law was enacted. Scalia and Garner’s book is on how to interpret written laws ([34], p. 78). For example, they say,

“Words change meaning over time, and often in unpredictable ways.”

Assumption A1 (Originalism). A law should be interpreted based on the meaning of its words when the law was enacted.

Of course, tax law can be changed quickly by a legislature. Assuming originalism, the meaning of the words in the newly changed laws, when the new laws are enacted, is the basis of understanding these new laws.

Specifying the meanings of words in natural language uses natural language. Specifying meanings or words for theorem-proving systems uses variables that are expressed as atoms. The syntax of variables in most programming languages is specified by regular expressions. Variables can be specified or grouped together using context-free grammars. The same is true for the syntax of atoms in ErgoAI or Prolog. An atom is an identifier that represents a constant, a string, a name, or a value. Atoms are strings of alphabetic characters whose first character is lower-case or the underscore. Atoms can name predicates, but the focus here is on atoms representing items that are to be substituted for variables in first-order expressions.

In tax law, some of the inputs may be parenthesized expressions. For example, some amounts owed to the government are compounded quarterly based on the “applicable federal rate” published by the U.S. Treasury Department. Such an expression may be best written as a parenthesized expression. So, the text of all unique words or inputs for the tax statutes is a subset of certain context-free grammars. A context-free grammar can represent parenthesized expressions and atoms in ErgoAI.

Definition 1 (Context-free grammar (CFG)). A context-free grammar is a 4-tuple $G$ so that $G = (N, \Sigma, P, S)$ where $N$ is a set of non-terminal variables, $\Sigma$ is a set of terminals or fixed symbols, $P$ is a set of production rules so $p \in P$ is such that $p : A \rightarrow \alpha$ where $A \in N$ and $\alpha \in (N \cup \Sigma)^{*}$, and $S \in N$ is the start symbol.

The language generated by a context-free grammar is all strings of terminals that are generated by the production rules of a CFG. The number of strings in a language generated by CFGs is at most countably infinite.

Definition 2 (Countable and uncountable numbers). If all elements of any set $T$ can be counted by some or all of the natural numbers $\mathbb{N}$, then $T$ has cardinality $|T| \leq \aleph_0$. Equality holds when there is a bijection between the elements of the set $T$ and a non-finite subset of $\mathbb{N}$.

The real numbers $\mathbb{R}$ have cardinality $2^{\aleph_0}$, which is uncountable.

There is a rich set of extensions and results beyond Definition 2, see for example [35, 36, 37, 38]. The next theorem is classical. In our case, this result is useful for applications of the Löwenheim–Skolem (L-S) Theorems. See Theorem 8.

Theorem 1 (Domains from context-free grammars). A context-free grammar can generate a language of size $\aleph_0$.

Proof. Consider a context-free grammar $G$ made of a set of non-terminals $N$, a set of terminals $\Sigma$, a set of productions $P$, and the start symbol $S$. Let $\epsilon$ be the empty symbol.

The productions $P$ of interest are:

Starting from $S$, the set of all strings of terminals $w$ that can be derived from $P$ is written as,

$$L(G) = \{ w \in \Sigma^{*} : S \Rightarrow^{*} w \}.$$

So, the CFG $G$ can generate all strings representing the natural numbers $\mathbb{N}$. All natural numbers form a countably infinite set, completing the proof. □

The proof of Theorem 1 uses a CFG that generates the language of all integers in $\mathbb{N}$. Each individual word in the domain of all words used in the law can be uniquely numbered by an integer in $\mathbb{N}$. Separately, any numerical values required by computing taxes can also be represented using integers and parenthesized expressions.
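As a hedged illustration of Theorem 1, the sketch below assumes one particular grammar for decimal numerals: $S \rightarrow 0 \mid N\,T$, $T \rightarrow D\,T \mid \epsilon$, $N \rightarrow 1 \mid \dots \mid 9$, and $D \rightarrow 0 \mid \dots \mid 9$. This grammar is an assumption chosen for illustration and is not necessarily the grammar used in the proof. The function names `in_language` and `enumerate_language` are hypothetical. The enumeration lists the language shortest string first, so the $k$-th string is the decimal numeral of $k$, which exhibits a bijection with $\mathbb{N}$.

```python
from itertools import count, islice, product

DIGITS = "0123456789"   # terminals D (assumed for this sketch)
NONZERO = "123456789"   # terminals N: leading digits other than zero


def in_language(w: str) -> bool:
    """Check S =>* w for the assumed grammar S -> 0 | N T, T -> D T | epsilon."""
    if w == "0":
        return True
    return len(w) > 0 and w[0] in NONZERO and all(ch in DIGITS for ch in w[1:])


def enumerate_language():
    """Yield every string of the language exactly once, shortest first."""
    yield "0"
    for length in count(1):
        for first in NONZERO:
            # All digit strings of the remaining length, in lexicographic order.
            for rest in product(DIGITS, repeat=length - 1):
                yield first + "".join(rest)


if __name__ == "__main__":
    print(list(islice(enumerate_language(), 12)))
```

Because numerals of equal length sort the same way lexicographically and numerically, the position of each string in the enumeration equals the natural number it denotes.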

Listing 1 can be expressed in terms of provability. In this regard, consider Listing 4.

Listing 4. Applying a rule or axiom in pseudo-ErgoAI as a proof

    ?X:Expenditure
    ∧ ?X[ordinary -> \true]
    ∧ ?X[necessary -> \true]
    ∧ ?X[forBusiness -> \true]
    ⊢ ?X:Deduction

So, if there is an ?X that is an expenditure, and it is ordinary, necessary, and forBusiness, then this is accepted as a proof that ?X is a deduction. This is because rules and facts are axioms. So, if the first four lines of the rule in Listing 4 are satisfied, then we have a proof that ?X is a Deduction.
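The deduction rule can be read as a conjunction of four conditions. The following Python sketch is an illustration only and is not ErgoAI code. It assumes each item is a dictionary of Boolean properties, and the function name `is_deduction`, the key `isExpenditure`, and the sample items are hypothetical. A missing property is treated as false, which mirrors negation-as-failure.

```python
def is_deduction(item):
    """Return True only if all four premises of the deduction rule hold.

    A property that is absent from the item counts as false, which mirrors
    negation-as-failure in Prolog-like systems.
    """
    required = ("isExpenditure", "ordinary", "necessary", "forBusiness")
    return all(item.get(prop, False) is True for prop in required)


# Hypothetical sample items, introduced only for this sketch.
office_rent = {
    "isExpenditure": True,
    "ordinary": True,
    "necessary": True,
    "forBusiness": True,
}
charity_gift = {"isExpenditure": True, "ordinary": True, "necessary": True}

if __name__ == "__main__":
    print(is_deduction(office_rent))
    print(is_deduction(charity_gift))
```

The second item fails the check because nothing states that it is forBusiness, not because anything states that it is not.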

The statement $\vdash E$ indicates that $E$ is provable in the logical system at hand. That is, $E$ is a tautology. A tautology is a formula that is always true. For example, given a Boolean variable $X$, then $X \vee \neg X$ must always be true so it is a tautology. The formula $X \wedge \neg X$ is a contradiction. Contradictions are always false.
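For propositional formulas, being a tautology or a contradiction can be checked by brute force over all truth assignments. The sketch below is a minimal illustration under that assumption. The function name `classify` and the encoding of formulas as Python callables are hypothetical choices, and the approach does not extend to first-order formulas with quantifiers over infinite domains.

```python
from itertools import product


def classify(formula, num_vars):
    """Classify a propositional formula by evaluating every truth assignment.

    `formula` is a callable taking `num_vars` Boolean arguments.
    """
    values = [
        bool(formula(*assignment))
        for assignment in product([False, True], repeat=num_vars)
    ]
    if all(values):
        return "tautology"
    if not any(values):
        return "contradiction"
    return "contingent"


if __name__ == "__main__":
    print(classify(lambda x: x or not x, 1))
    print(classify(lambda x: x and not x, 1))
    print(classify(lambda x, y: x and y, 2))
```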

Consider a formula $E$ and a set of formulas $F$ in the same logical system. If we can prove $E$ from the formulas in $F$, the axioms made of the facts and rules of the system, and one or more inference rules, then we write $F \vdash E$. In other words, $F \vdash E$ states that $E$ can be proved from $F$ given the database of rules and facts of the system and one or more inference rules.

Also, consider a first-order logical system. Suppose E is formula of this system. A formula may have free variables. A free variable is not bound or restricted.

Adding quantification, where E is a first-order formula, then this means if or if . If a variable is quantified it is not a free variable. Quantification is important to express laws as logic. Theorem-proving languages such as ErgoAI or Prolog default to $\forall x$ for any free variable $x$.

Definition 3 (Logical symbols of a first-order language [37]). The logical symbols of a first-order language are,

- (1) variables
- (2) logic connectives
- (3) quantifiers $\forall$, $\exists$
- (4) scoping (, ) or [] or such-that :
- (5) equality =

Definition 4 ($\mathcal{L}$-language). If $\mathcal{L} = (L, D, \sigma)$, then this is a first-order $\mathcal{L}$-language where $L$ is the set of logical symbols and connectives, $D$ is the domain, and $\sigma$ is the signature of the system which is its constants, predicates, and functions.

The signature $\sigma$ of a logical system is the set of all non-logical symbols and operators of a first-order language [37].

A formula $f$ is an expression made from an $\mathcal{L}$-language and we write $f \in \mathcal{L}$. A formula $f$ has no free variables if each variable in $f$ is quantified by one of ∀ or ∃.

Definition 5 (Sentence). Consider a first-order logic language $\mathcal{L}$ and a formula $f \in \mathcal{L}$. If $f$ has no free variables, then $f$ is a sentence.

An interpretation defines a domain and semantics to functions and predicates for formulas or sentences. An interpretation that makes a formula E true is a model. Given an interpretation, a formula may not be either true or false if the formula has free variables.

Definition 6 (First-order logic interpretation [37]). Consider a first-order logic language $\mathcal{L} = (L, D, \sigma)$ and a set $I$. The set $I$ is an interpretation of $\mathcal{L}$ iff the following holds:

- There is an interpretation domain $D_I$ made from the elements in $I$.
- If there is a constant $c \in \sigma$, then it maps uniquely to an element $c_I \in D_I$.
- If there is a function symbol $f \in \sigma$ where $f$ takes $n$ arguments, then there is a unique $f_I : D_I^n \rightarrow D_I$ where $f_I$ is an $n$-ary function.
- If there is a predicate symbol $r \in \sigma$ where $r$ takes $n$ arguments, then there is a unique $n$-ary predicate $r_I \subseteq D_I^n$.

An interpretation $I$ of a first-order logic language $\mathcal{L}$ includes all of the logical symbols in $L$. Consider the domain $D$, signature $\sigma$, and an interpretation $I$. The interpretation $I$ can be applied to a sentence. Given any interpretation, any sentence must be true or false.

Definition 7 (Logical model). Consider a first-order $\mathcal{L}$-language and an interpretation $I$ of $\mathcal{L}$, then $I$ is a model iff all $f \in \mathcal{L}$ are so that $f_I$ is true, where $f_I$ corresponds with $f$.

Models are denoted and .

The expression indicates all interpretations of the system make E true. If an interpretation I in a logical system can express the positive integers and the sentence , then is true. However, if I is updated to include , then .

The expression means we can substitute values from I into E giving . The sentence is true when .

Definition 8 (First-order logic definitions). Given any set of first-order logic formulas E and an interpretation I, then E is:

- Valid if every interpretation I is so that E is true
- Inconsistent or Unsatisfiable if E is false under all interpretations I
- Consistent or Satisfiable if E is true under at least one interpretation I

The next result is classical, see [36, 37].

Theorem 2 (First-order logic is semi-decidable). Consider a set of first-order logic formulas E.

- If E is true, then there is an algorithm that can verify E’s truth in a finite number of steps.
- If E is false, then in the worst case there is no algorithm that can verify E’s falsity in a finite number of steps.

Suppose H and C are first-order logic formulas. If H is provable, then it is valid, so it is satisfied by all models.

Theorem 3 (Logical soundness). For any set of first-order logic formulas H and a first-order formula C: If $H \vdash C$, then $H \models C$.

Gödel’s completeness theorem [37] indicates that if a first-order logic formula is valid, then it is provable. If every model of H satisfies C, then C is provable from H.

Theorem 4 (Logical completeness). For any set of first-order logic formulas H and a first-order logic formula C: If $H \models C$, then $H \vdash C$.
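Soundness and completeness are converses of each other, so the two theorems can be summarized in one line. The display below is a restatement, assuming the standard reading in which $\vdash$ denotes provability and $\models$ denotes semantic consequence:

```latex
\[
  H \vdash C
  \quad\Longleftrightarrow\quad
  H \models C
  \qquad
  \text{for every set of first-order formulas } H \text{ and every first-order formula } C .
\]
```

The left-to-right direction is soundness and the right-to-left direction is completeness.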

There are many rules that can be found in tax statutes. Many of these rules are complex. Currently there are about word instances in the US Federal tax statutes and about word instances in the US Federal case tax law. Of course most of these words are repeated many times.

3.1. Interpretations and Models for Originalism and LLMs

This subsection discusses the interpretation and the model . These are used by the remainder of the paper. These interpretations are for first-order theorem-proving systems and LLMs. The focus here is on for ErgoAI.

Theorems 3 and 4 tell us that any first-order sentence that has a valid model can be proved. And symmetrically, any first-order sentence that can be proved has a valid model. These relationships extend to first-order logic in ErgoAI. Recall, all ErgoAI statements are equivalent to first-order sentences. Any ErgoAI statement that has a valid model is provable. Any first-order statement that is provable in ErgoAI has a valid model. Here we sketch a correspondence between ErgoAI statements and logical interpretations.

The interpretation is for first-order logic programs encoding tax law. Of course, the ideas here can apply to many other areas besides tax law. In any case, the interpretation fixes all word and phrase meanings from when the laws were enacted, see Assumption A1.

The domain is where any string can be uniquely represented by its numerical representation, such as characters in UTF-8. All strings in have first element of 1. All integers have a first element of 2.

Rules. A rule H :- B is interpreted as the logic expression $B \rightarrow H$.

Predicates. Predicate symbols can be uniquely represented as tuples over the domain. The first element of a predicate tuple is a 1 indicating the second element represents a string denoting the predicate’s name. The final element is the arity of the predicate.

Functions. Functions are predicates that return elements of the domain. Functions can be built in ErgoAI by having a predicate that returns an element of the domain or by using an if-then-else clause.

An ErgoAI predicate can be expressed as ?D:Refund :- ?D:TaxesOwed, ?D < 0. A fact can be expressed as ?P:NeedTaxAttorney :- true.

All expressions using are sentences. This is because languages, such as ErgoAI or Prolog, assumes universal quantification for any unbound variables.

Unification and negation-as-failure add complexity to first-order logic programming interpretations [43, 53]. Handling database changes in logic programs can be done using additional semantics [39].

4. Theorem Proving, Logic Models, and LLMs

This section focuses on logical model theory as it applies to LLMs using logic programming. Logic programming can illustrate logical semantics using the ⊧ symbol. We use first-order logic capabilities of ErgoAI in frame-mode.

If there is an ErgoAI rule or axiom H :- B, then H is the head and B is the body, and H holds if B is true.

Given

and

, the next statement is true:

That is, the interpretation

makes

and

true. Hence

is a model for

. Alternatively consider,

then

is true. This is because there is no ?X so that ?X:forBusiness in

.

In the case of LLMs, words or word fragments are represented by vectors (embeddings) in high-dimensional space. These words or word fragments are tokens. Each feature of a token has a dimension. In many cases there are thousands of dimensions [40]. Similar token vectors have close semantic meanings. This is a central foundation for LLMs [41, 42].

The notion of semantics in LLMs is based on the context-dependent similarity between token vectors [41]. These similarity measures form an empirical distribution. Over time, such empirical distributions change as the meanings of words evolve. We believe it is reasonable to assume our users will be using the most recent jargon. The most recent jargon may be different from the semantics of the laws when the laws were enacted. See Assumption A1.

In the case of LLMs, we use to indicate semantics by adding that close word or phrase vectors can be substituted for each other.

Given two word vectors $x$ and $y$ both from the set of all word vectors $V$ and a similarity measure $s : V \times V \rightarrow [-1, 1]$, values close to 1 indicate high similarity and values close to $-1$ indicate vectors whose words have close to the opposite meanings. The function $s$ can be a cosine similarity measure. In any case, suppose there are words where for all , then $y$ is similar enough to $x$ so $y$ can be substituted for $x$ given a suitable threshold. We assume similarity measures stringent enough so all are finite. Substitutions can be randomly selected from , which may make this AI method seem human-like.
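The threshold-based substitution described above can be sketched with cosine similarity. The following Python example is a minimal illustration and not the system's implementation. The three-dimensional toy vectors, the threshold of 0.9, and the names `cosine_similarity`, `substitutes`, and `TOY_VECTORS` are all assumptions made for this sketch. Real token embeddings have far more dimensions and come from a trained model.

```python
import math


def cosine_similarity(x, y):
    """Cosine of the angle between two vectors, a value in [-1, 1]."""
    dot = sum(a * b for a, b in zip(x, y))
    norm_x = math.sqrt(sum(a * a for a in x))
    norm_y = math.sqrt(sum(b * b for b in y))
    if norm_x == 0 or norm_y == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_x * norm_y)


def substitutes(word, vectors, threshold):
    """List every other word whose similarity to `word` meets the threshold."""
    x = vectors[word]
    return sorted(
        other
        for other, y in vectors.items()
        if other != word and cosine_similarity(x, y) >= threshold
    )


# Toy vectors (assumed): the third coordinate stands in for a cost feature.
TOY_VECTORS = {
    "salad": (1.0, 0.9, 0.1),
    "burger": (0.9, 1.0, 0.1),
    "zillion-dollar-frittata": (0.2, 0.1, 1.0),
}

if __name__ == "__main__":
    print(substitutes("salad", TOY_VECTORS, threshold=0.9))
```

With these toy values, only burger is close enough to salad to be substituted, since the third coordinate pulls the frittata vector away from the other two.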

A simplified case for deducting a lunch expense highlights key issues for computing with LLMs. Consider a model

These terms may be a model for a lunch expenditure. So salad[ordinary -> \true] holds. But a zillion-dollar-frittata costs about 200 times the cost of a salad or burger. So the zillion-dollar-frittata is not an ordinary expenditure. For example, Listing 1 indicates that the zillion-dollar-frittata property or field ordinary disqualifies it from being deductible. That is, zillion-dollar-frittata[ordinary -> \false]. All three of these, salad, burger, and zillion-dollar-frittata, may have some close dimensions, but their cost dimension (feature) is not in alignment.

Definition 9 (Extended logical semantics for LLMs). Consider a first-order logic language and a first-order sentence with a model . Then iff all pairs the similarity is above a suitable threshold.

In Definition 9, since , then given the expression f is true.

Definition 10 (Theory for a model).

Consider a first-order logic language and and a model for all sentences in , then ’s theory is,

Given a model of a language , then the theory is all true sentences from . Generating these sentences can be challenging.

Definition 11 (Knowledge Authoring). Consider a set of legal statutes and case-law in natural language L, then finding equivalent first-order rules and facts and putting them all in a set R is expressed as, .

Knowledge authoring is very challenging [44] and this holds true for tax law. We do not have a solution for knowledge authoring of tax law, even using LLMs. Consider the first sentence of US Federal Law defining a business expense [45],

“26 §162 In general - There shall be allowed as a deduction all the ordinary and necessary expenses paid or incurred during the taxable year in carrying on any trade or business, including—”

The full definition, not including references to other sections has many word instances. The semantics of each of the words must be defined in terms of facts and rules. Currently, our proof-of-technology has a basic highly structured user interface that allows a user to enter facts. The proof-of-technology also builds simple queries with the facts. Currently, this is done using our rather standard user interface. Our system has a client-server cloud-based architecture where the proofs are computed by ErgoAI on our backend. A tax situation is entered by a user through the front end. A tax question is expressed by facts. We also add queries, as functions or predicates, based on the user input. The proofs are presented on the front end of our system.

Our goal is to have users enter their questions in a structured subset of natural language with the help of an LLM-based software bot. The user input is the set of natural language expressions U. The logic axioms based on the statutes and regulations form the set R. An LLM will help translate these natural language statements U into ErgoAI facts and queries that are compatible with the logic rules and facts R in ErgoAI. Accurate translation of natural language statements into ErgoAI is challenging. We do not yet have an automated way to translate from user-entered natural language tax questions into ErgoAI facts and queries. However, Coherent Knowledge LLC has recently announced a product, ErgoText, that may help with this step [46].

Definition 12 (Determining facts for R). Consider a natural language tax query U and its translation into both facts and a query into the expression G that is compatible with the ErgoAI rules R, then .

Using LLMs, Definition 12 depends on natural language word similarity. The words and phrases in user queries must be mapped to a query and facts in our ErgoAI rules R so ErgoAI can work to derive a result.

Definition 11 and Definition 12 can be combined to give the next definition.

Definition 13. Consider the ErgoAI rules and facts R representing the tax law and the ErgoAI user tax question as a queries and facts . The query and facts are written as sentences in G where since they must be compatible with the rules R. The sentences G must be built from for R’s theory. Now a theorem prover such as ErgoAI can determine whether, .

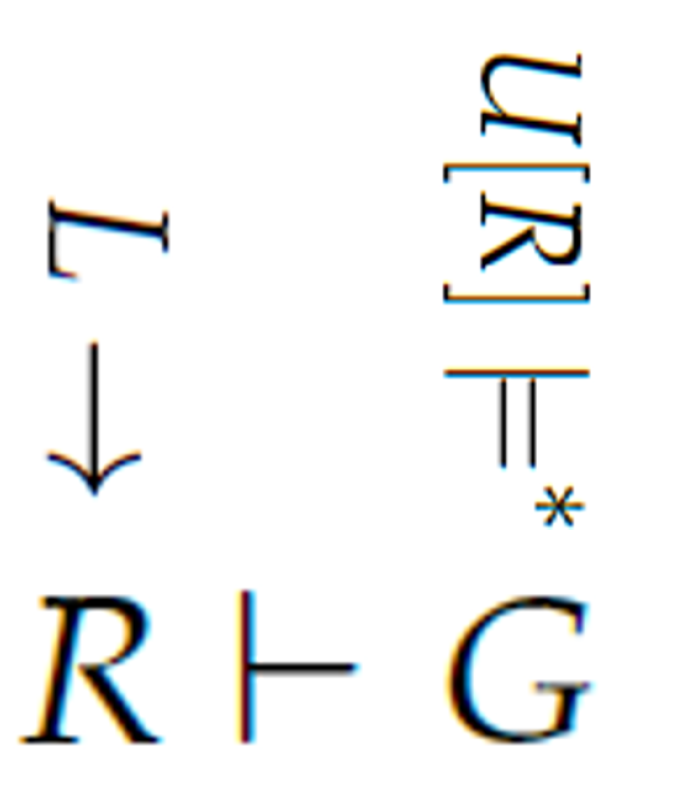

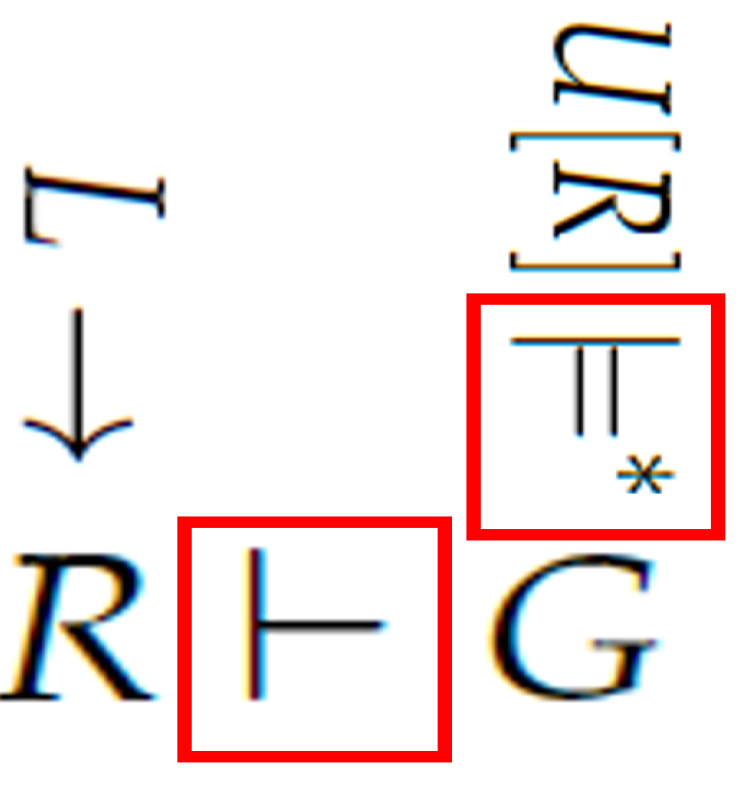

Figure 1 shows our vision of the Neural Tax Networks system. Currently, we are not doing the LLM translations automatically.

This figure depicts knowledge authoring as

. Since

L is the natural language laws, they are based on the semantics of when the said laws were enacted by Assumption A1. Next, a natural language query

U, with its facts, is converted into first-order sentences

G which are compatible with

R as

. Particularly, after computing

then

G is computed so that the expression

is true. This can be repeated for many different user questions

U. Finally, the system tries to find a proof

. See

Figure 2. The red boxes indicate parts of our processing that may be repeated many times in one user session. This figure highlights knowledge authoring, the semantics of LLMs, and logical deduction.

5. Löwenheim–Skolem Theorems and Elementary Equivalence

This section gives the main results of this paper. As before, our theorem-proving systems prove theorems in first-order logic. These results suppose legal rules and regulations are encoded in a first-order logic legal theorem-proving system. We assume the semantics of the law is at most countable from when the laws were enacted. This is assuming originalism for the rules in a theorem-proving system for first-order logic. The culmination of this section then shows: if new semantics are introduced by LLMs, then the first-order theorem-proving system will be able to prove the same results from the original semantics as well as the new semantics introduced by the LLMs. In traditional logic terms, first-order logic cannot differentiate between the original semantics and the new LLM semantics.

LLMs are trained on many natural language sentences or phrases. Currently, several LLMs are trained on greater than words instances or tokens. LLMs are trained mostly on very recent meanings of words or phrases.

Theorem 5 (Special case of the upward Löwenheim–Skolem theorem [37]).

Consider a first-order theory E. If E has a countably infinite model, then E has an uncountable model.

Michel, et al. [

48] indicates that English adds about 8,500 new words per year. See also Petersen, et al. [

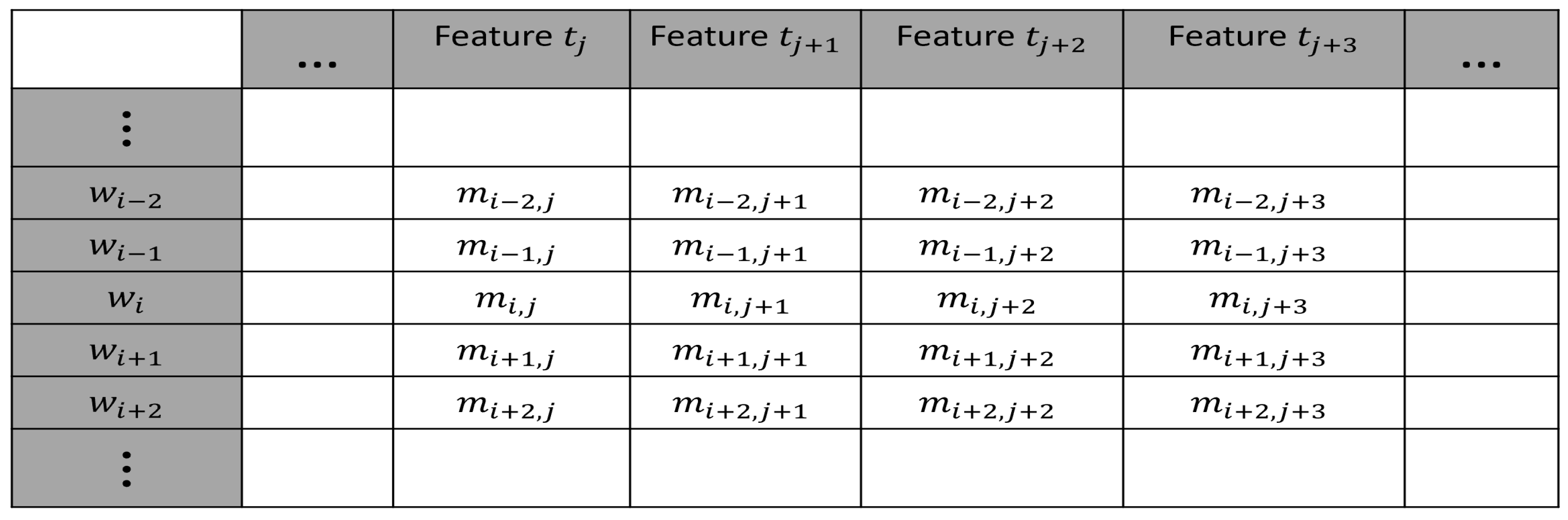

49]. Theorem 6 is based on the idea that new words with new meanings or new meanings are added to current words over time. There are several ways we can represent new meanings: (1) a new meaning can be represented as a set of features with different values from other concepts, or (2) a new meaning may require some new features. See the features in

Figure 3. We assume there will always be new meanings that require new features. So the number of feature columns is countable over all time. Just the same, we assume the number of words an LLM is trained on is countable over all time. But the number of meanings of words is uncountable. In summary, over all time, we assume the number of rows of words is countably infinite. Also, we assume the number of feature columns is countably infinite.

Given all of these new words and their new features, a diagonalization argument applies.

Figure 3 shows a word

whose features must be different from any other word with a different meaning. Therefore, any new word with a new meaning or an additional meaning for the same word

is

. So

will never have the same feature values as any of the other word meanings. So, for any countable list of feature columns and word-meaning rows, there must always be additional meanings not listed.
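The diagonal step can be illustrated on a finite table. The following is a minimal Python sketch, assuming each word meaning is a row of binary feature values (the binary encoding, the table contents, and the name `diagonal_meaning` are hypothetical choices made only for illustration). It builds a new row that differs from the i-th listed meaning in the i-th feature, so the new row cannot equal any listed row.

```python
from typing import List


def diagonal_meaning(table: List[List[int]]) -> List[int]:
    """Return a feature row that differs from row i of `table` in column i.

    Assumes binary features (0 or 1) and at least as many feature
    columns as there are rows.
    """
    n = len(table)
    for row in table:
        if len(row) < n:
            raise ValueError("each row needs at least as many features as there are rows")
    # Flip the diagonal entry of every row.
    return [1 - table[i][i] for i in range(n)]


# Hypothetical word-meaning rows; each column is one binary feature.
meanings = [
    [0, 1, 1, 0],
    [1, 1, 0, 0],
    [0, 0, 0, 1],
    [1, 0, 1, 1],
]

new_meaning = diagonal_meaning(meanings)
print(new_meaning)
```

The finite table only illustrates the construction. The argument in the text applies the same step to countably infinite rows and columns.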

Furthermore, individual words have many feature sets. Each feature set of a single word represents a different meaning.

In the case of Neural Tax Networks, some of these new words represent goods or services that are taxable. Ideally, the tax law statutes will not have to be changed for such new taxable goods or services. These new word meanings will supply new logical interpretations for tax law.

Assumption A2. Natural language will always add new meanings to words or add new words with new meanings. Some words or phrases or their particular meanings decline in use.

Old or archaic meanings of words are not lost. Rather, these archaic meanings are often known and recorded.

For LLMs, if languages always add new words over time, then it can be argued that natural language has an uncountable model when we take a limit over all time, even though this uncountable model may add only a few terms related to tax law each year. To understand the limits of our multimodal system, we assume that such language growth and change goes on forever. Some LLMs currently train on word instances or tokens. This is much larger than the number of words often needed by many theorem proving systems. Comparing countable and uncountable sets in these contexts may give us insight.

For the next theorem, recall Assumption A1 which states the original meaning of words for our theorem-proving system is fixed. In other words, the meaning of the words in law is fixed from when the laws were enacted. These word meanings are captured by the models for our theorem-proving system.

Theorem 1 shows context-free grammars can build infinite domains for theorem proving systems. This is done by constructing a countable number of atoms. Assumption A2 supposes the set of all words

V in an LLM has cardinality

. So using a similarity measure

s so that each word token vector

x has a finite subset of equivalent words

where

, for a constant integer

. Since this uncountable union of finite sets is indexed by an uncountable set,

it must be uncountable.

Theorem 6. Taking a limit over all time, LLMs with constant bounded similarity sets have an uncountable number of tokens.

In some sense, Theorem 6 assumes human knowledge will be extended forever. This assumption is based on the idea that as time progresses new meanings will continually be formed. This appears to be tantamount to assuming social constructs, science, engineering, and applied science will never stop evolving.

The next definitions relate different models to each other.

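Definition 14 (Elementary extensions and substructures). Consider a first-order language $\mathcal{L}$. Let $\mathcal{M}$ and $\mathcal{N}$ be models of $\mathcal{L}$ and suppose $\mathcal{M} \subseteq \mathcal{N}$.

Then $\mathcal{N}$ is an elementary extension of $\mathcal{M}$, or $\mathcal{M} \preceq \mathcal{N}$, iff every first-order formula $\phi(x)$ of $\mathcal{L}$ and every $x$ from the domain of $\mathcal{M}$ are so that

$$\mathcal{M} \models \phi(x) \iff \mathcal{N} \models \phi(x).$$

If $\mathcal{M} \preceq \mathcal{N}$, then $\mathcal{N}$ is an elementary extension of $\mathcal{M}$,

If $\mathcal{M} \preceq \mathcal{N}$, then $\mathcal{M}$ is an elementary substructure of $\mathcal{N}$.

The formula $\phi$ can have any number of free variables, so $x$ can be considered a tuple $(x_1, \ldots, x_n)$ of elements of $\mathcal{M}$.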

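Definition 15 (Elementary equivalence).

Consider a first-order language $\mathcal{L}$. Let $\mathcal{M}$ and $\mathcal{N}$ be models of $\mathcal{L}$. Then $\mathcal{M}$ is elementarily equivalent to $\mathcal{N}$, so $\mathcal{M} \equiv \mathcal{N}$, iff every first-order sentence $\phi$ of $\mathcal{L}$ is so that

$$\mathcal{M} \models \phi \iff \mathcal{N} \models \phi.$$

Given a model $\mathcal{M}$, then $\mathrm{Th}(\mathcal{M})$ is the complete first-order theory of $\mathcal{M}$. See Definition 10.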

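Theorem 7 (Elementary and first-order theory equivalence).

Consider a first-order language $\mathcal{L}$ and two of its models $\mathcal{M}$ and $\mathcal{N}$, then

$$\mathcal{M} \equiv \mathcal{N} \iff \mathrm{Th}(\mathcal{M}) = \mathrm{Th}(\mathcal{N}).$$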

Suppose a user input U is compatible with first-order logic rules and regulations R of our theorem proving system. These facts and rules are in the set . LLMs may help give the semantic expression G where . These formulas G are computed with (e.g., cosine) similarity along with any additional logical rules and facts. This also requires Assumption A2 giving a countable model for G.
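As a hedged illustration of the similarity step, the following Python sketch computes cosine similarity between a query vector and a small vocabulary and returns a similarity set capped at a constant size `c`. The vocabulary, the three-dimensional vectors, the threshold, and the function names are all hypothetical; they are not taken from the Neural Tax Networks system.

```python
import math
from typing import Dict, List, Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between u and v; 0.0 if either vector is zero."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def similarity_set(x: Sequence[float],
                   vocab: Dict[str, Sequence[float]],
                   threshold: float,
                   c: int) -> List[str]:
    """Return at most c vocabulary words whose similarity to x is >= threshold."""
    scored = [(cosine_similarity(x, vec), word) for word, vec in vocab.items()]
    close = [(s, w) for s, w in scored if s >= threshold]
    # Most similar first; ties broken alphabetically for determinism.
    close.sort(key=lambda pair: (-pair[0], pair[1]))
    return [w for _, w in close[:c]]


# Hypothetical embedding vectors for terms used by the rules R.
vocab = {
    "income": [1.0, 0.0, 0.0],
    "wages": [0.9, 0.1, 0.0],
    "salary": [0.8, 0.2, 0.1],
    "deduction": [0.0, 1.0, 0.0],
    "vehicle": [0.0, 0.0, 1.0],
}

# Hypothetical vector for a word in the user input U.
query = [1.0, 0.1, 0.0]

print(similarity_set(query, vocab, threshold=0.9, c=2))
```

Capping the result at `c` entries mirrors the constant bound on similarity sets assumed for Theorem 6. Any other similarity measure could be substituted for cosine similarity.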

The next version of the Löwenheim–Skolem (L-S) Theorems is from [35, 50].

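Theorem 8 (Löwenheim–Skolem (L-S) Theorems). Consider a first-order language $\mathcal{L}$ with an infinite model $\mathcal{M}$. Then for any infinite cardinal $\kappa \geq |\mathcal{L}|$ there is a model $\mathcal{N}$ so that $|\mathcal{N}| = \kappa$ and

Upward: If $\kappa \geq |\mathcal{M}|$, then $\mathcal{N}$ is an elementary extension of $\mathcal{M}$, or $\mathcal{M} \preceq \mathcal{N}$,

Downward: If $\kappa \leq |\mathcal{M}|$, then $\mathcal{N}$ is an elementary substructure of $\mathcal{M}$, or $\mathcal{N} \preceq \mathcal{M}$.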

A first-order language with a countably infinite model can encode tax law.

The next corollary applies to tax law as well as other areas.

Corollary 1 (Application of Upward L-S). Consider a first-order language with a countably infinite model for a first-order logic theorem proving system. Suppose this first-order theorem-proving system has a countably infinite domain from a countably infinite model where . Then there is a model that is an elementary extension of and .

Proof. There is a countably infinite number of constants from the domain from an interpretation of if these constants can be specified by Theorem 1. So, apply Theorem 8 (Upward) to the first-order logic theorem proving system for sentences of , with a countably infinite model . The upward L-S theorem indicates there is a countably infinite model so that . □

For the next result, a precondition is the model is a subset of .

Corollary 2 (Application of Downward L-S). Consider a first-order language and a countably infinite model , for a first-order logic theorem proving system. We assume an LLM with and a model so that . By the downward L-S theorem, is an elementary substructure of and .

Proof. Consider a first-order language and a first-order logic theorem-proving system with a countably infinite model . The elements or atoms of this model can be defined using context-free grammars or a regular language, see Theorem 1. This generates a countably infinite domain for the model so .

Suppose we have an uncountable model

based on the uncountability of LLM models by Theorem 6. That is,

Then by the downward Löwenheim–Skolem Theorem, there is an elementary substructure of where . By Theorem 1 there is an infinite number of elements in the original domain of the first-order theorem proving system so . □
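The countably infinite domain used in this proof can be illustrated with a small enumeration. The sketch below is only an assumption-laden example: it takes the regular language of all nonempty strings over a hypothetical two-letter alphabet as the set of atoms and lists them in length-then-lexicographic order. Every atom appears at some finite position, which is what makes the domain countable.

```python
from itertools import count, islice, product
from typing import Iterator


def enumerate_atoms(alphabet: str = "ab") -> Iterator[str]:
    """Yield every nonempty string over `alphabet`, shortest first.

    Strings of the same length are produced in lexicographic order,
    so each string is reached after finitely many steps.
    """
    for length in count(1):
        for letters in product(alphabet, repeat=length):
            yield "".join(letters)


# The first few atoms of the (hypothetical) domain.
first_atoms = list(islice(enumerate_atoms("ab"), 6))
print(first_atoms)
```

The generator never terminates on its own, which reflects the infinite domain; `islice` takes a finite prefix for display.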

To apply this corollary, suppose the originalism-based legal first-order logic theorem proving system has a model whose elements are a subset of an LLM model. Then there is an equivalence between an LLM’s uncountable model using new words and phrases with new meanings and a first-order countable logic model. This equivalence is based on the logical theory of each of these models. In other words, a consequence of Theorem 7 is next.

Corollary 3.

Consider a language and two of its models and , where , then