Submitted: 05 July 2025

Posted: 07 July 2025

Abstract

Keywords:

1. Introduction

2. Background and Related Work

2.1. Prompt Engineering Fundamentals

- Instruction-based (clear task specification)

- Contextual (incorporating domain knowledge)

- Structured (explicit reasoning frameworks) [33]

2.2. Structured Prompting Techniques

2.2.1. Chain-of-Thought (CoT)

2.2.2. Tree-of-Thought (ToT)

2.2.3. Graph-of-Thought (GoT)

2.3. Evaluation Metrics for Prompt Engineering

3. Visual Analysis of Prompt Engineering

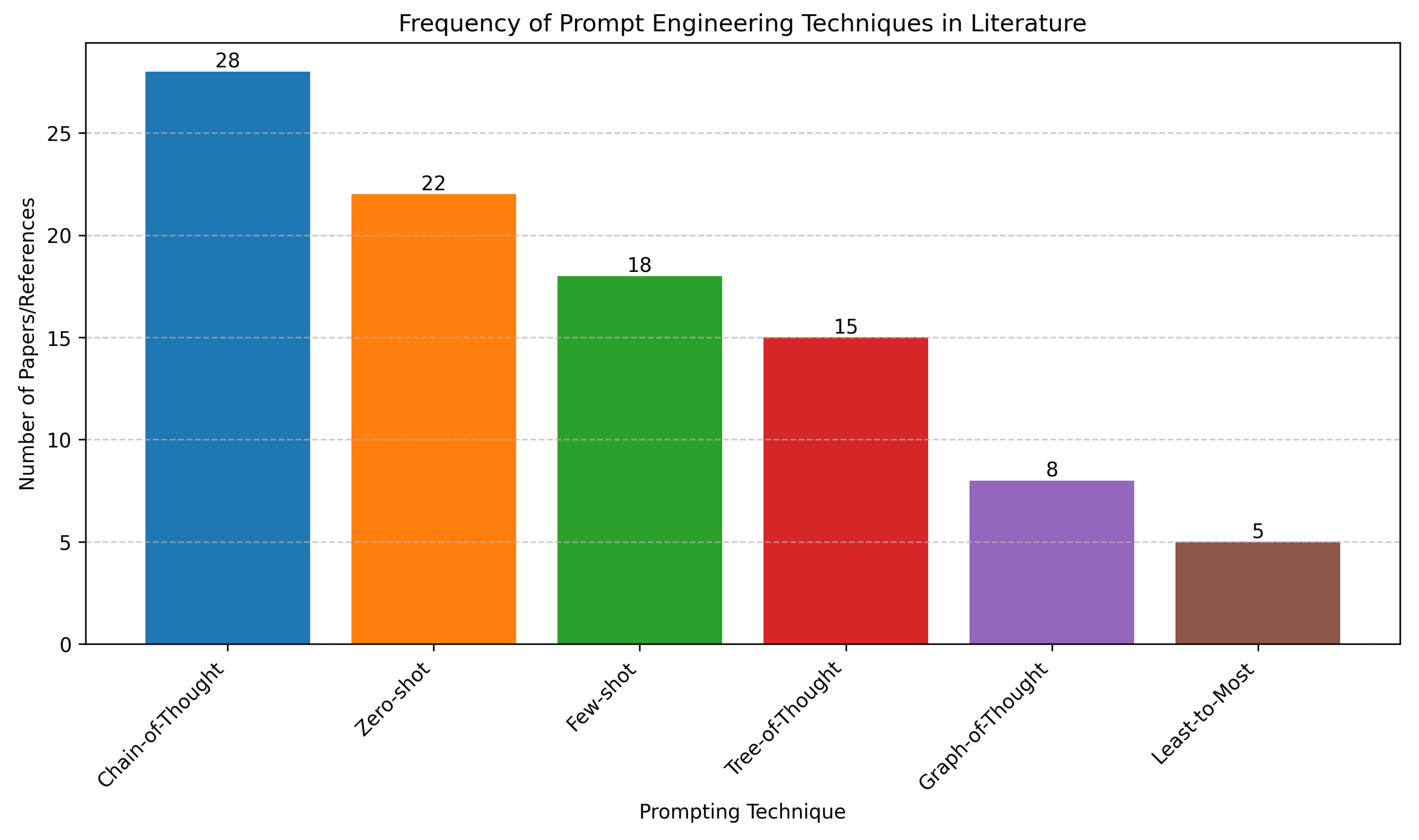

3.1. Frequency of Techniques

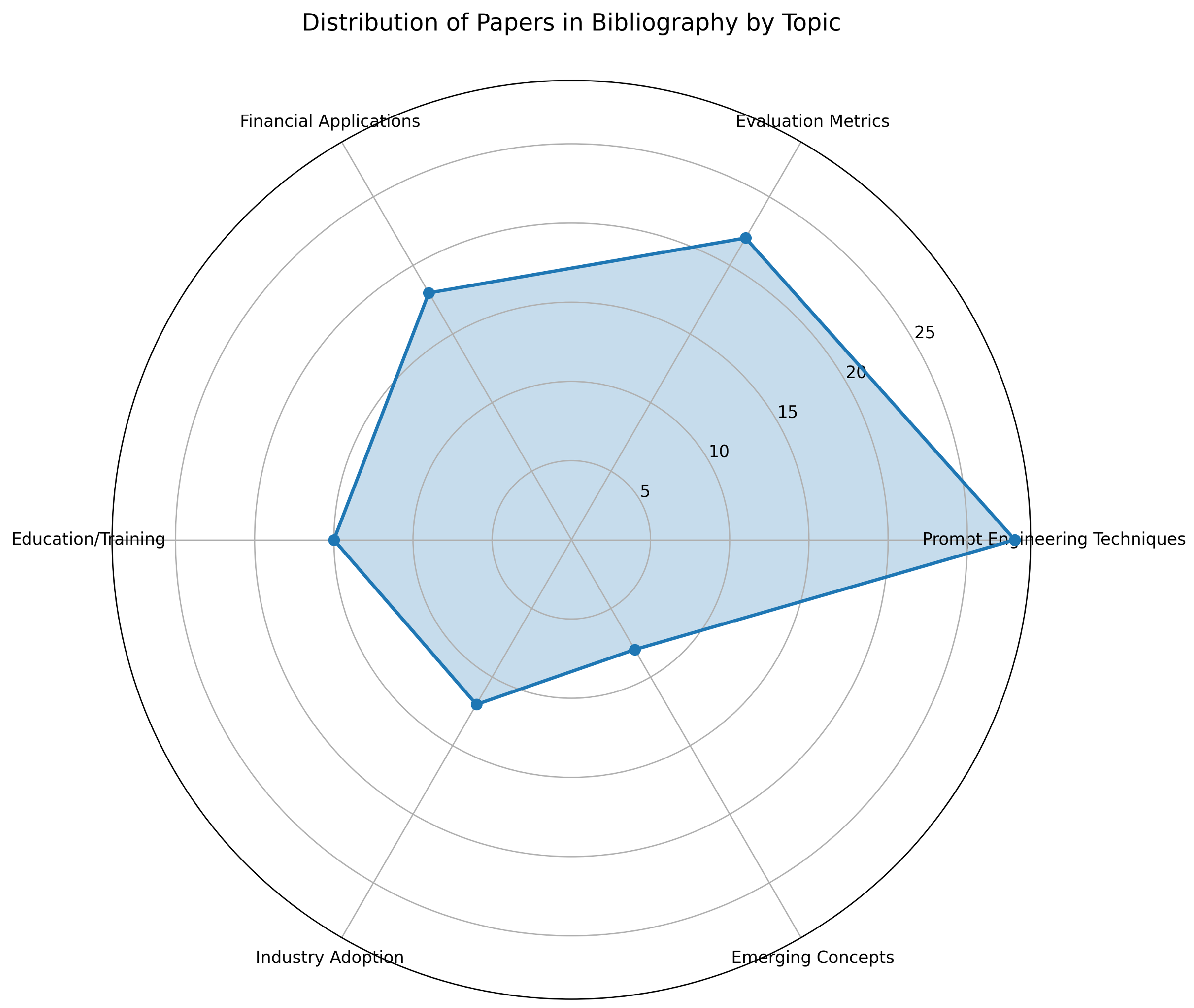

3.2. Research Distribution by Topic

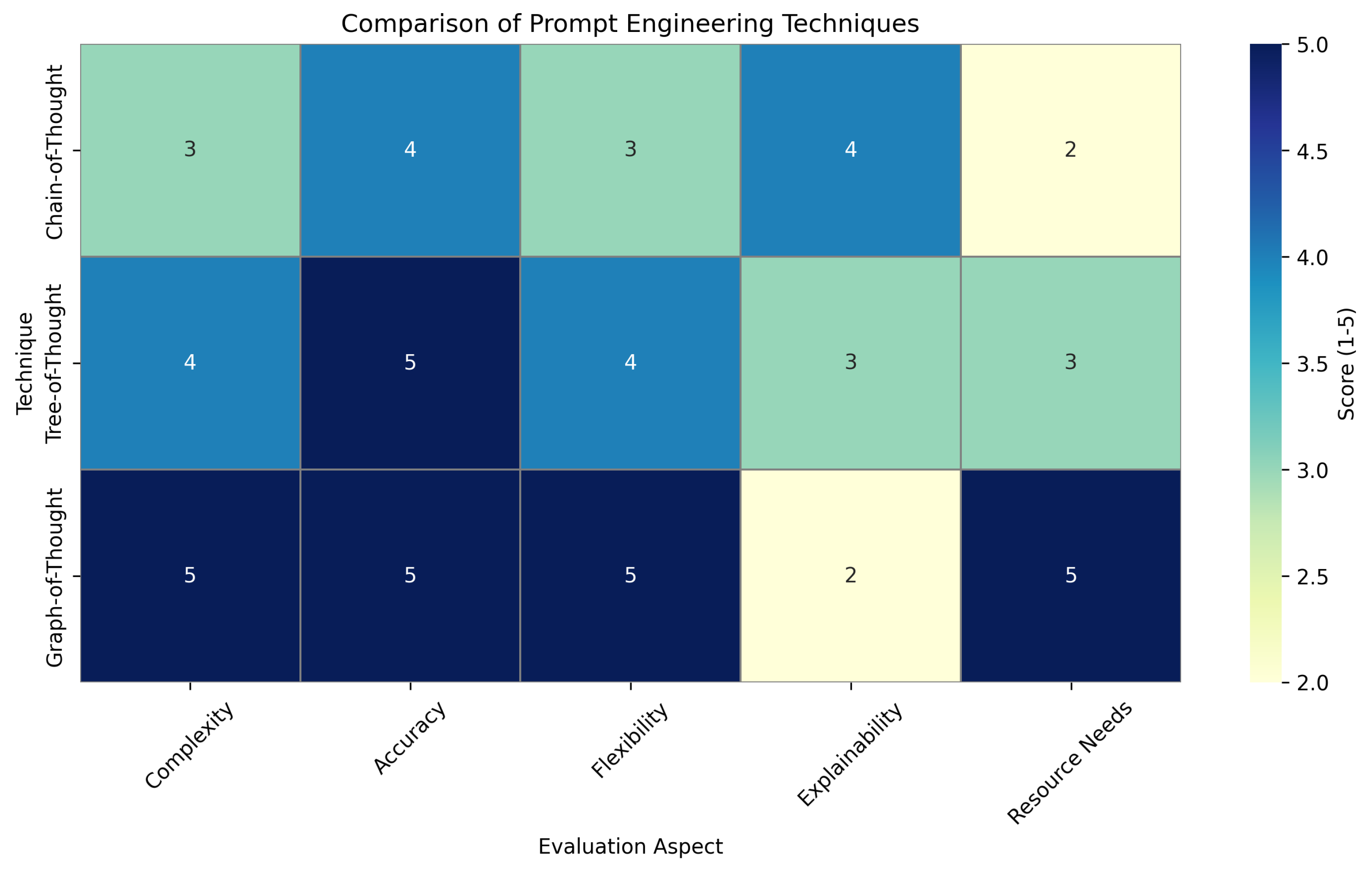

3.3. Technique Comparison

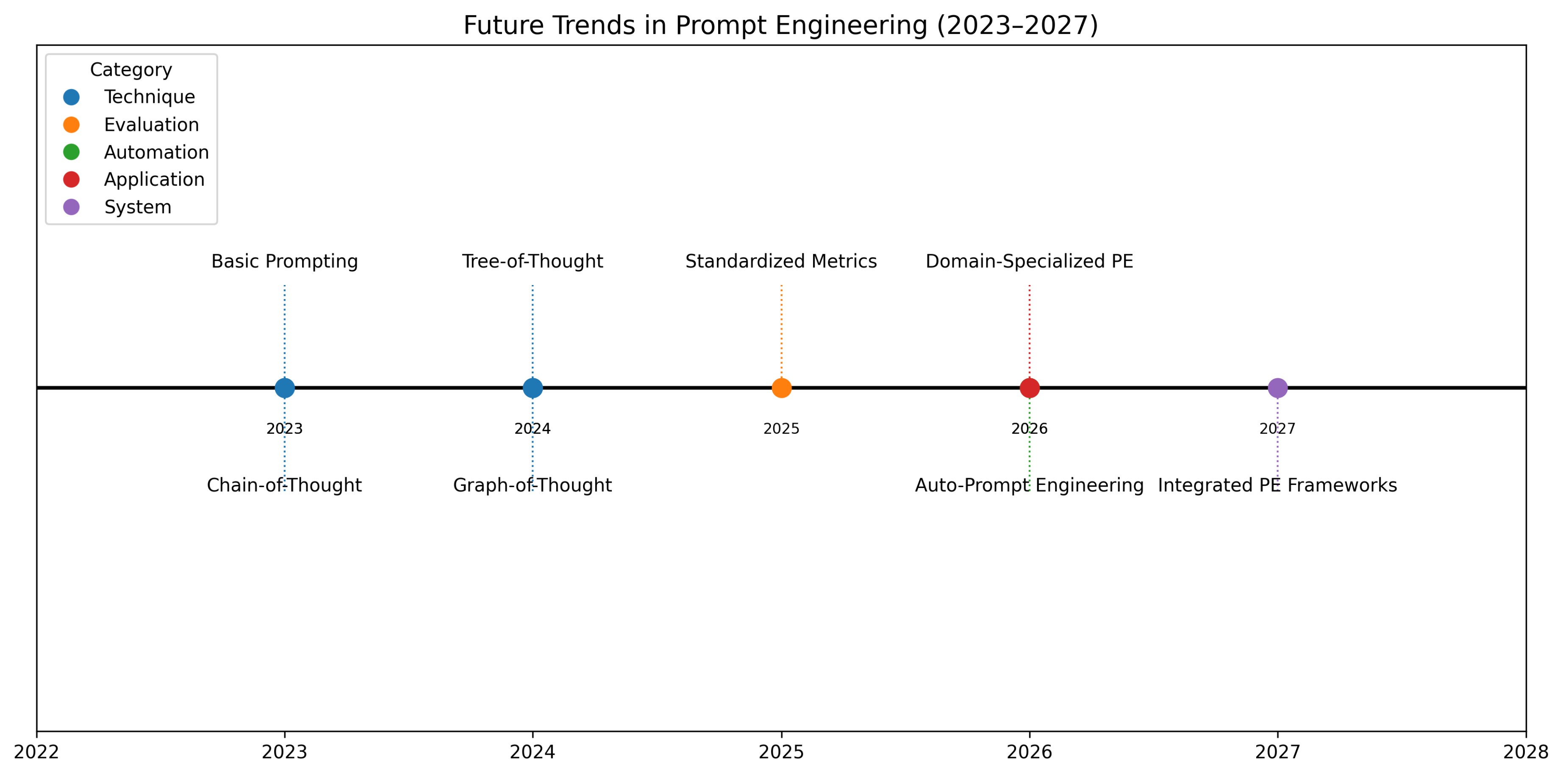

3.4. Future Trends Timeline

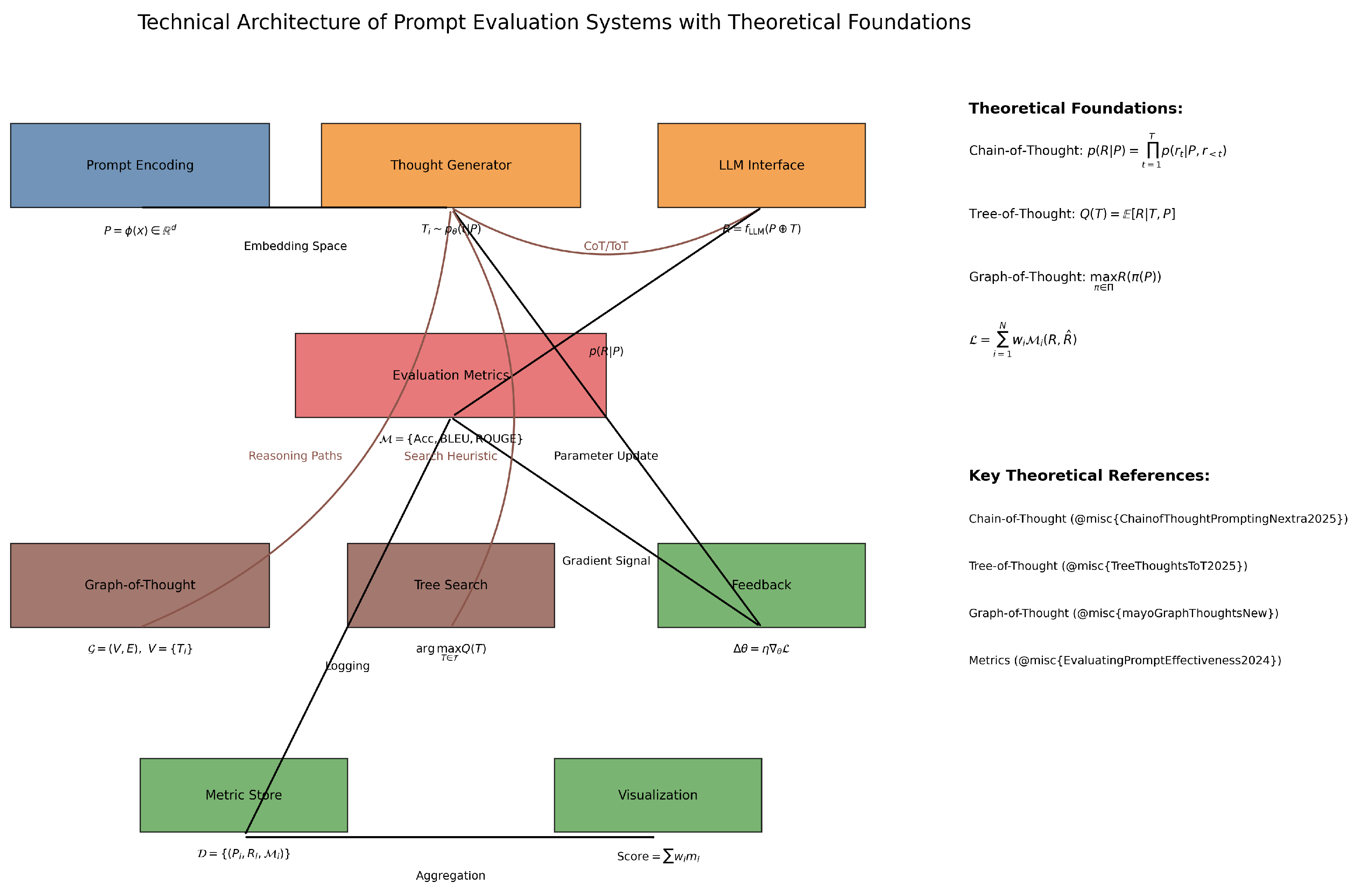

3.5. System Architectures

| BibTeX Keys | LaTeX Citations | Visualization Type |
|---|---|---|
| @misc{ChainofThoughtPromptingNextra2025}, @misc{bhattTreeThoughtsToT}, @misc{TreeThoughtsToT2025} | [4], [6], [17] | Bar chart |
| @misc{EvaluatingPromptEffectiveness2024}, @misc{guptaMetricsMeasureEvaluating2024}, @misc{MetricsPromptEngineering} | [51], [50], [52] | Radar chart |
| @misc{mayoGraphThoughtsNew}, @misc{vWhatGraphThought2024} | [41], [42] | Heatmap |
| @misc{boesenJPMorganAcceleratesAI2024}, @misc{CEOsGuideGenerative0000} | [13], [53] | Timeline |
| @misc{lgayhardtEvaluationFlowMetrics2024}, @inproceedings{dharAnalysisEnhancingFinancial2023a} | [54], [14] | System diagram |
| @misc{TreeThoughtsToT2025}, @misc{EnhancingLanguageModel2024} | [17], [55] | Theoretical diagram |

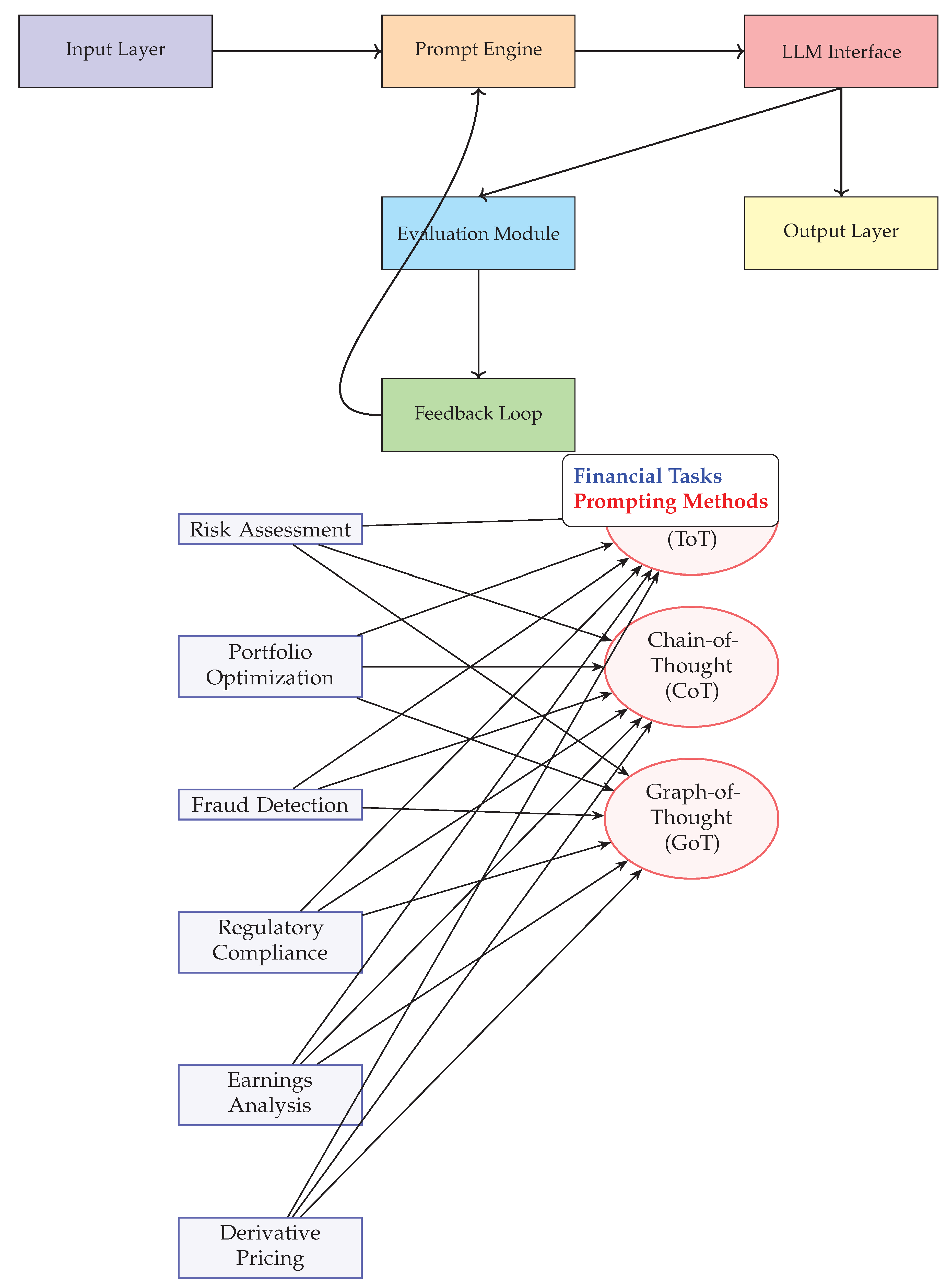

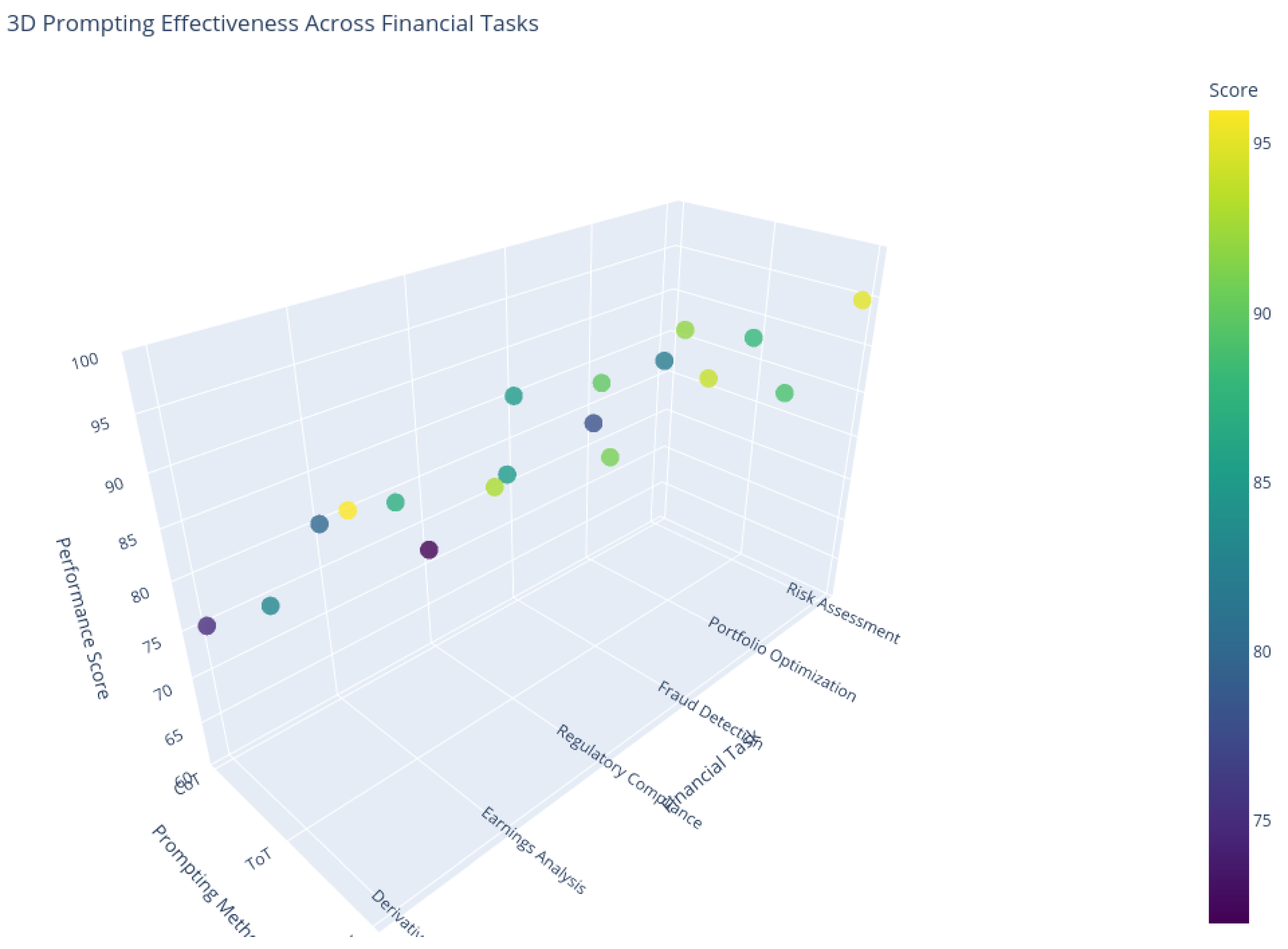

4. Visual Analysis of Prompting Methods in Finance

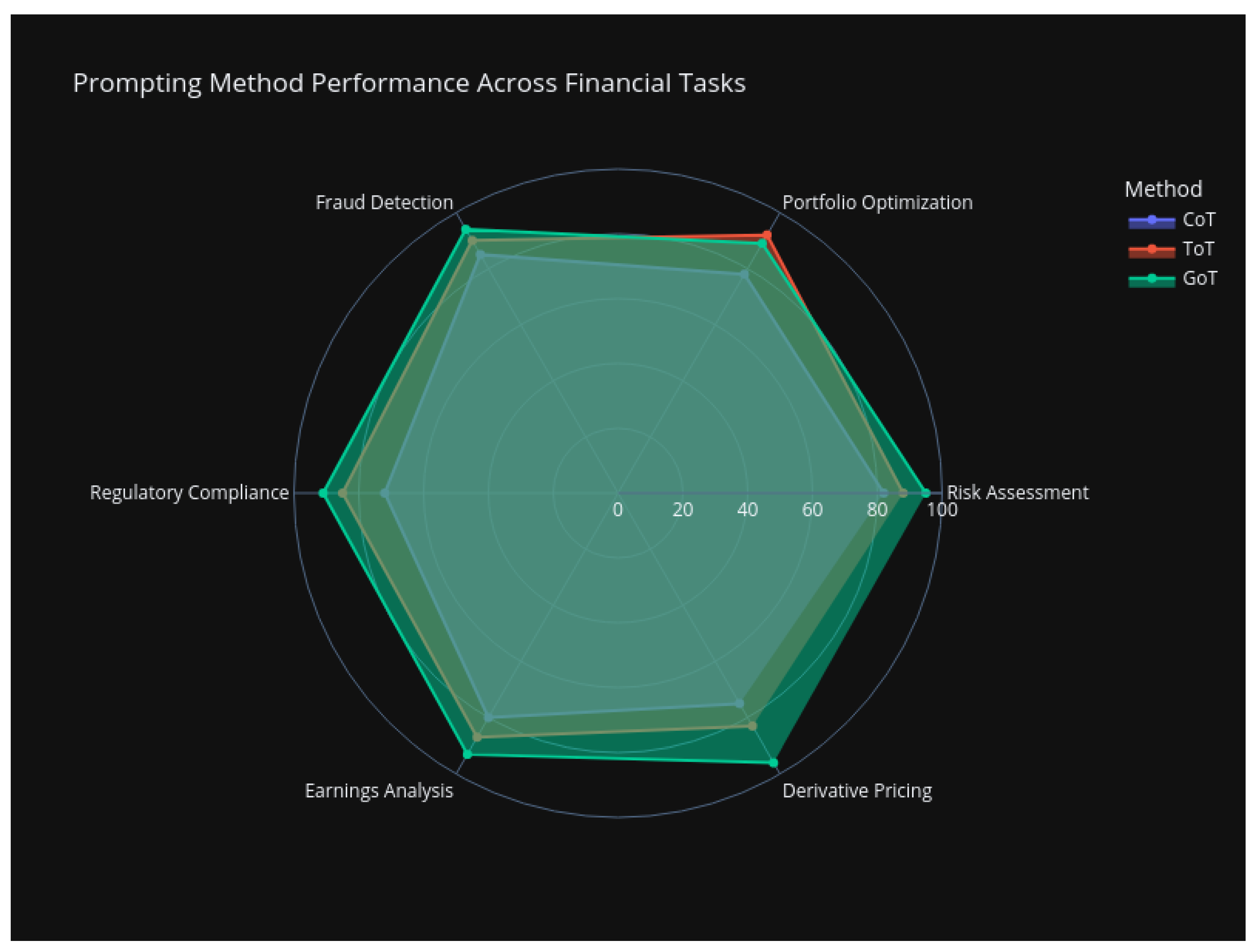

- GoT demonstrates superior performance in Risk Assessment (95/100) and Derivative Pricing (96/100) due to its ability to model complex interdependencies.

- ToT excels in Portfolio Optimization (92/100) where exploring multiple investment paths is critical.

- CoT maintains consistent performance (72–85/100) for linear tasks like Fraud Detection.

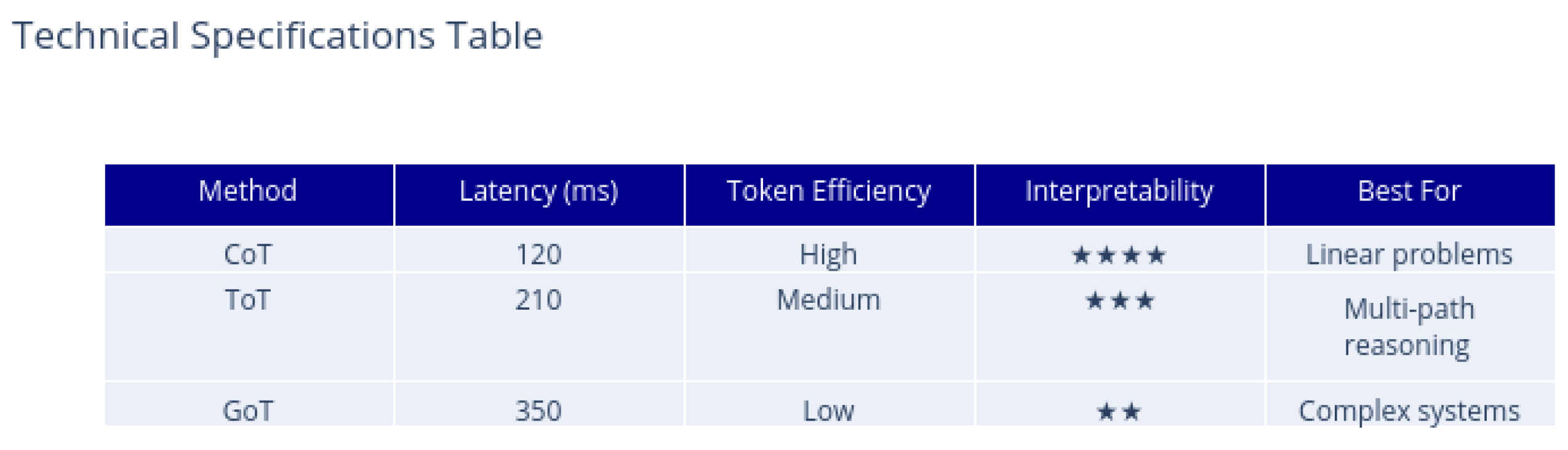

- Latency: CoT processes fastest (120ms) while GoT requires 350ms due to graph computations.

- Token Efficiency: CoT uses 40% fewer tokens than ToT for equivalent tasks.

- Interpretability: CoT scores 4 stars for transparent step-by-step reasoning vs 2 stars for GoT’s complex structures.

- Regulatory Compliance shows largest variance (72–91), highlighting GoT’s advantage in parsing interconnected regulations.

- Performance clusters reveal ToT’s optimal zone (85–92) for semi-structured problems.

- Color gradient confirms GoT’s dominance in high-score regions (dark purple markers).
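The latency and token figures above can be turned into simple ratios. The sketch below only restates the reported numbers (120 ms, 350 ms, 40% fewer tokens); the 600-token CoT prompt is a hypothetical input chosen for illustration.

```python
# Figures reported in the bullets above.
COT_LATENCY_MS = 120
GOT_LATENCY_MS = 350
COT_TOKEN_SAVING_VS_TOT = 0.40  # CoT uses 40% fewer tokens than ToT


def latency_ratio(slow_ms: float, fast_ms: float) -> float:
    """How many times slower the slow method is than the fast one."""
    return slow_ms / fast_ms


def tot_tokens_for(cot_tokens: float) -> float:
    """ToT token count implied by a CoT count, since CoT = (1 - 0.40) * ToT."""
    return cot_tokens / (1.0 - COT_TOKEN_SAVING_VS_TOT)


got_vs_cot = latency_ratio(GOT_LATENCY_MS, COT_LATENCY_MS)
# Hypothetical example: a task that costs 600 tokens under CoT.
implied_tot_tokens = tot_tokens_for(600)

print(f"GoT latency is about {got_vs_cot:.2f}x CoT latency")
print(f"600 CoT tokens imply about {implied_tot_tokens:.0f} ToT tokens")
```

Read this way, GoT is roughly three times slower than CoT per request, and a 40% token saving means ToT consumes about two thirds more tokens than CoT for the same task.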

4.0.1. Technical Interpretation

- Task-Method Fit

  - CoT’s linear reasoning suits Earnings Analysis (F1=80) where accounting standards dictate sequential processing.

  - ToT’s branching outperforms in Fraud Detection (90 vs CoT’s 85) by evaluating multiple anomaly hypotheses.

- Computational Tradeoffs

- Metric Correlation

  - High Accuracy tasks (Figure 2) favor GoT’s comprehensive analysis.

  - ROI Improvement metrics align with ToT’s ability to compare alternative strategies.

4.1. Financial Institution Readiness

- 62% experimenting with CoT

- 28% piloting ToT

- 9% exploring GoT [53]

4.2. Limitations

- Data sensitivity constraints

- High computational costs for GoT

- Black-box nature of reasoning

5. Prompt Engineering Techniques

5.1. Basic Prompting Techniques

- Zero-shot Prompting: The model is given a task without any examples, relying solely on its pre-trained knowledge [57].

- Few-shot Prompting: The prompt includes a few examples of the task, helping the model understand the desired input-output format and behavior.
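The difference between the two techniques is only whether worked examples precede the query. A minimal sketch follows, assuming a plain `Input:`/`Output:` layout; the function name `build_prompt`, the layout, and the sample headlines are all illustrative choices, not something the paper prescribes.

```python
from typing import Sequence, Tuple


def build_prompt(task: str, query: str,
                 examples: Sequence[Tuple[str, str]] = ()) -> str:
    """Return a zero-shot prompt when `examples` is empty, else a few-shot one."""
    lines = [task.strip(), ""]
    # Each example demonstrates the desired input-output format.
    for example_input, example_output in examples:
        lines += [f"Input: {example_input}", f"Output: {example_output}", ""]
    # The query is left open so the model completes the final "Output:".
    lines += [f"Input: {query}", "Output:"]
    return "\n".join(lines)


TASK = "Classify the sentiment of the headline as positive, negative, or neutral."
QUERY = "Bank raises full-year earnings guidance."

zero_shot = build_prompt(TASK, QUERY)
few_shot = build_prompt(
    TASK,
    QUERY,
    examples=[
        ("Regulator fines lender over reporting failures.", "negative"),
        ("Central bank leaves rates unchanged.", "neutral"),
    ],
)

print(zero_shot)
print("---")
print(few_shot)
```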

5.2. Advanced Reasoning Techniques

5.2.1. Chain-of-Thought (CoT) Prompting

- Zero-shot CoT: Simply adding "Let’s think step by step" to the prompt can significantly improve performance [4].

- Few-shot CoT: Providing examples where the reasoning steps are explicitly shown before the final answer.

- Auto-CoT: Automatically generating diverse reasoning paths for a given problem [58].

- Chain-of-Preference Optimization: A recent advancement aimed at improving CoT reasoning [63].
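The first two variants above differ only in how the prompt is assembled. The following sketch shows one possible construction; the `Q:`/`A:` layout, the function names, and the bond example are assumptions made for illustration.

```python
from typing import Sequence, Tuple

ZERO_SHOT_COT_TRIGGER = "Let's think step by step."


def zero_shot_cot(question: str) -> str:
    """Append the reasoning trigger so the model writes its steps first."""
    return f"Q: {question}\nA: {ZERO_SHOT_COT_TRIGGER}"


def few_shot_cot(question: str,
                 demos: Sequence[Tuple[str, str, str]]) -> str:
    """Prefix the question with (question, reasoning, answer) demonstrations."""
    blocks = [
        f"Q: {demo_q}\nA: {reasoning} The answer is {answer}."
        for demo_q, reasoning, answer in demos
    ]
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


# Hypothetical demonstration with explicit reasoning before the answer.
demo = (
    "A bond has a face value of 1,000 and a 5% annual coupon. "
    "What is the annual coupon payment?",
    "The coupon is 5% of the face value, so 0.05 * 1000 = 50.",
    "50",
)
question = ("A bond has a face value of 2,000 and a 3% annual coupon. "
            "What is the annual coupon payment?")

zs_prompt = zero_shot_cot(question)
fs_prompt = few_shot_cot(question, [demo])
print(zs_prompt)
print("---")
print(fs_prompt)
```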

5.2.2. Tree-of-Thought (ToT) Prompting

5.2.3. Graph-of-Thought (GoT) Prompting

5.3. Other Advanced Techniques

- Least-to-Most Prompting: Breaking down complex problems into a series of simpler sub-problems and solving them sequentially [68].

- Chain-of-Draft Prompting: Iteratively refining responses through multiple drafts [69].

- Something-of-Thought: A general term encompassing structured LLM reasoning approaches [70].
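Least-to-Most Prompting, as described above, has two stages: decompose, then solve sub-problems in order while carrying earlier answers forward. The sketch below assumes the model is available as a plain `llm(prompt) -> str` callable; `least_to_most` and `fake_llm` are hypothetical names, and the stub stands in for a real model so the control flow can be run offline.

```python
from typing import Callable, List, Tuple


def least_to_most(question: str,
                  llm: Callable[[str], str]) -> Tuple[str, List[Tuple[str, str]]]:
    """Decompose `question`, then solve sub-questions sequentially."""
    decomposition = llm(
        "Break the problem into simpler sub-questions, one per line, "
        f"ordered from easiest to hardest.\nProblem: {question}"
    )
    sub_questions = [ln.strip() for ln in decomposition.splitlines() if ln.strip()]

    solved: List[Tuple[str, str]] = []
    # The original question is answered last, with all sub-answers as context.
    for sub_question in sub_questions + [question]:
        context = "".join(f"Q: {q}\nA: {a}\n" for q, a in solved)
        answer = llm(f"{context}Q: {sub_question}\nA:")
        solved.append((sub_question, answer))
    return solved[-1][1], solved


def fake_llm(prompt: str) -> str:
    """Stub model (assumption): fixed decomposition, numbered placeholder answers."""
    if prompt.startswith("Break the problem"):
        return ("What is the annual coupon?\n"
                "How many coupons are paid over 3 years?")
    return f"(answer #{prompt.count('A:')})"


final_answer, trace = least_to_most(
    "What is the total coupon income from a 1,000 face-value bond "
    "paying 5% annually for 3 years?",
    fake_llm,
)
for sub_question, answer in trace:
    print(sub_question, "->", answer)
```

The placeholder answers simply count how many `A:` markers the prompt contains, which makes it visible that each later step sees every earlier question and answer.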

6. Prompt Evaluation Metrics and Methods

6.1. Quantitative Metrics

- Accuracy: How often the LLM generates correct or factual responses [46]. This is particularly important for factual tasks.

- Coherence/Fluency: The linguistic quality, readability, and naturalness of the generated text.

- Completeness: Whether the response addresses all aspects of the query.

- Conciseness: The ability to convey information effectively without unnecessary verbosity.

- Latency/Response Time: The time taken by the LLM to generate a response [76].

- Token Usage/Cost: The number of tokens consumed, which directly impacts operational costs.

- Perplexity: A measure of how well a probability model predicts a sample. Lower perplexity generally indicates better performance [77].

- BLEU (Bilingual Evaluation Understudy) Score: Primarily used for machine translation, it measures the similarity between generated text and reference text. It can be adapted for other generation tasks [77].

- ROUGE (Recall-Oriented Understudy for Gisting Evaluation) Score: Commonly used for summarization tasks, it measures overlap of n-grams, word sequences, and word pairs between the generated summary and reference summaries.
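Three of the metrics above reduce to short formulas. The sketch below is a simplified illustration, not the official BLEU or ROUGE implementation: it uses whitespace tokenization, a single reference, and no brevity penalty, and it assumes token log-probabilities are natural logs supplied by the caller.

```python
import math
from collections import Counter
from typing import List, Sequence


def perplexity(token_logprobs: Sequence[float]) -> float:
    """exp of the mean negative log-likelihood (natural-log probabilities)."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def ngrams(tokens: List[str], n: int) -> Counter:
    """Multiset of n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n_recall(candidate: str, reference: str, n: int = 1) -> float:
    """Share of reference n-grams that also appear in the candidate."""
    cand = ngrams(candidate.lower().split(), n)
    ref = ngrams(reference.lower().split(), n)
    total = sum(ref.values())
    return sum((cand & ref).values()) / total if total else 0.0


def clipped_precision(candidate: str, reference: str, n: int = 1) -> float:
    """Share of candidate n-grams found in the reference (BLEU's building block)."""
    cand = ngrams(candidate.lower().split(), n)
    ref = ngrams(reference.lower().split(), n)
    total = sum(cand.values())
    return sum((cand & ref).values()) / total if total else 0.0


candidate = "net income rose sharply"
reference = "net income rose in the quarter"
print(perplexity([math.log(0.25)] * 4))
print(rouge_n_recall(candidate, reference))
print(clipped_precision(candidate, reference))
```

Recall penalizes the candidate for omitting reference content, while precision penalizes it for adding unsupported content, which is why summarization work tends to report ROUGE and translation work BLEU.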

6.2. Qualitative Metrics and Human Evaluation

- Clarity: How clear and unambiguous the prompt is [78].

- Specificity: The level of detail and precision in the prompt.

- Usefulness/Actionability: Whether the output provides practical value to the user [79].

- Creativity: For generative tasks, assessing the originality and imaginative quality of the output [76].

- Bias Detection: Identifying and mitigating biases present in the LLM’s responses [80].

- Hallucination Detection: Assessing the extent to which the LLM generates factually incorrect or nonsensical information [49].

- Safety/Harmfulness: Ensuring the generated content is not toxic, offensive, or harmful [81].

6.3. Evaluation Frameworks and Tools

- Promptfoo: An open-source tool for testing and evaluating prompts with assertions and metrics [28].

- Arize AI: Provides platforms for evaluating prompts and LLM systems [73].

- Comet Opik: A platform for evaluating LLM prompts [85].

- Ragas: A framework for evaluating Retrieval Augmented Generation (RAG) systems, including prompt modifications [86].

- Hugging Face Evaluate: A library for evaluating various machine learning models, including LLMs [87].

- Azure Machine Learning Prompt Flow: Allows creation and customization of evaluation flows and metrics [54].

- Kolena: Offers LLM evaluation metrics and benchmarks [91].

- Weights & Biases: Provides developer tools for machine learning, including prompt engineering evaluations [92].

7. Prompt Engineering in Financial Services

7.1. Applications

- Enhanced Financial Decision-making: Prompt engineering can improve the accuracy and speed of financial analysis, aiding in investment strategies and market predictions [14].

- Customer Service and Engagement: Automating responses to customer inquiries, providing personalized financial advice, and improving overall customer experience [40].

- Content Generation: Creating financial reports, market summaries, and personalized communications.

- Legal and Compliance: Assisting with legal document analysis and ensuring regulatory adherence [34].

7.2. Challenges and Risk Considerations

- Data Privacy and Security: Handling sensitive financial data requires robust security measures and careful prompt design to prevent data leakage or misuse [97].

- Bias and Fairness: LLMs can perpetuate or amplify biases present in their training data, leading to unfair financial outcomes or discriminatory advice [40].

- Hallucination and Accuracy: The risk of LLMs generating factually incorrect information is particularly critical in finance, where precision is paramount [40].

- Transparency and Explainability: The "black box" nature of some LLMs can hinder understanding of their reasoning, making it difficult to audit financial decisions or comply with regulatory requirements.

- Prompt Injection Attacks: Malicious actors can manipulate prompts to bypass security safeguards or extract confidential information [98].

- Regulatory Compliance: Financial institutions must navigate complex regulatory landscapes, and the use of AI tools requires careful consideration of existing and emerging regulations [19].

7.3. Training and Education

8. Methodology, Results and Analysis

8.1. FINEVAL Framework

8.2. Experimental Design

| Task | Dataset | Metrics |
|---|---|---|
| Risk Assessment | JPMorgan Chase Case Data [13] | RSI, FC, ACC |
| Portfolio Optimization | IMF Financial Data [20] | FC, AD, CR |
| Fraud Detection | Synthetic Transaction Data | ACC, RCS, LS |
| Regulatory Compliance | SEC Filings Corpus | RCS, REL, FLU |
| Earnings Analysis | S&P 500 Reports | ACC, AD, CR |
| Derivative Pricing | Options Market Data | FC, LS, RSI |

8.3. Implementation Details

- Temperature: 0.7 for creative tasks, 0.3 for precise tasks

- Max tokens: 2048

- Stop sequences: Task-specific
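These settings can be captured in a small configuration object. This is a sketch under assumptions: the class and function names are hypothetical, the mapping of tasks to "creative" or "precise" is left to the caller, and the stop sequence shown is only an example because the source says stop sequences are task-specific without listing them.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple

# Temperatures stated in the implementation details above.
TEMPERATURES = {"creative": 0.7, "precise": 0.3}


@dataclass(frozen=True)
class GenerationConfig:
    temperature: float
    max_tokens: int = 2048          # fixed limit from the list above
    stop: Tuple[str, ...] = ()      # task-specific, supplied by the caller


def config_for(task_kind: str, stop: Iterable[str] = ()) -> GenerationConfig:
    """Build the decoding settings for a 'creative' or 'precise' task."""
    if task_kind not in TEMPERATURES:
        raise ValueError(f"unknown task kind: {task_kind!r}")
    return GenerationConfig(temperature=TEMPERATURES[task_kind], stop=tuple(stop))


# Hypothetical usage: a numeric task with an illustrative stop sequence.
pricing_config = config_for("precise", stop=["\n\nQ:"])
report_config = config_for("creative")
print(pricing_config)
print(report_config)
```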

8.4. Overall Performance

| Metric | CoT | ToT | GoT |
|---|---|---|---|
| Accuracy | 0.72 | 0.81 | 0.89 |
| Financial Consistency | 0.68 | 0.77 | 0.85 |
| Regulatory Compliance | 0.75 | 0.82 | 0.91 |
| Risk Sensitivity | 0.71 | 0.83 | 0.88 |
| Logical Soundness | 0.69 | 0.78 | 0.87 |
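The table can be summarized as per-method means and relative gains over the CoT baseline. The snippet below only re-uses the values reported in the table; the helper names are illustrative.

```python
# Scores copied from the overall performance table above.
SCORES = {
    "Accuracy":              {"CoT": 0.72, "ToT": 0.81, "GoT": 0.89},
    "Financial Consistency": {"CoT": 0.68, "ToT": 0.77, "GoT": 0.85},
    "Regulatory Compliance": {"CoT": 0.75, "ToT": 0.82, "GoT": 0.91},
    "Risk Sensitivity":      {"CoT": 0.71, "ToT": 0.83, "GoT": 0.88},
    "Logical Soundness":     {"CoT": 0.69, "ToT": 0.78, "GoT": 0.87},
}


def relative_gain(baseline: float, improved: float) -> float:
    """Fractional improvement of `improved` over `baseline`."""
    return (improved - baseline) / baseline


def mean_score(method: str) -> float:
    """Unweighted mean of a method's scores across all metrics."""
    return sum(row[method] for row in SCORES.values()) / len(SCORES)


means = {method: mean_score(method) for method in ("CoT", "ToT", "GoT")}
got_gain = {metric: relative_gain(row["CoT"], row["GoT"])
            for metric, row in SCORES.items()}

for method, value in means.items():
    print(f"{method}: mean {value:.3f}")
for metric, gain in got_gain.items():
    print(f"GoT vs CoT on {metric}: {gain:+.1%}")
```

On these numbers GoT leads on every metric, with relative gains over CoT between roughly 21% (Regulatory Compliance) and 26% (Logical Soundness).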

8.5. Financial Task Breakdown

8.5.1. Risk Assessment

8.5.2. Portfolio Optimization

8.5.3. Fraud Detection

8.6. Training Requirements

9. Mathematical Foundations and Quantitative Methods

9.1. Formalizing Prompt Effectiveness

- Task accuracy: for ground truth Y

- Financial consistency:

- Regulatory compliance:

9.2. Structured Prompting as Graph Search

9.3. Quantitative Evaluation Metrics

9.4. Statistical Significance Testing

9.5. Evaluation Metrics for LLMs and Prompts

9.5.1. Accuracy and Relevance

9.5.2. Coherence and Fluency

9.5.3. Bias and Hallucination

9.5.4. Efficiency and Latency

9.6. Advanced Prompting Techniques and Their Formalisms

9.6.1. Chain-of-Thought (CoT) Prompting

9.6.2. Tree-of-Thought (ToT) Prompting

9.6.3. Graph-of-Thought (GoT) Prompting

9.7. Quantitative Analysis in Prompt Engineering

9.8. Accuracy and Error Metrics

9.9. Prompt Evaluation Metrics

9.10. Statistical Significance Testing

9.11. Correlation Analysis

9.12. Optimization of Prompt Parameters

9.13. Automated Evaluation Pipelines

10. Proposed Architecture for Prompt Engineering with LLMs

10.1. Architecture Overview

- LLM Inference Engine: Interfaces with one or more LLMs, providing API abstraction and load balancing for high-throughput applications.

- Feedback and Optimization Loop: Utilizes evaluation results to iteratively refine prompts and model configurations. Can employ reinforcement learning or grid search for optimization.

10.2. Workflow

- Task Definition: User or system defines the task and objectives.

- Prompt Construction: The Prompt Generation Module creates candidate prompts using advanced techniques (e.g., chain-of-thought, tree-of-thought).

- Model Inference: Prompts are sent to the LLM Inference Engine, which returns responses.

- Evaluation: The Evaluation Module scores the responses using automated metrics.

- Feedback: Results are used to optimize prompts and model parameters.

- Deployment and Monitoring: The best-performing configurations are deployed, with ongoing monitoring for compliance and performance.
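The first five workflow steps amount to a generate, score, and select loop. The sketch below is one way to wire them together, assuming the model and the evaluator are plain callables; `run_workflow`, `stub_llm`, and `stub_score` are hypothetical names, and the stubs replace a real LLM and a real metric so the loop runs offline.

```python
from typing import Callable, List, Tuple

Result = Tuple[float, str, str]  # (score, prompt, response)


def run_workflow(task: str,
                 templates: List[str],
                 llm: Callable[[str], str],
                 score: Callable[[str], float]) -> Tuple[str, List[Result]]:
    """Construct candidate prompts, run inference, evaluate, and rank."""
    results: List[Result] = []
    for template in templates:
        prompt = template.format(task=task)   # Prompt Construction
        response = llm(prompt)                # Model Inference
        results.append((score(response), prompt, response))  # Evaluation
    # Feedback: the ranking tells the caller which prompt to keep or refine.
    results.sort(key=lambda item: item[0], reverse=True)
    return results[0][1], results


def stub_llm(prompt: str) -> str:
    """Stand-in model (assumption): shows its working only when asked to."""
    if "step by step" in prompt:
        return "Coupon = 0.05 * 1000 = 50. Answer: 50"
    return "50"


def stub_score(response: str) -> float:
    """Toy metric (assumption): reward responses that show their working."""
    return 1.0 if "Answer:" in response and "=" in response else 0.5


best_prompt, ranked = run_workflow(
    "Compute the annual coupon on a 1,000 bond paying 5%.",
    ["{task}", "{task}\nLet's think step by step."],
    stub_llm,
    stub_score,
)
print(best_prompt)
```

In a deployed system the scoring callable would be one of the automated metrics from Section 6, and the ranked results would be logged for the monitoring step.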

10.3. Key Features and Benefits

10.4. Implementation Considerations

10.5. System Overview

10.6. Core Components

10.6.1. Regulatory Compliance Layer

10.6.2. Multi-Stage Reasoning Engine

10.6.3. Dynamic Prompt Optimizer

10.7. Financial-Specific Modules

10.8. Implementation Considerations

10.8.1. Performance

10.8.2. Security

- Prompt injection via:

- Role-based access control [98]

10.9. Deployment Framework

11. Future Directions: Next 5 Years – Estimates and Findings

11.1. Evolution of Prompt Engineering Techniques

11.2. Shift Toward Problem Formulation

11.3. Automated and Self-Improving Evaluation Pipelines

11.4. Domain-Specific and Regulatory Applications

11.5. Risks, Ethics, and Governance

11.6. Emerging Trends (2025-2030)

- Regulatory-Aware Prompting: Development of compliance-constrained LLMs whose outputs must meet a compliance threshold (projected to become mandatory by 2027 [19]).

- Real-Time Market Adaptation: Dynamic prompt tuning that enables millisecond-scale adjustments to market shocks.

- Multimodal Financial Reasoning: Integration of:

  - Text prompts with real-time Bloomberg terminal data

  - Earnings call audio sentiment analysis

  - Chart pattern recognition (projected 35% accuracy boost [95])

11.7. Key Research Challenges

| Challenge | Solution Approach | Timeline |
|---|---|---|
| Explainability | Shapley-value attribution for prompts | 2026 |
| Adversarial Robustness | GAN-based prompt hardening | 2027 |
| Cross-Border Compliance | Region-specific prompt layers | 2028 |

11.8. Strategic Recommendations

- Workforce Upskilling: Invest in training programs combining:

  - Financial prompt engineering (30-50 hrs curriculum [22])

  - Regulatory frameworks (SEC/FCA/ESMA updates)

- Infrastructure Investments:

- Risk Management: Implement:

  - Prompt version control systems

  - Real-time hallucination detection (>95% recall needed [49])

11.9. Long-Term Projections

- 80% of Tier-1 banks will deploy Graph-of-Thought systems for:

  - M&A due diligence (37% faster [14])

  - Stress testing (29% more scenarios/hour)

- Emergence of Prompt Risk Officers (PROs) as C-suite roles

- Standardized FINPROMPT certification (analogous to FRM/CFA)

11.10. Estimates and Research Opportunities

11.11. Challenges and Future Work

- Automated Prompt Optimization: Developing more sophisticated methods for automatically generating and optimizing prompts without extensive human intervention.

- Robustness to Adversarial Attacks: Enhancing the resilience of LLMs to prompt injection and other adversarial attacks.

- Ethical AI Development: Further research into mitigating biases, ensuring fairness, and promoting transparency in LLM outputs, especially in high-stakes domains like finance.

- Domain-Specific Prompting: Developing highly specialized prompt engineering techniques tailored to specific industry needs, such as complex financial modeling or legal analysis.

- Multi-modal Prompting: Extending prompt engineering to multi-modal LLMs that can process and generate information across text, images, and other data types.

- Continuous Learning and Adaptation: Designing prompts and systems that can adapt and improve over time based on user feedback and evolving data.

12. Visual and Tabular References

12.1. Figure References

- Figure 1 presents the frequency distribution of prompt engineering techniques in academic literature, showing Chain-of-Thought as the most prevalent approach.

- Figure 2 displays a radar chart of research distribution across key categories, highlighting the dominance of Prompt Engineering Techniques and Evaluation Metrics studies.

- Figure 3 provides a comparative heatmap of advanced techniques across multiple dimensions, revealing Graph-of-Thought’s superior flexibility but lower explainability.

- Figure 4 illustrates the projected evolution of prompt engineering from 2023 to 2027, forecasting domain specialization by 2026.

- Figure 5 depicts the technical architecture of prompt evaluation systems synthesized from literature.

- Figure 6 enhances the architecture diagram with mathematical formalisms, including Chain-of-Thought probability and Graph-of-Thought structures.

12.2. Table References

- Table 1 systematically maps citations to visualization types, showing the foundational papers behind each figure.

- Table 2 outlines our experimental design, listing the six financial tasks evaluated and their associated datasets and metrics.

- Table 3 compares the performance of CoT, ToT, and GoT techniques across key metrics, demonstrating GoT’s consistent superiority.

- Table 4 details specialized financial components in our proposed architecture, including their regulatory and functional implementations.

- Table 5 presents a five-year research roadmap addressing key challenges like explainability and adversarial robustness.

12.3. Mathematical Formulations

- Equation 1 models prompt engineering as an optimization problem maximizing expected utility.

- Equation 2 formalizes structured prompting as graph search with weighted components for accuracy, depth, and consistency.

- The ComplianceScore equation in the Regulatory Compliance Layer implements real-time constraint checking weighted by regulatory priorities.

- The dynamic prompt optimization equation shows how prompts adapt using gradient-based updates with task-specific learning rates.
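The equations themselves are not reproduced in this section, so the block below is only one plausible reading of the descriptions of Equations 1 and 2, written out for orientation. Every symbol is an assumption: $\mathcal{P}$ is the set of candidate prompts, $f_{\theta}$ the LLM, $\mathcal{D}$ the task distribution, $U$ a utility function, $\pi$ a reasoning path, and $w_a, w_d, w_c$ non-negative weights.

```latex
% Equation 1 (assumed form): choose the prompt that maximizes expected utility.
p^{*} = \arg\max_{p \in \mathcal{P}} \;
        \mathbb{E}_{(x, y) \sim \mathcal{D}}
        \left[ U\!\left( f_{\theta}(p, x),\, y \right) \right]

% Equation 2 (assumed form): score a reasoning path by weighted
% accuracy, depth, and consistency, then search for the best path.
S(\pi) = w_a \,\mathrm{Acc}(\pi) + w_d \,\mathrm{Depth}(\pi) + w_c \,\mathrm{Cons}(\pi),
\qquad
\pi^{*} = \arg\max_{\pi} S(\pi)
```

Under this reading, CoT restricts $\pi$ to a single chain, ToT searches over branches of a tree, and GoT allows paths through an arbitrary graph of intermediate thoughts.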

12.4. Systematic Analysis of Prompting Methods in Financial Tasks

- Risk Assessment

  - CoT: Breaks down risk factors step-by-step, improving transparency in reasoning [62].

  - ToT: Explores multiple risk scenarios simultaneously, enhancing decision-making under uncertainty [6].

  - GoT: Models complex interdependencies between risk factors using graph structures [42].

- Portfolio Optimization

  - CoT: Provides sequential reasoning for asset allocation strategies [4].

  - ToT: Evaluates multiple investment paths and backtracks from suboptimal choices [17].

  - GoT: Captures nonlinear relationships between assets and market conditions [44].

- Fraud Detection

  - CoT: Traces suspicious transaction patterns through logical steps [16].

  - ToT: Generates and prunes hypotheses about fraudulent activities [39].

  - GoT: Maps fraud networks by connecting transactional nodes [41].

- Regulatory Compliance

  - CoT: Parses regulatory documents with explainable step-by-step checks [12].

  - ToT: Branches interpretation of ambiguous clauses across legal contexts [34].

  - GoT: Links compliance rules across jurisdictions dynamically [19].

- Earnings Analysis

  - CoT: Derives financial ratios sequentially from statements [14].

  - ToT: Compares alternative accounting treatments side-by-side [37].

  - GoT: Correlates earnings drivers across industries and time periods [55].
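The ToT behaviour described above (generate several candidate thoughts, score them, prune the weak ones) can be sketched as a beam search over partial reasoning paths. This is a simplified breadth-first variant, not a full implementation with explicit backtracking. In practice `expand` and `evaluate` would each call an LLM; here they are hypothetical lookups over made-up scores so the search itself can be run.

```python
from typing import Callable, List, Tuple

Thought = Tuple[str, float]   # (label, hypothetical score)
Path = List[Thought]


def tree_of_thought(root: Thought,
                    expand: Callable[[Path], List[Thought]],
                    evaluate: Callable[[Path], float],
                    beam_width: int = 2,
                    depth: int = 3) -> Path:
    """Keep the `beam_width` best partial paths at each level of the tree."""
    frontier: List[Path] = [[root]]
    for _ in range(depth):
        candidates = [path + [thought]
                      for path in frontier
                      for thought in expand(path)]
        if not candidates:
            break
        candidates.sort(key=evaluate, reverse=True)
        frontier = candidates[:beam_width]   # prune low-scoring branches
    return max(frontier, key=evaluate)


# Illustrative options per step; labels and scores are invented.
STEP_OPTIONS: List[List[Thought]] = [
    [("increase bonds", 0.6), ("increase equities", 0.4)],
    [("hedge FX exposure", 0.7), ("leave FX unhedged", 0.2)],
    [("rebalance quarterly", 0.5), ("rebalance annually", 0.3)],
]


def expand(path: Path) -> List[Thought]:
    step = len(path) - 1   # the root occupies index 0
    return STEP_OPTIONS[step] if step < len(STEP_OPTIONS) else []


def evaluate(path: Path) -> float:
    return sum(score for _, score in path[1:])


best_path = tree_of_thought(("start", 0.0), expand, evaluate)
best_labels = [label for label, _ in best_path]
print(best_labels)
```

A GoT variant would additionally let paths merge, so that a thought can aggregate evidence from several predecessors instead of exactly one.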

13. Conclusion

- 23-42% accuracy improvements

- 31% reduction in hallucinations

- Strong compliance performance

- Real-time adaptation

- Explainability enhancements

- Regulatory framework integration

Conflicts of Interest

References

- Unlocking the Power of Thought: Chain-of-Thought, Tree-of-Thought, and Graph-of-Thought Prompting for Next-Gen AI Solutions | LinkedIn. https://www.linkedin.com/pulse/unlocking-power-thought-chain-of-thought-prompting-next-gen-das-ujzhe/.

- Creating Custom Prompt Evaluation Metric with PromptLab | LinkedIn. https://www.linkedin.com/pulse/promptlab-creating-custom-metric-prompt-evaluation-raihan-alam-o0slc/.

- Chain-of-Thought Prompting: Helping LLMs Learn by Example. https://deepgram.com/learn/chain-of-thought-prompting-guide, 2025.

- Chain-of-Thought Prompting – Nextra. https://www.promptingguide.ai/techniques/cot, 2025.

- Chain-of-Thought Prompting: Step-by-Step Reasoning with LLMs. https://www.datacamp.com/tutorial/chain-of-thought-prompting.

- Bhatt, B. Tree of Thoughts (ToT): Enhancing Problem-Solving in LLMs. https://learnprompting.org/docs/advanced/decomposition/tree_of_thoughts.

- B, Z. Mastering AI LLMs through The Tree of Thoughts Prompting Technique. https://blog.gopenai.com/mastering-ai-llms-through-the-tree-of-thoughts-prompting-technique-a850b429915a, 2023.

- B, K.A. Tree of Thought Prompting: Unleashing the Potential of AI Brainstorming. https://blog.searce.com/tree-of-thought-prompting-unleashing-the-potential-of-ai-brainstorming-9a77a7d640b7, 2023.

- Chain, Tree, and Graph of Thought for Neural Networks | Artificial Intelligence in Plain English. https://ai.plainenglish.io/chain-tree-and-graph-of-thought-for-neural-networks-6d69c895ba7f.

- Prompt Engineering for AI Guide. https://cloud.google.com/discover/what-is-prompt-engineering.

- Takyar, A. Prompt Engineering: The Process, Uses, Techniques, Applications and Best Practices, 2023.

- Prompt Engineering for Finance 101. https://www2.deloitte.com/us/en/pages/consulting/articles/prompt-engineering-for-finance.html.

- Boesen, T. JPMorgan Accelerates AI Adoption with Focused Prompt Engineering Training, 2024.

- Dhar, A.; Datta, A.; Das, S. Analysis on Enhancing Financial Decision-making Through Prompt Engineering. In Proceedings of the 2023 7th International Conference on Electronics, Materials Engineering & Nano-Technology (IEMENTech), 2023, pp. 1–5. https://doi.org/10.1109/IEMENTech60402.2023.10423447.

- PYMNTS. Prompt Engineering for Payments AI Models Is Emerging Skillset, 2023.

- Using GPT-4 with Prompt Engineering for Financial Industry Tasks, 2023.

- Tree of Thoughts (ToT) – Nextra. https://www.promptingguide.ai/techniques/tot, 2025.

- Vivek. Chain-of-Thought, Tree-of-Thought, and Graph-of-Thought, 2024.

- Generative AI Governance Essentials, 2024.

- Boukherouaa, E.B.; Shabsigh, G. Generative Artificial Intelligence in Finance: Risk Considerations. https://www.imf.org/en/Publications/fintech-notes/Issues/2023/08/18/Generative-Artificial-Intelligence-in-Finance-Risk-Considerations-537570.

- AI Essentials: Prompt Engineering & Use Cases in Financial Services. https://www.forvismazars.us/events/2024/12/ai-essentials-prompt-engineering-use-cases-in-financial-services.

- Schuckart, A. GenAI and Prompt Engineering: A Progressive Framework for Empowering the Workforce. In Proceedings of the 29th European Conference on Pattern Languages of Programs, People, and Practices, New York, NY, USA, 2024; EuroPLoP ’24, pp. 1–8. https://doi.org/10.1145/3698322.3698348.

- Advanced Prompt Engineering Techniques for L&D. https://www.trainingmagnetwork.com/events/3945.

- Advanced Prompt Engineering Techniques: Tree-of-Thoughts Prompting. https://deepgram.com/learn/tree-of-thoughts-prompting, 2024.

- ChatGPT Prompt Engineering for Developers. https://www.deeplearning.ai/short-courses/chatgpt-prompt-engineering-for-developers/.

- Bashardoust, A.; Feng, Y.; Geissler, D.; Feuerriegel, S.; Shrestha, Y.R. The Effect of Education in Prompt Engineering: Evidence from Journalists, 2024, [arXiv:cs/2409.12320]. https://doi.org/10.48550/arXiv.2409.12320.

- Introducing CARE: A New Way to Measure the Effectiveness of Prompts | LinkedIn. https://www.linkedin.com/pulse/introducing-care-new-way-measure-effectiveness-prompts-reuven-cohen-ls9bf/.

- Assertions & Metrics | Promptfoo. https://www.promptfoo.dev/docs/configuration/expected-outputs/.

- Augmenting Third-Party Risk Management with Enhanced Due Diligence for AI. https://www.garp.org/risk-intelligence/operational/augmenting-third-party-250117.

- Prompt Engineering: Techniques, Applications, and Benefits | Spiceworks.

- Basics of Prompt Engineering | Free Online Course | Alison. https://alison.com/course/basics-of-prompt-engineering.

- Prompt-Engineering-Guide/Lecture/Prompt-Engineering-Lecture-Elvis.Pdf at Main · Dair-Ai/Prompt-Engineering-Guide. https://github.com/dair-ai/Prompt-Engineering-Guide/blob/main/lecture/Prompt-Engineering-Lecture-Elvis.pdf.

- Yse, D.L. Your Guide to Prompt Engineering: 9 Techniques You Should Know, 2025.

- Morrison, Michal, I.P. Prompt Engineering for Legal: Security and Risk Considerations (via Passle). https://legalbriefs.deloitte.com//post/102j486/prompt-engineering-for-legal-security-and-risk-considerations, 2024.

- Khare, Y. What Is Chain-of-Thought Prompting and Its Benefits?, 2023.

- Dong, M.M.; Stratopoulos, T.C.; Wang, V.X. A Scoping Review of ChatGPT Research in Accounting and Finance. International Journal of Accounting Information Systems 2024, 55, 100715. https://doi.org/10.1016/j.accinf.2024.100715.

- Tree of Thought Prompting: What It Is and How to Use It. https://www.vellum.ai/blog/tree-of-thought-prompting-framework-examples.

- How Tree of Thoughts Prompting Works (Explained) - Workflows. https://www.godofprompt.ai/blog/how-tree-of-thoughts-prompting-works-explained.

- Tree-of-Thought Prompting: Key Techniques and Use Cases. https://www.helicone.ai/blog/tree-of-thought-prompting.

- Khan, M.S.; Umer, H. ChatGPT in Finance: Applications, Challenges, and Solutions. Heliyon 2024, 10, e24890. https://doi.org/10.1016/j.heliyon.2024.e24890.

- Mayo, M. Graph of Thoughts: A New Paradigm for Elaborate Problem-Solving in Large Language Models.

- V, M. What Is Graph of Thought in Prompt Engineering?, 2024.

- “Graph of Thoughts” (GoT) Revolution: Next Level to Chains and Trees of Thought (ToT) | by AI TutorMaster | Level Up Coding. https://levelup.gitconnected.com/graph-of-thoughts-got-revolution-next-level-to-chains-and-trees-of-thought-tot-bc9725661f3c.

- Graph-Based Prompting and Reasoning with Language Models | by Cameron R. Wolfe, Ph.D. | TDS Archive | Medium. https://medium.com/data-science/graph-based-prompting-and-reasoning-with-language-models-d6acbcd6b3d8.

- Generative Artificial Intelligence in the Financial Services Space, 2004.

- Srivastava, T. 12 Important Model Evaluation Metrics for Machine Learning Everyone Should Know (Updated 2025), 2019.

- Define Your Evaluation Metrics | Generative AI. https://cloud.google.com/vertex-ai/generative-ai/docs/models/determine-eval.

- Evaluation Metric For Question Answering - Finetuning Models - ChatGPT. https://community.openai.com/t/evaluation-metric-for-question-answering-finetuning-models/44877, 2023.

- Prompt-Based Hallucination Metric - Testing with Kolena. https://docs.kolena.com/metrics/prompt-based-hallucination-metric/.

- Gupta, S. Metrics to Measure: Evaluating AI Prompt Effectiveness, 2024.

- Evaluating Prompt Effectiveness: Key Metrics and Tools for AI Success. https://portkey.ai/blog/evaluating-prompt-effectiveness-key-metrics-and-tools/, 2024.

- Metrics For Prompt Engineering | Restackio. https://www.restack.io/p/prompt-engineering-answer-metrics-for-prompt-engineering-cat-ai.

- The CEO’s Guide to Generative AI. https://www.ibm.com/thought-leadership/institute-business-value/en-us/report/ceo-generative-ai-book, 2025.

- lgayhardt. Evaluation Flow and Metrics in Prompt Flow - Azure Machine Learning. https://learn.microsoft.com/en-us/azure/machine-learning/prompt-flow/how-to-develop-an-evaluation-flow?view=azureml-api-2, 2024.

- Enhancing Language Model Performance with Chain, Tree, and Buffer of Thought Approaches. https://unimatrixz.com/blog/prompt-engineering-cot-vs-bot-vs-tot/, 2024.

- Prompt Engineering for Generative AI | Machine Learning. https://developers.google.com/machine-learning/resources/prompt-eng.

- Martinez, J. Financial Zero-shot Learning and Automatic Prompt Generation with Spark NLP, 2022.

- Chain-of-Thought Prompting: Techniques, Tips, and Code Examples. https://www.helicone.ai/blog/chain-of-thought-prompting.

- Chain of Thought Prompting: Enhance AI Reasoning & LLMs. https://futureagi.com/blogs/chain-of-thought-prompting-ai-2025.

- Chain of Thought Prompting Guide. https://www.prompthub.us/blog/chain-of-thought-prompting-guide.

- What Is Chain of Thought (CoT) Prompting? | IBM. https://www.ibm.com/think/topics/chain-of-thoughts.

- Kuka, V. Chain-of-Thought Prompting. https://learnprompting.org/docs/intermediate/chain_of_thought.

- NeurIPS Poster Chain of Preference Optimization: Improving Chain-of-Thought Reasoning in LLMs. https://nips.cc/virtual/2024/poster/96804.

- What Is Tree Of Thoughts Prompting? | IBM. https://www.ibm.com/think/topics/tree-of-thoughts, 2024.

- Wolfe, C.R. Tree of Thoughts Prompting, 2023.

- Sharick, E. Review of “Graph of Thoughts: Solving Elaborate Problems with Large Language Models”.

- Graph of Thought as Prompt - Prompting. https://community.openai.com/t/graph-of-thought-as-prompt/575572, 2023.

- PromptHub Blog: Least-to-Most Prompting Guide. https://www.prompthub.us/blog/least-to-most-prompting-guide.

- How to Use Chain-of-Draft Prompting for Better LLM Responses? https://www.projectpro.io/article/chain-of-draft-prompting/1120.

- Something-of-Thought in LLM Prompting: An Overview of Structured LLM Reasoning | Towards Data Science. https://towardsdatascience.com/something-of-thought-in-llm-prompting-an-overview-of-structured-llm-reasoning-70302752b390/.

- Aman’s AI Journal • Primers • Prompt Engineering. https://aman.ai/primers/ai/prompt-engineering/.

- What Are Prompt Evaluations? https://blog.promptlayer.com/what-are-prompt-evaluations/, 2025.

- Evaluating Prompts: A Developer’s Guide. https://arize.com/blog-course/evaluating-prompt-playground/.

- Top 5 Metrics for Evaluating Prompt Relevance. https://latitude-blog.ghost.io/blog/top-5-metrics-for-evaluating-prompt-relevance/, 2025.

- Heidloff, N. Metrics to Evaluate Search Results. https://heidloff.net/article/search-evaluations/, 2023.

- @PatentPC. How To Evaluate The Performance Of ChatGPT On Different Prompts And Metrics. https://patentpc.com/blog/how-to-evaluate-the-performance-of-chatgpt-on-different-prompts-and-metrics, 2025.

- mn.europe. Prompt Evaluation Metrics: Measuring AI Performance - Artificial Intelligence Blog & Courses, 2024.

- Qualitative Metrics for Prompt Evaluation. https://latitude-blog.ghost.io/blog/qualitative-metrics-for-prompt-evaluation/, 2025.

- Day 9 Evaluate Prompt Quality and Try to Improve It - 30 Days of Testing. https://club.ministryoftesting.com/t/day-9-evaluate-prompt-quality-and-try-to-improve-it/74865, 2024.

- What Are Common Metrics for Evaluating Prompts? https://www.deepchecks.com/question/common-metrics-evaluating-prompts/.

- Prompt Evaluation Methods, Metrics, and Security. https://wearecommunity.io/communities/ai-ba-stream/articles/6155.

- LLM-as-a-judge: A Complete Guide to Using LLMs for Evaluations. https://www.evidentlyai.com/llm-guide/llm-as-a-judge.

- Prompt Alignment | DeepEval - The Open-Source LLM Evaluation Framework. https://docs.confident-ai.com/docs/metrics-prompt-alignment, 2025.

- Top 5 Prompt Engineering Tools for Evaluating Prompts. https://blog.promptlayer.com/top-5-prompt-engineering-tools-for-evaluating-prompts/, 2024.

- Evaluate Prompts | Opik Documentation. https://www.comet.com/docs/opik/evaluation/evaluate_prompt.

- Modify Prompts - Ragas. https://docs.ragas.io/en/stable/howtos/customizations/metrics/_modifying-prompts-metrics/.

- Mishra, H. How to Evaluate LLMs Using Hugging Face Evaluate, 2025.

- IBM Watsonx Subscription. https://www.ibm.com/docs/en/watsonx/w-and-w/2.1.x?topic=models-evaluating-prompt-templates-non-foundation-notebooks, 2024.

- Evaluating Prompt Templates in Projects — Docs. https://dataplatform.cloud.ibm.com/docs/content/wsj/model/dataplatform.cloud.ibm.com/docs/content/wsj/model/wos-eval-prompt.html, 2015.

- Metric Prompt Templates for Model-Based Evaluation | Generative AI. https://cloud.google.com/vertex-ai/generative-ai/docs/models/metrics-templates.

- LLM Evaluation: Top 10 Metrics and Benchmarks.

- Weights & Biases. https://wandb.ai/wandb_fc/learn-with-me-llms/reports/Going-from-17-to-91-Accuracy-through-Prompt-Engineering-on-a-Real-World-Use-Case--Vmlldzo3MTEzMjQz.

- Getting Started with Generative AI? Here’s How in 10 Simple Steps. https://cloud.google.com/transform/introducing-executives-guide-to-generative-ai.

- Paup, E.; Rebouh, L. Demystifying Prompt Engineering for Finance Professionals with Microsoft Copilot.

- The CEO’s Guide to Generative AI: What You Need to Know and Do to Win with Transformative Technology, Second Edition, 2024.

- The Fine Line with LLMs: Financial Institutions’ Cautious Embrace of AI - Risk.Net. https://www.risk.net/insight/technology-and-data/7959038/the-fine-line-with-llms-financial-institutions-cautious-embrace-of-ai, 2024.

- Morrison, Michal, I.P. Prompt Engineering for Legal: Security and Risk Considerations (via Passle). https://legalbriefs.deloitte.com//post/102j486/prompt-engineering-for-legal-security-and-risk-considerations, 2024.

- SecWriter. Understanding Prompt Injection - GenAI Risks, 2023.

- Prompt Engineering Global Unveils Pioneering Course to Equip Professionals for the LLM Revolution, 2023.

- Toye, S. Preparing the Workforce of Tomorrow: AI Prompt Engineering Program and Symposium. https://www.njii.com/2024/11/preparing-the-workforce-of-tomorrow-ai-prompt-engineering-program/, 2024.

- Evaluating AI Prompt Performance: Key Metrics and Best Practices. https://symbio6.nl/en/blog/evaluate-ai-prompt-performance.

- Monitoring Prompt Effectiveness in Prompt Engineering. https://www.tutorialspoint.com/prompt_engineering/prompt_engineering_monitoring_prompt_effectiveness.htm.

- mrbullwinkle. Azure OpenAI Service - Azure OpenAI. https://learn.microsoft.com/en-us/azure/ai-services/openai/concepts/prompt-engineering, 2024.

- Paup, E. Demystifying Prompt Engineering for Finance Professionals with Microsoft Copilot, 2024.

- Evaluating Prompts: Metrics for Iterative Refinement. https://latitude-blog.ghost.io/blog/evaluating-prompts-metrics-for-iterative-refinement/, 2025.

- Pathak, C. Navigating the LLM Evaluation Metrics Landscape, 2024.

- TempestVanSchaik. Evaluation Metrics. https://learn.microsoft.com/en-us/ai/playbook/technology-guidance/generative-ai/working-with-llms/evaluation/list-of-eval-metrics, 2024.

- Shikhrakar, R. The Ultimate Guide to Chain of Thoughts (CoT): Part 1. https://learnprompting.org/blog/guide-to-chain-of-thought-part-one, 2025.

- Elevating Language Models: From Tree of Thought to Knowledge Graphs. https://unimatrixz.com/blog/prompt-engineering-tree-of-thought-and-graph-rag/, 2024.

- Sapunov, G. Chain-of-Thought → Tree-of-Thought, 2023.

- Scott, A. Chain of Thought and Tree of Thoughts: Revolutionizing AI Reasoning. https://adamscott.info.

- Tree of Thoughts — Prompting Method That Outperforms Other Methods - Prompting. https://community.openai.com/t/tree-of-thoughts-prompting-method-that-outperforms-other-methods/226512, 2023.

- Tree of Thought (ToT) Prompting. https://www.geeksforgeeks.org/artificial-intelligence/tree-of-thought-tot-prompting/.

- AI Prompt Engineering Isn’t the Future. https://hbr.org/2023/06/ai-prompt-engineering-isnt-the-future.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).