Submitted: 05 July 2025

Posted: 07 July 2025


Abstract

Keywords:

1. Introduction

- The technical entry barrier remains high, since constructing a performant RAG-LLM pipeline typically requires substantial programming expertise, which many engineering professionals and researchers may lack.

- To be truly effective, a RAG-LLM application must integrate seamlessly with the user’s existing document repositories, such as Google Drive or SharePoint. However, implementing such integrations adds further complexity, often involving authentication protocols, API configurations, and document-parsing logic.

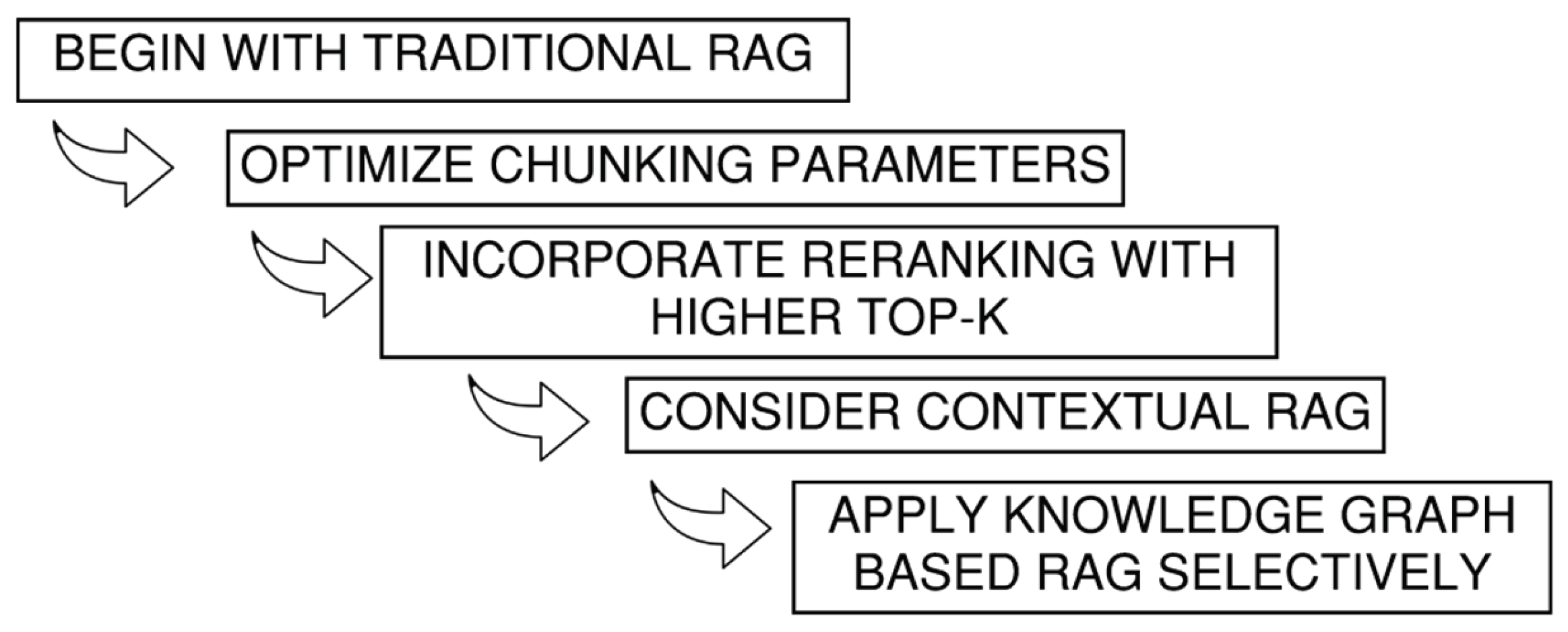

- Achieving optimal performance from a RAG pipeline frequently requires advanced enhancements such as reranking strategies, contextual retrieval mechanisms, or knowledge graph-based augmentation. While these techniques improve retrieval precision and output relevance, they are often technically demanding and require familiarity with multiple NLP tools and frameworks. These challenges collectively restrict the accessibility and practical deployment of RAG-LLM systems for many practitioners in engineering and applied research settings.

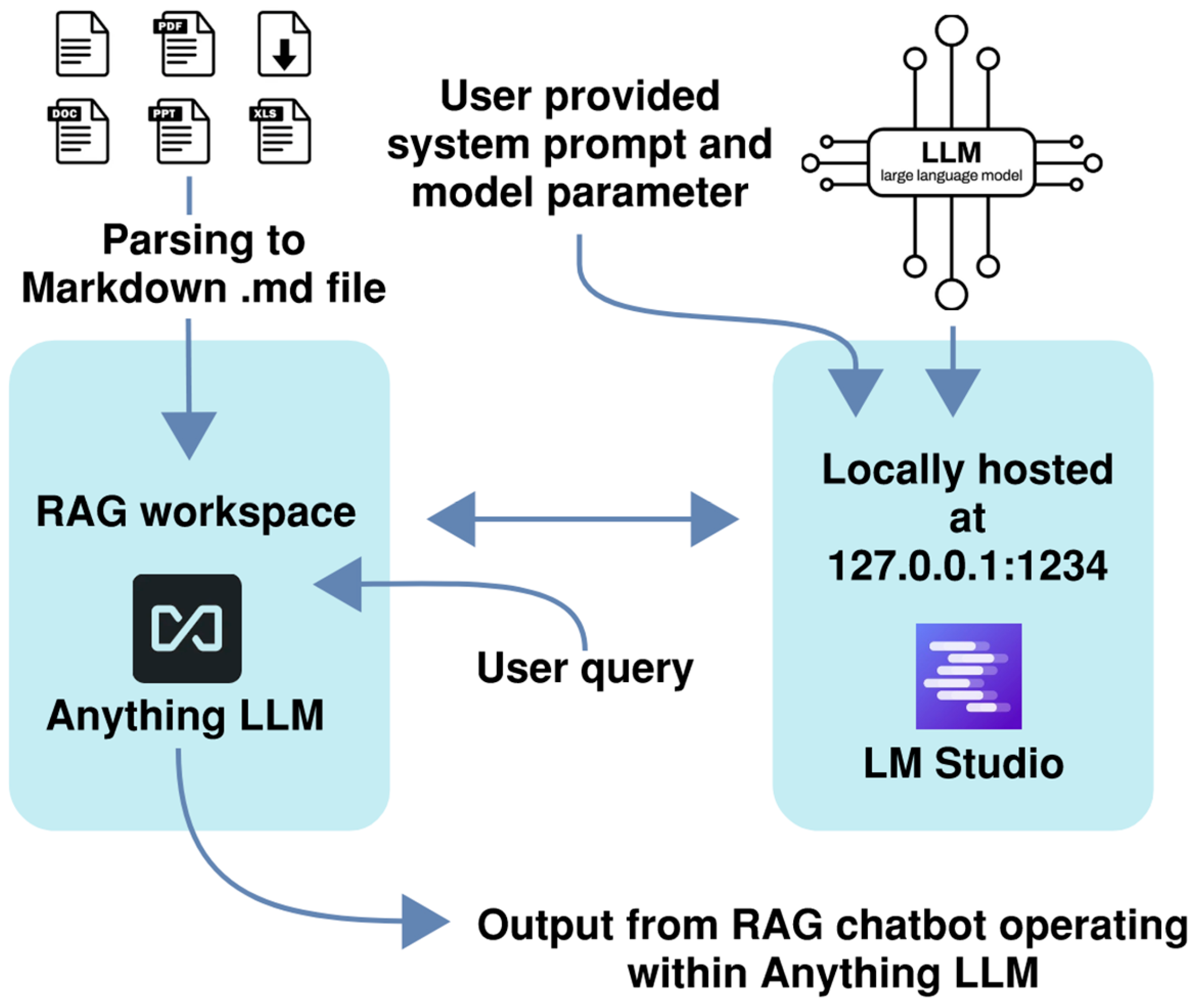

- Low-barrier RAG-LLM pipeline implementation: A practical illustration of a low-barrier RAG-LLM pipeline is provided, using LM Studio and AnythingLLM to enable secure, local document retrieval and language model inference. The accompanying code for parsing and embedding domain-specific documents is available through the ‘Data Availability’ section of the manuscript.

- Practitioner-oriented evaluation and design considerations: The manuscript frames the design and evaluation of RAG workflows with a focus on practitioner guidance, particularly for electrical engineers and applied researchers working in low-code environments such as N8N. Special attention is given to the challenges encountered by traditional RAG systems when processing dense tabular data and multi-layered exceptions commonly found in technical codes and standards.

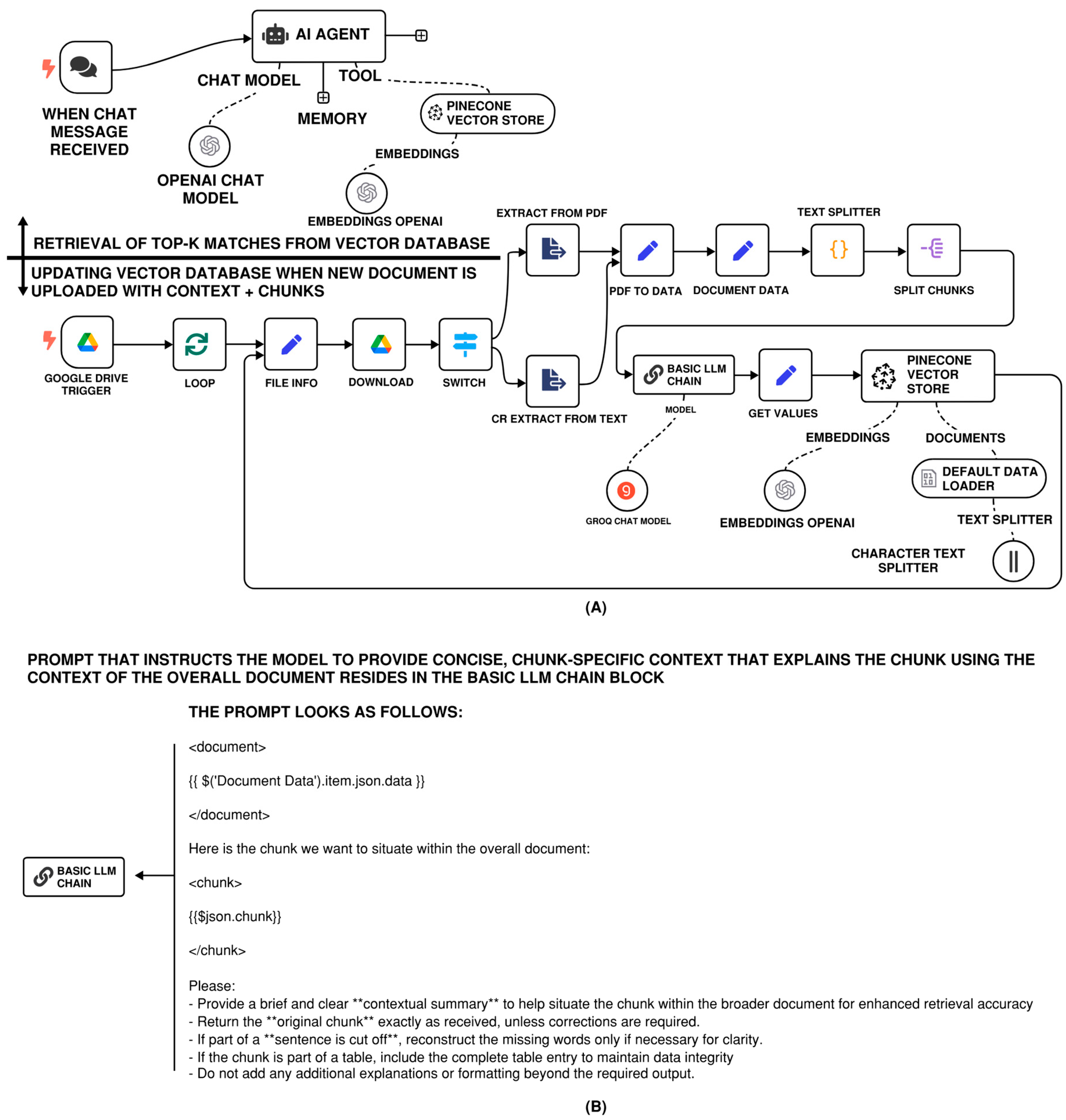

- Contextual retrieval workflow via N8N: A contextual RAG workflow built in N8N is introduced, demonstrating improved handling of tabular structures and ‘exception logic’ through orchestrated document processing and dynamic retrieval logic. The complete workflow is made publicly available and can be accessed via the ‘Data Availability’ section to support reproducibility and practical adoption.

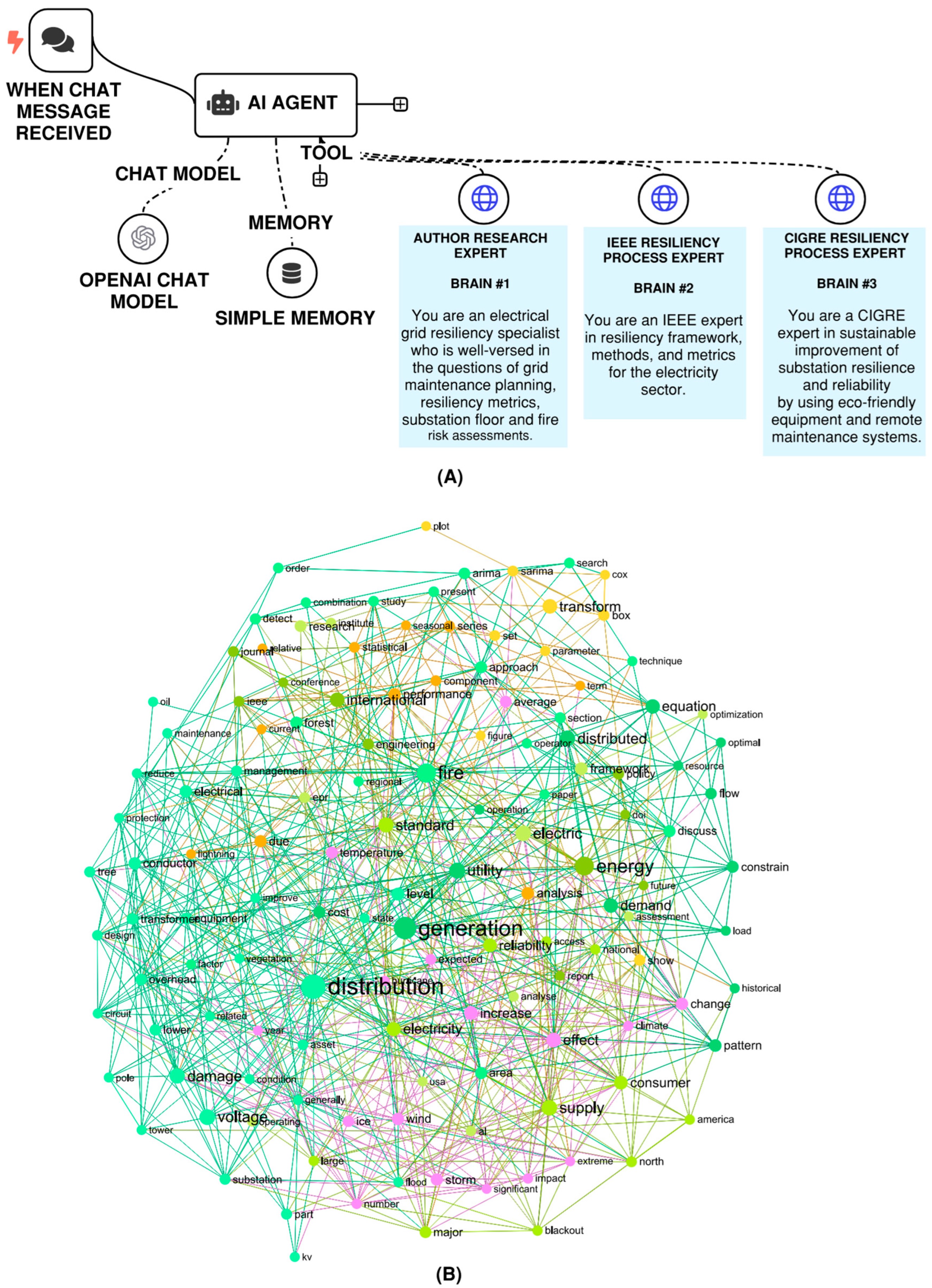

- Knowledge graph-based retrieval workflow via N8N and InfraNodus: A knowledge graph-augmented RAG workflow is presented using N8N in conjunction with InfraNodus, enabling semantic structuring of engineering documents to uncover hidden relationships between technical concepts. This approach supports exploratory querying and cross-referencing across interconnected standards, which is particularly useful in complex design and compliance tasks. The complete workflow, including graph generation, integration, and retrieval logic, is publicly available via the ‘Data Availability’ section to facilitate reproducibility and practical application.

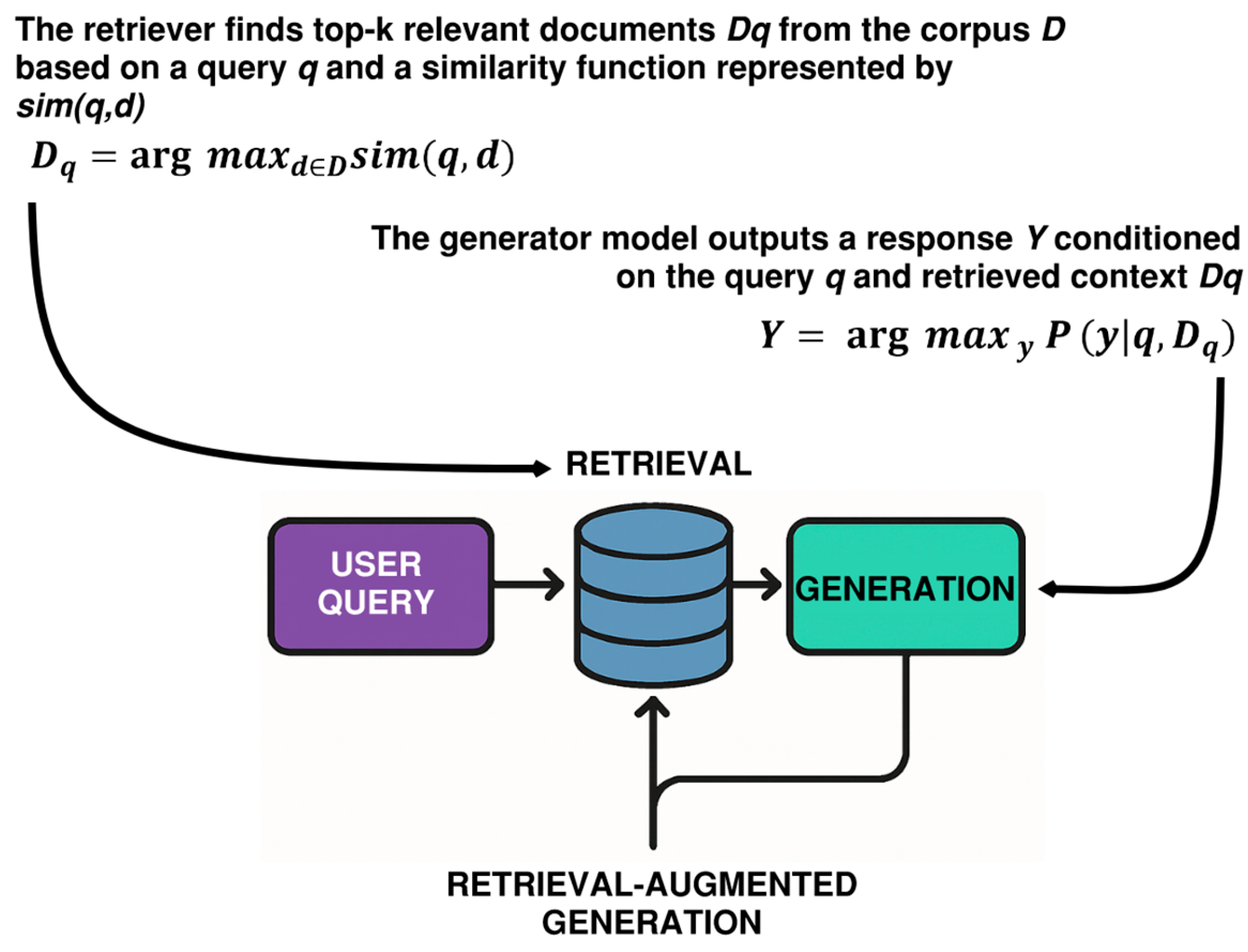

2. Fundamentals of Retrieval-Augmented Generation (RAG)

- Data sovereignty and confidentiality become pressing concerns. Uploaded documents leave the confines of personal or corporate workstations, potentially violating internal data handling policies, intellectual property agreements, or regulatory requirements, especially when proprietary designs, sensitive specifications, or restricted standards are involved.

- Token and context length limitations inherent to hosted models often pose technical barriers. Large documents, such as multi-chapter standards or comprehensive handbooks, frequently exceed the maximum token window (even with chunking and summarization techniques), leading to truncated context or incomplete retrieval, which can compromise the accuracy of the generated response.

- Hosted solutions typically lack persistent, user-controlled storage of parsed knowledge. Once the document is uploaded and used for a single session, the embedding and indexing layers are ephemeral and inaccessible to the user. This prevents engineers from building long-term, reusable vector databases that can evolve with ongoing projects or organizational knowledge needs.

3. Open-Source LLM and Private RAG-LLM Pipeline for Engineering Professionals

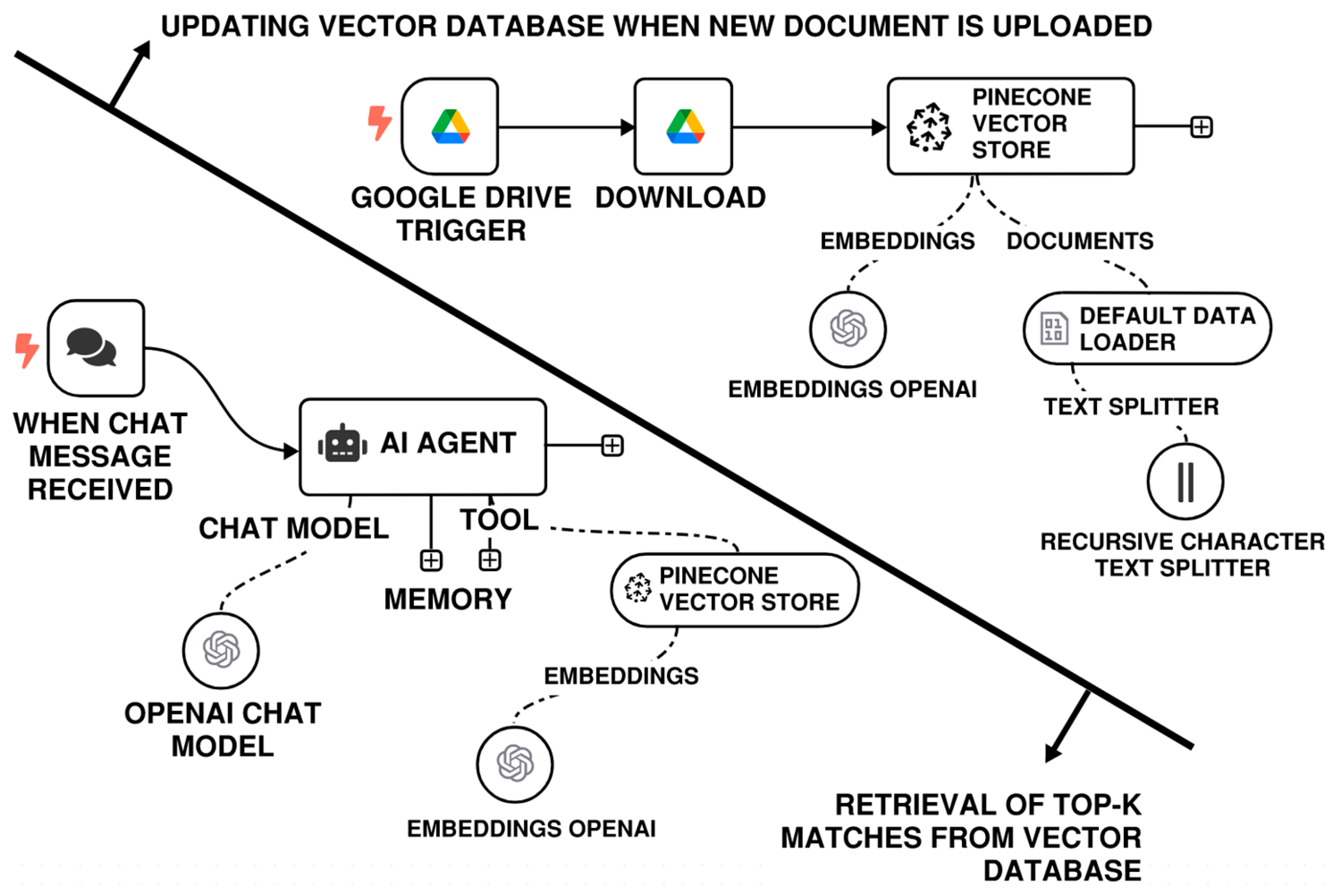

4. Low-Code Integration with N8N for Document Retrieval

- Document upload: Engineers or technical staff upload documents, such as PDF datasheets, Word-based specifications, or standard manuals, into a designated Google Drive folder.

- Automated ingestion: N8N monitors the Google Drive folder for new uploads. When a file is detected, it triggers a processing pipeline that extracts the content, optionally parses it to Markdown or plain text, and embeds the text using a user-defined embedding model.

- Vector storage: The resulting embeddings are stored in a local or cloud-hosted vector database (e.g., Qdrant, Chroma, or Pinecone) for later retrieval.

- Query interface: End users submit queries via various channels, such as Gmail, Slack, or a web-based chatbot. The N8N agent retrieves the top-K relevant chunks from the vector database.

- LLM generation: These retrieved passages are then passed to an LLM of the user’s choice, which is hosted locally and generates a grounded, context-aware response.
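The five steps above can be sketched in a few lines of Python. This is a minimal, self-contained illustration, not the N8N workflow itself: the hashed bag-of-words `embed` function is a hypothetical stand-in for a real embedding model, the in-memory `VectorStore` class stands in for a vector database such as Qdrant, Chroma, or Pinecone, and fixed word-count chunking is likewise an assumption.

```python
import math
import re
import zlib
from collections import Counter


def embed(text: str, dim: int = 256) -> list[float]:
    """Hypothetical stand-in for an embedding model: hashed bag-of-words, L2-normalized."""
    vec = [0.0] * dim
    for token, count in Counter(re.findall(r"[a-z0-9.]+", text.lower())).items():
        vec[zlib.crc32(token.encode()) % dim] += count
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


class VectorStore:
    """In-memory stand-in for a vector database (Qdrant, Chroma, Pinecone, ...)."""

    def __init__(self) -> None:
        self.items: list[tuple[list[float], str]] = []

    def add(self, text: str) -> None:
        self.items.append((embed(text), text))

    def top_k(self, query: str, k: int = 3) -> list[str]:
        q = embed(query)
        # Vectors are normalized, so the dot product equals cosine similarity.
        ranked = sorted(self.items, key=lambda item: sum(a * b for a, b in zip(q, item[0])), reverse=True)
        return [text for _, text in ranked[:k]]


def ingest(document: str, store: VectorStore, chunk_words: int = 50) -> int:
    """Split a document into fixed-size word chunks, embed and store them; return the chunk count."""
    words = document.split()
    count = 0
    for start in range(0, len(words), chunk_words):
        store.add(" ".join(words[start:start + chunk_words]))
        count += 1
    return count


def build_prompt(query: str, chunks: list[str]) -> str:
    """Assemble the grounded prompt that is passed to the (locally hosted) LLM."""
    context = "\n\n".join(f"[{i}] {chunk}" for i, chunk in enumerate(chunks, start=1))
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


store = VectorStore()
for passage in [
    "Section 300.5 covers minimum cover requirements for underground installations.",
    "Table 430.248 lists full-load currents for single-phase AC motors.",
    "Section 310.10(H) covers conductors in parallel.",
]:
    store.add(passage)

hits = store.top_k("minimum cover requirements for underground cables", k=1)
print(build_prompt("What is the minimum cover?", hits))
```

In the N8N workflow these roles are filled by workflow nodes and a real vector database rather than Python functions; the sketch only shows the data flow from ingestion to a grounded prompt.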

- Locate and extract numerical values from NEC tables (e.g., minimum burial depths, conductor ampacity ratings, overcurrent protection limits).

- Interpret codebook exceptions and conditional clauses, which often appear as footnotes or structured rule deviations.

- Retrieve relevant sections and apply contextual logic (e.g., identifying requirements that vary by installation type, voltage class, or application environment).

- Grounding: Did the model cite or synthesize relevant text from the retrieved NEC section?

- Exactness: Did it accurately reproduce or infer key numeric thresholds and regulatory conditions?

- Verifiability: Are the statements presented traceable to the original document sections?
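The numeric part of the Exactness criterion can be partially automated. The sketch below is a hypothetical helper, not the scoring used for the results reported in this paper: it checks what fraction of the numbers in a ground-truth answer also appear verbatim in the model's answer. The example strings are taken (the traditional one abridged) from the PV correction-factor question in the Q&A comparison.

```python
import re

# Integers or decimals, e.g. "24", "0.67", "690.31".
NUMBER = re.compile(r"\d+(?:\.\d+)?")


def extract_numbers(text: str) -> set[str]:
    return set(NUMBER.findall(text))


def numeric_exactness(expected: str, actual: str) -> float:
    """Fraction of numbers in the expected answer that the actual answer reproduces verbatim."""
    wanted = extract_numbers(expected)
    if not wanted:
        return 1.0  # nothing numeric to check
    return len(wanted & extract_numbers(actual)) / len(wanted)


ground_truth = "According to NEC Table 690.31(A), the applicable correction factor is 0.67."
traditional = ("According to the National Electrical Code (NEC) 2017, specifically Table 310.15(B)(2)(a), "
               "the required correction factor is 0.70 for 75°C rated PV conductors in 55°C ambient conditions.")
contextual = ("According to NEC Table 690.31(A), for a 75 °C rated conductor in an ambient temperature "
              "of 51–55 °C, the applicable correction factor is 0.67.")

print(numeric_exactness(ground_truth, traditional))
print(numeric_exactness(ground_truth, contextual))
```

Because the match is purely string-based, "0.7" and "0.70" would count as different values; a check like this complements, rather than replaces, a judgment of grounding and verifiability.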

- Semantic prompting and section identification: The RAG-LLM pipeline demonstrated strong capability in retrieving and identifying the correct NEC sections relevant to a given question. However, optimal performance often required minor prompt reformulations to guide the language model semantically toward the appropriate retrieval vector. Prompts that aligned more closely with the phrasing or terminology found in the NEC tended to yield more accurate and relevant outputs, highlighting the importance of semantic alignment in user queries.

- Numerical table retrieval and chunking limitations: The system showed only moderate performance on questions that required retrieving numerical values from tabular data. In several cases, particularly those involving large or complex NEC tables, the retrieval process failed to return a coherent representation of the table. This behavior is attributed to the document chunking strategy: when a large table is split across multiple chunks, the model often receives incomplete context, resulting in incorrect or only partially accurate responses. This fragmentation of tabular structure is a known limitation of current RAG implementations.

- Challenges with multi-condition exceptions: The RAG-LLM pipeline struggled with rules that involved multi-conditioned exceptions; this is common in NEC clauses that specify alternate requirements based on voltage, environment, or application type. These exceptions are often presented as enumerated or nested logic conditions, and the system had difficulty parsing and reasoning through the full set of conditions. As a result, responses sometimes omitted key qualifying criteria or misapplied the rule entirely. This highlights a current gap in the system's ability to interpret hierarchical exception logic within highly structured regulatory texts.

5. Enhancements to Traditional RAG-LLM Workflow

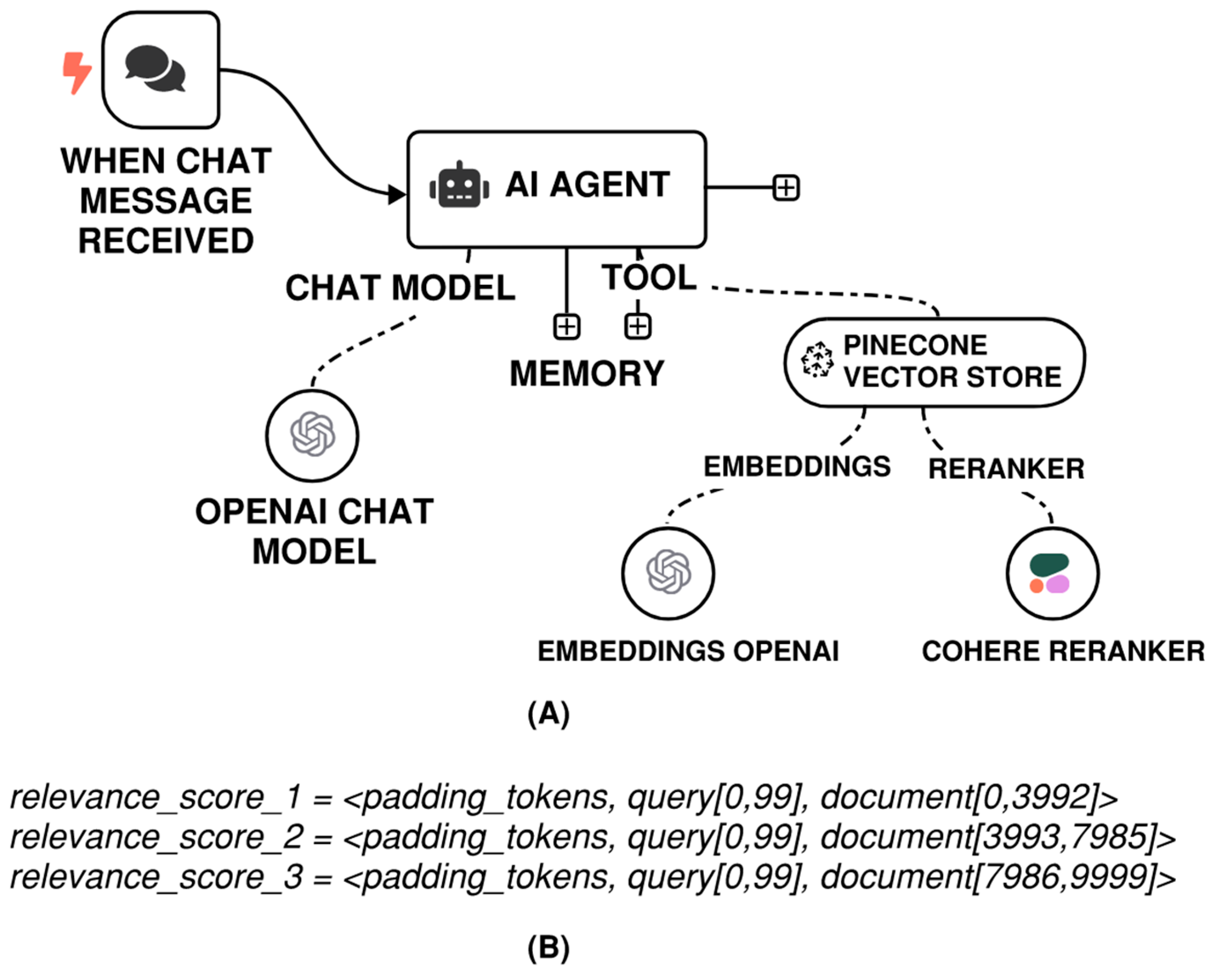

5.1. Enhancements Based on Reranking

- The model in use is rerank-v3.5, which supports a maximum context length of 4,096 tokens;

- The input query is 100 tokens long;

- The document to be ranked is 10,000 tokens long; and

- Document truncation is turned off by setting ‘max_tokens_per_doc’ to 10,000.
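Under these conditions the reranker cannot score the document in a single pass. Cohere's rerank documentation describes splitting such a document into windows that each hold the full query plus as much document text as fits, and using the highest window score as the document's relevance score. The arithmetic below reproduces that split under the stated assumption that no extra template tokens are added; the `window_scores` values are made up for illustration.

```python
def rerank_windows(context_len: int, query_tokens: int, doc_tokens: int) -> list[tuple[int, int]]:
    """Return (query_tokens, doc_tokens) for each window the reranker scores.

    Assumes every window repeats the full query and ignores any special/template tokens.
    """
    per_window = context_len - query_tokens
    if per_window <= 0:
        raise ValueError("query does not fit in the context window")
    windows = []
    remaining = doc_tokens
    while remaining > 0:
        take = min(per_window, remaining)
        windows.append((query_tokens, take))
        remaining -= take
    return windows


windows = rerank_windows(context_len=4096, query_tokens=100, doc_tokens=10_000)
print(windows)  # [(100, 3996), (100, 3996), (100, 2008)]

# The document's relevance is taken as its best window score (illustrative values).
window_scores = [0.12, 0.81, 0.40]
print(max(window_scores))
```

Taking the maximum means a single highly relevant passage anywhere in a long standard can lift the whole document, which is useful for code books but also means the score says nothing about where in the document the match occurred.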

- Without any reranking (baseline),

- bge-reranker-base, and

- Cohere V3.5 reranker.

- The combination of OpenAI embeddings with the Cohere reranker consistently achieves the highest hit rate and MRR, making it the top-performing setup.

- Both CohereRerank and bge-reranker-base demonstrate consistent improvements across diverse embedding models, indicating their robustness and effectiveness in enhancing search quality regardless of the embedding backbone used.
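Hit rate and MRR in the comparison table follow their usual definitions: hit rate is the fraction of queries whose relevant chunk appears in the retrieved top-K list, and MRR (mean reciprocal rank) averages 1/rank of that chunk, counting 0 when it is missing. The sketch below assumes exactly one relevant chunk per query; the query and chunk IDs are hypothetical.

```python
def hit_rate_and_mrr(rankings: dict[str, list[str]], relevant: dict[str, str], k: int) -> tuple[float, float]:
    """Compute hit rate@k and MRR@k, assuming exactly one relevant chunk per query."""
    hits = 0
    reciprocal_sum = 0.0
    for query_id, ranked_ids in rankings.items():
        top_k = ranked_ids[:k]
        target = relevant[query_id]
        if target in top_k:
            hits += 1
            reciprocal_sum += 1.0 / (top_k.index(target) + 1)  # ranks start at 1
    n = len(rankings)
    return hits / n, reciprocal_sum / n


rankings = {
    "q1": ["c7", "c2", "c9"],  # relevant chunk ranked 1st
    "q2": ["c4", "c1", "c8"],  # relevant chunk ranked 2nd
    "q3": ["c5", "c6", "c3"],  # relevant chunk not retrieved
}
relevant = {"q1": "c7", "q2": "c1", "q3": "c0"}
print(hit_rate_and_mrr(rankings, relevant, k=3))
```

Here two of three queries are hits, and MRR is (1 + 1/2 + 0) / 3 = 0.5. A reranker raises MRR by moving the relevant chunk toward rank 1, and raises hit rate when it promotes a relevant chunk from a larger candidate pool into the top K.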

5.2. Enhancements Based on Contextual Retrieval Pipeline and its Advantages

"What is the minimum burial depth for direct-buried conductors under a parking lot?"

"The minimum cover depth shall be 24 inches for direct-buried conductors."

- High token throughput (e.g., 200–300 tokens/s),

- Low per-token cost (e.g., <$0.10 per million tokens), and

- Extended context windows (e.g., 1 million tokens).
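The example chunk above states a depth but not the table or installation condition it belongs to, so on its own it may embed poorly against the parking-lot query. Contextual retrieval, as described by Anthropic [10], prepends a short LLM-generated context to each chunk before embedding. Because each call sends the whole document along with the chunk, the model's price, speed, and context window (the properties listed above) dominate ingestion cost. The sketch below shows both parts under stated assumptions: `fake_llm` is a hypothetical stand-in for a real model call, the template wording is modeled on rather than copied from Anthropic's post, and the document size, chunk size, output length, and prices are illustrative. Prompt caching, which can reduce the cost of repeatedly sending the same document, is not modeled.

```python
import math
from typing import Callable

# Prompt modeled on the contextual-retrieval template published by Anthropic [10].
CONTEXT_TEMPLATE = """<document>
{document}
</document>
Here is the chunk we want to situate within the whole document:
<chunk>
{chunk}
</chunk>
Give a short, succinct context that situates this chunk within the overall document,
to improve search retrieval of the chunk. Answer only with the context."""


def contextualize(document: str, chunk: str, llm: Callable[[str], str]) -> str:
    """Prepend an LLM-generated situating context to a chunk before it is embedded."""
    context = llm(CONTEXT_TEMPLATE.format(document=document, chunk=chunk)).strip()
    return f"{context}\n\n{chunk}"


def contextualization_cost(doc_tokens: int, chunk_tokens: int, prompt_overhead: int,
                           context_tokens_out: int, usd_per_m_in: float, usd_per_m_out: float,
                           context_window: int) -> dict:
    """Rough token and dollar cost of contextualizing every chunk of one document."""
    per_call_input = doc_tokens + chunk_tokens + prompt_overhead
    if per_call_input > context_window:
        raise ValueError("document plus chunk does not fit in the context window")
    n_chunks = math.ceil(doc_tokens / chunk_tokens)
    input_tokens = n_chunks * per_call_input      # whole document resent for every chunk
    output_tokens = n_chunks * context_tokens_out
    usd = input_tokens / 1e6 * usd_per_m_in + output_tokens / 1e6 * usd_per_m_out
    return {"chunks": n_chunks, "input_tokens": input_tokens,
            "output_tokens": output_tokens, "usd": round(usd, 2)}


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a real model call.
    return "From NEC Section 300.5 (Underground Installations), Table 300.5 (Minimum Cover Requirements)."


chunk = "The minimum cover depth shall be 24 inches for direct-buried conductors."
print(contextualize("<full NEC text>", chunk, fake_llm))

# Illustrative inputs: a 100k-token standard split into 300-token chunks.
estimate = contextualization_cost(doc_tokens=100_000, chunk_tokens=300, prompt_overhead=100,
                                  context_tokens_out=80, usd_per_m_in=0.10, usd_per_m_out=0.40,
                                  context_window=1_000_000)
print(estimate)
```

With these assumptions, roughly 33.5 million input tokens are consumed for a single document, and the 26,720 output tokens alone take about 107 s to generate at 250 tokens/s if calls run one after another, which is why throughput is listed alongside price.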

- First, text splitting and chunking are performed more deliberately, with explicit control over chunk size, overlap, and structural boundaries. This ensures that semantically cohesive units, such as full table entries, complete regulatory exceptions, or paragraph-level logical constructs, are preserved as atomic retrieval units. This refinement is critical for maintaining the integrity of context during downstream retrieval and reasoning.

- Second, instead of aggregating the top-K retrievals into a single prompt, each chunk is passed individually to a "Basic LLM Chain" node within N8N. This node is configured with a structured prompt (user message; Figure 5b) that guides the LLM in evaluating each chunk’s relevance and factual contribution to the original query. The design of this prompt is inspired by prompt templates published for contextual RAG applications, such as those by Anthropic [10]. The chain then filters or ranks the per-chunk responses before synthesizing a final answer.
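The first refinement can be illustrated with a structure-aware splitter that treats each paragraph and each whole table as an atomic block, then packs blocks into chunks without ever cutting one. This is a hypothetical sketch, not the N8N text-splitter configuration used in the workflow; it assumes Markdown-style tables whose lines start with `|`, and the table contents are placeholders.

```python
def split_blocks(text: str) -> list[str]:
    """Split text into atomic blocks: paragraphs, and whole tables (runs of '|' lines)."""
    blocks: list[str] = []
    current: list[str] = []
    in_table = False
    for line in text.splitlines():
        if not line.strip():  # a blank line ends the current block
            if current:
                blocks.append("\n".join(current))
                current = []
            in_table = False
            continue
        is_table_line = line.lstrip().startswith("|")
        if current and is_table_line != in_table:  # switching between prose and table
            blocks.append("\n".join(current))
            current = []
        current.append(line)
        in_table = is_table_line
    if current:
        blocks.append("\n".join(current))
    return blocks


def pack_blocks(blocks: list[str], max_chars: int) -> list[str]:
    """Greedily pack whole blocks into chunks; a block larger than max_chars stays whole."""
    chunks: list[str] = []
    current = ""
    for block in blocks:
        candidate = f"{current}\n\n{block}" if current else block
        if current and len(candidate) > max_chars:
            chunks.append(current)
            current = block
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


table = "\n".join(
    ["| Wiring method | Location | Minimum cover |", "|---|---|---|"]
    + [f"| method {i} | location {i} | value {i} |" for i in range(1, 9)]
)
text = "Paragraph before the table.\n\n" + table + "\n\nParagraph after the table."
chunks = pack_blocks(split_blocks(text), max_chars=120)
print(len(chunks), [table in c for c in chunks])
```

Keeping an oversized table whole trades the size limit for integrity; for tables too large for the embedding model, repeating the header row in each sub-chunk is a common alternative.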

- Complex or multi-layered prompts, where the query involves reasoning across multiple conditions or clauses.

- Cross-referenced rules, common in regulatory and engineering texts, where one section refers to definitions, exceptions, or constraints located in another part of the document.
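The second refinement, evaluating each retrieved chunk in its own LLM call before synthesis, targets exactly these multi-condition and cross-referenced queries. The loop below is a sketch under stated assumptions: `llm` is a hypothetical callable, and the 0 to 10 relevance scale, threshold, and prompt text are illustrative choices rather than the Figure 5b prompt. In N8N, the per-chunk calls are made by the Basic LLM Chain node rather than Python code.

```python
from typing import Callable

# Illustrative relevance prompt (not the prompt shown in Figure 5b).
RELEVANCE_PROMPT = (
    "Question: {question}\n\n"
    "Chunk:\n{chunk}\n\n"
    "On a scale of 0 to 10, how much does this chunk contribute facts needed to answer "
    "the question, including any conditions or exceptions? Reply with the number only."
)


def select_chunks(question: str, chunks: list[str], llm: Callable[[str], str],
                  threshold: float = 5.0, keep: int = 3) -> list[str]:
    """Score each chunk in its own LLM call, drop weak ones, and return the best `keep`."""
    scored: list[tuple[float, str]] = []
    for chunk in chunks:
        reply = llm(RELEVANCE_PROMPT.format(question=question, chunk=chunk))
        try:
            score = float(reply.strip())
        except ValueError:
            score = 0.0  # an unparseable reply is treated as irrelevant
        if score >= threshold:
            scored.append((score, chunk))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [chunk for _, chunk in scored[:keep]]


def answer(question: str, chunks: list[str], llm: Callable[[str], str]) -> str:
    """Synthesize the final answer from the selected chunks only."""
    context = "\n\n".join(select_chunks(question, chunks, llm))
    return llm(f"Using only the context below, answer the question.\n\n{context}\n\nQuestion: {question}")
```

Scoring chunks one at a time keeps each judgment small enough for the model to check every condition in a chunk, at the price of one extra LLM call per retrieved chunk.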

6. Advanced Implementation with Multi-Brain Knowledge Graph Based RAG

- Identification of structural gaps and blind spots: As a more visual and relational representation of knowledge, knowledge graphs can expose under-connected or isolated nodes that may indicate overlooked or weakly integrated concepts. These blind spots can be leveraged to generate novel insights by prompting new, contextually relevant connections between disparate ideas.

- Exploratory pathways for idea navigation: Knowledge graphs facilitate intuitive exploration of conceptual relationships. By tracing how a given node connects to others across the graph, users can uncover indirect yet meaningful pathways between ideas, supporting hypothesis generation, interdisciplinary discovery, or refinement of conceptual frameworks.

- Revealing nuance through concept removal: By algorithmically or manually removing dominant or highly connected nodes, knowledge graphs can surface latent structures and peripheral relationships. This approach highlights less obvious, contextually rich ideas that are often obscured by central concepts, enabling deeper interpretation and nuanced understanding of the information space.
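The three capabilities above map onto simple graph operations. The sketch below uses a small hypothetical concept graph (node names loosely echo the grid-resilience example in this paper) and plain Python rather than InfraNodus, whose own algorithms differ; node degree is used here as a simple proxy for how central a concept is.

```python
from collections import deque
from typing import Optional

# Hypothetical concept graph; edges are undirected links between concepts.
EDGES = [
    ("grid resilience", "fast trip settings"),
    ("grid resilience", "fire risk"),
    ("grid resilience", "outage forecasting"),
    ("fast trip settings", "fault isolation"),
    ("fire risk", "fault isolation"),
    ("outage forecasting", "extreme weather"),
]


def build_graph(edges: list[tuple[str, str]]) -> dict[str, set[str]]:
    graph: dict[str, set[str]] = {}
    for a, b in edges:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def peripheral_nodes(graph: dict[str, set[str]], max_degree: int = 1) -> list[str]:
    """Structural gaps: concepts with few connections."""
    return sorted(node for node, nbrs in graph.items() if len(nbrs) <= max_degree)


def shortest_path(graph: dict[str, set[str]], start: str, goal: str) -> Optional[list[str]]:
    """Exploratory pathways: breadth-first search for the shortest chain of concepts."""
    previous: dict[str, Optional[str]] = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            path = []
            while node is not None:
                path.append(node)
                node = previous[node]
            return path[::-1]
        for nbr in sorted(graph.get(node, set())):
            if nbr not in previous:
                previous[nbr] = node
                queue.append(nbr)
    return None


def remove_hub(graph: dict[str, set[str]]) -> tuple[str, dict[str, set[str]]]:
    """Concept removal: drop the highest-degree node to expose peripheral structure."""
    hub = max(graph, key=lambda node: len(graph[node]))
    pruned = {node: nbrs - {hub} for node, nbrs in graph.items() if node != hub}
    return hub, pruned


def components(graph: dict[str, set[str]]) -> list[list[str]]:
    """Connected components, each as a sorted list."""
    seen: set[str] = set()
    result: list[list[str]] = []
    for start in sorted(graph):
        if start in seen:
            continue
        component: set[str] = set()
        stack = [start]
        while stack:
            node = stack.pop()
            if node in component:
                continue
            component.add(node)
            stack.extend(graph[node] - component)
        seen |= component
        result.append(sorted(component))
    return result


graph = build_graph(EDGES)
print(peripheral_nodes(graph))
print(shortest_path(graph, "fast trip settings", "extreme weather"))
hub, pruned = remove_hub(graph)
print(hub, components(pruned))
```

Removing the hub "grid resilience" splits the graph into a weather-forecasting cluster and a protection cluster, the kind of latent grouping that the dominant concept otherwise hides.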

1. Higher API usage cost for knowledge graph generation: From an implementation standpoint, generating a knowledge graph from source material (e.g., PDFs or structured text) incurs significantly higher API consumption than traditional vector database ingestion. For instance, while basic text embedding may consume 1–2 tokens per word, creating a knowledge graph, particularly one that involves relation extraction, entity disambiguation, and ontology alignment, can require 5–10× more tokens per document. This increased cost reflects the deeper semantic parsing and relationship mapping inherent to graph construction.

2. Limitations with numerical tabular data: Knowledge graphs are not well suited to representing dense numerical tabular data, as they excel at modeling conceptual and relational structures rather than high-volume, matrix-like values. Hence, knowledge graphs are of limited use for table-rich content such as the NEC or other NFPA standards. In this workflow, it was observed that when numerical tables are forced into a graph format, granularity is lost and retrieval becomes inefficient: numeric precision, row-wise dependencies, and columnar statistics do not translate naturally into entity-relation triples, limiting effective graph-based inference in such contexts.

3. Expanded exploratory potential with multiple knowledge graphs: A key advantage of using multiple specialized knowledge graphs is the dynamic expansion of the knowledge space accessible to the AI agent. Depending on the prompt, the agent can selectively or concurrently traverse these graphs, uncovering deeper and more diverse interconnections. This multi-graph strategy enhances exploratory analysis, allowing users to follow conceptual pathways across different but related domains, uncover latent insights, and generate novel hypotheses grounded in a broader knowledge base.

4. Other observations: Some additional observations are listed below:

   - Traditional vector-based RAG loses structural information when chunking documents, whereas knowledge graph-based RAG preserves such hierarchies and relationships.

   - Knowledge graph-based RAG was better able to support complex reasoning across multiple facts, such as exceptions to certain engineering code sections or supplementary discussions of a particular subject within a research paper.
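To put the ingestion-cost ranges from the cost observation above (1–2 tokens per word for embedding, 5–10× more for graph construction) into numbers, the sketch below applies them to a hypothetical 50,000-word standard. It only multiplies the quoted ranges; actual consumption depends on the models and extraction prompts used.

```python
def ingestion_token_ranges(words: int,
                           embed_tokens_per_word: tuple[int, int] = (1, 2),
                           kg_multiplier: tuple[int, int] = (5, 10)) -> dict[str, tuple[int, int]]:
    """Low/high token estimates for vector ingestion versus knowledge-graph construction."""
    low = words * embed_tokens_per_word[0]
    high = words * embed_tokens_per_word[1]
    return {
        "vector_embedding": (low, high),
        "knowledge_graph": (low * kg_multiplier[0], high * kg_multiplier[1]),
    }


print(ingestion_token_ranges(50_000))
# {'vector_embedding': (50000, 100000), 'knowledge_graph': (250000, 1000000)}
```

At the upper end, a single mid-sized standard approaches a million tokens of graph-construction traffic, which matters when several specialized graphs (brains) are built and refreshed.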

7. Summary and Future Work

- Expanding support for multi-modal retrieval, such as integrating diagrams and structured tables into the RAG workflow,

- Developing more efficient chunking and indexing strategies to reduce token overhead,

- Enabling real-time collaboration and feedback loops between human experts and RAG systems, and

- Improving support for multilingual engineering documents.

Continued refinement of low-code frameworks and better benchmarking across diverse engineering datasets will further strengthen the practical utility of RAG-LLM systems in technical disciplines.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| IEEE | Institute of Electrical and Electronics Engineers |
| LLM | Large Language Model |
| NEC | National Electrical Code (also known as NFPA 70) |
| NFPA | National Fire Protection Association (US) |
| RAG | Retrieval-Augmented Generation |

References

- J. Li, X. Cheng, W. X. Zhao, J.-Y. Nie and J.-R. Wen, "HaluEval: A large-scale hallucination evaluation benchmark," in Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, 2023.

- D. Roustan and F. Bastardot, "The clinicians’ guide to large language models: A general perspective with a focus on hallucinations," Interactive Journal of Medical Research, 2025. [CrossRef]

- T. Zhang, L. Qiu, Q. Guo, C. Deng, Y. Zhang, Z. Zhang, C. Zhou, X. Wang and L. Fu, "Enhancing uncertainty-based hallucination detection with stronger focus," Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, pp. 915-932, 2023.

- M. Omar, V. Sorin, J. D. Collins, D. Reich, R. Freeman, N. Gavin, A. Charney, L. Stump, N. L. Bragazzi, G. N. Nadkarni and E. Klang, "Large language models are highly vulnerable to adversarial hallucination attacks in clinical decision support: A multi-model assurance analysis," MedRxiv, 2025.

- S. Gopi, D. Sreekanth and N. Dehboz, "Enhancing engineering education through LLM-driven adaptive quiz generation: A RAG-based approach," in IEEE Frontiers in Education Conference (FIE), Washington, DC, US, 2024.

- L. Siddharth and J. Luo, "Retrieval augmented generation using engineering design knowledge," Knowledge-Based Systems, vol. 303, 2024. [CrossRef]

- J. Superbi, H. Pereira, E. Santos, L. Lattari and B. Castro, "Enhancing large language model performance on ENEM math questions using retrieval-augmented generation," in Proceedings of the XVIII Brazilian e-Science Workshop (BreSci), 2024.

- Y. Chen, Q. Fu, Y. Yuan, Z. Wen, G. Fan, D. Liu et al., "Hallucination detection: robustly discerning reliable answers in large language models," in Proceedings of the 32nd ACM International Conference on Information and Knowledge Management, 2023.

- Hugging Face, "Open LLM Leaderboard," 2025. [Online]. Available: https://huggingface.co/spaces/open-llm-leaderboard/open_llm_leaderboard#/. [Accessed 2025].

- Anthropic, "Introducing Contextual Retrieval," 09 2024. [Online]. Available: https://www.anthropic.com/news/contextual-retrieval. [Accessed 06 2025].

- S. Ji, S. Pan, E. Cambria, P. Marttinen and P. S. Yu, "A survey on knowledge graphs: Representation, acquisition, and applications," IEEE Transactions on Neural Networks and Learning Systems, vol. 33, no. 2, pp. 494-514, 2022.

- C. Peng, F. Xia, M. Naseriparsa and F. Osborne, "Knowledge graphs: Opportunities and challenges," Artificial Intelligence Review, vol. 56, pp. 13071-13102, 2023. [CrossRef]

- S. Tiwari, F. N. Al-Aswadi and D. Gaurav, "Recent trends in knowledge graphs: theory and practice," Soft Computing, vol. 25, pp. 8337-8355, 2021. [CrossRef]

- K. Baclawski, E. S. Chan, D. Gawlick, A. Ghoneimy, K. Gross, Z. H. Liu and X. Zhang, "Framework for ontology-driven decision making," Applied Ontology, vol. 12, no. 3-4, 2017. [CrossRef]

- S. Ghosh and P. Suryawanshi, "Enhancing grid resiliency in high fire or flood risk areas: Integrating protective relay settings, broken conductor detection, and grid hardening for climate-induced event preparedness," Journal of The Institution of Engineers (India): Series B, vol. 106, pp. 393-405, 2024.

- S. Ghosh and S. Dutta, "A comprehensive forecasting, risk modelling and optimization framework for electric grid hardening and wildfire prevention in the US," International Journal of Energy Engineering, vol. 10, no. 3, pp. 80-89, 2020.

- Bose, S. Brown, B. Chalamala, D. Immerman, A. Khodaei, J. Liu, D. Novosel, A. Paaso, F. Rahmatian, J. R. Aguero and M. Vaiman, "Resilience framework, methods, and metrics for the electricity sector," IEEE Power & Energy Society (PES-TR83), 2020.

- F. Safdarian, J. L. Wert, D. Cyr and T. J. Overbye, "Power system resiliency and reliability issues from renewable resource droughts," in IEEE Kansas Power and Energy Conference (KPEC), Manhattan, Kansas, US, 2024.

- S. Bhattarai, A. Sapkota and R. Karki, "Analyzing investment strategies for power system resilience," in IEEE Power & Energy Society General Meeting (PESGM), Denver, Colorado, US, 2022.

- A. Tabassum, S. Lee, N. Bhusal and S. Chinthavali, "Power outage forecasting for system resiliency during extreme weather events," in IEEE International Conference on Big Data (BigData), Washington, DC, US, 2024.

- E. Ciapessoni, D. Cirio, A. Pitto, M. Van Harte and M. Panteli, "Power System Resilience: definition, features and properties," CIGRE Science and Engineering, vol. CSE030, 10 2023.

- R. Itotani, K. Sadahiro, M. Tokai, H. Hama, K. Sugino and M. Takeda, "Sustainable improvement on substation resilience and reliability by using eco-friendly equipment and remote maintenance systems," in CIGRE Paris Session, 2024.

- D. Paranyushkin, "InfraNodus: Discover What Everyone Else Is Missing," 2024. [Online]. Available: https://infranodus.com/. [Accessed 07 2025].

| Model | Average score across all benchmarks | IFEval† | GPQA‡ | CO₂ cost |
|---|---|---|---|---|
| Qwen2.5-test-32b-it | 47.37% | 78.89% | 15.21% | 29.54 kg |
| Horizon-AI-Avengers-V1-32B | 47.34% | 79.72% | 14.99% | 7.95 kg |
| FluentlyLM-Prinum | 47.22% | 80.90% | 18.23% | 21.25 kg |
| Qwen.5-14b-v1.0-e3 | 47.09% | 73.24% | 22.26% | 1.56 kg |
| Qwen2.5-32B-Instruct-abliterated-v2 | 46.89% | 83.34% | 15.66% | 13.49 kg |


Q. Prompt: I am trying to find the table in the NEC that deals with single phase AC motor and associated full load current.

A. Ground truth: Table 430.248 of the NEC deals with single phase AC motor and associated full load current.

A. Actual output: According to the National Electrical Code (NEC), the section dealing with single-phase AC motors and their associated full-load currents can be found in Article 430, which is titled “Motors, Motor Circuits, and Controllers.” Specifically, you may refer to Table 430.248 of Article 430 for information on the minimum full-load current required for a single-phase AC motor based on the nameplate rating of the motor.

Score: 0.9072185128712421

Reason: The actual output correctly identifies Table 430.248 as the relevant section for single-phase AC motors and their full-load currents, aligning with the expected output. It provides additional context by mentioning Article 430 and its title, which enhances factual accuracy and comprehensiveness. There are no contradictions or omissions, but the extra detail slightly exceeds the expected output's simplicity.

Q. Prompt: What is the minimum cover required per Section 300.5 of the NEC, for direct buried cables running under a parking lot based on table 300.5.

A. Ground truth: For direct buried cables running under a parking lot based on table 300.5, minimum cover required is 24 inches.

A. Actual output: According to Table 300.5 of the NEC, the minimum cover required for direct-buried cable installations running under a parking lot is 24 inches (609 mm) based on the values provided in the table. This value applies to the top surface of the finished grade, and other factors may also be considered when determining the appropriate cover for your specific installation.

Score: 0.859266659995407

Reason: The actual output correctly identifies the minimum cover required as 24 inches, aligning with the expected output. It provides additional context by mentioning the measurement in millimeters and noting that other factors may influence the cover, which, while not in the expected output, does not contradict it. There are no factual inaccuracies or critical omissions, but the extra details slightly deviate from the expected brevity.


Q. Prompt: In the NEC which section deals with minimum cover requirement for cables and conduits.

A. Ground truth: In the NEC, section 300.5 deals with minimum cover requirement for cables and conduits.

A. Actual output: According to my knowledge based on the context provided, the minimum cover requirements for cables and conduits can be found in Section 300.5 of the NEC. This section specifies the minimum cover requirements for direct-buried cable, conduit or other raceways. Additionally, you may find further information regarding mechanical protection for direct-buried cables in sections 840.48 and 830.47 of the NEC.

Score: 0.8009300906486064

Reason: The actual output correctly identifies Section 300.5 of the NEC as dealing with minimum cover requirements for cables and conduits, aligning with the expected output. It also provides additional information about related sections, which is not required but does not contradict the expected output. There are no factual inaccuracies or omissions regarding the primary question, but the extra details, while accurate, are not necessary for the specific query.

Q. Prompt: Is there any restriction in running conductors in parallel in the NEC.

A. Ground truth: NEC section 310.10(H) talks about conductors in parallel.

A. Actual output: Yes, according to Section 310.10(H) of the NEC, parallel conductors are permitted to be installed in raceways, multiconductor cables or direct-buried single conductor cables.

Score: 0.5432697039021899

Reason: The actual output correctly identifies NEC section 310.10(H) as relevant to conductors in parallel, aligning with the expected output. However, it introduces additional details about installation methods that are not present in the expected output, which could be seen as extraneous information. There are no factual inaccuracies or contradictions, but the extra details could be considered an omission of focus on the core expected content.


Q. Prompt: Based on section 310.10(H) of the NEC, if I have two parallel #8 AWG conductors, what is my restriction on overcurrent protection.

A. Ground truth: With two parallel #8 AWG conductors, the overcurrent protection should be such that the ampacity of individual conductors will not be exceeded if one or more of the parallel conductors become inadvertently disconnected.

A. Actual output: According to Section 310.10(H) of the NEC, parallel conductors are allowed in raceways, multiconductor cables or direct-buried single conductor cables if they meet certain conditions: The total current-carrying capacity of the parallel circuits shall not exceed the ampacity rating of the raceway or overhead circuit. Based on the given context, if you have two parallel #8 AWG conductors, it is important to ensure that the total current-carrying capacity of both circuits does not exceed the ampacity rating of the raceway or overhead circuit.

Score: 0.2610665811531422

Reason: The actual output fails to specifically mention the restriction on overcurrent protection related to the scenario where one or more parallel conductors become disconnected, which is a key aspect of the expected output.

Q. Prompt: I am trying to find the table in the NEC that deals with AC motors and associated current.

A. Ground truth: Tables 430.248 through 430.250 reflect the typical and most used 4-pole and 2-pole induction motors (AC) in use.

A. Actual output: According to the National Electrical Code (NEC), the section dealing with AC motors and their associated currents can be found in Article 440, which is titled “Motor Controllers.” Specifically, you may refer to Table 440.5 of Article 440 for information on the minimum full-load current required for a single-phase or three-phase AC motor based on the nameplate rating of the motor.

Score: 0.20462199541570797

Reason: The actual output incorrectly identifies Article 440 and Table 440.5 as the relevant section for AC motors, while the expected output specifies Tables 430.248 through 430.250. This indicates a factual inaccuracy and a significant contradiction. Additionally, the actual output fails to address the specific tables mentioned in the expected output, leading to an omission of required information.

| Embedding | Hit rate (no reranking, baseline) | MRR (no reranking, baseline) | Hit rate (bge-reranker-base) | MRR (bge-reranker-base) | Hit rate (Cohere V3.5 reranker) | MRR (Cohere V3.5 reranker) |
|---|---|---|---|---|---|---|
| OpenAI | 0.828 | 0.692 | 0.899 | 0.822 | 0.932 | 0.877 |
| Voyage | 0.766 | 0.650 | 0.818 | 0.777 | 0.891 | 0.830 |
| Google-PaLM | 0.815 | 0.676 | 0.892 | 0.752 | 0.916 | 0.865 |

| RAG strategy | Avg. tokens per chunk (or chunk + context) | Retrieval fidelity | Embedding cost |
|---|---|---|---|
| Traditional RAG | ~250–350 tokens | Medium | Low |
| Contextual RAG | ~600–1,000 tokens | High | Higher (unless a model with a low per-million-token price, such as Gemini 2.5 Flash, is used) |

Q&A responses and scores with traditional RAG-LLM versus contextual RAG-LLM:

Q. Prompt: Based on the NEC, for solar photovoltaic (PV) system application, what is the correction factor that needs to be applied to PV cables for an ambient temperature of 55 degree C, and the temperature rating of the conductor being 75 degree C rated.

A. Ground truth: According to NEC Table 690.31(A), the applicable correction factor is 0.67.

A. Actual output from traditional RAG: According to the National Electrical Code (NEC) 2017, specifically Table 310.15(B)(2)(a) (renumbered in later versions as Table 310.15(B)(1) or Table 310.15(B)(1) Adjustment Factors), the required correction factor is 0.70 for 75°C rated PV conductors in 55°C ambient conditions.

Score: 0.1981536822161493

Reason: The actual output provides a correction factor of 0.70 based on a different NEC table than the expected output, which specifies a correction factor of 0.67 from Table 690.31(A). This indicates a factual inaccuracy and a contradiction with the expected output. Additionally, the actual output omits the specific reference to Table 690.31(A), which is essential information from the expected output.

Q. Prompt: Based on the NEC, for solar photovoltaic (PV) system application, what is the correction factor that needs to be applied to PV cables for an ambient temperature of 55 degree C, and the temperature rating of the conductor being 75 degree C rated.

A. Ground truth: According to NEC Table 690.31(A), the applicable correction factor is 0.67.

A. Actual output from contextual RAG: According to NEC Table 690.31(A), for a 75 °C rated conductor in an ambient temperature of 51–55 °C, the applicable correction factor is 0.67.

Score: 0.8918686352485045

Reason: The actual output is factually accurate and provides the correct correction factor of 0.67, as expected. It fully addresses the expected output by specifying the NEC table and the temperature range, which adds clarity. There are no contradictions, and the output aligns well with the context and requirements of the input. The only minor shortcoming is the slightly more detailed explanation than necessary, but it does not detract from the correctness.

Score improved over traditional RAG implementation.


Q. Prompt: Based on NEC section 310, what is the adjustment factor for 4 current carrying conductor?

A. Ground Truth: According to NEC Table 310.15(B)(3)(a), the applicable correction factor is 80%.

A. Actual Output from traditional RAG: According to Section 310.15(3)(a) of the National Electrical Code (NEC), the adjustment factors for four current-carrying conductors are 1% and 2%. These factors are used to adjust the load diversity of the installation.

Score: 0.08819886431169416

Reason: The Actual Output incorrectly identifies the adjustment factors as 1% and 2%, which does not match the Expected Output's 80% correction factor. It also references the wrong section, 310.15(3)(a), instead of Table 310.15(B)(3)(a). This demonstrates factual inaccuracies and discrepancies with the Expected Output.

Q. Prompt: Based on NEC section 310, what is the adjustment factor for 4 current carrying conductor?

A. Ground Truth: According to NEC Table 310.15(B)(3)(a), the applicable correction factor is 80%.

A. Actual Output from contextual RAG: According to the National Electrical Code (NEC) 2017, specifically Table 310.15(B)(3)(a), the applicable correction factor as adjusted for ambient temperature if necessary is 80%.

Score: 0.7562603783294554

Reason: The actual output correctly identifies the adjustment factor as 80% according to NEC Table 310.15(B)(3)(a), aligning with the expected output. However, it introduces additional information about ambient temperature adjustment, which is not required by the input or expected output, slightly deviating from the expected response.

Score improved over traditional RAG implementation.


Q. Prompt: Based on section 310.10(H) of the NEC, if I have two parallel #8 AWG conductors, what is my restriction on overcurrent protection.

A. Ground truth: With two parallel #8 AWG conductors, the overcurrent protection should be such that the ampacity of individual conductors will not be exceeded if one or more of the parallel conductors become inadvertently disconnected.

A. Actual Output from traditional RAG: According to Section 310.10(H) of the NEC, parallel conductors are allowed in raceways, multiconductor cables or direct-buried single conductor cables if they meet certain conditions: The total current-carrying capacity of the parallel circuits shall not exceed the ampacity rating of the raceway or overhead circuit. If the ungrounded service-entrance conductors are installed in parallel, they must be sized to match the ungrounded service-entrance conductor ampacity and rated voltage. Based on the given context, if you have two parallel #8 AWG conductors, it is important to ensure that the total current-carrying capacity of both circuits does not exceed the ampacity rating of the raceway or overhead circuit.

Score: 0.2610665811531422

Reason: The actual output fails to specifically mention the restriction on overcurrent protection related to the scenario where one or more parallel conductors become disconnected, which is a key aspect of the expected output.

Q. Prompt: Based on section 310.10(H) of the NEC, if I have two parallel #8 AWG conductors, what is my restriction on overcurrent protection. A. Ground truth: With two parallel #8 AWG conductors, the overcurrent protection should be such that the ampacity of individual conductors will not be exceeded if one or more of the parallel conductors become inadvertently disconnected. A. Actual Output from contextual RAG: According to NEC 2017, Section 310.10(H), for two parallel #8 AWG conductors, each conductor must be protected so that no individual conductor exceeds its ampacity. Score: 0.8042020327293316 Reason: The actual output aligns well with the expected output by stating that each conductor must be protected to not exceed its ampacity, which is consistent with the requirement that the ampacity of individual conductors should not be exceeded. Score improved over traditional RAG implementation. |
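The ground truths for the two NEC prompts above come down to two simple rules, and a short worked check shows why the expected answers take the form they do. The sketch below is illustrative only. The adjustment bands are transcribed from the 2017 edition of Table 310.15(B)(3)(a) and should be verified against the edition in force. The function names are hypothetical helpers, not part of the authors' pipeline. The parallel-conductor check encodes only the restriction as it is paraphrased in the ground truth for Section 310.10(H).

```python
# Illustrative sketch; not the authors' code. Bands transcribed from
# NEC 2017 Table 310.15(B)(3)(a); verify against the edition in force.
# Each tuple is (max number of current-carrying conductors, percent of ampacity).
_ADJUSTMENT_BANDS = [
    (3, 100),   # three or fewer: table does not apply, no adjustment
    (6, 80),    # 4-6 conductors
    (9, 70),    # 7-9 conductors
    (20, 50),   # 10-20 conductors
    (30, 45),   # 21-30 conductors
    (40, 40),   # 31-40 conductors
]


def adjustment_factor(current_carrying: int) -> float:
    """Return the adjustment factor for a count of current-carrying conductors."""
    if current_carrying < 1:
        raise ValueError("need at least one current-carrying conductor")
    for upper, percent in _ADJUSTMENT_BANDS:
        if current_carrying <= upper:
            return percent / 100
    return 0.35  # 41 and above


def adjusted_ampacity(base_ampacity_a: float, current_carrying: int) -> float:
    """Apply the adjustment factor to a (hypothetical) base ampacity in amperes."""
    return base_ampacity_a * adjustment_factor(current_carrying)


def parallel_ocpd_ok(ocpd_rating_a: float, single_conductor_ampacity_a: float) -> bool:
    """Check the parallel-conductor restriction as paraphrased in the ground truth.

    If paralleled conductors can become disconnected, the worst case leaves a
    single conductor carrying everything the overcurrent device lets through,
    so the device rating must not exceed one conductor's ampacity.
    """
    return ocpd_rating_a <= single_conductor_ampacity_a
```

For example, `adjustment_factor(4)` returns 0.8, which matches the 80% ground truth. A conductor with a hypothetical 50 A base ampacity would therefore be derated to 40 A. With two paralleled conductors of that same hypothetical 50 A ampacity, an 80 A device fails `parallel_ocpd_ok`, even though the pair nominally carries 100 A. This is the restriction that the traditional RAG output missed.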

| Prompt into knowledge graph-based RAG | Brains which the AI agent consulted | Response from the knowledge graph-based RAG agent |
|---|---|---|
| Q. Prompt: Referring to the IEEE technical reference documents and the author's own research, can you summarize very briefly how fast trip relay settings can help in high fire threat areas. | Brain 1 and Brain 2 (see Figure 6a) | A. Actual Output from knowledge graph-based RAG agent: Fast trip relay settings contribute significantly to enhancing grid resiliency in high fire threat areas. According to both IEEE documents and research on grid resilience: Rapid fault detection and isolation: Fast trip relay settings quickly identify and isolate faults along power lines, which is crucial in preventing small issues from escalating into larger problems that could ignite wildfires. Proactive risk mitigation: By setting sensitive thresholds for detecting faults, relays act as a proactive defense mechanism. They mitigate the risk of electrical faults that could lead to fire ignition, thereby aligning with a risk mitigation strategy that addresses specific hazards prevalent in high fire risk zones. System reliability: This approach not only prevents potential fires but also enhances the overall system's reliability and safety, maintaining electric continuity and preventing outages that could compound firefighting efforts. Integrating such relay settings reflects a focused effort on prevention and protection, key aspects of a resilient grid especially in regions vulnerable to wildfires. |
| Q. Prompt: Referring CIGRE documents and the author's own research, can you list briefly what are the be benefits of SF6 alternative circuit breaker. | Brain 1 and Brain 3 (see Figure 6a) | A. Actual Output from knowledge graph-based RAG agent: The benefits of using SF6 alternative circuit breakers, specifically those using vacuum and synthetic air, include: Environmental sustainability: SF6 alternative circuit breakers, such as those using vacuum technology and synthetic air, significantly reduce environmental impact by eliminating greenhouse gas emissions associated with SF6, which has a high Global Warming Potential (GWP). Improved safety: These alternatives enhance safety by simplifying the gas recovery and filling processes, and they avoid the release of harmful gases into the environment. Cost-effective maintenance: The vacuum technology extends maintenance cycles, reducing overall costs and increasing operational efficiency, as noted in innovative applications that align with sustainable development goals. |
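The table implies a routing step in which the agent decides which brains to query for a given prompt. The sketch below illustrates that step with a deliberately simplified keyword router, which is a stand-in and not the authors' method. The agent described in the manuscript presumably leaves this decision to the LLM. The brain-to-source mapping is an assumption inferred from the two rows above and is not stated in this section: Brain 1 holds the author's own research, Brain 2 the IEEE documents, and Brain 3 the CIGRE documents.

```python
# Hypothetical keyword router; a simplified stand-in for the agent's own
# (LLM-driven) brain selection. The mapping below is an assumption inferred
# from the table rows, not the paper's configuration.
BRAIN_KEYWORDS: dict[str, tuple[str, ...]] = {
    "Brain 1": ("author's own research",),  # assumed: author's research
    "Brain 2": ("ieee",),                   # assumed: IEEE reference documents
    "Brain 3": ("cigre",),                  # assumed: CIGRE documents
}


def select_brains(prompt: str) -> list[str]:
    """Return, in mapping order, the brains whose keywords occur in the prompt."""
    # Lower-case and normalize curly apostrophes so "author’s" also matches.
    text = prompt.lower().replace("\u2019", "'")
    return [
        brain
        for brain, keywords in BRAIN_KEYWORDS.items()
        if any(keyword in text for keyword in keywords)
    ]
```

Applied to the two prompts in the table, this returns `["Brain 1", "Brain 2"]` and `["Brain 1", "Brain 3"]`, which match the brains the agent consulted. A prompt that names no source returns an empty list, and a real agent would need a fallback for that case.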

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).