1. Introduction

With the rapid development of autonomous driving technology, on-board sensors, as the core components for environmental perception in vehicles, directly determine the safety and intelligence of autonomous driving systems. As the technology evolves toward higher levels of autonomy, enhancing environmental perception has become a critical pathway to overcoming safety bottlenecks in complex scenarios. Among the various sensors, millimeter-wave radar stands out as a pivotal technology for autonomous driving owing to its all-weather operation, high-precision detection, and cost-effectiveness [1].

Compared to other sensors, millimeter-wave radar exhibits significant advantages:

Cost Advantage: Millimeter-wave radar is more cost-effective than LiDAR, making it better suited for mass deployment in automotive and industrial applications.

Environmental Adaptability: Unlike cameras, millimeter-wave radar operates independently of ambient light and maintains stable performance in complex weather conditions (e.g., fog, rain, snow).

High Reliability: With robust anti-interference capability, millimeter-wave radar ensures consistent functionality even in electromagnetically noisy environments, such as urban areas with dense wireless signals.

Traditional 3D millimeter-wave radars (which measure range, azimuth angle, and velocity) often fail to distinguish objects because they lack target height information [2]. For example, they struggle to differentiate between manhole covers on the ground and road signs overhead, or between stationary soda cans and elevated bridge structures, which has contributed to typical accidents such as the 2016 Tesla-truck collision. Upgrading from 3D to 4D millimeter-wave radar adds the capability to measure target height (elevation angle), enabling four-dimensional perception of targets: range, azimuth angle, velocity, and height. Target height information is critical for intelligent driving vehicles to determine drivable areas. For instance, 4D millimeter-wave radars can detect overpasses, road signs, and ground obstacles (such as soda cans and manhole covers) ahead, helping vehicles avoid potential hazards. This significantly enhances the ability to parse the spatial contours of obstacles, making 4D radar a key direction for upgrading intelligent driving sensor architectures [3,4].

In-vehicle radar systems are highly cost-sensitive, which makes single-chip solutions a research focus because of their superior cost-performance ratio. Traditional multi-chip cascaded solutions offer robust performance, but their higher costs hinder large-scale adoption. Directly upgrading a single-chip 3D radar to 4D makes optimal imaging performance difficult to achieve, yet it still provides elevation data for detected objects, allowing early prediction of potential hazards such as overpasses and traffic signs along the road. Through technological innovation and cost optimization, single-chip 4D millimeter-wave radar is poised to play an increasingly vital role in intelligent driving systems [5,6,7].

The main contributions of this work can be summarized as follows:

- (1) Single-Chip Design: We propose a design scheme for a single-chip 4D millimeter-wave radar that realizes a low-cost, high-performance 4D radar system through a highly integrated hardware platform and an optimized sparse MIMO antenna array. We developed high-gain, low-sidelobe antenna arrays to enhance detection capability and anti-interference performance. This design overcomes the cost constraints of traditional multi-chip cascaded solutions, offering potential for large-scale commercial application.

- (2) Rain Clutter Identification: We propose a rain clutter identification method based on the distribution characteristics of raindrops. By statistically analyzing the velocity and distance distributions of raindrops, the method distinguishes raindrops from real targets, reducing the interference of rain clutter on radar detection.

- (3) Noise-Point Suppression: We propose a noise-suppression method based on the amplitude differences between angular-FFT peaks. By thresholding these differences, the approach suppresses noise-point interference while preserving true targets, enhancing the stability of radar target detection in complex environments.

The structure of this paper is as follows:

Section 2 presents the system hardware design, including antenna radiation patterns.

Section 3 details the signal processing flow with angle accuracy analysis, along with rain-clutter suppression and noise-point suppression methods.

Section 4 provides extensive field test results validating system effectiveness.

Section 5 discusses implementation approaches for 4D radar and future development trends.

Section 6 concludes the paper.

2. Radar System Hardware Platform and Beam Design

The radar connects to the vehicle body gateway via the public CAN bus to acquire information such as vehicle speed, gear position, turn signals, door status, yaw rate, and steering wheel angle. It also uploads target information, dangerous-target warnings, and diagnostic fault information to the vehicle body CAN network. The overall dimensions of the hardware are 109 mm × 67 mm × 34.7 mm (including the connector), and it weighs approximately 86 g.

The hardware circuit section mainly consists of the following components:

- (1) RF & Signal Processing Circuit: processes radio-frequency (millimeter-wave radar) signals, performs analog-to-digital conversion (ADC), and implements baseband signal processing algorithms (e.g., FFT, CFAR, and target detection).

- (2) Power Management Circuit: converts vehicle battery power (12 V/24 V) to stable voltages (e.g., 3.3 V, 1.8 V) for RF chips, microcontrollers (MCUs), and sensors. It includes surge protection, EMI filtering, and low-power sleep modes.

- (3) CAN Transceiver Circuit: bridges the digital logic of the radar MCU to the vehicle's CAN bus (ISO 11898-2), converting between TTL/CMOS logic levels and differential CAN bus signals for data transmission (e.g., target information, danger warnings) and reception (e.g., vehicle speed, steering angle).

- (4) Peripheral Storage Circuit: stores configuration parameters (e.g., radar calibration data), diagnostic trouble codes (DTCs), and temporary target logs. It typically uses EEPROM (for non-volatile storage) or SPI Flash (for large data buffers).

Specifically, the RF circuit employs the AWR2944 chip, which is built with TI's low-power 45 nm RFCMOS process and enables a high level of integration in a small form factor with a minimal bill of materials (BOM). The chip integrates essential RF components, including a PLL, VCO, mixer, and ADC, and supports multi-channel antenna interfaces. Operating in the 76–81 GHz band with over 4 GHz of contiguous bandwidth, it features a 4-transmit (TX), 4-receive (RX) channel architecture that facilitates high-resolution MIMO (Multiple-Input Multiple-Output) signal acquisition. For signal processing, the system uses a 360 MHz C66x digital signal processor combined with the HWA 2.1 radar hardware accelerator, which speeds up real-time execution of key algorithms such as the FFT (Fast Fourier Transform), CFAR (Constant False Alarm Rate) detection, log-magnitude computation, and memory compression for accurate target detection and tracking in automotive environments. This highly integrated design ensures a compact form factor and robust performance for advanced driver assistance systems (ADAS).

The radar antenna employs a MIMO architecture to minimize system volume, featuring a comb-shaped radiator for compact integration. To enhance the comb antenna’s gain, we optimized the geometry of individual radiating elements:

Structural Optimization: Specialized shape and dimension tuning increased the effective radiation area by 28% (via HFSS simulation), boosting radiation efficiency by 15% compared to conventional comb designs.

Current Distribution Control: Adaptive weighting of the current amplitude and phase across the elements suppressed the sidelobe level to −15 dB (vs. −12 dB for unoptimized arrays).

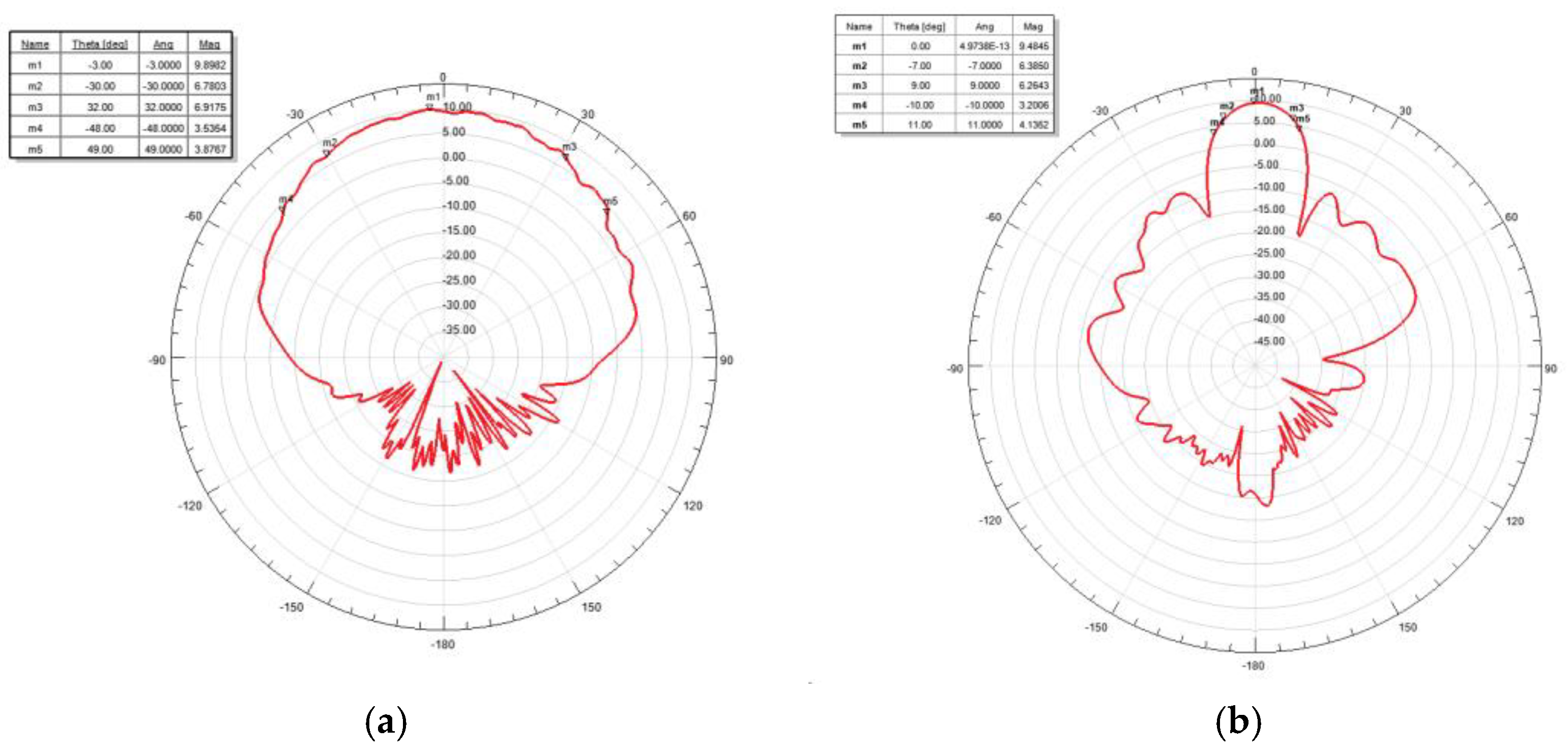

As shown in Figure 1, the optimized antenna achieves a 60° 3 dB beamwidth in the horizontal direction for wide angular coverage and simultaneous multi-target detection, along with a 16° 3 dB beamwidth and a 20° 6 dB beamwidth in the elevation direction, enabling accurate height estimation of overhead structures such as overpasses.

3. Signal Processing

3.1. FMCW Radar Signal Processing and Angle Accuracy

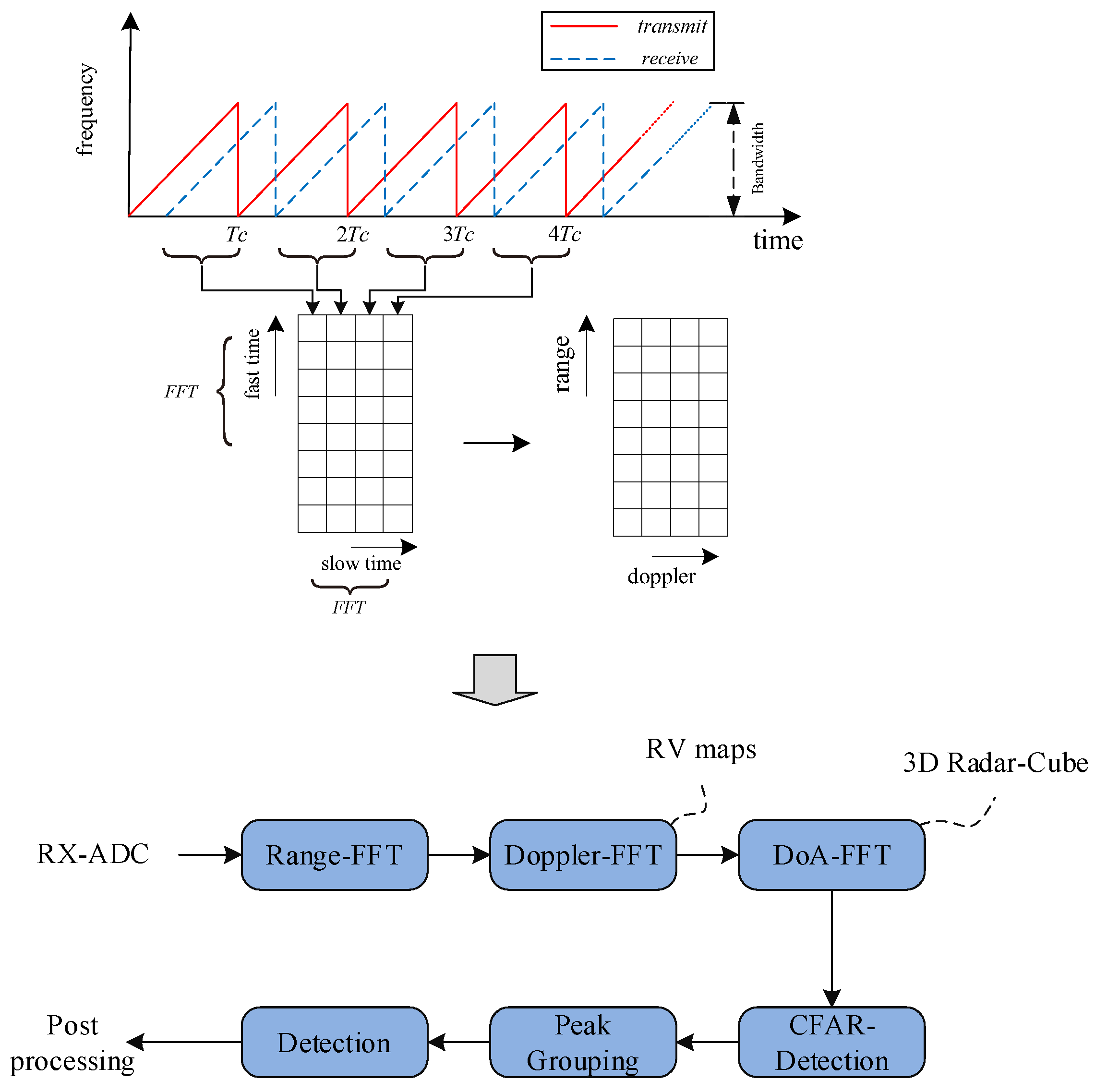

FMCW is a widely used waveform for automotive radar and other applications; the fast-chirp ramp sequence is shown in Figure 2 [8]. Range and velocity are obtained with a 2D DFT, performed first over each individual chirp to obtain range information and then across chirps to obtain velocity information. By further computing angle information from the antenna configuration used to receive the chirp sequence, 3D target data consisting of range, velocity, and angle can be constructed [9].

The Frequency-Modulated Continuous Wave (FMCW) waveform [10], also known as a chirp, is a complex sinusoidal signal whose frequency increases linearly with time, expressed as [11]:

$$s(t) = \exp\left[j2\pi\left(f_c t + \frac{B}{2T_c}t^2\right)\right], \quad 0 \le t \le T_c$$

where $B$ is the bandwidth, $T_c$ is the chirp period, and $f_c$ is the carrier frequency. The received signal is mixed with the transmitted chirp to generate a complex sinusoidal beat signal whose frequency is $f_b = f_R + f_D$, where $f_R$ is the range frequency and $f_D$ is the Doppler frequency. The beat signal is passed through a low-pass filter (LPF) in the RF domain to remove out-of-band interference and is then digitized. Applying a Fast Fourier Transform (FFT) to the beat signal sampled along fast time (within a single chirp) extracts $f_R$. Based on $f_R$, the target range $R$ is calculated as:

$$R = \frac{c\,f_R\,T_c}{2B}$$

where $c$ is the speed of light.

To obtain the Doppler velocity of a target, a second FFT is performed along the slow-time dimension (across consecutive chirps), where the range frequency $f_R$ remains constant within the same range bin [12].
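The two-stage FFT described above can be illustrated with a minimal NumPy sketch. It simulates the ideal beat signal of a single point target, then recovers range from the fast-time FFT via $R = c f_R T_c/(2B)$ and velocity from the slow-time FFT via $v = f_D\lambda/2$. All chirp parameters (77 GHz carrier, 1 GHz bandwidth, 50 µs chirps, 256 × 128 samples) and the function names are hypothetical, chosen only for illustration; the sketch ignores noise, windowing, CFAR, and MIMO transmission.

```python
import numpy as np

# Illustrative chirp parameters (assumptions, not the paper's radar configuration)
C = 3e8          # speed of light, m/s
FC = 77e9        # carrier frequency f_c, Hz
B = 1e9          # swept bandwidth B, Hz
TC = 50e-6       # chirp period T_c, s
NS = 256         # fast-time samples per chirp
NC = 128         # chirps per frame (slow time)
FS = NS / TC     # complex sampling rate so that NS samples span one chirp
LAM = C / FC     # wavelength


def simulate_beat(r, v):
    """Ideal complex beat signal of one point target, shape (NC, NS)."""
    f_r = 2 * (B / TC) * r / C          # range (beat) frequency
    f_d = 2 * v / LAM                   # Doppler frequency
    t_fast = np.arange(NS) / FS         # sample times within a chirp
    t_slow = np.arange(NC) * TC         # chirp start times
    # Range term varies along fast time, Doppler term along slow time
    return np.exp(1j * 2 * np.pi * (f_r * t_fast[None, :] + f_d * t_slow[:, None]))


def range_doppler_map(beat):
    """First FFT over fast time (range), second FFT over slow time (Doppler)."""
    range_fft = np.fft.fft(beat, axis=1)
    doppler_fft = np.fft.fft(range_fft, axis=0)
    return np.abs(np.fft.fftshift(doppler_fft, axes=0))   # zero Doppler at row NC // 2


def estimate_range_velocity(beat):
    """Return (range in m, radial velocity in m/s) of the strongest range-Doppler cell."""
    rd = range_doppler_map(beat)
    d_bin, r_bin = np.unravel_index(np.argmax(rd), rd.shape)
    f_r = r_bin * FS / NS                      # frequency of the range bin
    r = C * f_r * TC / (2 * B)                 # R = c f_R T_c / (2B)
    f_d = (d_bin - NC // 2) / (NC * TC)        # frequency of the Doppler bin
    return r, f_d * LAM / 2                    # v = f_D * lambda / 2
```

For example, `estimate_range_velocity(simulate_beat(15.0, 3.0))` returns a range of 15.0 m and a velocity of about 3.04 m/s; the velocity is quantized to the Doppler bin spacing of roughly 0.30 m/s for these parameters.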

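The Pulse Repetition Frequency (PRF), the reciprocal of the chirp repetition interval, is denoted as $f_{PRF}$. To avoid Doppler ambiguity, the Doppler frequency $f_D = 2v/\lambda$ (where $v$ is the radial velocity and $\lambda$ the wavelength) must satisfy $|f_D| < f_{PRF}/2$, i.e., the maximum unambiguous radial velocity is

$$v_{max} = \frac{\lambda f_{PRF}}{4}$$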
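The ambiguity limit is simple arithmetic. The sketch below evaluates $v_{max} = \lambda f_{PRF}/4$ for a hypothetical 77 GHz radar with one chirp every 50 µs ($f_{PRF}$ = 20 kHz); these parameter values and the function names are assumptions for illustration, not the configuration of the radar in this paper.

```python
C = 3e8  # speed of light, m/s


def doppler_frequency(v_mps, fc_hz):
    """Doppler frequency f_D = 2 v / lambda for radial velocity v."""
    return 2 * v_mps * fc_hz / C


def max_unambiguous_velocity(fc_hz, prf_hz):
    """Largest |v| with |f_D| < f_PRF / 2, i.e. v_max = lambda * f_PRF / 4."""
    wavelength = C / fc_hz
    return wavelength * prf_hz / 4


# Hypothetical example: 77 GHz carrier, one chirp every 50 us (f_PRF = 20 kHz)
v_max = max_unambiguous_velocity(77e9, 20e3)
print(f"v_max = {v_max:.2f} m/s")   # prints "v_max = 19.48 m/s"
```

At exactly $v_{max}$ the Doppler frequency equals $f_{PRF}/2$ (10 kHz here); faster targets wrap around in the Doppler FFT.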

The transmit antennas emit mutually orthogonal waveforms. For a system with $M$ uniformly spaced transmit antennas and $N$ uniformly spaced receive antennas, a virtual array with $MN$ elements can be synthesized [13]. The array response can be expressed as:

$$\mathbf{y} = \mathbf{A}\mathbf{s} + \mathbf{n}$$

where $\mathbf{A} = [\mathbf{a}(\theta_1), \ldots, \mathbf{a}(\theta_K)]$ is the steering matrix of the virtual array for $K$ targets, $\mathbf{n}$ represents the noise term, and $s_k$, the k-th entry of $\mathbf{s}$, denotes the reflection coefficient of the k-th target. Angle estimation can be performed by applying an FFT to the snapshots of the array elements. When non-uniform or sparse linear arrays are combined with MIMO radar technology, the key challenges lie in selecting the array element positions and in using the sparse virtual array for angle measurement [14].
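To make the virtual-array model concrete, the following NumPy sketch forms the virtual element positions as sums of transmit and receive positions (giving $M \times N$ elements) and evaluates $\mathbf{y} = \mathbf{A}\mathbf{s} + \mathbf{n}$ for point targets. The antenna positions (in units of λ/2), the one-dimensional geometry, and all function names are hypothetical; the paper's actual sparse layout is not reproduced here.

```python
import numpy as np


def virtual_positions(tx_pos, rx_pos):
    """Virtual element positions: each TX/RX pair behaves like one element at tx + rx."""
    tx = np.asarray(tx_pos, dtype=float)
    rx = np.asarray(rx_pos, dtype=float)
    return (tx[:, None] + rx[None, :]).ravel()        # M * N positions


def steering_matrix(pos_half_wl, angles_rad):
    """A: column k is exp(j*pi*x*sin(theta_k)), with x in units of lambda/2."""
    x = np.asarray(pos_half_wl, dtype=float)[:, None]
    s = np.sin(np.asarray(angles_rad, dtype=float))[None, :]
    return np.exp(1j * np.pi * x * s)


def array_response(pos_half_wl, angles_rad, coeffs, noise_std=0.0, rng=None):
    """y = A s + n for K point targets with reflection coefficients s."""
    y = steering_matrix(pos_half_wl, angles_rad) @ np.asarray(coeffs, dtype=complex)
    if noise_std > 0:
        rng = np.random.default_rng() if rng is None else rng
        noise = rng.standard_normal(y.shape) + 1j * rng.standard_normal(y.shape)
        y = y + noise_std / np.sqrt(2) * noise        # complex white Gaussian noise
    return y


# Hypothetical layout: 2 TX spaced 4 * (lambda/2) apart, 4 RX spaced lambda/2 apart
pos = virtual_positions([0, 4], [0, 1, 2, 3])
print(pos)   # [0. 1. 2. 3. 4. 5. 6. 7.]: a filled 8-element virtual ULA
```

Choosing the TX spacing equal to the RX aperture is what makes the virtual array gap-free in this toy layout; a sparse design deliberately trades such gaps for a larger aperture.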

Let $\theta$ and $\varphi$ denote the elevation and azimuth angles of a target, respectively. As in Doppler processing, the frequency variation between different receiving antennas can be neglected, which allows the target angle to be calculated from the phase difference between antennas.

The phase difference between adjacent elements can be expressed as follows [15]:

$$\Delta\psi = \frac{2\pi d \sin\varphi \cos\theta}{\lambda}$$

where $d$ represents the spacing between array elements and $\lambda$ indicates the wavelength.

The spacing $d$ is set to $\lambda/2$, so the phase step is $\omega_x = \pi\sin\varphi\cos\theta$. Based on the positions of the virtual array antennas, the signals on the 9 horizontal virtual array elements can be expressed as:

$$x_n = S\,e^{jn\omega_x}, \quad n = 0, 1, \ldots, 8$$

where $S$ represents the signal after the 2D-FFT at the same range and velocity bin. Performing an FFT along the horizontal antenna dimension yields

$$F(\omega) = \sum_{n=0}^{8} x_n\, e^{-jn\omega}$$

where $F(\omega)$ is the angle spectrum, which resembles a sinc function. The angle is then derived from the peak of the spectrum, which occurs at $\omega = \omega_x$.
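The azimuth step can be sketched as a zero-padded FFT over one horizontal snapshot, assuming nine uniformly spaced λ/2 elements and the phase model $\omega_x = \pi\sin\varphi\cos\theta$. The FFT size of 256 and the function name are illustrative choices, not taken from the paper; a sparse layout would first need its samples placed at their true positions.

```python
import numpy as np


def azimuth_from_fft(snapshot, n_fft=256, elevation_rad=0.0):
    """Azimuth (rad) of the strongest target in one horizontal virtual-array snapshot.

    Assumes uniformly spaced elements at lambda/2, so the phase step is
    omega_x = pi * sin(azimuth) * cos(elevation).
    """
    spectrum = np.fft.fftshift(np.fft.fft(snapshot, n_fft))   # zero-padded angle FFT
    k = int(np.argmax(np.abs(spectrum))) - n_fft // 2         # signed peak bin
    omega_x = 2 * np.pi * k / n_fft                           # estimated phase step
    s = omega_x / (np.pi * np.cos(elevation_rad))
    return float(np.arcsin(np.clip(s, -1.0, 1.0)))


n = np.arange(9)
x = np.exp(1j * n * np.pi * np.sin(np.deg2rad(20.0)))   # x_n = S e^{j n omega_x}, S = 1
print(round(np.rad2deg(azimuth_from_fft(x)), 1))          # 20.1 (bin-quantized)
```

The residual error comes from the FFT grid; a larger `n_fft` or peak interpolation would reduce it.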

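In the synthesized virtual array, the elevation subarray consists of the column containing the most elements in the elevation direction plus one additional element, giving four elements in total.

The horizontal offsets of the three elements in that column, relative to the first element used for azimuth calculation, are $p_i d$ ($i = 1, 2, 3$), and the offset of the added element is $p_4 d$. Consequently, the signals received by the four elements can be represented as:

$$x_i = S\,e^{j(p_i\omega_x + q_i\omega_z)}, \quad i = 1, \ldots, 4$$

where $S$ is the 2D-FFT output of the reference element, $\omega_x$ is the horizontal phase step obtained in azimuth estimation, $p_i$ represents the multiple of that phase which must be compensated in the azimuth direction, $\omega_z$ represents the phase difference in the vertical direction, and $q_i$ represents the position of the vertical array element. For half-wavelength vertical spacing, $\omega_z = \pi\sin\theta$, so once the azimuth term $p_i\omega_x$ is removed, the elevation follows from the phase progression across $q_i$.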
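A possible sketch of the elevation step, assuming the four-element model $x_i = S\,e^{j(p_i\omega_x + q_i\omega_z)}$ with $\omega_z = \pi\sin\theta$: the azimuth phase $p_i\omega_x$ is removed using the azimuth estimate, and a zero-padded FFT over the vertical positions $q_i$ locates $\omega_z$. The offsets `p` and `q` (integers in units of λ/2, with distinct non-negative `q`) and the example layout are hypothetical, not the paper's array.

```python
import numpy as np


def elevation_from_column(x, p, q, omega_x, n_fft=256):
    """Elevation (rad) from elements modelled as x_i = S * exp(j(p_i*omega_x + q_i*omega_z)).

    p: horizontal offsets and q: vertical positions, both in units of lambda/2
    (hypothetical layout). omega_x comes from the azimuth FFT.
    """
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=int)
    y = np.asarray(x, dtype=complex) * np.exp(-1j * p * omega_x)   # remove azimuth phase
    column = np.zeros(q.max() + 1, dtype=complex)
    column[q] = y                                  # place samples at their vertical positions
    spectrum = np.fft.fftshift(np.fft.fft(column, n_fft))
    k = int(np.argmax(np.abs(spectrum))) - n_fft // 2
    omega_z = 2 * np.pi * k / n_fft                # estimated vertical phase step
    return float(np.arcsin(np.clip(omega_z / np.pi, -1.0, 1.0)))   # omega_z = pi*sin(el)


# Hypothetical layout and target: azimuth 10 deg, elevation 5 deg
p, q = np.array([1, 1, 1, 3]), np.array([0, 1, 2, 3])
az, el = np.deg2rad(10.0), np.deg2rad(5.0)
wx, wz = np.pi * np.sin(az) * np.cos(el), np.pi * np.sin(el)
x = np.exp(1j * (p * wx + q * wz))
print(round(np.rad2deg(elevation_from_column(x, p, q, wx)), 1))   # 4.9
```

Any error in the azimuth estimate leaks into the compensated phases, which is why azimuth is estimated first on the longer horizontal aperture.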

In the test chamber, a corner reflector is positioned directly in front of the radar, with the radar elevation held at 0°. The radar is mechanically rotated in 2° increments, and the measured azimuth of the corner reflector is compared with its actual azimuth. Similarly, the elevation of the corner reflector is measured with the azimuth set to 0°. The error curves, showing the difference between the measured and actual azimuth and elevation of the corner reflector, are presented in Figure 3.

The azimuth error is less than 0.3° within −70° to 70° and no more than 0.2° within ±30°. The elevation error is less than 0.4° within ±13°. The antenna array designed in this scheme therefore achieves high angle-measurement accuracy.

3.2. Clutter Suppression

3.2.1. Mitigation and Avoidance of Rain Clutter Interference

While millimeter-wave radar can penetrate rain and fog to detect distant targets, once rainfall becomes intense enough for the radar to detect, raindrops are often mistaken for targets, triggering false alarms. Such alarms are undesirable: during continuous rainfall, the radar may persistently report approaching targets even when the vehicle is in an open area with no actual obstacles.

Public literature currently reports no highly effective methods for mitigating rain clutter interference, although individual radar or automotive manufacturers may employ proprietary solutions [16,17,18]. This paper proposes a raindrop identification method based on raindrop distribution characteristics to alleviate rain clutter interference.

As raindrops fall from altitudes of several kilometers, they are subject to the combined effects of gravity, air resistance, and buoyancy. The terminal velocity is given by [19]:

$$v_t = \sqrt{\frac{2mg}{\rho A C_d}}$$

where $m$ represents the mass of the raindrop, $g$ denotes gravitational acceleration, $\rho$ is the air density, $A$ is the raindrop's frontal area, and $C_d$ is the drag coefficient (approximately 0.47 for spherical raindrops).

For a raindrop of radius $r$, the relationship between mass and radius can be expressed as:

$$m = \frac{4}{3}\pi r^3 \rho_w$$

where $\rho_w$ represents the density of the raindrop, and the frontal area of the raindrop is given by:

$$A = \pi r^2$$

Therefore, the terminal velocity of the raindrop can be derived as:

$$v_t = \sqrt{\frac{8 r \rho_w g}{3 \rho C_d}}$$

In typical rainfall conditions, raindrop radii are generally less than 4 mm (mostly below 2 mm). Consequently, under low-wind conditions, the terminal velocity of raindrops rarely exceeds 10 m/s. Because radar measurements are made in near-horizontal directions, the radar mainly captures the horizontal velocity component of falling raindrops, which is primarily influenced by wind speed and terrain [19]. Under normal conditions with mild winds, we assume a maximum raindrop inclination angle of approximately 30°. Under this constraint, the maximum horizontal component of raindrops at terminal velocity is $v_t\sin 30^\circ$. We therefore set ±4 m/s as the threshold for radar target detection.
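The terminal-velocity formula can be evaluated directly. The sketch below uses the text's drag coefficient of 0.47, an assumed sea-level air density of 1.225 kg/m³, and a water density of 1000 kg/m³, neglects buoyancy as the formula does, and then projects the result onto the horizontal for the assumed 30° inclination. The function names are illustrative.

```python
import math

G = 9.81          # gravitational acceleration, m/s^2
RHO_AIR = 1.225   # air density at sea level, kg/m^3 (assumed)
RHO_WATER = 1000  # raindrop (water) density, kg/m^3
CD = 0.47         # drag coefficient of a sphere (value from the text)


def terminal_velocity(radius_m, cd=CD, rho_air=RHO_AIR, rho_w=RHO_WATER, g=G):
    """v_t = sqrt(8 r rho_w g / (3 rho C_d)); buoyancy neglected."""
    return math.sqrt(8 * radius_m * rho_w * g / (3 * rho_air * cd))


def horizontal_component(v_t, tilt_deg=30.0):
    """Horizontal projection of a fall velocity tilted tilt_deg from vertical."""
    return v_t * math.sin(math.radians(tilt_deg))


v = terminal_velocity(2e-3)                       # 2 mm radius
print(round(v, 2), round(horizontal_component(v), 2))   # 9.53 4.77
```

For a 2 mm radius this gives about 9.53 m/s, consistent with the statement that terminal velocities rarely exceed 10 m/s, and a horizontal component of about 4.77 m/s.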

Given that rainfall is spatially uniform within a limited area (e.g., 100 m × 100 m) at any given moment, raindrop sizes are consistent and their terminal velocities are nearly identical. Furthermore, we restrict the radar detection range to within 30 m, because distant low-speed targets pose no collision risk and require no alerts; only nearby moving objects are of concern. By statistically analyzing raindrops within this region (2–30 m), we derive their velocity distribution and variance, enabling effective clutter discrimination.

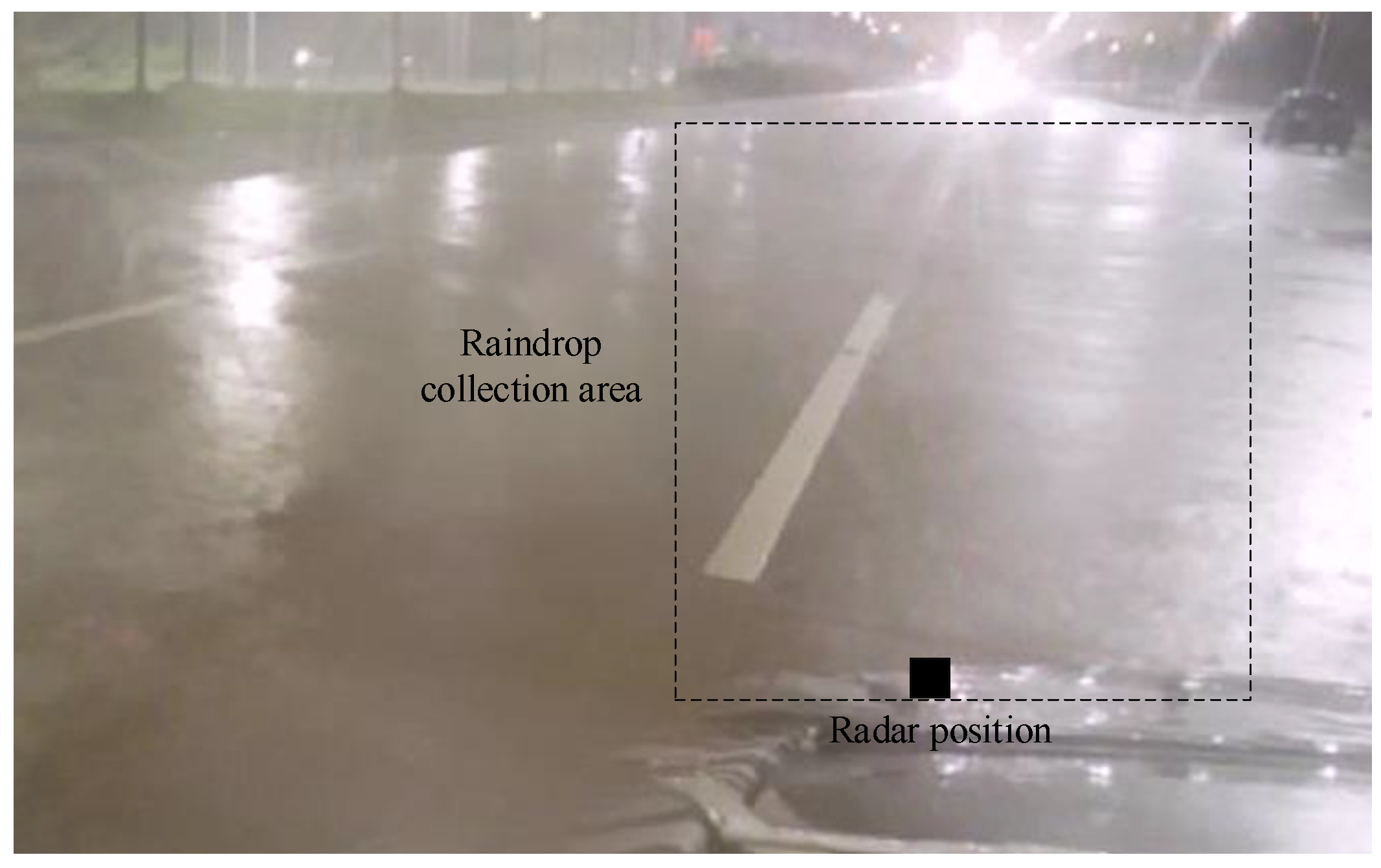

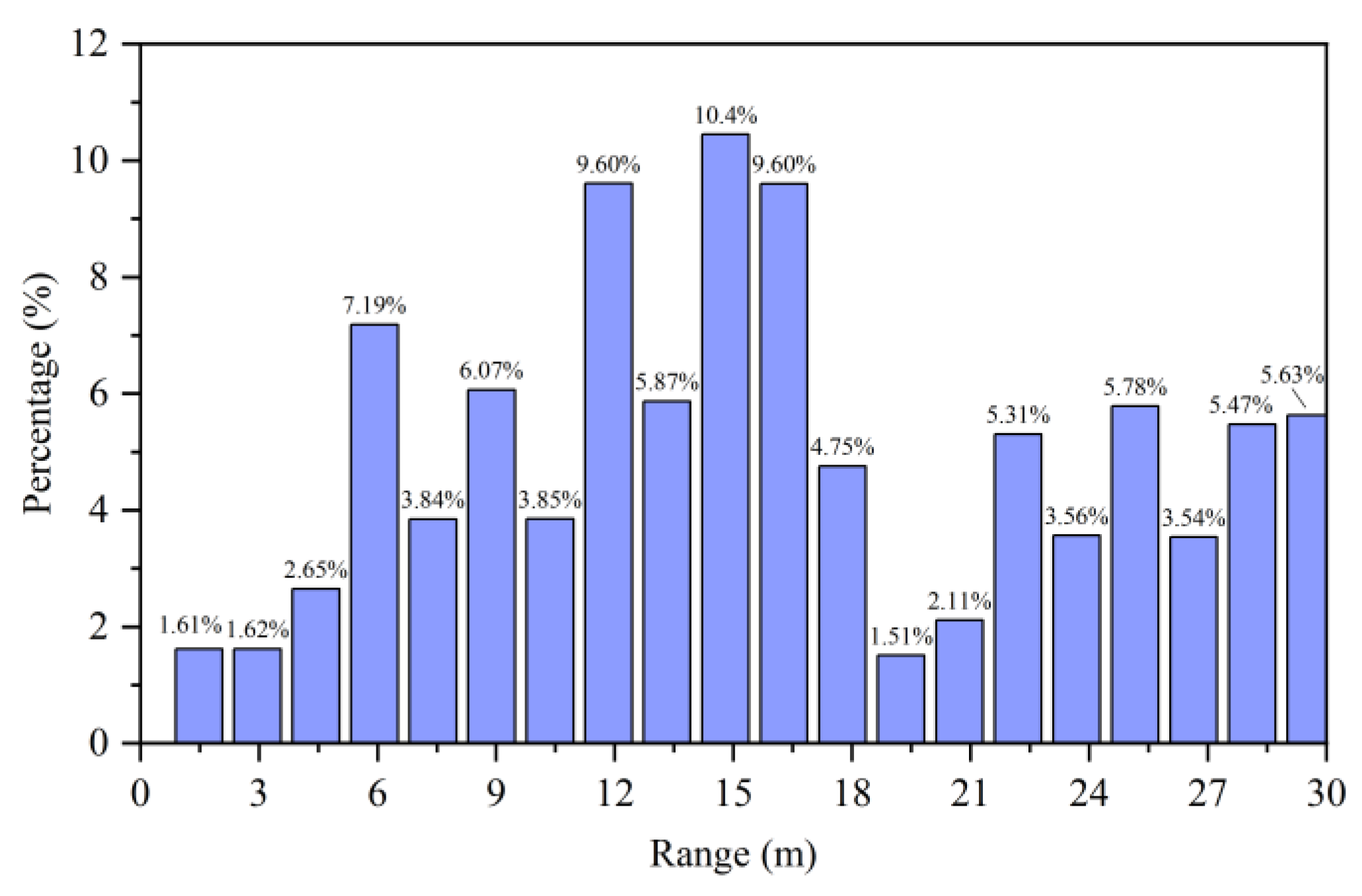

We collected rainfall data under normal rainy conditions using a compact vehicle equipped with a commercial millimeter-wave radar, which was stationed alongside an open road during precipitation. The data acquisition scenario is illustrated in the figure below.

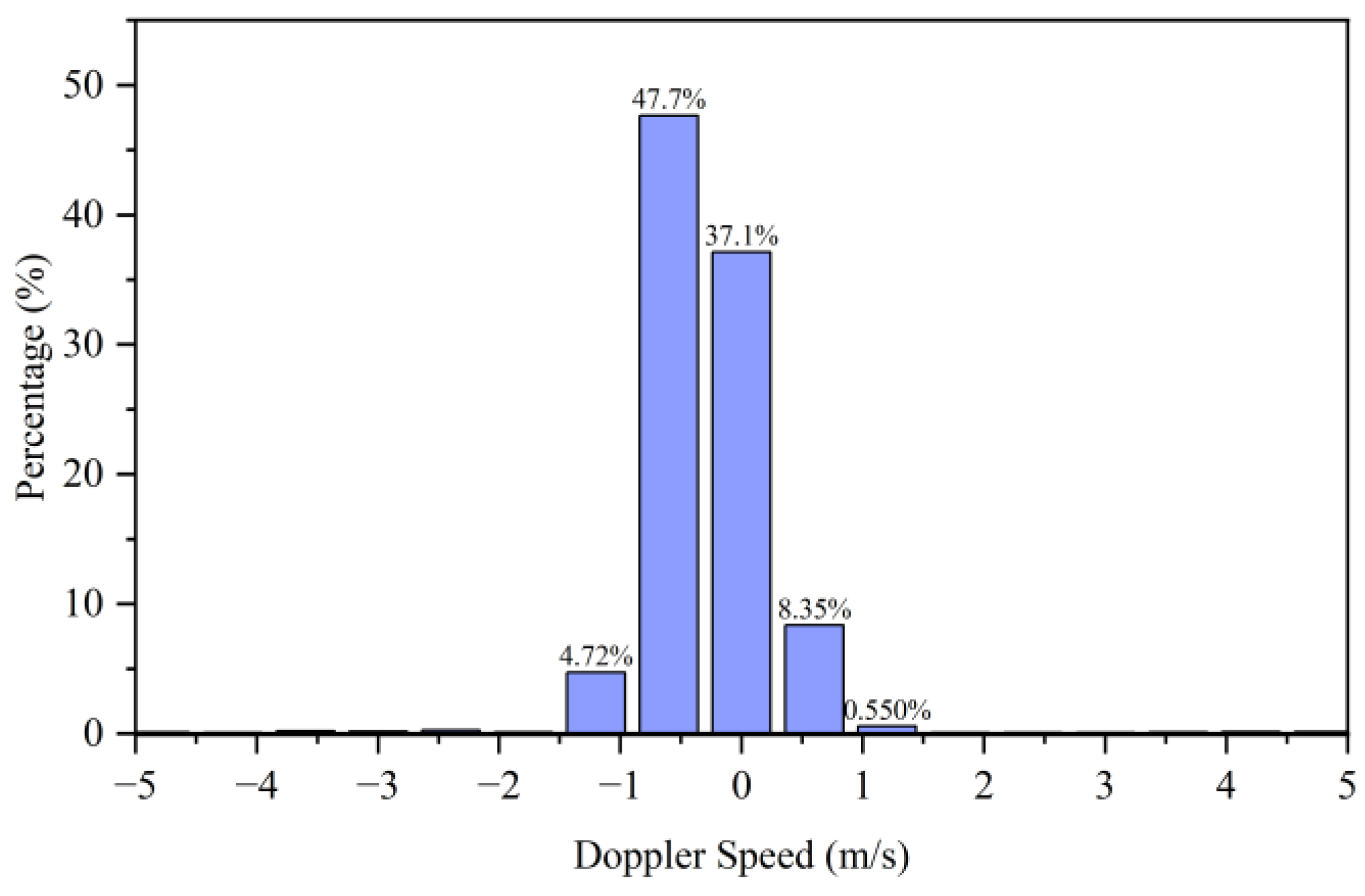

During data collection, the radar was mounted at a height of approximately 60 cm and remained stationary. Over approximately 40 minutes of continuous rainfall, we collected 35,159 frames of data. The velocity distribution of the detected raindrops is shown in Figure 5; the majority of raindrops detected by the radar fall within the velocity range of −1 m/s to 1 m/s.

Meanwhile, the distance distribution of the detected raindrops is more uniform. Within our selected range of 2–30 m, the number of raindrop detections remains relatively consistent between 5 and 30 m, with an especially uniform distribution between 8 and 23 m. We therefore quantify raindrop characteristics using both velocity variance and distance variance, calculated as:

$$\sigma^2 = \frac{1}{N}\sum_{i=1}^{N}(x_i - \mu)^2$$

where $\sigma^2$ represents the population variance, $N$ is the sample size, $\mu$ is the mean value, and $x_i$ denotes either velocity or distance. The radar cannot detect moving raindrops consistently: the number of detected targets fluctuates under the same conditions because radar reflections from raindrops are unstable. Since rainfall typically persists for extended periods, we process the data with a sliding-window statistical method to avoid abrupt changes in the algorithm output. Specifically, we maintain an array holding data from a fixed number of frames (60 frames in this case). As new radar data are acquired, the oldest frame is replaced, enabling stable processing of the time series and minimizing fluctuations caused by measurement variability.
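The sliding-window statistics can be sketched with a fixed-length deque: each new frame of detections displaces the oldest, and the population variances of velocity and distance are recomputed over the buffered frames. The class name, the per-frame 2–30 m range gate and ±4 m/s velocity gate, and the returned tuple are assumptions for illustration; the rule that turns these statistics into the algorithm output is not specified here, so the sketch stops at the statistics.

```python
from collections import deque

import numpy as np


class RainClutterWindow:
    """Sliding-window statistics of raindrop-like detections (hypothetical helper).

    Keeps the detections of the last `window` frames (60 in the text) and only uses
    points inside the 2-30 m range gate with |v| <= v_gate (4 m/s in the text).
    """

    def __init__(self, window=60, r_min=2.0, r_max=30.0, v_gate=4.0):
        self.frames = deque(maxlen=window)   # appending beyond maxlen drops the oldest frame
        self.r_min, self.r_max, self.v_gate = r_min, r_max, v_gate

    def update(self, ranges, velocities):
        """Add one frame of detections and return (count, var_v, var_r) over the window."""
        r = np.asarray(ranges, dtype=float)
        v = np.asarray(velocities, dtype=float)
        keep = (r >= self.r_min) & (r <= self.r_max) & (np.abs(v) <= self.v_gate)
        self.frames.append((r[keep], v[keep]))
        return self.stats()

    def stats(self):
        """Population variances (ddof=0) of velocity and distance over all buffered frames."""
        if not self.frames:
            return 0, 0.0, 0.0
        r = np.concatenate([f[0] for f in self.frames])
        v = np.concatenate([f[1] for f in self.frames])
        if r.size == 0:
            return 0, 0.0, 0.0
        return int(r.size), float(np.var(v)), float(np.var(r))
```

Using `np.var` with its default `ddof=0` matches the population variance $\sigma^2$ defined above.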

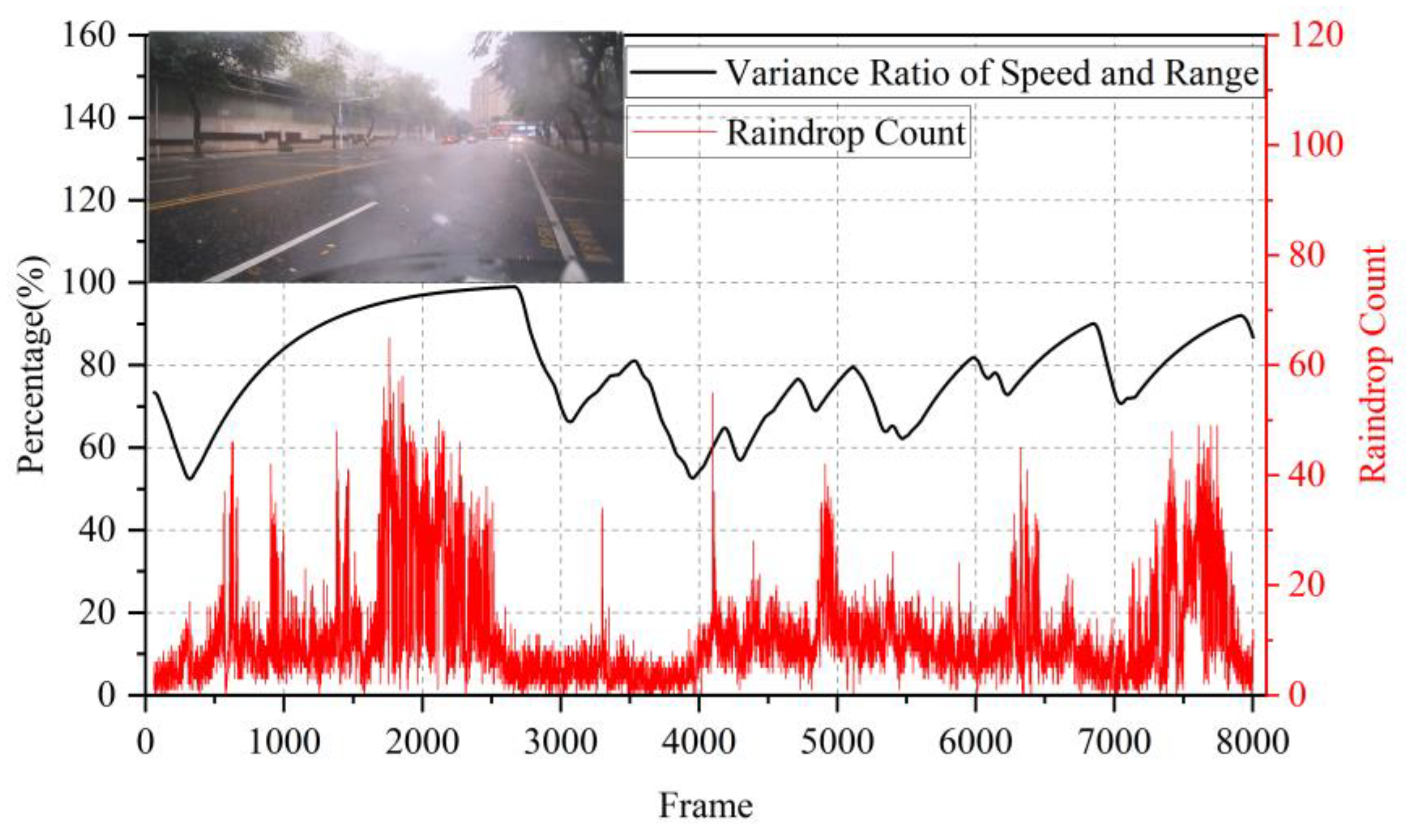

During a rainfall test in Guangzhou, China, we obtained the results shown in Figure 7.

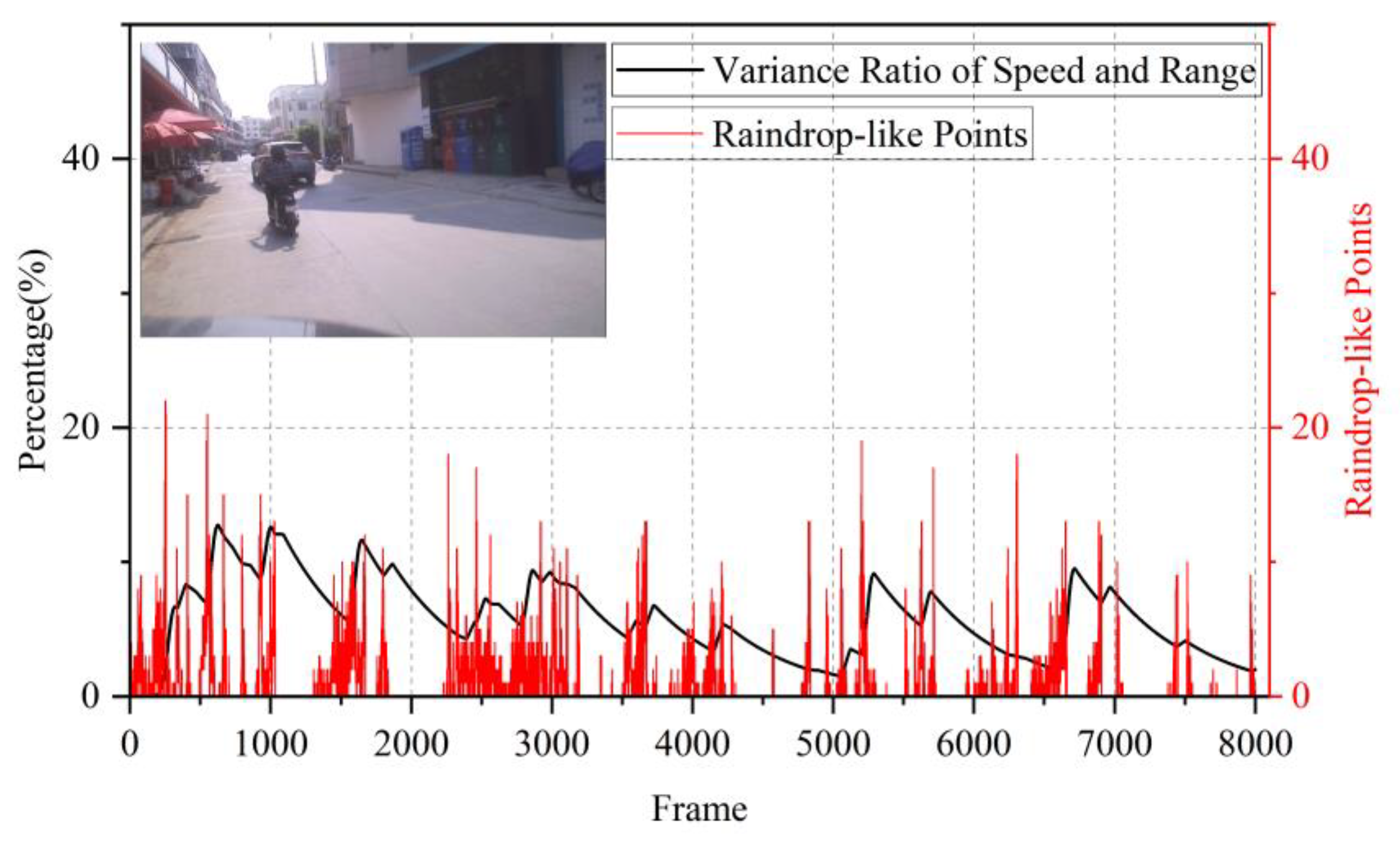

Over approximately 10 minutes of measurement, the algorithm output closely followed the trend of raindrop counts detected by the radar, confirming that the algorithm's results track precipitation intensity and demonstrating its effectiveness in rainy conditions. For comparison, we also tested the algorithm in a more complex sunny scenario at a roadside in a small town, where pedestrians, bicycles, and cars frequently passed by. The results are shown in Figure 8.

Compared to the rainy scenario, the radar detected some point clouds with raindrop-like characteristics even in sunny conditions. However, the algorithm output remained at a low level (significantly lower than during rainfall), showing clear distinction between rainy and sunny conditions. This confirms the algorithm’s ability to avoid false classification in non-rainy scenarios.

3.2.2. Noise Point Elimination Method in Complex Environments

In automotive radar point cloud measurements, beyond legitimate targets there are often numerous extraneous points that interfere with target tracking. In multi-lane traffic scenarios, mutual interference between vehicle radars or cross-interference from nearby systems can degrade performance and introduce data inaccuracies. While existing literature proposes various interference mitigation strategies, no comprehensive solution eliminates all noise sources under extreme conditions [21,22,23,24]. This paper presents a simple yet efficient noise-point elimination method that preserves genuine targets while suppressing most interference.

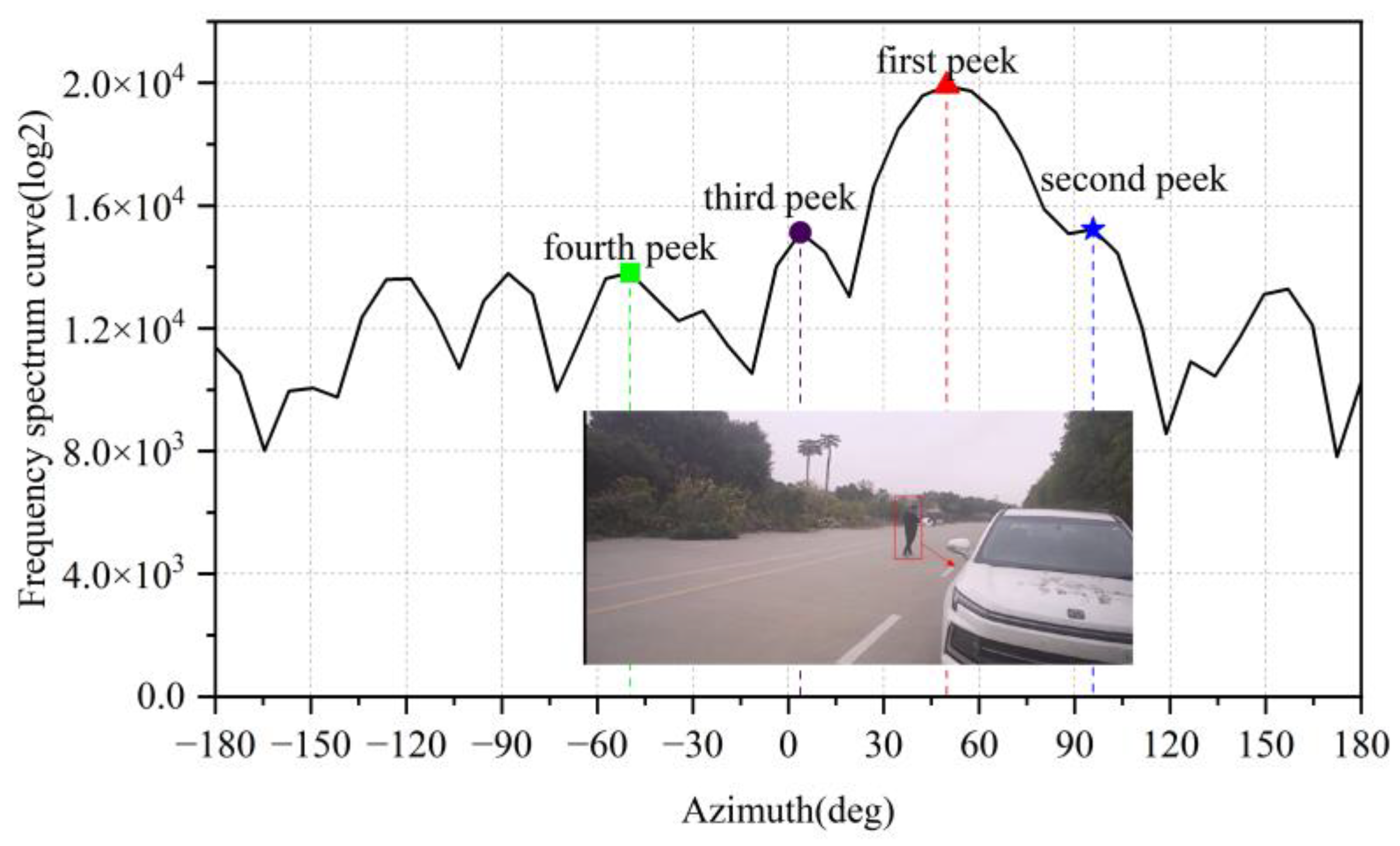

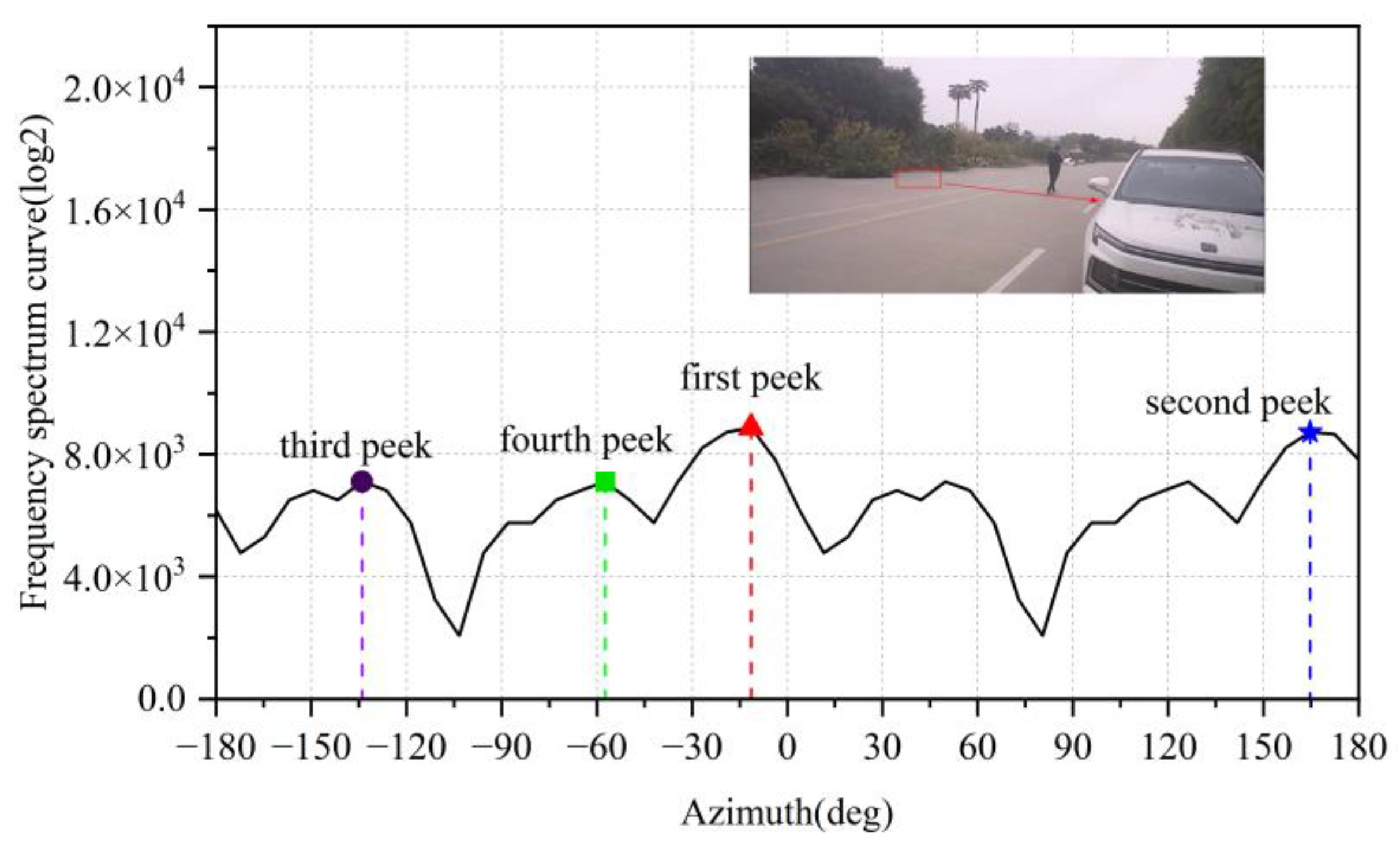

Legitimate targets typically exhibit a high signal-to-noise ratio (SNR). After angular-dimension FFT processing, their peaks clearly dominate adjacent positions, as illustrated in Figure 9. In contrast, noise points (e.g., ghost reflections from open ground surfaces, raindrops) show the distinct angular FFT signatures in Figure 10: their response curves are relatively flat, with small amplitude differences between peaks.

Despite their lower amplitudes, these noise peaks may still pass Constant False Alarm Rate (CFAR) detection thresholds and be misclassified as valid targets [25]. To address this, we propose evaluating the amplitude differences between the top N peaks of the angular FFT profile. By setting appropriate thresholds on these differences, the method filters out noise points while retaining true targets.

For the cases shown in Figure 9 and Figure 10, we calculate the amplitude differences between the largest peak and the subsequent peaks (2nd, 3rd, and 4th):

Table 1. Measured peak amplitude differences in echo signals: normal point clouds vs. noise.

| Peak Difference | Normal Point Clouds | Noise Point Clouds |
|---|---|---|
| Δp₁-₂ | 2548 | 521 |
| Δp₁-₃ | 2944 | 736 |
| Δp₁-₄ | 3560 | 832 |

Here, Δp₁-₂ denotes the amplitude difference between the 1st and 2nd peaks, Δp₁-₃ the difference between the 1st and 3rd peaks, and Δp₁-₄ the difference between the 1st and 4th peaks. Analysis shows that in the echo curves of human targets (legitimate targets), all three differences are significantly larger than in the noise-point echo curves. This characteristic enables effective noise-point rejection through threshold-based filtering.
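A minimal sketch of the peak-difference test, assuming the input is the angle-FFT magnitude profile of one detection. It finds the largest local maxima, computes Δp₁-₂, Δp₁-₃, and Δp₁-₄, and flags the detection as noise only when all three fall below their thresholds. The threshold values and the all-below rule are hypothetical choices (the thresholds simply sit between the normal and noise values of Table 1); the paper's actual thresholds are not given in this section.

```python
import numpy as np


def top_peaks(spectrum, n=4):
    """Amplitudes of the n largest interior local maxima of an angle-FFT magnitude profile."""
    a = np.asarray(spectrum, dtype=float)
    # Interior point i is a local maximum if a[i] > a[i-1] and a[i] >= a[i+1]
    idx = np.where((a[1:-1] > a[:-2]) & (a[1:-1] >= a[2:]))[0] + 1
    return np.sort(a[idx])[::-1][:n]


def is_noise_point(spectrum, thresholds=(1000.0, 1200.0, 1500.0)):
    """Flag a detection as noise when every difference p1 - pk (k = 2..4) is below its threshold.

    The thresholds are hypothetical placeholders chosen between the Table 1 values.
    """
    thr = np.asarray(thresholds, dtype=float)
    peaks = top_peaks(spectrum, n=thr.size + 1)
    if peaks.size < thr.size + 1:
        return False                          # too few peaks to compare: keep the point
    diffs = peaks[0] - peaks[1:]              # delta p_{1-2}, delta p_{1-3}, delta p_{1-4}
    return bool(np.all(diffs < thr))
```

Requiring all three differences to be small is the more conservative option, since it removes fewer points and so favors preserving true targets; an any-below rule would reject more noise at the cost of more false rejections.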

Building upon this methodology, we conducted road target detection tests using a millimeter-wave radar system, with the following parameters:

Targets of interest: Electric two-wheelers and automobiles;

Undesired interference sources: Ground clutter, multipath reflections, and vegetation-induced noise points;

The evaluation focused on:

Identification rate of unwanted noise points;

-

Misidentification probability of valid targets;

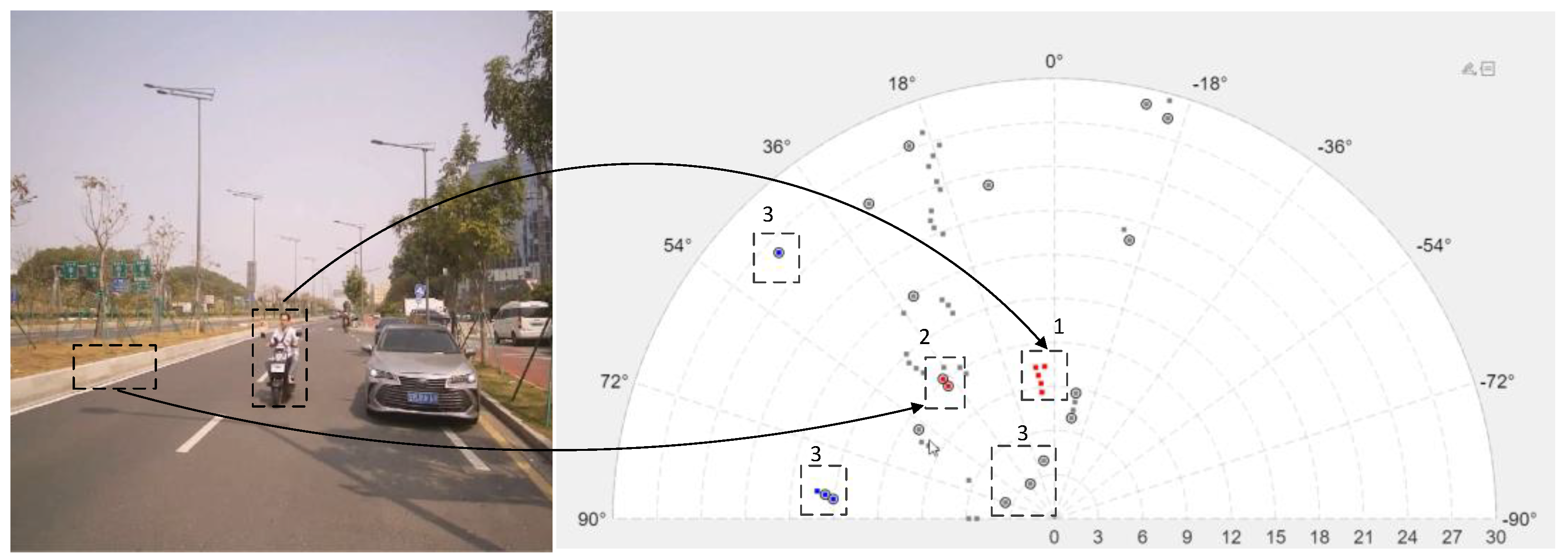

to validate the algorithm’s practical efficacy. The test scenario is illustrated in the figure below:

In Figure 11, red points indicate approaching objects (potential hazards), blue points represent receding objects, and gray points denote stationary objects. Key areas are marked as:

- (1) Zone 1: valid two-wheeler targets;

- (2) Zone 2: multipath reflections from roadside vegetation edges;

- (3) Zone 3: ground-clutter-induced noise points.

In this scenario, the system identified over 90% of the unwanted points. Under consistent environmental conditions, we statistically evaluated (1) the correct identification rate for noise points and (2) the false identification probability for legitimate targets. The quantitative results are presented in Tables 2 and 3.

Table 2. Statistics of false recognition rates for correct targets using the proposed algorithm.

| Target | Test Run | Total Points | Marked Points | Recognition Rate (%) | Average Recognition Rate (%) |
|---|---|---|---|---|---|
| Pedestrian | First | 164 | 3 | 1.83 | 3.65 |
| | Second | 204 | 13 | 6.37 | |
| | Third | 182 | 5 | 2.75 | |
| Car | First | 415 | 29 | 6.99 | 5.19 |
| | Second | 520 | 22 | 4.23 | |
| | Third | 322 | 14 | 4.35 | |
| Two-wheeler | First | 322 | 10 | 3.11 | 3.66 |
| | Second | 432 | 21 | 4.86 | |
| | Third | 332 | 10 | 3.01 | |

Table 3. Statistics of correct recognition rate for point clouds caused by noise.

| Target | Test Run | Total Points | Marked Points | Recognition Rate (%) | Average Recognition Rate (%) |
|---|---|---|---|---|---|
| Multipath points caused by cars | First | 62 | 56 | 90.32 | 87.60 |
| | Second | 56 | 48 | 85.71 | |
| | Third | 68 | 59 | 86.76 | |
| Ground clutter | First | 288 | 201 | 69.79 | 73.41 |
| | Second | 304 | 230 | 75.66 | |
| | Third | 321 | 240 | 74.77 | |

Measurement note: the statistics were collected under the following dynamic conditions: pedestrians were tracked while walking toward the radar from a distance of 30 m; vehicles approached the radar at 50 km/h from a range of 70 m; two-wheelers moved toward the radar at 20 km/h from 40 m.
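The averages in Tables 2 and 3 can be reproduced from the raw counts: each is the arithmetic mean of the three per-run rates, not the pooled ratio of all marked points to all points. The sketch below recomputes both for Table 2; the function names are illustrative.

```python
def per_run_rates(total_points, marked_points):
    """Per-run rates in percent: 100 * marked / total."""
    return [100.0 * m / t for t, m in zip(total_points, marked_points)]


def average_rate(total_points, marked_points):
    """Arithmetic mean of the per-run rates (the 'Average' column of Tables 2 and 3)."""
    rates = per_run_rates(total_points, marked_points)
    return sum(rates) / len(rates)


def pooled_rate(total_points, marked_points):
    """Alternative summary: all marked points divided by all points."""
    return 100.0 * sum(marked_points) / sum(total_points)


# Counts from Table 2 (legitimate targets wrongly marked as noise)
ped = ([164, 204, 182], [3, 13, 5])
car = ([415, 520, 322], [29, 22, 14])
two_wheeler = ([322, 432, 332], [10, 21, 10])
for name, (tot, mk) in [("pedestrian", ped), ("car", car), ("two-wheeler", two_wheeler)]:
    print(name, round(average_rate(tot, mk), 2))   # 3.65, 5.19, 3.66
all_tot = ped[0] + car[0] + two_wheeler[0]
all_mk = ped[1] + car[1] + two_wheeler[1]
print("pooled", round(pooled_rate(all_tot, all_mk), 2))   # 4.39
```

The per-class averages match Table 2, and pooling all nine runs gives a false identification rate of 4.39% (127 of 2893 points).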

The statistical results show that the algorithm achieves:

- a ground clutter rejection rate above 70% (73.41% on average);
- a vehicle multipath identification rate of about 88% (87.60% on average);
- an overall false identification rate for legitimate targets below 5% (per-class averages of 3.65% to 5.19%).

These results indicate that the algorithm is effective at suppressing noise in automotive radar applications, addressing three critical challenges: ground reflection interference, multipath artifacts, and target discrimination accuracy.

4. Radar Performance Test

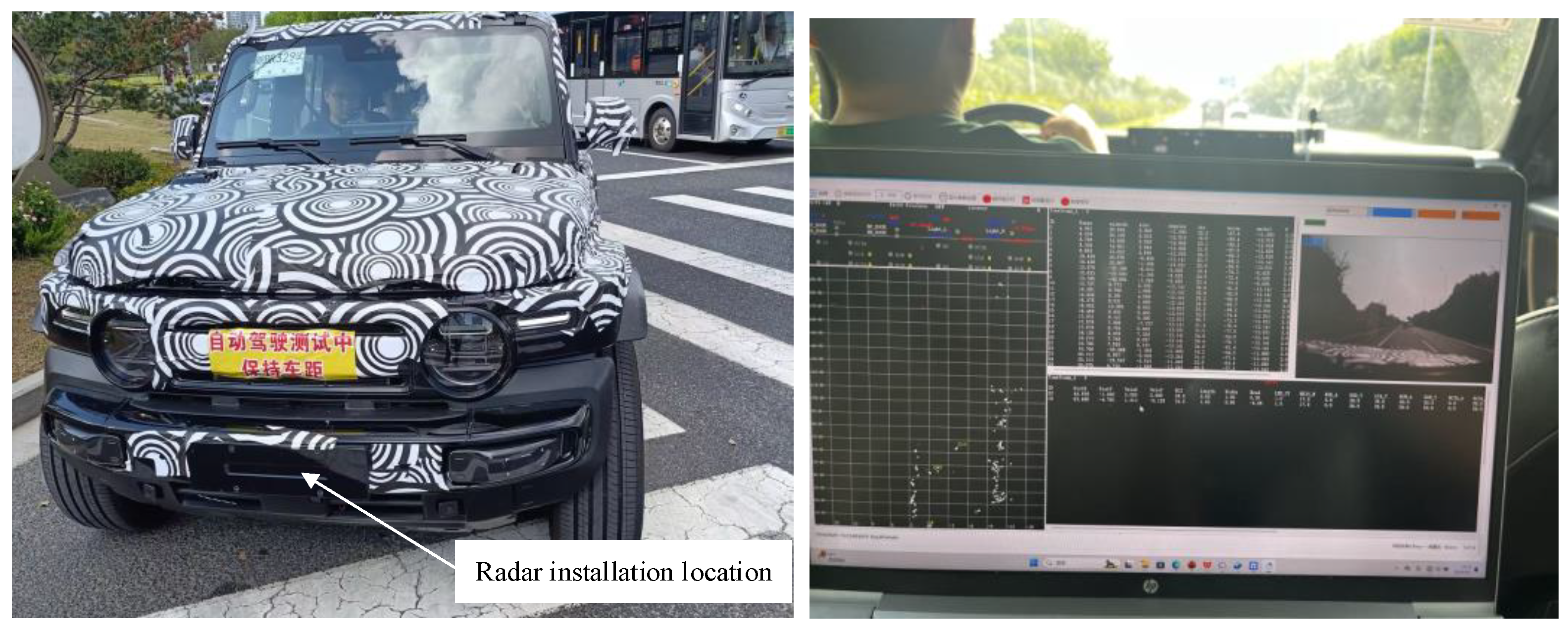

The radar system is integrated in the front bumper assembly of a compact SUV, as depicted in Figure 12. Real-time measurement data are transmitted to a portable computer for visualization; the outputs include Cartesian coordinates (X-Y), slant range, radial velocity, azimuth angle, and elevation angle relative to the sensor. Extensive field validation was conducted on multiple road surfaces (pavement, gravel, dirt) to assess system performance under varying environmental conditions.

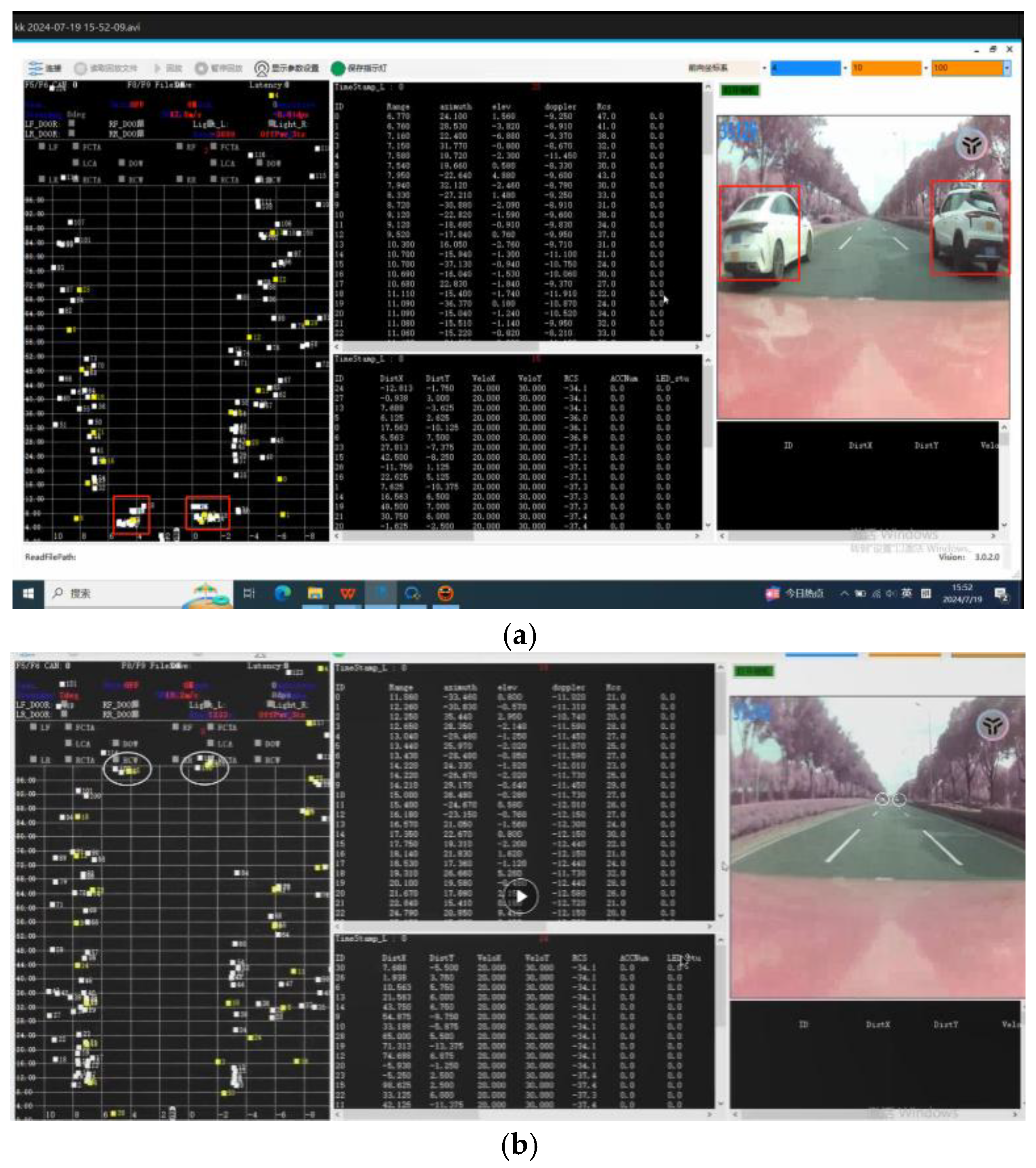

To validate the azimuth accuracy in the horizontal plane, we used a setup in which two target vehicles traveled along parallel trajectories. The target vehicles moved faster than the radar-equipped vehicle and gradually pulled away from it during the trial.

The measurement results are illustrated in Figure 13.

At a slant range of 5 m, the lateral separation between the two target vehicles was approximately 5 m. The measured Cartesian X-coordinates of the targets were:

Left vehicle: X₁ = −4.4 m

Right vehicle: X₂ = 0.6 m

Both vehicles traveled straight within their respective lanes. When the slant range increased to 100 m, the actual lateral separation remained 5 m. The corresponding measurements at this range were:

Left vehicle: X₁ = −4.4 m

Right vehicle: X₂ = 0.8 m

The right-side target exhibited a lateral displacement of approximately 0.2 m, corresponding to an angular deviation of about 0.1°, which is consistent with the angular error quantified in Section 3.1. At a slant range of 100 m, the radar resolved both targets at an angular separation of approximately 3.0°, demonstrating robust angular resolution at extended range.
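The angle figures follow from simple trigonometry: a lateral offset Δx at slant range R subtends arcsin(Δx/R). The helper below is an illustrative sketch, not part of the radar software.

```python
import math


def lateral_offset_to_angle_deg(lateral_m, slant_range_m):
    """Angle (deg) subtended at the radar by a lateral offset at a given slant range."""
    return math.degrees(math.asin(lateral_m / slant_range_m))


print(round(lateral_offset_to_angle_deg(0.2, 100.0), 2))   # 0.11: the 0.2 m shift at 100 m
print(round(lateral_offset_to_angle_deg(5.0, 100.0), 2))   # 2.87: a 5 m separation at 100 m
```

Both values agree with the roughly 0.1° deviation and the approximately 3° separation reported for the 100 m case.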

During road testing, the radar achieved a maximum detection range of 210 m, effectively identifying and tracking large trucks, cars, electric bicycles, and pedestrians at both low and high speeds. The radar also clearly detected guardrails and roadside greenery belts on both sides of the road. As shown in Figure 14, vehicles traveling at high speed were also successfully detected.

The radar sensor is the origin of the coordinate system. As depicted in Figure 14, seven vehicles are in front of the sensor, the farthest at a slant range of 210 m. Within a slant range of 50 m, three vehicles are clustered in adjacent lanes. The roadside metal fences appear as sparsely distributed point clouds that exhibit vertical alignment, although their density is lower than with 4D imaging radars. By combining spatial coordinates (X-Y-Z) with radial velocity, static targets can be classified through height reconstruction and aspect-angle analysis.

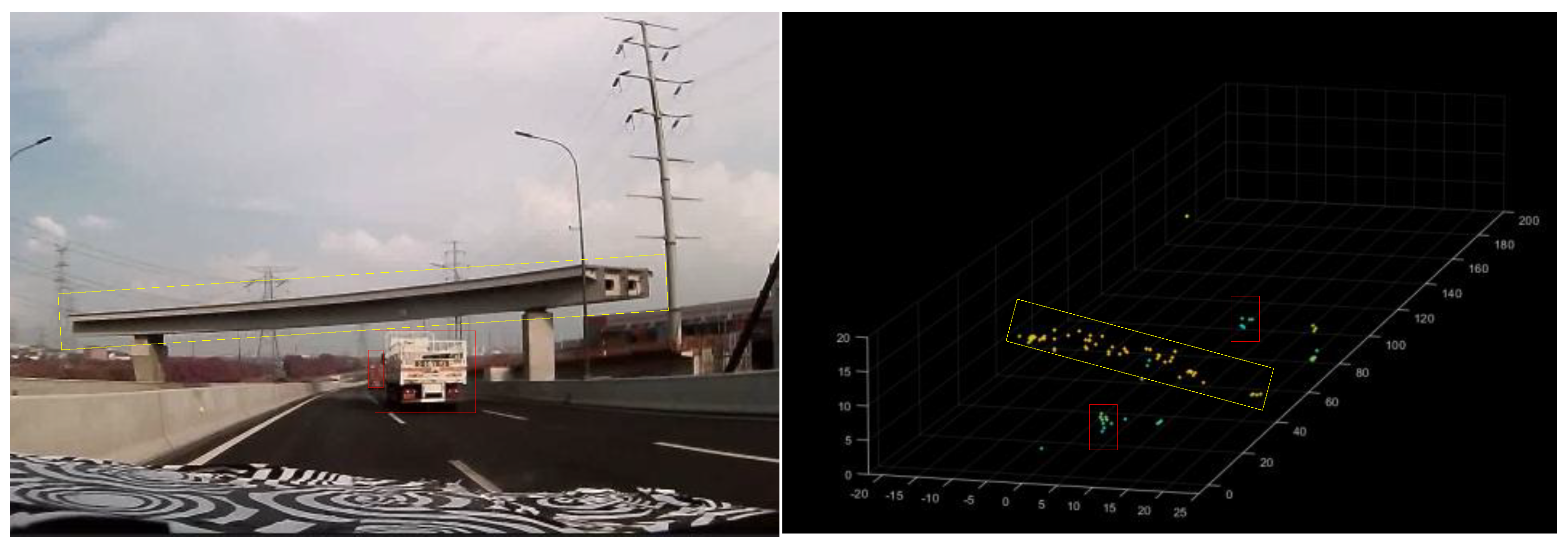

As illustrated in Figure 15, the radar system accurately renders overpass structures and metal signage within its field of view, demonstrating robust clutter suppression and high-resolution imaging capability.

Suspended targets such as overpasses or traffic lights above the road appear as detectable point clouds directly ahead of the vehicle, so they can be identified as high-altitude targets from the height, position, and velocity parameters extracted from the point cloud. As demonstrated in Figure 15, when the vehicle approaches a road section with an overhead footbridge, the bridge's reflection point cloud (highlighted in the yellow box) is clearly visible, and its horizontal orientation corresponds to the footbridge's actual direction, as shown in the image. Vehicles traveling near the footbridge are also clearly resolved. The footbridge's structural height ranges from 5 to 10 m. With the radar fixed at the front of the vehicle, the detected bridge height varies little, and as the vehicle approaches, the bridge's reflected point cloud expands proportionally, with both the bridge and the adjacent vehicles clearly captured in Figure 15.
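Overhead-target identification from height, position, and velocity can be sketched as follows: convert each detection's slant range, azimuth, and elevation to Cartesian coordinates, add the sensor mounting height to obtain height above the road, and check whether its radial velocity matches a stationary object. The axis convention, the sign convention for radial velocity, the 4.5 m clearance threshold, the 0.5 m/s tolerance, and all function names are hypothetical; the paper does not state its classification rule.

```python
import math


def to_cartesian(slant_range, azimuth_deg, elevation_deg, mount_height=0.0):
    """(x lateral, y forward, z up) of a detection; z is height above the road
    when mount_height is the sensor's mounting height (axis convention assumed)."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    x = slant_range * math.cos(el) * math.sin(az)
    y = slant_range * math.cos(el) * math.cos(az)
    z = slant_range * math.sin(el) + mount_height
    return x, y, z


def is_static(v_radial, ego_speed, azimuth_deg, elevation_deg, tol=0.5):
    """True if the radial velocity matches a stationary object seen from a vehicle
    driving straight ahead (assumes negative radial velocity means closing)."""
    el = math.radians(elevation_deg)
    az = math.radians(azimuth_deg)
    expected = -ego_speed * math.cos(el) * math.cos(az)
    return abs(v_radial - expected) <= tol


def is_overhead(z, clearance=4.5):
    """Hypothetical rule: a point higher than `clearance` metres is treated as overhead."""
    return z > clearance


x, y, z = to_cartesian(50.0, 0.0, 6.0, mount_height=0.5)
print(round(z, 2), is_overhead(z), is_static(-9.9, 10.0, 0.0, 6.0))   # 5.73 True True
```

For a point at 50 m slant range and 6° elevation seen from a sensor mounted 0.5 m high, the computed height is about 5.73 m, which this hypothetical rule treats as a static overhead structure rather than an obstacle in the lane.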

5. Discussion

The current implementation methods for 4D millimeter-wave imaging radar primarily include:

- (1)

Chip cascade technology, exemplified by the AWR2243 cascade scheme from Texas Instruments (TI): each AWR2243 chip has three transmitters (TX) and four receivers (RX), and cascading four chips yields 12 TX and 16 RX, for a total of 192 virtual channels.

- (2)

A single-chip design enables the transmission and reception of multiple signals on a single chip. A notable example is Arbe’s chipset, which can be expanded to accommodate 48 transmitters (TX) and 48 receivers (RX), generating a total of 2,304 virtual channels. In contrast, the radar-on-chip solution from the US company Uhnder utilizes a 12 TX / 8 RX configuration, capable of forming 96 virtual channels.

However, all of the above solutions face challenges such as high cost, complex technology, and long development cycles, and they have not been widely adopted in the market. Although directly upgrading 3D radar to 4D makes an ideal imaging effect relatively difficult to achieve, this approach obtains target height information, enabling early prediction of obstacles on the road such as overpasses and road signs. In most cases, this is valuable for drivable-area detection by forward-looking radars, and the low cost facilitates large-scale application. Based on this concept, this paper proposes a design scheme for a single-chip 4D millimeter-wave forward-looking radar that achieves height measurement through a sparse MIMO antenna design. In road tests, the developed radar effectively detected high-position targets such as traffic lights and overpasses. Compared with the cascaded and imaging-radar solutions above, this scheme offers a cost advantage and lower development difficulty and accelerates product commercialization, while adding the height information that standard 3D millimeter-wave radar lacks. We therefore believe that single-chip 4D radar will become the main direction for future forward-looking radar systems and will be widely installed in vehicles.

6. Conclusions

This paper details the development process of a single-chip four-dimensional (4D) automotive millimeter-wave radar, covering system architecture design, antenna optimization, signal processing algorithm development, and performance verification. It primarily addresses the lack of target height information in traditional 3D millimeter-wave radars. By upgrading to 4D, the radar perceives four dimensions of target information (distance, azimuth, velocity, and height), significantly enhancing obstacle detection capability and safety in autonomous driving.
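
To make the fourth dimension concrete: once range R, azimuth φ, and elevation θ are measured, the target height follows from z = h_mount + R sin θ. The sketch below converts one detection into vehicle coordinates; the axis convention (x forward, y left, z up, azimuth positive to the left), the mounting height, and the neglect of mounting tilt are assumptions for illustration, and the `Detection` class is hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Detection:
    """One 4D radar detection (field names are illustrative)."""
    range_m: float
    azimuth_deg: float
    elevation_deg: float
    radial_velocity_mps: float


def to_vehicle_frame(det: Detection, mount_height_m: float = 0.0):
    """Convert range/azimuth/elevation to (x forward, y left, z up) in metres.

    Assumes the radar boresight is level and aligned with the vehicle's x axis,
    with positive azimuth pointing to the left.
    """
    az = math.radians(det.azimuth_deg)
    el = math.radians(det.elevation_deg)
    horizontal = det.range_m * math.cos(el)  # projection onto the road plane
    x = horizontal * math.cos(az)
    y = horizontal * math.sin(az)
    z = mount_height_m + det.range_m * math.sin(el)  # height above the road
    return x, y, z


if __name__ == "__main__":
    overpass = Detection(range_m=100.0, azimuth_deg=0.0, elevation_deg=3.0, radial_velocity_mps=0.0)
    x, y, z = to_vehicle_frame(overpass, mount_height_m=0.5)
    print(f"x={x:.2f} m, y={y:.2f} m, z={z:.2f} m")  # x=99.86 m, y=0.00 m, z=5.73 m
```

The same relation shows why elevation accuracy matters at long range: at 100 m, an elevation error of only 0.5° shifts the estimated height by roughly 0.87 m.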

The radar system employs a highly integrated hardware platform. Through the optimized design of a sparse MIMO antenna array and efficient signal processing algorithms, it can accurately measure both dynamic and static targets in complex environments. In actual road tests, it demonstrates high detection accuracy and reliability.

In addition, this paper proposes an effective rain clutter recognition method based on the characteristics of raindrop distribution and a method for suppressing noise points in complex environments based on the amplitude differences of angle FFT peaks, further improving the robustness of the radar system.
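
The conclusion names the noise-suppression criterion (amplitude differences between angle-FFT peaks) but not its exact form. One plausible reading, consistent with the contrast drawn in Figures 9 and 10, is that a genuine target produces one dominant angle peak, whereas clutter in open areas produces several peaks of similar amplitude. The sketch below implements that reading: it measures the dB gap between the two strongest local peaks of a windowed, zero-padded angle FFT and flags the point when the gap falls below a threshold. The Hamming window, the 64-point FFT, the 8-channel uniform array, the 6 dB threshold, and all function names are assumptions, not values from the paper.

```python
import numpy as np


def angle_spectrum_db(snapshot, n_fft=64):
    """Zero-padded angle FFT (dB) across the virtual channels of one range-Doppler cell."""
    x = np.asarray(snapshot) * np.hamming(len(snapshot))  # taper to lower sidelobes
    spectrum = np.fft.fftshift(np.fft.fft(x, n_fft))
    return 20 * np.log10(np.abs(spectrum) + 1e-12)


def peak_margin_db(spectrum_db):
    """Amplitude difference (dB) between the strongest and second-strongest local peaks."""
    # The angle FFT is periodic, so neighbours wrap around at the edges.
    left, right = np.roll(spectrum_db, 1), np.roll(spectrum_db, -1)
    peaks = np.sort(spectrum_db[(spectrum_db > left) & (spectrum_db >= right)])[::-1]
    return np.inf if len(peaks) < 2 else float(peaks[0] - peaks[1])


def is_noise_point(snapshot, threshold_db=6.0, n_fft=64):
    """Flag a detection whose angle spectrum lacks one clearly dominant peak (hypothetical rule)."""
    return peak_margin_db(angle_spectrum_db(snapshot, n_fft)) < threshold_db


def ula_snapshot(sin_angles, n_channels=8):
    """Noise-free snapshot of a half-wavelength-spaced virtual uniform linear array (test helper)."""
    n = np.arange(n_channels)
    return sum(np.exp(1j * np.pi * n * s) for s in sin_angles)


if __name__ == "__main__":
    print(is_noise_point(ula_snapshot([np.sin(np.deg2rad(10.0))])))  # False: one dominant peak
    print(is_noise_point(ula_snapshot([-0.5, 0.5])))  # True: two equal peaks
```

A rule this simple would also flag two genuine targets of similar strength that share a range-Doppler cell, so in practice it would likely be combined with other cues. The rain clutter recognition method, which the figures relate to raindrop velocity and distance distributions, is a separate mechanism not sketched here.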

After extensive road-test verification, the designed radar reliably detects dynamic targets such as vehicles, pedestrians, and bicycles, as well as static infrastructure such as overpasses and traffic signs. It offers significant advantages in cost, performance, and commercialization, can be reliably applied in autonomous driving, and has broad market prospects.

Author Contributions

Conceptualization, Y.C. and B.R.; methodology, Y.C. and H.S.; validation, Y.C. and L.H.; writing—original draft preparation, Y.C. and L.H.; writing—review and editing, J.B. and H.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Key Research and Development Program of China under grants 2023YFB3209800 and 2023YFB3209804.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data will be made available on request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Hakobyan, G.; Yang, B. High-Performance Automotive Radar: A Review of Signal Processing Algorithms and Modulation Schemes. IEEE Signal Process Mag, 2019, 36, 32–44.

- Sun, S.; Petropulu, A. P.; Poor, H. V. MIMO Radar for Advanced Driver-Assistance Systems and Autonomous Driving: Advantages and Challenges. IEEE Signal Process Mag, 2020, 37, 98–117.

- Sun, S.; Zhang, Y. D. 4D Automotive Radar Sensing for Autonomous Vehicles: A Sparsity-Oriented Approach. IEEE J. Sel. Top. Signal Process, 2021, 1.

- Li, G.; Sit, Y. L.; Manchala, S.; Kettner, T.; Ossowska, A.; Krupinski, K.; Sturm, C.; Goerner, S.; Lübbert, U. Pioneer Study on Near-Range Sensing with 4D MIMO-FMCW Automotive Radars. 20th International Radar Symposium (IRS), Ulm, Germany, 26–28 June 2019.

- Abdullah, H.; Mabrouk, M.; Kabeel, A. E.; Hussein, A. High-Resolution and Large-Detection-Range Virtual Antenna Array for Automotive Radar Applications. Sensors, 2021, 21, 1–19.

- Paek, D. H.; Kong, S. H.; Wijaya, K. T. K-Radar: 4D radar object detection for autonomous driving in various weather conditions. Adv. Neural Inform. Process. Syst, 2022, 35, 3819–3829.

- Choi, M.; Yang, S.; Han, S.; Lee, M.; Choi, K. H.; Kim, K. S. MSC-RAD4R: ROS-Based Automotive Dataset With 4D Radar. IEEE Rob. Autom. Lett, 2024, 8, 3307005.

- Kronauge, M.; Rohling, H. New chirp sequence radar waveform. IEEE Trans. Aerosp. Electron. Syst, 2014, 50, 2870–2877.

- Li, X.; Wang, X.; Yang, Q.; Fu, S. Signal Processing for TDM MIMO FMCW Millimeter-Wave Radar Sensors. IEEE Access, 2021, 9, 167959–167971.

- Stove, A. G. Modern FMCW radar-techniques and applications. First European Radar Conference, Amsterdam, Netherlands, 14–15 October 2004.

- Lin, J. J.; Li, Y. P.; Hsu, W. C.; Lee, T. S. Design of an FMCW radar baseband signal processing system for automotive application. SpringerPlus, 2016, 5, 1–16.

- Yan, B.; Roberts, I. P. Advancements in Millimeter-Wave Radar Technologies for Automotive Systems: A Signal Processing Perspective. Electronics, 2025, 14, 1436.

- Sun, S.; Petropulu, A. P.; Poor, H. V. MIMO radar for advanced driver-assistance systems and autonomous driving: Advantages and challenges. IEEE Signal Process Mag, 2020, 37, 98–117.

- Waldschmidt, C.; Hasch, J.; Menzel, W. Automotive Radar-From First Efforts to Future Systems. IEEE Journal of Microwaves, 2021, 1, 135–148.

- Vasanelli, C.; Batra, R.; Di Serio, A.; Boegelsack, F.; Waldschmidt, C. Assessment of a millimeter-wave antenna system for MIMO radar applications. IEEE Antennas Wirel Propag Lett, 2016, 16, 1261–1264.

- Steinhauser, D.; Held, P.; Thöresz, B.; Brandmeier, T. Towards safe autonomous driving: Challenges of pedestrian detection in rain with automotive radar. 17th European Radar Conference (EuRAD 2020), Utrecht, Netherlands, 13–15 January 2021.

- Jang, B. J.; Jung, I. T.; Chong, K. S.; Lim, S. Rainfall observation system using automotive radar sensor for ADAS based driving safety. IEEE Intelligent Transportation Systems Conference (ITSC), Auckland, New Zealand, 27–30 October 2019.

- Li, H.; Bamminger, N.; Magosi, Z. F.; Feichtinger, C.; Zhao, Y.; Mihalj, T.; Orucevic, F.; Eichberger, A. The effect of rainfall and illumination on automotive sensors detection performance. Sustainability, 2023, 15, 7260.

- Ong, C. R.; Miura, H.; Koike, M. The terminal velocity of axisymmetric cloud drops and raindrops evaluated by the immersed boundary method. J Atmos Sci, 2021, 78, 1129–1146.

- Montero-Martínez, G.; García-García, F. On the behaviour of raindrop fall speed due to wind. Q J Roy Meteor Soc, 2016, 142, 2013–2020.

- Tan, B.; Ma, Z.; Zhu, X.; Huang, L.; Bai, J. Tracking of multiple static and dynamic targets for 4D automotive millimeter-wave radar point cloud in urban environments. Remote Sens, 2023, 15, 2923.

- Zhou, Y.; Liu, L.; Zhao, H.; López-Benítez, M.; Yu, L.; Yue, Y. Towards deep radar perception for autonomous driving: Datasets, methods, and challenges. Sensors, 2022, 22, 4208.

- Scheiner, N.; Kraus, F.; Appenrodt, N.; Dickmann, J.; Sick, B. Object detection for automotive radar point clouds–a comparison. AI Perspectives & Advances, 2021, 3, 6.

- Alland, S.; Stark, W.; Ali, M.; Hegde, M. Interference in automotive radar systems: Characteristics, mitigation techniques, and current and future research. IEEE Signal Process Mag, 2019, 36, 45–59.

- Tavanti, E.; Rizik, A.; Fedeli, A.; Caviglia, D. D.; Randazzo, A. A short-range FMCW radar-based approach for multi-target human-vehicle detection. IEEE Trans. Geosci. Remote Sens, 2021, 60, 1–16.

Figure 1.

Radar system transmitting antenna beam pattern: (a) horizontal direction; (b) elevation direction.

Figure 2.

An example of the fast chirp ramp sequence waveform for range and velocity detection and a radar signal processing process.

Figure 3.

Angle error curves for angle-inversion measurements: (a) azimuth angle; (b) elevation angle.

Figure 4.

Raindrop measurement location and study region.

Figure 5.

Velocity distribution of radar-detected raindrops.

Figure 6.

Distance distribution of radar-detected raindrops.

Figure 7.

Rainfall scenario (gray curve: number of raindrop point clouds measured by the radar; red curve: algorithm output results, on a percentage scale).

Figure 8.

Sunny urban roadside scenario (gray curve: detected target point clouds measured by the radar; red curve: algorithm output results, on a percentage scale).

Figure 9.

Angular FFT profile of normal targets.

Figure 10.

Angular FFT profile of clutter in open areas.

Figure 11.

Test scenarios and point cloud distributions.

Figure 12.

Radar installation location and computer interface.

Figure 13.

Measurement results for two targets in front of the radar: (a) near target at 5 m; (b) far target at 100 m.

Figure 14.

Display of vehicles during road testing. Nearby vehicles are indicated by red, green, white, and purple boxes, and more distant vehicles are marked with yellow boxes. The gray areas represent the fences on both sides.

Figure 15.

Rendering of the pedestrian bridge in relation to road vehicles. The red box indicates two moving vehicles, and the yellow box marks the reflection point of the pedestrian bridge.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).