Submitted:

24 June 2025

Posted:

25 June 2025

Abstract

Keywords:

1. Introduction

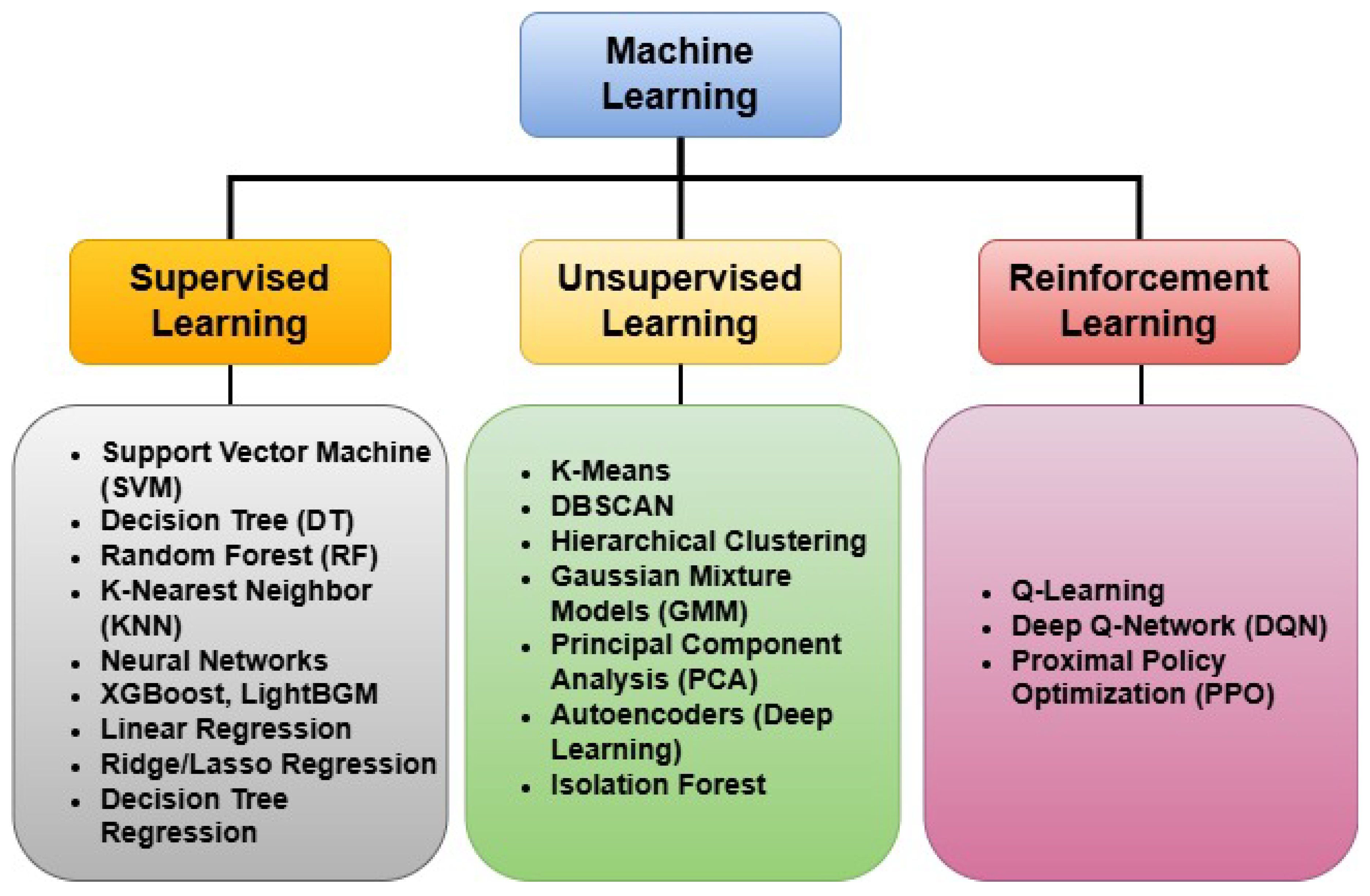

2. Machine Learning Algorithms

3. ML Application in Predictive Maintenance, Quality Control, and Process Optimization

- Predictive Maintenance (PdM)

- Quality Control (QC), and

- Process Optimization (PO)

3.1. Predictive Maintenance

| Sub-Area | Publication Year & Reference | Algorithm | Task, Methodology, & Outcome |

|---|---|---|---|

| Fault Prediction | 2023, [21] | Supervised Learning (LSTM, KNN, KG) | Task: Robot state prediction and PdM strategy generation. Methodology: LSTM for state detection, KNN for fault prediction, and KG for decision support. Outcome: Closed-loop PdM system for welding robots. |

| Fault Prediction | 2023, [22] | Supervised Learning (RF, GB, DL) | Task: Predict failures in a manufacturing plant. Methodology: ML models trained on factory equipment data. Outcome: Improved failure prediction, reduced downtime. |

| Fault Prediction | 2022, [23] | Supervised Learning (SVM, BNN, RF) | Task: Fault detection and classification in LV motors. Methodology: Two-phase ML approach (abnormal behavior detection + fault type prediction). Outcome: Reduced detection time, accurate fault diagnosis. |

| Fault Prediction | 2018, [24] | Supervised Learning (LSTM) | Task: Build a smart predictive maintenance system for early fault detection and technician support. Methodology: Used IoT sensors for data collection, LSTM/GRU for failure prediction, and AR tools (HoloLens/tablet) to guide maintenance actions. Outcome: Improved fault prediction and reduced downtime. AR support made maintenance faster and easier for operators. |

| Fault Prediction | 2018, [25] | Supervised Learning (BN) | Task: Develop a fault modeling and diagnosis system. Methodology: A Bayesian Network (BN) framework was used to represent causal relationships between process parameters and faults. A hybrid learning system was created to improve fault prediction and root cause analysis. Outcome: The system demonstrated improved fault modeling and interpretability. |

| Fault Prediction | 2018, [26] | Supervised Learning, Unsupervised Learning (RF) | Task: Develop a real-time fault detection and diagnosis system in smart factory environments. Methodology: Employed a big data pipeline integrating data acquisition, storage, preprocessing, and analytics. Used Principal Component Analysis (PCA) and k-Nearest Neighbors (k-NN) for dimensionality reduction and classification. Applied decision trees for fault reasoning. Outcome: Achieved over 90% accuracy in fault classification across multiple use cases. |

| Fault Prediction | 2017, [27] | Supervised Learning (ANN) | Task: Enable predictive maintenance in machine centers. Methodology: Proposed a five-step framework integrating sensors, AI, CPS, and ANN for fault diagnosis and prognosis. Demonstrated using machine data to predict backlash errors. Outcome: Successfully predicted faults weeks in advance, enabling proactive maintenance. |

| Fault Prediction | 2023, [28] | Supervised Learning (CF) | Task: Cooling system monitoring. Methodology: Open-source R-based DSS with data preprocessing and predictive models. Outcome: Cost-effective PdM for SMEs. |

| Condition Monitoring | 2021, [29] | Supervised Learning (ET) | Task: Develop scalable PdM framework. Methodology: Modular edge-cloud architecture with plug-and-play sensor integration and time-series ML. Outcome: Demonstrated early condition degradation in HPC components. |

| Condition Monitoring | 2019, [30] | Supervised Learning, Unsupervised Learning (PCA, DTree, RF, KNN, SVM) | Task: Predict tool wear in CNC end-milling operations using multi-sensor data. Methodology: Time and frequency domain features were extracted and fused. Machine learning models (Random Forest, SVM, MLP) were trained and validated. Outcome: Random Forest achieved the best performance. Sensor fusion enhanced prediction accuracy over individual sensors. |

| Condition Monitoring | 2018, [31] | Supervised Learning (LDA, Clustering) | Task: Improve fault diagnosis in Fused Deposition Modeling (FDM) using acoustic emission (AE) data to monitor extruder health. Methodology: Extracted time/frequency domain features were reduced via Linear Discriminant Analysis (LDA). Unsupervised clustering (CFSFDP) was used to identify states without prior labels. Outcome: Achieved 90.2% classification accuracy across five states using 2D feature space. CFSFDP outperformed other clustering methods in F1 score and accuracy. |

| Lifetime Prediction | 2023, [32] | Supervised Learning (RF, XGBoost, MLP, SVR) | Task: Remaining Useful Life estimation. Methodology: Comparative ML modeling with filtering, clustering, and feature engineering. Outcome: RF achieved best results; prevented 42% of failures. |

| Lifetime Prediction | 2021, [33] | Supervised Learning | Task: RUL prediction for robot reducer. Methodology: Use motor current signature analysis (MCSA) features in ML model. Outcome: Effective health state classification. |

| Cost Minimization | 2022, [34] | Supervised Learning | Task: Develop PdM for wiring firms. Methodology: Expert system using ML to reduce downtime. Outcome: Identifies AI as cost-effective alternative to PM. |

| Cost Minimization | 2019, [35] | Reinforcement Learning (PPO) | Task: Optimize maintenance timing in parallel production lines. Methodology: Used multi-agent PPO-based reinforcement learning in a simulated environment to decide maintenance based on machine state and buffer load. Outcome: Reduced breakdowns by 80%, improved throughput by up to 28%, and cut maintenance costs by 19%. |
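
The lifetime-prediction entries above estimate remaining useful life (RUL) from degradation data. As a minimal sketch of the idea, and not the method of any cited study, the code below fits a straight line to a health index and extrapolates it to a failure threshold. The linear degradation model, the function names, the sample readings, and the threshold of 0.5 are all assumptions made for illustration.

```python
def fit_line(times, values):
    """Ordinary least-squares fit of values = slope * time + intercept."""
    n = len(times)
    if n < 2 or n != len(values):
        raise ValueError("need at least two paired samples")
    mean_t = sum(times) / n
    mean_v = sum(values) / n
    var_t = sum((t - mean_t) ** 2 for t in times)
    if var_t == 0:
        raise ValueError("time stamps must not all be equal")
    cov = sum((t - mean_t) * (v - mean_v) for t, v in zip(times, values))
    slope = cov / var_t
    intercept = mean_v - slope * mean_t
    return slope, intercept


def estimate_rul(times, health_index, failure_threshold):
    """Extrapolate a linear degradation trend to the failure threshold.

    Returns the time remaining after the last observation, or None when
    the fitted trend is not decreasing (no degradation detected).
    """
    slope, intercept = fit_line(times, health_index)
    if slope >= 0:
        return None
    time_of_failure = (failure_threshold - intercept) / slope
    return max(0.0, time_of_failure - times[-1])


# Hypothetical readings: the health index drops 0.05 every 10 operating hours.
hours = [0, 10, 20, 30, 40]
health = [1.00, 0.95, 0.90, 0.85, 0.80]
rul_hours = estimate_rul(hours, health, failure_threshold=0.5)
```

Real degradation curves are rarely linear, which is one reason the surveyed studies turn to models such as RF, XGBoost, or LSTM; the sketch only shows where a learned trend and a failure threshold enter the estimate.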

3.2. Quality Control

| Sub-Area | Publication Year & Reference | Algorithm | Task, Methodology, & Outcome |

|---|---|---|---|

| Defect Detection | 2024, [41] | Supervised Learning, Unsupervised Learning (YOLOv5, OCR, CNN) | Task: Real-time defect detection in tuna cans. Methodology: Used YOLOv5 for can inspection, OCR for label detection, integrated with IoT stack (Node-RED, Grafana). Outcome: High-speed classification, automated sorting via robotic arm. |

| Defect Detection | 2023, [42] | Supervised Learning (LSTM, RF, NN) | Task: Predict hole locations in bumper beams to preempt quality issues. Methodology: Trained time-series models using previous beam measurements. Outcome: Improved early detection of tolerance violations, enhancing QC and reducing scrap. |

| Defect Detection | 2023, [43] | Supervised Learning, Unsupervised Learning (Custom CNN) | Task: Visual defect detection in casting. Methodology: Developed custom CNN and deployed on shop floor via user-friendly app. Outcome: Achieved 99.86% accuracy in image-based inspection for castings. |

| Defect Detection | 2022, [44] | Supervised Learning, Unsupervised Learning (CNN) | Task: Visual flaw detection with explainability. Methodology: Combined CNN for image analysis with ILP for rule-based reasoning, integrated human-in-the-loop feedback. Outcome: Created a system offering human-verifiable justifications. |

| Defect Detection | 2019, [45] | Supervised Learning (CNN) | Task: On-line defect recognition in Selective Laser Melting (SLM) during additive manufacturing. Methodology: Developed a bi-stream Deep Convolutional Neural Network (DCNN) to analyze layer-wise in-process images (powder layers and part slices) and detect defects caused by improper SLM parameters. Outcome: Achieved 99.4% defect classification accuracy; model outperformed traditional approaches; supports adaptive SLM process control and real-time quality assurance. |

| Defect Detection | 2018, [46] | Supervised Learning (DTree) | Task: Detect keyholing porosity and balling instabilities in Laser Powder Bed Fusion (LPBF). Methodology: Applied Scale-Invariant Feature Transform (SIFT) to extract melt pool features, encoded using Bag-of-Words representation, followed by classification with Support Vector Machine (SVM). Outcome: Enabled accurate identification of melt pool defects, supporting quality control in LPBF processes. |

| Image Recognition | 2019, [39] | Supervised Learning, Unsupervised Learning (SIFTS, SVM) | Task: Monitor and predict tool wear conditions in milling operations. Methodology: Tool condition classification was performed using a Support Vector Machine (SVM). A cloud dashboard was used for monitoring and visualization. Outcome: Enabled efficient and scalable monitoring of tool conditions, supporting timely maintenance decisions. |

| Image Recognition | 2018, [47] | Supervised Learning (SVM) | Task: Detect anomalies and failures in industrial manufacturing processes. Methodology: Employed an intelligent agent with a threshold-based decision algorithm and trained it using operational data. Outcome: Enabled proactive fault detection and efficient process management, reducing unexpected downtimes. |

| Image Recognition | 2018, [48] | Supervised Learning (CNN) | Task: Predict track width and continuity in Laser Powder Bed Fusion (LPBF) using video analysis. Methodology: Trained a CNN using supervised learning on 10 ms in situ video clips of the LPBF process. Outcome: Enabled accurate prediction of track features from video, supporting real-time quality monitoring. |

| Online Quality Control | 2023, [40] | Supervised Learning (WDCNN, FTRL) | Task: Real-time quality assessment of cars and bearings. Methodology: Applied online learning (incremental updates) with identity parsing on streaming data using river in Python. Outcome: Achieved real-time classification with stable accuracy. |

| Online Quality Control | 2021, [38] | Supervised Learning, Unsupervised Learning (CNN) | Task: Detect sealing and closure defects in food trays inline. Methodology: Built a modular CV system using CNNs trained on domain-specific image datasets. Outcome: Achieved near 100% defect detection rate inline, with <0.3 |

| Online Quality Control | 2019, [49] | Supervised Learning (SVM) | Task: Enable cost-efficient real-time QC in automotive manufacturing. Methodology: Applied an SVM considering inspection costs and error types; performance assessed via Design of Experiment. Outcome: Effective QC with improved cost-sensitivity and error handling. |

| Online Quality Control | 2019, [50] | Supervised Learning, Unsupervised Learning (SDAE) | Task: Perform robust pattern recognition from noisy signals. Methodology: Used SDAE for unsupervised feature extraction and supervised regression fine-tuning. Outcome: Improved generalization and feature robustness for classification tasks. |
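
Entry [40] above relies on online learning, where the model is updated incrementally as each sample arrives from the stream. The following is a minimal standalone sketch of that idea using logistic regression trained by stochastic gradient descent. It does not use the river library mentioned in the table, and the class, its method names, the learning rate, and the synthetic stream are assumptions made for this example.

```python
import math


class OnlineLogisticRegression:
    """Logistic regression updated one sample at a time with SGD."""

    def __init__(self, n_features, learning_rate=0.5):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict_proba_one(self, x):
        z = self.bias + sum(w * xi for w, xi in zip(self.weights, x))
        z = max(-35.0, min(35.0, z))  # clip to keep exp() well-behaved
        return 1.0 / (1.0 + math.exp(-z))

    def predict_one(self, x):
        return 1 if self.predict_proba_one(x) >= 0.5 else 0

    def learn_one(self, x, y):
        # Gradient of the log loss for one sample is (p - y) * x.
        error = self.predict_proba_one(x) - y
        self.weights = [
            w - self.learning_rate * error * xi
            for w, xi in zip(self.weights, x)
        ]
        self.bias -= self.learning_rate * error


def make_stream(n_cycles=20):
    """Hypothetical stream: one centered feature, label 1 when it is positive."""
    values = [-0.9, -0.7, -0.5, -0.3, -0.1, 0.1, 0.3, 0.5, 0.7, 0.9]
    for _ in range(n_cycles):
        for v in values:
            yield [v], 1 if v > 0 else 0


model = OnlineLogisticRegression(n_features=1)
correct = total = 0
for x, y in make_stream():
    # Test-then-train: score the prediction before the label is used.
    correct += int(model.predict_one(x) == y)
    model.learn_one(x, y)
    total += 1
prequential_accuracy = correct / total
```

Scoring each sample before learning from it gives a running accuracy without a separate hold-out set, which suits streaming inspection data where labels arrive one part at a time.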

3.3. Process Optimization

| Sub-Area | Publication Year & Reference | Algorithm | Task, Methodology, & Outcome |

|---|---|---|---|

| Performance Prediction | 2024, [51] | Supervised Learning, Unsupervised Learning (MPC) | Task: Optimize process chains via decentralized learning. Methodology: Uses a quasi-neural network model with gradient-based continual learning across distributed nodes. Outcome: Enables continual optimization without compromising data sovereignty. |

| Performance Prediction | 2022, [56] | Supervised Learning (Bayesian Optimization with Probabilistic Constraints) | Task: Improve solar cell efficiency using data-efficient optimization. Methodology: BO with human-in-the-loop feedback and prior knowledge constraints. Outcome: Achieved 18.5% PCE with only 100 tests, faster than conventional methods. |

| Performance Prediction | 2019, [53] | Supervised Learning (ANN, GA, RBF, BPNN, ANFIS, SVR) | Task: Optimize and model desalination and treatment processes. Methodology: Benchmarked ANN/GA vs classical models for ion rejection, flux prediction, pollutant removal, etc. Outcome: ANN-based tools achieved superior prediction accuracy and process adaptability. |

| Performance Prediction | 2019, [57] | Supervised Learning (NN) | Task: Predict temperature and density evolution from laser trajectories. Methodology: Used three neural networks with a localized trajectory decomposition technique. Outcome: Enabled spatially-aware predictions for process monitoring. |

| Performance Prediction | 2018, [58] | Supervised Learning (CNN) | Task: Identify geometries that are hard to manufacture. Methodology: Applied a 3D CNN with a secondary interpretability method to analyze feature contribution. Outcome: Accurately predicted and explained manufacturability issues. |

| Performance Prediction | 2018, [59] | Supervised Learning (RF, SVM) | Task: Predict lead time in variable-demand flow shops. Methodology: Employed a Twin model with frequent retraining and online learning. Outcome: Achieved adaptive and accurate lead time forecasts. |

| Process Control | 2025, [60] | Supervised Learning (RSM-GA, ANN-GA, ANFIS-GA) | Task: Maximize tensile, flexural, and compressive strengths in FDM parts. Methodology: Used hybrid optimization combining RSM and AI methods on experimental design. Outcome: Hybrid models improved strength by up to 8.86% across mechanical tests. |

| Process Control | 2024, [61] | Reinforcement Learning, Supervised Learning (TD3, PPO) | Task: Develop autonomous process control in injection molding. Methodology: Combines supervised learning + DRL in a digital twin framework. Outcome: Real-time optimization with reduced human involvement and improved quality/cost-efficiency balance. |

| Process Control | 2022, [54] | Supervised Learning (ANN) | Task: Optimize AFP process to reduce defects and improve ILSS. Methodology: Combined ANN with photonic sensors, VSG, and FEA simulations. Outcome: Developed a decision-support tool to automate parameter tuning and defect minimization. |

| Process Control | 2020, [62] | Reinforcement Learning (QLrn) | Task: Optimize control in nonlinear, uncertain manufacturing processes. Methodology: Applied Q-learning for independent decision-making under partial observability. Outcome: Achieved adaptive control despite randomness and incomplete information. |

| Process Control | 2019, [63] | Supervised Learning (SVM) | Task: Improve grinding parameters for helical flutes. Methodology: Combined simulation, SVM prediction, and simulated annealing to optimize feed rate and grinder speed. Outcome: Enhanced surface quality and process efficiency. |

| Scheduling | 2019, [64] | Reinforcement Learning (QLrn) | Task: Minimize makespan in robotic assembly lines. Methodology: Used multi-agent reinforcement learning for dynamic planning and task scheduling. Outcome: Improved scheduling efficiency in multi-robot systems. |

| Scheduling | 2018, [65] | Supervised Learning (Bagging, Boosting) | Task: Optimize job shop scheduling via dispatching rule selection. Methodology: Evaluated bagging, boosting, and stacking for rule selection. Outcome: Reduced mean tardiness and flow time. |
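
Entries [62] and [64] above apply Q-learning to control and scheduling. As a minimal sketch of the update rule only, the code below runs tabular Q-learning on a five-state chain in which moving right eventually reaches a rewarded goal state. The toy environment, the hyperparameters, and the function names are assumptions for illustration; unlike the setting in [62], the toy problem is fully observable and deterministic.

```python
import random

N_STATES = 5          # states 0..4; state 4 is the terminal goal
LEFT, RIGHT = 0, 1


def step(state, action):
    """Deterministic chain: LEFT moves toward state 0, RIGHT toward the goal."""
    next_state = max(0, state - 1) if action == LEFT else state + 1
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def choose_action(q_row, epsilon, rng):
    """Epsilon-greedy selection with random tie-breaking."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_row))
    best = max(q_row)
    return rng.choice([a for a, q in enumerate(q_row) if q == best])


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.3, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            action = choose_action(q[state], epsilon, rng)
            next_state, reward, done = step(state, action)
            # Q-learning target: bootstrap from the best next action.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


def greedy_policy(q):
    """Best action for every non-terminal state."""
    return [max((LEFT, RIGHT), key=lambda a: row[a]) for row in q[:-1]]


q_table = train()
policy = greedy_policy(q_table)
```

In a scheduling or process-control setting the states would encode machine and buffer conditions and the actions would be dispatching or parameter choices, but the update line stays the same.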

4. Machine Learning-Driven Digital Twins and Edge AI for Industrial Automation

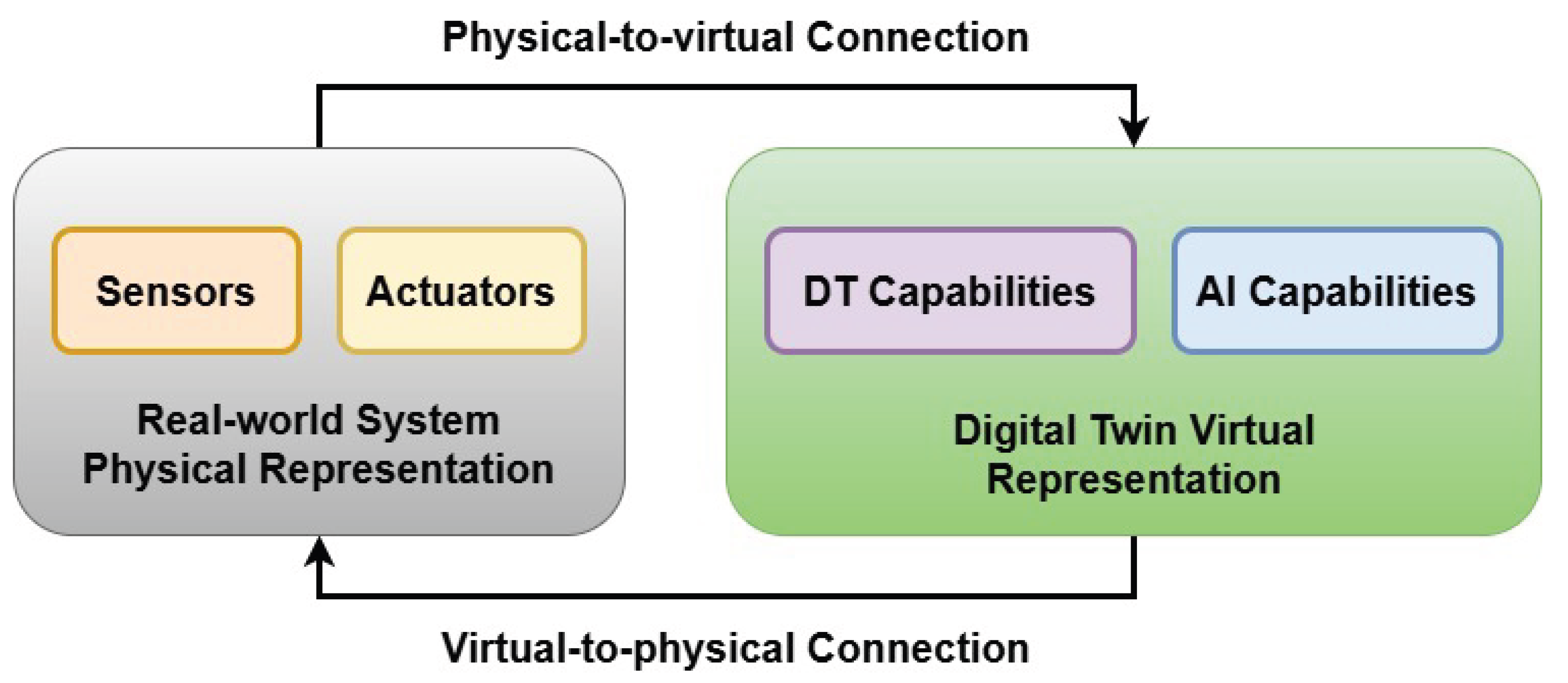

4.1. Digital Twin

4.1.1. AI-Driven Digital Twin Applications for PdM, QC, and PO

| Area | Sub-Area | Publication Year & Reference | Algorithm | Task, Methodology, & Outcome |

|---|---|---|---|---|

| Predictive Maintenance | Fault Prediction | 2024, [82] | Supervised Learning (LSTM, CNN) | Task: Predict early failure of SiC/GaN semiconductors. Methodology: Built a DT for thermal monitoring and used ML for degradation prediction. Outcome: Enabled early detection and extended device lifespan. |

| Predictive Maintenance | Fault Prediction | 2022, [83] | Supervised Learning (BPNN) | Task: Improve fault prediction and diagnosis for large-diameter auger rigs in coal mining. Methodology: Developed a digital twin model with geometric, physical, and behavioral layers using Unity3D and ANSYS. Trained a BP neural network on fault data (4 fault types) with expert-assisted feedback correction. Outcome: Model showed strong performance in identifying drill pipe bend/fracture, bearing fault, and overpressure events. |

| Predictive Maintenance | Fault Prediction | 2021, [84] | Supervised Learning, Unsupervised Learning (IRF, HC, TL) | Task: Improve fault detection and classification on intelligent production lines. Methodology: Proposed IRF by filtering RF trees via hierarchical clustering (high accuracy + diversity), then applied transfer learning to fine-tune with physical data. Outcome: Achieved 97.8% accuracy (vs. 88.7% for RF); outperformed KNN, ANN, LSTM, SVM; effective in diagnosing conveyor, tightening, and alignment faults with low-latency online analysis. |

| Predictive Maintenance | Fault Prediction | 2021, [85] | Supervised Learning | Task: Predict surface defects in HPDC castings. Methodology: Converted HPDC process images into pixel-based tabular data; applied SVD and edge detection for dimensionality reduction. Trained a Random Forest classifier and mapped tree paths into distributed CEP engine rules for real-time inference. Outcome: RF + SVD + edge detection achieved 99.99% accuracy; crack location precisely identified in test images. CEP model enabled lightweight, distributed, low-latency defect prediction without large-scale computation. |

| Predictive Maintenance | Fault Prediction | 2020, [86] | Supervised Learning (NN, RF, RR) | Task: Predict generator oil temperature and detect early anomalies to prevent aircraft No-Go events. Methodology: Segmented time-series data from 606 anomaly-free flights and applied Fourier/Haar basis expansion. NN chosen for best generalization. Anomalies detected by monitoring divergence from reference MSE over consecutive flights. Outcome: Detected failures 5 to 9 flights before actual events; NN-Fourier DT achieved MSE 0.10 and showed good anomaly sensitivity with minimal false positives. |

| Predictive Maintenance | Fault Prediction | 2019, [87] | Supervised Learning, Unsupervised Learning (DNN) | Task: Perform real-time fault diagnosis under data-scarce and distribution-shifting conditions in smart manufacturing. Methodology: Proposed DFDD (Digital-Twin Fault Diagnosis using DTL), trained DNN model in digital space using SSAE + Softmax, then used DTL with MMD to adapt model to physical space. Integrated Process Visibility System (PVS) retrieved shop-floor operation data without extra sensors. Outcome: DFDD achieved 97.96% accuracy, outperforming DNN trained only on virtual (67.59%) or physical (91.54%) data. Robust against imbalanced and distribution-shifted test sets. |

| Predictive Maintenance | Lifetime Prediction | 2022, [88] | Supervised Learning (LASSO, SVR, XGBoost) | Task: Achieve full-lifecycle monitoring and predictive maintenance for locomotives. Methodology: Proposed a 3-layer ML-integrated DT architecture. Applied ML to a Digital Twin in Maintenance (DTMT) for health monitoring and fault prediction using bearing temperature data. Developed a series combination model (LASSO + SVR + XGBoost) to forecast axle temperature trends. Outcome: Detected locomotive bearing faults 1 week in advance. Enabled proactive fault alerts and lifecycle optimization. |

| Predictive Maintenance | Lifetime Prediction | 2021, [89] | Supervised Learning, Unsupervised Learning (LSTM) | Task: Enhance predictive maintenance of aero-engines through data-driven digital twin modeling. Methodology: Developed an implicit digital twin (IDT) using sensor data and historical operation data, integrated with LSTM for RUL prediction. Applied S-G filter for denoising. Defined Health Index (HI) to evaluate degradation and predict RUL using IDT-LSTM. Outcome: Achieved RMSE of 13.12 for RUL prediction, outperforming other methods; optimal performance at 80% training data. |

| Predictive Maintenance | Health Monitoring | 2024, [82] | Supervised Learning (DNN) | Task: Monitor WBG semiconductor health using a digital twin. Methodology: Combined thermal-electrical simulation and AI models to predict degradation. Outcome: Enabled accurate lifetime estimation and failure prediction using hybrid DT-AI approach. |

| Quality Control | Defect Detection | 2022, [90] | Supervised Learning, Unsupervised Learning (SVR, GPR) | Task: Identify bearing crack type and size under variable speed. Methodology: Modeled AE signals using autoregression, SVR, and GPR combined with Laguerre filters. Estimated unknown signals using a strict-feedback backstepping DT with fuzzy logic. Generated residuals and used RMS features for classification via SVM. Outcome: Achieved 97.13% accuracy in crack type diagnosis and 96.9% in crack size classification across eight bearing conditions and multiple speeds. |

| Quality Control | Defect Detection | 2022, [91] | Supervised Learning, Unsupervised Learning (LR, K-means Clustering) | Task: Detect anomalies in a pasteurization system at a food plant using ML-enhanced Digital Twin. Methodology: Built a LabVIEW-Python based DT of a pilot pasteurizer using real-time pressure and flow data. Trained 3 ML models: linear regressor (P1 prediction), MLP classifier (machine status: ok, warning, failure), and K-means (unsupervised status clustering). Outcome: MLP reached 96–99% accuracy across fluids; K-means accurately clustered operational states. DT enabled remote monitoring and decision support. |

| Quality Control | Image Recognition | 2022, [92] | Supervised Learning, Unsupervised Learning (CNN) | Task: Monitor and classify the quality of banana fruit. Methodology: Developed a Digital Twin system using thermal images (FLIR One camera) labeled into four classes. Trained a CNN with SAP Intelligent Technologies and used cloud-edge architecture for real-time data collection and alerts. Outcome: Enabled real-time classification and inventory decision-making. |

| Quality Control | Image Recognition | 2020, [93] | Supervised Learning (Inception-v3 CNN with Transfer Learning) | Task: Classify orientation ("up" or "down") of 3D-printed parts in robotic pick-and-place system. Methodology: Synthetic images generated using DT simulations in Blender. Labeled with Python script. Inception-v3 CNN retrained using TensorFlow. Outcome: Achieved 100% accuracy on real-world images; validated DT-generated data for robust model training. |

| Quality Control | Image Recognition | 2020, [94] | Supervised Learning, Unsupervised Learning (CNN) | Task: Monitor and control weld joint growth and penetration. Methodology: Built DT using weld images processed by CNN for BSBW and image processing for TSBW. Unity GUI for visualization. System adaptively adjusted welding time to meet penetration specs. Outcome: Real-time monitoring via visualization. |

| Quality Control | Image Recognition | 2020, [95] | Supervised Learning, Unsupervised Learning (MobileNet, UNet, Transfer Learning) | Task: Enable low-cost, high-precision plant disease/nutrient deficiency detection. Methodology: LoRaWAN WSN collected sensor data; used MobileNet and UNet on PlantVillage dataset. Simulated WSN in OMNeT++ and FLoRa; image downsampling for efficiency. Outcome: 95.67% validation accuracy; enabled rural deployment via energy-efficient LoRa-based WSN. |

| Quality Control | Online Quality Control | 2022, [96] | Supervised Learning, Unsupervised Learning (PointNet) | Task: Real-time object detection and pose estimation in robotic DT system. Methodology: Built DT with ROS and Unity for ABB IRB 120. Used LineMod and PointNet for object recognition/pose estimation. Collected data with Blensor and RealSense D435i. Outcome: 100% classification accuracy, 3° pose error; real-time DT sync with <0.1 ms delay. |

| Quality Control | Online Quality Control | 2022, [97] | Supervised Learning, Unsupervised Learning (YOLOv4-M2, OpenPose) | Task: Improve small object detection in complex smart manufacturing. Methodology: Designed a hybrid model using MobileNetv2+YOLOv4 for object detection and OpenPose for long-range human posture detection. Outcome: Achieved 91.8% accuracy, 78.2% mAP at 8–10 m. |

| Quality Control | Online Quality Control | 2021, [98] | Supervised Learning (FFT, PCA, SVM) | Task: Enhance welder training and performance using VR-based DT. Methodology: Captured motion via VR, transmitted to UR5 robot. Used FFT-PCA-SVM to classify welding skill. Outcome: 94.44% classification accuracy; enabled immersive feedback and performance monitoring. |

| Process Optimization | Performance Prediction | 2023, [99] | Supervised Learning (ANN, k-NN, Symbolic Regression) | Task: Predict and optimize workstation productivity using DT. Methodology: Combined PPC and ML to forecast throughput from failure/downtime data. Outcome: Symbolic Regression: =0.96 (train); ANN: =0.95 (test); enabled adaptive PPC decisions. |

| Process Optimization | Performance Prediction | 2022, [100] | Supervised Learning, Unsupervised Learning (CNN, Spatio-Temporal GCN) | Task: Predict road behavior and secure data transfer in autonomous cars. Methodology: Combined CNN and DT with spatio-temporal GCN and load balancing. Outcome: 92.7% prediction accuracy, 80% delivery rate, low delay and leakage. |

| Process Optimization | Performance Prediction | 2022, [101] | Reinforcement Learning (BCDDPG, LSTM) | Task: Enable robust and energy-efficient flocking of UAV swarms. Methodology: Developed DT-enabled framework using BCDDPG and LSTM for dynamic feature learning. Trained in simulation and deployed to UAVs. Outcome: Outperformed baselines in 8 metrics including arrival rate >80% and energy efficiency. |

| Process Optimization | Task Modelling | 2022, [102] | Reinforcement Learning (DDQN) | Task: Minimize energy in UAV-based Mobile Edge Computing. Methodology: DT-based offloading with DDQN, closed-form power solutions, and iterative CPU allocation. Outcome: Reduced energy and delay vs. baselines; scalable under dynamic loads. |

| Process Optimization | Process Control | 2024, [103] | Supervised Learning (CNN, YOLOv3) | Task: Object detection in factories. Methodology: Trained YOLOv3 on synthetic data from factory DT. Outcome: Enabled robust object recognition without real datasets. |

| Process Optimization | Process Control | 2022, [104] | Supervised Learning, Unsupervised Learning (VGG-16) | Task: Enable intuitive robot programming. Methodology: DT system with Hololens MR, Unity simulation, and CNN for object pose estimation. Outcome: Real-time gesture control with ±1–2 cm error. |

| Process Optimization | Process Control | 2022, [105] | Reinforcement Learning (PDQN, DQN) | Task: Optimize smart conveyor control. Methodology: Built DT-ACS and introduced PDQN to improve control performance. Outcome: Faster convergence, better robustness, reduced cost under dynamic loads. |

| Process Optimization | Process Control | 2021, [106] | Supervised Learning, Unsupervised Learning (K-Means, KNN) | Task: Improve monitoring and prediction in chemical plants. Methodology: Preprocessed data (IQR, normalization), clustered via K-Means, and built KNN models. Deployed model to cloud with WebSocket interface. Outcome: 16.6% data reduction, 99.74% classification accuracy, R² = 0.96 for regression. |

| Process Optimization | Process Control | 2019, [107] | Supervised Learning (LightGBM, XGBoost, RF, AdaBoost, CART) | Task: Optimize yield in catalytic cracking units. Methodology: 5-step DT framework using IoT + ML; trained 4 models with ensemble methods; online deployment with MES. Outcome: Real-world deployment increased light oil yield by 0.5%. |

| Process Optimization | Scheduling | 2022, [108] | Reinforcement Learning (Parallel RL, Q-Learning, SARSA, DNN) | Task: Improve shipyard scheduling and QoS management. Methodology: Built 3-layer DTN; trained DNN for latency prediction; tested RL variants. Outcome: Parallel RL had best performance; DT enabled real-time decisions and resource efficiency. |

| Process Optimization | Scheduling | 2021, [109] | Supervised Learning (ANN) | Task: Enhance planning in fast fashion lines. Methodology: DT system with ANN for demand forecast, DES for simulating operations, and dashboard visualization. Outcome: Lead time reduced by 28%, operator use up 37%, staffing optimized. |

| Process Optimization | Scheduling | 2020, [110] | Reinforcement Learning | Task: Optimize scheduling in manual assembly. Methodology: Built Python-based adaptive simulation using FPY/HPU data, RL for recommendation refinement. Outcome: Identified bottlenecks and improved efficiency; RL adapted dynamically. |
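
Entry [86] above detects anomalies by monitoring how far the prediction error of the digital twin diverges from a reference MSE over consecutive flights. The sketch below illustrates one simple way such a rule could be written. The threshold ratio, the number of consecutive exceedances, the function names, and the sample error values are assumptions for this example and are not taken from the cited study.

```python
def mse(predicted, observed):
    """Mean squared error between twin predictions and sensor readings."""
    if not predicted or len(predicted) != len(observed):
        raise ValueError("sequences must be non-empty and of equal length")
    return sum((p - o) ** 2 for p, o in zip(predicted, observed)) / len(predicted)


def first_alarm(flight_mses, reference_mse, ratio=2.0, consecutive=3):
    """Index of the flight at which the alarm fires, or None.

    The alarm fires once the per-flight MSE has exceeded
    ratio * reference_mse for `consecutive` flights in a row.
    A single noisy flight resets nothing permanently; it only
    fails to extend the current streak.
    """
    streak = 0
    for index, value in enumerate(flight_mses):
        streak = streak + 1 if value > ratio * reference_mse else 0
        if streak >= consecutive:
            return index
    return None


# Hypothetical per-flight errors: one isolated spike, then a sustained drift.
per_flight_mse = [0.09, 0.11, 0.25, 0.10, 0.22, 0.27, 0.31, 0.40]
alarm_index = first_alarm(per_flight_mse, reference_mse=0.10)
```

Requiring several consecutive exceedances trades a short detection delay for fewer false alarms from single noisy flights, which matches the table's emphasis on anomaly sensitivity with minimal false positives.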

4.2. Edge AI

4.2.1. Edge AI in PdM, QC, and PO

| Area | Sub-Area | Publication Year & Reference | Algorithm | Task, Methodology, & Outcome |

|---|---|---|---|---|

| Predictive Maintenance | Fault Prediction | 2024, [116] | Supervised Learning (SVM, RF, KNN, CNN, LightGBM) | Task: Detect tool wear in milling. Methodology: Developed an Edge AI system running 5 SL models on low-cost hardware. Outcome: CNN outperformed others in wear classification, enabling efficient on-device inference. |

| Predictive Maintenance | Fault Prediction | 2020, [125] | Supervised Learning, Unsupervised Learning (GBRBM, DNN) | Task: Accurately detect faults in IIoT manufacturing facilities using edge AI with minimal latency. Methodology: Transforms fault detection into a classification task using a multi-block GBRBM (Gaussian-Bernoulli Restricted Boltzmann Machine) for feature extraction and deep autoencoder for training. The architecture enables low-latency classification directly at the edge. Outcome: Achieved 88.39% accuracy; significantly outperformed SVM, LDA, LR, QDA, and FNN baselines. |

| Predictive Maintenance | Fault Prediction | 2020, [126] | Supervised Learning, Unsupervised Learning (1D-CNN) | Task: Accurately detect gear and bearing faults in gearboxes under multiple operating conditions using deep learning on edge equipment. Methodology: Proposed a multi-task 1D-CNN model trained with shared and task-specific layers. Model deployed on edge devices for low-latency real-time diagnosis. Outcome: Achieved 95.76% joint accuracy; after applying triplet loss, test accuracy reached 90.13 |

| Predictive Maintenance | Anomaly Detection | 2020, [127] | Supervised Learning, Unsupervised Learning (CNN-VAE, SCVAE) | Task: Perform unsupervised anomaly detection on time-series manufacturing sensor data. Methodology: Proposes SCVAE (compressed CNN-VAE using Fire Modules) trained on labeled UCI datasets and unlabeled CNC machine data. Compares SCVAE with other anomaly detection methods. Outcome: SCVAE achieved high anomaly detection accuracy while reducing model size and inference time significantly, making it suitable for edge deployment. |

| Quality Control | Defect Detection | 2020, [128] | Supervised Learning, Unsupervised Learning (R-CNN, ResNet101) | Task: Detect surface defects on complex-shaped manufactured parts (turbo blades). Methodology: Faster R-CNN is deployed at edge nodes for low-latency detection, while cloud servers support training and updates. The smart system integrates cloud-edge collaboration for continuous model evolution. Outcome: Achieved 81% precision and 72% recall on test set; edge computing improved speed over cloud or embedded-only setups. |

| Quality Control | Defect Detection | 2021, [129] | Supervised Learning, Unsupervised Learning (CNN) | Task: Automate visual defect detection in injection-molded tampon applicators using deep learning and edge computing. Methodology: A CNN model processes grayscale images acquired from vision sensors mounted on rotating rails. The system performs real-time defect classification on edge boxes connected to PLCs for automated sorting. Outcome: Achieved 92.62% accuracy and 0.839 MCC with fast inference, validating industrial applicability. |

| Quality Control | Defect Detection | 2020, [130] | Unsupervised Learning (K-means Clustering) | Task: Develop a real-time, low-latency fabric defect detection system. Methodology: Modified DenseNet is optimized with a custom loss function, data augmentation (6 strategies), and pruning for edge deployment. Trained and deployed on Cambricon 1H8 edge device with factory data. Outcome: Achieved 18% AUC gain, 50% reduction in data transmission, and 32% lower latency vs cloud, validating robust, real-time performance for 11 defect classes. |

| Quality Control | Image Recognition | 2023, [131] | Supervised Learning, Unsupervised Learning (TADS) | Task: Optimize execution time of DNN-based quality inspection tasks in smart manufacturing. Methodology: Proposes TADS (Task-Aware DNN Splitting), a scheme that selects optimal DNN layer split points based on task number, type (concurrent/periodic), inter-arrival time, and bandwidth. Outcome: Achieved up to 97% task time reduction vs baseline schemes; validated through both simulations and real-world deployment. |

| Quality Control | Image Recognition | 2021, [132] | Supervised Learning (MobileNetV1, ResNet) | Task: Improve operator safety and operational tracking in a shipyard workshop. Methodology: A mist computing architecture using smart IIoT cameras performs real-time human detection and machinery tracking locally without uploading image data to the cloud. Outcome: Demonstrated extremely low yearly energy consumption (0.35–0.36 kWh/device) and scalable carbon footprint analysis across regions using different energy sources. |

| Quality Control | Image Recognition | 2020, [133] | Supervised Learning (SVM) | Task: Automate detection of edge and surface defects in logistics packaging boxes. Methodology: Images are preprocessed with grayscale, denoising, and morphological operations. Features are extracted using SIFT and classified using SVM (RBF kernel). Outcome: Achieved 91% accuracy in classifying edge and surface defects, outperforming CNN in both accuracy and speed under edge computing conditions. |

| Quality Control | Online Quality Control | 2020, [134] | Supervised Learning (GBT, SVM, DT, NB, LR) | Task: Replace traditional X-ray inspections in PCB manufacturing. Methodology: Historical SPI data were used to train supervised models (GBT selected). Prediction occurs at the solder-joint level; the deployment strategy filters X-ray usage based on predicted FOV defect status. Outcome: Reduced X-ray inspection volume by 29% on average without sacrificing defect detection accuracy. |

| Process Optimization | Process Control | 2020, [135] | Supervised Learning, Unsupervised Learning (ResNet34, RFBNet, Key Point Regression) | Task: Estimate and calibrate the 3D pose of robotic arms with five key points (base, shoulder, elbow, wrist, end). Methodology: Two-stage pipeline: robot arm detection with RFBNet, followed by key point regression using a lightweight CNN (ResNet34 backbone). Trained on RGB-D data from Webots simulator, deployed on NVIDIA Jetson AGX. Outcome: Achieved 1.28 cm joint error, 0.70 cm base error; 14 FPS on edge device with low GPU memory. |

| Process Optimization | Scheduling | 2020, [136] | Supervised Learning, Unsupervised Learning (LSTM, FCM clustering) | Task: Detect anomalies in discrete manufacturing processes and perform energy-aware production rescheduling. Methodology: Energy data is collected from CNC tools and preprocessed (cleaning, clustering by FCM). An LSTM model predicts tool wear and machine degradation. If an anomaly occurs, an edge-triggered rescheduling mechanism (RSR/TR) is initiated. Outcome: 3.5% detection error; energy and production efficiency improved by 21.3% and 13.7% respectively. |
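
Several outcomes in the table above are reported as precision, recall, accuracy, or the Matthews correlation coefficient (MCC). As a reading aid, the following minimal sketch shows how these metrics follow from a binary confusion matrix. The function name `binary_metrics` and the example counts are hypothetical and chosen only for illustration; they are not taken from any of the cited studies.

```python
import math


def binary_metrics(tp, fp, fn, tn):
    """Compute common binary-classification metrics from confusion-matrix counts.

    tp/fp/fn/tn are the numbers of true positives, false positives,
    false negatives, and true negatives (here, "positive" = defective).
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    # MCC uses all four cells, so it stays informative on imbalanced data.
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {
        "precision": precision,
        "recall": recall,
        "accuracy": accuracy,
        "mcc": mcc,
    }


# Hypothetical counts for illustration only (not from any cited paper).
example = binary_metrics(tp=40, fp=10, fn=10, tn=40)
print(example)
```

Because MCC accounts for true negatives as well as true positives, it can differ noticeably from accuracy when defective parts are rare, which is the usual situation in production inspection data.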

5. Dataset, Data Acquisition Tools, and Industrial Platforms

5.1. Dataset

| Area | Reference | Dataset Used | Devices Used | Input Variables | Output Variables | Number of Samples |

|---|---|---|---|---|---|---|

| Predictive Maintenance | [88] | Real-world axle temperature data from CDD5B1 locomotives | Onboard sensors | Axle temperature, ambient temp, GPS speed, generator temp | Predicted axle temp, residual error, failure alert | 0,000 |

| Predictive Maintenance | [113] | Custom dataset (6 sensors, 6 units) | 4 low-power embedded edge devices | Accelerometer, gyro, magnetometer, mic | Aging classification | 939 |

| Predictive Maintenance | [126] | Custom DDS vibration data (gear & bearing) | Edge-ready hardware (lightweight CNNs), DDS simulator, 1D sensors, FFT preprocessor | Time-series vibration signals (gear, bearing) | Fault category of gear and bearing (multi-label output) | 192,000 |

| Predictive Maintenance | [145] | Time-series current signals from solar panel systems | TIDA-010955 AFE board with C2000 control card, current transformers | ADC samples, FFT features | Binary classification: Arc (1) or Normal (0) | Not specified |

| Predictive Maintenance | [146] | Vibration data (3-axis), collected from motors under various fault conditions | Vibration sensors, motor controller, dual GaN inverters, and EMJ04-APB22 PMSM motors | Time-series vibration data, FFT or raw signals | Fault types (e.g., normal, flaking, erosion, localized damage) | Not specified |

| Quality Control | [129] | Real factory image dataset from SMEs | GigE Vision Cameras, Edge Box (NVIDIA GTX 1080 Ti), PLC, rotating rail | Grayscale product images (300×300 px) | Binary defect classification (OK/Defective) | 3428 |

| Quality Control | [130] | Alibaba Tianchi fabric dataset (real industrial images) | Intelligent edge camera (Cambricon 1H8), ARM Cortex A7 | High-res fabric images | Defect classification | 2022 |

| Quality Control | [133] | Custom dataset from logistics warehouse | TXG12 industrial camera, LED lights, conveyor with PLC | Grayscale carton images (500×653 px) | Three-class classification (OK, Edge Defect, Surface Defect) | 3000 |

| Quality Control | [147] | Custom image dataset (12 defect categories) | Sensors, fog nodes, cameras | Image features from product sensors | Binary/Multiclass defect classification | 2400 |

| Process Optimization | [4] | Custom manufacturing images | NVIDIA Jetson Nano | Product images, object categories | Defect detection, inventory state | Not specified |

| Process Optimization | [106] | 64,789 records of process data | IoT devices | Process temps, fan pressure/speed, raw material consumption | Operating mode, fault diagnosis, predicted material consumption | 61,753 |

| Process Optimization | [136] | Milling shop energy logs | Electric meters, edge server, PLCs, CNC lathes, milling machines | Energy consumption metrics | Anomaly class (normal, tool wear, degradation), reschedule strategy | 1,000 |

| Process Optimization | [148] | Real CNC motion data | Fagor 8070 CNC controller | Control loop parameters, speed, load torque, backlash, friction factors | Position error, control effort, peak error | Not specified |
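
Several datasets in the table above list FFT features derived from time-series vibration or current signals as input variables. The sketch below illustrates, under simplifying assumptions, how a raw signal might be cut into fixed-size windows and converted into frequency-magnitude features. It uses a plain discrete Fourier transform from the standard library for clarity; the function names, window size, and synthetic test tone are hypothetical and do not reproduce the preprocessing of any cited study.

```python
import cmath
import math


def sliding_windows(signal, size, step):
    """Split a 1-D signal into fixed-size windows; a trailing partial window is dropped."""
    return [signal[i:i + size] for i in range(0, len(signal) - size + 1, step)]


def dft_magnitudes(window):
    """Return normalized DFT magnitudes for bins 0..N/2 of one real-valued window."""
    n = len(window)
    magnitudes = []
    for k in range(n // 2 + 1):
        acc = 0j
        for t, x in enumerate(window):
            acc += x * cmath.exp(-2j * math.pi * k * t / n)
        magnitudes.append(abs(acc) / n)
    return magnitudes


# Synthetic, hypothetical signal: a pure tone that completes 5 cycles per window.
SAMPLE_COUNT = 64
tone = [math.sin(2 * math.pi * 5 * t / SAMPLE_COUNT) for t in range(SAMPLE_COUNT)]

features = dft_magnitudes(tone)
dominant_bin = max(range(len(features)), key=features.__getitem__)
print(dominant_bin)

# Windowing a longer record before feature extraction.
windows = sliding_windows(list(range(10)), size=4, step=2)
print(len(windows))
```

In practice an optimized FFT routine would replace the quadratic-time loop shown here; the per-window magnitude vector is what a downstream fault classifier would consume.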

5.2. Industrial Platforms and Software

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| AI | Artificial Intelligence |

| ANFIS | Adaptive Neuro-Fuzzy Inference System |

| ANFIS-GA | ANFIS with Genetic Algorithm |

| ANN | Artificial Neural Network |

| ANN-GA | Artificial Neural Network with Genetic Algorithm |

| BCDDPG | Behavior-Coupling Deep Deterministic Policy Gradient |

| BN | Bayesian Network |

| BNN | Bayesian Neural Network |

| BPNN | Backpropagation Neural Network |

| CART | Classification and Regression Trees |

| CF | Collaborative Filtering |

| CNN | Convolutional Neural Network |

| DDQN | Double Deep Q-Network |

| DL | Deep Learning |

| DNN | Deep Neural Network |

| DQN | Deep Q-Network |

| DT | Digital Twin |

| Dtree | Decision Tree |

| EC | Edge Computing |

| ET | Extra Trees |

| FFT | Fast Fourier Transform |

| GA | Genetic Algorithm |

| GB | Gradient Boosting |

| GPR | Gaussian Process Regression |

| HC | Hierarchical Clustering |

| IoT | Internet of Things |

| IRF | Iterative Random Forest |

| LASSO | Least Absolute Shrinkage and Selection Operator |

| LDA | Linear Discriminant Analysis |

| LR | Logistic Regression / Linear Regression |

| LSTM | Long Short-Term Memory |

| MEC | Mobile Edge Computing |

| ML | Machine Learning |

| MLP | Multi-Layer Perceptron |

| MPC | Model Predictive Control |

| MSE | Mean Squared Error |

| NN | Neural Network |

| OCR | Optical Character Recognition |

| PCA | Principal Component Analysis |

| PdM | Predictive Maintenance |

| PDQN | Profit-sharing Deep Q-Network |

| PO | Process Optimization |

| PPO | Proximal Policy Optimization |

| QC | Quality Control |

| QLrn | Q-Learning |

| RF | Random Forest |

| RL | Reinforcement Learning |

| RMS | Root Mean Square |

| RR | Ridge Regression |

| SARSA | State-Action-Reward-State-Action |

| SCADA | Supervisory Control and Data Acquisition |

| SDAE | Stacked Denoising Autoencoder |

| SIFT | Scale-Invariant Feature Transform |

| ST-GCN | Spatio-Temporal Graph Convolutional Network |

| SVR | Support Vector Regression |

| SVM | Support Vector Machine |

| TD3 | Twin Delayed Deep Deterministic Policy Gradient |

| TL | Transfer Learning |

| UNet | U-shaped Convolutional Neural Network |

| VCG | Variational Cooperative Game |

| XGBOOST | Extreme Gradient Boosting |

| XR | Extended Reality |

References

- Lee, J.; Bagheri, B.; Kao, H.-A. A Cyber-Physical Systems Architecture for Industry 4.0-Based Manufacturing Systems. Manufacturing Letters 2015, 3, 18–23. [CrossRef]

- Wuest, T.; Weimer, D.; Irgens, C.; Thoben, K.-D. Machine Learning in Manufacturing: Advantages, Challenges, and Applications. Production & Manufacturing Research 2016, 4(1), 23–45. [CrossRef]

- Rahman, M.A.; Abushaiba, A.A.; Elrajoubi, A.M. Integration of C2000 Microcontrollers with MATLAB Simulink Embedded Coder: A Real-Time Control Application. In: Proceedings of the 2024 7th International Conference on Electrical Engineering and Green Energy (CEEGE); IEEE: Los Angeles, CA, USA, 2024; pp. 131–136. [CrossRef]

- Gawate, E.; Rane, P. Empowering Intelligent Manufacturing with the Potential of Edge Computing with NVIDIA’s Jetson Nano. In: Proceedings of the 2023 International Conference on Computing, Communication, and Intelligent Systems (ICCCIS); IEEE: Greater Noida, India, 2023; pp. 375–380. [CrossRef]

- Liang, Y.C.; Li, W.D.; Lou, P.; Hu, J.M. Thermal Error Prediction for Heavy-Duty CNC Machines Enabled by Long Short-Term Memory Networks and Fog-Cloud Architecture. Journal of Manufacturing Systems 2022, 62, 950–963. [CrossRef]

- Qin, J.; Liu, Y.; Grosvenor, R. A Categorical Framework of Manufacturing for Industry 4.0 and Beyond. Procedia CIRP 2016, 52, 173–178. [CrossRef]

- Wang, J.; Ma, Y.; Zhang, L.; Gao, R.X.; Wu, D. Deep Learning for Smart Manufacturing: Methods and Applications. Journal of Manufacturing Systems 2018, 48, 144–156. [CrossRef]

- Shi, W.; Cao, J.; Zhang, Q.; Li, Y.; Xu, L. Edge Computing: Vision and Challenges. IEEE Internet of Things Journal 2016, 3(5), 637–646. [CrossRef]

- Tao, F.; Zhang, H.; Liu, A.; Nee, A.Y.C. Digital Twin in Industry: State-of-the-Art. IEEE Transactions on Industrial Informatics 2019, 15(4), 2405–2415. [CrossRef]

- Transforming Manufacturing with Digital Twins | McKinsey. https://www.mckinsey.com/capabilities/operations/our-insights/digital-twins-the-next-frontier-of-factory-optimization. Accessed 2025-05-19.

- Hermann, M.; Pentek, T.; Otto, B. Design Principles for Industrie 4.0 Scenarios. In: Proceedings of the 2016 49th Hawaii International Conference on System Sciences (HICSS); IEEE: Koloa, HI, USA, 2016; pp. 3928–3937. http://ieeexplore.ieee.org/document/7427673/. Accessed 2025-05-19. [CrossRef]

- Meddaoui, A.; Hain, M.; Hachmoud, A. The Benefits of Predictive Maintenance in Manufacturing Excellence: A Case Study to Establish Reliable Methods for Predicting Failures. The International Journal of Advanced Manufacturing Technology 2023, 128(7–8), 3685–3690. [CrossRef]

- Hadi, R.H.; Hady, H.N.; Hasan, A.M.; Al-Jodah, A.; Humaidi, A.J. Improved Fault Classification for Predictive Maintenance in Industrial IoT Based on AutoML: A Case Study of Ball-Bearing Faults. Processes 2023, 11(5), 1507. [CrossRef]

- Kiangala, K.S.; Wang, Z. An Effective Predictive Maintenance Framework for Conveyor Motors Using Dual Time-Series Imaging and Convolutional Neural Network in an Industry 4.0 Environment. IEEE Access 2020, 8, 121033–121049. [CrossRef]

- Lu, B.-L.; Liu, Z.-H.; Wei, H.-L.; Chen, L.; Zhang, H.; Li, X.-H. A Deep Adversarial Learning Prognostics Model for Remaining Useful Life Prediction of Rolling Bearing. IEEE Transactions on Artificial Intelligence 2021, 2(4), 329–340. [CrossRef]

- Guo, D.; Chen, X.; Ma, H.; Sun, Z.; Jiang, Z. State Evaluation Method of Robot Lubricating Oil Based on Support Vector Regression. Computational Intelligence and Neuroscience 2021, 2021(1). [CrossRef]

- Nunes, P.; Rocha, E.; Santos, J.; Antunes, R. Predictive Maintenance on Injection Molds by Generalized Fault Trees and Anomaly Detection. Procedia Computer Science 2023, 217, 1038–1047. [CrossRef]

- Scalabrini Sampaio, G.; Vallim Filho, A.R.D.A.; Santos Da Silva, L.; Augusto Da Silva, L. Prediction of Motor Failure Time Using an Artificial Neural Network. Sensors 2019, 19(19), 4342. [CrossRef]

- Quiroz, J.C.; Mariun, N.; Mehrjou, M.R.; Izadi, M.; Misron, N.; Mohd Radzi, M.A. Fault Detection of Broken Rotor Bar in LS-PMSM Using Random Forests. Measurement 2018, 116, 273–280. [CrossRef]

- Predictive Maintenance | GE Research. https://www.ge.com/research/project/predictive-maintenance. Accessed 2024-02-26.

- Wang, X.; Liu, M.; Liu, C.; Ling, L.; Zhang, X. Data-Driven and Knowledge-Based Predictive Maintenance Method for Industrial Robots for the Production Stability of Intelligent Manufacturing. Expert Systems with Applications 2023, 234, 121136. [CrossRef]

- Satwaliya, D.S.; Thethi, H.P.; Dhyani, A.; Kiran, G.R.; Al-Taee, M.; Alazzam, M.B. Predictive Maintenance Using Machine Learning: A Case Study in Manufacturing Management. In: Proceedings of the 2023 3rd International Conference on Advance Computing and Innovative Technologies in Engineering (ICACITE); IEEE: Greater Noida, India, 2023; pp. 872–876. https://ieeexplore.ieee.org/document/10183012/. Accessed 2025-05-23. [CrossRef]

- Nikfar, M.; Bitencourt, J.; Mykoniatis, K. A Two-Phase Machine Learning Approach for Predictive Maintenance of Low Voltage Industrial Motors. Procedia Computer Science 2022, 200, 111–120. [CrossRef]

- Cachada, A.; Moreira, P.M.; Romero, L.; Barbosa, J.; Leitão, P.; Geraldes, C.A.S.; Deusdado, L.; Costa, J.; Teixeira, C.; Teixeira, J.; Moreira, A.H.J. Maintenance 4.0: Intelligent and Predictive Maintenance System Architecture. In: Proceedings of the 2018 IEEE 23rd International Conference on Emerging Technologies and Factory Automation (ETFA); IEEE: Turin, Italy, 2018; pp. 139–146. https://ieeexplore.ieee.org/document/8502489/. Accessed 2024-04-19. [CrossRef]

- Carbery, C.M.; Woods, R.; Marshall, A.H. A Bayesian Network Based Learning System for Modelling Faults in Large-Scale Manufacturing. In: 2018 IEEE International Conference on Industrial Technology (ICIT); IEEE: Lyon, France, 2018; pp. 1357–1362. https://ieeexplore.ieee.org/document/8352377/. Accessed 2024-04-19. [CrossRef]

- Syafrudin, M.; Alfian, G.; Fitriyani, N.; Rhee, J. Performance Analysis of IoT-Based Sensor, Big Data Processing, and Machine Learning Model for Real-Time Monitoring System in Automotive Manufacturing. Sensors 2018, 18(9), 2946. [CrossRef]

- Li, Z.; Wang, Y.; Wang, K.-S. Intelligent Predictive Maintenance for Fault Diagnosis and Prognosis in Machine Centers: Industry 4.0 Scenario. Advances in Manufacturing 2017, 5(4), 377–387. [CrossRef]

- Pejić Bach, M.; Topalović, A.; Krstić, I.; Ivec, A. Predictive Maintenance in Industry 4.0 for the SMEs: A Decision Support System Case Study Using Open-Source Software. Designs 2023, 7(4), 98. [CrossRef]

- Borghesi, A.; Burrello, A.; Bartolini, A. ExaMon-X: A Predictive Maintenance Framework for Automatic Monitoring in Industrial IoT Systems. IEEE Internet of Things Journal 2023, 10(4), 2995–3005. [CrossRef]

- Li, Z.; Liu, R.; Wu, D. Data-Driven Smart Manufacturing: Tool Wear Monitoring with Audio Signals and Machine Learning. Journal of Manufacturing Processes 2019, 48, 66–76. [CrossRef]

- Liu, J.; Hu, Y.; Wu, B.; Wang, Y. An Improved Fault Diagnosis Approach for FDM Process with Acoustic Emission. Journal of Manufacturing Processes 2018, 35, 570–579. [CrossRef]

- Taşcı, B.; Omar, A.; Ayvaz, S. Remaining Useful Lifetime Prediction for Predictive Maintenance in Manufacturing. Computers & Industrial Engineering 2023, 184, 109566. [CrossRef]

- Lulu, J.; Yourui, T.; Jia, W. Remaining Useful Life Prediction for Reducer of Industrial Robots Based on MCSA. In: 2021 Global Reliability and Prognostics and Health Management (PHM-Nanjing); IEEE: Nanjing, China, 2021; pp. 1–7. https://ieeexplore.ieee.org/document/9613006/. Accessed 2025-05-23. [CrossRef]

- Eddarhri, M.; Adib, J.; Hain, M.; Marzak, A. Towards Predictive Maintenance: The Case of the Aeronautical Industry. Procedia Computer Science 2022, 203, 769–774. [CrossRef]

- Kuhnle, A.; Jakubik, J.; Lanza, G. Reinforcement Learning for Opportunistic Maintenance Optimization. Production Engineering 2019, 13(1), 33–41. [CrossRef]

- Bertolini, M.; Mezzogori, D.; Neroni, M.; Zammori, F. Machine Learning for Industrial Applications: A Comprehensive Literature Review. Expert Systems with Applications 2021, 175, 114820. [CrossRef]

- Villalba-Diez, J.; Schmidt, D.; Gevers, R.; Ordieres-Meré, J.; Buchwitz, M.; Wellbrock, W. Deep Learning for Industrial Computer Vision Quality Control in the Printing Industry 4.0. Sensors 2019, 19(18), 3987. [CrossRef]

- Banús, N.; Boada, I.; Xiberta, P.; Toldrà, P.; Bustins, N. Deep Learning for the Quality Control of Thermoforming Food Packages. Scientific Reports 2021, 11(1), 21887. [CrossRef]

- Scime, L.; Beuth, J. Using Machine Learning to Identify In-Situ Melt Pool Signatures Indicative of Flaw Formation in a Laser Powder Bed Fusion Additive Manufacturing Process. Additive Manufacturing 2019, 25, 151–165. [CrossRef]

- Yin, Y.; Wan, M.; Xu, P.; Zhang, R.; Liu, Y.; Song, Y. Industrial Product Quality Analysis Based on Online Machine Learning. Sensors 2023, 23(19), 8167. [CrossRef]

- González, S.V.; Jimenez, L.C.; Given, W.G.G.; Noboa, B.V.; Enderica, C.S. Automated Quality Control System for Canned Tuna Production Using Artificial Vision. In: 2024 3rd International Conference on Artificial Intelligence for Internet of Things (AIIoT); IEEE: Vellore, India, 2024; pp. 1–6. https://ieeexplore.ieee.org/document/10574669/. Accessed 2025-05-23. [CrossRef]

- Msakni, M.K.; Risan, A.; Schütz, P. Using Machine Learning Prediction Models for Quality Control: A Case Study from the Automotive Industry. Computational Management Science 2023, 20(1), 14. [CrossRef]

- Sundaram, S.; Zeid, A. Artificial Intelligence-Based Smart Quality Inspection for Manufacturing. Micromachines 2023, 14(3), 570. [CrossRef]

- Müller, D.; März, M.; Scheele, S.; Schmid, U. An Interactive Explanatory AI System for Industrial Quality Control. arXiv 2022, Version 1. [CrossRef]

- Caggiano, A.; Zhang, J.; Alfieri, V.; Caiazzo, F.; Gao, R.; Teti, R. Machine learning-based image processing for on-line defect recognition in additive manufacturing. CIRP Ann. 2019, 68(1), 451–454. [CrossRef]

- Kim, A.; Oh, K.; Jung, J.-Y.; Kim, B. Imbalanced classification of manufacturing quality conditions using cost-sensitive decision tree ensembles. Int. J. Comput. Integr. Manuf. 2018, 31(8), 701–717. [CrossRef]

- Gobert, C.; Reutzel, E.W.; Petrich, J.; Nassar, A.R.; Phoha, S. Application of supervised machine learning for defect detection during metallic powder bed fusion additive manufacturing using high resolution imaging. Addit. Manuf. 2018, 21, 517–528. [CrossRef]

- Yuan, B.; Guss, G.M.; Wilson, A.C.; Hau-Riege, S.P.; DePond, P.J.; McMains, S.; Matthews, M.J.; Giera, B. Machine-Learning-Based Monitoring of Laser Powder Bed Fusion. Adv. Mater. Technol. 2018, 3(12), 1800136. [CrossRef]

- Oh, Y.; Busogi, M.; Ransikarbum, K.; Shin, D.; Kwon, D.; Kim, N. Real-time quality monitoring and control system using an integrated cost effective support vector machine. J. Mech. Sci. Technol. 2019, 33(12), 6009–6020. [CrossRef]

- Yu, J.; Zheng, X.; Wang, S. A deep autoencoder feature learning method for process pattern recognition. J. Process Control 2019, 79, 1–15. [CrossRef]

- Emmert, J.; Mendez, R.; Dastjerdi, H.M.; Syben, C.; Maier, A. The Artificial Neural Twin — Process optimization and continual learning in distributed process chains. Neural Netw. 2024, 180, 106647. [CrossRef]

- Reguera-Bakhache, D.; Garitano, I.; Uribeetxeberria, R.; Cernuda, C.; Zurutuza, U. Data-Driven Industrial Human-Machine Interface Temporal Adaptation for Process Optimization. In Proceedings of the 2020 25th IEEE International Conference on Emerging Technologies and Factory Automation (ETFA), Vienna, Austria, 2020; pp. 518–525. [CrossRef]

- Al Aani, S.; Bonny, T.; Hasan, S.W.; Hilal, N. Can machine language and artificial intelligence revolutionize process automation for water treatment and desalination? Desalination 2019, 458, 84–96. [CrossRef]

- Islam, F.; Wanigasekara, C.; Rajan, G.; Swain, A.; Prusty, B.G. An approach for process optimisation of the Automated Fibre Placement (AFP) based thermoplastic composites manufacturing using Machine Learning, photonic sensing and thermo-mechanics modelling. Manuf. Lett. 2022, 32, 10–14. [CrossRef]

- Farooq, A.; Iqbal, K. A Survey of Reinforcement Learning for Optimization in Automation. In Proceedings of the 2024 IEEE 20th International Conference on Automation Science and Engineering (CASE), Bari, Italy, 2024; pp. 2487–2494. [CrossRef]

- Liu, Z.; Rolston, N.; Flick, A.C.; Colburn, T.W.; Ren, Z.; Dauskardt, R.H.; Buonassisi, T. Machine Learning with Knowledge Constraints for Process Optimization of Open-Air Perovskite Solar Cell Manufacturing. arXiv 2021, Version 4. [CrossRef]

- Stathatos, E.; Vosniakos, G.-C. Real-time simulation for long paths in laser-based additive manufacturing: a machine learning approach. Int. J. Adv. Manuf. Technol. 2019, 104(5–8), 1967–1984. [CrossRef]

- Ghadai, S.; Balu, A.; Sarkar, S.; Krishnamurthy, A. Learning localized features in 3D CAD models for manufacturability analysis of drilled holes. Comput. Aided Geom. Des. 2018, 62, 263–275. [CrossRef]

- Gyulai, D.; Pfeiffer, A.; Nick, G.; Gallina, V.; Sihn, W.; Monostori, L. Lead time prediction in a flow-shop environment with analytical and machine learning approaches. IFAC-Pap. 2018, 51(11), 1029–1034. [CrossRef]

- Dev, S.; Srivastava, R. Experimental investigation and optimization of the additive manufacturing process through AI-based hybrid statistical approaches. Prog. Addit. Manuf. 2025, 10(1), 107–126. [CrossRef]

- Khdoudi, A.; Masrour, T.; El Hassani, I.; El Mazgualdi, C. A Deep-Reinforcement-Learning-Based Digital Twin for Manufacturing Process Optimization. Systems 2024, 12(2), 38. [CrossRef]

- Dornheim, J.; Link, N.; Gumbsch, P. Model-free Adaptive Optimal Control of Episodic Fixed-horizon Manufacturing Processes Using Reinforcement Learning. Int. J. Control Autom. Syst. 2020, 18(6), 1593–1604. [CrossRef]

- Denkena, B.; Dittrich, M.-A.; Böß, V.; Wichmann, M.; Friebe, S. Self-optimizing process planning for helical flute grinding. Prod. Eng. 2019, 13(5), 599–606. [CrossRef]

- Tan, Q.; Tong, Y.; Wu, S.; Li, D. Modeling, planning, and scheduling of shopfloor assembly process with dynamic cyber-physical interactions: a case study for CPS-based smart industrial robot production. Int. J. Adv. Manuf. Technol. 2019, 105(9), 3979–3989. [CrossRef]

- Priore, P.; Ponte, B.; Puente, J.; Gómez, A. Learning-based scheduling of flexible manufacturing systems using ensemble methods. Comput. Ind. Eng. 2018, 126, 282–291. [CrossRef]

- Jones, D.; Snider, C.; Nassehi, A.; Yon, J.; Hicks, B. Characterising the Digital Twin: A systematic literature review. CIRP J. Manuf. Sci. Technol. 2020, 29, 36–52. [CrossRef]

- Aivaliotis, P.; Georgoulias, K.; Chryssolouris, G. The use of Digital Twin for predictive maintenance in manufacturing. Int. J. Comput. Integr. Manuf. 2019, 32(11), 1067–1080. [CrossRef]

- Pan, Y.; Kang, S.; Kong, L.; Wu, J.; Yang, Y.; Zuo, H. Remaining useful life prediction methods of equipment components based on deep learning for sustainable manufacturing: a literature review. Artif. Intell. Eng. Des. Anal. Manuf. 2025, 39, 4. [CrossRef]

- Xu, Q.; Ali, S.; Yue, T. Digital Twin-based Anomaly Detection with Curriculum Learning in Cyber-physical Systems. ACM Trans. Softw. Eng. Methodol. 2023, 32(5), 1–32. [CrossRef]

- Schena, L.; Marques, P.A.; Poletti, R.; Ahizi, S.; Van Den Berghe, J.; Mendez, M.A. Reinforcement Twinning: From digital twins to model-based reinforcement learning. J. Comput. Sci. 2024, 82, 102421. [CrossRef]

- Kreuzer, T.; Papapetrou, P.; Zdravkovic, J. Artificial intelligence in digital twins—A systematic literature review. Data Knowl. Eng. 2024, 151, 102304. [CrossRef]

- Liu, J.; Lu, X.; Zhou, Y.; Cui, J.; Wang, S.; Zhao, Z. Design of Photovoltaic Power Station Intelligent Operation and Maintenance System Based on Digital Twin. In: Proceedings of the 2021 6th International Conference on Robotics and Automation Engineering (ICRAE), Guangzhou, China, 20–22 Nov 2021; IEEE: pp. 206–211. [CrossRef]

- Livera, A.; Paphitis, G.; Pikolos, L.; Papadopoulos, I.; Montes-Romero, J.; Lopez-Lorente, J.; Makrides, G.; Sutterlueti, J.; Georghiou, G.E. Intelligent Cloud-Based Monitoring and Control Digital Twin for Photovoltaic Power Plants. In: Proceedings of the 2022 IEEE 49th Photovoltaics Specialists Conference (PVSC), Philadelphia, PA, USA, 5–10 June 2022; IEEE: pp. 0267–0274. [CrossRef]

- Ma, Y.; Kassler, A.; Ahmed, B.S.; Krakhmalev, P.; Thore, A.; Toyser, A.; Lindbäck, H. Using Deep Reinforcement Learning for Zero Defect Smart Forging. In: Ng, A.H.C., Syberfeldt, A., Högberg, D., Holm, M., Eds.; Advances in Transdisciplinary Engineering; IOS Press: 2022. [CrossRef]

- Chhetri, S.R.; Faezi, S.; Canedo, A.; Faruque, M.A.A. QUILT: quality inference from living digital twins in IoT-enabled manufacturing systems. In: Proceedings of the International Conference on Internet of Things Design and Implementation, Montreal, QC, Canada, 15–18 April 2019; ACM: pp. 237–248. [CrossRef]

- Maia, E.; Wannous, S.; Dias, T.; Praça, I.; Faria, A. Holistic Security and Safety for Factories of the Future. Sensors 2022, 22(24), 9915. [CrossRef]

- Barriga, R.; Romero, M.; Nettleton, D.; Hassan, H. Advanced data modeling for industrial drying machine energy optimization. J. Supercomput. 2022, 78(15), 16820–16840. [CrossRef]

- Bansal, R.; Khanesar, M.A.; Branson, D. Ant Colony Optimization Algorithm for Industrial Robot Programming in a Digital Twin. In: Proceedings of the 2019 25th International Conference on Automation and Computing (ICAC), Lancaster, UK, 5–7 Sep 2019; IEEE: pp. 1–5. [CrossRef]

- Liu, Y.; Xu, H.; Liu, D.; Wang, L. A digital twin-based sim-to-real transfer for deep reinforcement learning-enabled industrial robot grasping. Robot. Comput. Integr. Manuf. 2022, 78, 102365. [CrossRef]

- Li, J.; Pang, D.; Zheng, Y.; Guan, X.; Le, X. A flexible manufacturing assembly system with deep reinforcement learning. Control Eng. Pract. 2022, 118, 104957. [CrossRef]

- Kobayashi, K.; Alam, S.B. Explainable, Interpretable & Trustworthy AI for Intelligent Digital Twin: Case Study on Remaining Useful Life. arXiv 2023, arXiv:2301.06676. Version 2. [CrossRef]

- Mehrabi, A.; Yari, K.; Van Driel, W.D.; Poelma, R.H. AI-Driven Digital Twin for Health Monitoring of Wide Band Gap Power Semiconductors. In: Proceedings of the 2024 IEEE 10th Electronics System-Integration Technology Conference (ESTC), Berlin, Germany, 11–13 Sep 2024; IEEE: pp. 1–8. [CrossRef]

- Li, Y. Fault Prediction and Diagnosis System for Large-diameter Auger Rigs Based on Digital Twin and BP Neural Network. In: Proceedings of the 2022 IEEE 6th Advanced Information Technology, Electronic and Automation Control Conference (IAEAC), Beijing, China, 7–9 Jan 2022; IEEE: pp. 523–527. [CrossRef]

- Guo, K.; Wan, X.; Liu, L.; Gao, Z.; Yang, M. Fault Diagnosis of Intelligent Production Line Based on Digital Twin and Improved Random Forest. Appl. Sci. 2021, 11(16), 7733. [CrossRef]

- Chakrabarti, A.; Sukumar, R.P.; Jarke, M.; Rudack, M.; Buske, P.; Holly, C. Efficient Modeling of Digital Shadows for Production Processes: A Case Study for Quality Prediction in High Pressure Die Casting Processes. In: Proceedings of the 2021 IEEE 8th International Conference on Data Science and Advanced Analytics (DSAA), Porto, Portugal, 6–9 Oct 2021; IEEE: pp. 1–9. [CrossRef]

- Boulfani, F.; Gendre, X.; Ruiz-Gazen, A.; Salvignol, M. Anomaly detection for aircraft electrical generator using machine learning in a functional data framework. In: Proceedings of the 2020 Global Congress on Electrical Engineering (GC-ElecEng), Valencia, Spain, 4–6 Nov 2020; IEEE: pp. 27–32. [CrossRef]

- Xu, Y.; Sun, Y.; Liu, X.; Zheng, Y. A Digital-Twin-Assisted Fault Diagnosis Using Deep Transfer Learning. IEEE Access 2019, 7, 19990–19999. [CrossRef]

- Ren, Z.; Wan, J.; Deng, P. Machine-Learning-Driven Digital Twin for Lifecycle Management of Complex Equipment. IEEE Trans. Emerg. Top. Comput. 2022, 10(1), 9–22. [CrossRef]

- Xiong, M.; Wang, H.; Fu, Q.; Xu, Y. Digital twin–driven aero-engine intelligent predictive maintenance. Int. J. Adv. Manuf. Technol. 2021, 114(11–12), 3751–3761. [CrossRef]

- Piltan, F.; Toma, R.N.; Shon, D.; Im, K.; Choi, H.-K.; Yoo, D.-S.; Kim, J.-M. Strict-Feedback Backstepping Digital Twin and Machine Learning Solution in AE Signals for Bearing Crack Identification. Sensors 2022, 22(2), 539. [CrossRef]

- Tancredi, G.P.; Vignali, G.; Bottani, E. Integration of Digital Twin, Machine-Learning and Industry 4.0 Tools for Anomaly Detection: An Application to a Food Plant. Sensors 2022, 22(11), 4143. [CrossRef]

- Melesse, T.Y.; Bollo, M.; Pasquale, V.D.; Centro, F.; Riemma, S. Machine Learning-Based Digital Twin for Monitoring Fruit Quality Evolution. Procedia Comput. Sci. 2022, 200, 13–20. [CrossRef]

- Alexopoulos, K.; Nikolakis, N.; Chryssolouris, G. Digital twin-driven supervised machine learning for the development of artificial intelligence applications in manufacturing. Int. J. Comput. Integr. Manuf. 2020, 33(5), 429–439. [CrossRef]

- Wang, Q.; Jiao, W.; Zhang, Y. Deep learning-empowered digital twin for visualized weld joint growth monitoring and penetration control. J. Manuf. Syst. 2020, 57, 429–439. [CrossRef]

- Angin, P.; Anisi, M.H.; Göksel, F.; Gürsoy, C.; Büyükgülcü, A. AgriLoRa: A Digital Twin Framework for Smart Agriculture. J. Wirel. Mob. Netw. Ubiquitous Comput. Dependable Appl. 2020, 11(4), 77–96. [CrossRef]

- Zhang, Q.; Li, Y.; Lim, E.; Sun, J. Real Time Object Detection in Digital Twin with Point-Cloud Perception for a Robotic Manufacturing Station. In: 2022 27th International Conference on Automation and Computing (ICAC), Bristol, United Kingdom, 2022; pp. 1–6. [CrossRef]

- Zhou, X.; Xu, X.; Liang, W.; Zeng, Z.; Shimizu, S.; Yang, L.T.; Jin, Q. Intelligent Small Object Detection for Digital Twin in Smart Manufacturing With Industrial Cyber-Physical Systems. IEEE Trans. Ind. Inform. 2022, 18(2), 1377–1386. [CrossRef]

- Wang, Q.; Jiao, W.; Wang, P.; Zhang, Y. Digital Twin for Human-Robot Interactive Welding and Welder Behavior Analysis. IEEE/CAA J. Autom. Sin. 2021, 8(2), 334–343. [CrossRef]

- Chiurco, A.; Elbasheer, M.; Longo, F.; Nicoletti, L.; Solina, V. Data Modeling and ML Practice for Enabling Intelligent Digital Twins in Adaptive Production Planning and Control. Procedia Comput. Sci. 2023, 217, 1908–1917. [CrossRef]

- Chen, D.; Lv, Z. Artificial Intelligence Enabled Digital Twins for Training Autonomous Cars. Internet Things Cyber-Phys. Syst. 2022, 2, 31–41. [CrossRef]

- Shen, G.; Lei, L.; Li, Z.; Cai, S.; Zhang, L.; Cao, P.; Liu, X. Deep Reinforcement Learning for Flocking Motion of Multi-UAV Systems: Learn From a Digital Twin. IEEE Internet Things J. 2022, 9(13), 11141–11153. [CrossRef]

- Li, B.; Liu, Y.; Tan, L.; Pan, H.; Zhang, Y. Digital Twin Assisted Task Offloading for Aerial Edge Computing and Networks. IEEE Trans. Veh. Technol. 2022, 71(10), 10863–10877. [CrossRef]

- Urgo, M.; Terkaj, W.; Simonetti, G. Monitoring Manufacturing Systems Using AI: A Method Based on a Digital Factory Twin to Train CNNs on Synthetic Data. CIRP J. Manuf. Sci. Technol. 2024, 50, 249–268. [CrossRef]

- Gallala, A.; Kumar, A.A.; Hichri, B.; Plapper, P. Digital Twin for Human–Robot Interactions by Means of Industry 4.0 Enabling Technologies. Sensors 2022, 22(13), 4950. [CrossRef]

- Wang, T.; Cheng, J.; Yang, Y.; Esposito, C.; Snoussi, H.; Tao, F. Adaptive Optimization Method in Digital Twin Conveyor Systems via Range-Inspection Control. IEEE Trans. Autom. Sci. Eng. 2022, 19(2), 1296–1304. [CrossRef]

- Li, H.; Liu, Z.; Yuan, W.; Chen, G.; Chen, X.; Yang, Y.; Peng, J. The Digital Twin Model of Chemical Production Systems in Smart Factories: A Case Study. In: 2021 IEEE 23rd International Conference on High Performance Computing & Communications (HPCC); 7th Int. Conf. on Data Science & Systems; 19th Int. Conf. on Smart City; 7th Int. Conf. on Dependability in Sensor, Cloud & Big Data Systems & Application (DSS/SmartCity/DependSys), Haikou, Hainan, China, 2021; pp. 1035–1041. [CrossRef]

- Min, Q.; Lu, Y.; Liu, Z.; Su, C.; Wang, B. Machine Learning Based Digital Twin Framework for Production Optimization in Petrochemical Industry. Int. J. Inf. Manag. 2019, 49, 502–519. [CrossRef]

- Huang, B.; Wang, D.; Li, H.; Zhao, C. Network Selection and QoS Management Algorithm for 5G Converged Shipbuilding Network Based on Digital Twin. In: 2022 10th International Conference on Information and Education Technology (ICIET), Matsue, Japan, 2022; pp. 403–408. [CrossRef]

- Dos Santos, C.H.; Gabriel, G.T.; Do Amaral, J.V.S.; Montevechi, J.A.B.; De Queiroz, J.A. Decision-Making in a Fast Fashion Company in the Industry 4.0 Era: A Digital Twin Proposal to Support Operational Planning. Int. J. Adv. Manuf. Technol. 2021, 116(5–6), 1653–1666. [CrossRef]

- Latif, H.; Shao, G.; Starly, B. A Case Study of Digital Twin for a Manufacturing Process Involving Human Interactions. In: 2020 Winter Simulation Conference (WSC), Orlando, FL, USA, 2020; pp. 2659–2670. [CrossRef]

- Qiu, T.; Chi, J.; Zhou, X.; Ning, Z.; Atiquzzaman, M.; Wu, D.O. Edge Computing in Industrial Internet of Things: Architecture, Advances and Challenges. IEEE Commun. Surv. Tutor. 2020, 22(4), 2462–2488. [CrossRef]

- Nain, G.; Pattanaik, K.K.; Sharma, G.K. Towards Edge Computing in Intelligent Manufacturing: Past, Present and Future. J. Manuf. Syst. 2022, 62, 588–611. [CrossRef]

- Montes-Sánchez, J.M.; Uwate, Y.; Nishio, Y.; Vicente-Díaz, S.; Jiménez-Fernández, A. Predictive Maintenance Edge Artificial Intelligence Application Study Using Recurrent Neural Networks for Early Aging Detection in Peristaltic Pumps. IEEE Trans. Reliab. 2024, 1–15. [CrossRef]

- Parikh, S.; Dave, D.; Patel, R.; Doshi, N. Security and Privacy Issues in Cloud, Fog and Edge Computing. Procedia Comput. Sci. 2019, 160, 734–739. [CrossRef]

- Maciel, P.; Dantas, J.; Melo, C.; Pereira, P.; Oliveira, F.; Araujo, J.; Matos, R. A Survey on Reliability and Availability Modeling of Edge, Fog, and Cloud Computing. J. Reliab. Intell. Environ. 2022, 8(3), 227–245. [CrossRef]

- Artiushenko, V.; Lang, S.; Lerez, C.; Reggelin, T.; Hackert-Oschätzchen, M. Resource-Efficient Edge AI Solution for Predictive Maintenance. Procedia Comput. Sci. 2024, 232, 348–357. [CrossRef]

- Texas Instruments. TMS320F28P55x Real-Time Microcontrollers Datasheet (Rev. B). 2024. https://www.ti.com/lit/ds/symlink/tms320f28p550sj.pdf.

- Gartner. What’s New in Artificial Intelligence From the 2023 Gartner Hype Cycle™. https://www.gartner.com/en/articles/what-s-new-in-artificial-intelligence-from-the-2023-gartner-hype-cycle.

- Deloitte. Bringing AI to the Device: Edge AI Chips Come Into Their Own. https://www2.deloitte.com/us/en/insights/industry/technology/technology-media-and-telecom-predictions/2020/ai-chips.html.

- Hsu, H.-Y.; Srivastava, G.; Wu, H.-T.; Chen, M.-Y. Remaining Useful Life Prediction Based on State Assessment Using Edge Computing on Deep Learning. Comput. Commun. 2020, 160, 91–100. [CrossRef]

- Teoh, Y.K.; Gill, S.S.; Parlikad, A.K. IoT and Fog-Computing-Based Predictive Maintenance Model for Effective Asset Management in Industry 4.0 Using Machine Learning. IEEE Internet Things J. 2023, 10(3), 2087–2094. [CrossRef]

- Zhang, T.; Ding, B.; Zhao, X.; Liu, G.; Pang, Z. LearningADD: Machine Learning Based Acoustic Defect Detection in Factory Automation. J. Manuf. Syst. 2021, 60, 48–58. [CrossRef]

- Milić, S.D.; Miladinović, N.M.; Rakić, A. A Wayside Hotbox System with Fuzzy and Fault Detection Algorithms in IIoT Environment. Control Eng. Pract. 2020, 104, 104624. [CrossRef]

- Hu, L.; Miao, Y.; Wu, G.; Hassan, M.M.; Humar, I. iRobot-Factory: An Intelligent Robot Factory Based on Cognitive Manufacturing and Edge Computing. Future Gener. Comput. Syst. 2019, 90, 569–577. [CrossRef]

- Huang, H.; Ding, S.; Zhao, L.; Huang, H.; Chen, L.; Gao, H.; Ahmed, S.H. Real-Time Fault Detection for IIoT Facilities Using GBRBM-Based DNN. IEEE Internet Things J. 2020, 7(7), 5713–5722. [CrossRef]

- Zhao, X.; Lv, K.; Zhang, Z.; Zhang, Y.; Wang, Y. A Multi-Fault Diagnosis Method of Gear-Box Running on Edge Equipment. J. Cloud Comput. 2020, 9(1), 58. [CrossRef]

- Kim, D.; Yang, H.; Chung, M.; Cho, S.; Kim, H.; Kim, M.; Kim, K.; Kim, E. Squeezed Convolutional Variational AutoEncoder for Unsupervised Anomaly Detection in Edge Device Industrial Internet of Things. In Proceedings of the 2018 Int. Conf. Inf. Comput. Technol. (ICICT), DeKalb, IL, USA, 23–25 March 2018; pp. 67–71. https://ieeexplore.ieee.org/document/8356842/. Accessed 2025-05-24. [CrossRef]

- Wang, Y.; Liu, M.; Zheng, P.; Yang, H.; Zou, J. A Smart Surface Inspection System Using Faster R-CNN in Cloud-Edge Computing Environment. Adv. Eng. Inform. 2020, 43, 101037. [CrossRef]

- Ha, H.; Jeong, J. CNN-Based Defect Inspection for Injection Molding Using Edge Computing and Industrial IoT Systems. Appl. Sci. 2021, 11(14), 6378. [CrossRef]

- Zhu, Z.; Han, G.; Jia, G.; Shu, L. Modified DenseNet for Automatic Fabric Defect Detection With Edge Computing for Minimizing Latency. IEEE Internet Things J. 2020, 7(10), 9623–9636. [CrossRef]

- Gauttam, H.; Pattanaik, K.K.; Bhadauria, S.; Nain, G.; Prakash, P.B. An Efficient DNN Splitting Scheme for Edge-AI Enabled Smart Manufacturing. J. Ind. Inf. Integr. 2023, 34, 100481. [CrossRef]

- Fraga-Lamas, P.; Lopes, S.I.; Fernández-Caramés, T.M. Green IoT and Edge AI as Key Technological Enablers for a Sustainable Digital Transition towards a Smart Circular Economy: An Industry 5.0 Use Case. Sensors 2021, 21(17), 5745. [CrossRef]

- Yang, X.; Han, M.; Tang, H.; Li, Q.; Luo, X. Detecting Defects With Support Vector Machine in Logistics Packaging Boxes for Edge Computing. IEEE Access 2020, 8, 64002–64010. [CrossRef]

- Schmitt, J.; Bönig, J.; Borggräfe, T.; Beitinger, G.; Deuse, J. Predictive Model-Based Quality Inspection Using Machine Learning and Edge Cloud Computing. Adv. Eng. Inform. 2020, 45, 101101. [CrossRef]

- Ma, Q.; Niu, J.; Ouyang, Z.; Li, M.; Ren, T.; Li, Q. Edge Computing-Based 3D Pose Estimation and Calibration for Robot Arms. In Proceedings of the 2020 7th IEEE Int. Conf. on Cyber Security and Cloud Computing (CSCloud)/6th IEEE Int. Conf. on Edge Computing and Scalable Cloud (EdgeCom), New York, NY, USA, 22–24 August 2020; pp. 246–251. https://ieeexplore.ieee.org/document/9170983/. Accessed 2025-05-24. [CrossRef]

- Zhang, C.; Ji, W. Edge Computing Enabled Production Anomalies Detection and Energy-Efficient Production Decision Approach for Discrete Manufacturing Workshops. IEEE Access 2020, 8, 158197–158207. [CrossRef]

- Du, J.; Li, X.; Gao, Y.; Gao, L. Integrated Gradient-Based Continuous Wavelet Transform for Bearing Fault Diagnosis. Sensors 2022, 22(22), 8760. [CrossRef]

- Yang, D.; Karimi, H.R.; Gelman, L. A Fuzzy Fusion Rotating Machinery Fault Diagnosis Framework Based on the Enhancement Deep Convolutional Neural Networks. Sensors 2022, 22(2), 671. [CrossRef]

- Janssens, O.; Loccufier, M.; Van Hoecke, S. Thermal Imaging and Vibration-Based Multisensor Fault Detection for Rotating Machinery. IEEE Trans. Ind. Inform. 2019, 15(1), 434–444. [CrossRef]

- Duquesnoy, M.; Liu, C.; Dominguez, D.Z.; Kumar, V.; Ayerbe, E.; Franco, A.A. Machine Learning-Assisted Multi-Objective Optimization of Battery Manufacturing from Synthetic Data Generated by Physics-Based Simulations. arXiv 2022, abs/2205.01621. https://arxiv.org/abs/2205.01621. Accessed 2025-05-20. [CrossRef]

- Khosravi, H.; Farhadpour, S.; Grandhi, M.; Raihan, A.S.; Das, S.; Ahmed, I. Strategic Data Augmentation with CTGAN for Smart Manufacturing: Enhancing Machine Learning Predictions of Paper Breaks in Pulp-and-Paper Production. arXiv 2023, abs/2311.09333. https://arxiv.org/abs/2311.09333. Accessed 2025-05-20. [CrossRef]

- Liaskovska, S.; Tyskyi, S.; Martyn, Y.; Augousti, A.T.; Kulyk, V. Systematic Generation and Evaluation of Synthetic Production Data for Industry 5.0 Optimization. Technologies 2025, 13(2), 84. [CrossRef]

- Singh, R.; Gill, S.S. Edge AI: A Survey. Internet Things Cyber-Phys. Syst. 2023, 3, 71–92. [CrossRef]

- Lim, W.Y.B.; Luong, N.C.; Hoang, D.T.; Jiao, Y.; Liang, Y.-C.; Yang, Q.; Niyato, D.; Miao, C. Federated Learning in Mobile Edge Networks: A Comprehensive Survey. arXiv 2019, abs/1909.11875. https://arxiv.org/abs/1909.11875. Accessed 2025-05-20. [CrossRef]

- Texas Instruments. TIDA-010955 Arc Fault Detection Using Embedded AI Models Reference Design. Texas Instruments 2024. https://www.ti.com/tool/TIDA-010955. Accessed 2025-05-21.

- Texas Instruments. Motor Fault Detection Using Embedded AI Models. Texas Instruments 2024. https://www.ti.com/lit/pdf/spracw8. Accessed 2025-05-21.

- Xu, C.; Zhu, G. Intelligent Manufacturing Lie Group Machine Learning: Real-Time and Efficient Inspection System Based on Fog Computing. J. Intell. Manuf. 2021, 32(1), 237–249. [CrossRef]

- Guerra, R.H.; Quiza, R.; Villalonga, A.; Arenas, J.; Castano, F. Digital Twin-Based Optimization for Ultraprecision Motion Systems With Backlash and Friction. IEEE Access 2019, 7, 93462–93472. [CrossRef]

- ABB. ABB Ability™ Digital Powertrain—Condition Monitoring of Rotating Equipment Fitted with ABB Ability™ Smart Sensors (EN). https://new.abb.com/service/motion/data-and-advisory-services/condition-monitoring-for-rotating-equipment.

- ABB. ABB Ability Predictive Maintenance for Grinding. https://new.abb.com/mining/services/advanced-digital-services/predictive-maintenance-grinding. Accessed 2025-05-21.

- IBM. IBM Maximo Application Suite (2025). https://www.ibm.com/docs/en/masv-and-l/cd?topic=overview-maximo-application-suite-technical. Accessed 2025-05-21.

- PTC. ThingWorx: Industrial IoT Software | IIoT Platform. https://www.ptc.com/en/products/thingworx. Accessed 2025-05-21.

- Microsoft Azure. Azure IoT – Internet of Things Platform. https://azure.microsoft.com/en-us/solutions/iot. Accessed 2025-05-21.

- Uptake. Predictive Maintenance. https://uptake.com/. Accessed 2025-05-21.

- MathWorks. Predictive Maintenance Toolbox. https://www.mathworks.com/products/predictive-maintenance.html. Accessed 2025-05-21.

- Cognex. VisionPro Software. https://www.cognex.com/products/machine-vision/vision-software/visionpro-software. Accessed 2025-05-21.

- KEYENCE. Vision Systems | KEYENCE America. https://www.keyence.com/products/vision/vision-sys/. Accessed 2025-05-21.

- NI. What Is the NI Vision Development Module. https://www.ni.com/en/shop/data-acquisition-and-control/add-ons-for-data-acquisition-and-control/what-is-vision-development-module.html. Accessed 2025-05-21.

- Integrys. Matrox Imaging Library (MIL) Developing Machine Vision. https://integrys.com/product/matrox-imaging-library-mil/. Accessed 2025-05-21.

- ZEISS. ZEISS PiWeb | Quality Data Management. https://www.zeiss.com/metrology/us/software/zeiss-piweb.html. Accessed 2025-05-21.

- Mao, M.; Hong, M. YOLO Object Detection for Real-Time Fabric Defect Inspection in the Textile Industry: A Review of YOLOv1 to YOLOv11. Sensors 2025, 25(7), 2270. [CrossRef]

- Razavi, M.; Mavaddati, S.; Koohi, H. ResNet Deep Models and Transfer Learning Technique for Classification and Quality Detection of Rice Cultivars. Expert Syst. Appl. 2024, 247, 123276. [CrossRef]

- Yadao, G.G.; Julkipli, O.M.Y.; Manlises, C.O. Performance Analysis of EfficientNet for Rice Grain Quality Control—An Evaluation against YOLOv7 and YOLOv8. In: 2024 7th International Conference on Information and Computer Technologies (ICICT), Honolulu, HI, USA, 2024; pp. 93–98. https://ieeexplore.ieee.org/document/10541526/. Accessed 2025-05-21. [CrossRef]

- Amazon Web Services. Computer Vision SDK - AWS Panorama. https://aws.amazon.com/panorama/. Accessed 2025-05-21.

- Edge Impulse. The Leading Edge AI Platform. https://edgeimpulse.com/. Accessed 2025-05-21.

- Coral. https://coral.ai/. Accessed 2025-05-21.

- Siemens. Plant Simulation Software | Siemens Software. https://plm.sw.siemens.com/en-US/tecnomatix/plant-simulation-software/. Accessed 2025-05-21.

- Rockwell Automation. Arena Simulation Software. https://www.rockwellautomation.com/de-de/products/software/arena-simulation.html. Accessed 2025-05-21.

- AnyLogic. Simulation Modeling Software Tools & Solutions. https://www.anylogic.com/. Accessed 2025-05-21.

- AspenTech. Aspen Plus | Leading Process Simulation Software. https://www.aspentech.com/en/products/engineering/aspen-plus. Accessed 2025-05-21.

- AspenTech. Aspen HYSYS | Process Simulation Software. https://www.aspentech.com/en/products/engineering/aspen-hysys. Accessed 2025-05-21.

- Ansys. Ansys Twin Builder | Create and Deploy Digital Twin Models. https://www.ansys.com/products/digital-twin/ansys-twin-builder. Accessed 2025-05-21.

- Schmid, J.; Teichert, K.; Chioua, M.; Schindler, T.; Bortz, M. Simulation and Optimal Control of the Williams-Otto Process Using Pyomo. arXiv 2020. https://arxiv.org/abs/2004.07614. Accessed 2025-05-21. [CrossRef]