The fundamental concept of compressed sensing theory is the integration of signal acquisition and compression, which avoids the conventional signal-processing approach of first sampling the signal and then compressing it for transmission. The mathematical model of compressed sensing theory can be expressed as follows [6]:

$$y = Px = PD\alpha$$

where $P$ is the $M \times N$ observation matrix ($M < N$), $D$ is the sparse dictionary (sparse basis), $x$ is the compressible original signal of length $N$, $y$ is the observed signal of $M$ dimensions, and $\alpha$ is the coefficient vector under the sparse basis. Reference [8] assumes that the sparse basis $D$ is an orthogonal matrix, which has led many works on compressed sensing to define $D$ as an orthogonal matrix. In fact, in reference [7], M. Elad and D. L. Donoho, among others, extended the sparse basis to non-orthogonal cases and defined this matrix as a dictionary.
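The model above can be illustrated with a small numerical sketch. Everything in it is an assumption made for illustration: the sizes, the random observation matrix `P`, the orthonormal dictionary `D`, the variable names `x`, `y` and `alpha`, and above all the brute-force search over supports, which stands in for a practical sparse solver and is only feasible for tiny problems.

```python
import itertools
import numpy as np

rng = np.random.default_rng(0)
N, M, k = 8, 4, 2                      # signal length, measurements, sparsity (assumed)

# Assumed dictionary: a random orthonormal basis obtained by QR factorisation.
D, _ = np.linalg.qr(rng.standard_normal((N, N)))
P = rng.standard_normal((M, N))        # assumed random observation matrix, M < N

alpha = np.zeros(N)
alpha[[1, 5]] = [2.0, -1.5]            # k-sparse coefficient vector
x = D @ alpha                          # original signal
y = P @ x                              # observed signal (only M values)


def sparsest_solution(A, y, k):
    """Try every support of size k and keep the least-squares fit with the smallest residual."""
    best = None
    for support in itertools.combinations(range(A.shape[1]), k):
        cols = list(support)
        coef, *_ = np.linalg.lstsq(A[:, cols], y, rcond=None)
        err = np.linalg.norm(A[:, cols] @ coef - y)
        if best is None or err < best[0]:
            best = (err, cols, coef)
    _, cols, coef = best
    alpha_hat = np.zeros(A.shape[1])
    alpha_hat[cols] = coef
    return alpha_hat


alpha_hat = sparsest_solution(P @ D, y, k)
x_hat = D @ alpha_hat                  # reconstructed signal
```

For realistic sizes the exhaustive search would be replaced by a greedy or convex method; the sketch only shows how `P`, `D`, the sparse coefficients and the two signals relate.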

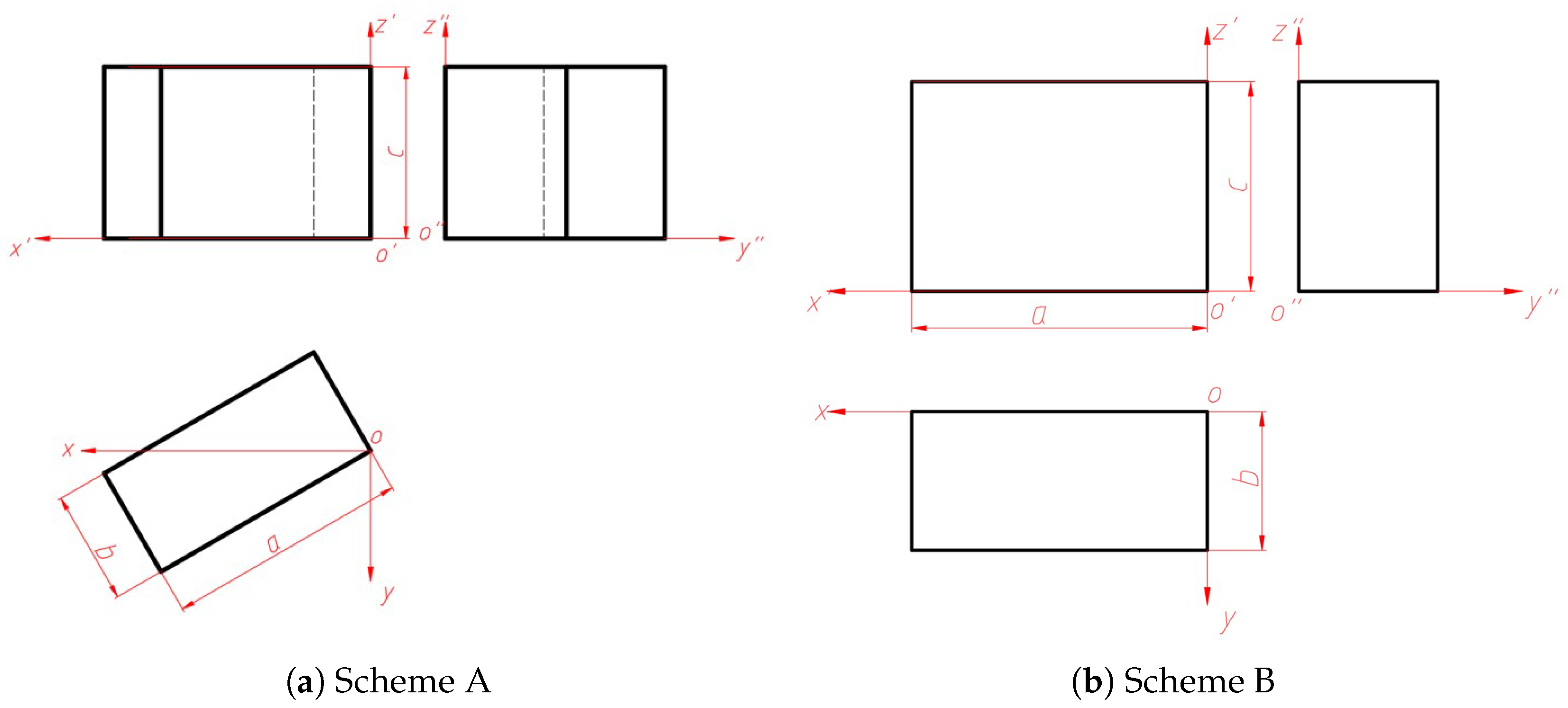

Unlike the fixed interpolation function of the Nyquist-Shannon sampling theorem, the sparse dictionary is learned, and it can therefore represent the function being approximated more accurately than the Shannon function. The sparse dictionary can be understood through the projection of points, lines, and planes, for example:

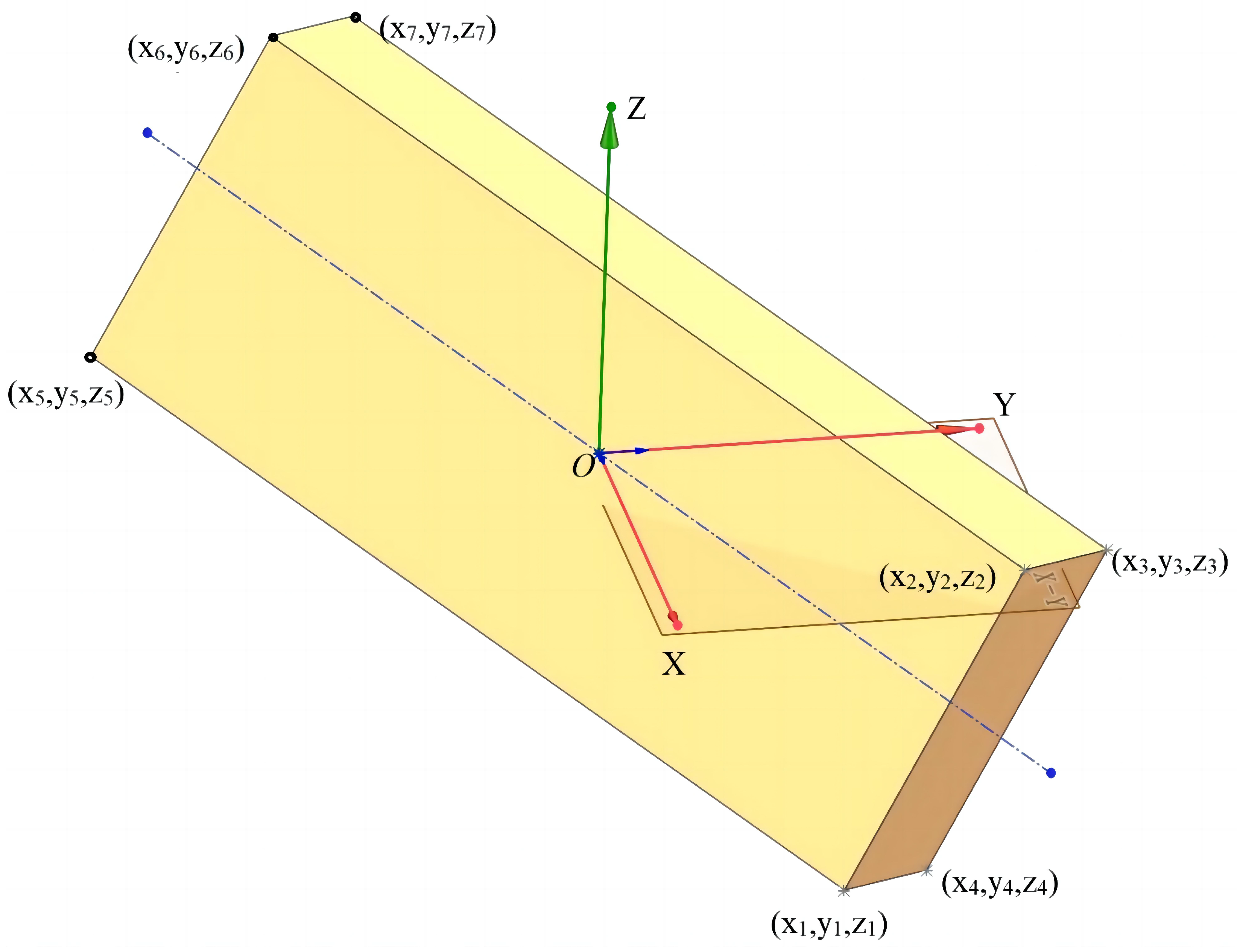

In 3D Euclidean space, although a point has three coordinate values, we can establish a local coordinate system such that the point lies on one of its axes. This local coordinate system corresponds to the geometric structure of the point in space (strictly speaking, a single point does not constitute a structure; it is merely a special case of one). We then no longer need all three global coordinate axes to measure the position of the point. A straight line in three-dimensional Euclidean space has a clear and simple geometric structure [9]. A local coordinate system can be established such that one of its axes is parallel to or coincides with the straight line, thereby reflecting the geometric structure of the line. For a rectangle, which is characterised by 4 vertices with a total of 12 coordinate values, two axes of the local coordinate system can be aligned with or parallel to two adjacent edges of the rectangle. In the local coordinate system, the four points are simply four points on a plane, and the number of non-zero coordinate values is reduced to eight. If we use the relative coordinates between the four characteristic points, the number of non-zero elements is further reduced to four, or even two (if the origin of the local coordinate system is translated to one of the characteristic points).
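The counts of 12, 8 and 4 non-zero values for the rectangle can be checked numerically. The following is a minimal sketch under stated assumptions: the rectangle, its centre and its edge directions are made-up values, the local origin is placed at the centroid, and "relative coordinates" is read as the differences between consecutive vertices. The reduction to two values is not covered.

```python
import numpy as np


def count_nonzero(values, tol=1e-9):
    """Number of entries whose magnitude exceeds a small tolerance."""
    return int(np.sum(np.abs(values) > tol))


# Assumed rectangle in general position: orthonormal edge directions u, v.
u = np.array([1.0, 2.0, 2.0]) / 3.0
v = np.array([2.0, 1.0, -2.0]) / 3.0
centre = np.array([3.0, 4.0, 5.0])
half_w, half_h = 1.0, 0.5
signs = [(-1, -1), (1, -1), (1, 1), (-1, 1)]   # vertices in order around the rectangle
vertices = np.array([centre + s * half_w * u + t * half_h * v for s, t in signs])

# Local frame built from the data: two adjacent edges plus the plane normal.
e1 = vertices[1] - vertices[0]
e1 /= np.linalg.norm(e1)
e2 = vertices[3] - vertices[0]
e2 /= np.linalg.norm(e2)
frame = np.column_stack([e1, e2, np.cross(e1, e2)])

# Coordinates in the local frame (origin at the centroid of the rectangle).
local = (vertices - vertices.mean(axis=0)) @ frame

# Relative coordinates: difference between each vertex and the next one.
edges = np.roll(local, -1, axis=0) - local

n_global = count_nonzero(vertices)   # all 12 global coordinates are non-zero
n_local = count_nonzero(local)       # the out-of-plane coordinate vanishes
n_edges = count_nonzero(edges)       # each edge has a single non-zero component
```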

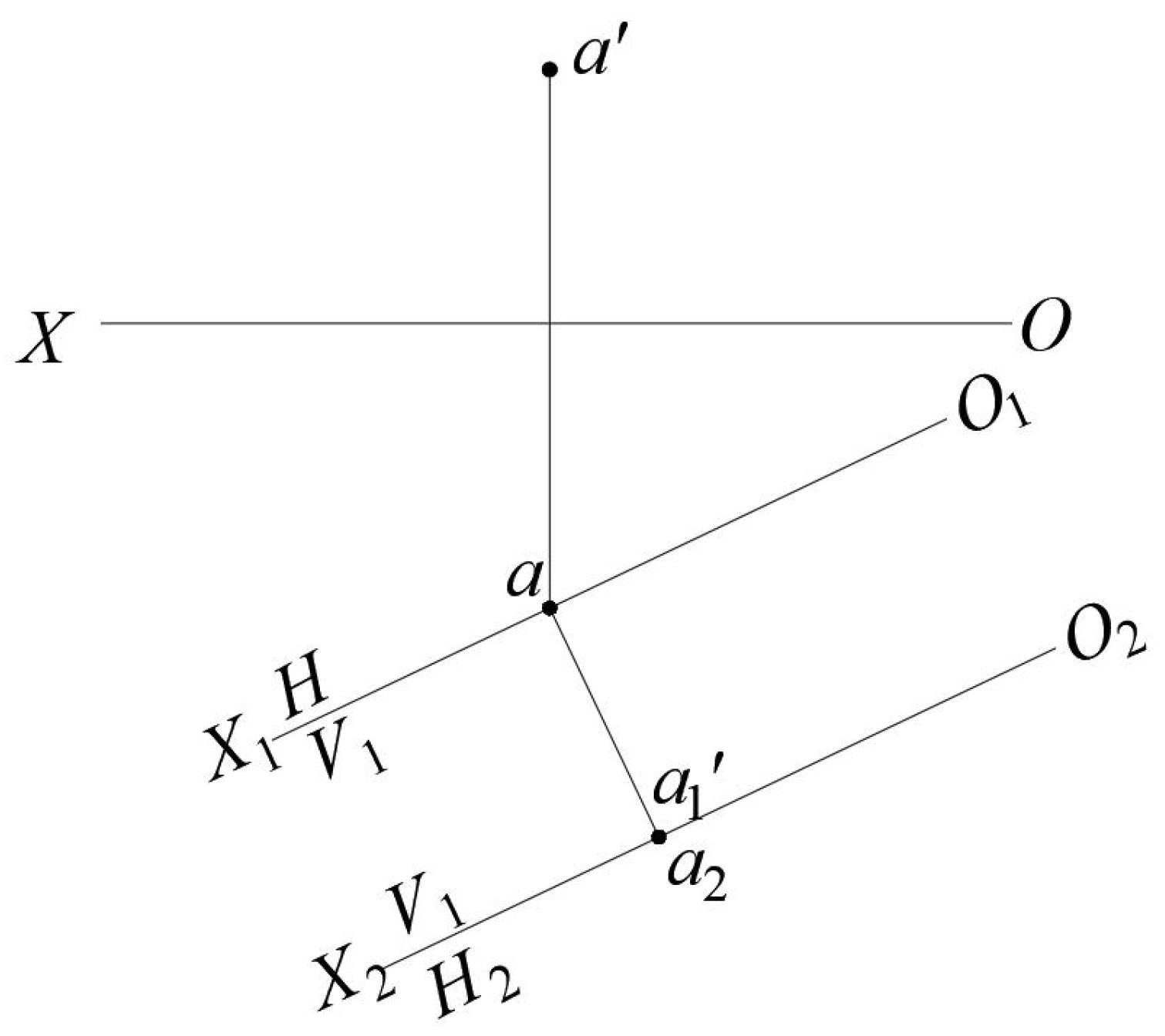

3.1. The Sparse Representation and Reconstruction Model of Points

As shown in Figure 4, consider a point A in 3D Euclidean space. During the first plane change, the new projection axis passes through the horizontal projection a of point A, so that in the new projection plane system point A lies on the new projection plane. In the second plane change, the new projection axis passes through the new projection of A, and in the newly formed projection plane system point A lies on the projection axis itself, so that only one of its coordinate values is non-zero.

The new projection plane system can take infinitely many forms. The simplest is the projection plane system obtained by translation, in which one axis passes through point A, so that the three-projection plane system can still be represented in the form of a unit matrix.

In the theory of compressed sensing, this matrix is referred to as a sparse matrix or a sensing matrix. Professor David L. Donoho of Stanford University suggests that this matrix does not need to be an orthogonal matrix [7], which simplifies our subsequent analysis.

Theorem 1. A measurement matrix corresponding to a point in 3D Euclidean space allows for the precise reconstruction of the original signal from the measurement signal.

Proof. Without loss of generality, let the measurement matrix (projection matrix) be denoted as $P$. According to the theory of compressed sensing, as long as the measurement matrix and the vector formed by the three coordinate values of a known point A are not orthogonal, we have:

(1) The measurement signal $y = Px$.

(2) $x = D\alpha$, where $D$ is a sparse matrix; $x$ is the original signal, corresponding to a point in 3D Euclidean space; and $\alpha$ is the sparse coefficient. Therefore, we have $y = PD\alpha$.

It is evident that, with a suitable choice of the coefficients, Equation (4) can be satisfied by a sparse $\alpha$, thereby meeting the sparsity requirement. Next, we use the sparse coefficients $\alpha$ obtained in this way to reconstruct the original signal $x$, which represents the coordinates of point A.

According to the original signal reconstruction steps in compressed sensing theory [8], we have:

It is evident that an exact reconstruction of the original signal x has been achieved. Hence, the proof is complete. □
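The idea behind Theorem 1 can be illustrated numerically. The sketch below is one possible reading, not the construction used in the proof: it assumes the local frame is obtained by rotation so that its first axis passes through the point, and it uses a hypothetical one-row measurement vector `p`; the names `frame_through_point`, `p`, `alpha` and `x_rec` are all illustrative.

```python
import numpy as np


def frame_through_point(x):
    """Orthonormal 3x3 matrix whose first column points from the origin towards x."""
    d1 = x / np.linalg.norm(x)
    helper = np.eye(3)[np.argmin(np.abs(d1))]   # global axis least aligned with d1
    d2 = np.cross(d1, helper)
    d2 /= np.linalg.norm(d2)
    d3 = np.cross(d1, d2)
    return np.column_stack([d1, d2, d3])


x = np.array([2.0, 3.0, 6.0])          # point A, distance 7 from the origin
D = frame_through_point(x)             # assumed sparse (sensing) matrix
alpha = D.T @ x                        # coefficients in the local frame: one non-zero entry

p = np.array([1.0, 1.0, 1.0])          # assumed measurement vector, not orthogonal to x
y = p @ x                              # a single scalar measurement

# Reconstruction: the support is the first column of D, so one equation is enough.
a1 = y / (p @ D[:, 0])
x_rec = a1 * D[:, 0]
```

If `p` were orthogonal to `x`, the measurement `y` would be zero and the division would fail, which matches the non-orthogonality condition stated in the proof.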

3.2. The Sparsity and Reconstruction Representation of a Straight Line

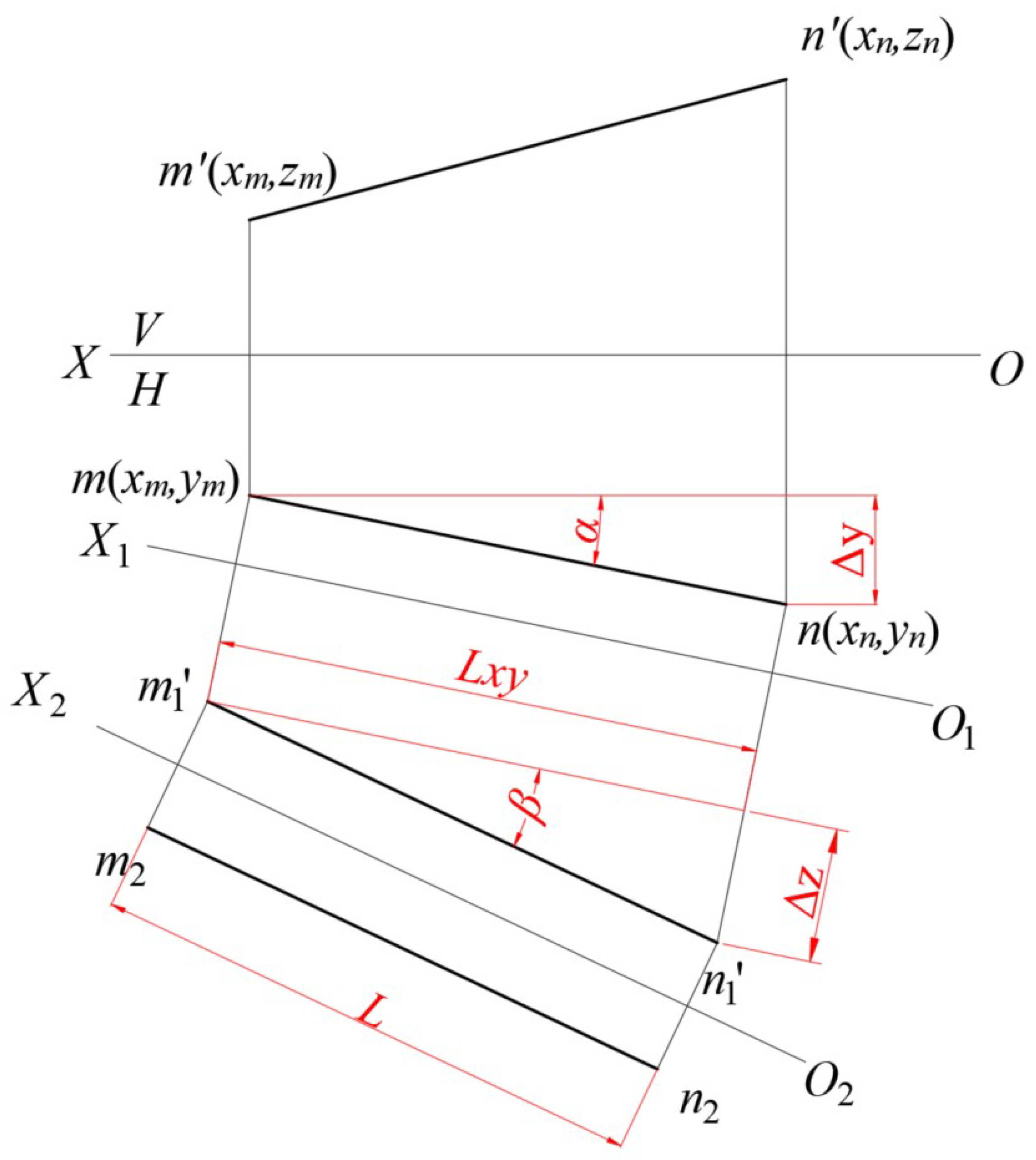

It should be noted that the signal being processed is a straight line, and a line segment can be determined by two points, which already confirms the sparsity of the signal. In descriptive geometry, to further achieve the sparse representation of a straight line, one can use two plane transformations to convert a general straight line into a line perpendicular to the projection plane; translating the coordinate system so that one of its axes coincides with the line then gives the sparsest representation of the line. As shown in Figure 5, the line segment MN is a general straight line which, after two plane transformations, becomes a line perpendicular to the projection plane in the new projection plane system.

For convenience of description, assume that after two changes of the projection plane, one projection axis of the new projection plane system coincides with the line segment MN, so that the segment can be written in vector form both in the new projection plane system and in the original one. The sparse matrix is defined from these two representations.

According to compressed sensing theory, the original line signal can then be represented using a sparse basis, and the sparse coefficients can be obtained by direct observation. We shall not delve into the methods for solving the sparse coefficients at this juncture, but it is important to note that the solution obtained here is the sparsest one.

Next, we state the compressed sensing result for a line segment as a theorem [11].

Theorem 2. The measurement matrix corresponding to the line segment formed by two points M and N in three-dimensional Euclidean space allows for the accurate reconstruction of the original signal from the measured data.

Proof. Without loss of generality, let the measurement matrix (projection matrix) be $P$. According to compressed sensing theory, we have:

(1) The measurement signal $y = Px$.

(2) $x = D\alpha$, where $D$ is a sparse matrix; $x$ is the original signal, corresponding to two points in 3D Euclidean space; and $\alpha$ is the sparse coefficient. Therefore, $y = PD\alpha$.

It is not difficult to see from Equation (7) that, with a suitable choice of the coefficients, Equation (6) can be satisfied by a sparse $\alpha$, which meets the sparsification requirement. Using Equation (8), the sparse coefficient $\alpha$ can be obtained to reconstruct the original signal $x$. This completes the proof of the theorem. □

It is easy to see that in the sensing matrix the first and fourth column vectors are the most crucial, while the second, third, fifth, and sixth column vectors only need to be uncorrelated with them. For this reason, the observation matrix only needs to retain the information from the first and fourth column vectors, so an observation matrix with 2 rows and 6 columns suffices.
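The role of the first and fourth columns can be made concrete with a small sketch. It rests on several assumptions that are not taken from the text: the coordinate system has been translated so that the line through the two endpoints passes through the origin, the 6 x 6 sensing matrix is block-diagonal with the same orthonormal frame `R` for both endpoints, and the 2 x 6 observation matrix simply consists of the first and fourth columns transposed. The endpoint values are made up.

```python
import numpy as np

# Assumed orthonormal local frame; its first column is the direction of the line.
R = np.array([[1.0, 2.0, 2.0],
              [2.0, 1.0, -2.0],
              [2.0, -2.0, 1.0]]) / 3.0
u = R[:, 0]

pt_m = 3.0 * u                        # endpoint M = (1, 2, 2)
pt_n = 9.0 * u                        # endpoint N = (3, 6, 6)
x = np.concatenate([pt_m, pt_n])      # original signal: six coordinate values

D = np.kron(np.eye(2), R)             # assumed 6 x 6 sensing matrix (one frame per endpoint)
alpha = D.T @ x                       # sparse coefficients, non-zero only in entries 1 and 4

key_cols = D[:, [0, 3]]               # first and fourth column vectors
P = key_cols.T                        # assumed observation matrix with 2 rows and 6 columns
y = P @ x                             # two measurements

x_rec = key_cols @ y                  # reconstruction from the two measurements
```

The two measurements are the signed distances of M and N along the line, which is all the information needed once the line direction is known.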

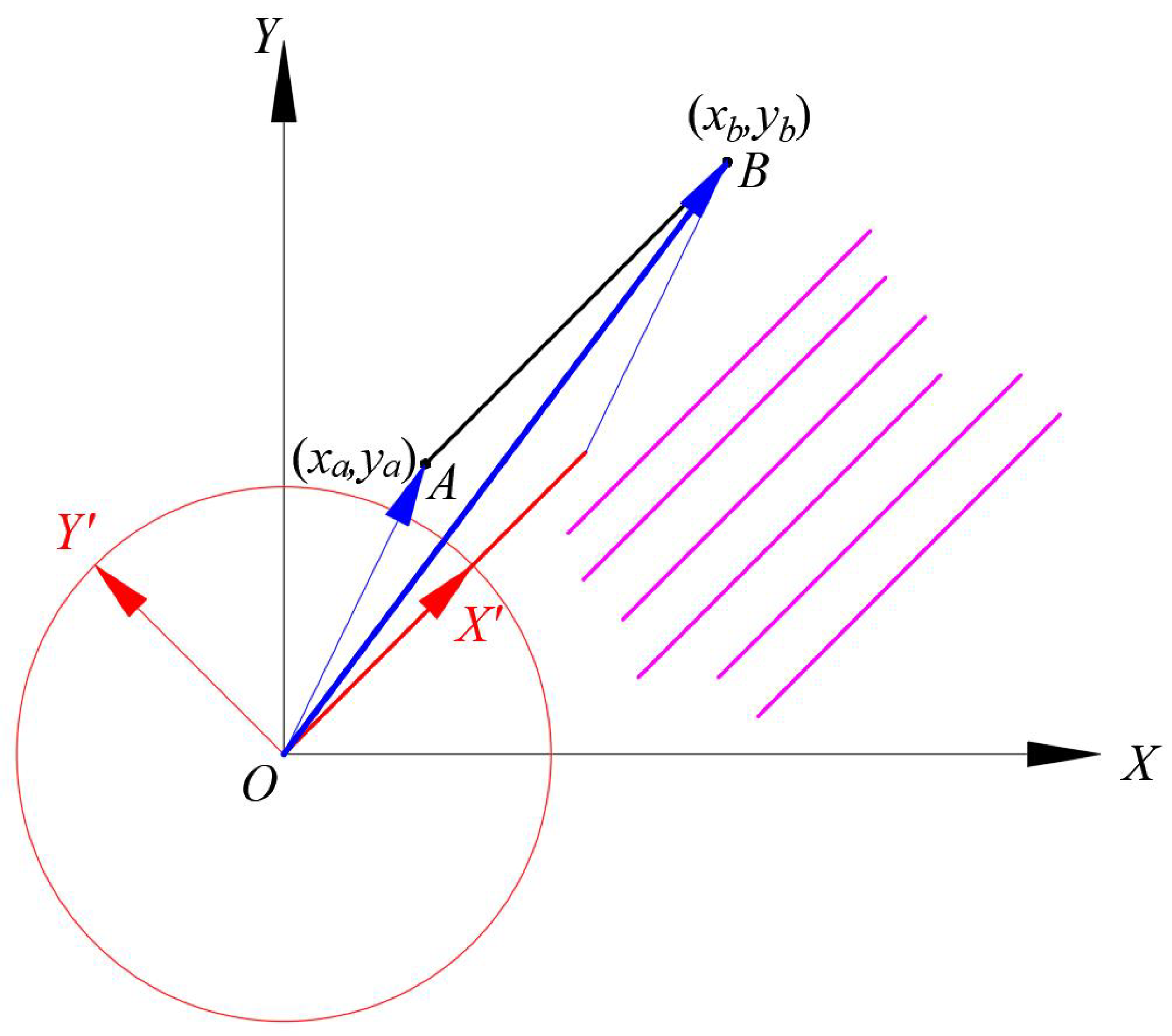

For a line segment, another, more general vector expression transforms the line segment into a line parallel to the coordinate axes, using a linear combination of the vector formed by the endpoint coordinates and the coordinate axis vectors. As shown in Figure 6, the red unit vector is parallel to the line segment AB; its components are the coordinate differences between the two endpoints divided by the length of AB.

In this method of representation, the local coordinate axes that make up the sensing matrix are parallel to, but do not coincide with, the direction of the known line segment. The line segment AB is expressed as a vector of its endpoint coordinates, and its sparse expression, written in matrix form with the corresponding sensing matrix, has a sparsest solution that compressed sensing theory expresses as an optimisation problem.

The coefficients can be read off directly. At first glance, the sparse coefficient vector does not appear sufficiently sparse. However, the matrix possesses a certain degree of generality, enabling it to represent all lines parallel to line AB, such as the magenta parallel lines shown in Figure 6. Furthermore, when additional points are added on line AB, the number of non-zero elements in the sparse coefficient vector does not need to increase. For instance, when point C is added on the extension of line AB so that points A, B, and C are evenly spaced, the signal containing the three points still has a sparse expression in matrix form, and its coefficients can again be obtained by direct observation.

Similar to the analysis of Theorem 2, the key to the aforementioned sensing matrix lies in its first four column vectors. As long as the observation matrix can capture the first four column vectors of the sensing matrix, it guarantees the accurate reconstruction of the original signal x from the observed signals.
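One way to read the statement about the first four column vectors is sketched below. The layout of those columns is an assumption: three columns copy the coordinates of point A into every point, and a fourth column places the unit direction vector at multiples of the spacing. The point values and the names `D4`, `alpha` and `x_parallel` are illustrative only.

```python
import numpy as np

pt_a = np.array([1.0, 2.0, 3.0])
pt_b = np.array([2.0, 4.0, 5.0])
length = np.linalg.norm(pt_b - pt_a)        # length of segment AB (3 for these values)
e = (pt_b - pt_a) / length                  # unit vector parallel to AB
pt_c = pt_b + length * e                    # C on the extension of AB, evenly spaced

x = np.concatenate([pt_a, pt_b, pt_c])      # signal containing the three points

# Assumed first four columns of the sensing matrix.
anchor_cols = np.vstack([np.eye(3)] * 3)                          # copy A into each point
direction_col = np.concatenate([np.zeros(3), e, 2 * e])[:, None]  # 0, 1, 2 steps along e
D4 = np.hstack([anchor_cols, direction_col])                      # shape (9, 4)

alpha = np.concatenate([pt_a, [length]])    # four non-zero coefficients
x_from_alpha = D4 @ alpha

# Recover the coefficients from the signal by least squares.
alpha_hat, *_ = np.linalg.lstsq(D4, x, rcond=None)

# The same columns represent a parallel line: only the anchor coefficients change.
shifted_anchor = np.array([5.0, -1.0, 0.5])
x_parallel = D4 @ np.concatenate([shifted_anchor, [length]])
```

Adding the third point enlarges the signal from six to nine values while the coefficient vector keeps four non-zero entries, which is the behaviour described above.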

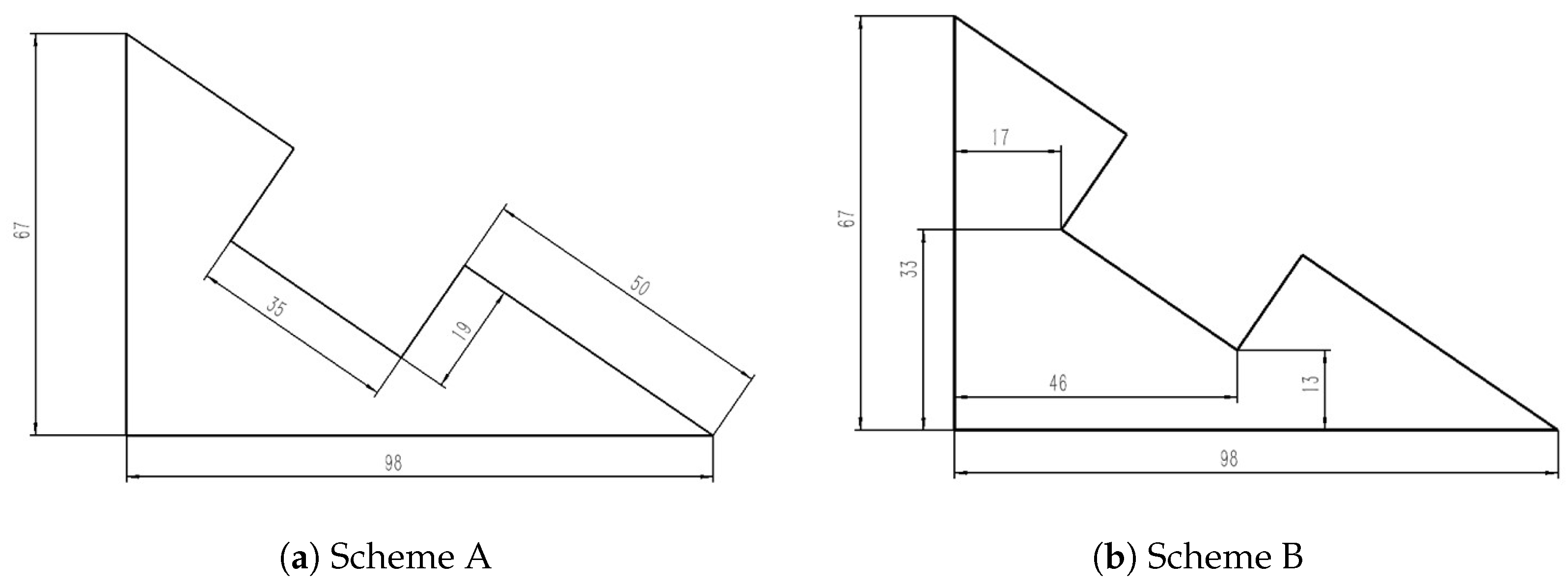

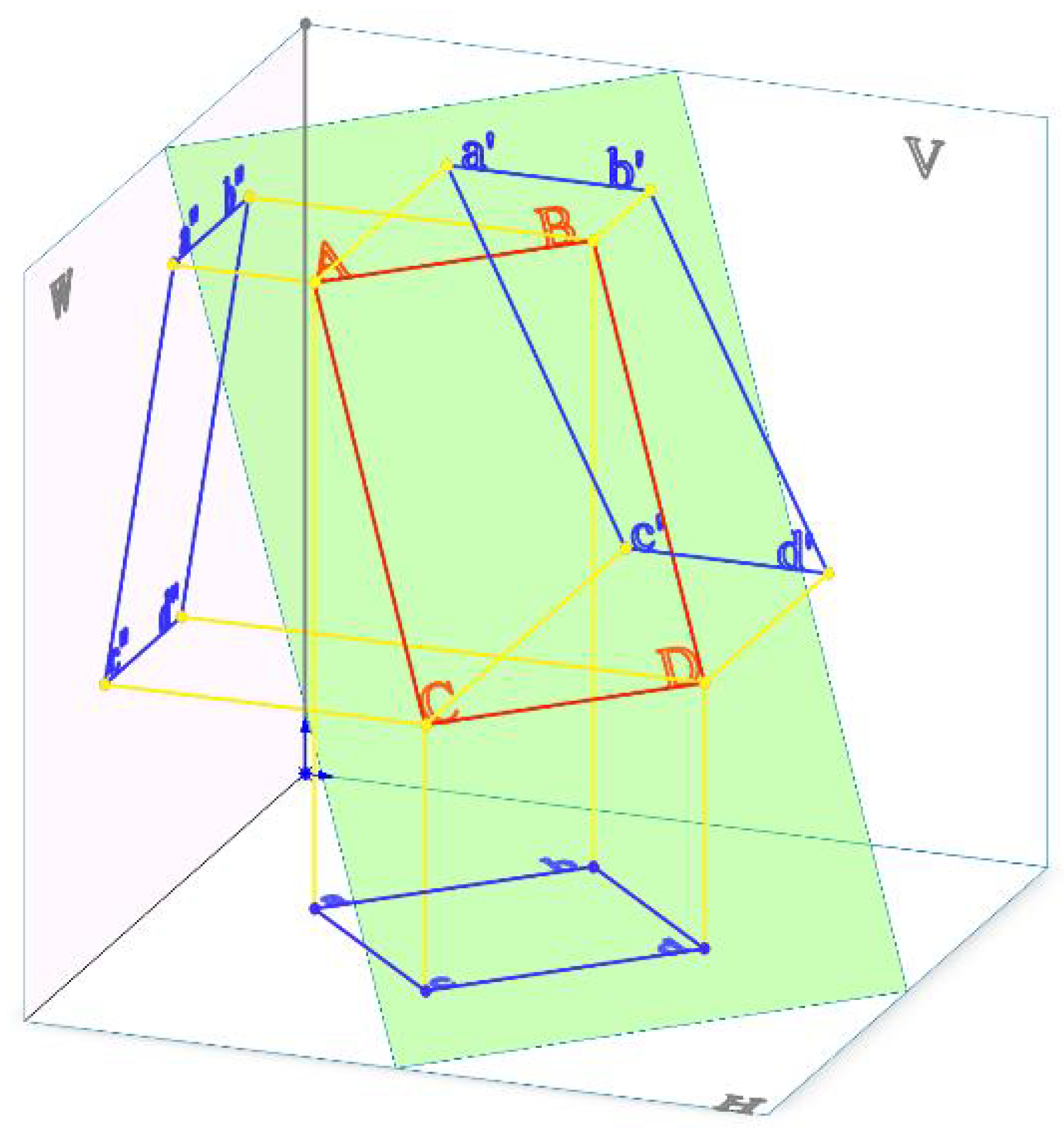

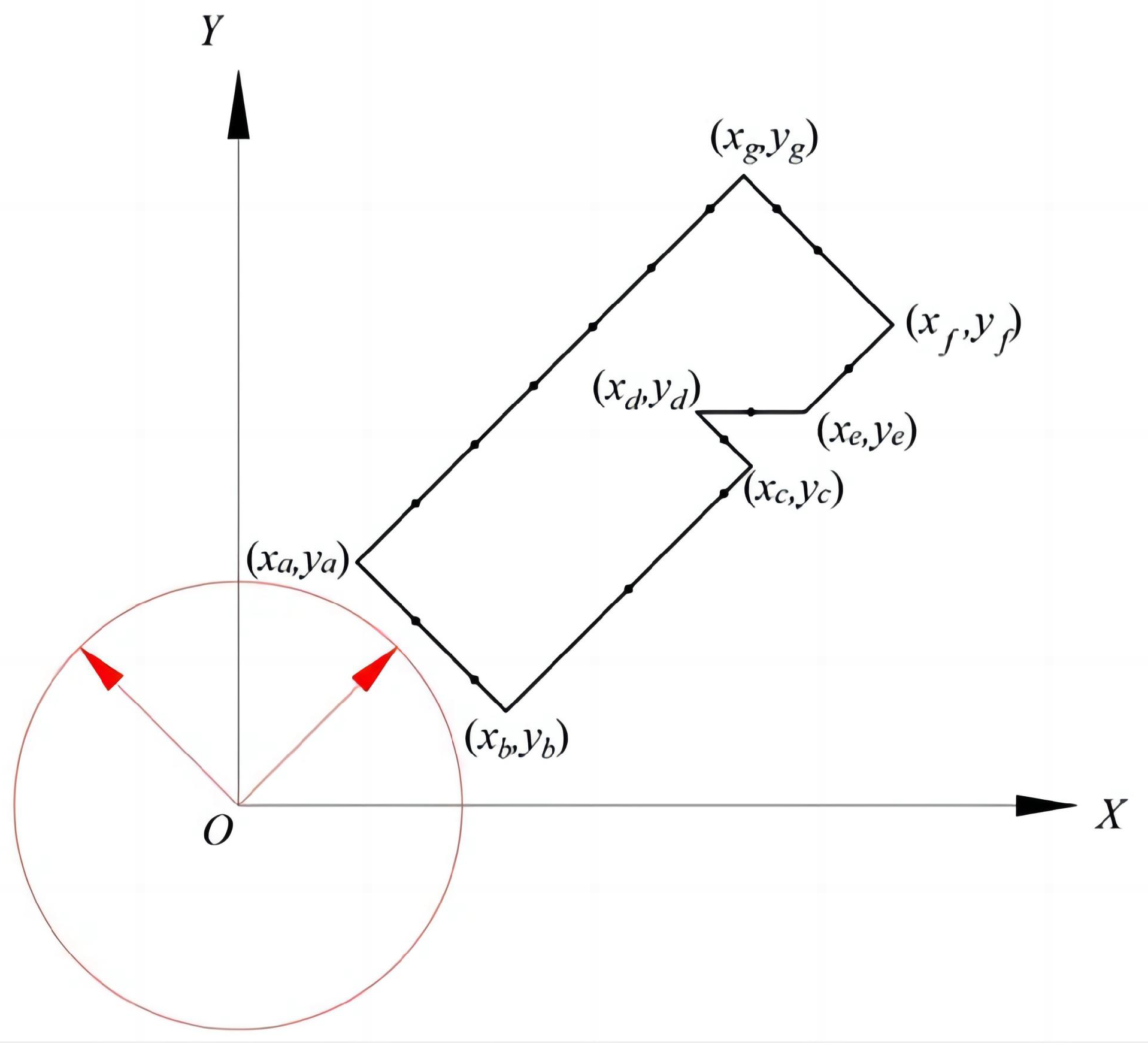

3.3. Sparsity of Planar Polygons

In three-dimensional Euclidean space, a point requires three coordinate values to determine its position, and a line segment requires two endpoints, that is, 6 coordinate values. However, when a point in space lies on one of the coordinate axes, only one of its coordinate values is non-zero; similarly, a line segment on an axis has only two non-zero coordinate values. For instance, a point A on the X-axis has coordinates $(x_A, 0, 0)$, and a line segment on the X-axis has coordinates $(x_1, 0, 0, x_2, 0, 0)$.

For a planar polygon formed by k characteristic points, the method of plane projection can be employed so that the plane coincides with a projection plane, such as the horizontal projection plane. In this case, the z-coordinates of these k characteristic points are zero, and the vector composed of the coordinate values of these k points is $2k$-sparse. As shown in Figure 7, the four characteristic points of a quadrilateral lie on the same plane, forming a vector with 12 elements, of which 4 elements are zero.

In the theory of sparse representation of lines, only one dimension of the three-dimensional coordinate system is utilised, and the other two coordinate axes are effectively redundant. For simplicity, we will directly use planar figures as examples to illustrate the principle of compressed sensing for planar figures with certain geometric structures. As shown in Figure 7, a seemingly complex planar polygon has edges that actually follow only two directions. In the local coordinate system formed by the two red-marked vectors, all contour edges are either horizontal or vertical lines. Compressed sensing theory exploits this particular geometric structure.

As illustrated in Figure 8, the principal components are immediately apparent; however, the structure is not symmetrical. Although the dataset is planar, its principal components should encompass three directions, and this can be further extended to additional directions. The model can be described as follows:

Clearly, this is a problem of sparse representation and compressed sensing.

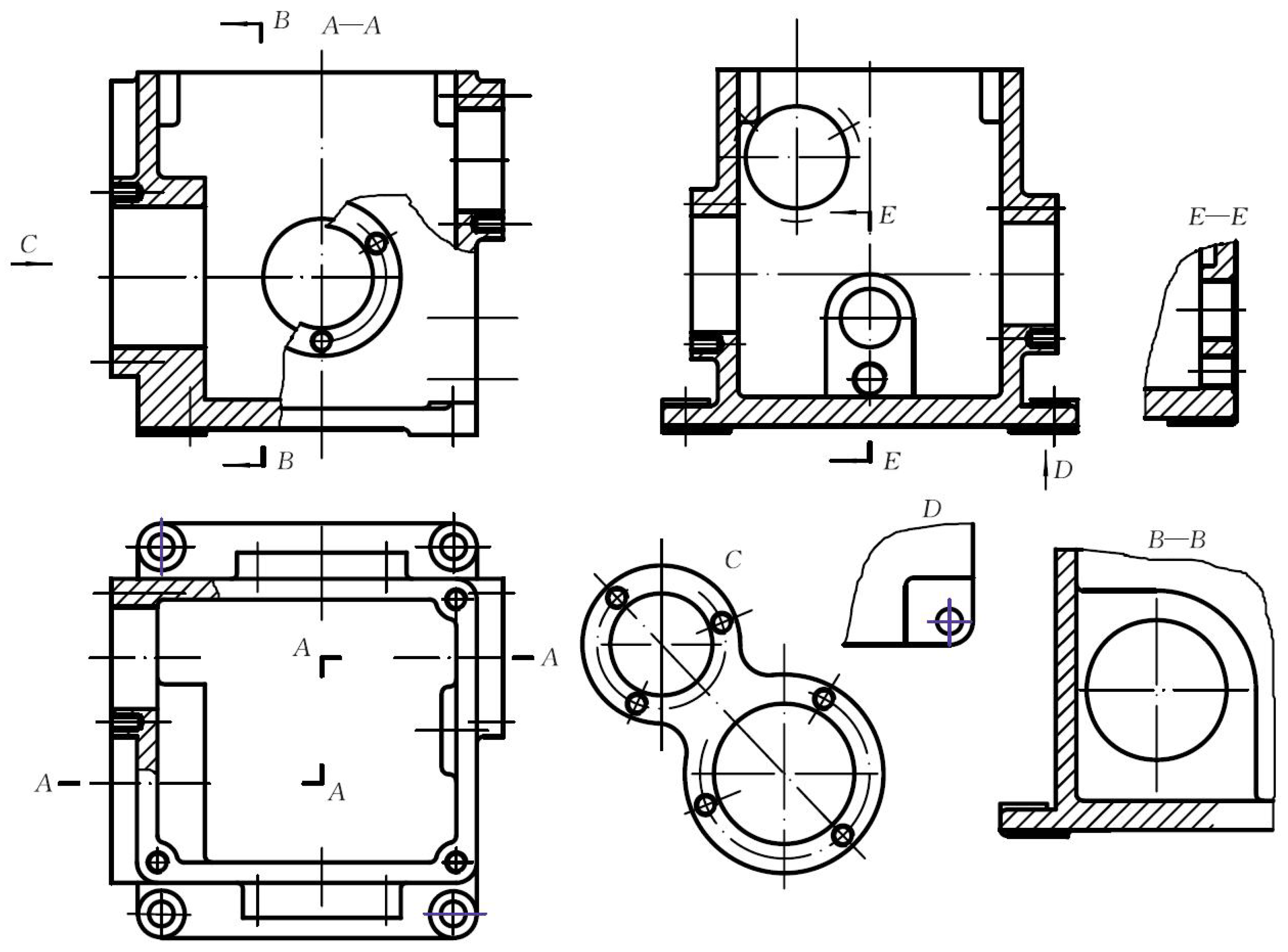

3.4. Sparsity of Solids

Basic solids of revolution (cylinders, cones, spheres) and plane solids with symmetrical properties (prisms and pyramids), despite having numerous lines in their diagrams, can achieve compressed sensing through their axis of symmetry and axis of rotation. It can be demonstrated that the eigenvectors of the covariance matrix formed by the characteristic points of a solid correspond to the axis of symmetry or the axis of rotation of the solid. This is the primary reason for performing singular value decomposition on data matrices in sparse representation, compressed sensing, and deep learning [12]. For symmetric geometric bodies involved in descriptive geometry and engineering drawing, the axis of rotation or symmetry can be directly obtained from the eigenvectors of the data matrix.

Store the given m pieces of n-dimensional data as the columns of an $n \times m$ matrix D, then perform zero-mean normalisation on each row of D. Next, construct the covariance matrix of the dataset and compute its eigenvalues and eigenvectors. Arrange the eigenvectors as the rows of a matrix, sorted by their corresponding eigenvalues from largest to smallest, and select the first k rows to form matrix P. Multiplying matrix P by the dataset matrix then gives the dimensionally reduced data.
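The procedure above can be sketched in a few lines of NumPy. The sketch makes some assumptions that the text leaves open: each column of `D` is one data point, the covariance is normalised by the number of points `m`, and the projection is applied to the zero-mean data. The function name `pca_rows` and the sample data are illustrative.

```python
import numpy as np


def pca_rows(D, k):
    """PCA for an n x m matrix D whose columns are the m data points.

    Returns P (k x n, eigenvectors as rows), the reduced data (k x m)
    and all eigenvalues sorted from largest to smallest.
    """
    centred = D - D.mean(axis=1, keepdims=True)      # zero-mean each row
    cov = centred @ centred.T / D.shape[1]           # n x n covariance matrix
    eigvals, eigvecs = np.linalg.eigh(cov)           # symmetric matrix: eigh, ascending order
    order = np.argsort(eigvals)[::-1]                # largest eigenvalue first
    P = eigvecs[:, order[:k]].T                      # first k eigenvectors as rows
    return P, P @ centred, eigvals[order]


# Four 2D points on the line y = 2x: a single principal direction carries everything.
D = np.array([[1.0, 2.0, 3.0, 4.0],
              [2.0, 4.0, 6.0, 8.0]])
P, reduced, eigvals = pca_rows(D, k=1)
```

For this data the single row of `P` is parallel to the direction (1, 2), up to sign, and the second eigenvalue is zero, so one coordinate per point is enough.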

If we take the characteristic points that define a line or a plane as a dataset, can we use Principal Component Analysis (PCA) to analyse the principal components of this dataset? Without loss of generality, let us consider the cuboid shown in Figure 9 as an example. The axis of the cuboid (depicted by the blue dashed line) is in a general position with respect to the coordinate system shown. Based on the symmetry of the cuboid’s vertices relative to the midpoint O, it can be deduced that

According to the principles of Principal Component Analysis, the set of points formed by the eight vertices of the cuboid can be represented in the following matrix form:

The covariance matrix corresponding to matrix D is denoted C, and its eigenvalues can be determined as follows:

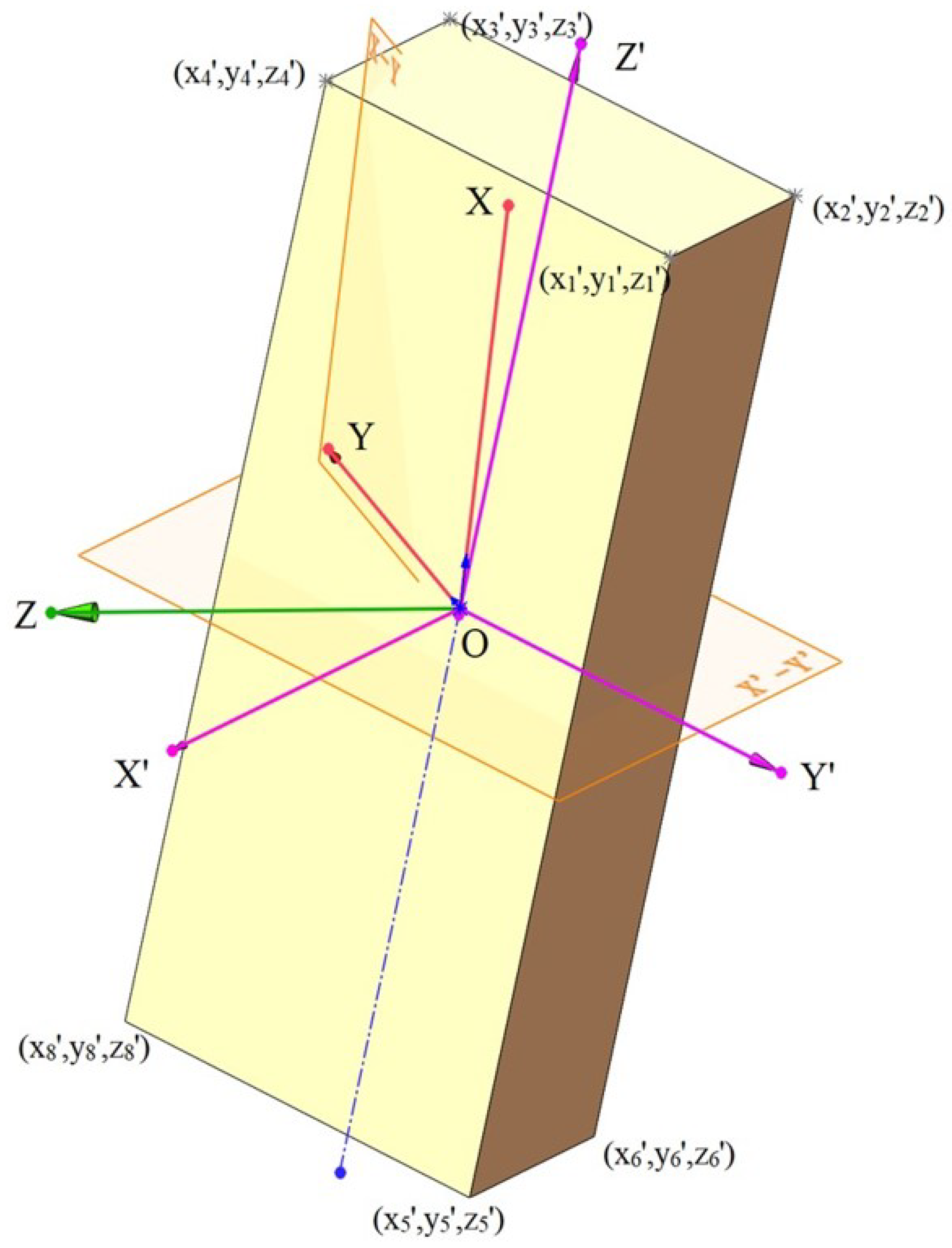

Directly computing the corresponding eigenvalues and eigenvectors is clearly rather challenging. To simplify the calculation, we use the change-of-plane method to transform the central axis of the cuboid (indicated by the blue dashed line) into a line perpendicular to the projection plane, as shown in Figure 10.

Without loss of generality, the new projection plane system (purple coordinate axes) shown in Figure 10 can be easily obtained by selecting the axonometric axes and adopting the change-of-plane method. The coordinate values of the 8 vertices in the new coordinate system are shown in Figure 10. Let the length, width, and height of this cuboid be 2a, 2b, and 2c, respectively; then

In the new coordinate system, the set of points formed by the 8 vertices of the cuboid can be represented in the following matrix form:

The eigenvalues of the covariance matrix corresponding to matrix D’ can be determined by the following method:

Hence, it is not difficult to deduce:

Next, we seek the corresponding eigenvectors.

According to the definition of matrix eigenvectors, substituting Equation (12) into Equation (13) gives equation system (14). Solving equation system (14) yields the eigenvector corresponding to each of the three eigenvalues. These three eigenvectors have clear geometric interpretations, as they correspond to the three purple coordinate axes in Figure 10.
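As a worked sketch of this computation, assume that in the new coordinate system the eight vertices are $(\pm a, \pm b, \pm c)$ and that the covariance matrix is normalised by the number of vertices. The symbols $D'$, $C'$, $v_i$, $\lambda_i$ and $e_i$ below follow these assumptions and need not match the original notation.

```latex
% Columns of D' are the eight vertices v_i = (\pm a, \pm b, \pm c)^T (assumed).
D' D'^{\mathsf T} = \sum_{i=1}^{8} v_i v_i^{\mathsf T}
  = 8 \begin{pmatrix} a^2 & 0 & 0 \\ 0 & b^2 & 0 \\ 0 & 0 & c^2 \end{pmatrix},
\qquad
C' = \tfrac{1}{8}\, D' D'^{\mathsf T}
  = \begin{pmatrix} a^2 & 0 & 0 \\ 0 & b^2 & 0 \\ 0 & 0 & c^2 \end{pmatrix}.

% The off-diagonal sums vanish because every product +ab, +ac, +bc
% is cancelled by a vertex with the opposite sign combination.
\det(C' - \lambda I) = (a^2 - \lambda)(b^2 - \lambda)(c^2 - \lambda) = 0
\;\Longrightarrow\;
\lambda_1 = a^2,\quad \lambda_2 = b^2,\quad \lambda_3 = c^2,

e_1 = (1, 0, 0)^{\mathsf T},\quad
e_2 = (0, 1, 0)^{\mathsf T},\quad
e_3 = (0, 0, 1)^{\mathsf T}.
```

A different normalisation of the covariance matrix only rescales the eigenvalues; the eigenvectors remain the three coordinate axes of the new system.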

Next, we use the change-of-plane method to find the eigenvalues and eigenvectors in the original coordinate system. Because a rotation of the coordinate system transforms the covariance matrix by a similarity transformation, the eigenvalues of the same point set remain unchanged in different coordinate systems.

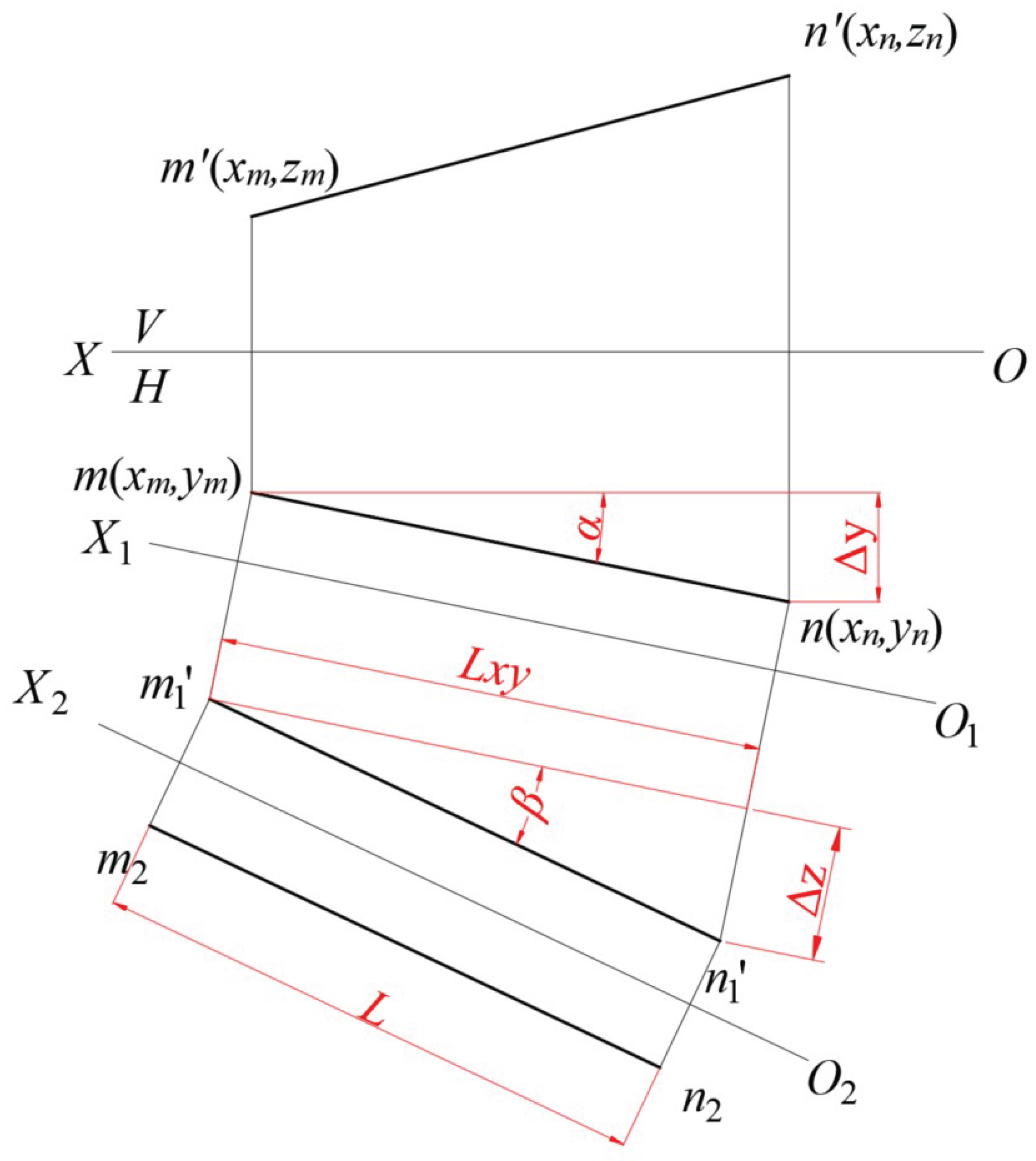

As shown in Figure 11, the vector MN represents an eigenvector of the vertex data matrix of the cuboid shown in Figure 10. Without loss of generality, in the new coordinate system (formed by the eigenvectors of the vertices of the symmetrical geometric object), the first coordinates of points M and N are -L/2 and L/2, respectively, and their projections shown in Figure 11 have the same first coordinates. Below, we transform the projections of the line segment MN from the new projection plane system back to the original projection plane system [13].

Step one: change the H-plane by rotating through an angle about the Y-axis. This step can be represented by the corresponding rotation transformation matrix as follows.

Step two: change the V-plane by rotating through an angle about the Z-axis. The corresponding rotation transformation matrix can be represented as

Step three: transform the projections of the line segment MN from the new projection plane system back to the original projection plane system.

The result indicates that, regardless of how a cuboid is rotated, the vectors corresponding to its edges are the eigenvectors of the matrix obtained by multiplying its vertex data matrix with its transposed matrix. Therefore, the geometric interpretation of multiplying the vertex data matrix with its transposed matrix can be explained using the method of changing plane.
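This result can be checked numerically. The sketch below assumes a cuboid with half-dimensions a, b, c centred at the origin, rotated first about the Y-axis and then about the Z-axis. The angles, the sign conventions of `rot_y` and `rot_z`, and the dimensions are illustrative assumptions; the half-dimensions are chosen to be distinct so that the eigenvectors are unique up to sign.

```python
import itertools
import numpy as np


def rot_y(t):
    """Rotation about the Y-axis by angle t (right-handed convention, assumed)."""
    c, s = np.cos(t), np.sin(t)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])


def rot_z(t):
    """Rotation about the Z-axis by angle t (right-handed convention, assumed)."""
    c, s = np.cos(t), np.sin(t)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


a, b, c = 3.0, 2.0, 1.0
# 3 x 8 vertex data matrix in the cuboid's own axes: all sign combinations.
local_vertices = np.array(list(itertools.product([-a, a], [-b, b], [-c, c]))).T

R = rot_z(np.deg2rad(35.0)) @ rot_y(np.deg2rad(20.0))   # cuboid in general position
vertices = R @ local_vertices

scatter = vertices @ vertices.T          # vertex data matrix times its transpose
eigvals, eigvecs = np.linalg.eigh(scatter)
order = np.argsort(eigvals)[::-1]        # largest eigenvalue first
eigvals, eigvecs = eigvals[order], eigvecs[:, order]

# Columns of R are the edge directions; compare them with the eigenvectors (up to sign).
alignment = np.abs(eigvecs.T @ R)
```

Here `alignment` is close to the identity matrix, so each eigenvector is parallel to one edge direction, and the eigenvalues equal 8a², 8b² and 8c² regardless of the rotation angles.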

Hence, for basic three-dimensional solids with a symmetric structure, the choice of axonometric axes and the results of principal component analysis are consistent.

For asymmetrical structures, the corresponding eigenvector (principal component) directions are relatively complex. A combination of clustering and singular value decomposition (such as K-SVD) methods may help identify sparse vectors that can represent the characteristics of the shape.