Submitted: 30 May 2025

Posted: 30 May 2025

Abstract

Keywords:

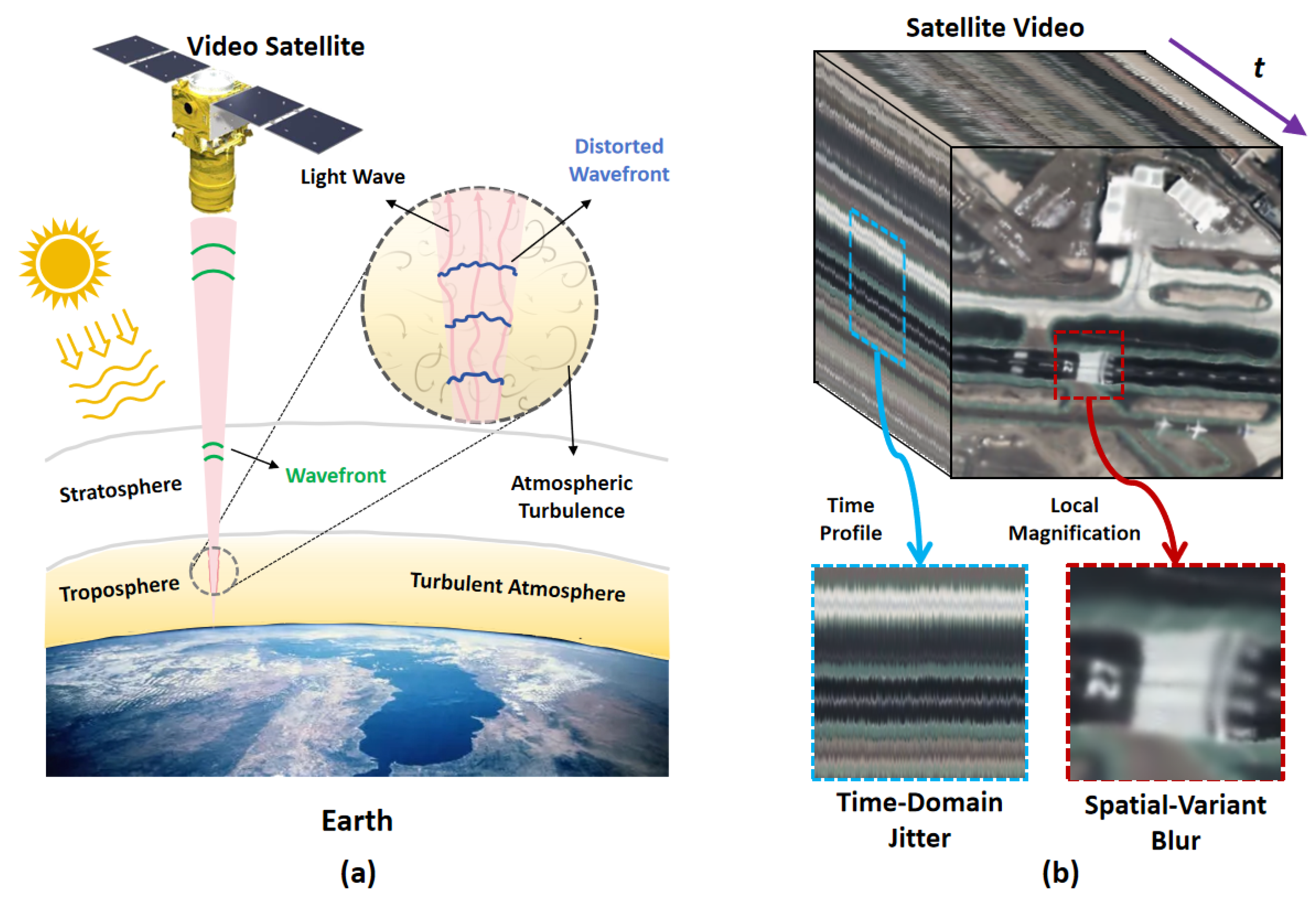

1. Introduction

2. Proposed Method

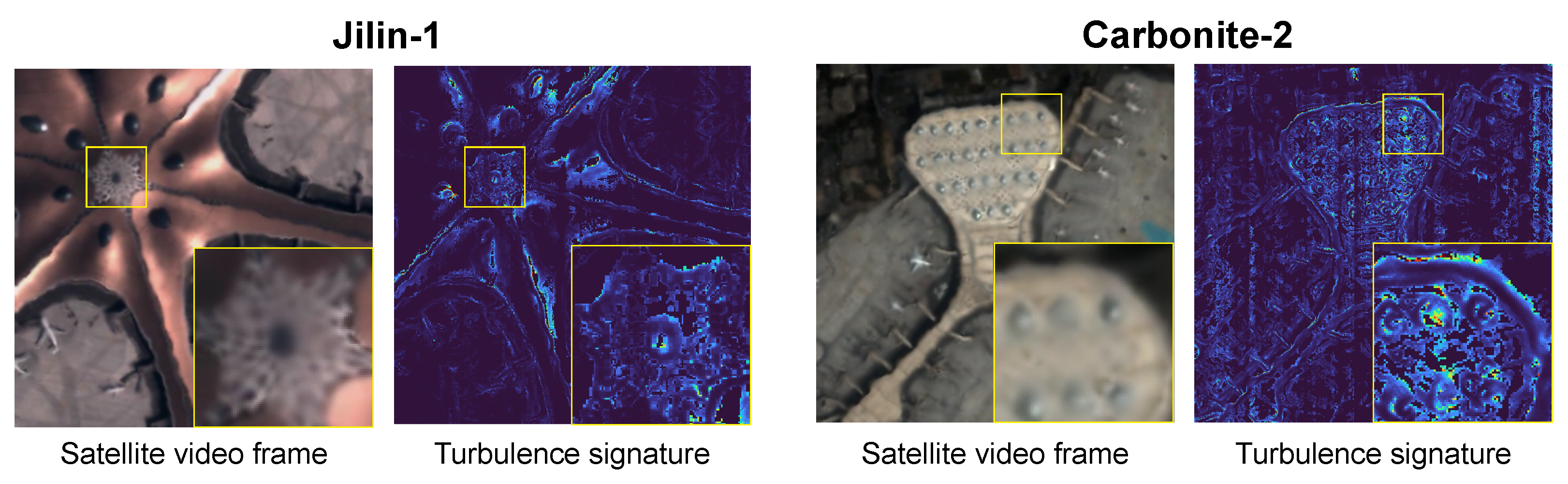

2.1. Turbulence Signature

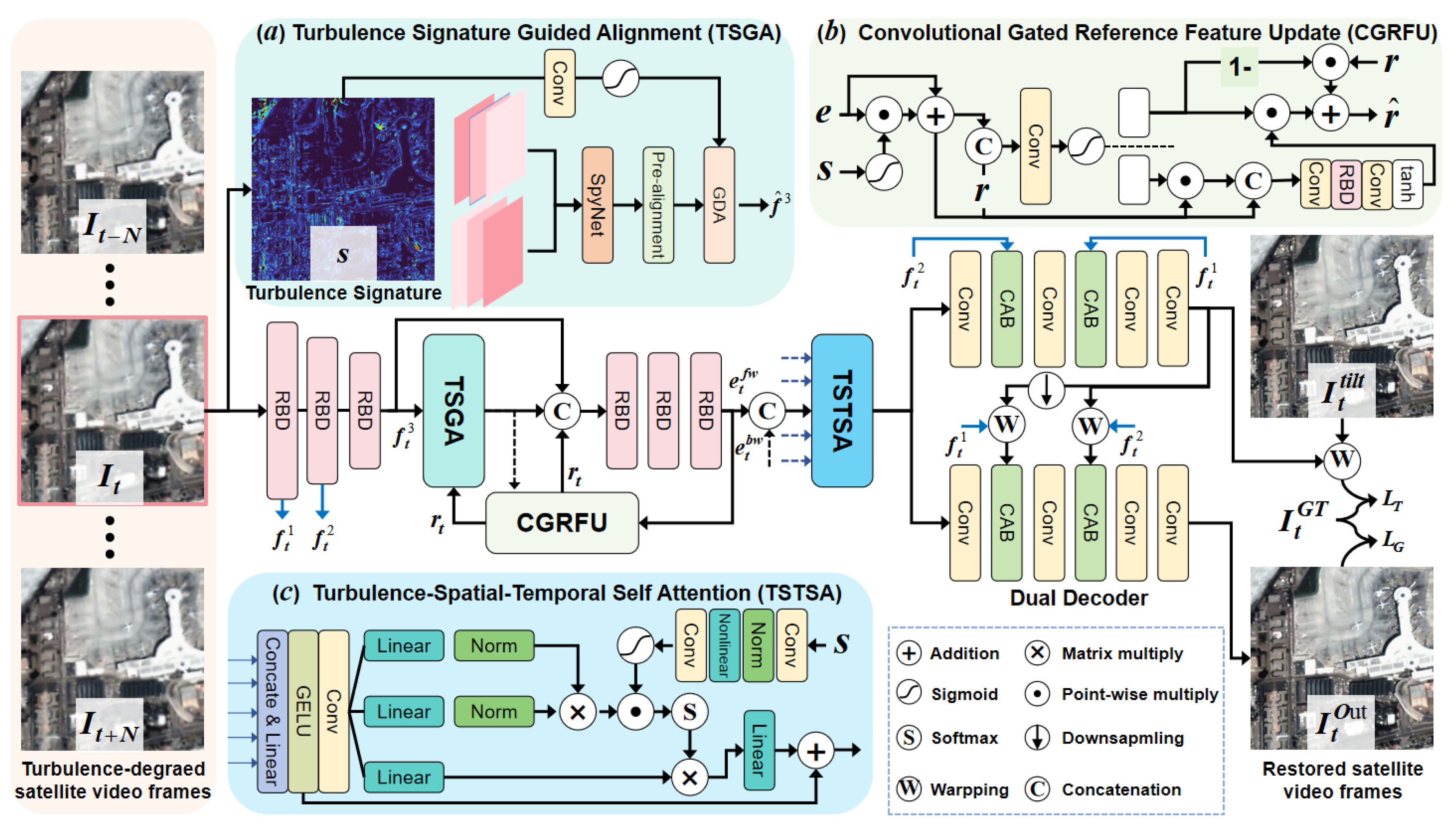

2.2. TMTS Network

2.2.1. Overview

2.2.2. TSGA Module for Feature Alignment

2.2.3. CGRFU Module for Reference Feature Update

2.2.4. TSTSA for Lucky Fusion

2.2.5. Loss Function

3. Experiment and Discussion

3.1. Satellite Video Datasource

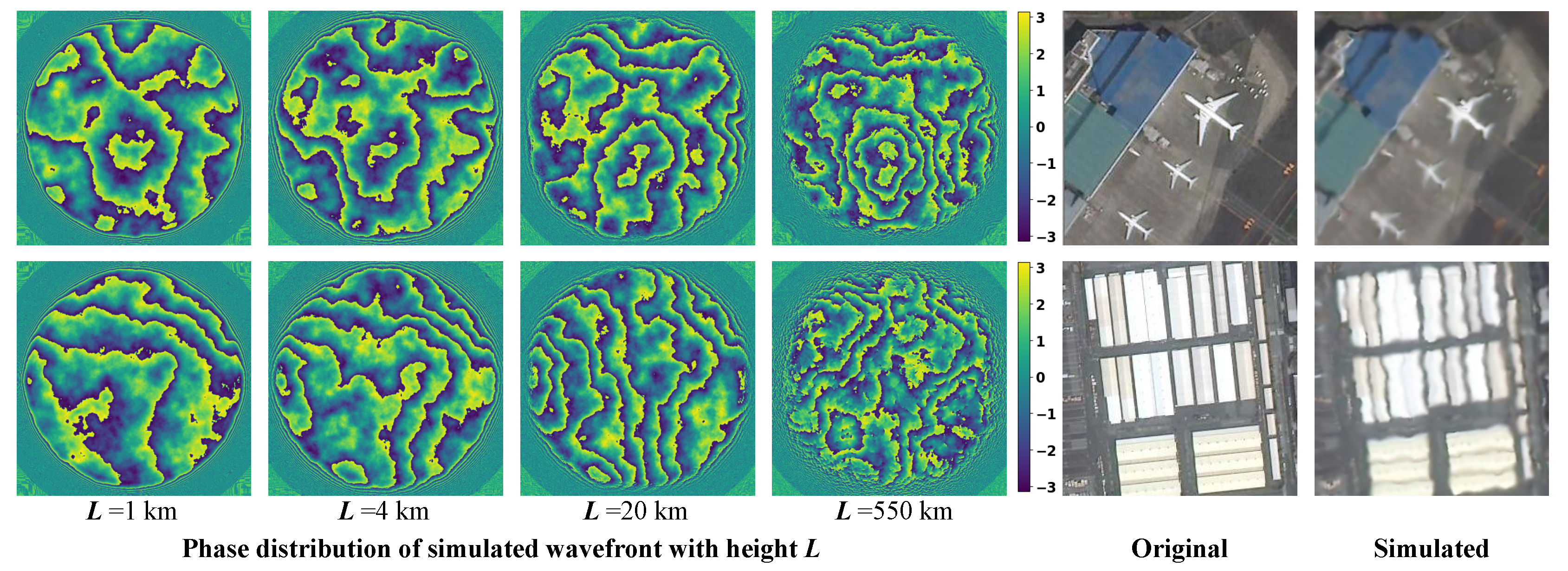

3.2. Paired Turbulence Data Synthesis

3.3. Implementation Details

3.4. Metrics

3.5. Performance on Synthetic Datasets

3.5.1. Quantitative Evaluation

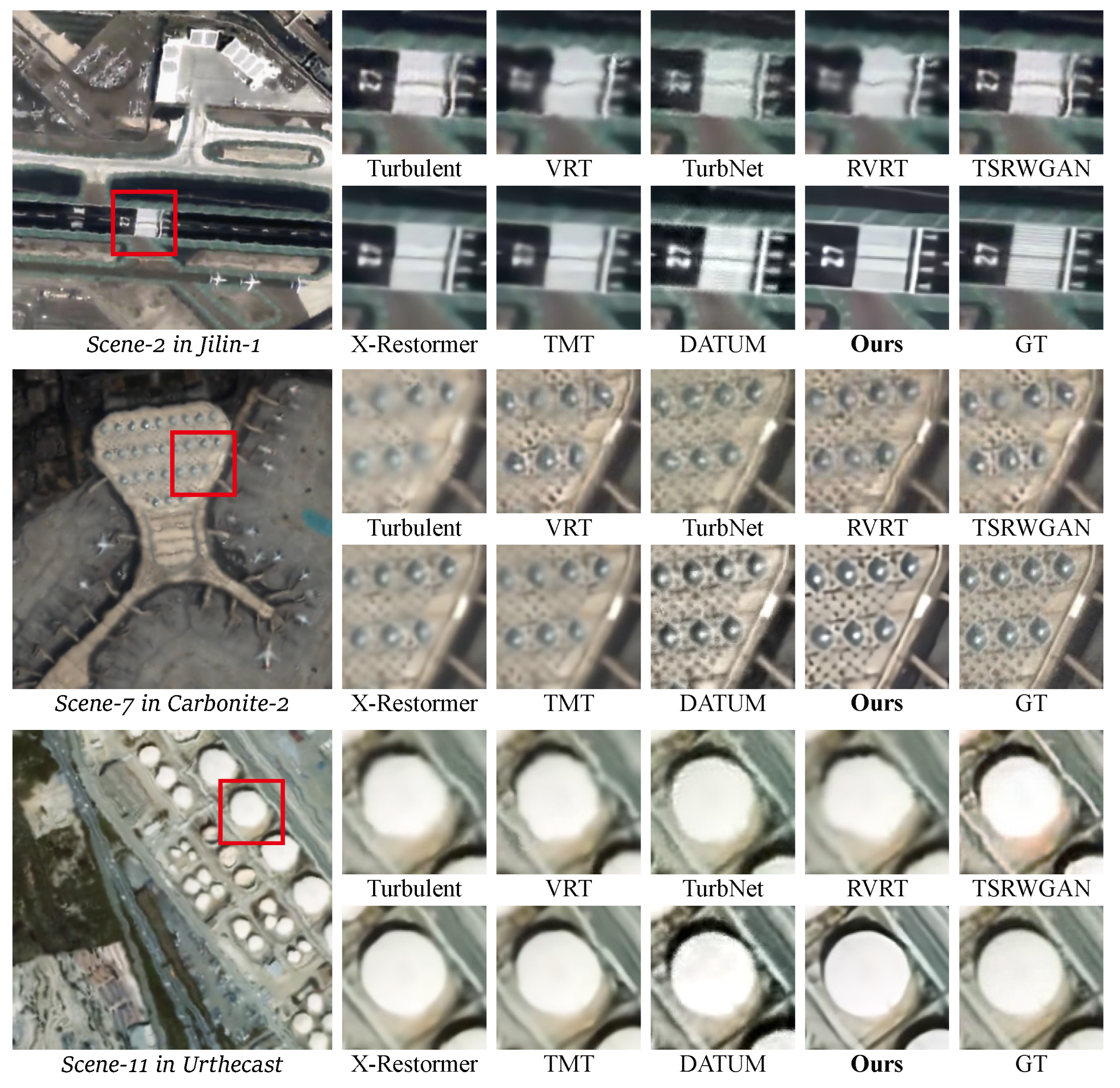

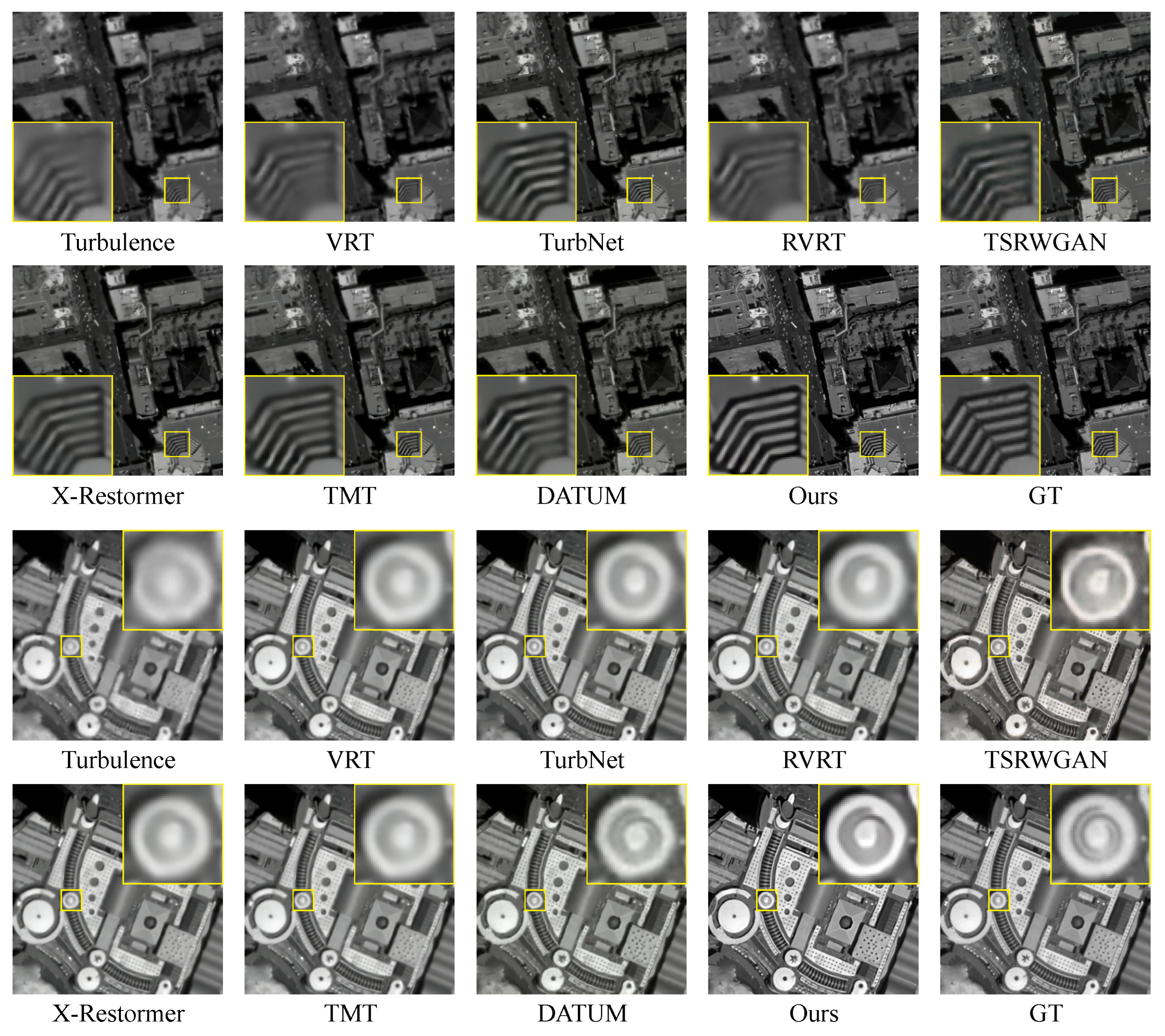

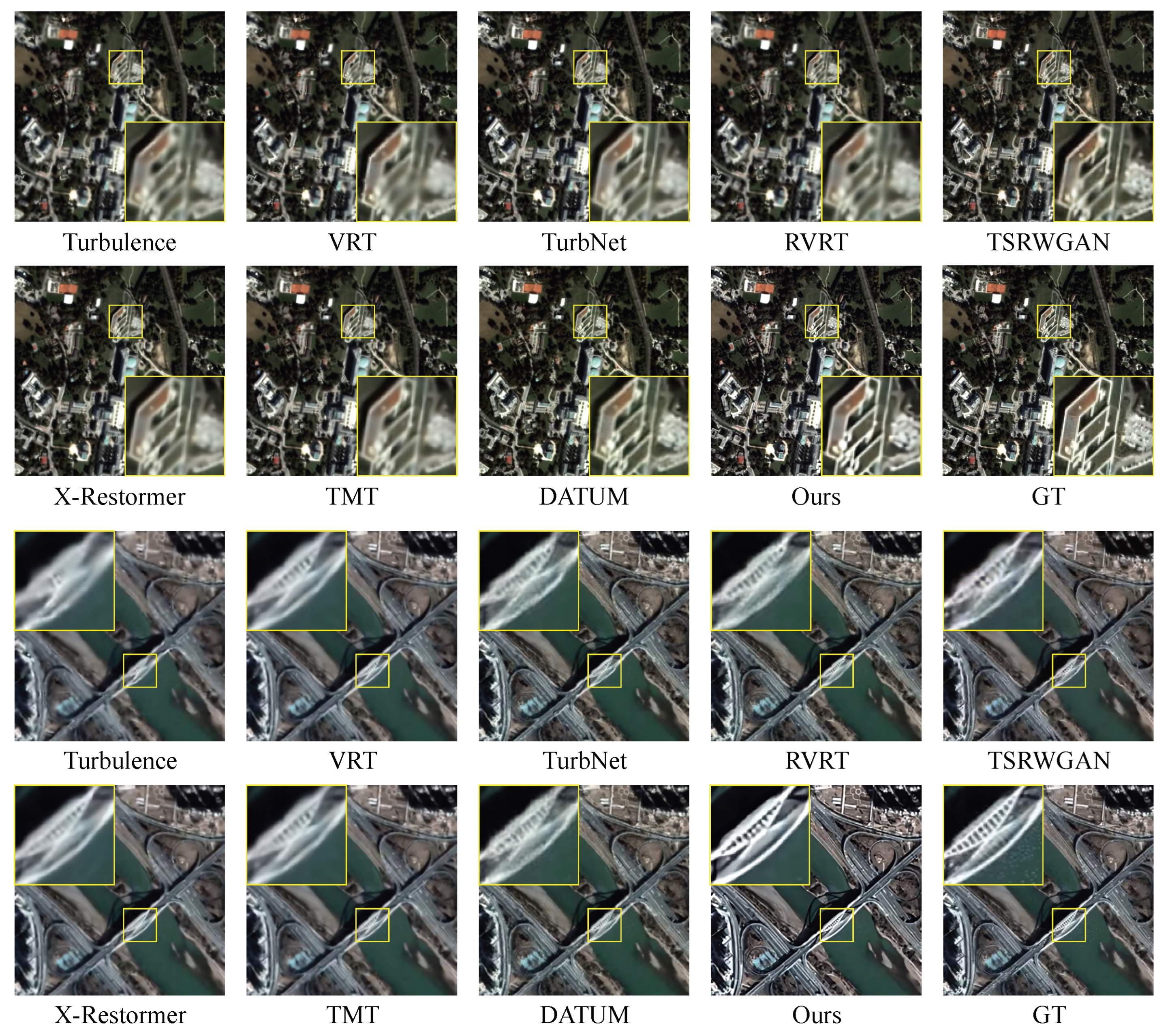

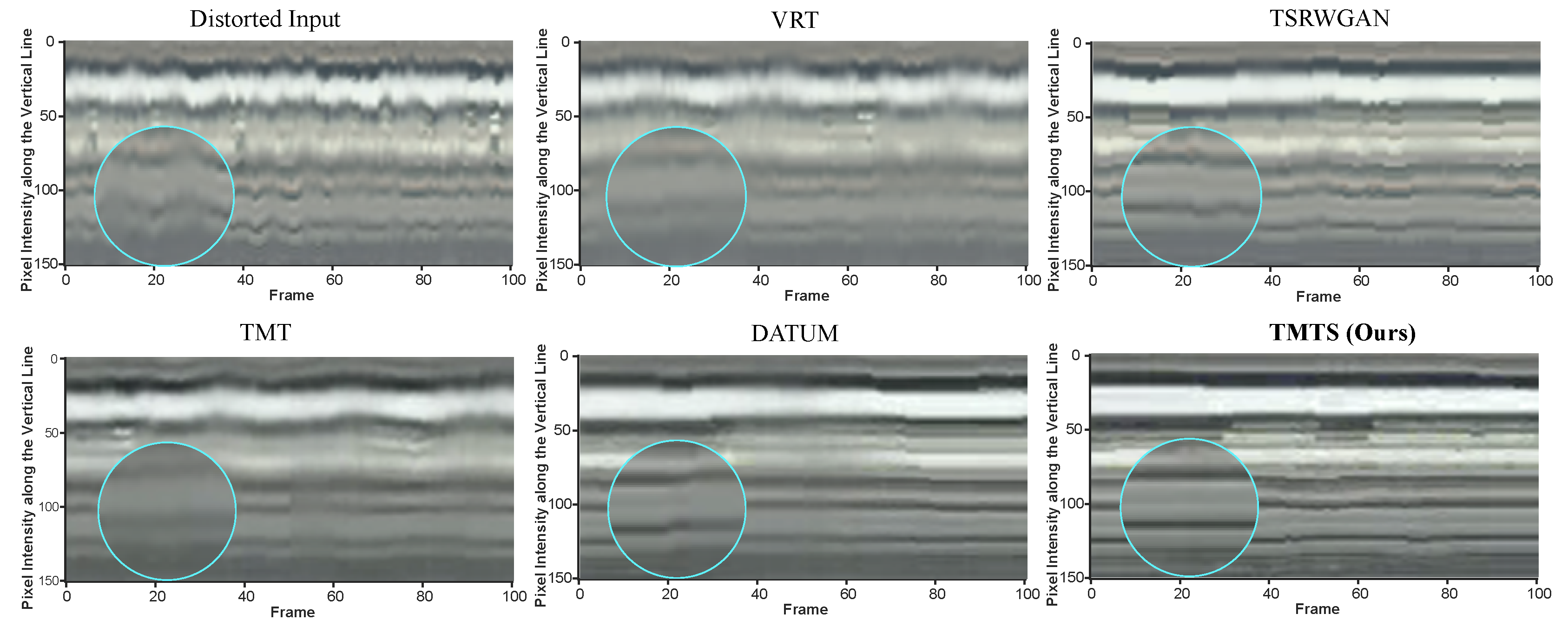

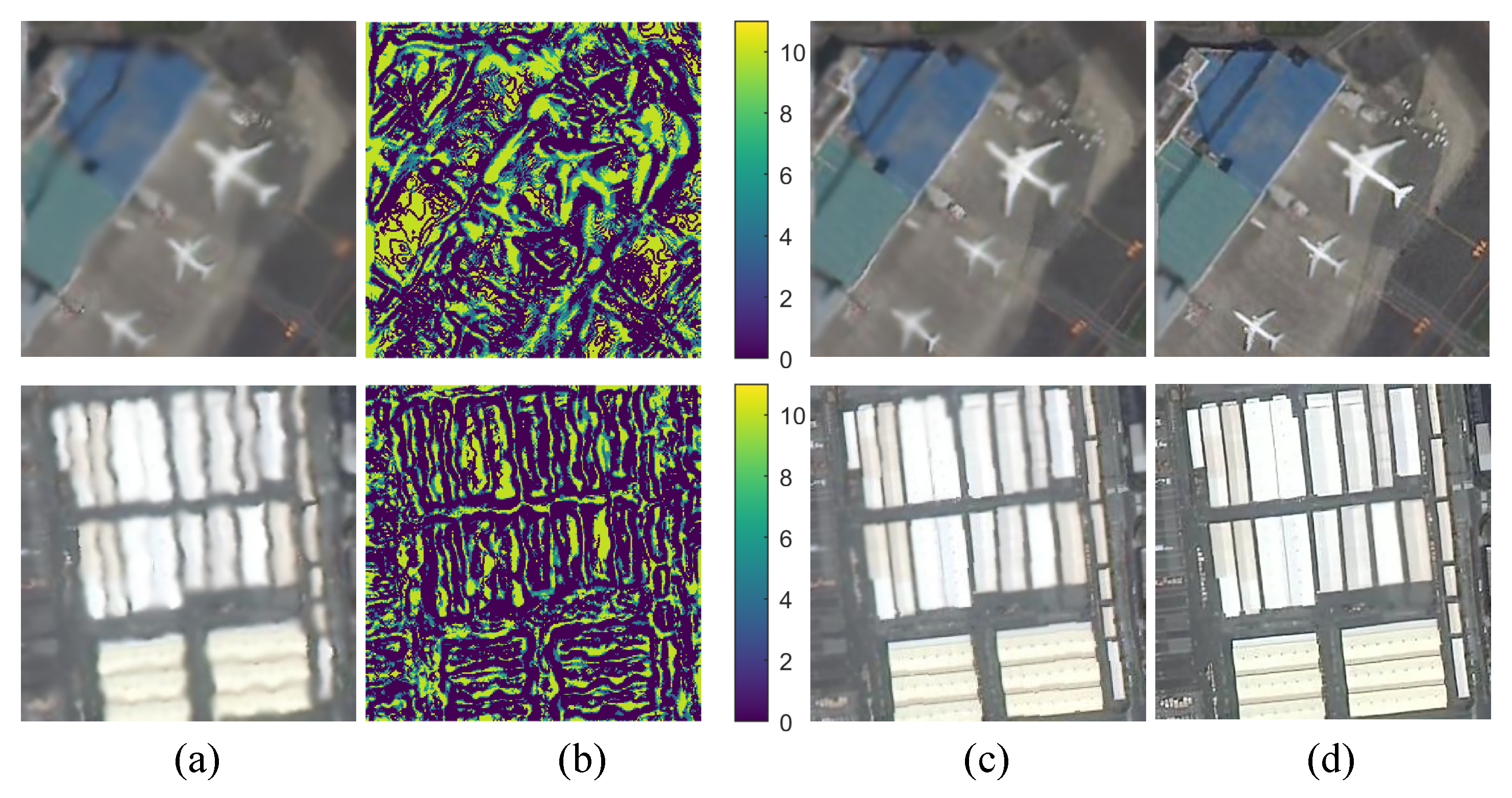

3.5.2. Qualitative Results

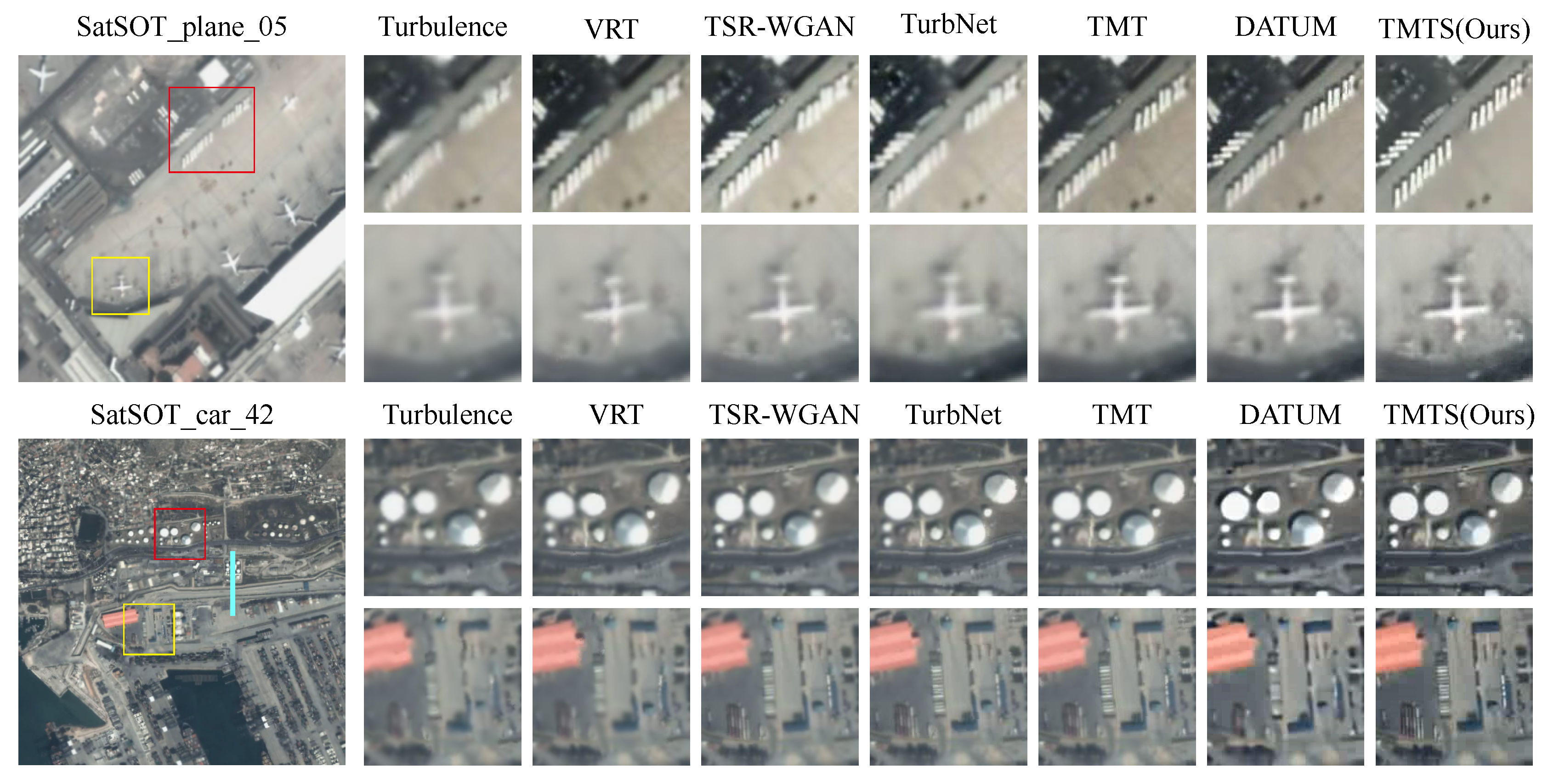

3.6. Performance on Real Data

3.7. Ablation Studies

3.7.1. Effect of Turbulence Signature

3.7.2. Effect of TSGA, CGRFU and TSTSA

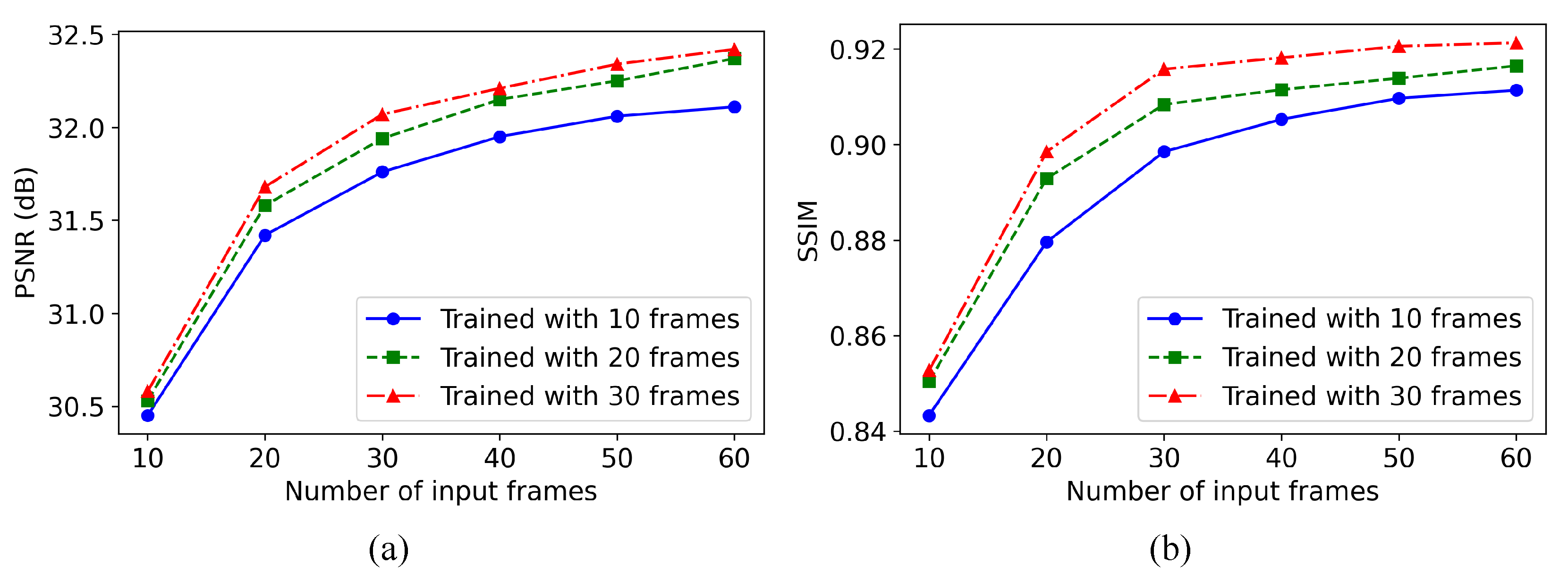

3.7.3. Influence of Input Frame Number

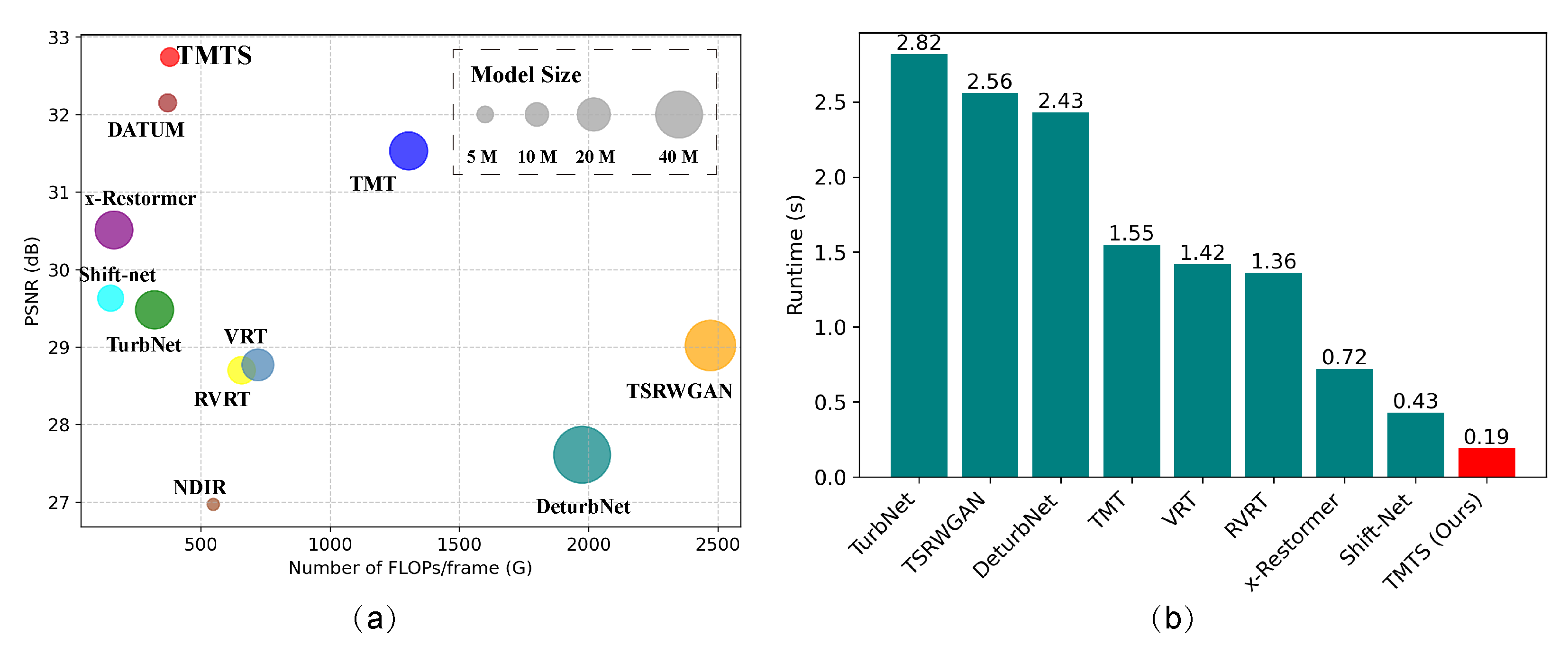

3.7.4. Model Efficiency and Computation Budget

3.8. Limitations and Future Works

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Xiao, Y.; Yuan, Q.; Jiang, K.; Jin, X.; He, J.; Zhang, L.; Lin, C.W. Local-Global Temporal Difference Learning for Satellite Video Super-Resolution. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 2789–2802.

- Zhao, B.; Han, P.; Li, X. Vehicle Perception From Satellite. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 2545–2554.

- Han, W.; Chen, J.; Wang, L.; Feng, R.; Li, F.; Wu, L.; Tian, T.; Yan, J. Methods for Small, Weak Object Detection in Optical High-Resolution Remote Sensing Images: A survey of advances and challenges. IEEE Geosci. Remote Sens. Mag. 2021, 9, 8–34.

- Zhang, Z.; Wang, C.; Song, J.; Xu, Y. Object Tracking Based on Satellite Videos: A Literature Review. Remote Sens. 2022, 14.

- Rigaut, F.; Neichel, B. Multiconjugate Adaptive Optics for Astronomy. Annu. Rev. Astron. Astrophys. 2018, 56, 277–314.

- Liang, J.; Williams, D.; Miller, D. Supernormal vision and high-resolution retinal imaging through adaptive optics. J. Opt. Soc. Am. A 1997, 14, 2884–2892.

- Law, N.; Mackay, C.; Baldwin, J. Lucky imaging: high angular resolution imaging in the visible from the ground. Astron. Astrophys. 2006, 446, 739–745.

- Joshi, N.; Cohen, M. Seeing Mt. Rainier: Lucky Imaging for Multi-Image Denoising, Sharpening, and Haze Removal. In Proceedings of the 2010 IEEE International Conference on Computational Photography (ICCP 2010), Cambridge, MA, USA, 29–30 March 2010; IEEE, 2010; 8 pp.

- Anantrasirichai, N.; Achim, A.; Kingsbury, N.G.; Bull, D.R. Atmospheric Turbulence Mitigation Using Complex Wavelet-Based Fusion. IEEE Trans. Image Process. 2013, 22, 2398–2408.

- Mao, Z.; Chimitt, N.; Chan, S.H. Image Reconstruction of Static and Dynamic Scenes Through Anisoplanatic Turbulence. IEEE Trans. Comput. Imaging 2020, 6, 1415–1428.

- Cheng, J.; Zhu, W.; Li, J.; Xu, G.; Chen, X.; Yao, C. Restoration of Atmospheric Turbulence-Degraded Short-Exposure Image Based on Convolution Neural Network. Photonics 2023, 10.

- Ettedgui, B.; Yitzhaky, Y. Atmospheric Turbulence Degraded Video Restoration with Recurrent GAN (ATVR-GAN). Sensors 2023, 23.

- Wu, Y.; Cheng, K.; Cao, T.; Zhao, D.; Li, J. Semi-supervised correction model for turbulence-distorted images. Opt. Express 2024, 32, 21160–21174.

- Mao, Z.; Jaiswal, A.; Wang, Z.; Chan, S.H. Single Frame Atmospheric Turbulence Mitigation: A Benchmark Study and a New Physics-Inspired Transformer Model. In Proceedings of the Computer Vision – ECCV 2022, Part XIX; Avidan, S.; Brostow, G.; Cisse, M.; Farinella, G.; Hassner, T., Eds.; Lecture Notes in Computer Science, Vol. 13679; 2022; pp. 430–446. 17th European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022.

- Li, X.; Liu, X.; Wei, W.; Zhong, X.; Ma, H.; Chu, J. A DeturNet-Based Method for Recovering Images Degraded by Atmospheric Turbulence. Remote Sens. 2023, 15.

- Jin, D.; Chen, Y.; Lu, Y.; Chen, J.; Wang, P.; Liu, Z.; Guo, S.; Bai, X. Neutralizing the impact of atmospheric turbulence on complex scene imaging via deep learning. Nat. Mach. Intell. 2021, 3, 876–884.

- Zou, Z.; Anantrasirichai, N. DeTurb: Atmospheric Turbulence Mitigation with Deformable 3D Convolutions and 3D Swin Transformers. In Proceedings of the Computer Vision – ACCV 2024: 17th Asian Conference on Computer Vision, Hanoi, Vietnam, 2024; Cho, M.; Laptev, I.; Tran, D.; Yao, A.; Zha, H., Eds.; Lecture Notes in Computer Science, Vol. 15475; 2025; pp. 20–37.

- Zhang, X.; Chimitt, N.; Chi, Y.; Mao, Z.; Chan, S.H. Spatio-Temporal Turbulence Mitigation: A Translational Perspective. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, 16–22 June 2024; pp. 2889–2899.

- Wang, J.; Markey, J. Modal compensation of atmospheric-turbulence phase-distortion. J. Opt. Soc. Am. 1978, 68, 78–87.

- Wang, Y.; Jin, D.; Chen, J.; Bai, X. Revelation of hidden 2D atmospheric turbulence strength fields from turbulence effects in infrared imaging. Nat. Comput. Sci. 2023, 3, 687–699.

- Zamek, S.; Yitzhaky, Y. Turbulence strength estimation from an arbitrary set of atmospherically degraded images. J. Opt. Soc. Am. A 2006, 23, 3106–3113.

- Saha, R.K.; Salcin, E.; Kim, J.; Smith, J.; Jayasuriya, S. Turbulence strength Cn2 estimation from video using physics-based deep learning. Opt. Express 2022, 30, 40854–40870.

- Beason, M.; Potvin, G.; Sprung, D.; McCrae, J.; Gladysz, S. Comparative analysis of Cn2 estimation methods for sonic anemometer data. Appl. Opt. 2024, 63, E94–E106.

- Zeng, T.; Shen, Q.; Cao, Y.; Guan, J.Y.; Lian, M.Z.; Han, J.J.; Hou, L.; Lu, J.; Peng, X.X.; Li, M.; et al. Measurement of atmospheric non-reciprocity effects for satellite-based two-way time-frequency transfer. Photon. Res. 2024, 12, 1274–1282.

- Zhang, Y.; Sun, Y.; Liu, S. Deformable and residual convolutional network for image super-resolution. Appl. Intell. 2022, 52, 295–304.

- Luo, G.; Qu, J.; Zhang, L.; Fang, X.; Zhang, Y.; Man, S. Variational Learning of Convolutional Neural Networks with Stochastic Deformable Kernels. In Proceedings of the 2022 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Prague, Czech Republic, 9–12 October 2022; pp. 1026–1031.

- Ranjan, A.; Black, M.J. Optical Flow Estimation using a Spatial Pyramid Network. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2017), Honolulu, HI, 21–26 July 2017; pp. 2720–2729.

- Rucci, M.A.; Hardie, R.C.; Martin, R.K.; Gladysz, S. Atmospheric optical turbulence mitigation using iterative image registration and least squares lucky look fusion. Appl. Opt. 2022, 61, 8233–8247.

- Liang, J.; Fan, Y.; Xiang, X.; Ranjan, R.; Ilg, E.; Green, S.; Cao, J.; Zhang, K.; Timofte, R.; Van Gool, L. Recurrent Video Restoration Transformer with Guided Deformable Attention. In Proceedings of the Advances in Neural Information Processing Systems 35 (NeurIPS 2022); Koyejo, S.; Mohamed, S.; Agarwal, A.; Belgrave, D.; Cho, K.; Oh, A., Eds.; 36th Conference on Neural Information Processing Systems (NeurIPS), Online, 28 November–9 December 2022.

- Lau, C.P.; Lai, Y.H.; Lui, L.M. Restoration of atmospheric turbulence-distorted images via RPCA and quasiconformal maps. Inverse Probl. 2019, 35.

- Barron, J. A General and Adaptive Robust Loss Function. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 4326–4334.

- Srinath, S.; Poyneer, L.A.; Rudy, A.R.; Ammons, S.M. Computationally efficient autoregressive method for generating phase screens with frozen flow and turbulence in optical simulations. Opt. Express 2015, 23, 33335–33349.

- Chimitt, N.; Chan, S.H. Simulating Anisoplanatic Turbulence by Sampling Correlated Zernike Coefficients. In Proceedings of the 2020 IEEE International Conference on Computational Photography (ICCP); 2020; pp. 1–12.

- Wu, X.Q.; Yang, Q.K.; Huang, H.H.; Qing, C.; Hu, X.D.; Wang, Y.J. Study of Cn2 profile model by atmospheric optical turbulence model. Acta Phys. Sin. 2023, 72.

- Li, N.; Thapa, S.; Whyte, C.; Reed, A.; Jayasuriya, S.; Ye, J. Unsupervised Non-Rigid Image Distortion Removal via Grid Deformation. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV 2021), Online, 11–17 October 2021; pp. 2502–2512.

- Li, D.; Shi, X.; Zhang, Y.; Cheung, K.C.; See, S.; Wang, X.; Qin, H.; Li, H. A Simple Baseline for Video Restoration with Grouped Spatial-temporal Shift. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, Canada, 17–24 June 2023; pp. 9822–9832.

- Zhang, X.; Mao, Z.; Chimitt, N.; Chan, S.H. Imaging Through the Atmosphere Using Turbulence Mitigation Transformer. IEEE Trans. Comput. Imaging 2024, 10, 115–128.

- Liang, J.; Cao, J.; Fan, Y.; Zhang, K.; Ranjan, R.; Li, Y.; Timofte, R.; Van Gool, L. VRT: A Video Restoration Transformer. IEEE Trans. Image Process. 2024, 33, 2171–2182.

- Chen, X.; Li, Z.; Pu, Y.; Liu, Y.; Zhou, J.; Qiao, Y.; Dong, C. A Comparative Study of Image Restoration Networks for General Backbone Network Design. In Proceedings of the Computer Vision – ECCV 2024, Part LXXI; Leonardis, A.; Ricci, E.; Roth, S.; Russakovsky, O.; Sattler, T.; Varol, G., Eds.; Lecture Notes in Computer Science, Vol. 15129; 2025; pp. 74–91. 18th European Conference on Computer Vision (ECCV), Milan, Italy, 29 September–4 October 2024.

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the International Conference on Learning Representations (ICLR), 2015; pp. 1–15.

- Mittal, A.; Moorthy, A.K.; Bovik, A.C. No-Reference Image Quality Assessment in the Spatial Domain. IEEE Trans. Image Process. 2012, 21, 4695–4708.

- Fang, Y.; Ma, K.; Wang, Z.; Lin, W.; Fang, Z.; Zhai, G. No-Reference Quality Assessment of Contrast-Distorted Images Based on Natural Scene Statistics. IEEE Signal Process. Lett. 2015, 22, 838–842.

- Mittal, A.; Soundararajan, R.; Bovik, A.C. Making a “Completely Blind” Image Quality Analyzer. IEEE Signal Process. Lett. 2013, 20, 209–212.

- Li, S.; Zhou, Z.; Zhao, M.; Yang, J.; Guo, W.; Lv, Y.; Kou, L.; Wang, H.; Gu, Y. A Multitask Benchmark Dataset for Satellite Video: Object Detection, Tracking, and Segmentation. IEEE Trans. Geosci. Remote Sens. 2023, 61.

- Saha, R.K.; Qin, D.; Li, N.; Ye, J.; Jayasuriya, S. Turb-Seg-Res: A Segment-then-Restore Pipeline for Dynamic Videos with Atmospheric Turbulence. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, 16–22 June 2024; pp. 25286–25296.

| Train/Test | Video Satellite | Region | Captured Date | Duration | FPS | Frame Size |
|---|---|---|---|---|---|---|
| Train | Jilin-1 | San Francisco | Apr. 24th, 2017 | 20 s | 25 | 3840 × 2160 |
| Train | Jilin-1 | Valencia, Spain | May 20th, 2017 | 30 s | 25 | 4096 × 2160 |
| Train | Jilin-1 | Derna, Libya | May 20th, 2017 | 30 s | 25 | 4096 × 2160 |
| Train | Jilin-1 | Adana-02, Turkey | May 20th, 2017 | 30 s | 25 | 4096 × 2160 |
| Train | Jilin-1 | Tunisia | May 20th, 2017 | 30 s | 25 | 4096 × 2160 |
| Train | Jilin-1 | Minneapolis-01 | Jun. 2nd, 2017 | 30 s | 25 | 4096 × 2160 |
| Train | Jilin-1 | Minneapolis-02 | Jun. 2nd, 2017 | 30 s | 25 | 4096 × 2160 |
| Train | Jilin-1 | Muharag, Bahrain | Jun. 4th, 2017 | 30 s | 25 | 4096 × 2160 |
| Test | Jilin-1 | San Diego | May 22nd, 2017 | 30 s | 25 | 4096 × 2160 |
| Test | Jilin-1 | Adana-01, Turkey | May 25th, 2017 | 30 s | 25 | 4096 × 2160 |
| Test | Carbonite-2 | Buenos Aires | Apr. 16th, 2018 | 17 s | 10 | 2560 × 1440 |
| Test | Carbonite-2 | Mumbai, India | Apr. 16th, 2018 | 59 s | 6 | 2560 × 1440 |
| Test | Carbonite-2 | Puerto Antofagasta | Apr. 16th, 2018 | 18 s | 10 | 2560 × 1440 |
| Test | UrtheCast | Boston, USA | - | 20 s | 30 | 1920 × 1080 |
| Test | UrtheCast | Barcelona, Spain | - | 16 s | 30 | 1920 × 1080 |
| Test | SkySat-1 | Las Vegas, USA | Mar. 25th, 2014 | 60 s | 30 | 1920 × 1080 |
| Test | SkySat-1 | Burj Khalifa, Dubai | Apr. 9th, 2014 | 30 s | 30 | 1920 × 1080 |
| Test | Luojia3-01 | Geneva, Switzerland | Oct. 11th, 2023 | 27 s | 25 | 1920 × 1080 |
| Test | Luojia3-01 | LanZhou, China | Feb. 23rd, 2023 | 15 s | 24 | 640 × 384 |

| Method | Scene-1 | Scene-2 | Scene-3 | Scene-4 | Scene-5 | Average |
|---|---|---|---|---|---|---|
| Turbulence | 24.96/0.7644 | 25.15/0.7743 | 22.97/0.7207 | 24.21/0.7670 | 24.35/0.7559 | 24.33/0.7564 |
| NDIR [35] | 25.45/0.8206 | 25.63/0.8308 | 24.33/0.7906 | 26.04/0.8262 | 25.12/0.8466 | 25.31/0.8230 |
| TSRWGAN [16] | 27.55/0.9122 | 28.75/0.9236 | 26.85/0.8918 | 26.63/0.8989 | 26.97/0.9071 | 27.35/0.9067 |
| RVRT [29] | 28.65/0.8817 | 26.44/0.8276 | 26.27/0.8762 | 27.33/0.8830 | 26.95/0.9044 | 27.13/0.8746 |
| TurbNet [14] | 27.03/0.8548 | 28.31/0.8663 | 25.28/0.8049 | 25.15/0.8263 | 26.35/0.8590 | 26.42/0.8423 |
| DeturNet [15] | 25.61/0.8305 | 26.08/0.8327 | 24.27/0.7855 | 25.81/0.8409 | 25.74/0.8501 | 25.50/0.8280 |
| Shift-Net [36] | 29.31/0.9275 | 28.93/0.8757 | 27.62/0.8905 | 31.48/0.9357 | 30.34/0.9297 | 29.54/0.9118 |
| TMT [37] | 33.25/0.9331 | 32.67/0.9359 | 28.91/0.8989 | 33.27/0.9284 | 31.22/0.9267 | 31.11/0.9242 |
| VRT [38] | 28.49/0.8834 | 26.39/0.8232 | 26.30/0.8757 | 27.39/0.8875 | 26.92/0.9041 | 27.10/0.8748 |
| X-Restormer [39] | 30.16/0.8960 | 29.15/0.8601 | 28.54/0.8973 | 30.68/0.9034 | 30.27/0.9102 | 29.76/0.8934 |
| DATUM [18] | 33.15/0.9329 | 33.92/0.9517 | 29.66/0.9042 | 32.47/0.9571 | 31.97/0.9438 | 32.23/0.9379 |
| TMTS | 33.46/0.9461 | 34.17/0.9458 | 29.75/0.9046 | 32.53/0.9609 | 32.13/0.9390 | 32.41/0.9393 |
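The Average column of the table above can be cross-checked by averaging the per-scene entries. The following is a minimal sketch, assuming each cell is a PSNR/SSIM pair (the two metrics named in the ablation table) and that the average is a plain arithmetic mean over the five scenes. The names `rows` and `row_average` are illustrative and not taken from the original work, and only three rows are copied in.

```python
# Per-scene "PSNR/SSIM" cells copied from the table above (Scene-1 .. Scene-5).
# Assumption: the first number is PSNR and the second is SSIM.
rows = {
    "Turbulence": ["24.96/0.7644", "25.15/0.7743", "22.97/0.7207",
                   "24.21/0.7670", "24.35/0.7559"],
    "DATUM": ["33.15/0.9329", "33.92/0.9517", "29.66/0.9042",
              "32.47/0.9571", "31.97/0.9438"],
    "TMTS": ["33.46/0.9461", "34.17/0.9458", "29.75/0.9046",
             "32.53/0.9609", "32.13/0.9390"],
}


def row_average(cells):
    """Return the arithmetic means of the first and second value of each 'a/b' cell."""
    pairs = [tuple(float(v) for v in cell.split("/")) for cell in cells]
    n = len(pairs)
    return (sum(p[0] for p in pairs) / n, sum(p[1] for p in pairs) / n)


averages = {name: row_average(cells) for name, cells in rows.items()}

for name, (first, second) in averages.items():
    print(f"{name}: {first:.2f}/{second:.4f}")
```

For these three rows the computed means agree with the tabulated averages to within one unit in the last printed digit.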

| Satellite | Method | Scene-6 | Scene-7 | Scene-8 | Scene-9 | Average |
|---|---|---|---|---|---|---|
| Carbonite-2 | Turbulence | 25.86/0.7955 | 25.20/0.7806 | 23.57/0.8161 | 22.85/0.7747 | 24.37/0.7917 |
| Carbonite-2 | NDIR [35] | 26.42/0.823 | 27.56/0.8090 | 26.97/0.8311 | 26.34/0.8309 | 26.82/0.8235 |
| Carbonite-2 | TSRWGAN [16] | 28.35/0.8649 | 29.04/0.8931 | 28.46/0.8742 | 27.11/0.8635 | 28.24/0.8739 |
| Carbonite-2 | RVRT [29] | 29.03/0.8833 | 29.70/0.9048 | 27.58/0.8859 | 28.82/0.8601 | 28.78/0.8835 |
| Carbonite-2 | TurbNet [14] | 30.56/0.9056 | 29.32/0.9285 | 27.37/0.9156 | 28.21/0.8738 | 28.87/0.9059 |
| Carbonite-2 | DeturNet [15] | 27.29/0.8696 | 28.14/0.8972 | 26.26/0.8714 | 27.43/0.8575 | 27.28/0.8739 |
| Carbonite-2 | Shift-Net [36] | 28.09/0.9098 | 28.52/0.8863 | 27.63/0.9141 | 27.82/0.8815 | 28.02/0.8979 |
| Carbonite-2 | TMT [37] | 31.48/0.9397 | 31.35/0.9220 | 29.62/0.9372 | 31.27/0.9029 | 30.93/0.9255 |
| Carbonite-2 | VRT [38] | 28.19/0.8991 | 28.77/0.8535 | 27.18/0.8674 | 29.51/0.8630 | 28.41/0.8708 |
| Carbonite-2 | X-Restormer [39] | 29.41/0.9135 | 29.36/0.9050 | 28.95/0.8983 | 30.55/0.8706 | 29.57/0.8969 |
| Carbonite-2 | DATUM [18] | 32.38/0.9405 | 32.23/0.9259 | 30.91/0.9316 | 31.68/0.9055 | 31.80/0.9259 |
| Carbonite-2 | TMTS | 32.69/0.9422 | 32.44/0.9328 | 30.75/0.9385 | 32.24/0.9018 | 32.03/0.9288 |

| Satellite | Method | Scene-10 | Scene-11 | Scene-12 | Scene-13 | Average |
|---|---|---|---|---|---|---|
| UrtheCast | Turbulence | 25.46/0.8548 | 27.28/0.8610 | 24.56/0.8426 | 25.10/0.8415 | 25.60/0.8500 |
| UrtheCast | NDIR [35] | 26.42/0.823 | 27.56/0.8090 | 26.97/0.8311 | 26.34/0.8309 | 26.82/0.8235 |
| UrtheCast | TSRWGAN [16] | 29.69/0.9018 | 29.43/0.9293 | 28.18/0.9121 | 28.92/0.9079 | 29.06/0.9128 |
| UrtheCast | RVRT [29] | 29.04/0.8633 | 28.35/0.8915 | 27.62/0.8740 | 28.4/0.8963 | 28.35/0.8813 |
| UrtheCast | TurbNet [14] | 27.76/0.9005 | 28.72/0.8843 | 28.45/0.8764 | 27.34/0.8972 | 28.07/0.8896 |
| UrtheCast | DeturNet [15] | 27.29/0.8696 | 28.14/0.8972 | 26.26/0.8714 | 27.43/0.8575 | 27.28/0.8739 |
| UrtheCast | Shift-Net [36] | 28.27/0.9136 | 30.25/0.9305 | 31.24/0.9199 | 30.41/0.9268 | 30.04/0.9227 |
| UrtheCast | TMT [37] | 31.23/0.9267 | 30.36/0.9359 | 32.18/0.9424 | 30.64/0.9170 | 31.10/0.9305 |
| UrtheCast | VRT [38] | 29.96/0.9033 | 28.38/0.8966 | 28.53/0.9070 | 29.68/0.9052 | 29.14/0.9030 |
| UrtheCast | X-Restormer [39] | 31.42/0.9228 | 30.75/0.9312 | 31.66/0.9305 | 30.42/0.9103 | 31.06/0.9237 |
| UrtheCast | DATUM [18] | 32.56/0.9409 | 33.37/0.9452 | 32.16/0.9396 | 30.43/0.9355 | 32.13/0.9403 |
| UrtheCast | TMTS | 32.97/0.9368 | 33.82/0.9540 | 33.24/0.9437 | 31.05/0.9362 | 32.77/0.9427 |

| Satellite | Scene | TurbNet [14] | VRT [38] | TMT [37] | X-Restormer [39] | DATUM [18] | TMTS |
|---|---|---|---|---|---|---|---|
| SkySat-1 | Scene-14 | 31.10/0.9462 | 33.61/0.9493 | 30.49/0.9258 | 32.05/0.9296 | 33.86/0.9424 | 33.93/0.9430 |
| SkySat-1 | Scene-15 | 31.61/0.9299 | 33.08/0.9518 | 30.85/0.9145 | 32.33/0.9310 | 34.02/0.9558 | 33.86/0.9572 |
| SkySat-1 | Scene-16 | 32.42/0.9233 | 32.52/0.9304 | 31.24/0.9167 | 30.68/0.9242 | 32.17/0.9575 | 32.77/0.9510 |
| Luojia3-01 | Scene-17 | 33.18/0.9306 | 33.43/0.9418 | 30.06/0.9035 | 31.55/0.9304 | 33.21/0.9450 | 33.59/0.9453 |
| Luojia3-01 | Scene-18 | 31.07/0.9273 | 32.31/0.9356 | 29.48/0.9085 | 31.60/0.9124 | 32.84/0.9389 | 33.29/0.9405 |
| Average | | 31.87/0.9315 | 32.99/0.9418 | 30.42/0.9138 | 33.39/0.9164 | 31.64/0.9255 | 33.49/0.9474 |

| Metric | VRT [38] | TurbNet [14] | TSRWGAN [16] | TMT [37] | DATUM [18] | TMTS (Ours) |
|---|---|---|---|---|---|---|
| BRISQUE (↓) | 48.8979 | 46.7041 | 46.2586 | 45.8577 | 44.0835 | 42.2954 |
| CEIQ (↑) | 2.9326 | 3.0831 | 3.1102 | 3.1793 | 3.3512 | 3.3458 |
| NIQE (↓) | 4.6137 | 4.4832 | 4.3419 | 4.1135 | 3.9943 | 3.8161 |

| Components | Baseline (TSTSA), w/o TS | Baseline (TSTSA), w/ TS | + TSGA, w/o TS | + TSGA, w/ TS | + CGRFU, w/o TS | + CGRFU, w/ TS |
|---|---|---|---|---|---|---|
| PSNR (↑) | 30.15 | 30.41 | 31.67 | 32.05 | 32.52 | 32.74 |
| SSIM (↑) | 0.8765 | 0.8804 | 0.9113 | 0.9122 | 0.9206 | 0.9217 |
| #Param. (M) | 4.782 | 4.768 | 5.739 | 5.724 | 6.27 | 6.24 |
| FLOPs (G) | 306.5 | 304.2 | 352.8 | 349.7 | 381.4 | 377.6 |
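The contribution of the turbulence signature (TS) in the ablation table above can be read off as a per-configuration PSNR difference. A minimal sketch, assuming the PSNR row is in dB and that the paired columns denote the same configuration without and with TS; the variable names are illustrative only.

```python
# PSNR values copied from the ablation table: (without TS, with TS).
# Assumption: values are in dB and each pair shares the same configuration.
psnr = {
    "Baseline (TSTSA)": (30.15, 30.41),
    "+ TSGA": (31.67, 32.05),
    "+ CGRFU": (32.52, 32.74),
}

# Gain attributable to TS for each configuration, rounded to the table's precision.
gains = {
    name: round(with_ts - without_ts, 2)
    for name, (without_ts, with_ts) in psnr.items()
}

for name, gain in gains.items():
    print(f"{name}: +{gain:.2f} dB with TS")
```

Under these assumptions the gain from TS is positive in all three configurations and largest for the "+ TSGA" column.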

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).