1. Introduction

Many civil infrastructures are in a poor state [

1,

2,

3]. This is mainly due to poor maintenance practices as well as exposure of the infrastructure to harsh environmental conditions [

4]. Besides these, structural deterioration has been associated with misuse of infrastructure [

5]. Regardless of the cause, structures in such a state usually pose risk to life, property and economic performance. Talking of negative impacts of structural deterioration, South Africa is a good example. To put this into context, South Africa loses almost 50% of the water pumped into its piped networks to leakages, among other factors [

6]. This is in part due to deteriorating infrastructure which is rarely maintained. Problems related to deteriorating infrastructure are also encountered in South Africa’s power sector and transportation system [

2]. The issue of deteriorating structures is further highlighted in South Africa’s Infrastructure Development scenarios for 2050 [

7]. While the problem of deteriorating infrastructure is worse in the developing world, similar challenges are also observed in developed countries. A recent report by MakeUK indicates that approximately 54% of manufacturers in the United Kingdom perceive a decrease in road infrastructure quality over the last decade [

8]. These statistics are a clear indication that interventions have to be taken to ensure serviceability of the existing infrastructure. Considering the economic value of civil infrastructure, their exposure to harsh environmental influences, and progressive material deterioration, conventional inspection and maintenance practices, which often rely on manual and periodic interventions, are insufficient to ensure structural integrity and long-term serviceability. As such, there is a need for continuous monitoring to enable early detection of deterioration and timely maintenance decisions [

9,

10].

Structure Health Monitoring (SHM) appears to be a promising solution to the issues discussed. The field of SHM was developed in the 1990s and has since matured over the years [

11]. These days, SHM is extensively studied and applied to civil infrastructure. The aim of SHM is to enable damage identification, localisation, quantification and prognosis. This is accomplished through instrumentation, whereby sensors are deployed to collect data from a structure, followed by data analysis. Fundamentally, SHM is guided by 7 Axioms [

12]. Of particular interest to this review is Axiom IV(a), which states that sensors cannot measure damage as such feature extraction is necessary to convert sensor data to damage information. In this regard, statistical and deep learning methods are used.

SHM involves the deployment of permanently installed sensors on a structure to gather data related to structural response and environmental conditions [

13]. Collected data may include vibration signals, strain histories, humidity, among others. Despite the role of sensors in data collection, axiom IV(a) states that sensors cannot measure damage as such feature extraction is necessary to convert sensor data to damage information. In this regard, statistical and deep learning methods are used.

SHM methods are usually classified into two main categories: global and local [

14]. Global methods typically involve acquisition of data across a full structure. The data is then processed to assess damage. Vibration based SHM methods fall under this category. Global methods can be used to detect, locate, and quantify damage on a structure. On the other hand, local methods focus on a particular area of a structure. In most cases, the location is known a priori and the goal is to assess the level of damage. Some methods under non-destructive evaluation are in this category. Considering the wide availability of cheap sensors, modern SHM systems are hugely global and a common characteristic of such methods is that the generated data is time series. It is therefore important that time series analysis is well understood.

Traditionally, time series analysis uses methods such as Auto Regressive Moving Average(ARMA), AutoRegressive Moving Average with eXogenous excitation (ARMAX), and state space models (SSMs), among others [

6]. This is so because time series data exhibit correlations and dependencies which are otherwise absent in static data. Despite the successes of these methods on SHM systems, current data scale in SHM makes them inefficient in some cases. These traditional methods require assumptions which do not fit reality. As is the case with real world systems, noise and missing data are a common issue. Furthermore, modern SHM systems generate huge amounts of highly dimensional data and traditional methods have always found it difficult to analyse such data due to a phenomenon called the

curse of dimensionality.

Recently, researchers have turned to deep learning to resolve these issues. Deep learning has proven to work extremely well in high dimensional spaces. With regards to time series, deep learning methods designed for sequential modeling have proven to be effective [

15]. However, despite this, the application of deep learning to time series in SHM proves to be an outstanding issues for a number of reasons. Firstly, despite the generation of huge amounts of data by SHM systems, most of this data is inconsistent, contain significant amount of noise and is unlabeled. Secondly, in the real world, the accuracy of models is significantly poor which among other issues is due to domain shift. This is the case because structures are exposed to extremely harsh environments whose nuances might have not been captured in the model. A highlight on these issues is also the lack of integration of existing knowledge in developed models. Another issue with deep learning models is their high computational costs which hinders their development and deployment [

16]. Deep learning models are known to be computationally demanding due to the enormous matrix operations which are performed during training and inference. A fourth and less commonly considered reality are regulatory restriction. The advent of tools like ChatGPT have brought this issue to light [

17]. As for structural systems, which by the end of the day can impact life and economies, their deployment is trivial. This is a serious issue as classical deep learning is inherently deterministic and black-box, in which case no rationale is provided for the decision made by the system.

The aim of this paper is therefore to provide a mathematically grounded review of deep learning for time series in an attempt to address the current challenges and scepticisms. The review provides a discussion of neural network architectures which are common in time series, generative deep learning models, and uncertainty quantification in deep learning.To ensure the review is self-contained, we include derivations of key results where appropriate.Although the first part of this review tackles deep learning in general, the primary target audience of this review are SHM practitioners and researchers. Thus, the review provides a discussion of deep learning for time series in SHM, including challenges.

The remainder of the paper is structured as follows: It begins with the discussion of the related works in

Section 2. To provide context,

Section 3 provides the background to deep learning. This is followed by a discussion of generative models in

Section 5. A review of uncertainty quantification in deep learning is provided in

Section 6 .The final discussion in this review deals with applications in

Section 7, state of DL in SHM in

Section 8 and a concluding synthesis in

Section 9

2. Related Works

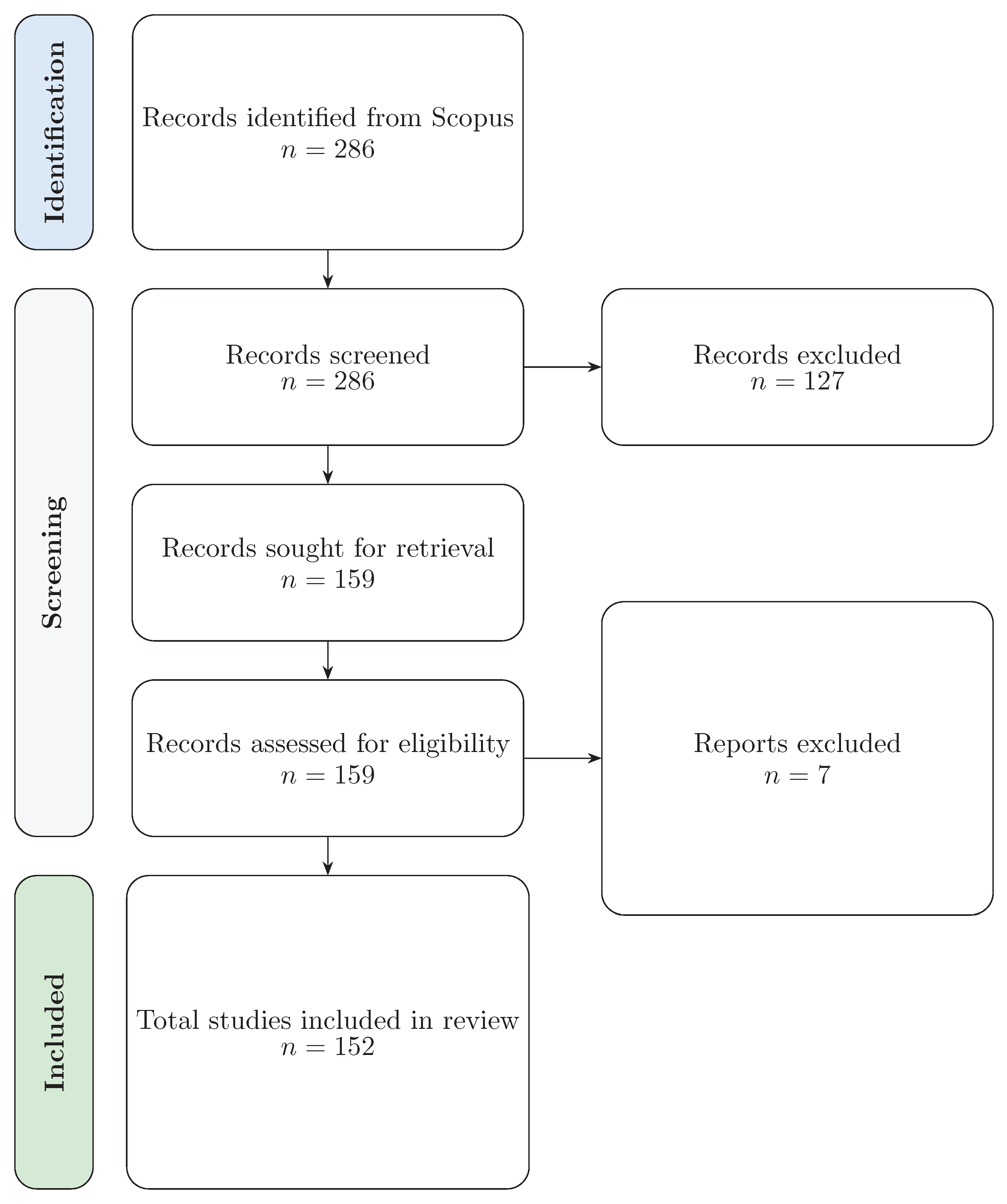

In recent years, deep learning (DL) has gained significant traction in structural health monitoring (SHM) due to its ability to model complex, high-dimensional, and often nonlinear data [

18,

19]. Since 2020, several review studies have emerged to synthesize the expanding range of DL applications in SHM. This section analyzes 24 key publications that collectively reflect the current state of research, with a focus on methodological trends and persistent research gaps.

Several reviews have aimed to map the application of DL in SHM. Jia and Li [

20], for example, presented a taxonomy based on data types, emphasizing the prevalence of vibration- and vision-based methods. However, their review lacked systematic coverage of sequential modeling or generative approaches. Similarly, Afshar et al. [

21] emphasized the predictive power of neural networks for the prediction of structural responses, but provided minimal theoretical grounding.

A focused subset of the current literature addresses vibration-based SHM. Notable among these are works by Spencer et al. [

18], Toh and Park [

22], Azimi et al. [

23], Indhu et al. [

24], Wang et al. [

25] and Avci et al. [

26]. While insightful regarding modal data, these reviews largely overlooked temporal models such as recurrent neural networks (RNNs), temporal convolutional neural networks (TCNNs), and long short-term memory networks (LSTMs), resulting in an application-centric rather than theory-driven perspective.

In vision-based SHM, studies by Hamishebahar et al. [

27], Chowdhury and Kaiser [

28], Gomez-Cabrera and Escamilla-Ambrosio [

29], Sony et al. [

30] and Deng et al. [

31] reviewed CNN-based methods for surface crack detection, defect classification , among others. Though these works offered comprehensive discussions of image-based SHM and relevant architectures, they paid little attention to temporal modeling, hybrid strategies, or generalization beyond specific materials. Chowdhury and Kaiser [

28], for example, focused solely on concrete structures.

A few studies have attempted a more integrative approach. Khan et al. [

32] explored hybrid methods combining DL with physics-based models, identifying avenues for methodological convergence, but their review lacked coverage of generative models and uncertainty quantification. Zhang et al. [

33] focused on DL-based imputation techniques for incomplete SHM data, though without a rigorous theoretical foundation for the models used.

Despite the rising interest in generative models, only Luleci and Catbas [

34] and Luleci et al. [

35] have examined their use in SHM, and both lacked mathematical depth. Other reviews by Spencer et al. [

18], Cha et al. [

36], Abedi et al. [

37], Zhang et al. [

38], Tapeh and Naser [

39] and Xu et al. [

40] have made notable contributions, yet often remained narrowly application-focused and did not thoroughly address the theoretical principles underlying DL models.

Collectively, several limitations emerge across the current reviews. Most reviews remain confined to either vision-based or vibration-based SHM, with little integration of multimodal approaches or comparative learning paradigms. While CNNs are extensively covered, sequential models such as LSTMs, gated recurrent units (GRUs), and attention-based architectures receive insufficient attention, despite their common usage in SHM.This analysis finds no prior review dedicated exclusively to these models. Generative models are rarely discussed in depth, and theoretical treatments of uncertainty quantification are largely absent.

CNNs continue to dominate existing literature, while key areas such as uncertainty quantification and generative modeling are rarely covered, and when they are, theoretical treatment is minimal. This review addresses these gaps by providing a unified synthesis of deep learning methods, grounded in theory and explicitly tied to SHM applications. While some theory-focused or theory-practice-focused reviews exist, such as those on RNNs [

15], generative models [

41], time series [

42,

43,

44], and uncertainty quantification [

45,

46],this work stands out by offering an integrated perspective across multiple DL paradigms. To the best of our knowledge, it is the first to do so.

3. Background

3.1. Time Series

Since Structural Health Monitoring (SHM) involves continuous monitoring of structures, a significant portion of the generated data is time series [

47]. This data may include accelerometers, strain gauges, displacement transducers, and piezoelectric elements that capture the structural response to operational and environmental loading [

13] . SHM data is often multivariate, noisy and might contain missing values. As such, modeling this data requires taking these issues into consideration.

Formally, a time series can be represented as

where

denotes the number of dimensions and L represents the length of the time series [

43].

Time series are grouped into two major categories, i.e. univariate and multivariate. In a univariate time series, D=1. A typical example would be water level in a dam. For a multivariate time series, D> 1, and thus additional variables are considered. The presence of multiple variables introduces complexity as they may exhibit correlations which need to be taken into account during analysis. In the dam context, water levels are most likely influenced by daily temperature fluctuations and seasonal variations in rainfall.

Time series models typically serve four key purposes, namely;

classification [

48],

forecasting[

49],

anomaly detection [

50,

51], and

data imputation [

52]. In an SHM system,

classification involves assigning labels to time series data based on patterns identified in structural behaviour. A common classification task might be the categorization of the state of a structure based on inspection records. On the other hand,

forecasting deals with the prediction of future values based on historical trends. In most cases, forecasting deals with continuous values and provides a quantitative outlook on how a system is likely to evolve over time.

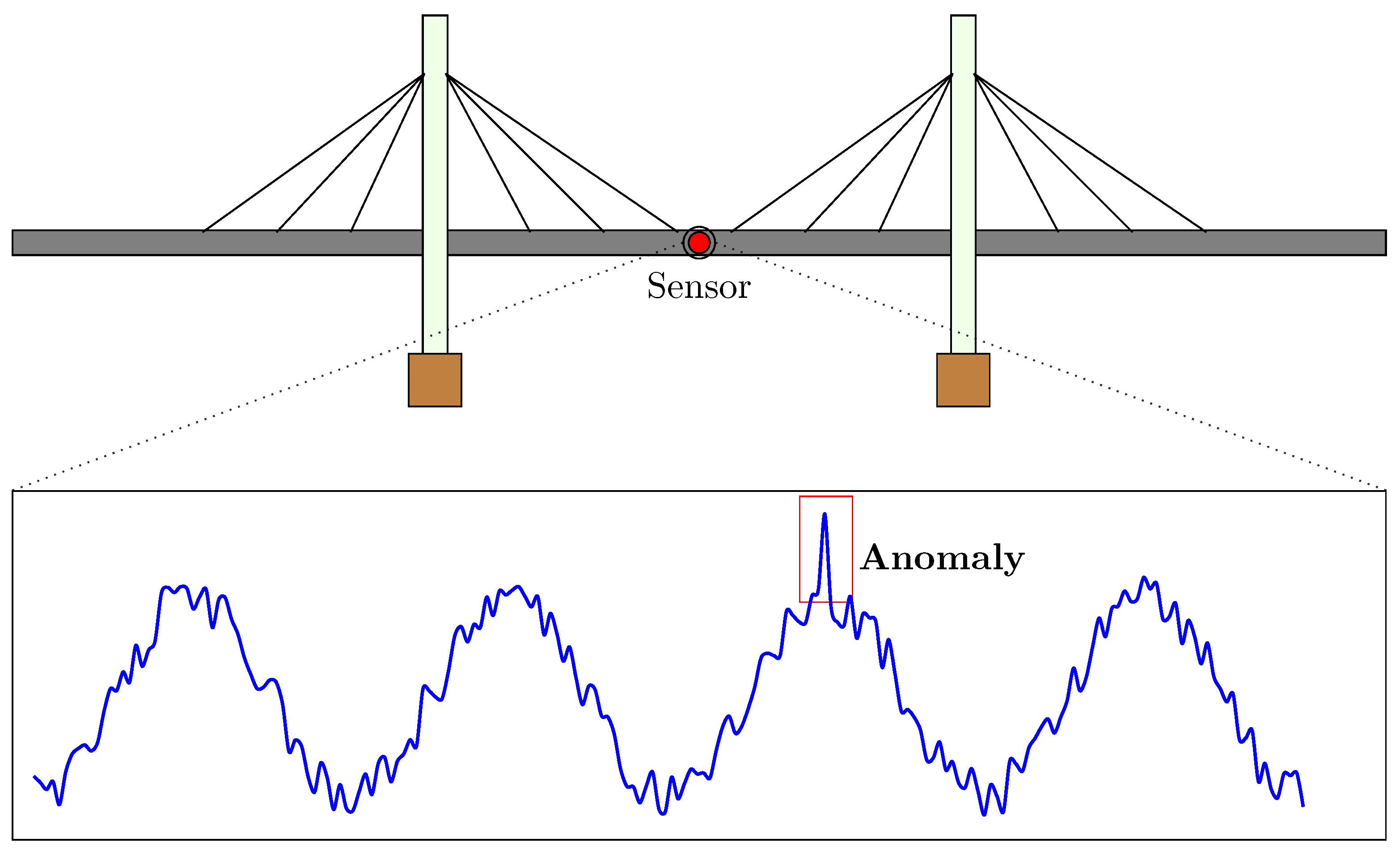

Anomaly detection (

Figure 1) plays a crucial role in identifying deviations from normal behaviour that may indicate a number of issues such as structural damage, shock events e.g. an earthquake, sensor malfunction or simply false alarms. This is important to ensure that events are correctly detected and an appropriate action is taken. Most importantly, anomaly detection can help save a structure and life of its users or occupants.Finally,

data imputation focuses on estimating and filling in missing values to ensure the integrity of a dataset. In real-world scenarios, measurements are often incomplete due to factors such as sensor malfunctions, transmission errors, or the high cost of continuous data collection. For robust analysis, it is important to ensure that the missing data is reconstructed with reasonable accuracy. Imputation directly impacts the results of other time series analysis discussed before. Thus, imputation supports the development of robust predictive models and enhances the reliability of decision-making.

3.1.1. Components of a Time Series

Time series data can be decomposed into four primary components, namely:

trend, seasonality, cyclic variations, and irregular fluctuations [

53].To begin with, the

trend represents long-term changes that are often observable as a gradual shift in the system’s behaviour. In structural systems, a trend may manifest as a slow change in the natural frequency of vibrations due to material aging or increasing stress. Such changes could shift the structural dynamics and thus affect structural response to external loads.

On the other hand, seasonality refers to repetitive patterns that happen at fixed time intervals. In a structure, this could appear as periodic vibrations caused by factors, such as daily traffic patterns or seasonal wind fluctuations.

However, not all oscillations are strictly periodic. The cyclic component captures long-term, non-periodic fluctuations. For example, temperature changes over the year can cause materials to expand and contract, altering the vibrational characteristics of a structure over an extended period. This type of fluctuation, while repetitive, does not follow a fixed schedule, making it distinct from seasonal patterns, yet it still reflects periodic behaviour over the long run.

Lastly, irregular fluctuations, represent random, unpredictable disturbances in a time series. In a signal, irregular fluctuations remain after the seasonal, cyclic, and trend components have been removed. Generally, this component is associated with random noise and and cannot be easily explained.

All in all, time series models typically assume an additive or multiplicative approach to capture the interactions between these components.

3.1.2. Methods of Time Series Analysis

Different methods for time series analysis can be categorised into

time domain and

frequency-domain [

54,

55]. Time domain methods consider raw signals relative to the time variable and tend to be useful for forecasting and understanding trends, seasonal patterns, and autocorrelations within the data.However, there are cases where it is important to transform the raw data into the frequency domain to capture other important features. This is done using tools such as such as the Fourier Transform [

56] or Wavelet Transform [

57]. Frequency-domain analysis is effective at detecting cyclical or periodic patterns, and thus provides insights into the dominant frequencies that influence the behavior of the time series.

3.2. Deep Learning

3.2.1. A Brief History

The foundations of modern neural networks date back to the McCulloch-Pitts model [

58] which laid the conceptual groundwork for viewing computation in terms of interconnected logical units. Building on this idea, the perceptron was introduced by Rosenblatt Rosenblatt [

59] in the late 1950s. Following that, the development of neural networks had several successes and winters. Despite several challenges, which among others include lack of funding, several researchers persisted on neural network research. The progression of research in this area is in part linked to the the works of Werbos [

60], Fukushima [

61], Hopfield [

62], Rumelhart et al. [

63], LeCun et al. [

64], Hochreiter and Schmidhuber [

65], Bengio et al. [

66], Hinton et al. [

67] and Glorot and Bengio [

68]. Although much progress was made in 1980s, late 1990s and early 2000s, it was not until 2012 that a breakthrough in image classification was achieved using a neural network model [

69]. The major reasons for this advancement include data availability, improvements in computational hardware and software, and novel research in machine learning algorithms [

70]. Unlike traditional machine learning approaches, neural networks have been empirically shown to perform extremely well on large, highly-dimensional datasets. This is linked to the problem of curse of dimensionality in high dimensional spaces [

71,

72]. Besides data availability, and hardware and software innovations, he successes of deep learning are attributed to the ability of neural networks to learn a broad class of functions with arbitrary precision. Several results have proven this in the context of multi layer perceptrons [

73,

74]. The goal of this section is to dissect the inner workings of the neural network model and establish the fundamental principles necessary for their understanding.

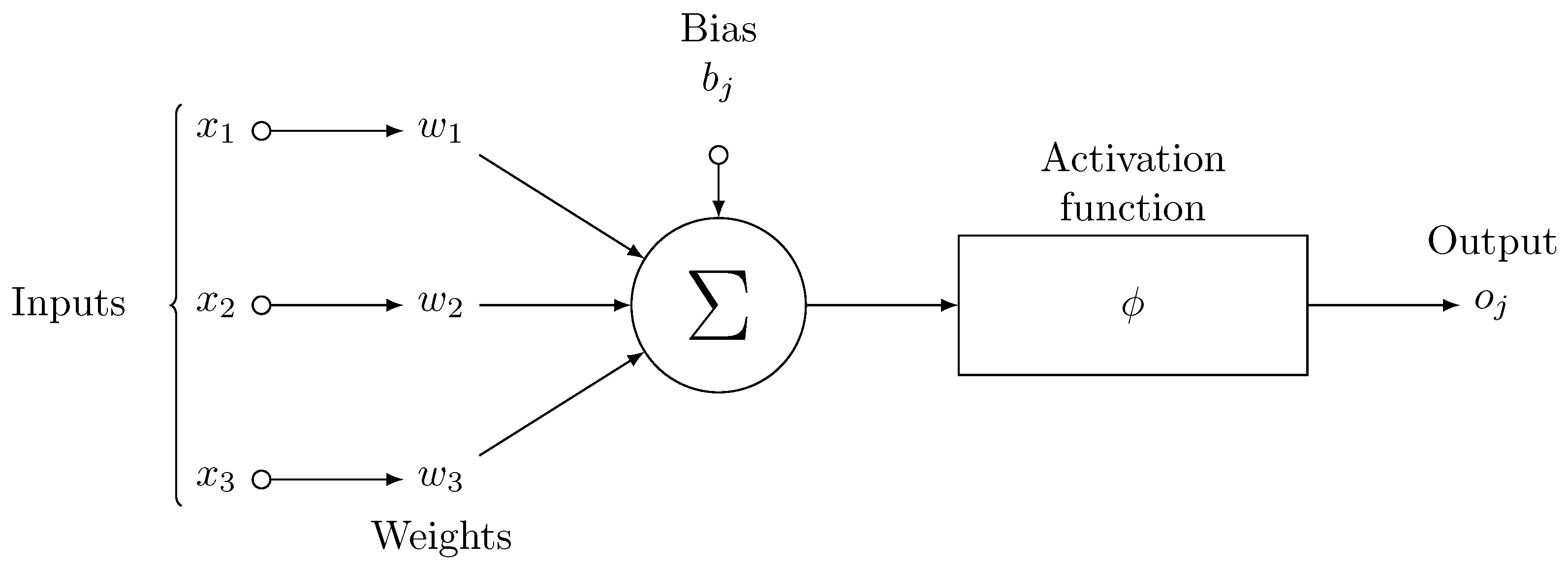

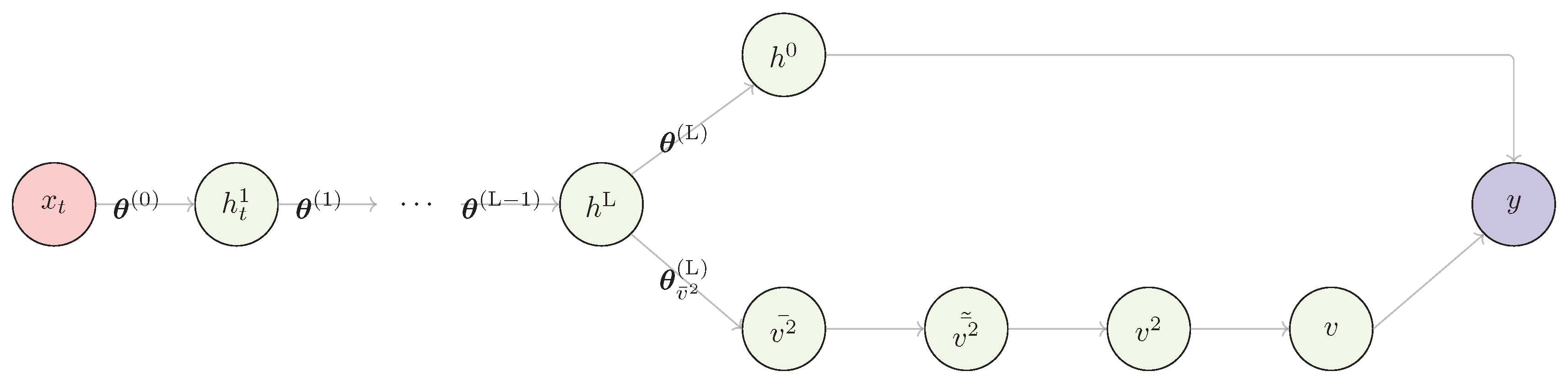

3.2.2. Artificial Neuron

A neuron is the basic computational block of an artificial neural network. A conceptual model of a neuron is provided in

Figure 2.

Clause 3.4.9 of ISO/IEC 22989:2022 [

75] defines a neuron as a primitive processing element which takes one or more input values and produces an output value by combining the input values and applying an

activation function, i.e.

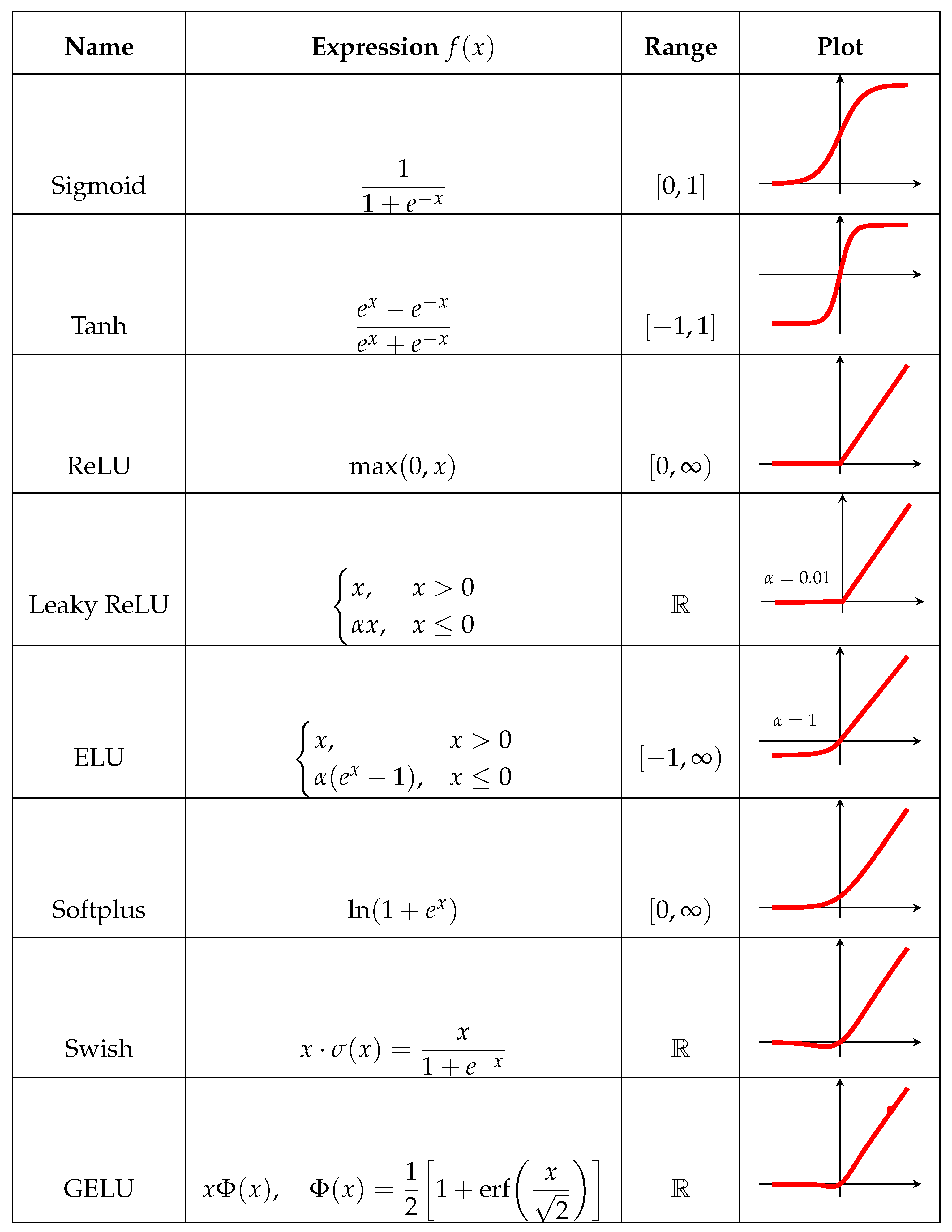

The use of an activation function in a neural network is to learn non-linearity in the target function. With a few exceptions, commonly used activation functions are non-linear. An activation function is required to be continuous or piecewise differentiable in order to facilitate the update of network weights, which are usually learned through backpropagation [

76]. The choice of an activation function is typically guided by empirical performance, with ReLU [

77] being the most commonly used due to its effectiveness in practice. Typical examples of activation functions are shown in

Table 1.For a comprehensive treatment of activation functions, the works by Kunc and Kléma [

78], Dubey et al. [

79] can be consulted.

3.3. Artificial Neural Network

To compose a neural network, a group of neurons is organized into layers. Neurons in one layer are connected to those in the next layer through weights. To build a deep neural network, several layers are arranged in a particular organization called an

architecture. While the traditional architectures sequentially stack layers one after the other, it is also common to have architectures with recurrent loops [

80] and skip connections [

81] which are introduced to solve issues with sequential data and efficient learning in very deep layered networks. For most practical applications, deep architectures are preferred due to their ability to learn rich and expressive feature representations [

82].

3.3.1. Learning in Neural Networks

The goal of a neural network is to learn a mapping

where

D and

Q represent the dimensions of the input and output spaces, respectively. The network is designed to handle inputs of arbitrary dimensionality and approximate complex functions. To measure if the network has accurately learned an optimal mapping, its performance is evaluated using a loss function, also known as an objective function, which measures the discrepancy between the neural network’s prediction

and the actual target

. This is achieved by minimization of the objective function,

Loss functions differ based on the task at hand. Generally, there are three foundational approaches to machine learning, i.e.

supervised learning, unsupervised learning, and

reinforcement learning [

83]. The discussions in this review will only consider the first two.

In a supervised learning setting, the model is trained to predict an output

given an input

. The data is labelled prior to training where each input is set to predict a given output, i.e.,

with

being the size of the dataset. In this setup, the loss function is designed in such a way that the predicted outputs

closely match the target labels

. For regression tasks, the mean squared error is a commonly used metric

For classification tasks, a commonly used loss function is the cross-entropy loss, given by

Cross entropy has its origins in information theory.In ML it is used to measure the deviation of the predicted distribution to the true distribution. The goal is to achieve a low cross entropy, where the predicted probability is close to the true label. In the case where the target output has only two labels, the binary cross entropy is used. For multiple classes, the categorical cross entropy is more appropriate.

It is possible to derive the above loss functions using a probabilistic framework where learning is considered an attempt at modeling the probability distributions over the target variables. With such a framework in place, loss functions for regression are formulated considering a continuous probability distribution while those for a classification task consider a discrete distribution [

84]. However, it has to be noted that discrete distributions can also be used to formulate loss functions for regression problems.

In unsupervised learning, the goal is to learn patterns from the data which are then used to cluster, reduce data dimensionality, or generate new data samples. Thus, the objective function for unsupervised learning is crafted with these tasks in mind. These are tackled in detail when discussing generative models.

3.3.2. Regularization

The parameter space is highly multidimensional, encompassing various possible parameter sets. This complexity makes learning challenging, as the learning process must identify parameters that generalize well to unseen data. To address this, a

regularization term,

, can be incorporated into the loss function, helping steer the learning process toward reasonable parameters

Model regularization is a way of restricting the model parameters and helps avoid overfitting [

85]. With overfitting, the network learns the training data so well but fails to generalize to unseen data during inference. Common explicit regularization terms are the

, , and

elastic net[

86]. The

regularization term is given by,

, where

is the regularization parameter, and

represents the model weights. In practice,

regularization produces sparse models [

87]. Due to this property,

is effective at feature selection and dimensionality reduction in high-dimensional datasets with correlated features. In contrast,

regularization, expressed as,

, imposes a large penalty on large weights, encouraging the model to select smaller weights. With

regularization, most weights are close to zero. In other settings, the best of the two approaches are combined to produce the elastic net. This creates a more flexible regularization scheme. Besides these explicit regularization techniques, neural networks employ other approaches such as early stopping [

88], batch normalization [

89], and mixup [

90]. The discussion of these approaches is outside the scope of this review.

3.3.3. Update of Network Parameters

During training, neural networks are initialized with weights, which get updated over multiple iterations. The initial weights can be categorized as weak or strong. Strong initial weights impose stricter assumptions compared to their weak counterparts.In this light, various weight initialization techniques exist, including Xavier [

68], He [

91], LeCun [

92], and Orthogonal [

93,

94].

Typically, weights are updated using the following expression

Here,

evaluates the sensitivity of the objective function to changes in the parameters.This expression is computed using backpropagation, a specialized case of automatic differentiation [

95]. Backpropagation applies the chain rule to compute this term. Following this, parameter updates can occur after a full pass over the dataset (batch gradient descent) or over a smaller subset of data points (mini-batch or stochastic gradient descent(SGD)). Among these two approaches, SGD is preferred as it is more efficient and scalable.

The learning rate governs the step size toward the optimal solution, i.e, . Convergence of the model is hugely dependent on the choice of the learning rate. A small learning rate may lead to slow convergence, while a large learning rate risks overshooting minima or diverging altogether. This is so because the objective function encountered in real world problems is a highly complex, non-convex function characterized by multiple local minima and saddle points.

Despite its use, the basic update rule is somewhat naïve in the sense that the update strength remains constant, regardless of the model’s position in the learning trajectory. To overcome this limitation, a momentum term can be introduced to the update rule. Momentum helps accelerate updates in consistent directions and suppresses oscillations in directions with fluctuating gradients. The modified update rule is given by

Here,

is the velocity term,

is the momentum coefficient (typically around 0.9). This formulation effectively averages gradients over time, enhancing movement in stable directions while dampening updates in volatile regions. However, one issue with traditional momentum is the potential to overshoot minima. A refined version known as Nesterov Accelerated Gradient (NAG) anticipates the future position of parameters by calculating the gradient at a lookahead point,

This subtle change improves convergence in practice and reduces the risk of overshooting. Rosebrock [

96] likens Nesterov momentum to a child rolling down a hill who decelerates early upon seeing a brick fence at the bottom. Besides these two approaches, other more advanced optimization algorithms such as Adagrad [

97], RMSProp [

98], and Adam [

99] have also been proposed to further address the challenges in training deep networks.

4. Foundational Architectures

This section introduces the commonly used architectures for time series analysis. Generative models have been reserved for the next section. The aim is to maintain the natural flow of the discussion, since the architectures covered in this section can serve as the backbone for either generative or discriminative models. Our discussion begins with multilayer perceptrons as they are extensively covered in deep learning literature.

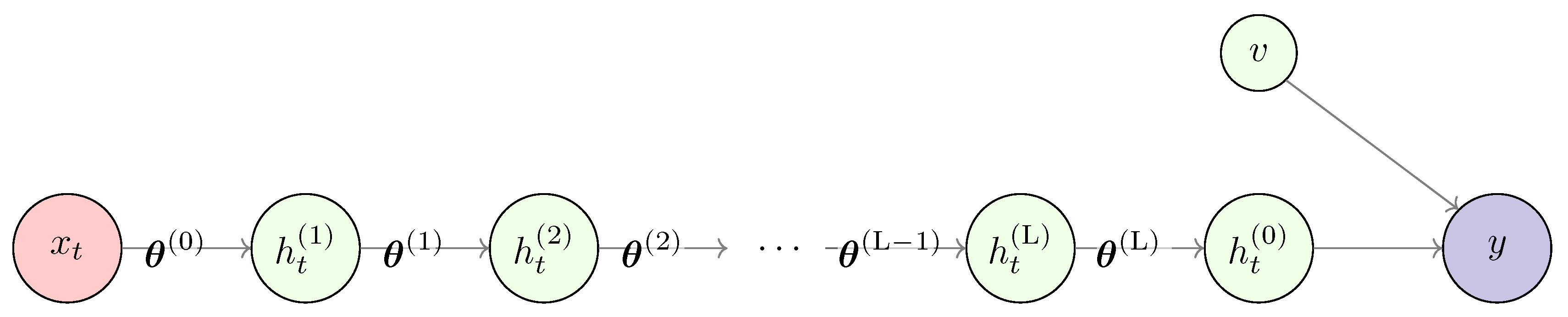

4.1. Multilayer Perceptrons

A multilayer perceptron (MLP) is a feedforward neural network whose architecture is defined by an input layer, one or more hidden layers, and an output layer. A typical compact representation of an MLP with an added observation error is shown in

Figure 3.

MLPs have found success in modeling highly complex functions for both classification [

101,

102] and forecasting tasks [

103]. Unlike RNNs, as will be seen later, MLPs do not have an inherent memory mechanism to handle sequential data. In order to process such data, the problem is transformed into a supervised learning problem by creating a lagged version of the data. This approach is called the

sliding window approach, where to predict the value at time

, the input consists of a fixed-size window of past observations, typically represented as

, and this works for both univariate and multivariate time series.

In addition to the sliding window technique, features inherent to time series can be manually engineered and incorporated into model training. Furthermore, network architectures can be designed to autonomously extract essential features from sequential data. For instance, frequency-domain features can be integrated into the model. While several approaches exist, the focus here is on a novel architecture known as the Fourier Analysis Network (

FAN) [

104]. FANs activate a certain subset of the neurons in a layer using cosine and sine functions, while the remaining neurons are activated using standard functions such as ReLU. Compared to traditional multilayer perceptrons (MLPs), FANs offer the advantage of reduced trainable parameters and, consequently, lower computational complexity due to their design. In principle, FANs are simply performing a Fourier series analysis, as seen by the compact representation of the layer operation,i.e.

which is structurally equivalent to

where

corresponds to the constant term

,

and

represent the learned amplitudes analogous to

and

, respectively, and

plays the role of the frequency-modulated phase

.

Beyond feature engineering and activation design, recent research has demonstrated that carefully structured MLP architectures can achieve competitive performance on time series forecasting tasks.

NBEATS [

105] introduced a purely MLP-based approach by stacking fully connected layers into forecast and backcast modules. This enables the network to model trend and seasonality components directly from the data without the need for recurrent or convolutional operations. Building on this idea,

N-HiTS [

106] extended the architecture by introducing hierarchical interpolation strategies that effectively capture multi-scale temporal patterns. N-HiTS was proven to improve accuracy with almost 20% over Transformer architectures for time series. Similarly,

TSMixer [

107] employ stacked MLPs to separately mix temporal and feature dimensions. This work was inspired by earlier vision models which showed that simple linear projections over time and features can match or surpass more complex sequential models. The last architecture in our discussion is

gMLP [

108]. This architecture incorporates spatial gating mechanisms into MLP layers to allow the network to model interactions across time steps more effectively without explicit recurrence.

4.2. Recurrent Neural Networks

Over the last 30 years, recurrent neural networks (RNNs) have been the standard deep neural architecture for time series. RNNs are inherently designed to remember past information using memory cells. Thus, RNNs could also be termed memory or stateful networks due to their ability to remember the past. Different variations of RNNs have so far been developed and the following sections tackle these in detail.

4.2.1. Simple RNNs

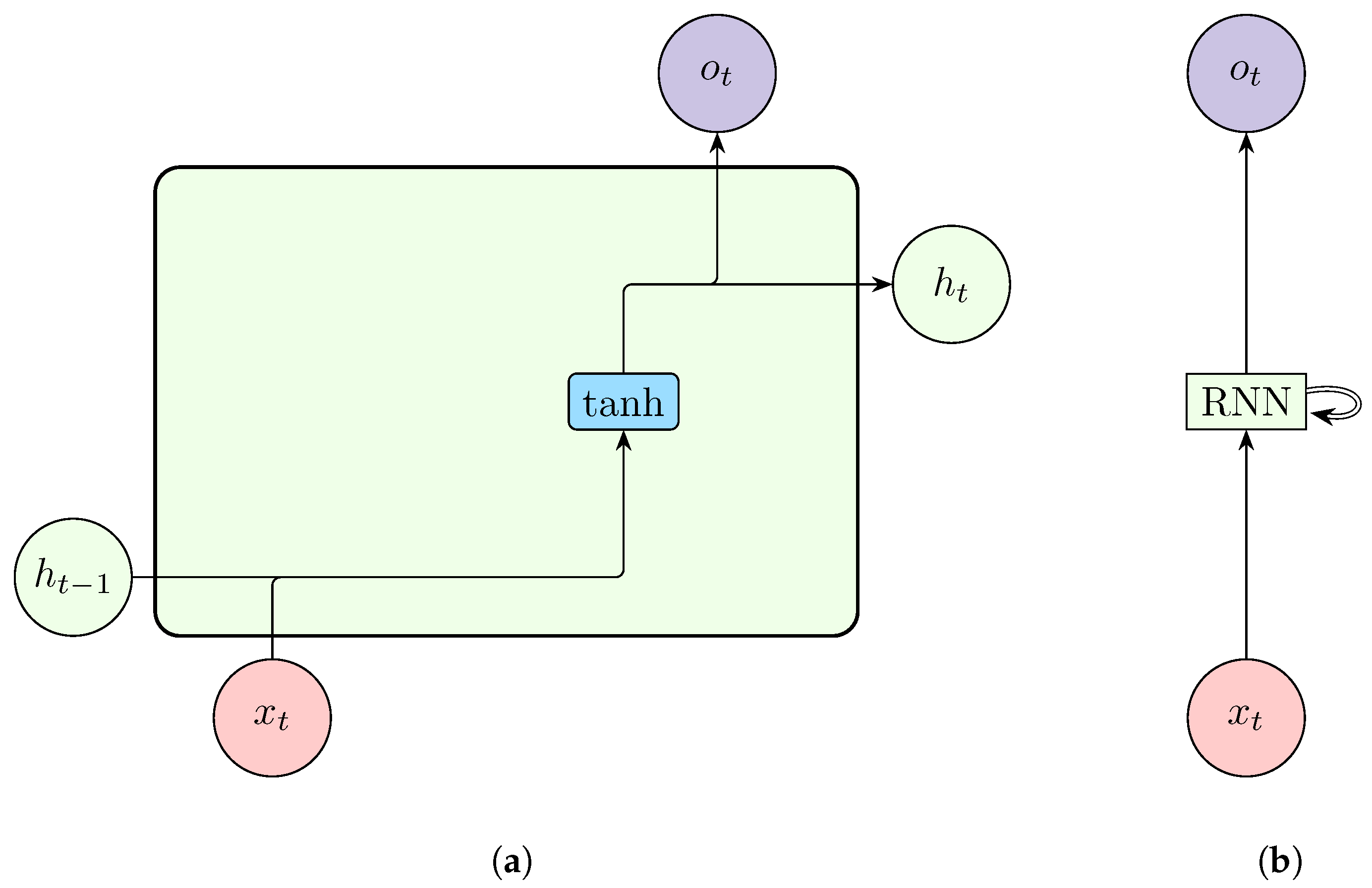

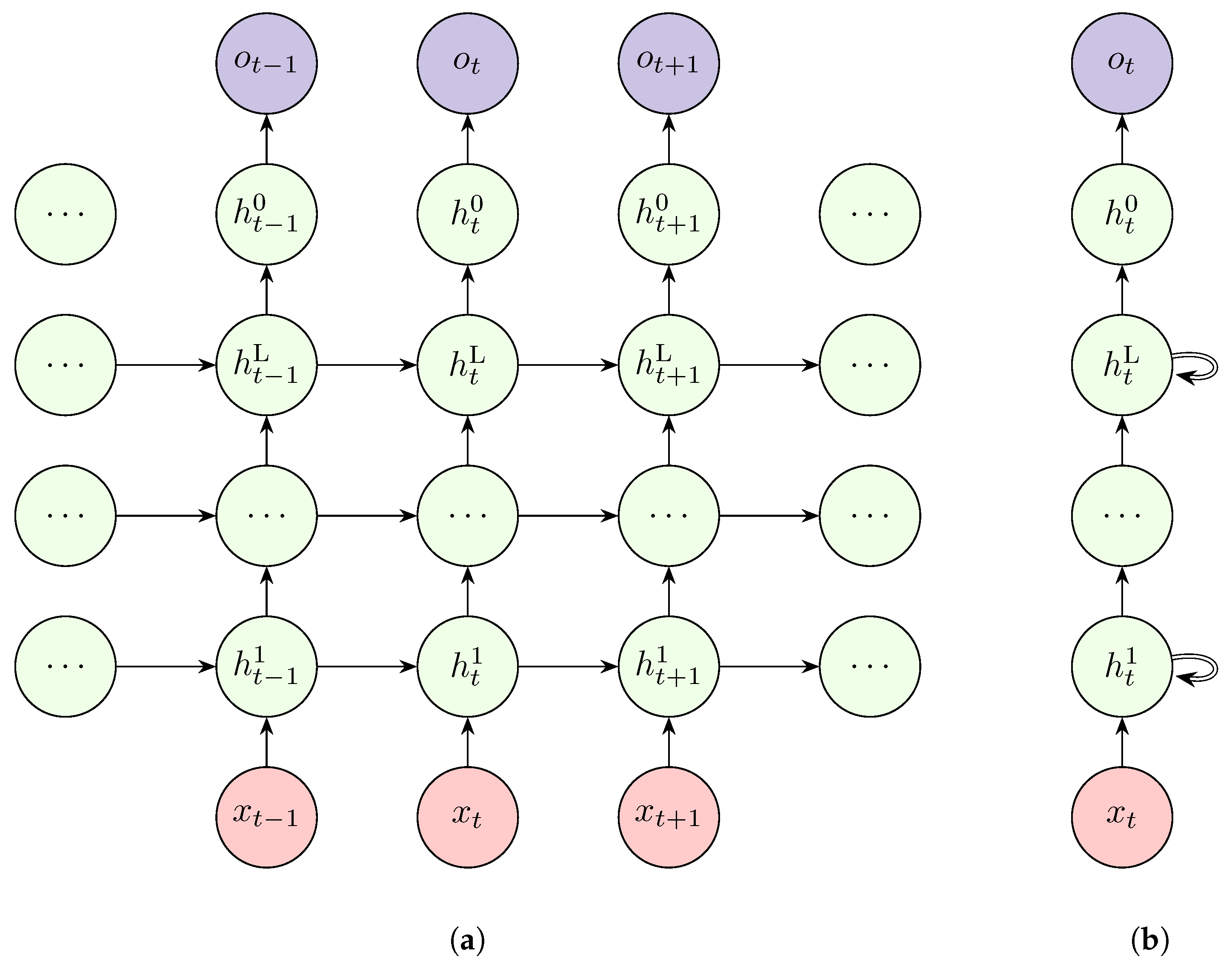

Simple RNNs use RNN cells which are stacked together to create an RNN. An RNN cell accepts two inputs at each time step; the previous hidden state, which encodes previous information, and the input at the current time step.

Figure 4.

A representation of a simple Recurrent Neural Network cell (a) and a single-layered unrolled Recurrent Neural Network (b).

Figure 4.

A representation of a simple Recurrent Neural Network cell (a) and a single-layered unrolled Recurrent Neural Network (b).

These inputs are combined using Equation

11 to produce the new hidden state at time step

t,

contains a summary vector of all inputs up to and including

t[

109].Based on the overall structure of the neural network, the hidden state can be used to compute the output, which is given by Equation

12, or it can be passed to the next RNN cell at time step

,

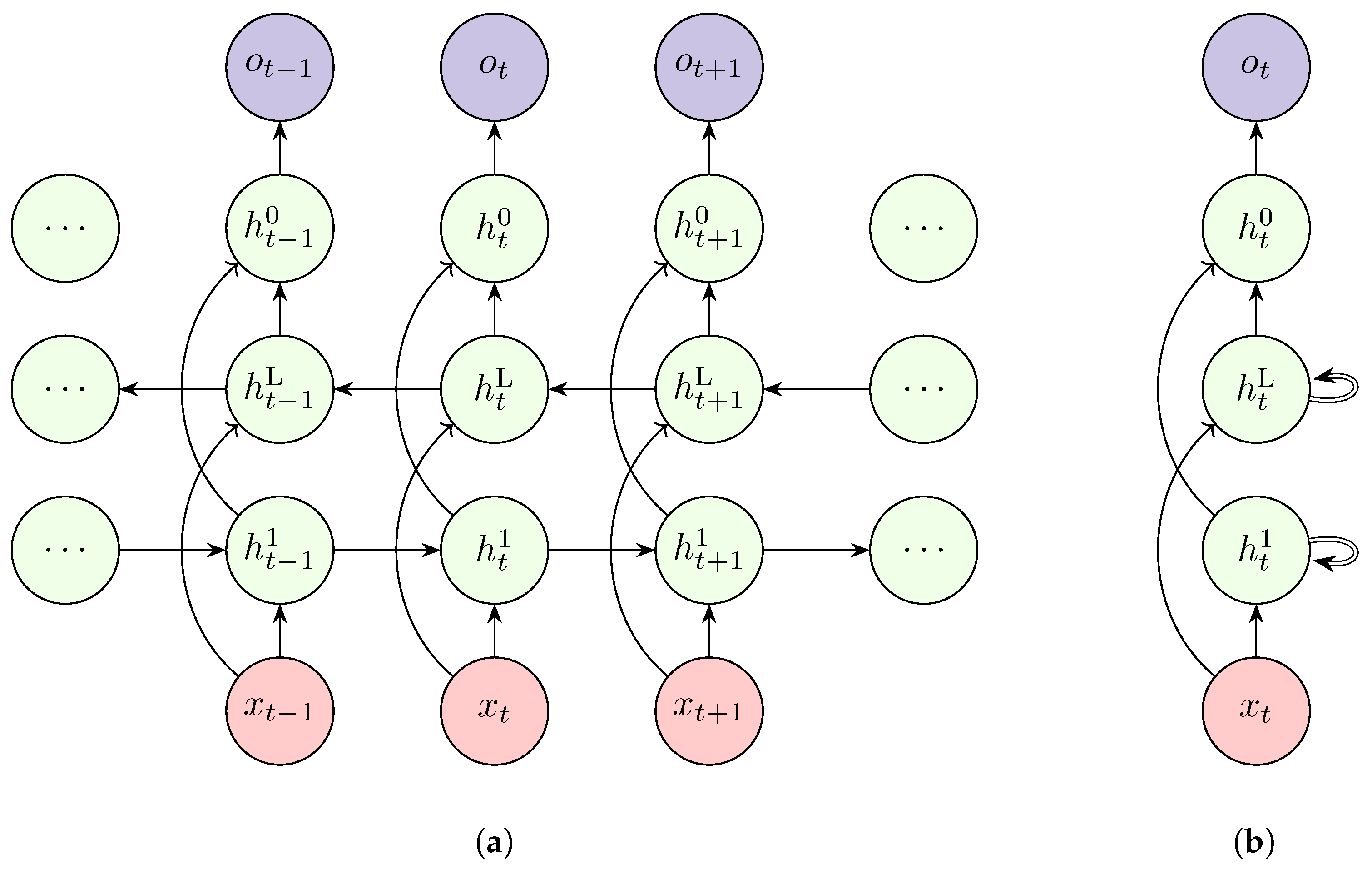

4.2.2. Deep RNN

The typical RNN discussed so far only considers a single layer. However, as indicated earlier, neural networks perform better with depth. Just as MLPs, this is possible with RNNs. Multiple RNN layers are stacked on top of each other as shown in

Figure 5 to compose a Deep RNN. Cells in the top layers get input from the previous cell of the same layer as well as from the bottom layer.

4.2.3. Bidirectional RNN

To help address issues like missing values, RNNs use an innovative architecture called bidirectional recurrent neural network(BiRNN). This architecture was proposed by Schuster and Paliwal [

111]. BiRNN combines two RNNs oriented in different directions with each RNN treated separately, i.e.,

for the forward RNN and

for the backward RNN. The outputs of these networks are concatenated, i.e.,

, to produce the final output.

Figure 6.

Architecture of a Bidirectional Recurrent Neural Network (rolled(a) and unrolled(b)). Adapted from Vuong [

110].

Figure 6.

Architecture of a Bidirectional Recurrent Neural Network (rolled(a) and unrolled(b)). Adapted from Vuong [

110].

4.3. Comparisons of Standards RNNs

The three architectures of RNNs discussed thus far have some similarities and differencies. Simple RNNs offer a lightweight structure capable of modeling short-term dependencies; however, their effectiveness diminishes on long sequences due to vanishing gradients [

112] and limited memory capacity . On the other hand, deep RNNs are able to learn hierarchical temporal features and tend to perform better than simple RNNs. Despite improving model accuracy, the added depth adds a layer of complexity and there is always a tradeoff between accuracy and model generalization. Thus, careful regularization and initialization are needed in deep RNNs to mitigate training instability. BiRNN’s introduce a layer of complexity with their dual encoding feature. This architecture is useful in applications such as anomaly detection, classification or data imputation tasks where the entire sequence is available a priori . However, bidirectionality is inherently noncausal and thus unsuitable for real-time forecasting, where future observations are not accessible. Despite these differences, all RNNs share a common advantage,

weight sharing. Weight sharing reduces the number of trainable parameters in RNNs and thus reducing the computational costs

Table 2.

Comparison of Simple RNN, DRNN, and BiRNN in Time Series Modeling.

Table 2.

Comparison of Simple RNN, DRNN, and BiRNN in Time Series Modeling.

| Aspect |

Simple RNN |

Deep RNN |

Bidirectional RNN |

| Temporal direction |

Forward only |

Forward only |

Both |

| Long-term memory |

Poor |

Improved |

Strong (non-causal) |

| Depth and abstraction |

Shallow |

Hierarchical |

Context-rich |

| Suitability for forecasting |

Online / short horizon |

Long horizon forecasting |

Not suitable for real-time use |

| Computation and training |

Efficient, stable |

Expensive, harder to train |

High overhead |

| Best use case |

Basic time series tasks |

Multiscale or nonlinear time series |

Offline classification or anomaly detection |

4.4. Shortfalls of RNNs

There are two main problems with the discussed RNN architectures. Firstly, RNNs are trained through a process called

backpropagation through time (BPTT), which involves going back in time n steps to compute the derivative of the loss. Thus, the gradient of the loss function involves repeated multiplication of the Jacobian matrix of the hidden state transition,

If the largest eigenvalue

of this matrix is less than 1, gradients tend to vanish. On the other hand, if

is greater than 1, gradients grow exponentially, leading to the

exploding gradient problem (unstable training) [

15,

113].

There are different ways to resolve these issues including gradient clipping, proper weight initialization, and use of gating mechanisms (LSTMs and GRUs). This issue is also tackled via training technique called

teacher forcing, where ground truth at

t is fed as input to the cell at

[

70].

A second shortfall of RNNs, which is also common to their advanced variants, is their lack of parallelizability, as they process data sequentially. This can significantly slow down training times. Attempts to resolve this have led to the development of advanced architectures such as transformers and parallelizable RNNs.

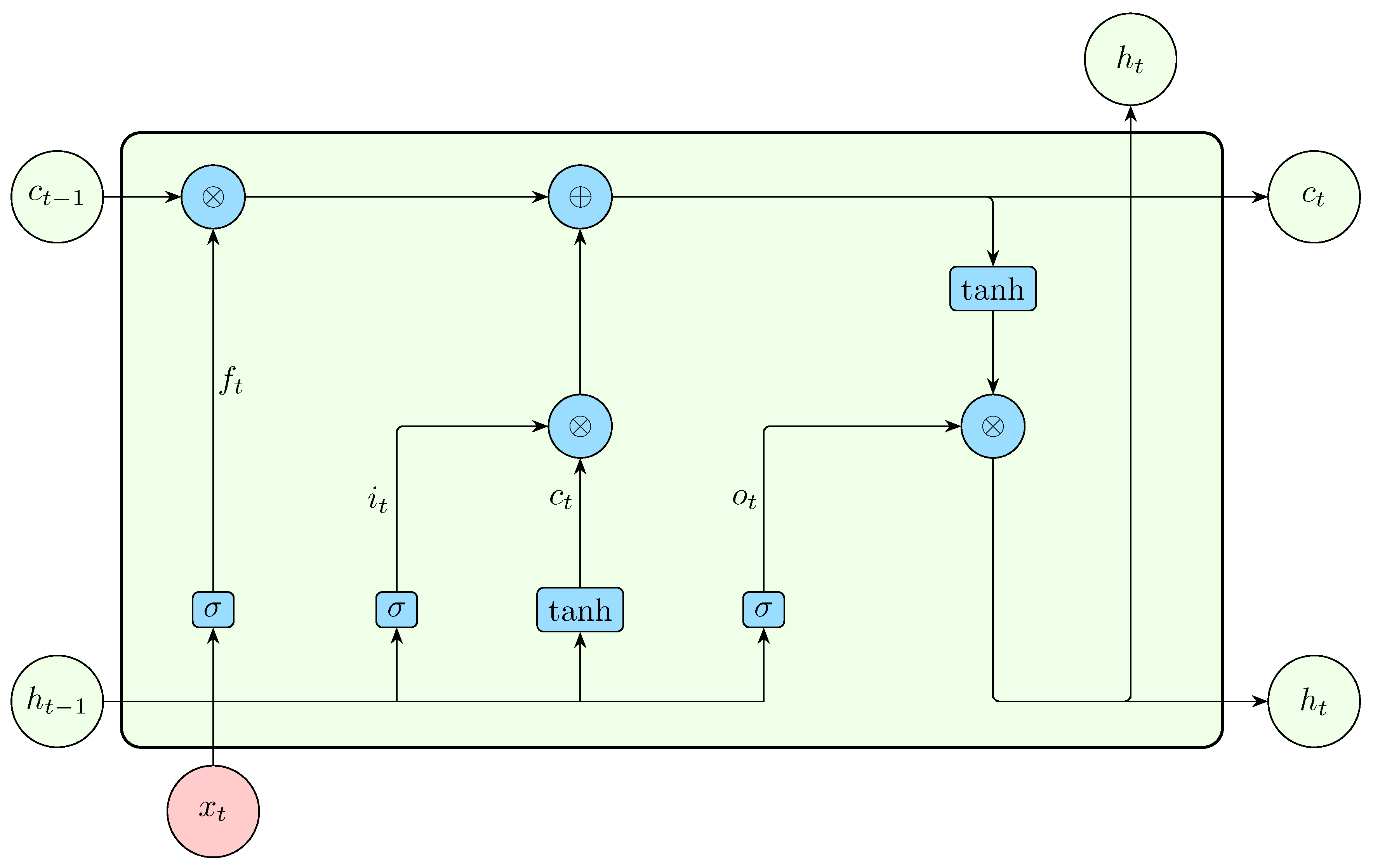

4.5. Long Short-Term Memory

To handle long and short sequences effectively without issues such as exploding or vanishing gradients, Long Short-Term Memory(LSTM) networks were introduced [

65]. LSTM neural networks use a cleverly designed cell (LSTM cell) instead of the simple RNN cell. These gates are depicted in Figure (

Figure 7).

The input to the forget gate is the previous hidden state and current input

acts as a mask for the cell memory since a sigmoid function squashes all outputs into values between 0 and 1. Zero entries erase irrelevant previous memory contents at a specific memory location.

To update the cell memory, an LSTM uses the following equations,

The input gate controls how much new information, which is represented by the candidate memory , should be stored in the cell state . It decides what proportion of the candidate memory will be added to the current memory, effectively controlling the flow of information into the cell state. The candidate memory represents a potential new memory value that could be added to the cell state. Its value is computed based on the current input and previous hidden state, capturing the new information to be incorporated into the cell state. The input gate modulates this candidate memory and determines how much of it should influence the memory update.

The current hidden state is then calculated based on the updated memory. A copy of the current memory

is passed through a tanh activation function to normalize it between -1 and 1. This result is then multiplied by

, which is computed as

The current hidden state, given by

along with the current cell memory

, are passed to the next LSTM cell.

4.6. Other LSTM Variants

Different variations of the LSTM cell exist. The focus here is on the peephole LSTM [

115] and xLSTM [

116] The peephole LSTM extends the standard LSTM architecture by adding connections from the internal cell state to the input, forget, and output gates. The main benefit of this approach is improved gradient flow, which aids in mitigating the vanishing gradient problem. In contrast, xLSTM approaches a similar problem by incorporating additional gates for finer control over information retentions. The architecture also includes an exponential gating mechanism in which exponential activation functions are used in the input and forget gates to better manage data flow

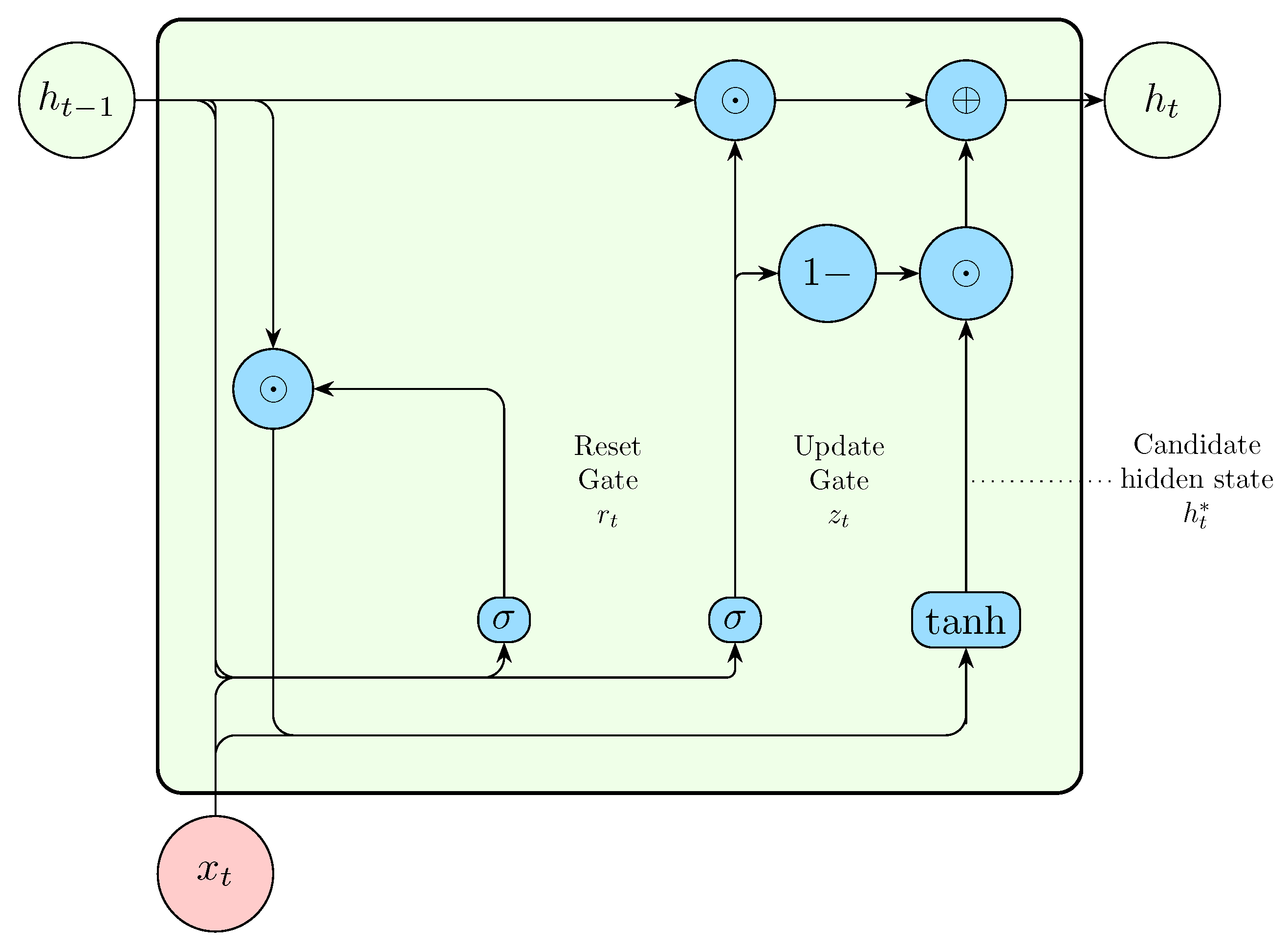

4.7. Gated Recurrent Unit

The Gated Recurrent Unit(GRU) [

117] was designed to simplify the LSTM while maintaining comparable accuracy. The GRU removes the need for a separate memory cell and reduces the number of gates to two; the reset gate

and the update gate

.

Figure 8.

Internal structure of a Gated Recurrent Unit(GRU) [

114].

Figure 8.

Internal structure of a Gated Recurrent Unit(GRU) [

114].

The reset gate

controls how much of the previous hidden state should be considered irrelevant for the current time step. The input to the reset gate is the current input

and the previous hidden state

After applying the logistic sigmoid activation,

determines how much of the previous hidden state should be reset. Next, the candidate current hidden state

is calculated by multiplying the reset gate output

with the previous hidden state

, and then adding the current input

The update gate

determines the balance between the previous hidden state and the candidate current hidden state. A

value of 0 completely ignores the previous hidden state and only considers the current input. Conversely, a

value of 1 completely ignores the current input and only considers the previous hidden state. The update gate is computed as

Finally, the current hidden state

is calculated as a linear interpolation between the previous hidden state

and the candidate hidden state

based on the value of

,

4.8. Comparison between LSTM and GRU

Both the LSTM and GRU were designed to solve the issue of vanishing gradients and enable long term modeling. The subtle difference between the two architectures is mostly in the cell complexity, where the number of gates in the GRU is reduced to two. While both models perform well on many sequence modeling tasks, GRUs often generalize comparably to LSTMs with less computational cost, making them a preferred choice in scenarios with limited resources or smaller datasets. However, in tasks that require modeling more intricate temporal dynamics, LSTMs may offer better performance due to their more expressive gating mechanisms. For more expressive modeling, in practice, it is also common to use deep or bidirectional RNNs with the LSTM or the GRU as the central computing mechanism.

4.9. Transformer Models

Transformers [

118] were introduced to address the limitations of recurrent neural networks (RNNs). The issues referred to here are long-term memory and parallelization. Tranformers rely on self-attention to capture contextual dependencies across input sequences. Mathematically, self-attention is defined as

where

,

, and

represent the query, key, and value matrices, respectively, and

d denotes the dimensionality of the key vectors. To ensure causality, which is a key issue in time series, masking is used to prevent information from future time steps from influencing the current prediction.

Original transformer models were designed for machine translation tasks. However, over the past few years, transformers have been adapted to time series analysis due to their ability to capture long-range dependencies and enable parallel computation. Notable variants include

Temporal Fusion Transformer (TFT) [

119],

Informer [

120],

Autoformer [

121],

FEDformer [

122], and

PatchTST [

123].

4.10. Mamba

Mamba [

124] is a recent architecture for efficient and expressive sequence modeling. This architecture was developed to overcome the quadratic complexity

of self-attention in Transformers. It builds on discrete-time state space models (SSMs), represented by

Unlike classical SSMs, Mamba introduces input-dependent parameters

,

, and a dynamic step size

, enabling selective compression of relevant information and improved adaptability. To extend memory and enable parallelization, Mamba uses structured initialization, (HIgh-order Polynomial Projection Operator) [

125] for

and a hardware-aware parallel scan algorithm.

4.11. Convolutional Neural Networks

Convolutional neural networks (CNNs) are among the most popular architectures and have significantly influenced the modern era of deep learning. CNNs were initially developed to handle grid-like data such as images. However, researchers have found CNNs to perform well on sequential data, such as time series. It has to be noted that time series can be considered one-dimensional grid data [

109]. The fundamental operation in a CNN is the convolution. Mathematically, for the one dimensional discrete case, this is defined as,

where

G is the input signal,

H is the filter or kernel, * denotes convolution operator,

i is the output index,

m is the summation index,

is the value of

G at

m, and

is the flipped and shifted version of

H.

Convolutions can also be multidimensional. For 2D convolutions, the operation becomes,

As can be seen, a typical convolution operation involves flipping the function

H (kernel).However, in CNNs, it is uncommon to flip function

H before applying it, so the operation is more accurately described as

cross correlation[

126].Despite this, the term

convolution is still commonly used. The purpose of CNNs is to learn a set of functions

H, also known as filters or kernels. These filters are typically small tensors (e.g., 1x1, 3x3, 5x5, or 7x7). For multi-dimensional inputs, the filters are extended accordingly. Unlike MLPs, CNNs rely on local connections and share the same filter across the entire input to create the output feature map. Multiple filters can be used in one layer, and their outputs are concatenated to form a complete feature representation. The dimensions of the feature map are dependent on the number of kernels, padding, stride, dilation, pooling, and upsampling. Since the same filter is reused across the input, CNNs are able to learn spatial relationships invariant to transformations. Additionally, CNNs require fewer parameters compared to fully connected networks, making them well-suited for high-dimensional data. Unlike RNNs, CNNs are parallelizable.

4.11.1. 1D CNN

The most basic CNNs used for time series are 1D CNNs [

127]. This involves sliding the filter along the sequence length from the beginning. Notably, 1D CNNs can be used for multivariate time series modeling as well, provided that the filter width matches the width of the sequence. Despite their effectiveness, 1D CNNs suffer from future data leakage, violating causality.

4.11.2. Causal CNN

To overcome the issue of future data leakage in 1D CNNs, causal convolutions are used. Mathematically, causal convolution is written as,

Causal convolutions only use past data to make predictions. To handle long sequences, dilated convolutions, introduced by Yu and Koltun [

128] are used to increase the receptive field with fewer layers,

This generalization allows flexible dilation without modifying the filter. The standard convolution is a special case where .

4.12. Graph Neural Networks

In several practical applications, time series emerge within networked structures such as road networks, water distribution systems, and railway infrastructures. In such settings, the data exhibit dependencies that are both spatial and temporal in nature. To capture these dependencies accurately requires their explicit modeling. Graph Neural Networks(GNNs) are a natural architecture for this as they provide a more flexible way to model such dependencies. Graph Neural Networks that take into account the aspect of time are called dynamic. In this section, we will firstly discuss the fundamentals of GNNs and will then consider how they fit in time series analysis.

Typically, the input to a GNN is a graph, e.g. a protein structure, social network, transportation network, etc. A graph is defined by a set of nodes , a set of edges , and associated mappings that describe the relationships between nodes. Mathematically, a graph is represented either through an adjacency list or adjacency matrix (A). The latter is widely used in Graph Neural Network applications. Each element in A indicates the existence or absence of an edge between a pair of nodes, i.e., if there is an edge from node i to node j, and otherwise. The specific structure of A depends on the type of graph. Graphs can have single or multiple edges between a pair of nodes. It is also possible to have edges which start and terminate on the same node (self-loops). Further categorisation of a graph depends on edge- directionality and edge-weight. Thus, graphs can be classified as directed or undirected as well as weighted or unweighted. A special case which will be our focus are undirected graphs without self-loops or multiple edges. The analysis of such graphs is simple since the adjacency matrix is symmetric.

Node ordering in a graph is arbitrary as such different formulations of the adjacency matrix are possible [

84]. For this reason, a permutation matrix

P is used to ensure that the output of the GNN remains invariant to the ordering of nodes. This is achieved through permutation-invariant embeddings

and a transformed adjacency matrix

.

In general, the goals of a GNN span a variety of tasks, including node classification [

129], link prediction [

130], graph classification [

131], and graph generation [

132] . A GNN aims to map the input node or edge features into an embedding space that offers a rich representation for further modeling [

133]. These node or edge features are continuously updated by aggregating information from neighbouring nodes or edges through a process known as

message passing,

where

is a non-linear activation function,

is the learnable weight matrix at layer

t, AGG is a neighborhood aggregation function such as mean or sum, and

denotes the set of neighbors of node

v.

Message passing in a Graph Neural Network (GNN) occurs iteratively over several iterations. After a specified number of iterations

t, each node encodes information about all other nodes in the graph. The number of iterations is considered optimal to ensure that the GNN has a sufficiently large receptive field while preventing

oversmoothing; a scenario where all embeddings become indistinguishable [

134]. The basic approach assumes all nodes and edges are of equal importance; however, more advanced methods have been developed to account for varying levels of importance.

4.12.1. Temporal Modeling in GNN

To make predictions, a GNN typically utilizes the final embedding layer. For temporal modeling, it is possible to integrate a GNN with other network architectures, where the final embedding layer is passed as input to subsequent neural network blocks. This approach is commonly used in temporal GNNs [

135], where the final embedding is fed into another network block, such as an RNN or CNN. This allows the network to capture both spatial patterns using the GNN and temporal features using the RNN or CNN.

GNNs can also be used for data which is not spatial by nature. This is possible by first transforming the data into a graph structure. The approach seems to work quite well with time series. Liang et al. [

136] employed this technique to impute missing values in traffic datasets. For a comprehensive overview of GNN applications in time series tasks, refer to Jin et al. [

137].

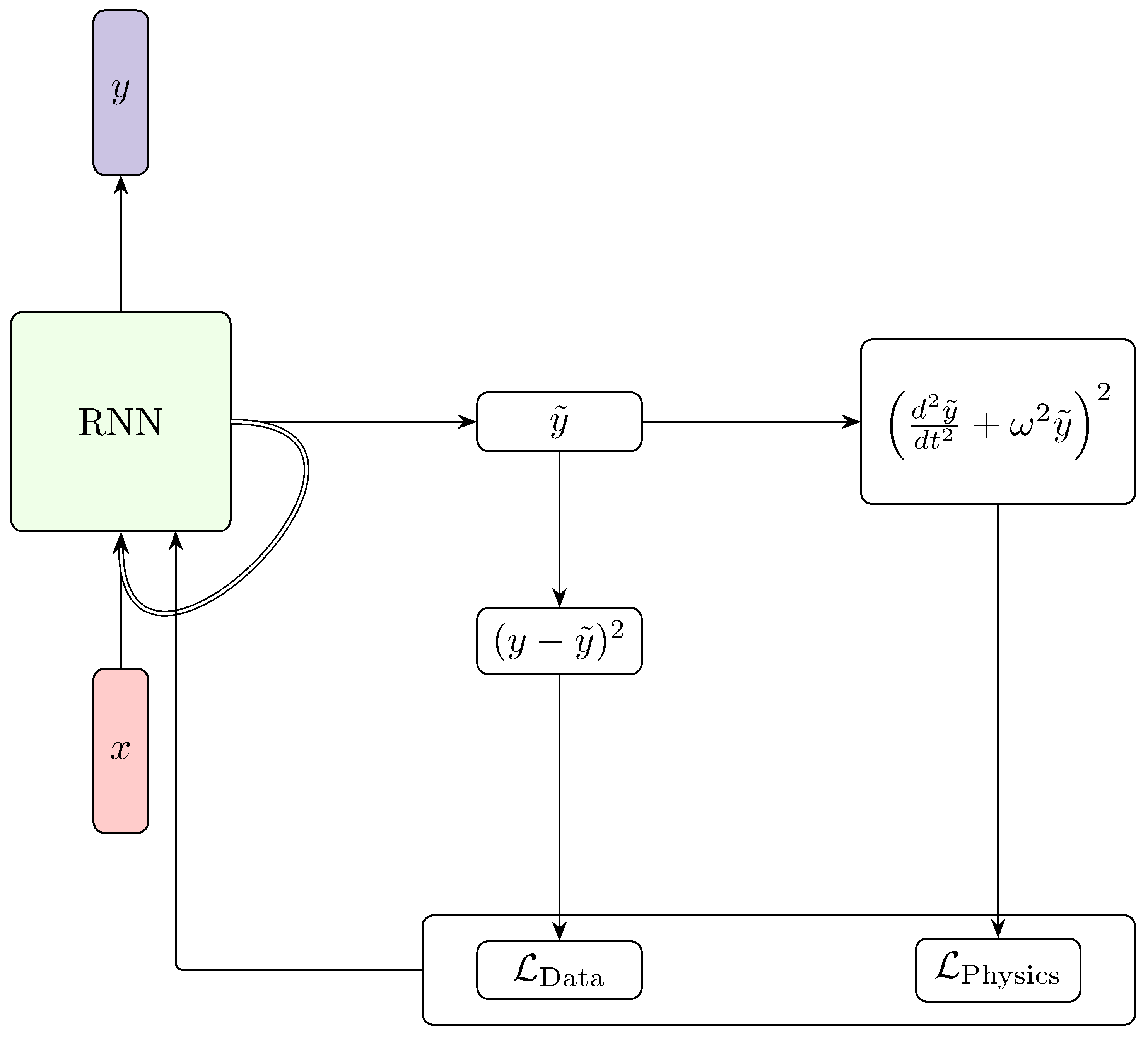

4.13. Physics-Informed Neural Networks

Traditionally, the approach to training neural networks has been heavily data-centric. However, this approach may have drawbacks, such as noisy data that deviates from actual physical reality.To resolve this, researchers have been experimenting with the idea of incorporating the physics of a problem into a model. Enforcing physics into a model is a well studied field of machine learning under the umbrella of

Scientific Machine Learning (SciML). Several studies have so far shown that models in scientific settings perform better on unseen data when the physics of the phenomenon being studied is considered [

138] . Although SciML has been achieved through different techniques, our consideration in this review are Physics Informed Neural Networks (PINNs) [

139] . PINNs are a household name in SciML hence their discussion. PINNs enforce physics through a physics regularization term which is added to the loss function. This is achieved through the problem’s differential equation and its associated conditions.

Consider the general form of a partial differential equation,

where

represents a differential operator,

is the solution to the PDE, and

denotes the forcing term. The solution to this PDE must satisfy both initial and boundary conditions. Specifically, the initial condition is,

where

is the initial time, and

describes the initial state of the system. The boundary condition is expressed as,

where

prescribes the behavior of the solution on the boundary

of the spatial domain

. Here, the spatial variable

x spans the domain

, and the temporal variable

t lies within the interval

.

The first step in a PINN is to calculate the residual of the PDE as follows,

Residuals are also calculated for initial and boundary conditions, and the total residual is termed the physics loss. Minimizing this loss across the domain and over the specified time interval is crucial for validating the accuracy and consistency of the solution under the given initial and boundary conditions. This ensures that the proposed solution

adheres to both the dynamics described by the PDE and the constraints imposed by the initial and boundary conditions. However, in practice, exact satisfaction is rarely achievable; hence, PINNs do not perfectly satisfy the governing physics [

140,

141].

PINNs are usually trained by minimizing the following composite loss function,with each term adequately weighted,

where,

is the Initial Condition Weight, is the Boundary Condition Weight, is the Physics Weight, and is the Data Weight, all balancing their respective influences in the PIN model.

PINNs have been successfully applied to time series problems, such as the estimation of cuffless blood pressure [

142]. A recent application of PINNs by Park et al. [

143] involved weather data forecasting, where an RNN served as the backbone network and the harmonic oscillator equation was used to enforce physical constraints (see

Figure 9).

Due to the nature of the harmonic motion solutions, the neural PDE component effectively modeled the seasonal component of the time series. This approach bears some similarity to the Fourier Analysis Network described earlier.

4.13.1. Issues with PINNs

Similar to other neural architectures, PINNs have shortfalls. A major shortfall lies in the training instability and difficulty in balancing the contributions of data loss and physics loss, especially when dealing with stiff PDEs or multi-scale phenomena [

144]. This imbalance often leads to poor convergence or solutions that satisfy the data but violate the governing equations, or vice versa. Moreover, PINNs typically rely on automatic differentiation to compute residuals, which can be computationally intensive . Another notable challenge is the difficulty PINNs face in enforcing complex boundary conditions, especially in domains with discontinuities or sharp gradients [

145]. A detailed investigation into the failure modes of PINNs and strategies to address them is provided by Krishnapriyan et al. [

140].

5. Generative Modeling

In practical applications, there are scenarios where it is essential to model the input data itself. In such cases, a different class of models, generative models, may be more appropriate. Generative models learn the joint probability distribution and can use this distribution to generate new data points that resemble the training data. The aim of this section is to introduce various classes of generative models that are commonly employed for tasks such as data imputation and synthetic data generation in time series applications.

5.1. Autoencoders

The goal of an autoencoder is to learn a low-dimensional latent space of the data, and then to reconstruct the original data from this compressed space. An authoencoder achieves this using a decoder

, for compression and

, for reconstruction. Learning is done by minimization of the reconstruction loss, which is given by

Exact reconstruction is not possible because the latent space has a lower dimension than the input and output. However, this architectural design is necessary to encourage the autoencoder to learn useful features required for reconstruction.Different researchers have successfuly applied autoencoders to data denoising [

146], data imputation [

147], anomaly detection [

148], and more. Despite its wide usage in literature, the standard autoencoder cannot perform meaningful data interpolation, and this arises from the discrete nature of the learned latent space [

149]. Among other approaches, this limitation is addressed by variational autoencoders.

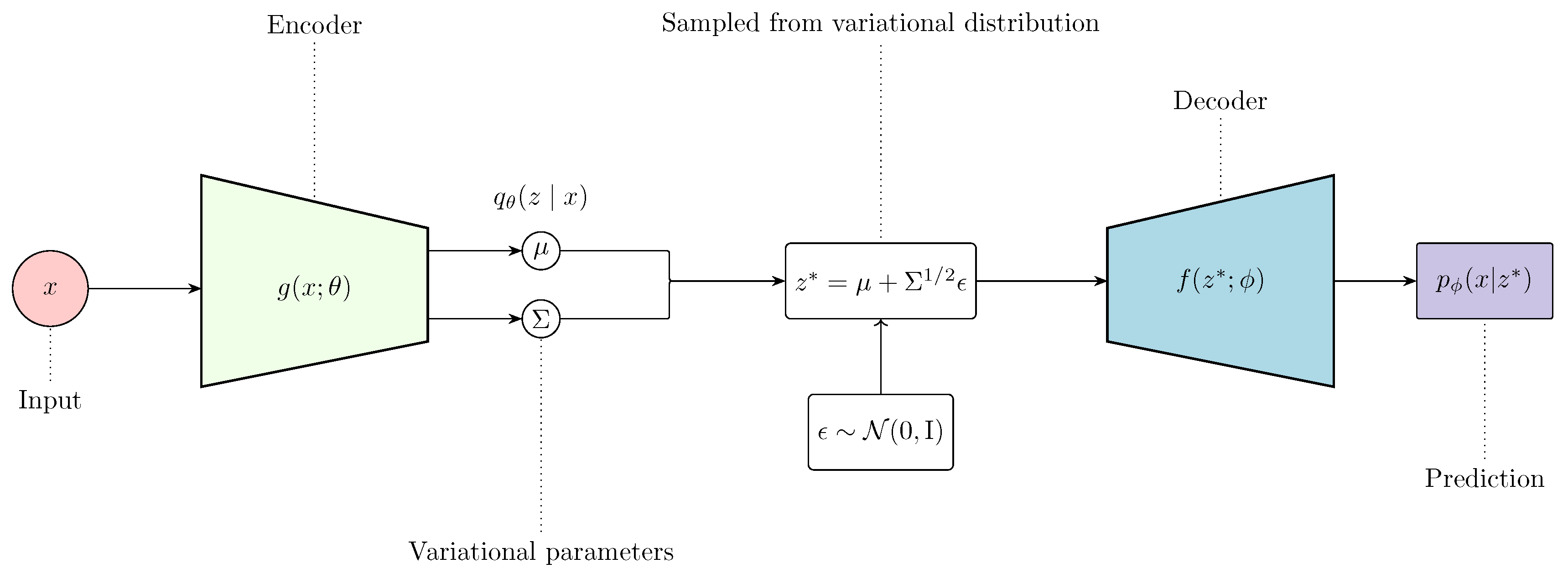

5.2. Variational Autoencoders

Unlike traditional autoencoders, variational autoencoders (VAEs) [

150] adopt a probabilistic framework to learn a more structured latent space.

Figure 10.

Architecture of a Variational Autoencoder (VAE). The input

x is passed through an encoder network

to predict the variational parameters:

and

, which parameterize a Gaussian variational distribution

. A latent variable

is sampled from this distribution using the reparameterization trick:

, where

. This sample is then fed into the decoder

, to predict the data x. Adapted from Prince [

84].

Figure 10.

Architecture of a Variational Autoencoder (VAE). The input

x is passed through an encoder network

to predict the variational parameters:

and

, which parameterize a Gaussian variational distribution

. A latent variable

is sampled from this distribution using the reparameterization trick:

, where

. This sample is then fed into the decoder

, to predict the data x. Adapted from Prince [

84].

First, the input is mapped to a latent space z using an encoder , where . Since is generally intractable due to the intractability of the marginal likelihood , VAEs introduce a more tractable approximate posterior . Then, to reconstruct the data from the latent space, a decoder is used.

VAEs are trained by maximizing the marginal likelihood over the reconstructed sample,

, which is obtained by marginalizing over the latent space,

This integral is generally intractable and is typically simplified to provide tractable proxy objectives. By introducing a variational distribution

, we can apply Jensen’s inequality to get lower bound of the log marginal likelihood,

This gives rise to the evidence lower bound (ELBO),

Intuitively, the first part of this expression represents the reconstruction term, which encourages the decoder to accurately reconstruct the input data. The second term encourages the variational distribution to be as close as possible to the prior. It is common not to sample directly from

, but rather to use a reparameterization trick (Equation

41),

This allows the expectation over

to be rewritten as an expectation over a fixed noise distribution

. Expression (

40) can therefore be rewritten as a reparameterized objective

According to Gundersen [

151], without the reparameterization trick, backproping would not compute an estimate of the derivative of the ELBO and would thus give no guarantee that sampling large numbers of z will help converge to the right estimate of the gradient. The gradient of the ELBO is computed with respect

and

via

At this stage, standard stochastic optimization algorithms (e.g., SGD) are used to optimize the variational parameters. Typical VAE architectures for time series include

HyVAE [

152],

TimeVAE [

153], and

Time-NeighbourVAE [

154].

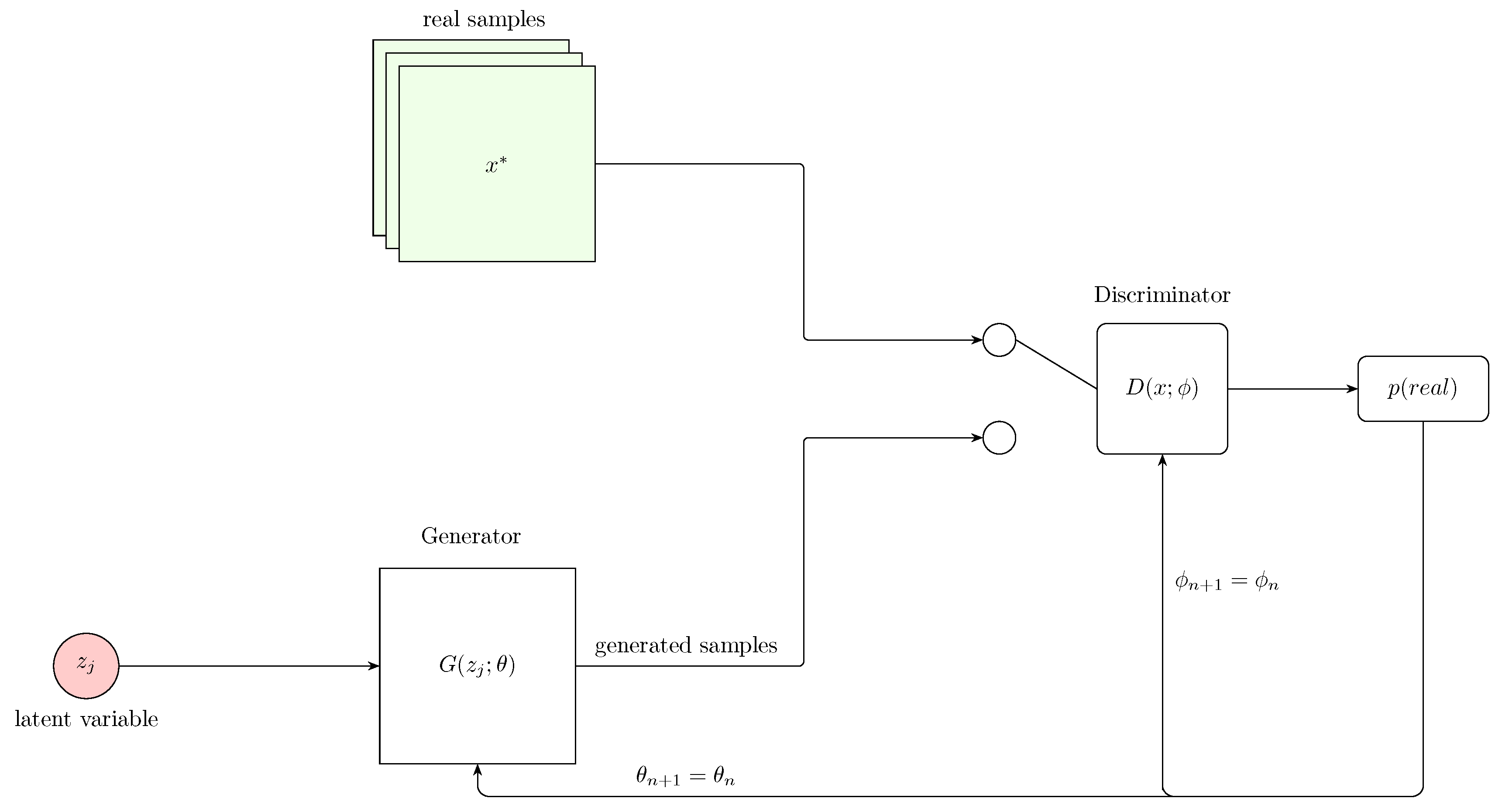

5.3. Generative Adversarial Network

A Generative Adversarial Network (GAN) [

126] aims at generating realistic data similar to real-world data present during training by fooling another network into believing that the generated samples are real. This is achieved by simultaneously training two networks: a generator

G and a discriminator

D.

Figure 11.

Schematic of a Generative Adversarial Network (GAN) training loop. A latent variable

z is sampled and transformed by the generator

to produce samples. These, along with real data

, are evaluated by the discriminator

, which outputs the probability of each sample being real. The discriminator is updated to better distinguish real from generated, while the generator updates its parameters to fool the discriminator.Adapted from Calin [

155].

Figure 11.

Schematic of a Generative Adversarial Network (GAN) training loop. A latent variable

z is sampled and transformed by the generator

to produce samples. These, along with real data

, are evaluated by the discriminator

, which outputs the probability of each sample being real. The discriminator is updated to better distinguish real from generated, while the generator updates its parameters to fool the discriminator.Adapted from Calin [

155].

The generator creates samples

from a latent variable

. The objective of the generator is to produce data that is indistinguishable from real data. Mathematically, this is equivalent to minimizing the following expression with respect to

,

Technically, if samples are assigned a higher probability, i.e., if the discriminator classifies them as being real, the above expression approaches zero. However, if generated data is classified correctly, i.e., a low probability is assigned to generated data, the expression tends to infinity. This means that the generator loss can only be reduced if the discriminator incorrectly classifies generated data, in which case the generator achieves a minimum loss.

As already highlighted, the discriminator is responsible for classifying data as being real or generated(binary classifier). The input to the discriminator is either a generated or real sample, and it returns a probability that is higher when a sample is real (i.e., close to 1). The objective of the discriminator is usually to maximize the following expression with respect to

Despite the fact that the underlying principle behind GANs is conceptually straightforward, they are difficult to train in practice. A major challenge is

mode collapse, where the generator ignores the variability in the latent space and produces limited or nearly identical output [

84,

156]. To address this issue, several techniques have been proposed. They include feature matching and mini-batch discrimination [

157], unrolled GANs [

158], Wasserstein GANs (WGAN) [

159], spectral normalization [

160], and gradient penalty methods (e.g., WGAN-GP) [

161].

Although GANs were initially developed for image data, they have since been extended to handle sequential data such as time series data. Notable adaptations in this space include

TimeGAN [

162],

SeriesGAN [

163];

FinGAN [

164] to name a few. For a detailed survey of GAN applications in time series, the works of Brophy et al. [

165] and Zhang et al. [

166] can be consulted.

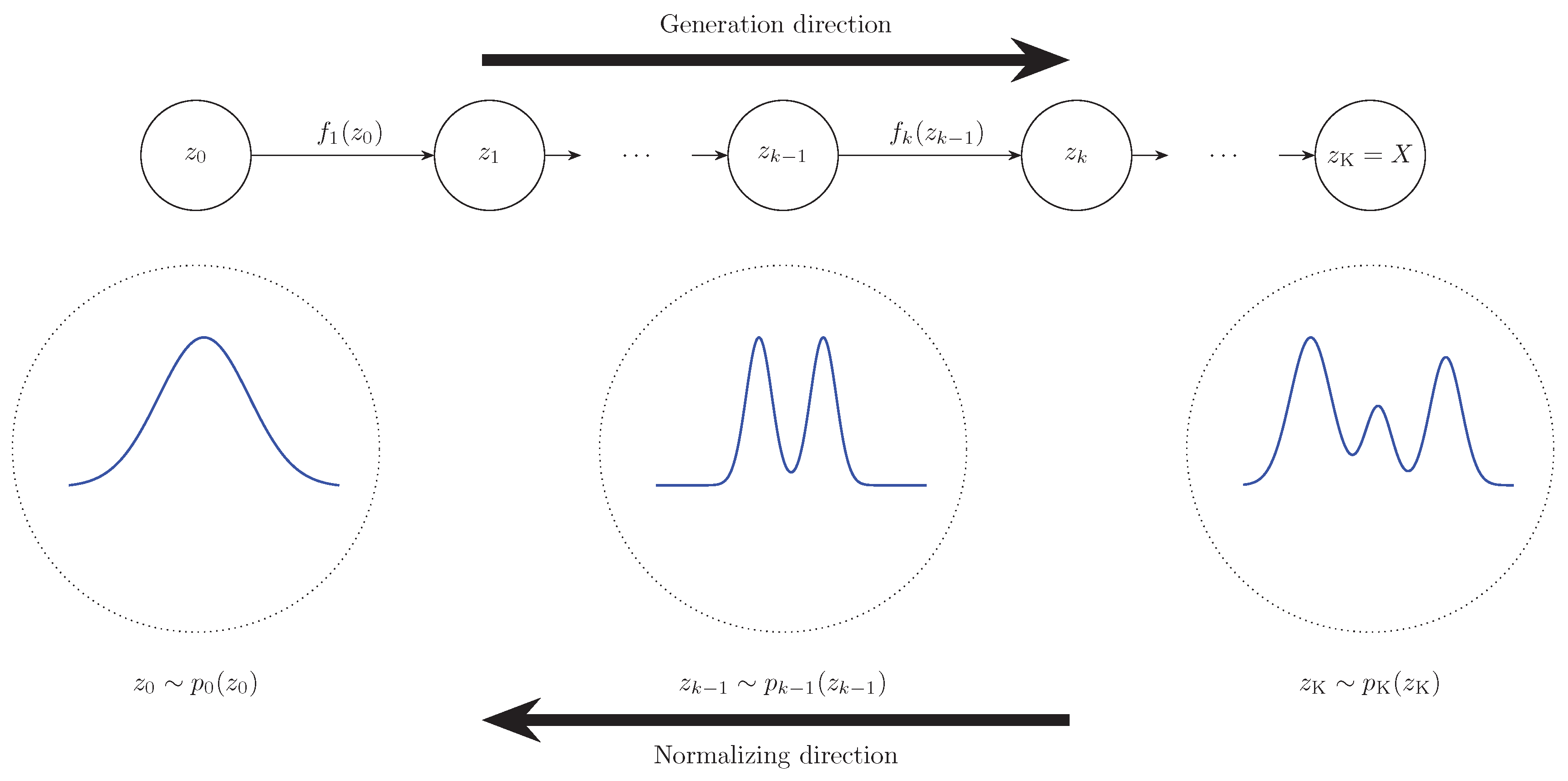

5.4. Normalizing Flows

Instead of implicitly modeling the data distribution like GANs, normalizing flows explicitly model the probability distribution over the data [

84]. Normalizing flows transform a simple distribution over a latent variable

into a more complex distribution using differentiable and bijective transformations

(see

Figure 12).

The transformation function is usually parameterized by a neural network. Transformations are required to be differentiable and bijective so as to ensure a smooth transition between the latent and data space while enabling efficient learning via gradient-based methods. Several techniques have been developed to design transformations with these desired properties. Among them are coupling flows [

168,

169], neural ODEs [

170], and autoregressive flows [

171].

Since the data distribution is defined through a transformation, its density is determined using the change of variables formula,

where

.

The objective is then to maximize the likelihood of the data under this model, i.e.,

Recent studies have applied normalizing flows to capture complex temporal dependencies and uncertainty in time series. Rasul et al. [

172] proposed a graph-augmented normalizing flow (GANF) using Bayesian networks to model inter-series causality.Flow based modeling has also been combined with temporal and attribute-wise attention for high-dimensional, label-scarce data [

173]. In forecasting, Rasul et al. [

172] integrated autoregressive models with conditional flows to enhance extrapolation and capture temporal correlations. Fan et al. [

174] proposed a decoupled formulation called instance normalization flow (IN-Flow), which addresses distribution shifts via an invertible transformation network.

5.5. Diffusion Models

Diffusion models (DM) [

175] are trained by sequentially adding noise to a data sample over multiple time steps and then attempting to remove this noise. The noise is added according to a Markovian process

with its associated distribution,

is a

hyperparameter which determines how quickly the noise gets blended. This hyperparameter can be fixed across all time steps or it can vary according to a predefined schedule. While single-step updates are possible for adjacent time steps by applying Equation

48, it is usually helpful to be able to move directly from

to

in a single step as well. This is

important especially in the reverse process when

denoising. The expression required to do this can be derived by generalizing Equation

48 over multiple time steps and is expressed as,

Derivation of this expression takes advantage of the properties of sums of Gaussian random variables. On the other hand, during the reverse process, the transition process is modelled as,

with an associated transition probability

. Here,

is a standard normal distribution used for

reparameterization.

is the noise predicted by the neural network, which is parameterized by

. The goal is to learn a mapping that makes

and

as close as possible, which leads to the following loss function,

In other words, the model tries to predict the amount of noise that was added to the data sample during the forward (diffusion) process.

The discussion thus far has primarily focused on denoising diffusion probabilistic models (DDPMs), often considering the unconditional generation setting where the data distribution is modeled without auxiliary information. However, general DMs are also capable of conditional generation, where the data distribution is modeled given some conditioning variable such as class labels or other contextual attributes. DMs have been proposed for forecasting [

176,

177,

178], classification [

179,

180], anomaly detection [

181,

182] , imputation [

183,

184], and generation Narasimhan et al. [

185], Chi et al. [

186]. A comprehensive review of the use of diffusion models in time series has been conducted by Lin et al. [

187], covering key models, methodologies, and applications across forecasting, imputation, and generation tasks. More recently, Yang et al. [

188] have provided an extensive survey encompassing both time series and spatio-temporal applications of diffusion models, categorizing existing approaches based on task type and model structure.

5.6. Autoregressive Models

Given a sequence,

, the joint probability distribution can be factorized using the chain rule of probability,

where

.

This is called an Autoregressive model (ARM) and it allows for the exact computation of the likelihood [

189]. To capture complex dependencies within the data, each conditional distribution in equation Murphy [

190]is parameterized by a neural network. Tomczak [

191] provides good cases for ARMS. Generally, the conditional distribution can be modelled as it is, but in practice, it usually computationally expensive as the sequence length .An alternative approach employs the first-order Markov assumption to simplify the conditional distribution at time

t.Thus, the conditional distribution at time setstep t depends only on the immediate previous time step

, i.e.

An ARM is trained by maximizing the likelihood of the observed data. This is also equivalent to minimizing the negative log-likelihood (NLL) given by

Once trained, data generation follows an ancestral sampling procedure, where values are sequentially sampled based on the learned conditionals.

5.7. Energy-Based Models

Energy-Based Models (EBMs) offer a general framework for modeling probability densities by associating scalar energy values to configurations of the input space.EBMs have their origins in statistical physics.The underlying assumption is that observed, high-probability data should correspond to low-energy states, while implausible or unlikely configurations should be mapped to regions of higher energy.

Formally, the model defines an unnormalized density over

via a Boltzmann distribution,

Here,

is a learned energy function, typically parameterized by a deep neural network, and

is the partition function that ensures the density integrates to one. However, this normalization term is generally intractable in high dimensions, rendering exact likelihood evaluation and sampling computationally prohibitive. The parameters of the energy function are found through maximum likelihood estimation, which results in the following objective function,

with the gradient given by,

The first term is readily computable using observed data, while the second requires samples from the model distribution, which is itself defined implicitly by the energy function. Consequently, much of the complexity in training EBMs arises from the need to draw samples from a model whose normalization is unknown. Markov Chain Monte Carlo (MCMC) methods, such as Langevin dynamics, are commonly used for this purpose [

189].

Popular examples of EBMs in literature include Boltzmann Machines [

192] and Hopfield Networks [

62]. Similar to the other generative models, EBMs have also been applied to time series analysis. Brakel et al. [

193] outlined the training strategy for training EBMs for data imputation.Yan et al. [

194] proposed ScoreGrad, which a is multivariate probabilistic time series forecasting framework based on continuous energy-based generative models and has been found to achieve state of the art results on a number of datasets.

5.8. Summary of Generative Models

Table 3 presents a comparative summary of the generative models discussed.

6. Uncertainty Quantification

SHM data can be limited or noisy. Noise can emanate from different sources such measurement errors and environmental variability. Limited data might be due to sensor malfunction, missing data, and intermittent data collection due to huge costs associated with the process. Considering these factors, uncertainty quantification is critical to SHM for effective decision making. Uncertainties are generally classified as

aleatoric and

epistemic [

83,

195]. Aleatoric uncertainty is associated with inherent randomness and cannot be reduced. According to Wikipedia, epistemic uncertainty is associated with things which one could in principle know but does not know [

196]. Thus, once knowledge is available, epistemic uncertainty can be reduced. Epistemic uncertainty is further grouped into two major categories:

homoscedastic, meaning it is constant across all observations, or

heteroscedastic, meaning it varies with covariates. While homoscedastic uncertainty is relatively straightforward to handle, heteroscedasticity introduces additional complexity into modeling tasks. If these uncertainties are not properly accounted for, model predictions are usually unreliable can may potentially lead to suboptimal decision-making.The goal of this section is therefore to discuss how uncertainties are handled in deep learning. We do this from a Bayesian context.

6.1. Bayesian Inference

In many practical scenarios, prior knowledge plays a critical role in shaping our understanding of a problem. However, conventional deterministic modeling approaches typically disregard this prior information. A significant limitation of such methods is their reliance on large volumes of data to achieve good performance. In reality, data is often limited, incomplete, or corrupted by noise. In the late 1980s and early 1990s, Denker et al. [

197], Tishby et al. [

198], Denker and LeCun [

199], Buntine and Weigend [

200], MacKay [

201], Neal [

202] and Neal [

203] proposed the bayesian framework as an alternative learning method. This approach provides a way to incorporate prior knowledge into a model as well estimate uncertainties associated with its outputs. The central idea that governs this philosophy is Bayes’ rule. Mathematically, Bayes’ rule is expressed as

where

is the posterior,

is the likelihood,

is the prior, and the evidence term

is given by,

To make predictions for a new data point in a supervised mode, the posterior predictive distribution is used,

With regards to this expression, Murphy [

204] writes, "the posterior is our internal belief state about the world and the way to test if our beliefs are justified is to use them to predict objectively observable quantities."

Despite the simplicity of Bayes’ rule, the posterior distribution is often analytically intractable in real-world applications. For deep neural neural networks, the parameter vector may contain millions or even billions of parameters. For this reason, Bayesian methods were historically not favored for training deep models. However, this has changed with the development of efficient approximation techniques. Common methods for parameter estimation are discussed in the following sections.

6.1.1. Analytical Methods (Conjugacy)

For certain combinations of the prior and likelihood, the posterior can be computed analytically. In this case, the posterior distribution is of the same functional form as the prior, and the likelihood-prior pair is known as a

conjugate pair [

83].Besides providing a closed form solution for the posterior distribution, conjugacy enables sequential learning where the posterior at

t becomes the prior at

. Some of the conjugate pairs include Gaussian-Gamma, Gaussian-Inverse-Chi-Squared, Gaussian-Inverse-Gamma, and Gaussian-Inverse-Wishart [

205].

6.1.2. Maximum Likelihood Estimation

Although not Bayesian, Maximum Likelihood Estimation (MLE) is widely used to estimate model parameters. The goal of MLE is to find the set of parameters

that maximizes the likelihood of the data,

Equation

61 considers that the data is identically and independently distributed (i.i.d.). For large datasets, the computation of the likelihood can result in numerical instability. Large values of of

result in overflow, while small values result in underflow. It is important to note that

is a likelihood function, not a probability, and therefore can take values greater than 1.

To improve numerical stability and make the expression amenable to optimization, the log-likelihood is considered instead. Applying the properties of logarithms, the log-likelihood function is given by,

The above equation is a non-convex function and would otherwise benefit from readily available convex optimization algorithms by considering the negative log likelihood. This, in theory, is possible by taking the negative of the function. Thus, it is common to encounter negative log-likelihood in optimization problems

Maximization of the log-likelihood is similar to the minimization of the negative log-likelihood, and thus to find the optimal parameters,

A key limitation of MLE is its tendency to overfit, which can be mitigated using priors, leading us to Maximum A Posteriori estimation.

6.1.3. Maximum A Posteriori (MAP)

Bayes’ rule can be rewritten, omitting the marginal likelihood (which is constant w.r.t.

) as,

With this expression , it is possible to maximize the unnormalized posterior directly, i.e.,

Taking the log for numerical stability,

The second term serves as a regularization term, and this form is structurally similar to penalized optimization.

6.1.4. Laplace Approximation