Submitted: 08 May 2025

Posted: 12 May 2025


Abstract

Keywords:

1. Introduction

- Rigorous Definition of β*: I formalize β* as the critical value of the trade-off parameter β that marks the boundary between non-trivial (informative) and trivial (uninformative) representations. This corresponds to the slope of the IB curve at the origin, β* = dI(Z;Y)/dI(X;Z) evaluated as I(X;Z) → 0⁺, representing the point of maximal compression beyond which the representation collapses. This definition is made precise in Section 3.

- Theoretical Properties and Existence: I derive conditions under which β* exists and is unique. Key properties such as the concavity of the IB curve (I(Z;Y) as a function of I(X;Z)) and the continuity and monotonicity of optimal solutions with respect to β are proven. I show, for instance, that the optimal I(X;Z) and I(Z;Y) are non-increasing in β. I prove that there is a critical β (which I define as β*) beyond which the only solution is the trivial one (Z carries no information about X).

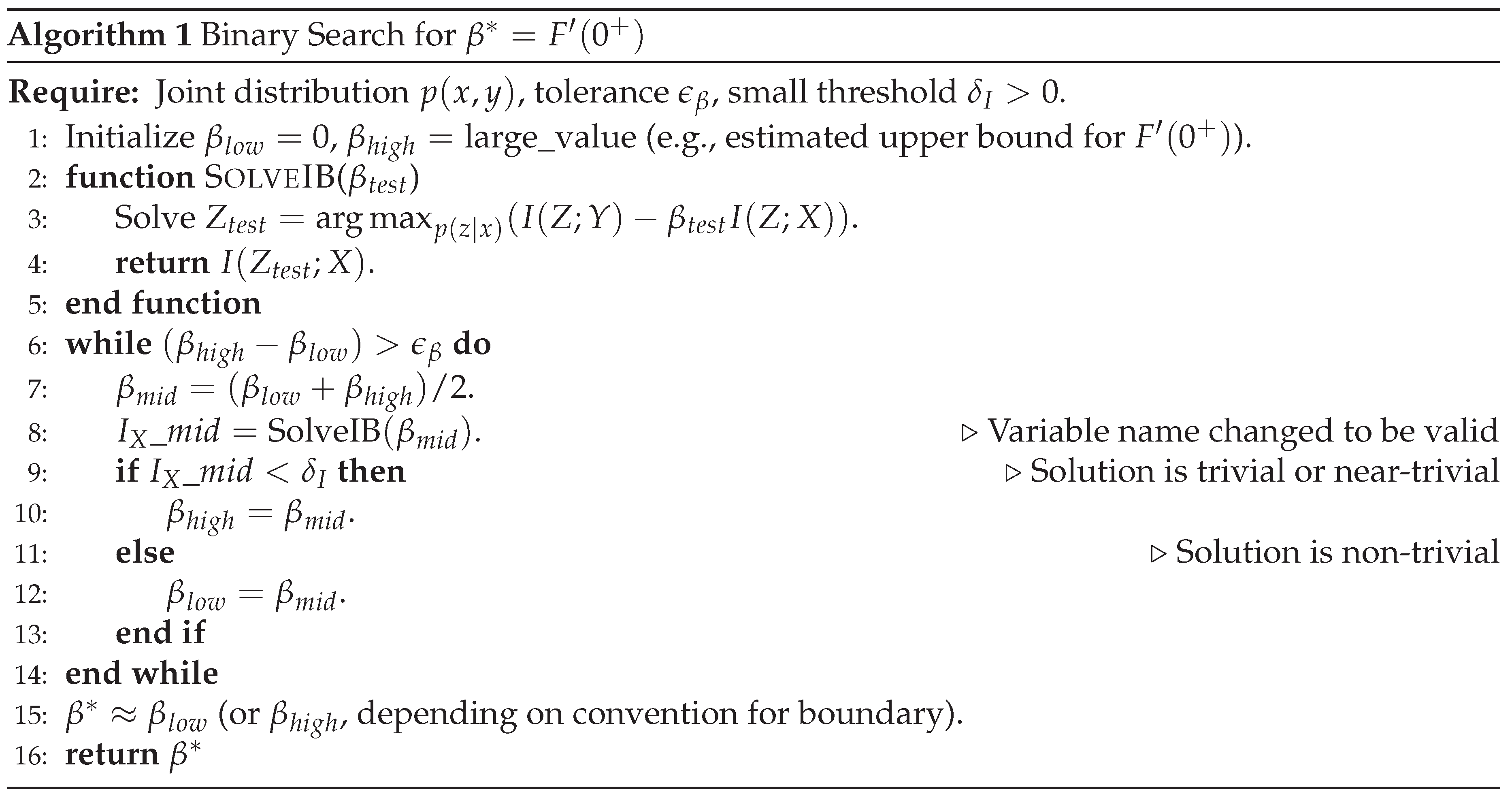

- Algorithmic Discussion and Complexity: I discuss how one can solve for β* in practice. I provide pseudo-code for a binary search procedure on β. I analyze the complexity of naïvely sweeping β versus more efficient methods that leverage my theoretical insights (e.g., using the properties of the IB Lagrangian to pinpoint β*). I also compare the computational complexity of VIB and NIB approaches.

- Comparisons of IB, VIB, and NIB: I provide a comparative analysis of how β* should be interpreted in standard IB theory versus in VIB and NIB implementations. I show, for example, that if the VIB approximation is tight, the β chosen in VIB corresponds closely to the true β*; however, if the variational bounds are loose [6], the effective trade-off might differ. In the NIB setting (e.g., DIB [4]), I examine how replacing the mutual information constraint with alternative penalties changes the β*-criterion. Formal propositions highlight these differences.
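The definition of β* rests on a short argument, sketched here under assumed notation that may differ from Section 3: F(r) denotes the IB curve, the largest I(Z;Y) attainable with I(X;Z) ≤ r, and β weights the compression term in the Lagrangian I(Z;Y) − β I(X;Z).

$$
\begin{aligned}
F(r) &= \max_{p(z\mid x)} \{\, I(Z;Y) : I(X;Z) \le r \,\}, \qquad
\beta^* = F'(0^+) = \lim_{r \to 0^+} \frac{F(r)}{r} \le 1, \\[4pt]
\beta > \beta^* &\;\Longrightarrow\; I(Z;Y) - \beta\, I(X;Z) \;\le\; F\big(I(X;Z)\big) - \beta\, I(X;Z) \;\le\; (\beta^* - \beta)\, I(X;Z) \;<\; 0 \quad \text{whenever } I(X;Z) > 0, \\[4pt]
\beta < \beta^* &\;\Longrightarrow\; \exists\, r > 0 : \; F(r) - \beta r > 0 .
\end{aligned}
$$

Because F is concave with F(0) = 0, the chord slope F(r)/r is non-increasing in r, so the limit exists and F(r) ≤ β* r for all r ≥ 0; this bound gives the second line, where every non-trivial encoder does worse than the trivial one (whose objective value is 0). The third line says that below β* some encoder with I(X;Z) > 0 beats the trivial one. The bound β* ≤ 1 follows from the data processing inequality I(Z;Y) ≤ I(X;Z) for the Markov chain Z – X – Y.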

2. Methodology

2.1. Background: Information Bottleneck Framework

2.2. Defining β* (Optimal Trade-off Parameter)

2.3. IB in VIB and NIB Settings

3. Theoretical Results

3.1. Properties of the IB Lagrangian and the Trade-off Curve

3.2. Existence and Characterization of β*

3.3. Algorithmic Implications and Complexity

- Sweep and Search: One can solve the IB optimization for a range of β values and observe where the solution transitions from non-trivial to trivial. Since the solution is trivial for every β > β* and non-trivial below it, the transition is monotone in β, so a binary search on β is more efficient. The complexity is roughly O(K · C_IB), where C_IB is the cost of solving the IB objective for a fixed β and K is the number of search iterations (e.g., K = ⌈log₂((β_max − β_min)/ε)⌉ for binary search with precision ε). For discrete X and Y, each Blahut–Arimoto-style iteration costs time polynomial in the alphabet sizes [1].

- Frontier Geometry Methods: If the IB curve (the maximal I(Z;Y) as a function of I(X;Z)) is known analytically (e.g., Gaussian IB [3]), β* can be computed directly as its slope at the origin. Alternatively, methods estimating the (hyper)contraction coefficient of the channel from X to Y can estimate this slope and thus β* (or 1/β*, depending on formulation) [9]. Multi-objective optimization techniques might generate the Pareto front, from which β* could be estimated [12].
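The sweep-and-search strategy above can be sketched for discrete X and Y. This is a minimal illustration under stated assumptions, not the pseudo-code referred to in the text: each inner solve iterates the self-consistent IB equations of [1] with inverse temperature 1/β (because β here weights I(X;Z)), and a value of β is declared "trivial" when the returned encoder has I(X;Z) below a hypothetical threshold `info_tol`. The helper names (`mutual_information`, `ib_blahut_arimoto`, `find_beta_star`) and all tolerances are assumptions. The bracket [0, 1] is valid because β* ≤ 1 by the data processing inequality. Since the solver starts near the trivial encoder, the search actually tracks where that encoder stops being a stable fixed point; for some distributions this may lie below β*, although the two coincide for the doubly symmetric binary source used in the usage note.

```python
import numpy as np


def mutual_information(p_ab):
    """I(A;B) in bits, computed from a joint probability table p(a, b)."""
    p_a = p_ab.sum(axis=1, keepdims=True)
    p_b = p_ab.sum(axis=0, keepdims=True)
    mask = p_ab > 0
    return float(np.sum(p_ab[mask] * np.log2(p_ab[mask] / (p_a @ p_b)[mask])))


def ib_blahut_arimoto(p_xy, beta, n_z=None, n_iter=2000, seed=0):
    """Approximately maximize I(Z;Y) - beta * I(X;Z) for discrete X, Y (sketch).

    Iterates the self-consistent IB equations with inverse temperature
    1 / beta, because in this convention beta weights the compression term.
    Returns the encoder p(z|x) as an (n_x, n_z) array.
    """
    rng = np.random.default_rng(seed)
    p_x = p_xy.sum(axis=1)
    p_y_x = p_xy / p_x[:, None]
    n_x = p_xy.shape[0]
    n_z = n_z or n_x
    # Start close to the trivial encoder p(z|x) = p(z), slightly perturbed.
    enc = np.clip(1.0 / n_z + 0.01 * rng.standard_normal((n_x, n_z)), 1e-12, None)
    enc /= enc.sum(axis=1, keepdims=True)
    for _ in range(n_iter):
        p_z = np.maximum(p_x @ enc, 1e-300)                     # p(z)
        p_y_z = (enc * p_x[:, None]).T @ p_y_x / p_z[:, None]    # p(y|z)
        # KL(p(y|x) || p(y|z)) for every pair (x, z), in nats.
        log_ratio = np.log(p_y_x[:, None, :] + 1e-300) - np.log(p_y_z[None, :, :] + 1e-300)
        kl = np.sum(p_y_x[:, None, :] * log_ratio, axis=2)
        logits = np.log(p_z)[None, :] - kl / beta
        logits -= logits.max(axis=1, keepdims=True)              # numerical stability
        enc = np.exp(logits)
        enc /= enc.sum(axis=1, keepdims=True)
    return enc


def find_beta_star(p_xy, lo=0.0, hi=1.0, tol=1e-3, info_tol=1e-6):
    """Binary search for the beta at which the IB solution becomes trivial.

    Assumed invariant: non-trivial at lo, trivial at hi. The defaults
    lo = 0 and hi = 1 bracket beta* since 0 <= beta* <= 1.
    """
    p_x = p_xy.sum(axis=1)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        enc = ib_blahut_arimoto(p_xy, mid)
        if mutual_information(p_x[:, None] * enc) > info_tol:
            lo = mid   # still informative: threshold lies above mid
        else:
            hi = mid   # collapsed: threshold lies at or below mid
    return 0.5 * (lo + hi)
```

For a doubly symmetric binary source with crossover probability p = 0.1, i.e. `p_xy = np.array([[0.45, 0.05], [0.05, 0.45]])`, the slope of the IB curve at the origin is (1 − 2p)² = 0.64, and `find_beta_star(p_xy)` should return a value close to it. Near the threshold the fixed-point iteration converges slowly, so `n_iter`, `tol`, and `info_tol` jointly limit the attainable precision.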
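For jointly Gaussian X and Y, the analysis in [3] expresses the critical trade-off values through the eigenvalues λᵢ of Σ_{x|y} Σ_x⁻¹, and 1 − λᵢ are the squared canonical correlations ρᵢ² between X and Y. When β weights I(X;Z), as here, the first transition is at β* = ρ₁², the largest squared canonical correlation; in the convention of [3], where β weights I(Z;Y), the critical value is the reciprocal 1/ρ₁². The sketch below assumes the covariance matrices are known and positive definite (in practice they would be estimated), and its function names are hypothetical; it reads ρ₁² off the singular values of the whitened cross-covariance Σ_x^{-1/2} Σ_xy Σ_y^{-1/2}.

```python
import numpy as np


def _inv_sqrt(s):
    """Inverse matrix square root of a symmetric positive-definite matrix."""
    w, v = np.linalg.eigh(s)
    return v @ np.diag(1.0 / np.sqrt(w)) @ v.T


def gaussian_beta_star(cov_x, cov_y, cov_xy):
    """beta* for jointly Gaussian (X, Y): the largest squared canonical correlation.

    cov_x: Cov(X), cov_y: Cov(Y), cov_xy: Cov(X, Y), all assumed known.
    """
    whitened = _inv_sqrt(cov_x) @ cov_xy @ _inv_sqrt(cov_y)
    rho = np.linalg.svd(whitened, compute_uv=False)   # canonical correlations
    return float(rho.max() ** 2)
```

With scalar X and Y of unit variance and correlation 0.8, `gaussian_beta_star(np.eye(1), np.eye(1), np.array([[0.8]]))` returns 0.64 up to floating-point rounding. Using the SVD of the whitened matrix avoids forming the non-symmetric product Σ_{x|y} Σ_x⁻¹ explicitly, but both routes give the same ρ₁².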

4. Discussion

4.1. β* in Variational IB (VIB)

- Approximation Error: VIB optimizes variational bounds: an upper bound on I(X;Z) and a lower bound on I(Z;Y). If these bounds are not tight, or if the parametric encoder/decoder families are not expressive enough, the VIB-optimized curve may differ from the true IB curve [6]. This can shift the empirically observed β at which collapse occurs.

- Collapse Phenomenon: VIB models are known to collapse: for too large β, the encoder learns to ignore x and outputs p(z|x) ≈ r(z) (the prior), so the rate term KL(p(z|x) ‖ r(z)), and with it the bound on I(X;Z), becomes approximately zero. This empirical collapse threshold in VIB is the analogue of the theoretical β*.

- Practical Estimation: Practitioners often find a suitable β by sweeping values and observing validation performance. The largest β that maintains good performance before a sharp drop could be considered an empirical estimate of a "useful" β*, which might be lower than the strict collapse threshold if some minimal level of I(Z;Y) is required.
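The collapse diagnostic and the sweep heuristic above can be sketched as follows, assuming a VIB encoder that outputs a diagonal Gaussian p(z|x) = N(μ(x), diag σ²(x)) and a standard normal prior r(z), as in [5]. The batch-averaged rate KL(p(z|x) ‖ r(z)) is the VIB upper bound on I(X;Z), so values near zero signal collapse. The selection rule (largest β whose validation score is within a relative tolerance of the best) is one hypothetical way to operationalize "the largest β that maintains good performance"; the function names, tolerances, and the sweep values in the usage note are illustrative, not results.

```python
import numpy as np


def vib_rate(mu, log_var):
    """Mean KL(N(mu, diag(exp(log_var))) || N(0, I)) over a batch, in nats.

    mu, log_var: arrays of shape (batch, latent_dim) produced by the encoder.
    """
    kl = 0.5 * np.sum(mu**2 + np.exp(log_var) - 1.0 - log_var, axis=1)
    return float(np.mean(kl))


def is_collapsed(mu, log_var, tol=1e-3):
    """Heuristic collapse check: the encoder's rate is (almost) zero."""
    return vib_rate(mu, log_var) < tol


def largest_useful_beta(betas, val_scores, rel_tol=0.02):
    """Largest beta whose validation score is within rel_tol of the best score.

    Assumes scores are non-negative and higher is better (e.g., accuracy).
    """
    betas = np.asarray(betas, dtype=float)
    scores = np.asarray(val_scores, dtype=float)
    ok = scores >= (1.0 - rel_tol) * scores.max()
    return float(betas[ok].max())
```

For instance, with a hypothetical sweep `betas = [1e-4, 1e-3, 1e-2, 1e-1, 1.0]` and made-up accuracies `[0.91, 0.91, 0.90, 0.62, 0.10]`, `largest_useful_beta` returns 0.01. A relative tolerance keeps the rule scale-free, but it should be replaced by an absolute criterion for metrics that can be negative, such as log-likelihoods.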

4.2. β* in Neural IB (NIB)

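- Deterministic IB (DIB): DIB optimizes I(Z;Y) − β H(Z), replacing the compression term I(X;Z) with the entropy H(Z). Since H(Z) = I(X;Z) + H(Z|X) ≥ I(X;Z), DIB penalizes an upper bound on I(X;Z). DIB tends to find deterministic encoders, for which H(Z|X) = 0 and the two terms coincide. A critical β for collapse also exists in DIB, but it may take a different numerical value than β* because of the different complexity term.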

- MI Estimators: Estimates of I(X;Z) obtained with neural MI estimators can be noisy, so detecting the exact β at which I(X;Z) (and thus, by the data processing inequality, I(Z;Y)) truly vanishes can be hard. However, the principle of a collapse threshold remains.
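The relationship between the IB and DIB complexity terms can be checked numerically for a known discrete joint distribution. The sketch below, with hypothetical helper names, computes H(Z), I(X;Z), and H(Z|X) for an encoder p(z|x), reflecting H(Z) = I(X;Z) + H(Z|X) (so H(Z) ≥ I(X;Z), with equality exactly for deterministic encoders), and evaluates the DIB-style objective I(Z;Y) − β H(Z) next to the IB objective I(Z;Y) − β I(X;Z). Because it uses exact plug-in quantities rather than neural estimates, it sidesteps the estimator noise mentioned above.

```python
import numpy as np


def entropy(p):
    """Shannon entropy in bits of a probability vector."""
    p = p[p > 0]
    return float(-np.sum(p * np.log2(p)))


def complexity_terms(p_x, enc):
    """Return (H(Z), I(X;Z), H(Z|X)) in bits for an encoder enc[x, z] = p(z|x)."""
    p_z = p_x @ enc
    h_z = entropy(p_z)
    h_z_given_x = float(sum(p_x[i] * entropy(enc[i]) for i in range(len(p_x))))
    return h_z, h_z - h_z_given_x, h_z_given_x


def relevance(p_xy, enc):
    """I(Z;Y) in bits, using the Markov chain Z - X - Y."""
    p_zy = enc.T @ p_xy                      # p(z, y) = sum_x p(z|x) p(x, y)
    p_z = p_zy.sum(axis=1, keepdims=True)
    p_y = p_zy.sum(axis=0, keepdims=True)
    mask = p_zy > 0
    return float(np.sum(p_zy[mask] * np.log2(p_zy[mask] / (p_z @ p_y)[mask])))


def ib_and_dib_objectives(p_xy, enc, beta):
    """Return (IB, DIB) = (I(Z;Y) - beta*I(X;Z), I(Z;Y) - beta*H(Z))."""
    h_z, i_xz, _ = complexity_terms(p_xy.sum(axis=1), enc)
    i_zy = relevance(p_xy, enc)
    return i_zy - beta * i_xz, i_zy - beta * h_z
```

For a deterministic encoder such as the identity, H(Z|X) = 0 and the two objectives agree; for a stochastic encoder the DIB objective is strictly lower whenever β > 0, which is why DIB pushes solutions toward deterministic encoders.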

4.3. Generalization and Robustness Considerations

4.4. Multi-Target or Multi-Layer Extensions

4.5. Limitations and Assumptions

- Concavity and differentiability of the IB curve: For some distributions, the IB curve might have kinks or linear segments. β*, defined as its slope at the origin, still exists (as a one-sided derivative).

- Existence of optimal encoders: The theoretical IB analysis assumes that optimal encoders exist. In practice (VIB/NIB), model capacity and optimization are critical: if models are too restricted, apparent collapse might occur earlier, due to capacity limits rather than to β* itself.
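As an illustration of computing β* as a one-sided derivative when the IB curve is known, consider the doubly symmetric binary source: X uniform on {0, 1} and Y = X ⊕ N with N ~ Bernoulli(p). Its IB curve is traced by binary test channels Z = X ⊕ M with M ~ Bernoulli(q), q ∈ [0, 1/2), giving I(X;Z) = 1 − h(q) and I(Z;Y) = 1 − h(q(1 − p) + (1 − q)p), where h is the binary entropy (optimality of this family follows from Mrs. Gerber's Lemma). For a concave curve through the origin the chord slope I(Z;Y)/I(X;Z) is non-increasing in I(X;Z), so evaluating it at points approaching the origin (q → 1/2) approaches the one-sided slope from below; for this source the limit is (1 − 2p)². The function names below are hypothetical.

```python
import numpy as np


def h2(q):
    """Binary entropy h(q) in bits, for 0 < q < 1."""
    return float(-q * np.log2(q) - (1.0 - q) * np.log2(1.0 - q))


def dsbs_ib_point(q, p):
    """(I(X;Z), I(Z;Y)) in bits for the test channel Z = X xor Bernoulli(q)."""
    conv = q * (1.0 - p) + (1.0 - q) * p   # crossover probability of the cascade Z -> Y
    return 1.0 - h2(q), 1.0 - h2(conv)


def chord_slopes(p, deltas=(1e-1, 1e-2, 1e-3)):
    """Chord slopes I(Z;Y) / I(X;Z) at q = 1/2 - delta, approaching the origin."""
    slopes = []
    for d in deltas:
        r, f = dsbs_ib_point(0.5 - d, p)
        slopes.append(f / r)
    return slopes
```

For p = 0.1, `chord_slopes(0.1)` yields an increasing sequence that approaches (1 − 2p)² = 0.64 from below, consistent with the binary-search estimate for the same source.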

5. Conclusion

- β* exists and is unique for a given X–Y distribution, identifiable as the slope of the IB curve at the origin (Theorem 2). It marks the point beyond which any further increase in the compression penalty leads to a complete loss of information.

- The IB trade-off curve is concave (Theorem 1), ensuring a well-behaved relationship between β (as the slope of the curve) and the optimal information measures I(X;Z) and I(Z;Y).

- At β*, the representation is maximally compressed while potentially retaining the initial, most "efficient" bits of information about Y (Theorem 3). This is distinct from concepts like minimal sufficiency for Y (i.e., I(Z;Y) = I(X;Y)), which occurs at the other end of the IB curve (typically β → 0).

- Algorithmic approaches, such as binary search over β (Algorithm ), can be used to estimate β* in practice.

- The interpretation of β* extends to VIB and NIB, where analogous collapse phenomena are observed, though the exact value may be affected by approximations or alternative objective formulations.

Directions for future work include:

- Developing adaptive algorithms that dynamically tune β towards β* (or a desired point relative to β*) during training.

- Investigating robust estimation of β* from finite samples, especially in high-dimensional settings.

- Extending the theory of β-optimization to more complex scenarios, such as multi-target IB, sequential IB (e.g., for time-series data or reinforcement learning), or hierarchical IB in deep networks.

- Exploring the relationship between β* and other notions of an "optimal" β, such as one corresponding to the "knee" of the IB curve or one optimizing generalization performance on a validation set. While β* is a mathematically precise critical point, other definitions might be more relevant for specific practical goals.

References

1. Tishby, N., Pereira, F.C., Bialek, W. (2000). The information bottleneck method. Proc. of the 37th Allerton Conference on Communication, Control, and Computing.

2. Shamir, O., Sabato, S., Tishby, N. (2010). Learning and Generalization with the Information Bottleneck. In Proc. of the 2010 IEEE Information Theory Workshop (ITW).

3. Chechik, G., Globerson, A., Tishby, N., Weiss, Y. (2005). Information bottleneck for Gaussian variables. Journal of Machine Learning Research, 6:165–188.

4. Strouse, D.J., Schwab, D.J. (2017). The Deterministic Information Bottleneck. Neural Computation, 29(6):1611–1630.

5. Alemi, A.A., Fischer, I., Dillon, J.V., Murphy, K. (2017). Deep Variational Information Bottleneck. Proc. of the International Conference on Learning Representations (ICLR).

6. Kolchinsky, A., Tracey, B.D., Wolpert, D.H. (2019). Nonlinear Information Bottleneck. Entropy, 21(12):1181.

7. Kolchinsky, A., Tracey, B.D., Van Kuyk, S. (2019). Caveats for Information Bottleneck in deterministic scenarios. Proc. of the International Conference on Learning Representations (ICLR).

8. Rodríguez Gálvez, B., Thobaben, R., Skoglund, M. (2020). The Convex Information Bottleneck Lagrangian. Entropy, 22(1):98.

9. Wu, T., Fischer, I., Chuang, I.L., Tegmark, M. (2019). Learnability for the Information Bottleneck. Entropy, 21(10):924.

10. Belghazi, M.I., Baratin, A., Rajeswar, S., Ozair, S., Bengio, Y., Courville, A., Hjelm, D. (2018). MINE: Mutual Information Neural Estimation. Proc. of the International Conference on Machine Learning (ICML).

11. Achille, A., Soatto, S. (2018). Emergence of Invariance and Disentanglement in Deep Representations. Journal of Machine Learning Research, 19(54):1–34.

12. Zhao, Z., Liu, Y., Peng, X., Li, Y., Liu, T., Tao, D. (2024). Exploring Complex Trade-offs in Information Bottleneck through Multi-Objective Optimization. arXiv preprint. arXiv:2310.00789.

13. Liu, Y., Zhao, Z., Peng, X., Liu, T., Tao, D. (2023). Exploring the Trade-Off in the Variational Information Bottleneck for Regression with a Single Training Run. Entropy, 25(7):1043. DOI: 10.3390/e25071043.

14. Cover, T.M., Thomas, J.A. (2012). Elements of Information Theory. Wiley, 2nd Ed.

15. Witsenhausen, H.S., Wyner, A.D. (1975). A conditional entropy bound for a pair of discrete random variables. IEEE Transactions on Information Theory, 21(5):493–501.

16. Shwartz-Ziv, R., Tishby, N. (2017). Opening the Black Box of Deep Neural Networks via Information. arXiv preprint. arXiv:1703.00810.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).