Submitted: 25 April 2025

Posted: 28 April 2025


Abstract

Keywords:

1. Introduction

2. Materials and Methods

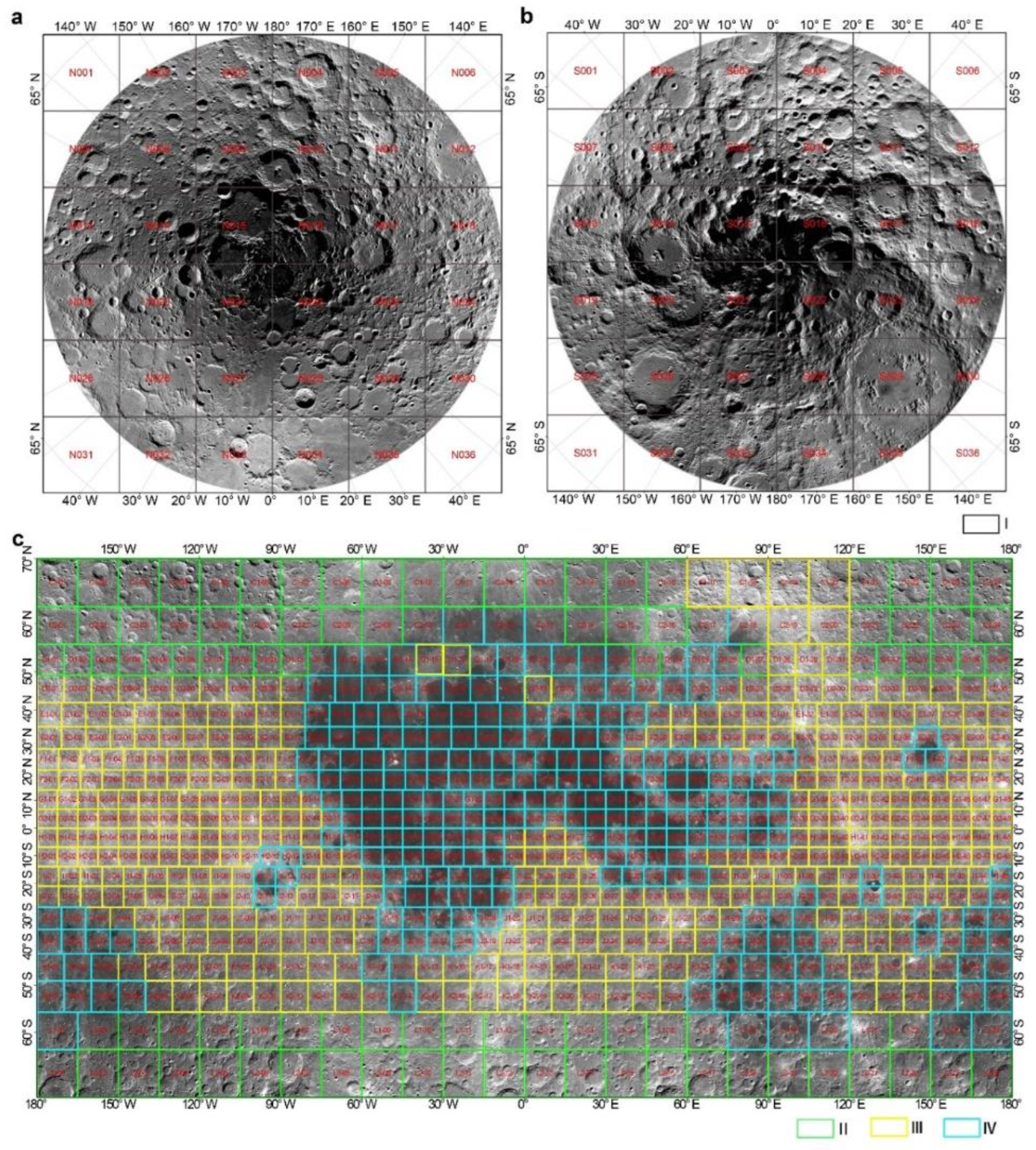

2.1. Study Regions

2.1.1. Lunar Image Classification

- Polar Regions (I): Complex terrain with rugged mountains and abundant craters, many of which are circular or elliptical. These areas exhibit varying albedo, with lower reflectivity near the poles and brighter surfaces in surrounding areas.

- High-Latitude Highlands (II): Characterized by dramatic topographic changes, including mountains, canyons, and slopes, with higher reflectivity compared to mare regions. Craters in these areas are relatively larger and exhibit complex shapes.

- Mid-Low Latitude Highlands (III): Defined by undulating terrain influenced by lava eruptions, volcanic activity, and magma intrusion, leading to altered crater morphology.

- Lunar Mare Regions (IV): Flat regions covered by extensive basaltic lava flows, with relatively low reflectivity. Craters in these areas are generally circular or elliptical with distinct outer walls, lacking significant central peaks or hills.

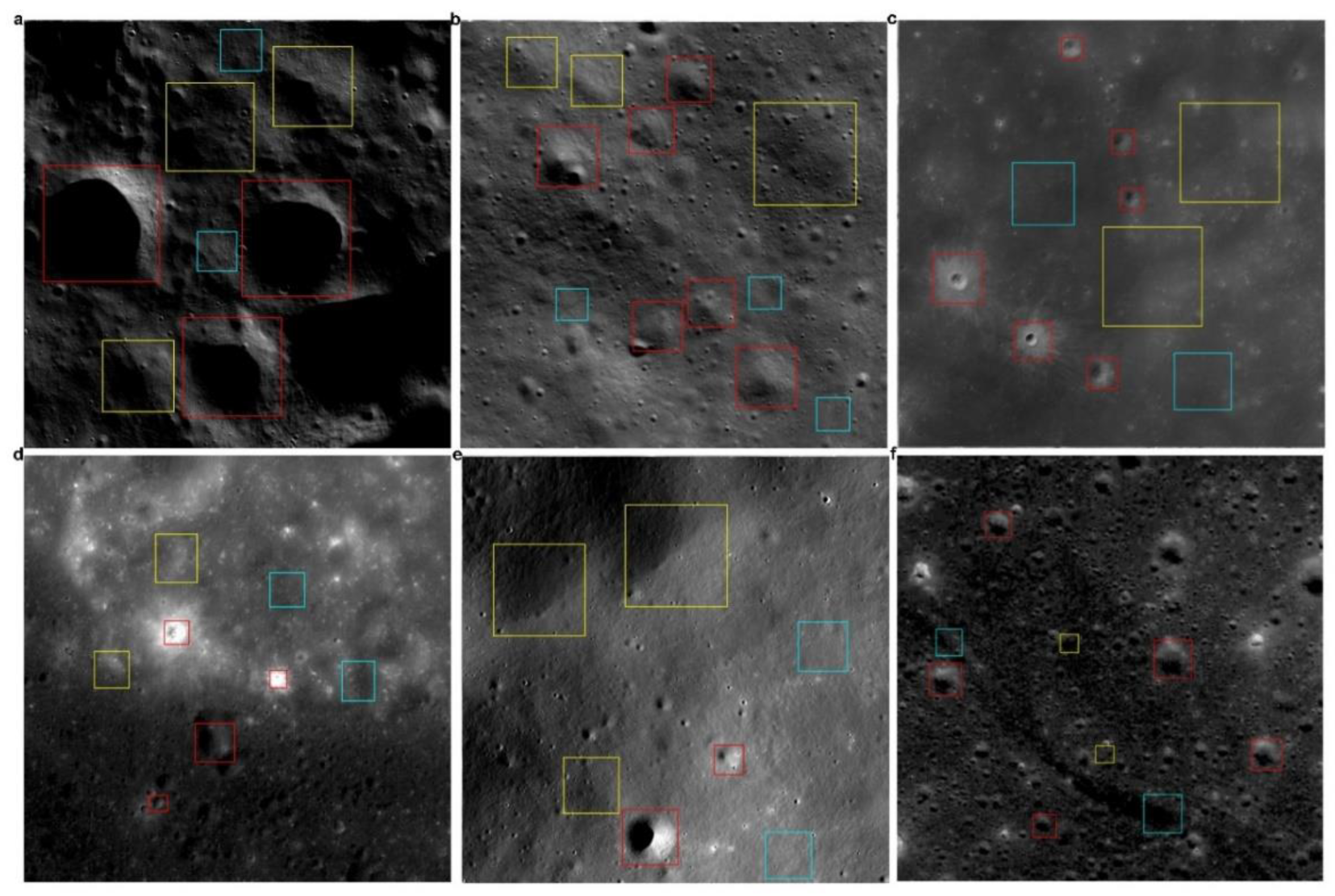

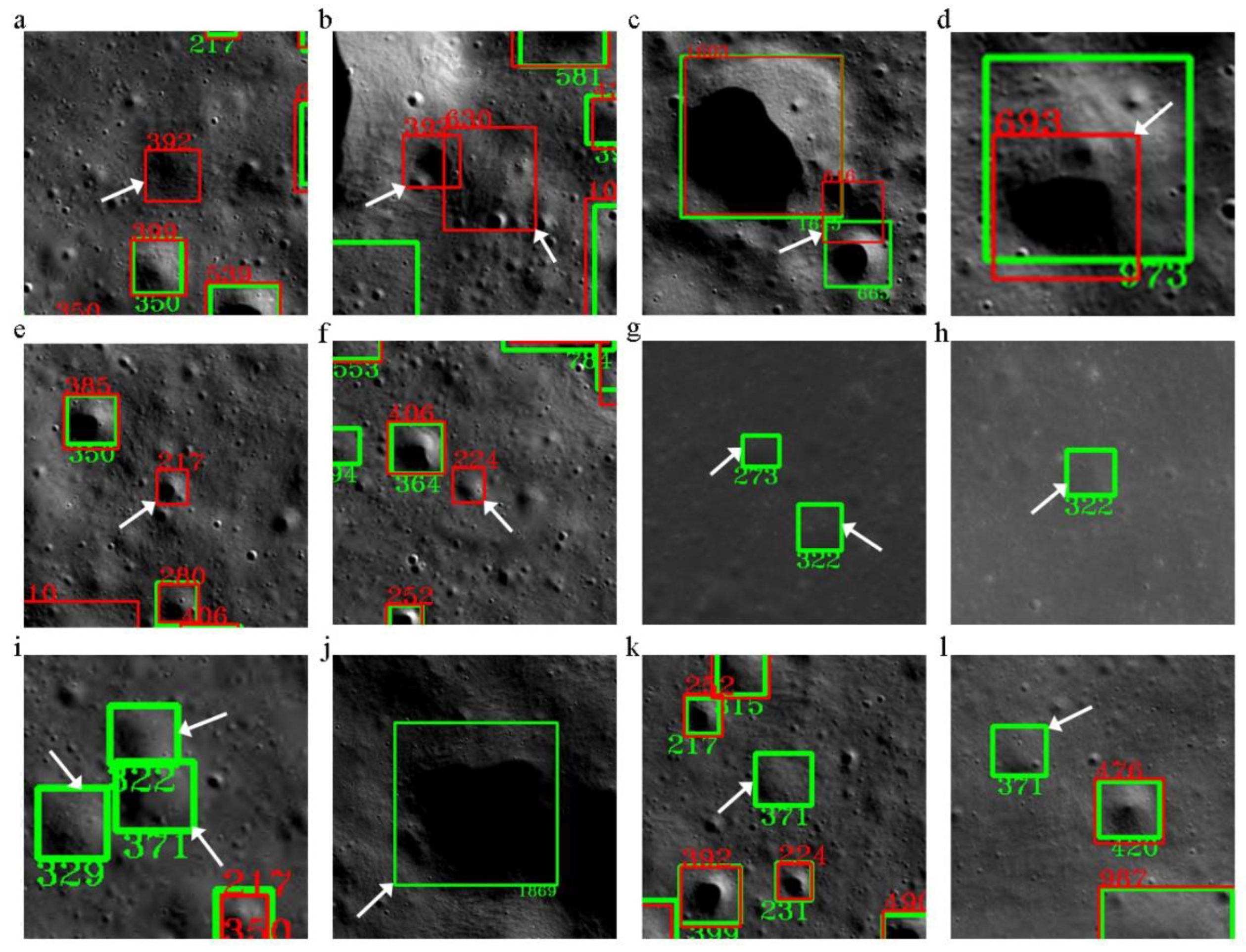

2.1.2. Crater Annotation Rules

- Class A (Definite craters): Craters with clear boundaries and well-preserved morphology.

- Class B (Probable craters): Craters with ambiguous boundaries, requiring subjective interpretation.

- Class C (Non-craters): Geological features that are definitively not craters.

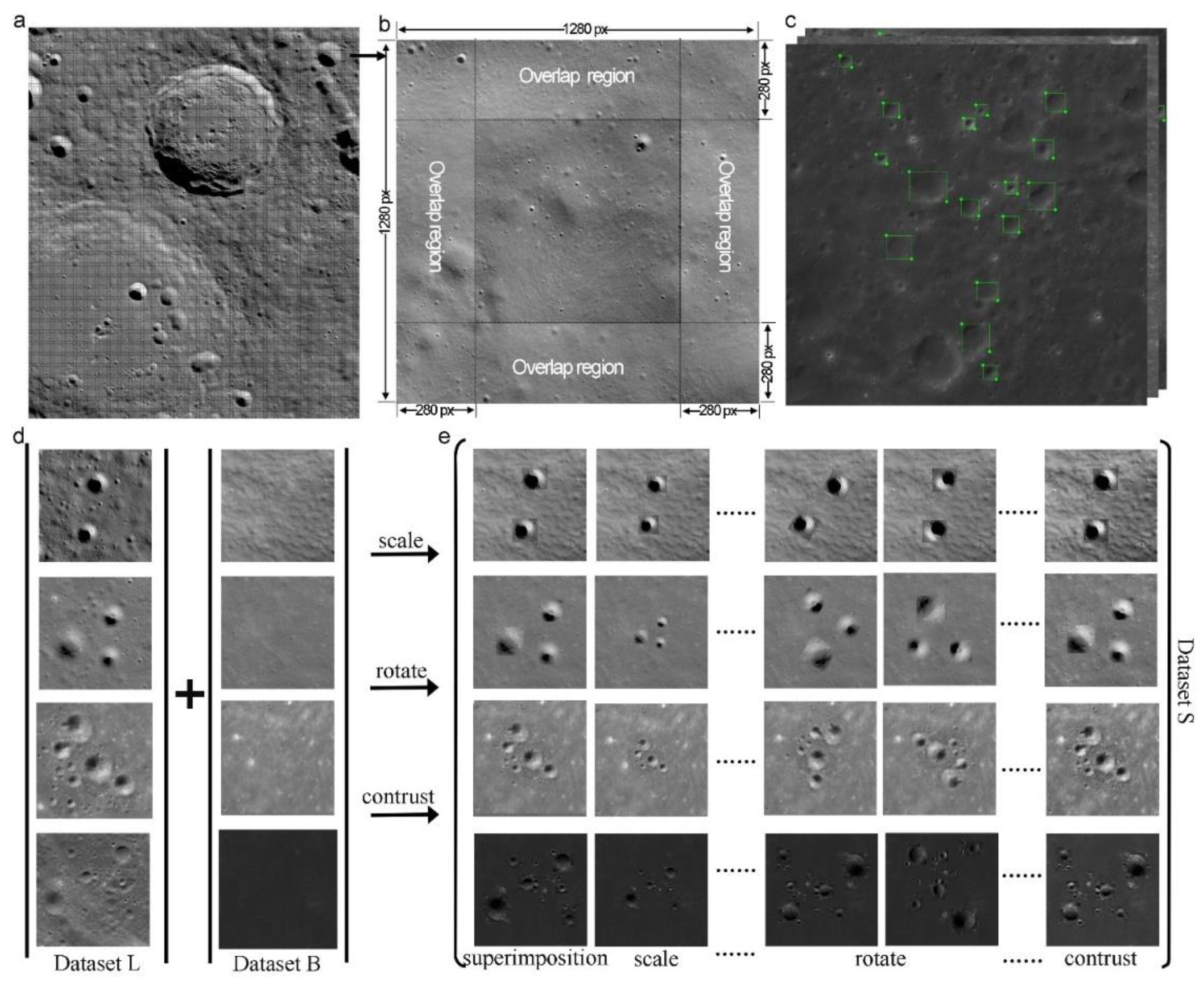

2.1.3. Sample Synthesis and Data Augmentation

2.1.4. Dataset Construction and Partitioning

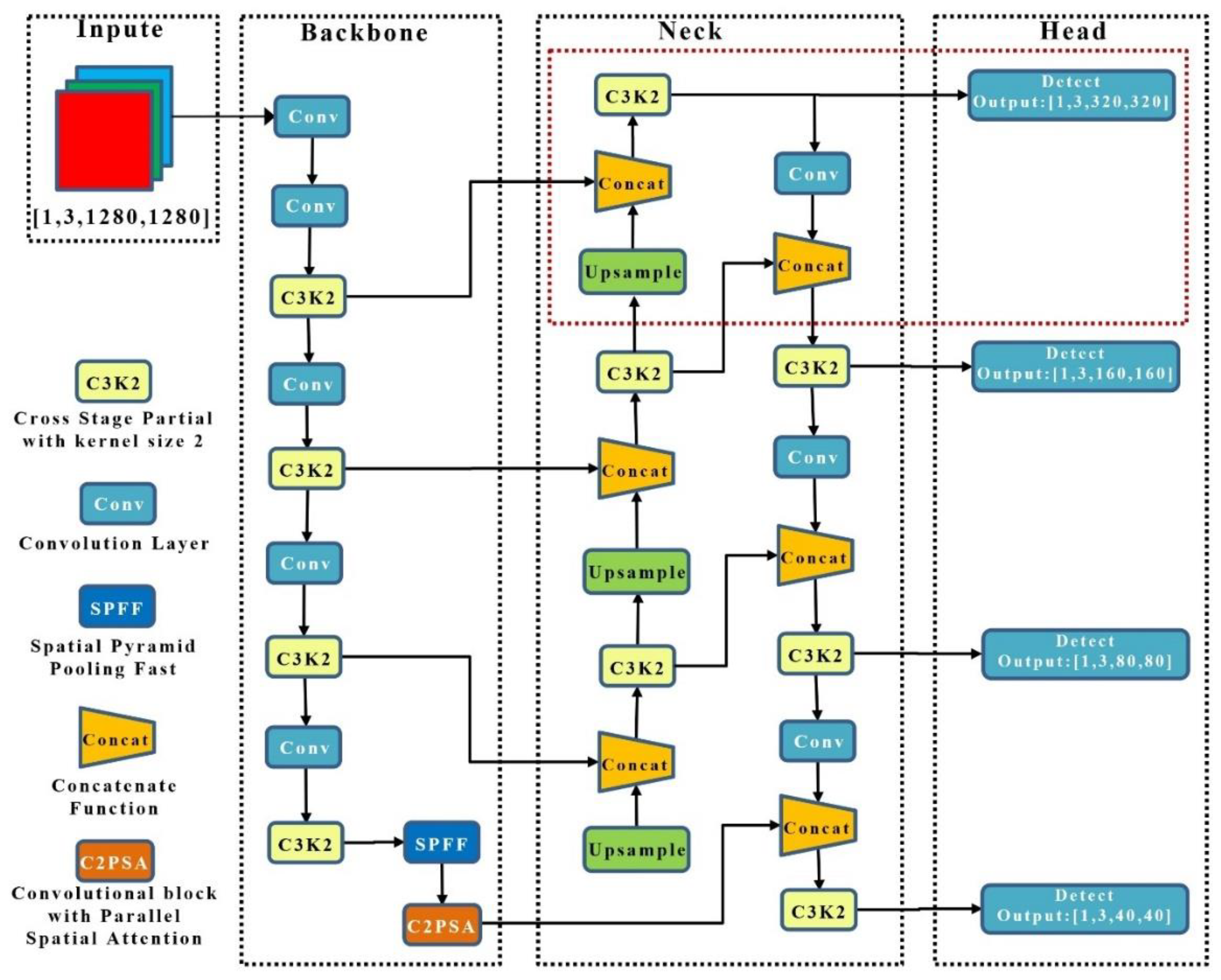

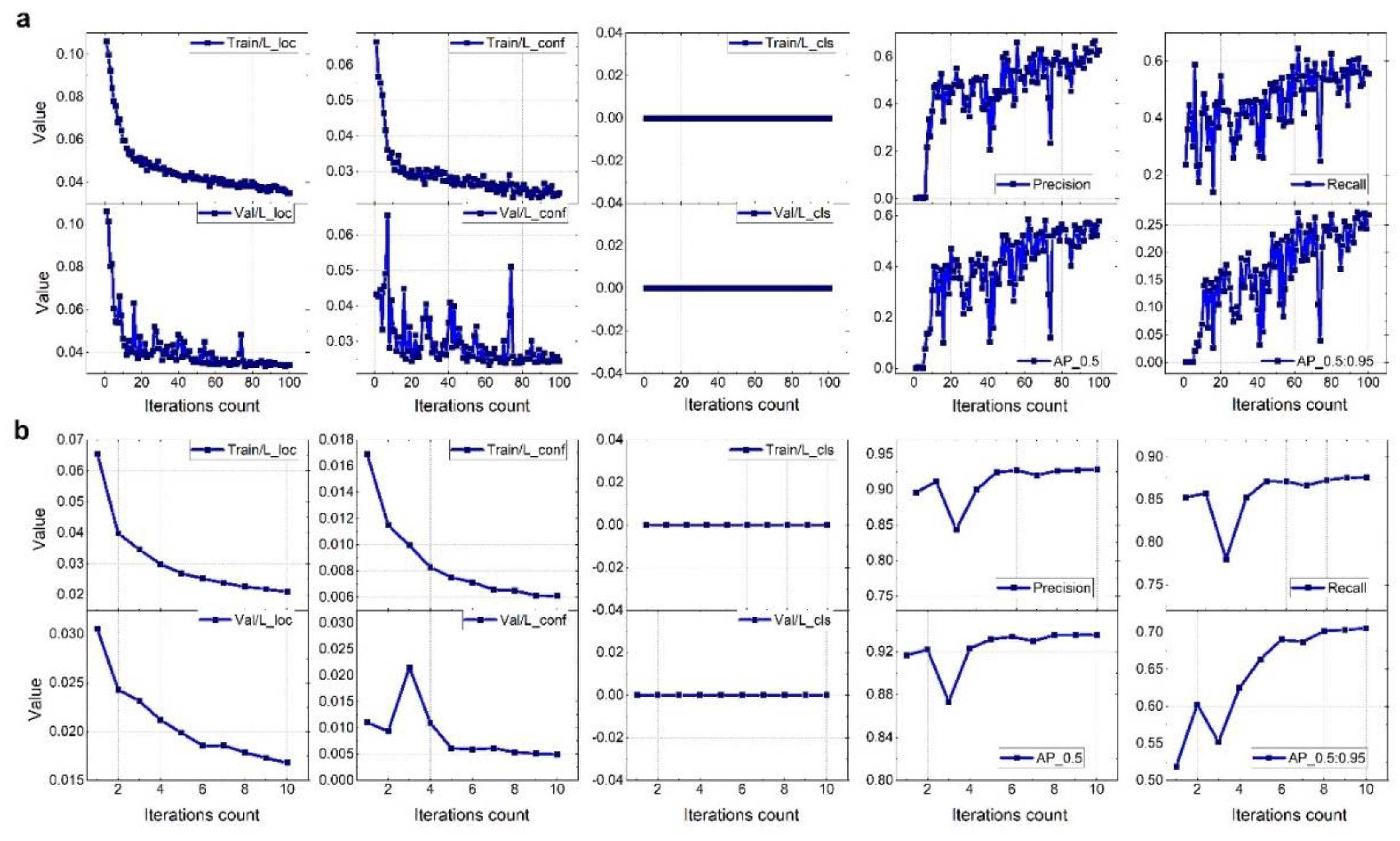

2.2. YOLO-SCNet Detection Method

2.2.1. YOLO-SCNet Architecture and Key Features

2.2.2. Design and Optimization of the Small Object Detection Head

- Classification loss (Lcls): Measures the discrepancy between predicted and true class labels.

- Localization loss (Lloc): Evaluates the difference between predicted and true bounding box coordinates.

- Confidence loss (Lconf): Assesses the accuracy of the model’s confidence predictions for bounding boxes.

- λ1, λ2, λ3: Dynamically adjusted weight coefficients.
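The components above combine into a single training objective. The exact formula and the rule for adjusting the weights are not given in this section, so the sketch below assumes the pairing λ1 → Lcls, λ2 → Lloc, λ3 → Lconf and uses a hypothetical inverse-magnitude scheme purely to illustrate what "dynamically adjusted" weights could look like; it is not the YOLO-SCNet implementation.

```python
from typing import List, Sequence


def total_loss(l_cls: float, l_loc: float, l_conf: float,
               weights: Sequence[float]) -> float:
    """Weighted detection loss, assuming L = λ1*Lcls + λ2*Lloc + λ3*Lconf."""
    lam1, lam2, lam3 = weights
    return lam1 * l_cls + lam2 * l_loc + lam3 * l_conf


def inverse_magnitude_weights(running_means: Sequence[float],
                              eps: float = 1e-8) -> List[float]:
    """Hypothetical dynamic scheme (not from the paper): weight each term by the
    inverse of its recent average so all terms contribute similarly, then rescale
    so the weights sum to the number of terms."""
    inv = [1.0 / (m + eps) for m in running_means]
    scale = len(inv) / sum(inv)
    return [w * scale for w in inv]
```

In a training loop, the running means would be updated from recent batches and the weights recomputed periodically before calling `total_loss`; whether YOLO-SCNet uses a scheme of this kind is not stated in the text.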

2.2.3. Model Training and Optimization

- Model Configuration and Training Environment: Experiments were conducted with PyTorch 1.12 on a high-performance workstation equipped with two 16-core Intel Xeon 6346 processors, two NVIDIA GeForce RTX 3090 Ti GPUs (24 GB each), and 256 GB of memory, running Ubuntu 20.04.5. Key training parameters were an input size of 1280×1280, a batch size of 8, an initial learning rate of 0.01, a momentum of 0.937, and a weight decay of 0.0005.

- Dataset Partitioning: The final dataset comprises 80,607 samples collected from four distinct lunar regions (polar regions, high-latitude highlands, mid-low latitude highlands, and lunar mare). To keep training, validation, and testing comparable, it was partitioned into training, validation, and testing sets in an 8:1:1 ratio so that each subset covers the different lunar terrains and lighting conditions, providing a comprehensive test of the model's generalization capability.
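The text does not say how the 8:1:1 split was drawn. One way to guarantee that every subset contains all four terrain types is to split each region separately. The sketch below assumes each sample carries a region label (the labels "I" to "IV" are illustrative), uses integer ratios to avoid floating-point rounding, and picks an arbitrary seed; it is not the authors' partitioning code.

```python
import random
from collections import defaultdict
from typing import Hashable, List, Sequence, Tuple


def stratified_split(region_labels: Sequence[Hashable],
                     ratios: Tuple[int, int, int] = (8, 1, 1),
                     seed: int = 0) -> Tuple[List[int], List[int], List[int]]:
    """Split sample indices into train/val/test, stratified by region label."""
    by_region = defaultdict(list)
    for idx, region in enumerate(region_labels):
        by_region[region].append(idx)

    rng = random.Random(seed)
    total = sum(ratios)
    train, val, test = [], [], []
    for region in sorted(by_region, key=str):  # deterministic region order
        idxs = by_region[region]
        rng.shuffle(idxs)
        n_train = len(idxs) * ratios[0] // total
        n_val = len(idxs) * ratios[1] // total
        train.extend(idxs[:n_train])
        val.extend(idxs[n_train:n_train + n_val])
        test.extend(idxs[n_train + n_val:])  # rounding remainder goes to test
    return train, val, test
```

Applied to the 80,607 samples, an 8:1:1 split gives roughly 64,500 training, 8,060 validation, and 8,060 test samples; the exact counts depend on per-region rounding.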

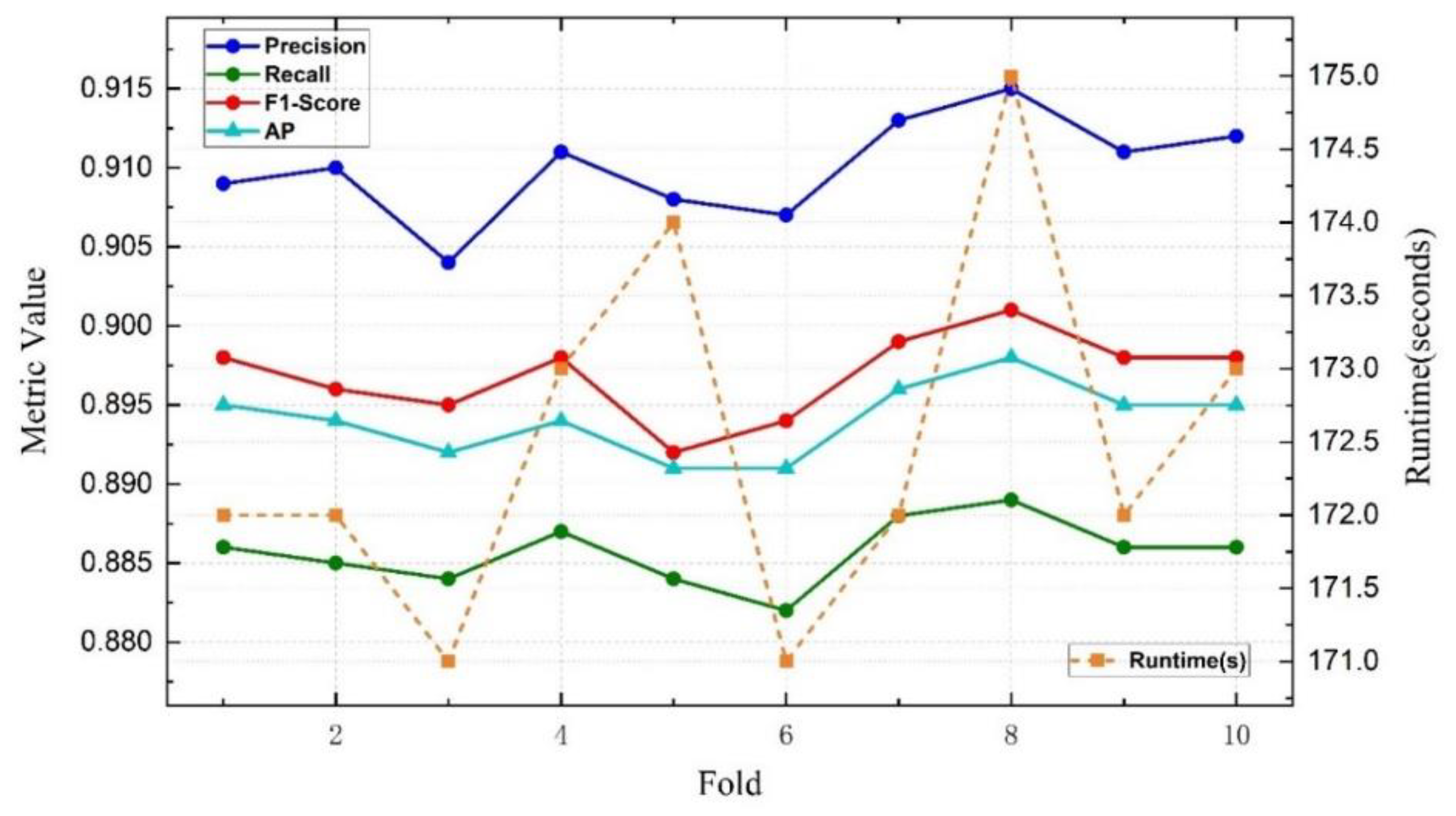

- Training Strategies: To adapt the model to lunar crater detection, the following strategies were employed: 1) Fine-Tuning Pre-Trained Weights: the model was initialized with ImageNet pre-trained weights and fine-tuned to the specific features of lunar craters. 2) Adaptive Learning Rate Scheduling: the learning rate was adjusted dynamically to accelerate convergence and reduce the risk of overfitting. 3) k-Fold Cross-Validation: 10-fold cross-validation was used to evaluate the model's stability across different data partitions and to reduce the bias that a single split could introduce. 4) Robustness Validation with Augmented Data: robustness was tested on augmented datasets with variations in noise, contrast, and lighting that simulate real-world imaging conditions. 5) Focused Small Crater Detection: special attention was given to craters smaller than 200 meters in diameter to ensure the model's suitability for global lunar mapping and planetary geological studies.

- Post-Processing and Result Optimization: During detection, the model's predictions were refined with thresholding and Non-Maximum Suppression (NMS). Detections below a confidence threshold were discarded, and NMS removed overlapping bounding boxes, keeping only the highest-confidence box in each overlapping group.
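Two steps in the list above, the 10-fold cross-validation and the confidence-threshold plus NMS post-processing, can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' code: the shuffling seed, the box format (x1, y1, x2, y2), and the thresholds `conf_thresh=0.25` and `iou_thresh=0.45` are hypothetical placeholders, since the text does not report the values used.

```python
import random
from typing import Iterator, List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), assumed pixel coordinates


def k_fold_indices(n_samples: int, k: int = 10, seed: int = 0
                   ) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_idx, held_out_idx) for each of k folds; fold sizes differ by at most 1."""
    if not 2 <= k <= n_samples:
        raise ValueError("need 2 <= k <= n_samples")
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    start = 0
    for fold in range(k):
        size = n_samples // k + (1 if fold < n_samples % k else 0)
        held_out = idx[start:start + size]
        train = idx[:start] + idx[start + size:]
        yield train, held_out
        start += size


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def threshold_and_nms(boxes: Sequence[Box], scores: Sequence[float],
                      conf_thresh: float = 0.25, iou_thresh: float = 0.45) -> List[int]:
    """Drop low-confidence detections, then apply greedy NMS; returns kept indices, best first."""
    candidates = sorted((i for i, s in enumerate(scores) if s >= conf_thresh),
                        key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in candidates:
        # Keep a box only if it does not overlap too much with any already-kept box.
        if all(iou(boxes[i], boxes[j]) < iou_thresh for j in keep):
            keep.append(i)
    return keep
```

Per-fold metrics from `k_fold_indices` can then be summarized as mean ± standard deviation, as in the cross-validation table. In practice a vectorized routine such as `torchvision.ops.nms` would replace the greedy loop.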

2.2.4. Performance Evaluation

- Precision (P): Measures the proportion of correctly identified craters among all predictions.

- Recall (R): Indicates the proportion of true craters detected by the model.

- F1-Score (F1): The harmonic mean of precision and recall, providing a balanced measure of performance.

- Average Precision (AP): Represents the area under the precision-recall curve across various IoU thresholds, reflecting localization and classification accuracy.

- Area Under the Curve (AUC): Assesses the model’s ability to distinguish between true and false detections across different confidence levels.
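The first four metrics can be computed directly from matched detections. The sketch below is a minimal illustration, not the authors' evaluation code: it assumes detections have already been matched to ground-truth craters at a fixed IoU threshold (for example IoU ≥ 0.5, as in the result tables) and implements the all-point interpolated AP used by PASCAL VOC from 2010 onward; averaging its output over several IoU thresholds gives the multi-threshold variant. AUC is not covered.

```python
from typing import Sequence, Tuple


def prf1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall, and F1 (harmonic mean of P and R) from detection counts."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1


def average_precision(scores: Sequence[float], is_tp: Sequence[bool], n_gt: int) -> float:
    """All-point interpolated AP at a single IoU threshold.

    scores: confidence of each detection; is_tp: whether that detection matched a
    ground-truth crater; n_gt: number of ground-truth craters (must be > 0)."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp_cum = fp_cum = 0
    recall, precision = [], []
    for i in order:
        if is_tp[i]:
            tp_cum += 1
        else:
            fp_cum += 1
        recall.append(tp_cum / n_gt)
        precision.append(tp_cum / (tp_cum + fp_cum))
    # Add sentinels, then make precision non-increasing from right to left (the envelope).
    mrec = [0.0] + recall + [1.0]
    mpre = [0.0] + precision + [0.0]
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangle areas wherever recall increases.
    return sum((mrec[i] - mrec[i - 1]) * mpre[i] for i in range(1, len(mrec)))
```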

3. Experimental Results

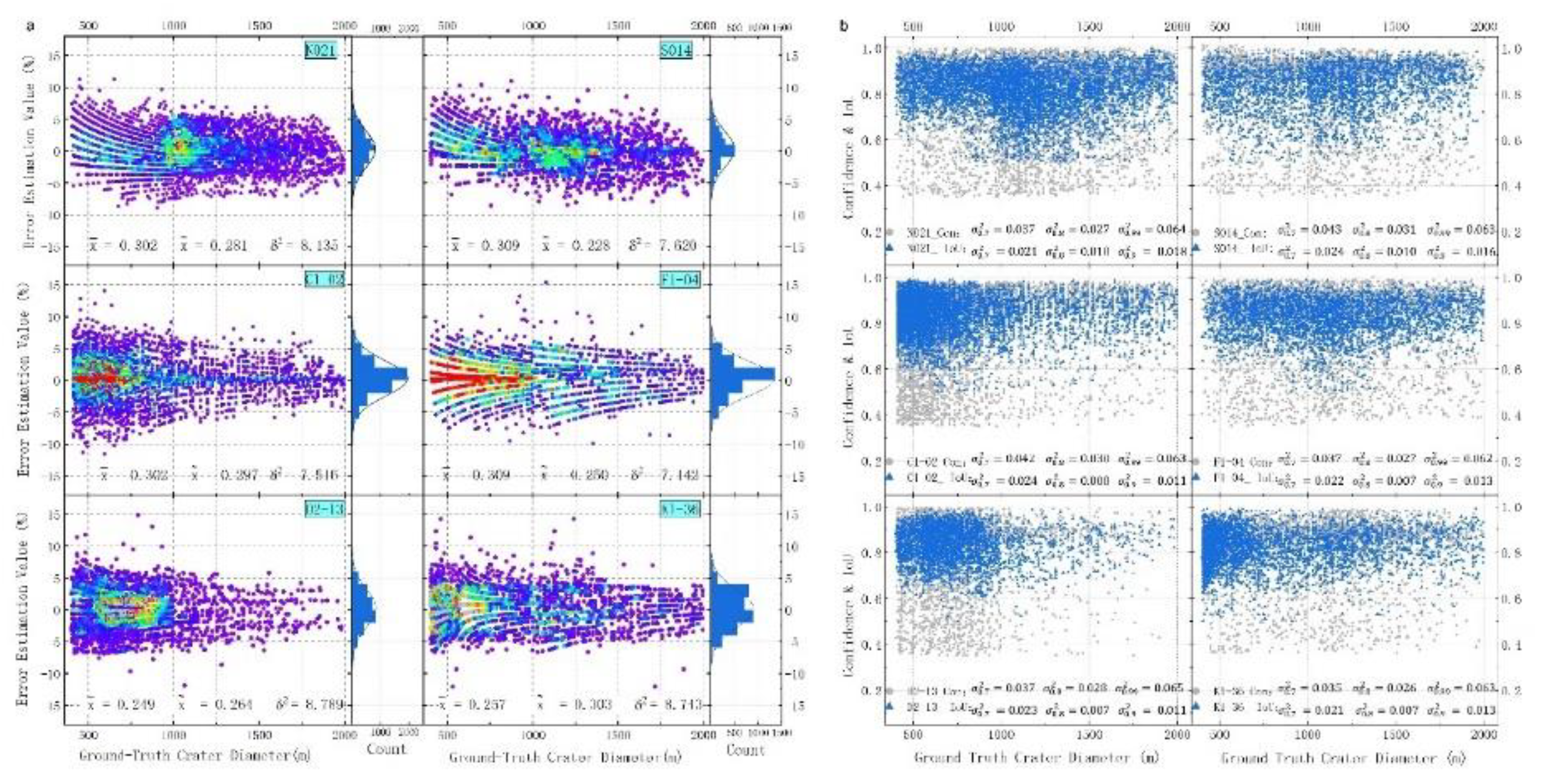

3.1. Ground Truth Data Preparation and Independent Regional Testing

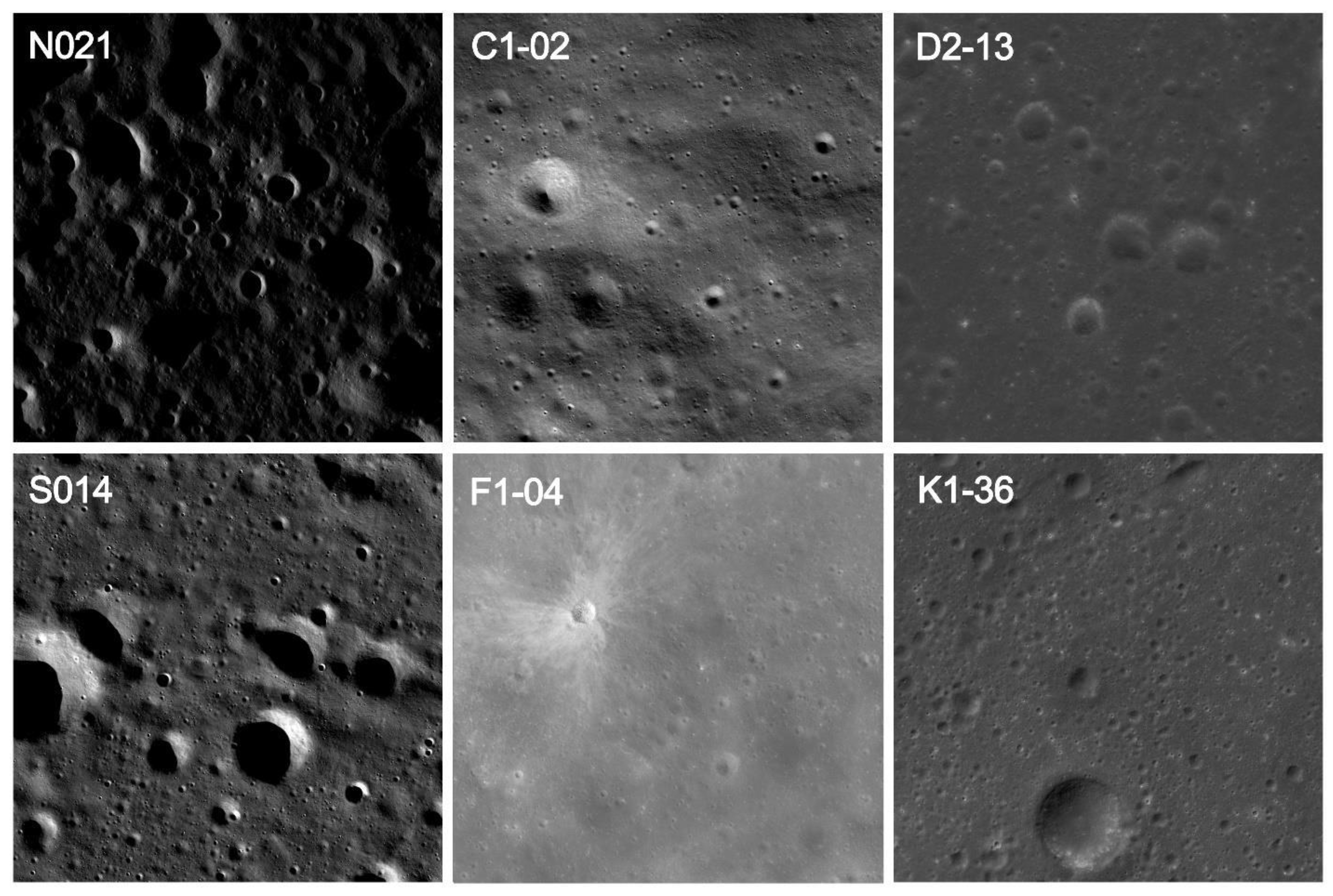

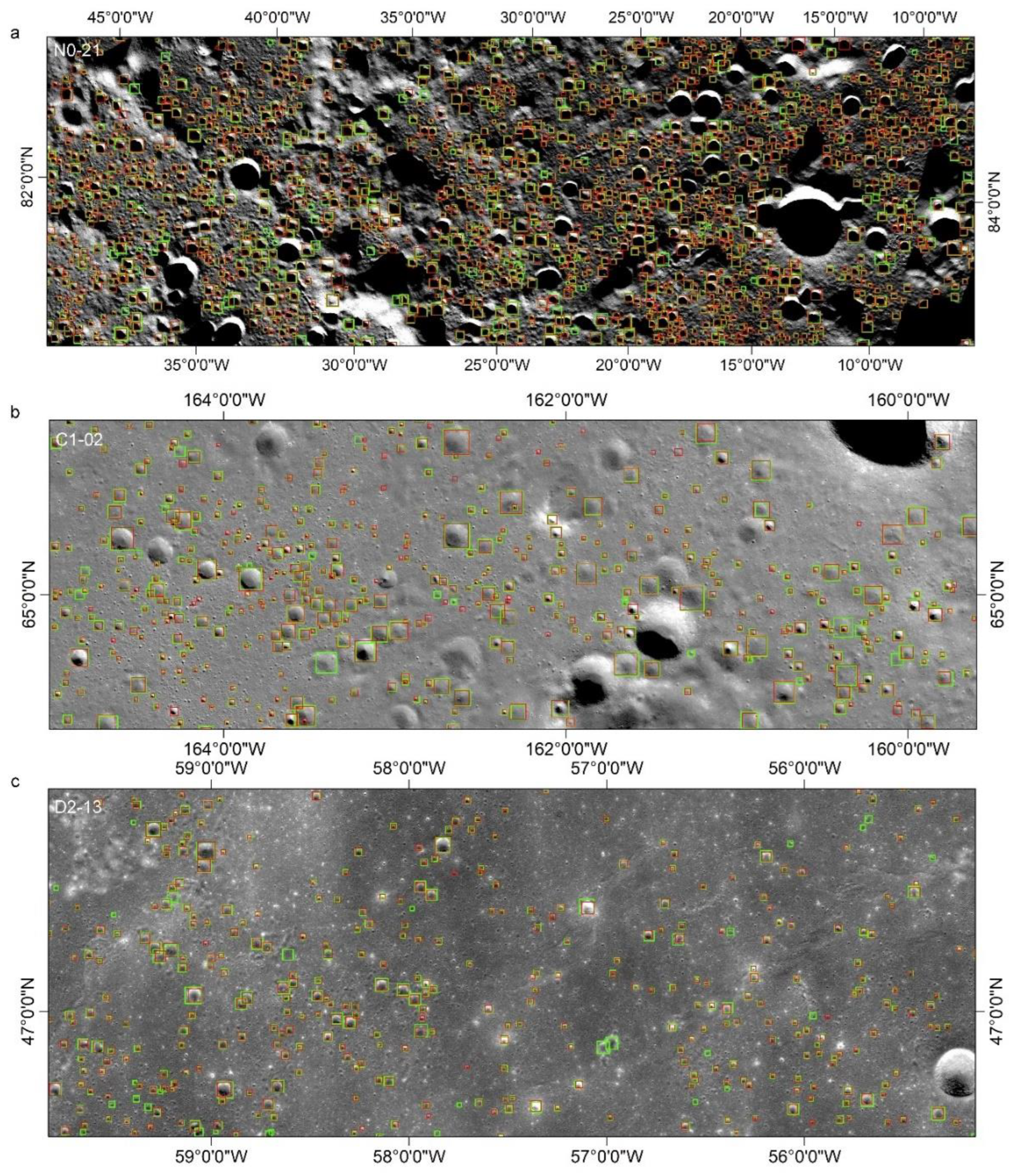

- I-1 (N021, North Pole): Bright surfaces near the polar region with abundant small craters exhibiting black-and-white wart-like structures.

- I-2 (S014, South Pole): Low-albedo areas with dim illumination and larger craters containing central stacks.

- II-1 (C1-02, Northern High-Latitude Highlands): Darker surface with the lowest albedo among similar regions, characterized by dramatic topographic variations.

- III-2 (F1-04, Mid-Low Latitude Highlands): Bright ejecta material with higher reflectivity, featuring craters altered by volcanic activity and lava flows.

- IV-2 (D2-13, Lunar Near-Side Mare): Darker near-side regions with low reflectivity and distinct circular craters.

- IV-3 (K1-36, Lunar Far-Side Mare): Far-side regions with moderate albedo and circular craters with smooth edges.

- Medium-sized craters (400m–2km): Annotated across the full extent of the six regions to assess the model's ability to detect craters of moderate size.

- Small craters (200m–2km): Fine-grained annotations in specific areas to evaluate the model’s precision in detecting smaller craters.
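To relate these diameter ranges to image scale: the area column of the medium-crater results table equals pixel count × 49 m², i.e. about 7 m per pixel, consistent with the CE-2 DOM/7m source listed in the method comparison. The sketch below assumes that resolution and, as a simplification, takes a crater's diameter to be the mean of its bounding-box width and height; the function names are hypothetical.

```python
# Assumed ground sampling distance (m/pixel) of the CE-2 DOM mosaic.
GSD_M_PER_PX = 7.0


def diameter_to_pixels(diameter_m: float, gsd: float = GSD_M_PER_PX) -> float:
    """Number of pixels spanned by a crater of the given diameter."""
    return diameter_m / gsd


def box_to_diameter_m(x1: float, y1: float, x2: float, y2: float,
                      gsd: float = GSD_M_PER_PX) -> float:
    """Approximate diameter as the mean of box width and height (simplifying assumption)."""
    return ((x2 - x1) + (y2 - y1)) / 2.0 * gsd


def in_range(diameter_m: float, lo_m: float, hi_m: float) -> bool:
    """True if the diameter lies in the closed interval [lo_m, hi_m]."""
    return lo_m <= diameter_m <= hi_m
```

At 7 m per pixel, a 200 m crater spans about 28.6 pixels, a 400 m crater about 57.1 pixels, and a 2 km crater about 285.7 pixels, so every crater in the tested ranges fits comfortably inside a 1280×1280 input tile, while the smallest ones occupy only a few dozen pixels.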

3.2. Type 1 Test: Detection of Medium-Sized Craters (400m–2km)

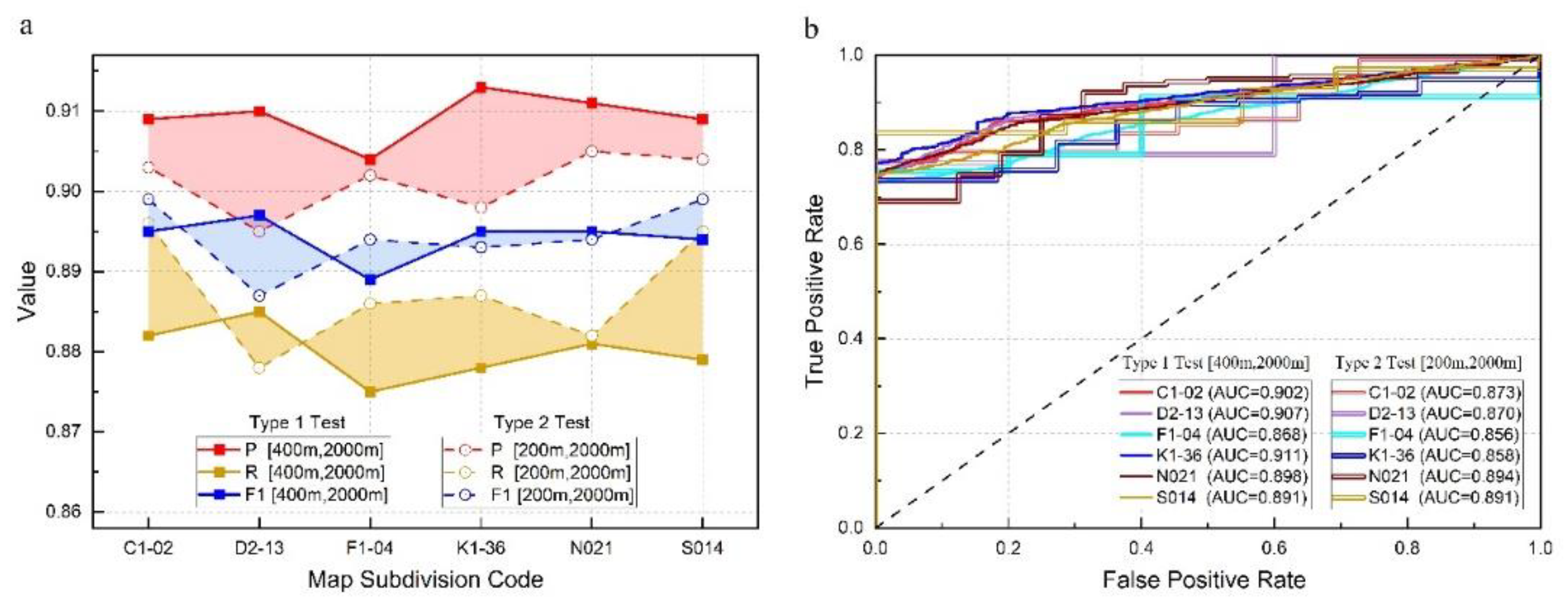

- Highlands (including C1-02 and F1-04): The model adapted well to highland regions with significant terrain variation. In the C1-02 northern high-latitude highlands, where reflectance is low and the terrain complex, the model detected most craters, with a precision of 90.9% and a recall of 88.2%. In the F1-04 mid-low latitude highlands, where volcanic activity and lava flows have altered crater morphology, it achieved a precision of 90.4% and a recall of 87.5%, indicating robustness in complex terrain.

- Mare Regions (including D2-13 and K1-36): YOLO-SCNet also performed strongly in the mare regions. In the D2-13 mare region, where craters are mostly circular or elliptical with clear edges, the model achieved a precision of 91.0% and a recall of 88.5%. In the K1-36 mare region, which has similar crater features, precision was 91.3% and recall 87.8%. These results indicate that YOLO-SCNet can identify craters accurately in flat, low-reflectance terrain.

- Polar Regions (including N021 and S014): The model remained stable under extreme conditions. In the N021 polar region, despite low reflectance, it identified a large number of craters, with a precision of 90.9% and a recall of 88.1%. In the S014 South Pole region, with high contrast and complex lighting, it achieved a precision of 90.9% and a recall of 87.9%, demonstrating its stability in polar environments.

3.3. Type 2 Test: Detection of Small Craters (200m–2km)

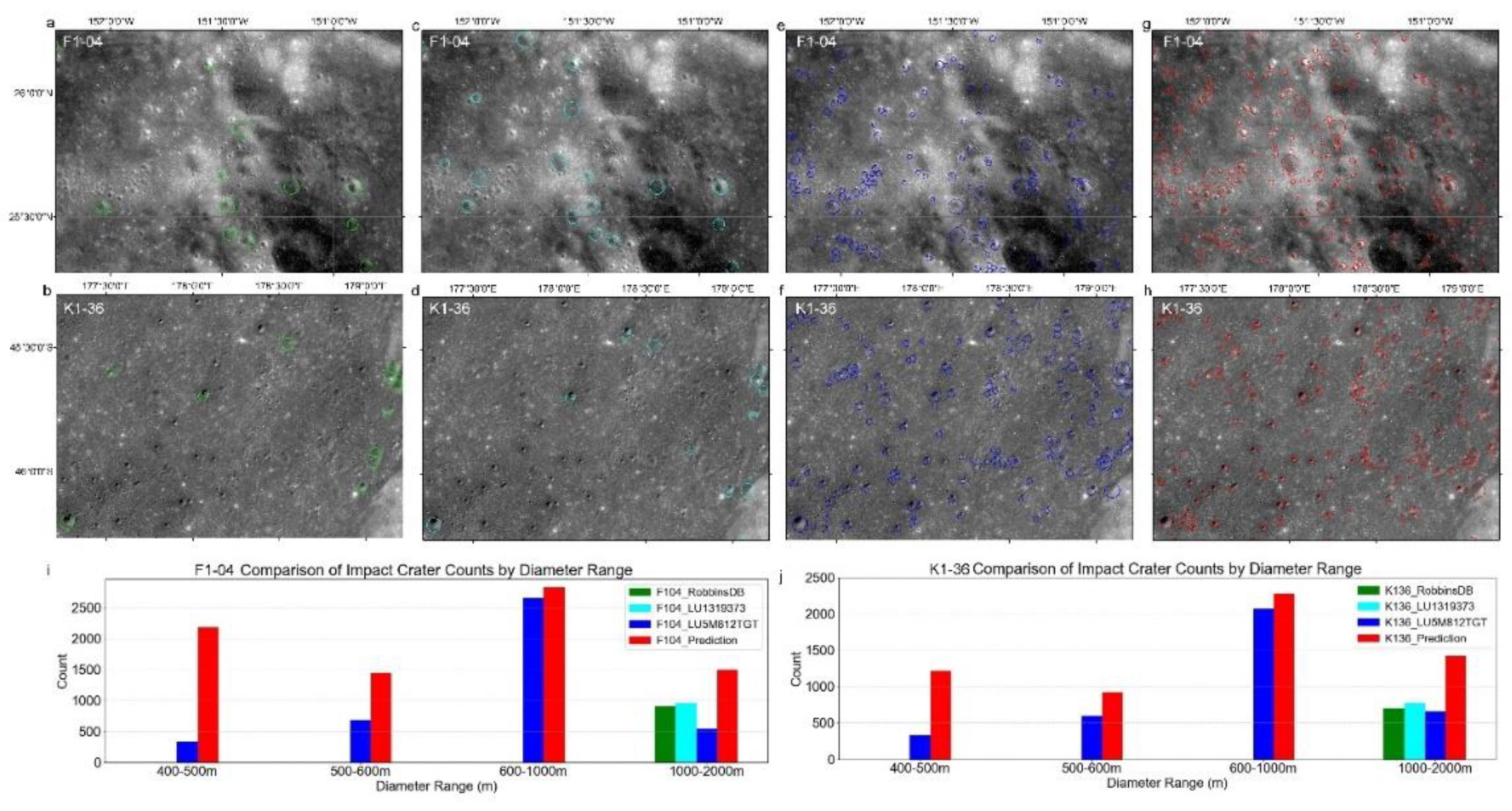

3.4. Type 3 Test: Database Comparison and Detection Expansion (400m–2km)

3.5. Summary of Experimental Results

- An average Precision of 90.9%, Recall of 88.0% and F1-score of 89.4% for medium-sized craters (400m–2km).

- An overall Precision of 90.2%, Recall of 88.7% and F1-score of 89.4% for small craters (200m–2km), demonstrating adaptability to subtle topographic features.

- A high Recall (97.2%) in the database comparison tests, with the model also identifying additional craters that are likely to refine and expand existing catalogs.
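In the database comparison, precision is computed against all predictions and recall against the catalog entries in the tested diameter range. As a worked check using the F1-04/RobbinsDB row of the database comparison table (902 catalog craters, 1112 predictions, 880 matches):

$$
P = \frac{TP}{N_{\mathrm{pred}}} = \frac{880}{1112} \approx 0.791, \qquad
R = \frac{TP}{N_{\mathrm{cat}}} = \frac{880}{902} \approx 0.976, \qquad
F_1 = \frac{2PR}{P+R} = \frac{2\,TP}{N_{\mathrm{pred}} + N_{\mathrm{cat}}} = \frac{1760}{2014} \approx 0.874
$$

Because predictions without a catalog match are counted as false positives, the lower precision against the catalogs may partly reflect craters missing from those catalogs rather than spurious detections, which is the interpretation behind the catalog-expansion claim above.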

4. Analysis and Discussion

4.1. Performance and Evaluation of the Proposed Model

4.1.1. Overall Performance in Crater Detection

4.1.2. Implications of Regional Diversity in Model Evaluation

- Polar regions (e.g., N021, S014): Extreme lighting conditions and shadow effects make detecting small craters particularly challenging.

- High-latitude highlands (e.g., C1-02): Rugged terrain, steep slopes, and dramatic topographic variations test the model’s ability to adapt to complex geological features.

- Mid-low latitude highlands and mare regions (e.g., F1-04, D2-13, K1-36): Contrasting features such as volcanic alterations, flat basaltic plains, and low reflectivity require the model to generalize across varied surface types.

4.2. Comprehensive Model Performance and Stability Analysis

4.2.1. Crater Detection Accuracy Analysis

4.2.2. Robustness Evaluation via Cross-Validation

4.2.3. Discussion of Combined Results

4.3. Dataset Construction and Augmentation Methods in Lunar Crater Detection

4.3.1. Importance of Dataset Construction and Its Role in Performance Improvement

- Filling Existing Database Gaps: Current lunar impact crater databases, such as RobbinsDB and LU1319373, primarily include craters with diameters ≥1 km, while the newly released LU5M812TGT database contains craters with diameters ≥0.4 km. Smaller craters (<200m) are also of interest, but their detection and annotation are hampered by terrain complexity and resolution limitations. By targeting the 200m–2km range, this study helps fill a gap in lunar crater datasets and provides new insights into the distribution of medium-sized craters.

- Reducing Annotation and Computational Workload: Annotating craters <200m requires extremely high-resolution imagery and significant manual effort, while >2km craters are typically well-documented in existing databases. The chosen range thus balances scientific value and practical feasibility.

4.3.2. Application and Effectiveness of Data Augmentation Strategies

- Highest Precision, Recall, and F1-Score: Poisson Image Editing achieved the highest Precision (0.915), Recall (0.882), and F1-Score (0.898) of all methods tested.

- Faster Convergence: The method reached stable training after only 1000 iterations, fewer than the other methods (e.g., CutMix: 1300 iterations; rotation: 1500 iterations), although at the highest per-image processing cost (5.8 ms versus 0.9–2.5 ms).

- Better Stability: Poisson Image Editing exhibited the lowest variance in metrics (±0.003), indicating consistent performance across validation folds.
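Poisson Image Editing (Pérez et al.) pastes a source patch into a target image by solving a Poisson equation inside the pasted region: the result keeps the source's gradients (here, the crater morphology) while matching the target's intensities along the region boundary, so no visible seam appears. The authors' synthesis pipeline is not shown in the text; the sketch below is a minimal grayscale version that assumes NumPy, a boolean mask that does not touch the image border, and plain Jacobi iterations (simple but slow; production code would typically use a sparse solver or OpenCV's `cv2.seamlessClone`).

```python
import numpy as np


def poisson_blend(source: np.ndarray, target: np.ndarray, mask: np.ndarray,
                  n_iter: int = 2000) -> np.ndarray:
    """Seamlessly blend `source` into `target` inside `mask` (grayscale, same shape).

    Solves Laplacian(f) = Laplacian(source) inside the mask with f = target on the
    mask boundary (Dirichlet condition), using Jacobi iterations."""
    mask = mask.astype(bool)
    if mask[0, :].any() or mask[-1, :].any() or mask[:, 0].any() or mask[:, -1].any():
        raise ValueError("mask must not touch the image border")
    src = source.astype(np.float64)
    f = target.astype(np.float64)  # astype returns a copy, so target is untouched
    f[mask] = src[mask]            # start from a naive paste

    def neighbour_sum(a: np.ndarray) -> np.ndarray:
        # Sum of the 4-connected neighbours; wrap-around only affects border pixels,
        # which are never updated because the mask avoids the border.
        return (np.roll(a, 1, axis=0) + np.roll(a, -1, axis=0)
                + np.roll(a, 1, axis=1) + np.roll(a, -1, axis=1))

    # Guidance term from the source gradients: 4*g_p - sum of neighbouring g_q.
    guidance = 4.0 * src - neighbour_sum(src)
    for _ in range(n_iter):
        f_new = (neighbour_sum(f) + guidance) / 4.0
        f[mask] = f_new[mask]  # pixels outside the mask stay fixed
    return f
```

Using a crater patch as `source` and a crater-free tile as `target` yields a synthetic sample whose brightness blends into its surroundings, which is consistent with, though not necessarily identical to, the sample-synthesis step described in Section 2.1.3.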

4.3.3. Advantages and Scalability of Poisson Image Editing

4.3.4. Discussion and Future Directions

4.4. Comparison with Existing Methods

4.4.1. Performance Comparison

4.4.2. Comparative Analysis of Detection Methods

4.4.3. Key Advantages of Our Approach

4.5. Analysis of False Positives, False Negatives, and Future Improvements

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Code availability section

Acknowledgments

Conflicts of Interest

References

- Head, J. W., et al. Global distribution of large lunar craters: Implications for resurfacing and impactor populations. Science. 2010, 329(5998), 1504–1507. [CrossRef]

- Fassett, C., et al. Lunar impact basins: Stratigraphy, sequence and ages from superposed crater populations measured from Lunar Orbiter Laser Altimeter (LOLA) data. J. Geophys. Res. Planets. 2012, 117 (E12), Art. no. e2011JE003951. [CrossRef]

- Hartmann, W. K. Terrestrial and lunar flux of large meteorites in the last two billion years. Icarus. 1965, 4(2), 157-165. [CrossRef]

- Neukum, G. Meteorite Bombardment and Dating of Planetary Surfaces. National Aeronautics and Space Administration: Washington, DC, USA (1984).

- Salamunićcar, G., & Lončarić, S. Manual feature extraction in lunar studies. Computers & Geosciences. 2008, 34(10), 1217-1228.

- Zuo, W., et al. Contour-based automatic crater recognition using digital elevation models from Chang'E missions. Computers & Geosciences. 2016, 97: 79-88. [CrossRef]

- Krgli, D., et al. Deep learning for planetary surface analysis. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 1-8.

- Salamunićcar, G., et al. Planetary crater detection using advanced deep learning. Planetary and Space Science. 2011, 59(2-3): 111-131.

- Emami, E., Ahmad, T., Bebis, G., Nefian, A., Fong, T. “Crater detection using unsupervised algorithms and convolutional neural networks.” IEEE Transactions on Geoscience and Remote Sensing 57 (8): 5373-5383. [CrossRef]

- Del Prete, R., Renga, A. “A Novel Visual-Based Terrain Relative Navigation System for Planetary Applications Based on Mask R-CNN and Projective Invariants.” Aerotec. Missili Spaz 101: 335–349. [CrossRef]

- Del Prete, R., Saveriano, A. and Renga A. 2022b. “A Deep Learning-based Crater Detector for Autonomous Vision-Based Spacecraft Navigation.” 2022 IEEE 9th International Workshop on Metrology for AeroSpace, Pisa, Italy, 2022: 231-236. [CrossRef]

- Ostrogovich, L., et al. A Dual-Mode Approach for Vision-Based Navigation in a Lunar Landing Scenario. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops. 2024, 6799-6808.

- Silburt, A., Ali-Dib, M., Zhu, C., Jackson, A., Valencia, D., & Kissin, Y. Lunar crater identification via deep learning. Icarus. 2019, 317: 27-38. [CrossRef]

- Jia, Y., Liu, L., Zhang, C. Moon crater detection using nested attention mechanism based UNet++. IEEE Access. 2021, 9: 44107-44116. [CrossRef]

- Lin, X., et al. Lunar Crater Detection on Digital Elevation Model: A Complete Workflow Using Deep Learning and Its Application. Remote Sensing. 2022, 14 (3): 621. [CrossRef]

- Latorre, F., Spiller, D., Sasidharan, S.T., Basheer, S., Curti, F. Transfer learning for real-time crater detection on asteroids using a Fully Convolutional Neural Network. Icarus. 2023, 394: 115434. [CrossRef]

- Zhang, S., et al. “Automatic detection for small-scale lunar crater using deep learning.” Advances in Space Research. 2024, 73(4): 2175-2187. [CrossRef]

- La Grassa, M., et al. “Cost and performance issues in deep learning for lunar exploration.” IEEE Transactions on Geoscience and Remote Sensing. 2023, 61: 1-10.

- La Grassa, R. et al. 2025. “LU5M812TGT: An AI-Powered global database of impact craters ≥ 0.4 km on the Moon.” ISPRS Journal of Photogrammetry and Remote Sensing 220:75–84. [CrossRef]

- Zang, S., Mu, L., Xian, L., Zhang, W. Semi-supervised deep learning for lunar crater detection using CE-2 DOM. Remote Sensing. 2021, 13(14), Art. no. 2819. [CrossRef]

- Mu, L., et al. YOLO-Crater Model for Small Crater Detection. Remote Sens. 2023, 15(20): 5040. [CrossRef]

- Haruyama, J., et al. Long-lived volcanism on the lunar farside revealed by SELENE terrain camera. Science. 2009, 323 (5916): 905–908. [CrossRef]

- Yingst, R. A., Skinner, J. A., Jr., & Beaty, D. W. Improving data sets for planetary surface analysis: An integrated approach. Planetary and Space Science. 2013, 87: 74-81. [CrossRef]

- Wang, J., Bai, X., Jin, Y., Wu, B., & Zhang, J. A robust crater detection algorithm using deep learning framework with high-resolution lunar DEM data. Computers & Geosciences. 2020, 137:104421.

- Robbins, S. J. A new global database of lunar craters >1–2 km: 1. crater locations and sizes, comparisons with published databases, and global analysis. J. Geophys. Res. Planets, 2019, 124 (4): 871-892. [CrossRef]

- Wang, Y., Wu, B., Xue, H., Li, X., Ma, J. An improved global catalog of lunar craters (≥1 km) with 3D morphometric information and updates on global crater analysis. J. Geophys. Res. Planets. 2021, 126(9), Art. no. e2020JE006728. [CrossRef]

- Li, C.L., et al. “Lunar global high-precision terrain reconstruction based on Chang'E-2 stereo images.” Geomatics and Information Science of Wuhan University. 2018, 43(4): 485-495. (in Chinese with English abstract). [CrossRef]

- Zuo, W., et al. China's Lunar and Planetary Data System: Preserve and Present Reliable Chang'e Project and Tianwen-1 Scientific Data Sets. Space Science Reviews. 2021, 217(88): 1-38. [CrossRef]

- Li, C.L., et al. “The Chang’e-2 High Resolution Image Atlas of the Moon.” Surveying and Mapping Press, Beijing, China 2012. (in Chinese).

- Shorten, C., & Khoshgoftaar, T. M. A survey on Image Data Augmentation for Deep Learning. Journal of Big Data. 2019, 6(1): 1-48. [CrossRef]

- Mumuni, A. and Mumuni, F. Data augmentation: A comprehensive survey of modern approaches. Array. 2022, 16: 100258. [CrossRef]

- Boudouh, N., Mokhtari, B. & Foufou, S. Enhancing deep learning image classification using data augmentation and genetic algorithm-based optimization. Int J Multimed Info Retr. 2024, 13 (36). [CrossRef]

- Pérez, P., Gangnet, M. and Blake, A. Poisson Image Editing. Seminal Graphics Papers: Pushing the Boundaries. 2023, Volume 2, Article No. 60: 577–582. [CrossRef]

- Ghiasi, G., Cui, Y., Srinivas, A., Qian, R., Lin, T.-Y., & Le, Q. V. Simple Copy-Paste is a Strong Data Augmentation Method for Instance Segmentation. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2021.

- Zhang, H., Cisse, M., Dauphin, Y. N., & Lopez-Paz, D. mixup: Beyond Empirical Risk Minimization. International Conference on Learning Representations (ICLR) 2018. [CrossRef]

- Yun, S., Han, D., Oh, S. J., Chun, S., Choe, J., & Yoo, Y. CutMix: Regularization Strategy to Train Strong Classifiers with Localizable Features. IEEE International Conference on Computer Vision (ICCV) 2019.

- Redmon, J., Divvala, S., Girshick, R., Farhadi, A. “You Only Look Once: Unified, Real-Time Object Detection.” Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, USA. 2016, 779-788.

- Jocher, G. et al., ultralytics/yolov5: v3.1 - Bug Fixes and Performance Improvements. Zenodo. 2020. https://ui.adsabs.harvard.edu/abs/2020zndo...4154370J. [CrossRef]

- Jocher, G. and Qiu J. Ultralytics yolo11. 2024.

- Khanam, R. and Hussain, M. YOLOv11: An Overview of the Key Architectural Enhancements. arXiv. 2024, 2410.17725 [cs.CV]. [CrossRef]

- Jocher, G., Chaurasia, A., Qiu, J. YOLO by Ultralytics. 2023. https://github.com/ultralytics/ultralytics.

- He Z.J., Wang K., Fang T., Su L., Chen R., Fei X.H. “Comprehensive Performance Evaluation of YOLOv11, YOLOv10, YOLOv9, YOLOv8 and YOLOv5 on Object Detection of Power Equipment.” arXiv. 2024, 2411.18871[cs.CV]. https://arxiv.org/pdf/2411.18871. [CrossRef]

- Povilaitis, R., et al. Crater density differences: exploring regional resurfacing, secondary crater populations, and crater saturation equilibrium on the moon. Planet Space Sci. 2017, 162(1): 41-51. [CrossRef]

| Region No. | Region name | Regional Characteristics | Sub-region No. | Sub-region Characteristics |
|---|---|---|---|---|
| I | Lunar Polar Regions | Intricate terrain with rugged mountains and abundant craters. Lower albedo near poles, brighter in surrounding areas. | I-1 | Brighter surface with numerous small craters exhibiting black and white wart structures. |
| | | | I-2 | Low albedo near poles; larger craters with central stacks. |
| II | High-Latitude Highlands | Dramatic topographic changes with high reflectivity; larger and more complex craters. | II-1 | Dark surface with lowest albedo among similar regions. |
| | | | II-2 | Small amount of ejecta material; crater edges are blunted. |
| | | | II-3 | Bright surface covered by ejecta material; highest albedo among similar regions. |
| III | Mid-Low Latitude Highlands | Undulating terrain; crater morphology altered by volcanic activity and lava flows. | III-1 | Complex craters are common; some craters are covered by lava flows. |
| | | | III-2 | Some regions covered by bright ejecta; higher reflectivity than similar areas. |
| IV | Lunar Mare Regions | Flat basaltic regions with low reflectivity; circular or elliptical craters with distinct edges. | IV-1 | Highest reflectivity among similar areas; flat-bottomed craters. |
| | | | IV-2 | Distributed on the near side; dark surface with lowest reflectivity among similar areas. |
| | | | IV-3 | Distributed on the far side; circular craters with distinct edges. |

| Map Subdivision Code | Sub-region No. | Image Dimensions | Area of Map Subdivision (km²) | Split Images Number | Labeled Crater Number | Predicted Crater Number | TP (IoU≥0.5) | FP | FN | P | R | F1 | AP | Processing Time |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| C1-02 | II-1 | 34877 x 29601 | 50587.31 | 1050 | 5719 | 5523 | 5020 | 503 | 699 | 0.909 | 0.882 | 0.895 | 0.897 | 3′32″ |
| D2-13 | IV-2 | 28539 x 28521 | 39884.08 | 841 | 3404 | 3273 | 2975 | 298 | 429 | 0.910 | 0.885 | 0.897 | 0.883 | 2′12″ |
| F1-04 | III-2 | 31239 x 32454 | 49677.69 | 1056 | 3859 | 3727 | 3360 | 367 | 499 | 0.904 | 0.875 | 0.889 | 0.869 | 3′22″ |
| K1-36 | IV-3 | 28539 x 28521 | 39884.08 | 841 | 3616 | 3475 | 3170 | 305 | 446 | 0.913 | 0.878 | 0.895 | 0.884 | 3′09″ |
| N021 | I-2 | 29056 x 29056 | 41368.31 | 900 | 5487 | 5281 | 4790 | 491 | 697 | 0.911 | 0.881 | 0.895 | 0.877 | 2′52″ |
| S014 | I-1 | 29055 x 29056 | 41368.31 | 841 | 2485 | 2388 | 2170 | 218 | 315 | 0.909 | 0.879 | 0.894 | 0.885 | 2′07″ |
| Average | | 30218 x 29535 | 43794.96 | 922 | 4095 | 3935 | 3581 | 364 | 514 | 0.909 | 0.880 | 0.894 | 0.883 | 2′52″ |

| Map Subdivision Code | Sub-region No. | Image Dimensions | Area of the Testing Range (km²) | Split Images Number | Labeled Crater Number | Predicted Crater Number | TP (IoU≥0.5) | FP | FN | P | R | F1 | AP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| C1-02 | II-1 | 6560 x 6560 | 150.62 | 18 | 414 | 411 | 371 | 40 | 43 | 0.903 | 0.896 | 0.899 | 0.882 |
| D2-13 | IV-2 | 6560 x 6560 | 150.62 | 18 | 378 | 371 | 332 | 39 | 46 | 0.895 | 0.878 | 0.887 | 0.89 |
| F1-04 | III-2 | 8560 x 6560 | 196.54 | 24 | 528 | 519 | 468 | 51 | 60 | 0.902 | 0.886 | 0.894 | 0.885 |
| K1-36 | IV-3 | 6560 x 6560 | 150.62 | 18 | 588 | 581 | 522 | 59 | 66 | 0.898 | 0.887 | 0.893 | 0.872 |
| N021 | I-2 | 6560 x 6560 | 150.62 | 18 | 951 | 927 | 839 | 88 | 112 | 0.905 | 0.882 | 0.894 | 0.895 |
| S014 | I-1 | 6560 x 6560 | 150.62 | 18 | 694 | 687 | 621 | 66 | 73 | 0.904 | 0.895 | 0.899 | 0.883 |
| Average | | 6893 x 6893 | 158.27 | 19 | 592 | 582 | 525 | 57 | 67 | 0.902 | 0.887 | 0.894 | 0.885 |

| Map Subdivision Code | Crater Catalog | Crater Diameter Range | Catalog Crater Number | Predicted Crater Number | TP (IoU≥0.5) | FP | FN | P | R | F1 |
|---|---|---|---|---|---|---|---|---|---|---|
| F1-04 | RobbinsDB | [1km, 2km] | 902 | 1112 | 880 | 232 | 22 | 0.791 | 0.976 | 0.874 |
| K1-36 | RobbinsDB | [1km, 2km] | 703 | 1131 | 685 | 446 | 18 | 0.606 | 0.974 | 0.747 |
| F1-04 | LU1319373 | [1km, 2km] | 959 | 1112 | 927 | 185 | 32 | 0.834 | 0.967 | 0.895 |
| K1-36 | LU1319373 | [1km, 2km] | 773 | 1131 | 746 | 385 | 27 | 0.660 | 0.965 | 0.784 |
| F1-04 | LU5M812TGT | [0.4km, 2km] | 4217 | 7946 | 4157 | 3789 | 94 | 0.523 | 0.978 | 0.682 |
| K1-36 | LU5M812TGT | [0.4km, 2km] | 3660 | 5835 | 3562 | 2273 | 99 | 0.610 | 0.973 | 0.750 |
| Average | | | 1869 | 3045 | 1826 | 1218 | 49 | 0.671 | 0.972 | 0.789 |

| Fold | P | R | F1 | AP | Runtime (s) |
|---|---|---|---|---|---|
| 1 | 0.909 | 0.886 | 0.898 | 0.895 | 172 |
| 2 | 0.910 | 0.885 | 0.896 | 0.894 | 172 |
| 3 | 0.904 | 0.884 | 0.895 | 0.892 | 171 |
| 4 | 0.911 | 0.887 | 0.898 | 0.894 | 173 |
| 5 | 0.908 | 0.884 | 0.892 | 0.891 | 174 |
| 6 | 0.907 | 0.882 | 0.894 | 0.891 | 171 |
| 7 | 0.913 | 0.888 | 0.899 | 0.896 | 172 |
| 8 | 0.915 | 0.889 | 0.901 | 0.898 | 175 |
| 9 | 0.911 | 0.886 | 0.898 | 0.895 | 172 |
| 10 | 0.912 | 0.886 | 0.898 | 0.895 | 173 |
| Mean | 0.909 | 0.886 | 0.897 | 0.894 | 172 |
| Std | ±0.003 | ±0.003 | ±0.003 | ±0.003 | ±2 |

| Augmentation Method | P | R | F1 | AP | Convergence Iterations | Variance in Metrics | Average Processing Time (ms/image) |
|---|---|---|---|---|---|---|---|
| Rotation | 0.878 | 0.856 | 0.867 | 0.852 | 1500 | ±0.007 | 1.2 |
| Scaling | 0.882 | 0.861 | 0.871 | 0.859 | 1400 | ±0.006 | 1.3 |
| Flipping | 0.885 | 0.863 | 0.874 | 0.860 | 1350 | ±0.006 | 0.9 |
| CutMix | 0.892 | 0.870 | 0.881 | 0.869 | 1300 | ±0.005 | 2.5 |
| Poisson Image Editing (Proposed) | 0.915 | 0.882 | 0.898 | 0.889 | 1000 | ±0.003 | 5.8 |

| Reference | Detection method | Data source | P | R | F1 | Sample Dataset | Crater diameter |
|---|---|---|---|---|---|---|---|
| Silburt et al., 2019 [13] | UNET | SLDEM/~59m | 56.0% | 92.0% | 69.6% | Head 2010 [1], Povilaitis 2018 [43] | ≥5km |
| Jia et al., 2021 [14] | UNET++ | SLDEM/~59m | 85.6% | 79.1% | 82.2% | | ≥5km |
| Lin et al., 2022 [15] | Faster R-CNN + FPN | SLDEM/~59m | 82.9% | 79.4% | 81.0% | | ≥5km |
| Latorre et al., 2023 [16] | transfer learning, UNET+FCNs | SLDEM/~118m | 83.8% | 84.5% | 84.1% | | ≥5km |
| La Grassa et al., 2023 [18] | YOLOLens5x | LROC-WAC/100m | 89.9% | 87.2% | 88.5% | RobbinsDB [25] | ≥1km |
| Zhang et al., 2024 [17] | CenterNet model using a transfer learning strategy | LROC-WAC/100m | 78.3% | 73.7% | 76.0% | | ≥500m |
| La Grassa et al., 2025 [19] | YOLOLens (YOLOv8) | LROC-WAC/100m/50m | 85.2% | | | 15,408,735 Robbins crater labels | ≥400m |
| Mu et al., 2023 [21] | YOLO-Crater | CE-2 DOM/7m | 87.9% | 66.0% | 75.4% | 83,620 manually labeled crater samples | ~400m |
| Zang et al., 2021 [20] | R-CNN | CE-2 DOM/7m | 90.5% | 63.5% | 74.7% | 38,121 manually labeled crater samples | ≥100m |
| This study | YOLO-SCNet (YOLOv11) | CE-2 DOM/7m | 90.2% | 88.7% | 89.4% | 80,607 crater samples (generated by the sample production method proposed in this study) | [0.2km, 2km] |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).