Submitted: 24 April 2025

Posted: 24 April 2025


Abstract

Keywords:

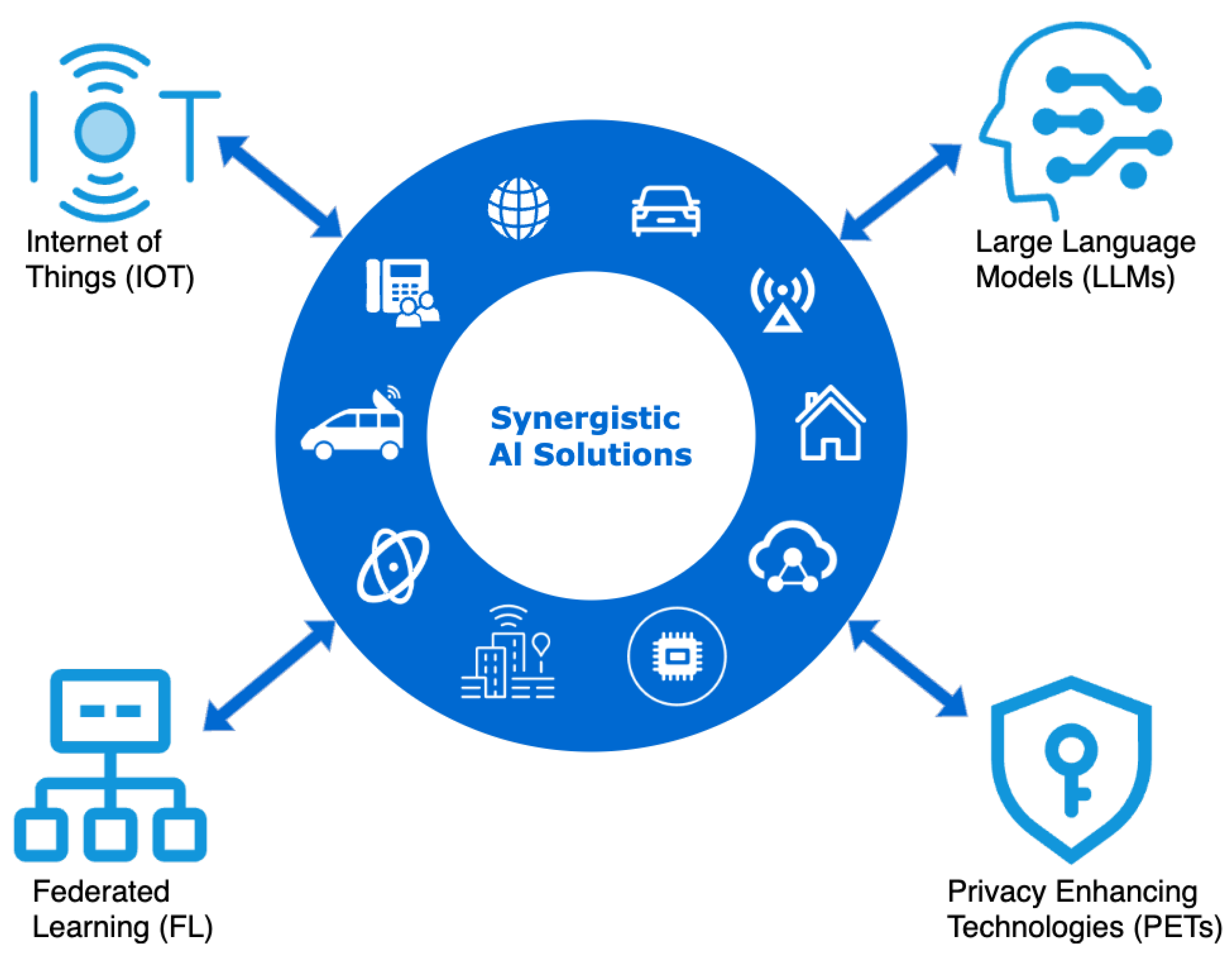

1. Introduction

1.1. Background

1.2. Motivation

1.3. Scope and Contribution

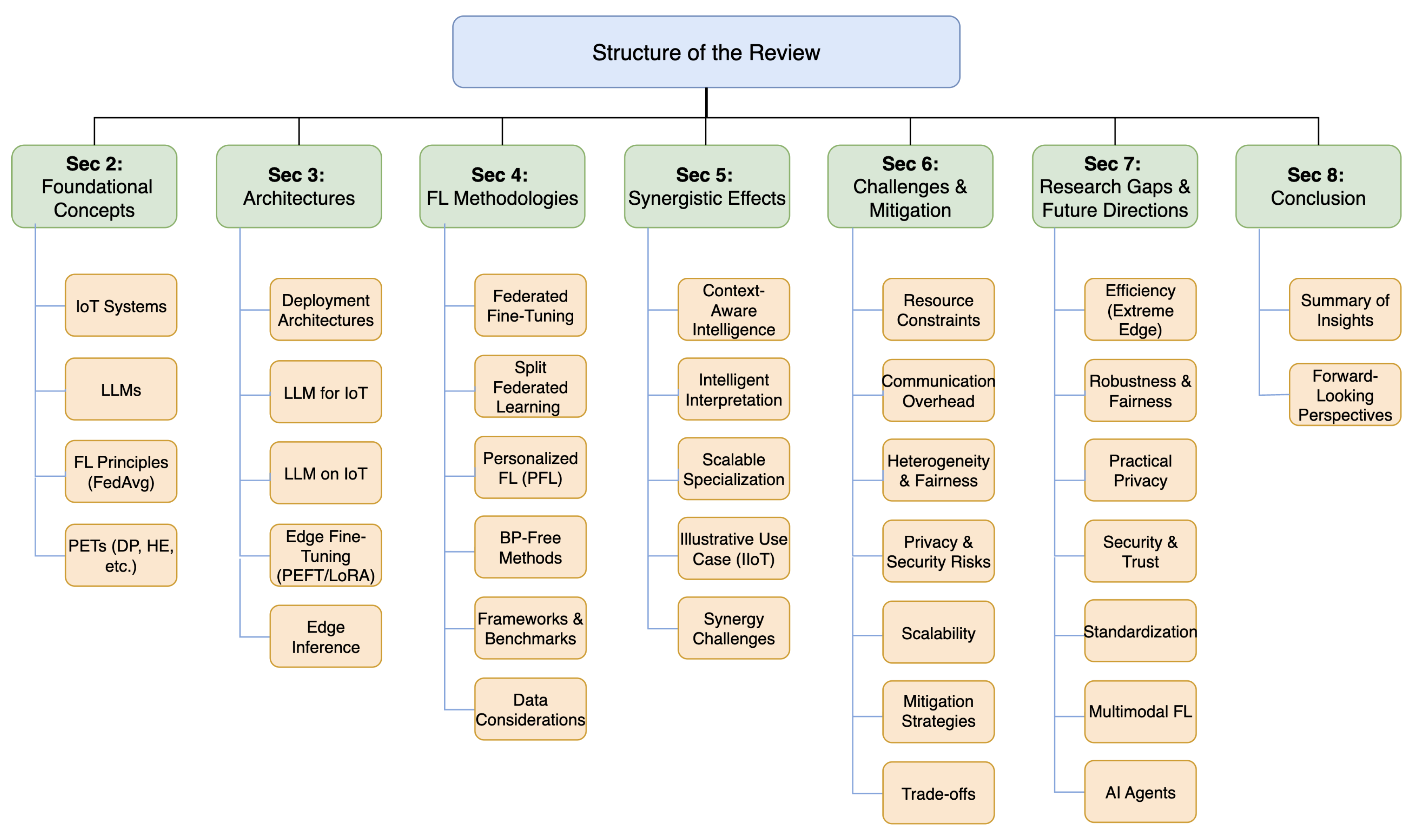

- Qu et al. [13] focus on how Mobile Edge Intelligence (MEI) infrastructure can support the deployment (caching, delivery, training, inference) of LLMs, emphasizing resource efficiency in mobile networks. Their core contribution lies in detailing MEI mechanisms tailored specifically to LLMs, particularly for caching and delivery, in a 6G context.

- Adam et al. [14] provide a comprehensive overview of FL applied to the broad domain of IoT, covering FL fundamentals, diverse IoT applications (healthcare, smart cities, autonomous driving), architectures (CFL, HFL, DFL), a detailed FL-IoT taxonomy, and challenges such as heterogeneity and resource constraints. LLMs are treated as an emerging FL trend within the IoT ecosystem.

- Friha et al. [15] examine the integration of LLMs as a core component of Edge Intelligence (EI), detailing architectures, optimization strategies (e.g., compression, caching), and applications (e.g., driving, software engineering, healthcare), and offering an extensive analysis of the security and trustworthiness aspects specific to deploying LLMs at the edge.

- Cheng et al. [10] target the intersection of FL and LLMs specifically, providing an exhaustive review of motivations, methodologies (pre-training, fine-tuning, Parameter-Efficient Fine-Tuning (PEFT), backpropagation-free methods), privacy (DP, HE, SMPC), and robustness (Byzantine, poisoning, and prompt attacks) within the "Federated LLM" paradigm, largely independent of the application domain (such as IoT) or deployment infrastructure (such as MEI).

2. Foundational Concepts

2.1. IoT in Advanced Networks

2.2. Large Language Models (LLMs)

2.3. Federated Learning (FL)

- Enhanced Privacy: Data remains localized on user devices, reducing risks associated with central data aggregation.

- Communication Efficiency: Transmitting model updates instead of raw data significantly reduces network load.

- Utilizing Distributed Resources: Leverages the computational power available on edge devices [34].

- CFL vs. DFL: Centralized FL (CFL) uses a server for coordination and aggregation, offering simplicity but creating a potential bottleneck and single point of failure [35]. Decentralized FL (DFL) employs peer-to-peer communication, potentially increasing robustness and scalability for certain network topologies (like mesh networks common in IoT scenarios) but adding complexity in coordination and convergence analysis [36].

- Non-IID Data: A central challenge in FL stems from heterogeneous data distributions across clients, commonly referred to as Non-Independent and Identically Distributed (Non-IID) data [37]. That is, the statistical properties of the data vary significantly between clients; for instance, clients might hold data with different label distributions (label skew) or different feature characteristics for the same label (feature skew). Such heterogeneity can substantially degrade the performance of standard algorithms such as FedAvg, because the single global model aggregated from diverse local models may not generalize well to each client's specific data distribution [7].
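The aggregation step at the heart of FedAvg can be sketched in a few lines. This is a minimal illustration rather than a reference implementation: it assumes each client's model has been flattened into a list of floats and weights each client by its local sample count; the function name `fedavg` and the toy numbers are hypothetical. The two-client example mimics label skew: the global vector is pulled toward the client holding more data, which is one way the aggregated model can end up poorly matched to a minority client's distribution.

```python
from typing import List, Sequence


def fedavg(client_params: Sequence[Sequence[float]], num_samples: Sequence[int]) -> List[float]:
    """Weighted average of flattened client parameter vectors (FedAvg-style aggregation).

    Each client's contribution is proportional to its number of local training samples.
    """
    if not client_params or len(client_params) != len(num_samples):
        raise ValueError("expected one sample count per client and at least one client")
    total = sum(num_samples)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(client_params[0])
    global_params = [0.0] * dim
    for params, n in zip(client_params, num_samples):
        if len(params) != dim:
            raise ValueError("all clients must share the same parameter shape")
        weight = n / total
        for i, value in enumerate(params):
            global_params[i] += weight * value
    return global_params


# Toy label-skew example (hypothetical values): client A mostly sees class 0, client B class 1.
client_a = [1.0, 0.0]
client_b = [0.0, 1.0]
print(fedavg([client_a, client_b], [300, 100]))  # [0.75, 0.25]
```

In practice the server applies this rule to every tensor of the model (or only to the trainable PEFT parameters). Many remedies for Non-IID data modify either the local training objective or this aggregation rule.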

2.4. Privacy-Preserving Techniques

3. LLM-Empowered IoT Architecture for Distributed Systems

3.1. Architectural Overview

3.2. LLM for IoT

- Intelligent Interfaces & Interaction: Enabling sophisticated natural language control (e.g., complex conditional commands for smart environments) and dialogue-based interaction with IoT systems for status reporting or troubleshooting [52].

- Advanced Data Analytics & Reasoning: Fusing data from multiple sensors (e.g., correlating camera feeds with environmental sensor data for scene understanding in smart cities), performing complex event detection, predicting future states (e.g., equipment failure prediction in IIoT based on subtle degradation patterns), and providing causal explanations for system behavior.

- Automated Optimization & Control: Learning complex control policies directly from high-dimensional sensor data for optimizing resource usage (e.g., dynamic energy management in buildings considering real-time occupancy, weather forecasts, and energy prices) or network performance (e.g., adaptive traffic routing in vehicular networks).
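As a concrete illustration of the first item, one plausible pattern is to have the LLM translate a conditional instruction (for example, "if the living room is warmer than 26 °C while someone is home, start cooling") into a structured rule that conventional code validates before any actuator is touched. The sketch below assumes such a JSON rule has already been produced by the LLM; the rule schema, the device names, and the whitelists are hypothetical and not taken from any specific IoT platform.

```python
import json
import operator
from typing import Dict, Optional

# Hypothetical whitelists: only these operators and devices may appear in an LLM-generated rule.
ALLOWED_OPS = {">": operator.gt, "<": operator.lt, ">=": operator.ge, "<=": operator.le, "==": operator.eq}
ALLOWED_DEVICES = {"hvac", "lights", "blinds"}


def evaluate_rule(rule_json: str, readings: Dict[str, float]) -> Optional[Dict[str, str]]:
    """Validate an LLM-produced rule; return its action if every condition holds, else None."""
    rule = json.loads(rule_json)
    action = rule["then"]
    if action.get("device") not in ALLOWED_DEVICES:
        raise ValueError(f"device {action.get('device')!r} is not allowed")
    for cond in rule["if"]:
        compare = ALLOWED_OPS.get(cond["op"])
        if compare is None:
            raise ValueError(f"operator {cond['op']!r} is not allowed")
        value = readings.get(cond["sensor"])
        if value is None or not compare(value, cond["value"]):
            return None  # missing reading or unmet condition: take no action
    return action


# Rule as an LLM might emit it for: "If it's above 26 °C and someone is home, start cooling."
rule = ('{"if": [{"sensor": "temperature", "op": ">", "value": 26},'
        ' {"sensor": "occupancy", "op": "==", "value": 1}],'
        ' "then": {"device": "hvac", "action": "cool"}}')
print(evaluate_rule(rule, {"temperature": 28.0, "occupancy": 1}))  # {'device': 'hvac', 'action': 'cool'}
print(evaluate_rule(rule, {"temperature": 24.0, "occupancy": 1}))  # None
```

Keeping the LLM's output in a constrained, machine-checkable form, rather than letting it issue device commands directly, is one way to limit the impact of misinterpreted or adversarial instructions.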

3.3. LLM on IoT: Deployment Strategies

3.3.1. Edge Fine-Tuning

3.3.2. Edge Inference

4. Federated Learning for Privacy-Preserving LLM Training in IoT

4.1. Federated Fine-Tuning of LLMs

4.2. Split Federated Learning

4.3. Personalized Federated LLMs (PFL)

4.4. Back-Propagation-Free Methods

4.5. Frameworks and Benchmarks

4.6. Initialization and Data Considerations

4.7. LLM-Assisted Federated Learning

4.8. Evaluation Metrics

5. Synergistic Effects of Integrating IoT, LLMs, and Federated Learning

5.1. Introduction: Beyond Pairwise Integration

5.2. Theme 1: Privacy-Preserving, Context-Aware Intelligence from Distributed Real-World Data

- Hyper-Personalization: Training models tailored to individual users or specific environments (e.g., a smart home assistant learning user routines from sensor data via FL [14]).

- Enhanced Robustness: Learning from diverse, real-world IoT data sources via FL can make LLMs more robust to noise and domain shifts compared to training solely on cleaner, but potentially less representative, centralized datasets [37].

5.3. Theme 2: Intelligent Interpretation and Action Within Complex IoT Environments

5.4. Theme 3: Scalable and Adaptive Domain Specialization at the Edge

- Locally Optimized Performance: Models fine-tuned via FL+PEFT on local IoT data are likely to outperform generic models on specific edge tasks (e.g., a traffic sign recognition LLM adapted via FL to local signage variations [14]).

- Rapid Adaptation: New IoT devices or locations can quickly join the FL process and adapt the shared base LLM using PEFT without needing massive data transfers or full retraining [10].

- Resource-Aware Deployment: Makes it possible to leverage powerful base LLMs even when end devices can only handle the computation of small PEFT updates during FL training [70] or run optimized inference models (potentially distilled using FL-trained knowledge [77]). Frameworks such as Split Federated Learning can further distribute the load [17,18].
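A back-of-the-envelope calculation shows why PEFT updates are small enough for constrained IoT clients. For a weight matrix of size d_out × d_in, LoRA [16] trains two low-rank factors B (d_out × r) and A (r × d_in), so only r(d_out + d_in) parameters are updated and exchanged instead of d_out · d_in. The sketch below evaluates this for a hypothetical configuration (hidden size 4096, rank r = 8, LoRA applied to two projection matrices in each of 32 layers, 16-bit values); these settings are illustrative assumptions, not values prescribed by the works cited here.

```python
def full_matrix_params(d_out: int, d_in: int) -> int:
    """Parameters in a dense weight matrix W (d_out x d_in)."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters of a LoRA adapter: B (d_out x rank) plus A (rank x d_in)."""
    return rank * (d_out + d_in)


# Hypothetical configuration (illustrative only).
HIDDEN, RANK, LAYERS, ADAPTED_PER_LAYER, BYTES_PER_PARAM = 4096, 8, 32, 2, 2

full = full_matrix_params(HIDDEN, HIDDEN)
lora = lora_params(HIDDEN, HIDDEN, RANK)
print(full, lora, f"{lora / full:.2%}")  # 16777216 65536 0.39%

# Size of one client's adapter upload per round, across all adapted matrices.
upload_bytes = LAYERS * ADAPTED_PER_LAYER * lora * BYTES_PER_PARAM
print(upload_bytes / 2**20, "MiB")  # 8.0 MiB
```

Under the same assumptions, sending the 64 adapted matrices in full would take 2 GiB per round, which illustrates why exchanging only adapter updates is attractive over constrained IoT links.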

5.5. Illustrative Use Case: Predictive Maintenance in Federated Industrial IoT (IIoT)

- IoT only: Basic thresholding or simple local models on sensor data might miss complex failure patterns, and there is no collaborative learning across sites.

- IoT + Cloud LLM: Requires sending massive, potentially sensitive sensor streams and logs to the cloud, incurring high costs, latency, and privacy risks [13].

- IoT + FL (Simple Models): Can learn collaboratively but struggles to interpret unstructured maintenance logs or complex multi-sensor correlations indicative of subtle wear patterns [14].

- LLM + FL (No IoT): Lacks real-time grounding; trained on potentially outdated or generic data, not the specific, current state of the machines [10].

- Data Generation: Sensors on machines continuously generate multi-modal time-series data and operational logs.

- Model Choice (LLM): A powerful foundation LLM (potentially pre-trained on general engineering texts and machine manuals) is chosen as the base model. It can understand technical language in logs and potentially process time-series data patterns [15].

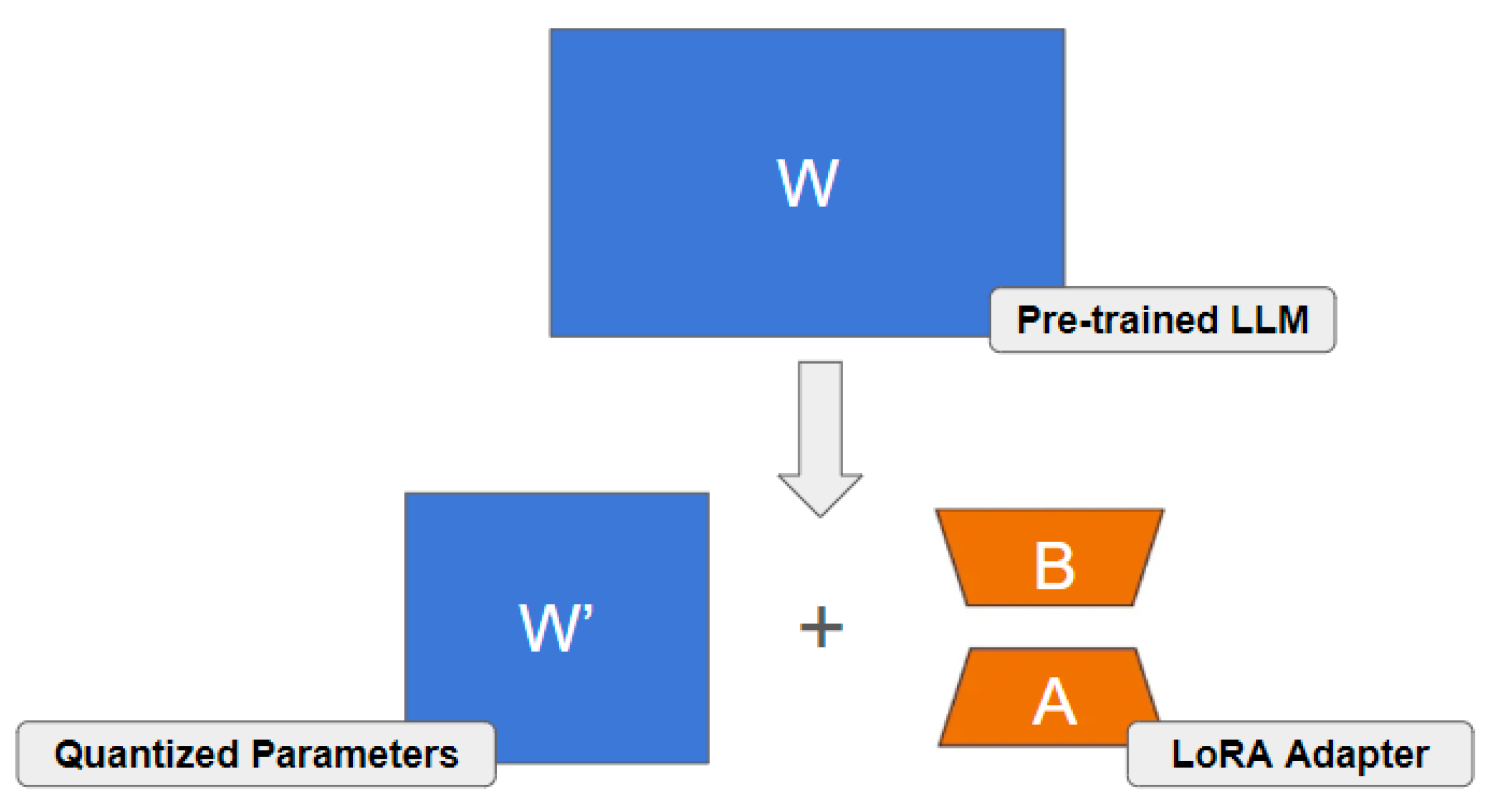

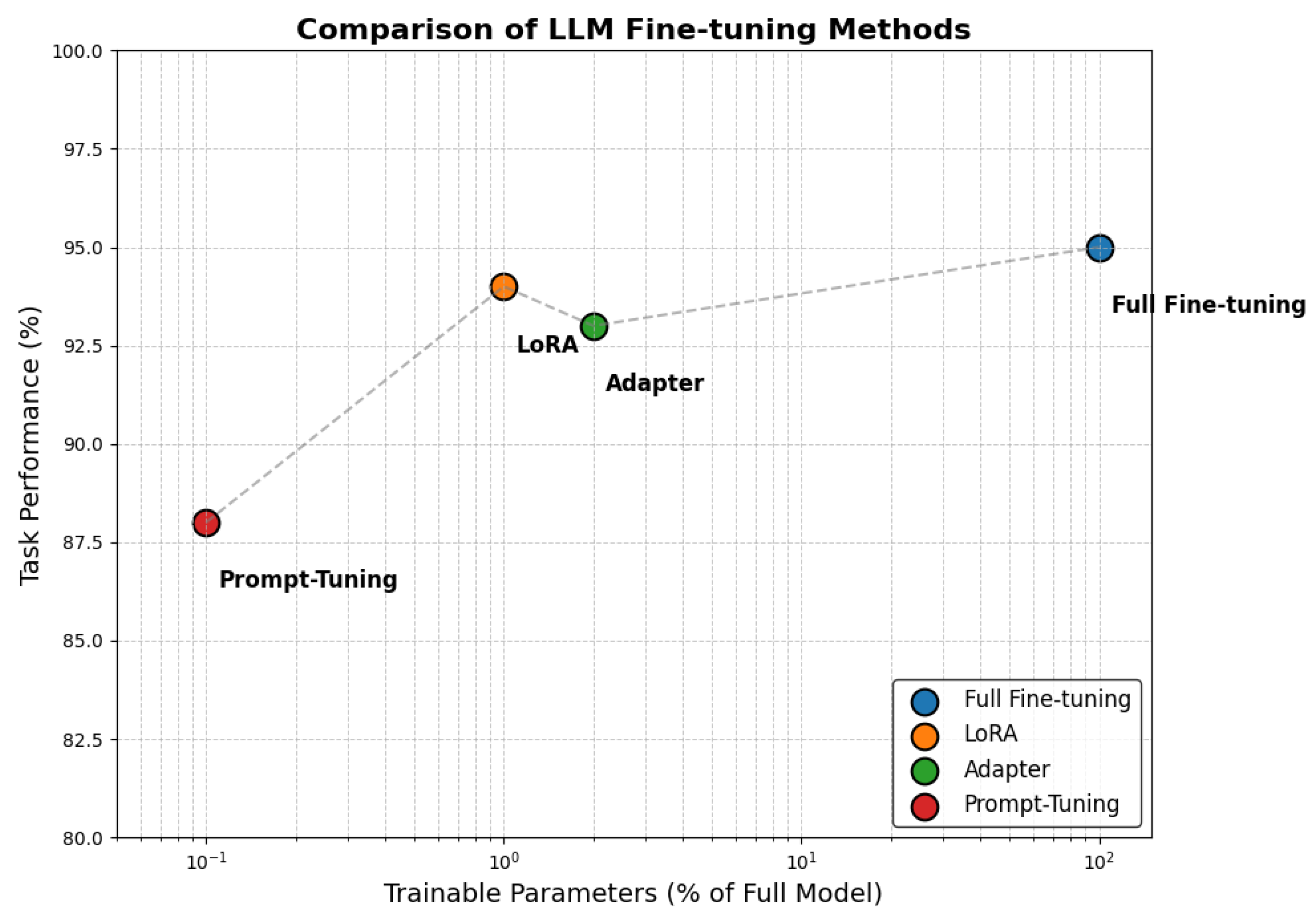

- Collaborative Fine-Tuning (FL + PEFT): FL is used to fine-tune this LLM across the plants using their local IoT sensor data and maintenance logs [60]. To manage resources and communication, PEFT (e.g., LoRA [16]) is employed. Only the small LoRA adapter updates are shared with a central FL server (or aggregated in a decentralized manner [63]), which preserves privacy with respect to raw data and detailed operational parameters [54].

- Intelligence & Action (LLM + IoT): The fine-tuned LLM (potentially deployed at edge servers within each plant [13]) analyzes incoming IoT data streams and logs in near real-time. It identifies complex failure precursors missed by simpler models, correlates sensor data with log entries, predicts remaining useful life, and generates concise, human-readable alerts and maintenance recommendations for specific machines [99]. These alerts can be integrated directly into the plant's maintenance workflow system (potentially as an IoT actuation).
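The server-side step in this workflow, combining LoRA adapters from several plants, can be sketched as a FedAvg-style weighted average applied to each adapter factor. The example below is a minimal sketch under stated assumptions: each plant uploads one rank-r pair (B, A) for a single adapted matrix, the weights stand in for hypothetical local sample counts, and matrices are plain Python lists. It also surfaces a subtlety worth keeping in mind: averaging B and A separately does not, in general, equal averaging the updates ΔW = BA that each plant actually learned.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def matmul(x: Matrix, y: Matrix) -> Matrix:
    """Plain matrix product x @ y."""
    inner = len(y)
    return [[sum(x[i][k] * y[k][j] for k in range(inner)) for j in range(len(y[0]))]
            for i in range(len(x))]


def weighted_mean(mats: Sequence[Matrix], weights: Sequence[float]) -> Matrix:
    """Element-wise weighted average of equally shaped matrices."""
    total = sum(weights)
    rows, cols = len(mats[0]), len(mats[0][0])
    return [[sum(w * m[i][j] for m, w in zip(mats, weights)) / total for j in range(cols)]
            for i in range(rows)]


def aggregate_lora(adapters: Sequence[Tuple[Matrix, Matrix]],
                   weights: Sequence[float]) -> Tuple[Matrix, Matrix]:
    """FedAvg-style aggregation of LoRA adapters: average the B and A factors independently."""
    b_avg = weighted_mean([b for b, _ in adapters], weights)
    a_avg = weighted_mean([a for _, a in adapters], weights)
    return b_avg, a_avg


# Two hypothetical plants, rank-1 adapters on a 2x2 weight, equal weights.
plant_1 = ([[1.0], [0.0]], [[1.0, 0.0]])  # (B, A)
plant_2 = ([[0.0], [1.0]], [[0.0, 1.0]])
weights = [1.0, 1.0]

b_avg, a_avg = aggregate_lora([plant_1, plant_2], weights)
print(matmul(b_avg, a_avg))  # [[0.25, 0.25], [0.25, 0.25]]
print(weighted_mean([matmul(b, a) for b, a in (plant_1, plant_2)], weights))  # [[0.5, 0.0], [0.0, 0.5]]
```

Federated LoRA variants handle this mismatch in different ways (for example, by freezing one of the two factors or by aggregating the products); which option suits a given IIoT deployment is a design choice that this sketch does not settle.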

5.6. Challenges Arising from the Synergy

5.7. Concluding Remarks on Synergy

6. Key Challenges and Mitigation Strategies

6.1. Resource Constraints

6.2. Communication Overhead

6.3. Data Heterogeneity and Fairness

6.4. Privacy and Security Risks

6.5. Scalability and On-Demand Deployment

7. Research Gaps and Future Directions

8. Conclusions

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| CFL | Centralized Federated Learning |
| DFL | Decentralized Federated Learning |
| DP | Differential Privacy |
| FL | Federated Learning |
| GDPR | General Data Protection Regulation |
| HE | Homomorphic Encryption |
| HIPAA | Health Insurance Portability and Accountability Act |
| IIoT | Industrial Internet of Things |
| IoT | Internet of Things |
| KD | Knowledge Distillation |
| LLM | Large Language Model |
| LoRA | Low-Rank Adaptation |
| Non-IID | Non-Independent and Identically Distributed |
| PEFT | Parameter-Efficient Fine-Tuning |
| PET | Privacy-Enhancing Technology |
| PFL | Personalized Federated Learning |
| PQC | Post-Quantum Cryptography |
| SFL | Split Federated Learning |
| SMPC | Secure Multi-Party Computation |
| TEE | Trusted Execution Environment |
| ZKP | Zero-Knowledge Proof |

References

- Brown, T.B.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models are Few-Shot Learners. In Proceedings of the Advances in Neural Information Processing Systems 33 (NeurIPS 2020); Larochelle, H.; Ranzato, M.; Hadsell, R.; Balcan, M.F.; Lin, H., Eds.; Curran Associates, Inc., 2020; pp. 1877–1901.

- Touvron, H.; Lavril, T.; Izacard, G.; Martinet, X.; Lachaux, M.A.; Lacroix, T.; Rozière, B.; Goyal, N.; Hambro, E.; Azhar, F.; et al. LLaMA: Open and Efficient Foundation Language Models. arXiv preprint arXiv:2302.13971, 2023.

- Chen, X.; Wu, W.; Li, Z.; Li, L.; Ji, F. LLM-Empowered IoT for 6G Networks: Architecture, Challenges, and Solutions. arXiv preprint arXiv:2503.13819, 2025.

- Kaplan, J.; McCandlish, S.; Henighan, T.; Brown, T.B.; Chess, B.; Child, R.; Gray, S.; Radford, A.; Wu, J.; Amodei, D. Scaling Laws for Neural Language Models. arXiv preprint arXiv:2001.08361, 2020.

- Weidinger, L.; Mellor, J.; Rauh, M.; Griffin, C.; Uesato, J.; Huang, P.S.; Cheng, M.; Glaese, M.; Balle, B.; Kasirzadeh, A.; et al. Ethical and social risks of harm from Language Models. arXiv preprint arXiv:2112.04359, 2021.

- Mao, Y.; You, C.; Zhang, J.; Huang, K.; Letaief, K.B. A survey on mobile edge computing: The communication perspective. IEEE Communications Surveys & Tutorials 2017, 19, 2322–2358.

- Wang, J.; Liu, Z.; Yang, X.; Li, M.; Lyu, Z. The Internet of Things under Federated Learning: A Review of the Latest Advances and Applications. Computers, Materials and Continua 2025, 82, 1–39.

- Villalobos, P.; Sevilla, J.; Heim, L.; Besiroglu, T.; Hobbhahn, M.; Ho, A. Will We Run Out of Data? An Analysis of the Limits of Scaling Datasets in Machine Learning. arXiv preprint arXiv:2211.04325, 2022.

- McMahan, B.; Moore, E.; Ramage, D.; Hampson, S.; y Arcas, B.A. Communication-efficient learning of deep networks from decentralized data. In Proceedings of Artificial Intelligence and Statistics (AISTATS); PMLR, 2017; pp. 1273–1282.

- Cheng, Y.; Zhang, W.; Zhang, Z.; Zhang, C.; Wang, S.; Mao, S. Towards Federated Large Language Models: Motivations, Methods, and Future Directions. IEEE Communications Surveys & Tutorials.

- Li, K.; Yuan, X.; Zheng, J.; Ni, W.; Dressler, F.; Jamalipour, A. Leverage Variational Graph Representation for Model Poisoning on Federated Learning. IEEE Transactions on Neural Networks and Learning Systems 2025, 36, 116–128.

- Wevolver. Chapter 5: The Future of Edge AI, 2025. Available online: https://www.wevolver.com/article/2025-edge-ai-technology-report/the-future-of-edge-ai.

- Qu, Y.; Ding, M.; Sun, N.; Thilakarathna, K.; Zhu, T.; Niyato, D. The frontier of data erasure: Machine unlearning for large language models, 2024.

- Adam, M.; Baroud, U. Federated Learning For IoT: Applications, Trends, Taxonomy, Challenges, Current Solutions, and Future Directions. IEEE Open Journal of the Communications Society 2024.

- Friha, O.; Ferrag, M.A.; Kantarci, B.; Cakmak, B.; Ozgun, A.; Ghoualmi-Zine, N. LLM-based edge intelligence: A comprehensive survey on architectures, applications, security and trustworthiness. IEEE Open Journal of the Communications Society 2024.

- Hu, E.J.; Shen, Y.; Wallis, P.; Allen-Zhu, Z.; Li, Y.; Wang, S.; Wang, L.; Chen, W. LoRA: Low-rank adaptation of large language models. arXiv preprint arXiv:2106.09685, 2021.

- Lin, Z.; Hu, X.; Zhang, Y.; Chen, Z.; Fang, Z.; Chen, X.; Li, A.; Vepakomma, P.; Gao, Y. SplitLoRA: A split parameter-efficient fine-tuning framework for large language models, 2024.

- Wu, W.; Li, M.; Qu, K.; Zhou, C.; Shen, X.; Zhuang, W.; Li, X.; Shi, W. Split learning over wireless networks: Parallel design and resource management. IEEE Journal on Selected Areas in Communications 2023, 41, 1051–1066.

- Chen, H.Y.; Tu, C.H.; Li, Z.; Shen, H.W.; Chao, W.L. On the importance and applicability of pre-training for federated learning. arXiv preprint arXiv:2206.11488, 2022.

- Khan, L.U.; Saad, W.; Han, Z.; Hossain, E.; Hong, C.S. Federated learning for internet of things: Recent advances, taxonomy, and open challenges. IEEE Communications Surveys & Tutorials 2021, 23, 1759–1799.

- Nguyen, D.C.; Ding, M.; Pathirana, P.N.; Seneviratne, A.; Li, J.; Poor, H.V. Federated learning for internet of things: A comprehensive survey. IEEE Communications Surveys & Tutorials 2021, 23, 1622–1658.

- Liu, M.; Ho, S.; Wang, M.; Gao, L.; Jin, Y.; Zhang, H. Federated learning meets natural language processing: A survey. arXiv preprint arXiv:2107.12603, 2021.

- Zhuang, W.; Chen, C.; Lyu, L. When foundation model meets federated learning: Motivations, challenges, and future directions. arXiv preprint arXiv:2306.15546, 2023.

- Pfeiffer, K.; Rapp, M.; Khalili, R.; Henkel, J. Federated learning for computationally constrained heterogeneous devices: A survey. ACM Computing Surveys 2023, 55, 1–27.

- Xu, M.; Du, H.; Niyato, D.; Kang, J.; Xiong, Z.; Mao, S.; Han, Z.; Jamalipour, A.; Kim, D.I.; Shen, X.; et al. Unleashing the power of edge-cloud generative AI in mobile networks: A survey of AIGC services. IEEE Communications Surveys & Tutorials 2024, 26, 1127–1170.

- Gong, X. Delay-optimal distributed edge computing in wireless edge networks. In Proceedings of IEEE INFOCOM 2020, IEEE Conference on Computer Communications; IEEE, 2020; pp. 2629–2638.

- Vaswani, A.; et al. Attention is all you need. Advances in Neural Information Processing Systems 2017, 30.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805, 2018.

- Ouyang, L.; Wu, J.; Jiang, X.; Almeida, D.; Wainwright, C.L.; Mishkin, P.; Zhang, C.; Agarwal, S.; Slama, K.; Ray, A.; et al. Training Language Models to Follow Instructions with Human Feedback. In Proceedings of the Advances in Neural Information Processing Systems 35 (NeurIPS 2022); Koyejo, S.; Mohamed, S.; Agarwal, A.; Belgrave, D.; Cho, K.; Oh, A., Eds.; Curran Associates, Inc., 2022; pp. 27730–27744.

- Carlini, N.; Nasr, M.; Choquette-Choo, C.A.; Jagielski, M.; Gao, I.; Koh, P.W.W.; Ippolito, D.; Tramer, F.; Schmidt, L. Are aligned neural networks adversarially aligned? 2023.

- Wu, N.; Yuan, X.; Wang, S.; Hu, H.; Xue, M. Cardinality Counting in "Alcatraz": A Privacy-aware Federated Learning Approach. In Proceedings of the ACM Web Conference 2024; 2024; pp. 3076–3084.

- Hu, S.; Yuan, X.; Ni, W.; Wang, X.; Hossain, E.; Vincent Poor, H. OFDMA-F²L: Federated Learning With Flexible Aggregation Over an OFDMA Air Interface. IEEE Transactions on Wireless Communications 2024, 23, 6793–6807.

- Bhavsar, M.; Bekele, Y.; Roy, K.; Kelly, J.; Limbrick, D. FL-IDS: Federated learning-based intrusion detection system using edge devices for transportation IoT. IEEE Access 2024.

- Tian, Y.; Wang, J.; Wang, Y.; Zhao, C.; Yao, F.; Wang, X. Federated vehicular transformers and their federations: Privacy-preserving computing and cooperation for autonomous driving. IEEE Transactions on Intelligent Vehicles 2022, 7, 456–465.

- Beltrán, E.T.M.; Pérez, M.Q.; Sánchez, P.M.S.; Bernal, S.L.; Bovet, G.; Pérez, M.G.; Pérez, G.M.; Celdrán, A.H. Decentralized federated learning: Fundamentals, state of the art, frameworks, trends, and challenges. IEEE Communications Surveys & Tutorials 2023, 25, 2983–3013.

- Witt, L.; Heyer, M.; Toyoda, K.; Samek, W.; Li, D. Decentral and incentivized federated learning frameworks: A systematic literature review. IEEE Internet of Things Journal 2022, 10, 3642–3663.

- Li, T.; Sahu, A.K.; Talwalkar, A.; Smith, V. Federated learning: Challenges, methods, and future directions. IEEE Signal Processing Magazine 2020, 37, 50–60.

- Dwork, C.; McSherry, F.; Nissim, K.; Smith, A. Calibrating noise to sensitivity in private data analysis. In Proceedings of the Third Theory of Cryptography Conference (TCC 2006), New York, NY, USA, 4–7 March 2006; Springer, 2006; pp. 265–284.

- Shan, B.; Yuan, X.; Ni, W.; Wang, X.; Liu, R.P.; Dutkiewicz, E. Preserving the privacy of latent information for graph-structured data. IEEE Transactions on Information Forensics and Security 2023, 18, 5041–5055.

- Ragab, M.; Ashary, E.B.; Alghamdi, B.M.; Aboalela, R.; Alsaadi, N.; Maghrabi, L.A.; Allehaibi, K.H. Advanced artificial intelligence with federated learning framework for privacy-preserving cyberthreat detection in IoT-assisted sustainable smart cities. Scientific Reports 2025, 15, 4470.

- Abadi, M.; Chu, A.; Goodfellow, I.; McMahan, H.B.; Mironov, I.; Talwar, K.; Zhang, L. Deep learning with differential privacy. In Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security, 2016, pp.

- Ahmadi, K.; et al. An Interactive Framework for Implementing Privacy-Preserving Federated Learning: Experiments on Large Language Models. ResearchGate preprint, 2025.

- Basu, P.; Roy, T.S.; Naidu, R.; Muftuoglu, Z.; Singh, S.; Mireshghallah, F. Benchmarking differential privacy and federated learning for BERT models. arXiv preprint arXiv:2106.13973, 2021.

- Hu, S.; Yuan, X.; Ni, W.; Wang, X.; Hossain, E.; Vincent Poor, H. Differentially Private Wireless Federated Learning With Integrated Sensing and Communication. IEEE Transactions on Wireless Communications.

- Yuan, X.; Ni, W.; Ding, M.; Wei, K.; Li, J.; Poor, H.V. Amplitude-Varying Perturbation for Balancing Privacy and Utility in Federated Learning. IEEE Transactions on Information Forensics and Security 2023, 18, 1884–1897.

- Paillier, P. Public-key cryptosystems based on composite degree residuosity classes. In Proceedings of the International Conference on the Theory and Applications of Cryptographic Techniques; Springer, 1999; pp. 223–238.

- Shamir, A. How to share a secret. Communications of the ACM 1979, 22, 612–613.

- Yao, A.C. Protocols for secure computations. In Proceedings of the 23rd Annual Symposium on Foundations of Computer Science (SFCS 1982); IEEE, 1982; pp. 160–164.

- Bonawitz, K.; Ivanov, V.; Kreuter, B.; Marcedone, A.; McMahan, H.B.; Patel, S.; Ramage, D.; Segal, A.; Seth, K. Practical secure aggregation for privacy-preserving machine learning. In Proceedings of the 2017 ACM SIGSAC Conference on Computer and Communications Security; 2017; pp. 1175–1191.

- Che, T.; Liu, J.; Zhou, Y.; Ren, J.; Zhou, J.; Sheng, V.S.; Dai, H.; Dou, D. Federated learning of large language models with parameter-efficient prompt tuning and adaptive optimization. arXiv preprint arXiv:2310.15080, 2023.

- Lyu, L.; Yu, H.; Ma, X.; Chen, C.; Sun, L.; Zhao, J.; Yang, Q.; Philip, S.Y. Privacy and robustness in federated learning: Attacks and defenses. IEEE Transactions on Neural Networks and Learning Systems 2022.

- Xi, Z.; Chen, W.; Guo, X.; He, W.; Ding, Y.; Hong, B.; Zhang, M.; Wang, J.; Jin, S.; Zhou, E.; et al. The rise and potential of large language model based agents: A survey. Science China Information Sciences 2025, 68, 121101.

- Malaviya, S.; Shukla, M.; Lodha, S. Reducing communication overhead in federated learning for pre-trained language models using parameter-efficient finetuning. In Proceedings of the Conference on Lifelong Learning Agents; PMLR, 2023; pp. 456–469.

- Jiang, J.; Jiang, H.; Ma, Y.; Liu, X.; Fan, C. Low-parameter federated learning with large language models, 2024.

- Gu, Y.; Dong, L.; Wei, F.; Huang, M. MiniLLM: Knowledge distillation of large language models. arXiv preprint arXiv:2306.08543, 2023.

- Naik, K. LLM fine tuning with LoRA, 2025.

- Dettmers, T.; Pagnoni, A.; Holtzman, A.; Zettlemoyer, L. QLoRA: Efficient finetuning of quantized LLMs. Advances in Neural Information Processing Systems 2023, 36, 10088–10115.

- Frantar, E.; Alistarh, D. SparseGPT: Massive Language Models Can Be Accurately Pruned in One-Shot. In Proceedings of the 40th International Conference on Machine Learning (ICML); Krause, A.; Brunskill, E.; Cho, K.; Engelhardt, B.; Sabato, S.; Scarlett, J., Eds.; Honolulu, HI, USA, July 2023; Vol. 202, Proceedings of Machine Learning Research, pp.

- Xiao, G.; Lin, J.; Seznec, M.; Wu, H.; Demouth, J.; Han, S. SmoothQuant: Accurate and Efficient Post-Training Quantization for Large Language Models. In Proceedings of the 40th International Conference on Machine Learning (ICML); Krause, A.; Brunskill, E.; Cho, K.; Engelhardt, B.; Sabato, S.; Scarlett, J., Eds.; Honolulu, HI, USA, July 2023; Vol. 202, Proceedings of Machine Learning Research, pp.

- Tian, Y.; Wan, Y.; Lyu, L.; Yao, D.; Jin, H.; Sun, L. FedBERT: When federated learning meets pre-training. ACM Transactions on Intelligent Systems and Technology (TIST) 2022, 13, 1–26. [Google Scholar] [CrossRef]

- Liu, X.Y.; Zhu, R.; Zha, D.; Gao, J.; Zhong, S.; White, M.; Qiu, M. Differentially private low-rank adaptation of large language model using federated learning, 2025.

- Zhang, Z.; Yang, Y.; Dai, Y.; Wang, Q.; Yu, Y.; Qu, L.; Xu, Z. FedPETuning: When federated learning meets the parameter-efficient tuning methods of pre-trained language models. In Proceedings of the Annual Meeting of the Association of Computational Linguistics 2023. Association for Computational Linguistics (ACL); 2023; pp. 9963–9977. [Google Scholar]

- Ghiasvand, S.; Alizadeh, M.; Pedarsani, R. Decentralized Low-Rank Fine-Tuning of Large Language Models, 2025.

- Deng, Y.; Ren, J.; Tang, C.; Lyu, F.; Liu, Y.; Zhang, Y. A hierarchical knowledge transfer framework for heterogeneous federated learning. In Proceedings of the IEEE INFOCOM 2023-IEEE Conference on Computer Communications. IEEE; 2023; pp. 1–10. [Google Scholar]

- Fallah, A.; Mokhtari, A.; Ozdaglar, A. Personalized Federated Learning with Theoretical Guarantees: A Model-Agnostic Meta-Learning Approach. In Proceedings of the Advances in Neural Information Processing Systems 33 (NeurIPS 2020); Larochelle, H.; Ranzato, M.; Hadsell, R.; Balcan, M.F.; Lin, H., Eds. Curran Associates, Inc. 2020; pp. 3557–3568. [Google Scholar]

- Collins, L.; Wu, S.; Oh, S.; Sim, K.C. PROFIT: Benchmarking Personalization and Robustness Trade-off in Federated Prompt Tuning. arXiv preprint arXiv:2310.0 4627, arXiv:2310.04627, 2023, [arXiv:cs.LG/2310.04627]2023, [arXiv:csLG/231004627]. [Google Scholar]

- Yang, F.E.; Wang, C.Y.; Wang, Y.C.F. Efficient model personalization in federated learning via client-specific prompt generation. In Proceedings of the Proceedings of the IEEE/CVF International Conference on Computer Vision, 2023, pp.

- Yi, L.; Yu, H.; Wang, G.; Liu, X.; Li, X. pFedLoRA: model-heterogeneous personalized federated learning with LoRA tuning. arXiv preprint arXiv:2310.13283, arXiv:2310.13283 2023.

- Cho, Y.J.; Liu, L.; Xu, Z.; Fahrezi, A.; Joshi, G. Heterogeneous lora for federated fine-tuning of on-device foundation models. arXiv preprint arXiv:2401.06432, arXiv:2401.06432 2024.

- Su, S.; Li, B.; Xue, X. Fedra: A random allocation strategy for federated tuning to unleash the power of heterogeneous clients. In Proceedings of the European Conference on Computer Vision. Springer; 2024; pp. 342–358. [Google Scholar]

- Guo, T.; Guo, S.; Wang, J.; Tang, X.; Xu, W. Promptfl: Let federated participants cooperatively learn prompts instead of models–federated learning in age of foundation model. IEEE Transactions on Mobile Computing 2023, 23, 5179–5194. [Google Scholar] [CrossRef]

- Zhao, H.; Du, W.; Li, F.; Li, P.; Liu, G. Fedprompt: Communication-efficient and privacy-preserving prompt tuning in federated learning. In Proceedings of the ICASSP 2023-2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE; 2023; pp. 1–5. [Google Scholar]

- Chen, Y.; Chen, Z.; Wu, P.; Yu, H. FedOBD: Opportunistic block dropout for efficiently training large-scale neural networks through federated learning. arXiv preprint arXiv:2208.05174, arXiv:2208.05174 2022.

- Sun, G.; Mendieta, M.; Luo, J.; Wu, S.; Chen, C. Fedperfix: Towards partial model personalization of vision transformers in federated learning. In Proceedings of the Proceedings of the IEEE/CVF international conference on computer vision, 2023, pp.

- Chen, D.; Yao, L.; Gao, D.; Ding, B.; Li, Y. Efficient personalized federated learning via sparse model-adaptation. In Proceedings of the International conference on machine learning. PMLR; 2023; pp. 5234–5256. [Google Scholar]

- Cho, Y.J.; Manoel, A.; Joshi, G.; Sim, R.; Dimitriadis, D. Heterogeneous ensemble knowledge transfer for training large models in federated learning. arXiv preprint arXiv:2204.12703, arXiv:2204.12703 2022.

- Sui, D.; Chen, Y.; Zhao, J.; Jia, Y.; Xie, Y.; Sun, W. Feded: Federated learning via ensemble distillation for medical relation extraction. In Proceedings of the Proceedings of the 2020 conference on empirical methods in natural language processing (EMNLP), 2020, pp.

- Mei, H.; Cai, D.; Wu, Y.; Wang, S.; Xu, M. A Survey of Backpropagation-free Training For LLMS, 2024.

- Xu, M.; Wu, Y.; Cai, D.; Li, X.; Wang, S. Federated Fine-tuning of Billion-sized Language Models Across Mobile Devices. arXiv preprint arXiv:2308.1 3894, arXiv:2308.13894, 2023, [arXiv:cs.LG/2308.13894]2023, [arXiv:csLG/230813894]. [Google Scholar]

- Qin, Z.; Chen, D.; Qian, B.; Ding, B.; Li, Y.; Deng, S. Federated full-parameter tuning of billion-sized language models with communication cost under 18 kilobytes. arXiv preprint arXiv:2312.06353, arXiv:2312.06353 2023.

- Sun, J.; Xu, Z.; Yin, H.; Yang, D.; Xu, D.; Chen, Y.; Roth, H.R. Fedbpt: Efficient federated black-box prompt tuning for large language models. arXiv preprint arXiv:2310.01467, arXiv:2310.01467 2023.

- Pau, D.P.; Aymone, F.M. Suitability of forward-forward and pepita learning to mlcommons-tiny benchmarks. In Proceedings of the 2023 IEEE International Conference on Omni-layer Intelligent Systems (COINS). IEEE; 2023; pp. 1–6. [Google Scholar]

- He, C.; Li, S.; So, J.; Zeng, X.; Zhang, M.; Wang, H.; Wang, X.; Vepakomma, P.; Singh, A.; Qiu, H.; et al. FedML: A Research Library and Benchmark for Federated Machine Learning. arXiv preprint arXiv:2007.1 3518, arXiv:2007.13518, 2020, [arXiv:cs.LG/2007.13518]2020, [arXiv:csLG/200713518]. [Google Scholar]

- Beutel, D.J.; Topal, T.; Mathur, A.; Qiu, X.; Fernandez-Marques, J.; Gao, Y.; Sani, L.; Li, K.H.; Parcollet, T.; de Gusmão, P.P.B.; et al. Flower: A Friendly Federated Learning Research Framework. arXiv preprint arXiv:2007.1 4390, arXiv:2007.14390, 2020, [arXiv:cs.LG/2007.14390]2020, [arXiv:csLG/200714390]. [Google Scholar]

- Arisdakessian, S.; Wahab, O.A.; Mourad, A.; Otrok, H.; Guizani, M. A survey on IoT intrusion detection: Federated learning, game theory, social psychology, and explainable AI as future directions, 2022.

- Fan, T.; Kang, Y.; Ma, G.; Chen, W.; Wei, W.; Fan, L.; Yang, Q. FATE-LLM: A Industrial Grade Federated Learning Framework for Large Language Models. arXiv preprint arXiv:2310.10049, 2023.

- Kuang, W.; Qian, B.; Li, Z.; Chen, D.; Gao, D.; Pan, X.; Xie, Y.; Li, Y.; Ding, B.; Zhou, J. FederatedScope-LLM: A comprehensive package for fine-tuning large language models in federated learning. In Proceedings of the 30th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, 2024, pp.

- Zhang, J.; Vahidian, S.; Kuo, M.; Li, C.; Zhang, R.; Yu, T.; Wang, G.; Chen, Y. Towards Building the FederatedGPT: Federated Instruction Tuning. In Proceedings of ICASSP, 2024.

- Lin, B.Y.; He, C.; Zeng, Z.; Wang, H.; Huang, Y.; Dupuy, C.; Gupta, R.; Soltanolkotabi, M.; Ren, X.; Avestimehr, S. FedNLP: Benchmarking Federated Learning Methods for Natural Language Processing Tasks. arXiv preprint arXiv:2104.08815, 2021.

- Ye, R.; Wang, W.; Chai, J.; Li, D.; Li, Z.; Xu, Y.; Du, Y.; Wang, Y.; Chen, S. OpenFedLLM: Training large language models on decentralized private data via federated learning. In Proceedings of the 30th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, 2024, pp.

- Chakshu, N.K.; Nithiarasu, P. Orbital learning: a novel, actively orchestrated decentralised learning for healthcare. Scientific Reports 2024, 14, 10459.

- Nguyen, J.; Malik, K.; Zhan, H.; Yousefpour, A.; Rabbat, M.; Malek, M.; Huba, D. Federated learning with buffered asynchronous aggregation. In Proceedings of the International Conference on Artificial Intelligence and Statistics. PMLR; 2022; pp. 3581–3607.

- Charles, Z.; Mitchell, N.; Pillutla, K.; Reneer, M.; Garrett, Z. Towards federated foundation models: Scalable dataset pipelines for group-structured learning. Advances in Neural Information Processing Systems 2023, 36, 32299–32327.

- Zhang, T.; Feng, T.; Alam, S.; Dimitriadis, D.; Lee, S.; Zhang, M.; Narayanan, S.S.; Avestimehr, S. GPT-FL: Generative pre-trained model-assisted federated learning. arXiv preprint arXiv:2306.02210, 2023.

- Wang, B.; Zhang, Y.J.; Cao, Y.; Li, B.; McMahan, H.B.; Oh, S.; Xu, Z.; Zaheer, M. Can public large language models help private cross-device federated learning? arXiv preprint arXiv:2305.12132, 2023.

- Cui, C.; Ma, Y.; Cao, X.; Ye, W.; Zhou, Y.; Liang, K.; Chen, J.; Lu, J.; Yang, Z.; Liao, K.D.; et al. A Survey on Multimodal Large Language Models for Autonomous Driving. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, January 2024.

- Pandya, S.; Srivastava, G.; Jhaveri, R.; Babu, M.R.; Bhattacharya, S.; Maddikunta, P.K.R.; Mastorakis, S.; Piran, M.J.; Gadekallu, T.R. Federated learning for smart cities: A comprehensive survey. Sustainable Energy Technologies and Assessments 2023, 55, 102987.

- Wang, L.; Zhang, X.; Su, H.; Zhu, J. A comprehensive survey of continual learning: Theory, method and application. IEEE Transactions on Pattern Analysis and Machine Intelligence 2024.

- Xia, Y.; Chen, Y.; Zhao, Y.; Kuang, L.; Liu, X.; Hu, J.; Liu, Z. FCLLM-DT: Enpowering Federated Continual Learning with Large Language Models for Digital Twin-based Industrial IoT. IEEE Internet of Things Journal 2024.

- Fang, M.; Cao, X.; Jia, J.; Gong, N.Z. Local Model Poisoning Attacks to Byzantine-Robust Federated Learning. In Proceedings of the 29th USENIX Security Symposium (USENIX Security 20), Virtual Event, August 2020.

- Melis, L.; Song, C.; De Cristofaro, E.; Shmatikov, V. Exploiting unintended feature leakage in collaborative learning. In Proceedings of the 2019 IEEE Symposium on Security and Privacy (SP). IEEE; 2019; pp. 691–706.

- Yang, Y.; Dang, S.; Zhang, Z. An adaptive compression and communication framework for wireless federated learning. IEEE Transactions on Mobile Computing 2024.

- Hu, C.H.; Chen, Z.; Larsson, E.G. Scheduling and aggregation design for asynchronous federated learning over wireless networks. IEEE Journal on Selected Areas in Communications 2023, 41, 874–886.

- Vahidian, S.; Morafah, M.; Chen, C.; Shah, M.; Lin, B. Rethinking data heterogeneity in federated learning: Introducing a new notion and standard benchmarks. IEEE Transactions on Artificial Intelligence 2023, 5, 1386–1397.

- Zhu, L.; Liu, Z.; Han, S. Deep Leakage from Gradients. In Proceedings of the Advances in Neural Information Processing Systems 32 (NeurIPS 2019); Wallach, H.; Larochelle, H.; Beygelzimer, A.; d’Alché Buc, F.; Fox, E.; Garnett, R., Eds. Curran Associates, Inc. 2019; pp. 14774–14784.

- Bagdasaryan, E.; Veit, A.; Hua, Y.; Estrin, D.; Shmatikov, V. How to Backdoor Federated Learning. In Proceedings of the 23rd International Conference on Artificial Intelligence and Statistics (AISTATS); Chiappa, S.; Calandra, R., Eds., Palermo, Italy, August 2020; Vol. 108, Proceedings of Machine Learning Research, pp.

- Blanchard, P.; El Mhamdi, E.M.; Guerraoui, R.; Stainer, J. Machine Learning with Adversaries: Byzantine Tolerant Gradient Descent. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017); Guyon, I.; Luxburg, U.V.; Bengio, S.; Wallach, H.; Fergus, R.; Vishwanathan, S.; Garnett, R., Eds. Curran Associates, Inc. 2017; pp. 119–129.

- Li, C.; Pang, R.; Xi, Z.; Du, T.; Ji, S.; Yao, Y.; Wang, T. An embarrassingly simple backdoor attack on self-supervised learning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 2023, pp.

- Li, X.; Wang, S.; Wu, C.; Zhou, H.; Wang, J. Backdoor threats from compromised foundation models to federated learning. arXiv preprint arXiv:2311.00144, 2023.

- Wu, C.; Li, X.; Wang, J. Vulnerabilities of foundation model integrated federated learning under adversarial threats, 2024.

- Sun, H.; Zhang, Y.; Zhuang, H.; Li, J.; Xu, Z.; Wu, L. PEAR: privacy-preserving and effective aggregation for byzantine-robust federated learning in real-world scenarios. The Computer Journal.

- Gu, Z.; Yang, Y. Detecting malicious model updates from federated learning on conditional variational autoencoder. In Proceedings of the 2021 IEEE International Parallel and Distributed Processing Symposium (IPDPS). IEEE; 2021; pp. 671–680.

- Zhang, Z.; Cao, X.; Jia, J.; Gong, N.Z. FLDetector: Defending federated learning against model poisoning attacks via detecting malicious clients. In Proceedings of the 28th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, 2022, pp.

- Huang, W.; Wang, Y.; Cheng, A.; Zhou, A.; Yu, C.; Wang, L. A fast, performant, secure distributed training framework for LLM. In Proceedings of the ICASSP 2024-2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE; 2024; pp. 4800–4804.

- Fan, Y.; Zhu, R.; Wang, Z.; Wang, C.; Tang, H.; Dong, Y.; Cho, H.; Ohno-Machado, L. ByzSFL: Achieving Byzantine-Robust Secure Federated Learning with Zero-Knowledge Proofs, 2025.

- Wang, Z.; Xu, H.; Liu, J.; Huang, H.; Qiao, C.; Zhao, Y. Resource-efficient federated learning with hierarchical aggregation in edge computing. In Proceedings of the IEEE INFOCOM 2021-IEEE Conference on Computer Communications. IEEE; 2021; pp. 1–10.

- Guerraoui, R.; Rouault, S.; et al. The Hidden Vulnerability of Distributed Learning in Byzantium. In Proceedings of the 35th International Conference on Machine Learning (ICML); Dy, J.; Krause, A., Eds., Stockholm, Sweden, July 2018; Vol. 80, Proceedings of Machine Learning Research, pp. 1863–1872.

- Li, S.; Ngai, E.C.H.; Voigt, T. An experimental study of Byzantine-robust aggregation schemes in federated learning. IEEE Transactions on Big Data 2023.

- Wu, Z.; Ling, Q.; Chen, T.; Giannakis, G.B. Federated variance-reduced stochastic gradient descent with robustness to Byzantine attacks. IEEE Transactions on Signal Processing 2020, 68, 4583–4596.

- Zhou, T.; Yan, H.; Han, B.; Liu, L.; Zhang, J. Learning a robust foundation model against clean-label data poisoning attacks at downstream tasks. Neural Networks 2024, 169, 756–763.

- Girdhar, R.; El-Nouby, A.; Liu, Z.; Singh, M.; Alwala, K.V.; Joulin, A.; Misra, I. ImageBind: One embedding space to bind them all. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2023, pp.

- Li, Y.; Wen, H.; Wang, W.; Li, X.; Yuan, Y.; Liu, G.; Liu, J.; Xu, W.; Wang, X.; Sun, Y.; et al. Personal LLM agents: Insights and survey about the capability, efficiency and security. arXiv preprint arXiv:2401.05459, 2024.

- Shenaj, D.; Toldo, M.; Rigon, A.; Zanuttigh, P. Asynchronous federated continual learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2023, pp. 5055–5063.

- Hu, Z.; Zhang, Y.; Xiao, M.; Wang, W.; Feng, F.; He, X. Exact and efficient unlearning for large language model-based recommendation, 2024.

- Patil, V.; Hase, P.; Bansal, M. Can sensitive information be deleted from LLMs? Objectives for defending against extraction attacks. arXiv preprint arXiv:2309.17410, 2023.

- Qiu, X.; Shen, W.F.; Chen, Y.; Cancedda, N.; Stenetorp, P.; Lane, N.D. PISTOL: Dataset compilation pipeline for structural unlearning of LLMs. arXiv preprint arXiv:2406.16810, 2024.

| Survey | Primary Focus | Key Strengths | Distinction from Our Work |
|---|---|---|---|
| Qu et al. [13] | MEI supporting LLMs | Deep dive into edge resource optimization (compute, comms, storage); Mobile network context (6G); Detailed edge caching/delivery for LLMs. | Focuses on infrastructure for LLMs; Less depth on FL specifics, security/trust, or the unique synergy of IoT+LLM+FL. Less emphasis on IoT data characteristics. |
| Adam et al. [14] | FL for IoT Applications | Comprehensive FL principles in IoT context; Detailed IoT application case studies; Broad FL taxonomy for IoT. | IoT application-driven; LLMs are only one emerging aspect; Less depth on LLM specifics or the challenges arising from the three-way synergy. |
| Friha et al. [15] | LLMs integrated into EI | Deep analysis of security and trustworthiness for LLM-based EI; Covers architectures, optimization, autonomy, applications broadly. | Focuses on LLM as EI component; Less depth on FL methods specifically for training LLMs on distributed IoT data. |
| Cheng et al. [10] | Federated LLMs (FL + LLM) | Exhaustive review of FL methods for LLMs (PEFT, initialization, etc.); Deep dive into privacy/robustness specific to Federated LLMs. | Focuses narrowly on FL+LLM interaction; Less emphasis on the specific IoT context (data types, device constraints) or the edge infrastructure aspects. |
| This Survey | Synergy of IoT + LLM + FL for Privacy-Preserving Edge Intelligence | Unique focus on the three-way interaction; Explicit analysis of synergistic effects (Section 5); Addresses challenges arising specifically from the integration; Compares trade-offs in the specific IoT+LLM+FL@Edge context. | Provides a holistic view of the integration, bridging gaps between surveys focused on pairwise interactions or single components. Emphasizes the unique capabilities, privacy considerations, and challenges born from the specific combination of IoT data richness, LLM intelligence, and FL’s distributed privacy paradigm within advanced edge networks. |

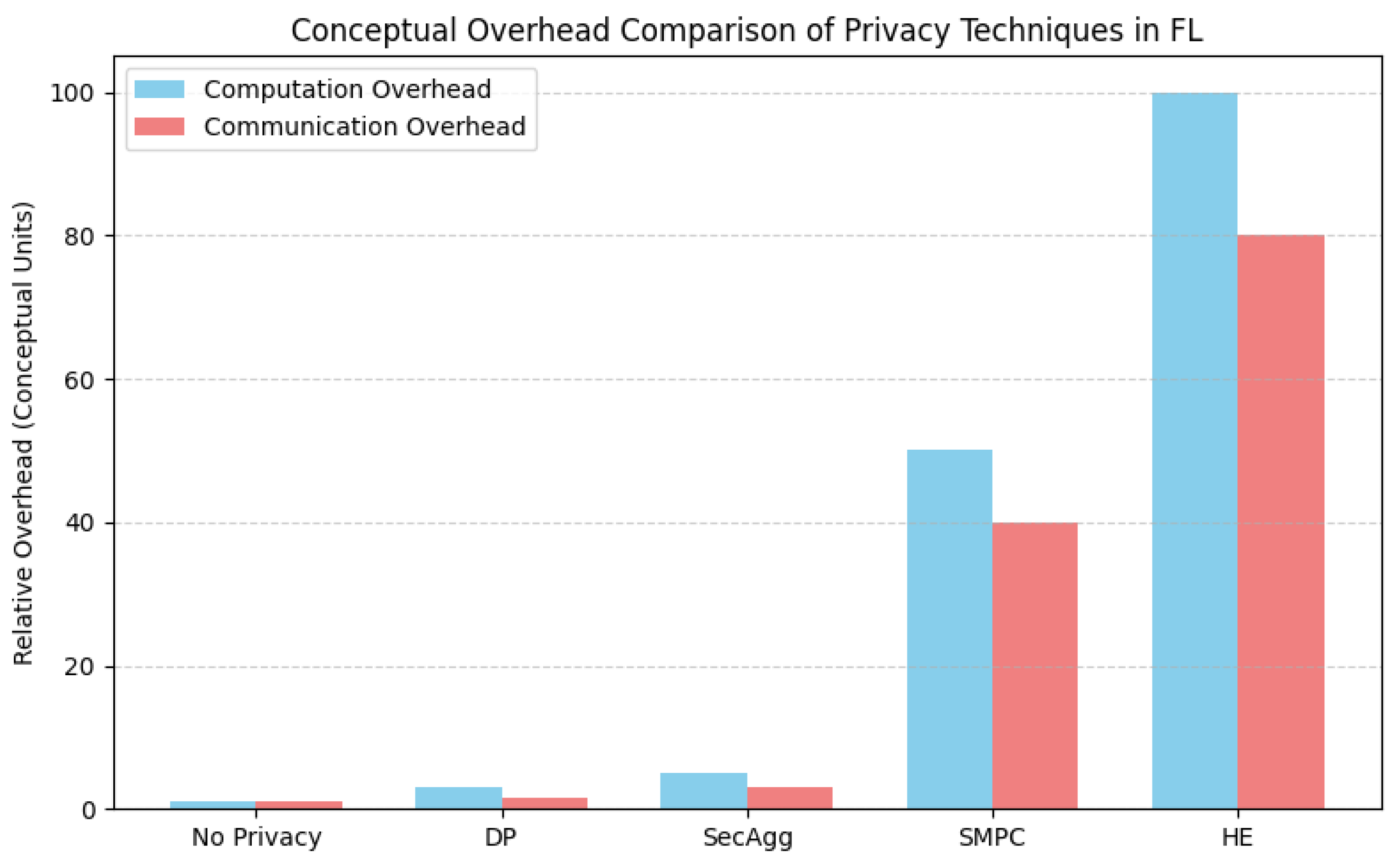

| Technique | Mechanism | Pros | Cons |

|---|---|---|---|

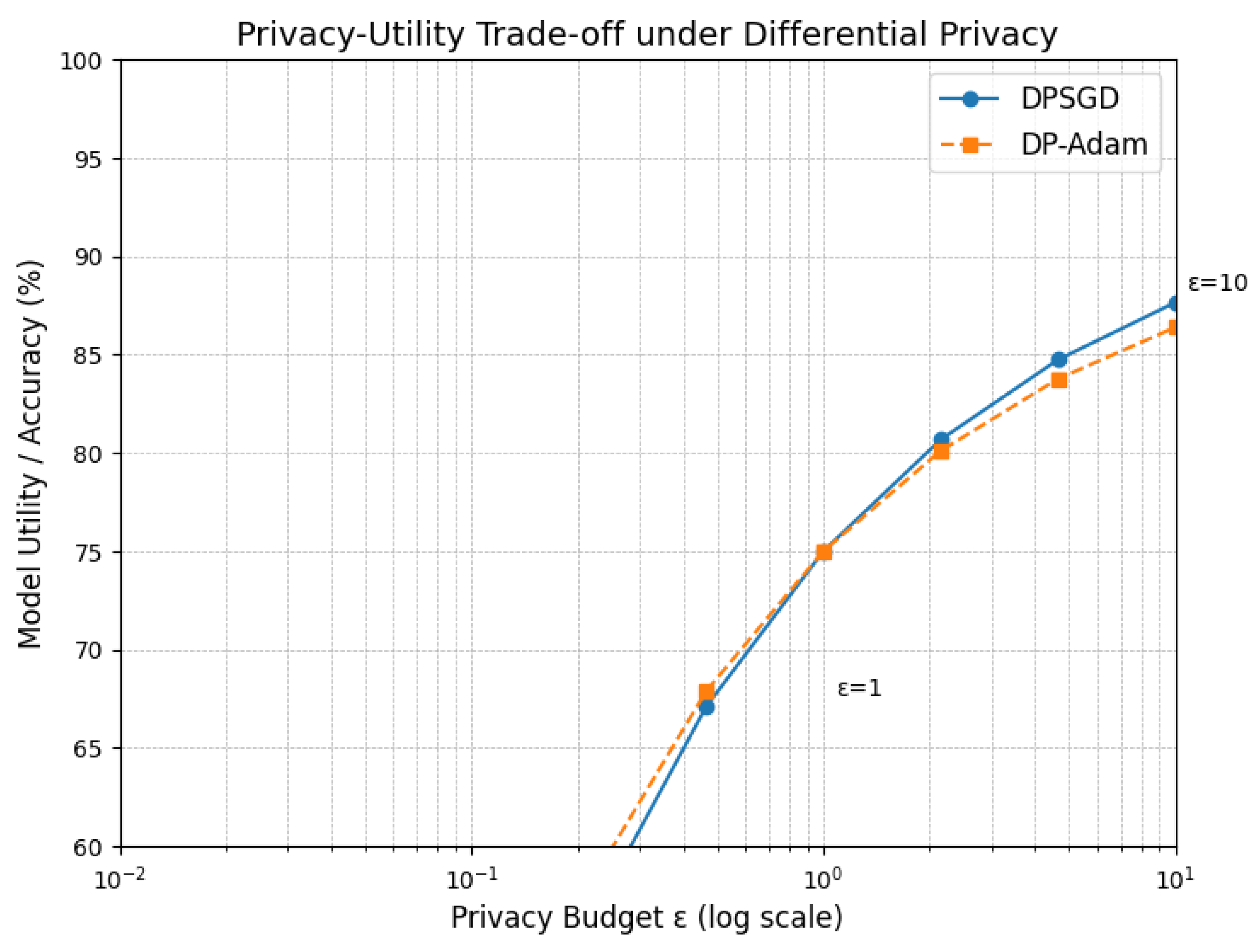

| Differential Privacy | Adds calibrated noise to gradients, updates, or data for formal -privacy guarantees. | Strong, mathematical privacy guarantees; relatively lower computational overhead than cryptographic methods. | Direct privacy-utility trade-off (noise vs. accuracy); complex privacy budget management; can impact fairness; overhead can still be significant for resource-poor IoT devices. |

| Homomorphic Encryption | Allows specific computations (e.g., addition) on encrypted data; server aggregates ciphertexts without decryption. | Strong confidentiality against the server; no impact on model accuracy (utility). | Extremely high computational overhead (encryption, decryption, operations); significant communication overhead (ciphertext size); largely impractical for direct use on most IoT devices. |

| Secure Multi-Party Computation | Enables joint computation (e.g., sum) via cryptographic protocols without parties revealing private inputs. | Strong privacy guarantees (distributed trust); no impact on model accuracy. | Requires complex multi-round interaction protocols; significant communication overhead; often assumes synchronicity or fault tolerance mechanisms, challenging in dynamic IoT. |

| Secure Aggregation | Specialized protocols (often secret sharing based) optimized for securely computing the sum/average of client updates. | More efficient (computationally and communication-wise) than general HE/SMPC for the aggregation task; widely adopted. | Protects individual updates from the server during aggregation, but not the final aggregated model from inference, nor updates during transmission without extra encryption. |
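The Secure Aggregation row rests on a simple cancellation idea that can be sketched in a few lines. In pairwise-masking schemes, every pair of clients shares a random mask that one client adds to its update and the other subtracts, so the server only ever sees masked vectors, yet their sum equals the sum of the true updates. This is a minimal sketch under loudly stated assumptions: a seeded random generator stands in for the pairwise key agreement a real protocol would use, updates are assumed to be already quantized to integers, and client dropouts (which practical protocols handle with secret sharing) are ignored. The function names are hypothetical.

```python
import random
from typing import Dict, List

MODULUS = 2**32  # all arithmetic is modulo 2^32 so masks wrap around exactly


def pairwise_masks(client_ids: List[int], dim: int, seed: int = 0) -> Dict[int, List[int]]:
    """Return the net mask each client adds to its update (hypothetical helper).

    For every pair (i, j) with i < j, a shared random vector s_ij is drawn;
    client i adds s_ij and client j subtracts it. The seeded RNG is a stand-in
    for pairwise key agreement and is an assumption made for illustration.
    """
    rng = random.Random(seed)
    net = {c: [0] * dim for c in client_ids}
    ids = sorted(client_ids)
    for a in range(len(ids)):
        for b in range(a + 1, len(ids)):
            s = [rng.randrange(MODULUS) for _ in range(dim)]
            i, j = ids[a], ids[b]
            net[i] = [(x + y) % MODULUS for x, y in zip(net[i], s)]
            net[j] = [(x - y) % MODULUS for x, y in zip(net[j], s)]
    return net


def mask_update(update: List[int], mask: List[int]) -> List[int]:
    """What a client actually sends: its quantized update plus its net mask."""
    return [(u + m) % MODULUS for u, m in zip(update, mask)]


def aggregate(masked: List[List[int]]) -> List[int]:
    """Server side: sum the masked vectors; the pairwise masks cancel in the total."""
    total = [0] * len(masked[0])
    for vec in masked:
        total = [(t + v) % MODULUS for t, v in zip(total, vec)]
    return total


if __name__ == "__main__":
    updates = {1: [3, 5, 7], 2: [10, 0, 2], 3: [1, 1, 1]}  # toy quantized updates
    masks = pairwise_masks(list(updates), dim=3, seed=42)
    sent = [mask_update(updates[c], masks[c]) for c in updates]
    print(aggregate(sent))  # equals the plain sum [14, 6, 10]
```

Because each shared mask is added exactly once and subtracted exactly once, the masks sum to zero modulo 2^32, which is why the server recovers the aggregate without seeing any individual update. As the table notes, this protects individual updates only; the aggregate itself is still revealed.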

| Approach | Mechanism | Heterogeneity Handled | Efficiency | Trade-offs |
|---|---|---|---|---|
| PEFT-based PFL (Prompts, Adapters, LoRA) | Clients train personalized PEFT components attached to a shared, frozen LLM backbone. Global aggregation might occur on these PEFT components or parts thereof [16,54,66,67,68]. | Primarily statistical (data); some methods adapt to system heterogeneity by adjusting PEFT complexity (e.g., heterogeneous LoRA ranks) [69,70]. | High communication efficiency (small updates); moderate computation (only PEFT tuning) [71]. | Personalization depth limited by PEFT capacity; potential for negative interference if global components are poorly aggregated [72]. |
| Model Decomposition / Partial Training | The global model is structurally divided; clients train only specific assigned layers or blocks [60,73,74]. | Primarily system (computation/memory); smaller parts can be assigned to weaker clients. | Reduced client computation; communication depends on the size of the trained part. | Less flexible personalization compared to PEFT; requires careful model partitioning design; potential information loss between components. |
| Knowledge Distillation (KD) based PFL | Uses outputs (logits) or intermediate features from a global "teacher" model (or an ensemble of client models) to guide the training of personalized local "student" models [75,76,77]. | Can handle model heterogeneity (different student architectures); adaptable to data heterogeneity. | Communication involves logits/features, potentially smaller than parameters; client computation depends on student model size. | Distillation process can be complex; potential privacy leakage from shared logits; the student model might not fully capture the teacher’s knowledge. |
| Meta-Learning based PFL (e.g., Reptile, MAML adaptations) | Learns a global model initialization that can be rapidly adapted (fine-tuned) to each client’s local data with a few gradient steps [65]. | Focuses on adapting to statistical (data) heterogeneity. | Communication similar to standard FL; potentially more local computation during the adaptation phase. | Can be sensitive to task diversity across clients; training the meta-model can be computationally intensive. |
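The efficiency claim for PEFT-based PFL can be made concrete with LoRA. For a frozen weight W of shape d × k, a rank-r LoRA adapter trains only A (r × k) and B (d × r), so a client uploads r(d + k) values instead of d·k. The sketch below is a hypothetical NumPy illustration, not the API of any framework cited in the table: it counts adapter parameters, aggregates client factors with sample-size-weighted FedAvg, and forms the effective weight. It assumes all clients share the same rank r (heterogeneous-rank schemes need extra handling). Note that averaging A and B separately, as done here, is not in general equal to averaging the products BA, a known source of aggregation error that some federated LoRA methods try to correct.

```python
import numpy as np


def lora_param_count(d: int, k: int, r: int) -> int:
    """Trainable values in one rank-r LoRA adapter for a d x k weight (A: r x k, B: d x r)."""
    return r * (d + k)


def fedavg_lora(client_factors, client_sizes):
    """Sample-size-weighted FedAvg applied separately to each LoRA factor.

    client_factors: list of (A, B) pairs, A with shape (r, k) and B with shape (d, r).
    Assumes every client uses the same rank r.
    """
    weights = np.asarray(client_sizes, dtype=float)
    weights = weights / weights.sum()
    A_avg = sum(w * A for w, (A, _) in zip(weights, client_factors))
    B_avg = sum(w * B for w, (_, B) in zip(weights, client_factors))
    return A_avg, B_avg


def adapted_weight(W_frozen, A, B, scale=1.0):
    """Effective weight W + scale * (B @ A); the frozen backbone W is never updated.

    LoRA commonly uses scale = alpha / r; a plain factor is used here for simplicity.
    """
    return W_frozen + scale * (B @ A)


if __name__ == "__main__":
    d, k, r = 4096, 4096, 8  # hypothetical projection size and rank
    print(d * k, lora_param_count(d, k, r))  # 16777216 65536

    rng = np.random.default_rng(0)
    factors = [(rng.normal(size=(r, 16)), rng.normal(size=(32, r))) for _ in range(3)]
    A_avg, B_avg = fedavg_lora(factors, client_sizes=[100, 50, 50])
    print(A_avg.shape, B_avg.shape)  # (8, 16) (32, 8)
```

In this hypothetical 4096 × 4096 case, the adapter is 256 times smaller than a full update of the same matrix, which is the source of the "high communication efficiency" noted in the table.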

| Category | Example Metrics | Relevance/Notes |
|---|---|---|
| Model Utility | Accuracy, F1-score, BLEU, ROUGE, Perplexity, Calibration, Robustness (to noise, adversarial inputs) | Task-specific performance and reliability of the learned model. |
| Efficiency | | Crucial for resource-constrained and bandwidth-limited IoT environments. |
| - Communication | Total bytes/bits transmitted, Number of rounds, Compression rates | Impacts network load and energy consumption on wireless devices. |
| - Computation | Client training time/round, Server aggregation time, Edge inference latency, Total FLOPs, Energy consumption | Determines on-device feasibility, overall system speed, and battery life. |
| - Memory | Peak RAM usage (client/server), Model storage size | Critical for devices with limited memory capacity. |
| Privacy | Formal guarantees (e.g., the privacy budget ε of ε-DP), Empirical leakage (e.g., Membership Inference Attack success rate) | Quantifies the level of privacy protection provided against specific threats. |
| Fairness | Variance in accuracy across clients/groups, Worst-group performance vs. average | Measures consistency of performance for diverse participants or data subpopulations. |
| Scalability | Performance/efficiency degradation as the number of clients increases | Assesses the system’s ability to handle large-scale IoT deployments. |
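Several of these metrics reduce to simple computations over per-client logs. The sketch below, with hypothetical function names and toy inputs, computes the fairness quantities from the table (variance of accuracy across clients, taken here as the population variance, and the gap between worst-client and mean accuracy) together with total communication volume. The communication formula assumes that every participating client uploads one update and downloads one model of the same fixed size each round; real deployments may use asymmetric or compressed payloads.

```python
from statistics import mean, pvariance
from typing import Dict, List


def fairness_metrics(client_acc: Dict[str, float]) -> Dict[str, float]:
    """Fairness summaries over per-client accuracy (population variance, worst vs. mean)."""
    accs: List[float] = list(client_acc.values())
    avg = mean(accs)
    worst = min(accs)
    return {
        "mean_acc": avg,
        "acc_variance": pvariance(accs),
        "worst_acc": worst,
        "worst_minus_mean": worst - avg,
    }


def total_comm_bytes(num_params: int, bytes_per_param: int,
                     clients_per_round: int, rounds: int) -> int:
    """Total upload + download volume, assuming symmetric full-size payloads every round."""
    per_client_round = 2 * num_params * bytes_per_param  # one upload and one download
    return per_client_round * clients_per_round * rounds


if __name__ == "__main__":
    print(fairness_metrics({"a": 0.5, "b": 0.75, "c": 1.0}))
    # Hypothetical: a 65,536-value adapter in 16-bit precision, 10 clients, 100 rounds.
    print(total_comm_bytes(65_536, 2, 10, 100))  # 262144000
```

Reporting the worst-client gap alongside the mean makes it visible when a model that looks accurate on average performs poorly for a subset of devices, which is the concern the Fairness row targets.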

| Challenge | Description | Mitigation Strategies | Trade-offs / Notes |
|---|---|---|---|
| Resource Constraints (Compute, Memory, Energy) | Severe limitations on many IoT devices conflict with LLM computational demands [3,24]. | Model Compression [57]; Split Computing [3,18]; PEFT [16]; Adaptive Distribution. | Accuracy loss (Compression); Latency/Sync needs (Split); Limited adaptivity (PEFT); Orchestration complexity (Adaptive). |
| Communication Overhead | High cost of frequently transmitting large model updates over constrained IoT networks [10,102]. | PEFT [16]; Update Compression [102]; Reduced Frequency [9]; Asynchronous Protocols [92,103]. | Smaller updates limit model changes (PEFT); Info loss risk (Compression); Slower convergence (Frequency); Staleness issues (Async). |
| Data Heterogeneity (Non-IID) & Fairness | Non-IID data hinders convergence and fairness [37,104]; biases can be amplified [30]; decentralized bias mitigation is hard. | Proximal regularization (e.g., FedProx) [37]; PFL [64,65]; Fairness-aware Algorithms; Synthetic/Public Data Augmentation [94,95]. | Complexity (PFL); Potential reduction in average accuracy (Fairness); Privacy concerns with data augmentation. |
| Privacy & Security Risks | Balancing privacy vs. utility; protecting against leakage [101,105], poisoning [100,106], Byzantine [107], and backdoor attacks [108,109,110]; regulatory compliance (GDPR, HIPAA). | PETs (DP [41], HE [46], SMPC [48], Secure Aggregation [49]); Robust Aggregation (e.g., Krum [107], PEAR [111]); Attack Detection [112,113]; TEEs [114]; ZKP-based methods (e.g., ByzSFL [115]). | Accuracy loss (DP) [42]; High overhead (HE/SMPC) [3]; Limited protection (SecAgg); Assumptions may fail (Robust Agg.); Verifiability (ZKP). See Table 2. |
| On-Demand Deployment & Scalability | Efficiently managing FL training and LLM inference across massive, dynamic IoT populations [3]. | Edge Infrastructure Optimization (Caching, Serving) [3]; Scalable FL Orchestration (Hierarchical, Decentralized, Asynchronous) [35,92,116]; Resource-Aware Management [3,36]. | Orchestration trade-offs; Incentive complexity. |
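Robust aggregation rules such as Krum [107], listed in the Privacy & Security row, replace the plain average with a selection step. The sketch below follows the Krum rule of Blanchard et al.: given n client updates of which at most f may be Byzantine, each update is scored by the sum of squared Euclidean distances to its n − f − 2 nearest neighbours, and the update with the lowest score is returned. It assumes n > 2f + 2 and flattened NumPy vectors, and it is illustrative only: it omits variants such as Multi-Krum and does not combine robustness with any privacy mechanism, which is exactly the tension the table's "Assumptions may fail" note points to.

```python
import numpy as np


def krum(updates: np.ndarray, f: int) -> np.ndarray:
    """Select a single client update with the Krum rule.

    updates: array of shape (n, d), one flattened client update per row.
    f: assumed upper bound on the number of Byzantine clients; requires n > 2f + 2.
    """
    n = updates.shape[0]
    if n <= 2 * f + 2:
        raise ValueError("Krum requires n > 2f + 2")
    # Pairwise squared Euclidean distances, shape (n, n).
    diffs = updates[:, None, :] - updates[None, :, :]
    sq_dists = np.sum(diffs ** 2, axis=-1)
    m = n - f - 2  # number of closest neighbours counted in each score
    scores = np.empty(n)
    for i in range(n):
        others = np.delete(sq_dists[i], i)  # drop the zero distance to itself
        scores[i] = np.sort(others)[:m].sum()
    return updates[int(np.argmin(scores))]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    honest = rng.normal(loc=1.0, scale=0.1, size=(8, 5))  # toy honest updates near 1.0
    byzantine = np.full((2, 5), 50.0)  # two clients send large malicious updates
    all_updates = np.vstack([honest, byzantine])
    print(krum(all_updates, f=2))      # one of the honest rows, close to 1.0
    print(all_updates.mean(axis=0))    # plain averaging is pulled to roughly 10.8
```

In this toy setting, the two colluding outliers sit far from every honest update, so their scores are large and Krum selects an honest row, while the unweighted mean is dragged far from the honest consensus.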

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).