Appendix B. Our Dataset

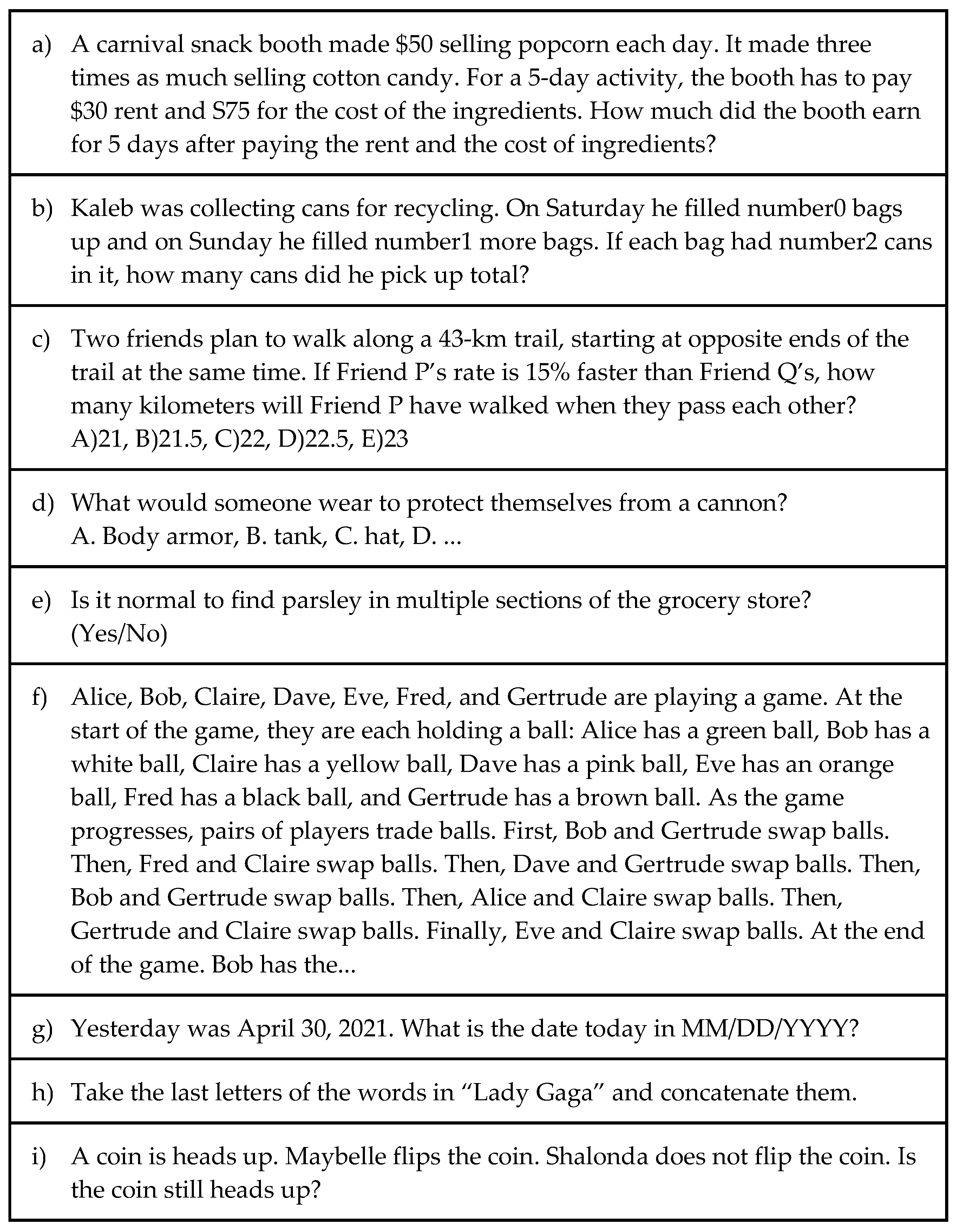

You are given a logic test. Are you ready?

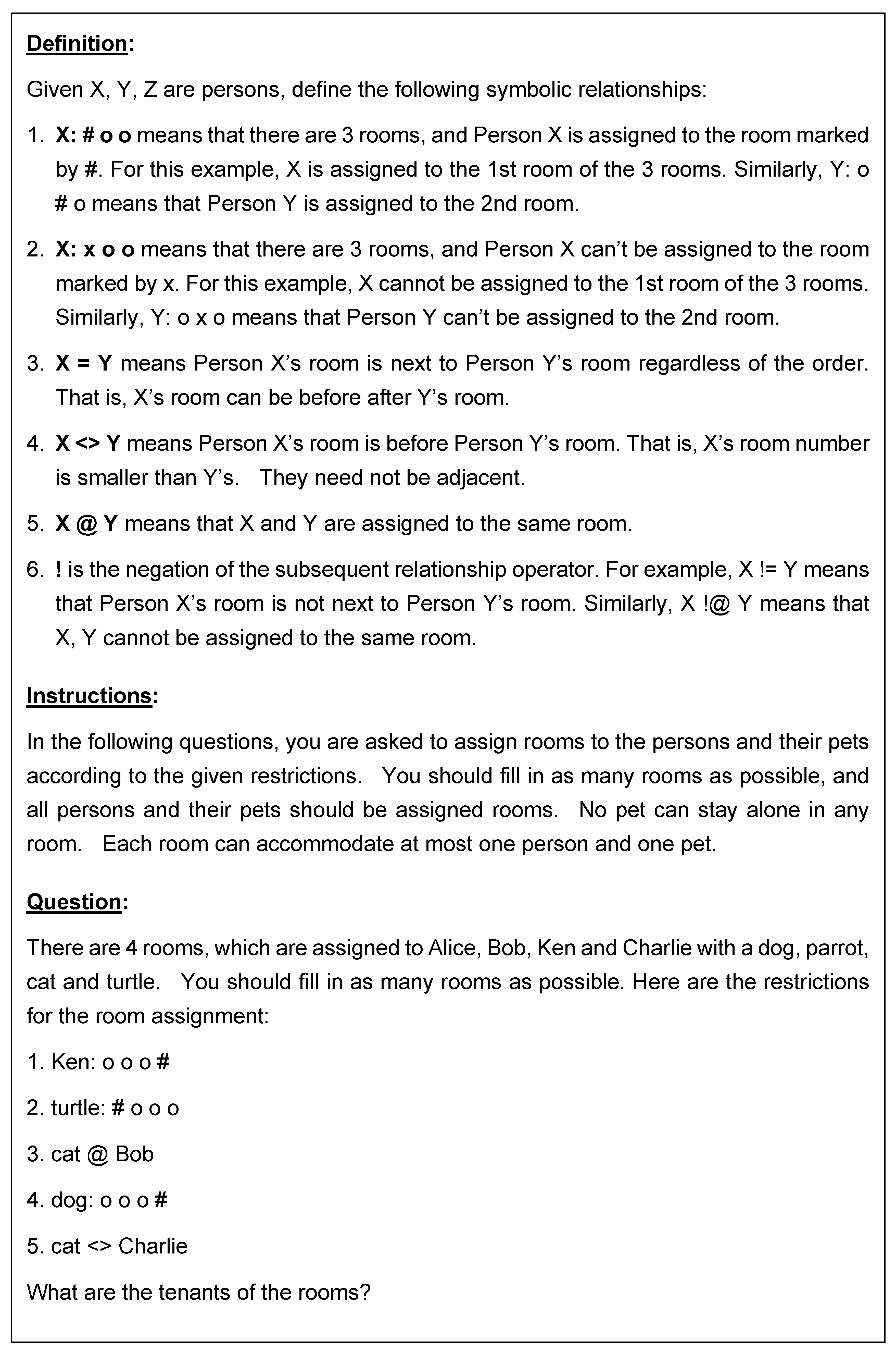

Definition

Given X, Y, Z are persons, define the following symbolic relationships:

1. X: # o o means that there are 3 rooms, and Person X is assigned to the room marked by #. For this example, X is assigned to the 1st room of the 3 rooms. Similarly, Y: o # o means that Person Y is assigned to the 2nd room.

2. X: x o o means that there are 3 rooms, and Person X can’t be assigned to the room marked by x. For this example, X cannot be assigned to the 1st room of the 3 rooms. Similarly, Y: o x o means that Person Y can’t be assigned to the 2nd room.

3. X = Y means Person X’s room is next to Person Y’s room regardless of the order. That is, X’s room can be before or after Y’s room.

4. X <> Y means Person X’s room is before Person Y’s room. That is, X’s room number is lower than Y’s. They need not be adjacent.

5. X @ Y means that X and Y are assigned to the same room.

6. ! is the negation of the subsequent relationship operator. For example, X != Y means that Person X’s room is not next to Person Y’s room. Similarly, X !@ Y means that X and Y cannot be assigned to the same room.
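The relations defined above can be read as simple predicates over room numbers, counting rooms from 1. As an illustration only, here is a minimal Python sketch of that reading; the helpers `pattern_holds` and `relation_holds` are hypothetical names introduced here and are not part of the dataset or of any evaluation code.

```python
def pattern_holds(pattern: str, room: int) -> bool:
    """Check a position pattern such as '# o o' or 'o x o' for a person placed in `room` (1-based)."""
    marks = pattern.split()
    if "#" in marks:
        # '#' fixes the exact room.
        return marks[room - 1] == "#"
    # Otherwise every 'x' marks a forbidden room; 'o' places no restriction.
    return marks[room - 1] != "x"


def relation_holds(op: str, a: int, b: int) -> bool:
    """Evaluate 'X op Y' given X in room `a` and Y in room `b`; a leading '!' negates."""
    if op.startswith("!"):
        return not relation_holds(op[1:], a, b)
    if op == "=":   # adjacent rooms, in either order
        return abs(a - b) == 1
    if op == "<>":  # X strictly before Y, not necessarily adjacent
        return a < b
    if op == "@":   # same room
        return a == b
    raise ValueError(f"unknown operator: {op!r}")


# Examples taken from the definitions above.
print(pattern_holds("# o o", 1))   # True: X is in the 1st room
print(pattern_holds("o x o", 2))   # False: Y may not be in the 2nd room
print(relation_holds("=", 3, 2))   # True: rooms 3 and 2 are adjacent
print(relation_holds("<>", 1, 3))  # True: room 1 is before room 3
print(relation_holds("!@", 1, 1))  # False: both are in the same room
```

Treating `!` as a prefix that wraps any operator keeps the sketch to three base relations, mirroring rule 6.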

Instructions:

In the following questions, you are asked to assign rooms to the persons and their pets according to the given restrictions. You should fill in as many rooms as possible, and all persons and their pets should be assigned rooms. No pet can stay alone in any room. Each room can accommodate at most one person and one pet.
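These instructions amount to a small validity check on a complete answer. A minimal sketch, assuming an answer is represented as a list of `(person, pet)` pairs in room order with `None` for an empty slot; this representation and the name `is_valid` are hypothetical and chosen only for illustration.

```python
from typing import List, Optional, Set, Tuple

Room = Tuple[Optional[str], Optional[str]]  # (person, pet); None marks an empty slot


def is_valid(rooms: List[Room], persons: Set[str], pets: Set[str]) -> bool:
    """Check the instruction rules for a full room assignment."""
    # A room holds at most one person and one pet by construction of `Room`.
    placed_persons = [person for person, _ in rooms if person is not None]
    placed_pets = [pet for _, pet in rooms if pet is not None]
    # Every person and every pet is assigned exactly once.
    if sorted(placed_persons) != sorted(persons) or sorted(placed_pets) != sorted(pets):
        return False
    # No pet may stay alone in a room.
    if any(pet is not None and person is None for person, pet in rooms):
        return False
    # With one person per room and everyone placed, the number of occupied rooms
    # equals the number of persons, which is the maximum possible because pets
    # cannot occupy a room alone; "fill as many rooms as possible" then holds.
    return True


# The answer to Q18 below, in room order.
q18 = [("Alice", "parrot"), ("Charlie", "turtle"), ("Bob", "cat")]
print(is_valid(q18, {"Alice", "Bob", "Charlie"}, {"parrot", "cat", "turtle"}))  # True

# A pet left alone in a fourth room breaks the rules.
bad = [("Alice", None), ("Bob", "cat"), ("Charlie", "parrot"), (None, "turtle")]
print(is_valid(bad, {"Alice", "Bob", "Charlie"}, {"parrot", "cat", "turtle"}))  # False
```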

84 Questions:

Q1. There are 3 rooms, which are assigned to Alice, Bob and Charlie. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice: o o #

2. Bob: # o o

What are the tenants of the rooms?

Answer:

room 1: Bob

room 2: Charlie

room 3: Alice

Q2. There are 3 rooms, which are assigned to Alice, Bob and Charlie. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice: # o o

2. Bob: o x o

What are the tenants of the rooms?

Answer:

room 1: Alice

room 2: Charlie

room 3: Bob

Q3. There are 3 rooms, which are assigned to Alice, Bob and Charlie. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice: o o x

2. Bob: o x o

3. Alice: o x o

What are the tenants of the rooms?

Answer:

room 1: Alice

room 2: Charlie

room 3: Bob

Q4. There are 3 rooms, which are assigned to Alice, Bob and Charlie. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice: x o o

2. Bob = Charlie

3. Bob: o x o

What are the tenants of the rooms?

Answer:

room 1: Bob

room 2: Charlie

room 3: Alice

Q5. There are 3 rooms, which are assigned to Alice, Bob and Charlie. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice: # o o

2. Bob = Alice

What are the tenants of the rooms?

Answer:

room 1: Alice

room 2: Bob

room 3: Charlie

Q6. There are 3 rooms, which are assigned to Alice, Bob and Charlie. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

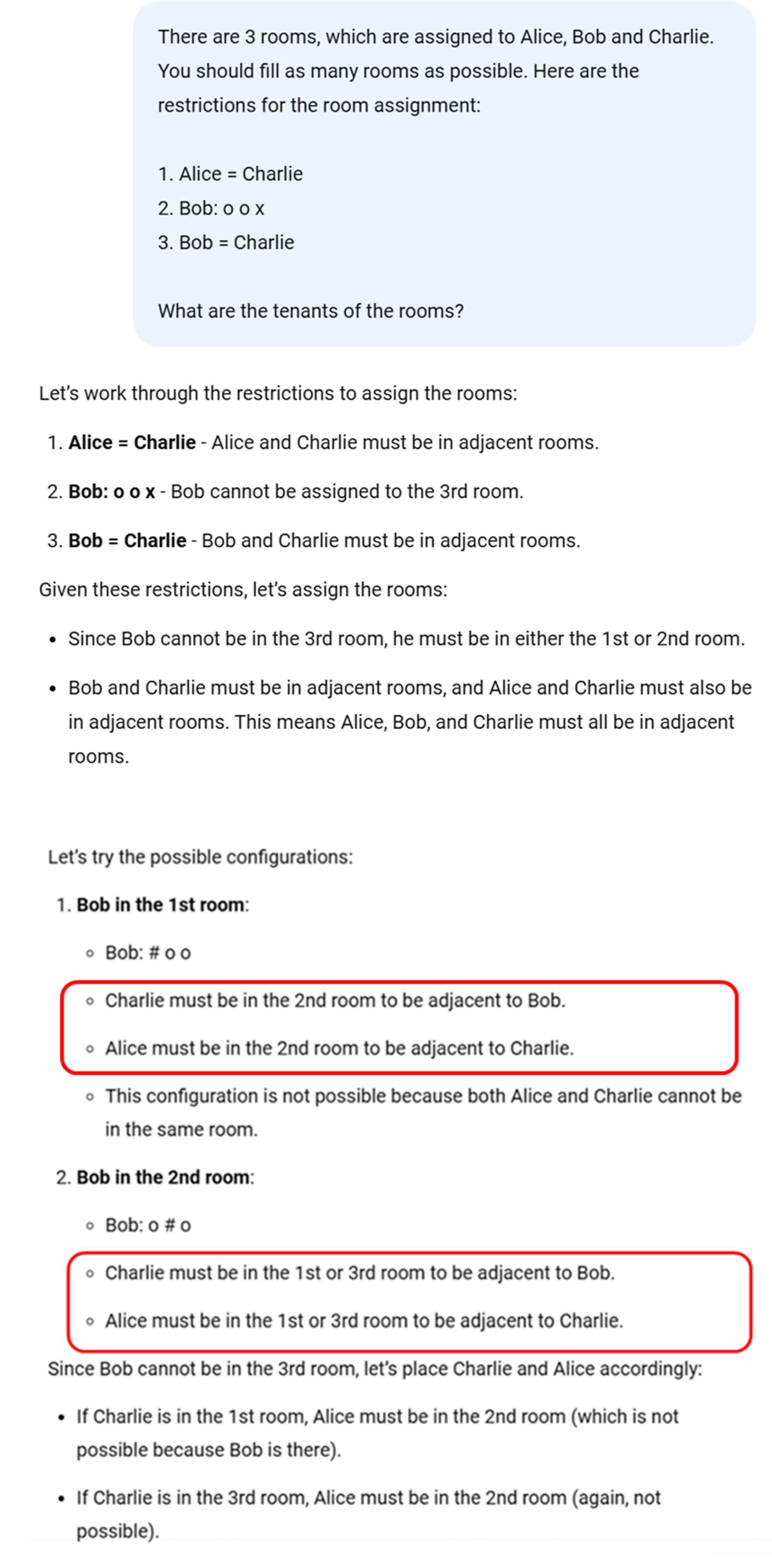

1. Alice = Charlie

2. Bob: o o x

3. Bob = Charlie

What are the tenants of the rooms?

Answer:

room 1: Bob

room 2: Charlie

room 3: Alice

Q7. There are 3 rooms, which are assigned to Alice, Bob and Charlie. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice != Bob

2. Alice: o o #

What are the tenants of the rooms?

Answer:

room 1: Bob

room 2: Charlie

room 3: Alice

Q8. There are 3 rooms, which are assigned to Alice, Bob and Charlie. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice != Charlie

2. Charlie: x o o

What are the tenants of the rooms?

Answer:

room 1: Alice

room 2: Bob

room 3: Charlie

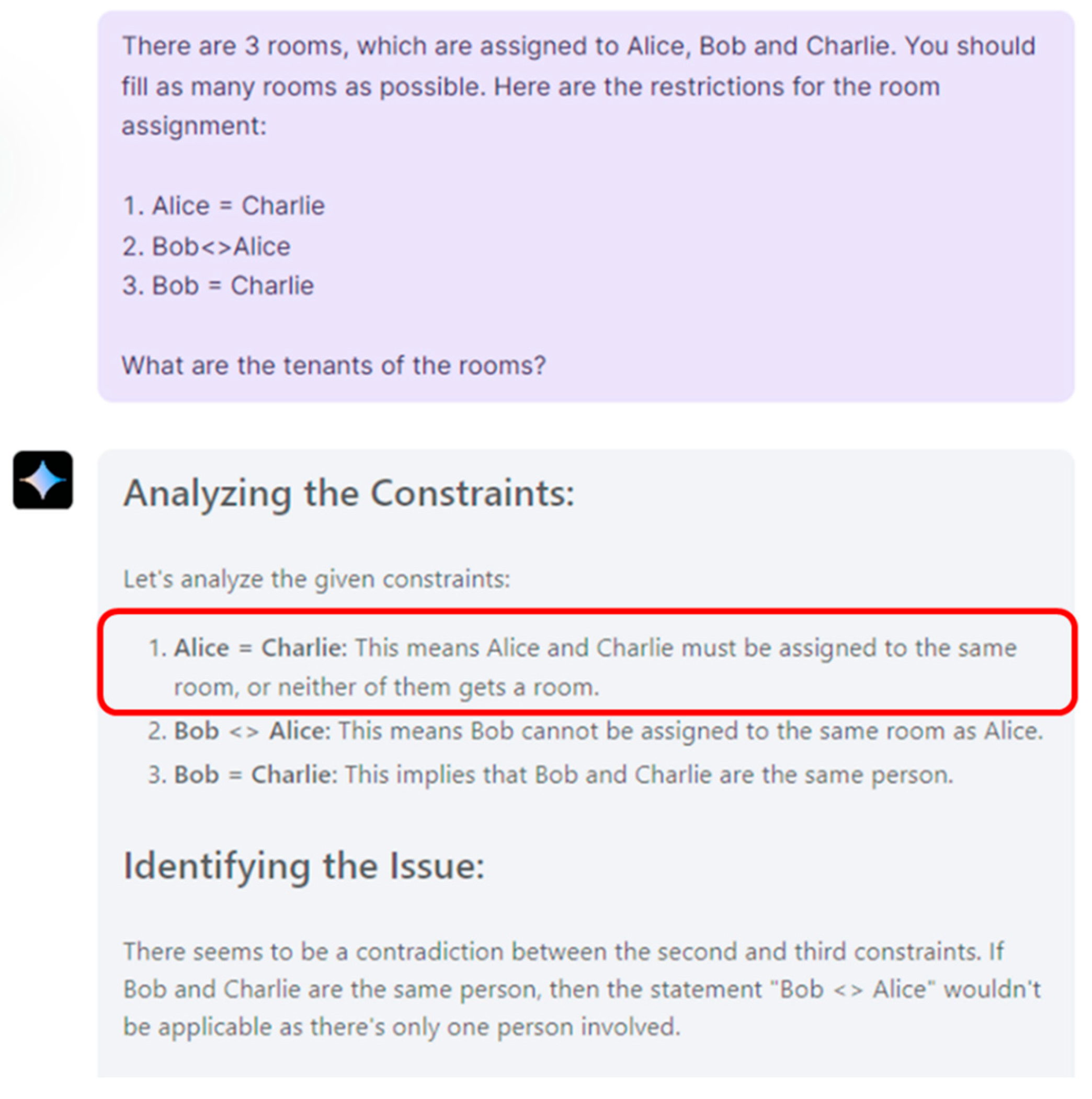

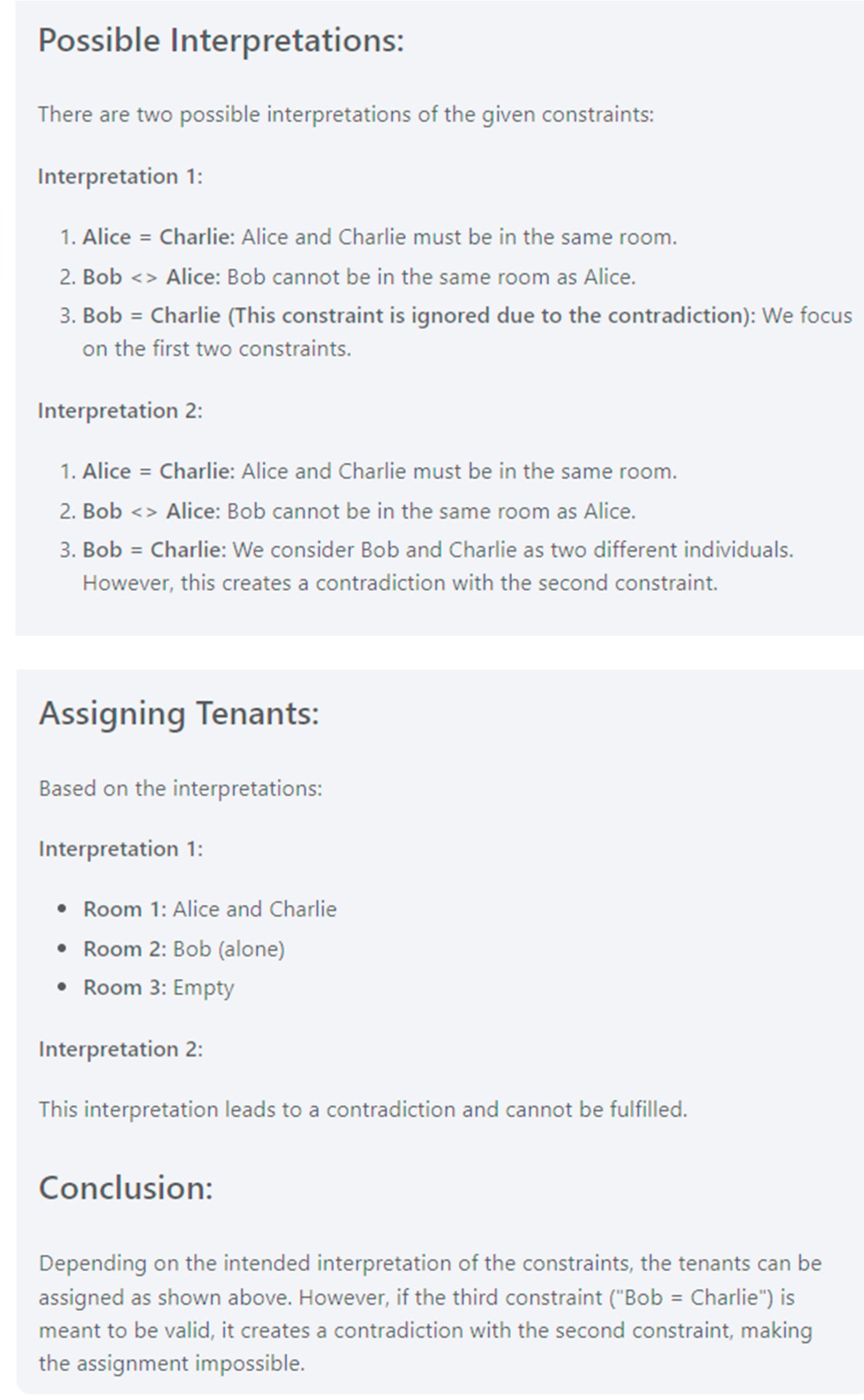

Q9. There are 3 rooms, which are assigned to Alice, Bob and Charlie. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice = Charlie

2. Bob <> Alice

3. Bob = Charlie

What are the tenants of the rooms?

Answer:

room 1: Bob

room 2: Charlie

room 3: Alice

Q10. There are 3 rooms, which are assigned to Alice, Bob and Charlie. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice <> Charlie

2. Bob: o x o

3. Bob: x o o

What are the tenants of the rooms?

Answer:

room 1: Alice

room 2: Charlie

room 3: Bob

Q11. There are 3 rooms, which are assigned to Alice, Bob and Charlie. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice <> Charlie

2. Bob: # o o

What are the tenants of the rooms?

Answer:

room 1: Bob

room 2: Alice

room 3: Charlie

Q12. There are 3 rooms, which are assigned to Alice, Bob and Charlie. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice <> Charlie

2. Charlie <> Bob

What are the tenants of the rooms?

Answer:

room 1: Alice

room 2: Charlie

room 3: Bob

Q13. There are 3 rooms, which are assigned to Alice, Bob and Charlie. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Bob: o o x

2. Alice <> Bob

What are the tenants of the rooms?

Answer:

room 1: Alice

room 2: Bob

room 3: Charlie

Q14. There are 3 rooms, which are assigned to Alice, Bob and Charlie. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Bob != Charlie

2. Bob <> Charlie

What are the tenants of the rooms?

Answer:

room 1: Bob

room 2: Alice

room 3: Charlie

Q15. There are 3 rooms, which are assigned to Alice, Bob and Charlie. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice = Charlie

2. Alice <> Bob

3. Alice <> Charlie

What are the tenants of the rooms?

Answer:

room 1: Alice

room 2: Charlie

room 3: Bob

Q16. There are 3 rooms, which are assigned to Alice, Bob and Charlie. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice <> Charlie

2. Bob: x o o

3. Alice = Charlie

What are the tenants of the rooms?

Answer:

room 1: Alice

room 2: Charlie

room 3: Bob

Q17. There are 3 rooms, which are assigned to Alice, Bob and Charlie. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice <> Charlie

2. Bob: o x o

3. Bob <> Charlie

What are the tenants of the rooms?

Answer:

room 1: Bob

room 2: Alice

room 3: Charlie
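Because the three-room questions above involve only 3! = 6 possible orders, their answers can be checked exhaustively. The sketch below hand-translates the restrictions of Q17 into Python predicates, enumerates every order, and keeps those that satisfy all restrictions; a single surviving order indicates that the answer is unique. The function `solve` and the encoding of restrictions as lambdas are illustrative assumptions, not the procedure used to build the dataset.

```python
from itertools import permutations
from typing import Callable, Dict, List, Sequence, Tuple

Constraint = Callable[[Dict[str, int]], bool]  # receives name -> room number (1-based)


def solve(persons: Sequence[str], constraints: List[Constraint]) -> List[Tuple[str, ...]]:
    """Return every room order (index 0 is room 1) that satisfies all constraints."""
    solutions = []
    for order in permutations(persons):
        room = {name: i + 1 for i, name in enumerate(order)}
        if all(check(room) for check in constraints):
            solutions.append(order)
    return solutions


# Q17, translated by hand.
q17 = [
    lambda r: r["Alice"] < r["Charlie"],  # 1. Alice <> Charlie
    lambda r: r["Bob"] != 2,              # 2. Bob: o x o
    lambda r: r["Bob"] < r["Charlie"],    # 3. Bob <> Charlie
]
print(solve(["Alice", "Bob", "Charlie"], q17))  # [('Bob', 'Alice', 'Charlie')]
```

The same loop covers the four-room questions (24 orders) without change; only the list of persons and predicates differs.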

Q18. There are 3 rooms, which are assigned to Alice, Bob and Charlie with three pets, parrot, cat and turtle respectively. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Cat: o o #

2. Bob: o o #

3. Turtle: o # o

4. Alice: # o o

What are the tenants of the rooms?

Answer:

room 1: Alice, parrot

room 2: Charlie, turtle

room 3: Bob, cat

Q19. There are 3 rooms, which are assigned to Alice, Bob and Charlie with three pets, parrot, cat and turtle respectively. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice: # o o

2. Parrot: o o #

3. Turtle @ Bob

What are the tenants of the rooms?

Answer:

room 1: Alice, cat

room 2: Bob, turtle

room 3: Charlie, parrot

Q20. There are 3 rooms, which are assigned to Alice, Bob and Charlie with three pets, parrot, cat and turtle respectively. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice: x o o

2. Parrot: o o x

3. Turtle: # o o

4. Bob @ parrot

What are the tenants of the rooms?

Answer:

room 1: Charlie, turtle

room 2: Bob, parrot

room 3: Alice, cat

Q21. There are 3 rooms, which are assigned to Alice, Bob and Charlie with three pets, parrot, cat and turtle respectively. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Parrot = turtle

2. Alice: o o #

3. Turtle: o o #

4. Bob: # o o

What are the tenants of the rooms?

Answer:

room 1: Bob, cat

room 2: Charlie, parrot

room 3: Alice, turtle

Q22. There are 3 rooms, which are assigned to Alice, Bob and Charlie with three pets, parrot, cat and turtle respectively. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice: o o #

2. Parrot: # o o

3. Turtle @ Alice

4. Alice = Bob

What are the tenants of the rooms?

Answer:

room 1: Charlie, parrot

room 2: Bob, cat

room 3: Alice, turtle

Q23. There are 3 rooms, which are assigned to Alice, Bob and Charlie with three pets, parrot, cat and turtle respectively. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice: o o #

2. Alice = turtle

3. Bob @ cat

What are the tenants of the rooms?

Answer:

room 1: Bob, cat

room 2: Charlie, turtle

room 3: Alice, parrot

Q24. There are 3 rooms, which are assigned to Alice, Bob and Charlie with three pets, parrot, cat and turtle respectively. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice = Bob

2. Parrot: # o o

3. Turtle = Bob

4. Alice: o o #

What are the tenants of the rooms?

Answer:

room 1: Charlie, parrot

room 2: Bob, cat

room 3: Alice, turtle

Q25. There are 3 rooms, which are assigned to Alice, Bob and Charlie with three pets, parrot, cat and turtle respectively. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Bob != Parrot

2. Bob @ turtle

3. Alice: # o o

What are the tenants of the rooms?

Answer:

room 1: Alice, parrot

room 2: Charlie, cat

room 3: Bob, turtle

Q26. There are 3 rooms, which are assigned to Alice, Bob and Charlie with three pets, parrot, cat and turtle respectively. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice: o # o

2. Parrot != cat

3. Charlie: x o o

4. Bob !@ parrot

What are the tenants of the rooms?

Answer:

room 1: Bob, cat

room 2: Alice, turtle

room 3: Charlie, parrot

Q27. There are 3 rooms, which are assigned to Alice, Bob and Charlie with three pets, parrot, cat and turtle respectively. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Charlie: o o x

2. Turtle: o o #

3. Parrot != Turtle

4. Bob = turtle

What are the tenants of the rooms?

Answer:

room 1: Charlie, parrot

room 2: Bob, cat

room 3: Alice, turtle

Q28. There are 3 rooms, which are assigned to Alice, Bob and Charlie with three pets, parrot, cat and turtle respectively. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Turtle: o o #

2. Parrot != Alice

3. Alice !@ parrot

4. Bob = Alice

What are the tenants of the rooms?

Answer:

room 1: Charlie, parrot

room 2: Bob, cat

room 3: Alice, turtle

Q29. There are 3 rooms, which are assigned to Alice, Bob and Charlie with three pets, parrot, cat and turtle respectively. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Bob @ parrot

2. Bob: x o o

3. Bob <> Charlie

4. cat @ Alice

What are the tenants of the rooms?

Answer:

room 1: Alice, cat

room 2: Bob, parrot

room 3: Charlie, turtle

Q30. There are 3 rooms, which are assigned to Alice, Bob and Charlie with three pets, parrot, cat and turtle respectively. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice <> Bob

2. Alice @ cat

3. cat != parrot

4. cat != Bob

What are the tenants of the rooms?

Answer:

room 1: Alice, cat

room 2: Charlie, turtle

room 3: Bob, parrot

Q31. There are 3 rooms, which are assigned to Alice, Bob and Charlie with three pets, parrot, cat and turtle respectively. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice <> cat

2. Bob @ cat

3. parrot: o o #

What are the tenants of the rooms?

Answer:

room 1: Alice, turtle

room 2: Bob, cat

room 3: Charlie, parrot

Q32. There are 3 rooms, which are assigned to Alice, Bob and Charlie with three pets, parrot, cat and turtle respectively. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Bob: o # o

2. Alice <> parrot

3. parrot <> turtle

What are the tenants of the rooms?

Answer:

room 1: Alice, cat

room 2: Bob, parrot

room 3: Charlie, turtle

Q33. There are 3 rooms, which are assigned to Alice, Bob and Charlie with three pets, parrot, cat and turtle respectively. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice <> Bob

2. Charlie = turtle

3. cat <> Alice

What are the tenants of the rooms?

Answer:

room 1: Charlie, cat

room 2: Alice, turtle

room 3: Bob, parrot

Q34. There are 3 rooms, which are assigned to Alice, Bob and Charlie with three pets, parrot, cat and turtle respectively. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. cat <> parrot

2. Alice <> turtle

3. turtle <> Bob

What are the tenants of the rooms?

Answer:

room 1: Alice, cat

room 2: Charlie, turtle

room 3: Bob, parrot

Q35. There are 3 rooms, which are assigned to Alice, Bob and Charlie with three pets, parrot, cat and turtle respectively. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice = cat

2. Turtle: o # o

3. Alice <> Bob

4. Charlie @ cat

What are the tenants of the rooms?

Answer:

room 1: Charlie, cat

room 2: Alice, turtle

room 3: Bob, parrot

Q36. There are 3 rooms, which are assigned to Alice, Bob and Charlie with three pets, parrot, cat and turtle respectively. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. parrot <> cat

2. Alice <> Bob

3. Charlie: o # o

4. Turtle: # o o

What are the tenants of the rooms?

Answer:

room 1: Alice, turtle

room 2: Charlie, parrot

room 3: Bob, cat

Q37. There are 3 rooms, which are assigned to Alice, Bob and Charlie with three pets, parrot, cat and turtle respectively. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice !@ turtle

2. Alice <> Bob

3. cat @ Bob

4. Alice: o # o

What are the tenants of the rooms?

Answer:

room 1: Charlie, turtle

room 2: Alice, parrot

room 3: Bob, cat

Q38. There are 3 rooms, which are assigned to Alice, Bob and Charlie with three pets, parrot, cat and turtle respectively. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice: o # o

2. turtle = cat

3. parrot <> Bob

4. cat <> turtle

What are the tenants of the rooms?

Answer:

room 1: Charlie, parrot

room 2: Alice, cat

room 3: Bob, turtle

Q39. There are 4 rooms, which are assigned to Alice, Bob, Ken and Charlie. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Ken: o o o #

2. Alice: o # o o

3. Bob: x o o o

What are the tenants of the rooms?

Answer:

room 1: Charlie

room 2: Alice

room 3: Bob

room 4: Ken

Q40. There are 4 rooms, which are assigned to Alice, Bob, Ken and Charlie. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Ken: o # o o

2. Alice: o o # o

3. Bob = Ken

What are the tenants of the rooms?

Answer:

room 1: Bob

room 2: Ken

room 3: Alice

room 4: Charlie

Q41. There are 4 rooms, which are assigned to Alice, Bob, Ken and Charlie. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Bob != Charlie

2. Alice: o o # o

3. Bob: o # o o

What are the tenants of the rooms?

Answer:

room 1: Ken

room 2: Bob

room 3: Alice

room 4: Charlie

Q42. There are 4 rooms, which are assigned to Alice, Bob, Ken and Charlie. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Ken: o o o #

2. Alice: # o o o

3. Bob <> Charlie

What are the tenants of the rooms?

Answer:

room 1: Alice

room 2: Bob

room 3: Charlie

room 4: Ken

Q43. There are 4 rooms, which are assigned to Alice, Bob, Ken and Charlie. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Ken <> Bob

2. Alice: o o # o

3. Bob = Ken

What are the tenants of the rooms?

Answer:

room 1: Ken

room 2: Bob

room 3: Alice

room 4: Charlie

Q44. There are 4 rooms, which are assigned to Alice, Bob, Ken and Charlie. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Ken != Bob

2. Alice <> Bob

3. Bob: o o o x

4. Alice <> Ken

What are the tenants of the rooms?

Answer:

room 1: Alice

room 2: Bob

room 3: Charlie

room 4: Ken

Q45. There are 4 rooms, which are assigned to Alice, Bob, Ken and Charlie. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Ken <> Alice

2. Bob != Ken

3. Ken <> Bob

4. Bob != Alice

What are the tenants of the rooms?

Answer:

room 1: Ken

room 2: Alice

room 3: Charlie

room 4: Bob

Q46. There are 4 rooms, which are assigned to Alice, Bob, Ken and Charlie with a dog, parrot, cat and turtle. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Ken: o # o o

2. Alice: o o # o

3. dog @ Bob

4. parrot != turtle

5. Ken @ parrot

What are the tenants of the rooms?

Answer:

room 1: Bob, dog

room 2: Ken, parrot

room 3: Alice, cat

room 4: Charlie, turtle

Q47. There are 4 rooms, which are assigned to Alice, Bob, Ken and Charlie with a dog, parrot, cat and turtle. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Ken: o o o #

2. Turtle: # o o o

3. cat @ Bob

4. dog: o o o #

5. cat <> Charlie

What are the tenants of the rooms?

Answer:

room 1: Alice, turtle

room 2: Bob, cat

room 3: Charlie, parrot

room 4: Ken, dog

Q48. There are 4 rooms, which are assigned to Alice, Bob, Ken and Charlie with a dog, parrot, cat and turtle. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. parrot: # o o o

2. turtle = Bob

3. cat <> Ken

4. Alice: o o o #

What are the tenants of the rooms?

Answer:

room 1: Charlie, parrot

room 2: Bob, cat

room 3: Ken, turtle

room 4: Alice, dog

Q49. There are 5 rooms, which are assigned to Alice, Bob, Ken, Ben and Charlie with a dog, parrot, cat, rabbit and turtle. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. rabbit: o o o # o

2. Alice: # o o o o

3. cat: o o # o o

4. rabbit = Ken

5. Ben = dog

6. dog @ Charlie

7. cat <> turtle

What are the tenants of the rooms?

Answer:

room 1: Alice, parrot

room 2: Charlie, dog

room 3: Ben, cat

room 4: Bob, rabbit

room 5: Ken, turtle

Q50. There are 5 rooms, which are assigned to Alice, Bob, Ken, Ben and Charlie with a dog, parrot, cat, rabbit and turtle. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. parrot @ Bob

2. Ken = turtle

3. Alice = Ken

4. Charlie <> Ken

5. Bob: o o o o #

6. Charlie: x o o o o

7. rabbit: o o o # o

8. cat: o # o o o

What are the tenants of the rooms?

Answer:

room 1: Ben, dog

room 2: Charlie, cat

room 3: Alice, turtle

room 4: Ken, rabbit

room 5: Bob, parrot

Q51. There are 5 rooms, which are assigned to Alice, Bob, Ken, Ben and Charlie with a dog, parrot, cat, rabbit and turtle. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. turtle: o # o o o

2. Bob @ rabbit

3. cat @ Ben

4. Ken: # o o o o

5. dog <> parrot

6. cat: o o o x o

7. Ken != Charlie

8. Bob: o o # o o

What are the tenants of the rooms?

Answer:

room 1: Ken, dog

room 2: Alice, turtle

room 3: Bob, rabbit

room 4: Charlie, parrot

room 5: Ben, cat

Q52. There are 5 rooms, which are assigned to Alice, Bob, Ken, Ben and Charlie with a dog, parrot, cat, rabbit and turtle. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. rabbit != turtle

2. rabbit: o o # o o

3. dog: o # o o o

4. Ken: o # o o o

5. Alice <> Bob

6. dog = cat

7. Alice = Ben

8. Alice != turtle

What are the tenants of the rooms?

Answer:

room 1: Charlie, cat

room 2: Ken, dog

room 3: Alice, rabbit

room 4: Ben, parrot

room 5: Bob, turtle
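For the five-room questions with pets, hand-translating each restriction becomes tedious, so one option is to parse the restriction strings directly. The following is a minimal sketch under two assumptions: every restriction uses the notation of the Definition section, and the numbers of persons, pets, and rooms are equal, so each room holds exactly one person and one pet (which also rules out a pet staying alone). The names `parse`, `solve`, and `TESTS` are hypothetical. The sketch enumerates all 5! × 5! = 14,400 combinations, which is fast at this size; applied to Q52 it returns a single solution that agrees with the answer above.

```python
import re
from itertools import permutations
from typing import Callable, Dict, List, Sequence, Tuple

Check = Callable[[Dict[str, int]], bool]  # receives lower-cased name -> room number

TESTS = {
    "=": lambda x, y: abs(x - y) == 1,  # adjacent
    "<>": lambda x, y: x < y,           # strictly before
    "@": lambda x, y: x == y,           # same room
}


def parse(line: str) -> Check:
    """Turn a restriction such as 'Ken: o # o o o' or 'Alice != turtle' into a predicate."""
    line = line.strip()
    if ":" in line:
        name, pattern = line.split(":", 1)
        key, marks = name.strip().lower(), pattern.split()
        if "#" in marks:
            target = marks.index("#") + 1
            return lambda room: room[key] == target
        banned = [i + 1 for i, mark in enumerate(marks) if mark == "x"]
        return lambda room: room[key] not in banned
    # Binary relation; spaces around the operator are optional.
    m = re.fullmatch(r"(\w+)\s*(!?)\s*(<>|=|@)\s*(\w+)", line)
    if m is None:
        raise ValueError(f"cannot parse restriction: {line!r}")
    a, negated, op, b = m.group(1).lower(), m.group(2) == "!", m.group(3), m.group(4).lower()
    test = TESTS[op]
    return lambda room: test(room[a], room[b]) != negated  # '!=' on bools acts as XOR


def solve(persons: Sequence[str], pets: Sequence[str],
          restrictions: List[str]) -> List[List[Tuple[str, str]]]:
    """Enumerate every (person, pet) room assignment that satisfies all restrictions."""
    checks = [parse(r) for r in restrictions]
    solutions = []
    for people in permutations(persons):
        for animals in permutations(pets):
            room = {p.lower(): i + 1 for i, p in enumerate(people)}
            room.update({a.lower(): i + 1 for i, a in enumerate(animals)})
            if all(check(room) for check in checks):
                solutions.append(list(zip(people, animals)))
    return solutions


q52 = [
    "rabbit != turtle", "rabbit: o o # o o", "dog: o # o o o", "Ken: o # o o o",
    "Alice <> Bob", "dog = cat", "Alice = Ben", "Alice != turtle",
]
for solution in solve(["Alice", "Bob", "Ken", "Ben", "Charlie"],
                      ["dog", "parrot", "cat", "rabbit", "turtle"], q52):
    for number, (person, pet) in enumerate(solution, start=1):
        print(f"room {number}: {person}, {pet}")
```

Because persons and pets share one name-to-room mapping, mixed restrictions such as `Alice != turtle` or `cat @ Ben` need no special handling; the three- and four-room questions can be checked by passing shorter lists.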

Q53. There are 5 rooms, which are assigned to Alice, Bob, Ken, Ben and Charlie with a dog, parrot, cat, rabbit and turtle. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Ken != Ben

2. Alice @ cat

3. Alice != dog

4. parrot != Alice

5. Ben: o o x o o

6. parrot <> Charlie

7. rabbit: # o o o o

8. parrot: o o # o o

What are the tenants of the rooms?

Answer:

room 1: Ben, rabbit

room 2: Bob, dog

room 3: Ken, parrot

room 4: Charlie, turtle

room 5: Alice, cat

Q54. There are 5 rooms, which are assigned to Alice, Bob, Ken, Ben and Charlie with a dog, parrot, cat, rabbit and turtle. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. cat @ Ken

2. Alice @ turtle

3. Ben: o o o o #

4. cat: # o o o o

5. cat = dog

6. Ben @ parrot

7. Charlie <> Bob

8. turtle: o o x o o

What are the tenants of the rooms?

Answer:

room 1: Ken, cat

room 2: Charlie, dog

room 3: Bob, rabbit

room 4: Alice, turtle

room 5: Ben, parrot

Q55. There are 5 rooms, which are assigned to Alice, Bob, Ken, Ben and Charlie with a dog, parrot, cat, rabbit and turtle. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. turtle: o o o # o

2. cat != turtle

3. rabbit <> Ben

4. Ken @ parrot

5. Bob: # o o o o

6. cat = Ben

7. Alice @ cat

What are the tenants of the rooms?

Answer:

room 1: Bob, rabbit

room 2: Alice, cat

room 3: Ben, dog

room 4: Charlie, turtle

room 5: Ken, parrot

Q56. There are 5 rooms, which are assigned to Alice, Bob, Ken, Ben and Charlie with a dog, parrot, cat, rabbit and turtle. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. turtle = cat

2. parrot @ Bob

3. Ken: o # o o o

4. turtle = Bob

5. Ben: o o o # o

6. Charlie @ rabbit

7. parrot <> cat

8. Alice != dog

What are the tenants of the rooms?

Answer:

room 1: Charlie, rabbit

room 2: Ken, dog

room 3: Bob, parrot

room 4: Ben, turtle

room 5: Alice, cat

Q57. There are 5 rooms, which are assigned to Alice, Bob, Ken, Ben and Charlie with a dog, parrot, cat, rabbit and turtle. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Ken: o # o o o

2. cat != Bob

3. Ben != rabbit

4. turtle <> Ben

5. rabbit = Charlie

6. parrot <> Charlie

7. Charlie @ parrot

8. Alice = cat

9. rabbit @ Bob

What are the tenants of the rooms?

Answer:

room 1: Alice, turtle

room 2: Ken, cat

room 3: Ben, dog

room 4: Charlie, parrot

room 5: Bob, rabbit

Q58. There are 5 rooms, which are assigned to Alice, Bob, Ken, Ben and Charlie with a dog, parrot, cat, rabbit and turtle. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Ken: o o o # o

2. turtle <> Bob

3. Ben: # o o o o

4. dog: # o o o o

5. Alice @ parrot

6. cat != turtle

7. Bob <> Ken

What are the tenants of the rooms?

Answer:

room 1: Ben, dog

room 2: Charlie, turtle

room 3: Bob, rabbit

room 4: Ken, cat

room 5: Alice, parrot

Q59. There are 5 rooms, which are assigned to Alice, Bob, Ken, Ben and Charlie with a dog, parrot, cat, rabbit and turtle. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Ken = Ben

2. Ken <> parrot

3. Bob = Alice

4. Alice <> Bob

5. cat @ Alice

6. rabbit: o o o o #

7. dog: x o o o o

8. Ken = Alice

What are the tenants of the rooms?

Answer:

room 1: Ben, turtle

room 2: Ken, dog

room 3: Alice, cat

room 4: Bob, parrot

room 5: Charlie, rabbit

Q60. There are 5 rooms, which are assigned to Alice, Bob, Ken, Ben and Charlie with a dog, parrot, cat, rabbit and turtle. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. turtle: o o o o #

2. parrot: o # o o o

3. Alice: o # o o o

4. turtle = Ben

5. rabbit = Alice

6. dog <> rabbit

7. Charlie <> Ken

8. parrot != Bob

What are the tenants of the rooms?

Answer:

room 1: Charlie, dog

room 2: Alice, parrot

room 3: Ken, rabbit

room 4: Ben, cat

room 5: Bob, turtle

Q61. There are 5 rooms, which are assigned to Alice, Bob, Ken, Ben and Charlie with a dog, parrot, cat, rabbit and turtle. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Ken <> Ben

2. turtle = dog

3. Ben <> Bob

4. Bob <> dog

5. Ken: o x o o o

6. Ken = parrot

7. cat = turtle

8. Ken = Alice

What are the tenants of the rooms?

Answer:

room 1: Ken, rabbit

room 2: Alice, parrot

room 3: Ben, cat

room 4: Bob, turtle

room 5: Charlie, dog

Q62. There are 5 rooms, which are assigned to Alice, Bob, Ken, Ben and Charlie with a dog, parrot, cat, rabbit and turtle. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. rabbit <> dog

2. parrot @ Ken

3. Ben = Ken

4. Ken <> Ben

5. Bob: o o # o o

6. cat: o o o # o

7. Charlie = rabbit

What are the tenants of the rooms?

Answer:

room 1: Ken, parrot

room 2: Ben, turtle

room 3: Bob, rabbit

room 4: Charlie, cat

room 5: Alice, dog

Q63. There are 5 rooms, which are assigned to Alice, Bob, Ken, Ben and Charlie with a dog, parrot, cat, rabbit and turtle. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Ken <> Ben

2. parrot = Alice

3. Ben: o o # o o

4. Bob <> turtle

5. cat = Alice

6. turtle <> dog

7. parrot: o o x o o

What are the tenants of the rooms?

Answer:

room 1: Bob, rabbit

room 2: Ken, turtle

room 3: Ben, cat

room 4: Alice, dog

room 5: Charlie, parrot

Q64. There are 5 rooms, which are assigned to Alice, Bob, Ken, Ben and Charlie with a dog, parrot, cat, rabbit and turtle. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Ken: o # o o o

2. rabbit <> cat

3. Ben <> rabbit

4. dog @ Bob

5. Alice <> dog

6. Bob != Alice

7. parrot: o # o o o

What are the tenants of the rooms?

Answer:

room 1: Ben, turtle

room 2: Ken, parrot

room 3: Alice, rabbit

room 4: Charlie, cat

room 5: Bob, dog

Q65. There are 5 rooms, which are assigned to Alice, Bob, Ken, Ben and Charlie with a dog, parrot, cat, rabbit and turtle. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice: o o # o o

2. dog <> Bob

3. Turtle: o o o x o

4. parrot <> cat

5. Ben: o o o o #

6. cat != Ben

7. cat @ Ken

What are the tenants of the rooms?

Answer:

room 1: Charlie, parrot

room 2: Ken, cat

room 3: Alice, dog

room 4: Bob, rabbit

room 5: Ben, turtle

Q66. There are 5 rooms, which are assigned to Alice, Bob, Ken, Ben and Charlie with a dog, parrot, cat, rabbit and turtle. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice <> dog

2. rabbit: # o o o o

3. Ken <> Ben

4. Alice @ parrot

5. Ben: o o o o x

6. cat = Ben

7. dog: o o o o x

8. Bob: o o o # o

What are the tenants of the rooms?

Answer:

room 1: Ken, rabbit

room 2: Alice, parrot

room 3: Ben, dog

room 4: Bob, cat

room 5: Charlie, turtle

Q67. There are 5 rooms, which are assigned to Alice, Bob, Ken, Ben and Charlie with a dog, parrot, cat, rabbit and turtle. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice <> Ken

2. Ken <> parrot

3. dog != Ben

4. parrot <> cat

5. turtle @ Bob

6. Charlie: o o x o o

7. Ben != Charlie

What are the tenants of the rooms?

Answer:

room 1: Alice, dog

room 2: Ken, rabbit

room 3: Ben, parrot

room 4: Bob, turtle

room 5: Charlie, cat

Q68. There are 5 rooms, which are assigned to Alice, Bob, Ken, Ben and Charlie with a dog, parrot, cat, rabbit and turtle. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice <> cat

2. Bob <> Alice

3. cat <> Ken

4. dog <> rabbit

5. Ken != cat

6. Ben <> turtle

7. parrot <> dog

8. Charlie = turtle

What are the tenants of the rooms?

Answer:

room 1: Bob, parrot

room 2: Alice, dog

room 3: Ben, cat

room 4: Charlie, rabbit

room 5: Ken, turtle

Q69. There are 5 rooms, which are assigned to Alice, Bob, Ken, Ben and Charlie with a dog, parrot, cat, rabbit and turtle. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice = turtle

2. turtle <> rabbit

3. turtle: x o o o o

4. parrot != Bob

5. dog: o o # o o

6. rabbit <> cat

7. Ken: # o o o o

8. rabbit @ Charlie

What are the tenants of the rooms?

Answer:

room 1: Ken, parrot

room 2: Ben, turtle

room 3: Alice, dog

room 4: Charlie, rabbit

room 5: Bob, cat

Q70. There are 5 rooms, which are assigned to Alice, Bob, Ken, Ben and Charlie with a dog, parrot, cat, rabbit and turtle. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. cat != dog

2. dog = Ken

3. Ben <> Alice

4. Bob !@ turtle

5. Ben = Bob

6. Bob <> Ben

7. Charlie: o # o o o

8. cat <> rabbit

What are the tenants of the rooms?

Answer:

room 1: Ken, turtle

room 2: Charlie, dog

room 3: Bob, parrot

room 4: Ben, cat

room 5: Alice, rabbit

Q71. There are 5 rooms, which are assigned to Alice, Bob, Ken, Ben and Charlie with a dog, parrot, cat, rabbit and turtle. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. rabbit: o o o o x

2. Alice <> Ben

3. Ken <> Alice

4. parrot: # o o o o

5. turtle = Ken

6. Ken <> Bob

7. Bob != Ben

8. Ken @ dog

What are the tenants of the rooms?

Answer:

room 1: Charlie, parrot

room 2: Ken, dog

room 3: Bob, turtle

room 4: Alice, rabbit

room 5: Ben, cat

Q72. There are 5 rooms, which are assigned to Alice, Bob, Ken, Ben and Charlie with a dog, parrot, cat, rabbit and turtle. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice @ dog

2. Bob != Alice

3. rabbit !@ Bob

4. turtle <> dog

5. Ken <> Bob

6. cat: o # o o o

7. cat @ Ben

8. parrot: o o o # o

What are the tenants of the rooms?

Answer:

room 1: Ken, rabbit

room 2: Ben, cat

room 3: Bob, turtle

room 4: Charlie, parrot

room 5: Alice, dog

Q73. There are 5 rooms, which are assigned to Alice, Bob, Ken, Ben and Charlie with a dog, parrot, cat, rabbit and turtle. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. rabbit <> Alice

2. cat <> dog

3. cat = Bob

4. Bob <> parrot

5. Ken != turtle

6. rabbit = Ben

7. dog = Charlie

8. rabbit: o o o # o

What are the tenants of the rooms?

Answer:

room 1: Bob, turtle

room 2: Charlie, cat

room 3: Ben, dog

room 4: Ken, rabbit

room 5: Alice, parrot

Q74. There are 5 rooms, which are assigned to Alice, Bob, Ken, Ben and Charlie with a dog, parrot, cat, rabbit and turtle. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice <> Bob

2. Ken: o o o o x

3. cat @ Bob

4. dog: o o x o o

5. Ben != cat

6. Charlie @ rabbit

7. Ben: o o o # o

8. turtle <> Bob

What are the tenants of the rooms?

Answer:

room 1: Alice, turtle

room 2: Bob, cat

room 3: Ken, parrot

room 4: Ben, dog

room 5: Charlie, rabbit

Q75. There are 5 rooms, which are assigned to Alice, Bob, Ken, Ben and Charlie with a dog, parrot, cat, rabbit and turtle. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice = cat

2. Bob @ dog

3. Bob <> Alice

4. cat: o # o o o

5. parrot != rabbit

6. Bob = Ken

7. parrot <> Ben

8. Charlie !@ turtle

What are the tenants of the rooms?

Answer:

room 1: Bob, dog

room 2: Ken, cat

room 3: Alice, parrot

room 4: Ben, turtle

room 5: Charlie, rabbit

Q76. There are 5 rooms, which are assigned to Alice, Bob, Ken, Ben and Charlie with a dog, parrot, cat, rabbit and turtle. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice <> turtle

2. rabbit != Bob

3. parrot @ Bob

4. turtle <> cat

5. Ken != Bob

6. Ben <> Bob

7. Ben @ rabbit

8. Bob: o o o o x

What are the tenants of the rooms?

Answer:

room 1: Ben, rabbit

room 2: Alice, dog

room 3: Bob, parrot

room 4: Charlie, turtle

room 5: Ken, cat

Q77. There are 5 rooms, which are assigned to Alice, Bob, Ken, Ben and Charlie with a dog, parrot, cat, rabbit and turtle. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. cat = turtle

2. Bob: o o # o o

3. Alice != Bob

4. Ken != Bob

5. parrot <> turtle

6. rabbit <> Charlie

7. Bob @ turtle

8. Ken @ parrot

What are the tenants of the rooms?

Answer:

room 1: Ken, parrot

room 2: Ben, rabbit

room 3: Bob, turtle

room 4: Charlie, cat

room 5: Alice, dog
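This question has five persons, five pets and five rooms, and no pet may stay alone. As a result, every room ends up with exactly one person and one pet. That leaves only 5! × 5! = 14,400 candidate layouts, so exhaustive search is cheap.

The following sketch enumerates all of them for Q77. It assumes a hand translation of Q77's restrictions into Python predicates, and the helper names `adjacent` and `solve` are our own. It finds exactly one valid layout, and that layout is the answer listed above.

```python
from itertools import permutations

PEOPLE = ["Alice", "Bob", "Ken", "Ben", "Charlie"]
PETS = ["dog", "parrot", "cat", "rabbit", "turtle"]

def adjacent(a: int, b: int) -> bool:
    return abs(a - b) == 1

# Q77, hand-translated (illustrative encoding); r maps every person and pet to a room 1-5.
Q77 = [
    lambda r: adjacent(r["cat"], r["turtle"]),     # 1. cat = turtle
    lambda r: r["Bob"] == 3,                       # 2. Bob: o o # o o
    lambda r: not adjacent(r["Alice"], r["Bob"]),  # 3. Alice != Bob
    lambda r: not adjacent(r["Ken"], r["Bob"]),    # 4. Ken != Bob
    lambda r: r["parrot"] < r["turtle"],           # 5. parrot <> turtle
    lambda r: r["rabbit"] < r["Charlie"],          # 6. rabbit <> Charlie
    lambda r: r["Bob"] == r["turtle"],             # 7. Bob @ turtle
    lambda r: r["Ken"] == r["parrot"],             # 8. Ken @ parrot
]

def solve(constraints):
    """Try all 5! * 5! = 14,400 layouts and keep those meeting every restriction."""
    solutions = []
    for people in permutations(PEOPLE):
        for pets in permutations(PETS):
            # people[i] and pets[i] share room i + 1.
            r = {name: i + 1 for i, name in enumerate(people)}
            r.update({pet: i + 1 for i, pet in enumerate(pets)})
            if all(check(r) for check in constraints):
                solutions.append(list(zip(people, pets)))
    return solutions

sols = solve(Q77)
print(len(sols))  # 1
for room, (person, pet) in enumerate(sols[0], start=1):
    print(f"room {room}: {person}, {pet}")
```

The loop prints the same five lines as the Answer for Q77. The count of one indicates that the restrictions determine the answer uniquely.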

Q78. There are 5 rooms, which are assigned to Alice, Bob, Ken, Ben and Charlie with a dog, parrot, cat, rabbit and turtle. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. rabbit <> Alice

2. parrot != dog

3. Bob != Alice

4. Ken: o o o o #

5. Ben: # o o o o

6. cat <> rabbit

7. cat: x o o o o

8. Ken = dog

What are the tenants of the rooms?

Answer:

room 1: Ben, parrot

room 2: Bob, cat

room 3: Charlie, rabbit

room 4: Alice, dog

room 5: Ken, turtle

Q79. There are 5 rooms, which are assigned to Alice, Bob, Ken, Ben and Charlie with a dog, parrot, cat, rabbit and turtle. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice != cat

2. Bob: o o o # o

3. turtle = Ken

4. Ben != cat

5. cat: o o o o x

6. rabbit <> Charlie

7. parrot <> Alice

8. cat @ Charlie

What are the tenants of the rooms?

Answer:

room 1: Ben, turtle

room 2: Ken, rabbit

room 3: Charlie, cat

room 4: Bob, parrot

room 5: Alice, dog

Q80. There are 5 rooms, which are assigned to Alice, Bob, Ken, Ben and Charlie with a dog, parrot, cat, rabbit and turtle. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice != cat

2. Bob <> turtle

3. Ken: o o o x o

4. Alice = Bob

5. dog: x o o o o

6. Alice @ dog

7. rabbit: o o o o #

8. Charlie <> turtle

What are the tenants of the rooms?

Answer:

room 1: Charlie, cat

room 2: Bob, parrot

room 3: Alice, dog

room 4: Ben, turtle

room 5: Ken, rabbit

Q81. There are 5 rooms, which are assigned to Alice, Bob, Ken, Ben and Charlie with a dog, parrot, cat, rabbit and turtle. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice @ turtle

2. turtle != parrot

3. Bob != Ken

4. parrot @ Bob

5. cat <> parrot

6. parrot <> Ben

7. Bob <> turtle

8. turtle <> dog

What are the tenants of the rooms?

Answer:

room 1: Charlie, cat

room 2: Bob, parrot

room 3: Ben, rabbit

room 4: Alice, turtle

room 5: Ken, dog
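Exhaustive enumeration grows as (n!)² with the number of rooms n. A common alternative is backtracking search. It assigns rooms one entity at a time and tests each restriction as soon as all of its entities have rooms. A partial layout is abandoned at the first violation.

The sketch below applies this to Q81. The `(names, predicate)` encoding and the function `backtrack` are our own illustration, not part of the dataset. It also finds a single solution, matching the answer above.

```python
ENTITIES = ["Alice", "Bob", "Ken", "Ben", "Charlie",
            "dog", "parrot", "cat", "rabbit", "turtle"]
PEOPLE = set(ENTITIES[:5])

def adjacent(a, b):
    return abs(a - b) == 1

# Q81 as (entities involved, predicate on their room numbers); illustrative encoding.
Q81 = [
    (("Alice", "turtle"), lambda a, t: a == t),               # 1. Alice @ turtle
    (("turtle", "parrot"), lambda t, p: not adjacent(t, p)),  # 2. turtle != parrot
    (("Bob", "Ken"), lambda b, k: not adjacent(b, k)),        # 3. Bob != Ken
    (("parrot", "Bob"), lambda p, b: p == b),                 # 4. parrot @ Bob
    (("cat", "parrot"), lambda c, p: c < p),                  # 5. cat <> parrot
    (("parrot", "Ben"), lambda p, b: p < b),                  # 6. parrot <> Ben
    (("Bob", "turtle"), lambda b, t: b < t),                  # 7. Bob <> turtle
    (("turtle", "dog"), lambda t, d: t < d),                  # 8. turtle <> dog
]

def backtrack(constraints, n_rooms=5, assigned=None, found=None):
    """Collect every complete layout that satisfies all constraints."""
    assigned = {} if assigned is None else assigned
    found = [] if found is None else found
    if len(assigned) == len(ENTITIES):
        found.append(dict(assigned))
        return found
    name = ENTITIES[len(assigned)]
    is_person = name in PEOPLE
    # A room may hold at most one person and at most one pet.
    taken = {room for other, room in assigned.items() if (other in PEOPLE) == is_person}
    for room in range(1, n_rooms + 1):
        if room in taken:
            continue
        assigned[name] = room
        # Test only the restrictions whose entities all have rooms by now.
        ok = all(pred(*(assigned[n] for n in names))
                 for names, pred in constraints
                 if all(n in assigned for n in names))
        if ok:
            backtrack(constraints, n_rooms, assigned, found)
        del assigned[name]  # undo before trying the next room
    return found

solutions = backtrack(Q81)
print(len(solutions))  # 1
print(solutions[0])
```

Persons are placed before pets here, so any restriction that involves a pet is only checked once the persons are fixed. Choosing a different entity order changes how early pruning happens but not which layouts are found.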

Q82. There are 5 rooms, which are assigned to Alice, Bob, Ken, Ben and Charlie with a dog, parrot, cat, rabbit and turtle. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice <> turtle

2. turtle = rabbit

3. turtle: o o o o #

4. rabbit != Ken

5. parrot <> cat

6. cat != rabbit

7. Ben <> Ken

8. Charlie: o o o # o

What are the tenants of the rooms?

Answer:

room 1: Ben, parrot

room 2: Ken, cat

room 3: Alice, dog

room 4: Charlie, rabbit

room 5: Bob, turtle

Q83. There are 5 rooms, which are assigned to Alice, Bob, Ken, Ben and Charlie with a dog, parrot, cat, rabbit and turtle. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice != Bob

2. Alice = dog

3. cat != rabbit

4. Bob = rabbit

5. rabbit = Ken

6. Ben <> Charlie

7. parrot @ Ben

8. Bob <> Charlie

What are the tenants of the rooms?

Answer:

room 1: Ben, parrot

room 2: Bob, turtle

room 3: Charlie, rabbit

room 4: Ken, dog

room 5: Alice, cat

Q84. There are 5 rooms, which are assigned to Alice, Bob, Ken, Ben and Charlie with a dog, parrot, cat, rabbit and turtle. You should fill as many rooms as possible. Here are the restrictions for the room assignment:

1. Alice: o o o x o

2. Bob <> Ken

3. Ben @ cat

4. dog: o # o o o

5. Ken <> Alice

6. dog != parrot

7. Charlie != Ben

8. Bob @ turtle

What are the tenants of the rooms?

Answer:

room 1: Bob, turtle

room 2: Charlie, dog

room 3: Ken, rabbit

room 4: Ben, cat

room 5: Alice, parrot