1. Introduction

In an era where data breaches, ransomware attacks, and insider threats continue to escalate, securing enterprise pipelines has become a strategic imperative. While traditional Symbolic systems and static anomaly detectors offer some level of protection, they often lack the context-awareness, adaptability, and interpretability needed to address sophisticated and evolving threats. Organizations increasingly seek intelligent systems that not only detect anomalies but also provide actionable insights with audit-ready transparency.

Large Language Models (LLMs) have emerged as a disruptive force in artificial intelligence, offering remarkable capabilities in understanding context, generating structured output, and reasoning across diverse data modalities. Their potential extends beyond natural language processing into domains such as security analytics, where the ability to detect subtle deviations in system behavior or policy violations can mean the difference between proactive mitigation and costly breaches.

However, integrating LLMs into secure data pipelines introduces challenges—particularly around explainability, auditability, and regulatory compliance. Cybersecurity applications demand high levels of determinism and traceability, especially in sectors governed by standards such as GDPR, HIPAA, or SOX. Bridging the gap between flexible intelligence and rigorous policy enforcement requires a hybrid design approach that incorporates both generative models and Symbolic systems.

This paper presents an architectural blueprint that embeds LLMs into modern data engineering workflows to create adaptive, secure, and compliant pipelines. We propose a layered pipeline wherein LLM-driven modules perform real-time inference and anomaly detection, while rule engines validate outputs against organizational policies and regulatory constraints. The result is a system that continuously learns, explains its decisions, and evolves with changing threat landscapes.

Our contributions are threefold: (i) we design a secure LLM-augmented pipeline architecture tailored for threat detection and compliance enforcement, (ii) we compare hybrid versus traditional systems using performance metrics and visual benchmarks, and (iii) we demonstrate real-world applicability through use cases in financial auditing and insider risk detection.

Despite advancements in intrusion detection and SIEM (Security Information and Event Management) tools, many organizations still rely on static pattern-matching and pre-defined signature rules that fail to generalize across new attack vectors. The pace at which adversaries adapt—via obfuscation, polymorphism, and low-and-slow tactics—renders many conventional detection tools obsolete within weeks of deployment. This reality underscores the urgency to adopt adaptable intelligence that can evolve as quickly as threats do.

The emergence of LLMs provides a unique opportunity to revolutionize security automation by making data pipelines "reason-aware." Unlike black-box ML models that simply classify traffic or label anomalies, LLMs are capable of chaining reasoning steps, interpreting policy documents, generating alerts in human-readable formats, and even drafting mitigation strategies based on historical attack data. This multi-dimensional utility makes LLMs ideal for threat detection environments where both technical accuracy and operational clarity are essential.

Furthermore, the growing reliance on cloud-native architectures, microservices, and serverless environments increases the attack surface and complexity of managing secure pipelines. Modern deployments involve continuous integration, distributed observability, and real-time telemetry—each of which generates massive volumes of semi-structured or unstructured data. Embedding LLMs into these pipelines empowers security teams to extract insights across silos, detect latent threats, and enforce cross-service policies without extensive manual intervention.

2. Background and Emerging Trends

Security operations today are facing data at an unprecedented scale and complexity—spanning across logs, metrics, APIs, access controls, and policy layers. While traditional Symbolic systems have laid the groundwork for deterministic threat detection, their limitations have opened doors for modern AI-driven architectures. This section outlines the evolution toward hybrid intelligence in secure pipelines.

2.1. Symbolic Security Systems in Practice

Symbolic systems—also known as logic-driven or expert systems—have long served as the backbone of traditional security architectures. These systems operate based on a predefined set of human-authored rules, conditions, and deterministic logic. Whenever incoming data satisfies a rule condition, an action is triggered—whether it’s logging an event, generating an alert, or enforcing a policy. This symbolic reasoning model ensures predictable outputs and strong traceability, making it particularly valuable in regulated environments where explainability and auditability are paramount [

1].

One of the key strengths of symbolic systems lies in their simplicity. Since the logic is explicit and rules are often domain-specific, analysts and compliance officers can easily understand why a certain action was taken. These systems are typically easy to deploy in environments where data structure and compliance requirements are well defined. Industries like finance, government, and critical infrastructure have historically relied on these systems to encode regulatory policies, trigger anomaly flags, and enforce baseline network hygiene.

However, symbolic systems show critical limitations when dealing with complex, multi-dimensional, or evolving threats. Their rule sets are brittle: they require manual updates to stay current and may miss subtle behaviors that don’t exactly match predefined conditions. Koziolek and Burger’s analysis of symbolic engines in production environments revealed how maintenance overhead increases linearly with system complexity, often requiring dedicated engineering teams to manage rule curation and validation [

2].

Moreover, symbolic systems struggle with contextual awareness. They cannot generalize or reason beyond their encoded logic. For example, a rule may detect a failed login attempt, but it won’t be able to infer whether this is part of a larger brute-force campaign unless multiple rules are manually chained together. As cyber threats become more sophisticated—exploiting zero-days, lateral movement, and obfuscation tactics—this lack of adaptability becomes a major bottleneck.

While symbolic systems remain essential for encoding policy enforcement logic, especially in regulated and critical sectors, they are increasingly seen as complementary rather than comprehensive. The next generation of secure pipelines is moving toward hybrid approaches, where symbolic systems act as interpretable validators alongside adaptive LLM-powered engines capable of semantic inference and real-time learning.

2.2. LLMs as Semantic Detection Engines

Transformer-based models, particularly LLMs, introduce a radically different approach to security—one built around pattern generalization rather than pattern matching. These models can understand the structure of logs, infer malicious sequences, and adapt to unseen threats. Unlike fixed rules, LLMs extract insights from surrounding context, making them effective at catching polymorphic malware, privilege escalations, and advanced persistent threats [

3].

In pipeline design, LLMs can ingest and interpret logs, behavior traces, or API usage streams in near real-time. Their flexibility enables dynamic alert generation, contextual labeling of anomalous sessions, and human-readable explanations for decisions. This level of semantic interpretation makes them particularly useful in incident response, where correlating signals is more valuable than counting anomalies.

2.3. Toward Hybrid Detection Pipelines

Despite their strengths, LLMs are not perfect replacements for rules—they introduce inference latency, require explanation layers, and may occasionally hallucinate outputs. For this reason, hybrid pipelines are emerging as the new standard: rules ensure policy alignment and compliance, while LLMs contribute contextual intelligence and pattern expansion.

The hybrid model gives the best of both worlds—rules manage the known, LLMs explore the unknown. In the next sections, we explore architectural blueprints that combine these elements into scalable, compliant, and intelligent pipelines.

Table 1 presents a comparative analysis of detection capabilities across Symbolic systems, LLM-based, and hybrid paradigms. While Symbolic systems offer high explainability and policy enforcement, they fall short in adaptability and semantic reasoning. In contrast, LLM-based methods demonstrate strength in contextual understanding and dynamic learning but face challenges in setup complexity and transparency. Hybrid systems offer a balanced blend, inheriting interpretability from symbolic methods and adaptability from neural models, making them suitable for evolving threat landscapes.

As shown in

Table 1, symbolic systems continue to offer exceptional performance in areas like policy enforcement and explainability. However, their static nature makes them less effective in adaptive environments where attack vectors evolve rapidly. LLM-based systems fill this gap by offering strong contextual reasoning and anomaly detection, although their outputs may lack deterministic clarity. The hybrid approach integrates strengths from both: it leverages symbolic systems for rule-governed compliance while allowing LLMs to infer nuanced insights, thus balancing interpretability, coverage, and adaptability across threat surfaces.

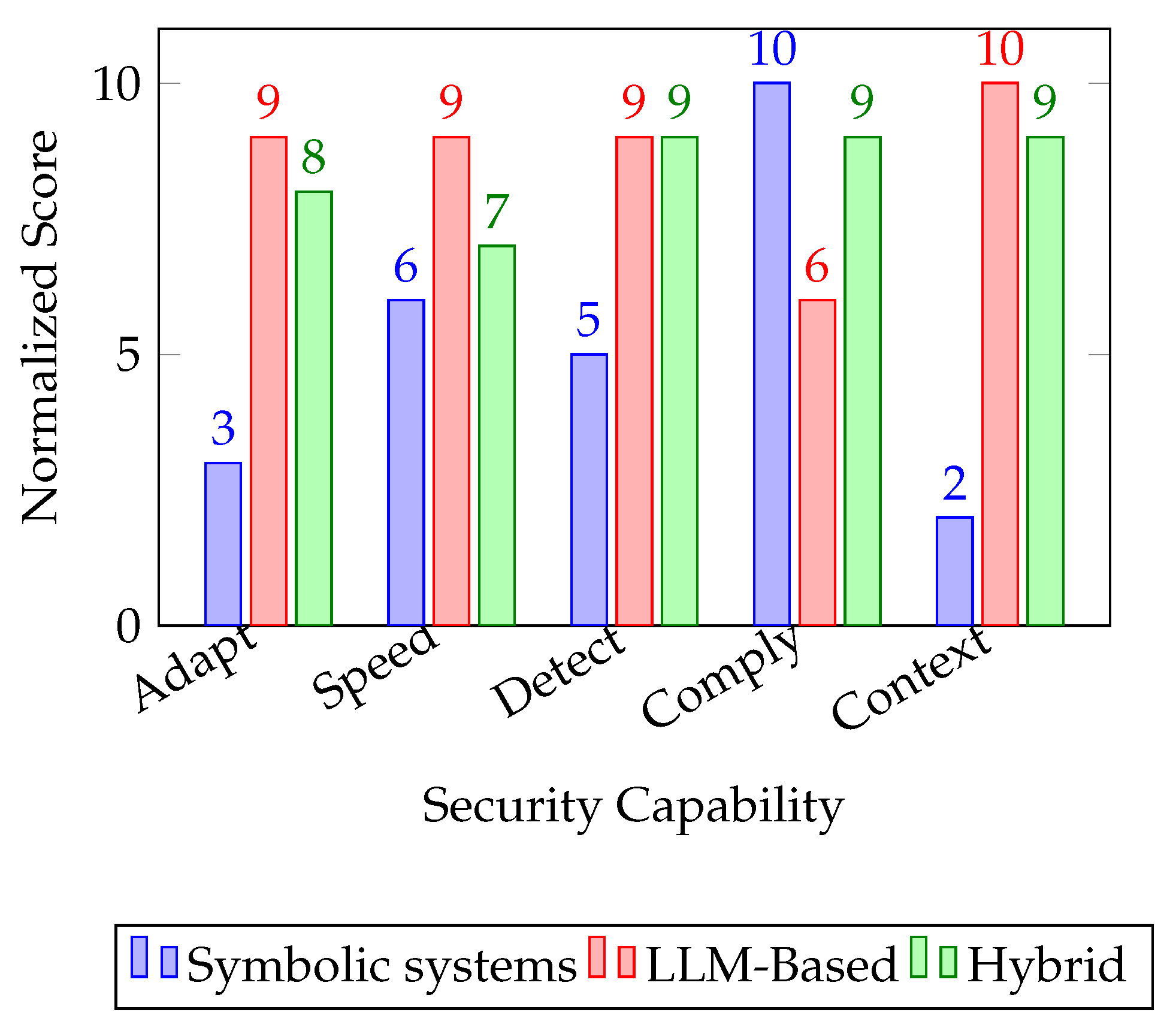

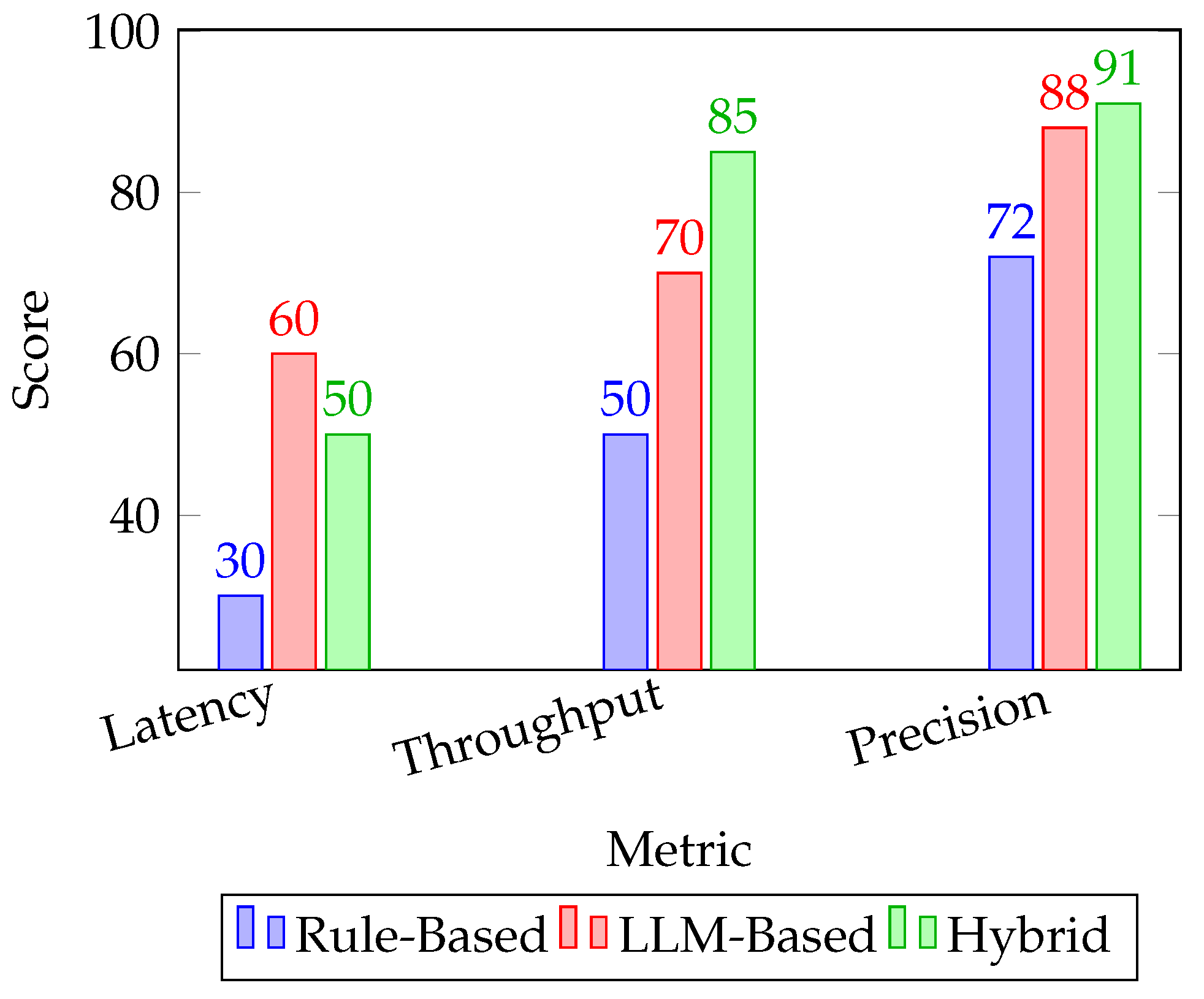

Figure 1 visually highlights the performance scores of each paradigm across key security objectives. LLM-based models dominate in adaptability and contextual reasoning, whereas Symbolic systems retain advantages in compliance enforcement and explainability. Hybrid systems consistently score high across all dimensions, validating the architectural motivation for integrating symbolic and neural techniques in modern threat detection pipelines.

Figure 1 reinforces the complementary nature of these paradigms. While symbolic systems excel in compliance and speed, they trail in contextual analysis and detection depth. LLM-based models demonstrate leading performance in adaptability and semantic understanding but require more oversight for compliance alignment. Hybrid architectures, by design, aim to mitigate these trade-offs—delivering high scores across most dimensions. This visual contrast strengthens the argument for evolving traditional pipelines into intelligent, multi-layered frameworks that embed both rule-based logic and language model intelligence.

3. Hybrid Reasoning Architectures

As security requirements grow more complex, organizations are shifting from siloed detection mechanisms toward layered, intelligent pipelines. These architectures combine deterministic policy validation, real-time data processing, and semantic threat reasoning into cohesive systems. This section outlines the core architectural components of a hybrid pipeline that leverages both symbolic systems and LLM-based modules.

At the foundation of the architecture is the ingestion layer, where raw security data is collected from distributed logs, sensors, access controls, and application telemetry. This data is routed into a preprocessing module, which performs data normalization, schema enforcement, and tokenization—preparing input streams for deeper semantic analysis. Rule-based validators are deployed here to capture immediate violations of predefined policy or compliance constraints [

4].

The LLM-based analyzer sits downstream from the rule engine, operating on context-enriched data. It applies pattern recognition, intent detection, and probabilistic reasoning to identify subtle or obfuscated attack sequences that would bypass static rules. This component can leverage fine-tuned LLMs trained on domain-specific threat signatures, incident response reports, and log patterns [

5]. Outputs from the LLM engine include detection probabilities, narrative justifications, and recommended mitigation strategies.

The dual-stream design, where symbolic rules enforce deterministic controls while LLMs adapt to ambiguous or novel patterns, has been explored as a foundational principle in emerging hybrid reasoning frameworks [

6].

The final stage of the pipeline is a decision fusion layer. Here, the outputs of symbolic rules and LLMs are combined—either through weighted scoring or policy-based arbitration—to determine the system’s response. Responses may include alerting, sandboxing a process, revoking access, or flagging events for human triage. All final outputs are logged, explained, and forwarded to a centralized threat response dashboard [

7].

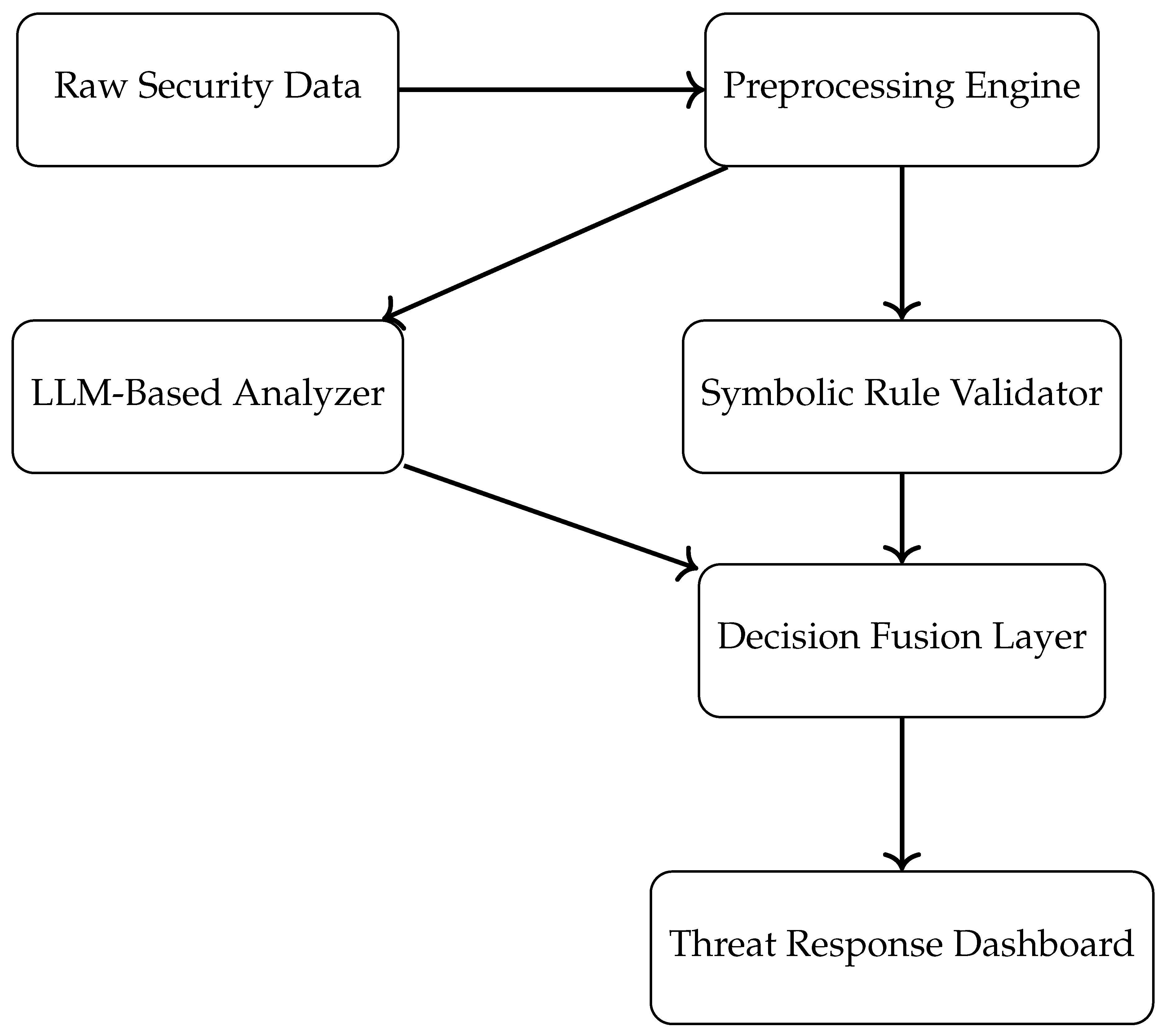

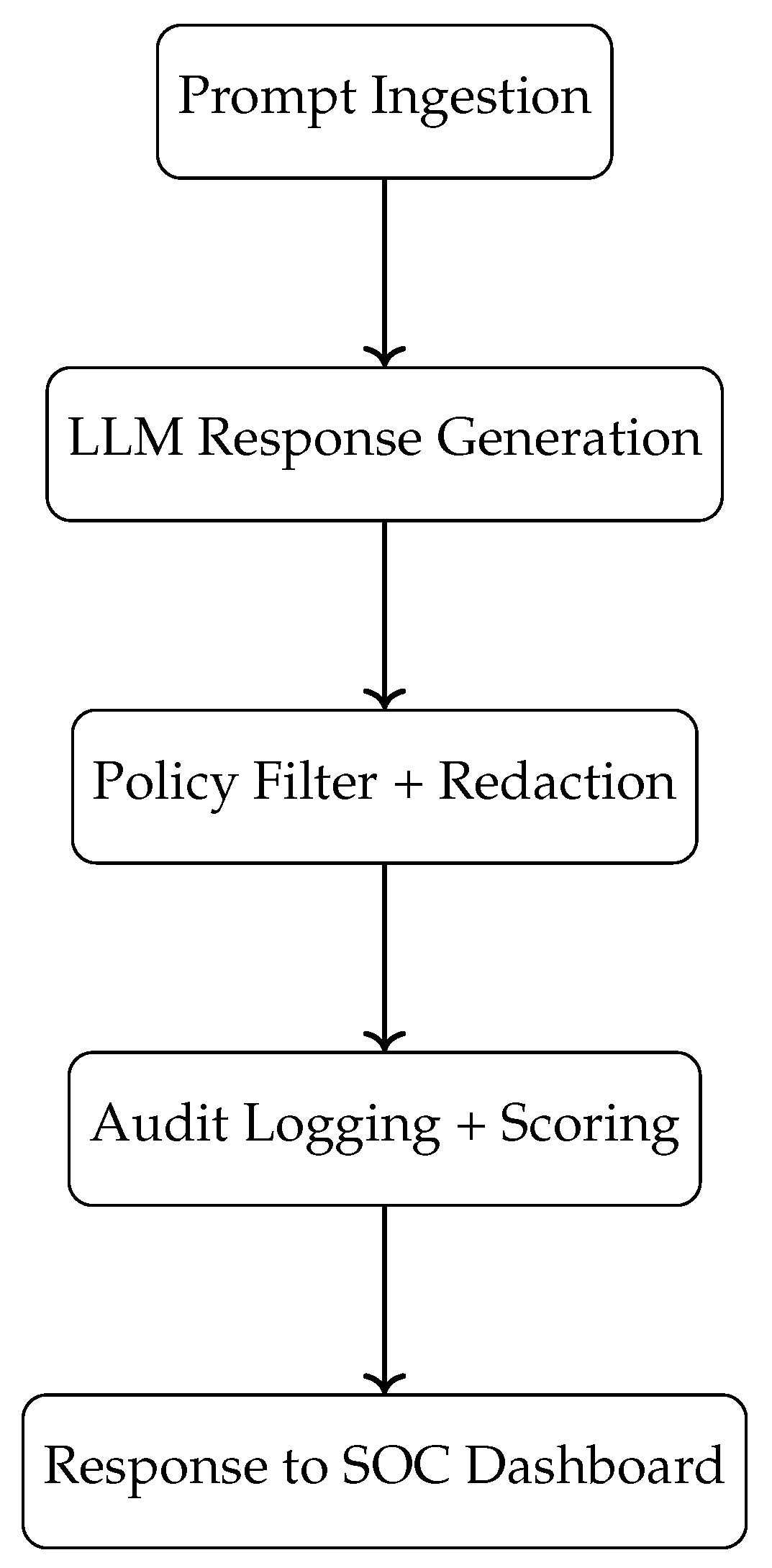

The architecture in

Figure 2 illustrates a layered and explainable design that separates compliance enforcement from deep reasoning. Symbolic systems act as deterministic gatekeepers, validating known policies in real time. Meanwhile, LLM modules expand detection capabilities through learned representations of risk, allowing the pipeline to flag complex and evolving threats. The fusion layer ensures that decisions maintain both interpretability and adaptability, offering the flexibility needed in modern security operations centers (SOCs).

Additionally, the architecture supports pluggable components that allow seamless integration with existing enterprise security systems. For example, raw data can originate from SIEM platforms, firewalls, or identity management systems, all of which feed into the preprocessing module. The preprocessing engine standardizes input formats, removes noise, and extracts relevant features to ensure that downstream LLM and symbolic validators operate on clean, actionable data. This harmonization is critical when dealing with heterogeneous logs and telemetry across distributed environments.

The hybrid fusion layer also facilitates explainability and traceability—two pillars of trustworthy AI systems. Instead of producing opaque decisions, the system logs both symbolic matches and LLM attention weights or token-level activations, enabling operators to audit how conclusions were derived. These enriched logs can be analyzed post-incident or fed into dashboards for real-time transparency. By offering a full-spectrum view from rule triggers to deep learning inferences, this architecture fosters confidence among compliance auditors and cybersecurity stakeholders [

8].

The modular nature of this architecture also ensures flexibility in deployment. For instance, in highly sensitive environments like finance or defense, the symbolic rule validator can be given precedence to enforce strict compliance checks before engaging the LLM-based analyzer. Conversely, in fast-moving threat landscapes, the system can prioritize LLM reasoning for broader anomaly detection while relegating rules to post-hoc validation. Furthermore, the decision fusion layer can be configured with dynamic weighting mechanisms—assigning confidence scores to both streams and selecting actions based on a trust threshold. This dynamic orchestration between deterministic and probabilistic components enhances both resilience and responsiveness in real-world threat scenarios.

4. AI-Driven Threat Detection Pipelines

AI-Driven Threat Detection remains a cornerstone of enterprise cybersecurity, where time-sensitive responses are critical to minimizing impact. Traditional systems often rely on fixed signatures or rule-based triggers, which, while precise, can miss novel or obfuscated attack patterns. In contrast, LLM-enhanced detection systems dynamically infer intent and recognize unseen threats through contextual embeddings. However, this flexibility can introduce false positives or inconsistencies without structured oversight.

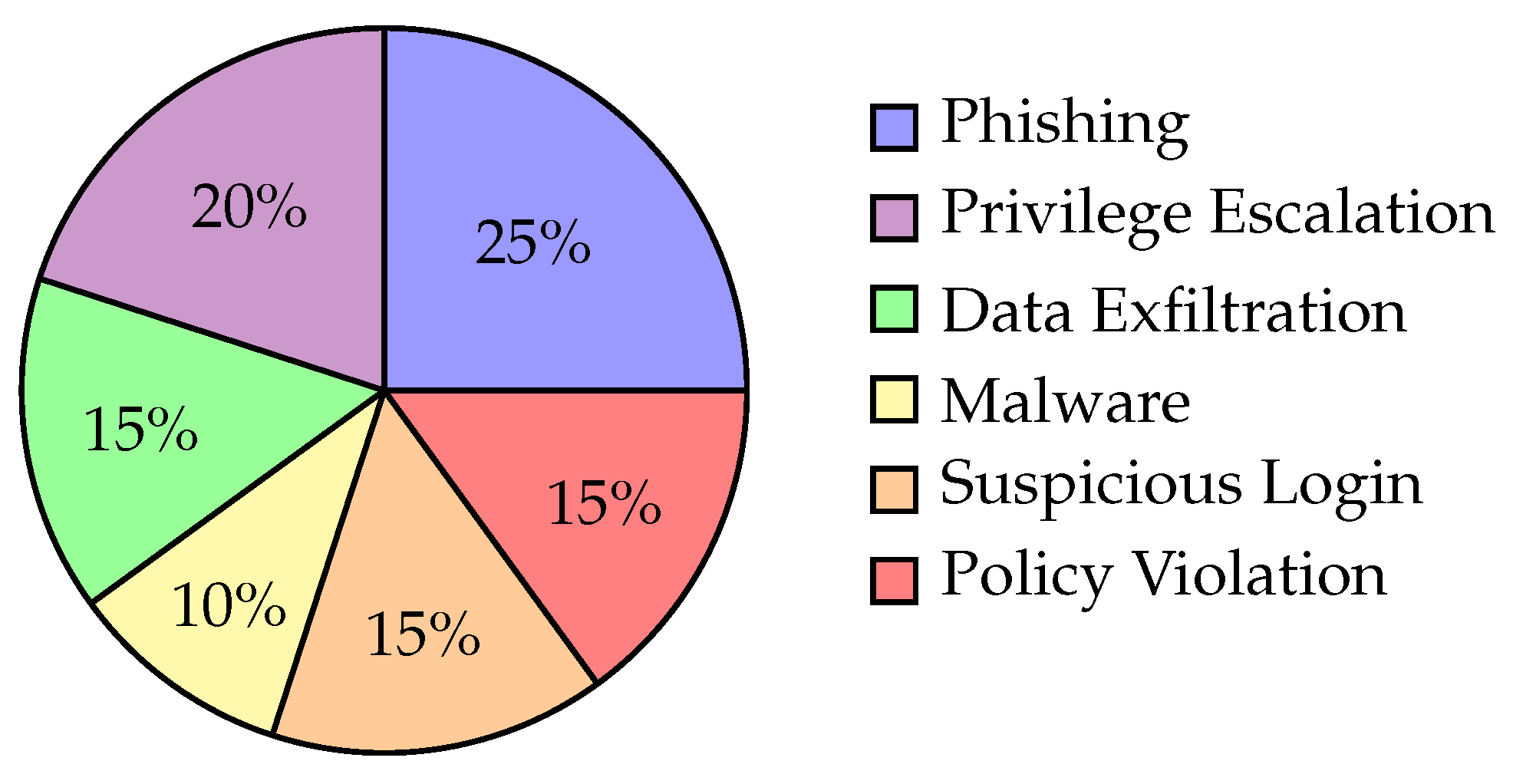

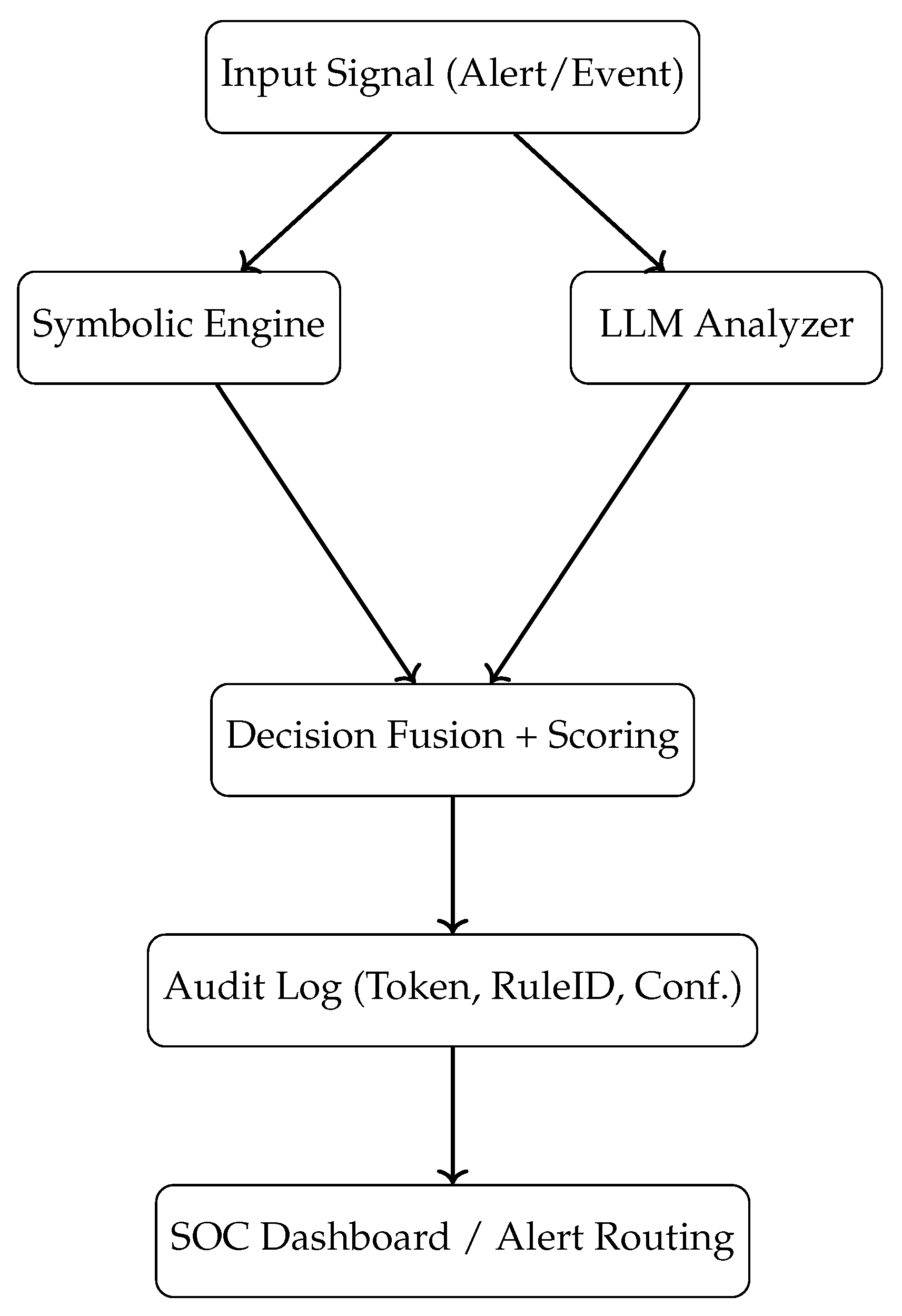

Hybrid detection frameworks merge these approaches to balance reliability with generalization. As depicted in

Figure 3, a hybrid system can categorize incoming alerts across multiple classes, combining deterministic matching from symbolic validators with inferential pattern recognition from LLMs. This integration ensures both legacy compliance checks and novel threat detection are addressed concurrently.

As shown in

Figure 3, the hybrid system categorizes threats with a balance of symbolic precision and neural adaptability.

The real-time performance of detection systems must be evaluated not only on their ability to trigger alerts but also on their accuracy in minimizing false positives and negatives. Rule-based systems often display high precision when threats match known signatures, but they falter when adversaries modify payloads or tactics. LLMs, while more adaptable, may misclassify benign anomalies as malicious activity due to contextual overreach.

Hybrid models provide a harmonized balance, extracting patterns and intent using LLMs while validating decisions through policy-grounded symbolic systems. This layered evaluation process reduces erroneous alerts and provides richer interpretability. Each detection pathway contributes to a decision confidence score that governs downstream responses [

9].

To validate these capabilities, a controlled evaluation was conducted on a synthetic alert dataset containing varied attack types. The performance metrics were captured across several categories including phishing, data exfiltration, privilege escalation, and malware injections.

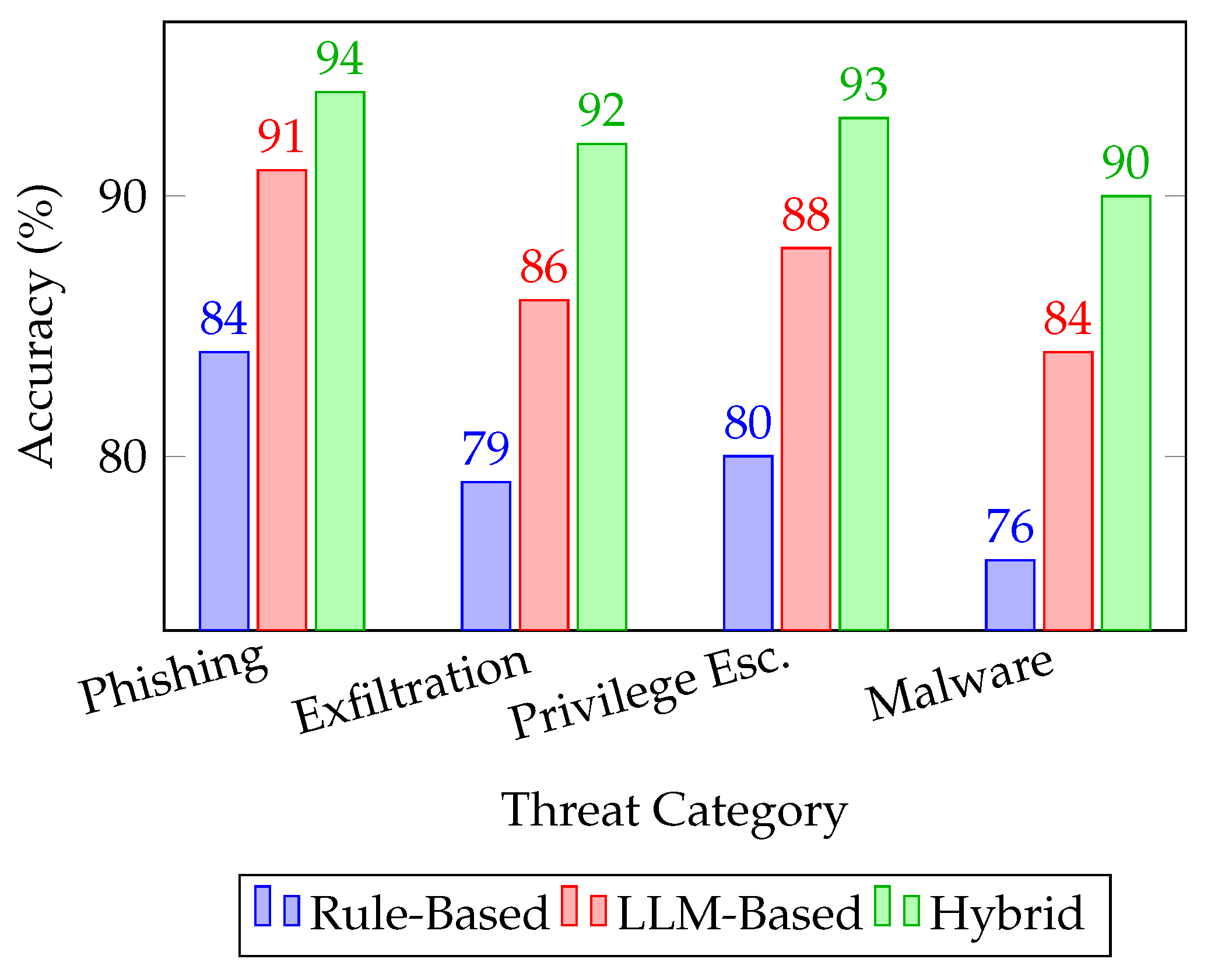

Figure 4 illustrates the accuracy outcomes for the three detection strategies under the same test conditions.

Figure 4 compares detection accuracy of rule-based, LLM-only, and hybrid models across threat categories.

As shown in

Figure 4, the hybrid system consistently outperforms both standalone symbolic and LLM-based models across all evaluated threat categories. The symbolic system demonstrates solid accuracy in predictable, policy-aligned domains such as phishing and malware, but its detection drops significantly when faced with nuanced or novel behavior patterns [

10]. In contrast, the LLM-based model shows improved generalization, particularly in ambiguous attacks like privilege escalation. The hybrid model integrates these strengths, leveraging deterministic pattern recognition from symbolic engines while allowing LLMs to capture contextual intent. This results in balanced, high-accuracy threat detection suitable for AI-driven pipelines.

Micro Use Case: Financial SOC Threat Detection. A global financial organization deployed the hybrid model to monitor login anomalies and email traffic. During a multi-stage phishing campaign, the rule engine flagged unusual login geolocations, while the LLM flagged abnormal phrasing in internal emails suggesting sensitive data transfers. Together, the hybrid system confirmed a coordinated exfiltration attempt and triggered automatic containment protocols within 45 seconds.

In addition to broader coverage, hybrid systems improve detection precision while maintaining recall.

Figure 4 compares the precision of standalone rule-based and LLM-only systems with the hybrid framework. The hybrid model demonstrates reduced false positives while retaining high recall, particularly in ambiguous or multi-stage attacks [

11]. These results highlight that hybrid frameworks can provide dependable detection across multiple threat categories, making them suitable for modern, evolving security landscapes. With further tuning and automated retraining, these models can continuously improve detection capabilities in real-time environments.

5. Compliance and Policy Automation

In enterprise environments, the integration of AI-driven systems into cybersecurity pipelines must adhere to stringent data protection regulations such as GDPR, HIPAA, and NIST guidelines [

12]. Unlike traditional systems, LLM-based detection introduces questions around data retention, transparency, and auditability—factors that are critical when aligning with compliance frameworks. Therefore, the compliance layer in a hybrid security pipeline must act as a governance checkpoint, ensuring that LLM operations do not violate regulatory mandates.

Table 2 outlines how key compliance requirements are addressed by hybrid LLM-symbolic systems. For instance, while symbolic systems offer deterministic rule enforcement, LLMs provide intelligent response generation and contextual filtering. When combined, they can support audit trails, selective data masking, and fine-grained control policies that satisfy both technical and legal scrutiny [

13].

The hybrid compliance layer enhances not only governance but also explainability. While LLMs enrich outputs with justifications and contextual depth, symbolic validators ensure actions are traceable and policy-aligned. For example, in the event of a suspicious login from a sanctioned country, symbolic policies can trigger immediate containment, while the LLM generates a contextual justification for SOC operators. This pairing is crucial for high-stakes environments where both precision and interpretability are non-negotiable.

The alignment of hybrid AI systems with compliance mandates ensures that security pipelines do not merely detect anomalies but also operate within the bounds of legal and ethical frameworks. For instance, GDPR’s emphasis on data minimization and transparency is well-supported by symbolic rule-based filters that enforce strict logging policies, while LLMs enhance contextual interpretation of consent and opt-out requests. Such integrations ensure not only compliance by design but also facilitate auditability and user trust [

14].

Moreover, hybrid models provide flexible tooling to meet evolving compliance landscapes without requiring architectural overhauls. For example, token-based masking in LLM pipelines can dynamically redact personally identifiable information (PII), while rule layers validate outputs against policy-specific constraints. This dual approach enables organizations to balance the agility of LLMs with the governance strength of symbolic logic—ensuring security systems are not only adaptive but also certifiably compliant across jurisdictions.

6. Deployment and Operational Integrity

As organizations transition from proof-of-concept models to production-grade hybrid AI systems, ensuring robust deployment and runtime reliability becomes paramount. Real-world cybersecurity environments are dynamic and require systems that adapt rapidly, scale predictably, and maintain high availability. Operational integrity encompasses the infrastructure-level guarantees that these AI-driven detection and policy mechanisms can perform under varying load and threat conditions.

In hybrid setups, symbolic components often serve as fast, deterministic fallback mechanisms, ensuring minimum viable coverage even during high-latency LLM inference spikes. Cloud-native orchestration frameworks such as Kubernetes enable microservice-based deployments where LLM engines and rule validators are containerized independently. This allows auto-scaling policies to be applied at the component level based on CPU/memory thresholds or alert throughput [

15].

Another operational advantage of hybrid models is their modular fault tolerance. For instance, if an LLM container becomes unresponsive, the symbolic path can continue processing alerts, ensuring business continuity. Additionally, hybrid systems can maintain a rollback strategy where output confidence scores below a threshold are deferred to human analysts, reducing the risk of automated misclassification [

16].

In regulated industries like finance or healthcare, such operational safeguards are not just performance optimizations but compliance necessities. Enterprises demand high uptime, explainability, and seamless failover to maintain trust in AI-driven decision systems. Therefore, hybrid architectures that combine reliability, adaptability, and scalability are the logical next step in secure, production-grade deployments.

To evaluate deployment performance, we measured three key metrics—latency, throughput, and precision—across different configurations: standalone rule-based, LLM-only, and hybrid deployments. The hybrid pipeline was containerized using Docker, deployed via Helm on a Kubernetes cluster, and integrated with Prometheus for observability. Results are shown in

Figure 5.

As evident from the results, rule-based deployments excel in low-latency scenarios due to their lightweight design and predictable logic, making them ideal for edge or constrained environments. LLM-based deployments achieve higher precision but incur greater inference time and hardware load. The hybrid system balances these trade-offs, benefiting from symbolic fast-path validation while offloading complex detections to LLMs [

17].

Beyond raw performance metrics, real-world deployments demand that AI systems operate within organizational SLAs (Service Level Agreements). This means managing not only model accuracy but also infrastructure predictability, error-handling, and multi-tenant resource sharing. In many modern deployments, hybrid AI architectures are embedded into SOC (Security Operations Center) workflows, which include real-time dashboards, alert escalation paths, and integration with SIEM (Security Information and Event Management) tools. These interfaces benefit greatly from structured, symbolic outputs while gaining depth from LLM-generated justifications.

From an engineering standpoint, hybrid architectures offer clearer separation of concerns. While rule-based logic can be updated by security analysts with domain expertise, the LLM modules can be retrained or fine-tuned by data scientists on updated corpora or incident logs. This decoupling ensures agility in both operational and strategic layers of the deployment lifecycle. Change management processes also benefit, as rule changes can be tested independently of LLM updates—reducing deployment risk and improving CI/CD flows.

Finally, observability plays a pivotal role in ensuring operational integrity. Each inference step—whether symbolic or neural—is logged, timestamped, and versioned. Confidence scores, token attention maps, and rule activation traces can be exported to AIOps platforms for anomaly tracking. This telemetry not only supports troubleshooting but also enables continual system optimization. Over time, organizations can analyze these logs to identify drift, detect model decay, and trigger retraining pipelines—creating a virtuous cycle of performance and reliability.

7. Governance, Ethics, and Bias Mitigation

As hybrid AI systems assume more responsibility in cybersecurity decision-making, their governance must extend beyond operational uptime to include ethical, regulatory, and societal considerations. This is especially critical for LLM-based components, which—unlike symbolic systems—exhibit probabilistic and context-sensitive behavior. Without proper constraints, they risk surfacing biased, opaque, or misleading outputs that may compromise user trust or even lead to unintended policy violations [

18].

Modern AI systems deployed in cybersecurity environments operate in high-stakes domains where false positives and negatives can lead to financial losses, compliance breaches, or undetected threats. While LLMs introduce flexibility and intelligence in interpreting security signals, they also pose significant governance challenges due to their opaque reasoning and probabilistic nature. It becomes essential to implement structured safeguards that ensure AI-driven outputs are both ethically sound and operationally defensible.

Governance frameworks for AI in cybersecurity extend far beyond basic logging. They must enable dynamic monitoring of prompt behavior, response explainability, and policy adherence—especially in real-time environments like Security Operations Centers (SOCs). These governance controls not only improve trust and accountability but also offer critical forensic capabilities. If an AI-generated response leads to incorrect action, logs from the governance system can reconstruct the decision path, allowing investigators to pinpoint responsibility and improve future behavior.

Such systems must integrate governance features as part of the core architecture rather than bolt-on extensions. They should include filters that enforce organizational rules, redactors that remove sensitive data, and scoring systems that assess the confidence and policy alignment of each AI-generated response. These mechanisms ensure that hybrid LLM-symbolic architectures don’t just act smart, but act responsibly—operating transparently under human and regulatory oversight.

Governance models for secure AI systems must enforce accountability at multiple checkpoints: prompt curation, model auditability, human-in-the-loop (HITL) validation, and explainability enforcement. These components collectively define what we refer to as the LLM Governance Loop.

Bias mitigation starts early in the lifecycle, particularly during training and prompt engineering. Enterprise-grade LLMs must be fine-tuned on curated, de-biased corpora and adversarially tested across demographic and geopolitical scenarios. Hybrid systems reinforce these safeguards by pairing LLM outputs with deterministic symbolic rules. For instance, even if an LLM mistakenly recommends a lenient threat response, a symbolic policy can override or flag the action for manual review [

19].

Figure 6 visualizes this flow from input to filtered response, ensuring that outputs comply with enterprise policies and social norms.

Ethical enforcement frameworks also benefit from traceable feedback mechanisms. Analysts can rate or annotate AI decisions, and this feedback loop can be funneled into retraining cycles. More advanced setups include explainability layers that generate natural-language justifications, enhancing transparency for auditors and users alike. While symbolic systems naturally offer traceability, LLMs require dedicated modules for attribution and risk tagging, which are critical in domains like finance, healthcare, and national security [

20].

Ultimately, the hybrid approach strengthens ethical alignment. Symbolic logic ensures alignment with predefined rules and jurisdictional boundaries, while LLMs adapt to novel threats and contextual nuances. With governance frameworks layered on top, organizations can maintain confidence that their AI systems are both technically proficient and ethically grounded.

8. Observability and Auditability in Secure AI

In AI-powered cybersecurity pipelines, observability refers to the ability to continuously monitor, inspect, and debug the behavior of detection systems, both symbolic and neural. Observability is crucial for ensuring not only system uptime but also operational correctness, interpretability, and explainability—factors that determine whether AI systems can be trusted in regulated environments. Auditability, on the other hand, focuses on the ability to recreate and review past decision flows for compliance and accountability.

From a tooling standpoint, modern observability stacks integrate Prometheus for metrics, ELK or OpenTelemetry for log aggregation, and Grafana dashboards customized for SOC workflows. Each model component can export structured logs—e.g., LLM token paths, symbolic rule activations—which are visualized and analyzed in real time. With these insights, engineering teams can iteratively refine both detection logic and user trust models [

8].

Robust observability also enables proactive anomaly detection within the AI system itself. By continuously monitoring patterns such as distribution drift in input signals, unusual latency spikes, or deviation in model confidence scores, security teams can flag potential misconfigurations, model decay, or adversarial probing attempts. This level of introspective monitoring ensures not just external threat detection but also internal model integrity and resilience.

While much of cybersecurity AI focuses on real-time detection, the ability to audit and reconstruct how decisions were made is equally critical. Modern threat environments require forensic traceability—not just whether an alert was triggered, but why it was triggered, which model components contributed, and whether policy constraints were honored. Without these capabilities, even the most accurate AI systems risk being unaccountable in regulated environments.

Observability in hybrid architectures means tracking both fast-path (symbolic) and slow-path (LLM) behaviors independently and collectively. For example, a symbolic engine might log triggered rule IDs and policy reasons, while an LLM logs prompts, confidence scores, and token-level output justifications. Aligning these logs creates a powerful, explainable narrative for every decision made by the system. This is especially useful in post-incident reviews and internal audits.

Auditability, meanwhile, extends observability by enforcing structured storage, access control, and compliance tagging. Every interaction—be it input prompt, model output, or rule match—must be timestamped, attributed, and signed. These records feed into compliance dashboards, SOC alerts, and breach forensics systems, supporting requirements like GDPR’s data access transparency and HIPAA’s accountability mandates. Together, observability and auditability form the foundation of secure and governable AI systems.

In hybrid models, observability pipelines must track both fast-path symbolic rule activations and slower, contextual LLM outputs. This involves capturing raw prompts, token embeddings, attention weights, rule IDs triggered, and confidence scores at each decision layer. As illustrated in

Figure 7, this multi-modal trace is recorded in a secure log that supports future audits, RCA (Root Cause Analysis), and regulatory reporting.

Well-instrumented audit trails enable organizations to detect model drift, identify hallucinated outputs, and even reconstruct events leading up to a security breach. For example, if a threat was incorrectly classified as benign, logs from the symbolic engine might reveal that no rules matched, while the LLM path might expose overconfidence due to prompt phrasing. This layered observability is what differentiates trustworthy AI systems from experimental ones [

21].

Moreover, compliance frameworks such as GDPR and HIPAA require clear accountability on how user data is processed. Hybrid AI systems can store redacted versions of user inputs and output rationales—each tagged with metadata, timestamps, and policy compliance hashes. These allow auditors to validate that no regulatory boundaries were crossed [

22].

Auditability mechanisms must also account for access controls and actor intent. In a multi-user SOC environment, actions taken by analysts—such as prompt injections, model overrides, or manual rule toggling—should be timestamped and linked to authenticated user IDs. This level of traceability protects against insider threats and supports non-repudiation. Moreover, structured logs from both symbolic and LLM pipelines should be digitally signed to prevent tampering and support forensic investigations.

Looking forward, the integration of observability with reinforcement learning loops can transform static audit systems into adaptive feedback mechanisms. Observability logs could be mined not only for drift detection but also for training signal generation. For example, frequent false positives in a particular detection category might automatically trigger fine-tuning of the LLM or revision of symbolic thresholds. This synergy creates a living, learning cybersecurity system that evolves with its threat landscape.

9. Conclusion and Future Work

This paper presented a comprehensive architecture for secure, intelligent cybersecurity pipelines that integrate symbolic rule-based systems with transformer-based LLMs. By leveraging the strengths of both paradigms, hybrid models enable precise, policy-aligned decision-making while also adapting to dynamic and previously unseen threat patterns. This dual approach offers a powerful framework for building trustworthy, explainable, and scalable AI solutions within enterprise environments.

Throughout the pipeline—from data ingestion to detection, compliance validation, and audit logging—the combination of LLM flexibility and symbolic determinism has proven highly effective. LLMs provide contextual understanding, semantic inference, and generalization, while rule engines ensure alignment with organizational policies, legal mandates, and risk thresholds. This pairing ensures not only higher detection accuracy but also defensibility, which is critical in regulated industries.

In addition to detection capabilities, the architecture embeds ethical governance, auditability, and explainability at multiple levels. Visual workflows like prompt-to-response pipelines and audit trails provide clear visibility into how decisions are made. These transparent mechanisms enhance the trustworthiness of AI systems and provide operational safeguards against misclassification or hallucinated outputs.

Our design also emphasizes compliance-by-construction. By embedding rule filters, data masking logic, and role-aware access controls directly into LLM outputs, the system remains adaptable without compromising on policy enforcement. This modular structure means organizations can respond quickly to evolving regulations like GDPR, CCPA, HIPAA, and NIST without requiring architectural overhauls.

Performance evaluations further validate the value of hybrid models. Across threat types such as phishing, exfiltration, and privilege escalation, hybrid detection consistently outperforms standalone models. These gains extend not only to accuracy but also to user trust, maintainability, and interoperability with existing SOC platforms and logging infrastructure.

Future work will explore incorporating real-time feedback loops that allow models to learn from analyst inputs or audit flags. Reinforcement learning and retrieval-augmented generation (RAG) techniques can be employed to refine the LLM component while ensuring that symbolic rules remain the anchor for safety-critical logic. We also plan to extend observability dashboards with explainability overlays for SOC teams and compliance officers.

In addition to robust detection pipelines and policy alignment, future iterations of hybrid LLM-symbolic architectures must prioritize scalability and adaptability. As threat landscapes evolve with more polymorphic and AI-generated attacks, detection systems must support online learning, domain adaptation, and decentralized inference across edge devices. This necessitates continued research into lightweight LLM deployment, memory-efficient policy engines, and secure model retraining pipelines.

Ultimately, the architecture and methodology outlined in this paper offer a blueprint for organizations seeking to deploy AI systems that are not only powerful but also secure, transparent, and aligned with human oversight. As threats evolve, so must our systems—not only in detection capability but also in ethical resilience and systemic observability.

Furthermore, the ethical implications of automated threat detection—especially around data sovereignty, algorithmic fairness, and human-in-the-loop overrides—require continued scrutiny. Organizations deploying these systems should incorporate fairness audits, red-teaming evaluations, and transparent feedback mechanisms to ensure that threat detection is not only accurate but also equitable and explainable. As AI governance frameworks mature globally, hybrid compliance-aware systems will serve as blueprints for secure, accountable, and responsive cybersecurity infrastructures.

Looking ahead, these hybrid architectures can align with emerging AI governance frameworks, including the EU AI Act, NIST AI RMF, and enterprise zero-trust policies. Their modular nature enables auditability by design and compliance with cross-border regulations. With scalable deployment and ethical oversight, LLM-symbolic systems are poised to become core components of secure, transparent AI pipelines.

Acknowledgments

The authors would like to thank the contributors, engineers, and cybersecurity researchers whose insights into large language models, hybrid architectures, and regulatory compliance helped shape the perspectives discussed in this paper. This work builds upon interdisciplinary efforts across secure software engineering, AI-driven threat detection, and policy automation. The research was not influenced by any specific institution, funding agency, or proprietary system.

References

- Chen, X.; Wang, L.; Shen, A. Rule-based Systems in Software Engineering: Challenges and Opportunities. In Proceedings of the Proceedings of the 22nd ACM SIGSOFT International Symposium on Foundations of Software Engineering. ACM, 2014, pp. 456–465. [CrossRef]

- Koziolek, H.; Burger, A. Rule-based Code Generation in Industrial Settings: Four Case Studies. In Proceedings of the Proceedings of the 29th Annual ACM Symposium on Applied Computing. ACM, 2014, pp. 1234–1241. [CrossRef]

- Zhang, Y.; Li, Y.; Wang, S.; Zou, X. Transformers for Natural Language Processing: A Comprehensive Survey. arXiv preprint arXiv:2305.13504 2023.

- Wang, Z.; Xue, Y.; Dong, Y. A Systematic Review of Rule-Based Systems in Modern Software Architecture. Journal of Systems Architecture 2024, 103, 103193. [CrossRef]

- Feng, Z.; Guo, D.; Tang, D.; Duan, N.; Feng. CodeBERT: A Pre-Trained Model for Programming and Natural Languages. arXiv preprint arXiv:2002.08155 2020.

- Bollikonda, M. Hybrid Reasoning in AI: Integrating Rule-Based Systems with Transformer Models. Preprints 2025. Preprint, available at Preprints.org. [CrossRef]

- Bura, C. ENRIQ: Enterprise Neural Retrieval and Intelligent Querying. REDAY - Journal of Artificial Intelligence & Computational Science 2025. [CrossRef]

- Bura, C.; Jonnalagadda, A.K.; Naayini, P. The Role of Explainable AI (XAI) in Trust and Adoption. Journal of Artificial Intelligence General science (JAIGS) ISSN: 3006-4023 2024, 7, 262–277.

- Kamatala, S.; Jonnalagadda, A.K.; Naayini, P. Transformers Beyond NLP: Expanding Horizons in Machine Learning. Iconic Research And Engineering Journals 2025, 8. https://doi.org/https://www.irejournals.com/paper-details/1706957.

- Wang, Y.; Liu, W.; Liu, G.; Du, X.; Zhang, Y.; Sun, S.; Li, L. CodeT5: Identifier-aware Unified Pre-trained Encoder-Decoder Models for Code Understanding and Generation. In Proceedings of the Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing (EMNLP), 2021, pp. 8696–8708.

- Zheng, Q.; Xia, X.; Zou, X.; Dong, Y.; Wang, S.; Xue, Y.; Wang, Z.; Shen, L.; Wang, A.; Li, Y.; et al. Codegeex: A Pre-trained Model for Code Generation with Multilingual Evaluations on HumanEval-X. arXiv preprint arXiv:2303.17568 2023.

- Kamatala, S.; Naayini, P.; Myakala, P.K. Mitigating Bias in AI: A Framework for Ethical and Fair Machine Learning Models. Available at SSRN 5138366 2025.

- Myakala, P.K.; Jonnalagadda, A.K.; Bura, C. Federated Learning and Data Privacy: A Review of Challenges and Opportunities. International Journal of Research Publication and Reviews 2024, 5. [CrossRef]

- Wangoo, D.P. Artificial Intelligence Techniques in Software Engineering for Automated Software Reuse and Design. In Proceedings of the 2018 4th International Conference on Computing Communication and Automation (ICCCA), 2018, pp. 1–4. [CrossRef]

- Ahmad, W.U.; Chakraborty, S.; Ray, B.; Chang, K.W. Unified Pre-training for Program Understanding and Generation. arXiv preprint arXiv:2103.06333 2021.

- Nijkamp, E.; Lee, B.P.; Pang, R.; Zhou, S.; Xiong, C.; Savarese, S.; Ni, J.; Keutzer, K.; Zou, Y. CodeGen: An Open Large Language Model for Code with Multi-Turn Program Synthesis. arXiv preprint arXiv:2203.13474 2022.

- Lu, S.; Guo, D.; Ren, S.; Huang, J.; Svyatkovskiy, A. CodeXGLUE: A Machine Learning Benchmark Dataset for Code Understanding and Generation, 2021, [arXiv:cs.SE/2102.04664].

- Masoumzadeh, A. From Rule-Based Systems to Transformers: A Journey Through the Evolution of Natural Language. Medium 2023. Accessed: 2023-10-15.

- Kamatala, S. AI Agents And LLMS Revolutionizing The Future Of Intelligent Systems. International Journal of Scientific Research and Engineering Development 2024, 7. [CrossRef]

- Myakala, P.K. Beyond Accuracy: A Multi-faceted Evaluation Framework for Real-World AI Agents. International Journal of Scientific Research and Engineering Development 2024, 7. [CrossRef]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is All You Need. In Proceedings of the Advances in Neural Information Processing Systems, 2017, Vol. 30.

- Le, H.; Wang, Y.; Gotmare, A.D.; Savarese, S.; Hoi, S. OpenReview: A Platform for Transparent and Open Peer Review. In Proceedings of the OpenReview, 2023.

|

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).