1. Introduction

Practical text data classification and comprehension [1,2,3] are crucial to a range of applications, such as social media analysis [4,5,6,7,8], recommendation systems, and automated content moderation [9,10,11]. During the last decade, extensive efforts have been made to develop methods that process and extract information from short texts, which carry restricted and condensed information. Among the most effective approaches in this area are BERT, TF-IDF, and the integrated LDA+BERT+AE method. BERT (Bidirectional Encoder Representations from Transformers) is a deep learning model that allows systems to learn contextual relations within text more efficiently. TF-IDF, a long-established statistical technique, quantifies the importance of words in a document and is thus a valuable tool for information filtering and the identification of essential topics. The combined LDA+BERT+AE method aims to unite LDA (Latent Dirichlet Allocation) topic modeling, BERT contextual understanding, and AE (autoencoder) dimensionality reduction and optimization.

The importance of analyzing short texts with intelligent clustering methods [12] stems from the growing need for rapid processing and understanding of the vast amounts of compressed text data characteristic of modern digital communication. With the growth of social networks, websites, and mobile applications, the number of short messages such as tweets, statuses, and comments is increasing, and natural language processing systems must analyze them faster and more accurately. These messages contain valuable information about public opinion, user preferences, and current trends, so their analysis is crucial in marketing, brand management, political analysis, and many other fields. Hence, developing and optimizing models such as BERT, TF-IDF, and LDA+BERT+AE to handle such texts efficiently and accurately is a high priority in artificial intelligence and NLP [13,14,15].

This work aims to improve the quality and speed of text data clustering so that intelligent clustering methods can be employed for more precise and efficient retrieval of useful information. Particular emphasis is placed on the flexibility and utility of these methods in applications where traditional approaches struggle because data are scarce or heterogeneous. The results of this work can improve short text analysis, providing a deeper and more accurate understanding of content and helping to implement more effective NLP systems. The importance of this topic increases in the digital era, as data volumes grow exponentially. Short texts such as tweets, user reviews, and social media comments represent a significant portion of this content. Traditional analysis methods [16,17,18] are often ineffective for such formats because of their brevity and the high concentration of meaning in a limited number of words. This challenges researchers to select suitable data processing tools and adapt them to the specific requirements of short text formats.

The impact of contextual analysis on the quality and accuracy of clustering is also considered. Modern technologies such as BERT [19,20] offer a new approach to language understanding, allowing systems to deal better with the ambiguities and complexities of natural language. Integrating BERT with LDA [21,22] and AE [23,24,25] within a single solution opens up new opportunities to improve analysis accuracy through a deeper understanding of text structure and semantics. This work aims to demonstrate how such combined approaches can enhance the clustering and classification of short texts, considering their unique characteristics and needs. Thus, the study offers a comprehensive view of the problem of analyzing short texts and examines promising directions for developing clustering technologies based on data mining. The results obtained in this work contribute to natural language processing and can be used to create more efficient and adaptive NLP systems capable of coping with a wide range of tasks.

In [26], the authors discuss the importance of analyzing short texts, such as social media posts, for clustering and knowledge extraction. They review different approaches to short text clustering (STC) that address the problems of sparsity, high dimensionality, and lack of information, and they analyze and summarize research results from five authoritative databases. In [27], the authors discuss the popularity and simplicity of the K-means algorithm for data clustering despite its many limitations, such as the need to pre-specify the number of clusters, dependence on the initial cluster centers, and difficulty detecting complex and overlapping clusters. They also review research aimed at improving the performance and robustness of K-means, provide an overview and classification of its variants, and discuss its history, current trends, open questions, and prospects for future research.

The article [28] discusses adapting marketing strategies to digital systems in the e-commerce era to improve customer relationship management (CRM). The authors focus on using big data mining to predict customer intentions and make advertising decisions based on the clustering of target groups and customer recommendations. The study examines the application of sentiment analysis in e-commerce systems, highlighting its essential role in understanding customer behavior through sentiment mining. It proposes a business intelligence framework integrated with decision-making, forecasting, and recommendation system modeling, using hybrid feature selection based on rule-based sentiment analysis and machine learning, for future trends in intelligent digital marketing. The paper [29] discusses the growing interest in short text topic modeling (STTM) on social platforms such as Twitter, Facebook, and Weibo. The authors comprehensively review and classify existing STTM algorithms, analyzing their strengths and weaknesses. They also benchmark the quality and performance of STTM models on real Twitter datasets, including pandemic and cyberbullying data, using metrics such as topic coherence, purity, NMI, and accuracy. The paper discusses open challenges and future research directions in STTM, providing useful information for researchers studying modern topic modeling algorithms for short texts and developing new methods in this area.

The paper [30] discusses the importance of load forecasting (LF) for smart grids (SGs) and its role in ensuring the reliability, stability, and efficiency of these networks. The authors systematically review modern forecasting methods, including traditional, clustering-based, artificial intelligence (AI), and time series methods, analyzing their performance and results. The paper aims to identify the most suitable LF methods for specific applications in SGs. The results show that AI-based LF methods using machine learning (ML) and neural network (NN) models demonstrate the best forecast accuracy compared to other methods, as measured by root mean square error (RMSE) and mean absolute percentage error (MAPE). The paper [31] presents a new short text clustering method to improve the accuracy and efficiency of text mining on social media platforms such as Twitter, Facebook, and Weibo. The Topic Representative Term Discovery (TRTD) method is based on identifying closely related and meaningful terms that frequently appear in texts on the same topic. This approach can effectively group short texts by common topics, improving clustering results. Experiments on real data show that TRTD outperforms state-of-the-art methods in accuracy and efficiency.

2. Materials and Methods

This work presents an end-to-end approach to text data analysis and clustering that integrates two advanced natural language processing technologies, LDA (hidden Dirichlet lattice) and BERT (bidirectional encoding of representations from transformers). This hybrid method called dependent embedding, aims to overcome the limitations inherent in each method when used in isolation. In addition to these methods, the study also uses TF+IDF to improve the estimation of word importance in texts. The LDA method (

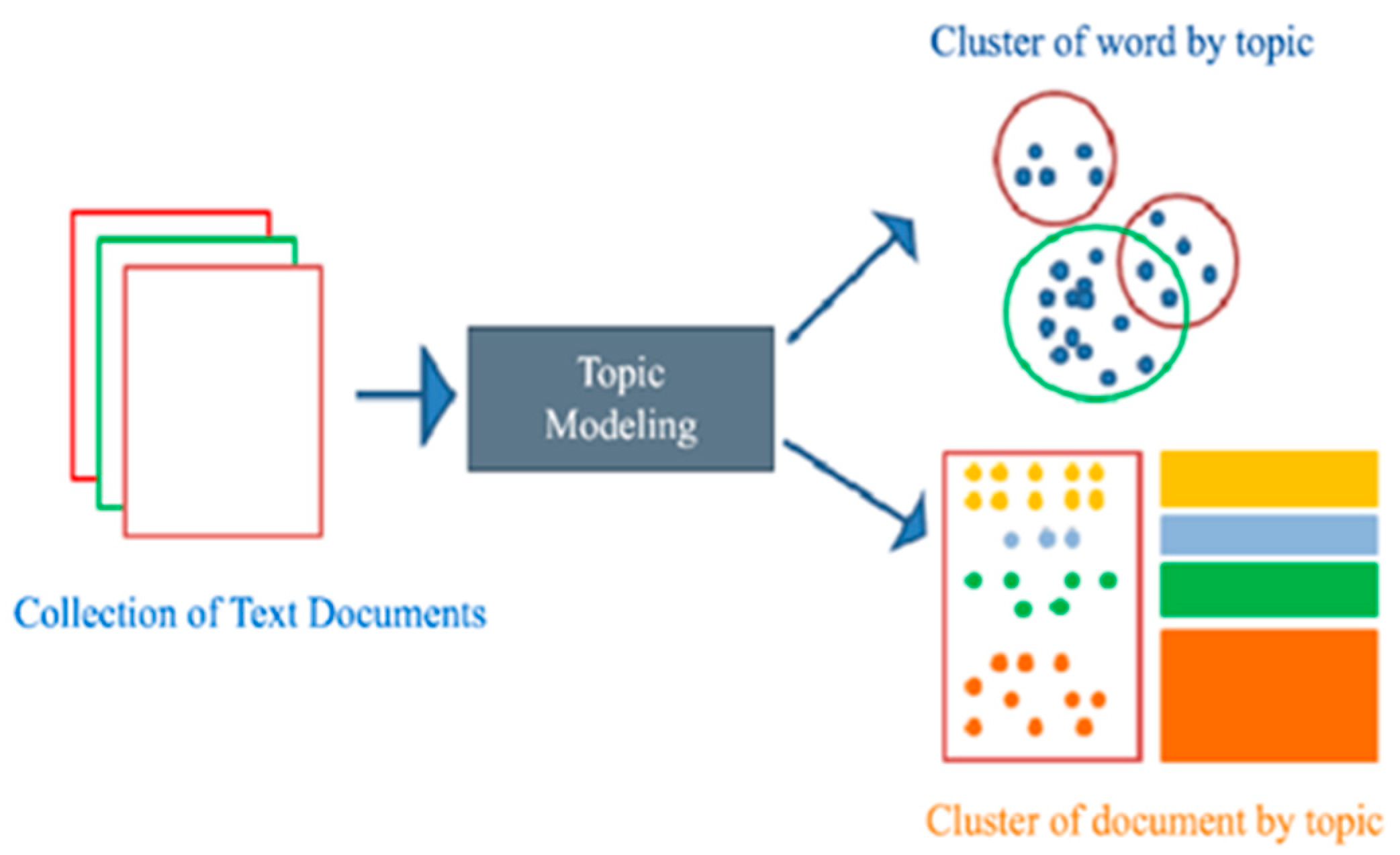

Figure 1), as a statistical approach to topic modeling, allows you to identify common topics in large text arrays. The main disadvantage of LDA is its inability to account for word order and meaning, which can significantly limit its applicability for text analysis where meaning is critical.

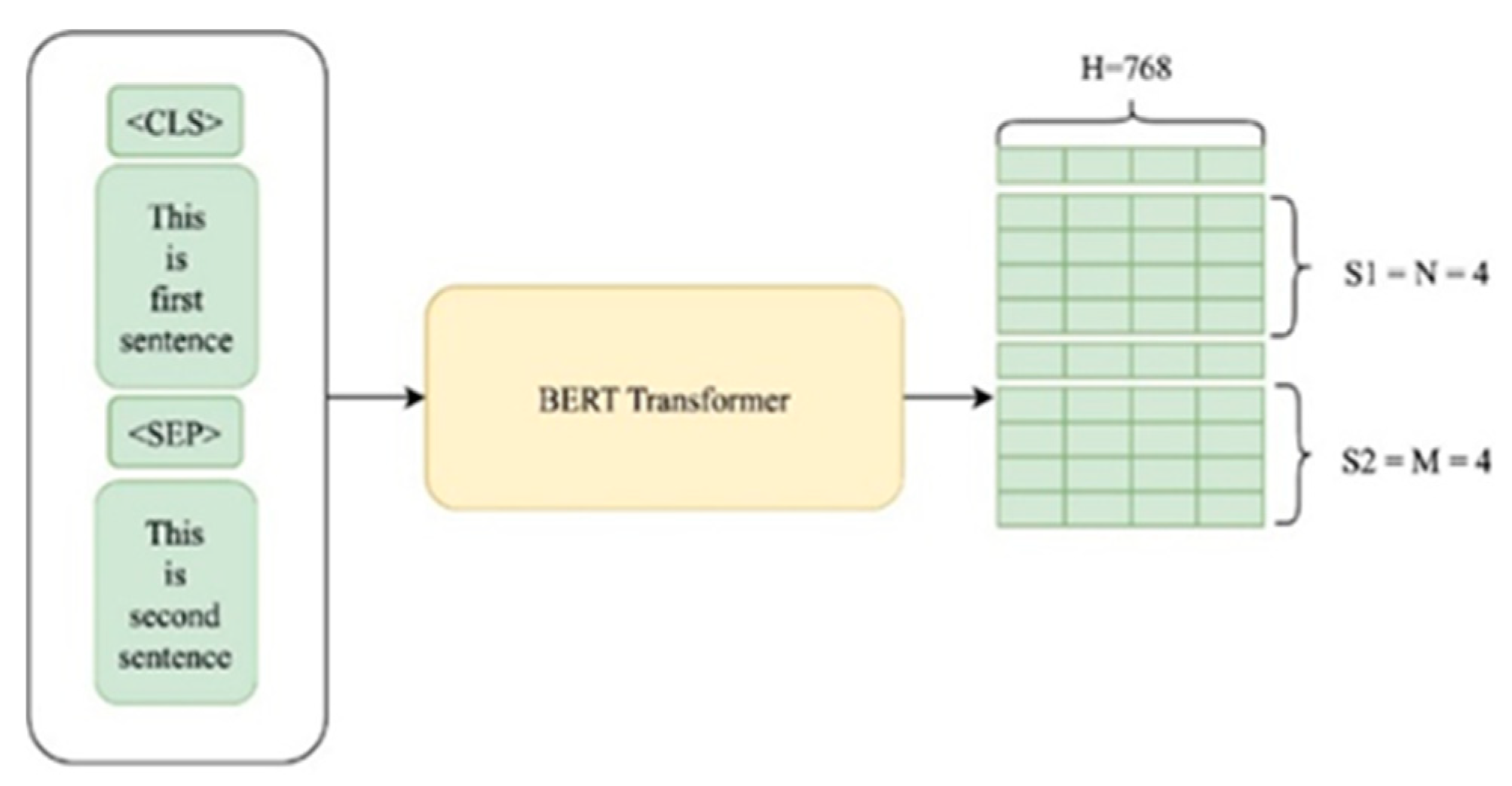

In response to this limitation, BERT is introduced, a deep learning model based on the transformer architecture that analyzes each word in relation to all other words in a sentence, thereby capturing the contextual nuances of language. The analysis begins with data preprocessing, which includes removing text noise, such as special characters and stop words, and tokenizing and lemmatizing words. LDA is then applied to identify broad topic clusters, which reveals the common themes in a collection of texts. BERT is then used to create a vector for each word, allowing for a deeper understanding of the semantic relationships and nuances present in the texts (Figure 2).

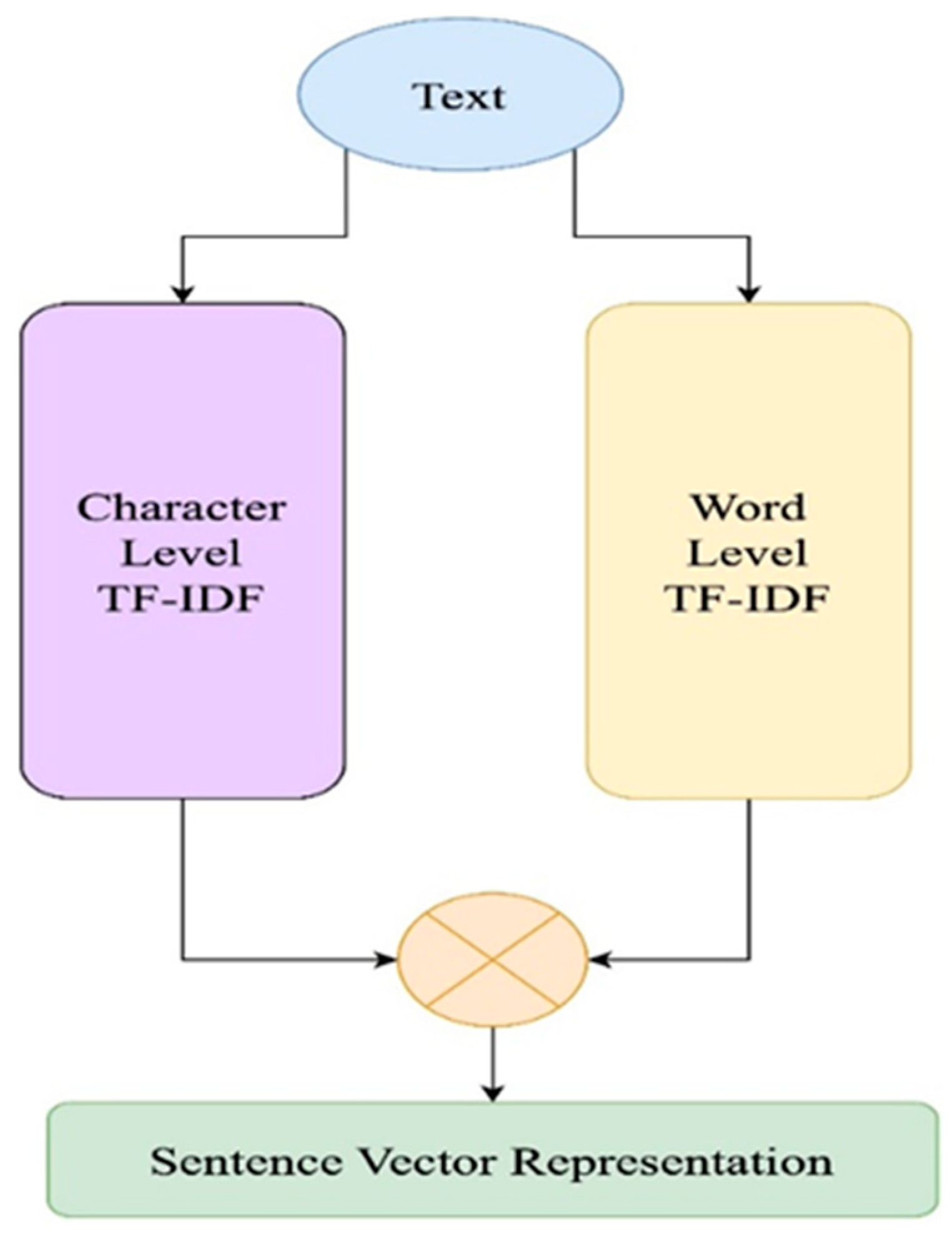

This study uses the TF-IDF (Term Frequency-Inverse Document Frequency) method to further improve the quality of analysis and clustering. This method estimates the importance of each word in a single document by weighing its frequency in that document against the number of documents in the corpus that contain it. This approach to weighting terms makes it possible to identify the most significant keywords for specific texts. It provides a more accurate and informative representation of texts for the subsequent stages of clustering (Figure 3).
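The term weighting described above can be illustrated with a minimal sketch. It assumes the plain variant, tf × log(N / df), on already tokenized, non-empty documents; the function name `tf_idf` and the toy documents are hypothetical, and library implementations typically apply additional smoothing and normalization, so their values will differ.

```python
import math
from collections import Counter


def tf_idf(docs):
    """Return one {term: weight} dict per tokenized, non-empty document."""
    n_docs = len(docs)
    # Document frequency: number of documents that contain each term.
    df = Counter(term for doc in docs for term in set(doc))
    weights = []
    for doc in docs:
        counts = Counter(doc)
        weights.append({
            # Term frequency in this document times inverse document frequency.
            term: (count / len(doc)) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return weights


# Hypothetical toy corpus, used only for illustration.
docs = [
    "stocks fall as markets react".split(),
    "team wins the cup final".split(),
    "markets rally as stocks rise".split(),
]
weights = tf_idf(docs)
```

In this toy corpus, "team" occurs in only one document and therefore receives a larger weight than "stocks", which occurs in two of the three.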

The metrics used to evaluate clustering quality include the following: silhouette, which indicates how well objects are separated from each other during clustering (the higher, the better); the Calinski-Harabasz index (calinski_harabasz), which evaluates intra-cluster and inter-cluster variation (the higher, the better); the Davies-Bouldin index (davies_bouldin), an averaged measure of cluster "similarity" (the lower, the better); the adjusted Rand index (adjusted_rand, ARI), which compares predicted clusters with ground truth labels (the higher, the better); homogeneity, which shows how well each cluster contains objects from only one true category; completeness, which reflects how well objects of the same category fall into one cluster; and v_measure, the harmonic mean of homogeneity and completeness (the higher, the better).

The parameter γ controls the relative weight of "topic" features (LDA vector) compared to "contextual" features (BERT vector): increasing γ increases the contribution of the topic-based features (LDA) when forming the feature space. In the code, this is implemented as (1):

$$\mathrm{vec}_{lda\_bert} = \left[\gamma \cdot \mathrm{vec}_{lda},\ \mathrm{vec}_{bert}\right] \quad (1)$$

where $[\cdot,\ \cdot]$ concatenates two arrays: (LDA * γ) and BERT. We selected γ = 15 based on a series of preliminary experiments (values ranging from 1 to 20), as γ = 15 achieved the best balance between topic-based and semantic information (Table 1).
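The γ-weighted concatenation can be sketched in a few lines. This is an illustration under stated assumptions, not the code from the repository: the function name `combine_features` and the short vectors are hypothetical, with a four-element list standing in for a 768-dimensional BERT embedding.

```python
def combine_features(vec_lda, vec_bert, gamma=15.0):
    """Concatenate gamma-scaled topic features with contextual features."""
    # Scaling only the LDA part raises its influence on distance-based clustering.
    return [gamma * p for p in vec_lda] + list(vec_bert)


# Hypothetical inputs: a topic distribution with k = 3 and a short
# stand-in for a 768-dimensional BERT embedding.
vec_lda = [0.7, 0.2, 0.1]
vec_bert = [0.12, -0.40, 0.33, 0.05]
combined = combine_features(vec_lda, vec_bert)
```

Because K-Means relies on Euclidean distance, multiplying the topic probabilities by γ directly changes how much a difference in topic distribution contributes to the distance between two documents.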

The hybrid LDA+BERT+AE method is an advanced approach to text data analysis that combines LDA (Latent Dirichlet Allocation) for topic modeling, deep learning with BERT (Bidirectional Encoder Representations from Transformers), and dimensionality reduction using autoencoders (AE). This combination identifies the main topics in large text collections while accounting for contextual relationships between words, capturing subtle nuances of language. Figure 4 illustrates the overall structure of this method. The input to the model is a collection of documents (D). The LDA module generates topic distributions p(topic∣document) of dimensionality k, and BERT provides contextual embeddings of 768 dimensions. These outputs ($\gamma \cdot \mathrm{vec}_{lda}$ and $\mathrm{vec}_{bert}$) are concatenated into a single vector and passed to the autoencoder, which compresses the vector into a lower-dimensional latent space and reconstructs it. The latent representation produced by the autoencoder is then used for clustering with the K-Means algorithm, where the number of clusters equals k. The results include cluster labels, the trained LDA model (for interpretability), and the autoencoder (for reuse). This method enhances the quality of clustering and visualization. It provides a deeper semantic understanding of the text, making it particularly effective for analyzing complex short texts and supporting various NLP tasks (Figure 4).
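The compress-and-reconstruct role of the autoencoder can be sketched as follows. This is a deliberately simplified illustration: it assumes a purely linear encoder and decoder trained with per-sample gradient descent on the squared reconstruction error, whereas the architecture used in this study is not specified here and is likely nonlinear. All names (`train_linear_autoencoder`, `matvec`) and the toy data are hypothetical.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def train_linear_autoencoder(data, latent_dim, epochs=300, lr=0.02, seed=0):
    """Train a linear encoder/decoder pair; return the encoder and loss history."""
    rng = random.Random(seed)
    n_features = len(data[0])
    enc = [[rng.uniform(-0.1, 0.1) for _ in range(n_features)]
           for _ in range(latent_dim)]
    dec = [[rng.uniform(-0.1, 0.1) for _ in range(latent_dim)]
           for _ in range(n_features)]
    losses = []
    for _ in range(epochs):
        total = 0.0
        for x in data:
            z = matvec(enc, x)        # encode into the latent space
            x_hat = matvec(dec, z)    # decode back to the input space
            err = [a - b for a, b in zip(x_hat, x)]
            total += sum(e * e for e in err)
            # Gradient of the squared error with respect to the latent code,
            # computed before the decoder weights are changed.
            grad_z = [sum(dec[i][j] * err[i] for i in range(n_features))
                      for j in range(latent_dim)]
            for i in range(n_features):
                for j in range(latent_dim):
                    dec[i][j] -= lr * 2 * err[i] * z[j]
            for j in range(latent_dim):
                for i in range(n_features):
                    enc[j][i] -= lr * 2 * grad_z[j] * x[i]
        losses.append(total / len(data))
    return enc, losses


# Hypothetical 4-dimensional inputs that lie in a 2-dimensional subspace.
data = [
    [1.0, 0.0, 1.0, 0.0],
    [0.0, 1.0, 0.0, 1.0],
    [1.0, 1.0, 1.0, 1.0],
    [0.5, 0.0, 0.5, 0.0],
    [0.0, 0.5, 0.0, 0.5],
]
enc, losses = train_linear_autoencoder(data, latent_dim=2)
latent = matvec(enc, data[0])  # compact representation passed on to clustering
```

In the pipeline described above, the input would be the concatenated LDA and BERT vector, and `latent` corresponds to the representation handed to K-Means.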

2. Logarithmic transformation of features. To reduce the impact of outliers and smooth the data distribution, some numerical features (e.g., temperature or land area) undergo a logarithmic transformation. This transformation helps the model adapt to data with an extensive range of values. Let

be the value of feature

for observation

. The logarithmic transformation is performed as (3):

Several stages of data preprocessing were used to address the short text clustering problem. First, all texts were cleaned of noise, including special characters, links, numbers, and stop words. Then, the texts were tokenized and lemmatized to bring words to their base form, which improved the quality of the input data for the models. A grid search was used to find the best hyperparameters of the BERT and LDA models. This included adjusting the number of topics for the LDA model and the sequence length for BERT.

The hybrid LDA+BERT+AE model operates in the following stages. First, the LDA model was used to identify topic clusters, after which BERT was used to extract vector representations of words, taking into account the category types. The AE then reduced the dimensionality of the vector space, improving clustering accuracy. The results of this integrated approach are evaluated by comparison with traditional text clustering methods. Integrating LDA+BERT+AE is expected to significantly enhance clustering accuracy, providing a deeper understanding of texts and more efficient extraction of useful information. This research shows the potential of the "context-aware embedding" approach to improve the processing and analysis of text data, which has important implications for a wide range of applications in natural language processing.

Several works referenced in this study support the effectiveness of advanced techniques such as BERT, TF-IDF, and the hybrid LDA+BERT+AE approach in short text clustering. Notably, the methodologies outlined by Manias et al. (2023) [1] and Fu et al. (2023) [2] have been considered. These studies emphasize the advantages of multilingual strategies and ensemble methods, particularly in text categorization and sentiment analysis. Integrating these approaches has been shown to enhance the accuracy and efficiency of clustering tasks in the study of short texts.
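The cleaning steps described above (removing links, numbers, special characters, and stop words, followed by tokenization) can be sketched with the standard library alone. The stop-word set below is a small illustrative subset, the function name `preprocess` is hypothetical, and lemmatization is omitted because it requires an external NLP library.

```python
import re

# Illustrative subset only; a real pipeline would use a full stop-word list.
STOP_WORDS = {"the", "a", "an", "and", "of", "to", "in", "is", "at", "on", "for"}


def preprocess(text):
    """Lowercase, strip links, digits, and special characters, drop stop words."""
    text = text.lower()
    text = re.sub(r"https?://\S+|www\.\S+", " ", text)  # remove links
    text = re.sub(r"[^a-z\s]", " ", text)               # remove digits and symbols
    return [token for token in text.split() if token not in STOP_WORDS]


tokens = preprocess("Check https://example.com: the team won 3 games in 2024!")
```

Here `tokens` is `["check", "team", "won", "games"]`. Note that the character filter assumes English text; other languages would need a different pattern.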

3. Results and Discussion

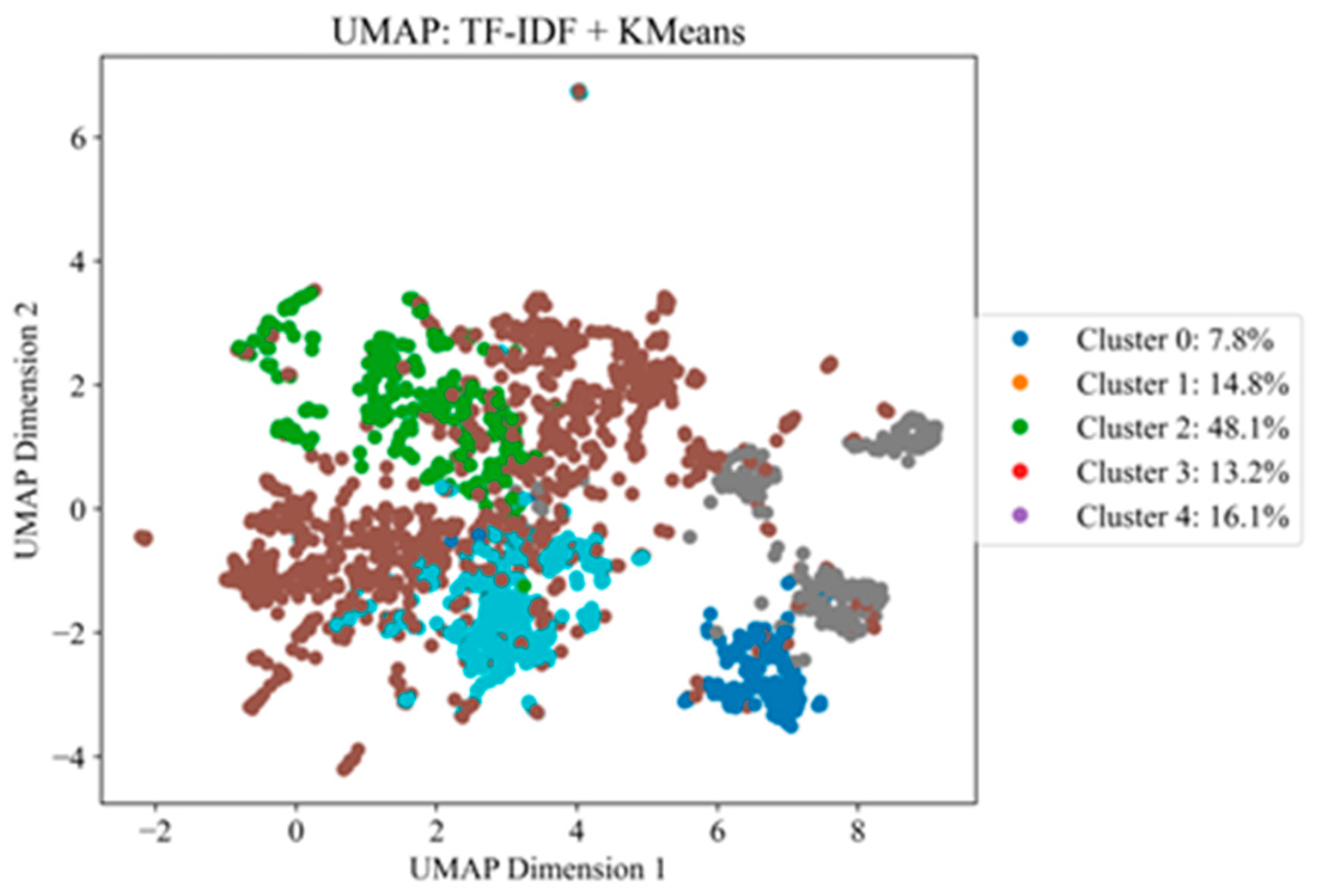

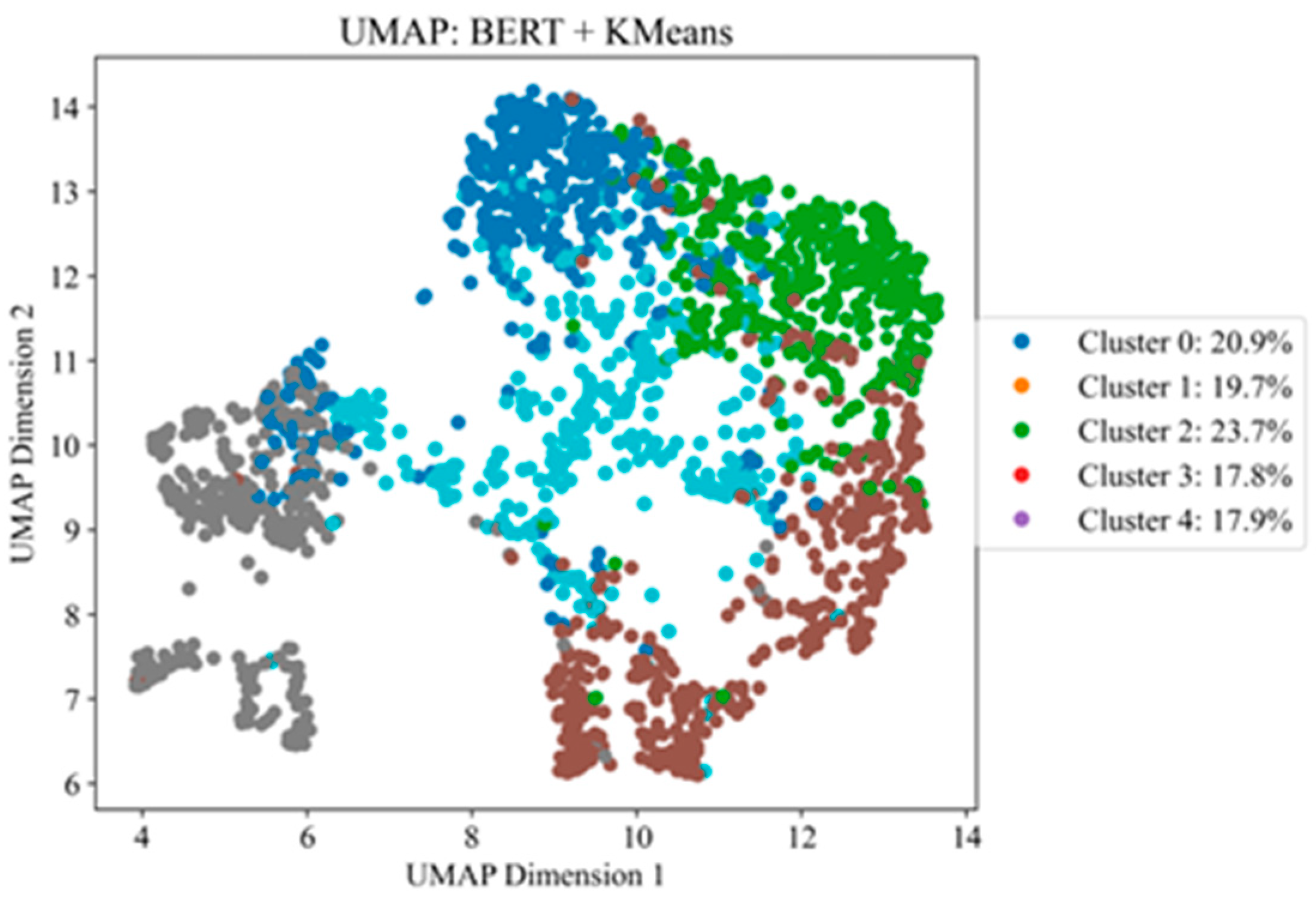

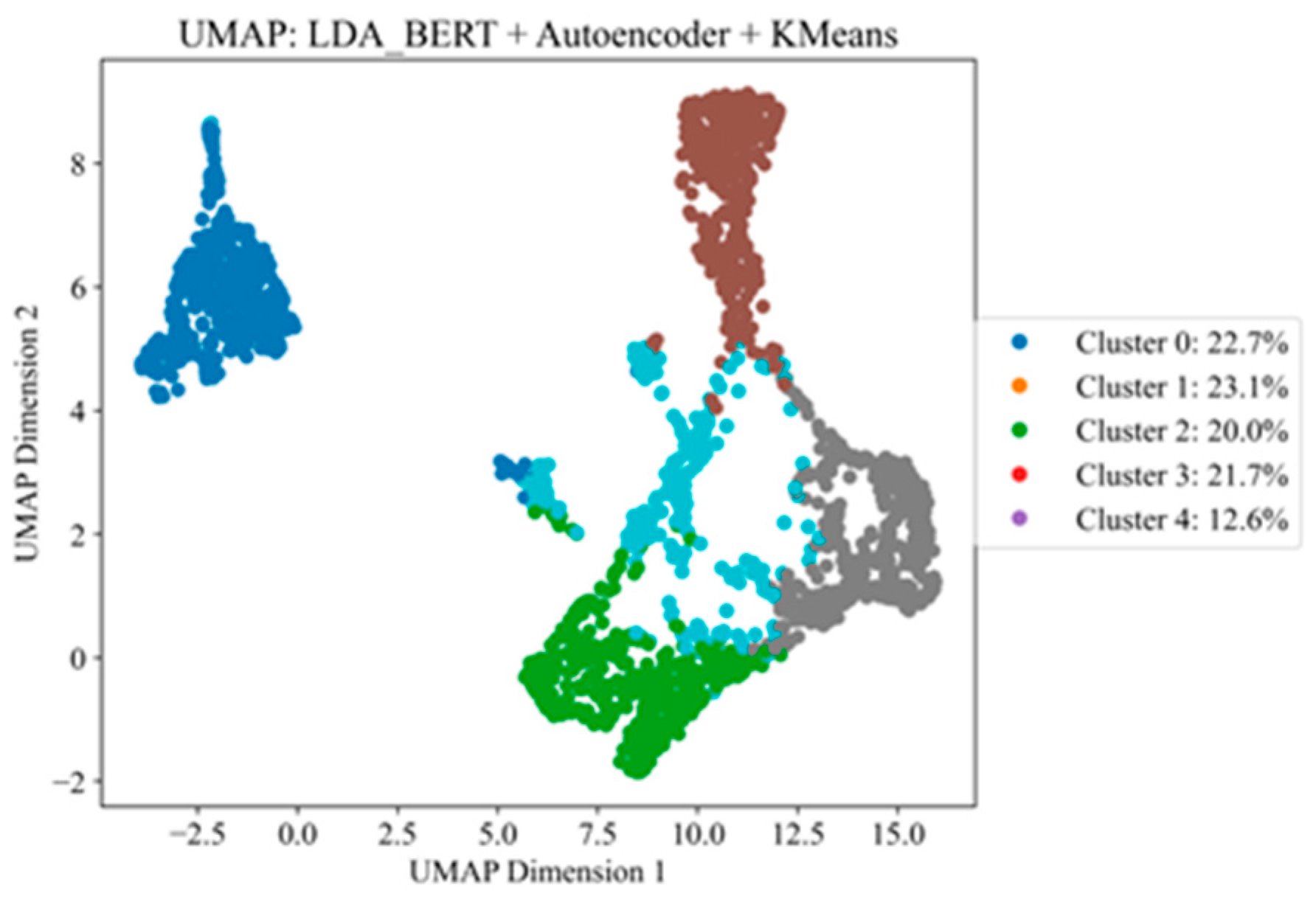

The training dataset used in our study, available on the Kaggle platform, includes 2225 entries classified into five categories: sports, technology, business, entertainment, and politics. The data, collected from news articles on a website, form a balanced and diverse set, which makes it a good basis for studying and comparing different clustering methods. The dataset has two primary columns: category and text. The "Category" column assigns each article to one of the five categories, with "sports" being the most common. The "Text" column contains the full text of the news article. The articles vary in length and content and cover a wide range of topics within their categories; however, there are also duplicates with identical text. This dataset is well suited to developing and testing text classification models that learn to identify the category of a text from its content, and to natural language processing (NLP) applications such as topic modeling, keyword analysis, or automatically sorting news articles into the appropriate sections of a website.

First, we show how different vectorization methods (TF-IDF, BERT, LDA_BERT) affect document clustering in Uniform Manifold Approximation and Projection (UMAP) plots. UMAP projected the text data into a two-dimensional space after feature extraction using TF-IDF, BERT, or the hybrid LDA+BERT+AE. UMAP provides non-linear dimensionality reduction while preserving the topological structure of the data. The quality of the projection depends directly on the tuning parameters (n_neighbors, min_dist, etc.) and on the complexity of the data. The primary purpose of using UMAP here is to visualize the clustering results, not to assess classification accuracy. Each document is represented as a point in the 2D space, and its color corresponds to its cluster (obtained by the K-Means method). These visualizations show to what extent objects are grouped (or, conversely, mixed) under a particular feature variant: TF-IDF, pure BERT embeddings, or a hybrid combination of LDA and BERT.

However, the concatenation of LDA and BERT alone may not yield the clearest structure of the vector space. We therefore introduce an autoencoder (AE), a neural network trained without labels that compresses (encodes) the combined LDA+BERT vector into a more compact latent representation and decodes it back. In this way, the model learns to eliminate redundant information and capture the most relevant factors of variation. The K-Means algorithm with k clusters is used to cluster the transformed documents. K-Means iteratively minimizes the sum of squared distances between points and cluster centroids, forming groups of similar documents in the resulting vector space. K-Means was chosen for its simplicity, its widespread use, and its sufficiency for an initial assessment of the effectiveness of the various vectorization methods (TF-IDF, BERT, LDA_BERT).
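The two alternating steps of K-Means described above (assigning each point to its nearest centroid, then moving each centroid to the mean of its members) can be sketched as follows. This is a minimal illustration with random initialization and a fixed number of iterations; the function names and toy points are hypothetical, and production implementations add better initialization and convergence checks.

```python
import random


def squared_distance(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def k_means(points, k, iterations=50, seed=0):
    """Cluster points into k groups; return labels and centroids."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # random initial centroids
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        labels = [
            min(range(k), key=lambda c: squared_distance(p, centroids[c]))
            for p in points
        ]
        # Update step: each centroid moves to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centroids


# Hypothetical 2D points forming two well-separated groups.
points = [[0, 0], [0, 1], [1, 0], [10, 10], [10, 11], [11, 10]]
labels, centroids = k_means(points, k=2)
```

In the setting of this study, `points` would be the latent vectors produced by the autoencoder (or the TF-IDF or BERT vectors for the baselines), and k would equal the number of topics.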

Figure 5 shows the result of data clustering performed using the TF-IDF (Term Frequency-Inverse Document Frequency) method. This method transforms texts into a vector space where each dimension corresponds to a single term, allowing the degree of content similarity between different documents to be measured. The graph shows the five clusters, and the distribution indicates the percentage of the total data that falls into each cluster. The TF-IDF method has disadvantages, especially when used with short texts such as reviews or comments. The main problem is that TF-IDF loses context because it does not consider grammar or word order. This can make the approach ineffective for loosely coupled and unstructured data where semantic relations between words are significant.

Figure 6 demonstrates the clustering results using sentence embeddings obtained from the BERT (Bidirectional Encoder Representations from Transformers) model. Unlike TF-IDF, which processes each word separately and across the entire document corpus, BERT takes into account the bidirectional context of words in a sentence. This provides rich and differentiated vector representations, which is especially important for the analysis of sentences and paragraphs where context critically influences the meaning of words and phrases.

Figure 7 presents the results of clustering performed using a combined approach that joins two powerful text analysis methods, LDA (Latent Dirichlet Allocation) and BERT (Bidirectional Encoder Representations from Transformers), known as "context-thematic anchoring." This hybrid approach aims to overcome the main limitations of using each method individually by combining LDA statistical topic modeling with a deep contextual understanding of language. This integration makes it possible to explore the semantic and contextual aspects of text data more fully, providing a deeper and more accurate understanding of the content, which is critical for effective clustering and subsequent analysis.

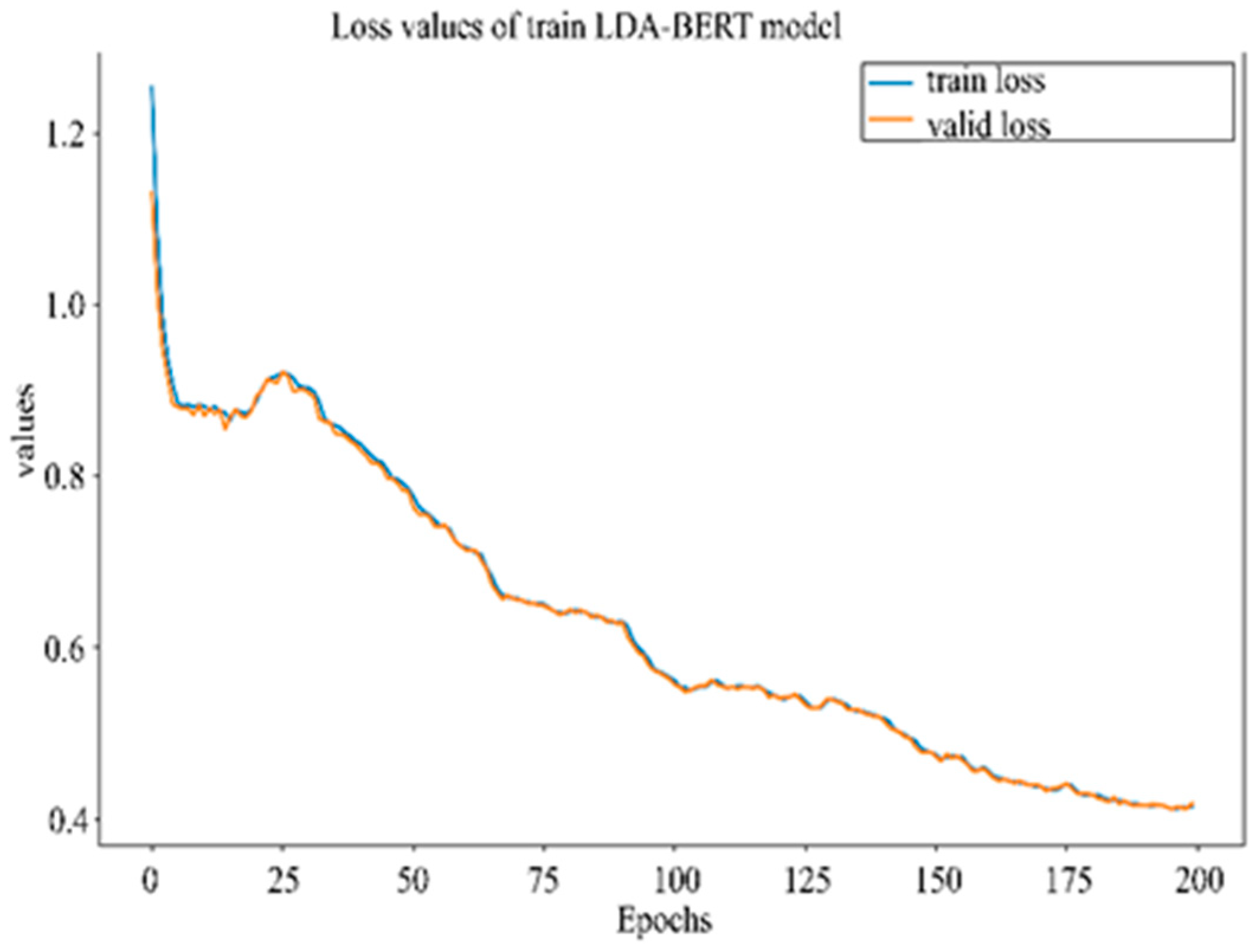

Figure 8 displays the training process of the hybrid LDA+BERT+AE model, which was trained for 200 epochs. The loss decreases substantially over the course of training, which indicates that the model learns and adapts effectively. The abscissa shows the number of epochs, and the ordinate shows the value of the loss function at each epoch. At the start of training (epoch zero), the loss on both the training and validation sets is approximately 1.25. This is typical of the initial phase of training, when the model has not yet been optimized and its parameters are still being calibrated. During the first 25 epochs, the loss declines sharply to about 0.9. This stage corresponds to rapid progress, as the model adjusts its weights and parameters. Between epochs 25 and 75, the training and validation loss curves flatten, with minor fluctuations. These fluctuations may reflect a fine-tuning stage in which the model adapts to the particularities of the data while avoiding overfitting. By the 200th epoch, both curves reach stable values of about 0.4, which indicates that training completed successfully. The similar behavior of the curves on the training and validation sets suggests that the LDA+BERT+AE model generalizes well and can work effectively on new data.

This is supported by the subsequent results of applying the model, presented in the figures. Examples of text clustering using the hybrid LDA+BERT+AE model show predictions that closely match the actual labels. In one case, the model identifies the subject of the text as "sports" with 98.20% precision, which is consistent with the actual subject. Similarly, the model accurately assigns texts to other subjects, such as "business" and "politics," which supports its validity and reliability for text data analysis and clustering. Therefore, the hybrid model trained efficiently and performed well on real-life tasks, making it useful for short text analysis and other natural language processing applications.

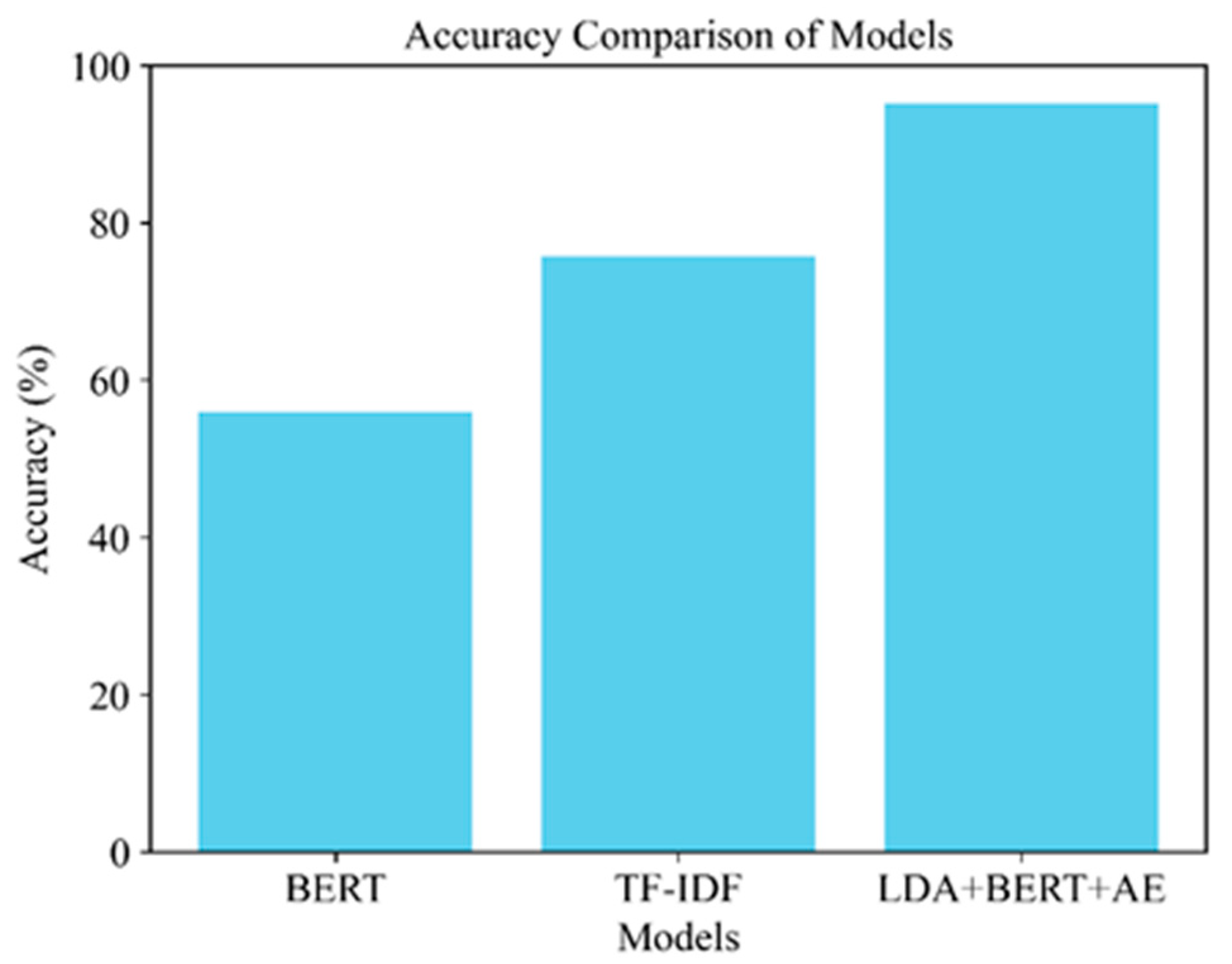

Figure 9 shows the accuracy comparison of three models: BERT, TF-IDF, and LDA+BERT+AE. The BERT model shows an accuracy of about 60%. Although it can cope with contextual relationships between words, its limitations in classifying texts that require precise topic extraction reduce the overall effectiveness. The TF-IDF model shows higher accuracy, about 75%. It is based on the frequency of words in the document and their significance, which improves its accuracy compared to BERT. However, the lack of consideration of contextual relationships between words is the main limitation of the method, especially when working with texts that require a deep understanding of the semantics. The hybrid LDA+BERT+AE model shows a significantly better result with an accuracy of about 98%. This is explained by the fact that this model uses the strengths of LDA for topic modeling, BERT for contextual analysis, and Autoencoder for data dimensionality reduction. The result is a model with high classification accuracy, especially effective when analyzing texts with a clearly expressed topic focus.

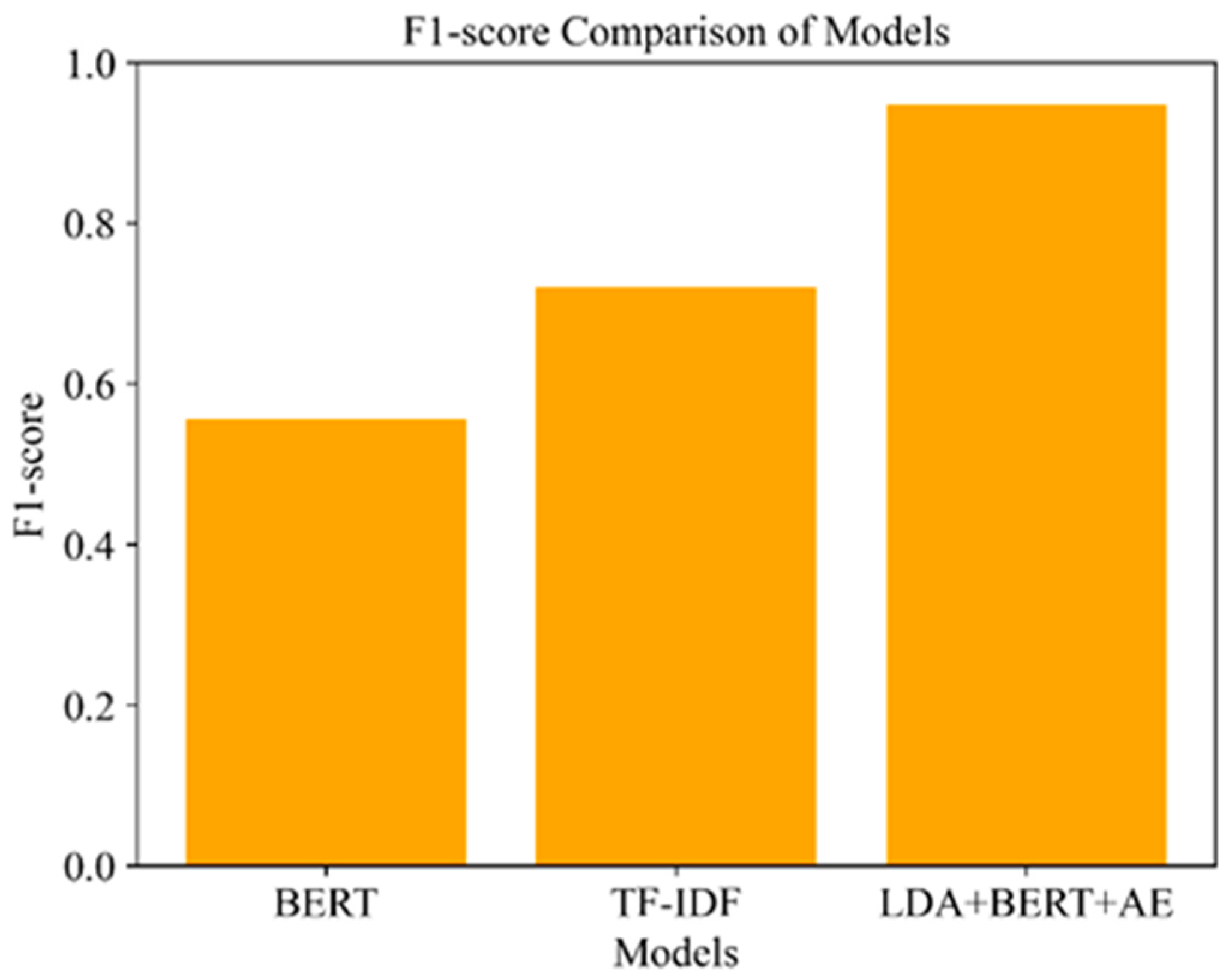

Figure 10 compares the F1-scores of the same models. This measure combines precision and recall, providing a balanced view of a model's performance. The BERT model has an F1-score of around 0.55: it can interpret context but falls short in accurately classifying texts, especially for specific categories. The TF-IDF model shows a better result, with an F1-score of about 0.7, reflecting a better balance between precision and recall than BERT. However, its lack of understanding of deep semantic relationships limits the classification of complex texts. Compared with the other two models, the hybrid LDA+BERT+AE model achieves an F1-score of around 0.9, confirming that it classifies texts accurately. By extracting both topic aspects and contextual nuances, it achieves higher precision and recall, so it is a good choice for short text analysis tasks. In conclusion, the LDA+BERT+AE model significantly outperforms the BERT and TF-IDF models in both accuracy and F1-score. Although BERT provides contextual understanding and TF-IDF effectively handles keyword importance, their limitations become apparent when classifying texts that require deep semantic analysis. The hybrid LDA+BERT+AE model, combining the strengths of the different methods, provides the highest accuracy and efficiency among the compared models in context-rich text analysis tasks.
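For reference, the F1-score combines precision and recall as their harmonic mean. The sketch below assumes macro averaging (the unweighted mean of the per-class F1-scores); the averaging scheme behind the reported values is not stated, and the function name `macro_f1` and the toy labels are hypothetical.

```python
def macro_f1(y_true, y_pred):
    """Unweighted mean of the per-class F1-scores."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for label in labels:
        pairs = list(zip(y_true, y_pred))
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        # Harmonic mean of precision and recall for this class.
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        scores.append(f1)
    return sum(scores) / len(scores)


# Hypothetical labels, used only for illustration.
y_true = ["sport", "sport", "business", "politics", "politics", "business"]
y_pred = ["sport", "business", "business", "politics", "politics", "business"]
score = macro_f1(y_true, y_pred)
```

For these toy labels the per-class scores are 2/3 (sport), 0.8 (business), and 1.0 (politics), so `score` is approximately 0.822.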

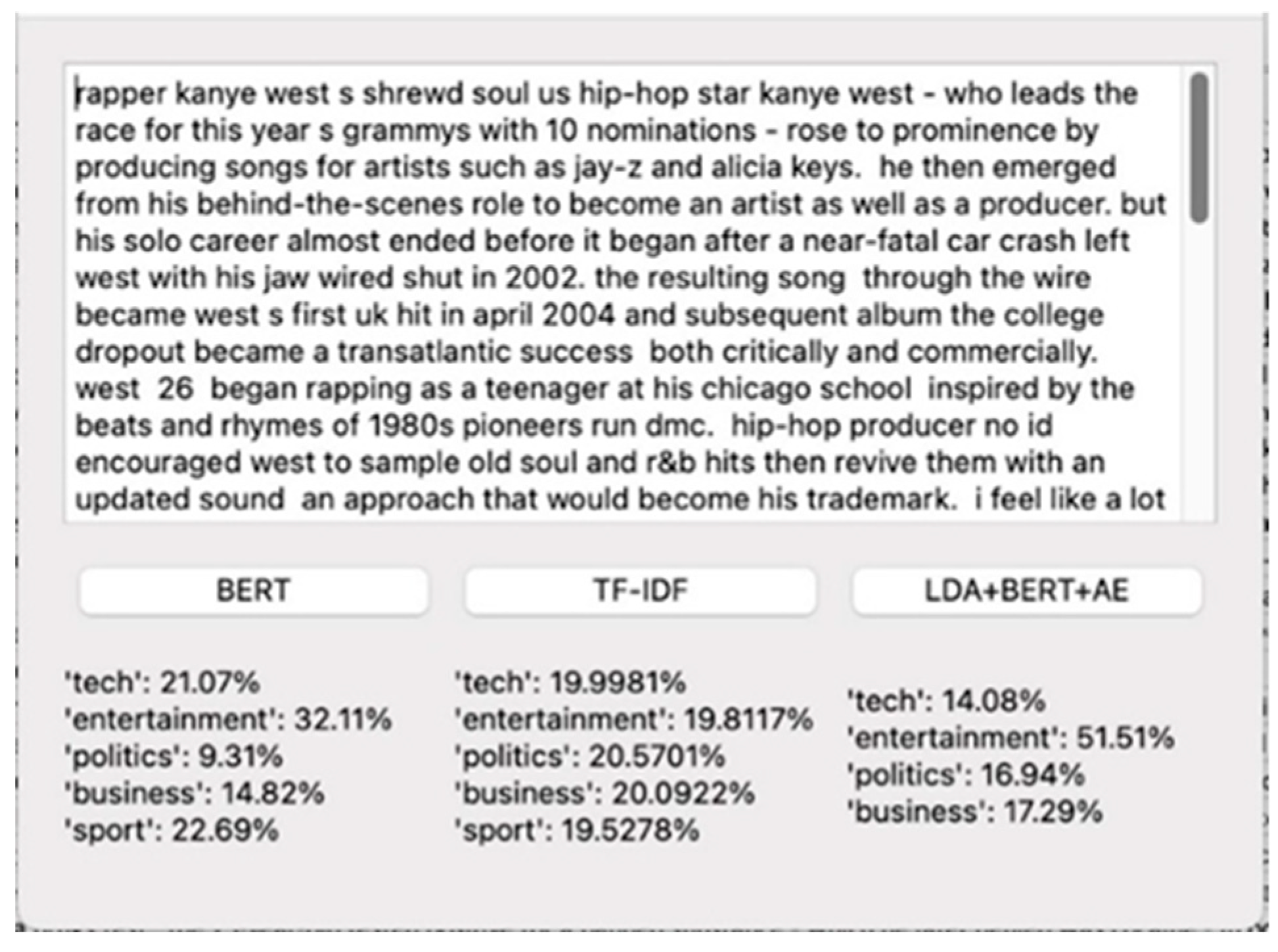

Figure 11 shows the text analysis results about Kanye West using various text processing methods: BERT, TF-IDF, and LDA+BERT+AE. The BERT method identifies 'entertainment' as the dominant theme (32.11%), corresponding to the text's central theme of music and entertainment. TF-IDF and LDA+BERT+AE distribute weights more evenly between categories, although LDA+BERT+AE is more accurate at identifying 'entertainment' (51.51%) as the top category. This shows how integrating contextual understanding with topic modeling can improve text classification.

We used measures such as the Jaccard Index, Matthews Correlation Coefficient (MCC), Fowlkes-Mallows Index (FM), and Cohen's Kappa Coefficient to quantify the performance of the models. These measures allowed us to compare and evaluate the classification performance of the different methods in depth. The results show that the LDA+BERT+AE method performs better on most metrics, indicating its superiority in classifying texts (Table 2).
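Two of these measures can be sketched directly from their standard definitions. Cohen's kappa corrects the observed agreement for the agreement expected by chance, and the Fowlkes-Mallows index is computed from pairs of items that share a group in the true and predicted labelings. The function names and toy labels below are hypothetical, and the sketch does not reproduce the values in the table.

```python
import math
from collections import Counter
from itertools import combinations


def cohen_kappa(y_true, y_pred):
    """Agreement between two labelings, corrected for chance agreement."""
    n = len(y_true)
    observed = sum(t == p for t, p in zip(y_true, y_pred)) / n
    true_counts, pred_counts = Counter(y_true), Counter(y_pred)
    expected = sum(true_counts[c] * pred_counts[c] for c in true_counts) / n ** 2
    # Assumes expected < 1, i.e. the labelings are not a single shared class.
    return (observed - expected) / (1 - expected)


def fowlkes_mallows(y_true, y_pred):
    """Geometric mean of pairwise precision and pairwise recall."""
    tp = fp = fn = 0
    for i, j in combinations(range(len(y_true)), 2):
        same_true = y_true[i] == y_true[j]
        same_pred = y_pred[i] == y_pred[j]
        tp += same_true and same_pred
        fp += same_pred and not same_true
        fn += same_true and not same_pred
    denominator = math.sqrt((tp + fp) * (tp + fn))
    return tp / denominator if denominator else 0.0


# Hypothetical labels, used only for illustration.
y_true = [0, 0, 1, 2, 2, 1]
y_pred = [0, 1, 1, 2, 2, 1]
kappa = cohen_kappa(y_true, y_pred)
fm = fowlkes_mallows(y_true, y_pred)
```

For these toy labels the observed agreement is 5/6 and the chance agreement is 1/3, giving a kappa of 0.75; two of the four predicted pairs are correct and two of the three true pairs are recovered, giving an FM of about 0.577.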

This table summarizes the performance metrics of the LDA+BERT+AE and TF-IDF methods, indicating the superiority of LDA+BERT+AE on the Jaccard Index, Matthews Correlation Coefficient (MCC), and Cohen's Kappa Coefficient. These metrics indicate better classification performance and accuracy. The analyses demonstrate that the combined LDA+BERT+AE approach significantly outperforms the BERT and TF-IDF methods in text processing and classification tasks, particularly when precise classification of thematically rich texts is required. Integrating LDA for topic modeling with BERT's deep contextual understanding and the autoencoder's optimization of the vector space enables high accuracy in determining the topical content of a text. This approach enhances classification quality and provides deeper insight into semantic relationships within the text, which is crucial for various natural language processing applications. To highlight the advantages of the hybrid representations, experiments were also conducted in supervised mode (Random Forest, SVM), training the classifiers on the same feature vectors. Metrics such as accuracy and F1-score were obtained (Table 3).

The results showed that supervised classification achieves higher ARI and homogeneity values. However, the unsupervised clusterer gives comparable results. This indicates that the obtained vectors (especially LDA_BERT) contain high-quality information about the data structure. To ensure the reproducibility and transparency of the study, the source code of the hybrid model and the analysis methods has been made publicly available. The code is available in the repository at the following link: https://github.com/JamalbekTussupov01/Text-clustering/tree/main. The posted code contains all stages of the model implementation, including data preprocessing, selection of significant features, parameter analysis, and deviation prediction. This allows researchers and practitioners to use the proposed approach for their own tasks and, if necessary, to improve and adapt the methodology to different conditions. This approach increases the transparency of the work and supports open scientific discussion.

4. Conclusions

This paper presents a detailed analysis of methods for intelligent clustering of short texts, including BERT, TF-IDF, and the hybrid approach LDA+BERT+AE. The results show that each method has advantages and limitations when working with short texts. In particular, BERT demonstrates a solid ability to capture contextual dependencies, while TF-IDF assesses the importance of terms in documents. However, the hybrid method LDA+BERT+AE, which combines the generative topic modeling of LDA, the deep contextual analysis of BERT, and the dimensionality reduction of autoencoders, showed the highest efficiency in clustering short texts. The results confirmed the high efficiency of the hybrid method, particularly in tasks requiring deep semantic analysis of texts. Specifically, during the training and validation of the LDA+BERT+AE model over 200 epochs, the loss values started above 1.2 and decreased rapidly within the first 25 epochs, reaching approximately 0.8. The loss continued to decline as training progressed, stabilizing at around 0.4 by the 200th epoch. The close alignment of the training and validation loss curves indicated effective learning and good generalization, with minimal signs of overfitting.

The practical implications of this study lie in optimizing NLP systems, which can significantly enhance the quality of text data analysis across areas such as marketing, social media, and automated content moderation. Furthermore, the method holds promise for the analysis of multilingual text, which is of particular interest to multinational enterprises and global platforms. However, the technique is resource- and labor-intensive, which may pose a challenge to small firms and start-ups. In addition, the accuracy of the method depends largely on data quality, as poor or inadequate preprocessing tends to affect clustering outcomes adversely.

Potential future directions include optimizing the algorithms to reduce computational costs, increasing data processing speed, and applying the method in other fields such as biomedicine, law, and economics. Combining this method with other models, such as GPT or other transformer-based models, to improve the quality and efficiency of data analysis is another potential direction. In conclusion, this study indicates that hybrid intelligent clustering methods like LDA+BERT+AE offer promising prospects for enhancing the accuracy and efficiency of short text processing. The results pave the way for developing more advanced and adaptive NLP systems capable of handling a wide range of tasks.

Author Contributions

Conceptualization, J.T., A.K., and M.Y.; methodology, J.T., A.K., A.M., and Z.A.; software, J.T. and A.M.; validation, A.K., Z.A., and Z.Az.; formal analysis, J.T., M.Y., and Z.A.; investigation, A.K., A.B., and Z.Az.; resources, A.K. and A.B.; data curation, A.M. and M.Y.; writing—original draft preparation, J.T., A.K., and M.Y.; writing—review and editing, Z.Az., Z.A., and A.B.; visualization, A.M. and M.Y.; supervision, A.K. and Z.Az.; project administration, A.K. and Z.Az. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Science Committee of the Ministry of Science and Higher Education of the Republic of Kazakhstan (Grant No. AP19677451).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AE | Autoencoder |
| ARI | Adjusted Rand Index |
| BERT | Bidirectional Encoder Representations from Transformers |
| CH | Calinski-Harabasz Index |
| DB | Davies-Bouldin Index |
| FM | Fowlkes-Mallows Index |
| KNN | K-Nearest Neighbors |
| Lasso | Least Absolute Shrinkage and Selection Operator |
| LDA | Latent Dirichlet Allocation |
| LSTM | Long Short-Term Memory |
| MAPE | Mean Absolute Percentage Error |
| MCC | Matthews Correlation Coefficient |
| NLP | Natural Language Processing |
| RMS | Root Mean Square |
| RMSE | Root Mean Square Error |
| SGs | Smart Grids |
| STC | Short Text Clustering |
| TF-IDF | Term Frequency–Inverse Document Frequency |
| TRTD | Topic Representative Term Discovery |
| UMAP | Uniform Manifold Approximation and Projection |
| V-Measure | Harmonic Mean of Homogeneity and Completeness |
| XGBoost | Extreme Gradient Boosting |

References

- Manias, G.; Mavrogiorgou, A.; Kiourtis, A.; Symvoulidis, C.; Kyriazis, D. Multilingual text categorization and sentiment analysis: a comparative analysis of multilingual approaches for classifying twitter data. Neural Comput. Appl. 2023, 35(29), 21415–21431.

- Fu, G.; Li, B.; Yang, Y.; Li, C. Re-ranking and TOPSIS-based ensemble feature selection with multi-stage aggregation for text categorization. Pattern Recognit. Lett. 2023, 168, 47–56.

- Edara, D. C.; Vanukuri, L. P.; Sistla, V.; Kolli, V. K. K. Sentiment analysis and text categorization of cancer medical records with LSTM. J. Ambient Intell. Humaniz. Comput. 2023, 14(5), 5309–5325.

- Balaji, T. K.; Annavarapu, C. S. R.; Bablani, A. Machine learning algorithms for social media analysis: A survey. Comput. Sci. Rev. 2021, 40, 100395.

- Abbas, A. F.; Jusoh, A.; Mas’od, A.; Alsharif, A. H.; Ali, J. Bibliometrix analysis of information sharing in social media. Cogent Bus. Manag. 2022, 9(1), 2016556.

- McKitrick, M. K.; Schuurman, N.; Crooks, V. A. Collecting, analyzing, and visualizing location-based social media data: review of methods in GIS-social media analysis. GeoJournal 2023, 88(1), 1035–1057.

- Becken, S.; Friedl, H.; Stantic, B.; Connolly, R. M.; Chen, J. Climate crisis and flying: Social media analysis traces the rise of “flightshame”. J. Sustain. Tour. 2021, 29(9), 1450–1469.

- Chakraborty, K.; Bhattacharyya, S.; Bag, R. A survey of sentiment analysis from social media data. IEEE Trans. Comput. Soc. Syst. 2020, 7(2), 450–464.

- Horta Ribeiro, M.; Cheng, J.; West, R. Automated content moderation increases adherence to community guidelines. Proc. ACM Web Conf. 2023, 2666–2676.

- He, Q.; Hong, Y.; Raghu, T. S. The effects of machine-powered platform governance: An empirical study of content moderation. SSRN Electron. J. 2021.

- Fasel, M.; Weerts, S. Can Facebook's community standards keep up with legal certainty? Content moderation governance under the pressure of the Digital Services Act. Policy Internet 2024.

- Saranya, S.; Usha, G. A Machine Learning-Based Technique with Intelligent WordNet Lemmatize for Twitter Sentiment Analysis. Intell. Autom. Soft Comput. 2023, 36(1).

- Hupkes, D.; Giulianelli, M.; Dankers, V.; Artetxe, M.; Elazar, Y.; Pimentel, T.; Jin, Z. A taxonomy and review of generalization research in NLP. Nat. Mach. Intell. 2023, 5(10), 1161–1174.

- Chung, S.; Moon, S.; Kim, J.; Kim, J.; Lim, S.; Chi, S. Comparing natural language processing (NLP) applications in construction and computer science using preferred reporting items for systematic reviews (PRISMA). Autom. Constr. 2023, 154, 105020.

- Xin, Q.; He, Y.; Pan, Y.; Wang, Y.; Du, S. The implementation of an AI-driven advertising push system based on a NLP algorithm. Int. J. Comput. Sci. Inf. Technol. 2023, 1(1), 30–37.

- Işıkdemir, Y. E. NLP TRANSFORMERS: Analysis of LLMs and traditional approaches for enhanced text summarization. Eskişehir Osmangazi Univ. J. Eng. Archit. Fac. 2024, 32(1), 1140–1151.

- Nelson, L. K.; Burk, D.; Knudsen, M.; McCall, L. The future of coding: A comparison of hand-coding and three types of computer-assisted text analysis methods. Sociol. Methods Res. 2021, 50(1), 202–237.

- Zhang, X.; Ju, T.; Liang, H.; Fu, Y.; Zhang, Q. LLMs Instruct LLMs: An extraction and editing method. arXiv preprint 2024, arXiv:2403.15736.

- Zhou, C.; Li, Q.; Li, C.; Yu, J.; Liu, Y.; Wang, G.; Sun, L. A comprehensive survey on pretrained foundation models: A history from BERT to ChatGPT. arXiv preprint 2023, arXiv:2302.09419.

- Lamsiyah, S.; Mahdaouy, A. E.; Ouatik, S. E. A.; Espinasse, B. Unsupervised extractive multi-document summarization method based on transfer learning from BERT multi-task fine-tuning. J. Inf. Sci. 2023, 49(1), 164–182.

- Yu, D.; Xiang, B. Discovering topics and trends in the field of Artificial Intelligence: Using LDA topic modeling. Expert Syst. Appl. 2023, 120114.

- Lohith, C.; Chandramouli, H.; Balasingam, U.; Arun Kumar, S. Aspect oriented sentiment analysis on customer reviews on restaurant using the LDA and BERT method. SN Comput. Sci. 2023, 4(4), 399.

- Li, P.; Pei, Y.; Li, J. A comprehensive survey on design and application of autoencoder in deep learning. Appl. Soft Comput. 2023, 138, 110176.

- Chen, S.; Guo, W. Auto-encoders in deep learning—a review with new perspectives. Mathematics 2023, 11(8), 1777.

- Bengesi, S.; El-Sayed, H.; Sarker, M. K.; Houkpati, Y.; Irungu, J.; Oladunni, T. Advancements in generative AI: A comprehensive review of GANs, GPT, autoencoders, diffusion model, and transformers. IEEE Access 2024.

- Ahmed, M. H.; Tiun, S.; Omar, N.; Sani, N. S. Short text clustering algorithms, application and challenges: A survey. Appl. Sci. 2023, 13(1), 342.

- Ikotun, A. M.; Ezugwu, A. E.; Abualigah, L.; Abuhaija, B.; Heming, J. K-means clustering algorithms: A comprehensive review, variants analysis, and advances in the era of big data. Inf. Sci. 2023, 622, 178–210.

- Kyaw, K. S.; Tepsongkroh, P.; Thongkamkaew, C.; Sasha, F. Business intelligent framework using sentiment analysis for smart digital marketing in the E-commerce era. Asia Soc. Issues 2023, 16(3), e252965.

- Murshed, B. A. H.; Mallappa, S.; Abawajy, J.; Saif, M. A. N.; Al-Ariki, H. D. E.; Abdulwahab, H. M. Short text topic modelling approaches in the context of big data: Taxonomy, survey, and analysis. Artif. Intell. Rev. 2023, 56(6), 5133–5260.

- Habbak, H.; Mahmoud, M.; Metwally, K.; Fouda, M. M.; Ibrahem, M. I. Load forecasting techniques and their applications in smart grids. Energies 2023, 16(3), 1480.

- Yang, S.; Huang, G.; Cai, B. Discovering topic representative terms for short text clustering. IEEE Access 2019, 7, 92037–92047.

Disclaimer/Publisher’s Note: The views, opinions, and data presented in this publication are solely those of the individual author(s) and contributors. They do not necessarily reflect the views of the publisher and/or the editorial board. The publisher and editors disclaim any responsibility for any harm, damage, or injury to individuals or property arising from the application of any ideas, methods, instructions, or products discussed in this content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).