2. Information and the “Bar-Hillel Carnap” Paradox

Floridi, following Dretske [6], adheres to the Veridicality Thesis (VT). That is, in order to count as information a message must be true ([7] chap. 4). The term “false information” is in the same boat as the term “false friend”: a useful and evocative description, but one whose clear meaning is not being a friend at all [6]. This view is not universally accepted, but for the purposes of this paper it will, without prejudice, be taken as read that VT holds, so that we can focus on the arguments Floridi develops for the quantification of information.

Floridi borrows from situation logic the term infon (symbolised as $\sigma$) [8, 9] “to refer to discrete items of factual semantic information qualifiable in principle as true or false, irrespective of their semiotic code and physical implementation”.³ Using this, the General Definition of Information (GDI) is given as a quadripartite definition:

$\sigma$ (an infon) is an instance of semantic information iff:

- GDI.1: $\sigma$ consists of $n$ data ($d$), for $n \geq 1$;
- GDI.2: the data are well-formed (wfd);
- GDI.3: the wfd are meaningful (mwfd $= \delta$);
- GDI.4: the $\delta$ are truthful.

This definition makes use of a prior term, data (singular: datum), where a datum is ultimately a lack of uniformity [11, p 23]. This leads to a description of information as “a distinction that makes a difference”.⁴ The definition of a datum is then [11, p 23]:

- Dd: datum $= x$ being distinct from $y$, where $x$ and $y$ are two uninterpreted variables and the relation of ‘being distinct’, as well as the domain, are left open to further interpretation.

Floridi uses these to distinguish between the Bar-Hillel and Carnap approach, which he calls the “Theory of Weakly Semantic Information” (TWSI), in which truth merely supervenes on information and which gives rise to a paradox, and the “Theory of Strongly Semantic Information” (TSSI), his own formulation, which he believes overcomes that paradox. The former is defined by GDI.1 to GDI.3 only, whereas the latter uses all four clauses in the definition of information.

Floridi provides an analysis of where TWSI leads [7], in particular as described by Bar-Hillel and Carnap [13], as a grounding for his TSSI. Floridi’s approach is a response to the definition of semantic information put forward by Bar-Hillel and Carnap in the 1960s. They identified the amount of information in, or the semantic content (CONT) of, a proposition as being inversely related to the prior probability of the proposition (referred to as the Inverse Relationship Principle, IRP):

$$\mathrm{CONT}(p) = 1 - P(p)$$

Here $P(p)$ is the prior probability of $p$, which could be defined over, for example, a set of possible worlds or a set of propositions, and $1 - P(p)$ is a measure of the likelihood of $p$ not being the case. One upshot of this is that while the semantic content of a tautology is zero, as one would expect, the semantic content of a contradiction, $\mathrm{CONT}(\bot)$, is 1. That is, a contradiction is maximally informative. This is rather surprising. As Bar-Hillel and Carnap put it:

“It might perhaps, at first, seem strange that a self-contradictory sentence, hence one which no ideal receiver would accept, is regarded as carrying with it the most inclusive information. It should, however, be emphasized that semantic information is here not meant as implying truth. A false sentence which happens to say much is thereby highly informative in our sense. Whether the information it carries is true or false, scientifically valuable or not, and so forth, does not concern us. A self-contradictory sentence asserts too much; it is too informative to be true.” [13, p 229].

That is, Bar-Hillel and Carnap reject VT and see truth as supervening on information. It is this feature that gives rise to what Floridi calls the Bar-Hillel Carnap paradox (BCP), wherein a contradiction, which describes an impossible situation, conveys the maximum amount of information.
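The paradox can be reproduced mechanically. The sketch below is a minimal illustration, assuming a uniform prior over the four possible worlds of a hypothetical two-atom language ($x$, $y$); it computes $\mathrm{CONT}(\sigma) = 1 - P(\sigma)$ for a few messages, each written as a Python function of the two atoms. The names are illustrative only.

```python
from fractions import Fraction
from itertools import product

# The four possible worlds of a hypothetical two-atom language: every (x, y) assignment.
WORLDS = list(product([True, False], repeat=2))


def prior(message):
    """P(sigma): share of the (assumed equiprobable) worlds in which the message is true."""
    true_in = sum(1 for x, y in WORLDS if message(x, y))
    return Fraction(true_in, len(WORLDS))


def cont(message):
    """Bar-Hillel and Carnap's semantic content: CONT(sigma) = 1 - P(sigma)."""
    return 1 - prior(message)


tautology = lambda x, y: x or not x
contradiction = lambda x, y: x and not x
atomic_x = lambda x, y: x
state_xy = lambda x, y: x and y

for name, msg in [("tautology", tautology), ("x", atomic_x),
                  ("x and y", state_xy), ("contradiction", contradiction)]:
    print(f"CONT({name}) = {cont(msg)}")
```

The contradiction, true in no world, receives the largest content of all, which is exactly the feature Floridi labels the BCP.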

3. The Theory of Strongly Semantic Information

In order to understand the criticism that will follow in the next section, we need to present an outline of the substance of Floridi’s TSSI. Floridi proposes that the best place to start is with “an analysis of the quantity of semantic information in $\sigma$ including a reference to its alethic value. This is TSSI” ([7] p 117). To this end he identifies three desiderata for a theory of semantic information; it should ([7] p 117):

- D.1: avoid any counterintuitive inequality comparable to BCP;
- D.2: treat the alethic value of $\sigma$ not as a supervenient but as a necessary feature of semantic information, relevant to the quantitative analysis;
- D.3: extend a quantitative analysis to the whole family of information-related concepts: semantic information vacuity and inaccuracy, informativeness, misinformation (what is ordinarily called ‘false information’), disinformation.

These three desiderata are fundamental. The first two are unproblematic; the third is unproblematic in the abstract, but the way it is unpacked may give rise to some issues (see Section 4). It is this unpacking that Floridi proceeds to develop. Throughout the discussion Floridi makes use of an example universe, E, consisting of all possible worlds, or states, arising from the conjunction of a set of basic infons.⁵

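As well as the statement of BCP as an inverse relation based on what an infon excludes:

$$\mathrm{CONT}(\sigma) = 1 - P(\sigma)$$

he also highlights the relation between the informativeness, $\mathrm{INF}(\sigma)$, of an infon and its semantic content, stated as:

$$\mathrm{INF}(\sigma) = \log_2 \frac{1}{1 - \mathrm{CONT}(\sigma)}$$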
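Assuming the Bar-Hillel and Carnap definition $\mathrm{INF}(\sigma) = \log_2 \frac{1}{1 - \mathrm{CONT}(\sigma)}$, the sketch below shows that, because $1 - \mathrm{CONT}(\sigma) = P(\sigma)$, this is simply $-\log_2 P(\sigma)$, and that it grows without bound as $\sigma$ approaches a contradiction. The function names are illustrative.

```python
import math


def inf_from_cont(cont_value):
    """INF(sigma) = log2(1 / (1 - CONT(sigma))); unbounded when CONT(sigma) = 1."""
    if cont_value >= 1:
        return math.inf  # a contradiction: 1 - CONT = 0, so the logarithm diverges
    return math.log2(1 / (1 - cont_value))


def inf_from_prior(p):
    """The same quantity written via the prior: INF(sigma) = log2(1 / P(sigma))."""
    if p <= 0:
        return math.inf
    return math.log2(1 / p)


for p in (1.0, 0.5, 0.25, 0.0):
    # CONT(sigma) = 1 - P(sigma), so both routes should agree
    print(p, inf_from_cont(1 - p), inf_from_prior(p))
```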

The particular quantitative measure that Floridi chooses for TSSI is not probability but the discrepancy, $\vartheta$, from the actual state of affairs.⁶

As a step towards formulating a relation that enables the informativeness of an infon to be calculated, Floridi identifies five criteria that he considers any method of quantification must meet (which he labels M1-M5). These criteria are uncontroversial given the aims of TSSI, but, as we shall see in the next section, Floridi’s chosen means of implementation does not actually meet all of them.

The criteria are as follows: the true state will have zero discrepancy [M1]; both a tautology [M2] and a contradiction [M3] will have the maximum discrepancy; contingently true [M4] and contingently false [M5] infons will have discrepancies that lie strictly between zero and the maximum discrepancy.⁷

3.1. Degrees of Inaccuracy

The model universe is maximal for states⁸ (i.e., no further conjunctive propositions may be added without introducing a contradiction), and so it must contain the true state. Since the infons are conjunctions, any infon, $\sigma$, other than that representing the actual state of affairs will be false. However, different infons will contain more or fewer components (conjuncts) that are false, and this gives rise to the idea of a degree of falsity, or inaccuracy. It is then straightforward to create a metric of the distance from the true state of affairs as the ratio of the number of false components to the length of the infon (that is, the total number of conjuncts in the infon). More formally:

$$\vartheta(\sigma) = -\frac{e(\sigma)}{l(\sigma)}$$

Here $l$ is the length of the infon (which in the case of Floridi’s example is 6), $e$ is the number of erroneous conjuncts in the infon, and $\vartheta$ is the distance of the infon from the true state of affairs (the negative sign reflects the fact that it deals with degrees of error); $\vartheta$ spans the range from 0 (matching the actual situation) to $-1$ (the infon contains no truth).
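As a minimal sketch of the degree of inaccuracy, assume each conjunctive state of Floridi's example is encoded as a tuple of six truth values (one per basic infon), and take, purely for illustration, an actual state in which all six basic infons hold; $e$ is then the number of positions that disagree with the actual state.

```python
from fractions import Fraction
from itertools import product

L = 6  # length of each conjunctive infon in Floridi's example (6 basic infons)


def inaccuracy(infon, actual):
    """Degree of inaccuracy theta(sigma) = -e(sigma) / l(sigma).

    infon, actual: tuples of truth values, one per conjunct; e counts the
    conjuncts of the infon that disagree with the actual state of affairs.
    """
    if len(infon) != len(actual):
        raise ValueError("infon and actual state must have the same length")
    e = sum(1 for a, b in zip(infon, actual) if a != b)
    return Fraction(-e, len(infon))


actual = (True,) * L                             # hypothetical actual state
states = list(product([True, False], repeat=L))  # the 64 conjunctive states
values = sorted({inaccuracy(s, actual) for s in states})
print(len(states), [str(v) for v in values])
```

The seven distinct values run in steps of $1/6$ from $-1$ (every conjunct wrong) to $0$ (the actual state).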

3.2. Degrees of Vacuity

The other aspect of distance from the actual state of affairs arises when the infon is true but more abstract than the actual (true) state. The most extreme example of this is a tautology, which includes the actual situation but is uninformative because it is true in all circumstances. This has a distance of +1. In order to fill in the gap between these two extremes, Floridi introduced the semi-dual.⁹ By this means classes are identified, each of which contains all the infons with the same number of disjunctions. Each member of a class will have the same number of ways of being true given that some components of the infon are false. So in this case the distance is the ratio of the number of ways of being true, $n$, to the size of the universe,¹⁰ $s^l$, where $l$ is the length of the infon and $s$ is the number of values each of its components can take (2 in this case). More formally:

$$\vartheta(\sigma) = \frac{n}{s^l}$$
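The vacuity measure is a simple ratio. The sketch below assumes that $n$ counts the worlds in which a true infon holds, with $s = 2$ and $l = 6$ as in Floridi's example; the example arguments are illustrative.

```python
from fractions import Fraction


def vacuity(n, l, s=2):
    """Degree of vacuity theta(sigma) = n / s**l.

    n: number of ways (worlds) in which the true infon is true
    l: length of the infon (number of basic infons)
    s: number of values each basic infon can take (2 in the Boolean case)
    """
    size = s ** l  # size of the universe
    if not 1 <= n <= size:
        raise ValueError("a true infon holds in between 1 and s**l worlds")
    return Fraction(n, size)


l = 6
print(vacuity(2 ** l, l))  # tautology: true in all 64 worlds
print(vacuity(32, l))      # an infon true in half of the worlds
```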

3.3. Degrees of Informativeness

Now that a putatively suitable distance metric has been identified for these two situations, Floridi is able to use it to provide a means of quantifying the degree of informativeness, $\iota$, of an infon. He proposes that the distance be viewed as spanning the range $[-1, +1]$, with the actual state of affairs at the origin, the LHS being the degree of inaccuracy (hence the negative value) and the RHS the degree of vacuity.

The fact that, as Floridi sees it, the BCP arises, at least in part, from the inverse relation between information content and probability in that formulation suggests that a relation that assigns maximum informativeness to the actual state of affairs and zero informativeness to the two extremes (tautology and contradiction) will serve better as a metric.

He proposes a quadratic relation as being the simplest relation that meets a number of criteria that he considers mandatory for a function to quantify the degree of informativeness. Most of these are unobjectionable, but a couple appear more problematic and less than optimal. (We will describe thee and the issues surrounding them in

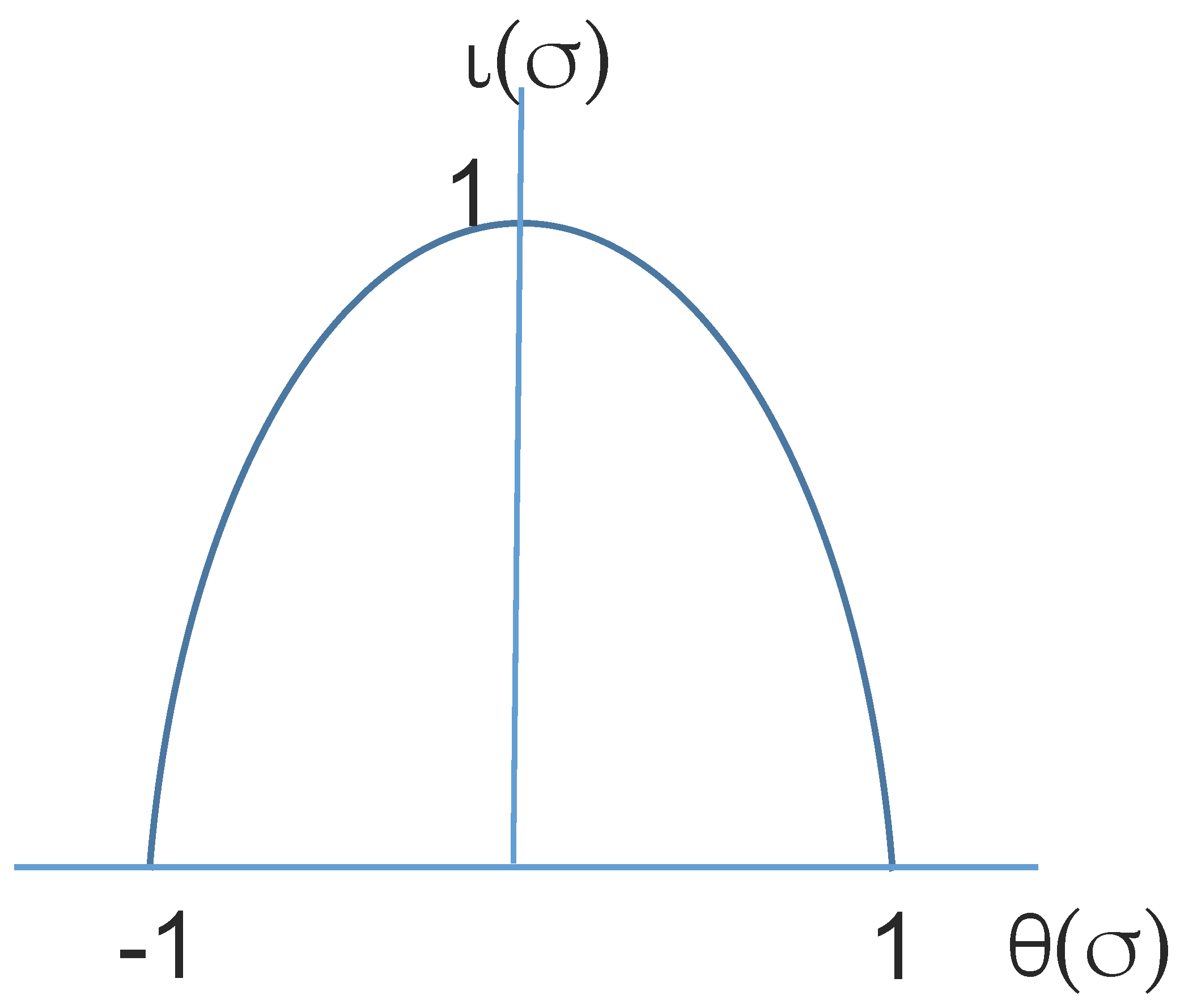

Section 4.1 below). The precise formulation used by Floridi is:

see

Figure 1.
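Several of the conditions Floridi sets for the quadratic (E1 to E6) can be checked directly. The sketch below evaluates $\iota(\sigma) = 1 - \vartheta(\sigma)^2$ with exact fractions, its derivative $-2\vartheta$, and the closed-form integral over $[-1, 1]$; it is an illustration, not Floridi's own computation.

```python
from fractions import Fraction


def informativeness(theta):
    """Floridi's quadratic: iota(sigma) = 1 - theta(sigma)**2, for theta in [-1, 1]."""
    if not -1 <= theta <= 1:
        raise ValueError("theta must lie in [-1, 1]")
    return 1 - theta ** 2


def marginal(theta):
    """Marginal information function d(iota)/d(theta) = -2 * theta (linear, cf. E6)."""
    return -2 * theta


def area(a, b):
    """Exact integral of 1 - theta**2 from a to b (finite, i.e. a proper integral, cf. E2)."""
    antiderivative = lambda t: t - t ** 3 / 3
    return antiderivative(b) - antiderivative(a)


assert informativeness(0) == 1                                # E1: no discrepancy
assert informativeness(-1) == 0 and informativeness(1) == 0   # E3: maximal discrepancy
assert 0 < informativeness(Fraction(1, 2)) < 1                # E4: intermediate cases
print(area(Fraction(-1), Fraction(1)))  # total area under the curve on [-1, 1]
```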

The motivation, in part at least, for this quadratic is the claim that it meets a number of other conditions that he believes follow directly from M1-M5. These conditions are: when the discrepancy, $\vartheta$, is zero, the informativeness of the infon, $\iota$, is 1 [E1]; the integral of $\iota$ must be bounded in the interval between the minimum and maximum values (i.e., it must be a proper integral) [E2]; when the discrepancy is at a maximum, the informativeness must be zero [E3]; when the discrepancy lies between the minimum and maximum, the informativeness lies strictly between 0 and 1 [E4]; a small variation in discrepancy yields a substantial change in informativeness [E5]; and finally, the marginal information function (which is the derivative of the equation for informativeness) is a linear function [E6]. This last is meant to approximate the ideal that “all atomic messages ought to be assigned the same potential degree of informativeness”. It can be agreed that these are, for the most part, suitable criteria and conditions for any function that aims to quantify informativeness to possess. Unfortunately, as we shall see in the next section, TSSI fails to meet several of them. Most importantly, it does not yield an informativeness of zero for a contradiction, and therefore fails in its central aim of removing the BCP.

5. A More General Representation of Semantic Information

In this section I shall propose a different representation for the Information Space (IS) that will provide the means to meet the desiderata identified by Floridi and, most importantly, remove the BCP. This can be done using methods that have been applied very successfully in other domains. In the Mathematical Theory of Communication and related areas (such as Control Theory and Signal Theory), the Unit Circle (UC) is often used as a convenient means of representing signals and analysing stability. This is not surprising, since in these domains sinusoids and trigonometric relations are commonplace, and they are defined in relation to a circle. In addition, in developing Quantum Probability (QP), based on Hilbert spaces, von Neumann used similar methods [17]. This being the case, I argue that, given the problems identified in the previous section, the UC is worth exploring in this context as a better means of representing the relations involved in the quantification of information.

It is commonplace in such domains for incompatible variables or ranges to be represented by orthogonal axes, whether these be the Real and Imaginary axes in communication theory or ‘up’ and ‘down’ in quantum theory. Two key features of the UC are relevant here: the ease with which one can calculate quantitative relations by means of the cosine rule, and, related to that, the fact that pure True infons and pure False infons are orthogonal to each other (and hence neither projects onto the other, since the cosine of the angle between them is zero). As such, True and False can be used as the axes of the space with respect to which deviations are measured. In this case the true state of affairs is again the origin. Messages that contain both true and false atoms will be distributed in the space between the axes.

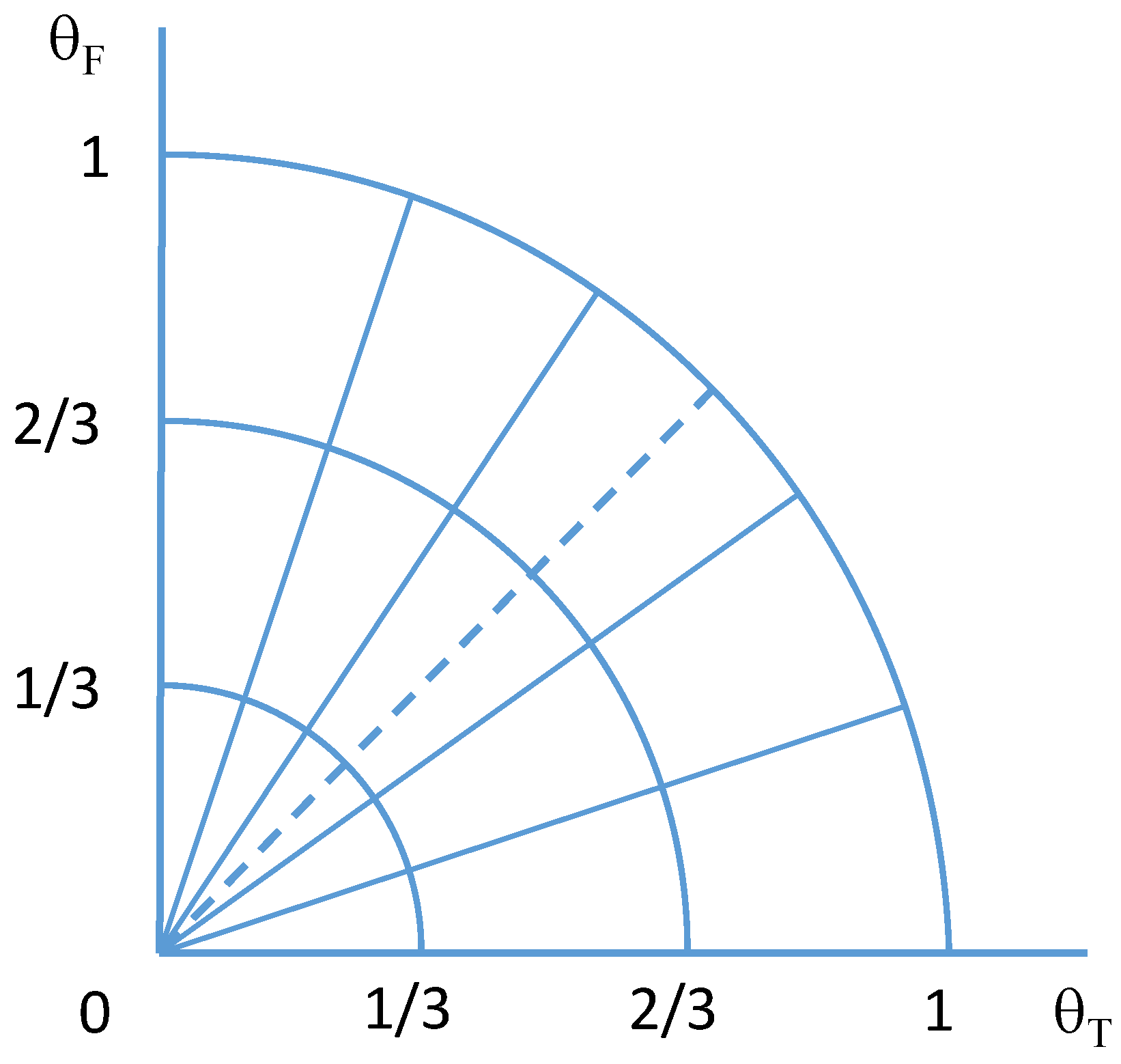

The first thing that needs to be done, then, is to change the space from that shown in Figure 1 (2-D) to that shown in Figure 2 (3-D, with the third dimension, representing $\iota$, coming out of the page). Then we need to decide on the best, and most coherent, means of calculating the distance from the true state of affairs. In deciding what scales to use in measuring the distance from the true state, we need to consider two things: the distance along each axis, and the position in the plane. Before we settle this we should look at what messages can be created. This is best done by means of an example. Floridi used a universe consisting of three atoms and two predicates, which gave a space of 64 states (or worlds). However, as D’Alfonso [3] has pointed out, the actual number of possible messages for this universe is $2^{64}$, one for each set of states in which a message can be true. We wish our space to represent all these messages and hence will need a simpler example to demonstrate things. In fact, the only manageable possibility for these purposes is a single predicate with two atoms. This yields a universe of two atomic infons, four worlds (or states), and sixteen messages.¹³ Table 1 shows the complete set of messages for the two atomic infons $x$ and $y$.¹⁴ From Table 1 we see that the number of worlds in which any message may be true (or false) ranges between 0 and 4. In particular, note that the tautology is true in 4 worlds (and the contradiction is false in 4 worlds).
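Identifying each message with its truth table over the four worlds gives a mechanical way to generate the sixteen messages and check the range of world counts noted above. The sketch is illustrative; the encoding (one truth value per world) is an assumption and not necessarily the layout of Table 1.

```python
from itertools import product

ATOMS = ("x", "y")  # the two atomic infons of the example
WORLDS = list(product([True, False], repeat=len(ATOMS)))  # 2**2 = 4 worlds

# Identify each message with its truth table: one truth value per world.
MESSAGES = list(product([True, False], repeat=len(WORLDS)))  # 2**4 = 16 messages


def worlds_true(message):
    """Number of worlds in which the message (a truth table) is true."""
    return sum(message)


counts = [worlds_true(m) for m in MESSAGES]
histogram = {k: counts.count(k) for k in range(len(WORLDS) + 1)}
print(len(MESSAGES), histogram)  # messages true in 0, 1, ..., 4 worlds

# The same construction for Floridi's universe: 2**6 = 64 worlds, 2**64 messages.
print(2 ** (2 ** 6))
```

The tautology is the all-true table (4 worlds) and the contradiction the all-false table (0 worlds); the remaining fourteen messages fall in between.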

Again we wish to quantify the deviation, $\vartheta$, of each infon from the actual state. Let us assume that the actual state of affairs is, for example, $x \wedge y$. That state is true in one world, so in order to calculate the deviation we first identify the difference in the number of worlds between the state of interest and the true state; call that $k$ (e.g., the difference between $x \wedge y$ and T is 3 for this example, since the tautology is true in all four worlds). The deviation from the true state (for the horizontal axis) is then the ratio of $k$ to the total number of worlds, $m$. This is expressed formally as:

$$\vartheta(\sigma) = \frac{k}{m}$$

This is similar to the metric used by Floridi for the RHS of his space. We now require two variables to represent the deviation from the true state of affairs; call these $\vartheta_T$ and $\vartheta_F$ for the True and False axes respectively. We keep $\iota$ as the informativeness metric (which is the third dimension in the space, and would be coming out of the page).
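The horizontal deviation $k/m$ can be computed directly for the two-atom example. This sketch assumes the actual state is $x \wedge y$ (true only in the world where both atoms hold) and that the messages considered are true in the actual state, so that $k \geq 0$; the helper names are hypothetical.

```python
from fractions import Fraction
from itertools import product

WORLDS = list(product([True, False], repeat=2))  # (x, y) assignments: 4 worlds
M = len(WORLDS)                                  # m: total number of worlds


def n_worlds(message):
    """Number of worlds in which a message (a Boolean function of x, y) is true."""
    return sum(1 for x, y in WORLDS if message(x, y))


def deviation_true(message, actual):
    """theta_T = k / m, where k is the difference in the number of worlds between
    the message and the actual state (intended for messages true in the actual state)."""
    k = n_worlds(message) - n_worlds(actual)
    if k < 0:
        raise ValueError("message holds in fewer worlds than the actual state")
    return Fraction(k, M)


actual = lambda x, y: x and y        # assumed actual state, true in one world
atomic_x = lambda x, y: x            # true in two worlds
tautology = lambda x, y: x or not x  # true in all four worlds

for name, msg in [("actual", actual), ("x", atomic_x), ("tautology", tautology)]:
    print(name, deviation_true(msg, actual))
```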

We have already seen that on the LHS Floridi used the ratio of the number of false atoms to the length of the state as a metric. That the metrics were different was, as mentioned previously, odd for a straight-line scale, but in the current representation it makes sense for those messages that may be true but cannot lie on the horizontal axis because they contain a mixture of true and false atoms. In this case, the number of false atoms can be used as a metric for how far round towards the vertical (False) axis they lie. For the current example there can be zero, one, or two false atoms, which suggests a suitable set of steps for the angle of each message relative to the horizontal axis. This is depicted in Figure 2. In the general case, for an infon with $n$ atoms, the angle between adjacent rays would be $\pi/(2n)$ rads.
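Assuming the rays for $j = 0, 1, \dots, n$ false atoms are spaced evenly from the True axis to the False axis, the ray for $j$ false atoms sits at $j\pi/(2n)$. The sketch below (with a hypothetical helper name) lists these angles in degrees for the two-atom example.

```python
import math


def ray_angle(false_atoms, n):
    """Angle (radians) of the ray for a message with `false_atoms` false atoms,
    measured from the horizontal (True) axis, assuming equal steps of pi/(2n)."""
    if not 0 <= false_atoms <= n:
        raise ValueError("false_atoms must lie between 0 and n")
    return false_atoms * math.pi / (2 * n)


n = 2  # the two-atom example: zero, one or two false atoms
print([round(math.degrees(ray_angle(j, n)), 6) for j in range(n + 1)])
```

For $n = 2$ this gives rays at 0°, 45° and 90°, i.e., the True axis, the diagonal and the False axis.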

So far we have only dealt with messages that are true, and we now need to look at how the false infons should be placed in the space. As it happens, this is straightforward and follows directly from the relation between the two axes. Being orthogonal, they represent contradictory infons: whereas all the infons lying on the horizontal axis are true and contain only true atoms, those lying on the vertical axis are false and contain only false atoms. In fact, they form a “mirror image” reflected through the $\pi/4$ line in the space (the dotted line in Figure 2). In this case the distance from the origin is given by the number of worlds in which the infon is false (relative to the true state of affairs). Hence the same formula can be used to calculate the distance from the origin. The two halves of the space are now symmetric about the $\pi/4$ line, with contradictory infons located at corresponding positions and therefore at the same distance from the origin (the true state).

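We are now in a position to put forward a suitable metric to replace that proposed by Floridi. The equation of a UC is:

$$x^2 + y^2 = 1$$

which for the information metric space becomes:

$$\vartheta_T^2 + \vartheta_F^2 + \iota^2 = 1$$

from which we get the formula for the informativeness metric:

$$\iota = \sqrt{1 - \vartheta_T^2 - \vartheta_F^2}$$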
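A minimal sketch of the informativeness metric, assuming the relation $\vartheta_T^2 + \vartheta_F^2 + \iota^2 = 1$ (our reading of the unit-circle construction). The helper `from_polar`, which splits a distance along a ray into True and False components, is a hypothetical illustration rather than part of the original proposal.

```python
import math


def mtsi_informativeness(theta_t, theta_f):
    """iota = sqrt(1 - theta_T**2 - theta_F**2), assuming the unit-sphere relation
    theta_T**2 + theta_F**2 + iota**2 = 1."""
    r_squared = theta_t ** 2 + theta_f ** 2
    if r_squared > 1 + 1e-12:
        raise ValueError("deviation lies outside the unit circle")
    # max() guards against tiny negative values caused by floating-point rounding
    return math.sqrt(max(0.0, 1 - r_squared))


def from_polar(r, angle):
    """Hypothetical helper: split a distance r along a ray at `angle` (radians from
    the True axis) into (theta_T, theta_F) components."""
    return r * math.cos(angle), r * math.sin(angle)


print(mtsi_informativeness(0.0, 0.0))                       # the actual state of affairs
print(mtsi_informativeness(*from_polar(0.5, math.pi / 4)))  # halfway out on the pi/4 ray
print(mtsi_informativeness(*from_polar(1.0, math.pi / 4)))  # on the unit circle
```

Only the distance from the origin matters to $\iota$ here, so an infon and its mirror image through the $\pi/4$ line receive the same value.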

At this point we have a clear means of calculating the deviation of an infon from the true state of affairs, and a metric to identify the informativeness of any infon. However, things do not end there. Floridi used his metric to quantify the degree of inaccuracy and the degree of vacuity of infons. His approach is very straightforward for inaccuracy, but it is quite complex for the amount of vacuity (requiring the integral of the distance). It is not difficult to see from Equation 7 that it would be even more so for our space, requiring the double integration of what could be a complex shape.¹⁵ Fortunately, we can simplify things significantly by following the approach taken by von Neumann in the development of QP.

In QP the basic metric is a wave amplitude, which is a periodic (sinusoidal) displacement: the maximum absolute amplitude is 1 and the minimum is 0, ranging around a UC. That is, if one takes the square of the amplitude as the probability of an electron being within that amplitude, then one has

$$\cos^2\theta + \sin^2\theta = 1$$

which is a measure rather than a metric.

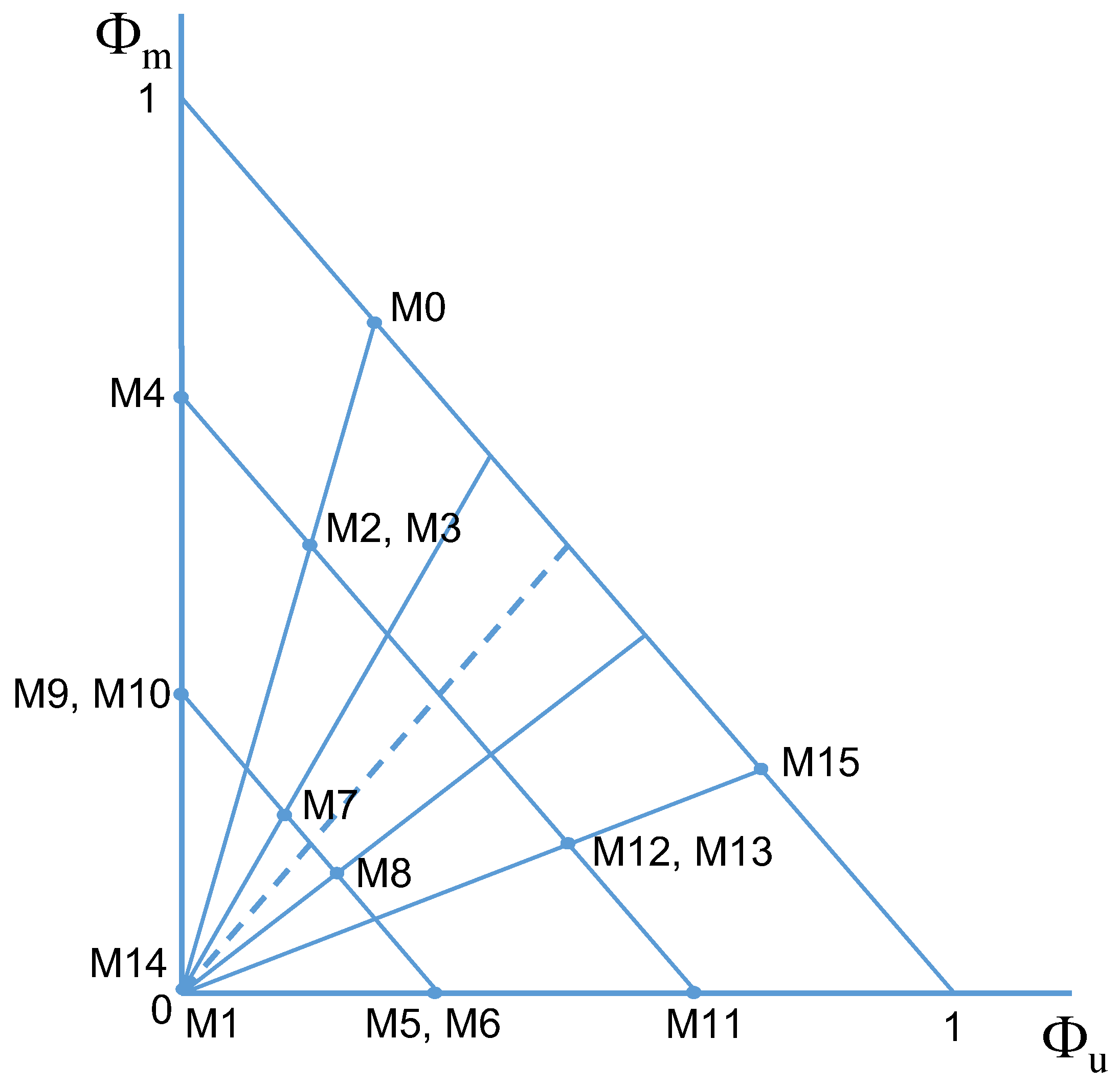

By analogy, if we square the metric values $\vartheta_T$, $\vartheta_F$ and $\iota$, we will (as with von Neumann) obtain measure values that generate a new space. The axis obtained from $\vartheta_T^2$ gives a measure of vacuity, or the degree to which one is uninformed (or ignorant).¹⁶ The other axis is then a measure of misinformation.¹⁷ And finally, the third axis gives a measure of the informativeness of the infon (and would come out of the page in Figure 3).
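By analogy with squaring amplitudes, the measures can be sketched as follows. The names V (vacuity), M (misinformation) and I (informativeness) are hypothetical labels chosen here, and the relation $I = 1 - V - M$ assumes the metric relation $\vartheta_T^2 + \vartheta_F^2 + \iota^2 = 1$.

```python
from fractions import Fraction


def measures(theta_t, theta_f):
    """Square the metric values to obtain measures (by analogy with squared amplitudes).

    V (vacuity) = theta_T**2, M (misinformation) = theta_F**2 and
    I (informativeness) = 1 - V - M, an affine function of V and M.
    """
    v = theta_t ** 2
    m = theta_f ** 2
    i = 1 - v - m
    if i < 0:
        raise ValueError("point lies outside the unit circle")
    return v, m, i


# A point and its mirror image through the pi/4 line (components swapped).
print(measures(Fraction(3, 5), Fraction(0)))
print(measures(Fraction(0), Fraction(3, 5)))
```

In measure space the three values always sum to 1, so the informativeness surface is a plane rather than a curved sheet.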

As things are set up in the IS for MTSI, a contradiction has an informativeness of zero (as does a tautology), as required to avoid the BCP. However, for the reasons presented previously, neither a contradiction nor a tautology lies on the axes of the space, since both contain true and false atomic infons. Strictly speaking, there is no infon on either axis that has a value of zero. On the other hand, because (as mentioned previously) a contradiction (or tautology) can contain as many true or false atoms as there are atoms in the messages, each ray not on the axes will contain a version of a contradiction (or tautology) at its zero point, as shown by the ⊥ and T labels in Figure 3. As the number of atomic infons increases, the points representing a contradiction or tautology will asymptotically approach their respective axes.

The final issue is that, according to our measure space, all infons and their contradictories have the same value of informativeness. This starts, as we have just seen, with the contradiction and tautology: they are contradictories and both have value zero. As another example, consider the atomic infon $x$; it lies on the True axis and will be true in 50% of cases. Its contradictory, $\neg x$, is located on the False axis and is false in 50% of cases. They are images of one another through the $\pi/4$ line and have the same value of informativeness. In one sense it is not surprising that true and false infons have the same value; after all, that was also the case for TSSI, although TSSI did not capture this relation for contradictories. But what is surprising is that this also means that, for the example used, both the true state of affairs and its contradictory yield $\iota = 1$.

The simplest way to show why this is the case is again by means of an example. Consider a familiar puzzle: you are confronted by two doors. Behind one is a million dollars; behind the other is instant death. The doors are protected by two guards, one of whom only tells lies and the other of whom only tells the truth. You have to choose one door and, obviously, you want to pick the money, not the demise.

There are two situations we can explore to make things clear: one in which you know which guard is which, and one in which you don’t. To help you make your decision you are allowed to ask one guard one question. In the first case you can simply ask either guard directly which door you should choose. If you ask Guard 1 (the liar), then you choose the other door from the one they indicate, because they will misinform you; if you ask Guard 2 (the truth-teller), then you choose the door they indicate. Simple. Here we see that regardless of which guard you ask, you become equally well informed as to what action to take, because there is a reliable process (logic) that leads you to the correct answer.

In the second situation, which is the one usually used in the puzzle, you can still ask a single question and, regardless of which guard you ask, become equally well informed as to which door to choose. In this case you ask either guard what answer the other guard would give. If you ask Guard 1, they will falsely tell you (i.e., misinform you) that Guard 2 would say Door 2, and if you ask Guard 2, they will truthfully tell you that Guard 1 would say Door 2. So you are now informed that Door 2 is the door you don’t want, and you can safely choose Door 1. Again, this is because propositional logic provides a robust and reliable means of leading you to the desired result.
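The reasoning in both situations is mechanical enough to check exhaustively. The sketch below is an illustration only; encoding guards as liar/truth-teller flags and doors as the numbers 1 and 2 is an assumption, not part of the original argument. It confirms that each strategy identifies the safe door whichever door is safe, whichever guard lies, and whichever guard is asked.

```python
from itertools import permutations

DOORS = (1, 2)


def other_door(door):
    """The door that is not `door`."""
    return DOORS[1] if door == DOORS[0] else DOORS[0]


def answer(guard_is_liar, true_statement):
    """A guard reports the true answer, or the other door if the guard is a liar."""
    return other_door(true_statement) if guard_is_liar else true_statement


def ask_directly(guard_is_liar, safe_door):
    """First situation: you know which guard lies, so you invert a liar's answer."""
    reply = answer(guard_is_liar, safe_door)
    return other_door(reply) if guard_is_liar else reply


def other_would_say(asked_is_liar, other_is_liar, safe_door):
    """Second situation: ask one guard which door the other guard would indicate."""
    others_reply = answer(other_is_liar, safe_door)
    return answer(asked_is_liar, others_reply)


# Check every arrangement: which door is safe, which guard lies, which guard is asked.
for safe_door in DOORS:
    for liars in permutations((True, False)):
        for asked in (0, 1):
            assert ask_directly(liars[asked], safe_door) == safe_door
            reply = other_would_say(liars[asked], liars[1 - asked], safe_door)
            assert other_door(reply) == safe_door  # the reply always names the unsafe door
print("one question always identifies the safe door")
```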

We are now in a position to consider how this new approach to semantic information matches up to the desiderata, the BCP and the specified conditions put forward by Floridi (which I believe are correct).¹⁸ In Section 4 we saw that TSSI did not meet all the stated conditions, for various reasons. Let’s look at each one in turn and see how MTSI compares.

Clearly M1-M3 are satisfied in a straightforward manner by the new version of the distance metric, which overcomes the problem that M3 poses for TSSI.

The spirit of M4 and M5 is also met straightforwardly, but is adapted to conform to the 2-D situation rather than the single dimension of TSSI. For M4 and M5, MTSI again achieves what TSSI aimed for but did not provide (i.e., discrepancies that lie strictly between zero and the maximum). So MTSI gives a robust measure of inaccuracy without the problems highlighted for TSSI.

E1, E3 and E4 also have direct counterparts in MTSI. The fact that MTSI is measure-based means that the calculation of the quantity of information is simplified with respect to the integral needed for E2.

Floridi states that “all atomic messages ought to be assigned the same potential degree of informativeness and therefore, although [E6] indicates that the graph of the model has variable gradient, the rate at which $\iota$ changes with respect to change in $\vartheta$ should be assumed to be uniform, continuous and linear.” Here again, since the MTSI measure is affine, the aims of E6 are met exactly, whereas they are only approximated by the quadratic.

The information space of MTSI includes all possible messages explicitly, and not just the conjunctive states/worlds as in TSSI.

Floridi points out that the space he proposed would allow continuous models to be analysed as well. The same applies here. For the Boolean case, as one increases the length of the infon, the space becomes denser but the relative positions of the messages in the space remain the same (e.g., atomic infons will still be true in half the cases).
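The claim that relative positions survive as the space becomes denser can be spot-checked for atomic infons. The sketch below (hypothetical helper name) counts, for a growing number of atoms $l$, the worlds and messages, and the share of worlds in which a single atom holds.

```python
from fractions import Fraction
from itertools import product


def share_true(l, index=0):
    """Fraction of the 2**l worlds in which the atomic infon at `index` is true."""
    worlds = list(product([True, False], repeat=l))
    return Fraction(sum(1 for w in worlds if w[index]), len(worlds))


for l in range(1, 7):
    # worlds and messages multiply, but an atom's share of worlds stays at 1/2
    print(l, 2 ** l, 2 ** (2 ** l), share_true(l))
```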

There have been a number of recent challenges to VT and Floridi’s TSSI. It is beyond the scope of this paper to address these in detail, but a couple of comments are in order. The situation is not helped by the fact that the criticisms deal with different versions, or statements, of VT, which makes them harder to address. Nonetheless, the paper by Fresco et al. [19] seems to be based on having a reliable means of shifting from a false to a true status. It should be obvious from the foregoing description, and particularly the example of the two doors, that this is not a problem for MTSI. On the other hand, Ferguson [4] attacks VT by means of carefully constructed paradoxes whereby VT gives rise to statements that both “convey information” and do not “convey information”.¹⁹ However, one does not need to go to these lengths, because Floridi has already provided examples of statements that are information and not information, depending on the accessibility of the relevant propositions [11, p 50]. The example Floridi uses is of a man phoning a garage to get a repair done, stating: “My wife left the car lights on overnight, and now the battery is flat.” This statement is false (and hence not information), since it was the man who left the lights on. But the relevant part for the mechanic is only that the battery is flat, which is true. One might also suggest in passing that if one were to follow Ferguson here and reject VT, then, unless the term “misinformation” is evacuated of all meaning, any statement would both “convey information” and “convey misinformation”, which does not seem to be an improvement. No doubt the debate on VT will continue, but there is nothing in the current criticism that demands jettisoning it quite yet, and a lot to suggest its continued value.

Finally, as the observant reader will note, MTSI has the form of an inverse relation. This indicates that it is not the IRP in and of itself that leads to, or entails, the BCP, but the fact that the original version used only a single variable. That is, it was at the wrong Level of Abstraction [20]. TWSI is the same formula as Popper suggested for “verisimilitude” ([21] p 233f), and Floridi proposed that “likeness” should be used to describe the proximity of messages to the state of interest. This is precisely what MTSI, at least potentially, provides, and it complements Cevolani’s contention that “truthlikeness” rather than “informativeness” should be the focus of attention [10]. Hence the use of MTSI for verisimilitude is one possible direction for future work. Another question that arises from this analysis is whether the approach taken here could be used to explore the distribution of messages in the information space when the datum of interest is more abstract, or representative of a different Level of Abstraction ([7] ch. 3).