Submitted: 18 March 2025

Posted: 19 March 2025

Abstract

Keywords:

1. Introduction

2. Literature Review

3. Methodology

4. Data Acquisition

5. Pre-Processing

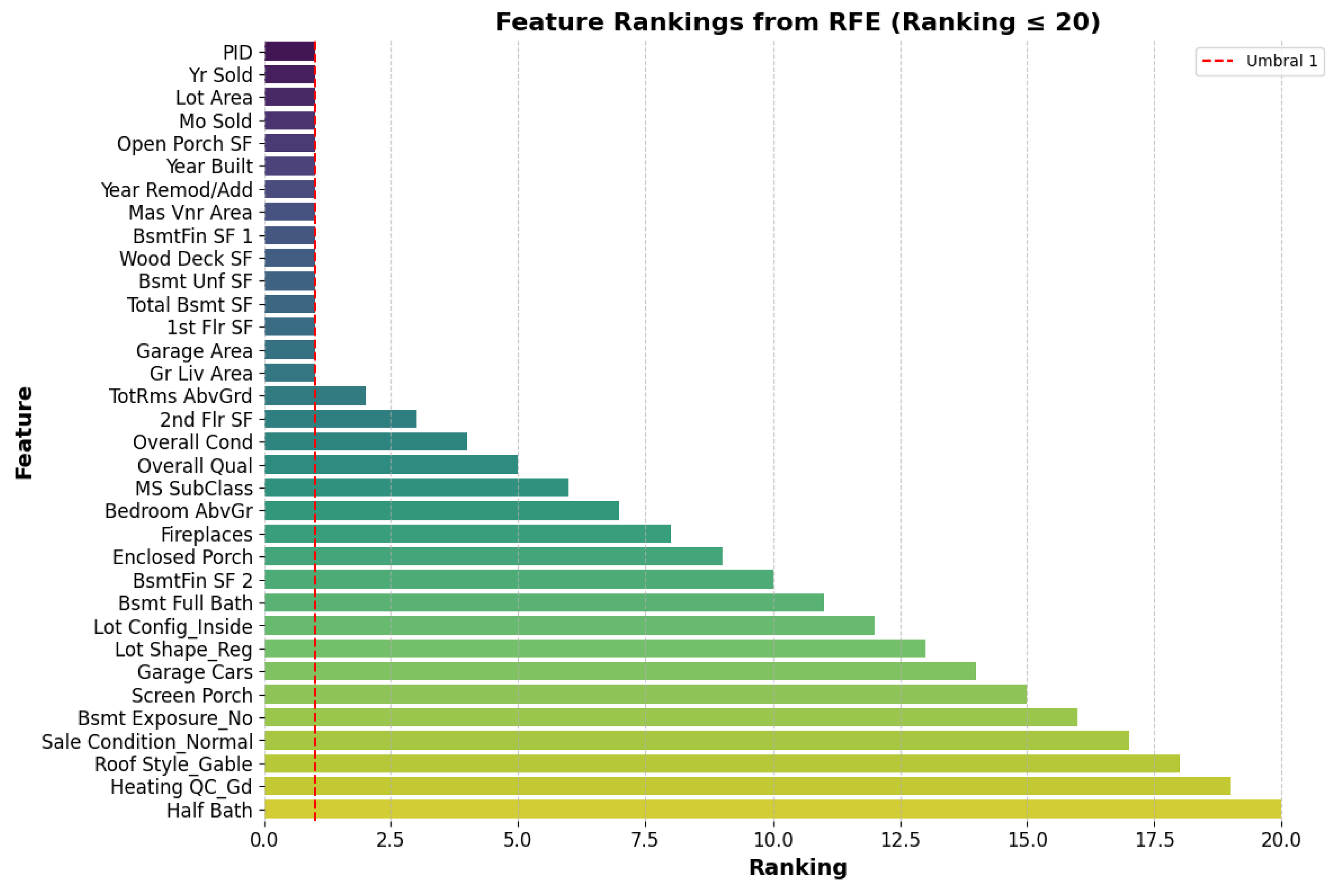

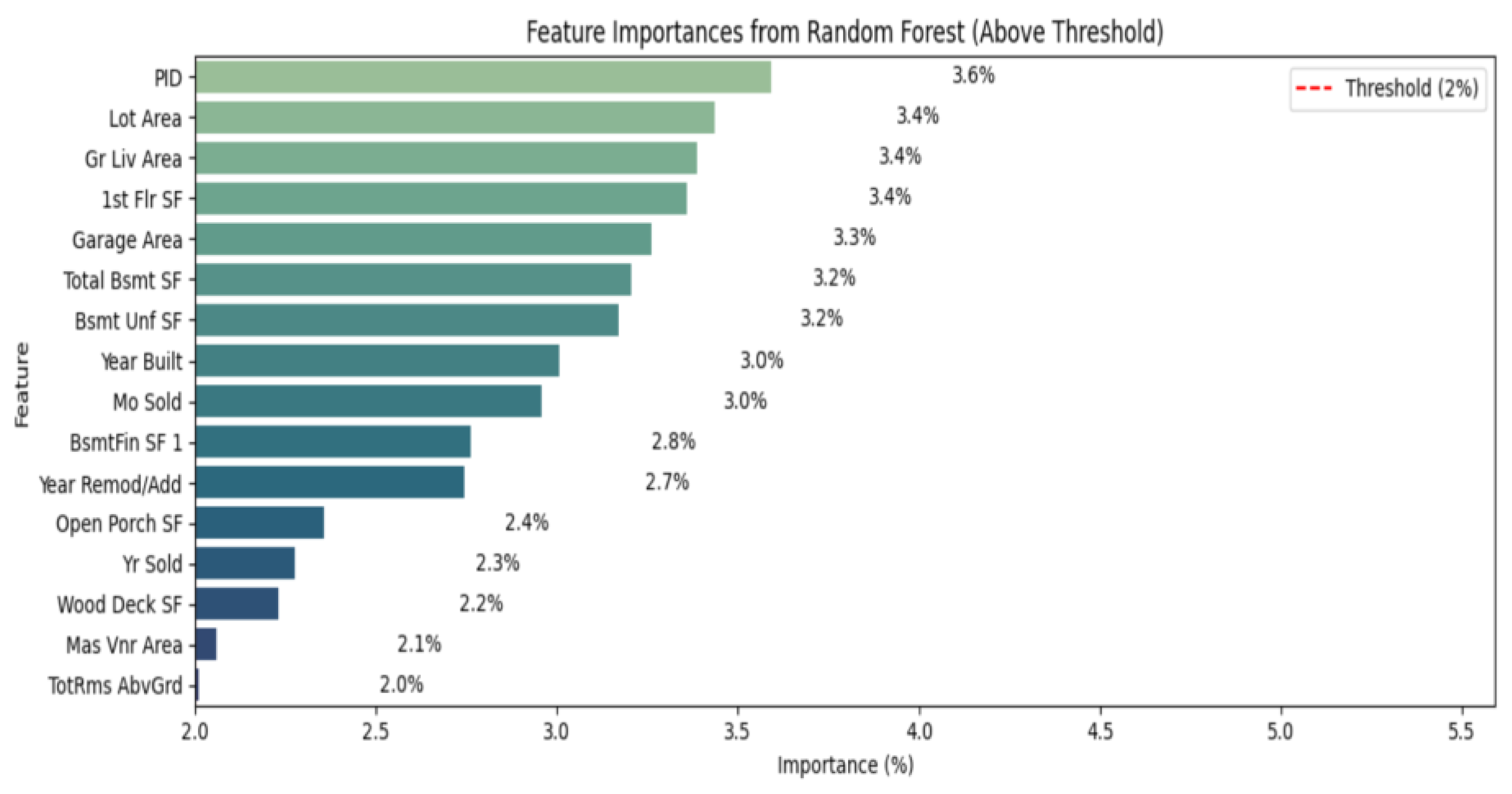

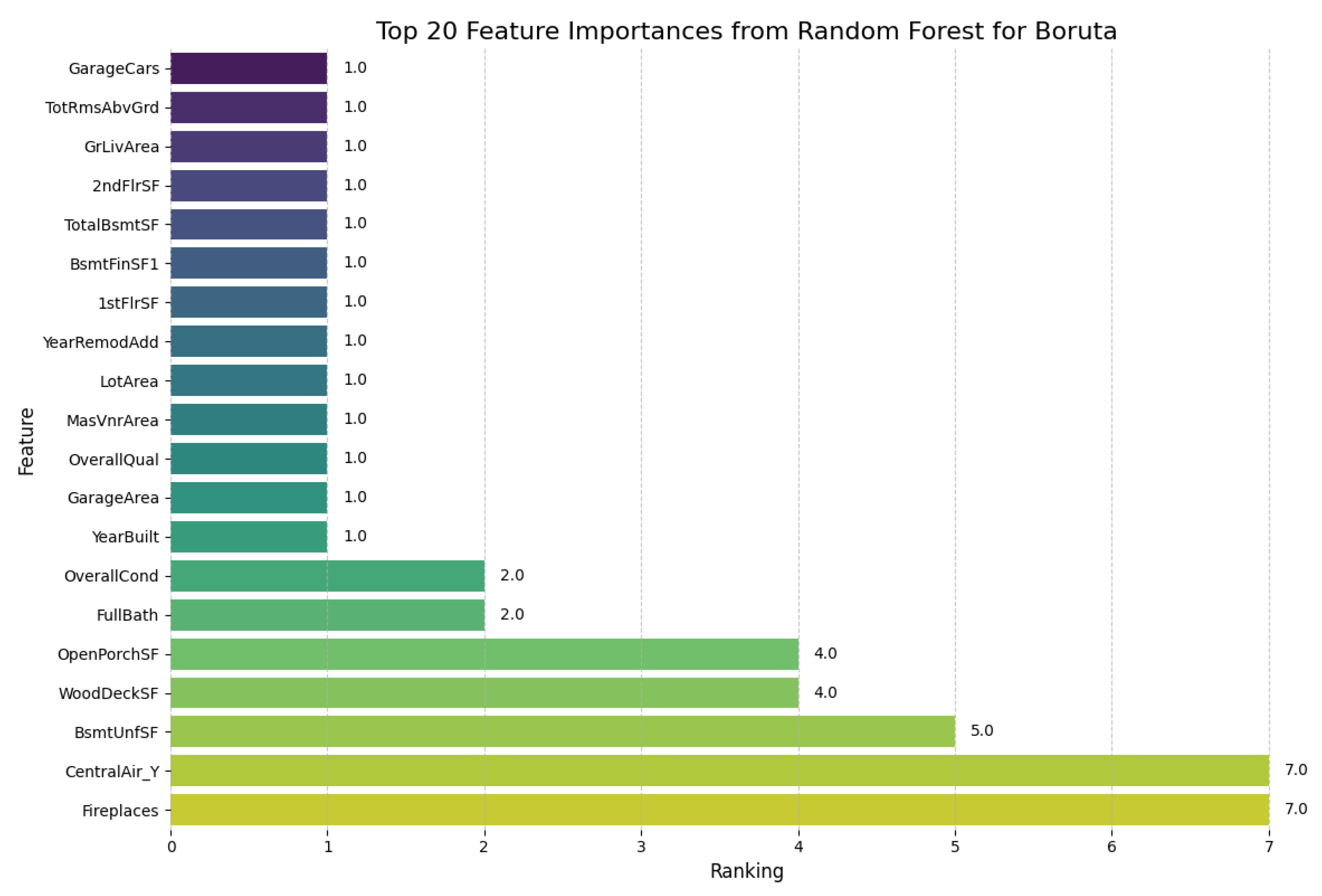

6. Selection Methods

Learning algorithm selection

AdaBoost

7. Results and Discussion

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Model | Features | MAE | MSE | RMSE | R² |
|---|---|---|---|---|---|
| AdaBoost | 227 | 23627.919429 | 1.044387e+09 | 32316.982313 | 0.851426 |
| RFE + AdaBoost | 15 | 25392.158815 | 1.173752e+09 | 34260.063181 | 0.833023 |
| Gradient Boosting | 227 | 14536.517194 | 5.642879e+08 | 23754.744694 | 0.919725 |
| RFE + Gradient Boosting | 15 | 17454.651420 | 6.754278e+08 | 25988.994436 | 0.903914 |
| RandomForest | 227 | 15185.544380 | 6.065719e+08 | 24628.680229 | 0.913710 |
| RFE + RandomForest | 15 | 17535.397304 | 7.454770e+08 | 27303.425108 | 0.893949 |
| ExtraTrees | 227 | 15476.414061 | 6.573662e+08 | 25639.153719 | 0.906484 |
| RFE + ExtraTrees | 15 | 16762.966974 | 6.782548e+08 | 26043.325620 | 0.903512 |
| Bagging | 227 | 15134.036132 | 6.391276e+08 | 25280.973836 | 0.909078 |
| RFE + Bagging | 15 | 17429.414198 | 7.574605e+08 | 27522.001198 | 0.892244 |
| Stacking | 227 | 14092.304154 | 5.338036e+08 | 23104.189688 | 0.924062 |
| RFE + Stacking | 15 | 16505.849309 | 6.473327e+08 | 25442.732864 | 0.907911 |
| Voting | 227 | 15723.876482 | 5.970945e+08 | 24435.517691 | 0.915058 |
| RFE + Voting | 15 | 17512.356684 | 6.940057e+08 | 26343.988514 | 0.901271 |

| Model | Features | MAE | MSE | RMSE | R² |
|---|---|---|---|---|---|
| AdaBoost | 227 | 23627.919429 | 1.044387e+09 | 32316.982313 | 0.851426 |
| RF + AdaBoost | 16 | 24371.146572 | 1.124288e+09 | 33530.410706 | 0.840060 |
| Gradient Boosting | 227 | 14536.517194 | 5.642879e+08 | 23754.744694 | 0.919725 |
| RF + Gradient Boosting | 16 | 17308.667825 | 6.725001e+08 | 25932.606461 | 0.904331 |
| RandomForest | 227 | 15185.544380 | 6.065719e+08 | 24628.680229 | 0.913710 |
| RF + RandomForest | 16 | 17170.938199 | 7.264897e+08 | 26953.472589 | 0.896650 |
| ExtraTrees | 227 | 15476.414061 | 6.573662e+08 | 25639.153719 | 0.906484 |
| RF + ExtraTrees | 16 | 16776.916428 | 6.684286e+08 | 25853.985352 | 0.904910 |
| Bagging | 227 | 15134.036132 | 6.391276e+08 | 25280.973836 | 0.909078 |
| RF + Bagging | 16 | 17653.341320 | 7.408339e+08 | 27218.264857 | 0.894610 |
| Stacking | 227 | 14092.304154 | 5.338036e+08 | 23104.189688 | 0.924062 |
| RF + Stacking | 16 | 16586.994273 | 6.388316e+08 | 25275.118788 | 0.909120 |
| Voting | 227 | 15723.876482 | 5.970945e+08 | 24435.517691 | 0.915058 |
| RF + Voting | 16 | 17392.159866 | 6.870462e+08 | 26211.565771 | 0.902261 |

| Model | Features | MAE | MSE | RMSE | R² |
|---|---|---|---|---|---|
| AdaBoost | 227 | 23627.919429 | 1.044387e+09 | 32316.982313 | 0.851426 |
| Boruta + AdaBoost | 16 | 23528.792421 | 1.041813e+09 | 32277.132408 | 0.851793 |
| Gradient Boosting | 227 | 14536.517194 | 5.642879e+08 | 23754.744694 | 0.919725 |
| Boruta + Gradient Boosting | 16 | 16073.472932 | 6.530824e+08 | 25555.476678 | 0.907093 |
| RandomForest | 227 | 15185.544380 | 6.065719e+08 | 24628.680229 | 0.913710 |
| Boruta + RandomForest | 16 | 15840.843610 | 6.861629e+08 | 26194.711106 | 0.902387 |
| ExtraTrees | 227 | 15476.414061 | 6.573662e+08 | 25639.153719 | 0.906484 |
| Boruta + ExtraTrees | 16 | 15899.186200 | 7.232086e+08 | 26892.537158 | 0.897117 |
| Bagging | 227 | 15134.036132 | 6.391276e+08 | 25280.973836 | 0.909078 |
| Boruta + Bagging | 16 | 15575.694721 | 6.866662e+08 | 26204.316267 | 0.902315 |
| Stacking | 227 | 14092.304154 | 5.338036e+08 | 23104.189688 | 0.924062 |
| Boruta + Stacking | 16 | 15471.669310 | 6.451969e+08 | 25400.725612 | 0.908215 |
| Voting | 227 | 15723.876482 | 5.970945e+08 | 24435.517691 | 0.915058 |
| Boruta + Voting | 16 | 16228.236364 | 6.583434e+08 | 25658.203542 | 0.906345 |
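The tables above report four regression metrics per model: MAE, MSE, RMSE and R2. As a minimal sketch of how these quantities relate, the following pure-Python block computes them from their standard textbook definitions and checks that the reported AdaBoost RMSE is consistent with the square root of the reported MSE. The function name `regression_metrics` and the toy price lists are hypothetical and serve only as illustration; they are not the authors' code or data.

```python
import math


def regression_metrics(y_true, y_pred):
    """Return MAE, MSE, RMSE and R2 for two equal-length sequences.

    Hypothetical helper using the standard definitions; not the
    authors' implementation.
    """
    n = len(y_true)
    if n == 0 or n != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")

    errors = [t - p for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / n   # mean absolute error
    ss_res = sum(e * e for e in errors)     # residual sum of squares
    mse = ss_res / n                        # mean squared error
    rmse = math.sqrt(mse)                   # root mean squared error

    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    if ss_tot == 0:
        raise ValueError("R2 is undefined when y_true is constant")
    r2 = 1.0 - ss_res / ss_tot              # coefficient of determination

    return {"MAE": mae, "MSE": mse, "RMSE": rmse, "R2": r2}


# Toy example with made-up sale prices (assumption, not the paper's data).
y_true = [200000.0, 150000.0, 320000.0, 275000.0]
y_pred = [210000.0, 140000.0, 300000.0, 280000.0]
metrics = regression_metrics(y_true, y_pred)

# Consistency check on the AdaBoost row with 227 features:
# RMSE should equal sqrt(MSE) up to the rounding of the printed MSE.
reported_mse = 1.044387e+09
reported_rmse = 32316.982313
rmse_from_mse = math.sqrt(reported_mse)
```

Because RMSE is a monotone function of MSE, the two columns always rank the models identically; MAE and R2 can rank them differently, which is why the tables list all four.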

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).