3.1. Classification Algorithms

In this paper, we consider six classifiers: the Random Convolutional Kernel Transform (ROCKET), the Residual Network (ResNet), the InceptionTime algorithm, a one-dimensional CNN, the CNN1D-LSTM model, and the Transformer algorithm. We excluded the HIVE-COTEv2 algorithm, a heterogeneous meta-ensemble for MTSC, due to its limited scalability with respect to dimensionality and time series length [5]. For the same reason, other ensemble methods were also not considered. In the following sub-sections, we briefly explain each of the algorithms used.

3.1.1. The Random Convolutional Kernel Transform (ROCKET)

The Random Convolutional Kernel Transform (ROCKET), introduced by Dempster et al. [8], utilizes numerous random convolution kernels combined with a linear classifier, such as ridge regression or logistic regression. Each kernel is applied to every instance, producing feature maps from which the maximum value and a novel feature, the proportion of positive values (ppv), are extracted. For each of the 10,000 generated kernels, parameters are drawn from specific distributions: the length $l$ is selected such that $l \in \{7, 9, 11\}$; the value of each weight, $w_i$, in the kernel is selected such that $w_i \sim \mathcal{N}(0, 1)$; dilation $d$ is sampled from an exponential scale up to the input length; and the binary decision to pad the series is chosen with equal probability. If padding is applied, the series is zero-padded at both ends, allowing the kernel’s midpoint to be applied to each point in the input series.

The convolution operation between an instance and a kernel can be interpreted as a dot product of two vectors, resulting in a feature map used to calculate the maximum value and ppv features. The ppv captures the proportion of the series correlated to the kernel, which has been shown to enhance classification accuracy. After all convolutions, each series is transformed into an instance with 20,000 attributes, which is then used to train the ridge regression classifier. An extension of ROCKET for multivariate datasets has recently been added to Python’s sktime library [9]. For multivariate datasets, kernels are assigned to random dimensions, and weights are then generated for each channel. Here, convolution is interpreted as a matrix dot product as the kernel convolves horizontally across the series, and the maximum and ppv values are calculated across all dimensions for each kernel, producing a 20,000-attribute instance.
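The univariate transform described above can be sketched in a few lines of standard-library Python. This is a minimal illustration, not the sktime implementation: the function names are hypothetical, the kernel lengths {7, 9, 11}, mean-centred standard normal weights, and uniform bias in [-1, 1] follow the original ROCKET description, and the series is assumed to be longer than the longest kernel.

```python
import math
import random


def random_kernel(input_length, rng):
    """Draw one ROCKET-style kernel: (weights, bias, dilation, padding)."""
    length = rng.choice([7, 9, 11])
    weights = [rng.gauss(0.0, 1.0) for _ in range(length)]
    mean = sum(weights) / length
    weights = [w - mean for w in weights]  # mean-centre the weights
    bias = rng.uniform(-1.0, 1.0)
    # Dilation on an exponential scale, bounded so the dilated kernel
    # still fits inside the input series.
    max_exp = math.log2((input_length - 1) / (length - 1))
    dilation = int(2 ** rng.uniform(0.0, max_exp))
    # Pad with probability 0.5 so the kernel midpoint can visit every point.
    padding = ((length - 1) * dilation) // 2 if rng.random() < 0.5 else 0
    return weights, bias, dilation, padding


def apply_kernel(series, weights, bias, dilation, padding):
    """Return (ppv, max) of the dilated convolution of one series."""
    n, length = len(series), len(weights)
    out_len = n + 2 * padding - (length - 1) * dilation
    positives, best = 0, -math.inf
    for start in range(-padding, -padding + out_len):
        total = bias
        for j, w in enumerate(weights):
            idx = start + j * dilation
            if 0 <= idx < n:  # indices outside the series are zero padding
                total += w * series[idx]
        positives += total > 0
        best = max(best, total)
    return positives / out_len, best


def transform(series, kernels):
    """Concatenate (ppv, max) for every kernel: 2 features per kernel."""
    features = []
    for kernel in kernels:
        features.extend(apply_kernel(series, *kernel))
    return features
```

With 10,000 kernels, `transform` would return the 20,000 attributes per series mentioned above; these would then be passed to a linear classifier such as ridge regression.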

In most cases, ridge regression is preferred due to its efficiency in cross-validating the regularization hyperparameter. However, for very large datasets where the number of instances significantly exceeds the number of features, logistic regression with stochastic gradient descent offers greater scalability. ROCKET effectively zooms out the time series data in a manner analogous to how Support Vector Machines (SVM) operate on data points.

3.1.2. Residual Network (ResNet)

ResNet was first applied to time series classification in [10]. It consists of three consecutive blocks, each containing three convolutional layers connected by residual ‘shortcut’ connections, which add each block’s input to its output. These residual connections facilitate the direct flow of gradients through the network, helping to mitigate the vanishing gradient problem. Following the residual blocks, global average pooling and softmax layers are used to generate features and make predictions. We retain all hyperparameter and optimizer settings from Fawaz et al.’s evaluation [3]. The implementation in sktime [9] interfaces with the original implementation provided by their study.

3.1.3. InceptionTime

InceptionTime achieves high accuracy by building on ResNet to incorporate Inception modules [10] and by ensembling five instantiations of the network with different random initial weights to improve stability [11]. Each network in the ensemble consists of two blocks, each containing three Inception modules, as opposed to ResNet’s structure of three blocks with three traditional convolutional layers. These blocks retain residual connections and are followed by global average pooling and softmax layers, as in ResNet.

An Inception module takes an input multivariate series of length $m$ and dimensionality $d$. It first applies a bottleneck layer with filter length and stride of 1 to reduce the dimensionality to $d' < d$, maintaining the original series length $m$. This dimensionality reduction significantly decreases the number of parameters. Convolutions of varying lengths are then applied to the bottleneck layer’s output to detect patterns of different sizes. The outputs of these convolutions are combined with an additional source of diversity, a max pooling followed by a bottleneck (with the same value of $d'$) applied to the original time series, and all are stacked to form the dimensions of the output multivariate time series fed into the next layer. Once more, we retain all hyperparameter and optimizer settings from the source article [12], and the implementation in sktime [9] interfaces with the implementation provided by that study.

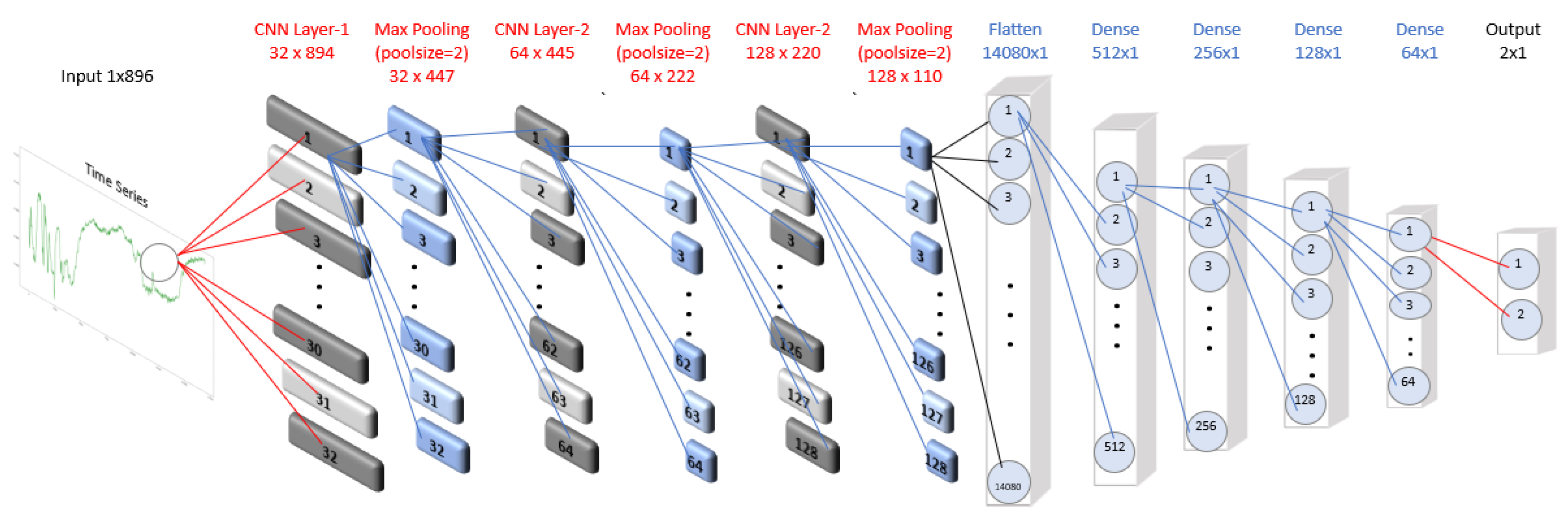

3.1.4. One Dimensional Convolutional Neural Network (CNN-1D)

There are numerous CNN architectures, such as LeNet, AlexNet, and GoogLeNet [13], used to classify multivariate time series. In this work, we employed a sequential model for CNN, specifically a 1D CNN [14, 15], which is well-suited for analyzing sequential data like time series and spectral data. Each time series is represented by the input shape parameter of the Conv1D layer with a given number of feature maps (filters) and kernel size. The feature maps determine the number of times the input is processed or interpreted, whereas the kernel size specifies the number of input time steps considered as the input sequence is read or processed onto the feature maps.

In our experiments, we varied the number of feature maps between 32 and 256 and used kernel sizes of either 2 or 3. Following the Conv1D layer, we applied a MaxPooling1D layer with a pool size of 3, repeating this sequence of layers three times. Afterward, a Flatten layer was added, followed by three dense layers with 64 neurons each. The output layer matched the number of dataset classes and used the softmax activation function. Given that most datasets have a limited number of features, we did not include a dropout layer.
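To make the effect of the three Conv1D and MaxPooling1D stages concrete, the following sketch computes the sequence length that reaches the Flatten layer. It assumes stride 1 and no padding in the convolutions and non-overlapping pooling windows, which are common defaults but are not stated in the text; the helper names are hypothetical.

```python
def conv1d_out(length, kernel_size, stride=1):
    """Output length of a 1D convolution without padding."""
    return (length - kernel_size) // stride + 1


def maxpool1d_out(length, pool_size):
    """Output length of non-overlapping max pooling without padding."""
    return length // pool_size


def flattened_size(length, kernel_size, filters, pool_size=3, blocks=3):
    """Number of values entering the first dense layer after Flatten."""
    for _ in range(blocks):
        length = maxpool1d_out(conv1d_out(length, kernel_size), pool_size)
    return length * filters


# Example: a series of 896 time steps, kernel size 3, and an assumed
# 64 filters in the last convolution.
# 896 -> 894 -> 298 -> 296 -> 98 -> 96 -> 32 time steps, then 32 * 64 values.
print(flattened_size(896, kernel_size=3, filters=64))
```

Under these assumptions, each block shrinks the sequence by roughly a factor of three, so short series (around 30 time steps) leave very few time steps after three blocks.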

The architecture of the 1D CNN model used for the SCP1 dataset is shown in Figure 1. We trained the model for 30 epochs with a batch size of 256, optimizing the categorical cross-entropy loss function using the Adam optimizer, a variant of stochastic gradient descent.

3.1.5. CNN-1D - LSTM

In the MLP model, the pre-layer and post-layer are fully connected, with no connections among neurons within the same layer. In contrast, the Recurrent Neural Network (RNN) introduces a weighted sum of the previous inputs into the hidden layer’s calculation, allowing it to consider both the output from the previous layer and all prior outputs [16]. This feedback mechanism in the hidden layer helps the network learn context-related information, making RNNs effective for processing sequential data, such as time series and spectral data.

However, RNNs are limited by short-term memory, struggling to retain information over long sequences. Long Short-Term Memory (LSTM) networks, a type of RNN, address this by managing long-term dependencies between inputs and outputs [17, 18]. LSTM networks use internal mechanisms called gates to regulate the flow of information, enabling the network to learn which data in a sequence is important to retain or discard. This capability allows LSTMs to pass relevant information along the sequence, helping improve predictions over long input chains.
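The gating mechanism can be illustrated with a single-unit LSTM step written with the standard gate equations. This is a didactic sketch with scalar inputs and hypothetical parameter names, not the layer used in our experiments.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h_prev, c_prev, params):
    """One time step of a single-unit LSTM.

    params maps each gate name to a tuple (w_x, w_h, bias).
    """
    def pre_activation(gate):
        w_x, w_h, bias = params[gate]
        return w_x * x + w_h * h_prev + bias

    f = sigmoid(pre_activation("forget"))        # share of old cell state kept
    i = sigmoid(pre_activation("input"))         # share of new candidate written
    o = sigmoid(pre_activation("output"))        # share of cell state exposed
    g = math.tanh(pre_activation("candidate"))   # candidate cell content
    c = f * c_prev + i * g                       # updated cell (long-term) state
    h = o * math.tanh(c)                         # updated hidden state (output)
    return h, c
```

A forget gate close to 1 and an input gate close to 0 carry the cell state `c` almost unchanged across many steps, which is how information survives over long sequences.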

3.1.6. Transformers

Vaswani et al. [19] introduced the concept of attention-based networks, originally designed for natural language processing (NLP), where sequences of words are ordered by grammar and syntax. In an attention-based network, known as the Transformer, an input sequence (e.g., text in English) is processed to generate an output sequence (e.g., text in Spanish). In time series analysis, where data is ordered chronologically in time steps, the Transformer generates a forecast along the time axis from a sequence of training observations. Transformers are effective in capturing long-range dependencies and interactions, making them well-suited for time series modeling. In many applications, Transformers have demonstrated superior performance compared to RNN and LSTM models [20, 21, 22].

A key feature of the Transformer is its attention heads, which enable it to learn relationships between each time step and every other time step in the input sequence. The Transformer dynamically updates attention weights to emphasize relevant time steps and down-weight less relevant ones, with a score matrix expressing the association between each time step and the others.
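The score matrix can be illustrated with scaled dot-product self-attention over a short sequence. In this minimal sketch the queries, keys, and values are passed in directly; in a full Transformer they would be learned linear projections of the input, which are omitted here as a simplifying assumption.

```python
import math


def softmax(row):
    """Numerically stable softmax of a list of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention.

    Each argument is a list of time steps, each time step a list of floats.
    Returns (weights, outputs); weights[t][s] is the attention that time
    step t pays to time step s.
    """
    scale = math.sqrt(len(keys[0]))
    scores = [
        [sum(q_i * k_i for q_i, k_i in zip(q, k)) / scale for k in keys]
        for q in queries
    ]
    weights = [softmax(row) for row in scores]
    n_features = len(values[0])
    outputs = [
        [sum(w * v[f] for w, v in zip(row, values)) for f in range(n_features)]
        for row in weights
    ]
    return weights, outputs
```

Each row of `weights` sums to one, so every output time step is a weighted average of the value vectors of all time steps.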

The Transformer architecture consists of a stack of Encoder and Decoder layers, each with corresponding Embedding layers for their respective inputs. The final Output layer generates the model’s predictions. Each attention block within the architecture includes components such as Self-Attention, Layer Normalization, and a Feed-Forward layer. The input dimensions of each block are equal to its output dimensions.

3.2. Datasets

In this paper, we use only 15 of the 30 MTSC datasets available in the UEA repository, discarding others for the following reasons. Four datasets (Insect Wingbeats, Spoken Arabic Digits, Character Trajectories, and Japanese Vowels) contain unequal-length time series. These were excluded to avoid preprocessing steps that could impact classifier comparisons. Additionally, four datasets (AtrialFibrillation, BasicMotions, ERing, and StandWalkJump) have fewer than 50 instances in their training sets, which can be problematic for deep learning algorithms that require a larger number of instances to achieve reliable prediction performance. Despite this, we have included the BasicMotions dataset in our experimental study.

There are also four datasets (Libras, LSST, PenDigits, and RacketSports) containing time series of very short length (fewer than 50 points), which may limit the predictive power of machine learning algorithms. In our study, we have considered two of these datasets: Libras and RacketSports. Conversely, two datasets, EigenWorms and MotorImagery, feature very long time series (over 2000 points), which can overwhelm the algorithms and reduce prediction accuracy. Binning can be used to reduce the dimensionality, but to carry out a fair comparison we prefer to exclude both datasets. The Phoneme dataset was also excluded due to its limited training set for a large number of classes (39), which could impair model performance.

Lastly, the Cricket, Handwriting, and UWaveGesture datasets were excluded due to their small training sets relative to their testing sets, resulting in poor performance for deep learning models. For these datasets, the product of training size and dimensionality is low, leading to underperformance in deep learning algorithms.

In the following sections, we provide a brief overview of the datasets included in our experiments, listed in descending order based on the average accuracy achieved by the classifiers considered in our experiments.

3.2.1. Epilepsy (EPI)

The data presented in [23] was generated using a 3D accelerometer worn on the dominant wrist, with healthy participants simulating specific class activities. An accelerometer measures changes in speed and typically reports data across three movement axes (x, y, z). Data was collected from six participants using a tri-axial accelerometer as they performed four different activities, each of a different duration: i) Walking, which includes different paces and gestures (walking slowly while gesturing, walking slowly, walking normally, and walking fast), each 30 seconds long; ii) Running along a 40-meter corridor; iii) Sawing with a saw for 30 seconds; and iv) Seizure mimicking whilst seated, with 5-6 seconds before and 30 seconds after the mimicked seizure, which itself lasted 30 seconds. Each participant performed each activity at least 10 times. As is standard practice for this dataset, the data was truncated to match the length of the shortest retained series. One case (ID002 Running 16) was removed due to incomplete data collection. After cleaning, the dataset consists of 275 cases, with 137 instances in the training set, approximately equally distributed among the four classes, and 138 instances in the test set.

3.2.2. NATOPS

This dataset, adapted from the 2016 Advanced Analytics and Learning on Temporal Data challenge [24], contains 24-dimensional data recorded via Xbox Kinect as participants performed one of six gestures. Sensors attached to each hand, elbow, wrist, and thumb captured 3D positional data throughout each gesture. The training set consists of 180 instances, equally distributed across the six classes, and the test set also contains 180 instances.

3.2.3. Articulary Word Recognition (AWR)

An Electromagnetic Articulograph (EMA) is a device used to measure tongue and lip movements during speech by tracking small sensors attached to the articulators (e.g., tongue, lips). Subjects are seated within a calibrated magnetic field, allowing for precise measurement of sensor position changes. The EMA AG500 achieves a spatial accuracy of 0.5 mm.

Data was collected from multiple native English speakers as they produced 25 words [25]. Nine sensors, each recording x, y, and z positions at a 200 Hz sampling rate, were used in data collection. The sensors were placed on the forehead, jaw, lips, and along the tongue from tip to back in the midline. Three head sensors (Head Center, Head Right, and Head Left) were mounted on a pair of glasses to account for head-independent movement of other sensors. Tongue sensors were labeled T1 through T4, from tip to back. Out of 27 available dimensions, this dataset includes only nine.

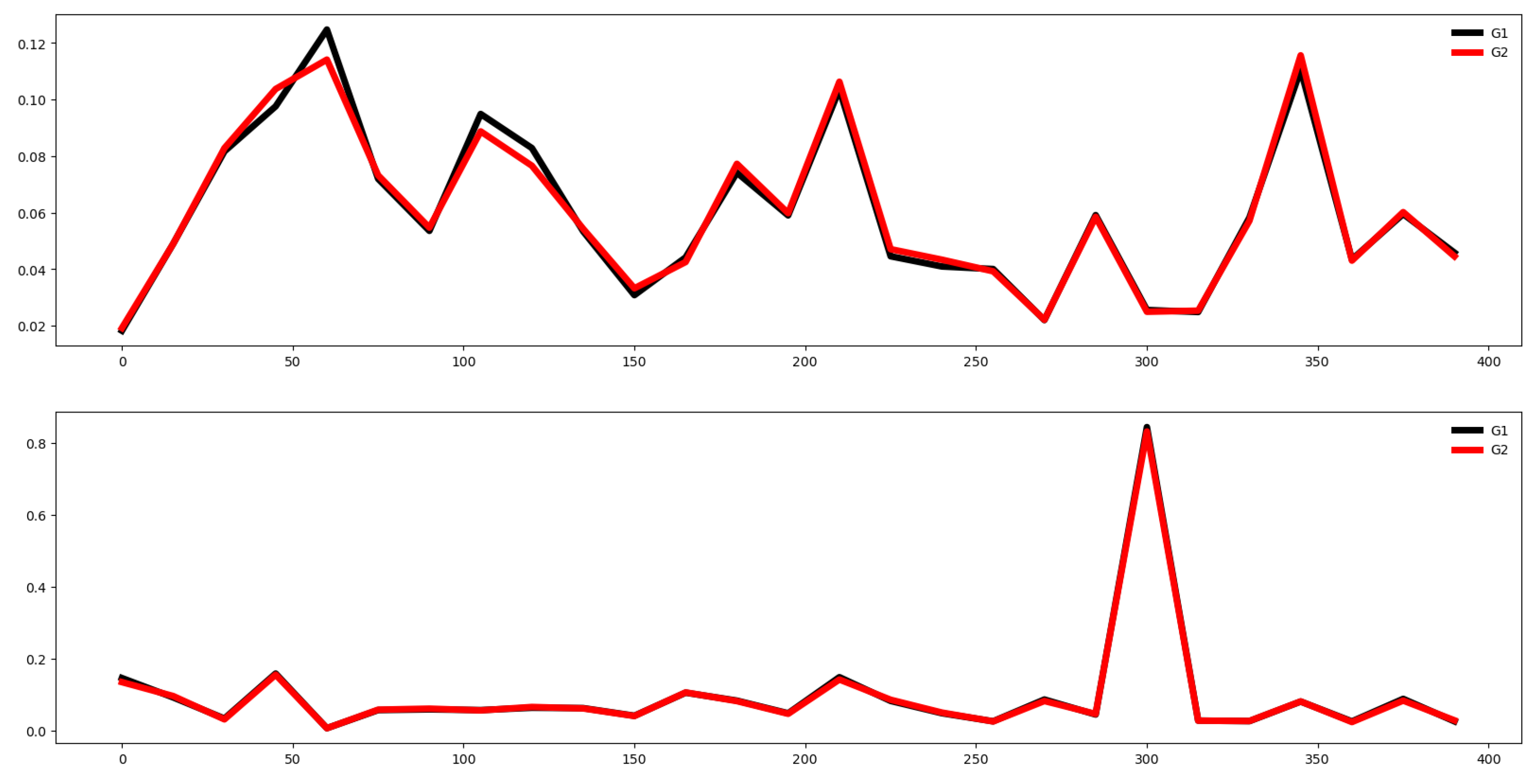

3.2.4. SelfRegulationSCP1 (SCP1)

In this dataset, healthy participants were asked to visualize moving a cursor either up or down on a screen. The direction of movement was determined by their Slow Cortical Potential (SCP), measured via EEG and visually fed back to the participant [26]. EEG data was recorded from six positions on the head. The objective is to classify each instance as positive (downward) or negative (upward) movement based on EEG readings.

During each trial, the task was visually indicated with a highlighted goal at either the top (negative) or bottom (positive) of the screen, starting from 0.5 seconds until the end of the trial. Visual feedback was provided between 2 and 5.5 seconds. Only this 3.5-second interval is used for training and testing. With a sampling rate of 256 Hz and a recording length of 3.5 seconds, each trial includes 896 samples per channel.
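The series length follows directly from the sampling rate and the retained interval. A small helper (hypothetical name) makes the arithmetic explicit:

```python
def samples_per_channel(sampling_rate_hz, start_s, end_s):
    """Number of samples recorded per channel between start_s and end_s."""
    return round(sampling_rate_hz * (end_s - start_s))


# Feedback interval from 2 s to 5.5 s, sampled at 256 Hz.
print(samples_per_channel(256, 2.0, 5.5))  # 896
```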

The training data consists of 268 trials, with 135 trials for one class and 133 for the other, recorded over two separate days and randomly mixed. The test set includes 293 trials.

3.2.5. PEMS-SF

This is a UCI dataset provided by the California Department of Transportation, as reported in [27]. It contains 15 months of daily data from the California Department of Transportation’s PEMS website, covering the period from January 1, 2008, to March 30, 2009 (455 days in total). The data represents the occupancy rate (between 0 and 1) of various freeway lanes in the San Francisco Bay Area, sampled every 10 minutes.

Each day in the dataset is represented as a single time series with 963 dimensions, corresponding to the sensors that consistently operated throughout the period, and a length of 144 time steps (6 samples per hour over 24 hours). Public holidays were excluded from the dataset, along with two days containing anomalies—March 8, 2009, and March 9, 2008—when all sensors were muted between 2:00 and 3:00 AM. This results in a dataset of 440 time series, each representing a day of data.

The task is to classify each time series by its corresponding day of the week, from Monday (label 1) to Sunday (label 7).

3.2.6. Heartbeat (HB)

This dataset is derived from the PhysioNet/CinC Challenge 2016 [28] and consists of heart sound recordings sourced from various contributors worldwide. Recordings were made in both clinical and nonclinical environments, from both healthy subjects and patients with confirmed cardiac conditions. Heart sounds were collected from different locations on the body, typically including the aortic, pulmonic, tricuspid, and mitral areas, though recordings could come from any of nine possible locations.

The recordings are categorized into two classes: normal (from healthy subjects) and abnormal (from patients with confirmed cardiac diagnoses). Both healthy subjects and patients include adults and children. Each recording was truncated to a standard length of 5 seconds. Spectrograms of each instance were then generated with a window size of 0.061 seconds and a 70% overlap. In this multivariate dataset, each dimension represents a frequency band from the spectrogram. The dataset includes 113 patients in the normal class and 296 in the abnormal class.

3.2.7. Face Detection (FD)

This dataset is derived from the training set of a Kaggle competition [

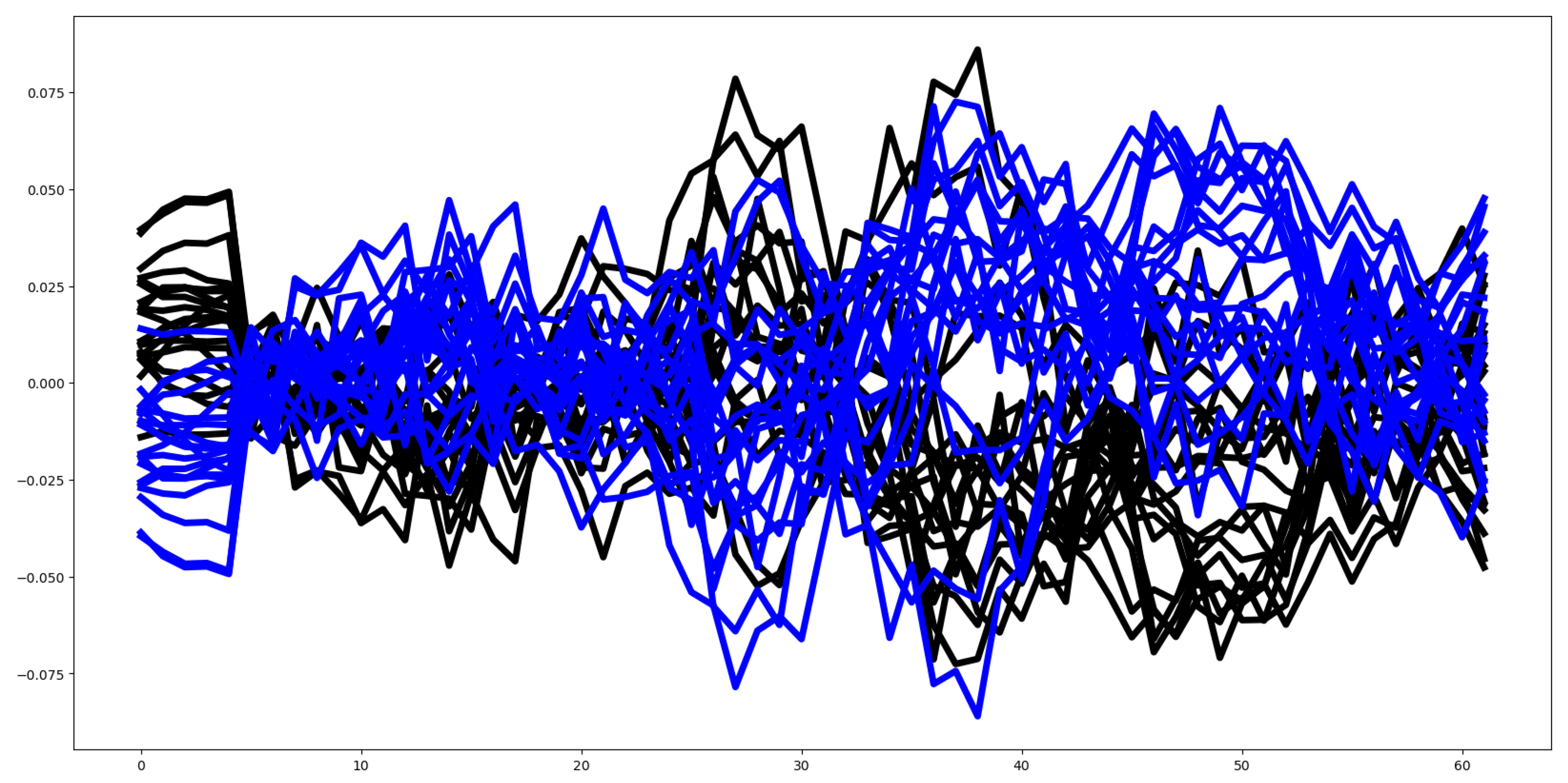

29] and consists of Magnetoencephalography (MEG) recordings with class labels (Face/Scramble) from 10 subjects (subject01 to subject10). Test data is available for an additional six subjects (subject11 to subject16). For each subject, approximately 580-590 trials are included. Each trial contains 1.5 seconds of MEG recordings, beginning 0.5 seconds before the stimulus onset, along with a corresponding class label: Face (class 1) or Scramble (class 0).

The MEG data was down-sampled to 250 Hz and high-pass filtered at 1 Hz. The original dataset includes 306 time series channels, each containing 375 time steps. For this study, we used a reduced set of 144 channels and 62 time steps, as provided on the competition website. Trials for each subject are organized into a 3D data matrix with dimensions (trial x channel x time), resulting in matrices of size 580 x 144 x 62. The training set comprises 5,890 instances, evenly split between the two classes.

3.2.8. Duck Duck Geese (DDG)

This dataset was derived from audio recordings available on the Xeno Canto website [30]. Each recording was selected from either the A or B quality category. Due to varying sample rates among the recordings, all audio files were downsampled to 44,100 Hz. Each recording was then center truncated to a length of 5 seconds (matching the length of the shortest recording) before being transformed into a spectrogram with a window size of 0.061 seconds and an overlap of 70%. The dataset includes the following classes: Black-bellied Whistling Duck (20 instances); Canadian Goose (20 instances); Greylag Goose (20 instances); Pink Footed Goose (20 instances); and White-faced Whistling Duck (20 instances).

3.2.9. Finger Movements (FM)

This dataset, described in [31], consists of 500 ms intervals of Electroencephalogram (EEG) recordings, starting 130 ms before a key press. A single participant, seated in a normal position at a keyboard, was instructed to type characters using only the index and pinky fingers. The dataset has 28 dimensions, each with 50 attributes, and includes two target classes: left and right. The training set contains 316 instances, with 159 labeled as the first class and 157 as the second. The test set includes 100 instances.

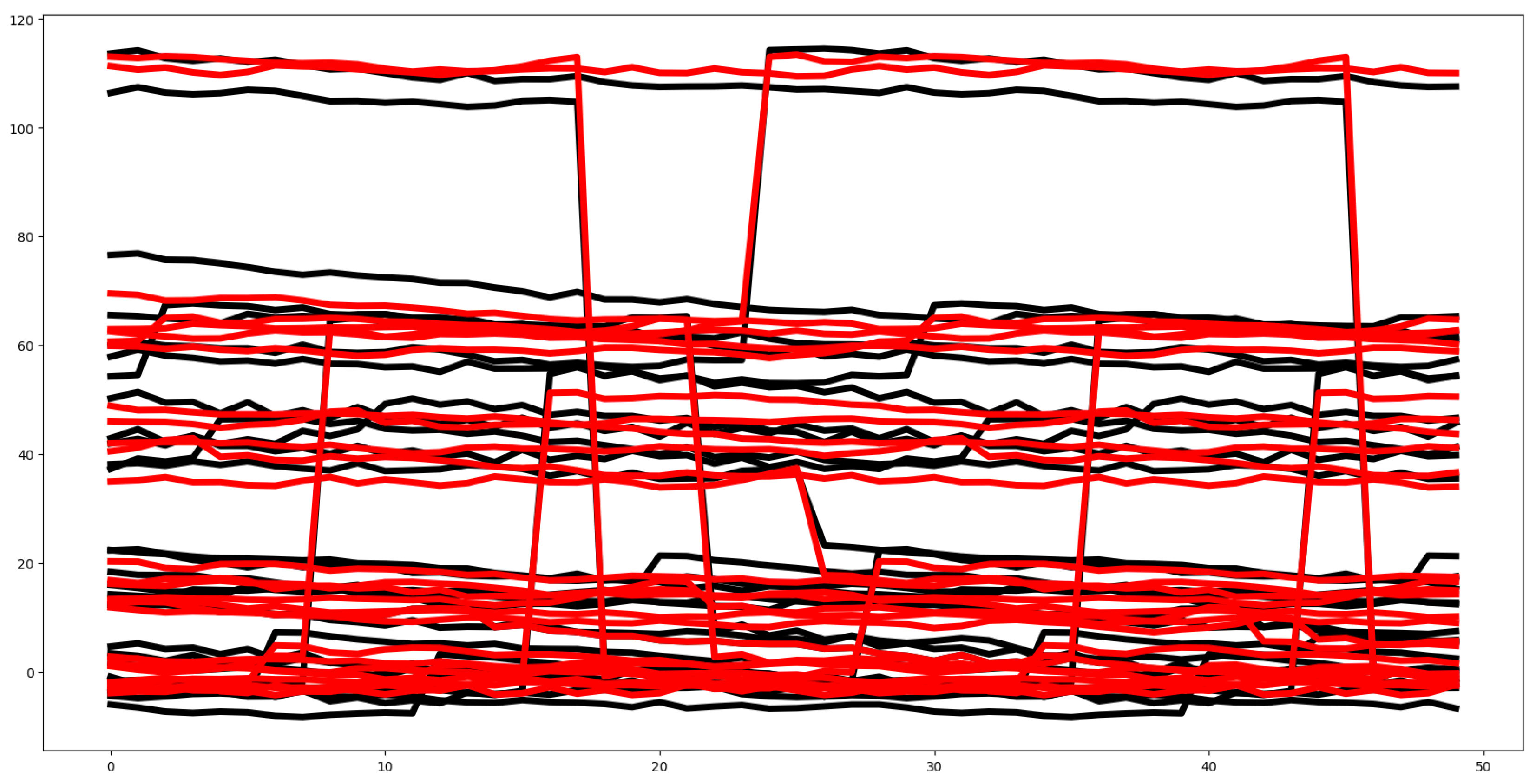

3.2.10. SelfRegulationSCP2 (SCP2)

An artificially respirated ALS patient was instructed to move a cursor either up or down on a screen. The direction of movement was controlled via Slow Cortical Potential (SCP), measured using EEG, and provided to the participant both visually and audibly [26]. EEG data was recorded from seven positions on the head. The objective is to classify each instance as positive (downward) or negative (upward) movement based on EEG readings.

During each trial, the task was indicated by a highlighted goal at the top (indicating negativity) or bottom (indicating positivity) of the screen from 0.5 to 7.5 seconds. Additionally, the task (“up” or “down”) was vocalized at 0.5 seconds. Visual feedback was provided from 2 to 6.5 seconds. Only the 4.5-second interval from 2 to 6.5 seconds is available for training and testing.

With a sampling rate of 256 Hz over 4.5 seconds, each trial consists of 1152 samples per channel. The training data contains 200 trials (100 of each class), recorded on the same day and permuted randomly. Each time series has a length of 1152 across 7 dimensions. The test set includes 180 trials.

3.2.11. Hand Movement Direction (HMD)

This dataset includes recordings from two right-handed subjects moving a joystick with their hand and wrist in one of four possible directions (right, up, down, or left) after hearing a prompt. The task is to classify the direction of movement based on the resulting MEG data. Each recording captures an interval beginning 0.4 seconds before the movement and ending 0.6 seconds afterward, across 10 MEG channels. The data is sampled at 400 Hz (see [29] for more details). The training set consists of 160 instances, with 40 instances per direction. The test set includes 74 instances.

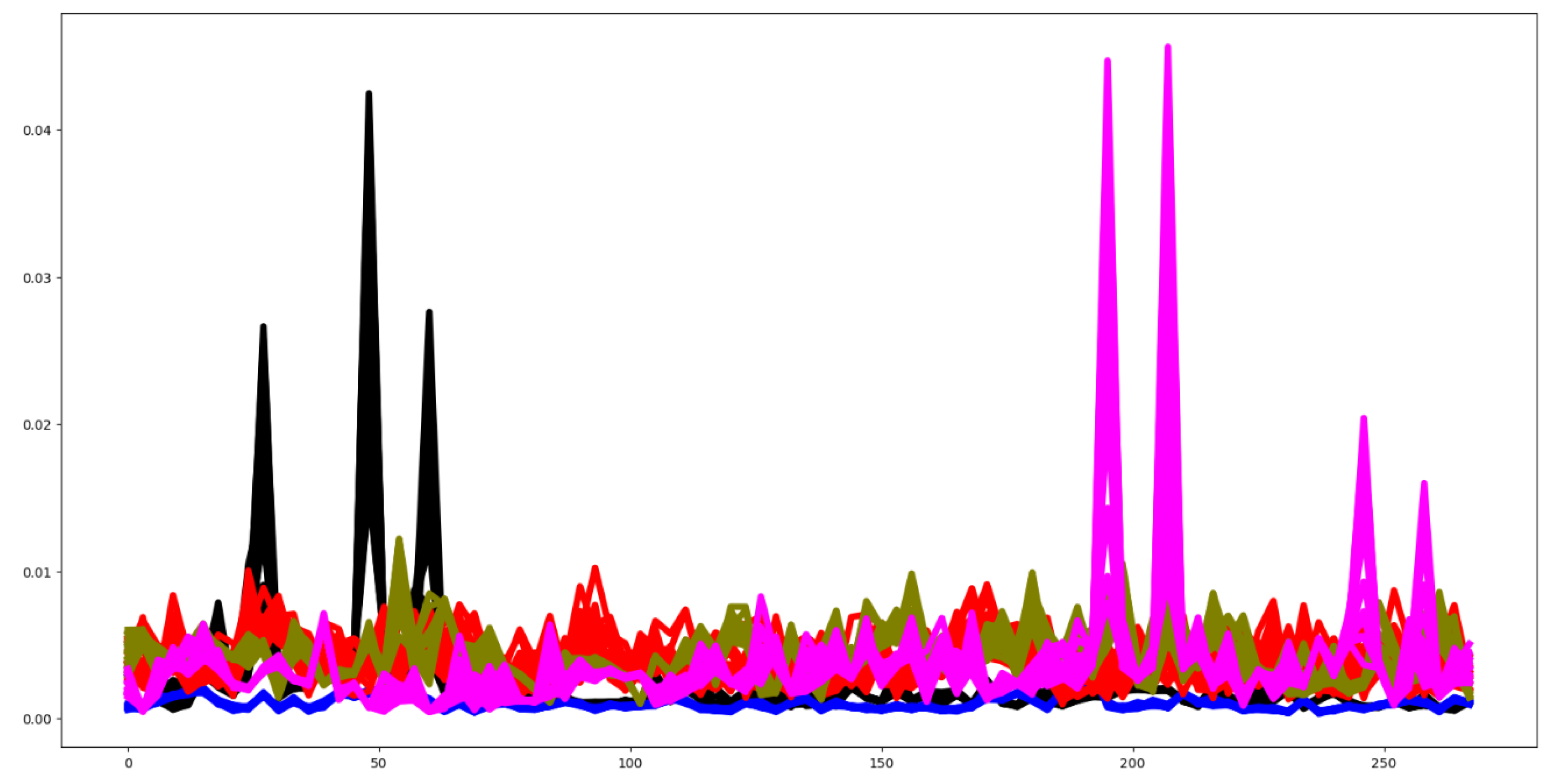

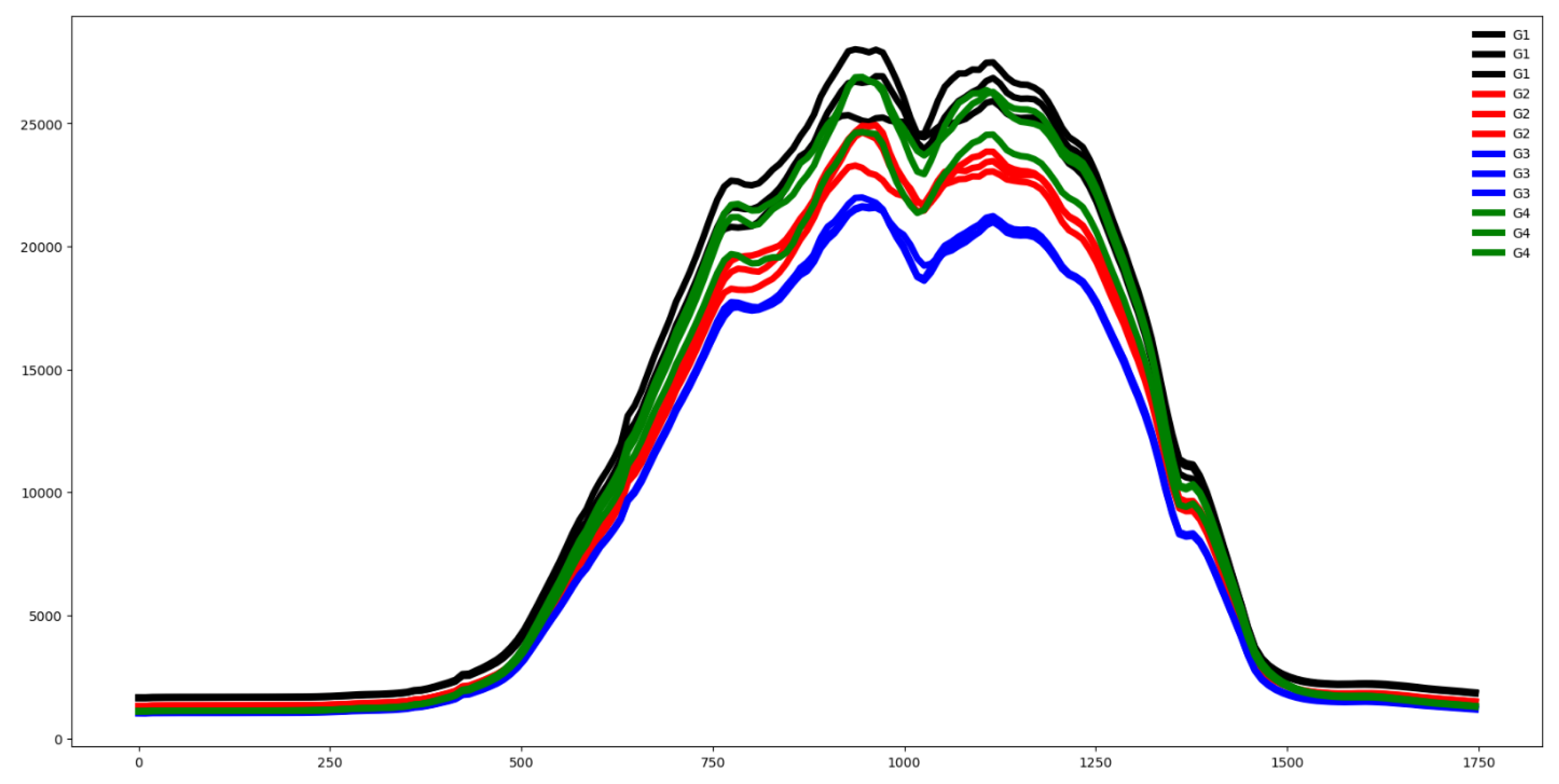

3.2.12. Ethanol Concentration (EC)

The Ethanol Concentration dataset contains raw spectra of water-and-ethanol solutions in 44 unique whisky bottles [32]. The ethanol concentrations are set at 35%, 38%, 40%, and 45%, and the classification task is to determine the alcohol concentration of a sample in an arbitrary bottle. Each instance in the dataset includes three repeated readings from the same bottle and batch of solution. Three batches of each concentration were prepared, with each bottle-batch combination measured three times. For each reading, the bottle was picked up, placed between the light source and spectroscope, and the spectra recorded. Spectra were captured across the full wavelength range of the Stellar-Net BLACKComet-SR spectrometer (226 nm to 1101.5 nm, with a sampling interval of 0.5 nm) over a one-second integration time. To simulate potential real-world conditions for large-scale screening, no special precautions were taken to obtain pristine readings or to replicate the exact light path through the bottle for each repeat reading, aside from avoiding labeled, embossed, or seamed areas on the bottle.

3.2.13. Basic Motions (BM)

This dataset was collected by students at UEA. Data was generated by participants performing four activities while wearing a smart watch, which collected 3D accelerometer and gyroscope data. The dataset consists of four classes: walking, resting, running, and playing badminton. Participants were required to record each motion a total of five times. The sample rate of both sensors was 10 Hz, and each activity was recorded for 10 seconds. The training set consists of 40 instances. Each time series has a length of 100 across 6 channels. The test set includes 40 instances.

3.2.14. Racket Sports (RS)

This dataset was collected by students at UEA. It consists of data recorded while participants made one or two strokes during a game of badminton or squash. The data was captured through a smart watch (Sony Smart Watch 3) worn on the participant’s dominant hand. The watch relayed the x, y, z values for both the gyroscope and the accelerometer. The task is to classify which sport and which stroke the players are making. The data was collected at a rate of 10 Hz over 3 seconds while the participant was playing either a forehand/backhand in squash or a clear/smash in badminton. The training set consists of 151 instances. Each time series has a length of 30 across 6 channels. The test set includes 152 instances.

3.2.15. Libras (LIB)

LIBRAS (“Língua Brasileira de Sinais”) is the official Brazilian sign language. This dataset contains 15 classes, each with 24 instances. Each class represents a hand movement type in LIBRAS. The hand movement is represented by a bi-dimensional curve traced by the hand over a period of time and is extracted from a video. From each video, 45 frames were selected uniformly to extract the hand movement (see [33] for more details). The whole dataset was split equally into training and test sets; thus, both have 180 instances. Each time series has a length of 45 across 2 channels.

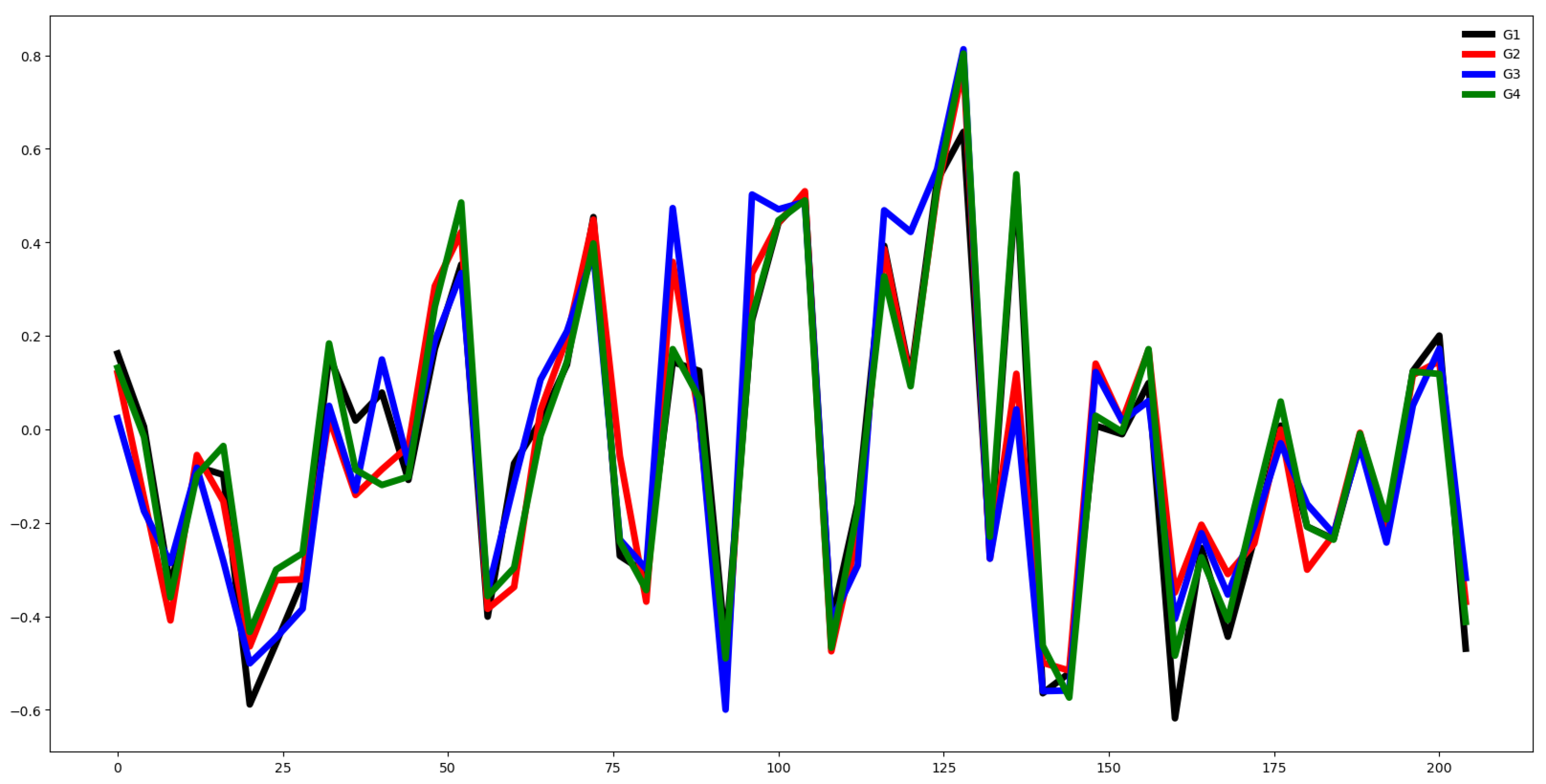

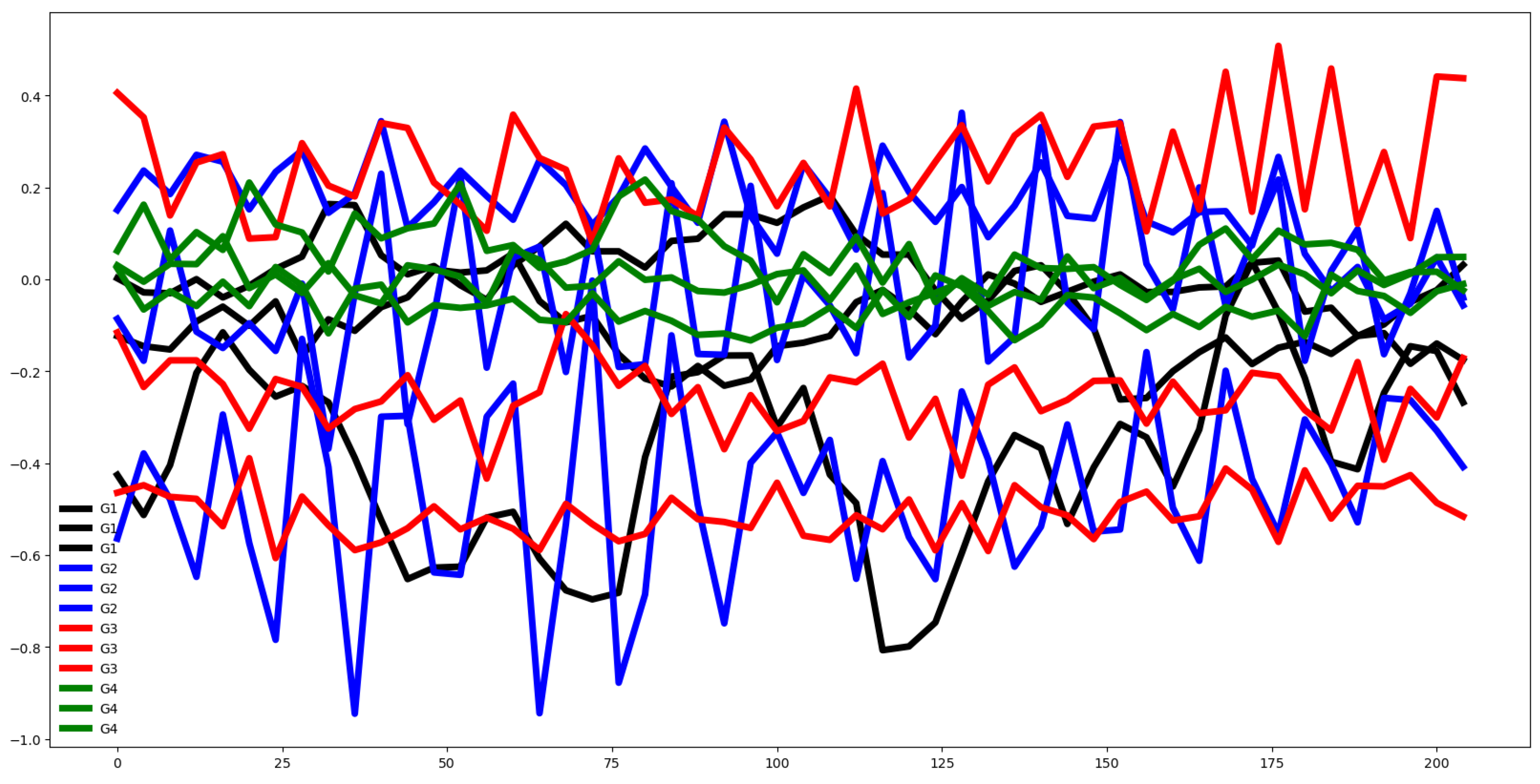

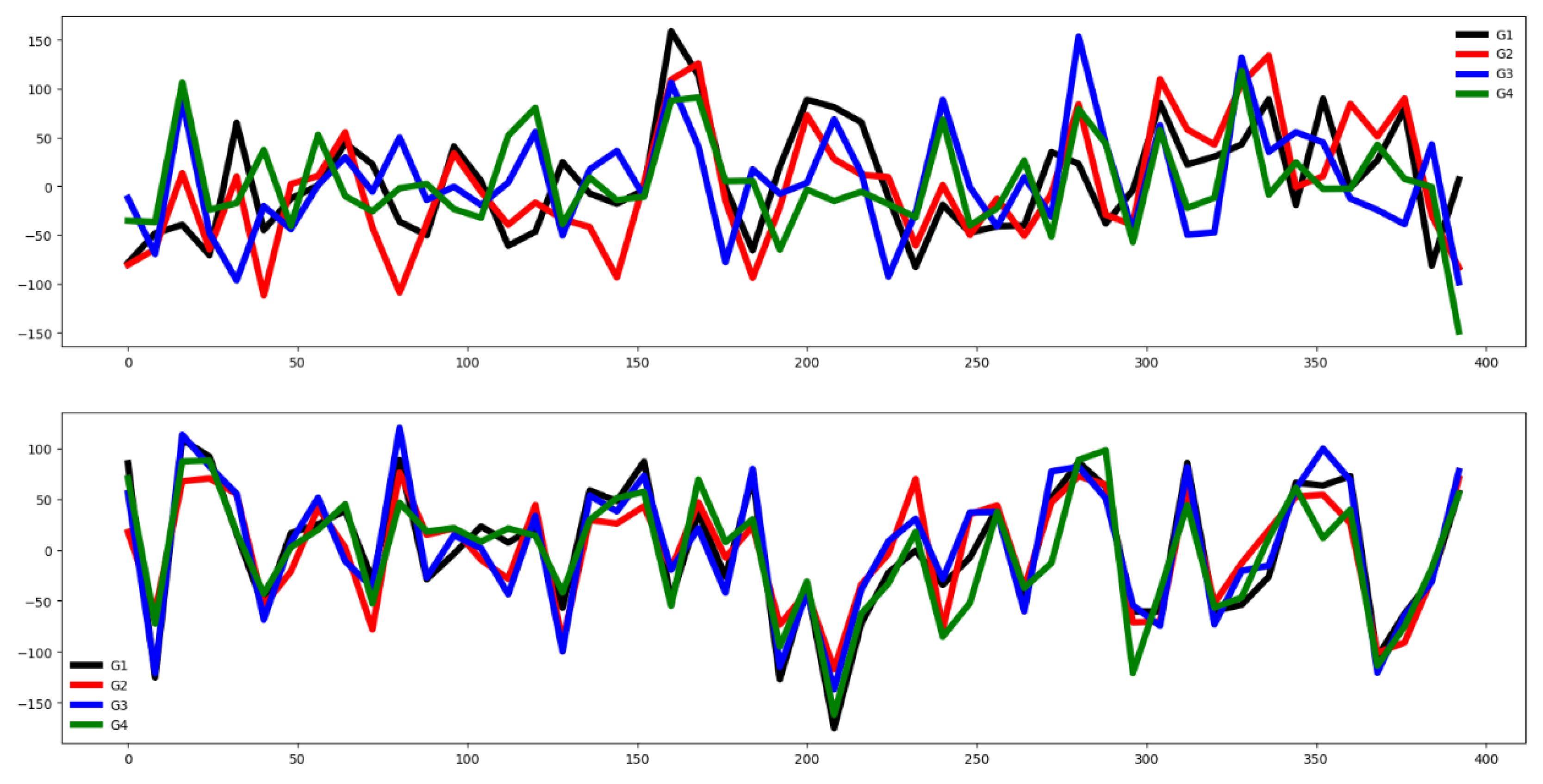

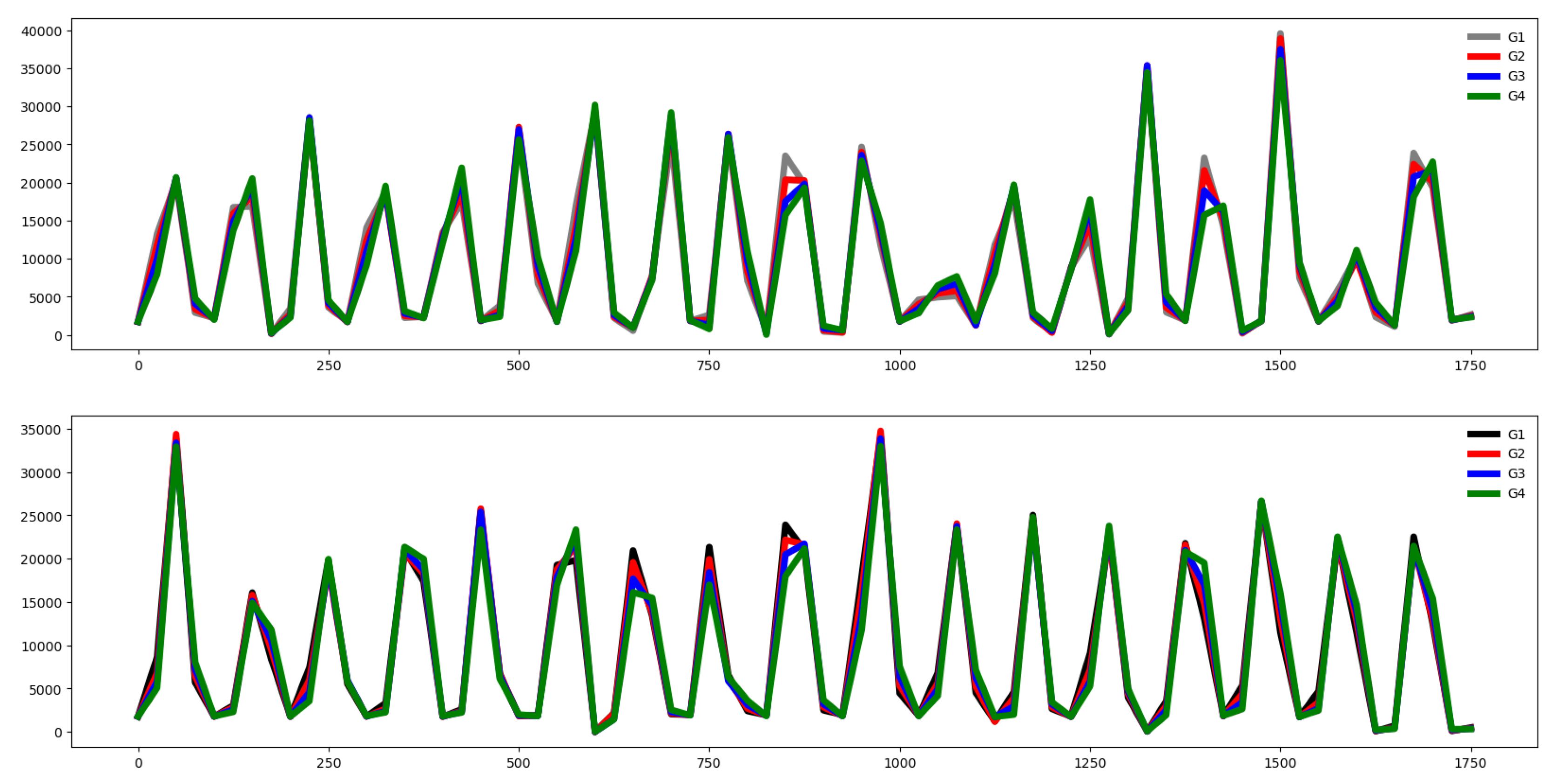

Our research workflow is as follows:

First, we load the dataset from the UEA repository.

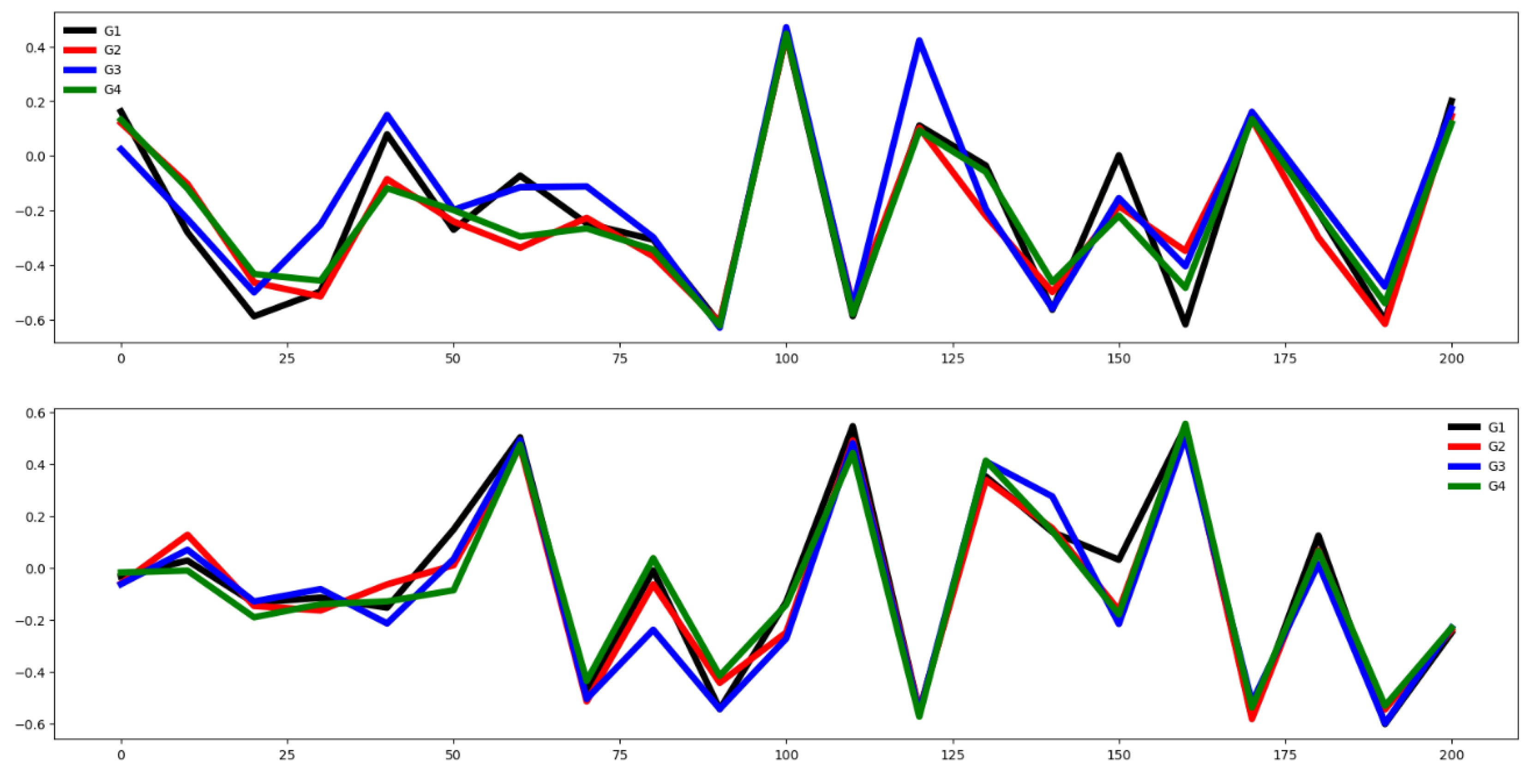

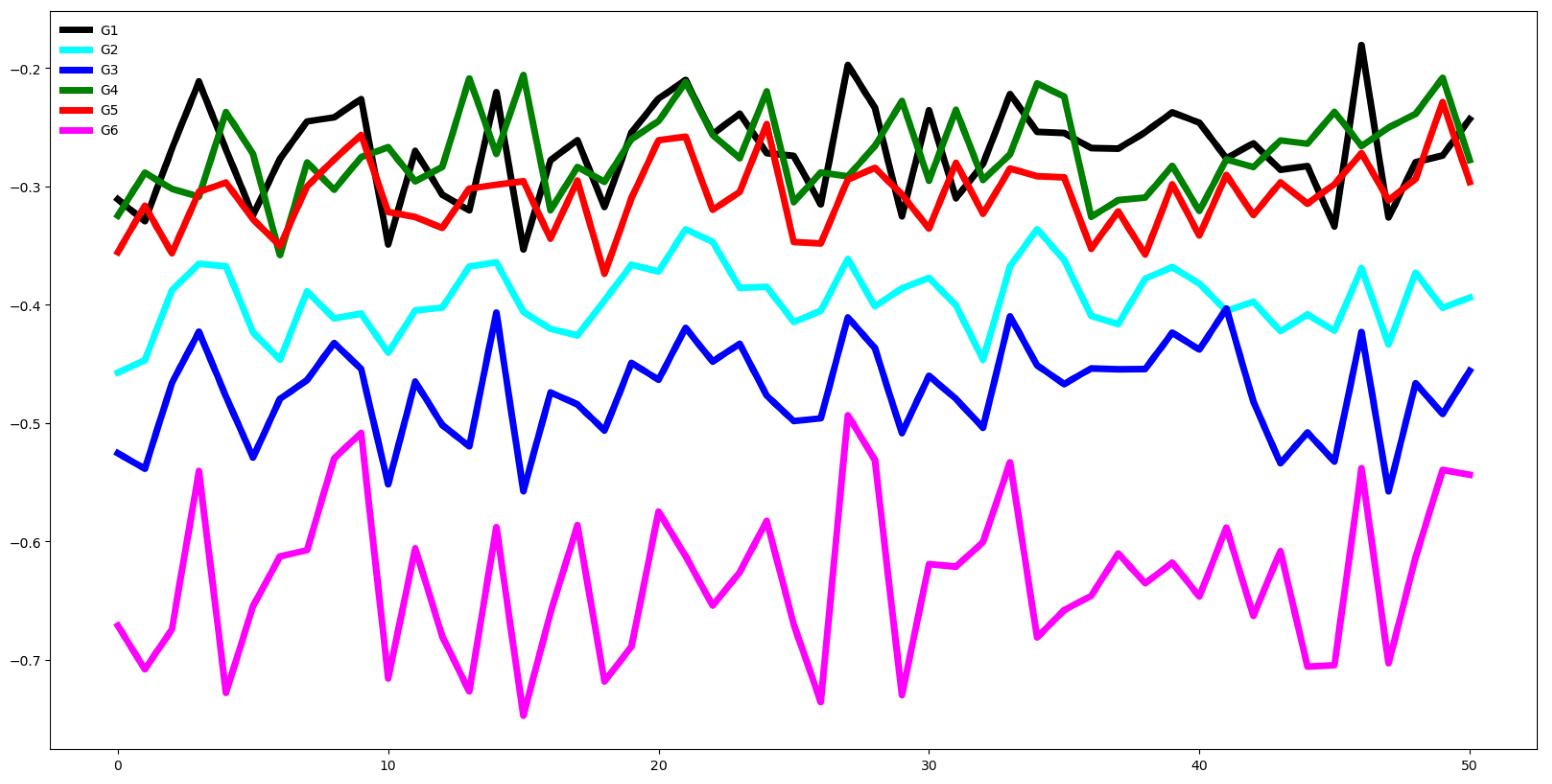

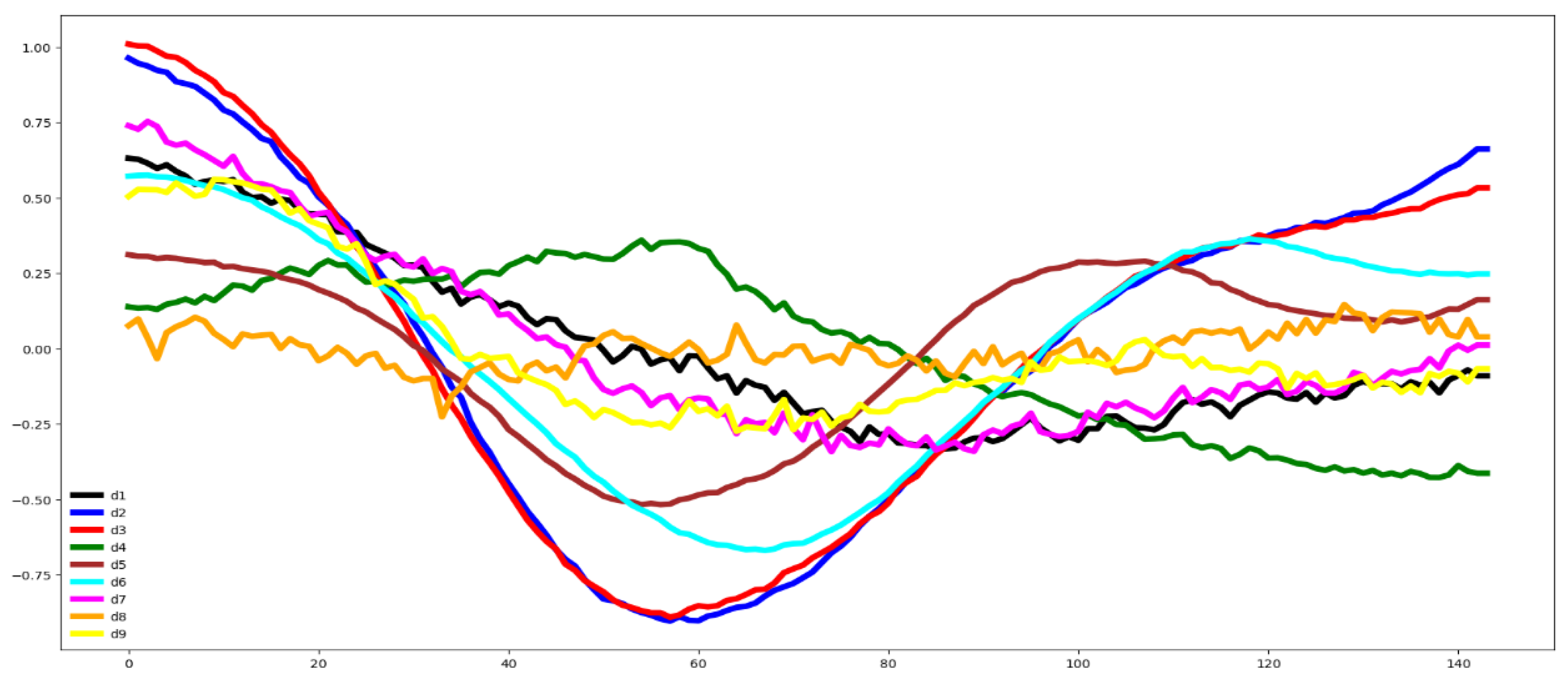

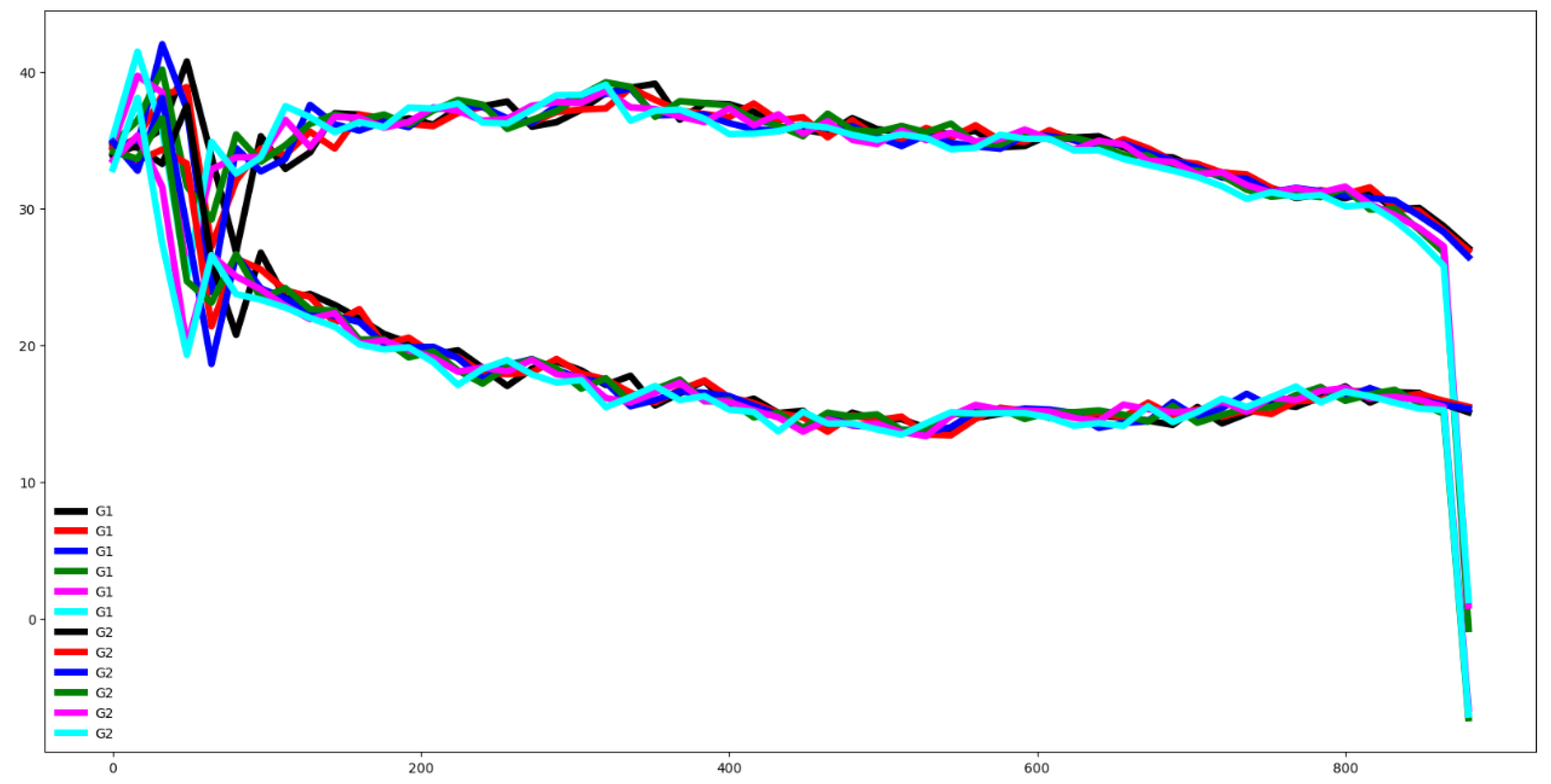

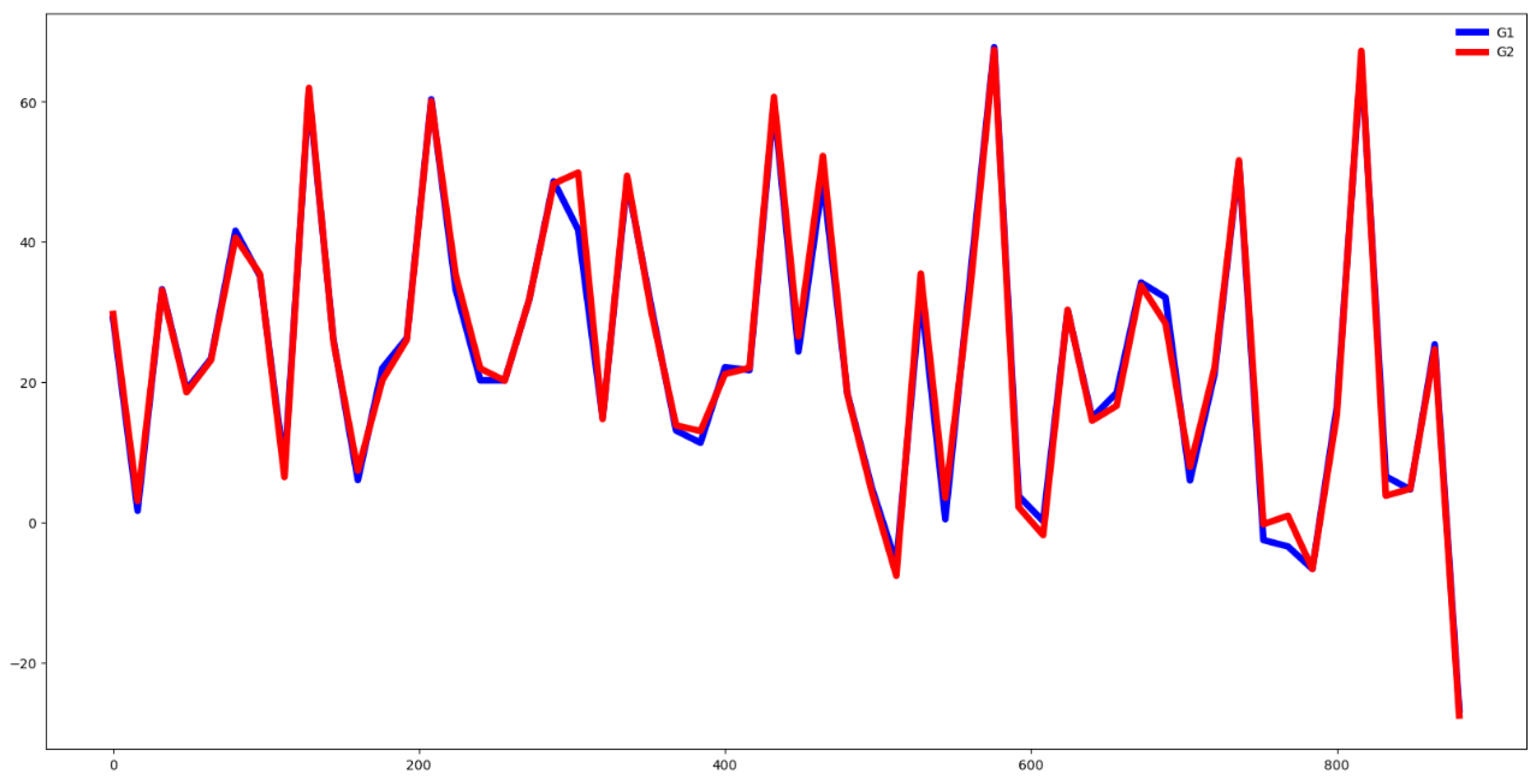

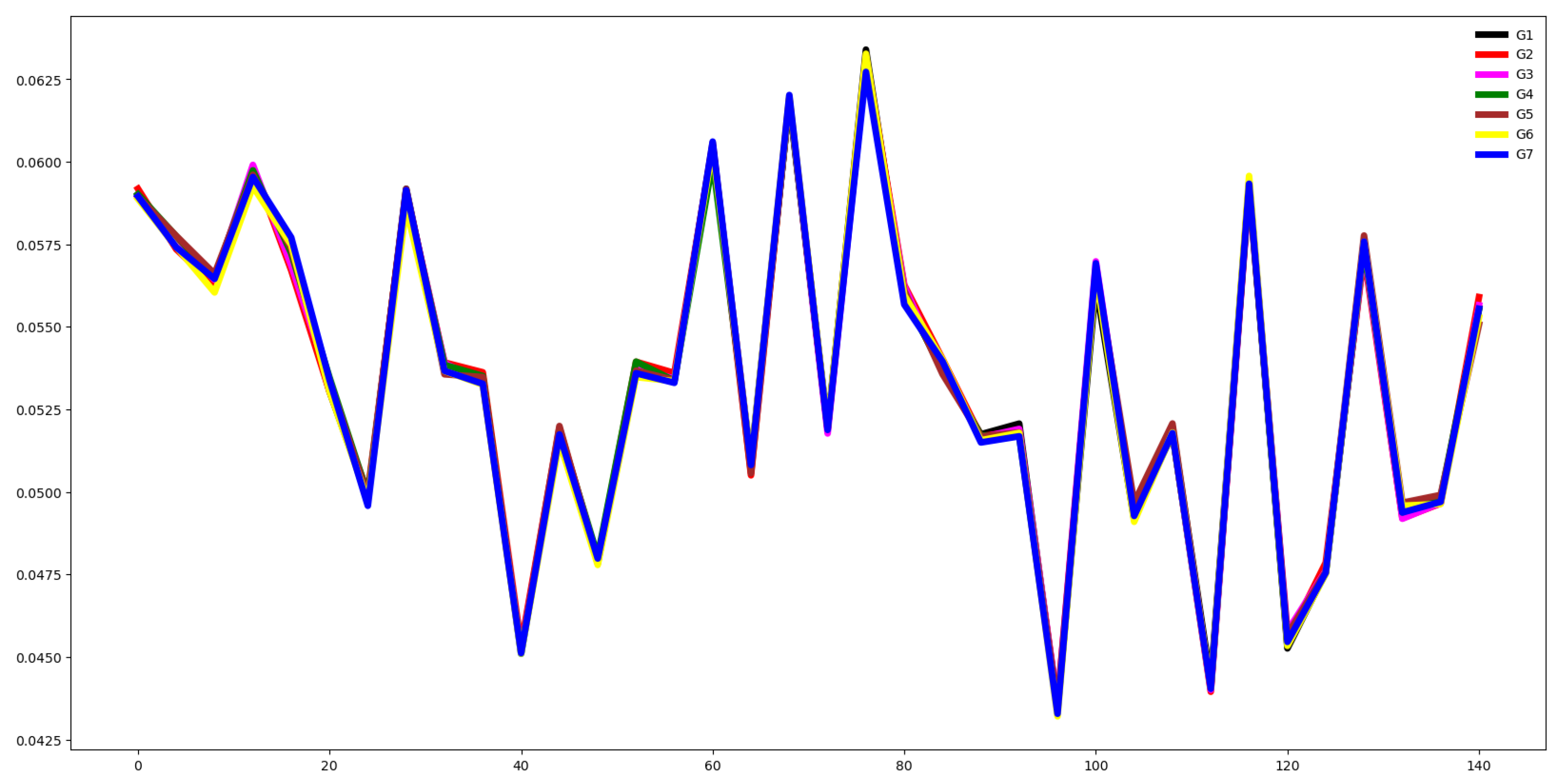

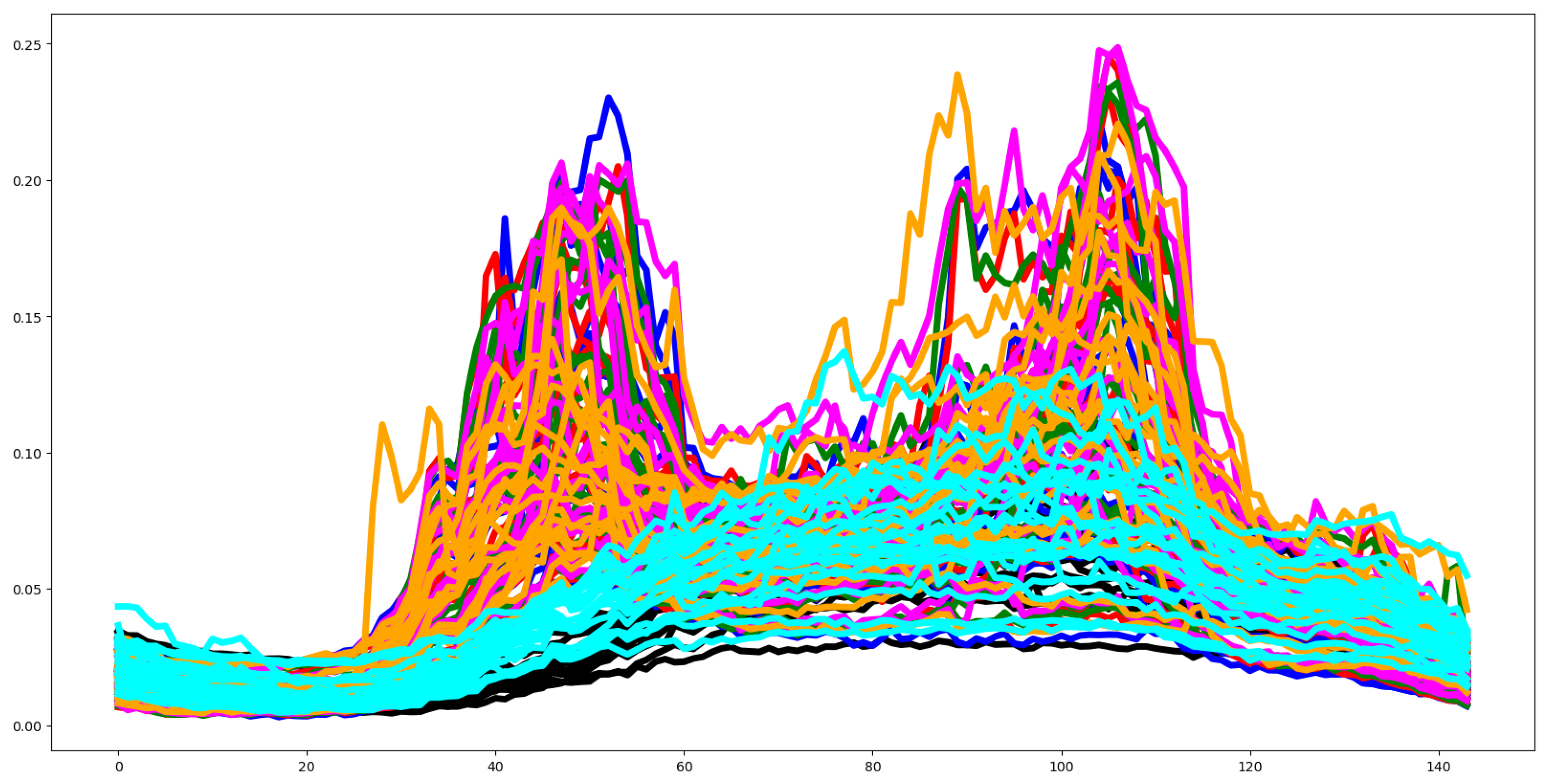

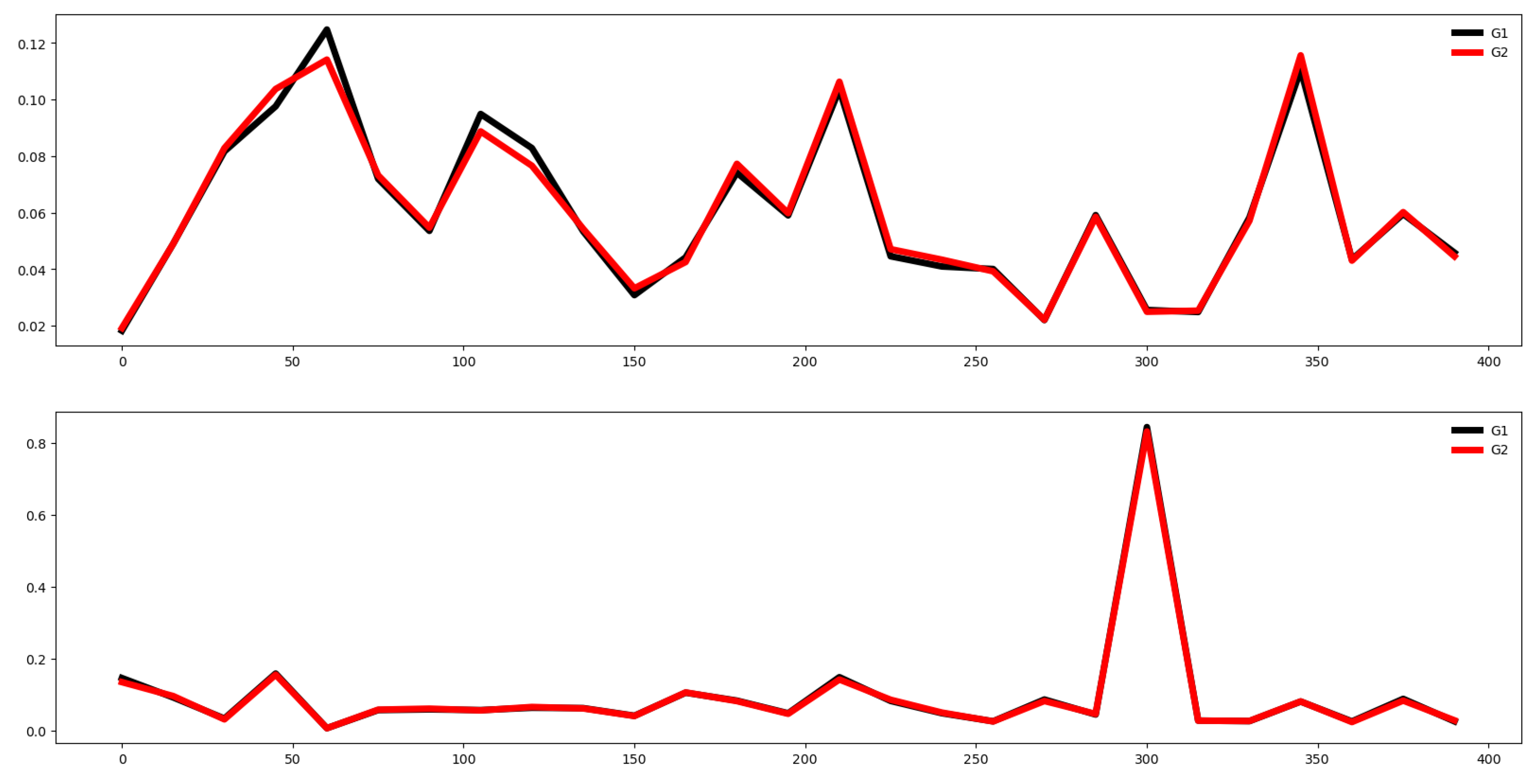

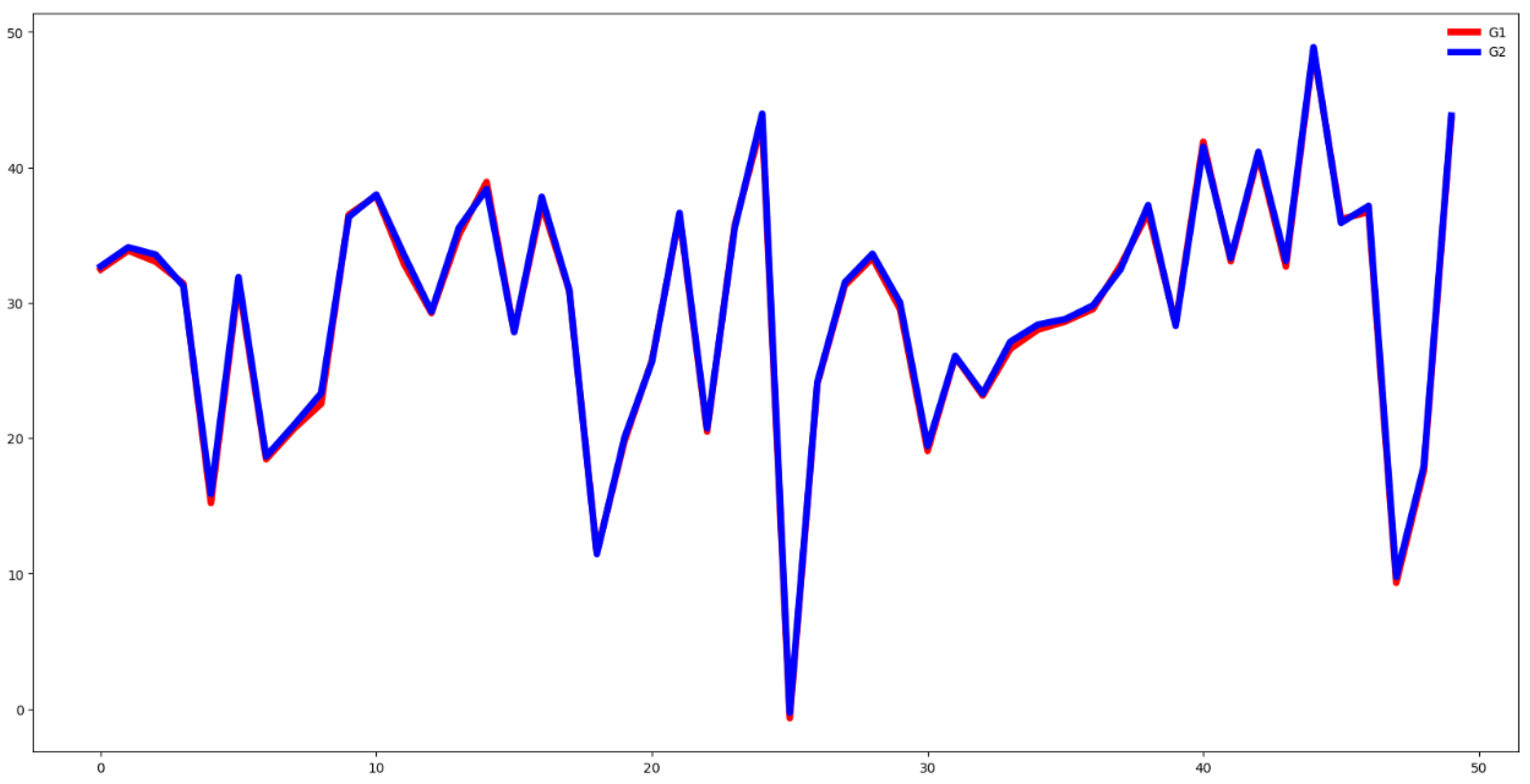

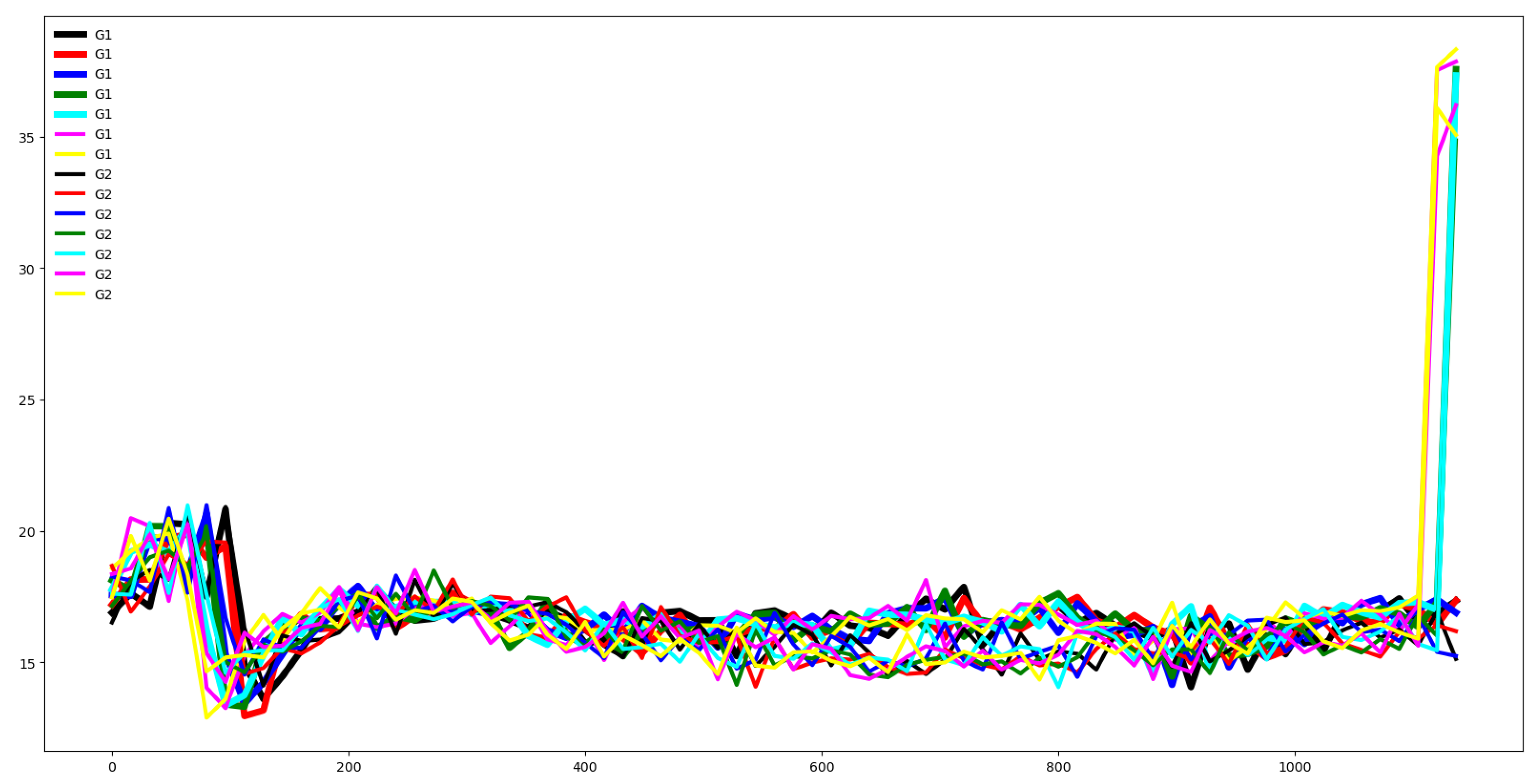

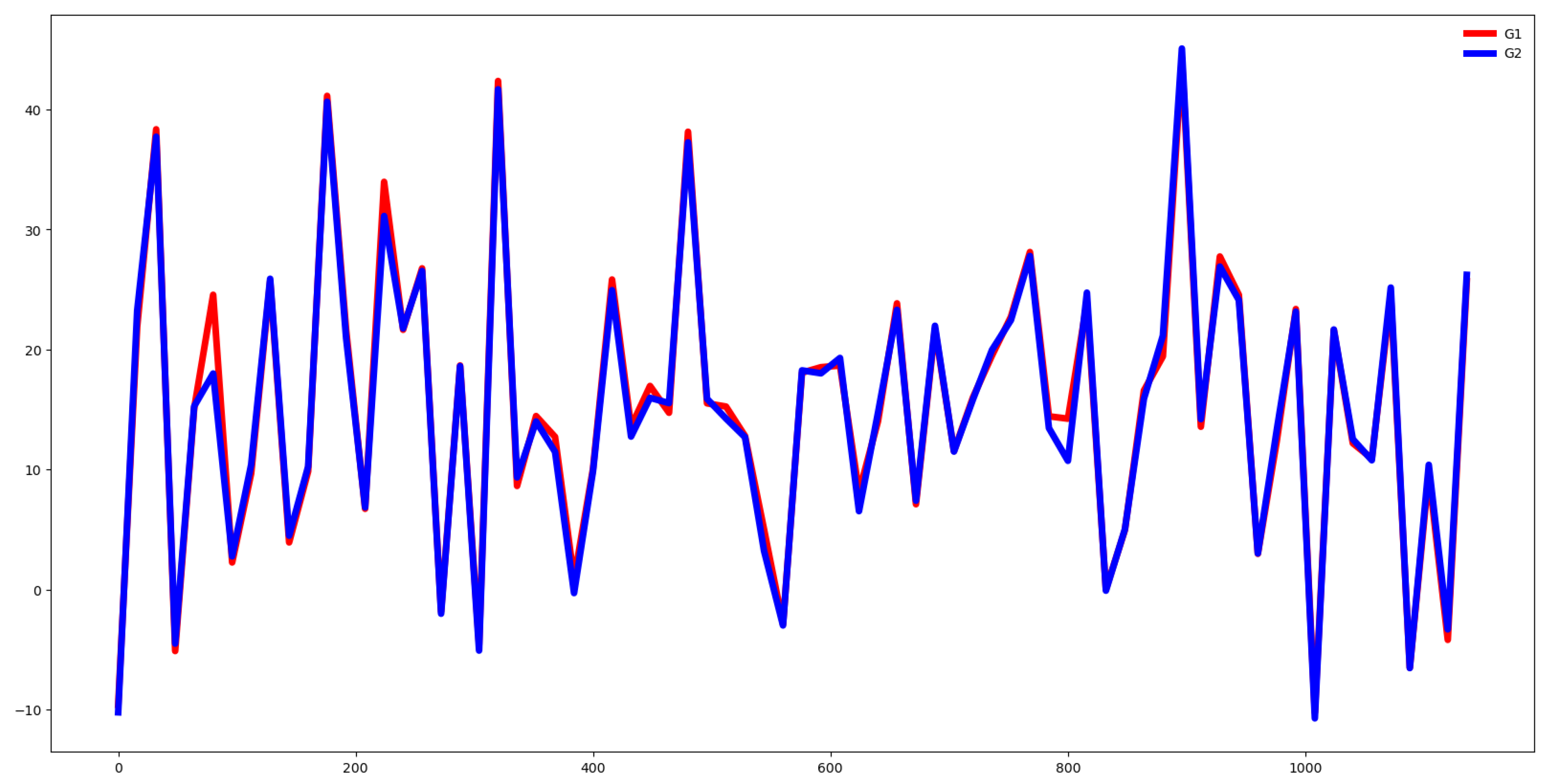

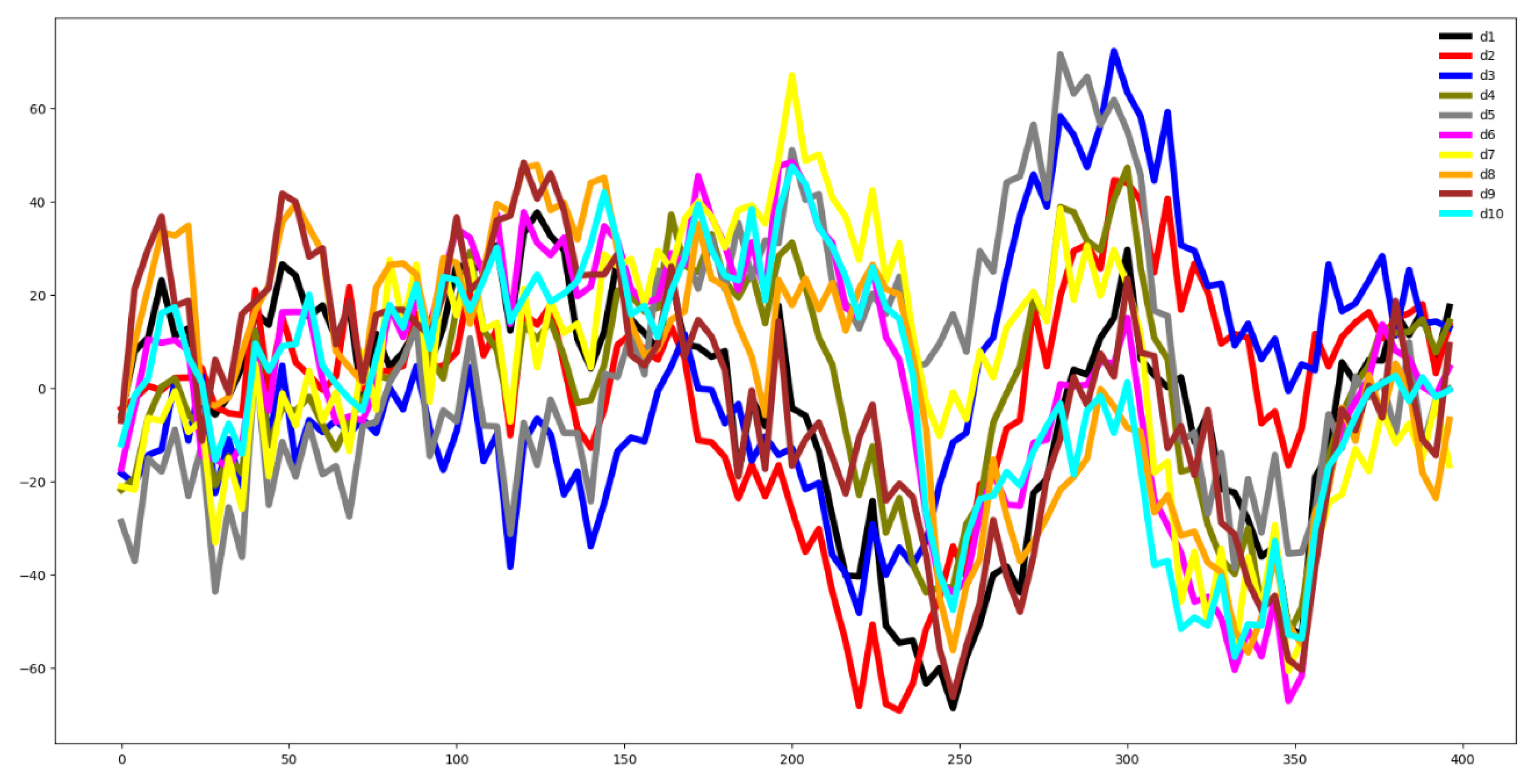

Second, we plot the mean values by channel in each group.

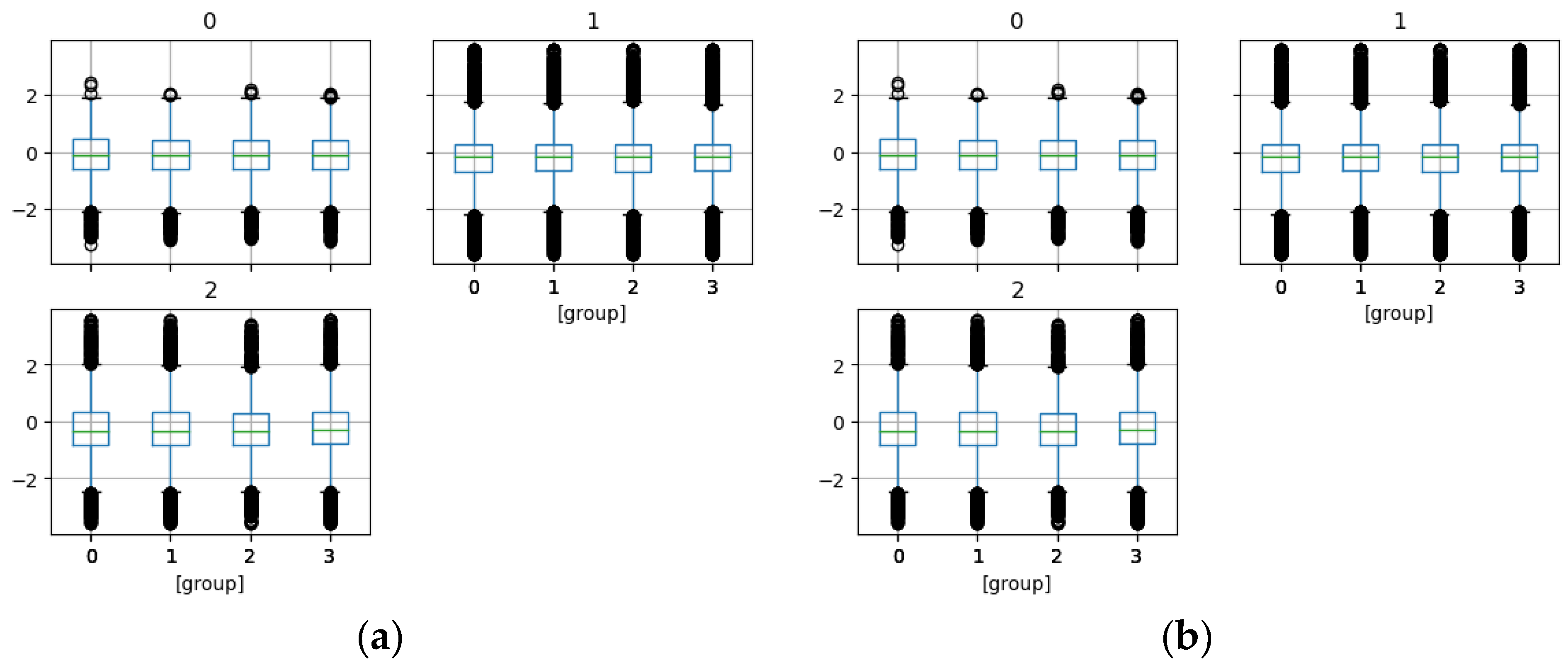

Third, we compute the distance between the groups as the Euclidean distance between the mean vectors of each channel. If this distance is small, the dataset is hard to classify.

Fourth, we carry out feature selection using either the F-test criterion for normalized data or the Mutual Information criterion for unnormalized data.

Fifth, we plot the mean of the time series in each group for both the training and testing sets and compute the Euclidean distance between the mean values of the training and testing sets. If this distance is small, then the distributions of the training and testing sets are quite similar. Otherwise, the distributions are different, and the classifier algorithm will not perform well.

Sixth, stationarity tests and their p-values are computed.

Finally, the classifier algorithm is applied and evaluated.
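The distance computations in the third and fifth steps can be sketched as follows. This is one possible reading of those steps, not the exact code used in our experiments: each group is averaged over its instances to obtain one mean series per channel, and the Euclidean distance is then taken channel by channel. The nested-list data layout and function names are assumptions made for illustration.

```python
import math


def mean_series(instances):
    """Average the instances of one group.

    instances[i][c][t] is the value of instance i, channel c, time step t.
    Returns means[c][t], the mean of channel c at time step t.
    """
    n_instances = len(instances)
    n_channels = len(instances[0])
    return [
        [sum(column) / n_instances
         for column in zip(*(inst[c] for inst in instances))]
        for c in range(n_channels)
    ]


def channel_distances(group_a, group_b):
    """Euclidean distance between the mean series of two groups, per channel."""
    return [
        math.dist(a, b)
        for a, b in zip(mean_series(group_a), mean_series(group_b))
    ]
```

Passing two classes of the training set corresponds to the third step; passing the training and testing instances of the same class corresponds to the fifth step.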

In Table 1, a summary of the datasets is presented in descending order according to the accuracy obtained