1. Introduction

Marine ranching, a cornerstone of China's Blue Granary strategy, has emerged as a transformative approach to modernizing marine fisheries, enhancing aquaculture productivity, and promoting ecological sustainability [1]. The rapid development of related equipment, such as intelligent feeding systems, deep-sea cages, and multi-functional platforms, has significantly improved operational efficiency [2,3]. However, the rapidly growing body of domain-specific knowledge remains fragmented, with critical information dispersed across heterogeneous sources including enterprise records, expert experience, academic literature, and technical standards. This fragmentation impedes intelligent decision-making, real-time monitoring, and knowledge sharing, thereby limiting the full potential of marine ranching industrialization.

A knowledge graph (KG) is a semantic network that uses graph structures to represent relationships between entities, offering advantages in intuitiveness, efficiency, and scalability [4]. The concept was proposed by Google in 2012 and first applied to search engines [5]. KGs have been remarkably successful at integrating domain-specific knowledge and can provide robust support for intelligent applications such as question-answering systems and decision-making analytics. Recent work demonstrates their versatility in manufacturing. Ren et al. [6] automated OPC UA information modeling via a KG to unify heterogeneous equipment data, while Gu et al. [7] integrated geometric and assembly process data through a KG-based semantic model (KG-ASM). For design optimization, Huet et al. [8] proposed a KG-driven design rule recommendation system, and Qin et al. [9] developed a KG-embedded tool for mechanical component design assistance. In quality management, Zhou et al. [10] used manufacturing data to construct a KG for defect root-cause analysis, and Hao [11] established a production-process-supported KG for anomaly detection.

Despite these advancements, existing research predominantly focuses on structured data from product design or assembly processes, neglecting the unique challenges of marine equipment domains, where unstructured text dominates and entities exhibit complex interdependencies. Traditional extraction methods suffer from error propagation and inefficiency in such scenarios, while deep learning models like BERT-BiLSTM-CRF face limitations in parameter efficiency and contextual dependency modeling. Targeted questionnaires completed by diverse users and employees identified several limitations in existing KGs: (1) limited data volume and knowledge scope; (2) ambiguity in the structure of the knowledge framework; (3) low efficiency and accuracy in knowledge extraction; and (4) difficult updates and maintenance. Consequently, there is an urgent need for a specialized KG framework tailored to marine ranching equipment.

This study proposes a novel KG framework to bridge the gap between unstructured marine equipment data and knowledge. The main contributions of this study include:

(1) Hybrid Ontology Design: A combined top-down and bottom-up approach constructs a domain ontology, defining 7 core concepts and 8 semantic relationships.

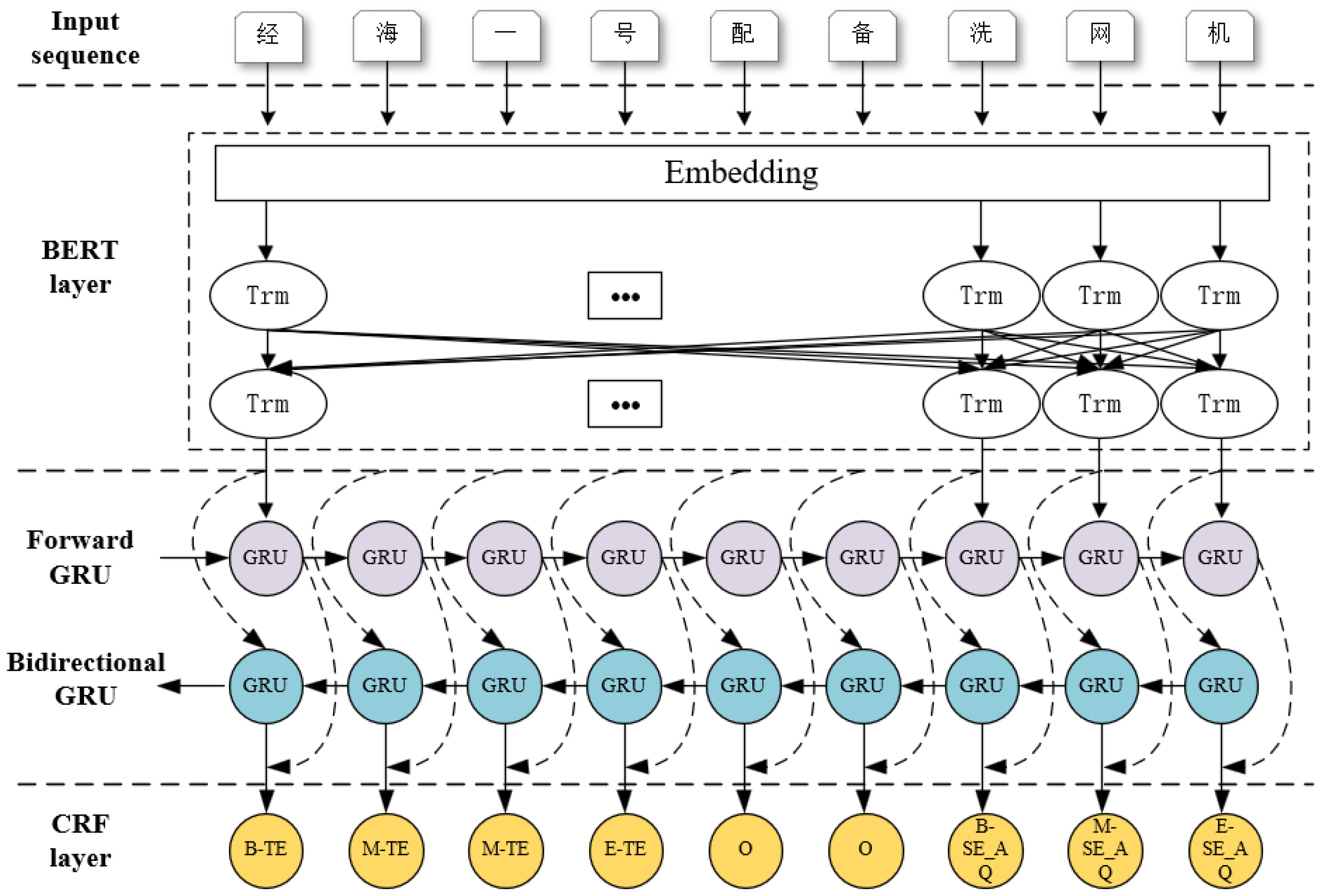

(2) Joint Extraction Model: A BERT-BiGRU-CRF model was developed that integrates BERT's contextual embeddings, BiGRU's parameter-efficient sequence modeling, and CRF's global label optimization. A novel TE+SE+Ri+BMESO tagging strategy resolves multi-relation extraction challenges.

(3) Dynamic Knowledge Storage: The extracted triples are stored in Neo4j, enabling scalable visualization and real-time updates via Cypher queries.

This work pioneers the first KG framework for marine ranching equipment, offering a transferable solution for vertical domains. By transforming fragmented data into structured knowledge, our framework supports intelligent applications including equipment fault diagnosis, maintenance planning, and policy formulation.

The remainder of this paper is structured as follows: Section 2 details the hybrid knowledge graph construction methodology, Section 3 describes the tagging strategy and the BERT-BiGRU-CRF model, Section 4 evaluates the experimental results, and Section 5 concludes with future directions.

2. Hybrid Knowledge Graph Construction Methodology

A KG is essentially a semantic network composed of nodes and edges that links different kinds of information into a relational network based on the connections between things.

KG construction follows one of three approaches: top-down, bottom-up, or a combination of the two. In the top-down approach, the ontology concept layer (i.e., the pattern layer) is constructed first to define the boundary of knowledge extraction, and entities are then added to the knowledge base through graph construction techniques such as knowledge extraction. In the bottom-up approach, entities, relationships, and attributes with high confidence are extracted from the data sources and added to the knowledge base, and concepts are then abstracted upward to complete the pattern layer. The combined approach builds the pattern layer top-down and the data layer bottom-up; newly acquired data are then summarized to update the pattern layer, and entities are expanded on the basis of the updated pattern layer [4].

KGs can be divided into general KGs and vertical KGs. A general KG does not target a specific field; it emphasizes breadth of knowledge and places lower demands on accuracy. DBpedia [12], YAGO [13], Freebase [14], and Wikidata [15] are typical examples. A vertical KG, such as MusicBrainz [16] or IMDB [17], is oriented to a specific field, emphasizes depth of knowledge, and demands professional, accurate knowledge.

The KG of marine ranching equipment is a vertical KG, a type that generally adopts the top-down construction approach. However, as the amount of data grows, updating and maintaining the graph becomes increasingly difficult. Therefore, this study combines the two approaches to construct the KG of marine ranching equipment, which supports large data volumes while ensuring high-quality knowledge.

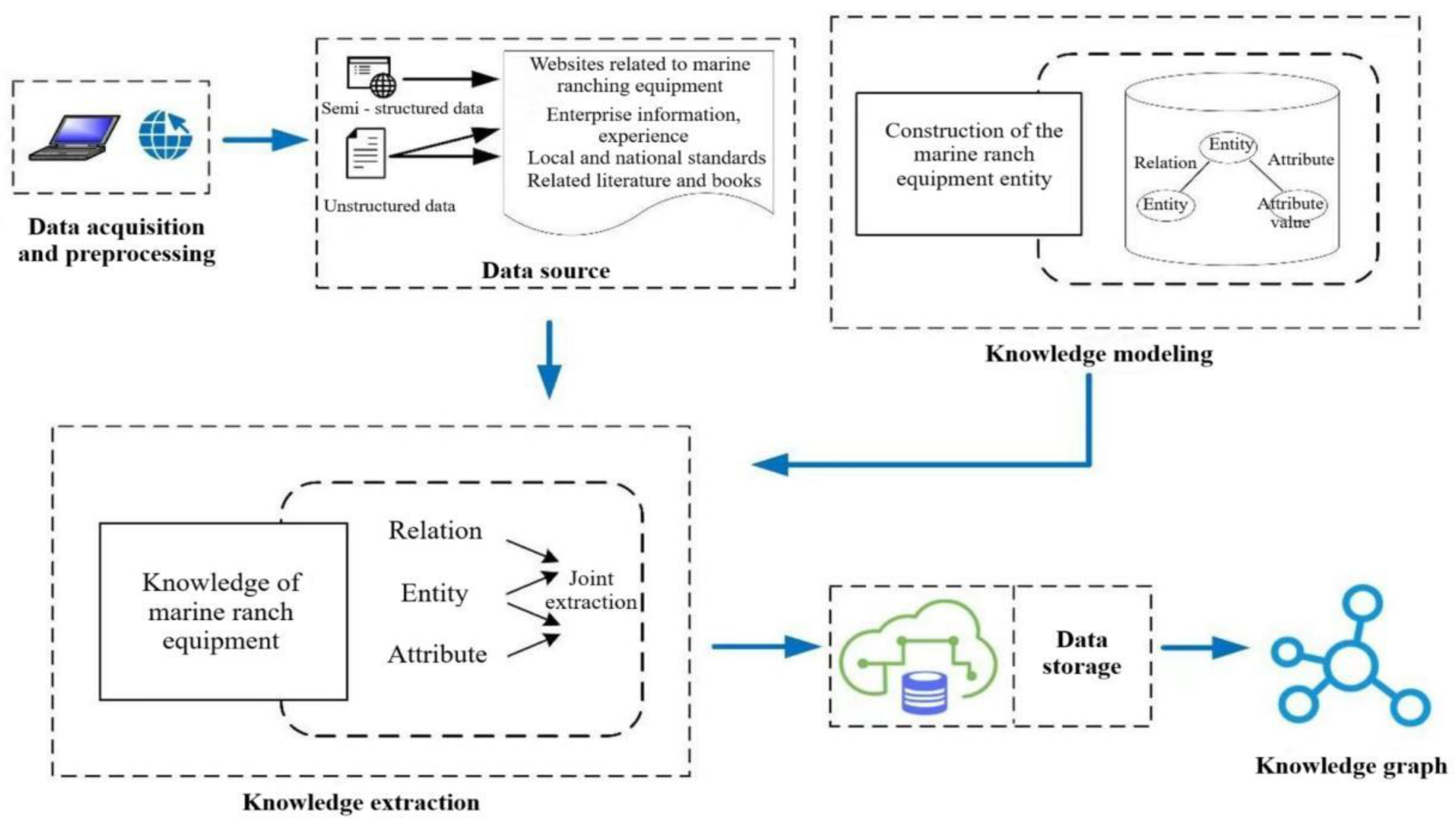

The process comprises data acquisition and preprocessing, knowledge modeling, knowledge extraction, and data storage, as shown in Figure 1.

Figure 1. Construction process of marine ranching equipment KG.

2.1. Data Acquisition and Preprocessing

The primary data sources for constructing the KG include related websites, enterprise production records, expert interviews, local and national standards, and relevant literature and publications. These sources are categorized into semi-structured data and unstructured data. To ensure both the quality and the quantity of the acquired knowledge, distinct acquisition and preprocessing methods are applied to each data type.

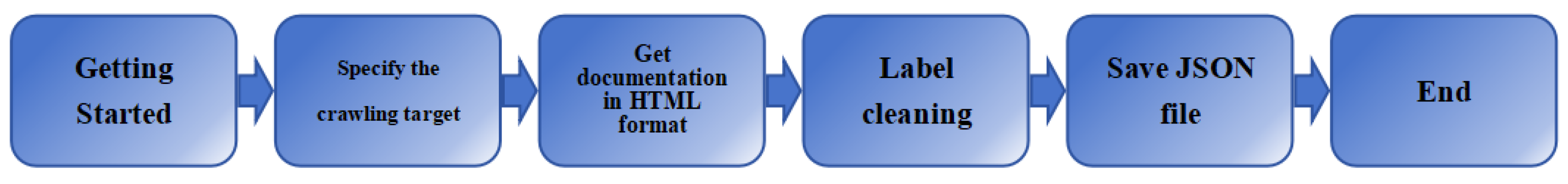

For semi-structured data from websites, the most common acquisition technique is web crawling. After a comprehensive evaluation of popular web crawling frameworks such as Scrapy, PySpider, Crawley, and Portia, Scrapy was selected for its stability, speed, scalability, modular structure, and low inter-module coupling [18]. However, the raw HTML documents obtained often contain irrelevant and redundant content, which may compromise the quality and efficiency of subsequent knowledge extraction. Preprocessing is therefore essential to clean the data and standardize its format, ensuring reliable knowledge sources for graph construction. The specific workflow is illustrated in Figure 2.

Step 1: Collect and analyze the related websites and specify the crawling targets;

Step 2: Write scripts tailored to each website's structure to obtain the raw HTML documents;

Step 3: Use regular expressions to clean the HTML documents, removing advertising, tags, etc.;

Step 4: Write a format conversion script and, combined with manual review (e.g., removing extra spaces and duplicate content), organize the text into JSON documents in the format {"key": "value", "key": [value]}.
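Steps 3 and 4 can be sketched in Python using only the standard library. This is a minimal illustration rather than the scripts used in this study: the function names `clean_html` and `to_record` and the regular expressions are assumptions, and real pages would need site-specific rules plus the manual review described above.

```python
import html
import json
import re


def clean_html(raw: str) -> str:
    """Strip scripts, styles, comments, and tags, then collapse whitespace."""
    text = re.sub(r"(?is)<(script|style)\b.*?>.*?</\1>", " ", raw)
    text = re.sub(r"(?s)<!--.*?-->", " ", text)
    text = re.sub(r"(?s)<[^>]+>", " ", text)
    text = html.unescape(text)  # decode entities such as &amp;
    return re.sub(r"\s+", " ", text).strip()


def to_record(fields: dict) -> str:
    """Serialize cleaned fields as JSON.

    A key with one value is stored as a string; a key with several
    values is stored as an array, matching {"key": "value", "key": [value]}.
    """
    record = {}
    for key, raw_values in fields.items():
        cleaned = [clean_html(v) for v in raw_values]
        cleaned = [v for v in dict.fromkeys(cleaned) if v]  # drop duplicates and empties
        record[key] = cleaned[0] if len(cleaned) == 1 else cleaned
    return json.dumps(record, ensure_ascii=False)


# Hypothetical scraped fragments for one record.
print(to_record({
    "multi-functional platform": ["<b>Geng Hai No. 1</b>"],
    "aquaculture equipment": ["<li>A</li>", "<li>B</li>", "<li>A</li>"],
}))
```

Deduplicating with `dict.fromkeys` keeps the original order of values, which makes the manual review step easier to carry out against the source page.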

Unstructured data such as enterprise data, expert interviews, and relevant literature and books can be divided into electronic text and paper text. Electronic text is obtained by text parsing, and paper text is obtained by OCR. To allow unified downstream processing, the obtained data are cleaned with manual review and converted into JSON files in the same format as above.

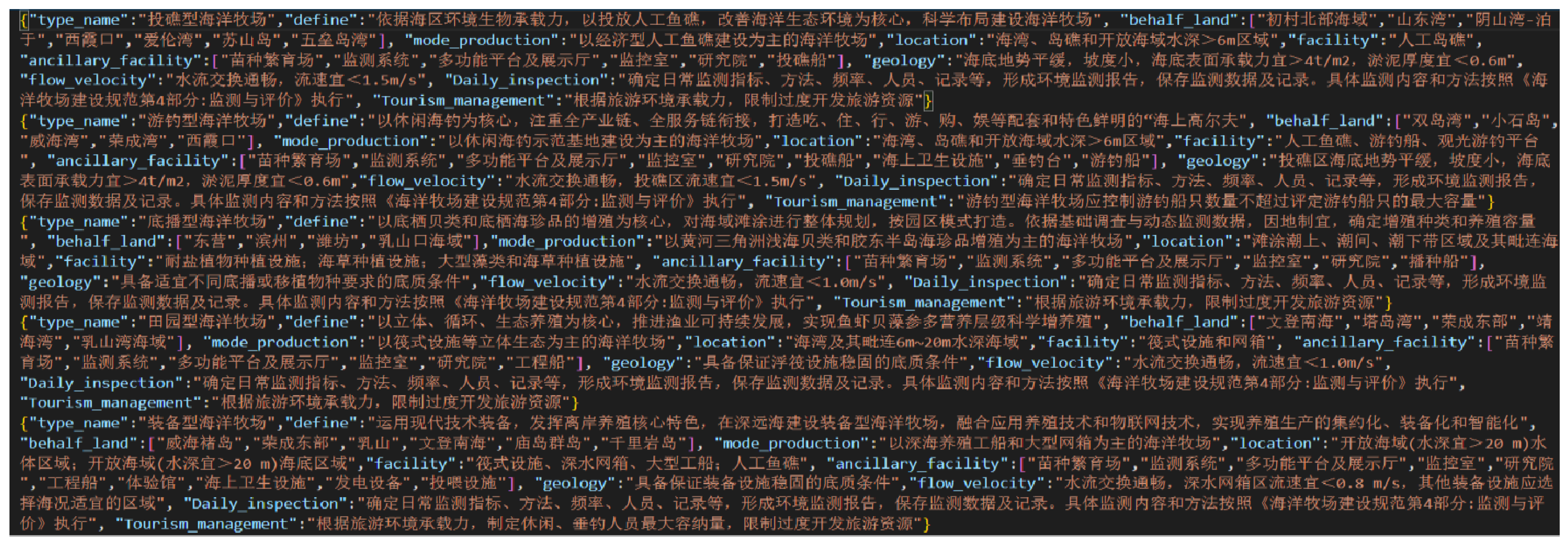

Figure 3 shows the processed data, which contains no irrelevant content and follows fixed rules. A "value" holding a single value is stored as a string, and a "value" holding multiple values is stored as an array. Each record represents a type of marine ranching and its associated attributes and attribute values.

2.2. Knowledge Modeling

Knowledge modeling is both the foundation of KG construction and a prerequisite for building a complete, valuable KG. It organizes the useful knowledge in massive information into a unified knowledge model that is convenient for computer processing [19]. An ontology is a modeling tool for describing domain concepts that helps ensure the graph is well structured and has low redundancy. Therefore, this study adopts the ontology-based modeling method to build the pattern layer of the graph.

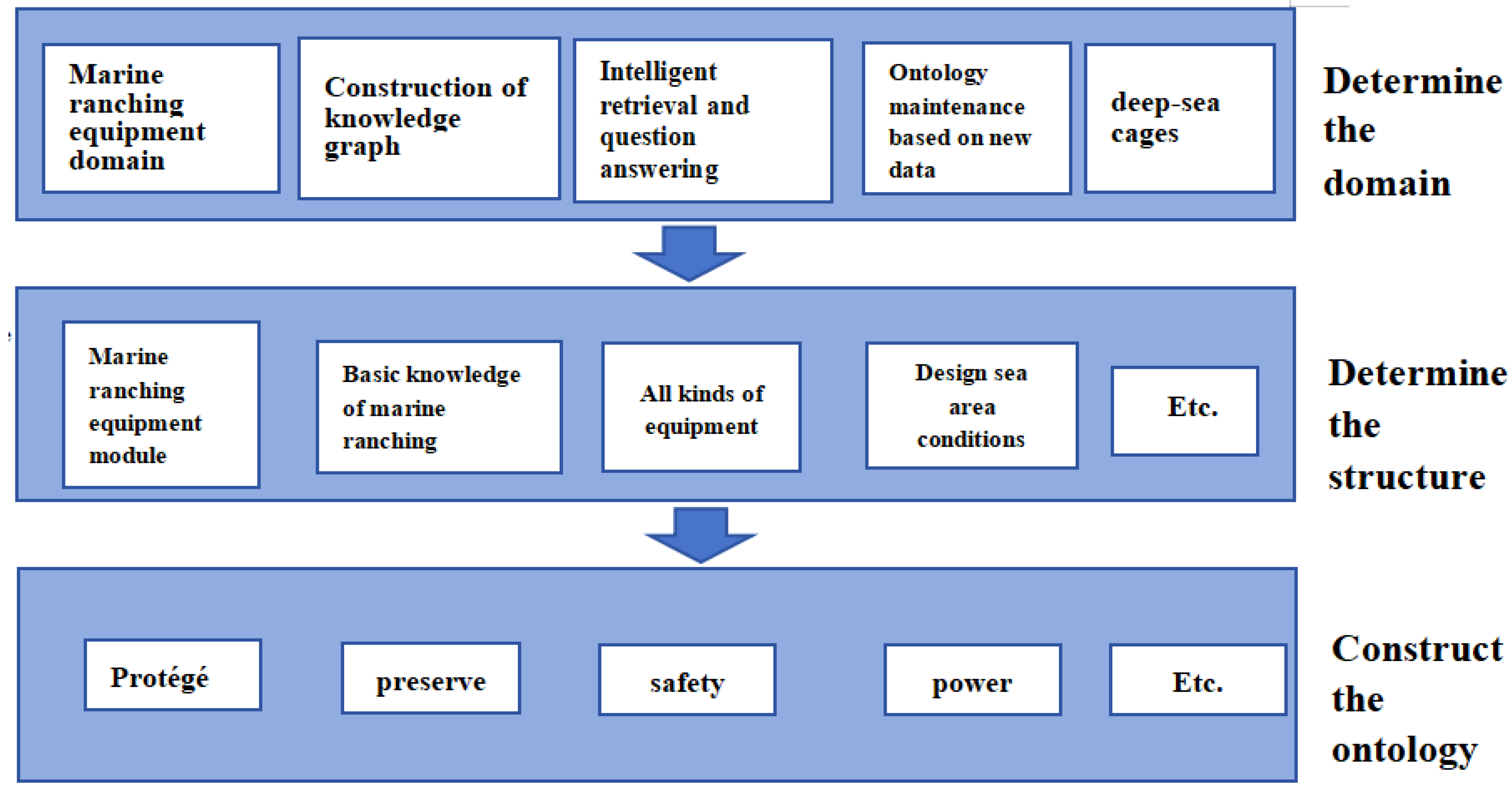

The graph in this study is a vertical KG with high requirements for professional knowledge and accuracy, so the ontology is constructed manually in a top-down manner. Common manual ontology construction methods include the seven-step method [20], the skeleton method [21], and METHONTOLOGY [22]. The seven-step method is currently the most widely used. It is an iterative ontology modeling method, mainly used for constructing domain ontologies, and its advantages are a detailed step description and strong operability. The steps are as follows: (1) determine the domain of the ontology; (2) consider whether existing ontologies can be reused; (3) list the key terms of the ontology; (4) define the classes and the class hierarchy; (5) define the attributes of the classes; (6) define the characteristics of the attributes; (7) create instances. In ontology design there is no single correct construction method, only the method best suited to a given application scenario. Drawing on ontology construction methods from other fields and on the application scenario of marine ranching equipment, this study optimized the seven-step method and obtained the construction process for the marine ranching equipment domain ontology shown in Figure 4.

2.2.1. Determine the Domain of the Ontology

Protégé [23] is an open-source, Java-based ontology editing tool developed by the Stanford Center for Biomedical Informatics Research. When building an ontology with Protégé, the primary task is to determine the domain of the ontology: what domain it covers, what its purpose is, what scenarios it will be applied to, and how it will be maintained. In this study, the ontology covers the field of marine ranching equipment and is mainly used for constructing the KG. The data in the ontology will be used for intelligent retrieval and question answering. It is maintained mainly by updating classes, relationships, and attributes based on the induction and summary of new data. In addition, listing the key concepts and terms of the domain gives users and builders a clearer understanding of the entire ontology. Some of the key concepts and terms are shown in Table 1.

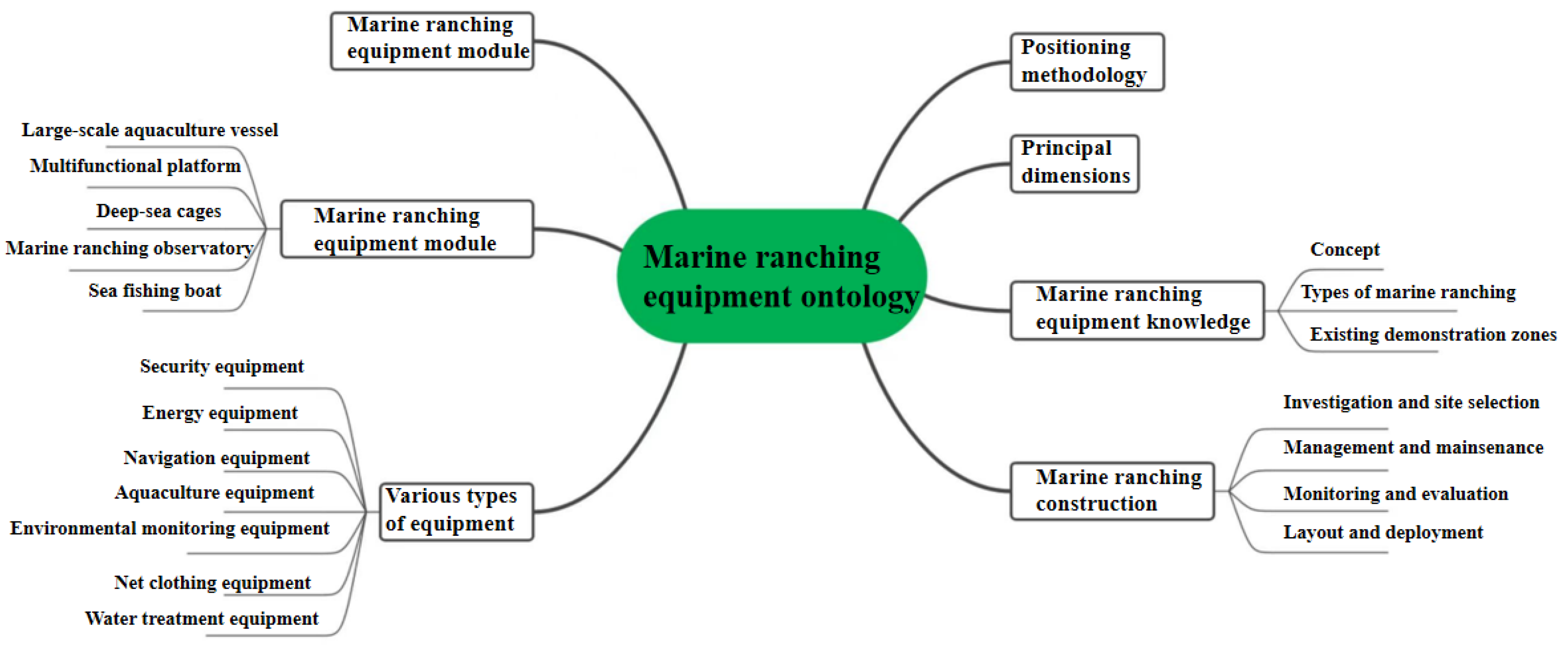

2.2.2. Determine the Structure and Related Elements

The concepts, attributes, and relations of the marine ranching equipment ontology must be designed comprehensively. According to field investigation and relevant papers, marine ranching equipment can be divided into five equipment modules: multi-functional platforms, deep-sea cages, marine ranching observatories, sea fishing boats, and large-scale aquaculture vessels [1]. Based on these five modules and on the content and characteristics of the existing data, four further parent concepts are determined: various types of equipment, marine design criteria, positioning methodology, and principal dimensions. The concept of various types of equipment includes seven sub-concepts, such as security equipment, energy equipment, aquaculture equipment, and navigation equipment. To further increase the amount of graph data and keep pace with the trend toward intelligent marine ranching, this study enriches the ontology with two additional parent concepts: marine ranching equipment knowledge and marine ranching construction. Marine ranching equipment knowledge mainly covers existing marine ranching demonstration areas and introductions to the various types of marine ranching. Marine ranching construction draws on local standards, including construction norms for monitoring and evaluation and for layout and distribution. Figure 5 shows the structure.

Because each concept has different features and corresponding data, attributes need to be defined. Table 2 lists some attributes of the marine ranching equipment ontology.

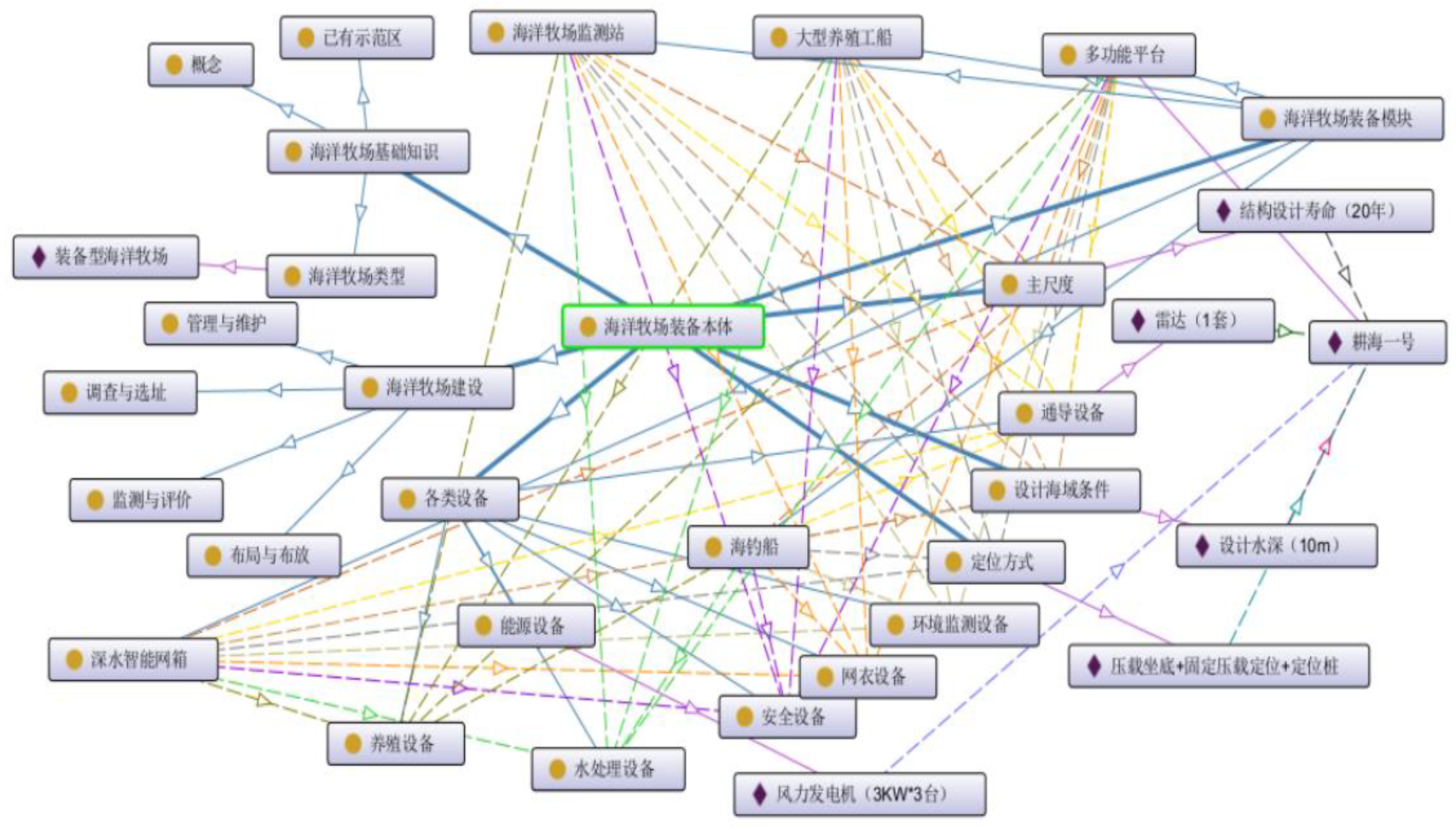

In the above ontology structure, in addition to the hierarchical relations between concepts, there are semantic relations among the entities contained in the sub-concepts. For example, there is a use_condition relationship between the multi-functional platform ("Geng Hai No. 1") and the marine design criteria ("designed water depth (10m)"), and an aquaculture relationship between the deep-sea cage ("Jing Hai No. 1") and the aquaculture equipment ("Automatic bait feeder (1 set)"). The category of each relationship is the same as its range. Table 3 shows the semantic relationships of the marine ranching equipment ontology based on the concepts designed above.
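Because the category of a relationship coincides with its range, the relation of a triple can be looked up from the concept of its tail entity. The Python sketch below illustrates this idea under stated assumptions: it includes only the two relationships named in the text (the full set is given in Table 3), and the names `RELATION_BY_RANGE` and `relation_for` are hypothetical.

```python
from typing import Optional

# Range concept -> relationship name. Only the two pairs mentioned in the
# text are listed; the remaining relationships are defined in Table 3.
RELATION_BY_RANGE = {
    "marine design criteria": "use_condition",
    "aquaculture equipment": "aquaculture",
}


def relation_for(tail_concept: str) -> Optional[str]:
    """Return the relationship implied by the tail entity's concept, if known."""
    return RELATION_BY_RANGE.get(tail_concept)


print(relation_for("marine design criteria"))
```

This one-to-one correspondence is what later allows relation extraction to be reduced to recognizing the type of each secondary entity.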

2.2.3. Marine Ranching Equipment Ontology Construction

Finally, the ontology modeling tool Protégé was used to build the ontology of marine ranching equipment. The concepts and related elements defined above, together with some instances, were added to complete the knowledge modeling of the graph, as shown in Figure 6.

2.3. Knowledge Extraction

Knowledge extraction aims to extract triples from the different types of acquired data, providing the knowledge needed to construct the KG. It comprises three tasks: entity, relation, and attribute extraction. Entity extraction is the most basic and critical step, and deep learning-based methods are currently the most popular; common models include BiGRU-CRF [24] and BiLSTM-CRF [25]. In this study, entity extraction must identify marine ranching equipment entities in the text, such as "Geng Hai No. 1" (a marine ranching equipment module instance) and "equipped marine ranching type" (a marine ranching type instance). Relation extraction extracts the relationships between entities on the basis of entity extraction. Attribute extraction extracts entity attribute information, such as the "equipped marine ranching type" of "Geng Hai No. 1", and generally treats an attribute as a relationship between an entity and an attribute value [26].

The data to be extracted include semi-structured and unstructured data, and the extraction method differs by data type. For semi-structured data, rule-based scripts perform the joint extraction of entities and attributes. For unstructured data, a deep learning model performs the joint extraction of entities and relations. The specific extraction model is described in Section 3.

2.4. Knowledge Storage

Knowledge storage should take the application scenario and data scale into account and then select an appropriate storage mode for the structured knowledge, enabling efficient data management and analysis. By storage structure, knowledge storage falls into two kinds: table-based and graph-based. After comprehensive analysis, this study adopts graph-based knowledge storage.

Currently, the predominant graph database systems include HyperGraphDB, OrientDB, and Neo4j. Neo4j is the most popular among them; it can store and query the entities, attributes, and relationships in a knowledge graph and supports applications that operate on and analyze the graph [27]. Neo4j is a property graph database structured around four core components: labels, nodes, relations, and attributes. The role of each component and the object it describes are given in Table 4. Compared with alternative graph database systems, Neo4j offers superior scalability, the capacity to accommodate millions of nodes on standard hardware, the Cypher query language, and compatibility with many popular programming languages. Consequently, Neo4j was chosen as the database platform for storing and maintaining the graph in this study.

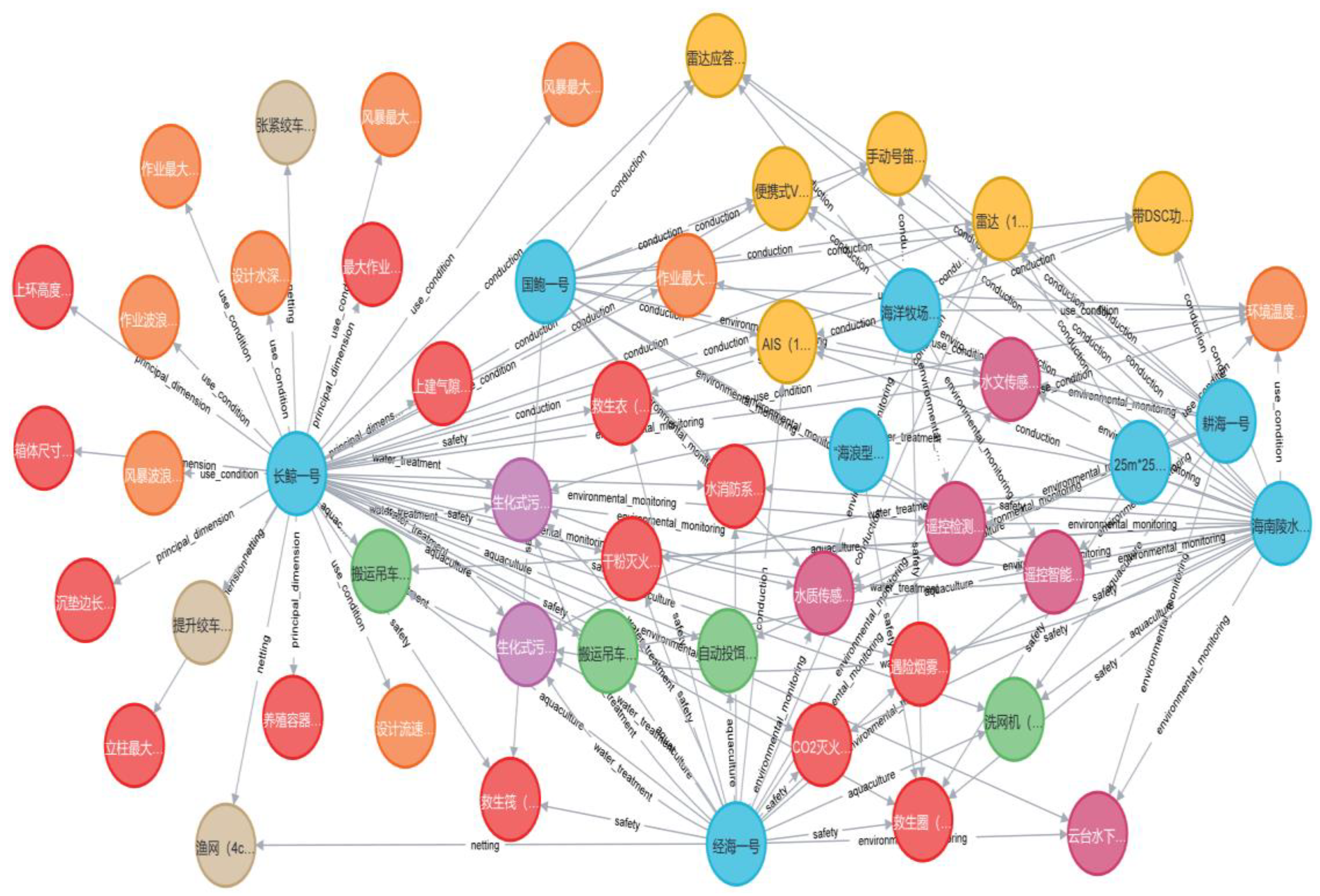

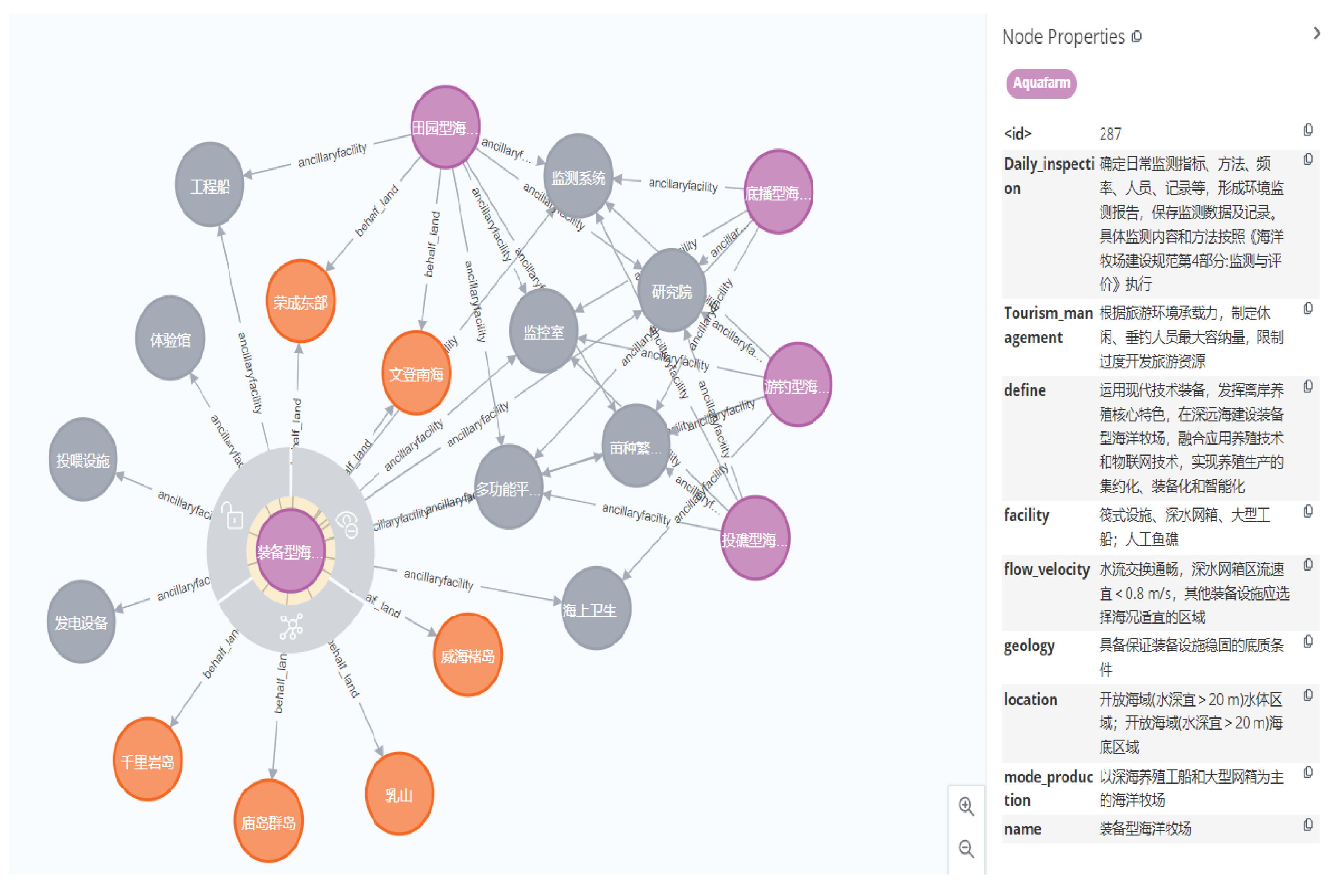

Batch import of triples, such as (entity, relationship, entity) and (entity, attribute, attribute value), is implemented with scripts based on the py2neo library in Python. The resulting Neo4j-based knowledge graph contains 2,153 nodes and 3,872 edges. Figure 7 shows part of its content.

In Figure 7, nodes of different colors correspond to instances of different concepts, while the edges linking them represent the relationships between those instances. Owing to the openness of the KG and the scalability of the Neo4j database, the KG established in this research can be systematically enriched and extended through Cypher statements. This will, in turn, provide a robust foundation for subsequent applications in equipment fault diagnosis, maintenance planning, and policy formulation.
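One way to prepare such an import is to turn each triple into a parameterized Cypher `MERGE` statement, so that re-importing the same triple does not create duplicate nodes or edges. The following Python sketch is an illustration under assumptions, not the import script used in this study: the function names and the `name` property are hypothetical, and the statements would still need to be executed against a database (for example through py2neo's `Graph.run`, as noted in the comment).

```python
def quote_name(name: str) -> str:
    """Backtick-quote a label or relationship type for use in Cypher."""
    return "`" + name.replace("`", "``") + "`"


def triple_to_cypher(head, head_label, relation, tail, tail_label):
    """Build an idempotent MERGE statement and its parameters for one triple.

    Labels and relationship types cannot be passed as Cypher parameters,
    so they are quoted into the query text; entity names stay parameters.
    """
    query = (
        f"MERGE (h:{quote_name(head_label)} {{name: $head}}) "
        f"MERGE (t:{quote_name(tail_label)} {{name: $tail}}) "
        f"MERGE (h)-[:{quote_name(relation)}]->(t)"
    )
    return query, {"head": head, "tail": tail}


query, params = triple_to_cypher(
    "Geng Hai No. 1", "multi-functional platform",
    "use_condition",
    "designed water depth (10m)", "marine design criteria",
)
print(query)
# With a running database, the statement could be sent with py2neo, e.g.
# graph.run(query, **params), where graph is a py2neo Graph (assumed setup).
```

Using `MERGE` rather than `CREATE` is a design choice that suits incremental enrichment, since new batches can overlap with data already in the graph.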

3. Joint Extraction of Knowledge in the Field of Marine Ranching Equipment

Knowledge extraction aims to extract triples from the different types of acquired data, providing the knowledge needed to construct the KG. As mentioned above, rule-based joint extraction is used for semi-structured data, and joint extraction based on a deep learning model is used for unstructured data.

3.1. Rule-Based Joint Extraction of Entity Attributes

As depicted in Figure 3, the dataset processed in the preceding section is semi-structured and adheres to specific rules. Within each pair of curly braces, the first key-value pair gives the category to which the entity belongs and the entity's name. The subsequent key-value pairs are stored in the format "attribute": "attribute value", and each pertains to the entity identified in the first pair. Empirical validation confirmed that this rule supports the extraction of entity-attribute-attribute value triples (e.g., marine ranching equipment entity-attribute 1-attribute value 1; marine ranching equipment entity-attribute 2-attribute value 2; ...; marine ranching equipment entity-attribute n-attribute value n).

To enhance the presentation and utility of the KG, this study normalizes multi-valued attributes into entities. A segment of the KG is illustrated in Figure 8.
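The rule described above can be sketched in a few lines of Python. The record below is hypothetical and only mimics the described layout (first pair: category and entity name; later pairs: attributes), and the function name `extract_attribute_triples` is an assumption. Multi-valued attributes are expanded into one triple per value, which is one simple way to treat each value as its own node.

```python
import json


def extract_attribute_triples(record: dict):
    """Return (category, entity, triples) for one JSON record.

    The first key-value pair is read as (category, entity name); every
    later pair yields one (entity, attribute, value) triple per value.
    """
    items = list(record.items())  # dicts preserve the order of the JSON text
    if not items:
        raise ValueError("empty record")
    category, entity = items[0]
    triples = []
    for attribute, value in items[1:]:
        values = value if isinstance(value, list) else [value]
        triples.extend((entity, attribute, v) for v in values)
    return category, entity, triples


# Hypothetical record in the {"key": "value", "key": [value]} format.
record = json.loads(
    '{"multi-functional platform": "Geng Hai No. 1",'
    ' "designed water depth": "10m",'
    ' "aquaculture equipment": ["automatic bait feeder", "net cleaner"]}'
)
category, entity, triples = extract_attribute_triples(record)
for triple in triples:
    print(triple)
```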

3.2. Joint Entity Relation Extraction Based on Deep Learning Models

3.2.1. The Innovative TE+SE+Ri+BMESO Tagging Strategy

Examination of the previously processed JSON dataset shows that the "value" fields contain a substantial amount of unstructured text, which in turn contains many implicit relationships among entities. For example, the "value" of the "introduction" (profile) attribute for "Jinghai No.1" contains entity relationships involving principal dimensions, aquaculture equipment, and marine design criteria.

Analysis of the marine ranching equipment corpus, together with the relations defined in the model layer, reveals three characteristics: (1) The extraction tasks all center on the marine ranching equipment module concept, so the marine ranching equipment module entity serves as the theme entity of the extracted triples; (2) The relation between the marine ranching equipment module entity and another entity is consistent with the category of that other entity, so identifying the entity type determines the relationship; (3) A single sentence may contain multiple relationships between the marine ranching equipment module entity and different other entities.

Building on this analysis, this study introduces a tagging strategy, TE+SE+$R_i$+BMESO, tailored to the marine ranching equipment corpus, and employs the BERT-BiGRU-CRF entity extraction model to extract entities and their relationships jointly. In this tagging scheme, the marine ranching equipment module entity is the theme entity, denoted TE. Entities related to the theme entity are tagged SE_$R_i$, where SE denotes a secondary entity and $R_i$ is the category of the $i$-th secondary entity SE$_i$, which corresponds to the relationship type linking the theme entity to SE$_i$. The BMESO sequence labeling scheme is used, and the meaning of each label is given in Table 5.
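To make the tagging strategy concrete, the following Python sketch decodes a tagged sentence into triples. It is an illustration under assumptions: the tag spellings follow the forms "B-TE" and "M-SE_AQ" used in this paper, the tokenized sentence is hypothetical, tokens are joined with spaces for readability, and the sketch assumes one theme entity per sentence.

```python
def decode_spans(tokens, tags, sep=" "):
    """Turn BMESO tags into (label, text) spans, skipping malformed runs."""
    spans, start, current = [], None, None
    for i, tag in enumerate(tags):
        prefix, _, label = tag.partition("-")
        if prefix == "S":                      # single-token entity
            spans.append((label, tokens[i]))
            start, current = None, None
        elif prefix == "B":                    # entity begins
            start, current = i, label
        elif prefix == "M" and label == current:
            continue                           # still inside the open entity
        elif prefix == "E" and label == current:
            spans.append((label, sep.join(tokens[start:i + 1])))
            start, current = None, None
        else:                                  # "O" or an inconsistent tag
            start, current = None, None
    return spans


def spans_to_triples(spans):
    """Pair the theme entity (TE) with every secondary entity (SE_<relation>)."""
    theme = next((text for label, text in spans if label == "TE"), None)
    if theme is None:
        return []
    return [(theme, label[len("SE_"):], text)
            for label, text in spans if label.startswith("SE_")]


tokens = ["Jing", "Hai", "No.1", "is", "equipped", "with",
          "an", "automatic", "bait", "feeder"]
tags = ["B-TE", "M-TE", "E-TE", "O", "O", "O",
        "O", "B-SE_AQ", "M-SE_AQ", "E-SE_AQ"]
print(spans_to_triples(decode_spans(tokens, tags)))
# [('Jing Hai No.1', 'AQ', 'automatic bait feeder')]
```

Because the relation is carried in the tag of the secondary entity, a sentence with several secondary entities yields several triples in a single labeling pass, with no separate relation classifier.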

3.2.2. The Specific Structure and Working Principle of the BERT-BiGRU-CRF Model

The BERT model [18], a major advance in natural language processing (NLP) in recent years, performs exceptionally well in text representation and semantic understanding. The BERT-BiGRU-CRF architecture comprises three layers: a BERT layer, a Bidirectional Gated Recurrent Unit (BiGRU) layer, and a Conditional Random Field (CRF) layer. Built on the Transformer architecture, BERT supports parallel computation, which significantly improves training and inference efficiency over conventional models based on Recurrent Neural Networks (RNN) or Convolutional Neural Networks (CNN), particularly on large-scale datasets. The BiGRU layer captures contextual information in both directions and, compared with Bidirectional Long Short-Term Memory (BiLSTM) networks, has a simpler architecture, fewer parameters, and faster computation. The CRF layer incorporates global sequence dependencies by computing the optimal label sequence, making effective use of inter-label relationships. The BERT-BiGRU-CRF framework has been widely adopted for Named Entity Recognition (NER) across diverse domains. For instance, Wang Yuquan et al. [28] improved precision, recall, and F1-score in geotechnical engineering text NER with a pre-trained BERT-BiGRU-CRF language model. Similarly, Ma Wenxiang et al. [29] addressed challenges in Chinese resume NER, such as character-level polysemy and representation limitations, with an integrated BERT-BiGRU-CRF approach. The overall model is shown in Figure 9.

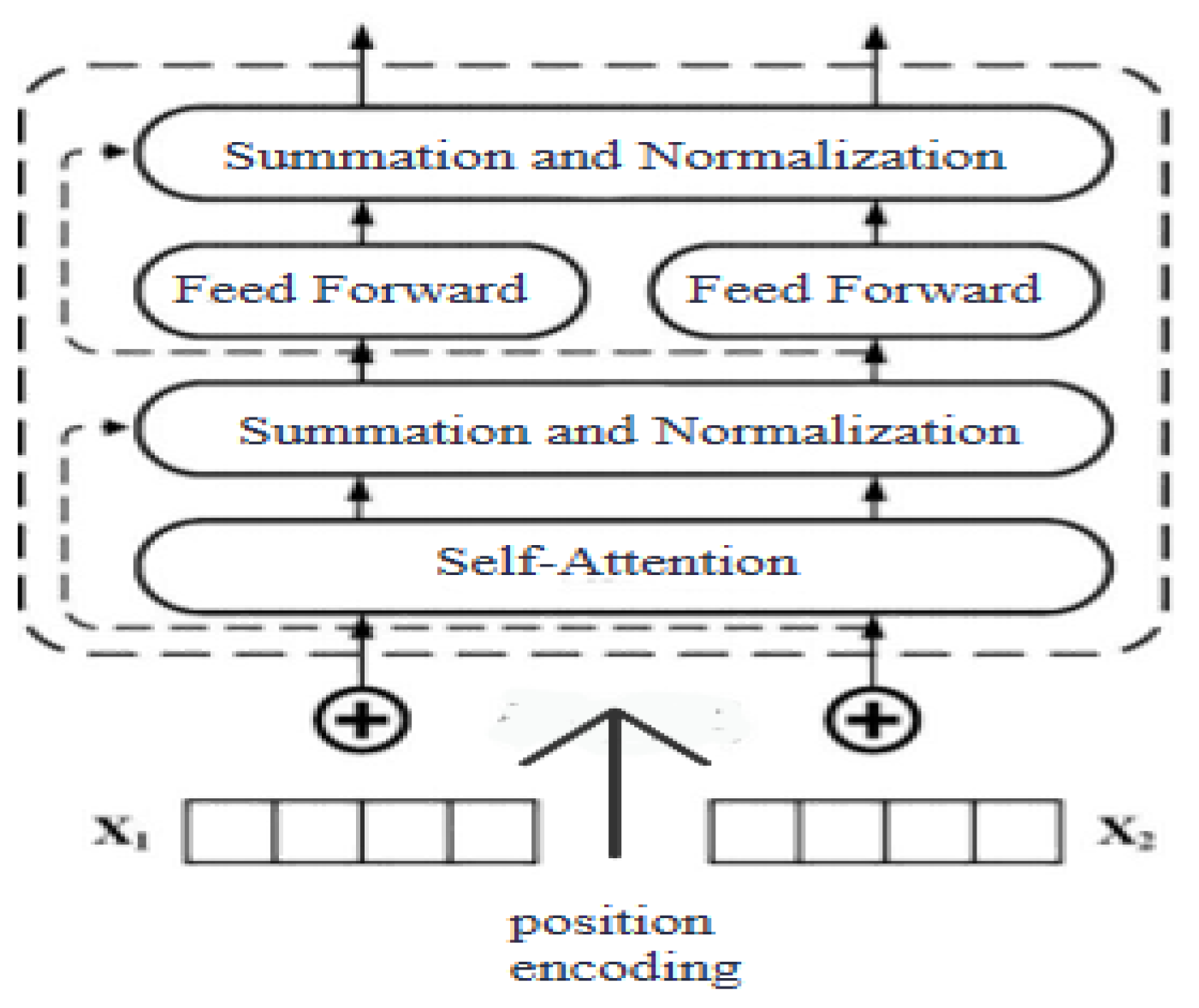

(1) BERT layer

BERT is a context-based word embedding model that derives richer semantic features from text than non-contextual predecessors such as word2vec. Its ability to resolve polysemy through context stems from its core architecture, the bidirectional Transformer encoder, which is shown in Figure 10.

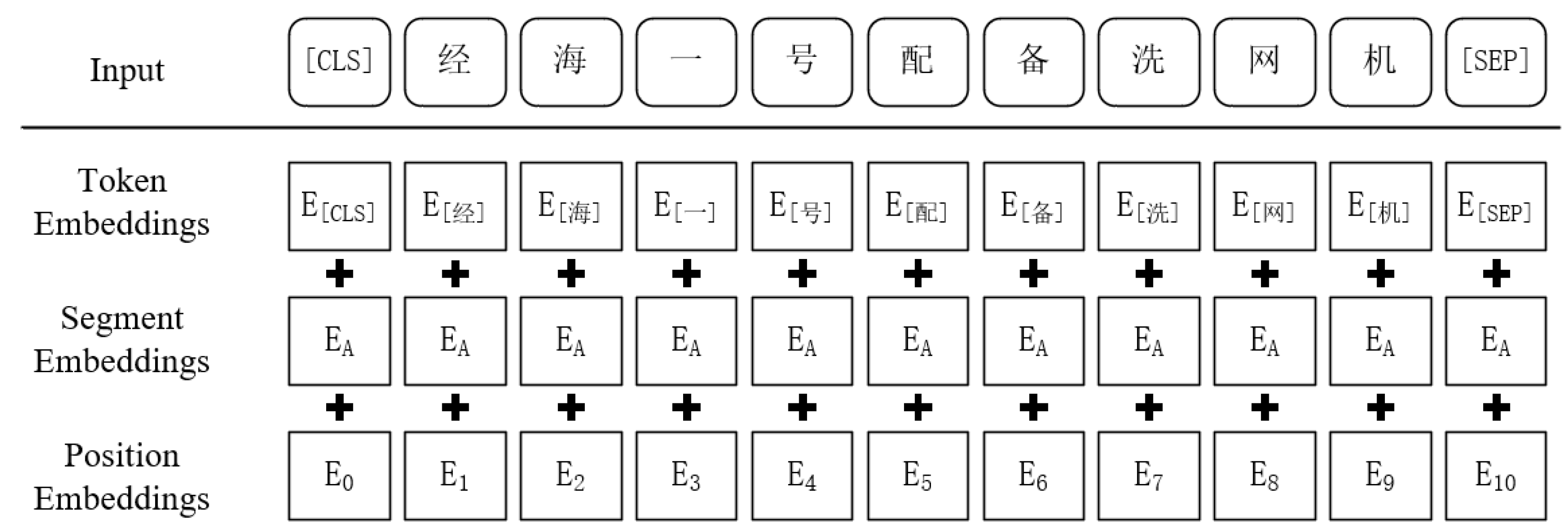

The BERT layer involves two main components: input representation and pre-training. Input representation transforms the data into the format BERT requires: the representation of each input character is the sum of its token embedding, segment embedding, and position embedding, as shown in Figure 11 [30].

BERT is pre-trained based on two major tasks: "Masked Language Model" (MLM) and "Next Sentence Prediction" (NSP). Through the simultaneous training of these two tasks, it can better extract word-level and sentence-level features of the text, obtaining token embeddings that contain more semantic information.

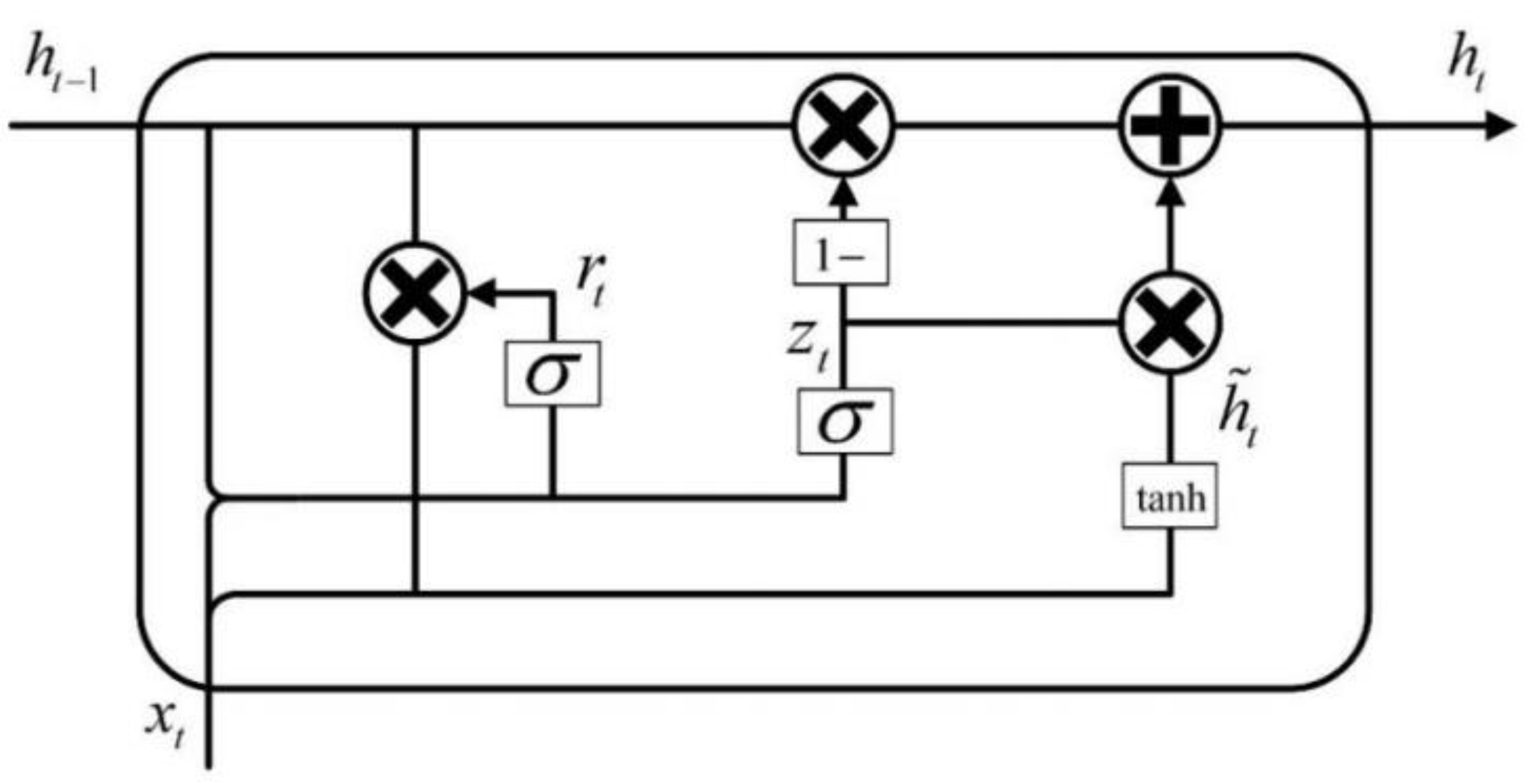

(2) BiGRU layer

The GRU is a variant of the Recurrent Neural Network (RNN). In contrast to the three-gate mechanism of the LSTM, the GRU has only two gates, the reset gate and the update gate, as depicted in Figure 12. To capture context from both directions, this study builds a bidirectional GRU (BiGRU) network whose basic building block consists of a forward GRU and a backward GRU.

In Figure 12, $x_t$ denotes the input at the current time step, $h_t$ the output at the current time step, and $h_{t-1}$ the output at the previous time step. The reset gate $r_t$ and the update gate $z_t$ jointly regulate how the previous hidden state $h_{t-1}$ is carried into the new hidden state $h_t$. The reset gate combines $h_{t-1}$ and $x_t$ to produce $r_t$, whose elements range from 0 to 1 and determine how much of the previous output $h_{t-1}$ is discarded. A value closer to 0 means that more of $h_{t-1}$ is erased. The formula is as follows:

$$r_t = \sigma\left(W_r \cdot [h_{t-1}, x_t] + b_r\right)$$

The update gate combines $h_{t-1}$ and $x_t$ to control how much information from the previous output $h_{t-1}$ is retained. The smaller its value, the more information from $h_{t-1}$ is retained and the less information from the current step is admitted. The formula is as follows:

$$z_t = \sigma\left(W_z \cdot [h_{t-1}, x_t] + b_z\right)$$

The candidate memory $\tilde{h}_t$ consists of two components: the current input $x_t$ and the previous output $h_{t-1}$ as filtered by the reset gate $r_t$. When $r_t$ equals 0, past information is erased completely. The formula is as follows:

$$\tilde{h}_t = \tanh\left(W_h \cdot [r_t \odot h_{t-1}, x_t] + b_h\right)$$

Here $W_r$, $W_z$, and $W_h$ denote the weight matrices, $b_r$, $b_z$, and $b_h$ the biases, $\sigma$ the sigmoid function, and $\odot$ element-wise multiplication. The final output is determined by the update gate:

$$h_t = (1 - z_t) \odot h_{t-1} + z_t \odot \tilde{h}_t$$
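A single GRU step can be written out in plain Python to make the role of the two gates explicit. This sketch assumes the common formulation in which each gate applies one weight matrix to the concatenation of $h_{t-1}$ and $x_t$, and in which $h_t = (1 - z_t) \odot h_{t-1} + z_t \odot \tilde{h}_t$, consistent with the statement that a smaller update-gate value retains more of $h_{t-1}$. The function names and the toy weights are illustrative only; a real model would use a framework implementation such as PyTorch.

```python
import math


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def affine(W, v, b):
    """Compute W·v + b for a matrix given as a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) + bias for row, bias in zip(W, b)]


def gru_step(x_t, h_prev, W_r, b_r, W_z, b_z, W_h, b_h):
    """One GRU time step on plain Python lists."""
    concat = h_prev + x_t                                   # [h_{t-1}, x_t]
    r_t = [sigmoid(a) for a in affine(W_r, concat, b_r)]    # reset gate
    z_t = [sigmoid(a) for a in affine(W_z, concat, b_z)]    # update gate
    gated = [r * h for r, h in zip(r_t, h_prev)] + x_t      # [r_t * h_{t-1}, x_t]
    h_cand = [math.tanh(a) for a in affine(W_h, gated, b_h)]
    # Small z_t keeps the previous state; large z_t admits the candidate.
    return [(1 - z) * h + z * c for z, h, c in zip(z_t, h_prev, h_cand)]


# Toy setup: hidden size 2, input size 1, all weights zero.
W0, b0 = [[0.0] * 3, [0.0] * 3], [0.0, 0.0]
print(gru_step([1.0], [0.4, -0.2], W0, b0, W0, b0, W0, b0))
```

With all-zero weights both gates equal 0.5 and the candidate is zero, so the step simply halves the previous state; pushing the update-gate bias strongly negative leaves the previous state almost unchanged.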

(3) CRF layer

In named entity recognition, labels depend on one another. For example, the label "B-TE" can never be followed by the label "M-SE_AQ". The BiGRU layer, however, simply selects the label with the highest probability at each position, without considering constraints between labels. To address this, this study adds a CRF (Conditional Random Field) layer. During label prediction, the CRF layer considers both the individual probability of each label and the transition probabilities learned from the training corpus, which reduces the likelihood of illegal label sequences and improves prediction accuracy.

Suppose the input sequence is $X = \{x_1, x_2, x_3, \ldots, x_n\}$ and the output label sequence is $y = \{y_1, y_2, y_3, \ldots, y_n\}$. The score is calculated as follows:

$$s(X, y) = \sum_{i=0}^{n} A_{y_i, y_{i+1}} + \sum_{i=1}^{n} P_{i, y_i}$$

In the formula, $A$ is a transition matrix of size $(k + 2) \times (k + 2)$, where $A_{i,j}$ is the score of transitioning from label $i$ to label $j$; the two additional labels, $y_0$ and $y_{n+1}$, mark the start and end of the sequence. $P$ is the output matrix of the BiGRU layer, of size $n \times k$, where $n$ is the sentence length and $k$ is the number of labels. $P_{i,j}$ denotes the score of the $i$-th word being assigned the $j$-th label. To obtain a probability over all possible label sequences, the softmax function is applied to the scores:

$$p(y \mid X) = \frac{e^{s(X, y)}}{\sum_{\tilde{y} \in Y_X} e^{s(X, \tilde{y})}}$$

In the formula, $Y_X$ denotes the set of all feasible label sequences for the input sequence $X$, with $\tilde{y}$ ranging over its candidate sequences and $y$ being the actual label sequence. Taking the logarithm of both sides gives the training objective, and the Viterbi algorithm is then used for decoding to find the highest-scoring sequence:

$$\log p(y \mid X) = s(X, y) - \log \sum_{\tilde{y} \in Y_X} e^{s(X, \tilde{y})}$$

$$y^{*} = \arg\max_{\tilde{y} \in Y_X} s(X, \tilde{y})$$
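The decoding step can be illustrated with a small, self-contained Viterbi implementation in Python. It is a sketch under assumptions: the start and end transition scores are passed as separate vectors instead of being folded into a $(k + 2) \times (k + 2)$ matrix, and the three-label example with its scores is made up to show how a strongly negative transition score rules out "B-TE" followed by "M-SE_AQ".

```python
def viterbi(emissions, transitions, start, end):
    """Return (best_path, best_score) for emission and transition scores.

    emissions[i][j]: score of label j at position i (the matrix P)
    transitions[a][b]: score of moving from label a to label b (the matrix A)
    start[j] / end[j]: scores for starting / ending the sequence with label j
    """
    k = len(emissions[0])
    score = [start[j] + emissions[0][j] for j in range(k)]
    backpointers = []
    for emission in emissions[1:]:
        new_score, pointers = [], []
        for j in range(k):
            best_prev = max(range(k), key=lambda p: score[p] + transitions[p][j])
            pointers.append(best_prev)
            new_score.append(score[best_prev] + transitions[best_prev][j] + emission[j])
        score = new_score
        backpointers.append(pointers)
    last = max(range(k), key=lambda j: score[j] + end[j])
    best_score = score[last] + end[last]
    path = [last]
    for pointers in reversed(backpointers):   # follow pointers back to the start
        path.append(pointers[path[-1]])
    path.reverse()
    return path, best_score


labels = ["O", "B-TE", "M-SE_AQ"]
emissions = [[0.1, 2.0, 0.0],      # position 1 prefers B-TE
             [0.5, 0.0, 1.0]]      # position 2 alone would prefer M-SE_AQ
transitions = [[0.0] * 3 for _ in range(3)]
transitions[1][2] = -1e4           # forbid B-TE -> M-SE_AQ
path, best = viterbi(emissions, transitions, [0.0] * 3, [0.0] * 3)
print([labels[j] for j in path], best)
# ['B-TE', 'O'] 2.5
```

A position-wise argmax over the emissions would output the illegal pair B-TE, M-SE_AQ, whereas the sequence-level search returns the best legal sequence.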

4. Results and Analysis

4.1. System Testing Environment

The experiments were implemented in Python with the PyTorch framework. The software and hardware configuration is listed in Table 6.

The model is evaluated with three standard metrics for knowledge extraction: precision (P), recall (R), and the F1 score. They are calculated as follows:

$$P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}, \qquad F1 = \frac{2 \times P \times R}{P + R}$$

In the formulas, TP is the number of entities that were correctly identified, FP is the number of entities that were incorrectly identified, and FN is the number of entities that were not detected.
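The three metrics are straightforward to compute, and the F1 formula can be checked against the precision and recall reported in Section 4.3. In the Python sketch below, the function names and the entity counts in the first call are hypothetical; only the 86.58% precision and 77.82% recall come from this paper.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    total = precision + recall
    return 2 * precision * recall / total if total else 0.0


def precision_recall_f1(tp: int, fp: int, fn: int):
    """Compute (P, R, F1) from entity-level counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall, f1_score(precision, recall)


# Hypothetical counts: 8 correct, 2 spurious, 2 missed entities.
print(precision_recall_f1(8, 2, 2))

# Consistency check with the reported precision and recall.
print(round(f1_score(0.8658, 0.7782) * 100, 2))
# 81.97
```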

4.2. Experimental Data and Parameters

In this study, the dataset consists of 1,456 annotated sentences related to marine ranching equipment. Following the cross-validation methodology, the dataset was divided into training, validation, and test sets in an 8:1:1 ratio, yielding 1,164 training sentences, 146 validation sentences, and 146 test sentences. After repeated parameter tuning, the optimal parameter settings are listed in Table 7.
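An 8:1:1 partition that reproduces these set sizes can be sketched as follows. The shuffling seed, the rounding rule (floor for the training set, then an even split of the remainder), and the function name `split_dataset` are assumptions; the paper does not state how the split was drawn.

```python
import random


def split_dataset(sentences, seed=42):
    """Shuffle and split into train/validation/test at roughly 8:1:1."""
    items = list(sentences)
    random.Random(seed).shuffle(items)     # fixed seed for reproducibility
    n_train = len(items) * 8 // 10         # floor of 80%
    n_val = (len(items) - n_train) // 2    # half of the remainder
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]
    return train, val, test


train, val, test = split_dataset(range(1456))
print(len(train), len(val), len(test))
# 1164 146 146
```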

4.3. Experimental Results

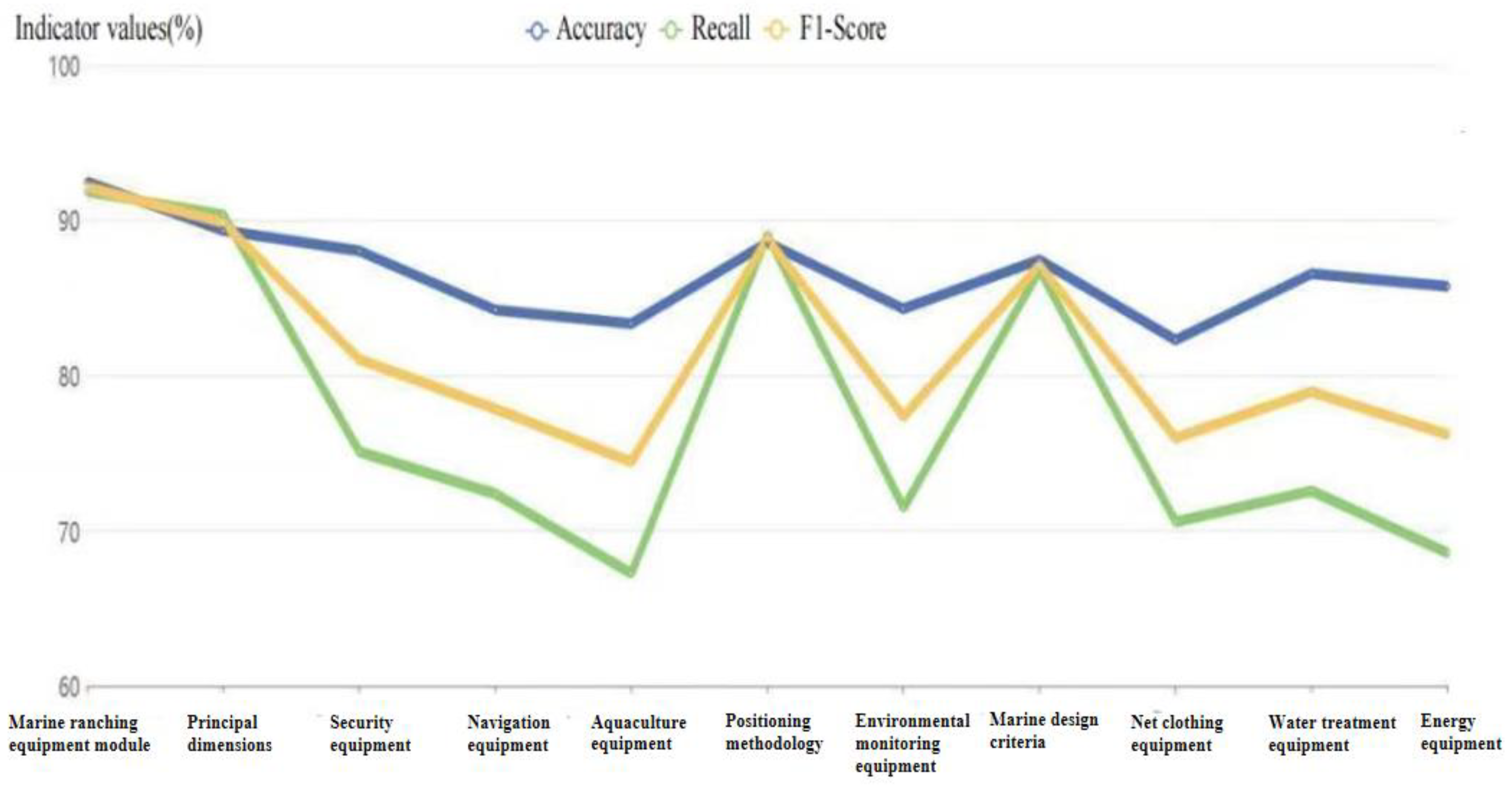

To verify the advantage of the proposed model, it was compared with BERT-BiLSTM-CRF, a prevalent model in knowledge extraction. The detailed results are given in Table 8, and the recognition performance for the different entity types is shown in Figure 13.

As shown in Table 8, the proposed model outperformed BERT-BiLSTM-CRF, achieving 86.58% precision, 77.82% recall, and an F1-score of 81.97% (a 1.94% improvement, p < 0.05). Compared with the BiLSTM model, the BiGRU model has fewer parameters, which not only improves model performance but also accelerates training. BiGRU is therefore the more suitable encoding layer for the text-based entity recognition task specific to marine ranching equipment.

Furthermore, Figure 13 and the associated data show that the marine ranching equipment module entity has the highest F1 score, at 92.17%. Entities such as marine design criteria, positioning methodology, and principal dimensions also achieve high F1 scores of 87.12%, 88.81%, and 89.85%, respectively. However, equipment entities appear under diverse names: for example, "batch feeder", "bait dispenser", and "GQ48902" all refer to the "feeding machine" in aquaculture equipment. This variation increases the number of unrecognized entities, which lowers recall and, in turn, the overall F1 score of the model.

5. Conclusions and Prospects

In summary, this study establishes the first structured KG framework for marine ranching equipment and offers a transferable methodology for knowledge extraction in vertical domains. A top-down approach is used to establish the model layer, culminating in an ontology for the marine ranching equipment KG. A bottom-up approach is then used to build the data layer, covering the acquisition of marine ranching equipment data and the subsequent knowledge extraction. The BERT-BiGRU-CRF model is used for the joint extraction of entities and relations in the marine ranching equipment domain, and the resulting graph data are stored in a Neo4j database. The conclusions are summarized as follows:

The Neo4j-based knowledge graph contains 2,153 nodes and 3,872 edges and supports scalable visualization and dynamic updates. Experimental results show that the model outperforms BERT-BiLSTM-CRF, achieving 86.58% precision, 77.82% recall, and an F1 score of 81.97% (1.94% improvement, p < 0.05).
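To illustrate how extracted triples might be written to Neo4j in a way that tolerates repeated updates, the sketch below builds a parameterized Cypher `MERGE` statement for a single triple. It is not the storage code used in this study: the `Entity` label, the `name` property, the helper name, and the example triple are all hypothetical.

```python
import re

# Relationship types are restricted to simple identifiers before interpolation.
_IDENT = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")


def triple_to_cypher(head: str, relation: str, tail: str) -> tuple[str, dict]:
    """Build a parameterized Cypher MERGE statement for one (head, relation, tail) triple."""
    # Cypher cannot take a relationship type as a query parameter, so the type
    # is validated and interpolated; node names are passed as parameters.
    if not _IDENT.match(relation):
        raise ValueError(f"unsafe relationship type: {relation!r}")
    query = (
        "MERGE (h:Entity {name: $head}) "
        "MERGE (t:Entity {name: $tail}) "
        f"MERGE (h)-[:{relation}]->(t)"
    )
    return query, {"head": head, "tail": tail}


# Hypothetical triple; entity names and relation type are illustrative only.
query, params = triple_to_cypher("feeding machine", "HAS_MODULE", "hopper")
print(query)
print(params)
```

Because `MERGE` creates a node or relationship only when it does not already exist, re-running the same statement leaves the graph unchanged, which suits incremental updates. The returned query and parameters would then be passed to a Neo4j client session.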

The KG still has room for improvement, and it will be deepened and expanded in the following respects:

First, marine ranching equipment evolves continuously and rapidly, and the associated body of knowledge keeps expanding. Capturing data in real time and dynamically updating the marine ranching equipment KG is a key challenge for future work.

Furthermore, the KG established in this research is built solely from textual data. Future work could integrate visual and auditory data, such as images, audio, and video, to construct a multimodal KG that is more closely connected to the physical world.

Finally, the KG of marine ranching equipment can be combined with a large language model. ChatGPT is an active research topic in intelligent question answering and can quickly understand and answer users' questions in many general fields, but for vertical fields such as marine ranching equipment, its reliability and domain expertise are still insufficient. Combining the KG with a large language model can leverage the advantages of both, providing users with personalized equipment selection suggestions and enabling more intelligent and efficient knowledge management and application.

Author Contributions

Conceptualization: D.C.; Methodology: D.C.; Software: Z.G.; Validation: Z.G. and S.L.; Investigation: S.L., X.G. and Y.W.; Writing, original draft: D.C.; Writing, review and editing: H.Z. and D.Z.; Visualization: S.L. and X.G.; Supervision: H.Z. and D.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zhao, Z.; Ding, J.; Ji, Y.; et al. Exploration and Practice of Modern Information Technology and Engineering Equipment in Marine Ranching Construction. China Fisheries. 2020, (04), 33-37.

- Shen, W.; Hu, Q.; Shen, T.; et al. Research and experiment of intelligent floating fish accumulating equipment for ocean ranching. Journal of Shanghai Ocean University. 2016, 25(02), 314-320.

- Shi, J. Intelligent equipment technology for offshore cage culture. Beijing: Ocean Press. 2018, 1-159.

- Huang, H.; Yu, J.; Liao, X.; et al. Knowledge graph research review. Computer Systems & Applications. 2019, 28(06), 1-12.

- Dieter, F.; et al. Introduction: what is a knowledge graph? 2020.

- Ren, T. Research and Application of OPC UA Information Model Based on Knowledge Graph. Postgraduate, Zhejiang University, China, 2021.

- Gu, X.; Bao, J.; Lü, C.; et al. Assembly Semantic Information Modeling Based on Knowledge Graph. Aeronautical Manufacturing Technology. 2021, 64(04), 74-81.

- Huet, A.; Segonds, F.; Pinquie, R.; et al. Context-aware cognitive design assistant: Implementation and study of design rules recommendations. Advanced Engineering Informatics. 2021, 50, 101419.

- Hao, Q.; Hu, S. Smart design on the flexible gear based on knowledge graph. Journal of Physics: Conference Series. 1885(5), 052021.

- Zhou, B.; Hua, B.; Gu, X.; Lu, Y.; Peng, T.; Zheng, Y. An end-to-end tabular information-oriented causality event evolutionary knowledge graph for manufacturing documents. Advanced Engineering Informatics. 2021, 50, 101441.

- Hao, X. Research on prediction and treatment method of workshop production anomaly based on LSTM. Postgraduate, Harbin University of Science and Technology, China, 2020.

- Bizer, C.; Lehmann, J.; Kobilarov, G.; et al. DBpedia - A crystallization point for the Web of Data. Web Semantics Science Services & Agents on the World Wide Web. 2009, 7(3), 154-165.

- Suchanek, F.M.; Kasneci, G.; Weikum, G. Yago: a core of semantic knowledge. Proceedings of the 16th International Conference on World Wide Web, ACM, 2007, 697-706.

- Bollacker, K.D.; Cook, R.P.; Tufts, P. Freebase: A shared database of structured general human knowledge. In: Proceedings of the 22nd National Conference on Artificial Intelligence, 2007, 2, 1962-1963.

- Vrandečić, D. Wikidata: a new platform for collaborative data collection. Proceedings of the 21st International Conference on World Wide Web. ACM, 2012, 1063-1064.

- IMDB Official. IMDB[EB/OL]. [2016-02-27]. http://www.imdb.com.

- MetaBrainz Foundation. Musicbrainz[EB/OL]. [2016-06-06]. http://musicbrainz.org/.

- Sun, Y. Design and Implementation of Web Crawler System Based on Scrapy Framework. Postgraduate, Beijing Jiaotong University, China, 2019.

- Yun, W.; Zhang, X.; Li, Z.; et al. Knowledge modeling: A survey of processes and techniques. International Journal of Intelligent Systems. 2021, (3).

- Noy, N. Ontology development 101: A guide to creating your first ontology. 2001.

- Uschold, M.; Gruninger, M. Ontologies: Principles, methods and applications. The Knowledge Engineering Review. 1996, 11(2), 93-136.

- Jones, D.; Bench-Capon, T.; Visser, P. Methodologies for ontology development. Capon. 1998:62--75. 2004.

- Knublauch, H.; Fergerson, R.; Noy, N.; et al. The Protégé OWL Plugin: An Open Development Environment for Semantic Web Applications. International Semantic Web Conference. Springer, Berlin, Heidelberg, 2004.

- Xu, C.; Wang, F.; Han, J.; et al. Exploiting multiple embeddings for Chinese named entity recognition. Proceedings of the 28th ACM International Conference on Information and Knowledge Management, 2019, 2269-2272.

- Huang, Z.; Xu, W.; Yu, K. Bidirectional LSTM-CRF Models for Sequence Tagging. Computer Science. 2015.

- Ma, Z.; Ni, R.; Yu, K. The latest progress, key technologies and challenges of knowledge graph. Journal of Engineering Sciences. 2020, 42(10), 1254-1266.

- Dou, J.; Qin, J.; Jin, Z.; et al. Knowledge graph based on domain ontology and natural language processing technology for Chinese intangible cultural heritage. Journal of Visual Languages & Computing. 2018, 48(Oct.), 19-28.

- Wang, Q.; Li, Z.; Tu, Z.; et al. Geotechnical Named Entity Recognition Based on BERT-BiGRU-CRF Model. Earth Science. 2023, 48(08), 3137-3150.

- Ma, W.; Liao, T.; Zhang, S.; et al. Named Entity Recognition of Chinese Electronic Resume Based on BERT-BiGRU-CRF. Journal of Yancheng Institute of Technology (Natural Science Edition). 2022, 35(03), 41-47.

- Wang, B.; Wang, A.; Chen, F.; et al. Evaluating word embedding models: methods and experimental results. APSIPA Transactions on Signal and Information Processing. 2019, 8.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).