Submitted:

01 March 2025

Posted:

03 March 2025

Abstract

Keywords:

1. Introduction

2. AI Trends: Federated Learning, Generative AI, and Enterprise AI

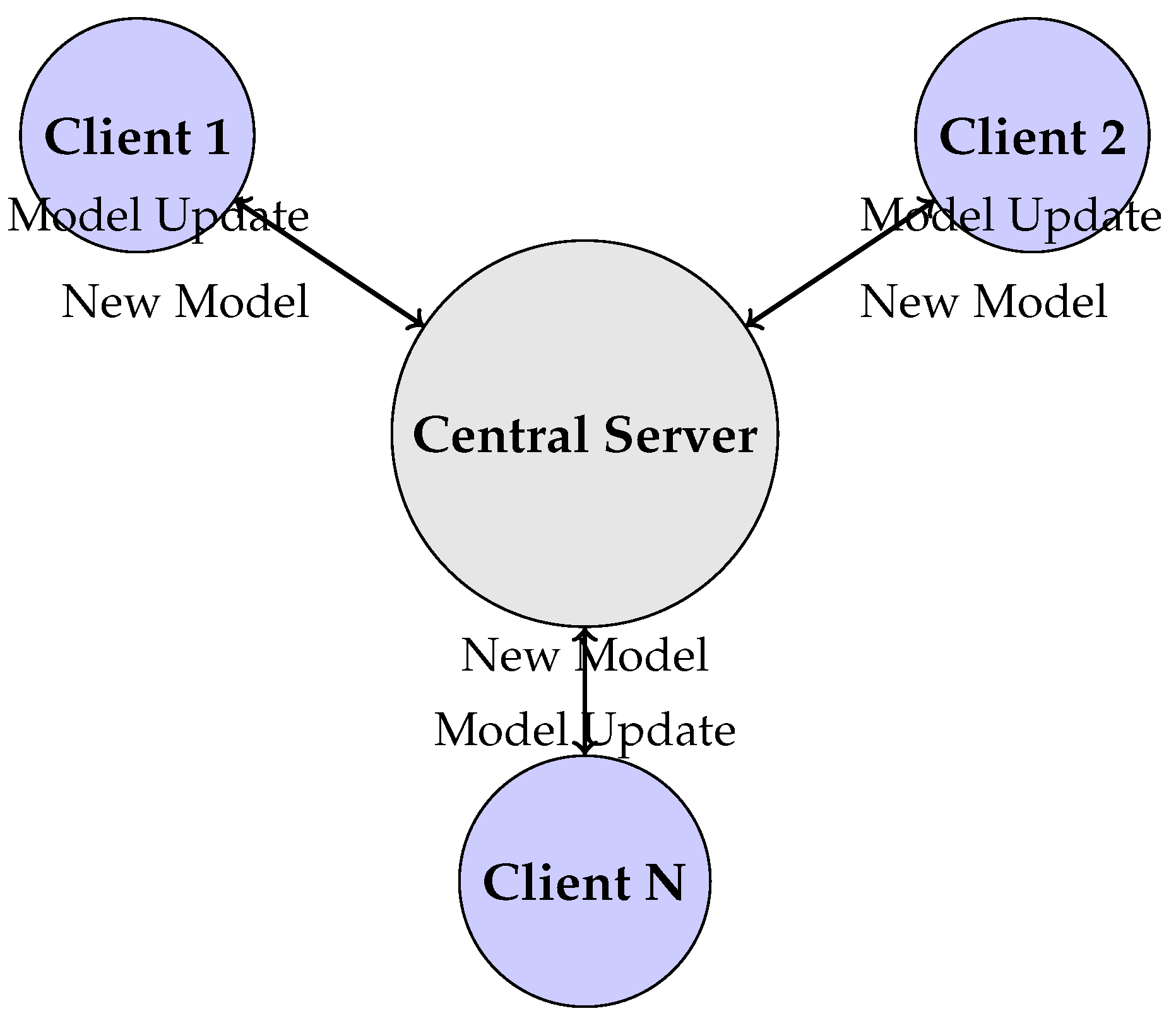

2.1. Federated Learning and Data Privacy

- Client Devices: Multiple distributed devices (such as smartphones, IoT sensors, or hospital servers) locally train a shared model on their respective datasets.

- Model Updates: Instead of sharing raw data, each client device computes model updates (e.g., weight gradients) based on local training.

- Central Server: A global aggregation mechanism (e.g., Federated Averaging) combines the local model updates from multiple clients to improve the overall model without accessing individual datasets.

- Privacy-Preserving Techniques: Techniques such as differential privacy and secure multiparty computation help protect model updates against inference attacks that try to reconstruct or identify local training data.
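The aggregation and privacy steps listed above can be sketched in a few lines. The following is a minimal, illustrative sketch only: Federated Averaging is shown as a mean of client updates weighted by local dataset size, and a clip-and-noise step stands in for a differential-privacy mechanism. The function names (`federated_average`, `clip_and_noise`), the flat-list representation of an update, and the parameters `clip_norm` and `noise_std` are assumptions made for illustration; calibrating the noise to a formal privacy budget is not covered here.

```python
import math
import random
from typing import List, Sequence

Vector = List[float]


def clip_and_noise(update: Sequence[float], clip_norm: float,
                   noise_std: float, rng: random.Random) -> Vector:
    """Scale an update so its L2 norm is at most clip_norm, then add Gaussian noise.

    Hypothetical helper: a DP-style step applied on the client before upload.
    """
    norm = math.sqrt(sum(x * x for x in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [x * scale + rng.gauss(0.0, noise_std) for x in update]


def federated_average(updates: Sequence[Sequence[float]],
                      num_examples: Sequence[int]) -> Vector:
    """Average client updates, weighting each client by its local dataset size."""
    if not updates or len(updates) != len(num_examples):
        raise ValueError("need one example count per client update")
    total = sum(num_examples)
    if total <= 0:
        raise ValueError("total number of examples must be positive")
    dim = len(updates[0])
    averaged = [0.0] * dim
    for update, n in zip(updates, num_examples):
        if len(update) != dim:
            raise ValueError("all updates must have the same length")
        for i, value in enumerate(update):
            averaged[i] += value * n / total
    return averaged


# Two hypothetical clients; the second holds three times as much data.
client_updates = [[1.0, 2.0], [3.0, 4.0]]
client_sizes = [1, 3]
rng = random.Random(0)
protected = [clip_and_noise(u, clip_norm=10.0, noise_std=0.01, rng=rng)
             for u in client_updates]
global_update = federated_average(protected, client_sizes)
```

The server only ever sees the (clipped, noised) updates and the example counts, never the clients' raw records, which is the property the bullet list describes.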

2.1.1. Advantages of Federated Learning

- Privacy Protection: Raw data remains on the local device, reducing the risk of data leaks.

- Reduced Communication Costs: Because only model updates are transmitted rather than raw datasets, federated learning can reduce bandwidth usage compared with centralizing the data.

- Scalability: It enables large-scale collaboration across distributed networks, improving AI models with diverse datasets.

- Regulatory Compliance: Federated learning supports compliance with data protection regulations such as GDPR and HIPAA.

2.1.2. Challenges and Future Directions

- Heterogeneous Data: Variability in data distribution across client devices can lead to biased models.

- Communication Overhead: Frequent model updates require efficient aggregation techniques to reduce latency.

- Security Risks: Federated learning is vulnerable to adversarial attacks such as model poisoning.

2.2. Generative AI in Education and Cybersecurity

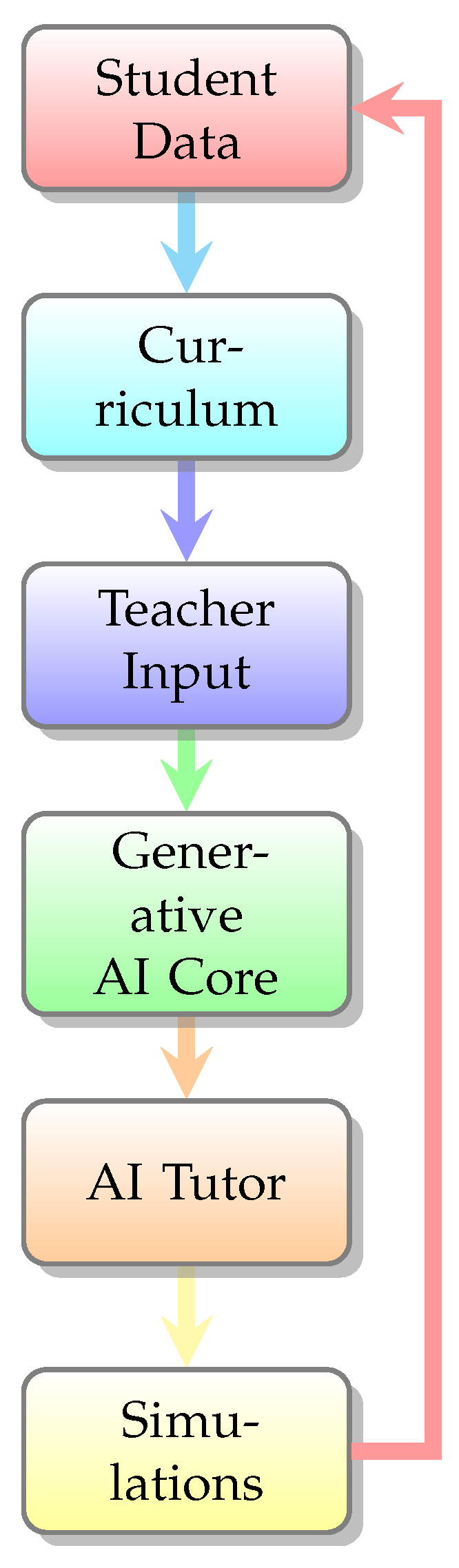

2.2.1. Generative AI in Education

- Personalized Learning: Generative AI adapts to students’ learning styles, offering customized explanations and study materials.

- Automated Content Generation: AI can create textbooks, quizzes, and lecture summaries, reducing the workload for educators.

- Conversational AI Tutors: AI-driven chatbots and virtual assistants provide instant academic support, making learning more interactive.

- AI-Generated Simulations: Generative models enable immersive educational experiences using augmented reality (AR) and virtual reality (VR).

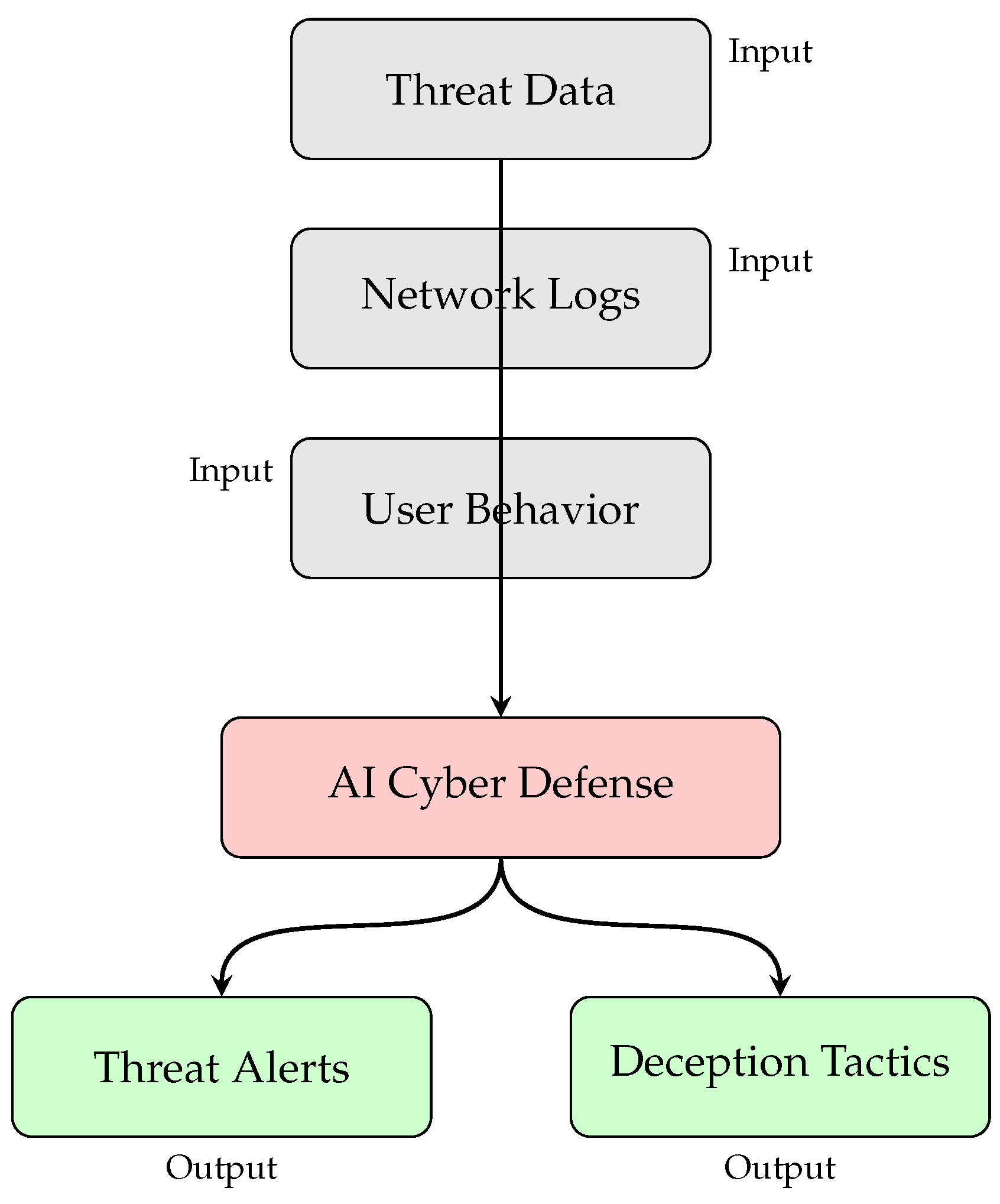

2.2.2. Generative AI in Cybersecurity

- Threat Detection and Prevention: AI models simulate cyber threats, enabling proactive defense mechanisms against malware, phishing, and network intrusions.

- Anomaly Detection: Generative models identify suspicious activities by analyzing deviations from normal patterns, crucial in fraud detection and insider threat monitoring.

- Automated Security Policy Generation: AI assists in dynamically creating security policies based on historical attack patterns.

- Cyber Deception Strategies: AI-generated honeytokens and decoy networks help mislead cybercriminals, reducing attack success rates.
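The anomaly-detection idea in the list above (modeling normal behavior, then flagging deviations from it) can be illustrated with a deliberately simple stand-in. In this sketch a one-dimensional Gaussian fitted to baseline activity plays the role of the generative model; production systems would use far richer models. The function names, the 3-sigma threshold, and the sample login counts are all assumptions chosen for illustration.

```python
import statistics
from typing import List, Sequence, Tuple


def fit_baseline(samples: Sequence[float]) -> Tuple[float, float]:
    """Fit a Gaussian (mean, standard deviation) to observations of normal activity."""
    mean = statistics.fmean(samples)
    std = statistics.pstdev(samples)
    if std == 0:
        raise ValueError("baseline has no variance; cannot score deviations")
    return mean, std


def flag_anomalies(values: Sequence[float], mean: float, std: float,
                   threshold: float = 3.0) -> List[float]:
    """Return the values lying more than `threshold` standard deviations from the mean."""
    return [v for v in values if abs(v - mean) / std > threshold]


# Hypothetical hourly login counts observed during normal operation.
baseline = [10, 12, 11, 9, 10, 11, 10, 9]
mean, std = fit_baseline(baseline)

# New observations: 50 logins in an hour deviates sharply from the baseline.
suspicious = flag_anomalies([10, 50, 11], mean, std)
```

The same pattern (learn a model of normal data, score new events by how unlikely they are under it) carries over to the fraud and insider-threat settings mentioned in the text.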

2.2.3. Challenges and Ethical Considerations

- Bias and Fairness Issues: AI-generated content can reflect biases present in training data, which can undermine educational integrity and lead to unfair outcomes in security decisions.

- Deepfake Threats: Generative AI can be misused to create realistic deepfakes for misinformation, fraud, and identity theft.

- Privacy Concerns: AI models require large datasets, often raising privacy and data security challenges.

2.2.4. Future Directions

- Develop explainable generative models to enhance transparency.

- Implement zero-trust security architectures in AI-based cyber defense.

- Strengthen AI regulatory frameworks to prevent misuse.

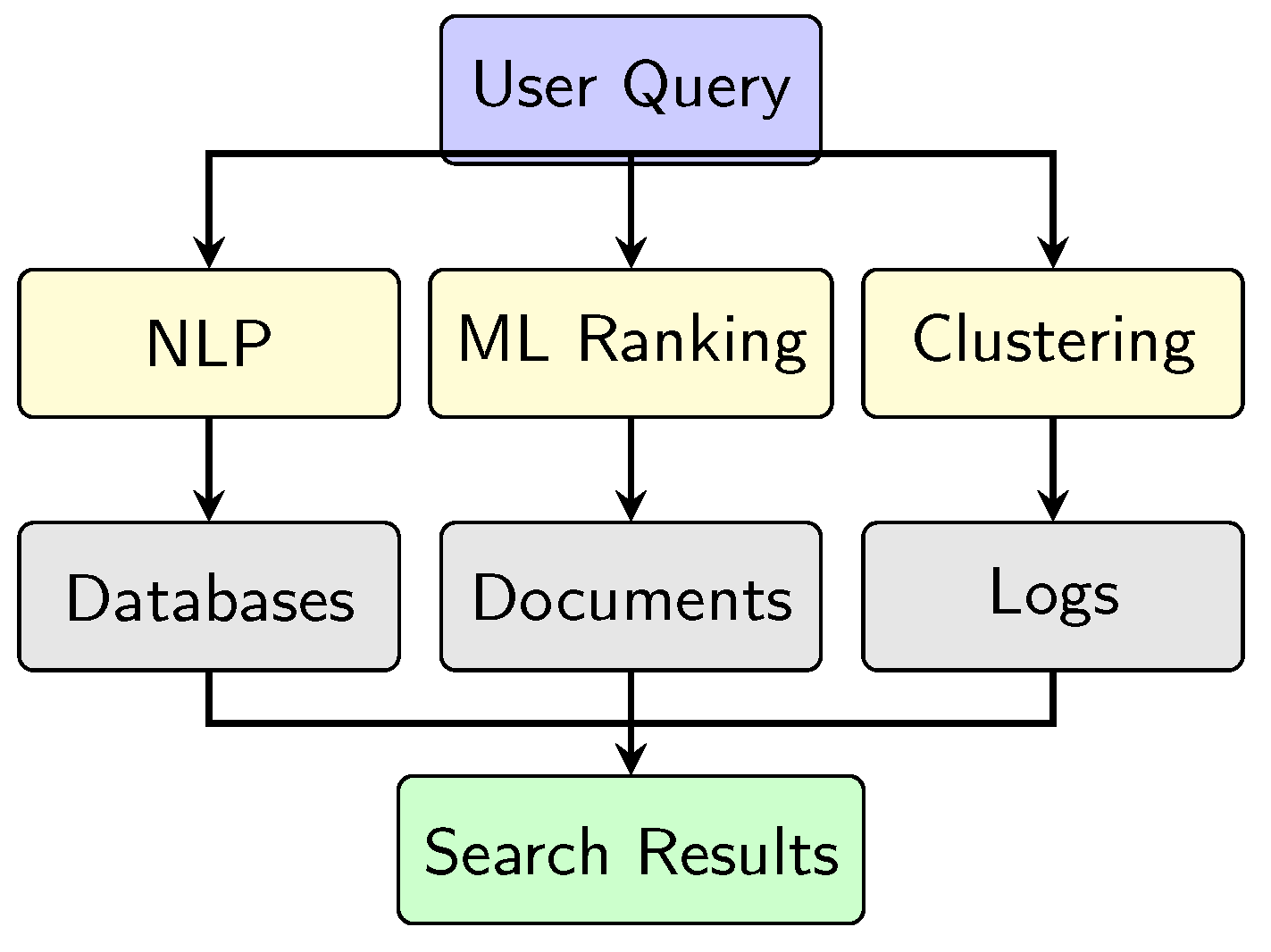

2.3. Enterprise AI and Intelligent Querying

2.3.1. Neural Retrieval Models for Enterprise Search

- Vector-based Search: AI transforms documents and queries into high-dimensional vectors, allowing similarity-based retrieval [15].

- Semantic Understanding: Neural networks analyze word relationships for better query matching.

- Personalized Query Results: AI learns user behavior and adapts search ranking based on relevance.

- Automated Knowledge Graphs: AI connects structured and unstructured data to enhance search context [16].
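Vector-based retrieval, as described in the list above, reduces to ranking documents by the similarity between their embedding and the query embedding. The sketch below uses cosine similarity over small hand-written vectors; in a real system the vectors would come from a neural encoder. The document identifiers, the vectors, and the function names are hypothetical and serve only to illustrate the ranking step.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query: Sequence[float], corpus: Dict[str, Sequence[float]],
          k: int) -> List[Tuple[str, float]]:
    """Return the k document ids most similar to the query, best match first."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in corpus.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical 2-dimensional embeddings standing in for encoder output.
corpus = {
    "hr_policy": [1.0, 0.0],
    "network_runbook": [0.0, 1.0],
    "onboarding_guide": [1.0, 1.0],
}
query_embedding = [1.0, 0.1]
results = top_k(query_embedding, corpus, k=2)
```

An exhaustive scan like this is fine for a handful of documents; enterprise-scale collections typically rely on an approximate nearest-neighbor index instead.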

2.3.2. AI-powered Intelligent Querying: ENRIQ Framework

- Contextual Search Engine: Utilizes semantic embeddings to enhance search result relevance.

- Multi-Modal Search: Supports diverse query types, including text, images, and documents.

- Automated Document Tagging: Implements AI classifiers to categorize and index enterprise knowledge.

- Natural Language Querying: Enables conversational query processing for improved user experience.

2.3.3. Challenges and Future Directions

- Data Silos and Integration Issues: Enterprise data is often fragmented across multiple repositories.

- Bias in AI Search Algorithms: AI search models can inherit biases, affecting the fairness of search results.

- Privacy and Compliance: AI-powered enterprise search must adhere to data protection regulations (GDPR, HIPAA).

- Developing explainable AI search models for improved transparency.

- Integrating blockchain-based enterprise data validation.

- Enhancing AI-powered document summarization for real-time insights.

3. AI Tools and Frameworks

3.1. Transformer Models and Neural Networks

- BERT (Bidirectional Encoder Representations from Transformers) – Enables bidirectional language understanding, improving search engines and chatbots [18].

- GPT (Generative Pre-trained Transformer) – Powers AI text generation and conversational AI models such as ChatGPT [19].

- T5 (Text-to-Text Transfer Transformer) – Unifies NLP tasks using a single model trained for multiple text-based applications.
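All three model families above build on the transformer's self-attention operation, in which each token's output is a weighted mix of every token's value vector: softmax(QKᵀ / √d_k) V. The following is a minimal single-head sketch in plain Python, without learned projections, masking, or batching; the function names and the toy inputs are assumptions for illustration, not part of any particular library.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries: Matrix, keys: Matrix, values: Matrix) -> Matrix:
    """Compute softmax(Q K^T / sqrt(d_k)) V, one output row per query."""
    d_k = len(keys[0])
    outputs: Matrix = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Weighted sum of the value vectors.
        d_v = len(values[0])
        outputs.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(d_v)])
    return outputs


# Toy example: a zero query attends equally to both keys,
# so the output is the plain average of the two value vectors.
attended = scaled_dot_product_attention(
    queries=[[0.0, 0.0]],
    keys=[[1.0, 0.0], [0.0, 1.0]],
    values=[[1.0, 2.0], [3.0, 4.0]],
)
```

Because every query is compared with every key, each token can draw on context from the whole input at once, which is what lets these models capture long-range dependencies.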

3.2. AI for Cybersecurity

- Intrusion Detection Systems (IDS): AI detects unauthorized access patterns in network traffic.

- Threat Intelligence: AI identifies malware patterns using deep learning.

- Behavioral Analysis: AI monitors user behavior to detect insider threats.

- Fraud Detection: AI models analyze transaction anomalies for financial security.

4. Explainable AI (XAI) and Challenges

4.1. Model Interpretability Techniques

4.1.1. Challenges in Explainable AI

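- Trade-off Between Accuracy and Interpretability: Simpler models (e.g., linear models or shallow decision trees) are generally easier to explain but often less accurate on complex tasks than deep networks.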

- Lack of Standardization: XAI lacks universal evaluation metrics.

- Bias in Interpretability Methods: Some methods introduce biases while approximating explanations.

4.2. AI Bias and Fairness

4.2.1. Sources of AI Bias

- Data Bias: Training datasets may contain historical prejudices, leading AI models to replicate discriminatory patterns [23].

- Algorithmic Bias: Model architecture and learning algorithms may amplify pre-existing disparities.

- Representation Bias: Underrepresentation of certain demographics in training data can lead to skewed model predictions.

- Evaluation Bias: AI models trained and evaluated on biased benchmarks may produce systematic errors in real-world deployment.

4.2.2. Fairness-Aware AI Techniques

- Preprocessing Techniques: Adjust training data distribution to balance underrepresented groups [24].

- Fairness Constraints in Model Training: Introduce fairness-aware loss functions that minimize disparities across demographic groups.

- Post-hoc Bias Mitigation: Adjust model outputs after training (e.g., by reweighting scores or applying group-specific decision thresholds) to equalize predictions across different user categories.

- Explainability for Bias Detection: Employ SHAP and LIME methods to detect and interpret model bias [21].
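Two of the ideas in the list above, rebalancing the training data and measuring disparities across groups, can be made concrete with a short sketch. Inverse-frequency sample weights are used here as one simple preprocessing choice, and the gap in positive-prediction rates between groups (often called the demographic parity difference) as one simple disparity measure. Neither is claimed to be the specific method of the cited works; the function names and the toy data are assumptions for illustration.

```python
from collections import Counter, defaultdict
from typing import Dict, Hashable, List, Sequence


def group_balancing_weights(groups: Sequence[Hashable]) -> List[float]:
    """Per-sample weights so every group contributes equal total weight.

    weight = n / (number_of_groups * size_of_sample's_group); weights sum to n.
    """
    counts = Counter(groups)
    n, num_groups = len(groups), len(counts)
    return [n / (num_groups * counts[g]) for g in groups]


def demographic_parity_gap(predictions: Sequence[int],
                           groups: Sequence[Hashable]) -> float:
    """Largest difference in positive-prediction rate between any two groups."""
    positives: Dict[Hashable, int] = defaultdict(int)
    totals: Dict[Hashable, int] = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += 1 if pred == 1 else 0
    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates)


# Hypothetical data: group "b" is underrepresented and never predicted positive.
sample_groups = ["a", "a", "a", "b"]
sample_predictions = [1, 1, 0, 0]

weights = group_balancing_weights(sample_groups)
gap = demographic_parity_gap(sample_predictions, sample_groups)
```

A gap of zero means all groups receive positive predictions at the same rate; fairness-aware loss functions of the kind mentioned above can be viewed as penalizing a quantity like this during training.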

4.2.3. Challenges in AI Fairness

- Trade-off Between Accuracy and Fairness: Reducing bias may impact model performance.

- Lack of Diverse Datasets: Many AI datasets lack sufficient representation of minority groups.

- Regulatory and Ethical Considerations: Compliance with AI ethics guidelines and legal frameworks remains an ongoing challenge.

4.2.4. Future Directions in Fair AI

- Developing more inclusive and representative datasets for AI model training.

- Enhancing explainable fairness metrics to assess bias impacts in real-world AI applications.

- Establishing stronger AI governance policies to regulate fairness in high-stakes domains like hiring and healthcare [6].

5. Conclusion and Future Directions

Acknowledgments

References

- Kamatala, S.; Bura, C.; Jonnalagadda, A.K. Unveiling Customer Sentiments: Advanced Machine Learning Techniques for Analyzing Reviews. Iconic Research And Engineering Journals 2025. https://www.irejournals.com/paper-details/1707104.

- Kamatala, S.; Jonnalagadda, A.K.; Naayini, P. Transformers Beyond NLP: Expanding Horizons in Machine Learning. Iconic Research And Engineering Journals 2025, 8. https://www.irejournals.com/paper-details/1706957. [CrossRef]

- Myakala, P.K. Adversarial Robustness in Transfer Learning Models. Iconic Research And Engineering Journals 2022, 6.

- Myakala, P.K.; Jonnalagadda, A.K.; Bura, C. The Human Factor in Explainable AI Frameworks for User Trust and Cognitive Alignment. International Advanced Research Journal in Science, Engineering and Technology 2025, 12. [CrossRef]

- Myakala, P.K.; Kamatala, S. Scalable Decentralized Multi-Agent Federated Reinforcement Learning: Challenges and Advances. International Journal of Electrical, Electronics and Computers 2023, 8. [CrossRef]

- Jobin, A.; Ienca, M.; Vayena, E. The Global Landscape of AI Ethics Guidelines. Nature Machine Intelligence 2019. [CrossRef]

- McMahan, B.; et al. Communication-Efficient Learning of Deep Networks from Decentralized Data. AISTATS, 2017.

- Kairouz, P.; et al. Advances and Open Problems in Federated Learning. Foundations and Trends in Machine Learning 2021.

- Yang, Q.; Liu, Y.; Chen, T.; Tong, Y. Federated Machine Learning: Concept and Applications. ACM Transactions on Intelligent Systems and Technology 2019, 10, 1–19. [CrossRef]

- Myakala, P.K.; Jonnalagadda, A.K.; Bura, C. Federated Learning and Data Privacy: A Review of Challenges and Opportunities. International Journal of Research Publication and Reviews 2024, 5. [CrossRef]

- Shokri, R.; Shmatikov, V. Privacy-Preserving Deep Learning. Proc. 22nd ACM CCS, 2015.

- Chiranjeevi, B. Generative AI in Learning. REDAY J. AI Comput. Sci. 2025.

- Bura, C. ENRIQ: Enterprise Neural Retrieval and Intelligent Querying. REDAY - Journal of Artificial Intelligence & Computational Science 2025. [CrossRef]

- et al., R.N. Document Ranking with BERT. Information Retrieval Journal 2020.

- Karpukhin, V.; et al. Dense Passage Retrieval for Open-Domain Question Answering. EMNLP, 2020.

- et al., A.G. Knowledge Graph Augmented Neural Search. ACM SIGIR, 2021.

- Vaswani, A.; et al. Attention Is All You Need. NeurIPS, 2017.

- Devlin, J.; et al. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. NAACL, 2019.

- Brown, T.B.; et al. Language Models Are Few-Shot Learners. NeurIPS, 2020.

- Naayini, P.; Myakala, P.K.; Bura, C. How AI is Reshaping the Cybersecurity Landscape. Available at SSRN 5138207 2025.

- Lundberg, S.; Lee, S.-I. A Unified Approach to Interpreting Model Predictions. NeurIPS, 2017.

- Ribeiro, M.; et al. "Why Should I Trust You?": Explaining the Predictions of Any Classifier. KDD, 2016.

- Mehrabi, N.; et al. A Survey on Bias and Fairness in Machine Learning. ACM CSUR 2021.

- Zemel, R.; et al. Learning Fair Representations. ICML, 2013.

| Component | Description |
|---|---|
| User Query | Input query submitted by the user for search processing. |
| Natural Language Processing (NLP) | Interprets and processes the query to understand intent and context. |
| Vector Search | Uses dense embeddings to retrieve semantically relevant results. |
| Knowledge Graph | Incorporates structured relationships to enhance search accuracy. |
| Optimized Results | Ranked and refined search results presented to the user. |

| Component | Description |
|---|---|
| Input Tokens | Encoded word representations fed into the model for processing. |
| Self-Attention Layer | Captures contextual relationships by attending to all input tokens simultaneously. |
| Feedforward Layer | Applies non-linearity and transformation to enhance feature representation. |
| Output Predictions | Generates final results based on learned contextual dependencies. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).