Submitted: 13 February 2025

Posted: 21 February 2025


Abstract

Keywords:

1. Introduction

- Developed using NLP and machine learning models trained on big data.

- Rich-media inputs and outputs: text, audio, and images.

- Text-to-speech and speech-to-text functions.

- Text generation, synthesis, and analysis capabilities.

- Uncertainty and non-determinism in response generation.

- Understanding of selected languages and diverse questions, and generation of responses with diverse linguistic skills.

These special features raise many issues and challenges for the quality testing and evaluation of intelligent chat systems such as chatbots.

- Application of AI-based 3-dimensional (3D) test modeling, providing a reference classification test model for chatbot systems.

- Discussion of various test generation approaches for smart chatbot systems.

- Use of AI to support test augmentation (positive and negative) for chatbots.

- An AI-based approach to test result validation and test adequacy for smart chatbot systems.

- AI test results and analysis for a specific mobile app, Wysa.

2. Literature Review

3. Understanding of Testing Intelligent Chatbot Systems

3.1. Testing Approach

- Conventional Testing Methods - Using conventional software testing methods to validate a given smart chatbot or application, online or on mobile devices/emulators. These include scenario-based testing, boundary value testing, decision table testing, category partition testing, and equivalence partition testing.

- Crowd-Sourced Testing - Using crowd-sourced (freelance) testers to perform user-oriented testing of given smart chatbots and systems. These testers usually follow ad hoc approaches, validating the systems as users would.

- Smart Chat Model-Based Testing - Using model-based approaches to build smart chat models that enable test case and data generation and support test automation and coverage analysis.

- AI-Based Testing - Using AI techniques and machine learning models to develop AI-based solutions that optimize the quality testing process and test automation for smart chatbot systems.

- NLP/ML Model-Based Testing - Using white-box or gray-box approaches to discover, derive, and generate test cases and data focusing on diverse ML models, related structures, and coverage.

- Rule-Based Testing - Using predefined rules to generate tests and data for intelligent chat systems. While effective for traditional rule-based chat systems, this approach faces numerous challenges with modern intelligent chat systems because of their unique characteristics and the complexity introduced by NLP-based machine learning models and big data-driven training.

- System Testing - In this strategy, system-level quality of service (QoS) parameters are chosen, assessed, and tested using clearly defined evaluation metrics, with the aim of quantitatively validating both system-level functions and AI-powered features. Common system QoS factors include reliability, availability, performance, security, scalability, speed, correctness, efficiency, and user satisfaction. AI-specific QoS parameters typically include accuracy, consistency, and relevance, as well as loss and failure rates.

- Learning-Based Testing - In this method, the activities of human testers are monitored, and their test cases, data, and operational patterns are collected, analyzed, and learned from to understand how they design tests and data for a specific smart chat system. Any bugs they uncover can also be learned from and reused as future test cases.

- Metamorphic Testing - Metamorphic testing (MT) is a property-based software testing technique, which can be an effective approach for addressing the test oracle problem and test case generation problem.
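As an illustration of the metamorphic testing idea above, the following Python sketch checks one metamorphic relation for a chatbot: meaning-preserving changes to a user message (upper-casing, extra spaces, extra punctuation) should not change the intent the chatbot assigns to it. The classifier `toy_intent_classifier` and the transformations in `TRANSFORMS` are hypothetical stand-ins, not part of any real chatbot; in practice the `classify` function would wrap calls to the system under test.

```python
from typing import Callable, Dict, List, Tuple


def toy_intent_classifier(message: str) -> str:
    """Hypothetical stand-in for the chatbot under test (keyword-based intent labels)."""
    text = message.lower()
    if any(word in text for word in ("sad", "down", "unhappy")):
        return "low_mood"
    if any(word in text for word in ("anxious", "worried", "nervous")):
        return "anxiety"
    return "other"


# Follow-up input generators, each assumed to preserve the meaning of the source input.
# Metamorphic relation: intent(source) must equal intent(transform(source)).
TRANSFORMS: Dict[str, Callable[[str], str]] = {
    "uppercase": str.upper,
    "extra_spaces": lambda s: "  " + s.replace(" ", "  ") + "  ",
    "trailing_punctuation": lambda s: s + "!!",
}


def check_metamorphic_relation(
    classify: Callable[[str], str],
    source_inputs: List[str],
    transforms: Dict[str, Callable[[str], str]],
) -> List[Tuple[str, str, str, str]]:
    """Return (source, transform name, source intent, follow-up intent) for each violation."""
    violations = []
    for source in source_inputs:
        # No expected answer is needed: the source output serves as the reference.
        source_intent = classify(source)
        for name, transform in transforms.items():
            follow_up_intent = classify(transform(source))
            if follow_up_intent != source_intent:
                violations.append((source, name, source_intent, follow_up_intent))
    return violations


messages = ["I am sad today", "I feel anxious about work", "What is the weather?"]
print(check_metamorphic_relation(toy_intent_classifier, messages, TRANSFORMS))  # []
```

A case-sensitive classifier would fail the `uppercase` relation, which is the kind of defect this check can expose without a hand-written expected output for every input.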

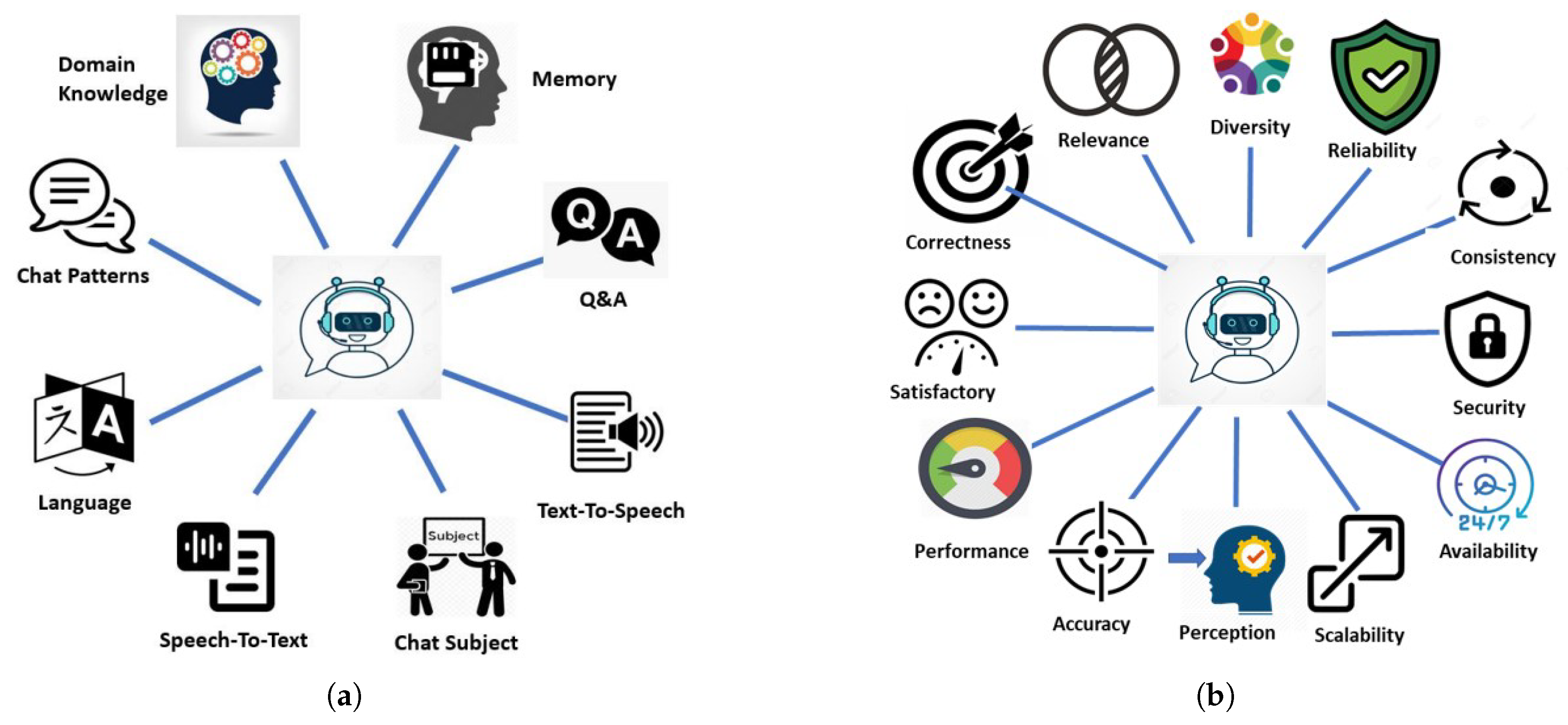

3.2. Testing Scope

- Domain Knowledge - Validate how well a smart chatbot system has been trained on selected domain knowledge and how well it demonstrates that knowledge, at different levels, during its chats and communication with clients.

- Chatflow Patterns - Validate a smart chatbot system to see how well it is capable of carrying out chats in diverse chatting flow patterns.

- Chat Memory - Validate the chatbot’s memory of clients’ profiles, cases, chat history, and so on.

- Q&A - Validate the chatbot’s Q&A intelligence: how well the smart chat system under test understands clients’ questions and responses and answers them as a human agent would.

- Chat Subject - Validate the chatbot’s intelligence in subject-based chatting: how well the chatbot system under test can chat with clients on selected subjects.

- Language - Validate a smart chatbot’s language skills in communicating with clients in a selected natural language.

- Text-to-speech - Check how correctly the chatbot system under test converts text to speech.

- Speech-to-text - Check how correctly the chatbot system under test converts speech audio to text.

- Accuracy - Assess system accuracy based on well-defined test models and accuracy metrics, to make sure that the system accurately processes and understands NLP and rich-media inputs and generates accurate chat responses across a variety of subject contents, languages, and linguistic styles, using a range of tests with varied chat patterns and flows.

- Consistency - Evaluate system consistency based on well-defined test models and consistency metrics, to ensure that the system consistently processes and understands a wide range of NLP and rich-media inputs and client attention, and generates consistent chat responses across a variety of subject contents, languages, and linguistic styles, using a variety of tests with different chat patterns and flows.

- Relevance - Assess system relevance using established test models and relevance metrics. This ensures the system’s capability to comprehend and process a wide array of NLP and multimedia inputs, identify pertinent subjects and concerns, and produce appropriate chat responses across diverse domains, subjects, languages, and linguistic variations through varied testing methodologies.

- Correctness - Assess how correctly a chat system processes and comprehends NLP and/or rich-media inputs and how correctly it replies on domain subjects, contents, and language linguistics, using well-defined test models and metrics.

- Availability - Ensure system availability based on clearly defined parameters at various levels such as the underlying cloud infrastructure, the enabling platform environment, the targeted chat application SaaS, and user-oriented chat SaaS.

- Security - Assess the security of the system by utilizing specific security metrics to examine the chat system’s security from various angles. This includes scrutinizing its cloud infrastructure, platform environment, client application SaaS, user authentication methods, and end-to-end chat session security.

- Reliability - Assess the reliability of the system by employing established reliability metrics at various tiers. This encompasses evaluating the reliability of the underlying cloud infrastructure, the deployed and hosted platform environment, and the chat application SaaS.

- Scalability - Evaluate system scalability based on well-defined scalability metrics from different perspectives, including the deployed cloud-based infrastructure, the hosted platform, the intelligent chat application, large-scale chat data volumes, and large-scale user access.

- User Satisfaction - Assess user satisfaction with the system by employing well-defined metrics from various angles. This includes analyzing user reviews, rankings, chat interactions, session success rates, and goal completion rates.

- Linguistic Diversity - Assess the linguistic diversity of the intelligent chat system in its ability to support and process various linguistic inputs, including diverse vocabularies, idioms, phrases, and different types of client questions and responses. Additionally, evaluate the linguistic diversity of the responses it generates, considering domain content, subject matter, language syntax, semantics, and expression patterns.

- Performance - Assess the performance of the system using clearly defined metrics related to system and user response times, processing times for NLP-based and/or rich media inputs, and the time taken for generating chat responses.

- Perception and Understanding - Assess the system’s perception and comprehension of diverse inputs and rich media content from its clients using established test models and perception metrics.
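Several of the scope items above (accuracy, consistency, performance) are assessed with quantitative metrics. The sketch below shows one simple way such metrics could be computed from logged test runs. The `ChatTestRun` record, the majority-agreement definition of consistency, and the nearest-rank 95th-percentile latency are illustrative assumptions, not metrics defined in this paper.

```python
import math
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ChatTestRun:
    """One execution of a test input against the chatbot (hypothetical record format)."""
    test_id: str       # identifies the test input; repeated runs share an id
    response: str      # normalized chatbot response
    passed: bool       # verdict from the result validator / test oracle
    latency_ms: float  # end-to-end response time in milliseconds


def accuracy(runs: List[ChatTestRun]) -> float:
    """Fraction of all runs judged correct."""
    return sum(r.passed for r in runs) / len(runs)


def consistency(runs: List[ChatTestRun]) -> float:
    """Mean, over test ids, of the share of runs that give the most common response."""
    responses_by_test: Dict[str, List[str]] = {}
    for r in runs:
        responses_by_test.setdefault(r.test_id, []).append(r.response)
    scores = []
    for responses in responses_by_test.values():
        top_count = Counter(responses).most_common(1)[0][1]
        scores.append(top_count / len(responses))
    return sum(scores) / len(scores)


def percentile_latency(runs: List[ChatTestRun], pct: float = 95.0) -> float:
    """Nearest-rank percentile of response latency in milliseconds."""
    latencies = sorted(r.latency_ms for r in runs)
    rank = max(1, math.ceil(pct / 100 * len(latencies)))
    return latencies[rank - 1]


runs = [
    ChatTestRun("greet", "hello", True, 120.0),
    ChatTestRun("greet", "hello", True, 140.0),
    ChatTestRun("greet", "hi there", False, 300.0),
    ChatTestRun("mood", "sorry to hear that", True, 200.0),
]
print(accuracy(runs), consistency(runs), percentile_latency(runs))
```

Repeating each test input several times is what makes the consistency score meaningful for a non-deterministic chatbot; a single run per input would always score 1.0.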

4. AI Test Modeling for Intelligent Chatbot Systems

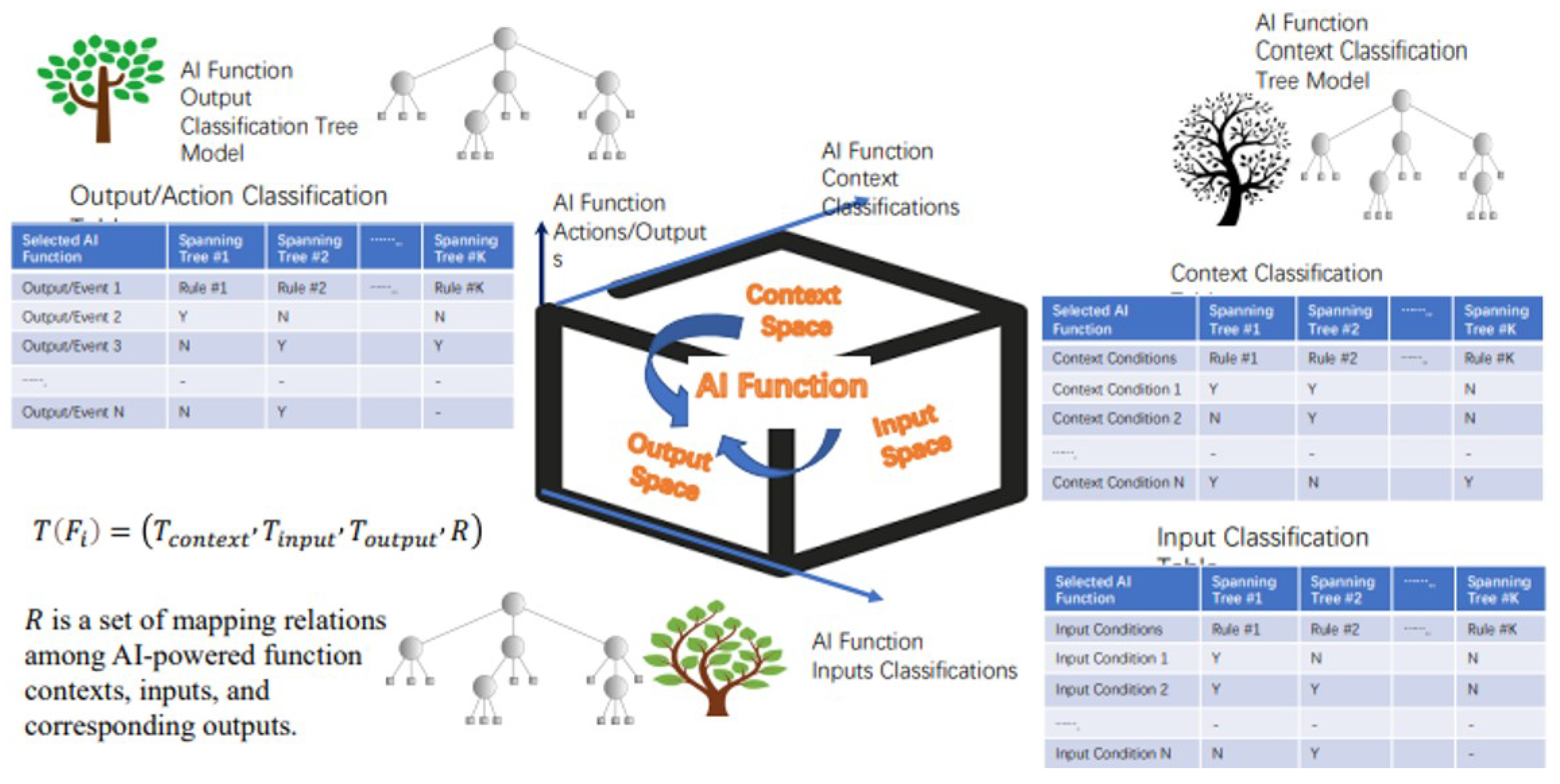

4.1. AI Test Modeling and Analysis

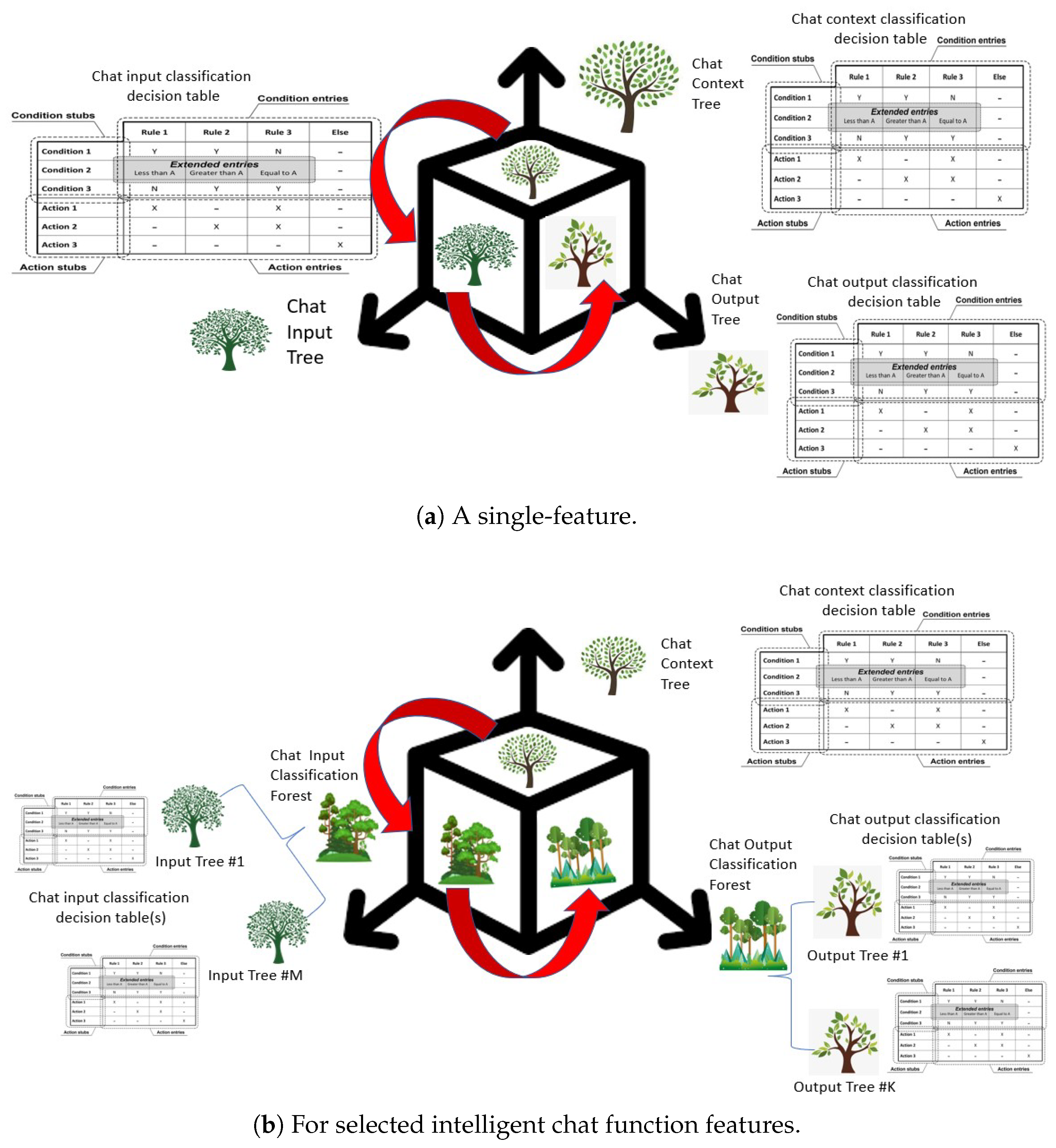

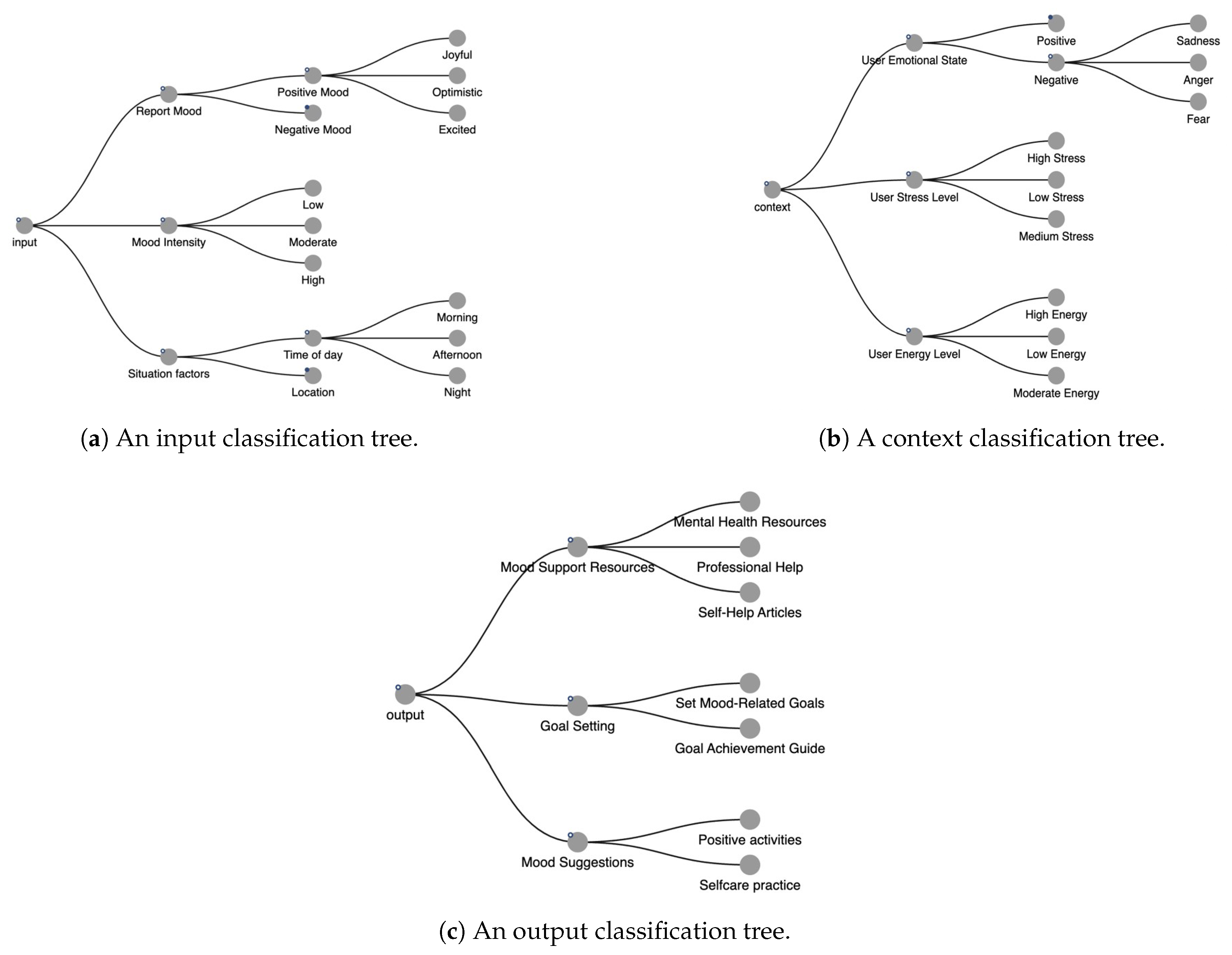

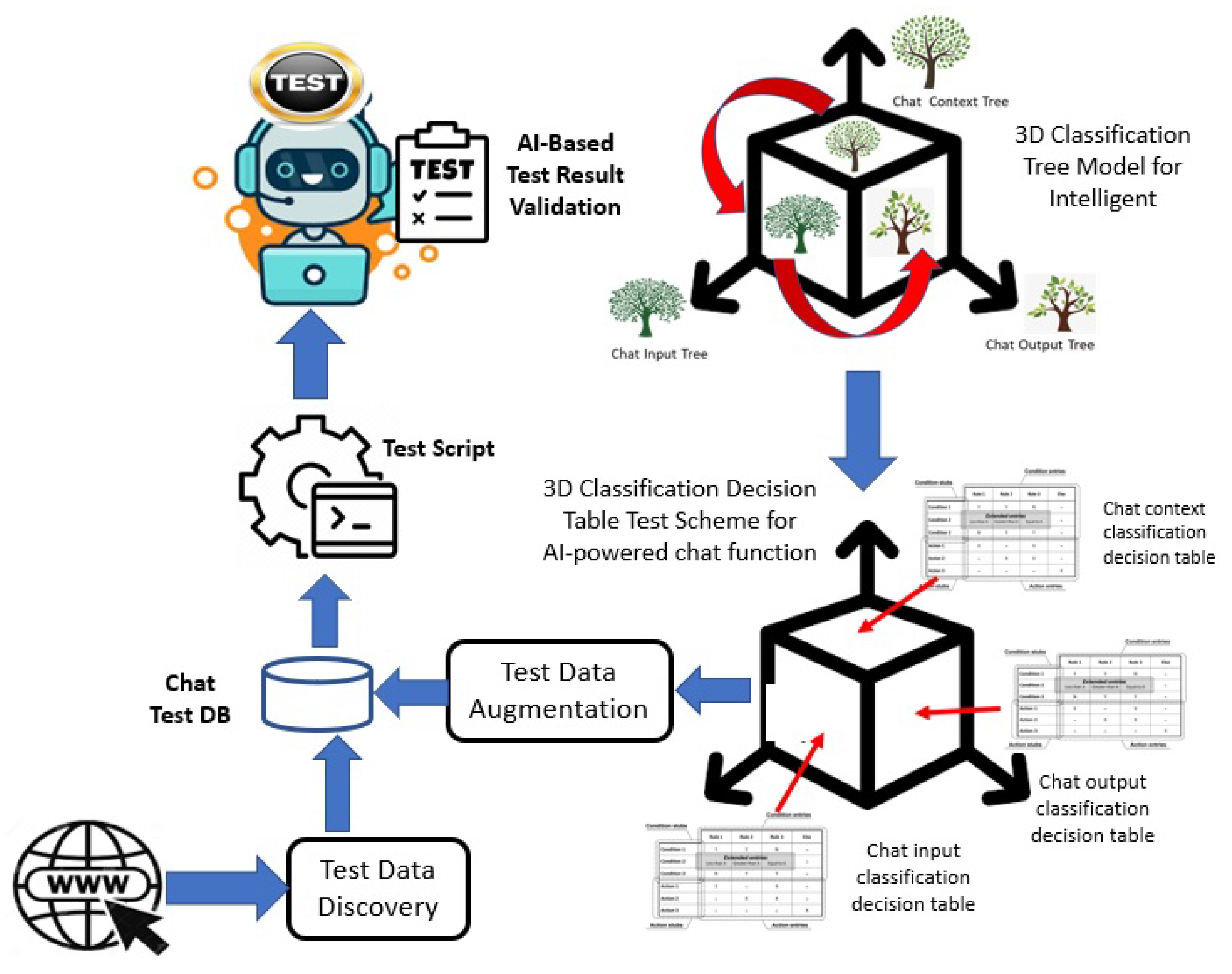

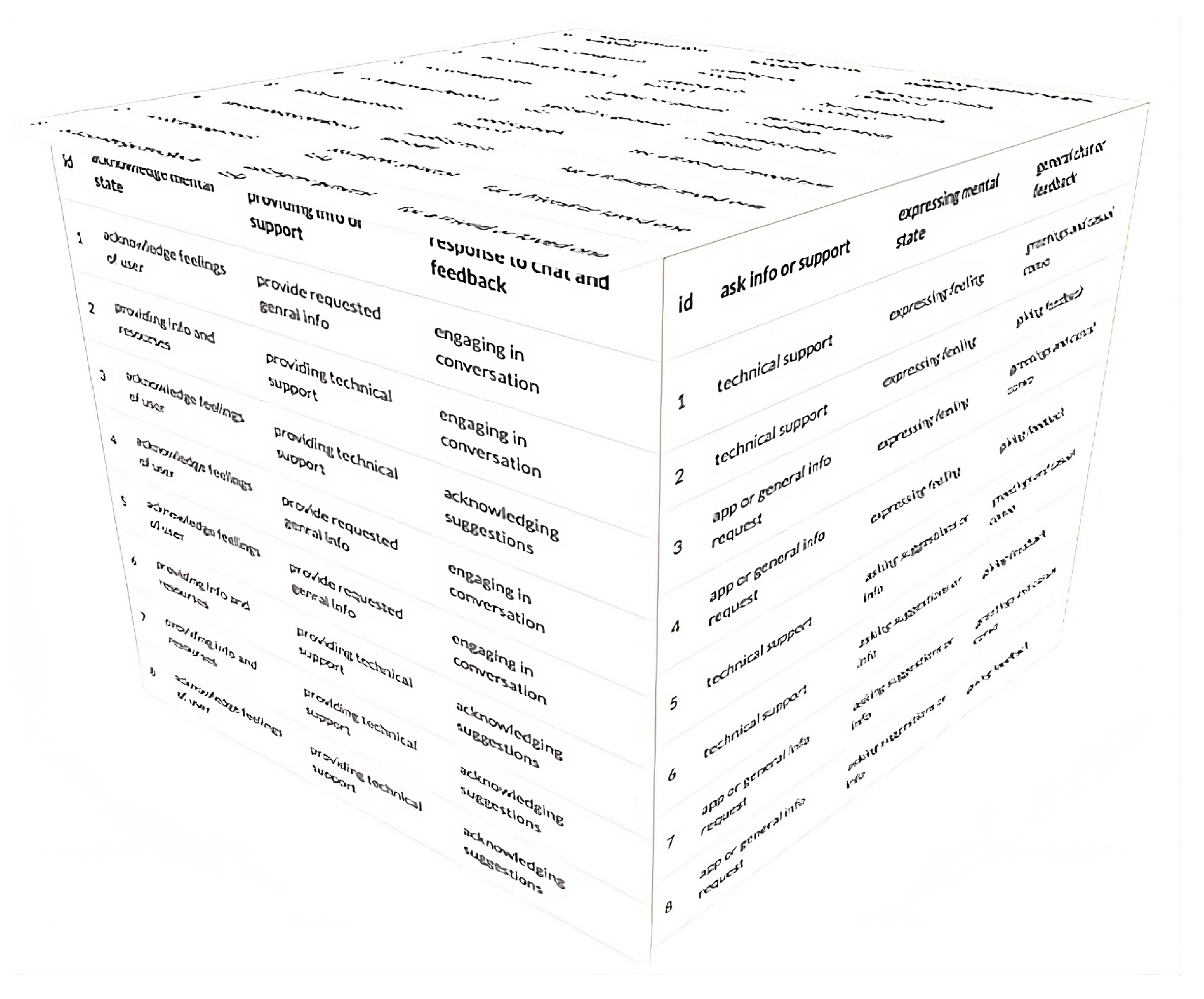

- 3D Intelligent Chat Test Modeling Process I - In this process, for the selected intelligent chat function feature, set up a 3D classification tree model in 3 steps. 1) Define an intelligent chat input context classification tree model to represent diverse context classifications. 2) Define an intelligent chat input classification tree model to represent diverse chat input classifications. 3) Define an intelligent chat output classification tree model to represent diverse chat output classifications. Figure 3(a) shows the schematic diagram for a single-feature 3D classification tree model.

- 3D Intelligent Chat Test Modeling Process II - For the selected intelligent chat function features, set up a 3D classification forest tree model in 3 steps. 1) Define an intelligent chat input context classification tree model to represent diverse context classifications. 2) For each selected intelligent chat AI feature, define one intelligent chat input classification tree model to represent diverse chat input classifications. One input classification decision table is generated based on the defined input tree model. As a result, a chat input forest is created, and a set of input decision tables is generated. 3) For each selected intelligent chat AI feature, define one intelligent chat output classification tree model to represent diverse chat output classifications. One output classification decision table is generated based on the defined output tree model. As a result, a chat output forest is created, and a set of output decision tables is generated. Figure 3(b) shows the schematic diagram for the 3D classification forest tree model for selected features of intelligent chat functions.

- 3D Intelligent Chat Test Modeling Process III - For each selected intelligent chat function feature, set up a 3D classification tree model in 3 steps similar to those of Process I, and generate a corresponding 3D decision table. After the modeling process, a set of 3D tree models is derived and the related 3D decision tables are generated.
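The three modeling processes above all end in 3D decision tables whose elements combine one context class, one input class, and one output class. A minimal Python sketch of that step follows, assuming each classification tree has already been reduced to its leaf classes (labeled CT-x, IT-y, OT-z, as in Section 6.1) and that a table is the Cartesian product of those leaves, optionally filtered by a feasibility rule. The class labels and the `is_feasible` predicate are hypothetical; the authors' tooling may build the tables differently.

```python
from itertools import product
from typing import Callable, Dict, List, Sequence, Tuple

Element = Tuple[str, str, str]  # (context class, input class, output class)


def build_3d_decision_table(
    contexts: Sequence[str],
    inputs: Sequence[str],
    outputs: Sequence[str],
    is_feasible: Callable[[str, str, str], bool] = lambda c, i, o: True,
) -> List[Element]:
    """Combine leaf classes of the context, input, and output trees into 3D elements."""
    return [(c, i, o) for c, i, o in product(contexts, inputs, outputs) if is_feasible(c, i, o)]


def build_tables_per_feature(
    models: Dict[str, Tuple[Sequence[str], Sequence[str], Sequence[str]]]
) -> Dict[str, List[Element]]:
    """Process III style: one 3D decision table per selected chat feature."""
    return {feature: build_3d_decision_table(*trees) for feature, trees in models.items()}


# Hypothetical leaf classes for a single feature (Process I).
table = build_3d_decision_table(["CT-1", "CT-2"], ["IT-1", "IT-2", "IT-3"], ["OT-1", "OT-2"])
print(len(table), table[0])  # 12 ('CT-1', 'IT-1', 'OT-1')
```

The feasibility predicate is where domain rules would prune combinations that cannot occur, such as an output class that only makes sense in one context.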

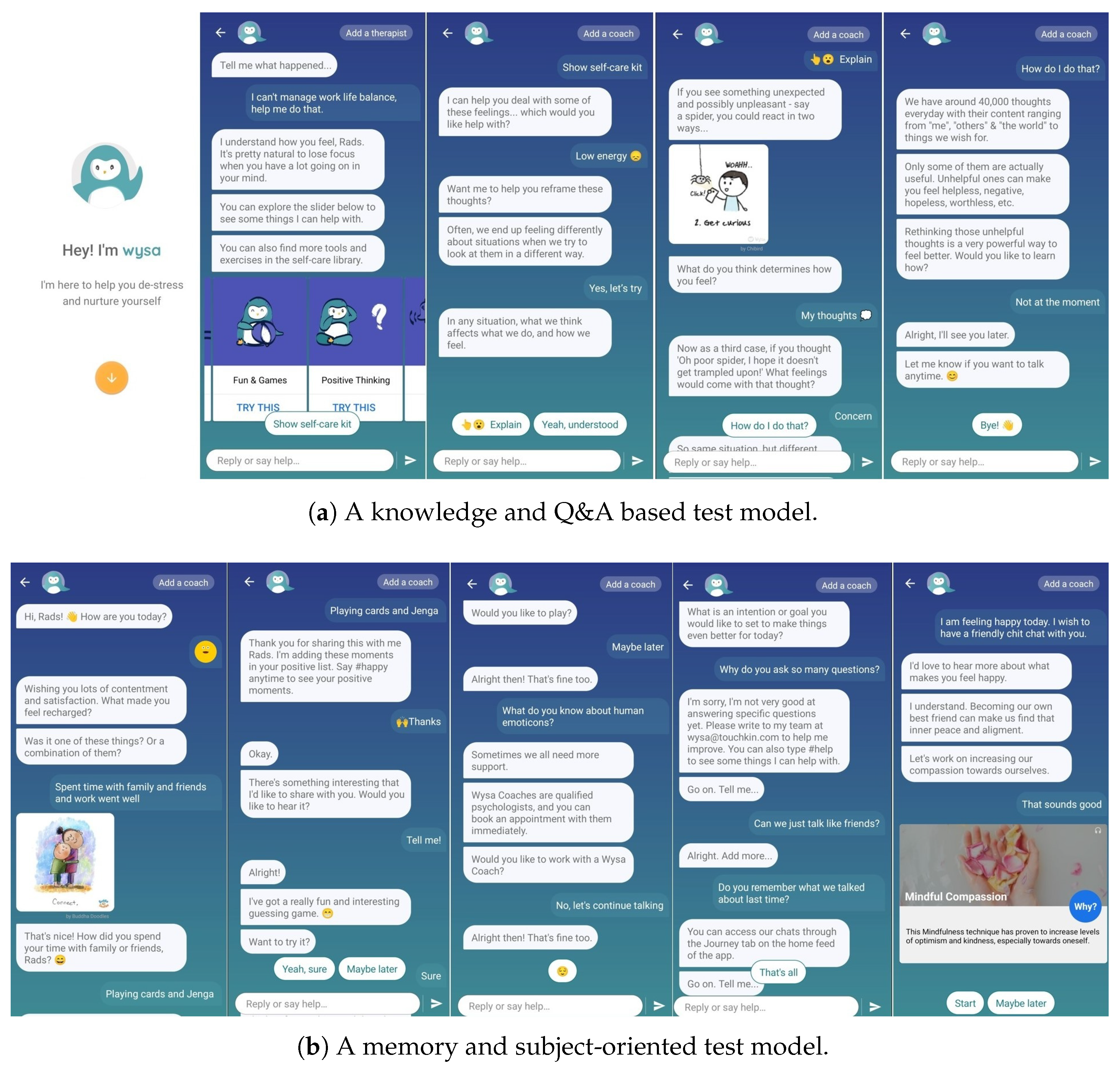

4.2. AI-Based Test Automation for Mobile Chatbot - WYSA

4.3. Classified Test Modeling for Intelligent Chat System

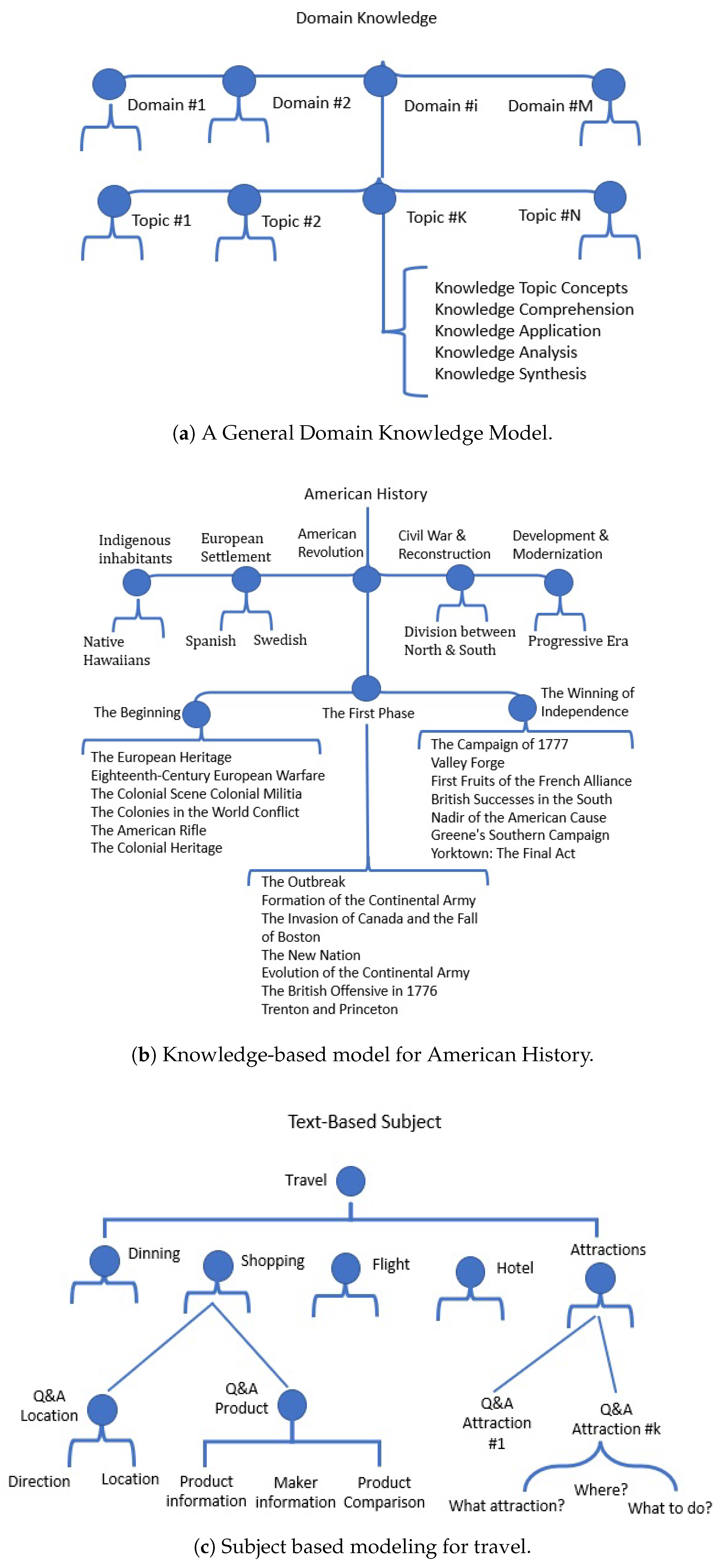

- Knowledge-based test modeling - This is useful to establish test models focusing on selected domain-specific knowledge of a given intelligent chat system to see how well it understands the received domain-specific questions and provides proper domain-related responses. For service-oriented intelligent chat systems, one could consider diverse knowledge of products, services, programs, and customers. Figure 6(a) shows a knowledge-based test model, and Figure 6(b) shows a specific example in which American history is the knowledge domain, with topics and subtopics as shown in the figure.

- Subject-oriented test modeling - This is useful to establish test models focusing on a selected conversation subject for a given intelligent chat system to see how well it understands the questions and provides proper responses on diverse selected subjects. Typical conversational subjects include travel, driving directions, sports, and so on. Figure 6(c) shows a detailed subject-based test model for travel and the typical queries a person has about it.

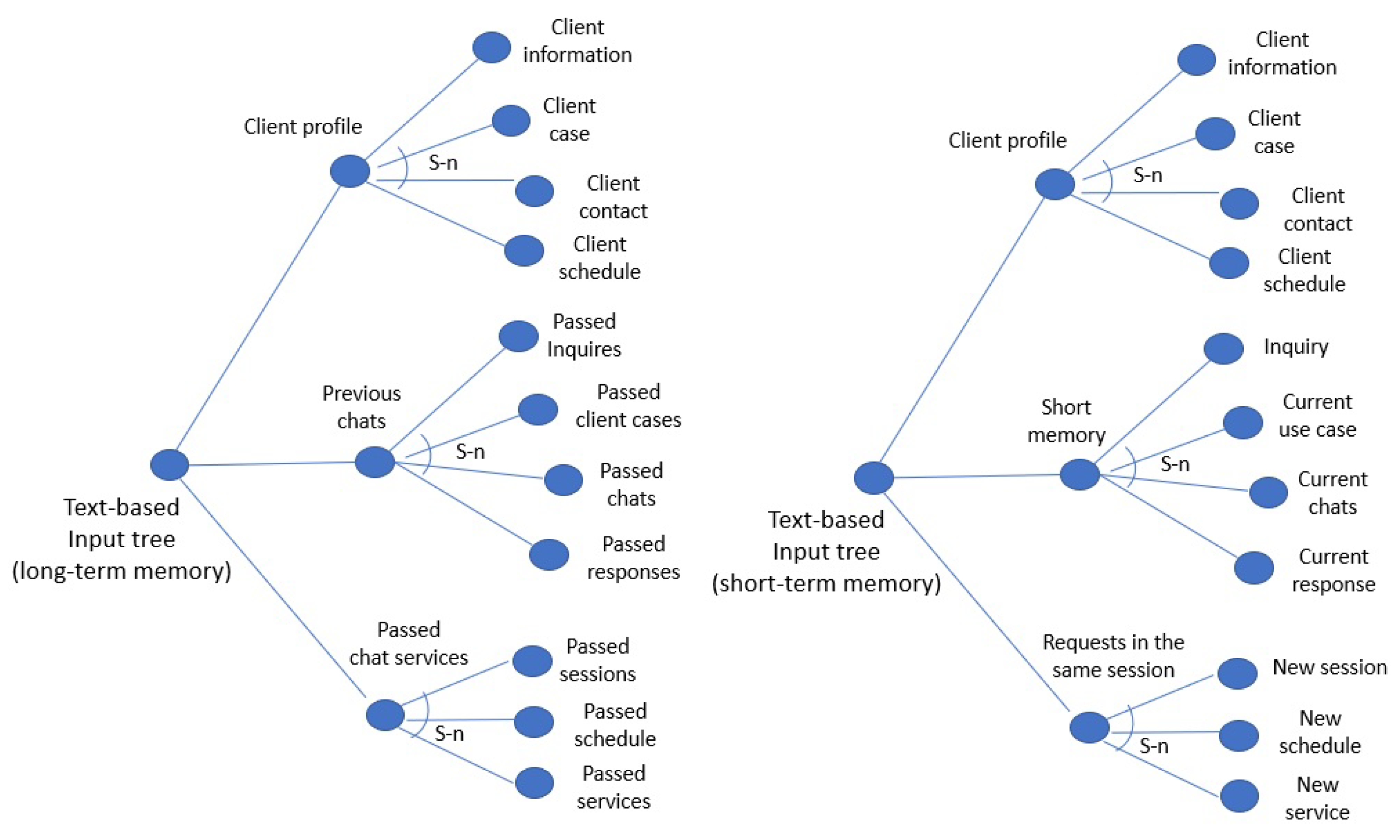

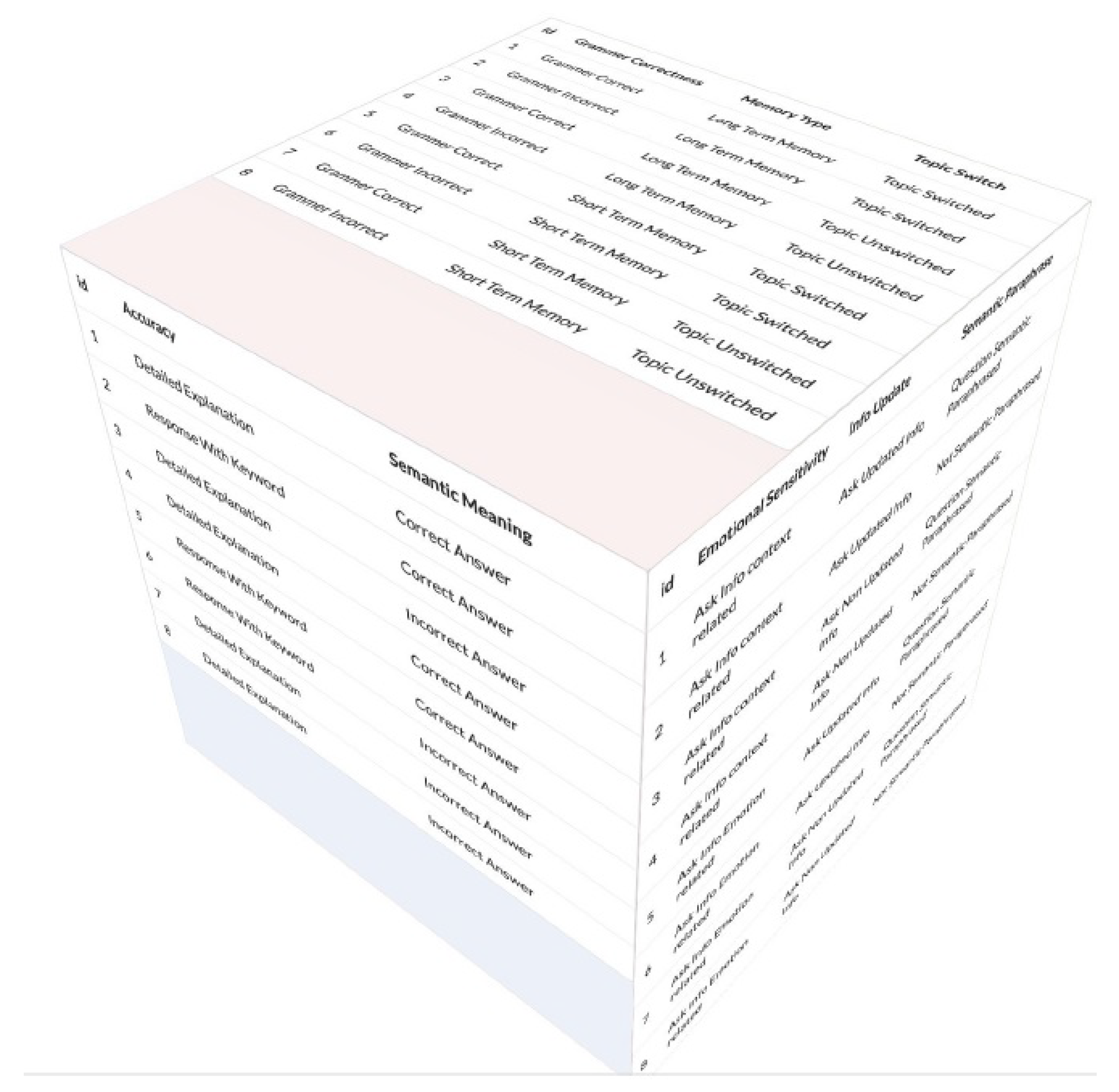

- Memory-oriented test modeling - This is useful to establish test models for validating the memory capability of a given intelligent chat system to see how well it remembers users’ profiles, questions, related chats, and interactions. Figure 7 shows the text-based input tree for short- and long-term memory.

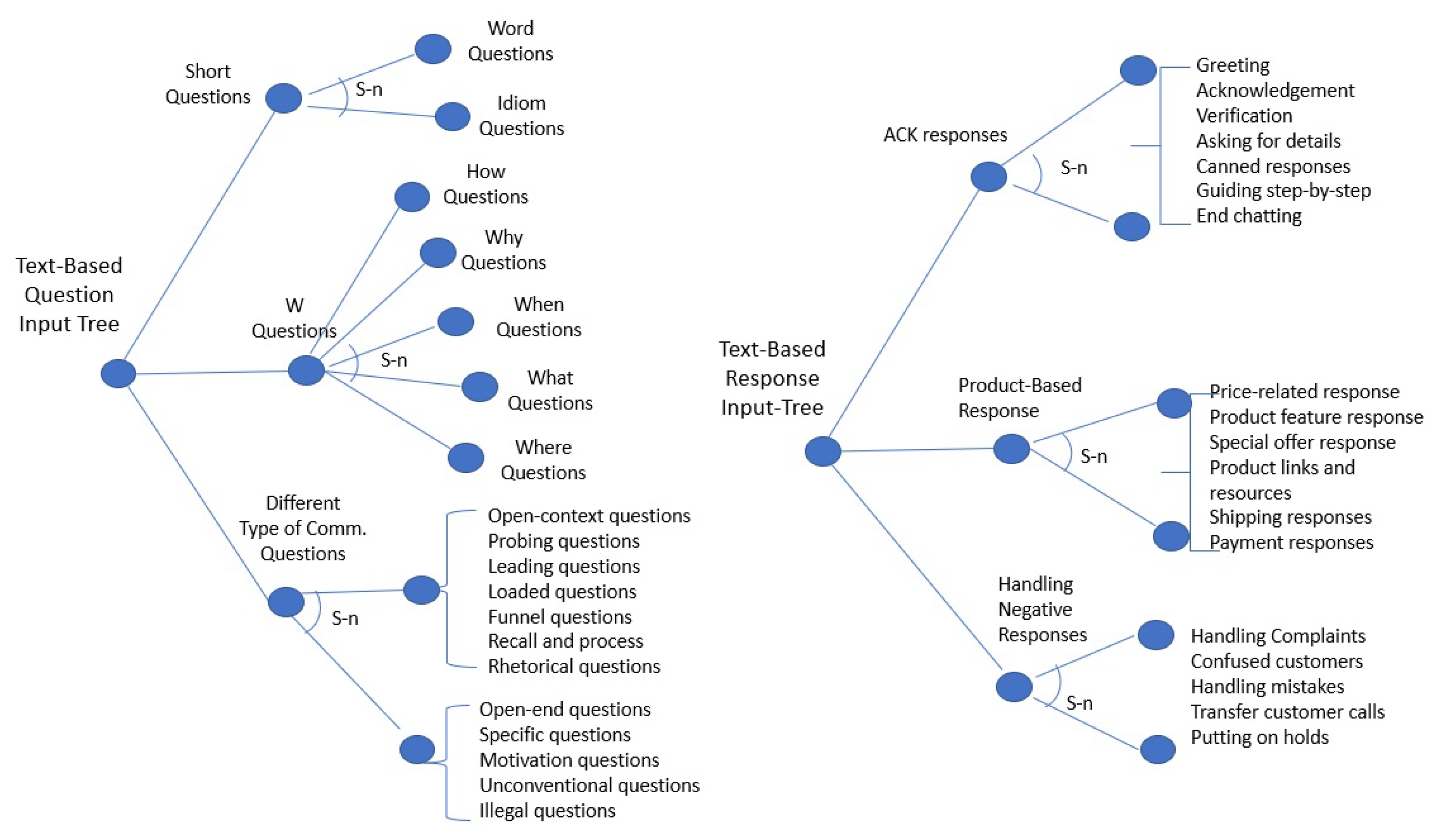

- Q&A pattern test modeling - This is useful to establish test models for validating the diverse question and answer capability of a given intelligent chat system to see how well it handles diverse questions from clients and generates different types of responses. Figure 8 shows the text-based input tree for the question and answer pattern.

- Chat pattern test modeling - This is useful to establish test models for validating diverse chat patterns for a given intelligent chat system to see how well it handles diverse chat patterns and interactive flows.

- Linguistics test modeling - This is useful to establish test models to validate the language skills and linguistic diversity of a given intelligent chat system. The four aspects of linguistics test modeling are diverse lexical items, different types of sentences, semantics, and syntax.
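Each classified test model above is a classification tree whose leaves become test categories. The sketch below stores a knowledge-domain tree as nested Python dictionaries (an empty dict marks a leaf) and enumerates root-to-leaf paths, each of which could then seed one or more test questions. The topic names are invented for illustration and are not the tree shown in Figure 6(b).

```python
from typing import Dict, List, Tuple

# Hypothetical knowledge-based test model; an empty dict marks a leaf class.
KNOWLEDGE_TREE: Dict[str, dict] = {
    "American history": {
        "Colonial era": {},
        "Revolutionary War": {"Causes": {}, "Key battles": {}},
        "Civil War": {"Causes": {}, "Reconstruction": {}},
    }
}


def leaf_paths(tree: Dict[str, dict], prefix: Tuple[str, ...] = ()) -> List[Tuple[str, ...]]:
    """Depth-first enumeration of root-to-leaf paths in a classification tree."""
    paths: List[Tuple[str, ...]] = []
    for name, children in tree.items():
        path = prefix + (name,)
        if children:
            paths.extend(leaf_paths(children, path))
        else:
            paths.append(path)
    return paths


for path in leaf_paths(KNOWLEDGE_TREE):
    # Each leaf path is one test category, e.g. questions about that subtopic.
    print(" > ".join(path))
```

The same structure works for the subject, memory, Q&A, chat pattern, and linguistics trees; only the node names change.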

5. Test Generation and Data Augmentation for Intelligent Chatbot Systems

5.1. Test Generation

- Conventional Test Generation - Conventional software testing methods are simple and easy to use for generating tests for a selected smart chatbot system, but they have certain limitations: (1) test results are difficult to validate systematically, (2) test coverage adequacy is hard to evaluate, and (3) generating chat input tests manually is costly.

- Random Test Generation - Using a random generator to select chatbot tests at random from a given chatbot test database as inputs for validating the smart chatbot system under test. Random text generation can also produce unique and engaging text for various applications, from creative writing to marketing content.

- Test Model Based Test Generation - A model-based approach that enables automatic test generation for each targeted AI-powered intelligent chat function or feature. It supports model-based test coverage analysis and complexity assessment, which is important because AI-based test data augmentation and result validation are needed. Figure 9 illustrates how test model-based test generation works.

- AI-Based Test Generation - Using AI techniques and machine learning models to generate augmented tests and test data for each selected test case. Two types of data are generated: 1) synthetic data, which is generated artificially without using real-world images; and 2) augmented data, which is derived from original images through minor geometric transformations (such as flipping, translation, rotation, or the addition of noise) to increase the diversity of the training set. This method also has its challenges, including: 1) the cost of quality assurance for the augmented datasets; 2) the research and development effort needed to build synthetic data for advanced applications; and 3) the persistence of the original data’s inherent bias in the augmented data.

- NLP/ML Model Test Generation - Using white-box or gray-box approaches to discover, derive, and generate test cases and data based on each underlying NLP/ML model to achieve model-based structures and coverage.
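The random test generation approach described above can be sketched as seeded sampling from a test database. In the Python example below, `TEST_DB` and its fields (`id`, `category`, `input`) are hypothetical. A fixed seed makes a random test run reproducible, which helps when a failure needs to be replayed, and sampling without replacement avoids selecting the same test twice.

```python
import random
from typing import Dict, List, Optional

# Hypothetical chatbot test database: each test has an id, a category, and a chat input.
TEST_DB: List[Dict[str, str]] = [
    {"id": "T1", "category": "greeting", "input": "Hi there"},
    {"id": "T2", "category": "greeting", "input": "Good morning"},
    {"id": "T3", "category": "mood", "input": "I am sad"},
    {"id": "T4", "category": "mood", "input": "I feel anxious today"},
    {"id": "T5", "category": "memory", "input": "Do you remember my name?"},
    {"id": "T6", "category": "mood", "input": "Nothing is going right"},
]


def select_random_tests(
    test_db: List[Dict[str, str]],
    n: int,
    seed: Optional[int] = None,
    category: Optional[str] = None,
) -> List[Dict[str, str]]:
    """Randomly pick n distinct tests, optionally restricted to one category."""
    pool = [t for t in test_db if category is None or t["category"] == category]
    if n > len(pool):
        raise ValueError(f"requested {n} tests but only {len(pool)} match")
    rng = random.Random(seed)  # a fixed seed makes the selection reproducible
    return rng.sample(pool, n)  # sampling without replacement: no duplicate tests


for test in select_random_tests(TEST_DB, 2, seed=42, category="mood"):
    print(test["id"], test["input"])
```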

5.2. Data Augmentation

6. AI-Based Test Result Validation and Adequacy Approaches for Smart Chatbot Systems

- Conventional Testing Oracle - A test oracle is a mechanism for checking whether a program’s output for a test case is correct. Conceptually, test cases are given both to the oracle and to the program under test, and the outputs of the two are compared to determine whether the program behaves correctly for those test cases. Ideally, an automated oracle that always gives the correct answer is required. Often, however, oracles are human beings who calculate the expected output manually. Consequently, when the program’s output and the oracle’s result disagree, we must verify the result produced by the oracle before declaring that the program has a defect.

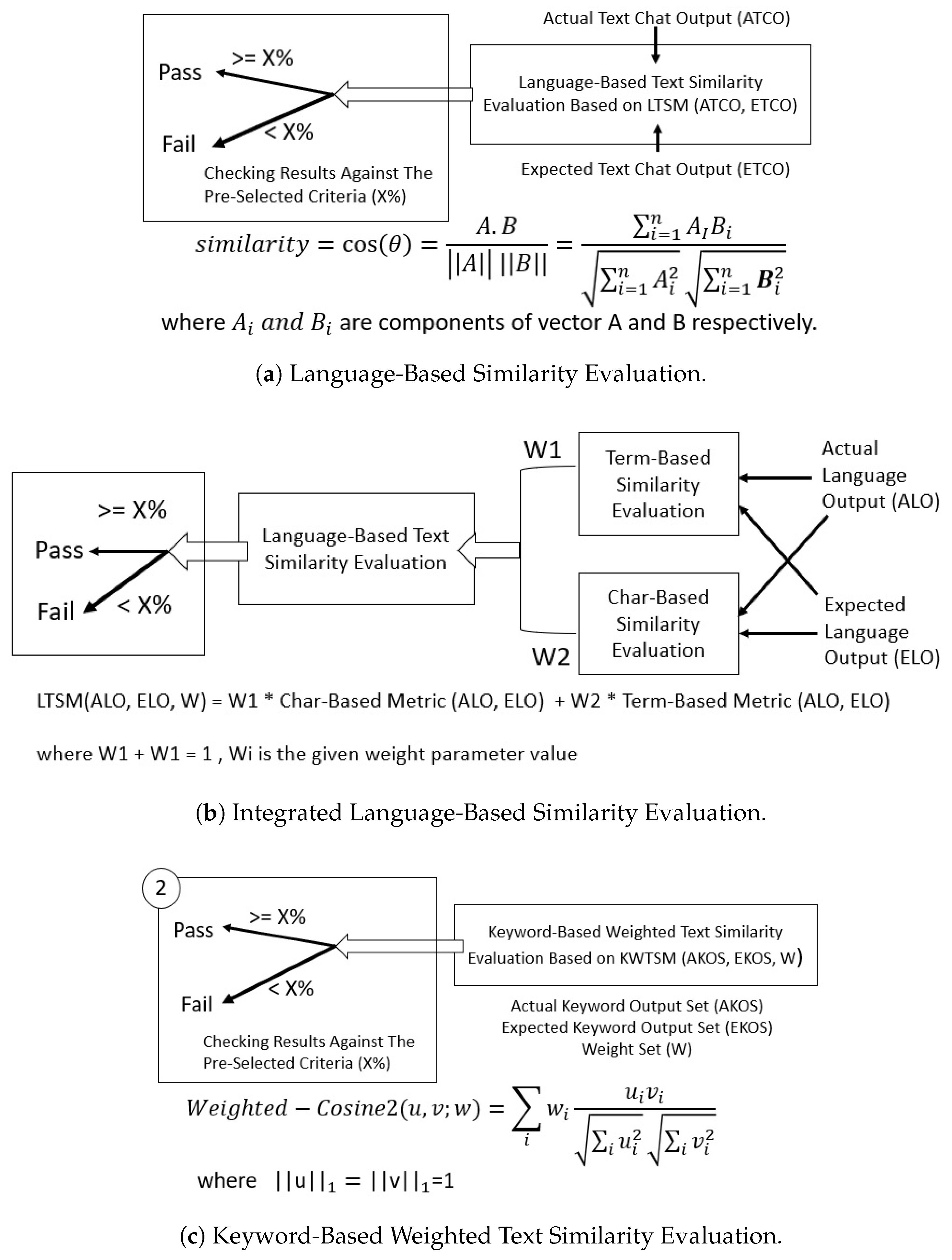

- Text-Based Result Similarity Checking - For text-based chat outputs, AI-based approaches can be used to perform text similarity analysis to determine whether the intelligent chat test results are acceptable. Figure 10(a) and (b) show the language-based similarity evaluation. Lexical similarity measures how similar two texts are based on the intersection of their word sets, which may come from the same or different languages. Lexical similarity scores range from 0, indicating no common terms between the two texts, to 1, indicating total overlap between their vocabularies. Figure 10(c) shows the second approach, keyword-based weighted text similarity evaluation, together with its similarity formula.

- Image-Based Similarity Checking - For image-based outputs, AI-based approaches can be used to perform image similarity analysis to determine whether the intelligent chat test results are acceptable. Diverse machine learning algorithms and deep learning models can be used to compute the similarity, at different levels and from different perspectives, between an expected output image and the actual output image from the chatbot system under test, including objects/types, features, positions, scales, and colors.

- Audio-Based Result Similarity Checking - For audio-based outputs, AI-based approaches can be used to perform audio similarity analysis to determine whether the intelligent chat test results are acceptable. Diverse machine learning algorithms and deep learning models can be used to compute the similarity, at different levels and from different perspectives, between an expected output audio and the actual output audio from the chatbot system under test, including audio sources/types, audio features, timing, frequencies, noises, and so on.
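A minimal sketch of the two text-based checks described above follows. Lexical similarity is computed here as the Jaccard index of the two word sets (shared words divided by all distinct words), one common measure that has the 0-to-1 range described in the text. The keyword-weighted score (matched keyword weight divided by total keyword weight) and the 0.5 acceptance threshold are assumptions, since the exact formula in Figure 10(c) is not reproduced here.

```python
import re
from typing import Dict, Set


def words(text: str) -> Set[str]:
    """Lower-cased word set; punctuation is ignored."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def lexical_similarity(expected: str, actual: str) -> float:
    """Jaccard index of word sets: 0 means no shared words, 1 means identical vocabularies."""
    a, b = words(expected), words(actual)
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def keyword_weighted_similarity(keyword_weights: Dict[str, float], actual: str) -> float:
    """Assumed formula: share of the total keyword weight whose keywords appear in the response."""
    total = sum(keyword_weights.values())
    if total == 0:
        return 0.0
    present = words(actual)
    matched = sum(w for kw, w in keyword_weights.items() if kw.lower() in present)
    return matched / total


def is_acceptable(score: float, threshold: float = 0.5) -> bool:
    """Accept the chatbot output if its similarity score reaches the (assumed) threshold."""
    return score >= threshold


expected = "Let's try a short breathing exercise."
actual = "Let's do a breathing exercise together."
weights = {"breathing": 2.0, "exercise": 1.0, "calm": 1.0}  # hypothetical keyword weights
print(lexical_similarity(expected, actual))
score = keyword_weighted_similarity(weights, actual)
print(score, is_acceptable(score))
```

Keyword weighting lets a validator tolerate paraphrased responses as long as the essential terms appear, whereas plain lexical overlap penalizes every wording change equally.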

6.1. Model-Based Test Coverage for Smart Chatbot Systems

- 3-Dimensional AI Test Classification Decision Table Test Coverage - To achieve this coverage, the test set (3DT-Set) must include at least one test case for each 3D element T(CT-x, IT-y, OT-z) in the 3D AI test classification decision table.

- Context classification decision table test coverage - To achieve this coverage, the test set (3DT-Set) must include at least one test case for each rule in a context classification decision table.

- Input classification decision table test coverage - To achieve this coverage, the test set (3DT-Set) must include at least one test case for each rule in an input classification decision table.

- Output classification decision table test coverage - To achieve this coverage, the test set (3DT-Set) must include at least one test case for each rule in an output classification decision table.
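The coverage criteria above can be checked mechanically. The Python sketch below assumes that each 3D element is a (context, input, output) tuple and, as a simplification, treats each context, input, or output decision-table rule as a single class label. It reports the fraction of 3D elements and of classes exercised by a test set; full coverage under a criterion corresponds to a ratio of 1.0. The labels and data structures are hypothetical.

```python
from itertools import product
from typing import Dict, Iterable, Set, Tuple

Element = Tuple[str, str, str]  # T(CT-x, IT-y, OT-z)


def ratio_covered(required: Set, exercised: Iterable) -> float:
    """Fraction of required items exercised by at least one test."""
    if not required:
        return 1.0
    return len(required & set(exercised)) / len(required)


def coverage_report(table: Iterable[Element], test_set: Iterable[Element]) -> Dict[str, float]:
    """3D-element coverage plus per-dimension coverage of context, input, and output classes."""
    table_set = set(table)
    tests = list(test_set)
    return {
        "3d_elements": ratio_covered(table_set, tests),
        "context": ratio_covered({c for c, _, _ in table_set}, (c for c, _, _ in tests)),
        "input": ratio_covered({i for _, i, _ in table_set}, (i for _, i, _ in tests)),
        "output": ratio_covered({o for _, _, o in table_set}, (o for _, _, o in tests)),
    }


table = list(product(["CT-1", "CT-2"], ["IT-1", "IT-2"], ["OT-1"]))
tests = [("CT-1", "IT-1", "OT-1"), ("CT-1", "IT-2", "OT-1")]
print(coverage_report(table, tests))
# {'3d_elements': 0.5, 'context': 0.5, 'input': 1.0, 'output': 1.0}
```

The example shows why 3D coverage is the strongest of the four criteria: the test set fully covers the input and output classes yet exercises only half of the 3D elements.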

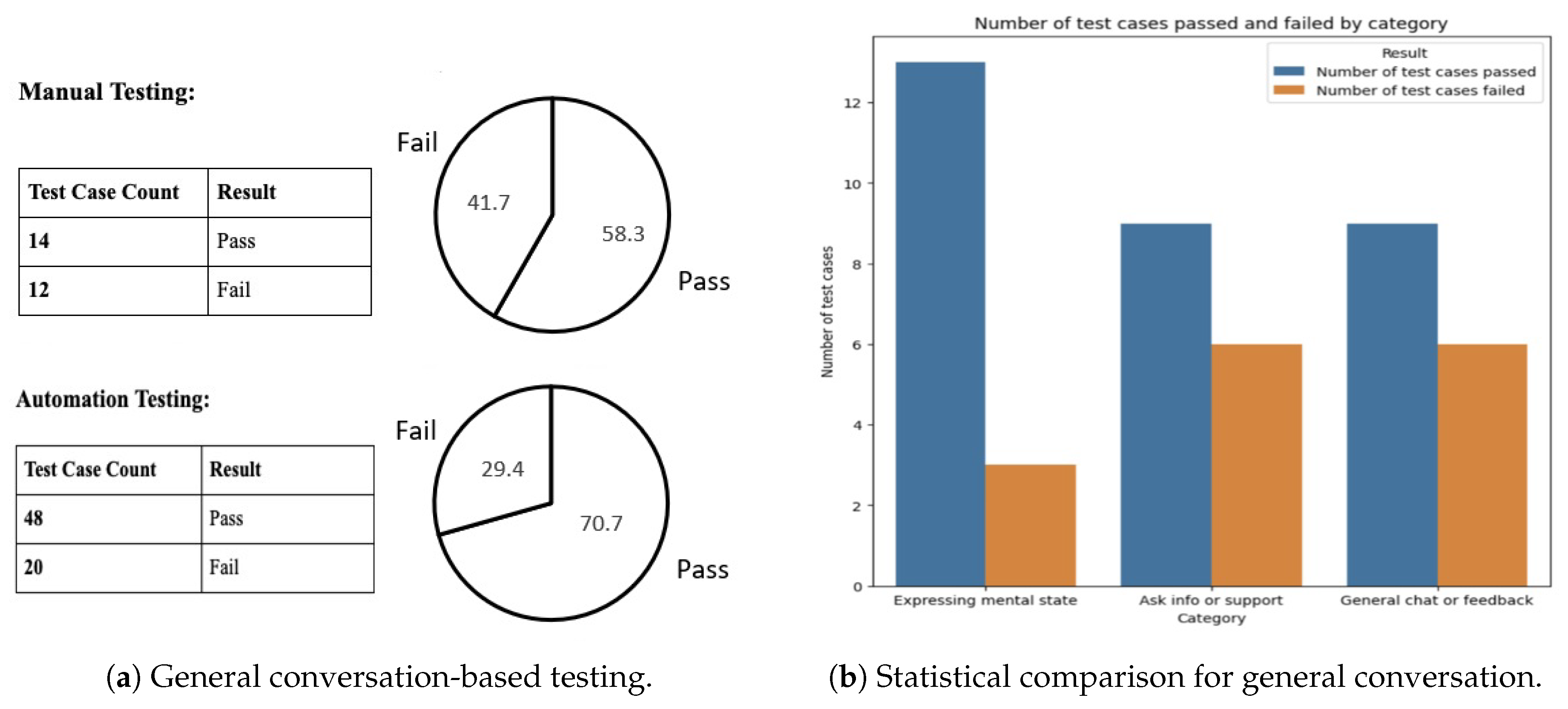

7. AI Test Result and Analysis

7.1. General Responses

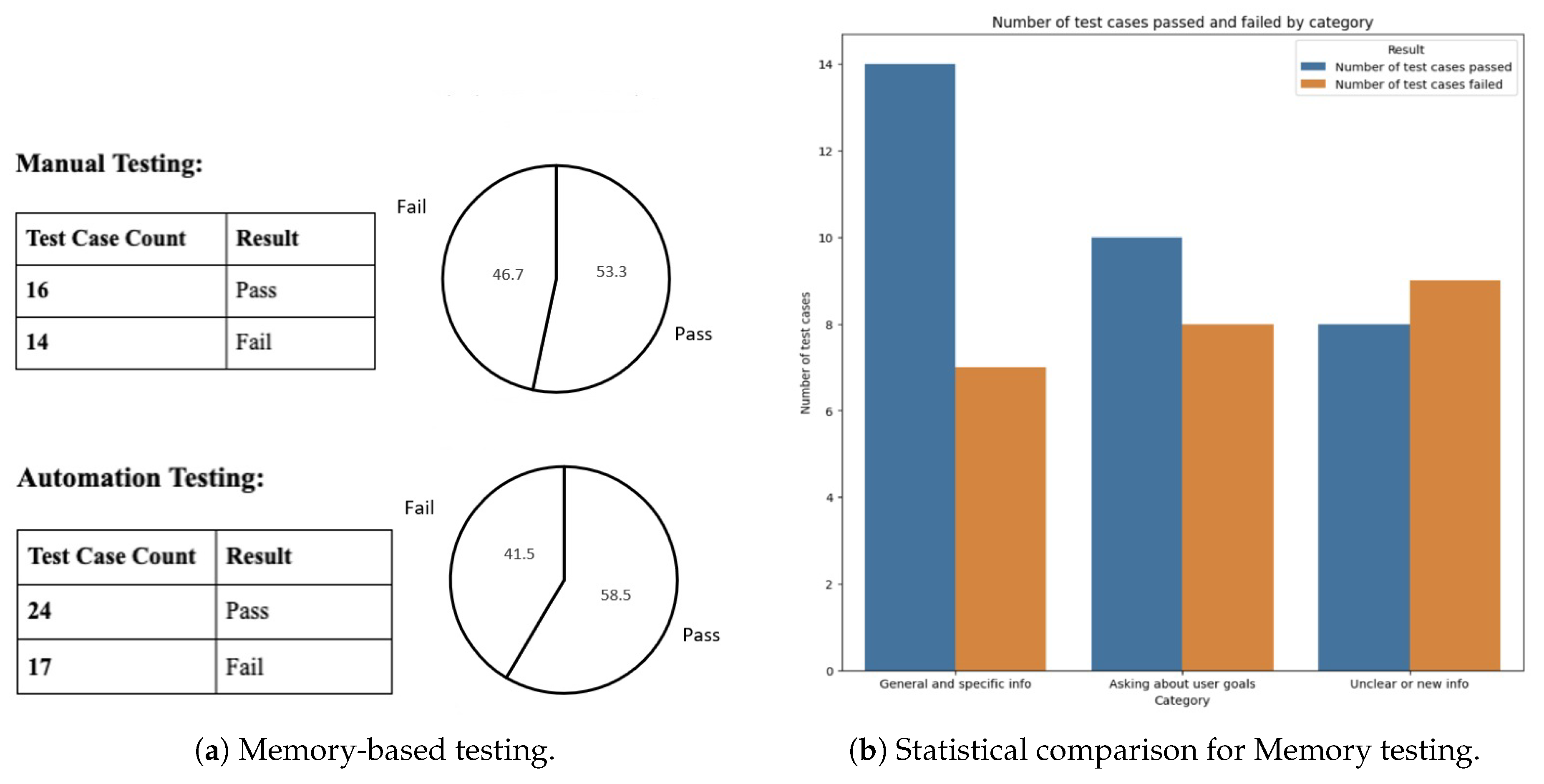

7.2. Memory-Based Responses

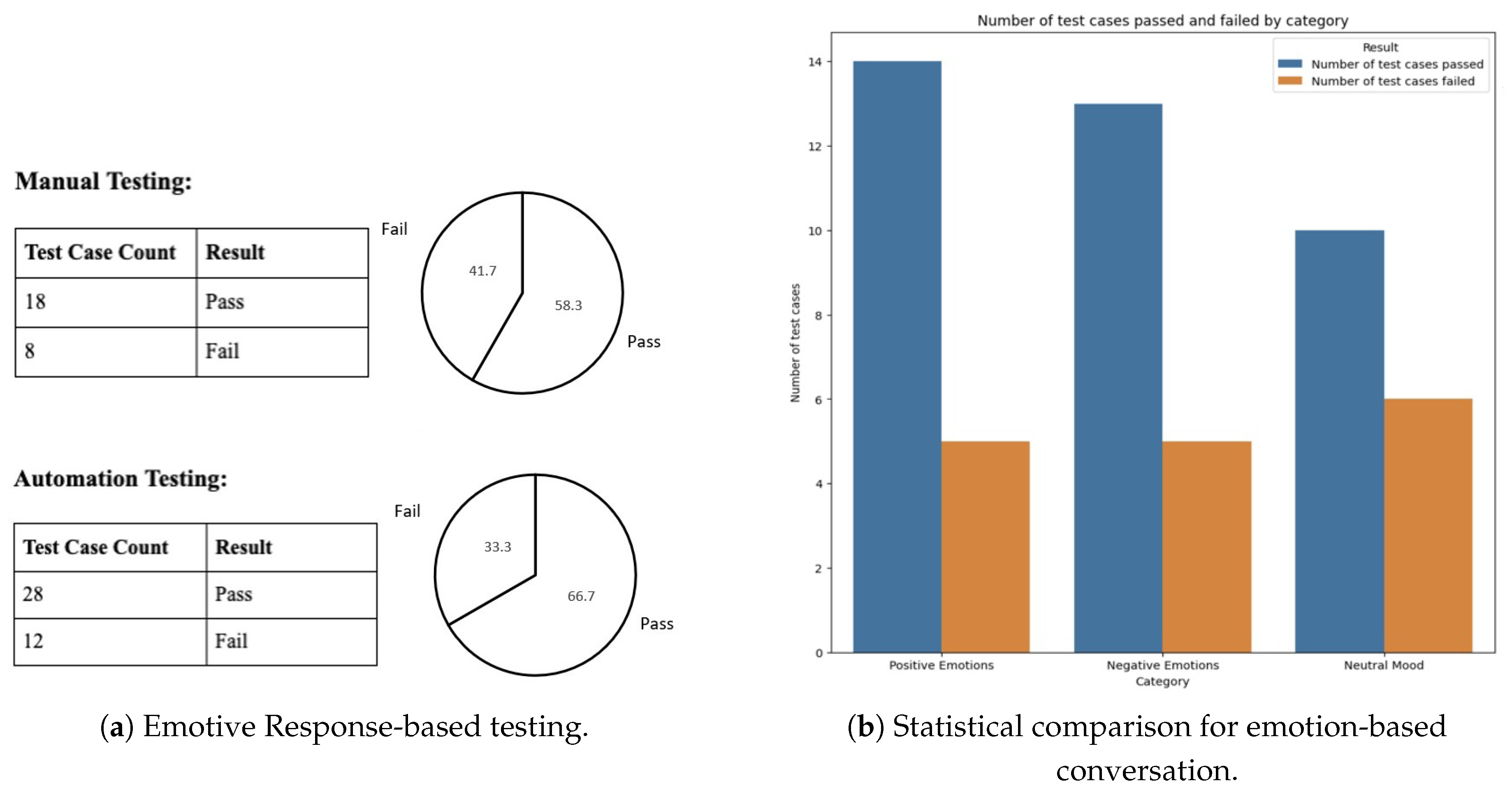

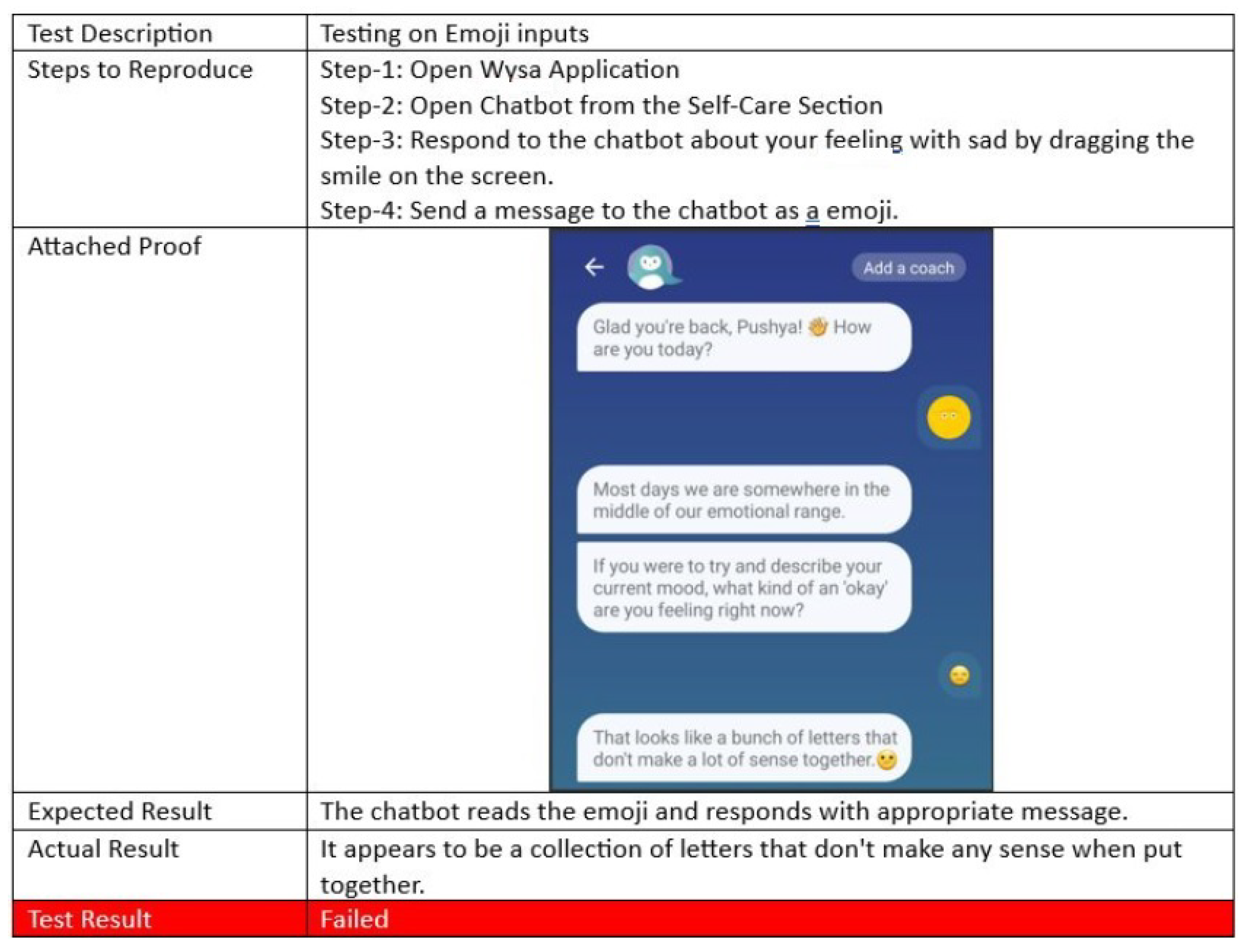

7.3. Emotive Reflexes

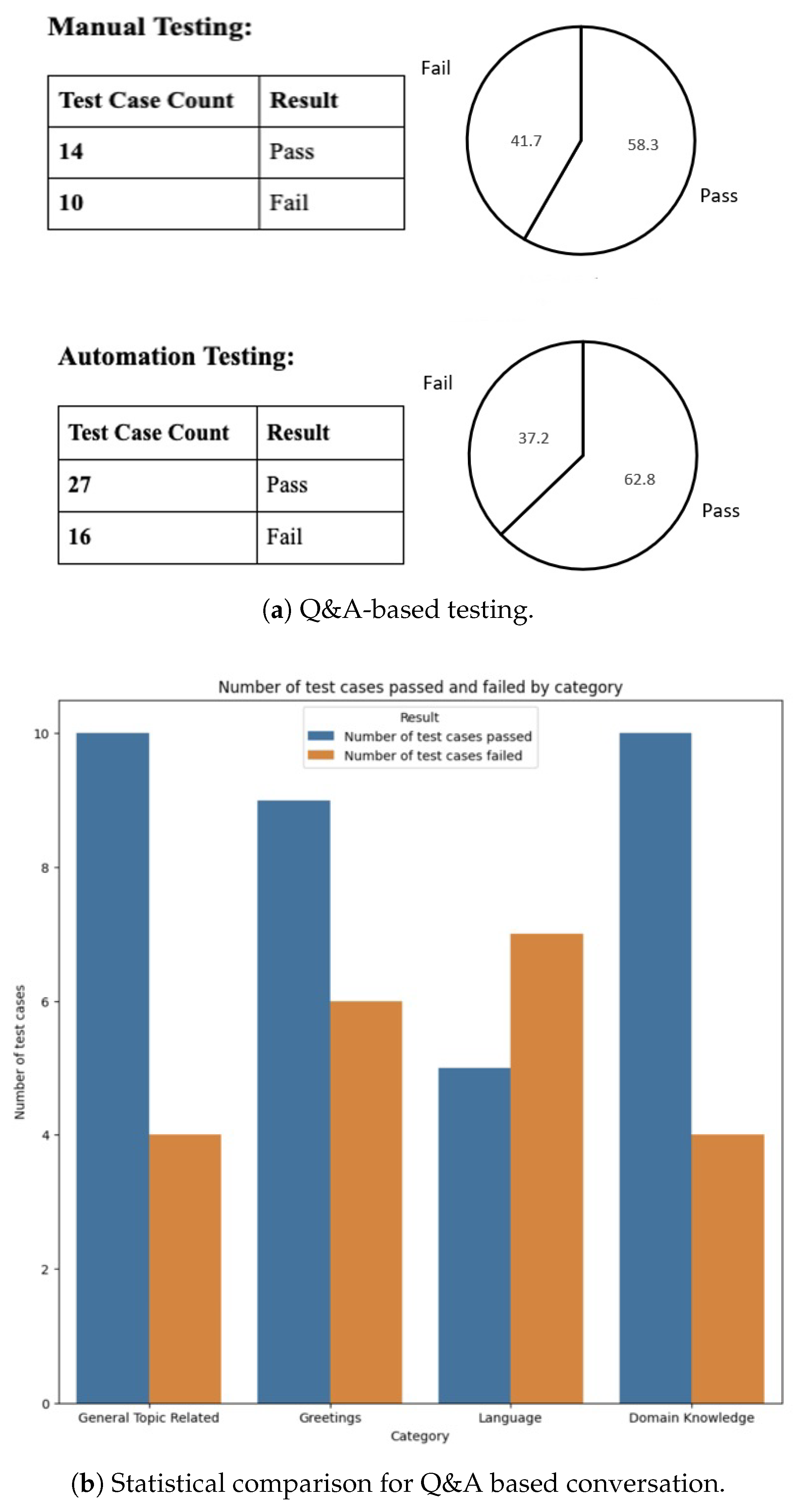

7.4. Q&A Interactions

8. Conclusion and Future Scope

Author Contributions

Funding

Conflicts of Interest

References

- Businesswire, “Global Chatbot Market Value to Increase by $1.11 Billion during 2020-2024 | Business Continuity Plan and Forecast for the New Normal | Technavio”. Available: https://www.businesswire.com/news/home/20201207005691/en/Global-Chatbot-Market-Value-to-Increase-by-1.11-Billion-during-2020-2024-Business-Continuity-Plan-and-Forecast-for-the-New-Normal-Technavio.

- J. Ni, T. Young, V. Pandelea, F. Xue, V. Adiga, and E. Cambria, “Recent Advances in Deep Learning-based Dialogue Systems,” ArXiv210504387 Cs, 2021. http://arxiv.org/abs/2105.04387.

- C. Tao, J. Gao, and T. Wang, “Testing and Quality Validation for AI Software–Perspectives, Issues, and Practices,” IEEE Access, vol. 7, pp. 120164–120175, 2019. [CrossRef]

- M. Vasconcelos, H. Candello, C. Pinhanez, and T. dos Santos, “Bottester: Testing Conversational Systems with Simulated Users,” in Proceedings of the XVI Brazilian Symposium on Human Factors in Computing Systems, Joinville Brazil, pp. 1–4, 2017. [CrossRef]

- Y. Xing and R. Fernández, “Automatic Evaluation of Neural Personality-based Chatbots,” in Proceedings of the 11th International Conference on Natural Language Generation, Tilburg University, The Netherlands, pp. 189–194, 2018. [CrossRef]

- J. Bozic, and F. Wotawa, "Testing Chatbots Using Metamorphic Relations," in Gaston, C., Kosmatov, N., Le Gall, P. (eds) Testing Software and Systems, ICTSS 2019. Lecture Notes in Computer Science, vol. 11812. Springer, Cham, 2019. [CrossRef]

- S. Bravo-Santos, E. Guerra, and J. de Lara, "Testing Chatbots with Charm," in M. Shepperd, F. Brito e Abreu, A. Rodrigues da Silva, and R. Pérez-Castillo (eds), Quality of Information and Communications Technology, QUATIC 2020. Communications in Computer and Information Science, vol. 1266. Springer, Cham, 2020. [CrossRef]

- Q. Mei, Y. Xie, W. Yuan, and M. O. Jackson, "A Turing test of whether AI chatbots are behaviorally similar to humans," Proceedings of the National Academy of Sciences, vol. 121, no. 9, e2313925121, 2024. [CrossRef]

- J. Bozic, O. A. Tazl, and F. Wotawa, “Chatbot Testing Using AI Planning,” in Proceedings of the 2019 IEEE International Conference On Artificial Intelligence Testing (AITest), Newark, CA, USA, pp. 37–44, 2019. [CrossRef]

- E. Ruane, T. Faure, R. Smith, D. Bean, J. Carson-Berndsen, and A. Ventresque, “BoTest: a Framework to Test the Quality of Conversational Agents Using Divergent Input Examples,” in Proceedings of the 23rd International Conference on Intelligent User Interfaces Companion, Tokyo Japan, pp. 1–2, 2018. [CrossRef]

- J. Guichard, E. Ruane, R. Smith, D. Bean, and A. Ventresque, “Assessing the Robustness of Conversational Agents using Paraphrases,” Proceedings of the 2019 IEEE International Conference On Artificial Intelligence Testing (AITest), Newark, CA, USA, pp. 55–62, 2019. [CrossRef]

- M. Kaleem, O. Alobadi, J. O’Shea, and K. Crockett, "Framework for the formulation of metrics for conversational agent evaluation," in RE-WOCHAT: Workshop on Collecting and Generating Resources for Chatbots and Conversational Agents-Development and Evaluation Workshop Programme, pp. 20–23, 2016.

- M. Nick and C. Tautz, “Practical evaluation of an organizational memory using the goal-question-metric technique,” in Proceedings of Biannual German Conference on Knowledge-Based Systems, pp. 138–147, 1999.

- M. Padmanabhan, “Test Path Identification for Virtual Assistants Based on a Chatbot Flow Specifications,” in K. N. Das, J. C. Bansal, K. Deep, A. K. Nagar, P. Pathipooranam, and R. C. Naidu (eds), Soft Computing for Problem Solving, Advances in Intelligent Systems and Computing, vol. 1057, Springer, Singapore, 2020. [CrossRef]

- F. Aslam, "The impact of artificial intelligence on chatbot technology: A study on the current advancements and leading innovations," European Journal of Technology, vol. 7, no. 3, pp. 62–72, 2023.

- S. Ayanouz, B. A. Abdelhakim, and M. Benhmed, "A smart chatbot architecture based NLP and machine learning for health care assistance," in Proceedings of the 3rd International Conference on Networking, Information Systems, & Security, pp. 1-6, 2020.

- S. Bialkova, "Chatbot Efficiency—Model Testing," in The Rise of AI User Applications, Springer, Cham, 2024. [CrossRef]

- G. Bilquise, S. Ibrahim, and K. Shaalan, "Emotionally intelligent chatbots: A systematic literature review," Human Behavior and Emerging Technologies, vol. 2022, 2022.

- G. Caldarini, S. Jaf, and K. McGarry, "A Literature Survey of Recent Advances in Chatbots," Information, vol. 13, no. 1, 41, 2022. [CrossRef]

- J. Gao, C. Tao, D. Jie, and S. Lu, "Invited Paper: What is AI Software Testing? and Why," 2019 IEEE International Conference on Service-Oriented System Engineering (SOSE), San Francisco, CA, USA, pp. 27-2709, 2019. [CrossRef]

- J. Gao, P. H. Patil, S. Lu, D. Cao and C. Tao, "Model-Based Test Modeling and Automation Tool for Intelligent Mobile Apps," 2021 IEEE International Conference on Service-Oriented System Engineering (SOSE), Oxford, United Kingdom, pp. 2021; 10. [CrossRef]

- J. Gao, S. Li, C. Tao, Y. He, A. P. Anumalasetty, E. W. Joseph, and H. Nayani, "An approach to GUI test scenario generation using machine learning," In 2022 IEEE International Conference on artificial intelligence testing (AITest), pp. 79–86, 2022.

- J. Gao, P. Garsole, R. Agarwal and S. Liu, "AI Test Modeling and Analysis for Intelligent Chatbot Mobile App - A Case Study on Wysa," in 2024 IEEE International Conference on Artificial Intelligence Testing (AITest), Shanghai, China, 2024, pp. [CrossRef]

- M. H. Kurniawan, H. Handiyani, T. Nuraini, R. T. S. Hariyati, and S. Sutrisno, "A systematic review of artificial intelligence-powered (AI-powered) chatbot intervention for managing chronic illness," Annals of Medicine, vol. 56, no. 1, 2302980, 2024.

- X. Li, C. Tao, J. Gao and H. Guo, "A Review of Quality Assurance Research of Dialogue Systems," in 2022 IEEE International Conference On Artificial Intelligence Testing (AITest), Newark, CA, USA, pp. 2022; 94. [CrossRef]

- C.-C. Lin, A.Y.Q. Huang, and S.J.H. Yang, "A Review of AI-Driven Conversational Chatbots Implementation Methodologies and Challenges (1999–2022)," Sustainability, vol. 15, no. 2023; 2. [CrossRef]

- E. W. Ngai, M. C. Lee, M. Luo, P. S. Chan, and T. Liang, "An intelligent knowledge-based chatbot for customer service," Electronic Commerce Research and Applications, vol. 50, 101098, 2021.

- A. Park, S. B. Lee, and J. Song, "Application of AI based Chatbot Technology in the Industry," Journal of the Korea Society of Computer and Information, vol. 25, no. 7, pp. 17-25, 2020.

- D. M. Park, S. S. Jeong, and Y. S. Seo, "Systematic review on chatbot techniques and applications," Journal of Information Processing Systems, vol. 18, no. 1, pp. 26-47, 2022.

- C. Tao, J. Gao and T. Wang, "Testing and Quality Validation for AI Software–Perspectives, Issues, and Practices," in IEEE Access, vol. 7, pp. 120164-120175, 2019. [CrossRef]

- C. T. P. Tran, M. G. Valmiki, G. Xu, and J. Z. Gao, "An intelligent mobile application testing experience report," in Journal of Physics: Conference Series, vol. 1828, no. 1, pp. 012080, 2021. IOP Publishing.

- L. Xu, L. Sanders, K. Li, and J. C. Chow, "Chatbot for health care and oncology applications using artificial intelligence and machine learning: systematic review," JMIR Cancer, vol. 7, no. 4, e27850, 2021.

| Ref | Objective | Automated test validation | Test Modelling | Test Generation | Augmentation |
|---|---|---|---|---|---|
| [4] | Testing conversational systems with simulated users (chatbot specialized in financial advice) | No | Simulated User Testing | Scenario-Based and Behavior-Driven | Automated Testing Augmentation |
| [5] | Sequence-to-sequence models with long short-term memory (LSTM) for open-domain dialogue response generation | No | Dialogue generation model and personality model using OCEAN personality traits | Context-response pairs from two TV series (Friends and The Big Bang Theory) | Automatically introduces variations in the input |
| [6] | A metamorphic testing approach for chatbots to obtain sequences of interactions with a chatbot | No | Metamorphic testing | Metamorphic relations to guide the generation of test cases and an initial set of inputs | Constructing grammars in Backus-Naur form (BNF), mutations, and functional correctness |
| [7] | Testing chatbots with Charm | No | Used Botium for coherence, sturdiness, and precision testing | Convo Generation, Utterance Mutation | Fuzzing/Mutation, Iteration and Improvement |
| [8] | Turing test applied to AI chatbots to examine how they behave in a suite of classic behavioral games, using ChatGPT-4 | No | Behavioral and personality testing | Classic behavioral games | Personality assessments using the Big-5 personality survey |
| Our work | Intelligent AI 3D test modeling, test generation, data augmentation, and test result validation for the chat system Wysa, a mental health coaching chatbot | Yes (AI-based test validation) | 3-Dimensional AI test modeling | AI-based model and NLP/ML model | AI model-based positive and negative augmentation |

| Items | AI Testing | AI-based Software Testing | Conventional Software Testing |
|---|---|---|---|
| Objectives | Validate and assure the quality of AI software and system by focusing on system AI functions and features | Leverage AI techniques and solutions to optimize a software testing process and its quality | Assure the system function quality for conventional software and its features |
| Primary AI-Testing Focuses | AI feature quality factors: accuracy, consistency, completeness, and performance | Optimize a test process in product quality increase, testing efficiency, and cost reduction | Automate test operations for a conventional software process |
| System Function Testing | AI system function testing: Object detection & classification, recommendation and prediction, language translation | System functions, behavior, user interfaces | System functions, behavior, user interfaces |
| Test Selection | AI test model-based test selection, classification and recommendation | Test selection, classification and recommendation using AI techniques | Rule-based and/or experience-based test selection |
| Test Data Generation | AI test model-based data discovery, collection, generation, and validation | AI-based data collection, classification, and generation | Model-based and/or pattern-based test generation |
| Bug Detection and Analysis | AI-model based bug detection, analysis, and report | Data-driven analysis for bug classification, detection, and prediction | Digital and systematic bug/problem management. |

| Concept | Description |
|---|---|
| Mood Tracking and Analysis | Tracking and analyzing an individual’s mood or emotional state over time. It involves recording and assessing mood patterns, fluctuations, and trends to gain insights into emotional well-being. |
| Self-care Analysis | Analyzing and evaluating an individual’s self-care practices and habits. It involves assessing activities related to physical, mental, and emotional well-being, such as exercise, sleep, relaxation techniques, and mindfulness practices. |
| Conversational Support Analysis | Analyzing the effectiveness and impact of conversational support provided by chatbots or AI systems. It involves evaluating the quality, empathy, and appropriateness of responses to users’ emotional or support-related queries or needs. |
| Goal Setting | Setting specific, measurable, attainable, relevant, and time-bound (SMART) goals to promote personal growth and well-being. It involves identifying areas of improvement, defining objectives, and establishing action plans to achieve desired outcomes. |
| Sentiment Analysis | Analyzing and determining the sentiment or emotional tone expressed in text or conversations. It involves classifying text as positive, negative, or neutral to understand the overall sentiment or attitude conveyed. |
| Well-being Resources and Personalised Intervention | Providing personalized resources, recommendations, or interventions to support individual well-being. It involves offering tailored suggestions, activities, or resources based on an individual’s needs, preferences, or identified areas of improvement. |

| Text Input | Augmentation | Text Output |
|---|---|---|
| I am Sad | random char insert | I am aSad |
| I am Sad | random char swap | I am Sda |
| I am Sad | random char delete | I am ad |
| I am Sad | random word swap | Sad am I |
| I am Sad | random word delete | am Sad |
| I am Sad | OCR augmentation | 1 am Sad |
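The augmentation operations listed in the table above can be approximated with standard-library Python. The sketch below is an independent re-implementation for illustration, not necessarily the tool used in this study; the `OCR_CONFUSIONS` character map and the choice to re-join words with single spaces are assumptions. Because each function draws from a seeded `random.Random`, augmented test inputs are reproducible.

```python
import random
import string
from typing import Callable, Dict

# Assumed look-alike characters that OCR commonly confuses (illustrative, not exhaustive).
OCR_CONFUSIONS: Dict[str, str] = {"I": "1", "l": "1", "O": "0", "o": "0", "S": "5", "B": "8"}


def random_char_insert(text: str, rng: random.Random) -> str:
    """Insert one random letter at a random position inside a random word."""
    words = text.split()
    if not words:
        return text
    i = rng.randrange(len(words))
    pos = rng.randrange(len(words[i]) + 1)
    words[i] = words[i][:pos] + rng.choice(string.ascii_letters) + words[i][pos:]
    return " ".join(words)


def random_char_swap(text: str, rng: random.Random) -> str:
    """Swap two adjacent characters inside a random word of length >= 2."""
    words = text.split()
    candidates = [i for i, w in enumerate(words) if len(w) >= 2]
    if not candidates:
        return text
    i = rng.choice(candidates)
    w = words[i]
    pos = rng.randrange(len(w) - 1)
    words[i] = w[:pos] + w[pos + 1] + w[pos] + w[pos + 2:]
    return " ".join(words)


def random_char_delete(text: str, rng: random.Random) -> str:
    """Delete one character from a random word of length >= 2."""
    words = text.split()
    candidates = [i for i, w in enumerate(words) if len(w) >= 2]
    if not candidates:
        return text
    i = rng.choice(candidates)
    pos = rng.randrange(len(words[i]))
    words[i] = words[i][:pos] + words[i][pos + 1:]
    return " ".join(words)


def random_word_swap(text: str, rng: random.Random) -> str:
    """Swap the positions of two distinct words."""
    words = text.split()
    if len(words) < 2:
        return text
    i, j = rng.sample(range(len(words)), 2)
    words[i], words[j] = words[j], words[i]
    return " ".join(words)


def random_word_delete(text: str, rng: random.Random) -> str:
    """Delete one random word, keeping at least one word."""
    words = text.split()
    if len(words) < 2:
        return text
    del words[rng.randrange(len(words))]
    return " ".join(words)


def ocr_augment(text: str, rng: random.Random) -> str:
    """Replace one character with a look-alike that OCR might produce."""
    positions = [i for i, ch in enumerate(text) if ch in OCR_CONFUSIONS]
    if not positions:
        return text
    i = rng.choice(positions)
    return text[:i] + OCR_CONFUSIONS[text[i]] + text[i + 1:]


AUGMENTERS: Dict[str, Callable[[str, random.Random], str]] = {
    "random char insert": random_char_insert,
    "random char swap": random_char_swap,
    "random char delete": random_char_delete,
    "random word swap": random_word_swap,
    "random word delete": random_word_delete,
    "OCR augmentation": ocr_augment,
}

rng = random.Random(7)  # fixed seed so the augmented inputs are reproducible
for name, augment in AUGMENTERS.items():
    print(f"I am Sad | {name} | {augment('I am Sad', rng)}")
```

Each augmented input would then be sent to the chatbot alongside the original, and a validator would decide whether the response should stay the same (a positive test) or whether the perturbation legitimately changes the meaning.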

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 1996 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).