3.1. Experimental Data and Settings

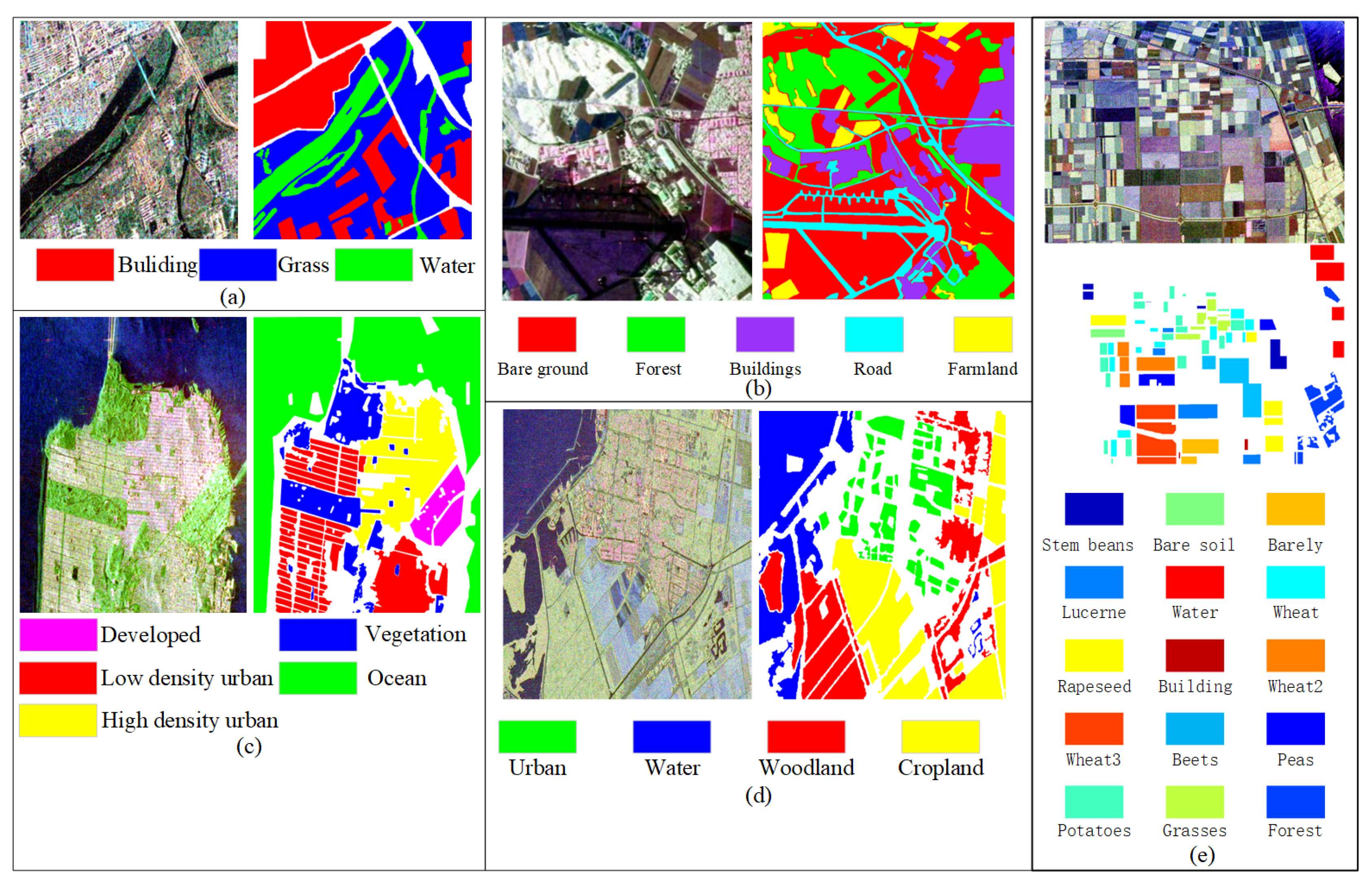

To verify the classification performance of the proposed MLDnet, extensive experiments are conducted on five real PolSAR data sets covering the Xi’an, Oberpfaffenhofen, San Francisco, and Flevoland areas (two of the data sets cover Flevoland). Detailed information is given as follows:

A) Xi’an data set: The first data set is C-band fully polarimetric SAR data collected by the RADARSAT-2 system over Xi’an in western China. The image size is

pixels, with a resolution of

meters. It contains three land cover classes: building, grass, and water. The Pauli-RGB pseudo-color image and the corresponding ground truth map are presented in Figure 5(a).

B) Oberpfaffenhofen data set: The second data set covers the Oberpfaffenhofen area in Germany and consists of an 8-look L-band fully polarimetric SAR image acquired by the E-SAR sensor of the German Aerospace Center. The image has dimensions of

pixels with a resolution of

m. It includes five land cover types: bare ground, forest, farmland, road, and building. Figure 5(b) shows the Pauli-RGB image of the Oberpfaffenhofen data set along with the corresponding ground truth map.

C) San Francisco data set: The third data set is a C-band fully polarimetric SAR image collected by the RADARSAT-2 system over the San Francisco area in the United States. The image has a resolution of

m and a size of

pixels. Its Pauli-RGB image and ground truth map are displayed in Figure 5(c). The scene contains five land cover types: ocean, vegetation, low density urban, high density urban, and developed.

D) Flevoland1 data set: The fourth data set is C-band fully polarimetric SAR data collected by the RADARSAT-2 sensor over the Flevoland region of the Netherlands. The image size is

pixels, with a resolution of

m. The image contains four land cover types: water, urban, woodland, and cropland. We name this data set the Flevoland1 data set. Figure 5(d) shows the Pauli-RGB image and the corresponding ground truth map of the Flevoland1 data set.

E) Flevoland2 data set: The last data set also covers the Flevoland region and consists of fully polarimetric L-band SAR data acquired by the AIRSAR system. The spatial resolution is

m, and the image size is

pixels. The Pauli-RGB image and its ground truth map are shown in Figure 5(e). This image includes 15 land cover types: stem beans, peas, forest, lucerne, beets, wheat, potatoes, bare soil, grasses, rapeseed, barley, wheat2, wheat3, water, and buildings. We name it the Flevoland2 data set.
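The Pauli-RGB images in Figure 5 are conventionally built from the three Pauli components of the scattering matrix. The sketch below assumes single-look complex values S_HH, S_HV, S_VV for one pixel and the common color assignment (red for |S_HH - S_VV|, green for the cross-polarized term, blue for |S_HH + S_VV|); the scaling and contrast stretch actually used for the figures are not stated in the paper, and the function name is hypothetical. For multi-look data such as the 8-look Oberpfaffenhofen image, the square roots of the coherency-matrix diagonal terms T22, T33, and T11 play the same roles.

```python
import math
from typing import Tuple


def pauli_rgb(s_hh: complex, s_hv: complex, s_vv: complex) -> Tuple[float, float, float]:
    """Return the (R, G, B) Pauli magnitudes of one pixel, before any display stretch."""
    # Pauli scattering vector k = (1/sqrt(2)) * [S_HH + S_VV, S_HH - S_VV, 2 * S_HV]
    k1 = (s_hh + s_vv) / math.sqrt(2)  # odd-bounce (surface) scattering -> blue
    k2 = (s_hh - s_vv) / math.sqrt(2)  # even-bounce (double-bounce) scattering -> red
    k3 = 2 * s_hv / math.sqrt(2)       # cross-polarized (volume) scattering -> green
    return abs(k2), abs(k3), abs(k1)
```

Because the Pauli basis is unitary, R² + G² + B² equals the total power |S_HH|² + |S_VV|² + 2|S_HV|², so the color image preserves the pixel's span.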

The quantitative metrics used in our experiments are average accuracy (AA), overall accuracy (OA), and the Kappa coefficient. Experiments are conducted under Windows 10 on a PC with 64 GB of memory and an NVIDIA GeForce RTX 3070 GPU. The algorithm is implemented in Python 3.7 with PyTorch 1.9.0 (GPU version). The Adam optimizer is used with a learning rate of 0.0001. The batch size is set to

, and the number of training iterations is 50. The multi-class cross-entropy loss is employed. In all experiments, 10% of the labeled samples of each land cover class are used for training, and the remaining 90% are used for testing. Note that the white regions in the ground truth maps in Figure 5 and ?? are unlabeled pixels. Although every pixel in a PolSAR image receives a predicted label, unlabeled pixels are excluded from the quantitative metrics. Therefore, the classification maps in the following results show only labeled pixels, and unlabeled pixels are colored white.
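For reference, the three metrics can be computed from a confusion matrix built only from labeled test pixels. The sketch below uses the standard definitions (OA as correctly classified pixels over all test pixels, AA as the mean of the per-class accuracies, and Cohen's Kappa with chance agreement estimated from row and column totals); the function name and the convention that rows are true classes are our assumptions.

```python
from typing import Sequence, Tuple


def oa_aa_kappa(cm: Sequence[Sequence[int]]) -> Tuple[float, float, float]:
    """Overall accuracy, average accuracy and Cohen's kappa from a confusion matrix.

    cm[i][j] counts labeled test pixels of true class i predicted as class j;
    unlabeled pixels must be excluded before building cm.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    row_tot = [sum(row) for row in cm]
    col_tot = [sum(cm[i][j] for i in range(n)) for j in range(n)]
    oa = sum(cm[i][i] for i in range(n)) / total                       # observed agreement p_o
    aa = sum(cm[i][i] / row_tot[i] for i in range(n)) / n              # mean per-class accuracy
    pe = sum(row_tot[i] * col_tot[i] for i in range(n)) / total ** 2   # chance agreement p_e
    kappa = (oa - pe) / (1 - pe)
    return oa, aa, kappa
```

For the toy matrix `[[50, 10], [5, 35]]`, OA is 0.85, AA is the mean of 50/60 and 35/40, and Kappa is (0.85 - 0.51) / (1 - 0.51).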

To assess the effectiveness of the proposed MLDnet, we compare it with seven state-of-the-art classification algorithms: Super-RF [17], CNN [21], CV-CNN [23], 3D-CNN [60], PolMPCNN [61], CEGCN [62], and SGCN-CNN [63]. The first method, Super-RF, combines the random forest algorithm with superpixels and uses G0 statistical texture features to mitigate interference from background targets, such as forests, on farmland classification. The second method, CNN, converts PolSAR data into normalized 9-D real feature vectors and automatically learns hierarchical polarimetric-spatial features through two cascaded convolutional layers. The third method, CV-CNN, is a complex-valued convolutional neural network that transforms the PolSAR complex matrix into a complex vector, thereby exploiting both the amplitude and phase information of PolSAR images. The fourth method, 3D-CNN, uses a 3-D convolutional neural network to extract joint channel-spatial features, which suits the 3-D structure of the data. The fifth method, PolMPCNN, combines polarimetric rotation kernels with a multi-path structure and dual-scale sampling to adaptively learn the polarimetric rotation angles of different land cover types. The sixth method, CEGCN, uses a graph encoder and decoder so that a CNN and a GCN collaborate within a single network, learning features in small-scale regular regions and large-scale irregular regions, respectively. The last method, SGCN-CNN, similarly integrates superpixel-level and pixel-level networks into a single framework and obtains both global and local features by defining a correlation matrix for feature transformation between superpixels and pixels.
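Several of the compared networks, and the "without MF" ablation later in this section, take a 9-dimensional real representation of PolSAR data as input. One common construction, sketched here as an assumption rather than the exact normalization used in [21], takes the nine independent real parameters of the Hermitian 3×3 coherency matrix T = <k k^H> (the three diagonal powers plus the real and imaginary parts of the three upper off-diagonal entries) and divides them by the total power (span). The function names are hypothetical.

```python
from typing import List, Sequence

Matrix = List[List[complex]]


def coherency_matrix(pauli_vectors: Sequence[Sequence[complex]]) -> Matrix:
    """Multi-look coherency matrix T = <k k^H>, averaged over the given Pauli vectors k."""
    n = len(pauli_vectors)
    t = [[0j] * 3 for _ in range(3)]
    for k in pauli_vectors:
        for i in range(3):
            for j in range(3):
                t[i][j] += k[i] * k[j].conjugate() / n
    return t


def real_9d_features(t: Matrix) -> List[float]:
    """Nine real parameters of the Hermitian matrix T, normalized by the span (trace of T)."""
    diag = [t[i][i].real for i in range(3)]   # T11, T22, T33: real-valued powers
    upper = [t[0][1], t[0][2], t[1][2]]        # T12, T13, T23: complex correlations
    vec = diag + [z.real for z in upper] + [z.imag for z in upper]
    span = sum(diag)                           # total backscattered power
    return [v / span for v in vec]
```

Only the upper triangle is kept because the lower triangle of a Hermitian matrix is its complex conjugate and carries no extra information.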

3.2. Experimental Results on Xi’an Data Set

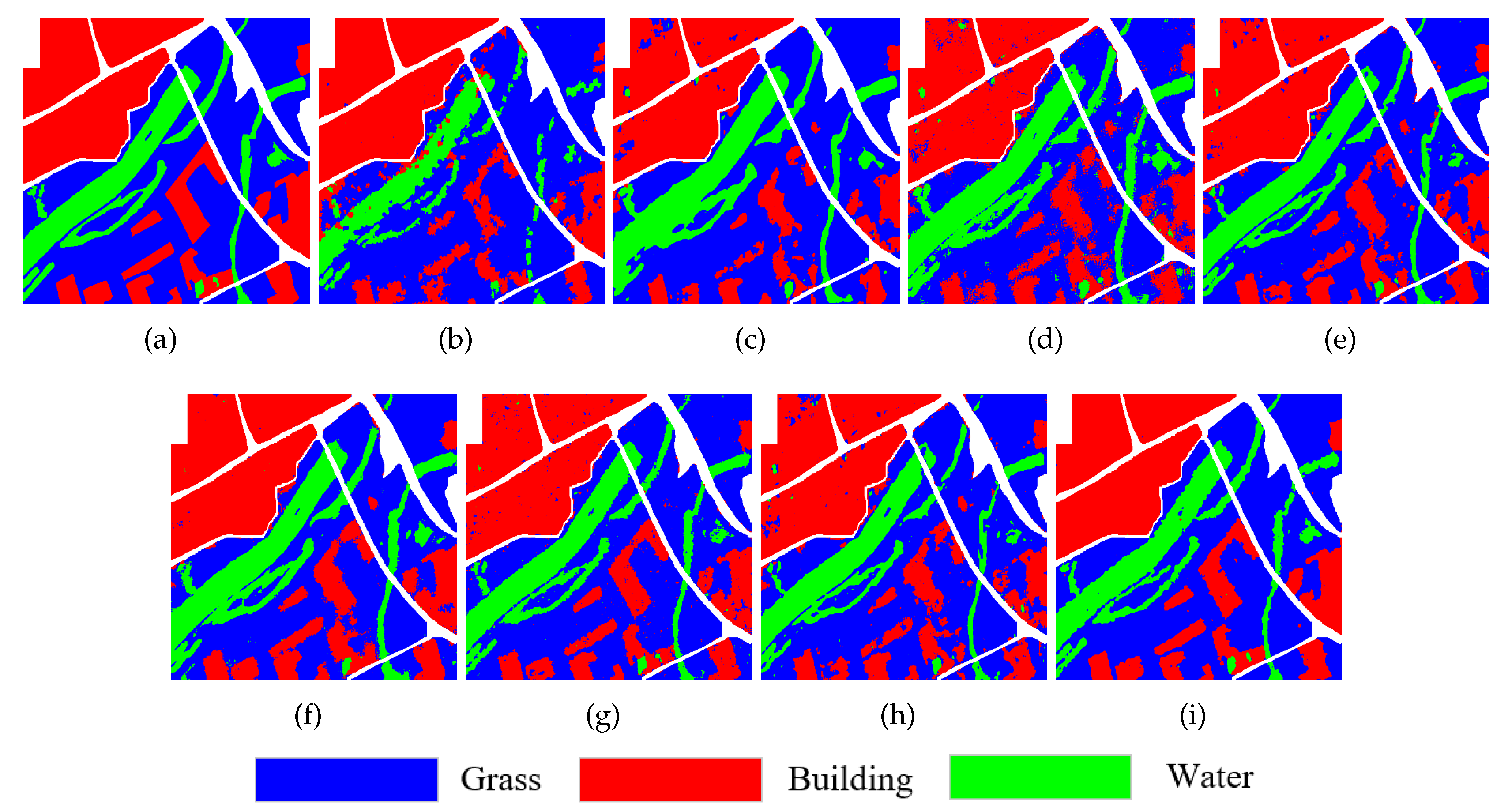

Classification results on the Xi’an data set are displayed in Figure 6(b)-(i), which show the classification maps generated by Super-RF, CNN, CV-CNN, 3D-CNN, PolMPCNN, CEGCN, SGCN-CNN, and the proposed MLDnet, respectively. Figure 6(b) shows the output of Super-RF, which contains numerous misclassified pixels concentrated mainly around the water class; because classification is performed on superpixels, these errors appear as blocks. In contrast, the CNN method distinguishes the water class very well, but, lacking global information, it misclassifies part of the building class as grass. Like CNN, CV-CNN shows noticeable misclassifications in the building and grass classes because it relies on pixel-wise local features. 3D-CNN adapts to the 3-D structure of the input data and therefore achieves better regional consistency; however, it cannot adaptively handle distinct individual features, which limits its performance at the boundaries between land covers. PolMPCNN adaptively learns the polarimetric characteristics of various land covers and greatly improves the overall classification; however, because the features of different terrains are learned independently, a small number of misclassified pixels remain at land cover boundaries. Compared with PolMPCNN, CEGCN uses two branches, a CNN and a GCN, to generate complementary contextual features at the pixel and superpixel levels, which significantly improves accuracy at the boundaries of diverse land covers. However, CEGCN does not handle the inherent speckle noise of PolSAR images well, so some noise points remain in its map. SGCN-CNN, like CEGCN, struggles with speckle noise and produces a considerable number of noise points. The proposed MLDnet uses the L-DeeplabV3+ network to explore the multi-dimensional features of PolSAR images in depth and selects effective features through a channel attention module. Compared with the seven competing methods, MLDnet in Figure 6(i) shows substantial improvements in both regional consistency and edge detail.

Table 3 lists the classification accuracy of all methods on the Xi’an data set. Super-RF, which does not use deep features, has lower accuracy than the other methods, mainly because its accuracy on the water class is only 70.91%, which is consistent with the classification map in Figure 6(b). CNN greatly improves the accuracy on the water class, but its accuracy on the building class is relatively low, so its OA improves by only 0.27% over Super-RF. CV-CNN further improves the accuracy on the water class, achieving the highest value among all methods; however, its accuracy on the grass class is the lowest among all methods, so its OA is slightly lower than that of CNN. Compared with these three methods, 3D-CNN significantly improves OA, and PolMPCNN improves performance further, especially on the building class, where its accuracy of 97.68% is significantly higher than those of the other compared methods. Similarly, because it better distinguishes the grass class, CEGCN further improves the overall accuracy. SGCN-CNN shows a decline in the overall metrics due to a considerable number of misclassifications in the grass class. The proposed MLDnet achieves the best accuracy on two of the three land cover types and on all three overall evaluation metrics. Specifically, its OA is higher than those of Super-RF, CNN, CV-CNN, 3D-CNN, PolMPCNN, CEGCN, and SGCN-CNN by 7.44%, 7.17%, 7.79%, 4.17%, 3.37%, 1.17%, and 6.20%, respectively.

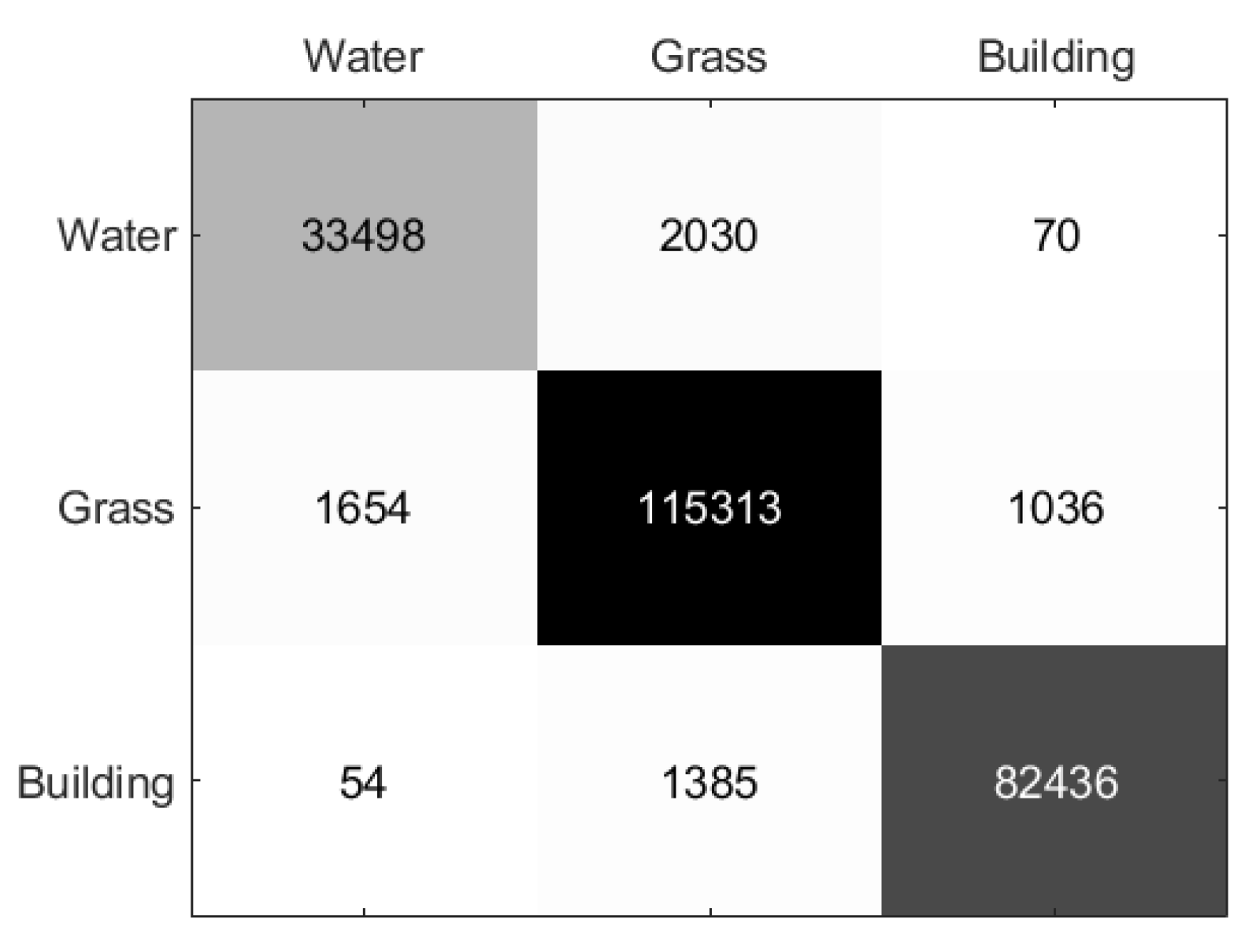

Figure 7 shows the confusion matrix for the Xi’an data set. The proposed method misclassifies 2030 pixels (about 5.70%) of the water class as grass and 1654 pixels (about 1.40%) of the grass class as water. In addition, a small number of pixels in the building class, 1385 (about 1.65%), are misclassified as grass.
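The percentages quoted for the confusion matrices appear to be row-normalized, that is, the number of pixels of a true class assigned to another class divided by that class's number of test pixels; this reading is our assumption. A small helper (hypothetical name) that extracts the dominant confusion for each class from such a matrix:

```python
from typing import List, Sequence, Tuple


def main_confusions(cm: Sequence[Sequence[int]],
                    names: Sequence[str]) -> List[Tuple[str, str, int, float]]:
    """For each true class (row), return its most frequent wrong label, the pixel
    count, and that count as a percentage of the class's test pixels."""
    out = []
    for i, row in enumerate(cm):
        total = sum(row)
        # largest off-diagonal entry of row i (ties resolved by the lowest column index)
        j = max((c for c in range(len(row)) if c != i), key=lambda c: row[c])
        out.append((names[i], names[j], row[j], 100.0 * row[j] / total))
    return out
```

Applied to a toy three-class matrix, it reports one (true class, confused class, count, percent) tuple per row, which is the form of the statements above.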

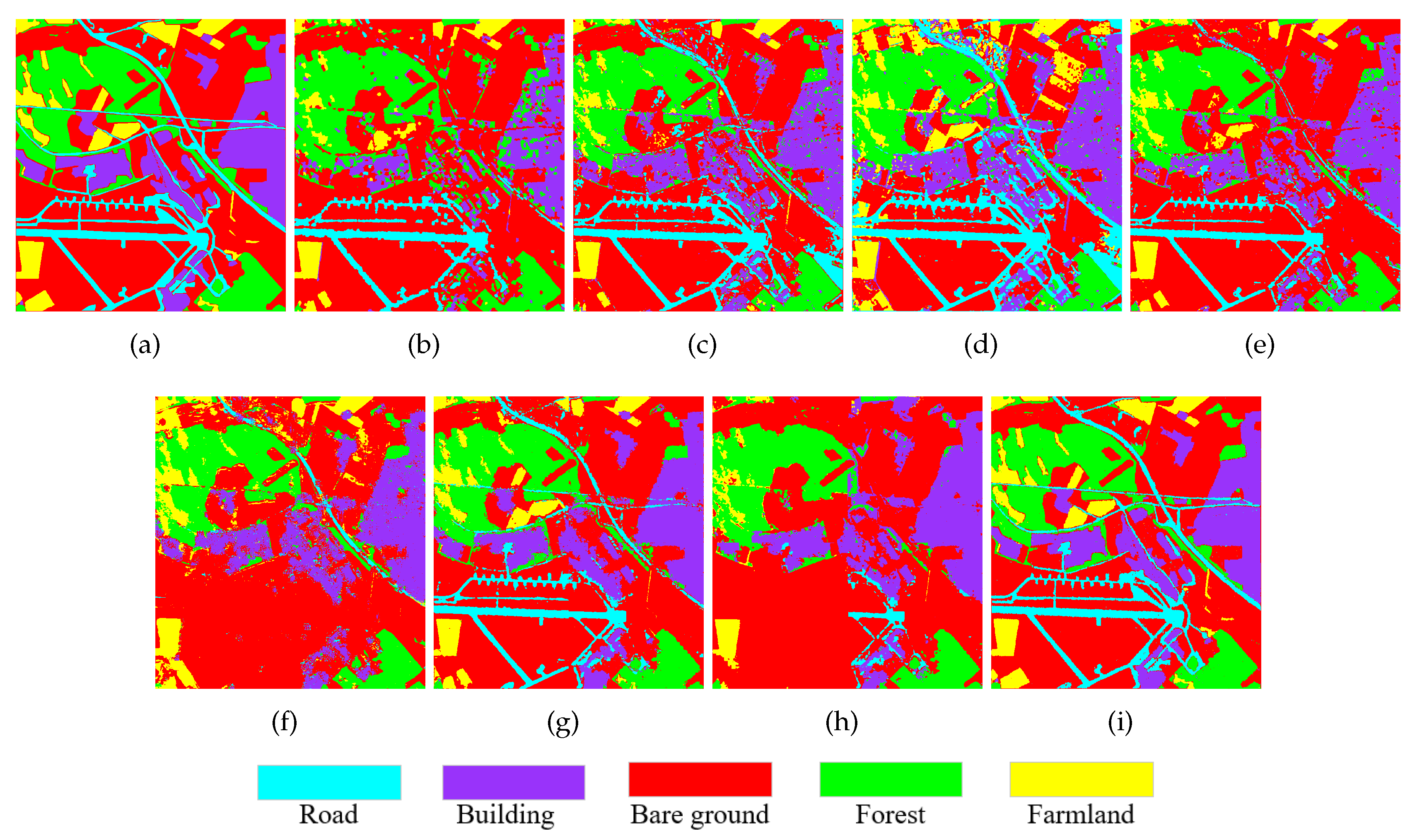

3.3. Experimental Results on Oberpfaffenhofen Data Set

The classification maps produced by the compared methods and the proposed method are illustrated in Figure 8(b)-(i), respectively. As shown in Figure 8(b), Super-RF effectively distinguishes the bare ground and farmland classes, which have large numbers of samples. However, misclassification is more noticeable for classes with fewer samples, such as building, forest, and road. The CNN method in (c) improves classification of the building and road classes, but its ability to distinguish the farmland class remains limited. The CV-CNN method in (d) slightly reduces misclassification in the farmland class; nevertheless, it worsens misclassification of the bare ground class in the upper-left corner, and its overall regional consistency remains relatively poor. The 3D-CNN method in (e) behaves similarly to Super-RF, with a notable number of misclassified pixels in the road and farmland classes, although it improves on the building class. Compared with the preceding four methods, PolMPCNN in (f) substantially improves regional consistency. However, it misclassifies almost the entire road class in the lower half of the image as building, and the boundaries between land covers appear blurry. Building on PolMPCNN, CEGCN in (g) further improves classification of the road class, but the result is still suboptimal. SGCN-CNN in (h) likewise performs poorly on classes with fewer samples, such as road and farmland. The proposed MLDnet in (i) markedly improves classification of all five land covers compared with the other methods and effectively preserves the boundaries between different land covers.

Table 4 compares the classification accuracy of different methods on the Oberpfaffenhofen data set. The seven compared methods all have relatively low OA values on this data set, the highest being only 88.93% (CEGCN). Among the five land cover types, bare ground is discriminated with relatively high accuracy: four compared methods (Super-RF, 3D-CNN, CEGCN, and SGCN-CNN) exceed 90%. Meanwhile, all seven compared methods exceed 81% accuracy on the forest class. However, for the remaining three classes, particularly farmland and road, none of the seven compared methods performs satisfactorily; the highest accuracies are 80.70% (CEGCN) for farmland and 76.20% (CV-CNN) for road. Notably, PolMPCNN correctly classifies only 10.38% of the road class, consistent with the classification map in Figure 8(f). This indicates that for data sets with imbalanced samples, such as Oberpfaffenhofen, the performance of the compared methods is limited. In contrast, the proposed MLDnet achieves the highest values for all evaluation metrics, all of which exceed 93%. Compared with the seven competing algorithms, its OA is higher by 17.79%, 15.32%, 21.31%, 13.37%, 20.37%, 7.26%, and 18.90%, respectively. This highlights the ability of the proposed method to handle complex PolSAR scenes and improve classification accuracy.

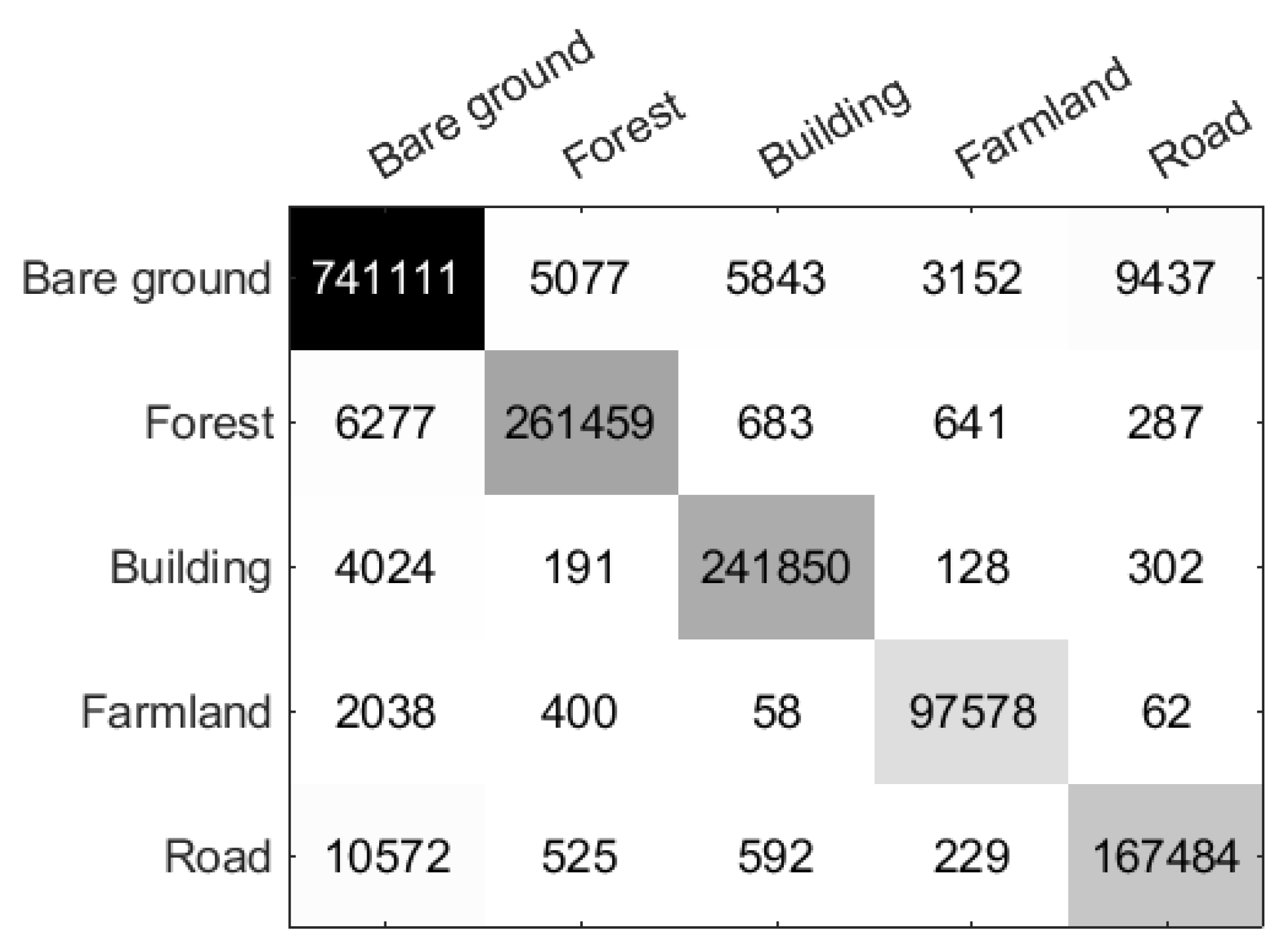

Additionally, the confusion matrix for the Oberpfaffenhofen data set is depicted in Figure 9. For the bare ground class, the main misclassification is into the road class, with 9437 pixels (approximately 1.23%). The forest class is mainly misclassified as bare ground, with 6277 pixels (approximately 2.33%). Similarly, the building, farmland, and road classes are mainly misclassified as bare ground, with 4024 pixels (approximately 1.63%), 2038 pixels (approximately 2.04%), and 10572 pixels (approximately 5.89%), respectively. Therefore, the main confusion occurs between the bare ground and road classes.

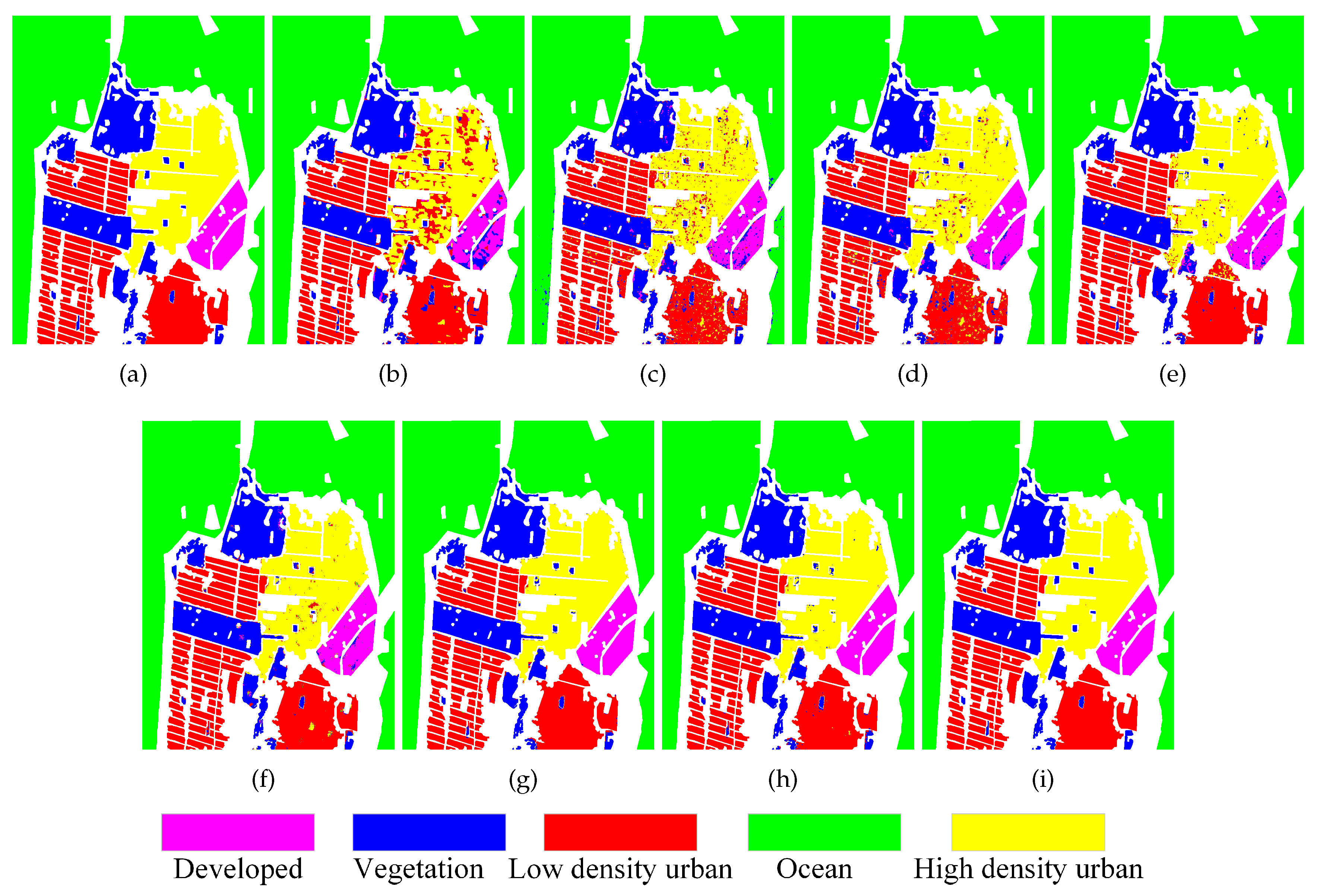

3.4. Experimental Results on San Francisco Data Set

The results of the seven competing methods and MLDnet on the San Francisco data set are shown in Figure 10(b)-(i), respectively, and Figure 10(a) displays the ground truth map of the San Francisco area. Compared with the ground truth, Super-RF produces many misclassifications in the high density urban class, whose surface scattering varies significantly, and it also confuses part of the vegetation and developed classes. The CNN method likewise has difficulty separating the high density urban class from the developed class. Compared with these two methods, CV-CNN improves classification of the high density urban and developed classes, although a small number of misclassified pixels remain, and misclassification in the low density urban class is quite evident. The 3D-CNN method improves classification of the high density urban, low density urban, and developed classes but produces more misclassified pixels in the vegetation class. PolMPCNN improves classification across the classes, but its map still contains some visibly misclassified pixels. CEGCN and SGCN-CNN distinguish the land covers almost perfectly; on closer inspection, however, they misclassify some pixels along land cover boundaries. The proposed MLDnet also distinguishes the various land covers effectively while preserving the boundaries between them well. This suggests that MLDnet achieves good classification results even on large-scale PolSAR data sets.

In addition, Table 5 lists the classification accuracy of all methods for each land cover in the San Francisco data set, together with the corresponding OA, AA, and Kappa. Compared with the other three land cover types, both Super-RF and CNN show relatively low accuracy on the high density urban and developed classes. This is especially evident for Super-RF, whose accuracies on these two classes are 77.76% and 81.00%, respectively. CV-CNN has relatively low accuracy on the low density urban class. In comparison, 3D-CNN and PolMPCNN achieve higher accuracy, both with OA values above 98%. CEGCN and SGCN-CNN perform excellently across the five land cover types, exceeding 99% accuracy for all classes except vegetation. The proposed MLDnet achieves the highest class accuracy for all five land cover types. In terms of overall metrics, taking OA as an example, MLDnet improves OA over the seven competing algorithms by 5.64%, 3.69%, 2.26%, 1.59%, 1.04%, 0.42%, and 0.50%, respectively.

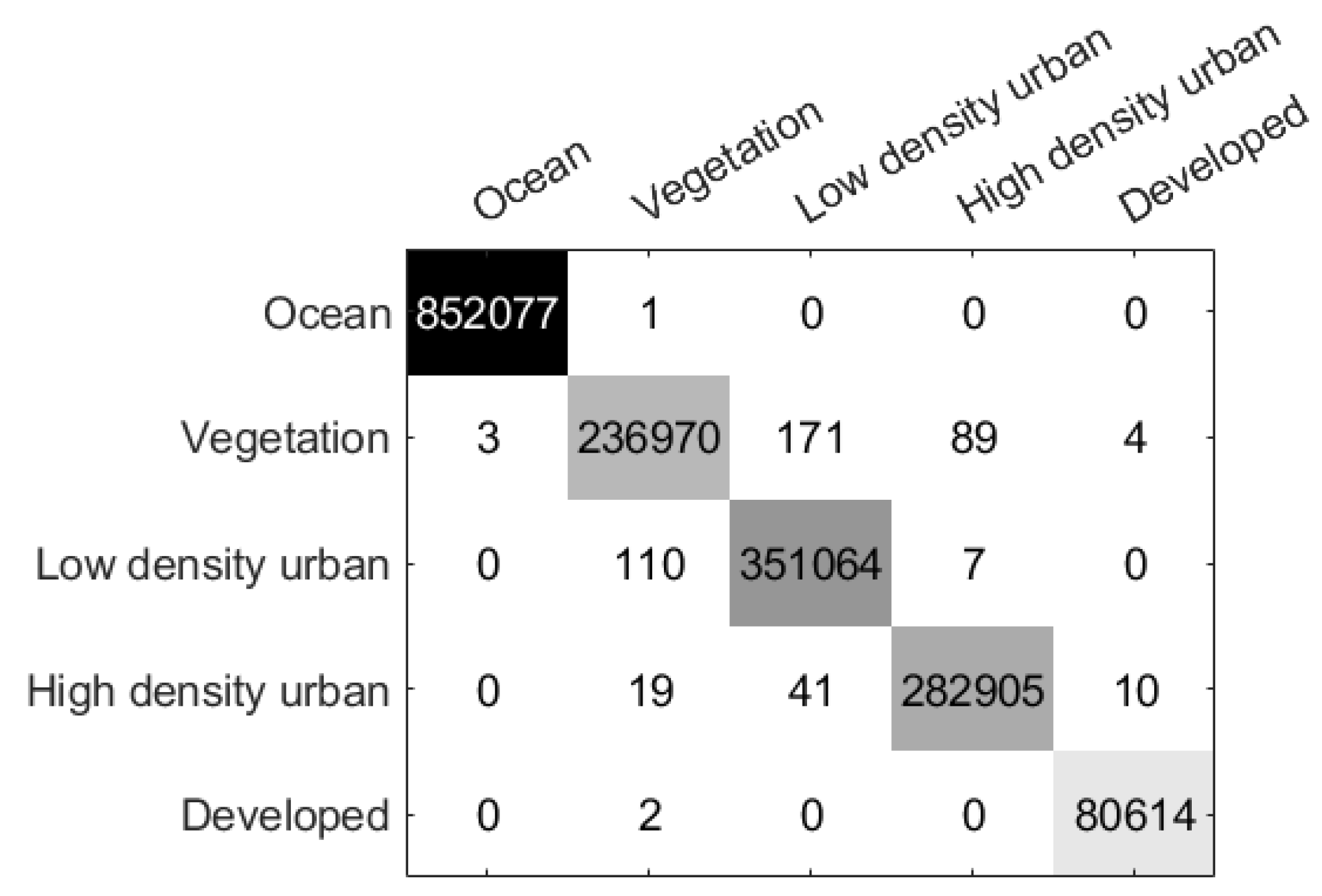

The confusion matrix obtained by MLDnet on the San Francisco data set is presented in Figure 11. MLDnet discriminates the ocean and developed classes best, and most of its misclassifications occur in the vegetation class, which is consistent with Table 5.

3.5. Experimental Results on Flevoland1 Data Set

The experimental results of the various methods on the Flevoland1 data set are depicted in Figure 12. Super-RF in (b) relies on low-level features and has limited ability to classify the urban class. The CNN method in (c) improves classification at land cover boundaries, but its overall performance remains inadequate, with numerous noisy points in the map. CV-CNN in (d) and 3D-CNN in (e) improve regional consistency to some extent, but the improvement is not significant, and clearly misclassified pixels remain in their maps. PolMPCNN in (f) further improves regional consistency and effectively distinguishes the water and woodland classes, with only a small number of misclassified pixels in the cropland and urban classes. CEGCN in (g) and SGCN-CNN in (h) further improve the discrimination of the land cover types, with only a few misclassifications at the boundary between the cropland and urban classes. The proposed MLDnet in (i), which combines the 57-dimensional multi-feature input with the L-DeeplabV3+ network, shows a notable improvement over the previous methods: there is almost no visible misclassification, and both regional consistency and boundary preservation are significantly enhanced.

In addition, to quantitatively assess classification performance, the accuracies of all methods on the Flevoland1 data set are presented in Table 6. Super-RF has the lowest accuracy, 81.84%, on the urban class. CNN has the lowest accuracy on the cropland class, 93.16%. CV-CNN shows clear improvements, especially for the water class, where it reaches 99.85%, second only to the proposed MLDnet. 3D-CNN improves the accuracy on the cropland class over the previous three methods by 2.59%, 3.59%, and 2.81%, respectively. PolMPCNN further improves the metrics for the various classes, achieving an OA of 98.49%. CEGCN and SGCN-CNN further improve the accuracy of the individual land cover types, with all values above 98%. Notably, several compared algorithms have relatively low accuracy on the urban class, suggesting that it is relatively difficult to classify. The proposed MLDnet, however, excels on all land cover types and in overall performance; even for the urban class, its accuracy reaches 99.98%. This demonstrates the effectiveness of the proposed method.

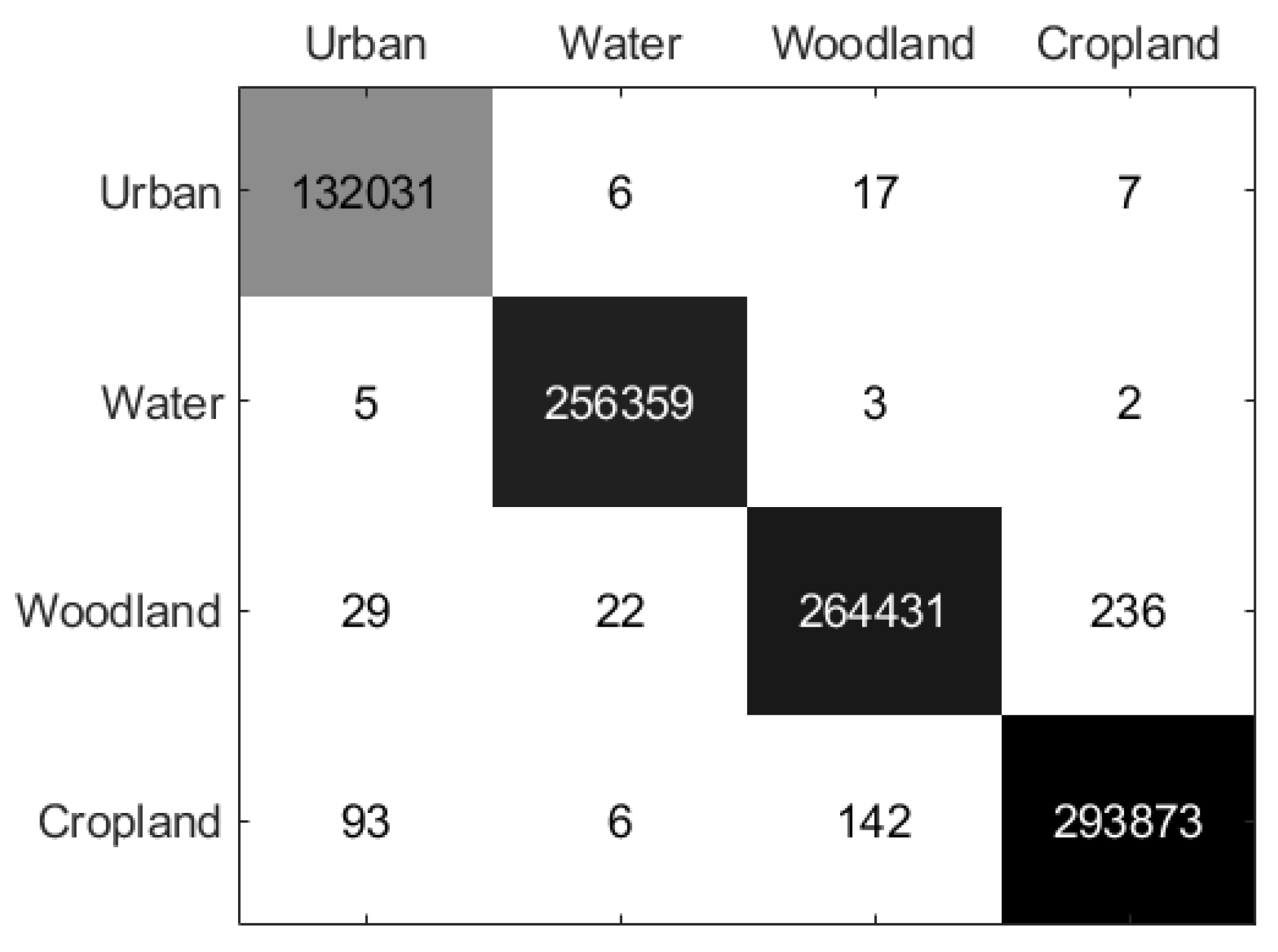

The confusion matrix for the Flevoland1 data set is depicted in Figure 13. With the proposed MLDnet, the number of misclassified pixels is low for all four land covers. In particular, only 10 of the 256369 pixels in the water class of the Flevoland1 data set are misclassified.

3.6. Experimental Results on Flevoland2 Data Set

The experimental results of the proposed MLDnet and the seven compared methods on the Flevoland2 data set are shown in Figure 14. Compared with the ground truth map in (a), the superpixel-level method Super-RF and the pixel-level method CNN show numerous patchy misclassified areas and scattered misclassification noise across multiple classes. CV-CNN achieves better results, although it still shows noticeable misclassified pixels in the rapeseed class. Lacking global information, 3D-CNN produces spotty noise in multiple classes, such as stem beans, wheat, and rapeseed. PolMPCNN effectively learns the features of various land covers through its multi-path architecture but still has difficulty distinguishing similar land covers such as barley, wheat2, wheat3, and rapeseed. CEGCN effectively combines pixel-level and superpixel-level features and distinguishes the land covers well, with only a few misclassifications in the rapeseed and wheat classes. SGCN-CNN shows a small number of misclassifications in the wheat2, rapeseed, and grasses classes. Overall, all seven compared methods misclassify pixels in the rapeseed class to varying degrees, whereas the proposed MLDnet achieves excellent classification across all land covers, including rapeseed. We attribute this to MLDnet's consideration of different land cover characteristics and its extraction of diverse features, such as texture and contour features, which enhances the network’s ability to distinguish similar crops.

Meanwhile, Table 7 lists the per-class accuracy, overall accuracy (OA), average accuracy (AA), and Kappa coefficient of the above methods and the proposed model. Super-RF achieves 100% accuracy on the barley and water classes but misclassifies the entire buildings class, which lowers its average accuracy. CNN slightly improves OA but stays below 90% accuracy in several classes, such as grasses and barley. In contrast, CV-CNN significantly improves both class discrimination and the overall metrics, achieving an OA of 98.96%. Similar to Super-RF, 3D-CNN performs well on the water class but fails on the buildings class. PolMPCNN, on the other hand, distinguishes the buildings class well but has the lowest class accuracy on the barley class, only 40.25%. CEGCN exceeds 99% class accuracy in multiple classes, with its main misclassifications in the peas, grasses, and buildings classes. SGCN-CNN slightly improves on CEGCN, with all metrics exceeding 90%. The proposed MLDnet achieves the highest class accuracy in 13 of the 15 land cover types, with an OA of 99.95%. These results validate the effectiveness and reliability of the proposed method.

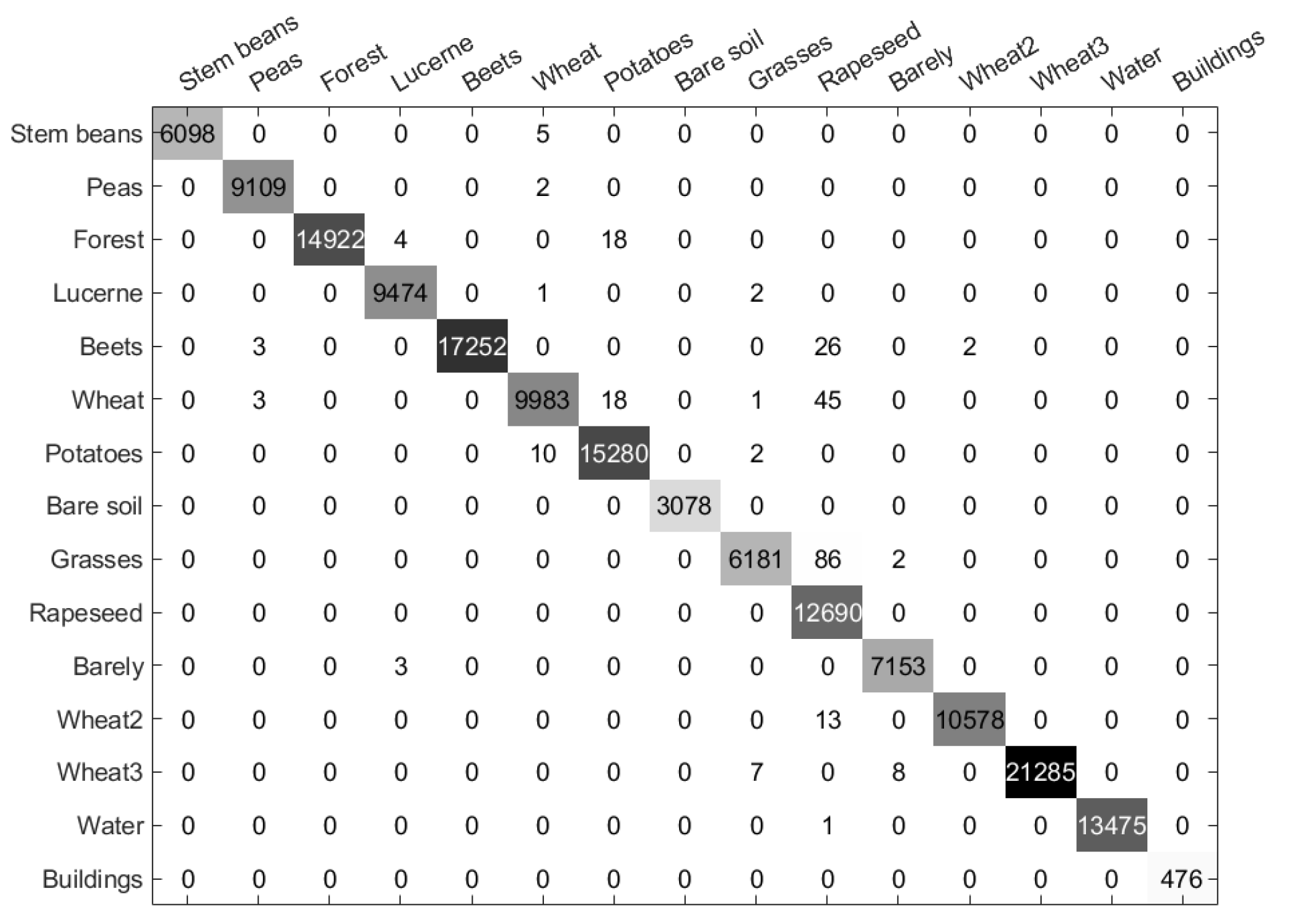

The confusion matrix for the Flevoland2 data set is presented in Figure 15. The proposed method misclassifies only a small number of pixels: the maximum number of misclassified pixels is only 86, whereas every class contains more than a thousand pixels. Even for the buildings class, which has fewer pixels, the proposed method performs well. This demonstrates the method’s ability to classify complex PolSAR data sets with many land cover types.

3.7. Discussion

1) Effect of each submodule:

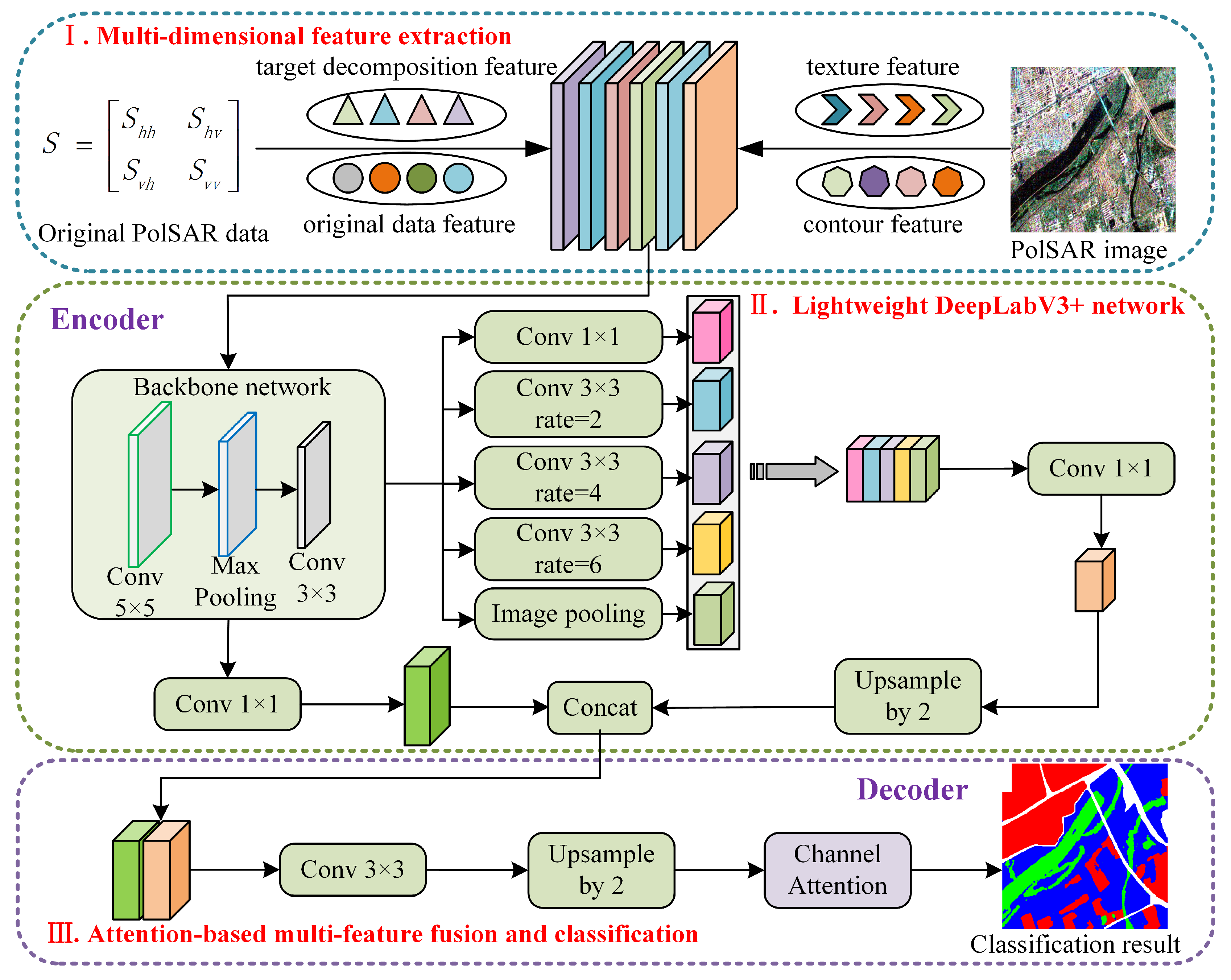

In this paper, the proposed MLDnet uses the 57-dimensional multi-features as the input, L-DeeplabV3+ as the main network, and a channel attention module. To verify the effectiveness of these three modules, we conduct ablation experiments. First, to assess the 57-dimensional multi-features, we replace them with the original 9-dimensional PolSAR data as the input while keeping the other modules unchanged (denoted "without MF"). Second, to validate the L-DeeplabV3+ network, we replace it with a traditional CNN model while keeping the other components the same as in the proposed method (denoted "without L-deeplabV3+"). Finally, to validate the channel attention module, we remove it from MLDnet (denoted "without Attention").

Table 8 presents the classification performance of the three ablation variants and the proposed MLDnet on the five data sets, evaluated by OA and the Kappa coefficient. Each of the three ablation variants achieves satisfactory classification performance, but the proposed MLDnet, which combines all three modules, achieves higher accuracy. For example, compared with "without MF", MLDnet improves OA by 0.52%, 2.94%, 0.75%, 1.03%, and 0.17% on the five data sets, respectively, demonstrating the effectiveness of the multi-feature module. The same conclusion holds for the other two modules, confirming the contribution of each component of the proposed method.

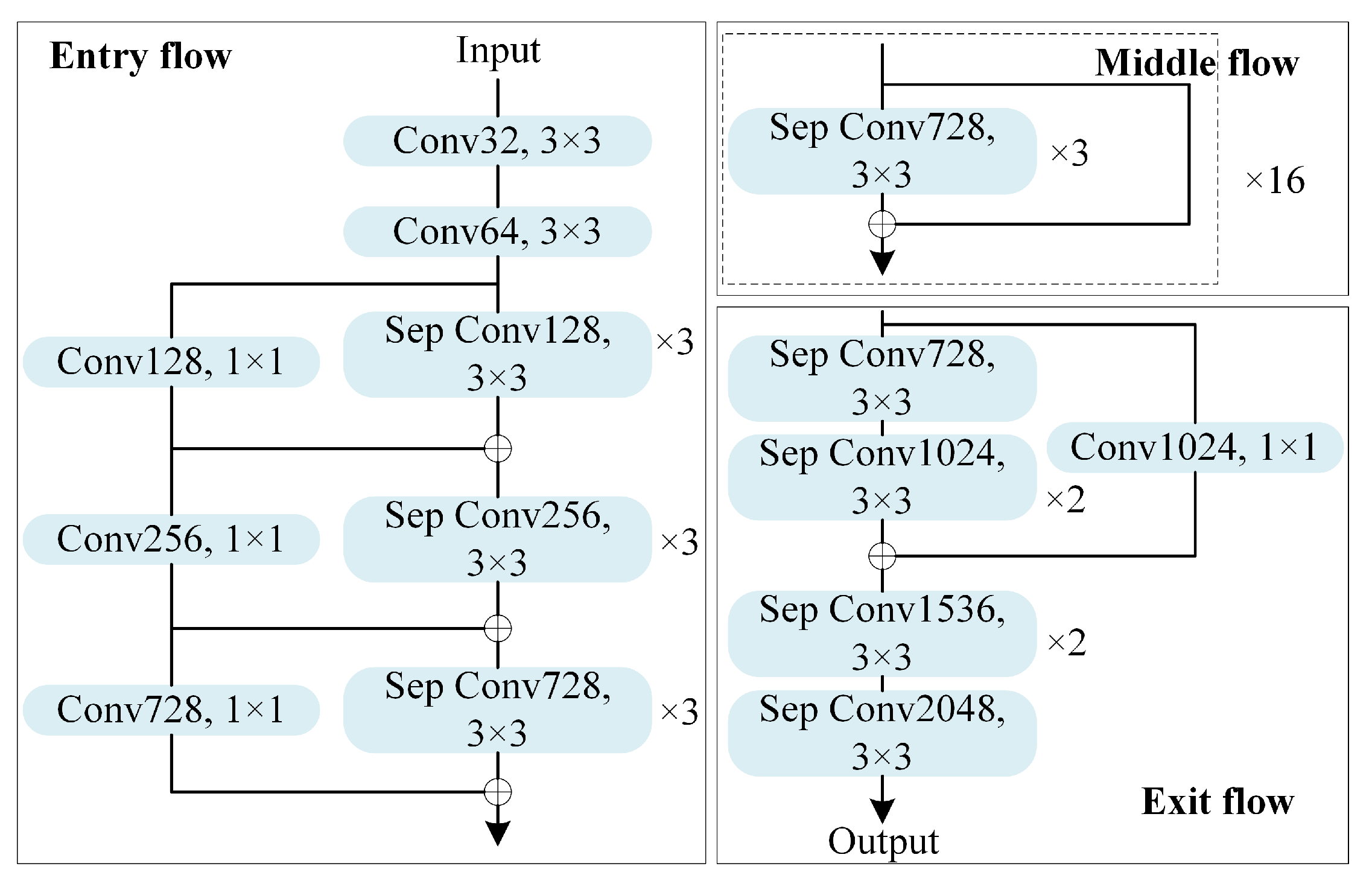

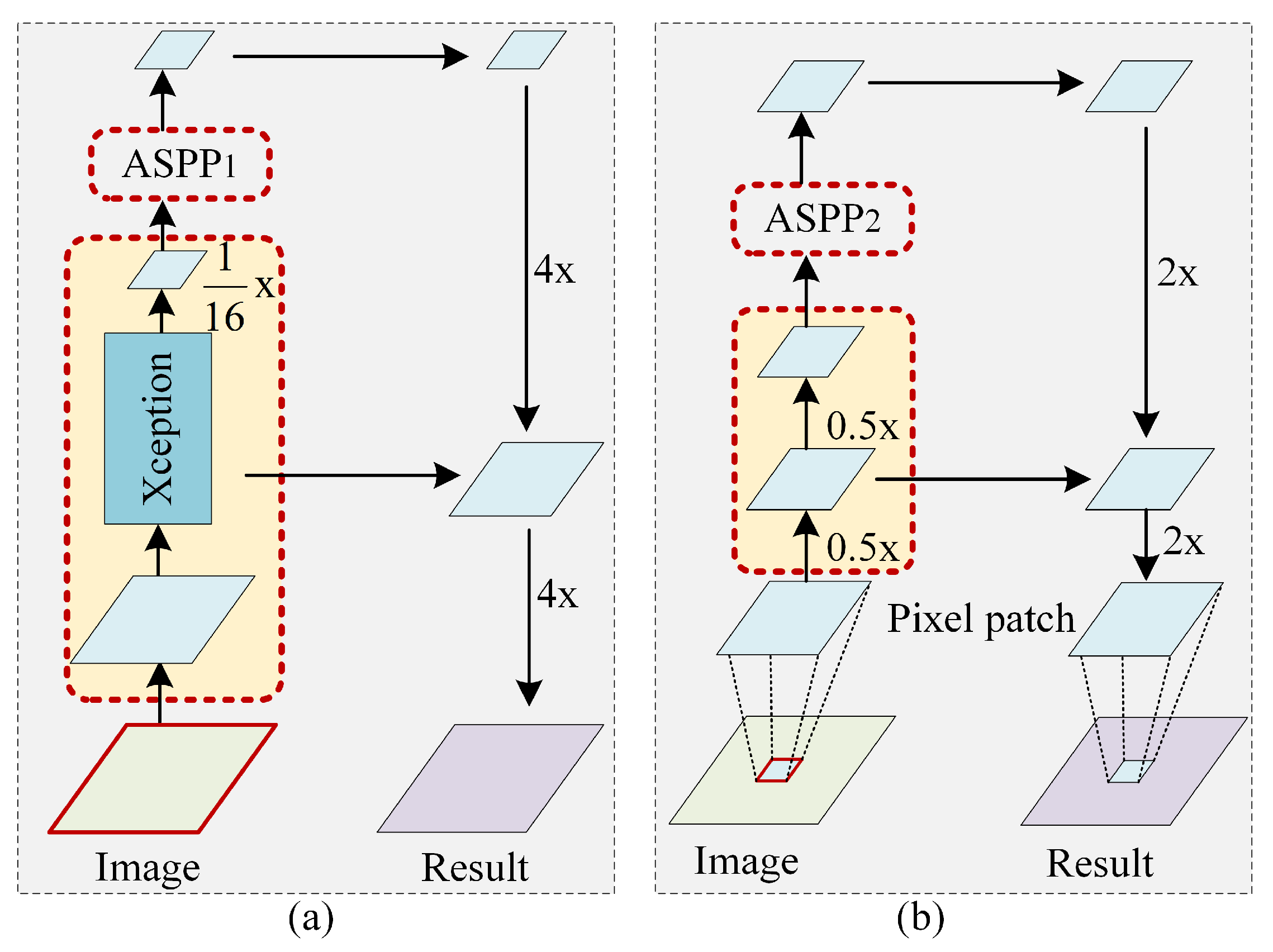

2) Validity analysis of L-deeplabV3+ network: To verify the performance of L-deeplabV3+, the multi-dimensional features extracted from the Flevoland2 data set are fed into the DeeplabV3+ and L-deeplabV3+ networks, respectively, and the results are compared in Table 9. The L-deeplabV3+ network, which is designed around the characteristics of PolSAR data, achieves better classification performance: it obtains the highest accuracy for fourteen of the fifteen land cover types. In terms of the overall evaluation metrics, L-deeplabV3+ improves on the original DeeplabV3+ network by 1.96% and 6.63%, respectively.

3) Validity analysis of multi-features: To verify the effectiveness of the proposed 57-dimensional multi-feature input in MLDnet, we conduct ablation experiments on the Xi’an data set. Specifically, experiments are performed within the proposed network framework using the original data features (16 dimensions), the target decomposition features (17 dimensions), and the texture and contour features (24 dimensions), which we name feature1, feature2, and feature3, respectively. The results are shown in Figure 16. Classification with only the original data (feature1) cannot classify heterogeneous regions well (e.g., the region in the yellow circle in Figure 16(b)), since scattering varies greatly within heterogeneous objects. Compared with feature1, feature2 improves classification performance through multiple target decomposition features, but the edges of urban areas, such as the region in the black circle, are still not well identified. Feature3, consisting of contour and texture features, identifies the urban and grass areas well but confuses some pixels in the water area. With the combined multi-features, the proposed method achieves superior performance in both heterogeneous and water areas.
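The 57-dimensional input is the channel-wise concatenation of the three feature groups (16 + 17 + 24 = 57). A minimal sketch of that stacking step follows, assuming each feature map is stored as a flat list of per-pixel values and that each channel is z-score normalized before concatenation; the normalization and all names are our assumptions, not details given in this section.

```python
from typing import Dict, List

Channel = List[float]


def stack_features(groups: Dict[str, List[Channel]]) -> List[Channel]:
    """Concatenate feature groups along the channel axis, z-scoring each channel.

    groups maps a group name to its channels; every channel is a flat list with
    one value per pixel, and all channels must cover the same pixels.
    """
    stacked: List[Channel] = []
    for channels in groups.values():  # dicts keep insertion order (Python 3.7+)
        for ch in channels:
            mean = sum(ch) / len(ch)
            std = (sum((v - mean) ** 2 for v in ch) / len(ch)) ** 0.5
            std = std if std > 0 else 1.0  # constant channel: avoid division by zero
            stacked.append([(v - mean) / std for v in ch])
    return stacked
```

Calling it with groups of 16, 17, and 24 channels yields 57 channels, matching the input dimensionality described above; per-channel normalization keeps features with very different numeric ranges (powers, decomposition parameters, texture statistics) on a comparable scale.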

In addition, to quantitatively evaluate the effectiveness of the multi-features, the classification accuracies obtained with the different features are given in Table 10. As noted above, the original data features alone are insufficient for classifying heterogeneous targets. For instance, for the building class, feature1 has the lowest accuracy, only 85.95%, whereas feature2 and feature3 both exceed 97%. For the relatively homogeneous grass class, feature3, which uses texture and contour features, has a clear advantage. For the water class, which has intricate boundaries, feature2, which uses target decomposition features, achieves higher class accuracy. The 57-dimensional multi-feature input used in this paper combines these complementary features and therefore achieves the best classification performance. In terms of OA, it improves on the three individual feature sets by 5.99%, 4.13%, and 1.4%, respectively.

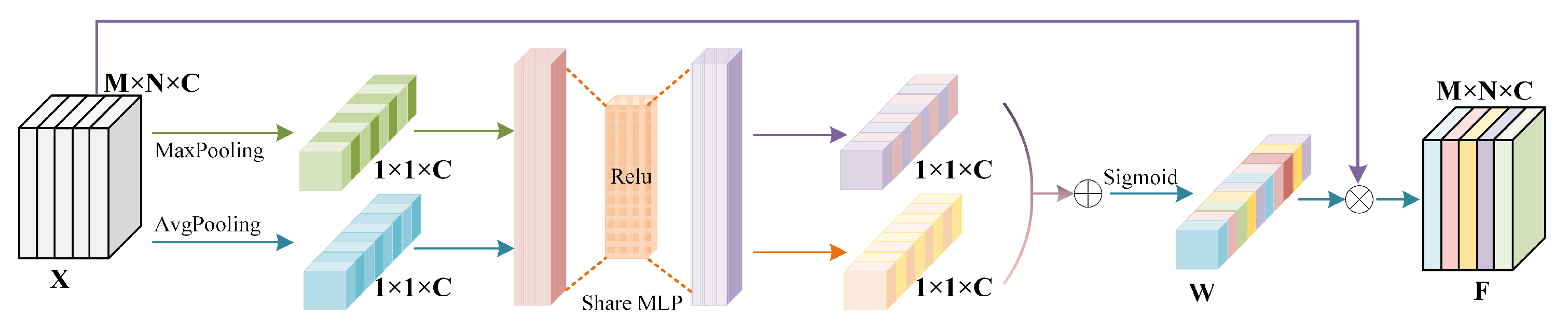

4) Validity analysis of channel attention module: Feature selection is one of the key factors influencing classification performance. To verify the effectiveness of the channel attention module in our framework, we compare different positions and numbers of attention modules on the Xi’an data set, as shown in Table 11. Because different types of features may be cross-fused by convolution operations, we insert varying numbers of channel attention modules before and after the L-deeplabV3+ network in three sets of experiments. The variants with attention modules placed before the L-deeplabV3+ network are named front1, front2, and front3, where the number indicates how many channel attention modules are added; the variants with modules placed after the network are named behind1, behind2, and behind3. The table shows that adding multiple channel attention modules, whether before or after the convolution operations, degrades classification performance to varying degrees while increasing the number of model parameters and thus the computational burden. Moreover, behind1 clearly outperforms front1 in both class accuracy and overall metrics, with class accuracy improvements of 7.15%, 5.45%, and 3.38%, respectively. Therefore, the best strategy for MLDnet is to introduce a single channel attention module after the L-deeplabV3+ network (behind1), which enhances classification performance while keeping the computational burden low.

In summary, the experimental results show that introducing an appropriate number of channel attention modules in the network, especially when placed after the network, can effectively improve the classification performance of PolSAR images. At the same time, avoiding the excessive addition of attention modules prevents a significant increase in model complexity and computational cost, thus achieving a balance between performance and efficiency.
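The internal design of the channel attention module is not specified in this section. A common choice is a squeeze-and-excitation (SE) block: global average pooling per channel, a two-layer bottleneck with ReLU and sigmoid, then channel-wise rescaling. The dependency-free sketch below illustrates that assumed design on small lists; the weight shapes, the omission of biases, and the function name are hypothetical, and in practice the block would be a learned PyTorch layer.

```python
import math
from typing import List

Vector = List[float]


def channel_attention(x: List[Vector], w1: List[Vector], w2: List[Vector]) -> List[Vector]:
    """SE-style channel attention (assumed design, biases omitted).

    x:  C channels, each a flat list of H*W activations
    w1: (C // r) rows of C weights   (squeeze -> bottleneck)
    w2: C rows of (C // r) weights   (bottleneck -> per-channel score)
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    z = [sum(ch) / len(ch) for ch in x]
    # Excitation: FC + ReLU, then FC + sigmoid -> one weight in (0, 1) per channel.
    h = [max(0.0, sum(w * zi for w, zi in zip(row, z))) for row in w1]
    s = [1.0 / (1.0 + math.exp(-sum(w * hi for w, hi in zip(row, h)))) for row in w2]
    # Scale: reweight every activation of channel c by s[c].
    return [[sc * v for v in ch] for sc, ch in zip(s, x)]
```

With all-zero weights every channel receives the weight sigmoid(0) = 0.5, which is a quick sanity check; each added module of this kind contributes two weight matrices, which is consistent with the parameter growth noted for the front2/front3 and behind2/behind3 variants.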

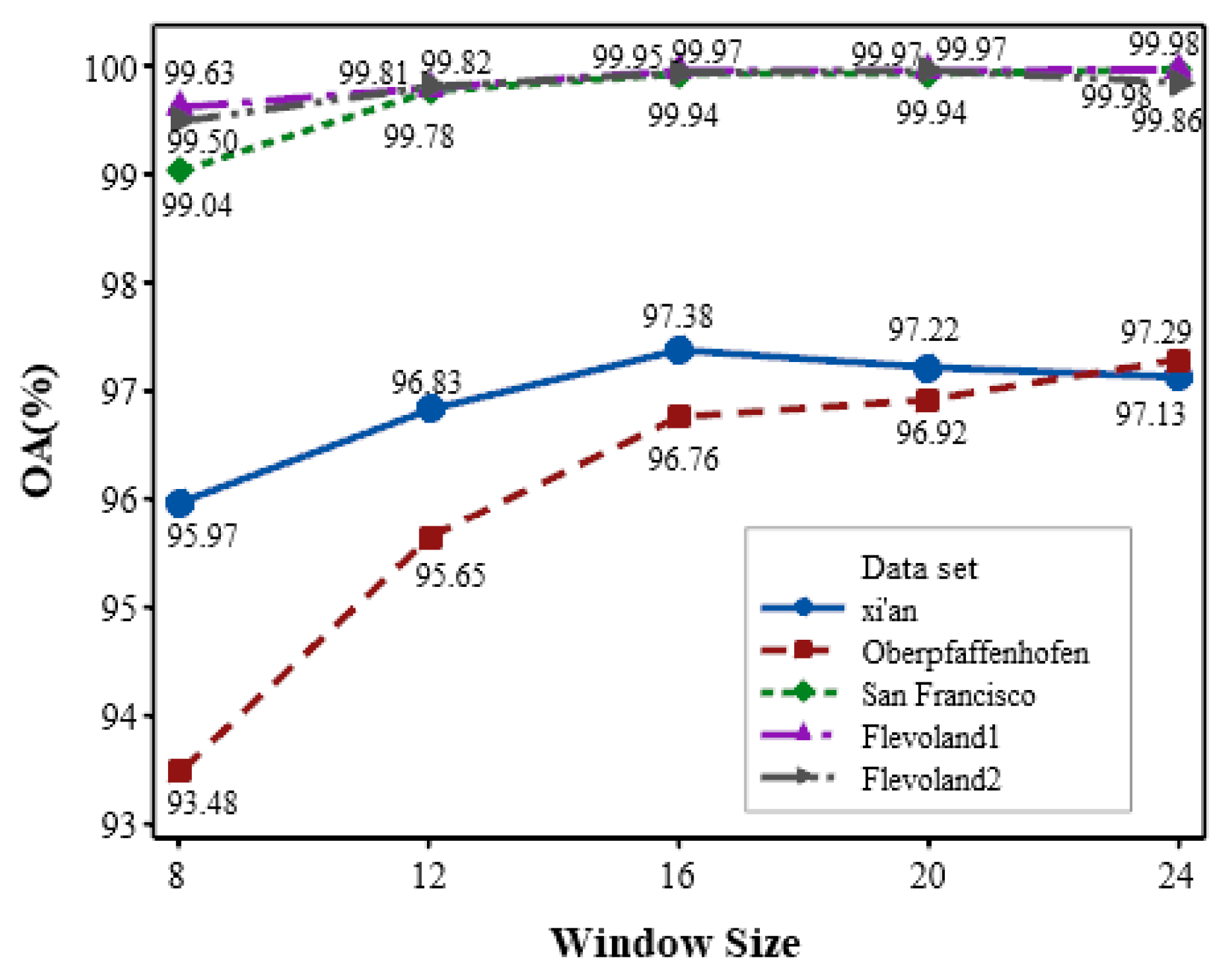

5) Effect of sampling window size:

Figure 17 summarizes how the overall classification accuracy changes with the sampling window size. The size of the square sampling window varies from

to

. For most data sets, the curve rises gradually as the sampling window grows. However, OA decreases slightly on the Xi’an data set when the window size exceeds 16. The reason is that a window that is too small cannot effectively capture contextual information, while a window that is too large may include much irrelevant neighborhood information, leading to misclassification. Moreover, once the window size reaches

, the OA values of the five data sets no longer increase significantly. For the Flevoland1 and San Francisco data sets in particular, as the window size increases from

to

, the OA values increase by only 0.04% and 0.01%, respectively, whereas the network’s complexity increases significantly. Therefore, considering both classification performance and time complexity, we set the sampling window size to

.
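With a sampling window, each labeled pixel is represented by the square neighborhood of feature vectors around it. A sketch of that patch extraction follows, assuming zero padding at image borders and, for even window sizes, size // 2 pixels before the center and size // 2 - 1 after it; both choices and all names are hypothetical, since the paper does not describe them here.

```python
from typing import List

Image = List[List[List[float]]]  # image[row][col] -> per-pixel feature vector


def extract_patch(image: Image, r: int, c: int, size: int) -> Image:
    """Return the size x size neighborhood of pixel (r, c), zero-padded at the borders.

    For even sizes the window spans size // 2 pixels before the center
    and size // 2 - 1 pixels after it.
    """
    rows, cols, depth = len(image), len(image[0]), len(image[0][0])
    top, left = r - size // 2, c - size // 2
    patch: Image = []
    for i in range(top, top + size):
        line = []
        for j in range(left, left + size):
            inside = 0 <= i < rows and 0 <= j < cols
            line.append(list(image[i][j]) if inside else [0.0] * depth)
        patch.append(line)
    return patch
```

Because each sample holds size × size × depth values, the input volume grows with the square of the window side, which is one reason larger windows raise the network's cost in the trade-off discussed above.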

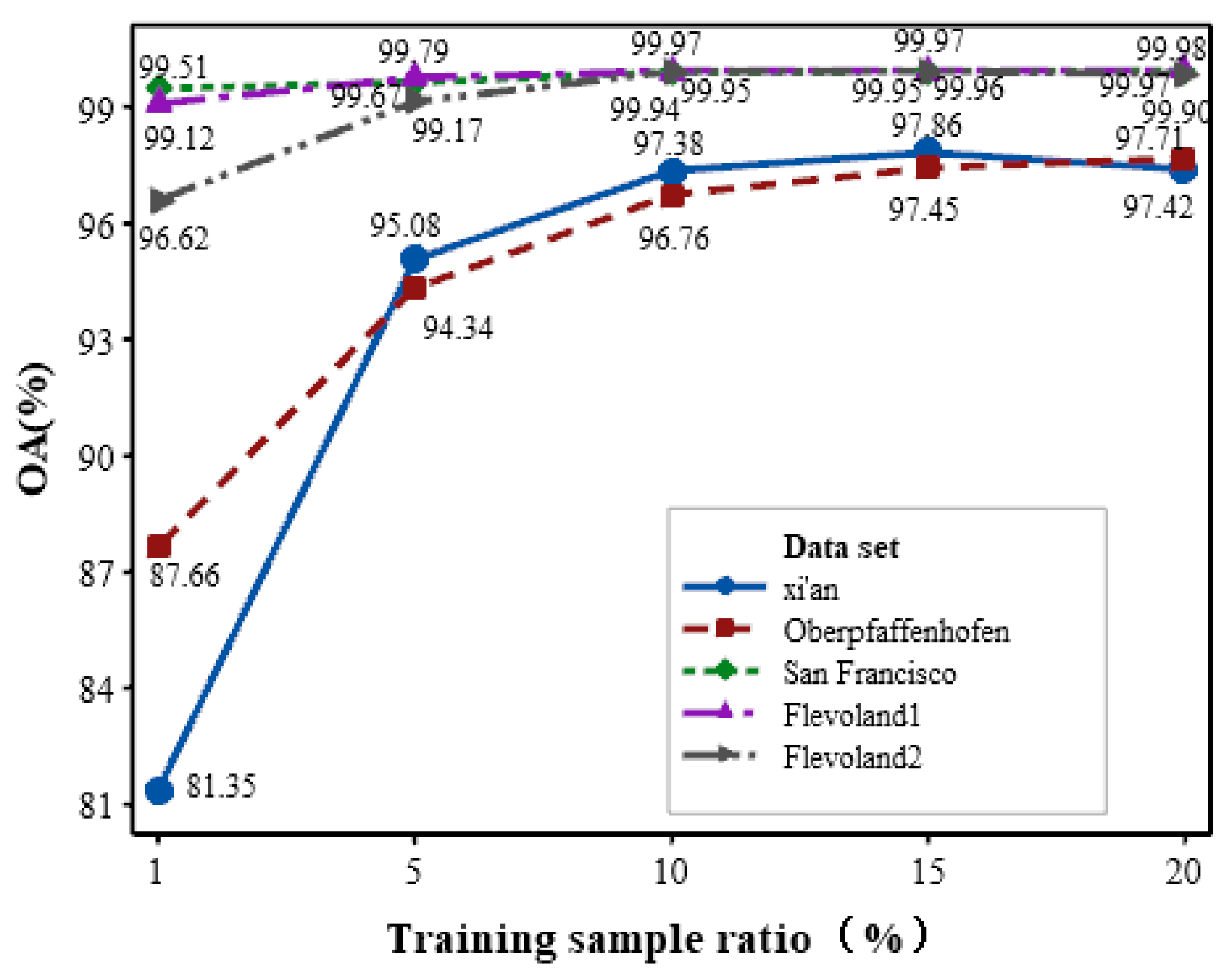

6) Effect of training sample ratio: The proportion of training samples strongly influences classification performance. To analyze its impact on the accuracy of MLDnet, we vary the training sample ratio over 1%, 5%, 10%, 15%, and 20% and conduct experiments on the five data sets. The effect of the training sample ratio on classification accuracy is shown in Figure 18. For the Oberpfaffenhofen, Flevoland1, Flevoland2, and San Francisco data sets, the model becomes stable once the training sample ratio reaches 10%, and OA no longer increases significantly. For the Xi’an data set, performance is stable when the ratio is between 10% and 15%, but OA decreases as the ratio grows from 15% to 20%. This may be because increasing the training sample ratio exacerbates the sample imbalance in the Xi’an data set, leading the model to overfit. Therefore, considering the results on all data sets, we choose a training sample ratio of 10% for the experiments.

7) Analysis of running time:

Table 12 summarizes the running time of each method on the Xi’an data set. The traditional Super-RF method consumes the least time in both training and testing compared with the deep learning methods. MLDnet requires slightly more time than the CNN, CEGCN, SGCN-CNN, and 3D-CNN methods, but it significantly reduces training and testing time compared with CV-CNN and PolMPCNN. In addition, the proposed MLDnet takes less time than DeeplabV3+ in both the training and testing stages, which demonstrates the effectiveness of the proposed lightweight DeeplabV3+ network. MLDnet also achieves the highest classification accuracy. This indicates that the proposed method can significantly improve the classification accuracy of PolSAR images without a large increase in running time, achieving a good balance between time and performance.