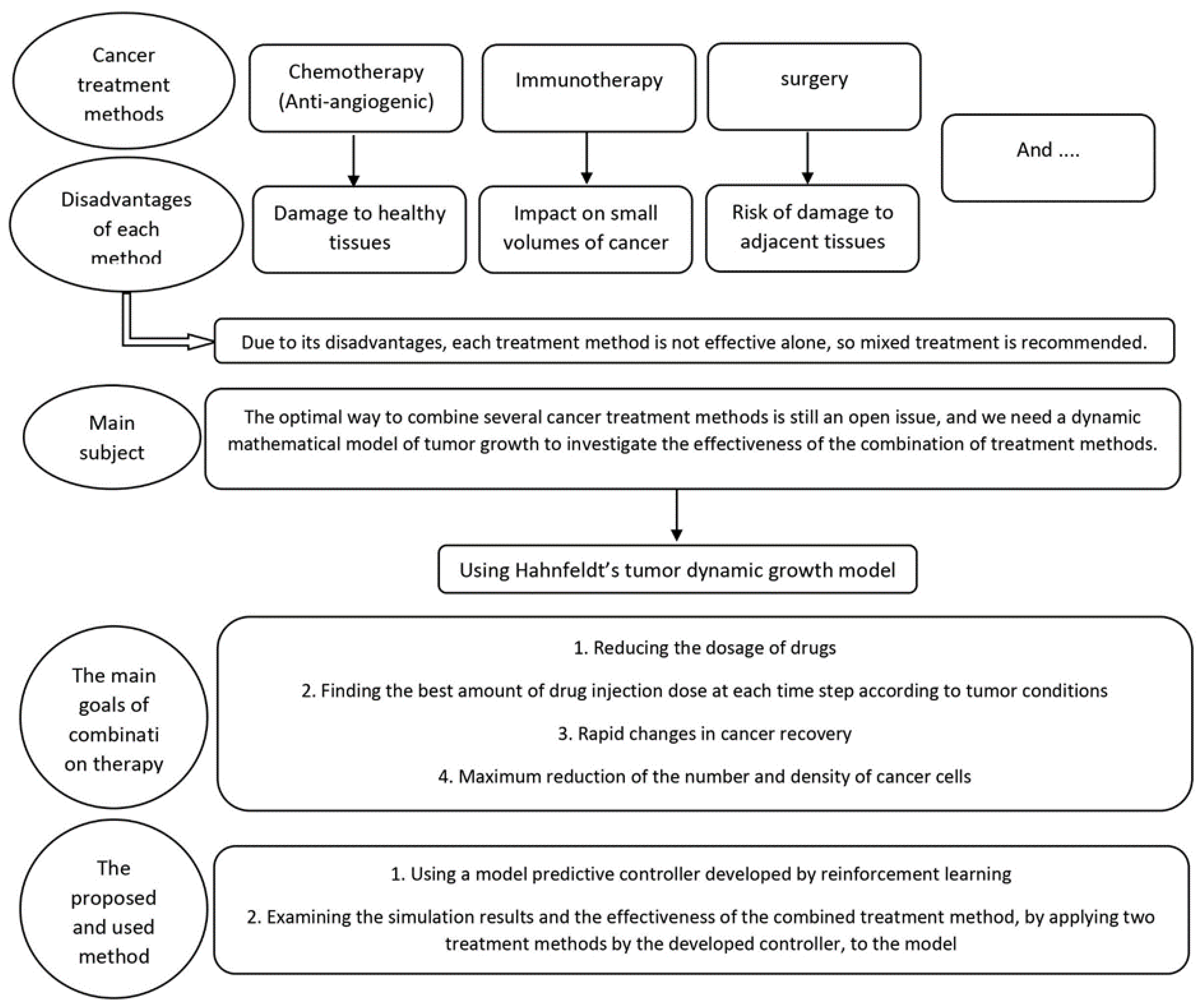

1. Introduction

Cancer is one of the most pressing health challenges of our time. It is the second leading cause of death worldwide, with millions of new cases diagnosed every year. Despite significant advances in cancer treatment and research, much remains to be done to find effective treatments [11]. Several types of cancer treatment exist, including surgery, immunotherapy, radiotherapy, and chemotherapy. Surgery is often the most effective treatment for localized tumors in the early stages [4], as it can remove the tumor completely. However, it is not suitable for metastatic disease, and it carries a risk of damage to adjacent tissues.

Radiotherapy and chemotherapy are therapeutic methods based on targeted molecular therapy. Chemotherapy is the most common cancer treatment method [4,22] and, in some cases, the only treatment available. Chemotherapy and radiotherapy have two main drawbacks. 1. Cancer cells tend to grow rapidly, and chemotherapy drugs work by destroying rapidly growing cells; since these drugs circulate throughout the body, they also affect normal, healthy cells that grow rapidly. Damage to healthy cells is therefore the main side effect of chemotherapy and radiotherapy. 2. Cancer cells can change in order to survive chemotherapy, so chemotherapy alone often fails [11].

In recent years, a new type of immunotherapy has emerged that targets the blood vessels that supply tumors with nutrients and oxygen. This therapy, known as anti-angiogenic therapy, has shown great promise in the fight against cancer. Anti-angiogenic therapy is a form of molecular targeted therapy in which drugs prevent the formation of new blood vessels that feed the tumor, thereby preventing the tumor from growing to a critical size [1,7,12,19]. Compared with other treatments, one of the main advantages of anti-angiogenic therapy is that tumor cells do not become resistant to anti-angiogenic drugs. In addition, anti-angiogenic drugs cause fewer side effects because of their low toxicity [11]. However, anti-angiogenic therapy does not remove the entire tumor; it only stops its growth and keeps it at its initial stage. Therefore, this method is usually combined with conventional methods to remove the entire tumor [13,19]. Over the last few decades, immunotherapy has also become an important part of the treatment of some cancers. It uses the body's immune system to fight cancer, with fewer side effects than targeted molecular treatments such as chemotherapy [2,3]. However, given the many available treatment methods and the drawbacks of each, finding an optimal combination therapy remains an open problem.

There are various mathematical models of the interaction between a tumor and the immune system [4], for example those of Kuznetsov et al. [5] and de Vladar & González [6], Stepanova's classic article, and Hahnfeldt's dynamic tumor growth model [14]. In 1999, Hahnfeldt et al. [14] presented a simple system of a few ordinary differential equations describing the growth of the number of tumor cells in the presence of an angiogenesis inhibitor as a treatment. With this model and an appropriate selection of inputs, the interaction between tumor growth and immune system activity during cancer development can be described. The model was subsequently modified in articles published in 2005, 2011, and 2012 [15-17]. One of the key findings of this model is that for small cancer volumes, the immune system can effectively control cancer growth, whereas for larger volumes, cancer dynamics can suppress immune dynamics. This highlights the importance of combining different treatment methods to achieve optimal results.

Controllers are commonly used in cancer treatment. For example, a 2017 article [10] used a multiple model predictive controller (MMPC) to determine the optimal treatment plan for a combination of chemotherapy and immunotherapy in the modified Stepanova model. The results showed that MMPC reduced both tumor volume and drug dosage, making it an efficient strategy. In a 2020 article [9], unlike other works that used optimal control strategies, a positive Takagi-Sugeno (T-S) fuzzy controller was proposed for combined immunotherapy and chemotherapy, and it reduced both the tumor cell volume and the drug doses to a minimum. These findings demonstrate the potential of controllers to optimize treatment plans and improve patient outcomes.

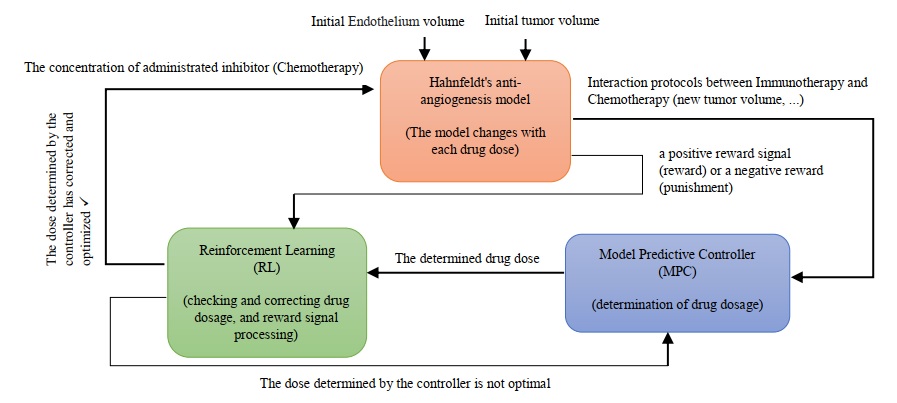

Cancer treatment is challenging because of the complex and dynamic nature of the human body and of the cancer system. The constantly changing environment and the activity of cancer cells make it difficult to develop effective treatments. Despite the numerous treatments and methods available, cancer has not been completely eliminated; at best, tumor size has been stabilized or reduced. This is due to uncertainties, disturbances, and unknown factors that have not been considered in the treatment process. To address this challenge, we consider two treatment methods, chemotherapy and anti-angiogenic therapy (treated here as a branch of immunotherapy), first individually and then in combination. We propose a new approach that uses reinforcement learning (RL) to develop a predictive controller that determines the most effective drug dose at any moment. RL is a learning technique in which an agent learns, through interaction with its environment, which action to take in each situation to achieve the best results; this allows the method to account for uncertainty. Specifically, we develop a model predictive controller (MPC) combined with reinforcement learning that determines the most effective drug dose at each moment according to the unstable conditions of the system, and that can be used for both immunotherapy and chemotherapy. The efficiency of the method is demonstrated through simulation, and these results may help guide the development of combination therapies. An overview of the research method is shown in Figure 1.

The rest of this paper is organized as follows. Section 2 describes the model and the control problem. Section 3 develops a controller based on the model predictive control scheme combined with reinforcement learning. Section 4 presents the simulation results, and Section 5 presents the discussion and conclusions.

2. Materials and Methods

This section describes the system model and then formulates the control problem.

2.1. Model of Tumor Growth Dynamics

We consider the dynamic model of tumor growth under anti-angiogenic therapy first developed in 1999 by Hahnfeldt et al. [14]. A further developed nonlinear dynamic model is presented in [16]. By adding the integrated administered inhibitor as a new term to the model [17] and assuming that the clearance rate of the administered inhibitor is known, the final, simpler dynamic model of tumor growth under an angiogenesis inhibitor can be described as follows [12,15,18]:

(1)

The endothelium is a single layer of squamous endothelial cells that lines the interior surface of blood vessels and lymphatic vessels. The model parameter values are presented in Table 1.

Because this model captures both the growth and the reduction of tumor volume under the effect of anti-angiogenic drugs, it is widely used [18]. The model parameters are defined in Table 2.
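To make the model class concrete, the sketch below integrates Hahnfeldt-type dynamics in the form commonly written in the optimal-control literature, $\dot p = -\xi p \ln(p/q)$ and $\dot q = b p - (\mu + d p^{2/3}) q - G u q$, where $p$ is the tumor volume, $q$ the endothelial support (carrying capacity), and $u \in [0, 1]$ the normalized anti-angiogenic dose rate. This is an assumption: Eq. (1) may contain further terms, and all parameter values below are illustrative placeholders, not the values of Table 1.

```python
import math
from dataclasses import dataclass


@dataclass
class ModelParams:
    # Illustrative placeholder values (assumed); NOT the values of Table 1.
    xi: float = 0.192    # tumor growth parameter [1/day]
    b: float = 5.85      # stimulation of endothelial support by the tumor [1/day]
    d: float = 0.00873   # inhibition of endothelial support by the tumor
    mu: float = 0.0      # natural loss rate of endothelial support [1/day]
    G: float = 10.0      # anti-angiogenic effect at the normalized maximum dose


def rhs(p, q, u, prm):
    """Assumed Hahnfeldt-type dynamics: p = tumor volume, q = endothelial support."""
    dp = -prm.xi * p * math.log(p / q)
    dq = prm.b * p - (prm.mu + prm.d * p ** (2.0 / 3.0)) * q - prm.G * u * q
    return dp, dq


def simulate(p0, q0, dose, days, dt=0.01, prm=None):
    """Forward-Euler integration; dose(t) is clipped to the normalized range [0, 1]."""
    prm = prm or ModelParams()
    p, q, t = p0, q0, 0.0
    for _ in range(int(round(days / dt))):
        u = min(max(dose(t), 0.0), 1.0)
        dp, dq = rhs(p, q, u, prm)
        # Small positive floor keeps both volumes valid for the logarithm.
        p = max(p + dt * dp, 1e-9)
        q = max(q + dt * dq, 1e-9)
        t += dt
    return p, q


p_untreated, _ = simulate(12000.0, 15000.0, lambda t: 0.0, days=30)
p_treated, _ = simulate(12000.0, 15000.0, lambda t: 1.0, days=30)
```

With these placeholder values, the untreated tumor grows while a constant maximum dose shrinks it over 30 days; forward Euler is used only for brevity, and a stiff ODE solver would be preferable for real parameter sets.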

2.2. Controller Implementation Problem

The main goal of anti-cancer drugs is to reduce the number of tumor cells, but at the same time, they have destructive effects on the body. Therefore, we design an MPC controller based on RL to combine treatment methods, in which RL adjusts the drug dose so that the MPC performs optimally. In this way, we aim to find the best drug dose, causing less damage to the body and speeding up the healing process.

2.3. Controller Design

In this section, the goal is to implement a controller that can optimally correct its behavior according to the current conditions at each moment.

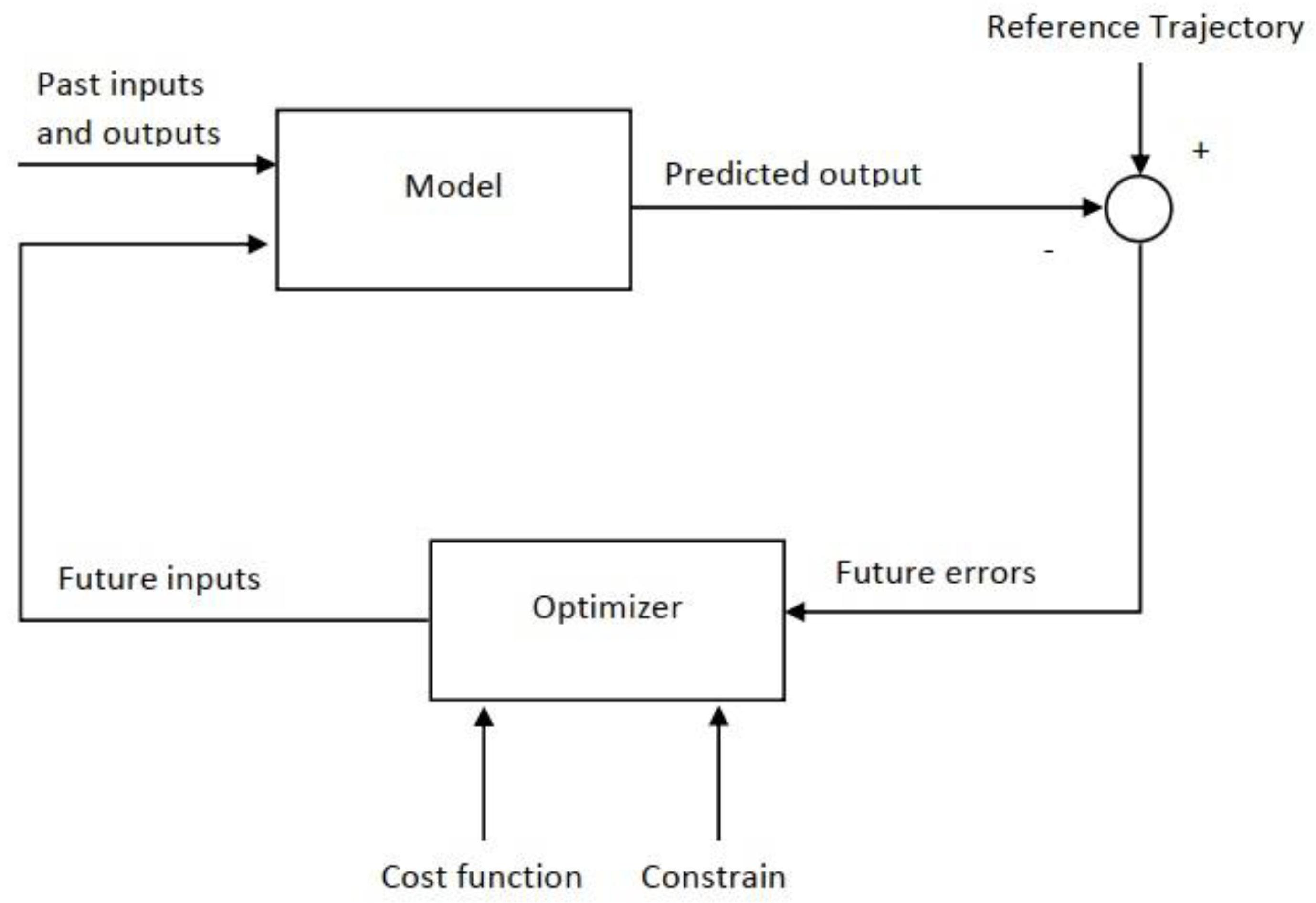

The most important advantage of model predictive control (MPC) over other controllers is that it handles constraints directly and effectively [20]. MPC uses the inputs and outputs of the process up to the current time to predict its future outputs, and in this way provides a real-time solution to the control problem. The basic MPC algorithm is shown in Figure 2, as described in [21].

2.4. Model Predictive Controller

As mentioned, MPC predicts the output from the inputs and past outputs of the process, and its goal is to minimize the difference between the predicted trajectory and the desired trajectory. It does this by computing a sequence of control actions that keeps the predicted trajectory within the constraints. An important step in designing an MPC is to choose the objective function, which is defined as follows:

(2)

The constraints of this system are as follows:

By definition of the chemotherapy and anti-angiogenic agents, normalizing the maximum dose rates to 1 gives the following inequalities:

(3)

(4)

The tumor volume and the immune-competent cell density must be nonnegative:

(5)

(6)

The MPC parameter values are presented in Table 3, and their definitions in Table 4.
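The receding-horizon idea behind Eqs. (2) to (6) can be sketched as follows. Because the exact objective (2) and the Table 3 settings are not reproduced here, this sketch assumes a scalar surrogate tumor model, a quadratic cost (tracking error plus dose penalty), a small grid of admissible normalized doses satisfying $0 \le u \le 1$, and a nonnegativity constraint on the state; all names and values are hypothetical.

```python
import itertools

# Hypothetical scalar surrogate (NOT Eq. (1)): normalized tumor volume x with
# logistic growth and a kill term proportional to the normalized dose u.
R, K, E, TS = 0.2, 1.0, 0.5, 1.0            # assumed growth, capacity, kill rate, sample time [day]
DOSE_LEVELS = (0.0, 0.25, 0.5, 0.75, 1.0)   # admissible doses, 0 <= u <= 1


def predict(x, u):
    """One-step prediction; the state constraint x >= 0 is enforced by clipping."""
    return max(x + TS * (R * x * (1.0 - x / K) - E * u * x), 0.0)


def cost(x0, seq, x_ref=0.0, lam=0.1):
    """Assumed quadratic objective: tracking error to x_ref plus a dose penalty."""
    x, j = x0, 0.0
    for u in seq:
        x = predict(x, u)
        j += (x - x_ref) ** 2 + lam * u ** 2
    return j


def mpc_action(x0, horizon=2):
    """Search all admissible dose sequences over the horizon; return the first move."""
    best = min(itertools.product(DOSE_LEVELS, repeat=horizon),
               key=lambda seq: cost(x0, seq))
    return best[0]


def closed_loop(x0, days=30, horizon=2):
    """Receding horizon: re-optimize at every sample and apply only the first dose."""
    x, doses = x0, []
    for _ in range(days):
        u = mpc_action(x, horizon)
        doses.append(u)
        x = predict(x, u)
    return x, doses
```

Exhaustive search is used only because the toy problem is tiny (5^N candidate sequences for horizon N); a practical MPC would hand the same cost and constraints to a numerical optimizer.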

3. Theory and Calculation

3.1. Reinforcement Learning-Based Model Predictive Controller Design

Designing an optimal model-based controller for nonlinear systems is one of the most challenging problems in control theory. In this section, a reinforcement learning-based model predictive controller is developed for cancer therapy.

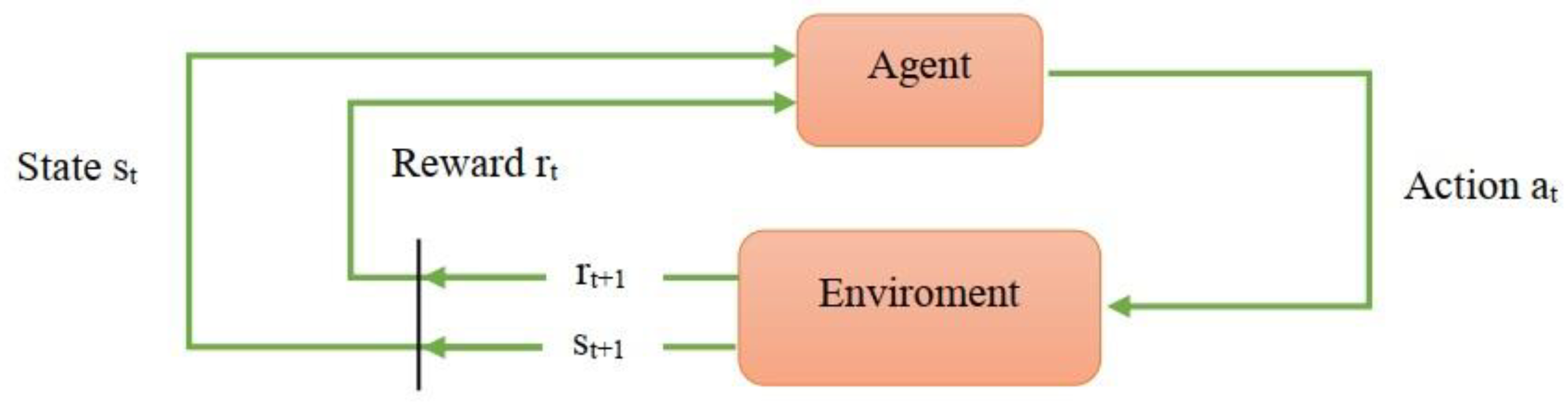

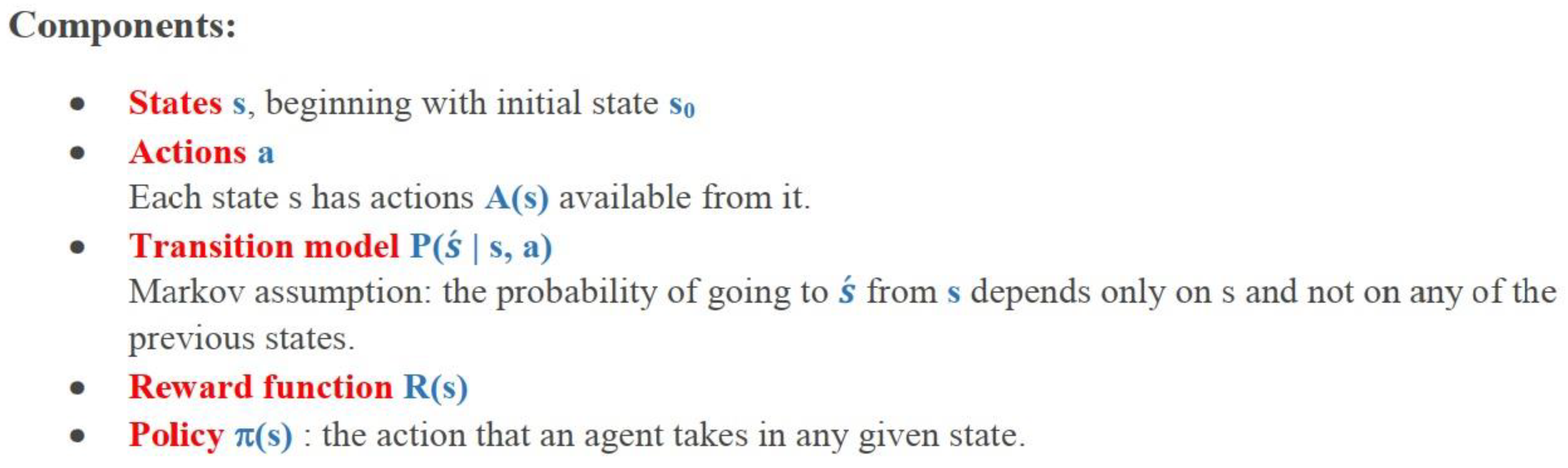

Reinforcement learning is a class of machine learning methods in which learning is based on data received through interaction with the environment. In reinforcement learning, the agent knows the current state ($S_t$), performs an action ($A_t$) in that state, and moves to a new state ($S_{t+1}$). Each action changes the state of the system, and the agent then receives from the environment either a positive reward signal (reward) or a negative one (punishment), $R_{t+1}$. The agent processes the received reward and, in the new state, performs a new action based on it. In this way, with every action it takes, the agent learns which action yields the most reward or the least punishment in each situation. The function by which the agent chooses a specific action in a specific state is called the policy. A particular advantage of reinforcement learning is that it can control systems whose dynamics are unknown, for example an environment that is unstable or subject to disturbances. The agent's aim is to find the policy that maximizes the total received reward.

Figure 3 presents a schematic view of the RL problem. The principles of reinforcement learning are shown as a flowchart in Figure 4.
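The interaction loop described above (observe $S_t$, act $A_t$, receive $R_{t+1}$ and $S_{t+1}$) can be sketched with a toy environment. The environment below is entirely hypothetical: five discrete tumor levels, a binary dose action, a random disturbance, and a reward that penalizes remaining tumor and drug use. It is meant only to illustrate the loop, not the paper's model.

```python
import random


class ToyTumorEnv:
    """Hypothetical environment: state = tumor level 0..4, action = dose 0 or 1."""

    def __init__(self, start=4, seed=0):
        self.start = start
        self.rng = random.Random(seed)
        self.state = start

    def reset(self):
        self.state = self.start
        return self.state

    def step(self, action):
        # Dosing tends to shrink the tumor; no dose lets it grow.
        move = -1 if action == 1 else 1
        if self.rng.random() < 0.2:  # random disturbance: the level does not change
            move = 0
        next_state = min(max(self.state + move, 0), 4)
        # Assumed reward: penalize remaining tumor and, mildly, drug use.
        reward = -next_state - 0.5 * action
        self.state = next_state
        return next_state, reward


def run_episode(env, policy, steps=10):
    """Agent-environment loop: observe S_t, act A_t, receive R_{t+1} and S_{t+1}."""
    s = env.reset()
    total = 0.0
    for _ in range(steps):
        a = policy(s)        # A_t chosen by the policy in state S_t
        s, r = env.step(a)   # environment returns S_{t+1} and R_{t+1}
        total += r
    return total
```

Comparing a policy that always doses with one that never doses shows how the accumulated reward ranks behaviors; a learning agent would adjust its policy from exactly these reward signals.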

The reinforcement learning framework is formalized as a Markov Decision Process (MDP); most reinforcement learning problems can be expressed in this form. An MDP has a set of states, and each action moves the environment from one state to another. In an MDP, the optimal policy can be calculated analytically from the states and actions. The defining property of an MDP is that the next state and the reward depend only on the current state and the action taken, not on the earlier history. By applying constraints, actions are always chosen so that they lead to permitted and reachable states. The Markov Decision Process is shown in Figure 5.

An MDP is defined as

which describes the RL mathematical model. Note that the transition probability matrix is not required in a model-free reinforcement learning setting, and

is learned to approximate

. The MDP parameters and definitions are presented in

Table 5.

The action-value estimates are updated as follows each time a transition is observed:

(7)
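Assuming Eq. (7) is the standard tabular Q-learning rule, $Q(s,a) \leftarrow Q(s,a) + \alpha\,[\,r + \gamma \max_{a'} Q(s',a') - Q(s,a)\,]$, a single update for an observed transition $(s, a, r, s')$ can be sketched as below. The learning rate `alpha` and discount `gamma` are assumed names and values, not the settings of Table 5.

```python
from collections import defaultdict


def q_update(Q, s, a, r, s_next, actions, alpha=0.1, gamma=0.9):
    """One tabular Q-learning step for an observed transition (s, a, r, s_next)."""
    best_next = max(Q[(s_next, b)] for b in actions)   # max over a' of Q(s', a')
    td_target = r + gamma * best_next
    Q[(s, a)] += alpha * (td_target - Q[(s, a)])        # move estimate toward the target
    return Q[(s, a)]


Q = defaultdict(float)  # unseen (state, action) pairs start at 0
```

Because no transition probabilities appear in the update, this is consistent with the model-free setting described above.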

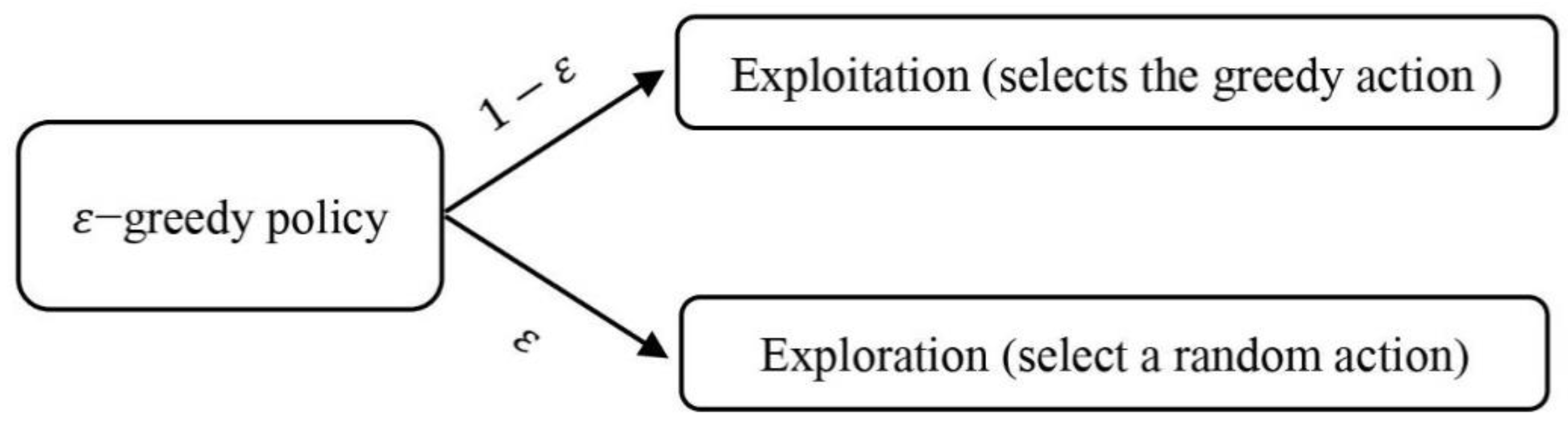

To reach an optimal policy, actions are selected with the ε-greedy method: with probability ε a random action is taken, and otherwise the action with the highest estimated value is chosen. Here ε is a small positive number that decreases over time.

In simple terms, the smaller the value of ε, the fewer random actions are taken on the way to the optimal policy, so this policy balances exploration and exploitation. The ε-greedy policy is shown in Figure 6.
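The ε-greedy rule with a decaying ε can be sketched as follows. The exponential decay schedule and its constants are assumptions for illustration, not the values of Table 6.

```python
import random


def epsilon_greedy(Q, s, actions, epsilon, rng=random):
    """With probability epsilon explore (random action); otherwise exploit argmax_a Q(s, a)."""
    if rng.random() < epsilon:
        return rng.choice(actions)
    return max(actions, key=lambda a: Q.get((s, a), 0.0))


def decayed_epsilon(episode, eps_start=1.0, eps_min=0.01, decay=0.95):
    """Assumed exponential schedule: epsilon shrinks over time toward eps_min."""
    return max(eps_min, eps_start * decay ** episode)
```

Early episodes therefore explore almost at random, while later ones mostly exploit the learned action values, which is the exploration/exploitation balance described above.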

The state value at time instant $k$ is defined through the following mapping:

(8)

The reward at each time step, which measures how desirable the action taken was, is derived as follows:

(9)
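Since the exact forms of Eqs. (8) and (9) are not legible here, the following is only a hypothetical illustration of the two ingredients: a mapping from a continuous normalized tumor volume to a discrete state index, and a reward that favors tumor reduction while penalizing the dose. The bin edges, the relative-reduction form, and the weight `w_dose` are all assumptions.

```python
import bisect

# Hypothetical bin edges for the normalized tumor volume (assumed, not from the paper).
EDGES = [0.2, 0.4, 0.6, 0.8]


def state_index(x):
    """Map a continuous normalized tumor volume x >= 0 to a discrete state 0..len(EDGES)."""
    return bisect.bisect_right(EDGES, x)


def reward(x_prev, x_next, dose, w_dose=0.1):
    """Hypothetical reward: relative tumor reduction minus a penalty on the dose used."""
    reduction = (x_prev - x_next) / max(x_prev, 1e-9)
    return reduction - w_dose * dose
```

A tumor that shrinks earns a positive reward, a tumor that grows earns a negative one, and every unit of drug costs a little, which mirrors the trade-off between efficacy and side effects discussed in Section 2.2.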

Once the action-value function has been trained, the agent (that is, the controller) chooses the optimal action for the current state at time instant $k$ as:

(10)
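Assuming Eq. (10) is the usual greedy choice with respect to the learned action values, it takes the form below. The symbols are our own labels, since the original notation is not legible here: $s_k$ is the state at time instant $k$, $\mathcal{A}$ the set of admissible normalized doses, and $\hat{Q}$ the trained action-value estimate.

```latex
a_k^{*} = \arg\max_{a \in \mathcal{A}} \hat{Q}(s_k, a)
```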

The ε-greedy policy parameters and their definitions are presented in Table 6.

3.2. Method of Operation

Based on the previous two sections, the method operates as follows:

Reinforcement learning corrects the dosage based on MPC performance: the MPC runs as the base controller, and RL receives its output and corrects it, so RL acts as a second, corrective layer on top of the MPC. In other words, the MPC determines the drug dose, and RL, as a corrector, adjusts the controller's output to determine the final optimal dose. The system components and their interactions are shown in Figure 7.
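One possible reading of this scheme is sketched below: a simple MPC proposes a dose, and a tabular Q-learning agent learns a correction δ ∈ {−0.2, 0, +0.2} that is added to it before the dose is clipped to [0, 1]. Everything here is hypothetical: the toy logistic model, the one-step MPC stand-in, the correction set, the state bins, the reward, and the learning rates are assumptions for illustration, not the paper's Eqs. (1) to (10) or Tables 1 to 6.

```python
import random
from collections import defaultdict

# Hypothetical toy model and settings (assumed; not the paper's model or tables).
R, E = 0.2, 0.5                    # growth rate, drug-kill rate
CORRECTIONS = (-0.2, 0.0, 0.2)     # RL actions: adjustments to the MPC dose
ALPHA, GAMMA = 0.1, 0.9


def step(x, u):
    return max(x + R * x * (1.0 - x) - E * u * x, 0.0)


def mpc_dose(x, levels=(0.0, 0.25, 0.5, 0.75, 1.0), lam=0.1):
    """One-step MPC stand-in: pick the dose minimizing x_next^2 + lam * u^2."""
    return min(levels, key=lambda u: step(x, u) ** 2 + lam * u ** 2)


def bucket(x):
    return min(int(x * 5), 4)      # discretize x in [0, 1] into 5 states


def corrected_dose(x, delta):
    return min(max(mpc_dose(x) + delta, 0.0), 1.0)   # keep 0 <= u <= 1


def train(episodes=200, days=30, eps=0.1, seed=0):
    """Learn Q-values for the corrections with epsilon-greedy Q-learning."""
    rng = random.Random(seed)
    Q = defaultdict(float)
    for _ in range(episodes):
        x = rng.uniform(0.3, 0.9)  # random initial condition
        for _ in range(days):
            s = bucket(x)
            if rng.random() < eps:
                d = rng.choice(CORRECTIONS)
            else:
                d = max(CORRECTIONS, key=lambda c: Q[(s, c)])
            u = corrected_dose(x, d)
            x_next = step(x, u)
            r = (x - x_next) - 0.1 * u                 # hypothetical reward
            best = max(Q[(bucket(x_next), c)] for c in CORRECTIONS)
            Q[(s, d)] += ALPHA * (r + GAMMA * best - Q[(s, d)])
            x = x_next
    return Q


def run(Q, x0=0.6, days=30):
    """Closed loop: MPC proposes, the learned RL correction adjusts, the dose is applied."""
    x, doses = x0, []
    for _ in range(days):
        d = max(CORRECTIONS, key=lambda c: Q[(bucket(x), c)])
        u = corrected_dose(x, d)
        doses.append(u)
        x = step(x, u)
    return x, doses
```

Keeping the MPC as the base controller means the dose constraints are always respected, while the RL layer only shifts the proposal within them; this matches the description of RL as a corrector rather than a replacement for the MPC.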

4. Results

The values related to parameters and initial conditions used in simulations and modeling are presented in

Table 1 and

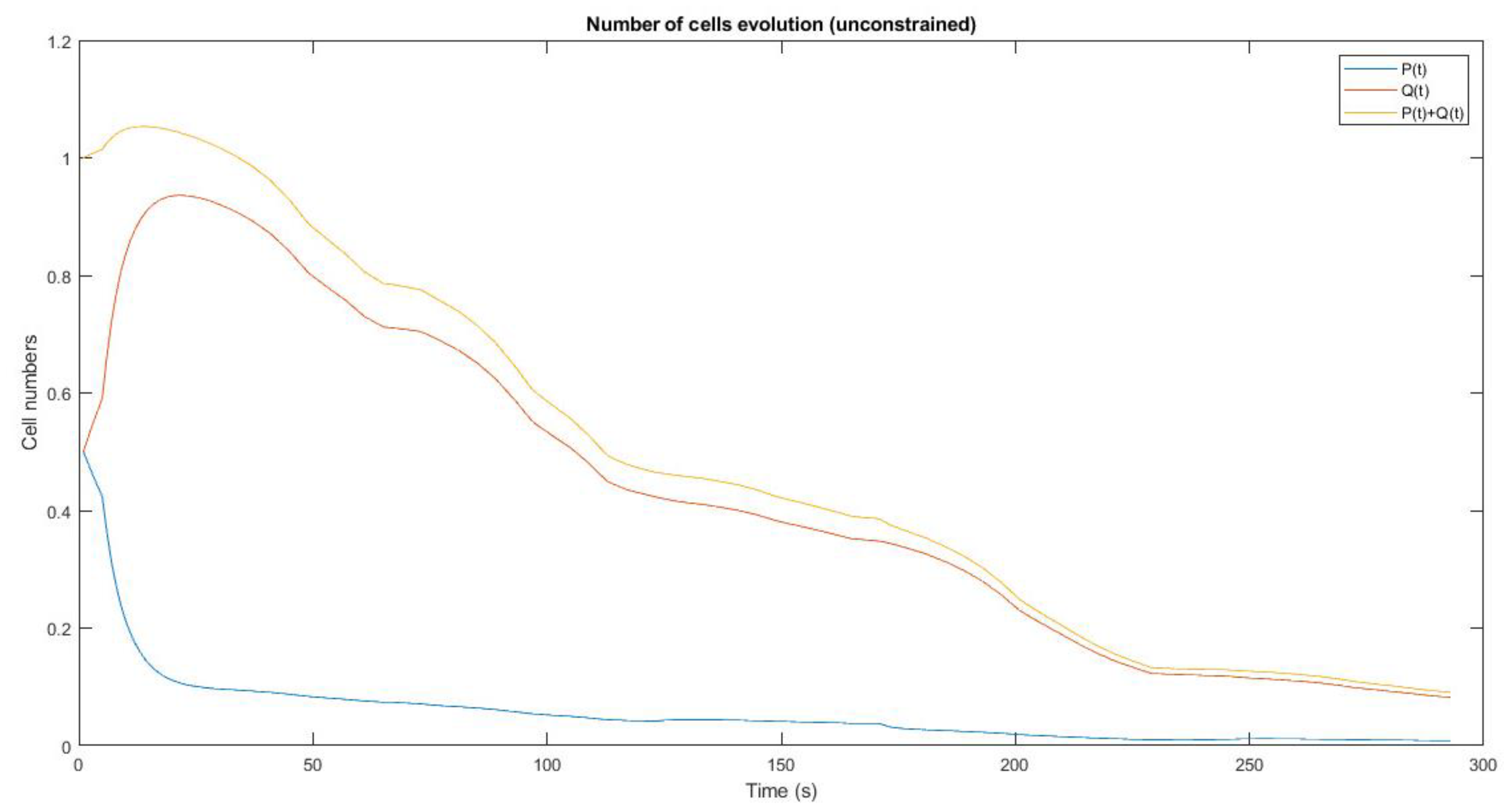

Table 3 and in order to reach the best drug dosage in unstable conditions. The experiments and simulations described in this section relied on MATLAB and were developed and designed by RL-based MPC to Control the tumor system by immune and anti-angiogenesis. Based on the weighting matrixes, different time steps and different sample time, strategies were devised for each therapy. In figures Q represents chemotherapy, P represents anti-angiogenesis immunotherapy and P+Q represents mixed-therapy.

Figure 8 shows the case in which 50% of the cells are subjected to immunotherapy and the remaining 50% to chemotherapy, with no restrictions applied to reinforcement learning in the controller. The results indicate that with chemotherapy, the number of tumor cells decreases much faster than with immunotherapy; in the combined treatment, chemotherapy has the greater effect, and the tumor cell reduction curve closely resembles the chemotherapy curve. The number of tumor cells decreases under both single therapies and under the combined therapy.

Figure 9 shows the simulation results using RL without considering initial conditions, over a period of 30 days, with time steps N=2, N=5, and N=10, together with the optimal control sequence corresponding to each time step. The percentage of remaining cells after 30 days and the optimal control sequences show that the greatest optimization occurs at N=2: the optimization always increases, the controller behaves more effectively at each moment than at the previous one, and the total optimization rate is almost 80%. N=10 is next best: although the controller's optimality alternately rises and falls across stages, the total optimality reaches 70%. At N=5, the optimization initially decreases and then increases steadily after step 2, but the overall optimization rate is only 35%.

Figure 10 shows the simulation results using RL with random initial conditions, over a period of 30 days, with time steps N=2, N=5, and N=10, together with the optimal control sequence corresponding to each time step. The percentage of remaining cells after 30 days and the optimal control sequences show that the greatest optimization occurs at N=5: the optimization always increases and, although it is slower at the beginning, its slope becomes steeper toward the end, reaching an optimality of 90%. N=2 is next best: the optimization always increases, the controller behaves more effectively at each moment than at the previous one, and the optimality is almost 80%. At N=10, despite the steep slope at the end, the controller's optimality alternately rises and falls across stages, and the optimality rate is 70%.

5. Discussion and Conclusions

The results of [18] showed that chemotherapy controlled by reinforcement learning alone is effective in cancer treatment. In addition, [10] observed that applying both chemotherapy and immunotherapy with a multiple model predictive controller (MMPC) is more effective than using a single treatment method. In this article, the simulation results in the previous section show that, using the system model and an RL-developed model predictive controller, chemotherapy alone can reduce the number of cells and tumor growth in the long term and move the system from a malignant to a benign state. Although the number of cells eventually decreases, chemotherapy does not reduce it at the beginning, and its effectiveness appears only in the long term. Conversely, immunotherapy produces a visible reduction in the number of cells at the beginning, but its overall decline is very slow. This article differs from previous and similar works in three respects: 1. The results of both treatment methods are examined individually and in combination. 2. A model predictive controller developed by RL is used to apply a specific, required drug dose. 3. The optimality of the developed controller is investigated at each time step.

Based on Hahnfeldt's mathematical model [14], we applied a model predictive controller developed by reinforcement learning to assess the effectiveness of combining two treatment methods, namely chemotherapy and anti-angiogenic immunotherapy. Since the system is constantly changing, the controller predicts the drug dose, and reinforcement learning then evaluates that dose. If the predicted dose is optimal (highest reward), it is delivered to the patient; otherwise, the controller predicts a new dose, which is again checked by reinforcement learning. This process repeats over the time steps until the best dose is determined, which is then applied to the system. According to the simulation results, across all the tested conditions and time steps, the combination of the two methods reduces the number of cells by almost 65%, which shows the effectiveness of combining the two treatments with the help of an RL-developed model predictive controller. Moreover, without initial conditions the developed controller performs best with N=2, and with random initial conditions it performs best with N=5, because with these settings the controller behaves more effectively at almost every stage than at the previous one in reaching the most effective dose, and its optimality percentage is higher than in the other cases.