Submitted: 24 January 2025

Posted: 26 January 2025

Abstract

Keywords:

1. Introduction

2. Experiment 1

2.1. Method

2.1.1. Participants

2.1.2. Apparatus and Stimuli

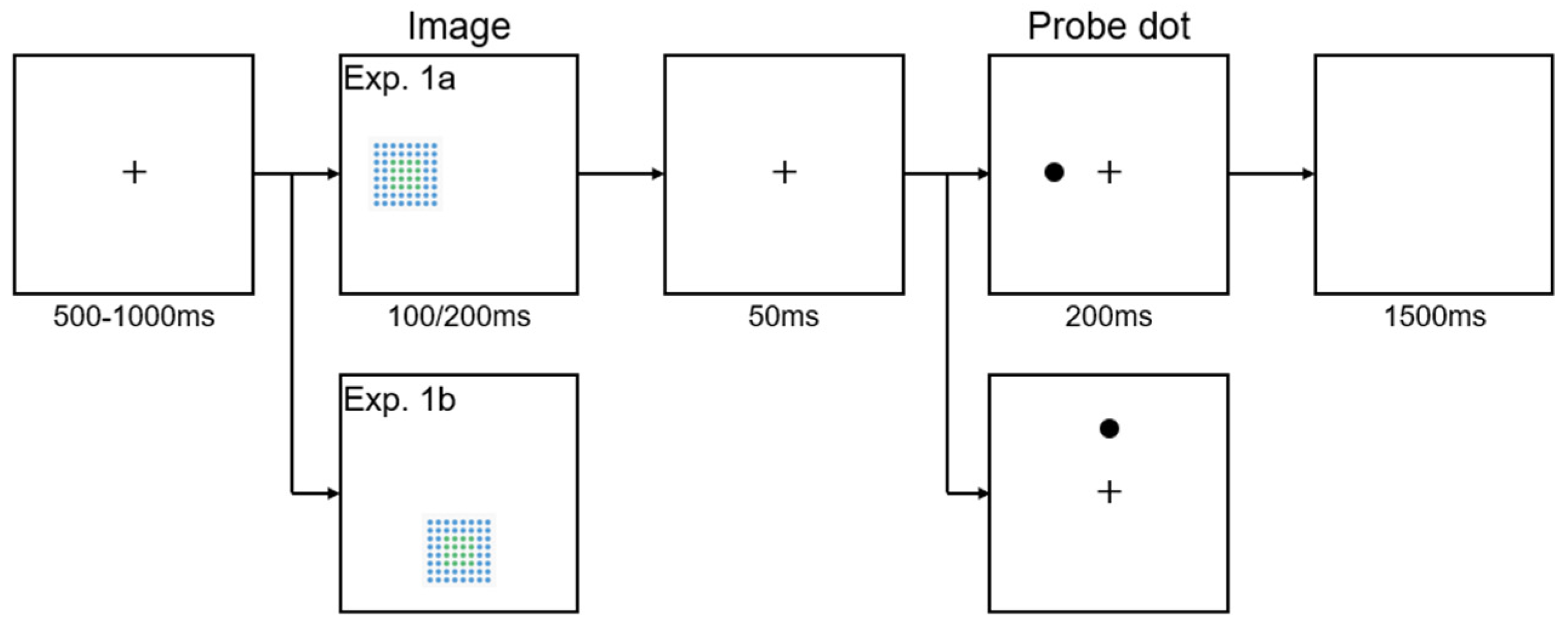

2.1.3. Design and Procedure

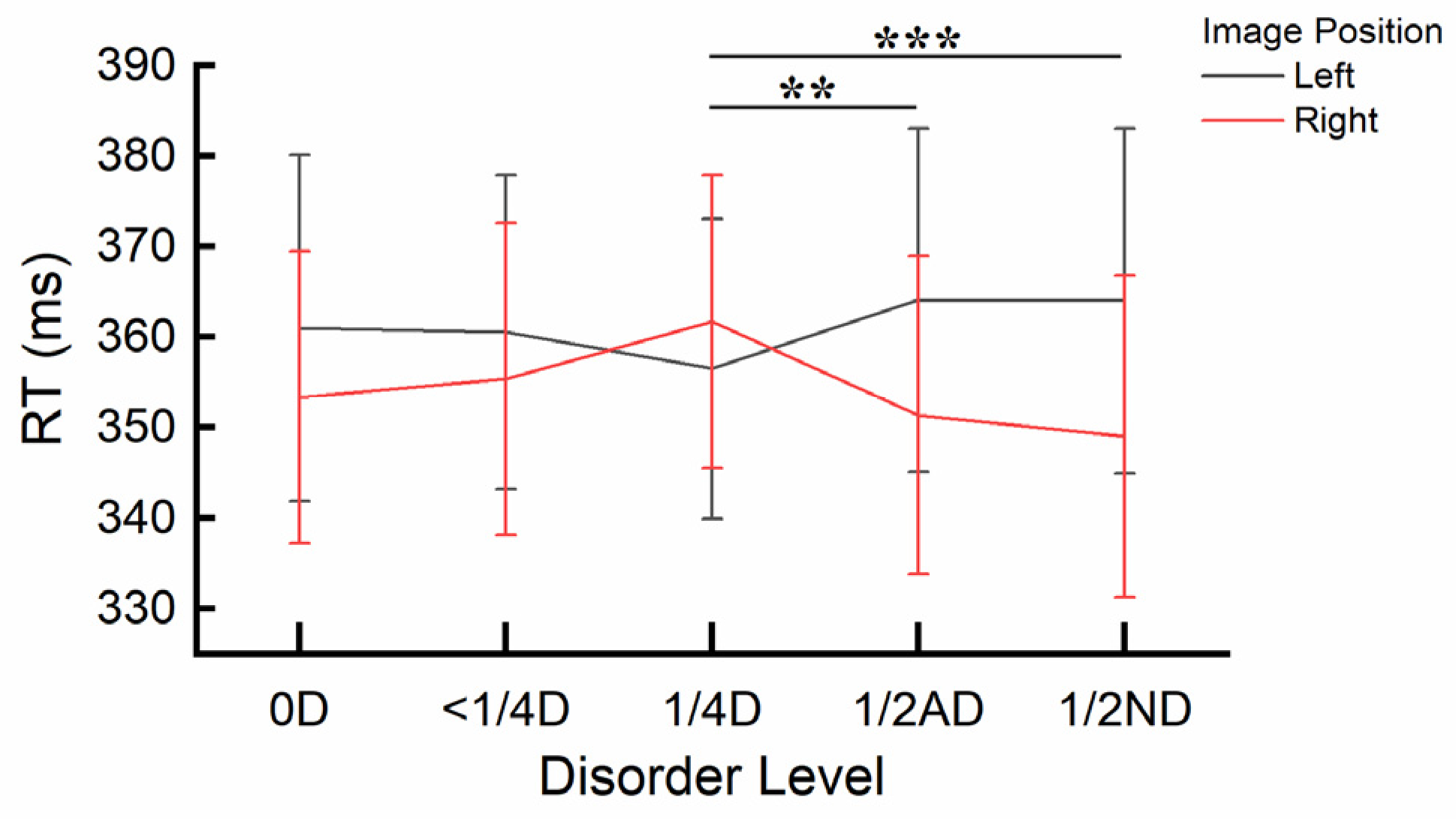

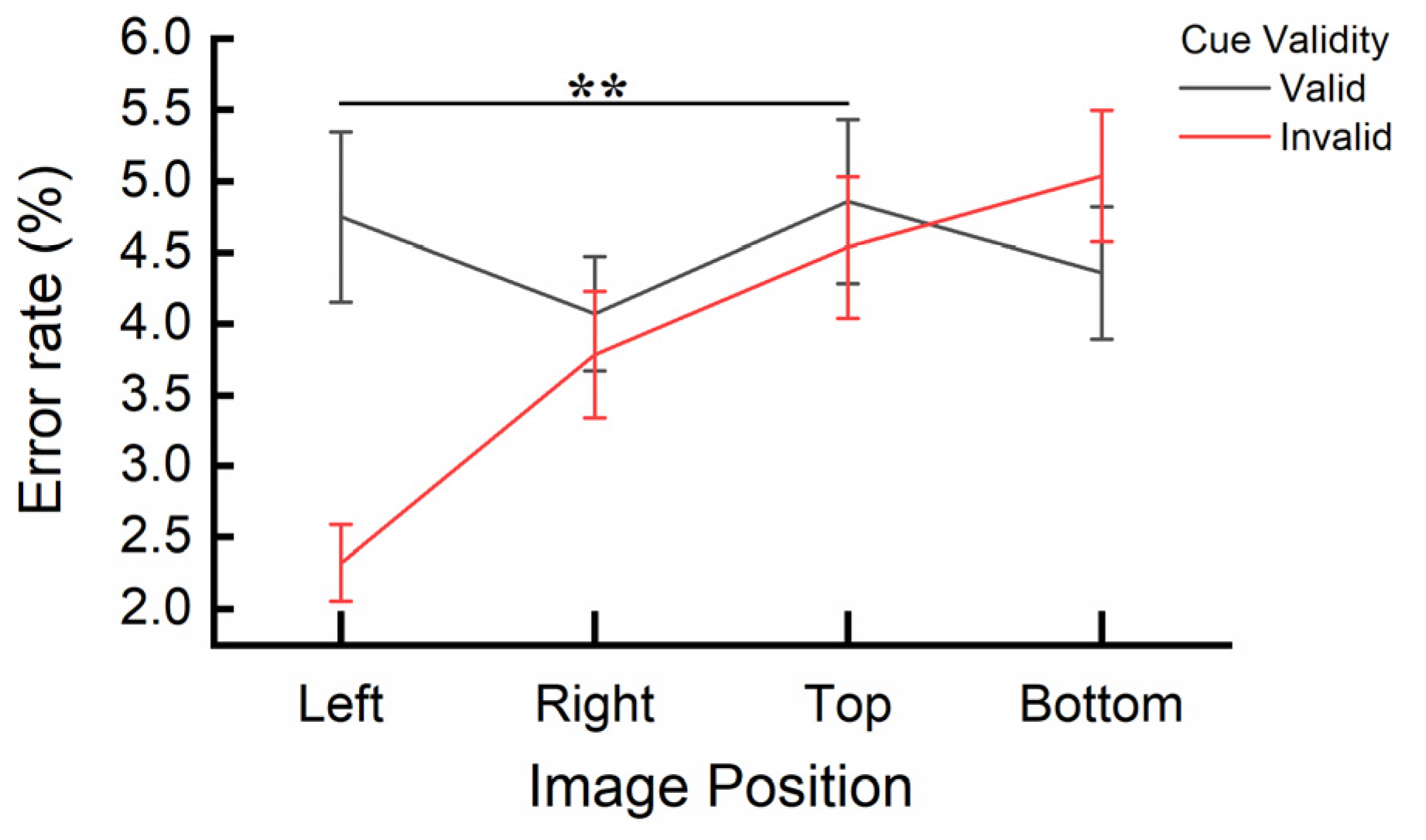

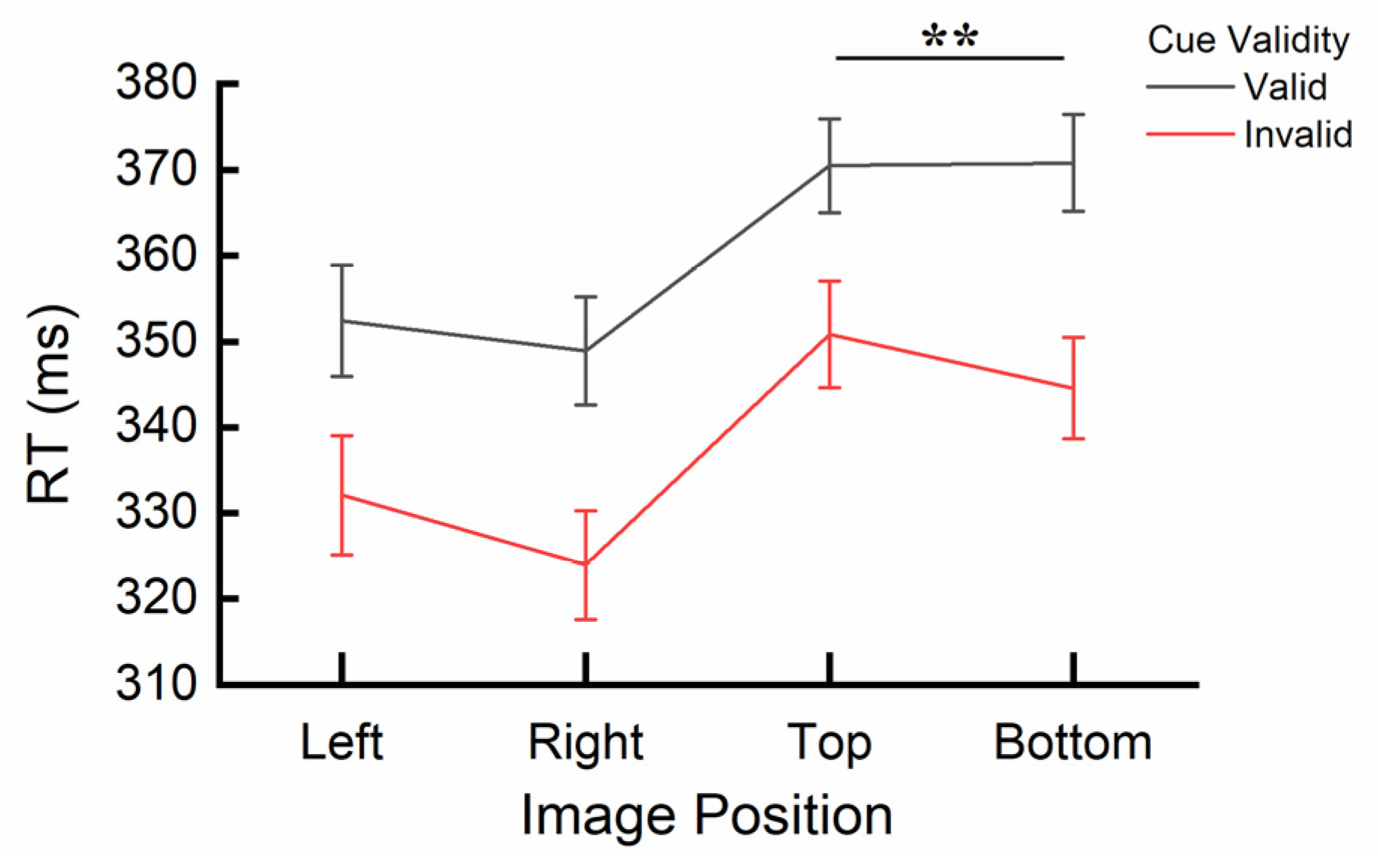

2.2. Results

2.3. Discussion

3. Experiment 2

3.1. Method

3.1.1. Participants

3.1.2. Apparatus and Stimuli

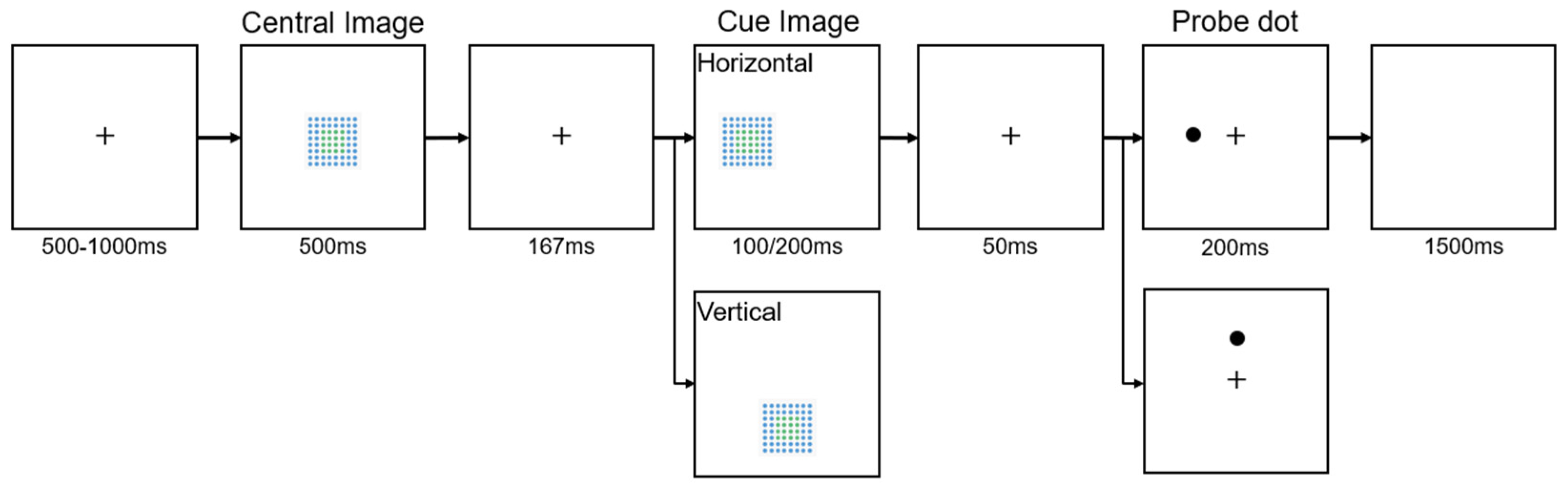

3.1.3. Design and Procedure

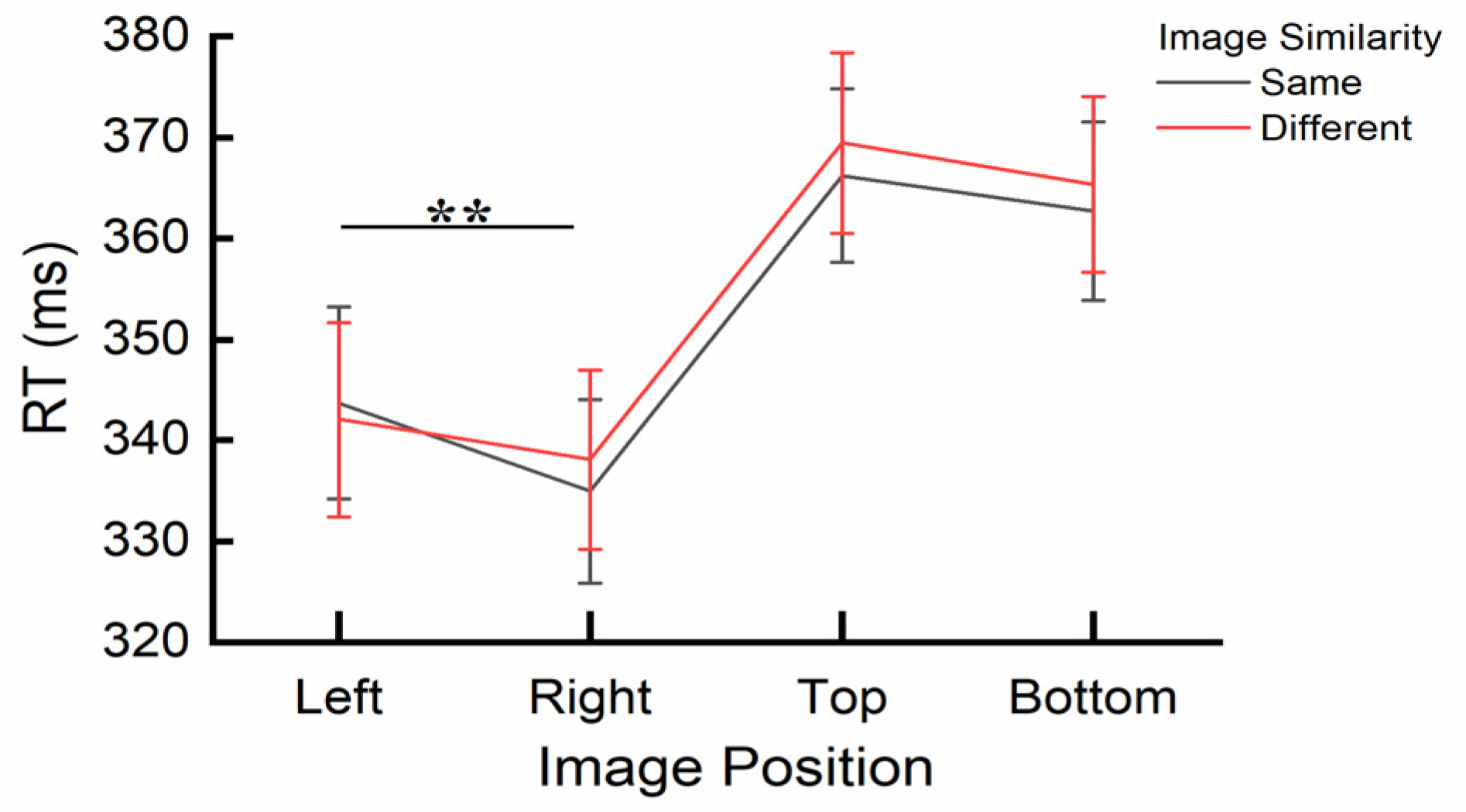

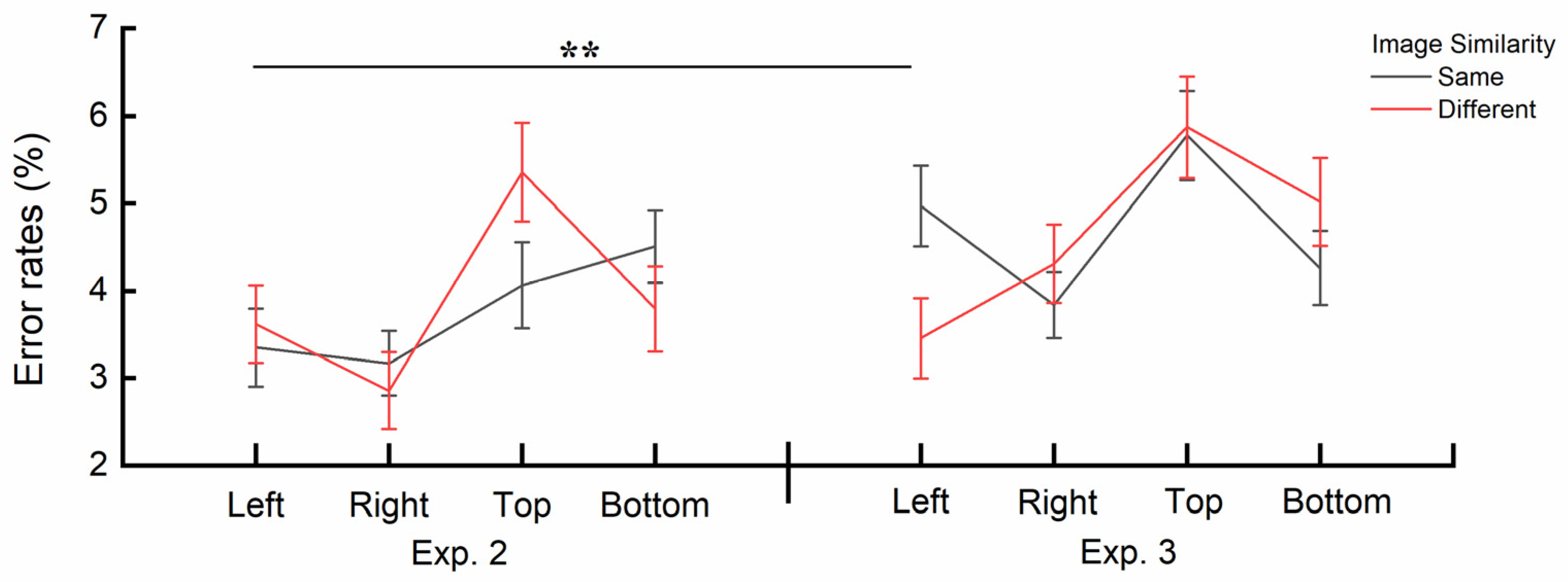

3.2. Results

3.3. Discussion

4. Experiment 3

4.1. Method

4.1.1. Participants

4.1.2. Apparatus, Design, Stimuli and Procedure

4.2. Results

4.3. Discussion

4.4. Combined Results of Experiment 2 and Experiment 3

4.5. Discussion

5. General Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).