Submitted:

18 January 2025

Posted:

20 January 2025


Abstract

Keywords:

1. Introduction

2. Literature Review

3. Proposed Methodology

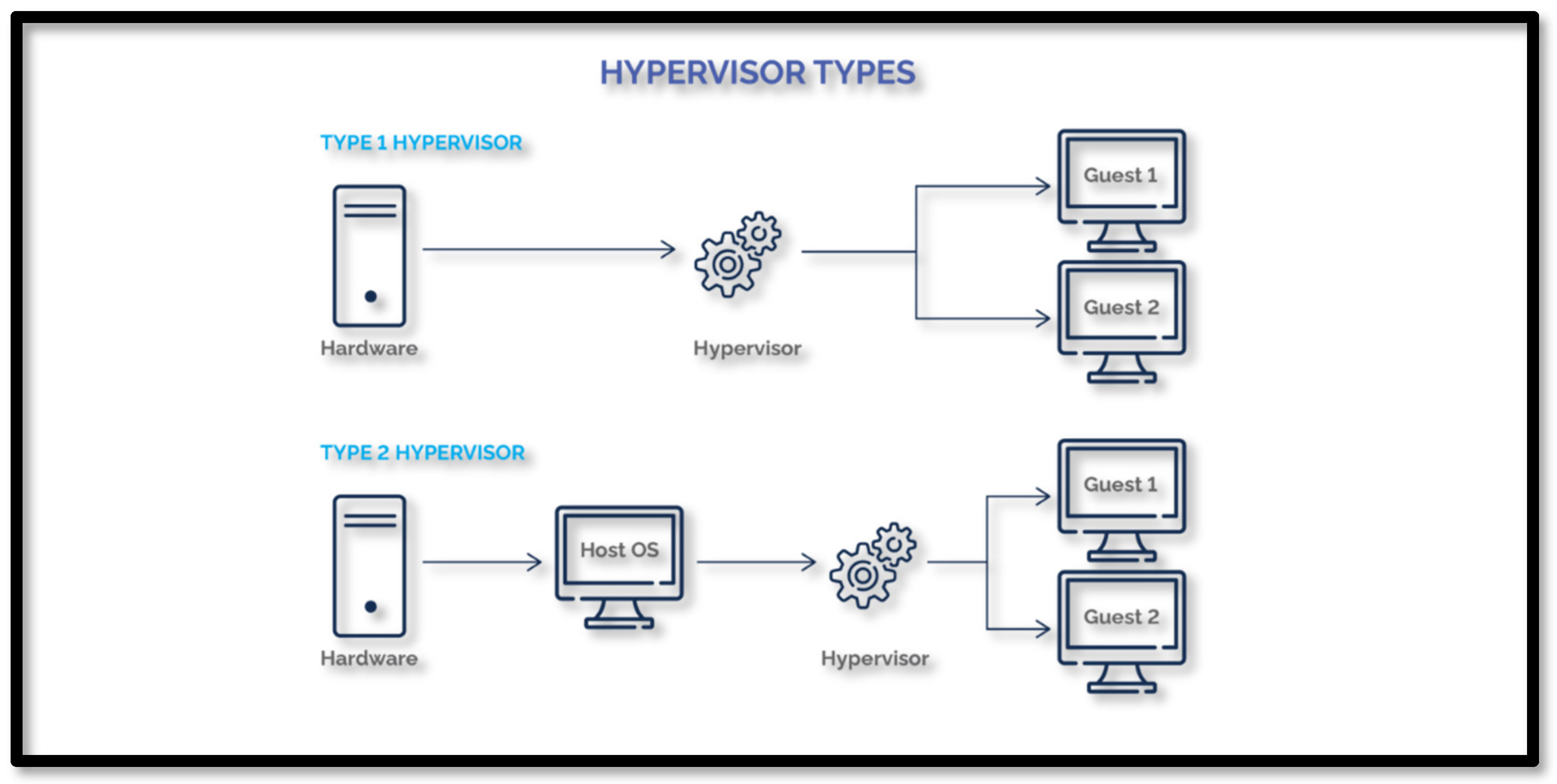

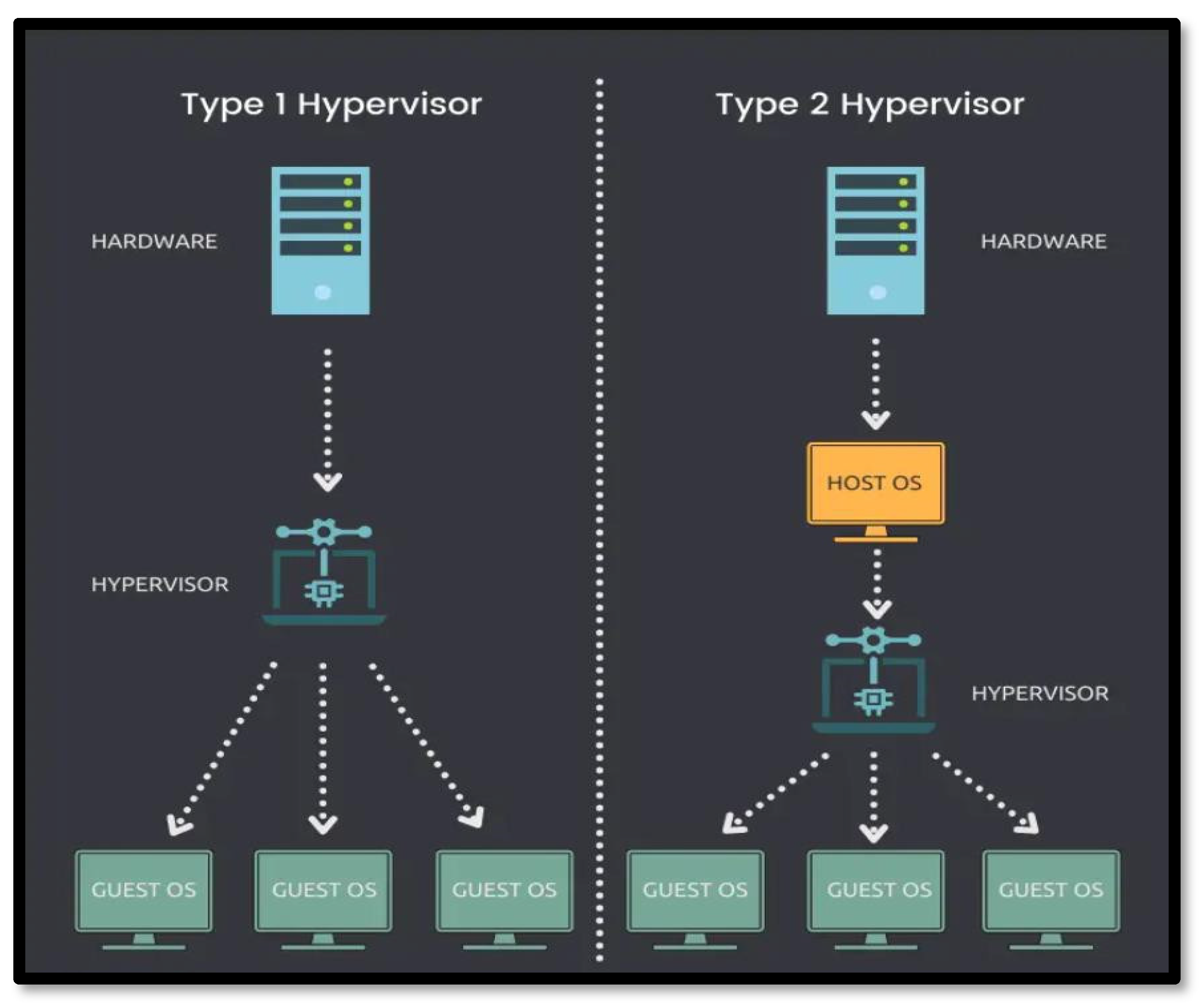

4. Choosing the Right Hypervisor Key

4.1. Factors to Consider

4.2. Hypervisor Reference Model

4.3. Detailed Issues and Limitations

5. Results and Discussion

6. Conclusion

References

- Uzochukwu-Theophilus, O. R. I. EFFICIENT DATA MANAGEMENT IN CLOUD STORAGE SYSTEMS CASE STUDY: ULK. Doctoral dissertation, ULK, 2024. [Google Scholar]

- Koloko, C. Assessing the Use of Public Cloud Computing in Developing Countries: A Case Study of the Malawian Telecommunications Industry. 2023. [Google Scholar]

- Plale, B. A.; Dickson, E.; Kouper, I.; Liyanage, S. H.; Ma, Y.; McDonald, R. H.; …; Withana, S. Safe open science for restricted data. Data and Information Management 2019, 3(1), 50–60. [Google Scholar] [CrossRef]

- Sangfor Technologies. What is a hypervisor? A key element of server virtualization. Sangfor Technologies. n.d. Retrieved July 10, 2024 from https://www.sangfor.com/glossary/cloud-and-infrastructure/what-is-hypervisor-in-server-virtualization.

- Naveen Kumar, K. R.; Priya, V.; Rachana, G. S. An overview of cloud computing for data-driven intelligent systems. Data-Driven Systems and Intelligent Applications 2024, 72. [Google Scholar]

- Spiceworks. What is a hypervisor? Definition, types, and software. Spiceworks. n.d. Retrieved from https://www.spiceworks.com/tech/virtualization/articles/what-is-a-hypervisor/.

- Lee, B. Hyper-V backup and disaster recovery features. BDRSuite. 2023. Retrieved July 9, 2024, from https://www.bdrsuite.com/blog/hyper-v-backup-and-disaster-recovery-features/.

- Dogra, V.; Singh, A.; Verma, S.; Kavita; Jhanjhi, N. Z.; Talib, M. N. Analyzing DistilBERT for sentiment classification of banking financial news. In Intelligent computing and innovation on data science; Peng, S. L., Hsieh, S. Y., Gopalakrishnan, S., Duraisamy, B., Eds.; Springer, 2021; Vol. 248, pp. 665–675. [Google Scholar] [CrossRef]

- Gopi, R.; Sathiyamoorthi, V.; Selvakumar, S.; et al. Enhanced method of ANN based model for detection of DDoS attacks on multimedia Internet of Things. Multimedia Tools and Applications 2022, 81(36), 26739–26757. [Google Scholar] [CrossRef]

- Chesti, I. A.; Humayun, M.; Sama, N. U.; Jhanjhi, N. Z. Evolution, mitigation, and prevention of ransomware. In 2020 2nd International Conference on Computer and Information Sciences (ICCIS); IEEE, October 2020; pp. 1–6. [Google Scholar]

- Alkinani, M. H.; Almazroi, A. A.; Jhanjhi, N. Z.; Khan, N. A. 5G and IoT based reporting and accident detection (RAD) system to deliver first aid box using unmanned aerial vehicle. Sensors 2021, 21(20), 6905. [Google Scholar] [CrossRef] [PubMed]

- Babbar, H.; Rani, S.; Masud, M.; Verma, S.; Anand, D.; Jhanjhi, N. Load balancing algorithm for migrating switches in software-defined vehicular networks. Computational Materials and Continua 2021, 67(1), 1301–1316. [Google Scholar] [CrossRef]

- .

- Daschott. Network isolation and security. Microsoft Learn. 2023. Retrieved July 9, 2024, from https://learn.microsoft.com/en-us/virtualization/windowscontainers/container-networking/network-isolation-security.

- SureLock Technology. Choosing the right virtualization platform: Hyper-V vs. VMware. SureLock Technology. n.d. Retrieved July 9, 2024, from https://surelocktechnology.com/blog/choosing-the-right-virtualization-platform-hyper-v-vs-vmware.

- Saeed, S.; Abdullah, A.; Jhanjhi, N. Z.; Naqvi, M.; Nayyar, A. New techniques for efficiently k-NN algorithm for brain tumor detection. Multimedia Tools and Applications 2022, 81(13), 18595–18616. [Google Scholar] [CrossRef]

- Saeed, S. Improved hybrid K-nearest neighbors’ techniques in segmentation of low-grade tumor and cerebrospinal fluid. 2024. [Google Scholar]

- Elbelgehy, A. G. A. Performance evaluation of virtual cloud labs using hypervisor and container. Indian Journal of Science and Technology 2020, 13(48), 4646–4653. [Google Scholar] [CrossRef]

- XenServer. Hypervisor: Definition, types, and benefits. XenServer. n.d. Retrieved from https://www.xenserver.com/what-is-a-hypervisor.

- Singh, T.; Mano, P. J.; Selvam, K. Emerging Trends in Data Engineering: amazon web services. 2020. [Google Scholar]

- Hammad, Madhuri. Difference between cloud computing and traditional computing. GeeksforGeeks. 2021. Retrieved from https://www.geeksforgeeks.org/difference-between-cloud-computing-and-traditional-computing/.

- Mary Ramya Poovizhi, J.; Devi, R. Performance Analysis of Cloud Hypervisor Using Network Package Workloads in Virtualization. Conversational Artificial Intelligence 2024, 185–198. [Google Scholar]

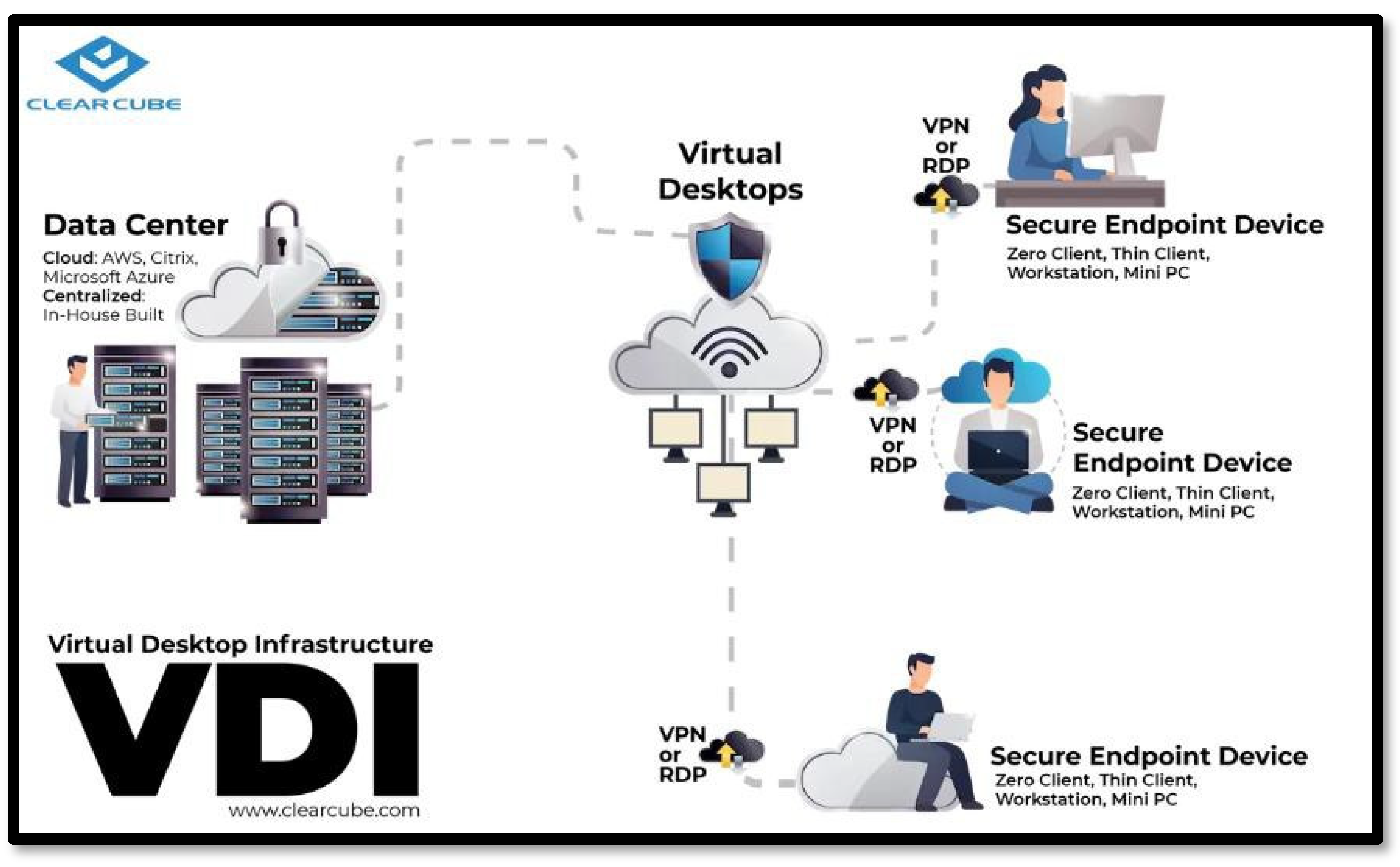

- VMware. What is desktop virtualization? VMware Glossary. 2022. Retrieved from https://www.vmware.com/topics/glossary/content/desktop-virtualization.html.

- Carroll, C. What is desktop virtualization? TechZone Omnissa. 2023. Retrieved July 9, 2024, from https://techzone.omnissa.com/blog/what-desktop-virtualization.

- Liquid Web. A deep dive into the benefits of virtualization. Liquid Web. 2024. Retrieved from https://www.liquidweb.com/blog/benefits-of-virtualization/.

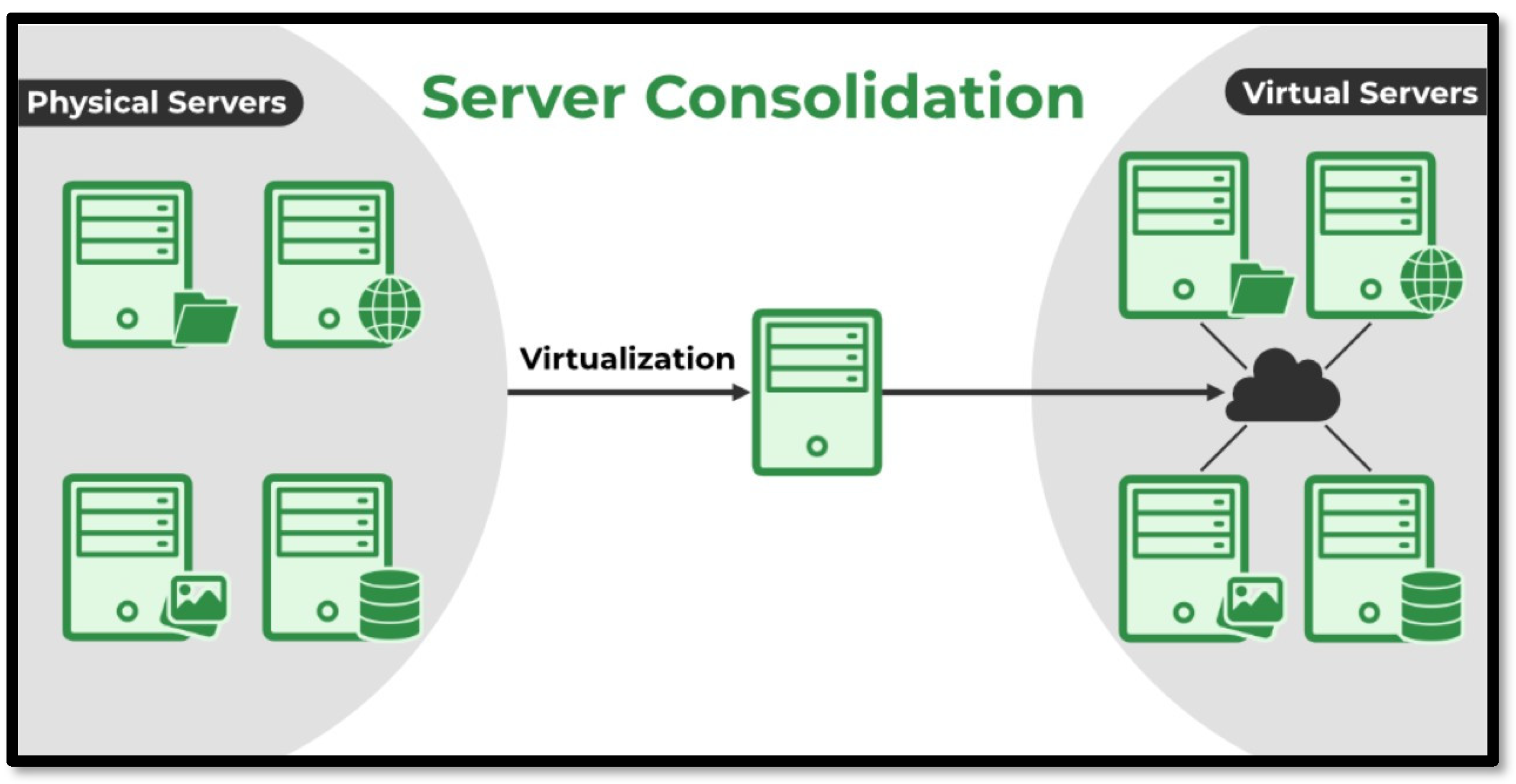

- VMware. Server virtualization Server consolidation. VMware. 2020. Retrieved from https://www.vmware.com/solutions/consolidation.html.

- Saeed, S.; Abdullah, A. Hybrid graph cut hidden Markov model of K-mean cluster technique. CMC-Computers, Materials & Continua 2022, 1–15. [Google Scholar]

- Saeed, S.; Haron, H. Improved correlation matrix of discrete Fourier transformation (CM-DFT) technique for finding the missing values of MRI images. Mathematical Biosciences and Engineering 2021, 1–22. [Google Scholar]

- Saeed, S. Implementation of failure enterprise systems in an organizational perspective framework. International Journal of Advanced Computer Science and Applications 2017, 8(5), 54–63. [Google Scholar] [CrossRef]

- Web, Liquid. Server consolidation in cloud computing: An in-depth analysis. Liquid Web. 2024. Retrieved from https://www.liquidweb.com/blog/server-consolidation-in-cloud-computing/.

- Kostic, N. What is server consolidation? phoenixNAP Blog. 2023. Retrieved from https://phoenixnap.com/blog/server-consolidation.

- Singh, T.; Mano, P. J.; Selvam, K. Emerging Trends in Data Engineering: amazon web services. 2020. [Google Scholar]

- Freet, D.; Agrawal, R.; Walker, J. J.; Badr, Y. Open source cloud management platforms and hypervisor technologies: A review and comparison. SoutheastCon 2016 2016, 1–8. [Google Scholar]

- Simic, S. How to implement validation for RESTful services with Spring. phoenixNAP Knowledge Base. 2019. Retrieved from https://phoenixnap.com/kb/what-is-hypervisor-type-1-2.

- StarWind Blog. Type 1 vs. Type 2 hypervisor: What is the difference? StarWind Blog. 2023. Retrieved from https://www.starwindsoftware.com/blog/type-1-vs-type-2-hypervisor-what-is-the-difference.

- Rosenblum, M.; Garfinkel, T. Virtual machine monitors: Current technology and future trends. IEEE Computer 2005, 38(5), 39–47. [Google Scholar] [CrossRef]

- Goldberg, R. P. Survey of virtual machine research. IEEE Computer Magazine 1974, 7(6), 34–45. [Google Scholar] [CrossRef]

- Perez-Botero, D.; Szefer, J.; Lee, R. B. Characterizing hypervisor vulnerabilities in cloud computing servers. In Proceedings of the International Workshop on Security in Cloud Computing; 2013; pp. 3–10. [Google Scholar]

- Armstrong, D. J. Management challenges in creating and maintaining virtualization. Journal of Network and Computer Applications 2010, 33(3), 375–385. [Google Scholar]

- Jagatheesan, A.; Shanthi, N. An overview of virtualization technology. Network Protocols and Algorithms 2015, 7(4), 59–77. [Google Scholar]

- Turner, J.; Moore, T. Network performance overhead introduced by virtualization technologies. Journal of Computer Networks 2012, 56(1), 32–42. [Google Scholar]

- Vijayalakshmi, B.; Ramar, K.; Jhanjhi, N. Z.; Verma, S.; Kaliappan, M.; Vijayalakshmi, K.; …; Ghosh, U. An attention-based deep learning model for traffic flow prediction using spatiotemporal features towards sustainable smart city. International Journal of Communication Systems 2021, 34(3), e4609. [Google Scholar] [CrossRef]

- Khan, N. A.; Jhanjhi, N. Z.; Brohi, S. N.; Nayyar, A. Chapter Three-Emerging use of UAV’s: secure communication protocol issues and challenges; Editor (s): Fadi Al-Turjman, Drones in Smart-Cities; 2020. [Google Scholar]

- Humayun, M.; Jhanjhi, N. Z.; Alsayat, A.; Ponnusamy, V. Internet of things and ransomware: Evolution, mitigation and prevention. Egyptian Informatics Journal 2021, 22(1), 105–117. [Google Scholar] [CrossRef]

- Muzafar, S. Energy harvesting models and techniques for green IoT: A review. Role of IoT in Green Energy Systems 2021, 117–143. [Google Scholar]

- Soobia, S.; Afnizanfaizal, A.; Jhanjhi, N. Z. Hybrid graph cut hidden Markov model of k-mean cluster technique. CMC-Computers, Materials & Continua 2022, 72(1), 1–15. [Google Scholar]

- Humayun, M.; Sujatha, R.; Almuayqil, S. N.; Jhanjhi, N. Z. A transfer learning approach with a convolutional neural network for the classification of lung carcinoma. In Healthcare; MDPI, June 2022; Vol. 10, No. 6, p. 1058. [Google Scholar]

- Zaman, N.; Ghazanfar, M. A.; Anwar, M.; Lee, S. W.; Qazi, N.; Karimi, A.; Javed, A. Stock market prediction based on machine learning and social sentiment analysis. In Authorea Preprints; 2023. [Google Scholar]

- Sindiramutty, S. R.; Jhanjhi, N. Z.; Tan, C. E.; Lau, S. P.; Muniandy, L.; Gharib, A. H.; …; Murugesan, R. K. Industry 4.0: Future Trends and Research Directions. Convergence of Industry 4.0 and Supply Chain Sustainability 2024, 342–405. [Google Scholar]

- Srinivasan, K.; Garg, L.; Chen, B. Y.; Alaboudi, A. A.; Jhanjhi, N. Z.; Chang, C. T.; …; Deepa, N. Expert System for Stable Power Generation Prediction in Microbial Fuel Cell. Intelligent Automation & Soft Computing 2021, 30(1). [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).