Submitted: 06 January 2025

Posted: 07 January 2025


Abstract

Keywords:

Introduction


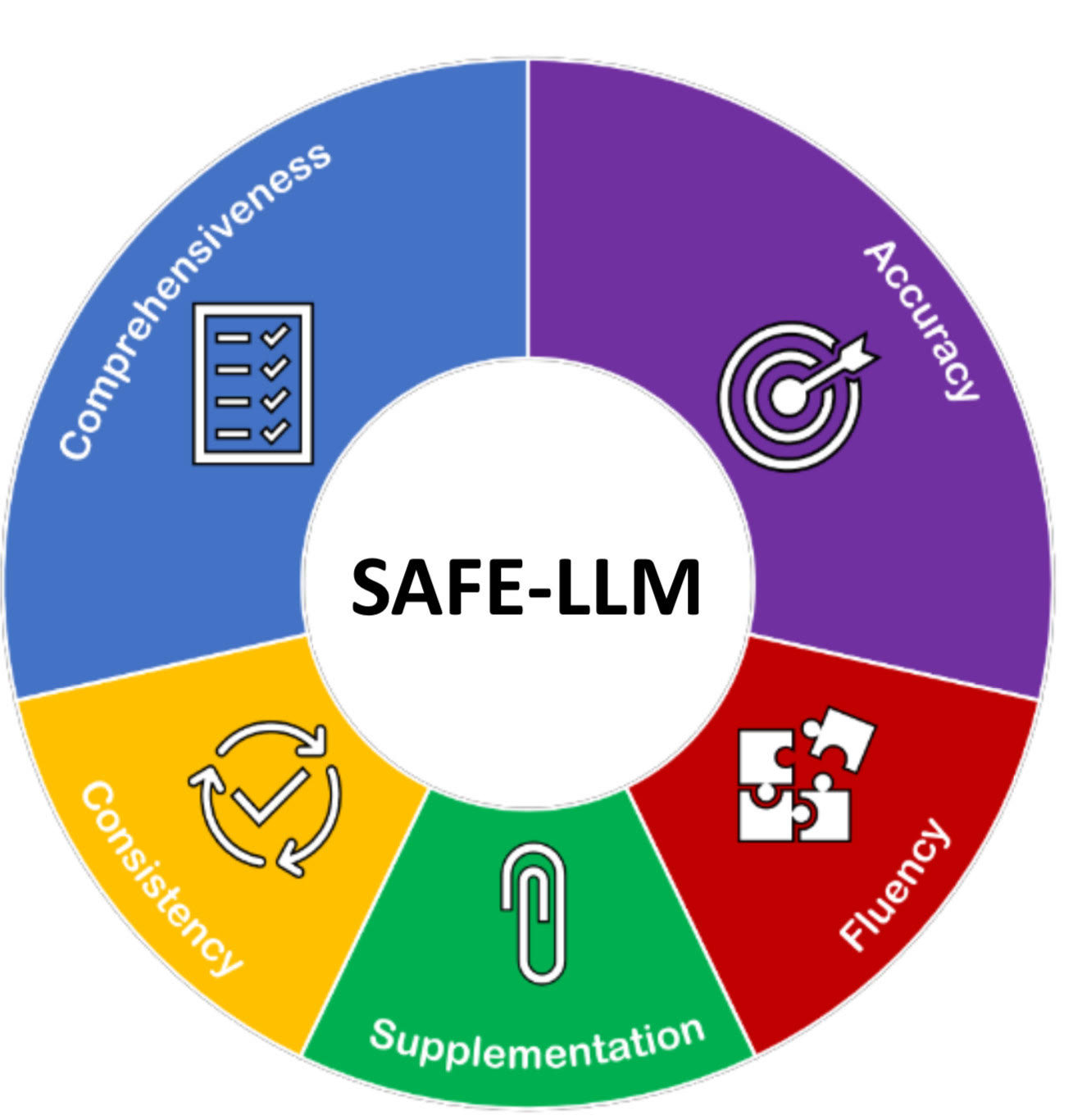

| Evaluation | Points | Description | Example |
|---|---|---|---|
| Accuracy | 0 | Inaccurate answer potentially harming the patient | Diagnosis of a different cluster of disease, treatment recommendations which would worsen the disease, wrong prognosis which would lead to high fiscal or mental costs for the patient |
| Accuracy | 1 | Partially accurate answer containing some errors, with minimal benefit for the patient | Diagnosis within the same cluster of disease, treatment recommendations which would neither harm the patient nor benefit them significantly, wrong prognosis which does not fiscally or mentally harm the patient |
| Accuracy | 2 | Accurate answer with no errors and maximum benefit for the patient | Exact diagnosis of disease, treatment recommendation in accordance with common medical practice, prognosis similar to the relevant literature |
| Comprehensiveness | 0 | No reasoning or detail provided, or irrelevant reasoning or detail | No signs, symptoms, or criteria are provided for diagnosis, no regimen is provided for treatment, no evidence is provided for prognosis |
| Comprehensiveness | 1 | Relevant reasoning or detail, yet lacking key components | Some signs, symptoms, or criteria are provided for diagnosis yet necessary ones are missing, a treatment regimen is provided yet one of dose, duration, or daily intake is missing, prognosis is based on some evidence yet fails to account for all evidence |
| Comprehensiveness | 2 | Relevant reasoning or detail encompassing most necessary criteria | Most signs, symptoms, or criteria are provided for diagnosis with all necessary ones mentioned, the treatment regimen is provided in full, prognosis is based on all relevant evidence provided in the prompt |
| Supplementation | 0 | No relevant additional reasoning or detail | No helpful signs, symptoms, or criteria are provided for diagnosis, no additional tips regarding the treatment regimen are provided, prognosis is not based on any additional evidence provided in the prompt |
| Supplementation | 1 | Relevant additional reasoning or detail | Additional helpful signs, symptoms, or criteria are provided for diagnosis, additional tips regarding the treatment regimen are provided, prognosis is based on additional evidence provided in the prompt (i.e. gender or family history) |
| Consistency | 0 | Heterogeneous accuracy scores for three consecutive repetitions | One answer receives an accuracy score of “partially accurate” while another receives “inaccurate” |
| Consistency | 1 | Similar accuracy scores for three consecutive repetitions | All three answers receive a score of “accurate” or “partially accurate” or “inaccurate” |
| Fluency | 0 | Hard to understand in one sitting | Grammatical mistakes, repetitions of whole sentences, incoherence |
| Fluency | 1 | Understandable in one sitting | Minimal grammatical mistakes, repetitions limited to concepts, cohesive structure |
| Sum Score | 7 (maximum) | The total SAFE-LLM score for each answer provided by an LLM | |
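The arithmetic behind the sum score can be sketched in a few lines of Python. This is only an illustrative sketch, not the authors' implementation: the names `MAX_POINTS`, `consistency_score`, and `safe_llm_sum` are hypothetical, and the sketch assumes that consistency is scored 1 only when all three repetitions receive the same accuracy score, as the rubric's example suggests.

```python
# Maximum points per criterion, taken from the rubric table (they sum to 7).
MAX_POINTS = {
    "accuracy": 2,
    "comprehensiveness": 2,
    "supplementation": 1,
    "consistency": 1,
    "fluency": 1,
}


def consistency_score(accuracy_scores):
    """Return 1 if three repeated answers share one accuracy score, else 0.

    Assumption: "similar" in the rubric is read as "identical".
    """
    if len(accuracy_scores) != 3:
        raise ValueError("expected accuracy scores for exactly three repetitions")
    return 1 if len(set(accuracy_scores)) == 1 else 0


def safe_llm_sum(scores):
    """Validate per-criterion scores and return their total (0 to 7)."""
    if set(scores) != set(MAX_POINTS):
        raise ValueError("scores must cover exactly the five rubric criteria")
    for name, value in scores.items():
        if not isinstance(value, int) or not 0 <= value <= MAX_POINTS[name]:
            raise ValueError(f"{name} must be an integer in 0..{MAX_POINTS[name]}")
    return sum(scores.values())


# Hypothetical example: three repetitions rated 2, 2, and 1 for accuracy.
accuracy_runs = [2, 2, 1]
example = {
    "accuracy": accuracy_runs[0],
    "comprehensiveness": 2,
    "supplementation": 1,
    "consistency": consistency_score(accuracy_runs),
    "fluency": 1,
}
total = safe_llm_sum(example)
```

In this hypothetical example the first answer is otherwise perfect but loses the consistency point because the third repetition was rated differently, so `total` falls one point short of the maximum.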

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).