1. Introduction

The study of evolution, complexity, and information has been a cornerstone of multiple scientific disciplines that bridge physics, biology, and computation. Foundational works such as Schrödinger’s What is Life? [1] posed fundamental questions about how order emerges from disorder, inspiring theoretical explorations of how physical laws govern the emergence of biological systems. Tegmark’s Mathematical Universe Hypothesis [2] posits that the universe itself is a mathematical structure, where physical phenomena are manifestations of abstract mathematical rules. These works frame the question of complexity, but leave the mechanisms of emergence unaddressed.

In the realm of evolutionary dynamics, Fisher [3] and Nowak [4] provide mathematical models that describe the mechanisms of replication, mutation, and selection. Although these models illuminate the principles of biological evolution, they assume the existence of self-replicating, mutating entities and do not delve into how such entities might arise from purely abiotic processes. Similarly, Wheeler’s concept of "It from Bit" [5] intriguingly suggests that information underpins the physical world, but it lacks a concrete mechanism for how information structures form and evolve.

Denis Noble’s work on top-down causality [6] emphasizes the role of systems-level behavior in determining lower-level interactions. This perspective challenges reductionist paradigms, but does not address how such systems might emerge from simpler abiotic conditions. On the computational side, Seth Lloyd’s concept of the universe as a quantum computer [7] provides a framework for understanding the universe as a computational entity but leaves open the question of how specific computational rules or patterns arise.

Algorithmic complexity [8,9,10] and Shannon’s information theory [11] provide powerful tools to quantify information and complexity, but do not explain how information is created or evolves within physical systems. These frameworks focus on static measures of complexity, often missing the dynamic interplay between interactions and selection that drives the formation of ordered structures.

This paper introduces Bayesian Assembly (BA) systems, which offer an abstract model for the emergence of complexity and information. Unlike prior work that is either tied to specific physical systems or framed as abstract concepts without implementation pathways, BA systems provide a plausible mechanism for how stable patterns might arise, evolve, and persist based solely on probabilistic interactions.

Constructor Theory [12] provides a foundational framework for understanding physical laws in terms of counterfactuals, that is, statements about which transformations are possible or impossible. This perspective shifts the focus from dynamical laws to the principles governing what can be constructed or maintained by physical systems, offering profound insights into how patterns emerge and persist. While Constructor Theory captures the essence of counterfactual properties, it does not explicitly address the generational dynamics through which such patterns evolve and are selected over time.

Recent developments in Assembly Theory (AT) [13] complement this by providing a quantitative framework for complexity, introducing the assembly index as a measure of the minimal number of recursive steps required to construct an object from basic building blocks. AT emphasizes how physical and historical constraints shape the combinatorial explosion of possibilities, offering a retrospective view of selection. However, while AT identifies selection as central to the emergence of complexity, it does not model the dynamic processes through which selection unfolds in real time.

Kauffman’s Theory of the Adjacent Possible (TAP) emphasizes the combinatorial growth of adjacent possible configurations and introduces a quantitative measure for the rate of discovery within an expanding state space [14]. In contrast, Bayesian Assembly (BA) systems focus explicitly on how stability-driven selection governs the persistence and evolution of patterns, providing a dynamic probabilistic framework that complements and extends the more combinatorial perspective of TAP.

More elaborate chemical modeling systems, such as Mass Action Kinetics (MAK) [15], Chemical Reaction Network Theory (CRNT) [16], and the stochastic framework introduced by Gillespie [17], provide detailed, quantitative descriptions of reaction dynamics, often incorporating conservation laws and equilibrium states. These systems excel at modeling well-defined reaction pathways, particularly in chemical and biochemical systems, but they typically require predefined reaction sets and do not explore open-ended generativity. Tononi’s integrated information theory (IIT) [18] offers a different perspective by quantifying the complexity of neural and information systems through measures like $\Phi$, focusing on the integration and differentiation of information. Despite their strengths, these frameworks do not explicitly isolate selection as an emergent mechanism in the evolution of information.

Bayesian Assembly (BA) systems distinguish themselves by their narrow focus on stability as the central determinant of pattern persistence. Stability is simulated by associating different lifetimes with concatenated patterns, capturing the essential dynamics of selection without invoking detailed physical or chemical laws. This approach allows BA systems to isolate and model selection as the driving mechanism of information evolution. The term "Bayesian" reflects the iterative updating of probabilities for each pattern based on prior abundances and interactions. By emphasizing stability-driven selection in evolving state spaces, BA systems provide a dynamic and generative framework for studying how patterns are created, maintained, and ultimately evolve into complex configurations, complementing existing approaches while filling a crucial gap in our understanding of information evolution.

2. BA System Fundamentals

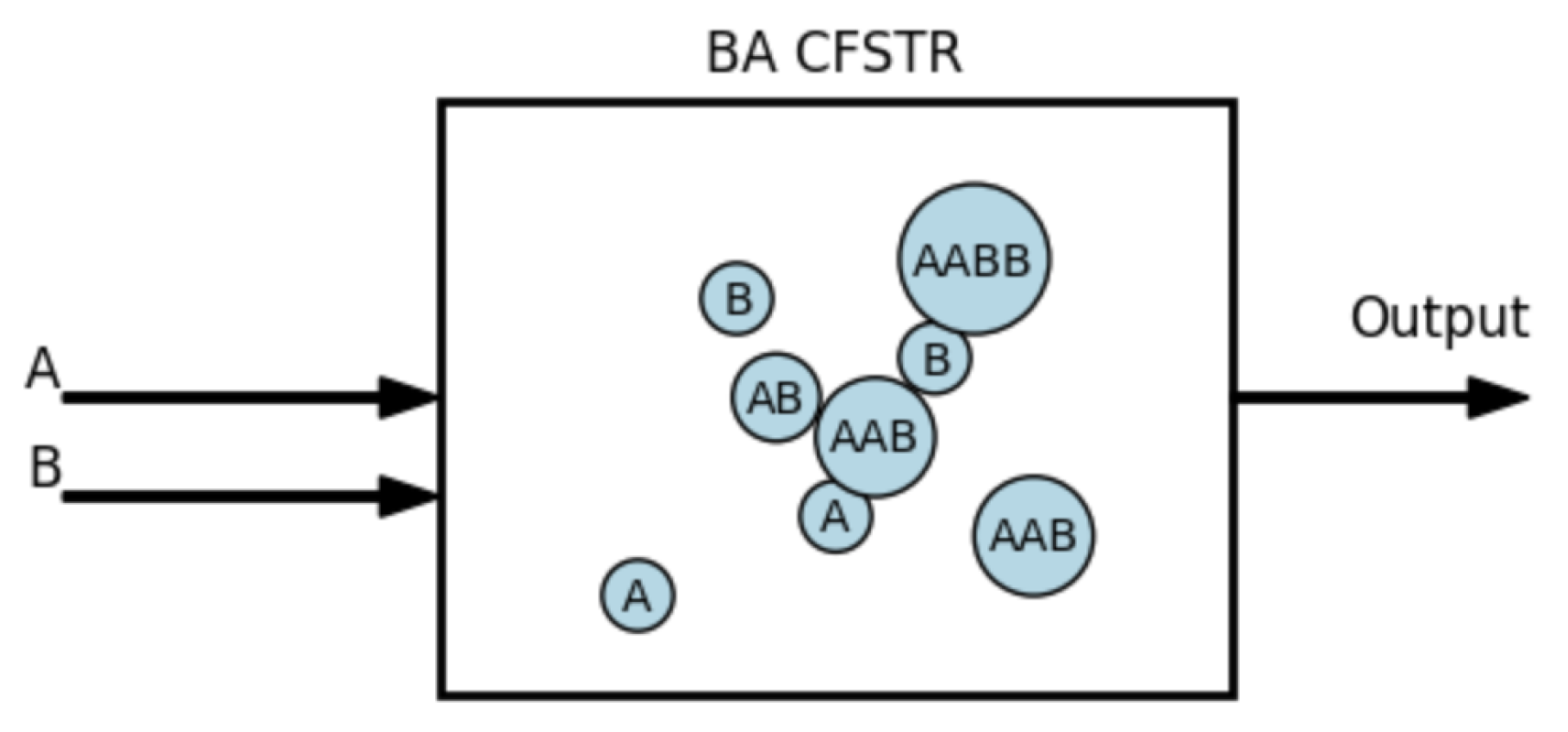

We define Bayesian Assembly (BA) Systems as follows: a population of base elements capable of forming compounds through local interactions of unspecified forces. Compounds are represented as concatenations of base elements and other compounds, thus they can be repetitive and recursive. The elements could exist in our physical universe, or in an abstract universe. The stability of compounds is an abstraction that determines only one thing: how many generations they will persist. For example, if compound AAB has a stability of 10 then it will persist for 10 generations and then disappear. In contrast to bidirectional models like MAK, where dissipation of patterns is modeled explicitly, BA systems just allow the patterns to disappear. Since we do not constrain the interactions themselves in any way, compounds that have a stability of 0 may get created and destroyed in the same generation, which simulates impossible interactions. We allow the base elements to regenerate at a constant rate in every generation. This ensures a continuous influx of resources, preventing stagnation and enabling sustained exploration of the state space. This assumption mirrors the continuous flow stirred tank reactor (CFSTR) methodology commonly employed in chemical reaction modeling, where reactants are replenished at a constant rate to maintain steady-state conditions [19]. The analogy highlights the foundational role of resource replenishment in both theoretical and experimental systems for exploring dynamic behaviors and emergent patterns.

Figure 1. BA clocked equivalent of a Continuous Flow Stirred Tank Reactor (CFSTR)

Selection emerges naturally through the interplay of stability and probabilistic interactions. Consider a simple BA system starting with a few instances of base elements

A and

B. The pattern

has a lifetime of 10 generations, while

has a lifetime of 50 generations, and all other compounds degrade instantly with a lifetime of 0. After the first generation, the system produces combinations such as

,

, and

. Since

and

are unstable, they are eliminated, leaving

A,

B, and

. In subsequent generations, new unstable patterns may appear transiently, but

persists due to its longer lifetime. Over time, the population of

increases probabilistically, as multiple instances of

are likely to form, increasing the likelihood of forming

. This dynamic reflects a form of roulette wheel selection [

22,

23], where the stability-driven persistence of

amplifies its probability of interaction. The emergent pattern

represents a higher-order configuration, demonstrating how selection, grounded in differential stability, shapes the system’s evolutionary pathways. The mechanism relies on the stability-induced bias in interaction probabilities, ensuring that patterns with greater persistence are favored, enabling the accumulation of complexity over successive generations.
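The two-element example above can be sketched as a small simulation. This is a minimal illustration, not the author's implementation. It assumes, purely for illustration, that the 10-generation pattern is called AB and the 50-generation pattern ABAB. It also assumes that interactions are random pairwise concatenations, that free base elements last three generations, and that 20 copies of each base element arrive per generation. The names `STABILITY`, `BASE_LIFETIME`, `INFLUX`, `step`, and `run` are hypothetical.

```python
import random
from collections import Counter

# Hypothetical stability table: lifetime in generations for each compound.
# Any compound not listed has lifetime 0 and never enters the population.
STABILITY = {"AB": 10, "ABAB": 50}
BASE_ELEMENTS = ("A", "B")
BASE_LIFETIME = 3  # assumption: a free base element lasts three generations
INFLUX = 20        # assumption: copies of each base element added per generation


def step(population, rng):
    """Advance a list of (pattern, remaining_lifetime) pairs by one generation."""
    # 1. Constant influx of fresh base elements.
    pool = list(population)
    for element in BASE_ELEMENTS:
        pool.extend((element, BASE_LIFETIME) for _ in range(INFLUX))

    # 2. Random pairwise encounters: shuffle, then try to concatenate neighbours.
    rng.shuffle(pool)
    after_interaction = []
    for i in range(0, len(pool) - 1, 2):
        left, right = pool[i], pool[i + 1]
        compound = left[0] + right[0]
        lifetime = STABILITY.get(compound, 0)
        if lifetime > 0:
            after_interaction.append((compound, lifetime))  # stable: replaces its parts
        else:
            after_interaction.extend((left, right))  # lifetime 0: parts stay unchanged
    if len(pool) % 2:
        after_interaction.append(pool[-1])  # the odd one out did not interact

    # 3. Ageing: every pattern loses one generation; expired patterns disappear.
    return [(p, life - 1) for p, life in after_interaction if life > 1]


def run(generations=200, seed=0):
    """Run the sketch and return the final pattern counts."""
    rng = random.Random(seed)
    population = []
    for _ in range(generations):
        population = step(population, rng)
    return Counter(pattern for pattern, _ in population)


if __name__ == "__main__":
    print(run())
```

Because AB survives long enough to meet another AB, the longer-lived ABAB accumulates without any explicit replication step. Changing the lifetimes in `STABILITY` is a simple way to explore how strongly the outcome depends on differential stability.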

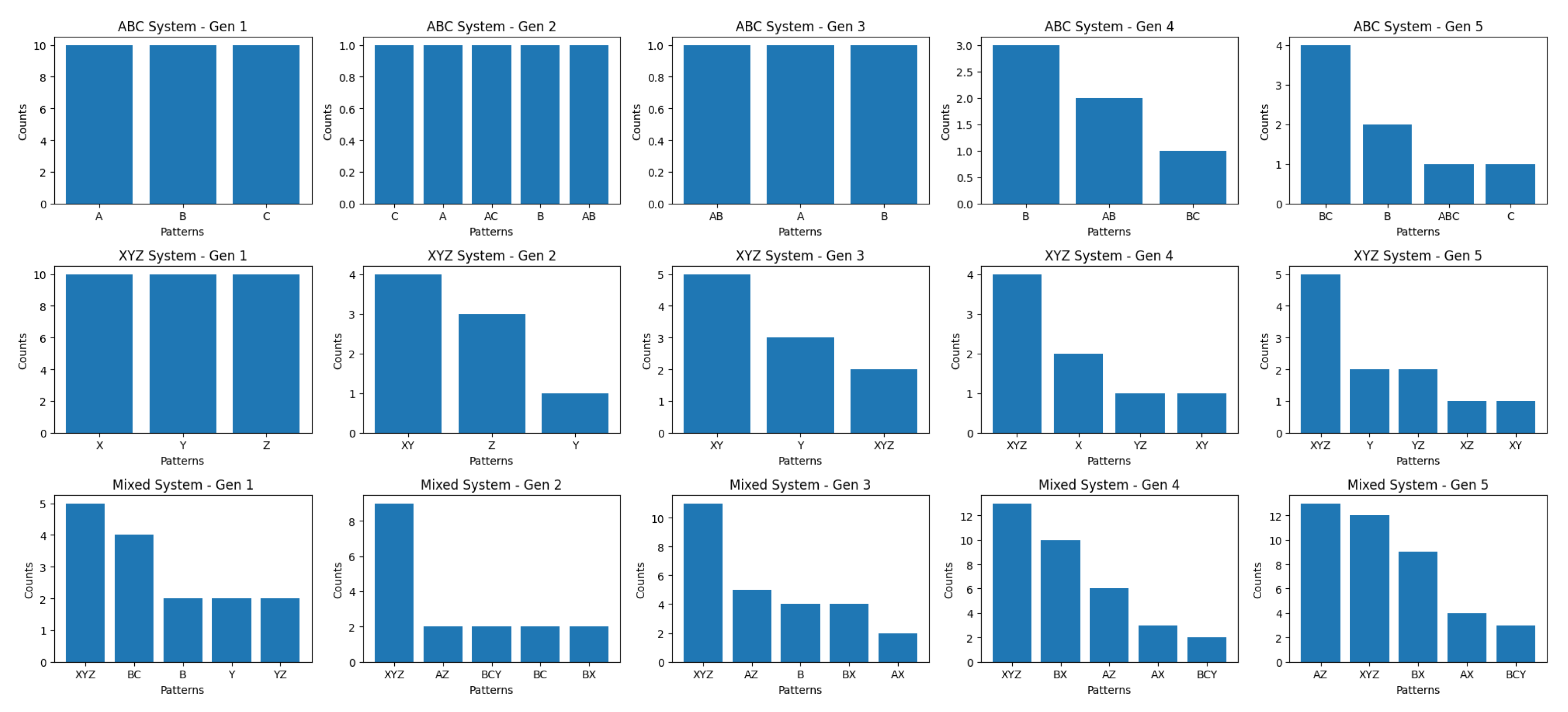

The following simulation shows a different BA system with a population of three base elements: A, B, and C. Assume that compounds containing B are more stable than those without B. Thus patterns like BB, AB, BC, and ABC have a higher stability than AC, A, or C. Therefore, B-compounds will persist for multiple generations, while the others will quickly dissipate. The more stable B-compounds will interact more frequently due to their relative frequency, even without replication or inheritance. A snapshot of the simulation is shown in Figure 2.

Where is Maxwell’s demon [20] hiding in this example, driving it towards a low-entropy state? As we saw, the answer lies in the roulette wheel. Compounds that persist longer have more chances to interact. As their frequency in the population grows, their chances to interact grow even more. In evolutionary dynamics, this is called fitness-proportionate selection [21] or roulette wheel selection [22,23].
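The roulette wheel can be made concrete with a short sketch. In a BA system the wheel is not an explicit algorithmic step; it is what random encounters amount to when some patterns are more abundant than others. The code below only illustrates that sampling rule, assuming a hypothetical snapshot of pattern counts. The function names `roulette_wheel_pick` and `sample_frequencies` are illustrative.

```python
import random
from collections import Counter


def roulette_wheel_pick(counts, rng):
    """Pick one pattern with probability proportional to its current count."""
    patterns = list(counts)
    weights = [counts[p] for p in patterns]
    return rng.choices(patterns, weights=weights, k=1)[0]


def sample_frequencies(counts, draws=10_000, seed=0):
    """Draw repeatedly from the wheel and tally how often each pattern is picked."""
    rng = random.Random(seed)
    return Counter(roulette_wheel_pick(counts, rng) for _ in range(draws))


if __name__ == "__main__":
    # Hypothetical snapshot: B-compounds have accumulated, AC has not.
    snapshot = {"AB": 30, "BC": 15, "AC": 5}
    print(sample_frequencies(snapshot))
```

Patterns that have persisted and accumulated occupy a larger slice of the wheel, so they are drawn into interactions more often. This is the sense in which persistence alone biases the system.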

3. Dual Probability Distributions Governing BA Systems

BA systems can be effectively described by two interdependent probability distributions: the population distribution, which represents the relative abundance of patterns in the system, and the stability distribution, which quantifies the stable lifetime of successful compounds. The population distribution reflects the probabilities of patterns at any given generation $t$. Let $P_t(p)$ represent the probability of pattern $p$ in the population at time $t$:

$$P_t(p) = \frac{N_t(p)}{N_t}$$

where $N_t(p)$ is the absolute count of pattern $p$ in the population at time $t$, and $N_t$ is the total population size at time $t$, ensuring normalization ($\sum_p P_t(p) = 1$). The population distribution evolves over generations as patterns interact and new stable configurations emerge. As patterns with higher stability and frequent interactions become more prominent in the population, the population distribution evolves to reflect the history of past interactions and selection pressures.
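As a small illustration of the definition above, the population distribution is just the relative frequency of each pattern: its count divided by the total population size. The helper name `population_distribution` is hypothetical.

```python
from collections import Counter


def population_distribution(patterns):
    """Map each pattern to count / total, i.e. its relative frequency."""
    counts = Counter(patterns)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("the population is empty")
    return {pattern: n / total for pattern, n in counts.items()}


if __name__ == "__main__":
    # Hypothetical population of four patterns.
    print(population_distribution(["A", "A", "B", "AB"]))
```

The returned values sum to one by construction, which is the normalization condition stated in the text.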

The stability distribution $S(p)$ represents the persistence or longevity of the individual pattern $p$ in the system, defined as the expected lifetime or persistence of $p$ over generations. Stability is a measure of how long a pattern remains viable before it dissipates or is replaced, reflecting physical properties such as energy barriers, bond strengths, environmental effects, and resistance to degradation.

Stability influences the dynamics of the system by determining how long a pattern persists to participate in future interactions. Patterns with higher stability values are more likely to accumulate over generations, increasing their representation in the population and increasing the likelihood of forming new patterns. This persistence-driven selection creates a feedback loop, where stable patterns dominate the system dynamics, guiding the evolution of complexity.

In simulations of real-world scenarios, the values of $S(p)$ would reflect the underlying physical, chemical, or even economic or social principles. In a chemical context, for example, these stability factors might be derived from binding energies, reaction rate constants, or free energies of formation. In an economic model, they could represent relative cost efficiencies or market resilience. In each case, $S(p)$ encodes the notion of persistence in the relevant domain: the likelihood that a given compound, pattern, or agent survives long enough to interact and persist into the next generation. Unlike the population distribution, which evolves dynamically based on interactions, the stability distribution remains constant or changes only gradually.

3.1. Combined Role of Population and Stability Distributions

Together, the population and stability distributions determine the dynamics of the system. The full probability expression for the distribution at the next generation, including the normalization denominator, is:

$$P_{t+1}(p) = \frac{P_t(p)\, S(p)}{\sum_{q} P_t(q)\, S(q)}$$

Intuitively, multiplying $P_t(p)$ by $S(p)$ reflects two factors: the initial abundance (or likelihood) of the pattern, and its ability to persist over enough time (generations) to interact repeatedly. Since $S(p)$ is interpreted as the expected lifetime of the pattern, having more time (counted in generations) to react or be selected naturally increases its representation in the next update. Meanwhile, a pattern’s prior probability $P_t(p)$ captures how commonly it appears in the current generation. Both a high initial abundance and a long lifetime can boost a pattern’s success in the subsequent generation. For simplicity, we now use the proportional form in our discrete analysis:

$$P_{t+1}(p) \propto P_t(p)\, S(p)$$

Here, $S(p)$ represents the stable lifetime of pattern $p$. Multiplying $P_t(p)$ by $S(p)$ ensures that patterns with higher stability gain an advantage in subsequent generations, as they remain available for more interactions or persist longer. This update rule encapsulates the idea that a stable pattern is more likely to be “selected” over time. To quantify the system-wide impact of stability, we define an effective stability:

$$S_{\text{eff}}(t) = \sum_{p} P_t(p)\, S(p)$$

which measures the average or global level of stability in the population. If the system becomes dominated by highly stable patterns, $S_{\text{eff}}$ grows, increasing the overall rate at which such patterns are reinforced. Conversely, if less stable patterns predominate, $S_{\text{eff}}$ is smaller, slowing the formation of ordered structures. In a continuous-time limit approximation, we can write

leading to an exponential solution of the form

This exponential growth demonstrates how feedback from stable patterns accelerates the ordering process, continually reinforcing those patterns that remain viable. Entropy changes arise from two competing processes. On the one hand, random fluctuations broaden the distribution (increasing entropy) at a logarithmic rate. As in other diffusive or random-walk scenarios, the accessible state space grows sub-exponentially (e.g., proportional to

), causing the associated entropy to accumulate at a rate proportional to

:

whereas reinforcing stable patterns focuses the distribution (decreasing entropy) at an exponential rate:

If the stabilizing feedback outpaces random diffusion, the net result is a persistent reduction in entropy, guiding the system toward ordered, low-entropy configurations characteristic of Bayesian Assembly (BA) dynamics.
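The selection half of this argument can be checked numerically with a minimal sketch. It assumes the discrete rule in which each pattern's next-generation probability is its current probability times its stability, renormalized. The effective stability is taken to be the population-weighted mean stability, and entropy is Shannon entropy in bits. The stability values and function names are hypothetical. The sketch leaves out the diffusive term and the creation of new patterns, so it shows only the entropy-reducing effect of reinforcement.

```python
import math


def update(prior, stability):
    """One generation: weight each probability by its stability, then renormalize."""
    weighted = {p: prob * stability.get(p, 0.0) for p, prob in prior.items()}
    norm = sum(weighted.values())
    if norm == 0:
        raise ValueError("no pattern has positive stability")
    return {p: w / norm for p, w in weighted.items()}


def effective_stability(dist, stability):
    """Population-weighted mean stability."""
    return sum(prob * stability.get(p, 0.0) for p, prob in dist.items())


def shannon_entropy(dist):
    """Shannon entropy of the population distribution, in bits."""
    return -sum(q * math.log2(q) for q in dist.values() if q > 0)


def trajectory(prior, stability, generations):
    """Return [(effective stability, entropy)] for generations 0..generations."""
    dist, history = dict(prior), []
    for _ in range(generations + 1):
        history.append((effective_stability(dist, stability), shannon_entropy(dist)))
        dist = update(dist, stability)
    return history


if __name__ == "__main__":
    # Hypothetical stabilities and a uniform starting population.
    stability = {"A": 1, "B": 1, "AB": 10, "ABAB": 50}
    prior = {p: 0.25 for p in stability}
    for t, (s_eff, h) in enumerate(trajectory(prior, stability, 5)):
        print(f"t={t}  S_eff={s_eff:.3f}  H={h:.4f} bits")
```

Under these assumptions the effective stability rises and the entropy falls in every generation, as the distribution concentrates on the most stable pattern.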

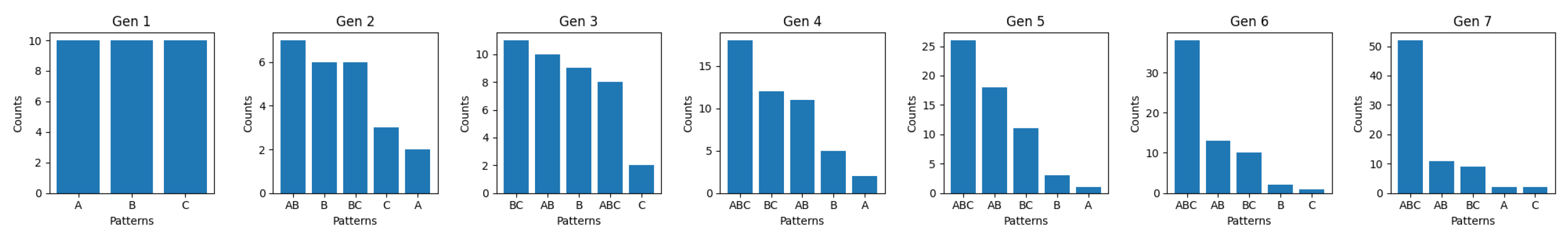

4. Mixing Two BA Systems

Mixing two independently evolved BA systems introduces a new dimension to their dynamics, where patterns and interactions from each system influence the other. This interaction results in the exchange of information, changes in entropy, and the potential emergence of novel patterns.

Let two independently evolved BA systems represent populations of compounds built from two different sets of base elements. Each system evolves independently on the basis of its population distribution and stability constraints. When the two systems are mixed, the patterns from both interact, allowing the formation of cross-system compounds (e.g., AZ). Stability constraints extend to intersystem interactions. The joint system evolves on the basis of updated probabilities reflecting both within-system and cross-system interactions.

In a continuous-flow stirred tank reactor (CFSTR) [19], two different chemical feeds are mixed, producing a steady-state mixture. Once mixed, reactants from each feed can participate in cross-reactions, occasionally generating novel compounds (like AZ in the figure above) that surpass the stability of any compound found in either feed. In a Bayesian Assembly (BA) analogy, new high-stability patterns proliferate over less stable alternatives. This explains how mixing can yield emergent compounds and reaction pathways that were inaccessible in isolation, driving a further entropy reduction.
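A hedged numerical sketch of this mixing argument follows. It assumes the stability-weighted update rule (probability times stability, renormalized). It also assumes that mixing is a weighted average of the two population distributions and that cross-system compounds first appear at a small seed probability. The patterns A, AB, Z, and YZ, all stability values, and the function names are hypothetical; only the cross compound AZ is taken from the text.

```python
def update(prior, stability):
    """One generation: weight each probability by its stability, then renormalize."""
    weighted = {p: prob * stability.get(p, 0.0) for p, prob in prior.items()}
    norm = sum(weighted.values())
    if norm == 0:
        raise ValueError("no pattern has positive stability")
    return {p: w / norm for p, w in weighted.items()}


def mix(dist_a, dist_b, weight_a=0.5):
    """Combine two population distributions into one normalized mixture."""
    mixed = {p: weight_a * q for p, q in dist_a.items()}
    for p, q in dist_b.items():
        mixed[p] = mixed.get(p, 0.0) + (1.0 - weight_a) * q
    return mixed


def seed_cross_patterns(dist, cross_patterns, epsilon):
    """Give each new cross-system pattern a small probability, keeping the total at 1."""
    scale = 1.0 - epsilon * len(cross_patterns)
    seeded = {p: q * scale for p, q in dist.items()}
    for pattern in cross_patterns:
        seeded[pattern] = seeded.get(pattern, 0.0) + epsilon
    return seeded


def evolve(dist, stability, generations):
    """Apply the update rule for a fixed number of generations."""
    for _ in range(generations):
        dist = update(dist, stability)
    return dist


if __name__ == "__main__":
    system_1 = {"A": 0.5, "AB": 0.5}   # hypothetical evolved population 1
    system_2 = {"Z": 0.5, "YZ": 0.5}   # hypothetical evolved population 2
    # Hypothetical stabilities; AZ is assumed more stable than any single-feed compound.
    stability = {"A": 1, "AB": 10, "Z": 1, "YZ": 10, "AZ": 40}
    mixed = seed_cross_patterns(mix(system_1, system_2), ["AZ"], epsilon=0.01)
    print(evolve(mixed, stability, 10))
```

Even from a one percent seed, the more stable cross compound overtakes the best single-feed compounds within a few generations under these assumptions. This illustrates the further entropy reduction described above.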

Figure 3. Mixing two evolved populations

5. Bayesian Updating in BA Systems

Kauffman’s Theory of the Adjacent Possible [28] provides a useful conceptual backdrop for understanding how new states or patterns can emerge once existing states pave the way for them. In chemical and biological contexts, the “adjacent possible” refers to structures or configurations that become accessible only after certain precursors appear. In a Bayesian Assembly (BA) framework, such newly available patterns are those that can arise in generation $t+1$ but were beyond reach in generation $t$. The BA perspective adds a dynamic view of selection: it explicitly tracks how some of these emergent patterns persist and proliferate when they possess sufficient node stability. As we saw in Equation 1, the BA generational update rule can be written as:

$$P_{t+1}(p) \propto P_t(p)\, S(p)$$

where $P_t(p)$ is the probability of pattern $p$ in generation $t$ and $S(p)$ is its stability. This rule can be interpreted as a Bayesian evolutionary formula:

$$\text{Posterior} \propto \text{Prior} \times \text{Likelihood}$$

Stability plays the role of Likelihood in the Bayesian equation because it is a measure of the likelihood of the specific pattern to persist over time, thereby determining its chances of being selected to interact with other patterns in the next generations. This generational update, in turn, drives an evolutionary exploration of the adjacent possible: once a newly available pattern displays sufficiently high node stability, its probability can rise, thus reshaping the distribution of patterns in subsequent generations.
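The correspondence with Bayes' rule can be written out term by term. The block below is a sketch under the notation assumed here: $P_t(p)$ is the probability of pattern $p$ in generation $t$, $S(p)$ is its stability, and $\bar S_t$ is the normalizing sum, which plays the role of the evidence. The last line follows by subtracting $P_t(p)$ from both sides of the first. The two-pattern numbers are hypothetical.

```latex
\begin{align}
  \underbrace{P_{t+1}(p)}_{\text{posterior}}
    &= \frac{\overbrace{P_t(p)}^{\text{prior}}\;\overbrace{S(p)}^{\text{likelihood}}}
            {\underbrace{\bar S_t}_{\text{evidence}}},
    \qquad \bar S_t = \sum_{q} P_t(q)\, S(q), \\[4pt]
  P_{t+1}(p) - P_t(p)
    &= P_t(p)\,\frac{S(p) - \bar S_t}{\bar S_t}.
\end{align}

% Worked example with two patterns (hypothetical values):
%   P_t = (1/2, 1/2),  S = (10, 50)
%   \bar S_t = (1/2)(10) + (1/2)(50) = 30
%   P_{t+1} = (5/30, 25/30) = (1/6, 5/6)
```

The second line shows that a pattern gains probability exactly when its stability exceeds the current population average, and loses probability otherwise.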

This interpretation of the evolving process aligns with the view of Bayesian probabilities as a hallmark of dynamical systems [29], where the distribution of each generation plays the role of the prior, and the posterior emerges as a genuinely new distribution, rather than a subjective refinement of a single unknown distribution, allowing novel patterns and interactions to reshape the system over time. The distribution of compounds in each generation is determined by relative frequencies (reflecting a frequentist probability), while the transition to the next generation is driven by a Bayesian update process (reflecting an evolving Bayesian probability).

6. Top-Down vs. Bottom-Up Dynamics in BA Systems

Bayesian Assembly (BA) systems exhibit two complementary forms of causality, previously identified as bottom-up and top-down. From a bottom-up perspective, local probabilistic interactions drive the system’s evolution by creating or dissolving patterns. These interactions are regulated by node stability and selection pressures, producing emergent order from initially simple building blocks.

Once certain patterns become dominant, however, they exert a top-down influence on subsequent generations. These persistent, higher-level structures shape future interactions by catalyzing or constraining the formation of new patterns. Hence, BA systems do not merely accumulate complexity through local rules; they also incorporate feedback from emergent structures that guide (or “select for”) new configurations. The presence of memory and evolutionary history in these feedback loops distinguishes BA systems from frameworks in which higher-level states simply describe aggregates without influencing the underlying microstates.

The contrast with traditional statistical mechanics is informative [

30]. In a typical statistical-mechanical setting, macrostates (e.g., temperature, pressure) characterize large-scale properties that reflect averages of microstates; they rarely feed back to alter the fundamental interaction rules among particles. By contrast, the dominant patterns in BA systems play a direct, causal role in shaping micro-level interactions. This effect renders BA systems capable of embedding history and specificity into the very process that generates new patterns, rather than relying on pre-defined energy distributions or equilibrium conditions.

Such an explicit interplay between bottom-up and top-down dynamics in BA systems suggests a broader principle for understanding complexity: emergent structures can become causal agents that direct further evolution of the system. This perspective helps explain the accumulation of novel order in contexts from prebiotic chemistry to computational networks, where higher-level assemblies (compounds, motifs, or functional components) increasingly govern local interactions, thereby catalyzing the exploration of new possibilities for growth and adaptation.

These dynamics at times appear teleological, as if the system were “seeking” more stable or more complex configurations. Bertalanffy [31] cautions that such minimization processes, while suggestive of goal-directed behavior, often reflect nothing more than the natural unfolding of feedback loops and gradients rather than true purposeful design. BA systems likewise illustrate how feedback from emergent structures can drive the exploration of new system states without implying an external or predetermined goal. By capturing both bottom-up and top-down mechanisms in an evolving distribution, BA systems provide a model for understanding how complexity can accumulate, whether in prebiotic chemistry or in computational and informational domains, through iterative processes of selection and the continual reshaping of local interactions.

7. Conclusion

BA systems provide a simple framework for understanding the emergence of complexity and order in systems governed by probabilistic interactions and stability-driven selection. By abstracting away specific physical laws, these systems offer a testable and generalizable model applicable to a wide range of phenomena.

The iterative interaction of elements in BA systems may help explain the evolution of matter and energy, from the aggregation of quarks into composite particles such as protons and neutrons to the evolution of molecules and chemistry. The periodic table, for example, organizes elements based on properties such as ionization energy and chemical reactivity. These properties bias element interactions, favoring stable configurations. For instance, molecular hydrogen ($\mathrm{H_2}$) forms in dense regions of space where hydrogen atoms collide and bond. It is stable due to its strong covalent bond, and serves as a building block for stars. This reflects the dominance of stability-driven patterns, akin to how BA systems reinforce stable patterns over generations.

BA systems suggest that evolution may be a universal property of random populations with stability imbalances, not confined to living organisms. By demonstrating how random populations with stability-driven interactions naturally evolve toward order, this framework proposes that perhaps biological evolution of individual organisms is a later stage in such dynamics. Recent findings suggest that stability-driven self-assembly mechanisms may play a crucial role in biotic systems, highlighting the interplay between abiotic and biological evolution [33].

BA systems provide a non-mystical explanation of the origins of order and information, but they still leave open the question of fine-tuning. Fine-tuned imbalances, such as those described in Rees’s Just Six Numbers [34] and Davies’s The Goldilocks Enigma [35], exemplify how asymmetries in fundamental constants enable complexity across scales. For instance, quantum fluctuations may have seeded the formation of stars and galaxies. Similarly, in BA systems, non-uniform stability biases interactions toward forming persistent ordered patterns. This connection reinforces the idea that evolution, driven by imbalance and selection, operates universally, bridging the emergence of complexity from cosmological to molecular scales. In summary, if the analogy holds, and the universe turns out to evolve like a BA system, one might be tempted to say that God does indeed play dice, but the dice are loaded.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The author declares no conflicts of interest.

References

1. Schrödinger, E. What is Life? Cambridge University Press: Cambridge, UK, 1944.
2. Tegmark, M. The Mathematical Universe. Found. Phys. 2008, 38, 101–150.
3. Fisher, R.A. The Genetical Theory of Natural Selection; Oxford University Press: Oxford, UK, 1930.
4. Nowak, M.A. Evolutionary Dynamics: Exploring the Equations of Life; Belknap Press: Cambridge, MA, USA, 2006.
5. Wheeler, J.A. Information, Physics, Quantum: The Search for Links. In Complexity, Entropy, and the Physics of Information; Zurek, W.H., Ed.; Addison-Wesley: Redwood City, CA, USA, 1990; pp. 3–28.
6. Noble, D. A Theory of Biological Relativity: No Privileged Level of Causation. Interface Focus 2012, 2, 55–64.
7. Lloyd, S. Programming the Universe: A Quantum Computer Scientist Takes on the Cosmos; Alfred A. Knopf: New York, NY, USA, 2006.
8. Kolmogorov, A.N. Three Approaches to the Quantitative Definition of Information. Problemy Peredachi Informatsii 1965, 1, 3–11.
9. Chaitin, G.J. Algorithmic Information Theory. IBM J. Res. Dev. 1977, 21, 350–359.
10. Solomonoff, R.J. A Formal Theory of Inductive Inference. Part I and Part II. Inf. Control 1964, 7, 1–22.
11. Shannon, C.E. A Mathematical Theory of Communication. Bell Syst. Tech. J. 1948, 27, 379–423.
12. Deutsch, D.; Marletto, C. Constructor theory of information. Proceedings of the Royal Society A: Mathematical, Physical and Engineering Sciences 2015, 471, 20140540.
13. Walker, S.I.; Cronin, L.; et al. Assembly theory explains and quantifies selection and evolution across physical and biological systems. Nature 2023, 618, 619–628.
14. Cortês, M.; Kauffman, S.A.; Liddle, A.R.; Smolin, L. The TAP equation: evaluating combinatorial innovation in Biocosmology. arXiv 2024, arXiv:2204.14115.
15. Turányi, T.; Tomlin, A.S. Analysis of Kinetic Reaction Mechanisms; Springer, 2014.
16. Feinberg, M. Chemical Reaction Network Structure and the Stability of Complex Isothermal Reactors—I. The Deficiency Zero and Deficiency One Theorems. Chemical Engineering Science 1987, 42, 2229–2268.
17. Gillespie, D.T. Stochastic Simulation of Chemical Kinetics. arXiv 2005, arXiv:q-bio/0501016. Available online: https://arxiv.org/pdf/q-bio/0501016.
18. Tononi, G. Consciousness as Integrated Information: A Provisional Manifesto. Biological Bulletin 2008, 215, 216–242.
19. Fogler, H.S. Elements of Chemical Reaction Engineering, 3rd ed.; Prentice Hall, 1999.
20. Leff, H.S.; Rex, A.F. Maxwell’s Demon: Entropy, Information, Computing; Princeton University Press: Princeton, NJ, USA, 2002.
21. Bäck, T.; Fogel, D.B.; Michalewicz, Z. Evolutionary Computation 1: Basic Algorithms and Operators; CRC Press: FL, USA, 2000.
22. Goldberg, D.E. Genetic Algorithms in Search, Optimization, and Machine Learning; Addison-Wesley: Boston, MA, USA, 1989.
23. Holland, J.H. Adaptation in Natural and Artificial Systems; University of Michigan Press: Ann Arbor, MI, USA, 1975.
24. Wolfram, S. Statistical Mechanics of Cellular Automata. Rev. Mod. Phys. 1983, 55, 601–644.
25. Pauling, L. The Nature of the Chemical Bond; Cornell University Press: Ithaca, NY, USA, 1960.
26. Fox, G.E. Origin and evolution of the ribosome. Cold Spring Harbor Perspectives in Biology 2010, 2, a003483.
27. McGrayne, S.B. The Theory That Would Not Die: How Bayes’ Rule Cracked the Enigma Code, Hunted Down Russian Submarines, and Emerged Triumphant from Two Centuries of Controversy; Yale University Press: New Haven, CT, USA, 2011.
28. Kauffman, S. Investigations; Oxford University Press: New York, NY, USA, 2000.
29. Le, N. The Equation of Knowledge: From Bayes’ Rule to a Unified Philosophy of Science; Philosophical Press: New York, NY, USA, 2020.
30. Landau, L.D.; Lifshitz, E.M. Statistical Physics; Pergamon Press, 1980.
31. von Bertalanffy, L. General System Theory; George Braziller: New York, NY, USA, 1968.
32. Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction; MIT Press, 2018.
33. Davies, J.; Levin, M. Self-Assembly: Synthetic morphology with agential materials. Nature Reviews Bioengineering 2023, 1.
34. Rees, M. Just Six Numbers: The Deep Forces that Shape the Universe; Basic Books: New York, NY, USA, 2000.
35. Davies, P. The Goldilocks Enigma: Why is the Universe Just Right for Life? Allen Lane: London, UK, 2006.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).