Submitted: 08 December 2024

Posted: 09 December 2024


Abstract

Keywords:

Introduction

Materials and Methods

Results and Discussion

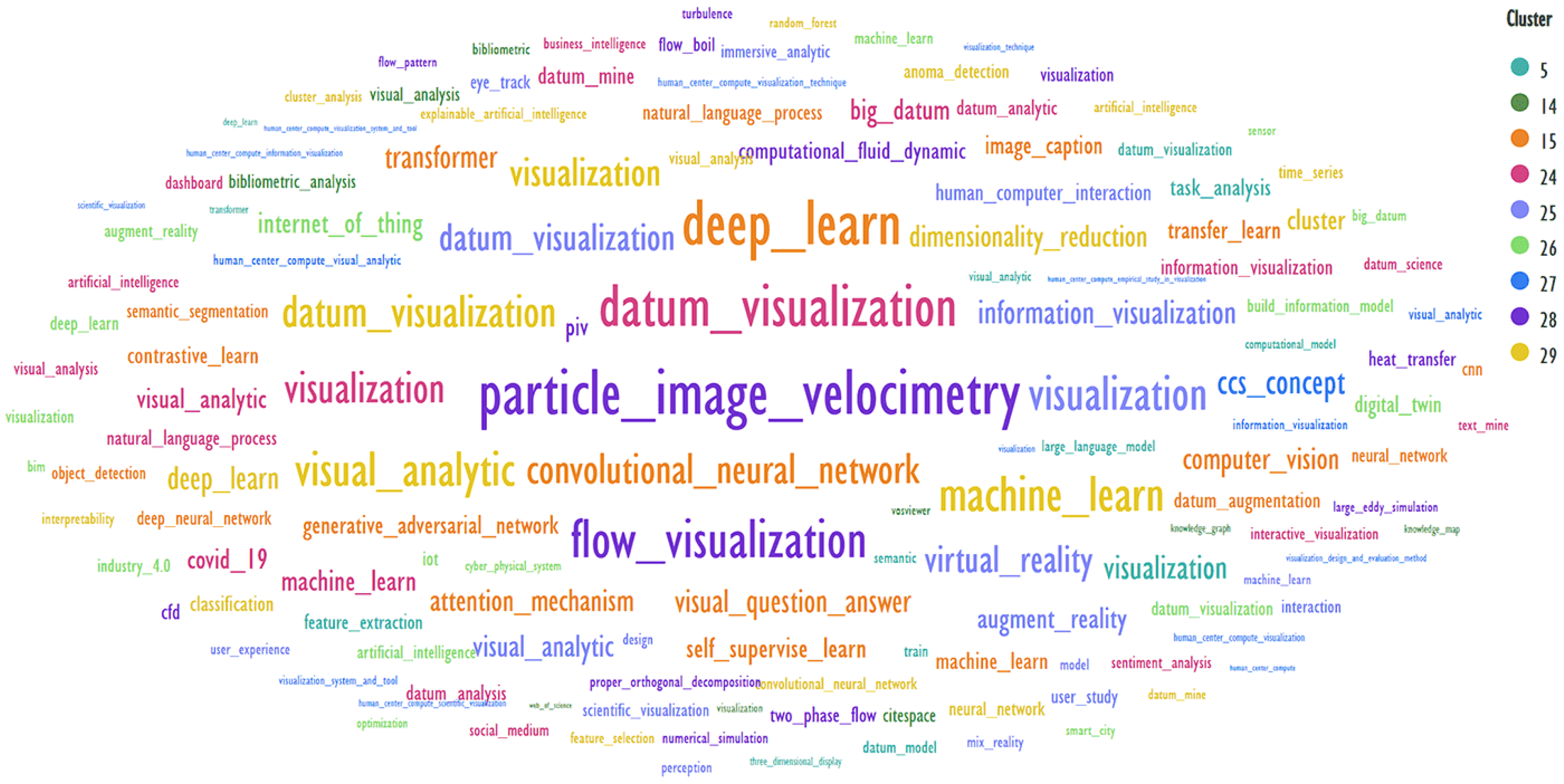

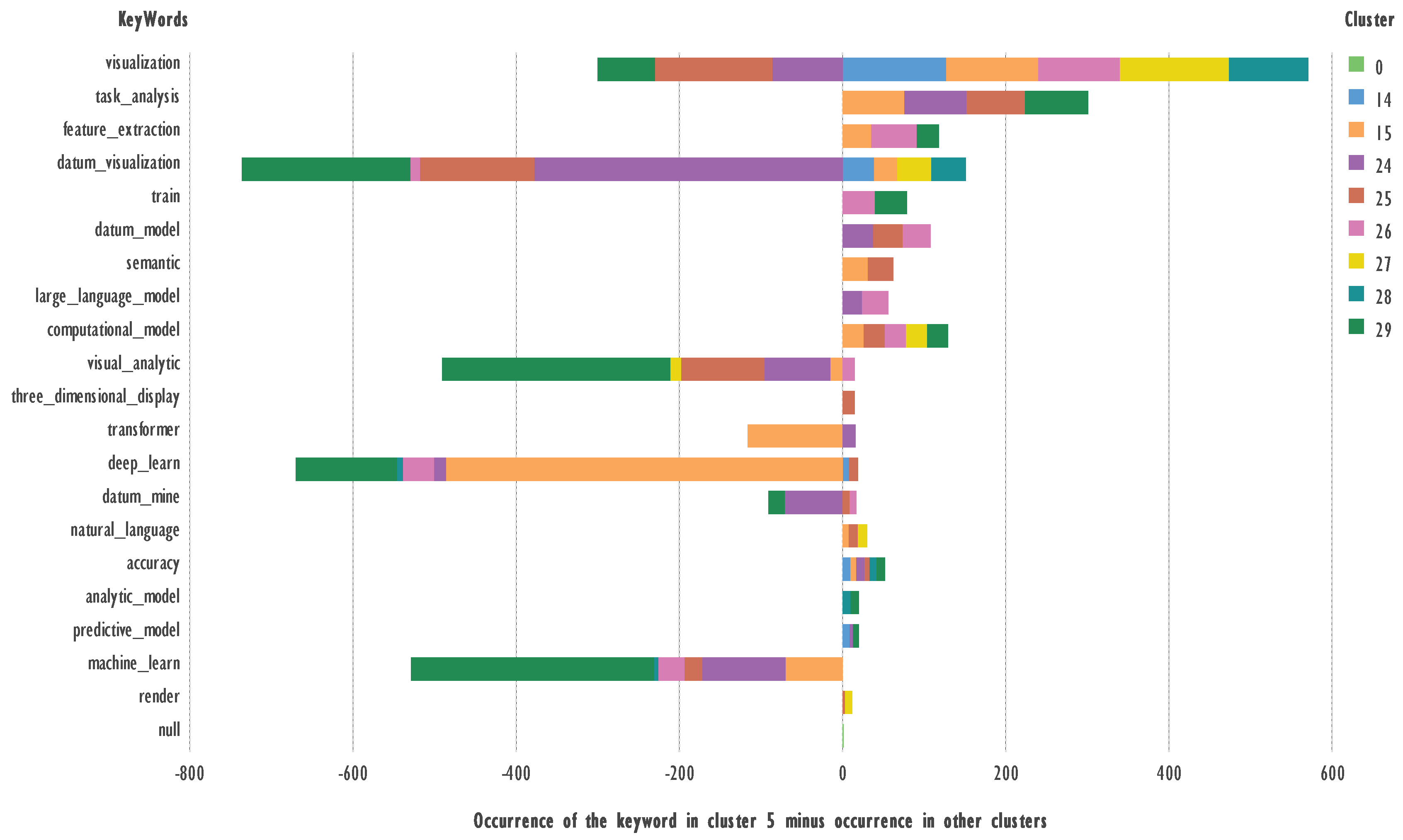

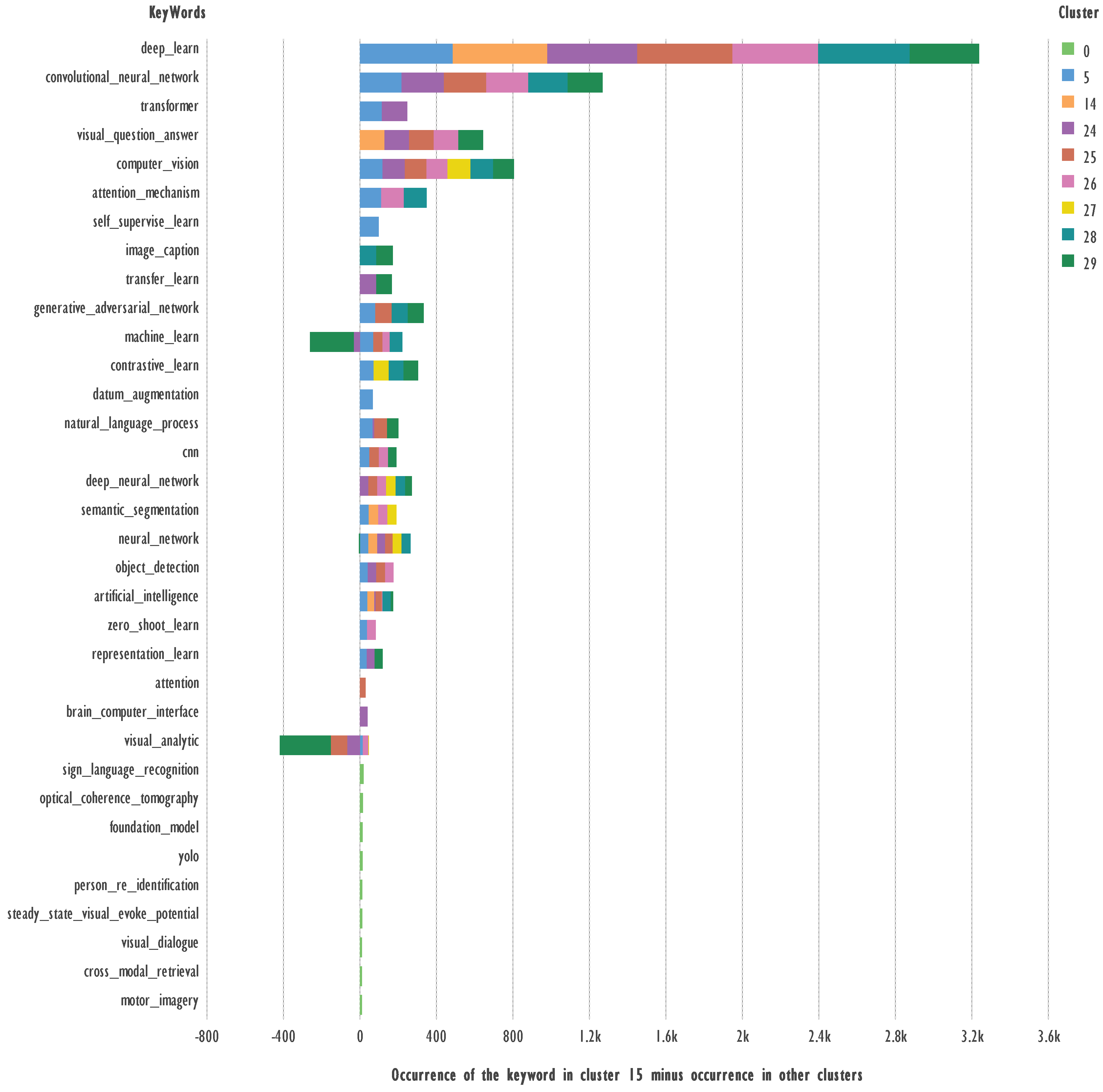

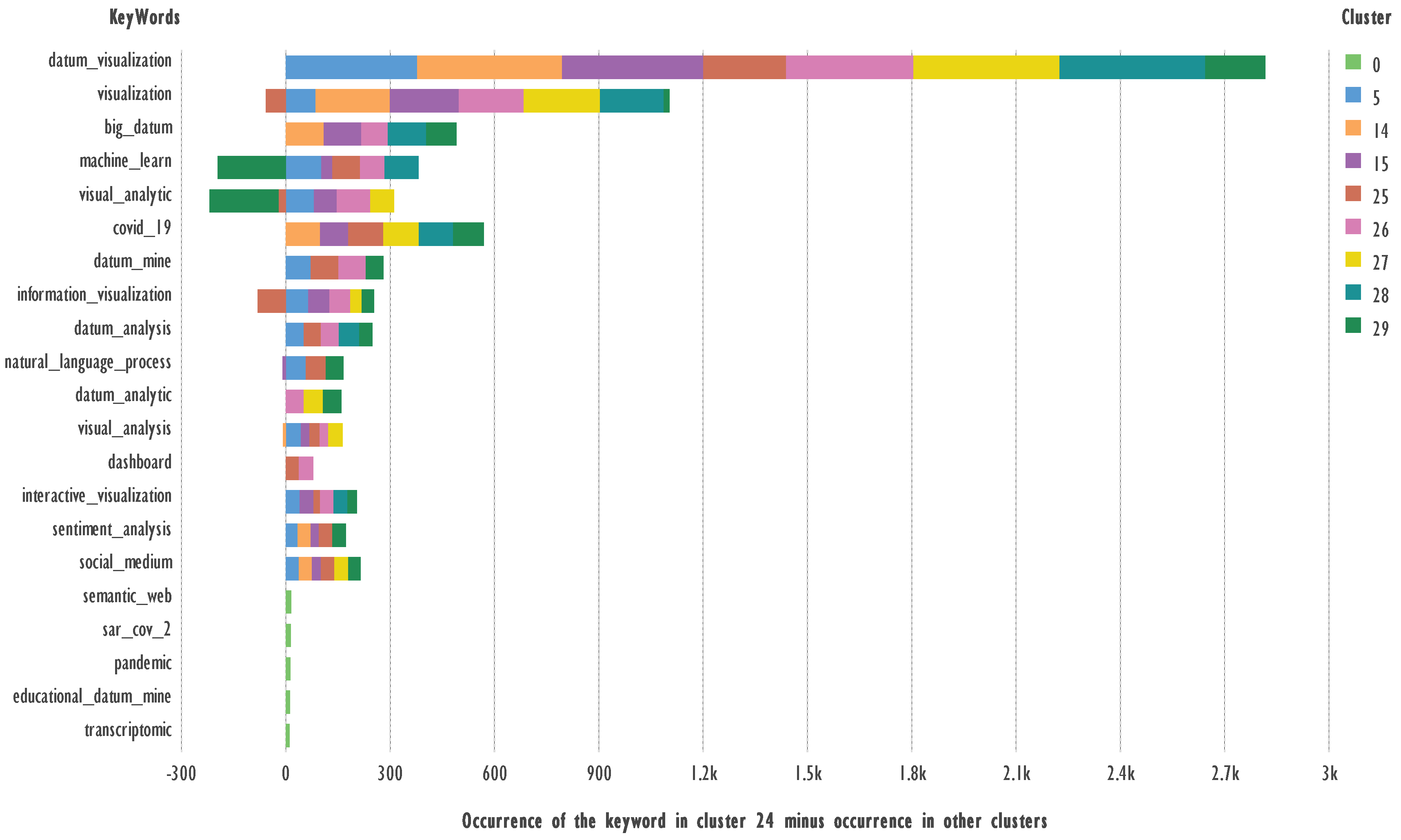

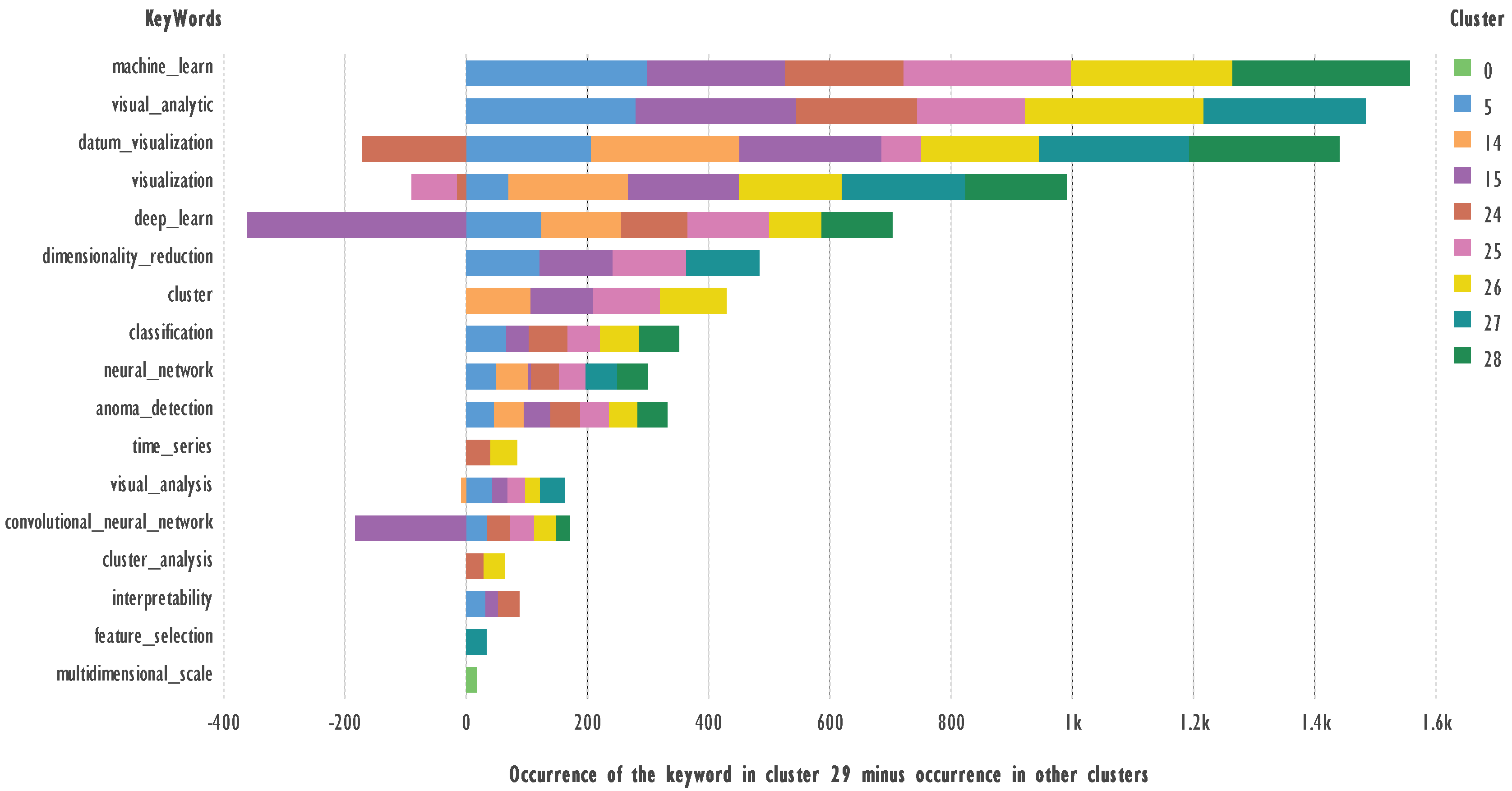

Analyzing the Data of Each Cluster Obtained Using the GSDMM Algorithm

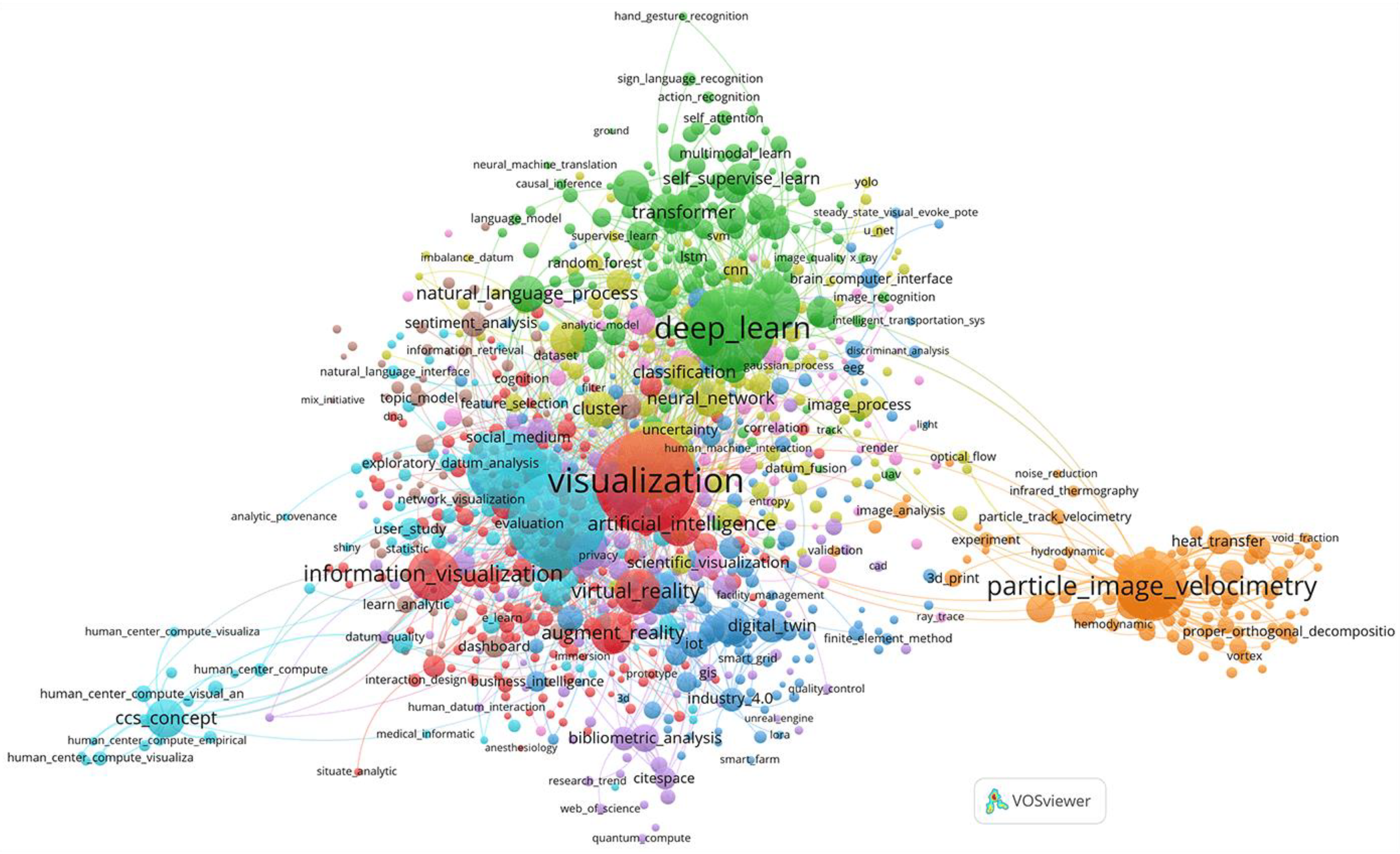

Comparison of Author Keywords in VOSviewer and GSDMM Clusters

Conclusions

- compile a thematic dictionary of abbreviations so that equivalent terms, such as IoT and Internet of Things, are interpreted unambiguously;

- extend the resulting method for visualizing clustering results to other short texts, namely titles and abstracts;

- analyze how compiling such a dictionary affects the clustering results of the GSDMM algorithm.
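The abbreviation dictionary proposed in the first item could be prototyped roughly as follows. This is a minimal sketch, not the authors' implementation: the names `ABBREVIATIONS`, `expand_keyword`, and `merge_counts` are hypothetical, and the abbreviation pairs and counts are simply read off the keyword tables in this paper (VOSviewer column).

```python
from collections import Counter

# Hypothetical thematic dictionary: abbreviation -> full normalized keyword.
# The pairs come from keywords that appear in this paper's tables; the
# dictionary structure itself is an assumption made for illustration.
ABBREVIATIONS = {
    "iot": "internet_of_thing",
    "bim": "build_information_model",
    "piv": "particle_image_velocimetry",
    "cfd": "computational_fluid_dynamic",
}


def expand_keyword(keyword: str) -> str:
    """Return the full form of a known abbreviation, else the keyword unchanged."""
    return ABBREVIATIONS.get(keyword, keyword)


def merge_counts(counts: dict[str, int]) -> Counter:
    """Sum the counts of keywords that map to the same full form."""
    merged = Counter()
    for keyword, n in counts.items():
        merged[expand_keyword(keyword)] += n
    return merged


# Counts copied from the VOSviewer column of the big_datum table.
vos_counts = {
    "internet_of_thing": 147,
    "iot": 72,
    "build_information_model": 65,
    "bim": 34,
    "digital_twin": 105,
}

merged = merge_counts(vos_counts)
for keyword, n in merged.most_common():
    print(keyword, n)
```

Summing the counts treats every occurrence as a separate record. If a paper lists both the abbreviation and the full form, the merged total overstates the number of papers, so a real pipeline would more likely normalize keywords per record before counting.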

| No. | Repository | Description |
|---|---|---|
| 1 | https://github.com/MaartenGr/BERTopic | BERTopic is a topic modeling technique that leverages transformers and c-TF-IDF to create dense clusters. |
| 2 | https://github.com/lmcinnes/umap | Uniform Manifold Approximation and Projection |
| 3 | https://github.com/centre-for-humanities-computing/tweetopic | Blazing fast topic modelling for short texts |
| 4 | https://github.com/Illias-b/Natural-Language-Processing/ | A concise project analysing NPS survey data using Python tools and GSDMM for topic modelling |
| 5 | https://github.com/rwalk/gsdmm-rust | GSDMM: Short text clustering (Rust implementation) |

References

- McInnes L, Healy J, Melville J. UMAP: Uniform Manifold Approximation and Projection for Dimension Reduction. 2018.

- Wang M, Vijayaraghavan A, Beck T, Posma JM. Vocabulary Matters: An Annotation Pipeline and Four Deep Learning Algorithms for Enzyme Named Entity Recognition. J Proteome Res 2024;23:1915–25.

- Yin J, Wang J. A Dirichlet multinomial mixture model-based approach for short text clustering. Proceedings of the 20th ACM SIGKDD international conference on Knowledge discovery and data mining, New York, New York, USA: ACM; 2014, p. 233–42.

- Hassan-Montero Y, De-Moya-Anegón F, Guerrero-Bote VP. SCImago Graphica: a new tool for exploring and visually communicating data. EPI 2022:e310502.

- Elshehaly M, Randell R, Brehmer M, McVey L, Alvarado N, Gale CP, et al. QualDash: Adaptable Generation of Visualisation Dashboards for Healthcare Quality Improvement. IEEE Trans Visual Comput Graphics 2021;27:689–99.

- Ding X, Yang Z. Knowledge mapping of platform research: a visual analysis using VOSviewer and CiteSpace. Electron Commer Res 2022;22:787–809.

- Minaee S, Minaei M, Abdolrashidi A. Deep-Emotion: Facial Expression Recognition Using Attentional Convolutional Network. Sensors 2021;21:3046.

- Camacho D, Panizo-LLedot Á, Bello-Orgaz G, Gonzalez-Pardo A, Cambria E. The four dimensions of social network analysis: An overview of research methods, applications, and software tools. Information Fusion 2020;63:88–120.

- Yang Y, Dwyer T, Marriott K, Jenny B, Goodwin S. Tilt Map: Interactive Transitions Between Choropleth Map, Prism Map and Bar Chart in Immersive Environments. IEEE Trans Visual Comput Graphics 2021;27:4507–19.

- Yu W, Dillon T, Mostafa F, Rahayu W, Liu Y. A Global Manufacturing Big Data Ecosystem for Fault Detection in Predictive Maintenance. IEEE Trans Ind Inf 2020;16:183–92.

- Spinner T, Schlegel U, Schafer H, El-Assady M. explAIner: A Visual Analytics Framework for Interactive and Explainable Machine Learning. IEEE Trans Visual Comput Graphics 2019:1–1.

- Harte NC, Obrist D, Versluis M, Jebbink EG, Caversaccio M, Wimmer W, et al. Second order and transverse flow visualization through three-dimensional particle image velocimetry in millimetric ducts. Experimental Thermal and Fluid Science 2024;159:111296.

- Jiang F, Huo L, Chen D, Cao L, Zhao R, Li Y, et al. The controlling factors and prediction model of pore structure in global shale sediments based on random forest machine learning. Earth-Science Reviews 2023;241:104442.

| VOSviewer-AuKWs | N | GSDMM-AuKWs | N |

|---|---|---|---|

| task_analysis | 89 | task_analysis | 78 |

| datum_model | 48 | datum_model | 39 |

| train | 44 | train | 41 |

| computational_model | 33 | computational_model | 27 |

| three_dimensional_display | 19 | three_dimensional_display | 17 |

| natural_language | 18 | natural_language | 12 |

| render | 18 | render | 10 |

| VOSviewer-AuKWs | N | GSDMM-AuKWs | N |

|---|---|---|---|

| visual_analysis | 205 | visual_analysis | 53 |

| bibliometric_analysis | 78 | bibliometric_analysis | 52 |

| knowledge_graph | 71 | knowledge_graph | 17 |

| bibliometric | 60 | bibliometric | 35 |

| citespace | 52 | citespace | 49 |

| vosviewer | 28 | vosviewer | 26 |

| knowledge_map | 23 | knowledge_map | 17 |

| VOSviewer-AuKWs | N | GSDMM-AuKWs | N |

|---|---|---|---|

| deep_learn | 763 | deep_learn | 500 |

| convolutional_neural_network | 299 | convolutional_neural_network | 224 |

| computer_vision | 170 | computer_vision | 122 |

| transformer | 151 | transformer | 133 |

| natural_language_process | 139 | natural_language_process | 68 |

| visual_question_answer | 136 | visual_question_answer | 130 |

| attention_mechanism | 128 | attention_mechanism | 119 |

| self_supervise_learn | 108 | self_supervise_learn | 103 |

| generative_adversarial_network | 97 | generative_adversarial_network | 86 |

| transfer_learn | 95 | transfer_learn | 87 |

| contrastive_learn | 91 | contrastive_learn | 79 |

| image_caption | 89 | image_caption | 87 |

| deep_neural_network | 85 | deep_neural_network | 51 |

| datum_augmentation | 69 | datum_augmentation | 68 |

| object_detection | 64 | object_detection | 48 |

| semantic_segmentation | 59 | semantic_segmentation | 50 |

| VOSviewer-AuKWs | N | GSDMM-AuKWs | N |

|---|---|---|---|

| datum_visualization | 971 | datum_visualization | 421 |

| visual_analytic | 644 | visual_analytic | 105 |

| datum_mine | 134 | datum_mine | 83 |

| interactive_visualization | 83 | interactive_visualization | 41 |

| VOSviewer-AuKWs | N | GSDMM-AuKWs | N |

|---|---|---|---|

| datum_analysis | 111 | datum_analysis | 60 |

| sentiment_analysis | 67 | sentiment_analysis | 40 |

| dashboard | 50 | dashboard | 43 |

| text_mine | 36 | text_mine | 33 |

| VOSviewer-AuKWs | N | GSDMM-AuKWs | N |

|---|---|---|---|

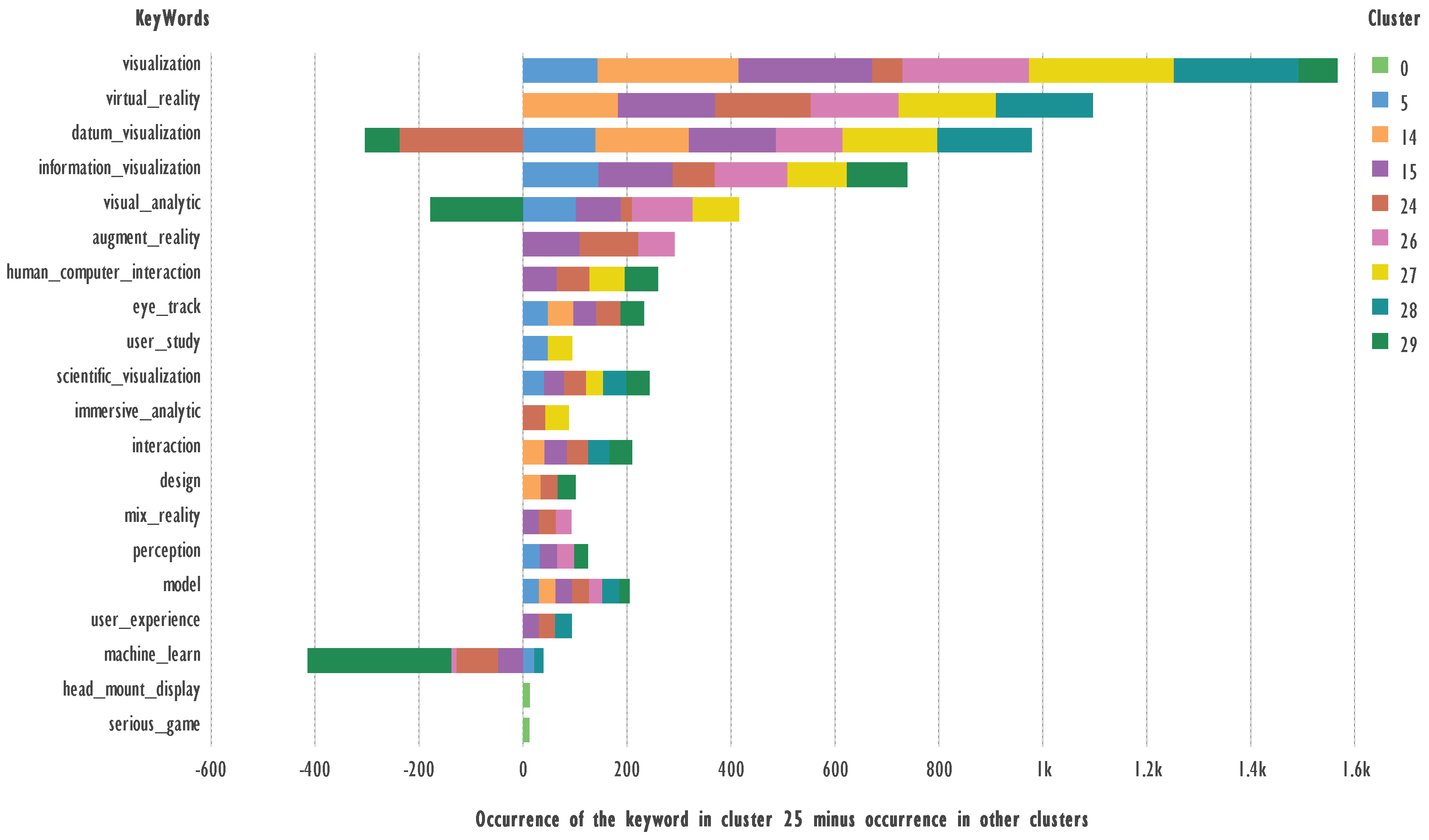

| visualization | 1061 | visualization | 292 |

| information_visualization | 283 | information_visualization | 146 |

| virtual_reality | 220 | virtual_reality | 188 |

| augment_reality | 180 | augment_reality | 118 |

| human_computer_interaction | 95 | human_computer_interaction | 71 |

| eye_track | 67 | eye_track | 50 |

| model | 58 | model | 33 |

| evaluation | 50 | evaluation | 31 |

| immersive_analytic | 50 | immersive_analytic | 46 |

| perception | 45 | perception | 34 |

| mix_reality | 43 | mix_reality | 34 |

| design | 39 | design | 35 |

| user_experience | 38 | user_experience | 33 |

| storytell | 33 | storytell | 31 |

| VOSviewer-AuKWs | N | GSDMM-AuKWs | N |

|---|---|---|---|

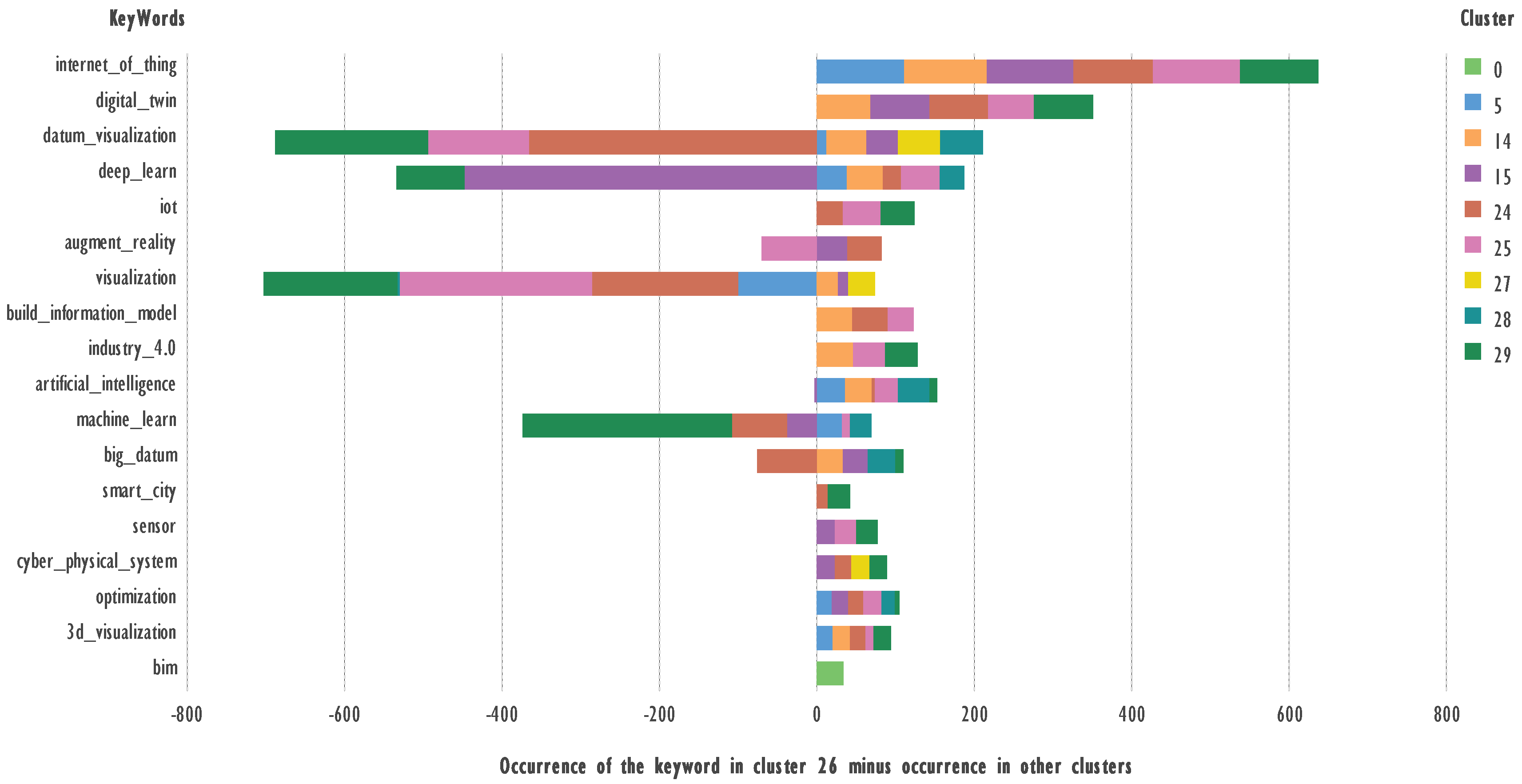

| big_datum | 182 | big_datum | 36 |

| internet_of_thing | 147 | internet_of_thing | 112 |

| digital_twin | 105 | digital_twin | 76 |

| iot | 72 | iot | 49 |

| build_information_model | 65 | build_information_model | 47 |

| industry_4.0 | 60 | industry_4.0 | 47 |

| smart_city | 45 | smart_city | 29 |

| cloud_compute | 39 | cloud_compute | 21 |

| sensor | 35 | sensor | 28 |

| bim | 34 | bim | 34 |

| VOSviewer-AuKWs | N | GSDMM-AuKWs | N |

|---|---|---|---|

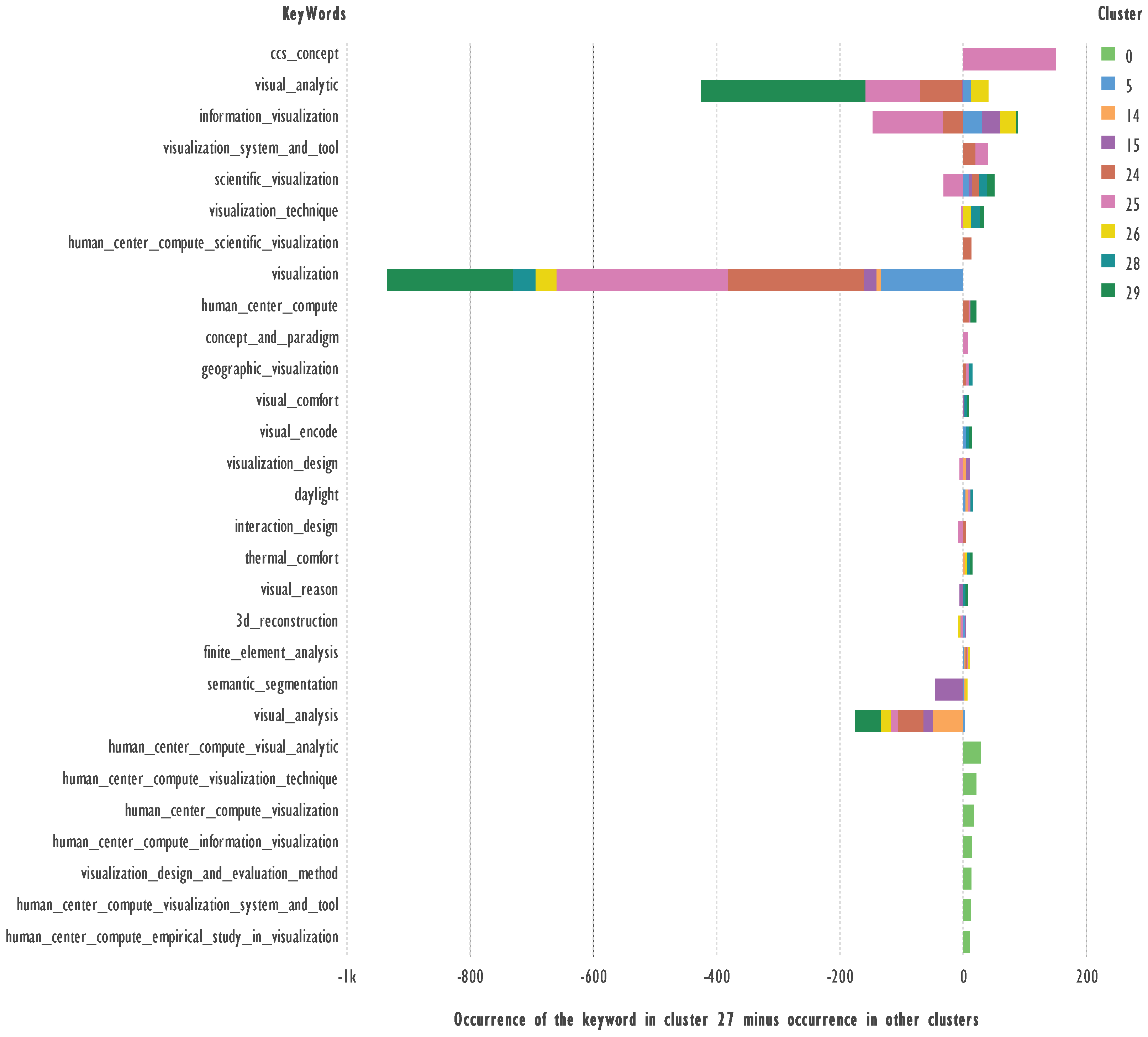

| visual_analytic | 644 | visual_analytic | 37 |

| ccs_concept | 152 | ccs_concept | 151 |

| visualization_technique | 44 | visualization_technique | 15 |

| human_center_compute_visual_analytic | 28 | human_center_compute_visual_analytic | 28 |

| visualization_system_and_tool | 23 | visualization_system_and_tool | 21 |

| VOSviewer-AuKWs | N | GSDMM-AuKWs | N |

|---|---|---|---|

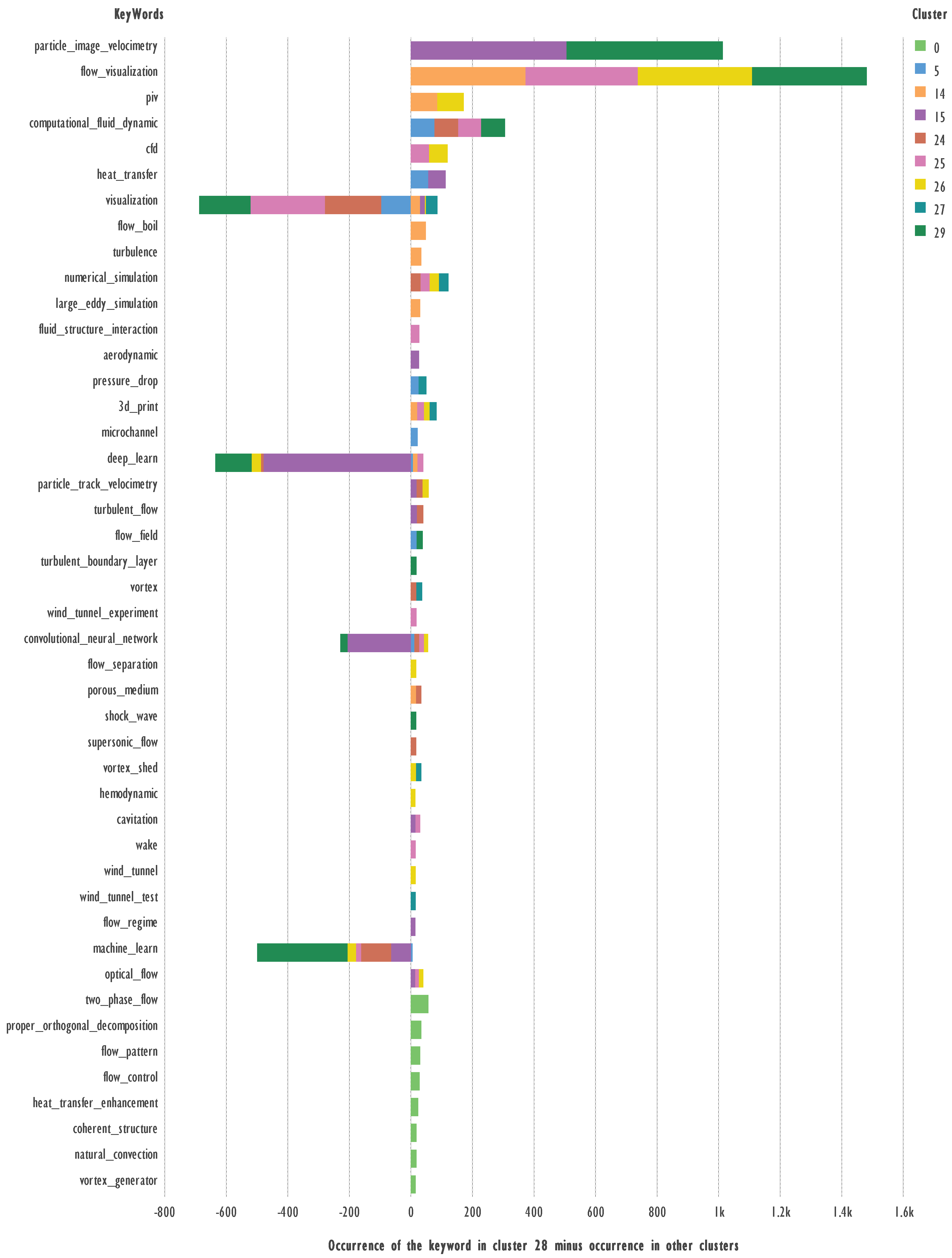

| particle_image_velocimetry | 511 | particle_image_velocimetry | 508 |

| flow_visualization | 389 | flow_visualization | 374 |

| piv | 89 | piv | 87 |

| computational_fluid_dynamic | 84 | computational_fluid_dynamic | 78 |

| cfd | 67 | cfd | 62 |

| heat_transfer | 61 | heat_transfer | 58 |

| two_phase_flow | 57 | two_phase_flow | 57 |

| flow_boil | 50 | flow_boil | 49 |

| numerical_simulation | 43 | numerical_simulation | 33 |

| turbulence | 36 | turbulence | 35 |

| proper_orthogonal_decomposition | 34 | proper_orthogonal_decomposition | 34 |

| 3d_print | 32 | 3d_print | 23 |

| large_eddy_simulation | 32 | large_eddy_simulation | 31 |

| flow_pattern | 30 | flow_pattern | 30 |

| fluid_structure_interaction | 29 | fluid_structure_interaction | 28 |

| aerodynamic | 28 | aerodynamic | 27 |

| flow_control | 28 | flow_control | 28 |

| pressure_drop | 28 | pressure_drop | 26 |

| heat_transfer_enhancement | 24 | heat_transfer_enhancement | 24 |

| VOSviewer-AuKWs | N | GSDMM-AuKWs | N |

|---|---|---|---|

| machine_learn | 599 | machine_learn | 308 |

| neural_network | 132 | neural_network | 54 |

| cluster | 130 | cluster | 112 |

| dimensionality_reduction | 126 | dimensionality_reduction | 122 |

| classification | 118 | classification | 67 |

| feature_selection | 35 | feature_selection | 34 |

| random_forest | 33 | random_forest | 32 |
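One way to read the comparison tables is to compute, for each author keyword, the ratio of the GSDMM column to the VOSviewer column. The sketch below assumes that the VOSviewer N counts occurrences across the whole corpus and the GSDMM N counts occurrences inside a single cluster. That reading is an assumption, suggested by visual_analytic appearing with the same VOSviewer count (644) in two tables. The function name `cluster_share` is hypothetical, and the rows are copied from the machine_learn table above.

```python
# Rows copied from the machine_learn table:
# (keyword, N in the VOSviewer column, N in the GSDMM column).
rows = [
    ("machine_learn", 599, 308),
    ("neural_network", 132, 54),
    ("cluster", 130, 112),
    ("dimensionality_reduction", 126, 122),
    ("classification", 118, 67),
    ("feature_selection", 35, 34),
    ("random_forest", 33, 32),
]


def cluster_share(vos_n: int, gsdmm_n: int) -> float:
    """Ratio of the GSDMM count to the VOSviewer count for one keyword.

    Assumption: vos_n is the corpus-wide count and gsdmm_n is the count
    within one cluster, so the ratio is the share captured by that cluster.
    """
    if vos_n <= 0:
        raise ValueError("VOSviewer count must be positive")
    return gsdmm_n / vos_n


shares = {keyword: cluster_share(vos_n, gsdmm_n) for keyword, vos_n, gsdmm_n in rows}

# Print keywords from most to least concentrated in the cluster.
for keyword, share in sorted(shares.items(), key=lambda item: item[1], reverse=True):
    print(f"{keyword:26s} {share:6.1%}")
```

Under that assumption, a ratio near 1 marks a keyword that sits almost entirely in one cluster, while a low ratio marks a general term spread across several clusters.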

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).