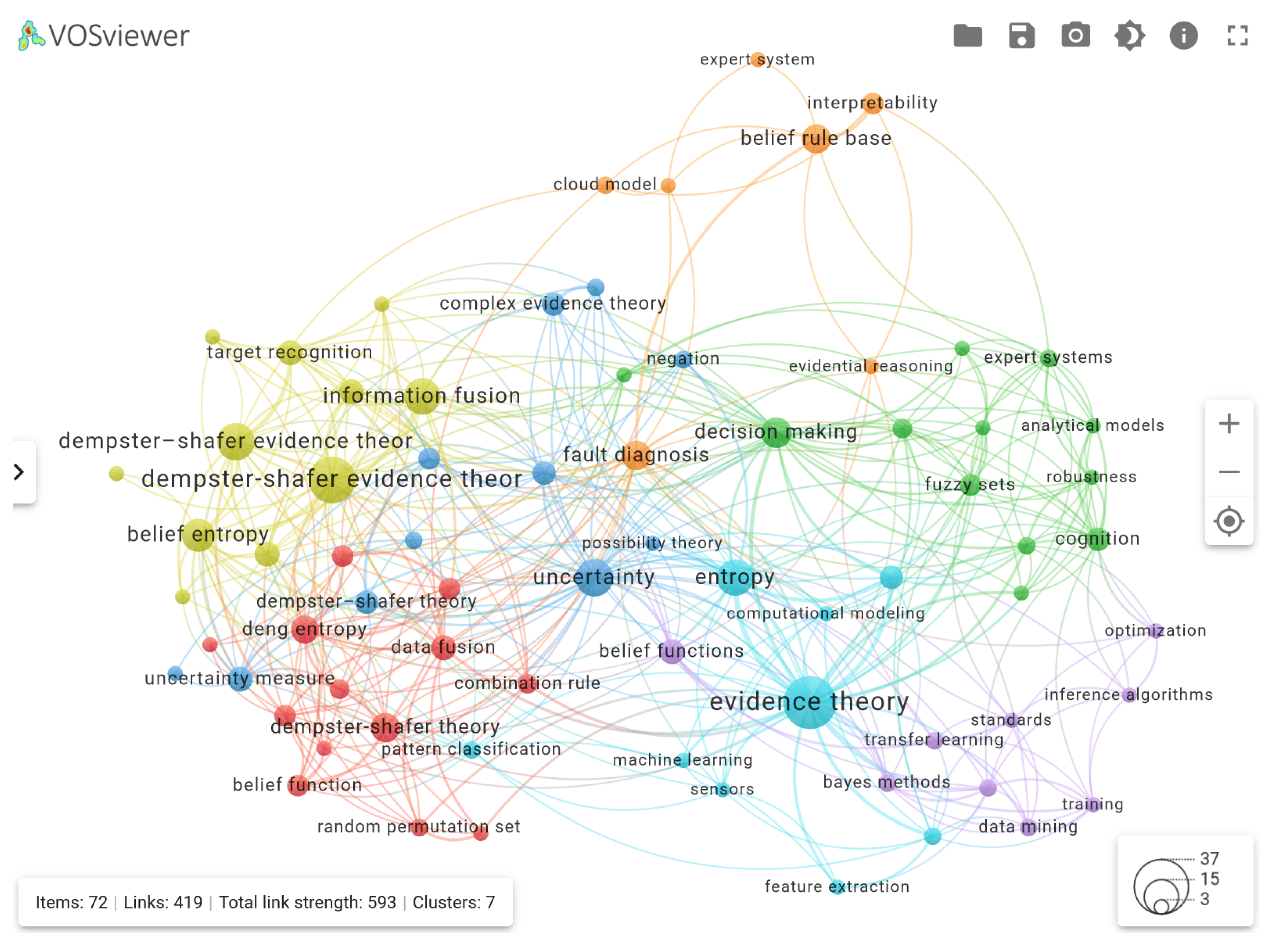

3.2. Scientific Landscape Visualization with VOSviewer

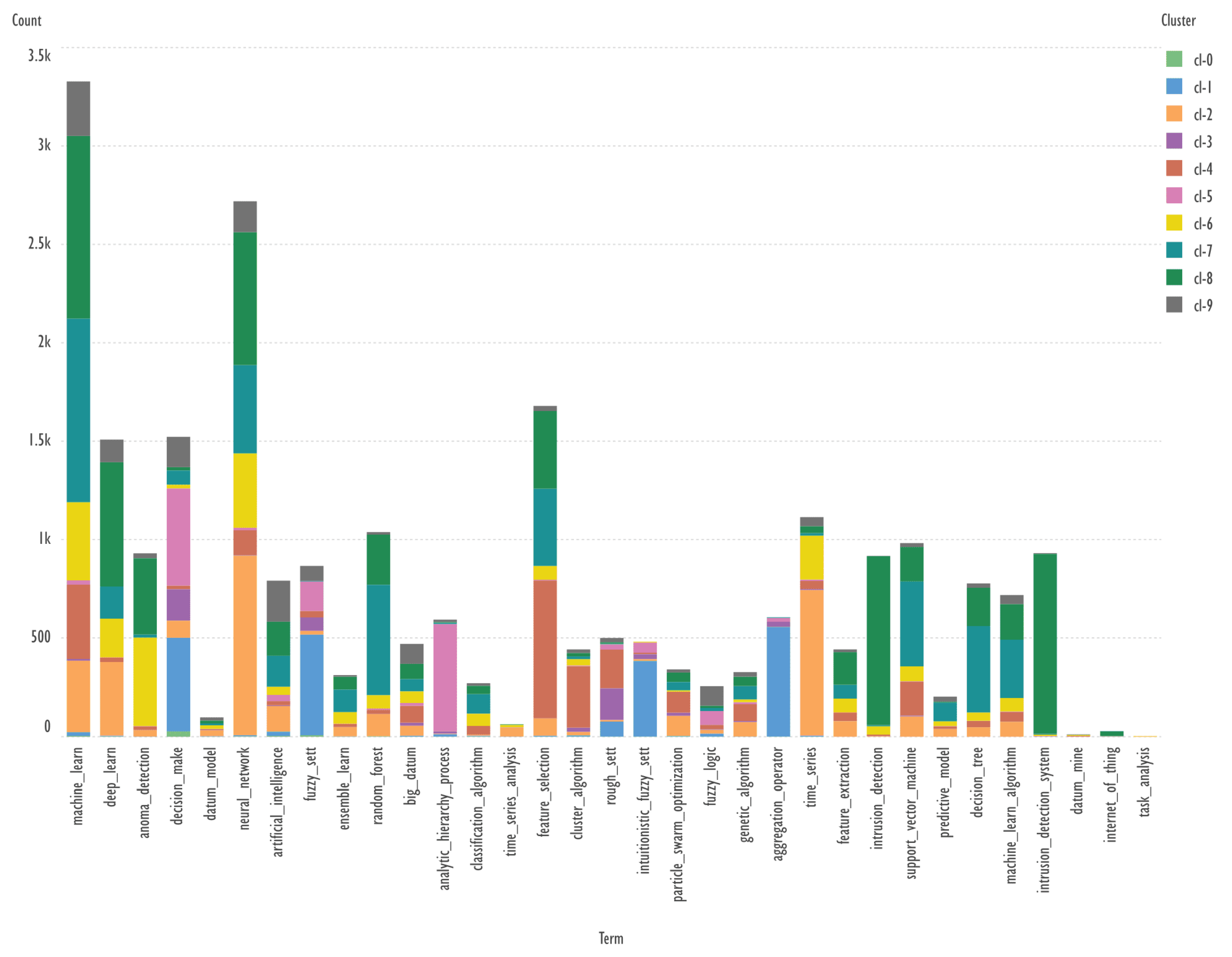

Visualizing scientific landscapes is highly informative in bibliometric analysis. The most widely used free program for this purpose is VOSviewer, which can, for example, build maps of keyword clusters based on keyword co-occurrence. Such maps were plotted in VOSviewer for each of the 10 clusters of bibliometric publication records obtained with the GSDMM algorithm.

Beforehand, the keywords in the bibliometric records were converted to lower case and the text was “cleaned”: for example, abbreviations in parentheses, markup tags and non-Latin terms were removed.
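A minimal sketch of this cleaning step is shown below. It assumes that the keywords of a record arrive as one semicolon-separated string (a common export layout, but an assumption here) and treats "non-Latin" as "containing characters outside basic ASCII letters, digits and common punctuation", so terms with diacritics would also be dropped. The patterns are illustrative and are not the rules actually used in this work.

```python
import re

# Illustrative cleaning rules (hypothetical); the exact patterns used in this work are not given.
MARKUP = re.compile(r"<[^>]+>")                      # tags such as <i>, </sub>
PAREN_ABBR = re.compile(r"\s*\([^()]*\)")            # parenthesized text, assumed to be abbreviations
LATIN_ONLY = re.compile(r"^[a-z0-9\s\-.,'/&+]+$")    # ASCII letters, digits, common punctuation


def clean_keywords(raw: str, sep: str = ";") -> list[str]:
    """Lower-case a keyword string, strip markup and parenthesized abbreviations,
    and drop terms that contain non-Latin (here: non-ASCII) characters."""
    text = MARKUP.sub("", raw.lower())
    text = PAREN_ABBR.sub("", text)
    terms = []
    for term in text.split(sep):
        term = " ".join(term.split())  # collapse internal whitespace
        if term and LATIN_ONLY.match(term):
            terms.append(term)
    return terms


print(clean_keywords("Support Vector Machine (SVM); <i>Fault</i> Diagnosis; Нейронные сети"))
# → ['support vector machine', 'fault diagnosis']
```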

In publications that use VOSviewer, the author has rarely encountered lemmatization of keywords, so it was decided not to deviate far from common practice. VOSviewer itself does, however, support a term replacement (thesaurus) file that can serve as a dictionary-based lemmatizer. The main purpose of this article is to demonstrate how visualizations of term co-occurrence can be used to compose queries for finding publications on a topic of interest, not to demonstrate how the texts of bibliometric records can be prepared.
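VOSviewer reads such replacements from a tab-separated thesaurus file whose header row contains the columns `label` and `replace by`. The sketch below builds one with a deliberately crude, hypothetical heuristic: a term ending in "s" is mapped to its singular form only when that singular form also occurs in the vocabulary. A real dictionary lemmatizer would need a proper word list; the function names are illustrative.

```python
import csv
import io


def build_thesaurus(vocabulary: set[str]) -> list[tuple[str, str]]:
    """Map a term to its singular form when that form is also in the vocabulary.

    Hypothetical heuristic for illustration: handles regular plurals such as
    'neural networks' -> 'neural network' and nothing more.
    """
    pairs = []
    for term in sorted(vocabulary):
        if term.endswith("s") and term[:-1] in vocabulary:
            pairs.append((term, term[:-1]))
    return pairs


def thesaurus_tsv(pairs: list[tuple[str, str]]) -> str:
    """Render pairs as tab-separated text with the 'label' / 'replace by' header row."""
    buf = io.StringIO()
    writer = csv.writer(buf, delimiter="\t", lineterminator="\n")
    writer.writerow(["label", "replace by"])
    writer.writerows(pairs)
    return buf.getvalue()


vocab = {"neural network", "neural networks", "fault diagnosis", "anomaly detection"}
print(thesaurus_tsv(build_thesaurus(vocab)), end="")
```

Note that 'fault diagnosis' is left alone because 'fault diagnosi' is not in the vocabulary; checking the vocabulary is what keeps such a naive rule from damaging terms.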

Records without keywords were removed from the tables for each of the 10 publication clusters.
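The link strengths that VOSviewer visualizes come from counting how often two keywords appear in the same record. A minimal sketch of that counting is given below, assuming each record is a list of already cleaned keywords and using full counting (every co-occurrence in a record adds 1). Records without keywords are skipped, mirroring the filtering described above; the toy records are invented.

```python
from collections import Counter
from itertools import combinations


def cooccurrence(records: list[list[str]]) -> tuple[Counter, Counter]:
    """Count keyword occurrences and unordered keyword-pair co-occurrences.

    Each keyword counts once per record; records without keywords are skipped.
    Pairs are stored as alphabetically sorted tuples so (a, b) and (b, a) coincide.
    """
    occurrences, pairs = Counter(), Counter()
    for keywords in records:
        unique = sorted(set(keywords))
        if not unique:
            continue  # record without keywords
        occurrences.update(unique)
        pairs.update(combinations(unique, 2))
    return occurrences, pairs


records = [
    ["fault diagnosis", "evidence theory"],
    ["fault diagnosis", "belief rule base", "evidence theory"],
    [],
]
occ, pairs = cooccurrence(records)
print(occ["fault diagnosis"], pairs[("evidence theory", "fault diagnosis")])  # → 2 2
```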

As an example, Figure 4 shows the keyword co-occurrence clustering map for cluster 0. A static map of this kind is not interactive, so it is more practical to explore the maps with the online service https://app.vosviewer.com/, which can import files saved by VOSviewer in JSON format. Alternatively, VOSviewer can be installed locally and used to open the files (KWs_cl-0-JSON.json... KWs_cl-9-JSON.json) included in the archive attached to this article.

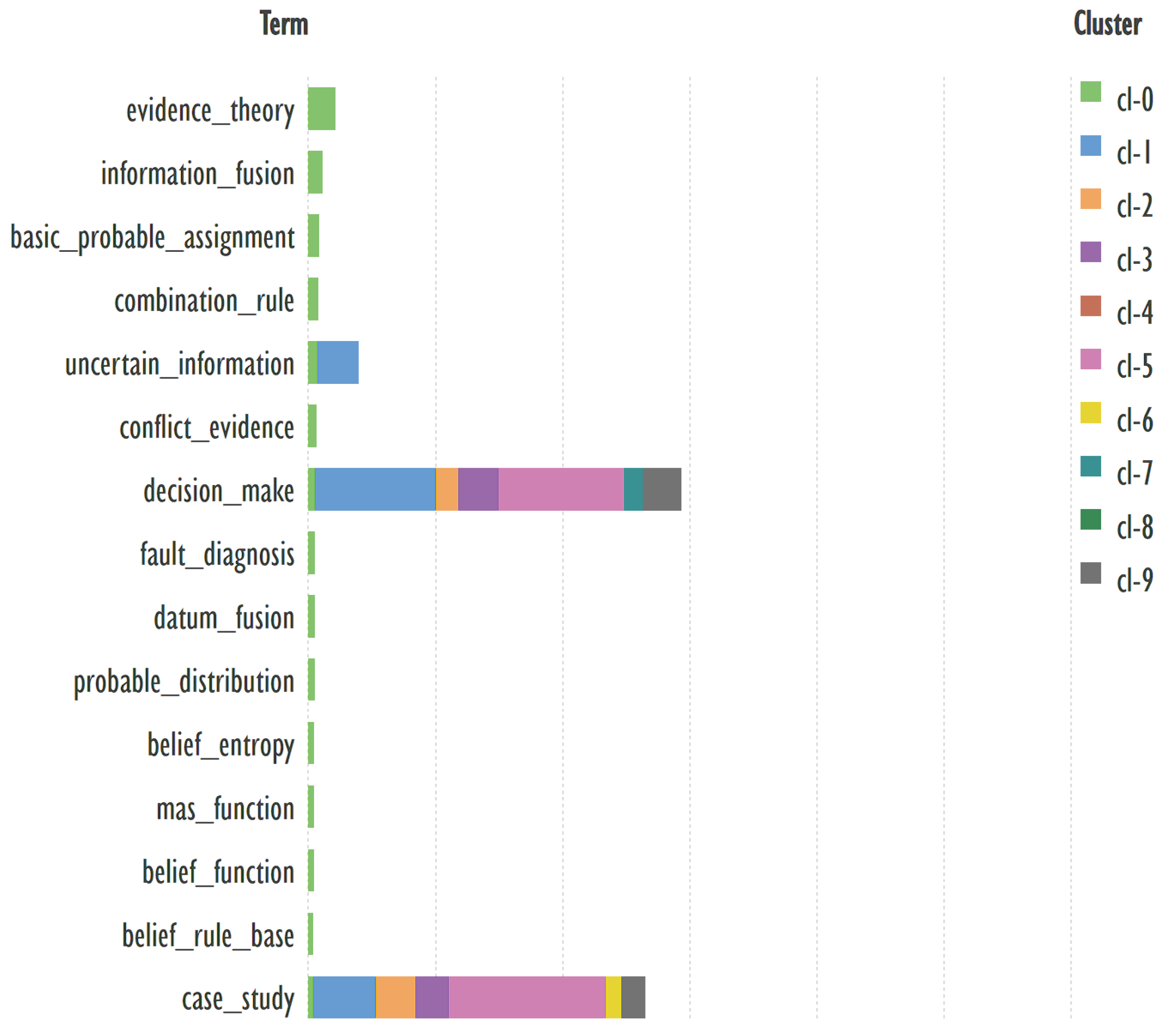

Let us consider how the results presented in Figure 4 can be used to find a relevant publication and obtain a brief description of it. The same approach is applied to the other graphs below.

An example of keywords from other clusters related to the term ‘fault diagnosis’ → belief rule base, evidence theory.
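Such cross-cluster neighbors can be read off the JSON files mentioned above. The sketch assumes the VOSviewer Online JSON layout: a `network` object whose `items` carry `id`, `label` and `cluster`, and whose `links` carry `source_id`, `target_id` and `strength`. These keys are worth checking against an exported file. The toy map and its link strengths are invented for illustration.

```python
import json


def cross_cluster_neighbors(map_json: str, term: str, top: int = 2) -> list[str]:
    """Return labels of the most strongly linked neighbors of `term` that lie in other clusters."""
    network = json.loads(map_json)["network"]
    items = {item["id"]: item for item in network["items"]}
    focus = next(item for item in items.values() if item["label"] == term)
    candidates = []
    for link in network.get("links", []):
        ends = (link["source_id"], link["target_id"])
        if focus["id"] not in ends:
            continue
        other = items[ends[1] if ends[0] == focus["id"] else ends[0]]
        if other["cluster"] != focus["cluster"]:
            candidates.append((link.get("strength", 1), other["label"]))
    # Strongest links first; ties broken alphabetically.
    candidates.sort(key=lambda pair: (-pair[0], pair[1]))
    return [label for _, label in candidates[:top]]


toy_map = json.dumps({"network": {
    "items": [
        {"id": 1, "label": "fault diagnosis", "cluster": 1},
        {"id": 2, "label": "belief rule base", "cluster": 2},
        {"id": 3, "label": "evidence theory", "cluster": 3},
        {"id": 4, "label": "feature extraction", "cluster": 1},
    ],
    "links": [
        {"source_id": 1, "target_id": 2, "strength": 5},
        {"source_id": 3, "target_id": 1, "strength": 4},
        {"source_id": 1, "target_id": 4, "strength": 9},
    ],
}})
print(cross_cluster_neighbors(toy_map, "fault diagnosis"))  # → ['belief rule base', 'evidence theory']
```

The link to 'feature extraction' is the strongest one but is ignored because that term sits in the same cluster.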

Query header generated by elicit.com: “Fault Diagnosis Using Belief Rule Base and Evidence Theory”.
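For databases that accept Boolean search rather than a natural-language question, the same three terms can be combined into a query string. The helper below is hypothetical and assumes a generic syntax in which quoted phrases are matched exactly and terms are joined with `AND`; field codes and operator spelling differ between databases.

```python
def boolean_query(terms: list[str]) -> str:
    """Join terms with AND, quoting multi-word terms so they are matched as phrases."""
    parts = [f'"{term}"' if " " in term else term for term in terms]
    return " AND ".join(parts)


print(boolean_query(["fault diagnosis", "belief rule base", "evidence theory"]))
# → "fault diagnosis" AND "belief rule base" AND "evidence theory"
```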

A highly-cited publication: “Agent oriented intelligent fault diagnosis system using evidence theory” [19]. The abstract contains 218 words.

Summary by Elicit: “The paper presents an agent-oriented intelligent fault diagnosis system that uses evidence theory for multi-sensor information fusion to handle uncertainty, inaccuracy, and conflicts in sensor data, and proposes a new combination rule and decision rules for fault diagnosis”. The text is 38 words long.

Summary by QuillBot: “Multisensor fusion is crucial for fault diagnosis systems, as no single sensor can provide all the necessary information. Evidence theory, an extension of Bayesian reasoning, can be used for information fusion. This paper discusses the classical Dempster-Shafer evidence theory, its disadvantages, and proposes a new combination rule to allocate conflicted information based on the support degree of the focal element. Decision rules and an agent-oriented intelligent fault diagnosis system architecture are also proposed.” The text is 73 words long.

Depending on the objectives of the bibliometric study, a concise summary may be sufficient, or a more detailed one may be needed to understand the publication.

3.3. Keywords Clustering Visualization with Scimago Graphica

The free program Scimago Graphica is not yet as widely used as VOSviewer for visualizing the results of bibliometric analysis, but because it is designed to build a wide range of chart types, it offers broad possibilities for visualization. Examples are available at https://www.graphica.app/catalogue.

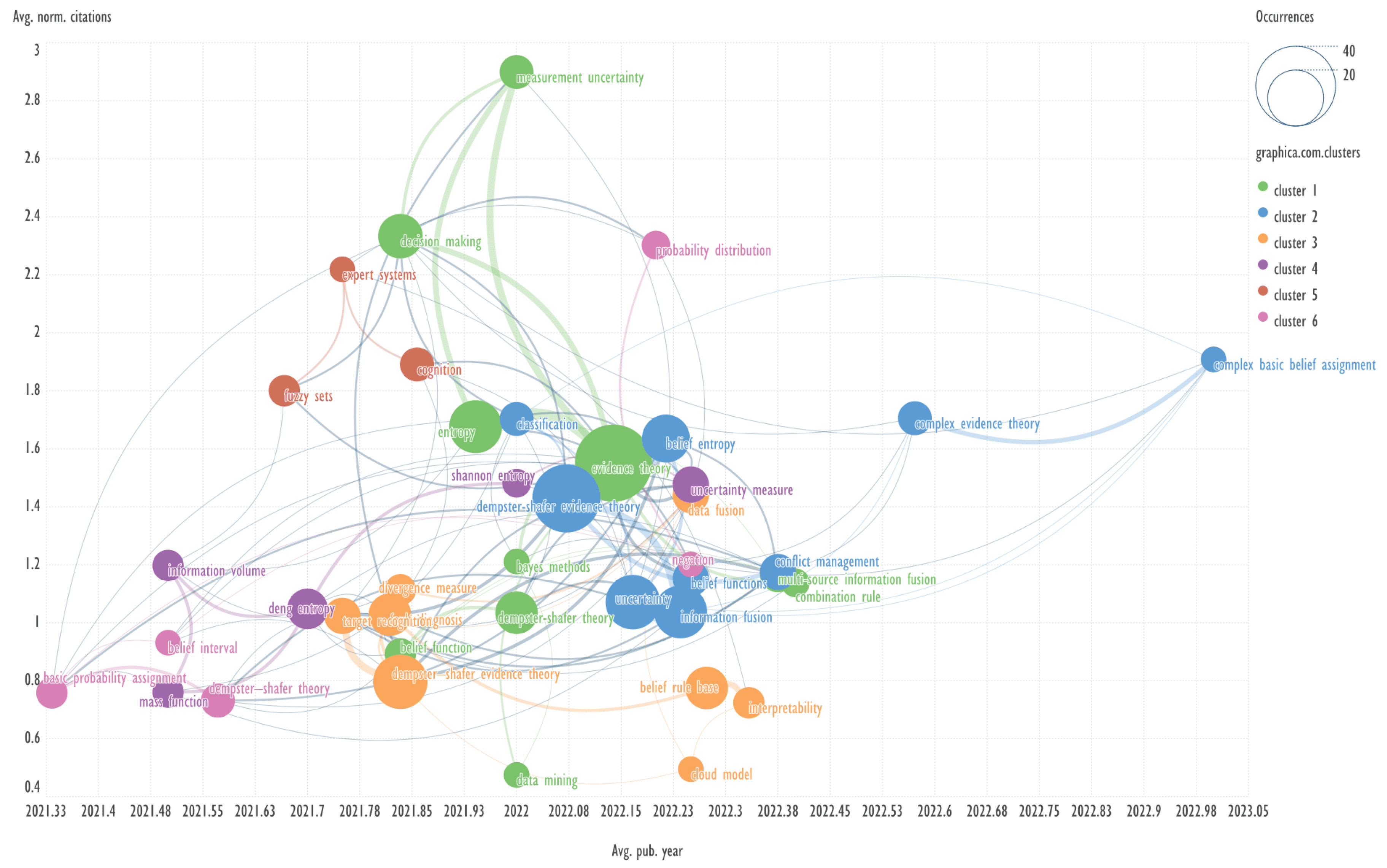

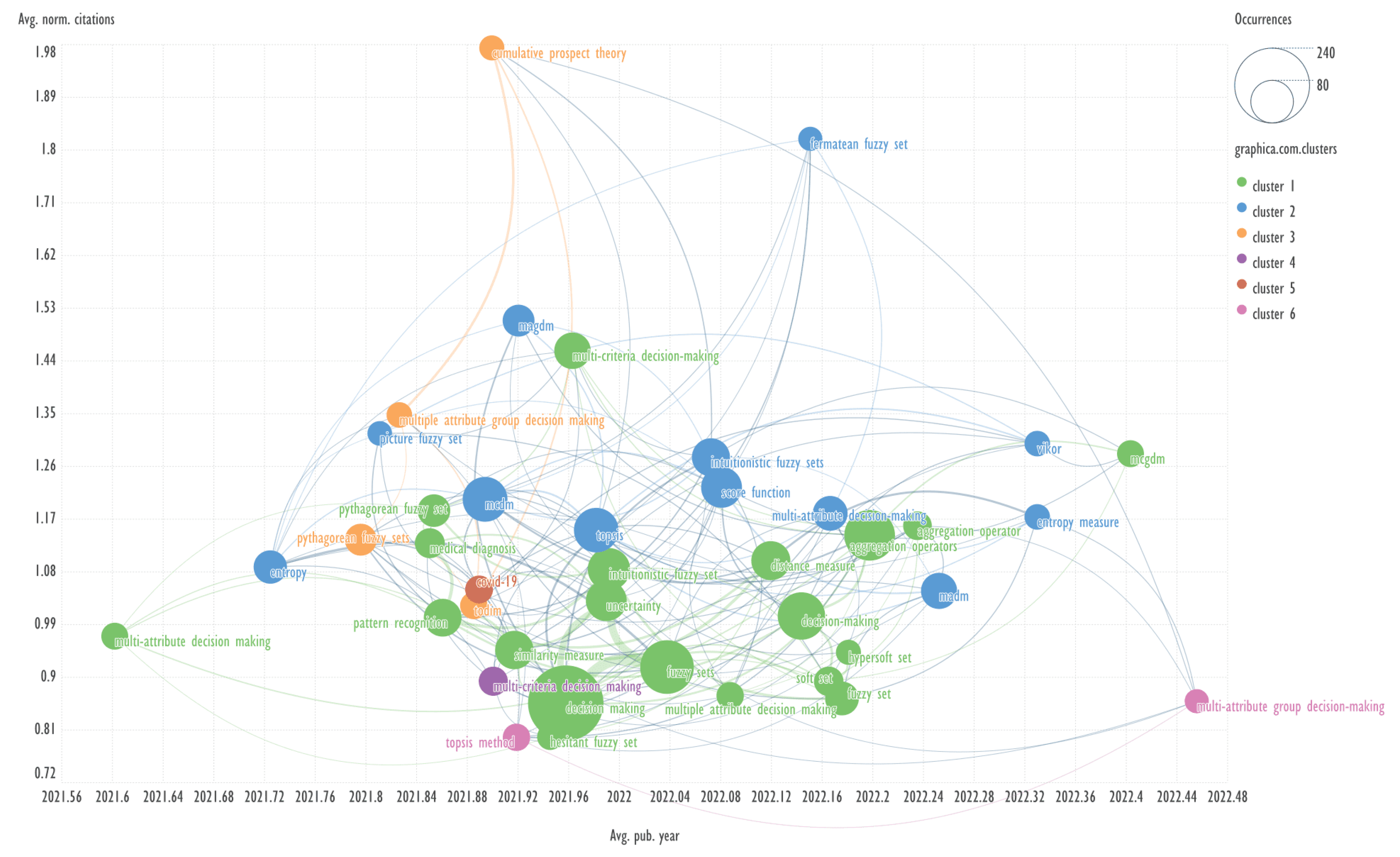

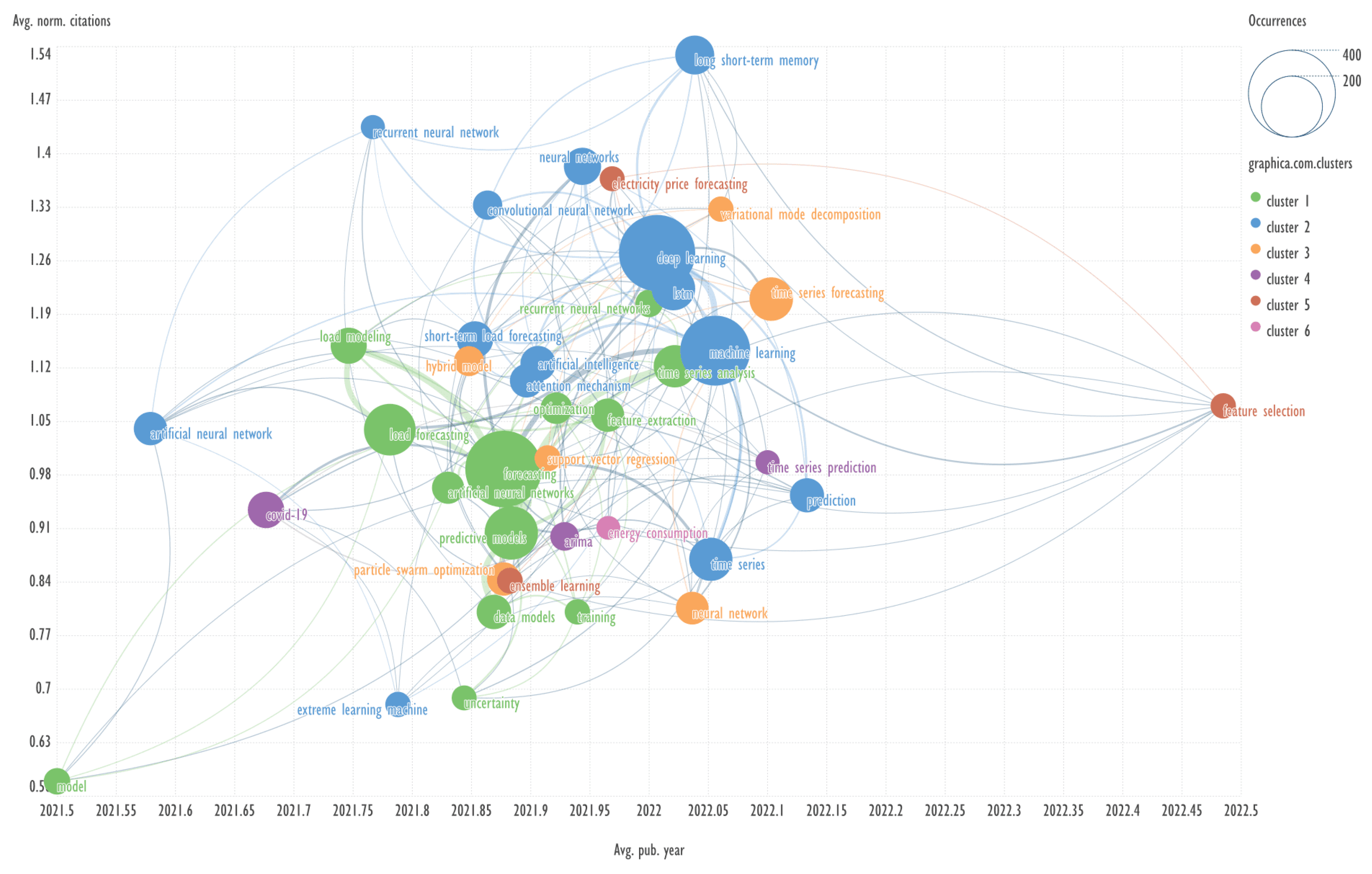

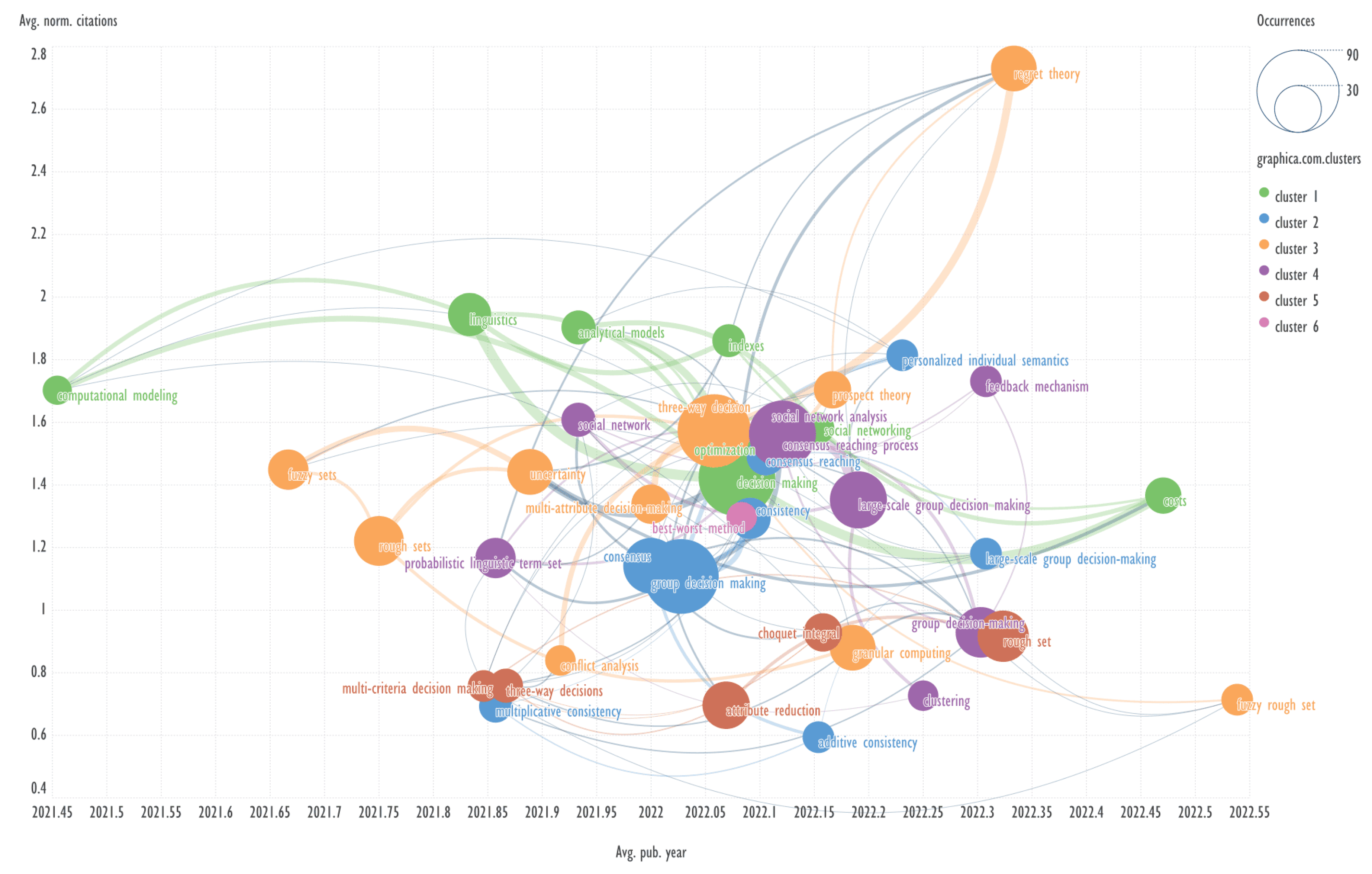

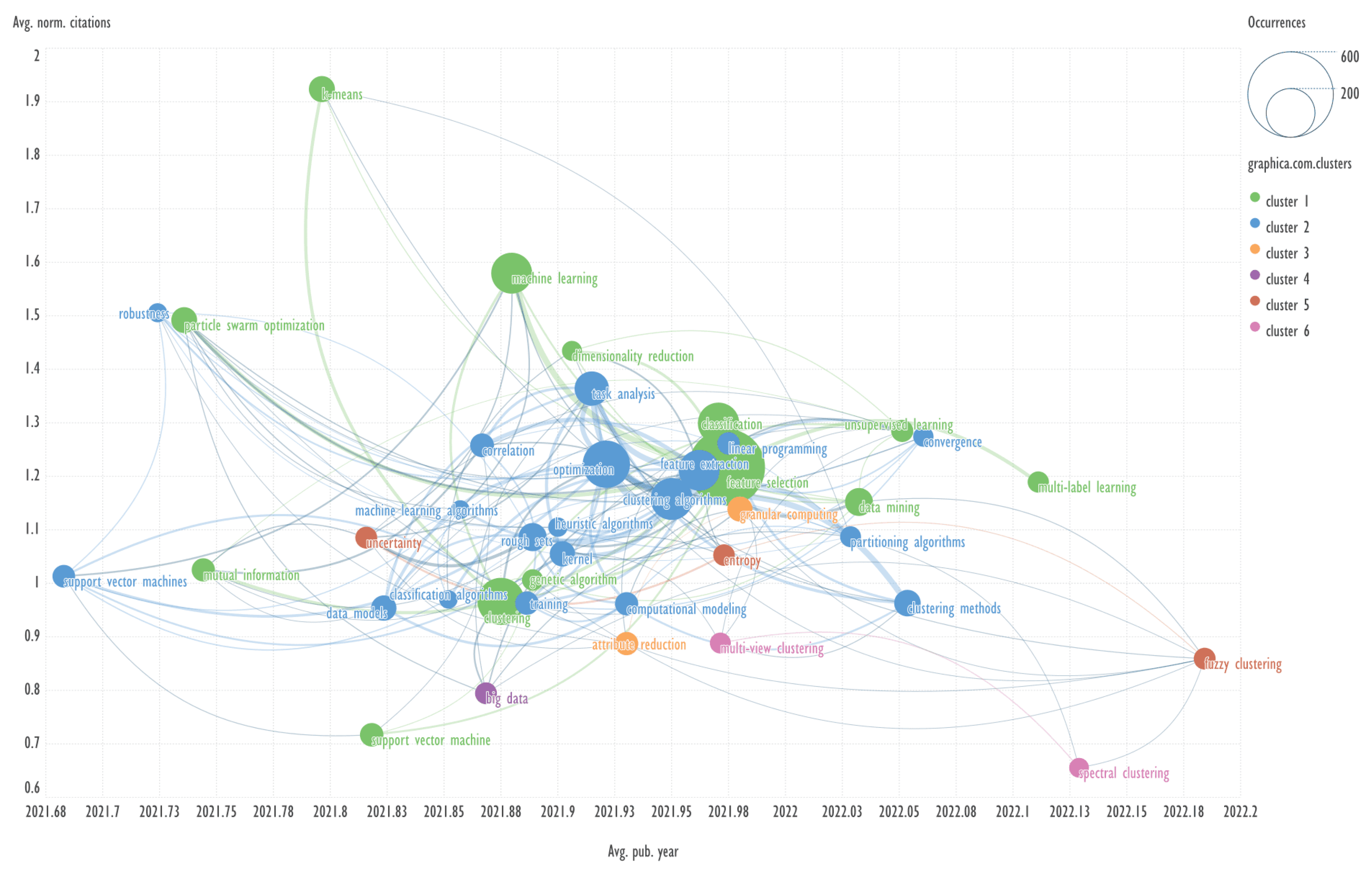

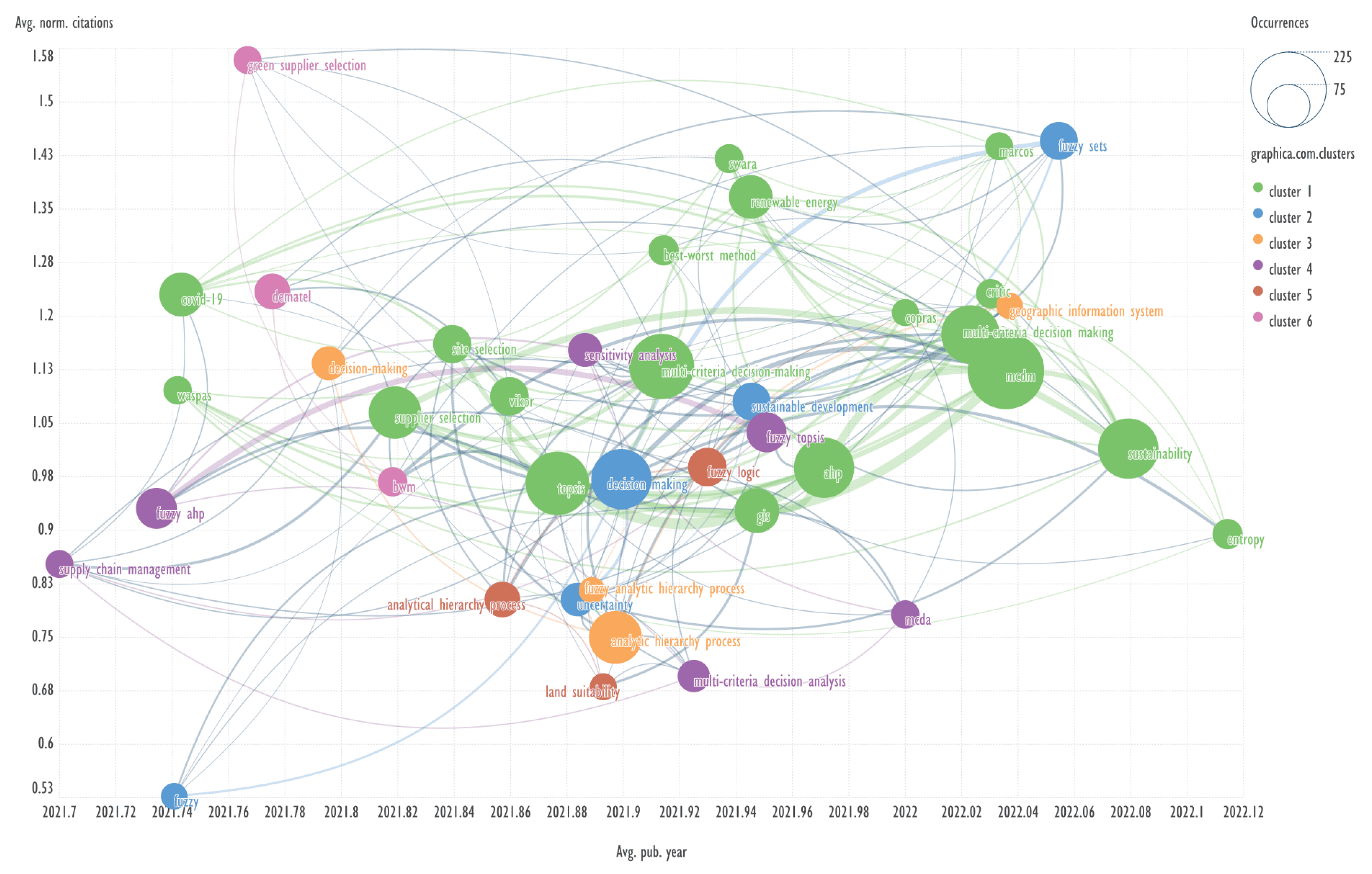

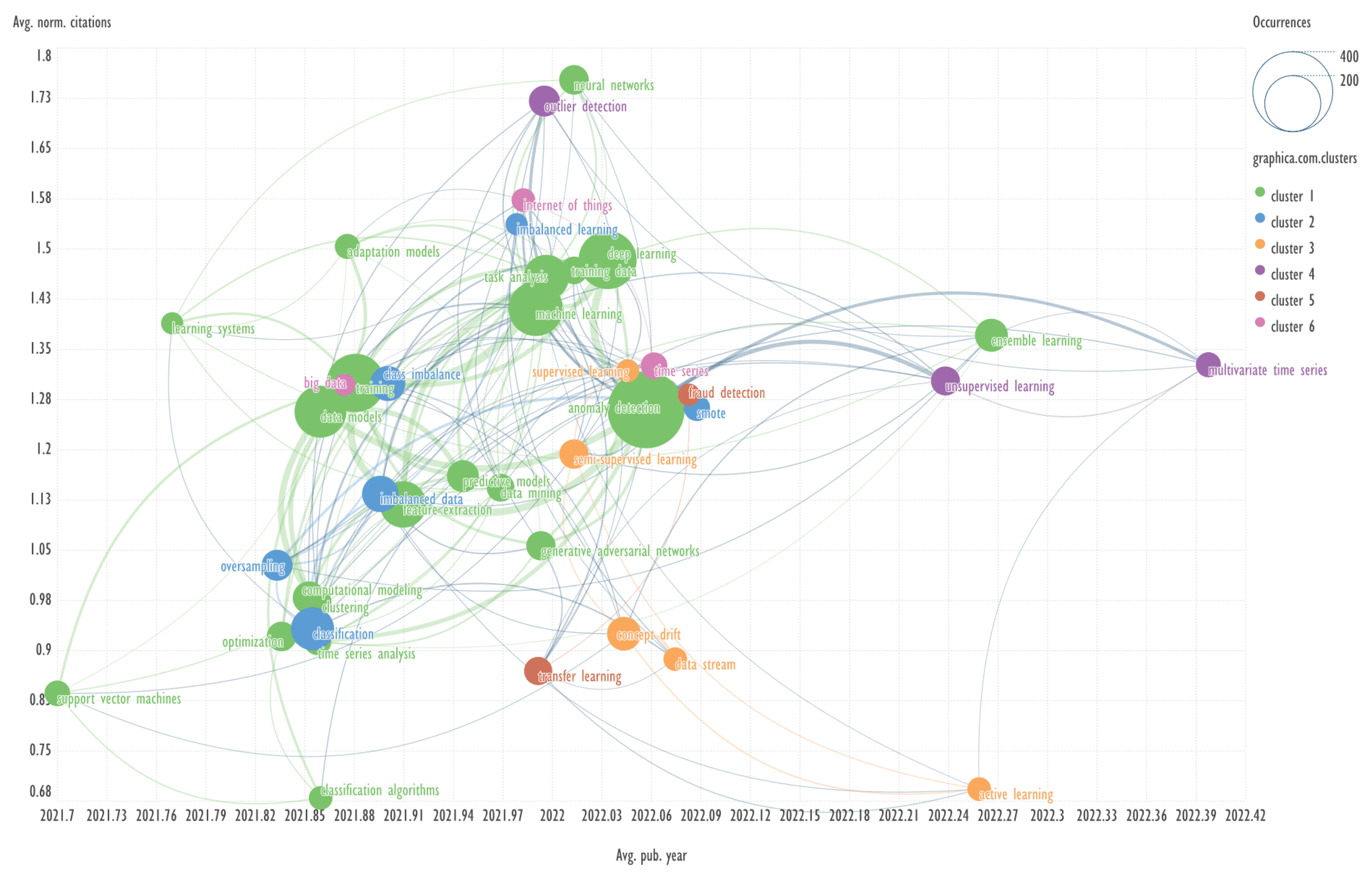

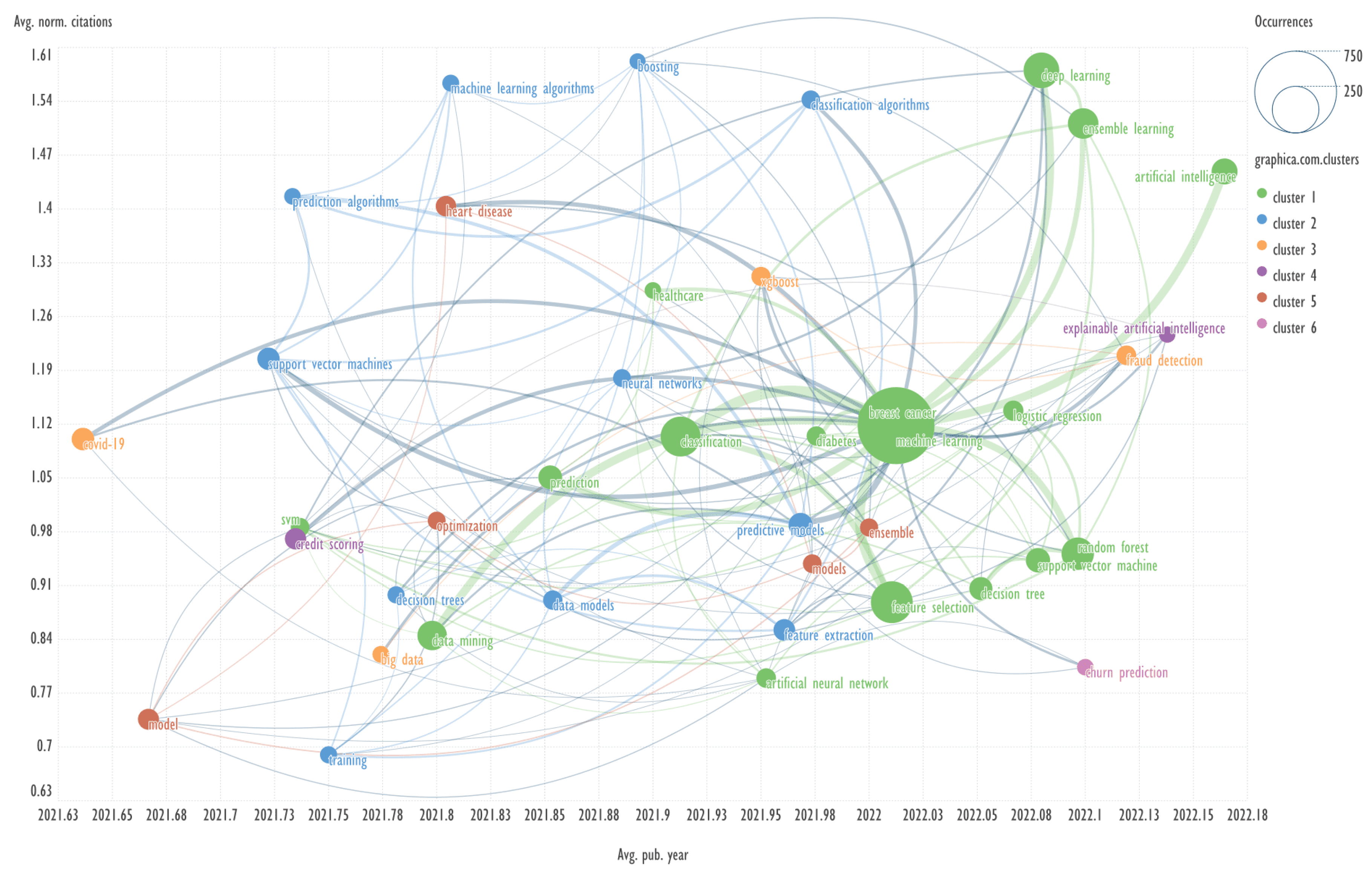

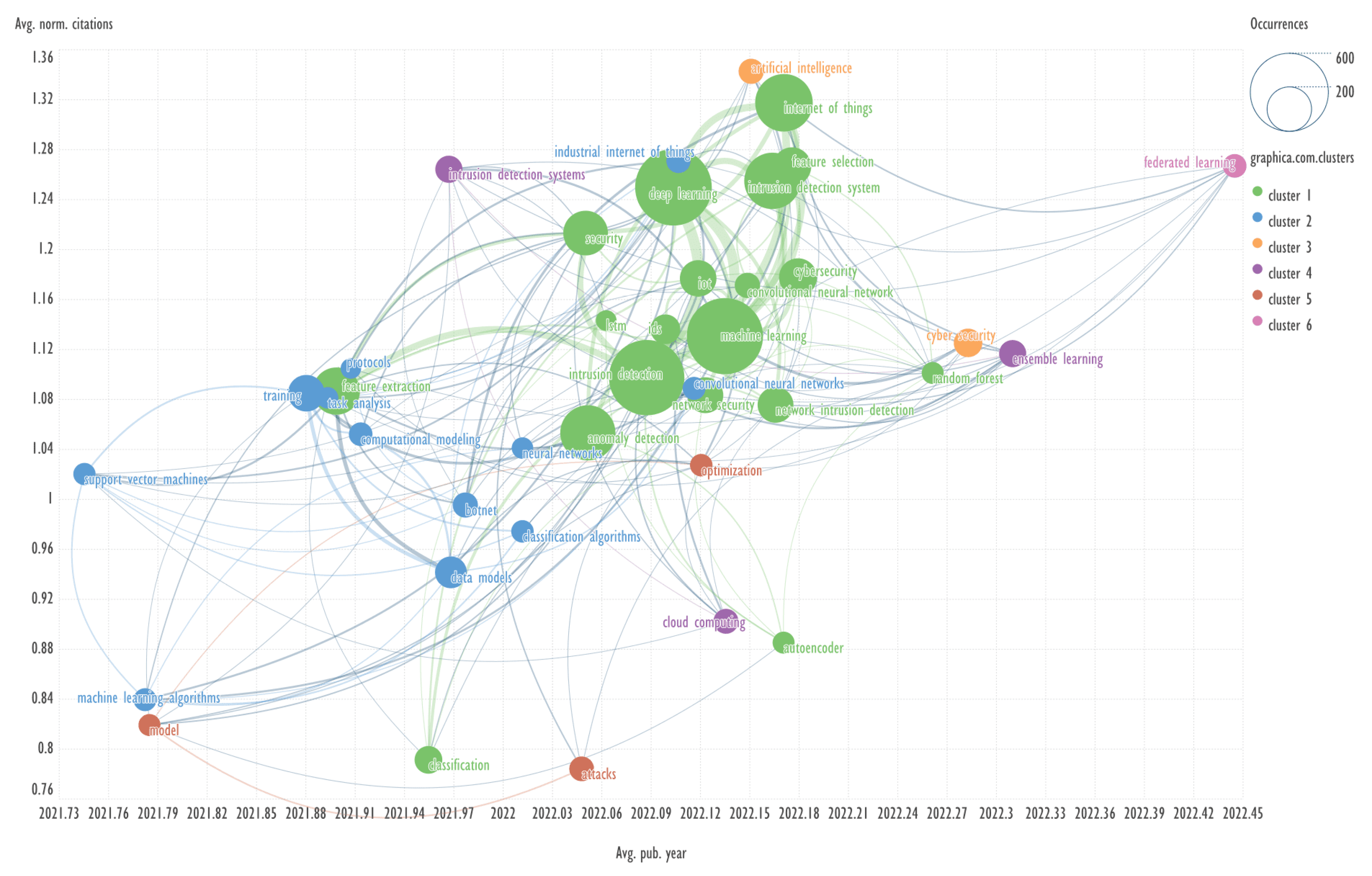

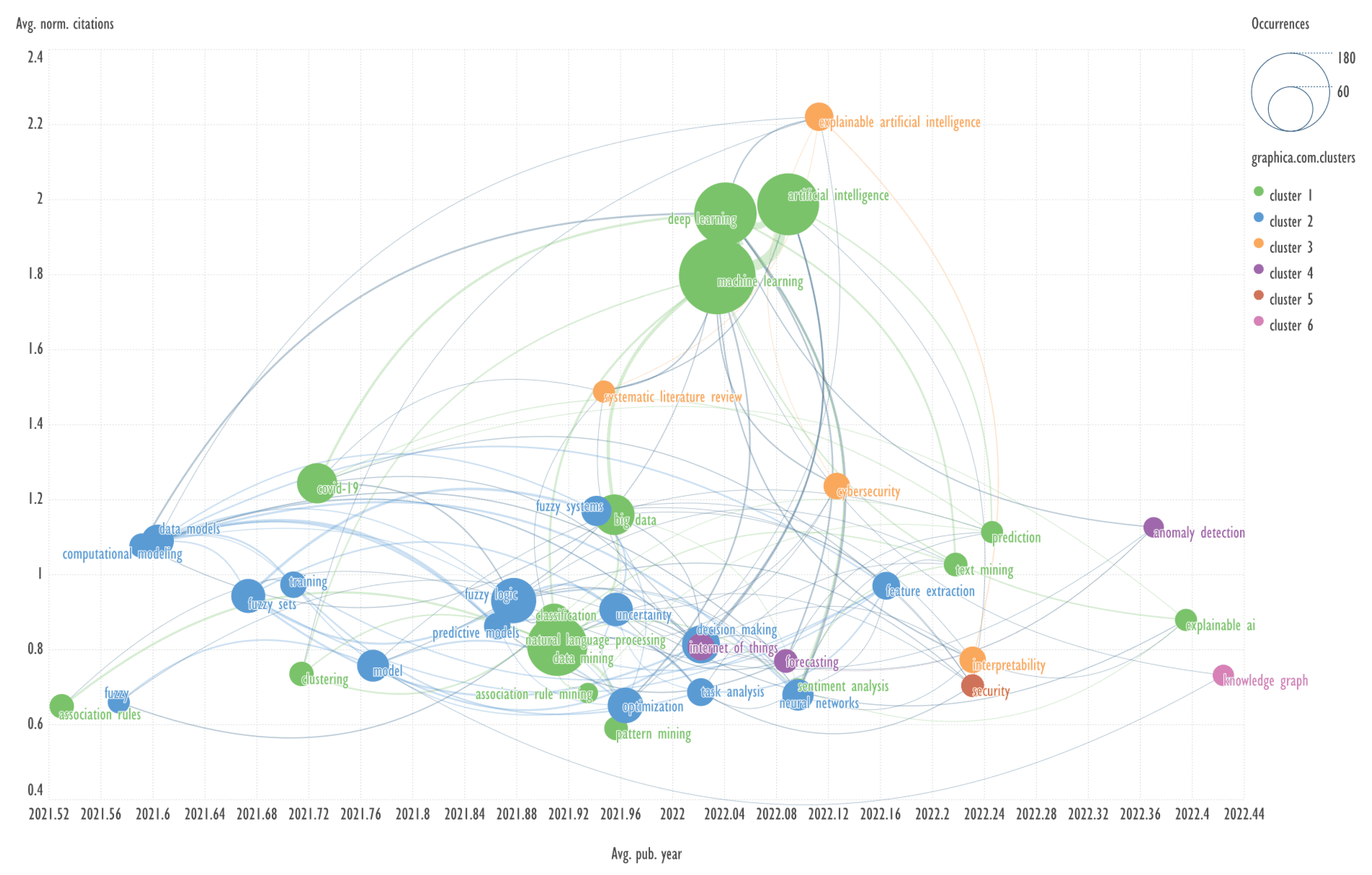

This section presents diagrams of keyword clustering based on co-occurrence, plotted in the coordinates of average publication year (Avg. pub. year) versus average normalized citations (Avg. norm. citations). A chart was plotted for each of the ten clusters of bibliometric records obtained with the GSDMM algorithm.

Here, Avg. pub. year is the average publication year of the records containing a given keyword, and Avg. norm. citations is, analogously, the average normalized citation count of those records. Both concepts are taken from VOSviewer.
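To make the two coordinates concrete, the sketch below computes them for a toy set of records. It assumes VOSviewer's definition of normalized citations (a document's citations divided by the mean citations of all documents from the same publication year in the data set) and sets the value to 0 when a year's mean is 0, which is an assumption of this sketch. Records are hypothetical `(year, citations, keywords)` tuples.

```python
from collections import defaultdict
from statistics import fmean


def keyword_scores(records):
    """records: iterable of (year, citations, keywords).
    Returns {keyword: (avg_pub_year, avg_norm_citations)}."""
    records = list(records)
    cites_by_year = defaultdict(list)
    for year, cites, _ in records:
        cites_by_year[year].append(cites)
    year_mean = {year: fmean(c) for year, c in cites_by_year.items()}

    years, norm = defaultdict(list), defaultdict(list)
    for year, cites, keywords in records:
        # Normalized citations: citations relative to the same-year mean (0 if that mean is 0).
        nc = cites / year_mean[year] if year_mean[year] else 0.0
        for kw in set(keywords):
            years[kw].append(year)
            norm[kw].append(nc)
    return {kw: (fmean(years[kw]), fmean(norm[kw])) for kw in years}


toy = [
    (2020, 10, ["lstm", "pso"]),
    (2020, 30, ["lstm"]),
    (2022, 4, ["pso"]),
    (2022, 0, ["lstm"]),
]
scores = keyword_scores(toy)
print(scores["pso"])  # → (2021.0, 1.25)
```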

Scimago Graphica employs clustering based on the Clauset, Newman and Moore algorithm [20]. In this work, the number of clusters was set to six.
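The idea behind the Clauset-Newman-Moore (CNM) method can be sketched as greedy agglomeration: start with every keyword in its own cluster and repeatedly merge the pair of linked clusters whose merger increases modularity the most. The gain is dQ = w_ij/m - 2·a_i·a_j, where w_ij is the link weight between clusters i and j, m is the total link weight, and a_i is the share of link endpoints in cluster i. This naive version is not the efficient heap-based original. It also assumes that a fixed cluster count is enforced by continuing to merge until that count is reached, even when the gain turns negative, whereas the original algorithm stops at maximal modularity; how Scimago Graphica applies the count internally is not known to this sketch.

```python
from math import prod


def greedy_modularity(edges: dict[tuple[str, str], float], n_clusters: int) -> list[set[str]]:
    """Naive greedy modularity agglomeration in the spirit of Clauset-Newman-Moore.

    Merges the linked pair of clusters with the largest gain
    dQ = w_ij / m - 2 * a_i * a_j until n_clusters remain or no linked pair is left.
    """
    m = sum(edges.values())  # total link weight
    strength: dict[str, float] = {}
    for (u, v), w in edges.items():
        strength[u] = strength.get(u, 0.0) + w
        strength[v] = strength.get(v, 0.0) + w
    clusters = {node: {node} for node in strength}            # cluster id -> member keywords
    a = {node: s / (2 * m) for node, s in strength.items()}    # share of link endpoints
    between: dict[frozenset, float] = {}                       # link weight between two clusters
    for (u, v), w in edges.items():
        if u != v:
            key = frozenset((u, v))
            between[key] = between.get(key, 0.0) + w

    while len(clusters) > n_clusters and between:
        best = max(between, key=lambda k: between[k] / m - 2 * prod(a[x] for x in k))
        keep, gone = sorted(best)
        clusters[keep] |= clusters.pop(gone)
        a[keep] += a.pop(gone)
        merged: dict[frozenset, float] = {}
        for key, w in between.items():
            if key == best:
                continue  # this link is now internal to the merged cluster
            key = frozenset(keep if x == gone else x for x in key)
            merged[key] = merged.get(key, 0.0) + w
        between = merged
    return sorted(clusters.values(), key=lambda c: (-len(c), sorted(c)))


# Two keyword triangles joined by a single link (toy data).
edges = {
    ("topsis", "aggregation operators"): 1, ("topsis", "multi-criteria decision making"): 1,
    ("aggregation operators", "multi-criteria decision making"): 1,
    ("multi-criteria decision making", "pso"): 1,
    ("pso", "lstm"): 1, ("pso", "vmd"): 1, ("lstm", "vmd"): 1,
}
print(greedy_modularity(edges, 2))
```

With two clusters requested, the toy graph splits into its two triangles, and the weak bridge between them is the last link to be absorbed.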

Visualizing the keyword network in these coordinates appears to be a novel approach: the author has not found it in previously published works.

An example of keywords from other clusters related to the term ‘dempster-shafer evidence theory’ → decision making, deng entropy.

Query header generated by elicit.com: “Decision Making with Dempster-Shafer Theory and Deng Entropy”.

A highly-cited publication: “A decomposable Deng entropy” [21]. The abstract contains 147 words.

Summary by Elicit: “This paper proposes a new decomposable Deng entropy that can effectively decompose the Deng entropy and is an extension of the decomposable entropy for Dempster-Shafer evidence theory.” The text is 27 words long.

Summary by QuillBot: “Dempster-Shafer evidence theory is an extension of classical probability theory used in evidential environments. It uses a decomposable entropy to efficiently decompose the Shannon entropy. This article proposes a decomposable Deng entropy, that can efficiently decompose the entropy for the Dempster–Shafer evidence theory. Experimental results show the performance of the model in decomposing Deng entropy.” The text is 56 words long.

Note: the primary article on Deng entropy is [22].

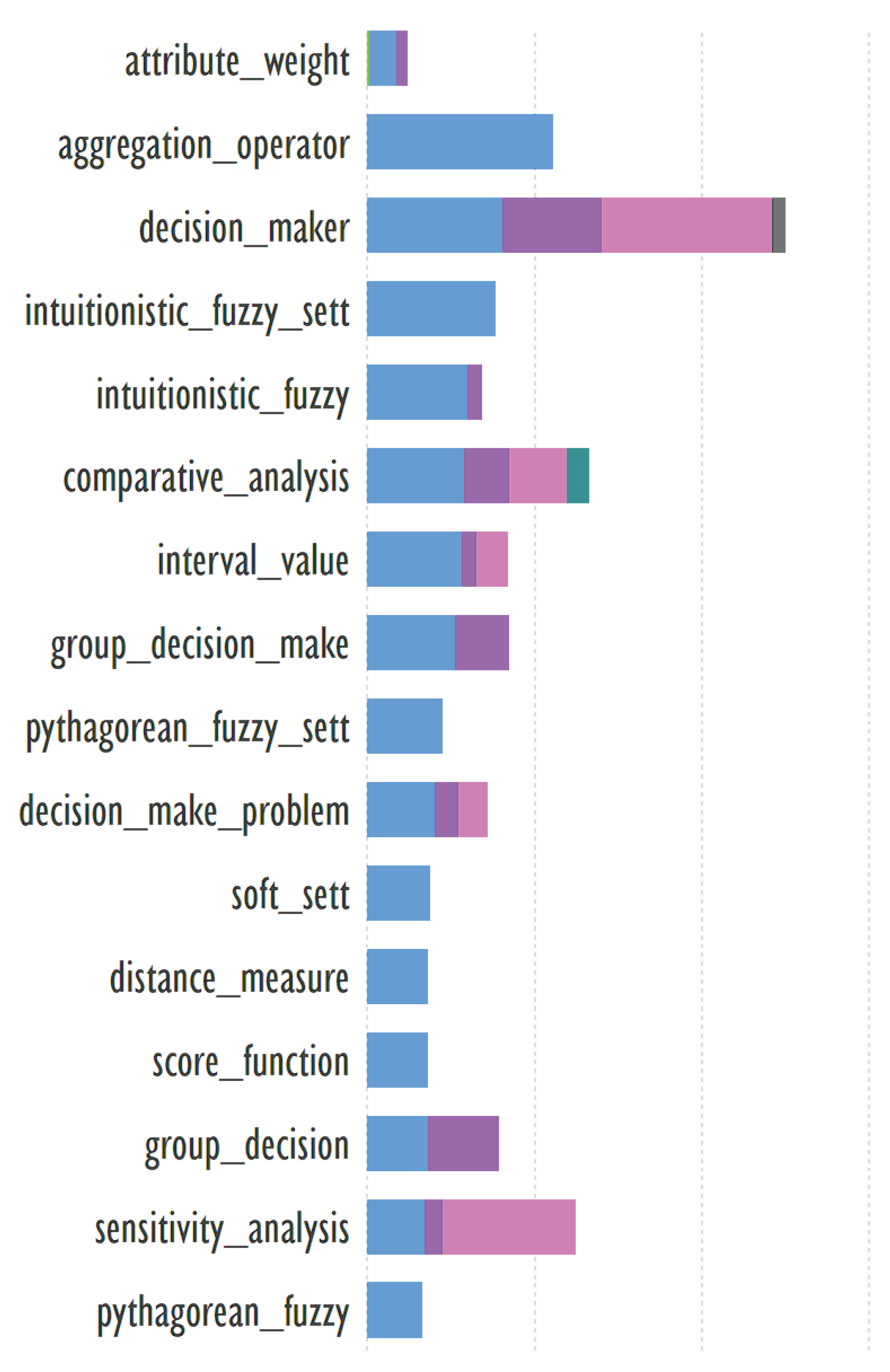

An example of keywords from other clusters related to the term ‘aggregation operators’ → multi-criteria decision making, topsis method.

Query header generated by elicit.com: “Aggregation Operators in Multi-Criteria Decision Making Using TOPSIS Method”.

Note: Technique for Order Preference by Similarity to Ideal Solution — TOPSIS.

A highly-cited publication: “Fermatean fuzzy TOPSIS method with Dombi aggregation operators and its application in multi-criteria decision making” [23]. The abstract contains 162 words.

Summary by Elicit: “This paper develops new Fermatean fuzzy aggregation operators using Dombi operations, analyzes their properties, compares them to existing operators, and applies them to a Fermatean fuzzy TOPSIS decision-making method.” The text is 29 words long.

Summary by QuillBot: “This paper explores the use of Dombi operations in decision-making problems, specifically aggregation operators. It develops Fermatean fuzzy aggregation operators, including the Fermatean fuzzy Dombi weighted average operator, Fermatean fuzzy Dombi weighted geometric operator, Fermatean fuzzy Dombi ordered weighted average operator, Fermatean fuzzy Dombi ordered weighted geometric operator, Fermatean fuzzy Dombi hybrid weighted average operator, and Fermatean fuzzy TOPSIS to understand their impact on decision-making.” The text is 65 words long.

An example of keywords from other clusters related to the term ‘long short-term memory’ → variational mode decomposition, particle swarm optimization.

Query header generated by elicit.com: “Integrating LSTM, VMD, and PSO”.

Note: long short-term memory (LSTM) networks, particle swarm optimization (PSO), variational mode decomposition (VMD).

A highly-cited publication: “Blood Glucose Prediction with VMD and LSTM Optimized by Improved Particle Swarm Optimization” [24]. The abstract contains 282 words.

Summary by Elicit: “A short-term blood glucose prediction model (VMD-IPSO-LSTM) combining variational modal decomposition and improved particle swarm optimization to optimize a long short-term memory network was proposed and shown to achieve high prediction accuracy at 30, 45, and 60 minutes in advance.” The text is 40 words long.

Summary by QuillBot: “A short-term blood glucose prediction model (VMD-IPSO-LSTM) was proposed to improve accuracy in diabetics’ time series. The model decomposes blood glucose concentrations using the VMD method to reduce non-stationarity. The model uses the Long short-term memory network (LSTM) to predict each component IMF. The Particle swarm optimization algorithm optimizes parameters like number of neurons, learning rate, and time window length. The model achieved high accuracy at 30min, 45min, and 60min in advance, with a decrease in RMSE and MAPE. This improved accuracy and longer prediction time can enhance diabetes treatment effectiveness.” The text is 91 words long.

An example of keywords from other clusters related to the term ‘group decision making’ → probabilistic linguistic term set, social network analysis.

Query header generated by elicit.com: “Group Decision Making with Probabilistic Linguistic Term Sets using Social Network Analysis”.

A highly-cited publication: “Consistency and trust relationship-driven social network group decision-making method with probabilistic linguistic information” [25]. The abstract contains 196 words.

Summary by Elicit: “This paper presents a novel probabilistic linguistic group decision-making method that uses consistency-adjustment and trust relationship-driven expert weight determination to determine a reliable ranking of alternatives.” The text is 26 words long.

Summary by QuillBot: “This paper presents a novel probabilistic linguistic GDM method, focusing on consistency-adjustment and expert weights determination. It redefines multiplicative consistency of probabilistic linguistic preference relations (PLPRs), proposes a convergent consistency-adjustment algorithm, and develops a trust relationship-driven expert weight determination model. The method is designed to determine reliable ranking of alternatives and is illustrated through a case study on logistics service suppliers evaluation.” The text is 62 words long.

Note: GDM — group decision-making.

An example of keywords from other clusters related to the term ‘dimensionality reduction’ → feature extraction, clustering algorithms.

Query header generated by elicit.com: “Advanced Techniques in Data Analysis”.

A highly-cited publication: “Randomized Dimensionality Reduction for k-Means Clustering” [26]. The abstract contains 245 words.

Summary by Elicit: “This paper presents new provably accurate randomized dimensionality reduction methods, including feature selection and feature extraction approaches, that provide constant-factor approximation guarantees for the k-means clustering objective.” The text is 27 words long.

Summary by QuillBot: “This paper explores dimensionality reduction for k-means clustering, a method that combines feature selection and extraction. Despite the importance of k-means clustering, there is a lack of provably accurate feature selection methods. Two known methods are random projections and singular value decomposition (SVD). The paper presents the first accurate feature selection method and two feature extraction methods, improving time complexity and feature extraction. The algorithms are randomized and provide constant-factor approximation guarantees for the optimal k-means objective value.” The text is 78 words long.

An example of keywords from other clusters related to the term ‘multi-criteria decision-making’ → sensitivity analysis, analytical hierarchy process.

Query header generated by elicit.com: “Analyzing Multi-Criteria Decision-Making with Sensitivity Analysis in AHP”.

A highly-cited publication: “Spatial sensitivity analysis of multi-criteria weights in GIS-based land suitability evaluation” [27]. The abstract contains 200 words.

Summary by Elicit: “The paper presents a novel approach to examining the sensitivity of a GIS-based multi-criteria decision-making model to the weights of input parameters, and demonstrates its application in a case study of irrigated cropland suitability assessment. A tool incorporating the OAT method with the Analytical Hierarchy Process.” The text is 46 words long.

Summary by QuillBot: “This paper presents a novel approach to examining the multi-criteria weight sensitivity of a GIS-based MCDM model. It explores the dependency of model output on input parameter weights, identifying criteria sensitive to weight changes, and their impacts on spatial dimensions. A tool incorporating the OAT method with the Analytical Hierarchy Process (AHP) within the ArcGIS environment is implemented, allowing user-defined simulations to quantitatively evaluate model dynamic changes and display spatial change dynamics.” The text is 72 words long.

Note: OAT — one-at-a-time.

An example of keywords from other clusters related to the term ‘anomaly detection’ → internet of things, multivariate time series.

Query header generated by elicit.com: “Anomaly Detection in IoT Multivariate Time Series”.

A highly-cited publication: “Real-Time Deep Anomaly Detection Framework for Multivariate Time-Series Data in Industrial IoT” [28]. The abstract contains 264 words.

Summary by Elicit: “The paper presents a hybrid deep learning framework for real-time anomaly detection in industrial IoT time-series data, which combines CNN and two-stage LSTM-based autoencoder and outperforms other state-of-the-art models.” The text is 29 words long.

Summary by QuillBot: “The Industrial Internet of Things (IIoT) generates dynamic, large-scale, heterogeneous, and time-stamped data that can significantly impact industrial processes. To detect anomalies in real-time, a hybrid end-to-end deep anomaly detection framework is proposed. This framework uses a convolutional neural network and a two-stage long short-term memory (LSTM)-based Autoencoder to identify short- and long-term variations in sensor values. The model is trained using the Keras/TensorFlow framework, achieving better performance than other competitive models. The model is also designed for network edge devices, demonstrating its potential for anomaly detection.” The text is 87 words long.

An example of keywords from other clusters related to the term ‘fraud detection’ → decision tree, feature extraction.

Query header generated by elicit.com: “Enhancing Fraud Detection Using Decision Trees and Feature Extraction”.

A highly-cited publication: “A cost-sensitive decision tree approach for fraud detection” [29]. The abstract contains 209 words.

Summary by Elicit: “The paper presents a new cost-sensitive decision tree approach for credit card fraud detection that outperforms traditional classification models and can help reduce financial losses from fraudulent transactions.” The text is 28 words long.

Summary by QuillBot: “The study presents a cost-sensitive decision tree approach for fraud detection, focusing on minimizing misclassification costs and selecting splitting attributes at non-terminal nodes. Compared to traditional classification models, this approach outperforms existing methods in accuracy, true positive rate, and a new cost-sensitive metric specific to credit card fraud detection. This approach can help decrease financial losses due to fraudulent transactions and improve fraud detection systems.” The text is 65 words long.

Note: the highly cited publication suggested by Elicit does not contain the term ‘feature extraction’.

An alternative publication that does contain the term ‘feature extraction’ is [30].

Note: it is not always possible to find a suitable publication that covers all three non-trivial terms.

An example of keywords from other clusters related to the term ‘cyber security’ → IISTM, network intrusion detection; here, ISTM stands for Information Systems and Technology Management.

Query header generated by elicit.com: “Cyber Security and Network Intrusion Detection in Information Systems Management”.

A highly-cited publication: “A Survey of Data Mining and Machine Learning Methods for Cyber Security Intrusion Detection” [31]. The abstract contains 104 words.

Summary by Elicit: “This paper provides a survey of machine learning and data mining methods for cyber security intrusion detection in wired networks.” The text is 20 words long.

Summary by QuillBot: “This paper surveys machine learning and data mining methods for cyber analytics, focusing on intrusion detection. It provides tutorial descriptions, discusses the complexity of algorithms, discusses challenges, and provides recommendations on when to use each method in cyber security.” The text is 39 words long.

An example of keywords from other clusters related to the term ‘explainable artificial intelligence’ → clustering, data models.

Query header generated by elicit.com: “Exploring Explainable AI in Clustering Data Models”.

A highly-cited publication: “Explainable Artificial Intelligence: a Systematic Review” [32]. The abstract contains 99 words.

Summary by Elicit: “This systematic review provides a hierarchical classification of methods for Explainable Artificial Intelligence (XAI) and summarizes the state-of-the-art in the field, while also recommending future research directions.” The text is 27 words long.

Summary by QuillBot: “Explainable Artificial Intelligence (XAI) has grown significantly due to deep learning applications, resulting in highly accurate models but lack of explainability and interpretability. This systematic review categorizes methods into review articles, theories, methods, and evaluation, summarizing state-of-the-art, and suggesting future research directions.” The text is 42 words long.

For the data of clusters 0-9, the expected result is obtained: a more complete summary gives a better idea of the publication. Checks with free anti-plagiarism services such as https://www.plagiarismremover.net/plagiarism-checker and https://plagiarismdetector.net/ revealed no plagiarism or signs of machine generation, which suggests that the summaries produced by Elicit and QuillBot are of good quality. High-quality summaries can help subject-matter experts decide whether an article merits closer study. When writing reports, using such summaries reduces the time required, but AI-generated texts should still be checked and edited manually. The same is true of machine translation, where AI is now widely used: manual editing has not been eliminated, but translation is significantly faster.
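The word counts reported in this section can be compared directly as summary-to-abstract length ratios. The sketch below tabulates them from the figures given in the text; counting words by splitting on whitespace is an assumption about how the counts were obtained, although it does reproduce the 38 words reported for the Elicit summary of [19]. In every example except [33], the QuillBot summary is the longer of the two.

```python
# (reference, abstract words, Elicit words, QuillBot words) as reported in this section
COUNTS = [
    ("[19]", 218, 38, 73), ("[21]", 147, 27, 56), ("[23]", 162, 29, 65),
    ("[24]", 282, 40, 91), ("[25]", 196, 26, 62), ("[26]", 245, 27, 78),
    ("[27]", 200, 46, 72), ("[28]", 264, 29, 87), ("[29]", 209, 28, 65),
    ("[31]", 104, 20, 39), ("[32]", 99, 27, 42), ("[33]", 62, 30, 23),
]


def word_count(text: str) -> int:
    """Count words by splitting on whitespace (assumed counting rule)."""
    return len(text.split())


def compression_table(rows):
    """Return (reference, Elicit/abstract, QuillBot/abstract) length ratios."""
    return [(ref, e / a, q / a) for ref, a, e, q in rows]


for ref, elicit, quill in compression_table(COUNTS):
    print(f"{ref:>5}  Elicit {elicit:5.1%}  QuillBot {quill:5.1%}")
```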

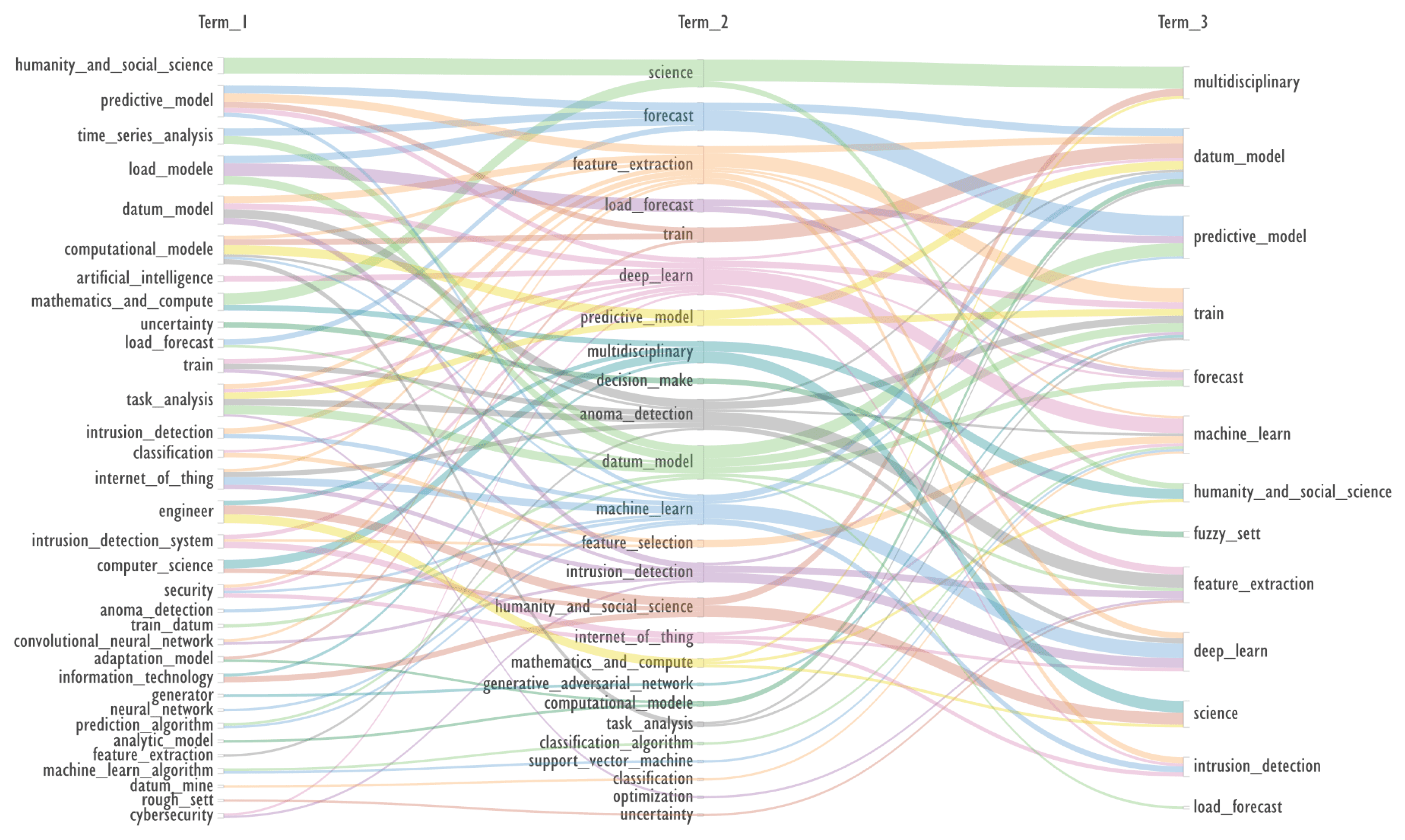

3.4. Visual Selection of Multiple Terms to Build Queries Using the Alluvial Diagram

The alluvial diagram shown in Figure 15 is a simple visual method for selecting multiple terms for queries on a topic. It is most effective when presented as an interactive web page. In the attached archive, the files KWs_3x3-003-101-Term-1.htm and KWs_3x3-003-101-Term-2.htm show examples of interactive highlighting linked to the first term and the second term, respectively.

The co-occurrence of term triples was assessed with an FP-growth utility.
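FP-growth finds frequent itemsets without generating candidates. For short keyword lists, the same frequent triples can be obtained by brute-force enumeration, which the sketch below uses instead; it is a swapped-in technique, not FP-growth and not the utility used in this work. Here `min_support` is the minimum number of records in which a triple must co-occur, and the records are a toy example.

```python
from collections import Counter
from itertools import combinations


def frequent_triples(records, min_support=2):
    """Count every 3-keyword combination per record and keep those meeting min_support.

    Brute-force enumeration, not FP-growth: it yields the same frequent triples but
    scales poorly with long keyword lists.
    """
    counts = Counter()
    for keywords in records:
        counts.update(combinations(sorted(set(keywords)), 3))
    return [(triple, n) for triple, n in counts.most_common() if n >= min_support]


records = [
    ["train", "intrusion detection", "feature extraction"],
    ["train", "intrusion detection", "feature extraction", "deep learning"],
    ["intrusion detection", "feature extraction", "deep learning"],
    ["train", "feature extraction"],
]
print(frequent_triples(records, min_support=2))
```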

For example, for the three co-occurring terms ‘train → intrusion detection, feature extraction’:

Query header generated by elicit.com: “Intrusion Detection in Train Systems Using Feature Extraction”.

The work suggested by elicit.com that is closest to the subject described by these three terms is “A New Feature Extraction Method of Intrusion Detection” [33]. Its abstract is very brief, only 62 words.

Summary by Elicit: “The paper presents a new feature extraction method for intrusion detection that uses kernel principal component analysis and a reduced computation RSVM method to improve training speed and classification performance.” The text is 30 words long.

Summary by QuillBot: “The paper employs kernel principal component analysis and RSVM to extract features from intrusion detection training samples, enhancing training speed and classification effect.” The text is 23 words long. Here the QuillBot summary is shorter than the Elicit one, which may be explained by the very brief abstract.

The apparent lack of citations of this article may be explained by the fact that it was published in the proceedings of the 2009 First International Workshop on Education Technology and Computer Science and is poorly indexed even by Google; the text itself is a four-page extended abstract, the full text of which was found only on Sci-Hub.

This example is interesting because neither elicit.com nor Litmaps shows publications that directly cite [33], but Litmaps does suggest publications that cite the works cited in [33].

In the original bibliometric records used in this work, the term ‘feature extraction’ occurs in 638 records; of these, 109 also contain ‘intrusion detection’, and only 32 of those 109 also contain ‘train’. This shows how sharply the set of publications shrinks when three terms are combined in a query. Because these terms were selected algorithmically rather than at random, the reduction for three arbitrarily chosen terms would be even greater. This illustrates the usefulness of algorithmically selecting three or more terms when searching for relevant literature.
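The counts above mean that about 17.1% of the ‘feature extraction’ records remain after ‘intrusion detection’ is added (109/638), about 29.4% of those remain after ‘train’ is added (32/109), and about 5.0% remain overall (32/638). The narrowing itself can be sketched as successive filtering, assuming each record is represented by its set of keywords; the records below are invented.

```python
def narrowing(records, terms):
    """Number of records still matching after each additional term is required."""
    remaining = records
    sizes = []
    for term in terms:
        remaining = [keywords for keywords in remaining if term in keywords]
        sizes.append(len(remaining))
    return sizes


toy_records = [
    {"feature extraction"},
    {"feature extraction", "intrusion detection"},
    {"feature extraction", "intrusion detection", "train"},
    {"train"},
]
print(narrowing(toy_records, ["feature extraction", "intrusion detection", "train"]))  # → [3, 2, 1]
print(round(100 * 32 / 638, 1))  # share of the 638 records left after all three terms → 5.0
```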

Among these 32 publications, article [34] is a good example that contains all three analyzed terms. Its ‘Publication Keywords’ field consists of the terms ‘Intrusion detection, Mathematical models, Feature extraction, Training, Standards, Statistical analysis, Numerical models’, and its ‘Times Cited’ field indicates that the article has been cited 27 times.

When the title of this article, “An Agile Approach to Identify Single and Hybrid Normalization for Enhancing Machine Learning-Based Network Intrusion Detection”, is used as a query on elicit.com, the article appears first in the results list, with the following:

Query header generated by elicit.com: “Agile Normalization for ML-Based Intrusion Detection”.

Summary by Elicit: “The paper proposes a statistical method to identify the most suitable data normalization technique for improving the performance of machine learning-based intrusion detection systems”.

That is, the terms ‘train’ and ‘feature extraction’ do not appear in this summary. They are present in the ‘Publication Keywords’ column, but the keywords given in the text of the paper itself are ‘Anomaly detection, Bot-IoT, CIC-IDS 2017, intrusion detection, IoT, ISCX-IDS 2012, normalization, NSL KDD, skewness, scaling, transformation, UNSW-NB15’. The reason for this, shown in Table 2, lies in the structure of the bibliometric data of the IEEE Xplore platform.

Table 2 shows that the ‘Publication Keywords’ field of the Scilit platform contains terms from the ‘IEEE Terms’ field of the IEEE Xplore platform rather than the author keywords.

This is a fairly typical example showing that bibliometric and text analysis has no single correct solution, and cannot have one. Such analysis, especially when combined with visualization tools and third-party AI services, serves only as an effective prompt for formulating relevant questions for further research on a topic.

Despite its simplicity, the alluvial diagram is very flexible: diagrams can be built not only from the co-occurrence frequency of terms but also from publication year, average citations and other parameters.
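A sketch of preparing such data is shown below. It assumes that an alluvial chart is fed a table with one row per term triple plus a weight column; the column names `term-1`, `term-2`, `term-3` and `weight` are hypothetical and not taken from Scimago Graphica. The weight can be the number of records containing all three terms, their mean publication year, or their mean citation count.

```python
import csv
import io
from statistics import fmean


def alluvial_rows(records, triples, weight="count"):
    """One row per term triple: term-1, term-2, term-3 and a weight.

    records: list of (year, citations, keyword set). weight is 'count', 'year'
    (mean publication year) or 'citations' (mean citations) over the records
    that contain all three terms.
    """
    rows = []
    for triple in triples:
        hits = [(y, c) for y, c, kws in records if set(triple) <= kws]
        if not hits:
            continue
        if weight == "count":
            value = len(hits)
        elif weight == "year":
            value = fmean([y for y, _ in hits])
        elif weight == "citations":
            value = fmean([c for _, c in hits])
        else:
            raise ValueError(f"unknown weight: {weight}")
        rows.append((*triple, value))
    return rows


def to_csv(rows):
    """Render the rows as CSV text with a header line."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["term-1", "term-2", "term-3", "weight"])
    writer.writerows(rows)
    return buf.getvalue()


demo_records = [
    (2019, 10, {"train", "intrusion detection", "feature extraction"}),
    (2021, 30, {"train", "intrusion detection", "feature extraction", "iot"}),
    (2020, 5, {"intrusion detection", "iot"}),
]
triple = ("train", "intrusion detection", "feature extraction")
print(to_csv(alluvial_rows(demo_records, [triple])), end="")
```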

While the co-occurrence of keyword pairs can be represented by various diagrams, including networks, the options for visualizing the co-occurrence of three or more terms are more limited.

The alluvial diagram has a significant drawback: with a large number of inputs (colors), the diagram becomes hard to read. Interactive web pages generated by Scimago Graphica can be used to overcome this problem.

Saving diagrams in SVG format makes it possible not only to edit them but also to copy terms of interest directly from the diagram, which speeds up querying of abstract databases.
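If an exported SVG keeps labels as text elements (rather than converting them to outlined paths, which is an assumption about the export), the terms can also be extracted programmatically. A minimal sketch using the Python standard library's ElementTree follows; the demo SVG is hypothetical.

```python
import xml.etree.ElementTree as ET


def svg_labels(svg: str) -> list[str]:
    """Return the text of every <text> element, including nested <tspan> parts."""
    root = ET.fromstring(svg)
    labels = []
    for node in root.iter():
        # Compare local tag names so files with or without the SVG namespace both work.
        if node.tag.rsplit("}", 1)[-1] == "text":
            text = "".join(node.itertext()).strip()
            if text:
                labels.append(text)
    return labels


demo = """<svg xmlns="http://www.w3.org/2000/svg"><g>
<text x="10" y="20">intrusion detection</text>
<text x="10" y="40"><tspan>feature</tspan> <tspan>extraction</tspan></text>
</g></svg>"""
print(svg_labels(demo))  # → ['intrusion detection', 'feature extraction']
```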