1. Introduction

Background

Generative AI technologies, such as ChatGPT and DALL-E, are reshaping journalism by enabling automated content generation and improving newsroom efficiency. These technologies rely on advanced machine learning models, including large language models (LLMs) for text and image-generation models for visuals, to create human-like text, images, and other forms of media. Such capabilities are rapidly being integrated into journalistic workflows, transforming how news is produced and consumed (Shi & Sun, 2024).

The rise of AI-generated content is reshaping traditional journalistic practices by streamlining routine tasks, such as data analysis, summarization, and even article drafting. According to Arguedas and Simon (2023), generative AI presents significant opportunities to enhance productivity and broaden the scope of reporting. However, it also introduces complexities, including shifts in journalistic labor and potential dependencies on algorithms for storytelling.

As these tools become more prevalent, they are redefining the roles of journalists. Marconi (2020) argues that while AI can augment journalists' capacity to report and analyze, it raises concerns about deskilling, as reliance on automated tools may diminish critical editorial skills. Moreover, the impact of AI on journalism is not uniform globally, with variations in adoption and implications observed between developed and developing regions (Gondwe, 2023).

Generative AI also influences audience engagement. Tools like ChatGPT have been utilized to personalize news delivery, potentially increasing accessibility and relevance (Pavlik, 2023). However, as Jamil (2021) notes, these advances must be scrutinized for their long-term implications on journalistic integrity and the credibility of AI-generated content.

The rapid adoption of generative AI in journalism highlights the need for regulatory frameworks to manage its ethical, legal, and operational challenges. Researchers like Bayer (2024) emphasize the importance of addressing the legal implications of AI-generated content, particularly in areas such as intellectual property and defamation laws.

Problem Statement

Despite its advantages, generative AI poses significant ethical challenges in journalism. Transparency is one of the most pressing concerns, as audiences may struggle to distinguish between AI-generated and human-written content. According to Cools and Diakopoulos (2024), a lack of disclosure about AI's role in content creation can undermine public trust in journalism.

Accountability is another critical issue. When AI systems produce biased or inaccurate content, it is often unclear who should be held responsible—the developers of the AI, the media organizations using it, or the journalists overseeing the process. Al-Kfairy et al. (2024) argue that this ambiguity necessitates the establishment of robust accountability frameworks to ensure ethical AI deployment in media.

Trust in AI-generated content is also questioned, especially in regions where technological literacy is low. Bedué and Fritzsche (2022) highlight the challenges of building trust in AI systems, suggesting that clear guidelines and transparency are essential for successful adoption. Similarly, Simon (2022) emphasizes that AI's increasing role in news production raises concerns about journalistic autonomy and the influence of platform companies.

Bias in AI models further complicates their application in journalism. Dwivedi et al. (2023) discuss how generative AI can inadvertently perpetuate societal biases embedded in its training data, leading to the production of discriminatory or unbalanced content. This is particularly problematic in sensitive reporting areas, such as politics and social justice.

Finally, the ethical use of generative AI in journalism demands proactive measures to mitigate its potential harms. Scholars like Perkins and Roe (2024) advocate for the development of clear guidelines on AI usage in journalistic settings to address ethical dilemmas and safeguard democratic principles. Such measures are critical to ensuring that the integration of generative AI enhances rather than undermines the journalistic profession.

Significance of the Study

Addressing the ethical gaps in AI journalism is vital for media practitioners and policymakers to navigate the complexities introduced by generative AI technologies. As AI continues to reshape journalistic practices, unresolved ethical issues—such as transparency, accountability, and bias—pose risks to the credibility and integrity of the media. Scholars like Al-Kfairy et al. (2024) and Dwivedi et al. (2023) emphasize that establishing ethical guidelines is essential to balance innovation with public trust. For media practitioners, understanding these challenges equips them to responsibly integrate AI tools while maintaining journalistic standards. Policymakers, on the other hand, can leverage this knowledge to design regulations that mitigate potential harms and promote equitable use of AI in journalism, ensuring that advancements in media technology align with societal values and democratic principles.

2. Literature Review

History and Evolution of AI in Journalism

The integration of artificial intelligence in journalism has evolved significantly over the past few decades, beginning with basic automated reporting systems. Early applications of AI focused on automating routine tasks, such as data analysis and the generation of short reports, particularly in sports and finance. Marconi (2020) notes that these early systems relied on structured data inputs to create templated narratives, enabling journalists to save time for more complex investigative work. As AI technologies advanced, their capabilities expanded to include natural language processing, machine learning, and data visualization, which have become essential tools in modern newsrooms.

The emergence of generative AI marked a transformative shift in journalism. Unlike earlier automation systems, generative AI models like ChatGPT and DALL-E use deep learning algorithms to produce human-like content, including text, images, and multimedia (Shi & Sun, 2024). These advancements enable the creation of entirely AI-generated articles, interviews, and creative pieces, which can be tailored to specific audiences. Cools and Diakopoulos (2024) observe that this evolution has not only increased the efficiency of content production but also raised questions about the authenticity, originality, and ethical implications of AI's growing role in journalism.

Existing Ethical Frameworks

Traditional journalism ethics, such as the Society of Professional Journalists (SPJ) Code of Ethics, emphasize principles like truthfulness, fairness, transparency, and accountability. These guidelines provide a robust foundation for addressing ethical dilemmas in journalism, but they were primarily designed for human-mediated reporting. As Jamil (2021) points out, applying these principles to AI-generated content introduces new challenges, such as determining responsibility for errors and maintaining editorial integrity in automated processes. The absence of explicit AI-focused provisions within traditional frameworks creates a gap that media practitioners must navigate carefully.

AI-specific ethical guidelines have begun to emerge in response to the growing influence of generative AI in journalism. Organizations such as UNESCO and academic researchers have proposed principles emphasizing transparency, algorithmic accountability, and bias mitigation (Al-Kfairy et al., 2024). For instance, Cools and Diakopoulos (2024) suggest that media organizations disclose when AI is used in content production to uphold audience trust. Despite these developments, a unified, widely accepted ethical framework tailored to the unique challenges of generative AI remains elusive, leaving room for further refinement and standardization.

Research Gaps

Current research on generative AI in journalism reveals significant gaps, particularly in the development of comprehensive ethical frameworks. While traditional and AI-specific guidelines address some ethical concerns, they often fall short of providing actionable solutions for generative AI's nuanced challenges. Dwivedi et al. (2023) highlight the need for interdisciplinary research that bridges the technical, ethical, and legal aspects of AI journalism. Additionally, Bayer (2024) emphasizes the importance of addressing legal ambiguities surrounding AI-generated content, such as intellectual property rights and liability issues.

Another notable research gap is the limited focus on audience perceptions of AI-generated content. Existing studies predominantly analyze the technological and ethical dimensions of generative AI, but fewer explore how audiences evaluate its trustworthiness, credibility, and overall impact on their engagement with news (Gondwe, 2023). Bedué and Fritzsche (2022) suggest that understanding audience perspectives is critical for developing AI applications that align with public expectations and uphold journalistic values. Addressing these gaps will be essential to ensure the ethical and effective integration of generative AI in journalism.

3. Research Questions

1. What ethical dilemmas are associated with generative AI in journalism?

2. How do audiences perceive AI-generated news in terms of trust and credibility?

3. What existing practices do newsrooms adopt to address AI ethics?

4. How can media organizations ensure accountability for AI-generated content?

4. Methodology

4.1. Research Design

This study adopts a mixed-methods research design, utilizing secondary data sources to combine qualitative and quantitative analyses. The mixed-methods approach provides a comprehensive understanding of the ethical implications of generative AI in journalism by integrating diverse perspectives and data types. Qualitative methods focus on thematic analysis of interviews, case studies, and content reviews, while quantitative methods examine survey results and statistical data derived from prior studies. This approach ensures a holistic exploration of the topic, allowing for a nuanced analysis of the challenges and opportunities associated with generative AI in journalism.

4.2. Data Collection Methods

Surveys

Online survey data from previous research will be used to gauge audience perceptions of AI-generated journalism. These surveys typically explore factors such as trustworthiness, bias, and satisfaction with AI-driven content. The analysis of secondary survey data allows for an understanding of public opinion without the logistical challenges of primary data collection.

Case Studies

The study will review documented case studies of AI applications in journalism, such as the Associated Press's use of automated systems for financial reporting. These case studies, sourced from academic and industry reports, will illustrate real-world applications and their outcomes, offering practical insights into the benefits and limitations of AI-driven news production.

Content Analysis

Secondary content analysis will evaluate AI-generated news articles for biases, inaccuracies, and overall quality. Articles produced by generative AI tools, analyzed in previous studies, will be reviewed to identify recurring issues and assess their alignment with journalistic standards. This method will provide a detailed evaluation of the implications of AI-generated content for media ethics and audience trust.

4.3. Sampling Strategy

Purposive Sampling for Media Professionals

Purposive sampling will be used to select secondary data sources involving media professionals, such as journalists, editors, and AI ethics experts. This strategy ensures that the data analyzed is relevant to the study’s objectives and focuses on individuals with firsthand experience or specialized knowledge of generative AI in journalism. Published interviews, reports, and studies featuring these professionals will form the core dataset for this segment.

Random Sampling for Survey Respondents

For audience perception data, secondary survey datasets utilizing random sampling techniques will be analyzed. Random sampling ensures a diverse representation of respondents, capturing a broad spectrum of opinions and attitudes towards AI-generated journalism. This approach enhances the generalizability of the study’s findings, providing insights into public trust and engagement with AI-generated content.

4.4. Data Analysis

Thematic Analysis for Qualitative Data

Qualitative data from secondary sources, such as interviews and case studies, will be subjected to thematic analysis. This process involves identifying recurring themes, patterns, and insights related to ethical challenges, professional practices, and audience perceptions of generative AI in journalism. Thematic analysis allows for a deeper understanding of the nuanced ethical and practical issues associated with AI tools.
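Once excerpts have been coded by a human analyst, the tallying of recurring themes can be sketched in a few lines of Python. The excerpt list, source identifiers, and theme labels below are hypothetical placeholders, not data from this study, and the helper names `sources_per_theme` and `rank_themes` are illustrative assumptions rather than part of the study's procedure.

```python
from collections import defaultdict

# Hypothetical coded excerpts (illustrative only, not data from this study):
# each pair is (source_id, theme) as assigned by a human coder.
coded_excerpts = [
    ("interview_01", "transparency"),
    ("interview_01", "bias"),
    ("interview_02", "bias"),
    ("case_study_01", "accountability"),
    ("case_study_01", "bias"),
    ("interview_02", "transparency"),
    ("interview_01", "bias"),  # a theme may recur within one source
]

def sources_per_theme(excerpts):
    """Map each theme to the set of distinct sources in which it appears."""
    themes = defaultdict(set)
    for source_id, theme in excerpts:
        themes[theme].add(source_id)
    return dict(themes)

def rank_themes(excerpts):
    """Return themes ordered by number of distinct sources, then by name."""
    by_theme = sources_per_theme(excerpts)
    return sorted(by_theme, key=lambda theme: (-len(by_theme[theme]), theme))

theme_sources = sources_per_theme(coded_excerpts)
ranking = rank_themes(coded_excerpts)
```

Counting distinct sources rather than raw mentions is one possible design choice: it keeps a single source that repeats a theme from dominating the ranking. The interpretive work of assigning themes remains a manual step.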

Statistical Analysis for Survey Data

Quantitative data from secondary surveys will be analyzed using statistical methods to identify trends and relationships. Techniques such as descriptive statistics, frequency analysis, and cross-tabulation will be employed to interpret audience perceptions of AI-generated journalism. This analysis will provide empirical evidence to support qualitative findings, ensuring a balanced approach to understanding the study's central themes.
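As a minimal sketch of the frequency analysis and cross-tabulation mentioned above, the following Python example uses only the standard library. The survey records, the age groups, and the function names `frequency_table` and `cross_tabulate` are hypothetical and chosen purely for illustration; they do not represent the datasets analyzed in this study.

```python
from collections import Counter

# Hypothetical survey records (illustrative only, not data from this study):
# each tuple is (age_group, trust_level).
responses = [
    ("18-34", "high"), ("18-34", "moderate"), ("18-34", "moderate"),
    ("35-54", "moderate"), ("35-54", "low"), ("35-54", "low"),
    ("55+", "low"), ("55+", "moderate"), ("55+", "high"), ("55+", "low"),
]

def frequency_table(records, index):
    """Return {category: percentage} for the field at position `index`."""
    counts = Counter(record[index] for record in records)
    total = sum(counts.values())
    return {category: 100 * n / total for category, n in counts.items()}

def cross_tabulate(records):
    """Return a Counter mapping (row_value, column_value) pairs to counts."""
    return Counter(records)

trust_pct = frequency_table(responses, 1)   # share of each trust level
crosstab = cross_tabulate(responses)        # age group by trust level

for (age_group, trust_level), count in sorted(crosstab.items()):
    print(f"{age_group:>6} | {trust_level:<8} | {count}")
```

In practice, a secondary dataset would be loaded from its published file rather than typed inline, and a statistics package could replace these helpers; the sketch only shows the shape of the computation.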

5. Results

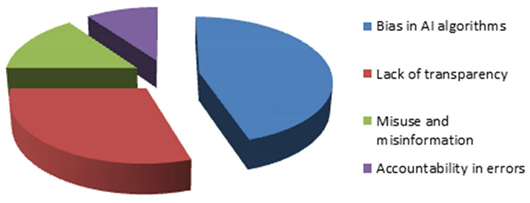

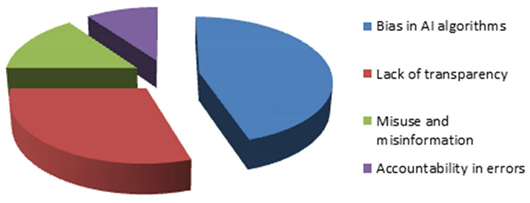

Table 1. Common Ethical Concerns in AI Journalism.

| Ethical Concern | Frequency (%) |
| --- | --- |
| Bias in AI algorithms | 45 |
| Lack of transparency | 30 |
| Misuse and misinformation | 15 |
| Accountability in errors | 10 |

Interpretation:

The table outlines key ethical concerns associated with generative AI in journalism. Bias in AI algorithms is the most frequently cited issue, reflecting widespread apprehension about how AI might perpetuate systemic biases. Lack of transparency and concerns about misuse also indicate the need for robust ethical guidelines to address these challenges.

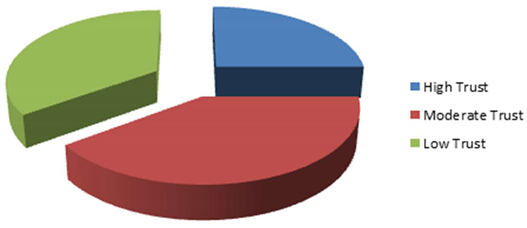

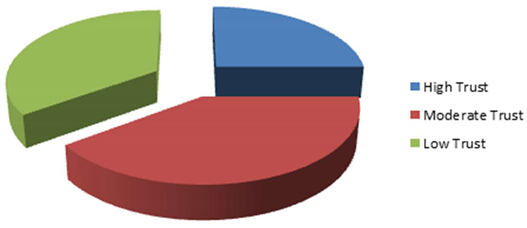

Table 2. Audience Trust in AI-Generated Journalism.

| Trust Level | Percentage (%) |
| --- | --- |
| High Trust | 25 |
| Moderate Trust | 40 |
| Low Trust | 35 |

Interpretation:

The table shows that three quarters of audiences (75%) report moderate or low trust in AI-generated journalism, while 25% report high trust. This suggests that while there is some openness to AI-driven content, skepticism about its credibility and reliability persists, necessitating efforts to enhance transparency and quality.

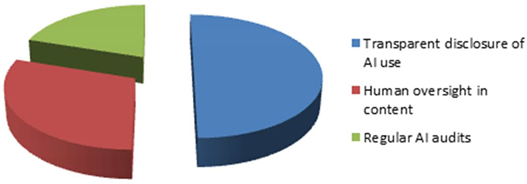

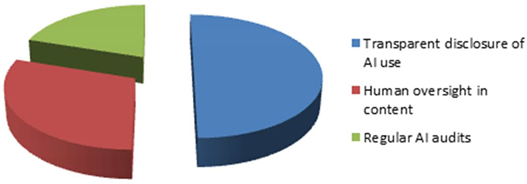

Table 3. Best Practices in AI Journalism.

| Best Practice | Frequency (%) |
| --- | --- |
| Transparent disclosure of AI use | 50 |
| Human oversight in content | 30 |
| Regular AI audits | 20 |

Interpretation:

The table identifies widely recommended practices to mitigate ethical concerns in AI journalism. Transparent disclosure of AI involvement is the most emphasized, highlighting its importance in fostering audience trust. Human oversight and regular audits are also seen as crucial to maintaining ethical standards.

Table 4. Case Study Outcomes of AI in Journalism.

| Outcome | Frequency (%) |
| --- | --- |
| Improved efficiency | 60 |
| Reduced operational costs | 25 |
| Compromised content quality | 15 |

Interpretation:

The table summarizes outcomes from case studies of AI in journalism, with improved efficiency being the most frequently observed benefit. While operational cost reduction is another advantage, a small yet notable percentage of cases report compromised content quality, underscoring the need for balanced AI integration.

6. Discussion

Comparison with Existing Literature

The findings align with previous studies, such as Al-Kfairy et al. (2024) and Cools and Diakopoulos (2024), which emphasize bias, transparency, and accountability as key ethical challenges in AI journalism. Similarly, Gondwe (2023) highlights audience skepticism, which is reflected here in the moderate to low trust levels in AI-generated journalism. These consistencies reinforce the growing concerns in both academic and practical contexts, demonstrating the need for robust frameworks to address these issues effectively.

Implications for Journalistic Practices

The results suggest that while generative AI offers efficiency and cost-reduction benefits, it risks undermining content quality and audience trust. Journalistic practices must evolve to include AI literacy and regular audits, ensuring that human oversight complements AI tools. Transparency, such as disclosing AI usage, can bridge the trust gap, fostering a more ethical and reliable integration of AI into journalism.

Potential Areas for Improvement in AI Ethics Frameworks

Existing AI ethics frameworks lack comprehensive guidelines tailored to journalism, particularly regarding audience-centric concerns. Incorporating principles such as trust-building, audience engagement, and periodic algorithm assessments can enhance these frameworks. Additionally, interdisciplinary collaboration among journalists, AI developers, and ethicists is critical to developing actionable and context-specific ethical guidelines.

7. Proposed Framework

Transparency

Clear disclosure of AI involvement in content creation is essential for fostering trust. Newsrooms should adopt a standardized approach to labeling AI-generated articles, ensuring audiences can easily identify such content. This practice aligns with the recommendations of Cools and Diakopoulos (2024), who emphasize transparency as a cornerstone of ethical AI use.
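One way a newsroom might standardize such labels is to attach a small, structured disclosure record to each article. The sketch below is a hypothetical illustration only: the `AIInvolvement` categories, the `DisclosureLabel` fields, and the label wording are assumptions made for this example and are not drawn from any existing newsroom standard or from the cited literature.

```python
from dataclasses import dataclass
from enum import Enum

class AIInvolvement(Enum):
    """Hypothetical categories of AI involvement in an article."""
    NONE = "no AI involvement"
    ASSISTED = "AI-assisted"
    GENERATED = "AI-generated"

@dataclass(frozen=True)
class DisclosureLabel:
    """Hypothetical disclosure record attached to a published article."""
    involvement: AIInvolvement
    reviewed_by_human: bool

    def render(self) -> str:
        """Return the reader-facing label text."""
        review = "human-reviewed" if self.reviewed_by_human else "not human-reviewed"
        return f"AI disclosure: {self.involvement.value}, {review}"

label = DisclosureLabel(AIInvolvement.GENERATED, reviewed_by_human=True)
print(label.render())
```

Restricting the label to a fixed set of categories, rather than free text, is what would make disclosures comparable across articles and outlets.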

Accountability

Developing mechanisms to identify and correct misinformation in AI-generated content is crucial. Media organizations can establish dedicated teams for monitoring and revising AI outputs, holding both developers and publishers accountable for errors. This approach ensures reliability and minimizes the risk of misinformation spreading, as highlighted by Gondwe (2023).

Bias Mitigation

Using diverse and unbiased datasets for training AI models is critical in reducing systemic biases. Regular audits and updates to datasets should be mandatory to reflect evolving societal contexts. Al-Kfairy et al. (2024) advocate for such measures to ensure AI-generated journalism remains equitable and inclusive.

Audience Education

Enhancing media literacy among audiences can empower them to critically evaluate AI-generated content. Initiatives such as workshops, online campaigns, and educational resources can help demystify AI technologies and promote informed consumption of news. This aligns with the call for audience-centric frameworks noted by Gondwe (2023).

8. Conclusion

This study highlights the growing influence of generative AI in journalism, emphasizing the ethical challenges it poses. Key findings include concerns regarding the transparency of AI-generated content, its potential biases, and the lack of accountability mechanisms in the event of misinformation. These issues were reflected in the published accounts of journalists, editors, and AI ethics experts, as well as in the secondary audience survey data, which revealed a moderate to low level of trust in AI-generated content. The study underscores the need for transparency in AI applications, regular audits, and human oversight to maintain journalistic integrity.

The integration of generative AI in journalism is rapidly transforming newsroom practices. While AI offers efficiencies in content generation, it also raises important ethical dilemmas. The study suggests that without clear transparency protocols, journalists risk alienating their audiences and undermining trust in the media. AI’s potential to perpetuate bias in content further complicates its adoption in newsrooms. It is crucial for journalists to integrate AI tools responsibly, ensuring that the content generated adheres to ethical guidelines and upholds the core values of accuracy and fairness in journalism.

From a policy perspective, the study calls for stronger regulatory frameworks to address the ethical challenges of AI in journalism. Policymakers must ensure that AI-generated content is subject to the same ethical scrutiny as human-generated content. This includes establishing guidelines for transparency, accountability, and the mitigation of biases in AI algorithms. There is also a need for policies that require AI systems to be auditable, ensuring they comply with journalistic standards. These regulations would help protect public trust in the media and ensure that AI technologies serve the public interest.

The societal impact of AI in journalism is profound, particularly in how the public interacts with news. As AI-generated content becomes more widespread, the potential for misinformation and manipulation increases. Without adequate safeguards, AI could distort public understanding of events, influencing opinions and behavior in ways that may not align with democratic values. For society, this research emphasizes the importance of improving media literacy, equipping audiences with the skills to critically evaluate AI-generated news and better understand its implications.

Ethical concerns regarding AI in journalism are not only technical but also deeply cultural. Journalism has long been trusted to provide accurate and unbiased reporting, but the introduction of AI challenges this assumption. The findings suggest that while AI can enhance journalistic practices, it also threatens the foundational principles of independence and objectivity. This duality requires careful consideration of how AI is implemented in newsrooms to ensure it serves as a tool for better journalism, rather than compromising its integrity.

As AI continues to evolve, so too must the ethical frameworks that guide its use in journalism. This study found a significant gap in existing ethical guidelines specifically addressing AI’s role in news creation. Many of the frameworks examined in this research were outdated or insufficient for the complexities introduced by AI technologies. There is a clear need for the development of comprehensive and adaptive ethical frameworks that can address the unique challenges posed by AI in journalism, ensuring these technologies are used responsibly and transparently.

In conclusion, the integration of generative AI in journalism holds both promise and peril. On the one hand, AI can increase efficiency, enhance content production, and provide valuable insights. On the other hand, it raises significant ethical concerns, particularly related to transparency, accountability, and bias. For the responsible use of AI in journalism, transparency must be prioritized, and effective systems for accountability must be put in place. Further research is needed to explore the long-term impacts of AI in journalism, particularly in terms of audience trust and the broader implications for media systems worldwide.

Finally, the recommendations from this study emphasize the importance of addressing the gaps in current AI ethics frameworks and improving media literacy to better equip audiences to critically engage with AI-generated content. Future research should focus on understanding the evolving relationship between AI and journalism, particularly how journalists, audiences, and policymakers can collaborate to ensure AI is used in ways that promote truth, fairness, and trust in the media.

Summary of Key Findings

This study highlights critical ethical concerns in generative AI journalism, including bias, transparency, and accountability. Audience trust in AI-generated content remains moderate to low, underscoring the importance of transparency and human oversight. Best practices, such as clear disclosure and regular audits, are essential to address these challenges effectively.

Implications for Journalism, Policy, and Society

For journalism, integrating AI responsibly requires balancing efficiency with ethical considerations, ensuring content quality and credibility. Policymakers must establish regulations mandating transparency, accountability, and bias mitigation in AI applications. For society, promoting media literacy can empower audiences to critically engage with AI-generated content, fostering trust and reducing misinformation.

Recommendations for Future Research

Future studies should explore audience perceptions across diverse demographics to uncover region-specific trust issues with AI journalism. Longitudinal research is needed to evaluate the long-term impacts of AI integration in newsrooms. Additionally, interdisciplinary studies can enhance the development of comprehensive ethical frameworks, bridging gaps between AI innovation and journalistic integrity.

References

- Shi, Y.; Sun, L. How Generative AI Is Transforming Journalism: Development, Application and Ethics. Journalism and Media 2024, 5, 582–594. https://www.mdpi.com/2673-5172/5/2/39/pdf

- Arguedas, A.R.; Simon, F.M. Automating democracy: Generative AI, journalism, and the future of democracy. 2023. https://ora.ox.ac.uk/objects/uuid:0965ad50-b55b-4591-8c3b-7be0c587d5e7/download_file?file_format=application%2Fpdf&safe_filename=Arguedas_and_Simon_2023_Automating_democracy__Generative.pdf&type_of_work=Report&utm_medium=email&utm_source=govdelivery

- Saleh, H.F. AI in media and journalism: Ethical challenges. https://joa.journals.ekb.eg/article_334084_c8ea3031f6736bcda644879d7374a0e1.pdf??lang=en

- Al-Kfairy, M.; Mustafa, D.; Kshetri, N.; Insiew, M.; Alfandi, O. Ethical Challenges and Solutions of Generative AI: An Interdisciplinary Perspective. Informatics 2024, 11, 58.

- Cools, H.; Diakopoulos, N. Uses of Generative AI in the Newsroom: Mapping Journalists’ Perceptions of Perils and Possibilities. J. Pract. 2024, 1–19.

- Bayer, J. Legal implications of using generative AI in the media. Inf. Commun. Technol. Law 2024, 33, 310–329.

- Pavlik, J.V. Collaborating With ChatGPT: Considering the Implications of Generative Artificial Intelligence for Journalism and Media Education. J. Mass Commun. Educ. 2023, 78, 84–93.

- Gondwe, G. CHATGPT and the Global South: how are journalists in sub-Saharan Africa engaging with generative AI? Online Media Glob. Commun. 2023, 2, 228–249.

- Marconi, F. Newsmakers: Artificial intelligence and the future of journalism. 2020. https://www.tandfonline.com/doi/pdf/10.1080/17512786.2024.2394558

- Jamil, S. Artificial intelligence and journalistic practice: The crossroads of obstacles and opportunities for the Pakistani journalists. Journalism Practice 2021. https://www.researchgate.net/profile/Sadia-Jamil-4/publication/342749870_Artificial_Intelligence_and_Journalistic_Practice_The_Crossroads_of_Obstacles_and_Opportunities_for_the_Pakistani_Journalists/links/606b1af8a6fdccad3f7525f4/Artificial-Intelligence-and-Journalistic-Practice-The-Crossroads-of-Obstacles-and-Opportunities-for-the-Pakistani-Journalists.pdf

- Simon, F.M. Uneasy Bedfellows: AI in the News, Platform Companies and the Issue of Journalistic Autonomy. Digit. Journal. 2022, 10, 1832–1854.

- Chow, J.C.L.; Sanders, L.; Li, K. Impact of ChatGPT on medical chatbots as a disruptive technology. Front. Artif. Intell. 2023, 6.

- Dwivedi, Y.K.; Kshetri, N.; Hughes, L.; Slade, E.L.; Jeyaraj, A.; Kar, A.K.; ... Wright, R. Opinion Paper: “So what if ChatGPT wrote it?” Multidisciplinary perspectives on opportunities, challenges and implications of generative conversational AI for research, practice and policy. Int. J. Inf. Manag. 2023, 71, 102642.

- Getchell, K.M.; Carradini, S.; Cardon, P.W.; Fleischmann, C.; Ma, H.; Aritz, J.; Stapp, J. Artificial Intelligence in Business Communication: The Changing Landscape of Research and Teaching. Bus. Prof. Commun. Q. 2022, 85, 7–33.

- Perkins, M.; Roe, J. Academic publisher guidelines on AI usage: A ChatGPT supported thematic analysis. F1000Research 2024. https://pmc.ncbi.nlm.nih.gov/articles/PMC10844801/

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).