Submitted:

25 November 2024

Posted:

27 November 2024

Abstract

Keywords:

1. Introduction

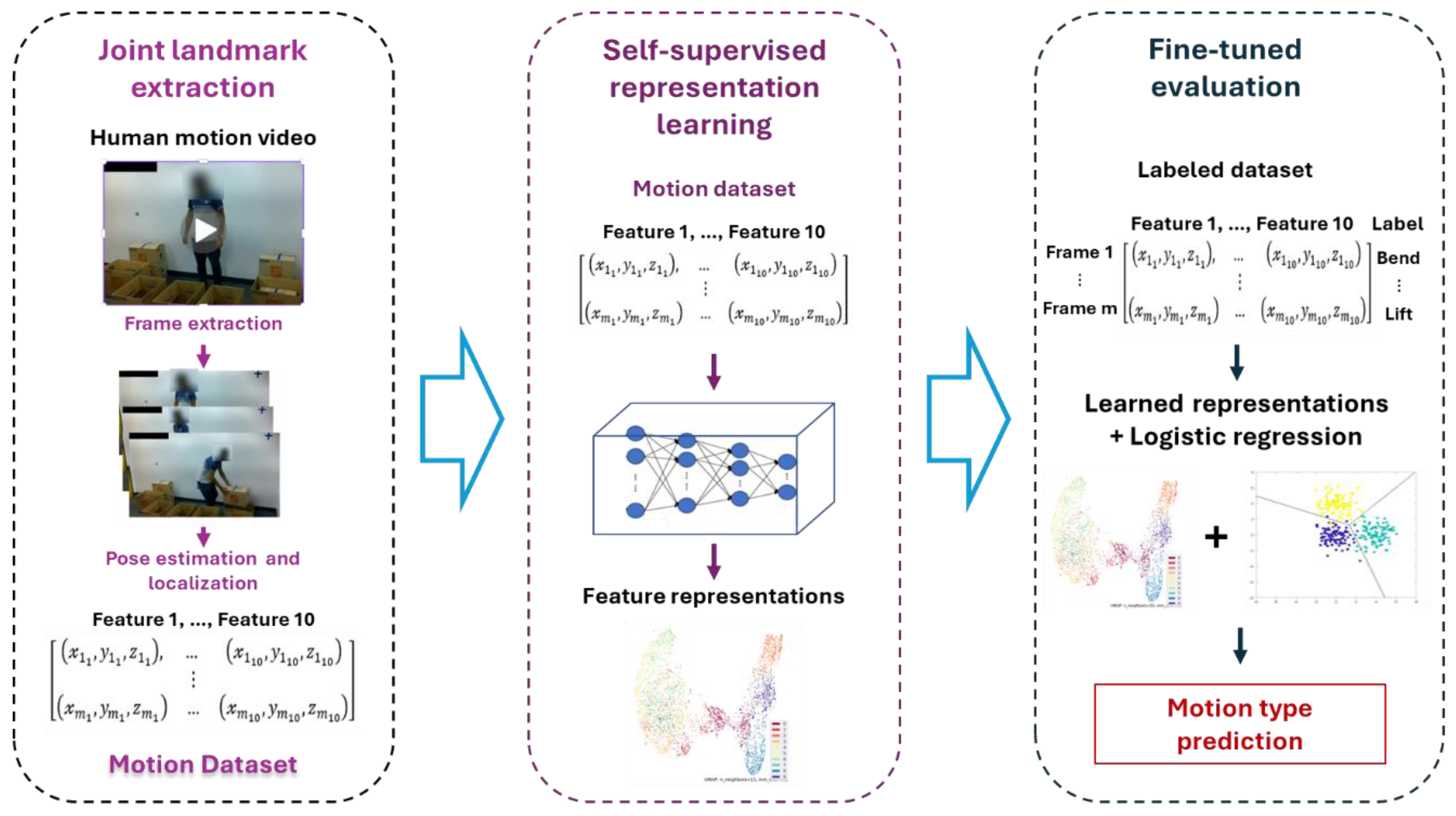

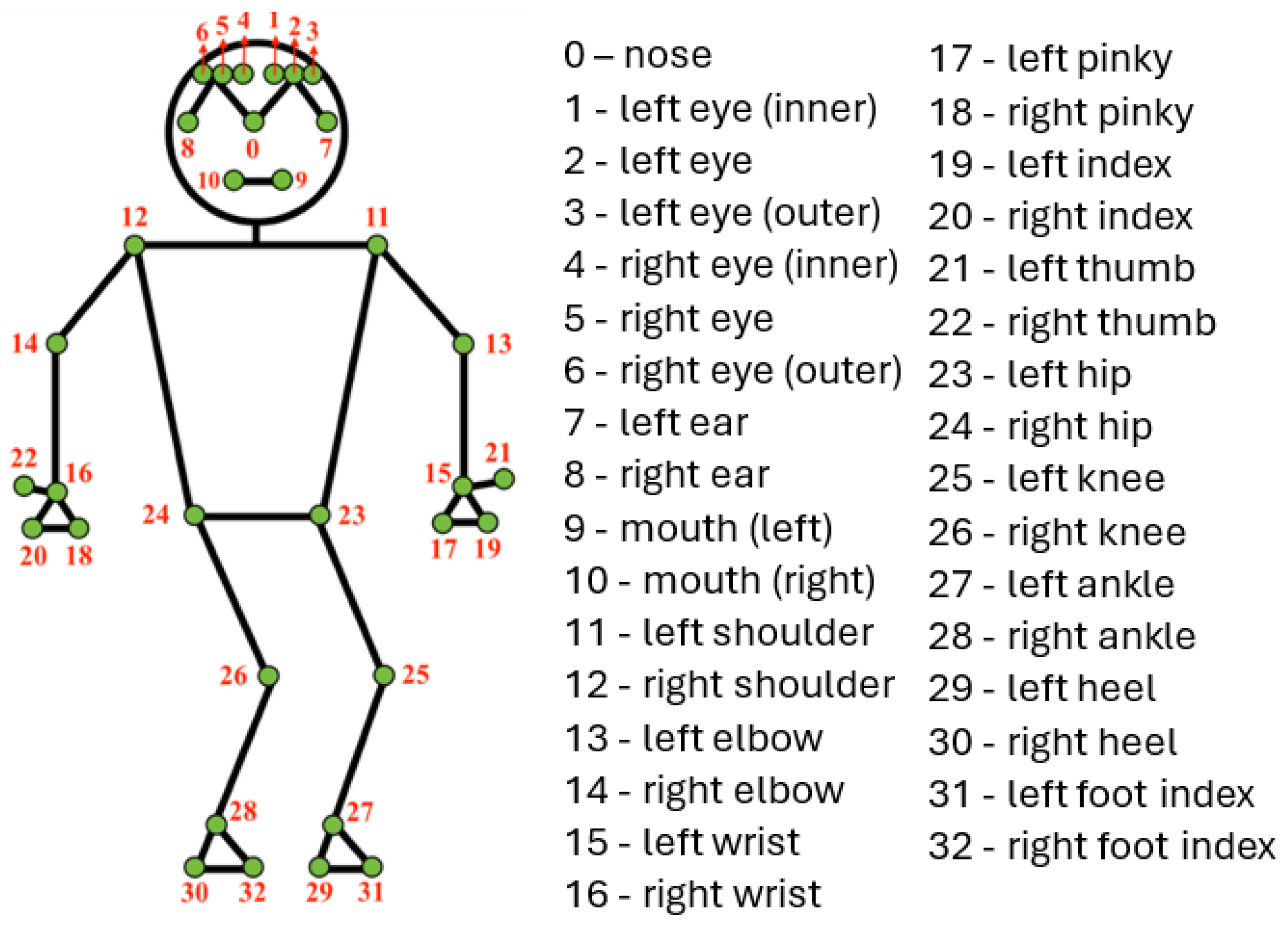

- Joint tracking by Computer Vision (CV). We remove the effect of the complex operational environment by directly extracting the coordinates of specific body joints using CV techniques, specifically MediaPipe Pose (MPP) [19]. MPP is an open-source framework developed by Google for estimating high-fidelity 2D and 3D coordinates of body joints. It uses BlazePose [20], a lightweight pose estimation network, to detect and track 33 3D body landmarks from videos or images. From the body landmarks identified by MPP, we select key landmarks based on input from ergonomics experts to form the initial features relevant to various motion types. Extracting joint information in this way also preserves privacy, because the model learns from joint coordinates instead of raw image data. Additionally, MPP is scale- and size-invariant [19], which enables it to handle variations in body size and height.

- SimCLR feature embedding. We address the data bottleneck using a contrastive self-supervised learning (SSL) approach that learns representations directly from camera data without requiring extensive manual labeling. Specifically, we use SimCLR [21], an SSL method that learns features by maximizing agreement between different augmented views of the same sample using a contrastive loss. We use SimCLR to learn embeddings and identify meaningful patterns and similarities within the extracted joint data depicting various motion categories. The learned representations are then used in a downstream task to identify specific motion types.

- Classification for motion recognition and anomaly detection. Lastly, we leverage the representations learned during contrastive learning (CL) training for a downstream classification task involving different motion categories. We train a simple logistic regression model on top of the learned representations to identify different motion categories in a few-shot learning [22] setting. This demonstrates the robustness and generalizability of the learned representations to downstream tasks. Additionally, we perform outlier analysis by evaluating the ability of our framework to identify out-of-distribution data. We introduce different numbers of outliers with varying deviations from the classes of interest and measure both the framework's ability to identify these outliers and their effect on its discriminative ability.
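The landmark selection described in the first item above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the ten indices are taken from the landmark table in this document, while the function name `select_landmarks`, the flat `(x, y, z)` feature layout, and the synthetic frame are assumptions made for the example.

```python
# Indices of the ten MediaPipe Pose landmarks listed in this paper's
# landmark table (shoulders, elbows, wrists, hips, knees).
SELECTED_LANDMARKS = {
    11: "Left Shoulder", 12: "Right Shoulder",
    13: "Left Elbow", 14: "Right Elbow",
    15: "Left Wrist", 16: "Right Wrist",
    23: "Left Hip", 24: "Right Hip",
    25: "Left Knee", 26: "Right Knee",
}


def select_landmarks(frame_landmarks):
    """Flatten the selected joints of one frame into a feature vector.

    frame_landmarks: sequence of 33 (x, y, z) tuples, one per MPP landmark.
    Returns a flat list [x11, y11, z11, x12, ...] of length 30.
    The flat layout is an assumption of this sketch.
    """
    if len(frame_landmarks) != 33:
        raise ValueError("expected 33 landmarks per frame")
    features = []
    for idx in sorted(SELECTED_LANDMARKS):
        x, y, z = frame_landmarks[idx]
        features.extend((x, y, z))
    return features


# Hypothetical frame: landmark i sits at (i, i + 0.1, i + 0.2).
frame = [(float(i), i + 0.1, i + 0.2) for i in range(33)]
features = select_landmarks(frame)
```

Because only coordinates are kept, the raw image never needs to be stored, which is the privacy property noted above.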

2. Literature Review

2.1. Unobtrusive Human Motion Monitoring

2.2. Self-Supervised Learning

2.3. Section Summary

3. Method Development

3.1. CLUMM Training

3.1.1. Pose Landmark Extraction

3.1.2. Contrastive Self-Supervised Representation Learning

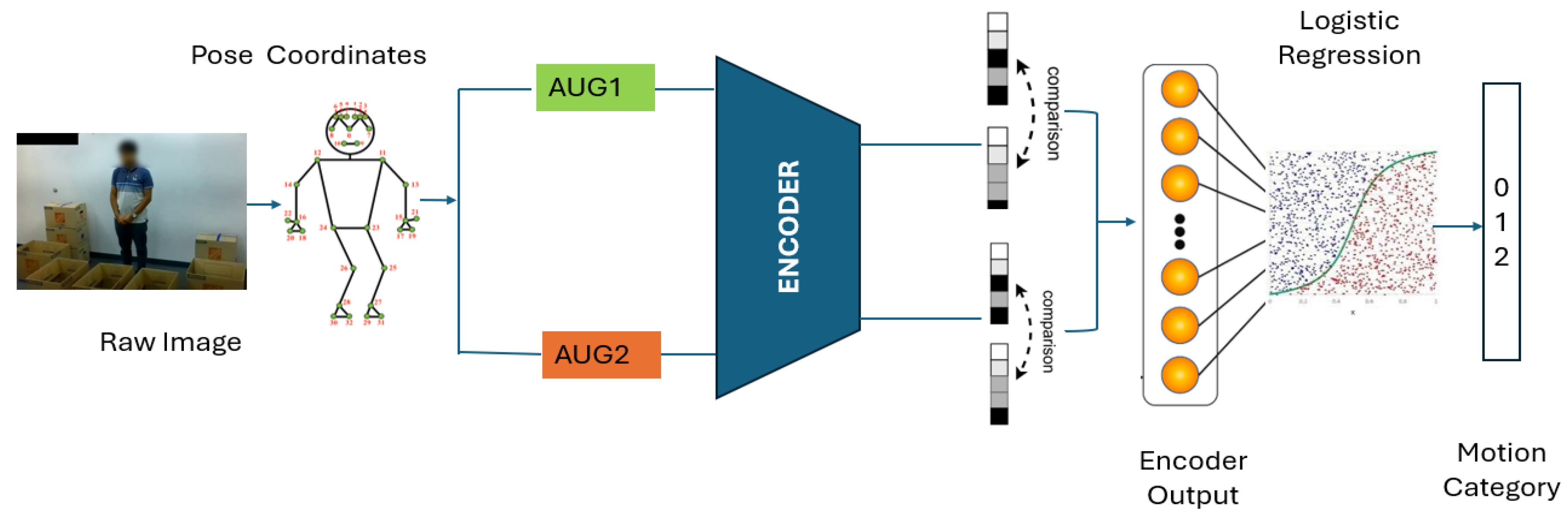

- Random augmentation module: This module applies random data augmentations to each input sample $x$. It performs two transformations on a single sample, resulting in two correlated views, $\tilde{x}_i$ and $\tilde{x}_j$, which are treated as a positive pair.

- Encoder module $f(\cdot)$: This module uses a neural network to extract latent space encodings of the augmented samples $\tilde{x}_i$ and $\tilde{x}_j$. The encoder is model agnostic, allowing various network designs to be used. The encoder produces outputs $h_i = f(\tilde{x}_i)$ and $h_j = f(\tilde{x}_j)$, where $f(\cdot)$ is the encoder network.

- Projector head $g(\cdot)$: This small neural network maps the encoded representations into a space where a contrastive loss is applied to maximize the agreement between the views [16]. A multi-layer perceptron is used, which produces outputs $z_i = g(h_i)$ and $z_j = g(h_j)$, where $g(\cdot)$ represents the projector head and $h$ represents the output of the encoder module.

- Contrastive loss function: This learning objective maximizes the agreement between positive pairs.
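To make the last module concrete, the sketch below computes the normalized temperature-scaled cross-entropy (NT-Xent) loss used by SimCLR [21] on a small batch of projected views. It assumes that the framework follows SimCLR's loss; the function names, the pure-Python list representation, and the temperature of 0.5 are illustrative choices, not values taken from this paper.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity of two non-zero vectors given as lists."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def nt_xent_loss(z_a, z_b, temperature=0.5):
    """NT-Xent loss over a batch of positive pairs.

    z_a[k] and z_b[k] are the projections of the two augmented views
    of sample k. Every other projection in the batch acts as a negative.
    """
    z = list(z_a) + list(z_b)
    n = len(z_a)
    total = 0.0
    for i in range(2 * n):
        j = (i + n) % (2 * n)  # index of the positive partner of i
        logits = [cosine_similarity(z[i], z[k]) / temperature
                  for k in range(2 * n) if k != i]
        positive = cosine_similarity(z[i], z[j]) / temperature
        log_denominator = math.log(sum(math.exp(v) for v in logits))
        total += log_denominator - positive
    return total / (2 * n)


# Two samples whose views agree, versus the same views paired wrongly.
views = [[1.0, 0.0], [0.0, 1.0]]
swapped = [[0.0, 1.0], [1.0, 0.0]]
aligned_loss = nt_xent_loss(views, views)
misaligned_loss = nt_xent_loss(views, swapped)
```

The loss is lower when the two views of each sample map to similar projections, which is the sense in which the objective maximizes agreement between positive pairs.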

A. Data Augmentation

- Random jitter: We apply random jitter to the input samples using a noise signal drawn from a normal distribution with a mean of zero and a standard deviation of 0.5, i.e., $\epsilon \sim \mathcal{N}(0, 0.5^2)$. Thus, for each input sample $x$, we obtain $\tilde{x} = x + \epsilon$.

- Random scaling: We scale samples with a random factor drawn from a normal distribution with a mean of zero and a standard deviation of 0.2, i.e., $s \sim \mathcal{N}(0, 0.2^2)$. Therefore, for each input sample $x$, we obtain $\tilde{x} = s \cdot x$.
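The two augmentations above can be sketched in a few lines. The distribution parameters follow the text (standard deviation 0.5 for jitter; mean zero and standard deviation 0.2 for scaling). Several details are assumptions of this sketch rather than statements from the paper: noise is drawn independently per coordinate, one scaling factor is drawn per sample, and each view is produced by jitter followed by scaling.

```python
import random


def random_jitter(sample, sigma=0.5, rng=random):
    """Add zero-mean Gaussian noise to every coordinate of a sample."""
    return [x + rng.gauss(0.0, sigma) for x in sample]


def random_scaling(sample, mean=0.0, sigma=0.2, rng=random):
    """Multiply the whole sample by a single random factor.

    The default mean of zero follows the text; it is exposed as a
    parameter so other settings can be tried.
    """
    factor = rng.gauss(mean, sigma)
    return [factor * x for x in sample]


def make_positive_pair(sample, rng=random):
    """Create two correlated views of one sample (assumed composition)."""
    def augment(s):
        return random_scaling(random_jitter(s, rng=rng), rng=rng)
    return augment(sample), augment(sample)


# Hypothetical flattened landmark vector with three coordinates.
rng = random.Random(0)
view_1, view_2 = make_positive_pair([1.0, 2.0, 3.0], rng)
```

Passing a seeded `random.Random` instance, as in the usage lines, keeps the augmentations reproducible across runs.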

B. Encoder Module

C. Projector Head

D. Contrastive Loss Function

Algorithm 1: CLUMM feature representation learning

E. Finetuning with SoftMax Logistic Regression
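As a rough illustration of the classifier named in this heading, the sketch below shows the forward pass and loss of a softmax (multinomial) logistic regression applied to a fixed embedding. The weights, biases, and embedding are hypothetical values chosen for the example, and the training loop is omitted; this is not the authors' configuration.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_proba(embedding, weights, biases):
    """Class probabilities for one embedding.

    weights holds one row per class; biases holds one value per class.
    """
    logits = [sum(w * h for w, h in zip(row, embedding)) + b
              for row, b in zip(weights, biases)]
    return softmax(logits)


def cross_entropy(probabilities, label):
    """Negative log-likelihood of the true class."""
    return -math.log(probabilities[label])


# Hypothetical 2-D embedding and a three-class linear head.
embedding = [1.0, 0.0]
weights = [[2.0, 0.0], [0.0, 2.0], [0.0, 0.0]]
biases = [0.0, 0.0, 0.0]
probs = predict_proba(embedding, weights, biases)
loss = cross_entropy(probs, 0)
```

Only the linear head would be trained in such a setup, which is why a few labeled examples per class can suffice when the embeddings are already well separated.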

3.2. Impact of Outliers on CLUMM Performance

1. The average distance from all points in a cluster to their respective centroid.

2. The maximum distance of any point in a cluster to the centroid.
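Both quantities can be computed directly from the points of a cluster. The sketch below assumes Euclidean distance and a centroid defined as the coordinate-wise mean, neither of which is specified in the surviving text; the function names and the sample points are illustrative.

```python
import math


def centroid(points):
    """Coordinate-wise mean of a non-empty list of equal-length points."""
    dim = len(points[0])
    return [sum(p[d] for p in points) / len(points) for d in range(dim)]


def distance_stats(points):
    """Return (mean distance, max distance) from the points to their centroid.

    Euclidean distance is an assumption of this sketch.
    """
    c = centroid(points)
    distances = [math.dist(p, c) for p in points]
    return sum(distances) / len(distances), max(distances)


# Hypothetical cluster: three coincident points and one far point.
cluster = [(0.0, 0.0), (0.0, 0.0), (0.0, 0.0), (4.0, 0.0)]
mean_distance, max_distance = distance_stats(cluster)
```

In this example the single distant point raises the maximum distance to twice the mean, which shows why the two measures are reported separately.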

3.3. Summary of CLUMM

3.4. Note to Practitioners

4. Case Study

4.1. Data Preparation

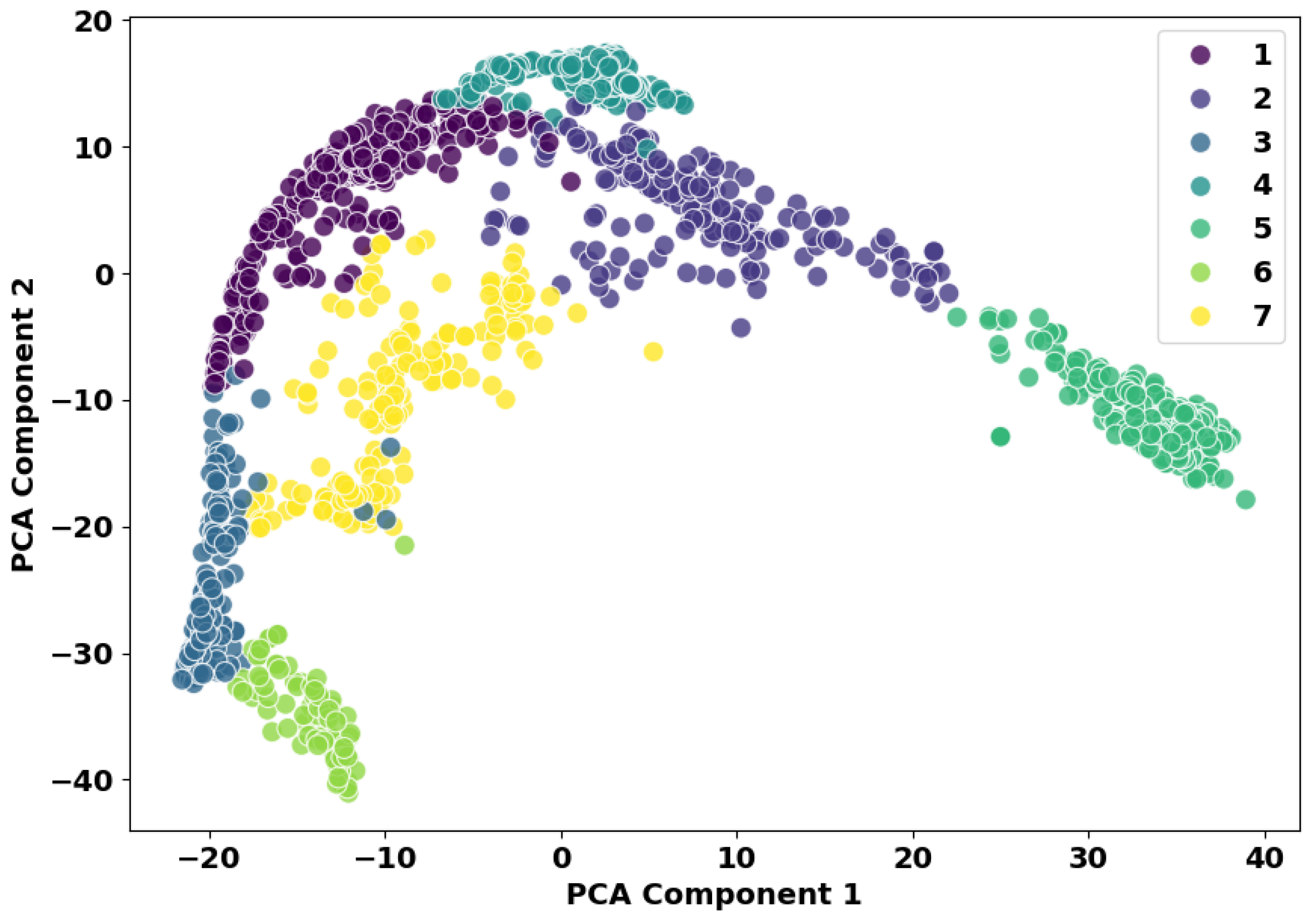

4.2. Human Motion Recognition with CLUMM

4.3. Baseline Evaluation

4.5. Results from Outlier Analysis

5. Conclusion and Future Work

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. C. I. Tang, I. Perez-Pozuelo, D. Spathis, and C. Mascolo, “Exploring Contrastive Learning in Human Activity Recognition for Healthcare,” Nov. 2020, [Online]. Available: http://arxiv.org/abs/2011.11542.

2. Y. Wang, S. Cang, and H. Yu, “A survey on wearable sensor modality centred human activity recognition in health care,” Dec. 15, 2019, Elsevier Ltd.

3. D. V. Thiel and A. K. Sarkar, “Swing Profiles in Sport: An Accelerometer Analysis,” Procedia Eng, vol. 72, pp. 624–629, 2014.

4. H. Liu and L. Wang, “Human motion prediction for human-robot collaboration,” J Manuf Syst, vol. 44, pp. 287–294, Jul. 2017.

5. H. Iyer, N. Macwan, S. Guo, and H. Jeong, “Computer-Vision-Enabled Worker Video Analysis for Motion Amount Quantification,” May 2024, [Online]. Available: http://arxiv.org/abs/2405.13999.

6. J. M. Fernandes, J. S. Silva, A. Rodrigues, and F. Boavida, “A Survey of Approaches to Unobtrusive Sensing of Humans,” Mar. 01, 2023, Association for Computing Machinery.

7. C. Pham, N. N. Diep, and T. M. Phuonh, “e-Shoes: Smart Shoes for Unobtrusive Human Activity Recognition,” IEEE, 2017.

8. A. Rezaei, M. C. Stevens, A. Argha, A. Mascheroni, A. Puiatti, and N. H. Lovell, “An Unobtrusive Human Activity Recognition System Using Low Resolution Thermal Sensors, Machine and Deep Learning,” IEEE Trans Biomed Eng, vol. 70, no. 1, pp. 115–124, Jan. 2023.

9. C. Yu, Z. Xu, K. Yan, Y. R. Chien, S. H. Fang, and H. C. Wu, “Noninvasive Human Activity Recognition Using Millimeter-Wave Radar,” IEEE Syst J, vol. 16, no. 2, pp. 3036–3047, Jun. 2022.

10. M. Gochoo, T. H. Tan, S. H. Liu, F. R. Jean, F. S. Alnajjar, and S. C. Huang, “Unobtrusive Activity Recognition of Elderly People Living Alone Using Anonymous Binary Sensors and DCNN,” IEEE J Biomed Health Inform, vol. 23, no. 2, pp. 693–702, Mar. 2019.

11. K. A. Muthukumar, M. Bouazizi, and T. Ohtsuki, “A Novel Hybrid Deep Learning Model for Activity Detection Using Wide-Angle Low-Resolution Infrared Array Sensor,” IEEE Access, vol. 9, pp. 82563–82576, 2021.

12. K. Takenaka, K. Kondo, and T. Hasegawa, “Segment-Based Unsupervised Learning Method in Sensor-Based Human Activity Recognition,” Sensors (Basel), vol. 23, no. 20, Oct. 2023.

13. I. H. Sarker, “Deep Learning: A Comprehensive Overview on Techniques, Taxonomy, Applications and Research Directions,” Nov. 01, 2021, Springer.

14. S. Lapuschkin, S. Wäldchen, A. Binder, G. Montavon, W. Samek, and K. R. Müller, “Unmasking Clever Hans predictors and assessing what machines really learn,” Nat Commun, vol. 10, no. 1, Dec. 2019.

15. J. Gui et al., “A Survey on Self-supervised Learning: Algorithms, Applications, and Future Trends,” Jan. 2023, [Online]. Available: http://arxiv.org/abs/2301.05712.

16. R. Balestriero et al., “A Cookbook of Self-Supervised Learning,” Apr. 2023, [Online]. Available: http://arxiv.org/abs/2304.12210.

17. H. Haresamudram et al., “Masked reconstruction based self-supervision for human activity recognition,” in Proceedings - International Symposium on Wearable Computers, ISWC, Association for Computing Machinery, Sep. 2020, pp. 45–49.

18. H. Haresamudram, I. Essa, and T. Plötz, “Assessing the State of Self-Supervised Human Activity Recognition Using Wearables,” Proc ACM Interact Mob Wearable Ubiquitous Technol, vol. 6, no. 3, Sep. 2022.

19. C. Lugaresi et al., “MediaPipe: A Framework for Building Perception Pipelines,” Jun. 2019, [Online]. Available: http://arxiv.org/abs/1906.08172.

20. V. Bazarevsky, I. Grishchenko, K. Raveendran, T. Zhu, F. Zhang, and M. Grundmann, “BlazePose: On-device Real-time Body Pose tracking,” Jun. 2020, [Online]. Available: http://arxiv.org/abs/2006.10204.

21. T. Chen, S. Kornblith, M. Norouzi, and G. Hinton, “A Simple Framework for Contrastive Learning of Visual Representations,” Feb. 2020, [Online]. Available: http://arxiv.org/abs/2002.05709.

22. A. Parnami and M. Lee, “Learning from Few Examples: A Summary of Approaches to Few-Shot Learning,” Mar. 2022, [Online]. Available: http://arxiv.org/abs/2203.04291.

23. C. Chen, R. Jafari, and N. Kehtarnavaz, “A survey of depth and inertial sensor fusion for human action recognition,” Multimed Tools Appl, vol. 76, no. 3, pp. 4405–4425, Feb. 2017.

24. M. Al-Amin, R. Qin, W. Tao, and M. C. Leu, “Sensor Data Based Models for Workforce Management in Smart Manufacturing.”

25. Y. Wang, S. Cang, and H. Yu, “A survey on wearable sensor modality centred human activity recognition in health care,” Dec. 15, 2019, Elsevier Ltd.

26. M. Al-Amin et al., “Action recognition in manufacturing assembly using multimodal sensor fusion,” in Procedia Manufacturing, Elsevier B.V., 2019, pp. 158–167.

27. S. Chung, J. Lim, K. J. Noh, G. Kim, and H.-T. Jeong, “Sensor Data Acquisition and Multimodal Sensor Fusion for Human Activity Recognition Using Deep Learning,” Sensors, 2019.

28. L. Yao et al., “Compressive Representation for Device-Free Activity Recognition with Passive RFID Signal Strength,” IEEE Trans Mob Comput, vol. 17, no. 2, pp. 293–306, Feb. 2018.

29. Z. Luo, Y. Zou, V. Tech, J. Hoffman, and L. Fei-Fei, “Label Efficient Learning of Transferable Representations across Domains and Tasks.”

30. Y. Karayaneva, S. Baker, B. Tan, and Y. Jing, “Use of Low-Resolution Infrared Pixel Array for Passive Human Motion Movement and Recognition,” BCS Learning & Development, 2018.

31. A. D. Singh, S. S. Sandha, L. Garcia, and M. Srivastava, “Radhar: Human activity recognition from point clouds generated through a millimeter-wave radar,” in Proceedings of the Annual International Conference on Mobile Computing and Networking, MOBICOM, Association for Computing Machinery, Oct. 2019, pp. 51–56.

32. M. Möncks, J. Roche, and V. De Silva, “Adaptive Feature Processing for Robust Human Activity Recognition on a Novel Multi-Modal Dataset.”

33. H. Foroughi, B. Shakeri Aski, and H. Pourreza, “Intelligent Video Surveillance for Monitoring Fall Detection of Elderly in Home Environments.”

34. J. Heikenfeld et al., “Wearable sensors: modalities, challenges, and prospects,” Lab Chip, vol. 18, no. 2, pp. 217–248, 2018.

35. N. T. Newaz and E. Hanada, “A Low-Resolution Infrared Array for Unobtrusive Human Activity Recognition That Preserves Privacy,” Sensors, vol. 24, no. 3, Feb. 2024.

36. S. Nehra and J. L. Raheja, “Unobtrusive and Non-Invasive Human Activity Recognition using Kinect Sensor,” IEEE, 2020.

37. H. Li, C. Wan, R. C. Shah, P. A. Sample, and S. N. Patel, “IDAct: Towards Unobtrusive Recognition of User Presence and Daily Activities,” Institute of Electrical and Electronics Engineers, 2019, p. 245.

38. G. A. Oguntala et al., “SmartWall: Novel RFID-Enabled Ambient Human Activity Recognition Using Machine Learning for Unobtrusive Health Monitoring,” IEEE Access, vol. 7, pp. 68022–68033, 2019.

39. I. Alrashdi, M. H. Siddiqi, Y. Alhwaiti, M. Alruwaili, and M. Azad, “Maximum Entropy Markov Model for Human Activity Recognition Using Depth Camera,” IEEE Access, vol. 9, pp. 160635–160645, 2021.

40. H. Wu, W. Pan, X. Xiong, and S. Xu, “Human Activity Recognition Based on the Combined SVM&HMM,” in Proceeding of the IEEE International Conference on Information and Automation, IEEE, 2014, p. 1317.

41. Y. LeCun, Y. Bengio, and G. Hinton, “Deep learning,” May 27, 2015, Nature Publishing Group.

42. B. Açış and S. Güney, “Classification of human movements by using Kinect sensor,” Biomed Signal Process Control, vol. 81, Mar. 2023.

43. R. G. Ramos, J. D. Domingo, E. Zalama, and J. Gómez-García-Bermejo, “Daily human activity recognition using non-intrusive sensors,” Sensors, vol. 21, no. 16, Aug. 2021.

44. P. Choudhary, P. Kumari, N. Goel, and M. Saini, “An Audio-Seismic Fusion Framework for Human Activity Recognition in an Outdoor Environment,” IEEE Sens J, vol. 22, no. 23, pp. 22817–22827, Dec. 2022.

45. J. Quero, M. Burns, M. Razzaq, C. Nugent, and M. Espinilla, “Detection of Falls from Non-Invasive Thermal Vision Sensors Using Convolutional Neural Networks,” MDPI AG, Oct. 2018, p. 1236.

46. H. Iyer and H. Jeong, “PE-USGC: Posture Estimation-Based Unsupervised Spatial Gaussian Clustering for Supervised Classification of Near-Duplicate Human Motion,” IEEE Access, vol. 12, pp. 163093–163108, 2024.

47. E. S. Rahayu, E. M. Yuniarno, I. K. E. Purnama, and M. H. Purnomo, “Human activity classification using deep learning based on 3D motion feature,” Machine Learning with Applications, vol. 12, p. 100461, Jun. 2023.

48. G. Huang, Z. Liu, L. Van Der Maaten, and K. Q. Weinberger, “Densely Connected Convolutional Networks.” [Online]. Available: https://github.com/liuzhuang13/DenseNet.

49. F. Chollet, “Xception: Deep Learning with Depthwise Separable Convolutions.”

50. K. He, X. Zhang, S. Ren, and J. Sun, “Deep Residual Learning for Image Recognition,” Dec. 2015, [Online]. Available: http://arxiv.org/abs/1512.03385.

51. K. Wickstrøm, M. Kampffmeyer, K. Ø. Mikalsen, and R. Jenssen, “Mixing up contrastive learning: Self-supervised representation learning for time series,” Pattern Recognit Lett, vol. 155, pp. 54–61, Mar. 2022.

52. S. Liu et al., “Audio Self-supervised Learning: A Survey,” Mar. 2022, [Online]. Available: http://arxiv.org/abs/2203.01205.

53. M. C. Schiappa, Y. S. Rawat, and M. Shah, “Self-Supervised Learning for Videos: A Survey,” Jun. 2022.

54. I. Goodfellow, Y. Bengio, and A. Courville, Deep Learning, in Adaptive Computation and Machine Learning series. MIT Press, 2016. [Online]. Available: https://books.google.com/books?id=omivDQAAQBAJ.

55. Z. Xie et al., “SimMIM: a Simple Framework for Masked Image Modeling.” [Online]. Available: https://github.com/microsoft/SimMIM.

56. W. Ye, G. Zheng, X. Cao, Y. Ma, and A. Zhang, “Spurious Correlations in Machine Learning: A Survey,” Feb. 2024, [Online]. Available: http://arxiv.org/abs/2402.12715.

57. A. Jaiswal, A. R. Babu, M. Z. Zadeh, D. Banerjee, and F. Makedon, “A Survey on Contrastive Self-supervised Learning,” Oct. 2020, [Online]. Available: http://arxiv.org/abs/2011.00362.

58. Y. Tian, C. Sun, B. Poole, D. Krishnan, C. Schmid, and P. Isola, “What Makes for Good Views for Contrastive Learning?,” May 2020, [Online]. Available: http://arxiv.org/abs/2005.10243.

59. K. Shah, D. Spathis, C. I. Tang, and C. Mascolo, “Evaluating Contrastive Learning on Wearable Timeseries for Downstream Clinical Outcomes,” Nov. 2021, [Online]. Available: http://arxiv.org/abs/2111.07089.

60. T. Chen, S. Kornblith, M. Norouzi, and G. Hinton, “A Simple Framework for Contrastive Learning of Visual Representations,” Feb. 2020, [Online]. Available: http://arxiv.org/abs/2002.05709.

61. C. Kwak and A. Clayton-Matthews, “Multinomial Logistic Regression,” Nurs Res, vol. 51, pp. 404–410, 2002, [Online]. Available: https://api.semanticscholar.org/CorpusID:21650170.

62. D. R. Cox, “The regression analysis of binary sequences,” Journal of the Royal Statistical Society: Series B (Methodological), vol. 20, no. 2, pp. 215–232, 1958.

63. S. Ji and Y. Xie, “Logistic Regression: From Binary to Multi-Class.”

64. S. Ruder, “An overview of gradient descent optimization algorithms,” Sep. 2016, [Online]. Available: http://arxiv.org/abs/1609.04747.

65. D. Hong, J. Wang, and R. Gardner, “Chapter 1 - Fundamentals,” in Real Analysis with an Introduction to Wavelets and Applications, D. Hong, J. Wang, and R. Gardner, Eds., Burlington: Academic Press, 2005, pp. 1–32.

66. H. Xuan, A. Stylianou, X. Liu, and R. Pless, “Hard Negative Examples are Hard, but Useful,” 2020, pp. 126–142.

| Pose number | Representation |
| --- | --- |
| 11 | Left Shoulder |
| 12 | Right Shoulder |
| 13 | Left Elbow |
| 14 | Right Elbow |
| 15 | Left Wrist |
| 16 | Right Wrist |
| 23 | Left Hip |
| 24 | Right Hip |
| 25 | Left Knee |
| 26 | Right Knee |

| Network | Accuracy | Precision | Recall | F1 Score |
| --- | --- | --- | --- | --- |
| ResNet18 Baseline | 79.6% | 0.801 | 0.797 | 0.796 |
| ResNet50 Baseline | 77.3% | 0.782 | 0.773 | 0.771 |
| CLUMM (ResNet50 Backbone) | 83.5% | 0.833 | 0.835 | 0.831 |
| CLUMM (ResNet18 Backbone) | 90.0% | 0.899 | 0.90 | 0.899 |

| Number of outlier images | Percentage of outlier landmarks | Mean outlier distance | Max outlier distance | Accuracy | Precision | Recall | F1 Score |
| --- | --- | --- | --- | --- | --- | --- | --- |
| None | - | - | - | 90.00% | 0.899 | 0.90 | 0.899 |
| 100 | 3.5% | 0.30 | 0.80 | 86.76% | 0.867 | 0.867 | 0.865 |
| 200 | 3.1% | 0.30 | 0.81 | 84.48% | 0.845 | 0.844 | 0.843 |
| 500 | 3% | 0.31 | 0.83 | 84.64% | 0.845 | 0.846 | 0.846 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).