Submitted: 25 November 2024

Posted: 26 November 2024

Abstract

Keywords:

1. Sleep Stage Interpretation and Analysis

2. Materials and Methods

2.1. Database Description

2.2. Generalized Probabilities of Ordinal Patterns

2.3. Data Analysis

3. Results

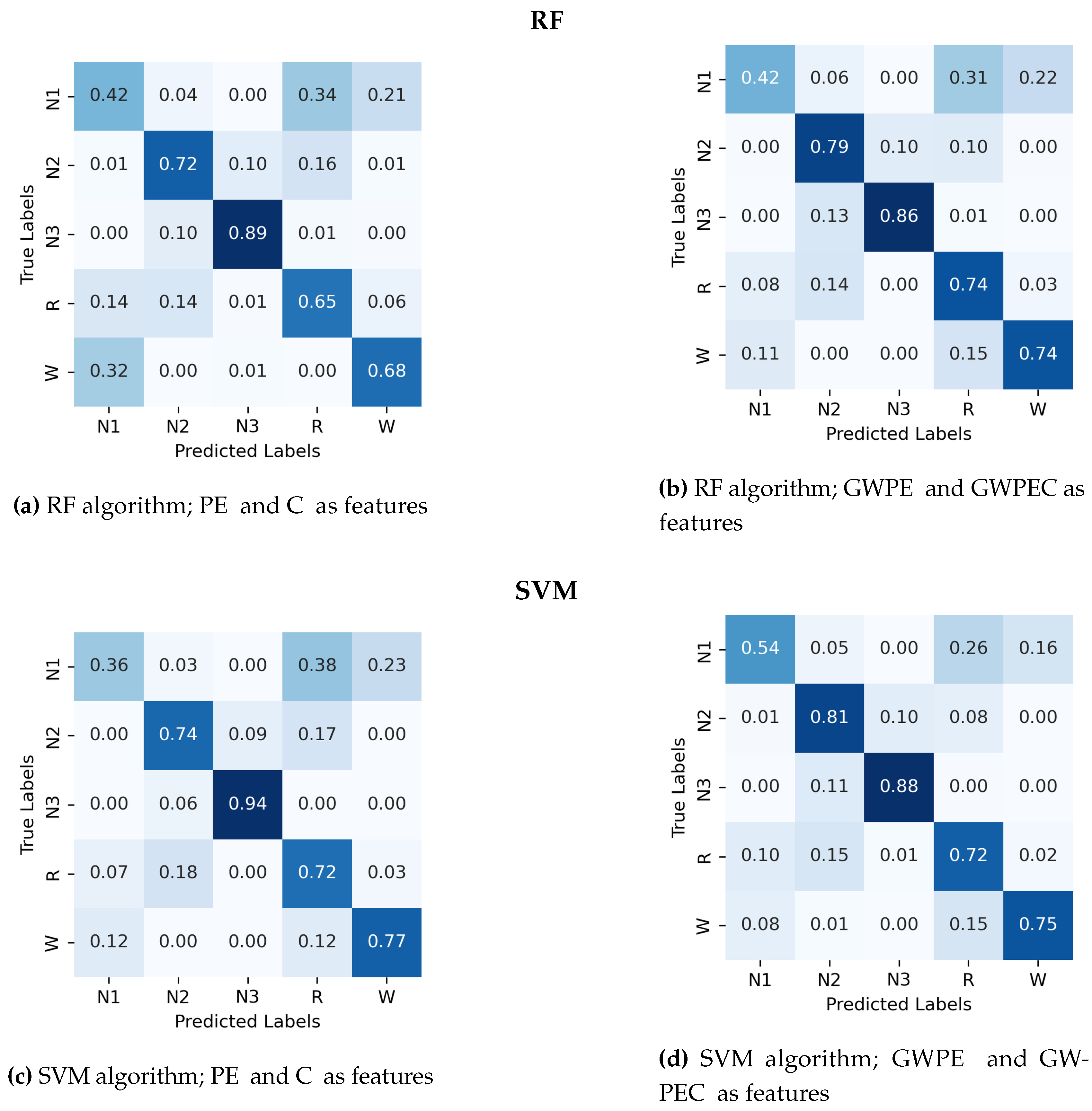

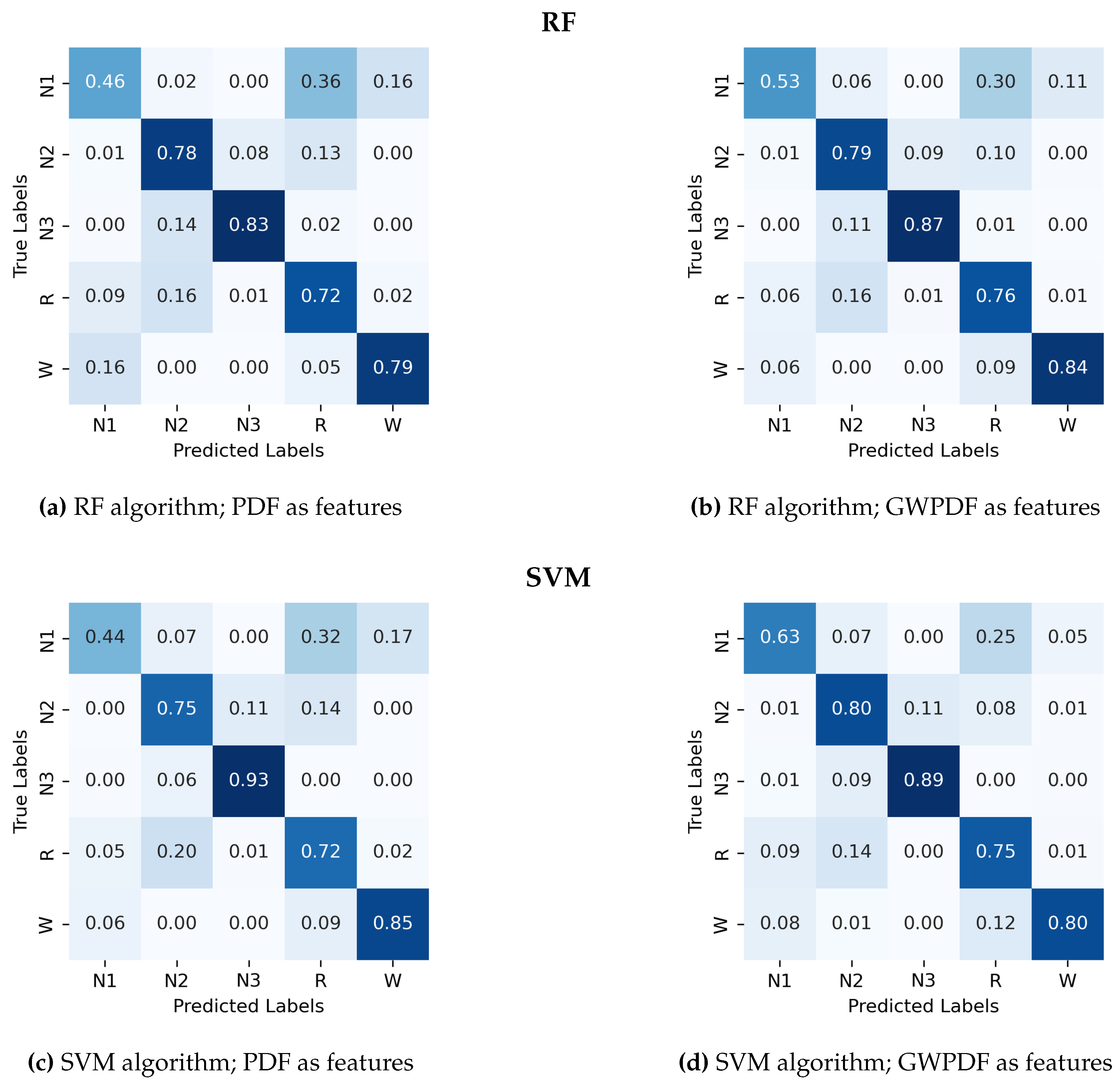

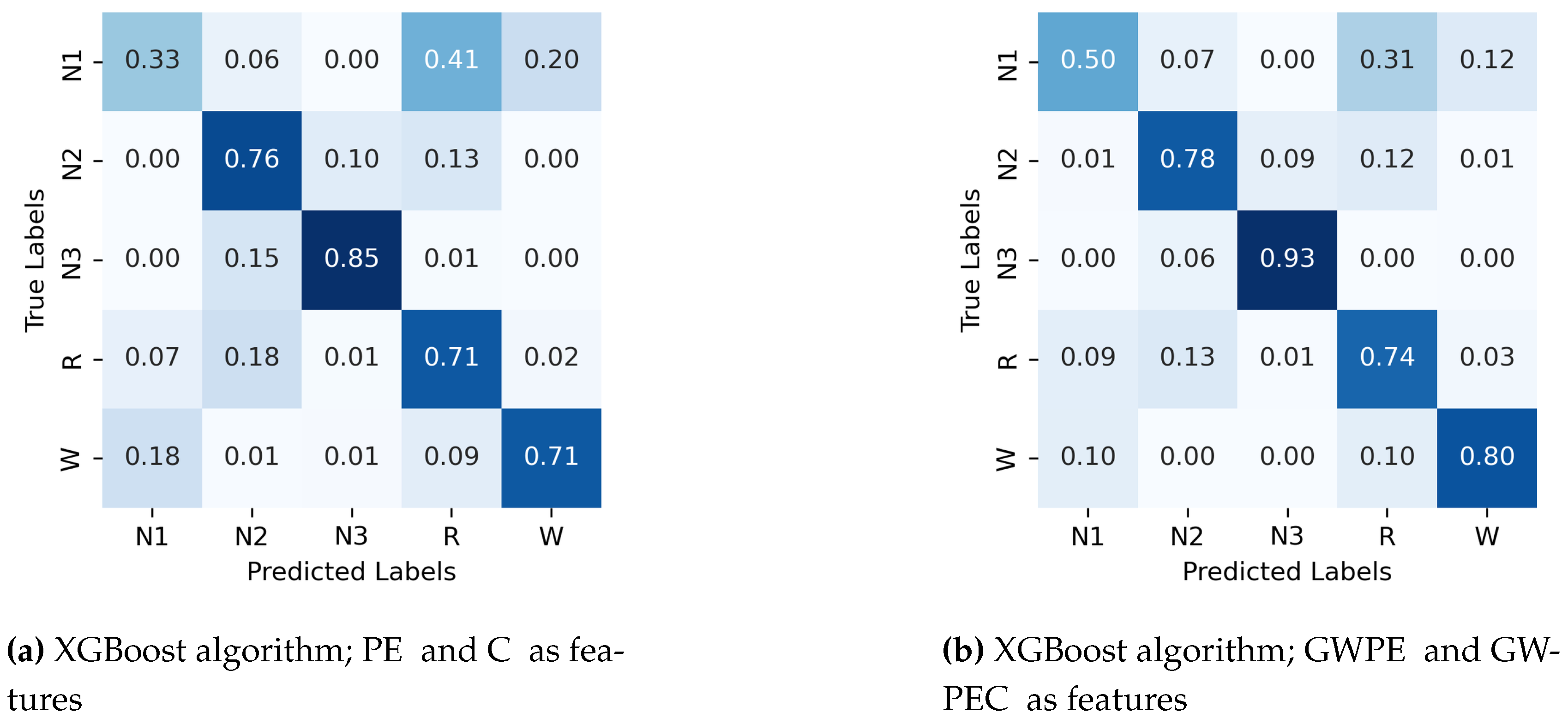

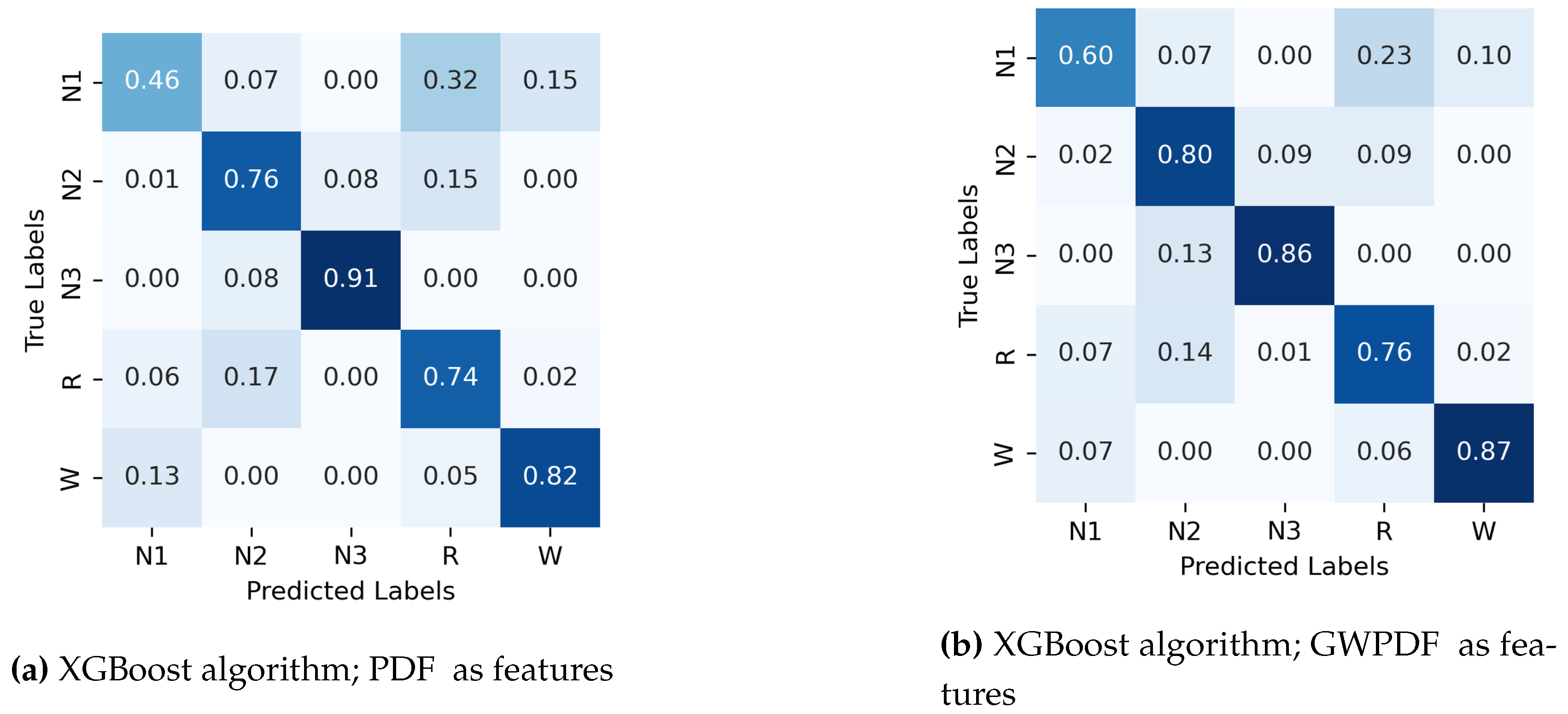

3.1. Classifier and Feature Set Comparison for Sleep Stage Classification

- Permutation entropy (PE) and complexity (C)

- Generalized weighted permutation entropy (GWPE) and complexity (GWPEC)

- The probability distribution function of the ordinal patterns (PDF)

- The generalized weighted probability distribution of the ordinal patterns (GWPDF)
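The four feature sets listed above all derive from the distribution of ordinal patterns in a signal. The following is a minimal Python sketch of how such features could be computed, not the authors' implementation. Several things here are assumptions made for illustration: the function names, the embedding dimension `m` and delay `tau`, the weighting of each window by its variance raised to `q / 2` (so that `q = 0` reduces to plain pattern counting), and the Jensen-Shannon form of the statistical complexity.

```python
import math
from itertools import permutations


def ordinal_distribution(x, m=3, tau=1, q=0.0):
    """Probabilities of the m! ordinal patterns of x, in itertools order.

    Assumption: each window is weighted by variance ** (q / 2);
    q = 0 gives the unweighted ordinal-pattern distribution.
    """
    totals = {p: 0.0 for p in permutations(range(m))}
    for i in range(len(x) - (m - 1) * tau):
        window = [x[i + k * tau] for k in range(m)]
        # Pattern = indices of the window sorted by value (ties keep index order).
        pattern = tuple(sorted(range(m), key=lambda k: window[k]))
        if q == 0:
            weight = 1.0
        else:
            mean = sum(window) / m
            var = sum((v - mean) ** 2 for v in window) / m
            if var == 0.0:
                continue  # constant window: skipped, weight is undefined for q < 0
            weight = var ** (q / 2)
        totals[pattern] += weight
    norm = sum(totals.values())
    if norm == 0.0:
        raise ValueError("series too short or constant")
    return [w / norm for w in totals.values()]


def shannon(p):
    """Shannon entropy (natural log) of a probability vector."""
    return -sum(v * math.log(v) for v in p if v > 0)


def normalized_entropy(p):
    """Entropy divided by its maximum log(len(p)); lies in [0, 1]."""
    return shannon(p) / math.log(len(p))


def statistical_complexity(p):
    """Normalized Jensen-Shannon divergence from uniform, times normalized entropy."""
    n = len(p)
    uniform = 1.0 / n
    mix = [(v + uniform) / 2 for v in p]
    js = shannon(mix) - shannon(p) / 2 - math.log(n) / 2
    # Largest possible divergence, reached when one pattern has probability 1.
    js_max = -0.5 * ((n + 1) / n * math.log(n + 1)
                     - 2 * math.log(2 * n) + math.log(n))
    return (js / js_max) * normalized_entropy(p)


# Toy signal standing in for an EEG epoch (hypothetical data).
signal = [math.sin(0.3 * t) + 0.1 * math.cos(2.1 * t) for t in range(200)]
for q in (-1, 0, 1, 2):
    pdf = ordinal_distribution(signal, m=3, tau=1, q=q)
    print(q, normalized_entropy(pdf), statistical_complexity(pdf))
```

Under these assumptions, the entropy and complexity pair at `q = 0` corresponds to the PE and C features, the same pair at other values of `q` to GWPE and GWPEC, and the probability vectors themselves to the PDF and GWPDF feature sets.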

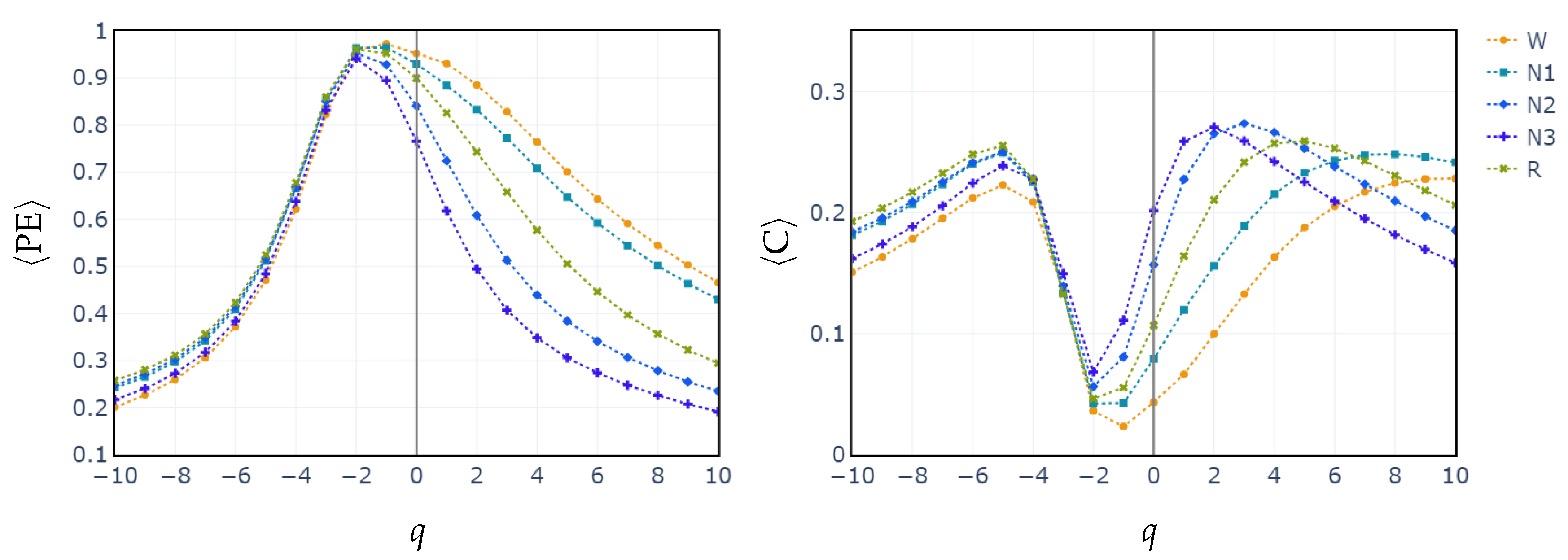

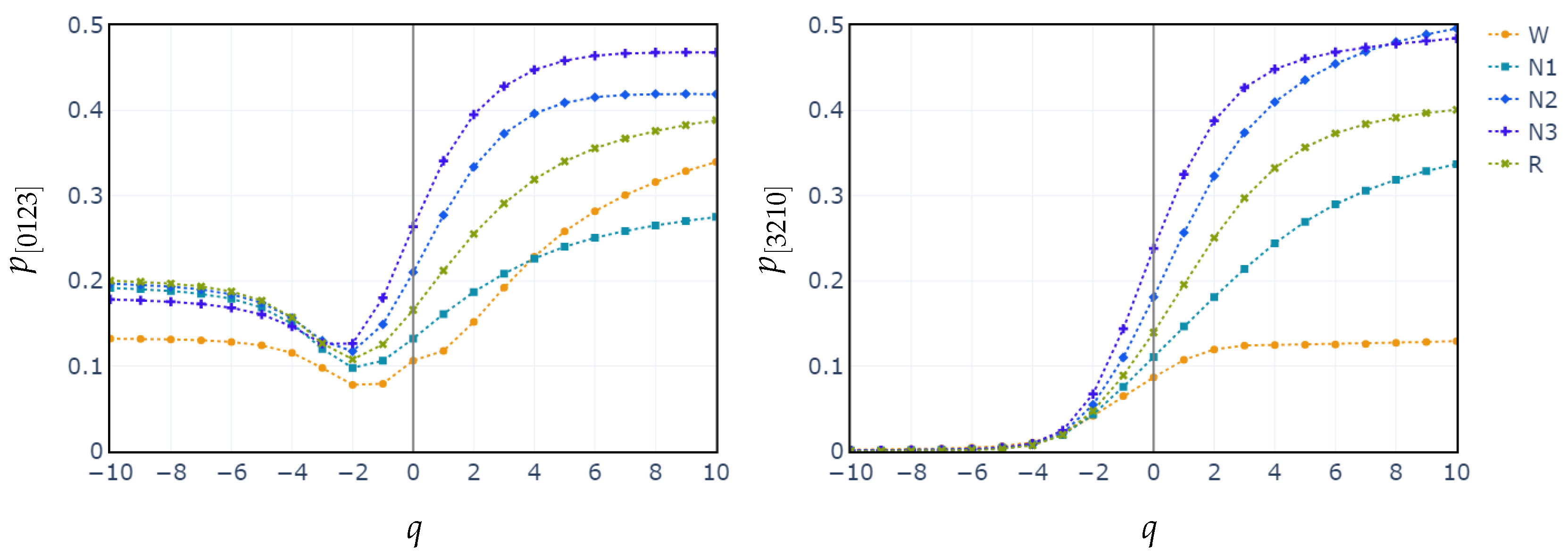

3.2. Behavior of PE and C vs. q and OPs vs. q

4. Discussion

5. Conclusion

Author Contributions

Funding

Conflicts of Interest

Appendix A.

Appendix A.1. Confusion Matrix for Random Forest and Support Vector Machines.

| Features | RF Acc | RF SD | SVM Acc | SVM SD | XGBoost Acc | XGBoost SD |
|---|---|---|---|---|---|---|
| PE and C | 0.71 | 0.01 | 0.72 | 0.01 | 0.71 | 0.01 |
| GWPE and GWPEC | 0.76 | 0.01 | 0.77 | 0.01 | 0.77 | 0.01 |
| PDF | 0.74 | 0.01 | 0.74 | 0.01 | 0.74 | 0.01 |
| GWPDF | 0.79 | 0.01 | 0.78 | 0.01 | 0.80 | 0.01 |

| OPs: Features | OPs: q | OPs: Relative Importance | GWPE and GWPEC: Features | GWPE and GWPEC: q | GWPE and GWPEC: Relative Importance |
|---|---|---|---|---|---|
| | 0 | 0.0868 | H | 1 | 0.1655 |
| | 1 | 0.0716 | H | 0 | 0.1028 |
| | -1 | 0.0355 | C | 3 | 0.0874 |
| | 2 | 0.0290 | C | 1 | 0.4366 |
| | 3 | 0.0286 | H | -1 | 0.0595 |
| | 1 | 0.0285 | C | 0 | 0.0416 |
| | 0 | 0.0210 | C | -1 | 0.0300 |
| | 2 | 0.0206 | H | 2 | 0.0277 |
| | 2 | 0.0204 | C | 2 | 0.0214 |
| | 3 | 0.0124 | C | 7 | 0.0208 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).