Major Design Experience Information

| Item | Details |
|---|---|
| Student Details | Y. Sudheer Babu, P. Jagan Mohan Reddy, P. Sasi Vardhan Reddy, N. Teja |
| Project Title | Smart Low-Light Image Enhancement for Emotion Detection |
| Program Concentration Area | Computer Science (or AI, Data Science, etc.) & Convolutional Neural Networks, Emotion Recognition, Low-Light Image Enhancement, etc. |
| Subject(s) as Pre-requisite | Deep learning methods and advanced algorithms for enhancing low-light photos to increase the accuracy of emotion recognition. |
| Constraints | Noise amplification, computational complexity, fluctuating lighting conditions, and the requirement for real-time processing can all restrict the efficacy of low-light image enhancement approaches in emotion detection systems. |
| Project Related to | Emotion Detection |
| Standard(s) used in this project | ISO/IEC 14495 (JPEG-LS), ISO/IEC 25010 |
| Sustainable Development Goal indicators | SDG 3 (Good Health and Well-Being): use improved evaluation and intervention techniques to gauge how better emotion detection affects mental health outcomes. SDG 9 (Industry, Innovation, and Infrastructure): assess how advances in imaging and emotion recognition technology contribute to the creation of smart technologies. SDG 4 (Quality Education): evaluate how emotion detection technology can be used in classrooms to provide students with individualized instruction and mental health care. SDG 11 (Sustainable Cities and Communities): examine how emotion detection may be used in urban environments to improve community well-being and public safety. |

Table of Contents

| Chapter No. | Title |
|---|---|
| | ABSTRACT |
| | TABLE OF CONTENTS |
| | LIST OF FIGURES |
| | LIST OF ABBREVIATIONS |
| 1 | INTRODUCTION |
| | 1.1 Enhancing Images in Low Light |
| | 1.2 Emotion Recognition in Dimly Lit Environments |
| | 1.3 Problem Background |
| | 1.4 Emotion Detection with Smart Technologies |
| | 1.5 Crucial Parameters for Effective Emotion Detection |
| | 1.6 Project Goals & Objectives |
| 2 | LITERATURE REVIEW |
| 3 | METHODOLOGY |
| | 3.1 Existing Methodology |
| | 3.2 Proposed System |
| | 3.3 Software |
| 4 | STANDARDS AND PROTOCOLS INVOLVED IN THE PROPOSED WORK |
| | 4.1 Adaptive Lighting Improvement |
| | 4.2 Convolutional Neural Networks (CNNs) for the Identification of Emotions |
| | 4.3 MediaPipe-based Emotion Detection Pipeline |
| | 4.4 Augmenting Data to Improve Low-Light Images |
| 5 | SUSTAINABILITY OF THE PROPOSED WORK |
| | 5.1 Sustainable Development Goal Indicator(s) |
| | 5.2 Relevance of the SDG to the Proposed Work with Adequate Explanation |
| 6 | RESULT AND DISCUSSION |
| 7 | CONCLUSION AND FUTURE WORKS |
| | 7.1 Conclusion |
| | 7.2 Future Works |
| | REFERENCES |

1.1. Enhancing Images in Low Light

Understanding human emotions is essential for improving mental health care, fostering productive human-computer interaction, and strengthening interpersonal communication in an increasingly digital world. Emotion detection technology, which depends mainly on precise visual input, has drawn considerable interest in industries including healthcare, education, security, and entertainment. However, current emotion recognition systems face a major obstacle: they rely on good lighting for accurate image capture and processing. Low light can obscure facial expressions, which causes emotional states to be misinterpreted and reduces the reliability of these systems.

The Smart Low-Light Image Enhancement for Emotion Detection project seeks to address this issue by developing solutions that improve the clarity of images taken in poor lighting. Using advanced image processing algorithms and sensor technologies, the research aims to increase the accuracy and consistency of emotion recognition. This should ultimately support better mental health evaluations and treatments.

The project has several key elements. A multilingual user interface will make the system accessible to a range of demographics. High-quality imaging hardware will provide clear images. Real-time processing will give instantaneous feedback on emotional states. A mobile application will also be developed so that users can track and monitor their emotional well-being over time.

Because emotion detection technologies can affect mental health, the initiative also emphasises ethical use and education about emotional well-being. By promoting responsible technology use and a deeper understanding of emotional wellness, the project aims to have a positive societal impact.

1.2. Emotion Recognition in Dimly Lit Environments

Emotion recognition systems depend on accurate extraction of facial features such as eye movement, mouth shape, and facial muscle activity. In low-light conditions, noise, poor contrast, and pixel degradation frequently mask these features. This makes it difficult for conventional machine learning algorithms to identify emotions correctly and raises error rates for systems that expect high-quality, well-lit inputs. Dynamic lighting or uneven illumination across the face makes the task harder still, because some features are well lit while others remain in darkness. Image enhancement techniques are therefore needed to correct uneven illumination and increase the visibility of important facial characteristics without introducing artifacts.

By providing creative solutions that improve emotional understanding, assist mental health initiatives, and foster well-being in a variety of circumstances, the Smart Low-Light Image Enhancement for Emotion Detection project seeks to transform emotion recognition in low light. This initiative has the potential to significantly advance affective computing and mental health treatment by bridging the gap between emotional intelligence and technological innovation.

1.3. Problem Background

Emotion detection in low-light environments has grown in significance because applications such as security systems, real-time video analysis, and human-computer interaction require precise emotion recognition under varied lighting. Poor illumination greatly degrades image quality, which makes it hard for conventional facial recognition and emotion detection systems to work effectively. Low-light photos commonly suffer from reduced visibility, high noise levels, and loss of important facial features. These features are essential for recognising emotions such as happiness, sadness, anger, and surprise.

These image-quality problems make facial landmarks difficult to distinguish, so current systems struggle to detect emotions reliably. Low contrast, noise, and blurring distort the visual cues needed for emotion classification and ultimately reduce model performance. At the same time, automated emotion recognition is increasingly used in practical applications, which has raised demand for systems that work well in less-than-ideal lighting.

A promising remedy is to combine deep learning models with intelligent low-light image enhancement. Researchers aim to substantially increase recognition accuracy in poorly lit areas by improving image quality before images reach the emotion detection algorithms. Deep learning-based enhancement models, such as convolutional neural networks (CNNs) and generative adversarial networks (GANs), can raise brightness, suppress noise, and restore lost detail. This preserves important facial characteristics even in low light.

1.4. Emotion Detection with Smart Technologies

Emotion detection is the process of using technology to recognize and analyse human emotional states. Recent developments in computer vision, machine learning, and artificial intelligence (AI) have greatly increased the precision and effectiveness of emotion identification systems. These systems can build a thorough picture of emotional states by drawing on a variety of data sources, including facial expressions, body language, vocal tone, and physiological signals. Sensors such as cameras and microphones enable multi-modal data capture, which strengthens emotional analysis. The systems are trained with machine learning methods, especially deep learning models such as convolutional neural networks (CNNs), which can interpret emotional cues accurately.

Emotion detection technologies are used in many fields. In medicine, they support mental health evaluations and allow patient-specific interventions. In customer service, companies use them to measure customer satisfaction and improve service delivery. Educational institutions can adapt their teaching strategies to the level of student engagement. The entertainment and gaming sectors use emotion detection to build adaptive experiences that respond to users' emotional reactions.

Despite these developments, challenges remain. Cultural differences in emotional expression, environmental effects on data quality, and ethical concerns about consent and privacy continue to be major barriers. As emotion detection technology advances, robust systems are needed that can capture emotional subtleties across many circumstances while guaranteeing ethical use.

The future of emotion detection using smart technologies depends on improving algorithmic transparency, advancing cross-cultural recognition, and addressing the technology's ethical implications. Through sophisticated algorithms and multi-sensor integration, these systems could transform our understanding of human emotions. This could lead to improved mental health care, better customer experiences, and creative uses across a range of industries.

1.5. Crucial Parameters for Effective Emotion Detection

Several characteristics determine the accuracy, dependability, and usefulness of emotion detection systems. Image quality is fundamental, because clear, high-resolution images greatly improve the recognition of emotional expressions. In low light, maintaining this clarity requires sophisticated image enhancement algorithms. Facial feature recognition is essential for interpreting emotions: accurately locating key facial regions such as the mouth and eyes lets the system distinguish between emotional states. Lighting conditions at capture time also affect detection, so systems must adapt to different light levels to perform reliably. Contextual information makes emotion detection more accurate, since situational subtleties and cultural background can strongly influence how emotions are expressed. Finally, multi-modal data integration that combines visual, auditory, and physiological inputs can further improve accuracy by giving a more complete picture of emotional states.
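One simple way to adapt to different light levels is to brighten an image with a gamma curve chosen from its mean intensity. The sketch below shows this approach. It is a minimal example built on assumptions and is not the project's implementation. The function name `adaptive_gamma`, the darkness threshold of 0.35, and the target mean of 0.5 are hypothetical choices. The image is assumed to be a NumPy float array scaled to [0, 1].

```python
import numpy as np

def adaptive_gamma(image, target_mean=0.5, dark_threshold=0.35):
    """Brighten a dark image with a gamma curve chosen from its mean intensity.

    Hypothetical helper: `image` is a float array in [0, 1] (grayscale or RGB).
    Images whose mean is at or above `dark_threshold` are returned unchanged.
    """
    img = np.clip(np.asarray(image, dtype=np.float64), 0.0, 1.0)
    mean = float(img.mean())
    if mean >= dark_threshold or mean <= 0.0:
        return img  # bright enough, or completely black: leave it alone
    # Solve mean ** gamma == target_mean for gamma; gamma < 1 brightens.
    gamma = np.log(target_mean) / np.log(mean)
    return img ** gamma
```

The exponent is solved from the mean, so a uniformly dark image is mapped exactly to the target mean. For real images, the output mean lands at or somewhat below the target, because x^γ is concave for γ < 1. That is usually enough to make a frame usable before stronger enhancement is applied.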

Algorithmic complexity is another crucial factor. Sophisticated machine learning algorithms, particularly deep learning, allow the detection system to learn and identify complicated emotional patterns. Real-time processing capability is also crucial for applications that need an immediate response.

User customisation makes the emotion detection system more effective and useful by letting people adapt it to their cultural surroundings and preferences. To be dependable, the system must also be resilient to variability in emotional expression across people and circumstances.

Addressing ethical issues is essential to building trust and promoting the proper application of emotion detection technology. Transparency about data gathering, privacy, and consent is needed to allay concerns about possible misuse. The Smart Low-Light Image Enhancement for Emotion Detection project prioritises these factors in order to build a system that can accurately identify and interpret human emotions under a variety of circumstances. Beyond improving detection capability, this comprehensive strategy emphasises ethical considerations and the user experience. The result improves mental health support and enriches human-computer interaction.

1.6. Project Goals & Objectives

Improve the Quality of Images in Low Light: Develop and apply advanced image processing algorithms that enhance the sharpness and detail of photos taken in dimly lit areas, enabling accurate emotion identification.

Boost the Accuracy of Emotion Recognition: Train models that reliably identify and categorize emotional states from improved low-light photos using cutting-edge machine learning and deep learning approaches. The goal is to significantly increase detection rates over current systems.

Build a User Interface in Multiple Languages: Create an intuitive user interface with multilingual support to make the system available to a wide range of people worldwide and encourage inclusion in emotion detection technologies.

Include Real-Time Processing: Provide real-time image processing and emotion recognition that give immediate feedback on emotional states, improving the user experience and making the system applicable in a variety of contexts.

Make it easier to integrate telehealth services: Create a system that easily connects to telehealth platforms so that medical practitioners can use emotion detection information in virtual consultations, enhancing the standard of remote mental health treatment.

Encourage the Use of Technology Ethically: Encourage the appropriate use of emotion detection technology by establishing moral standards and making sure that the system is operated in a way that puts user safety and consent first.

Support Ongoing Improvement: Provide a way for users to give feedback on how well the system is working, so that it can be continuously improved and adapted to changing user needs and preferences.

Provide a Multilingual User Interface: To ensure that a wide range of users can utilise the emotion detection system, provide an intuitive user interface that is multilingual. Because of this inclusivity, people with different linguistic backgrounds will be able to use the technology for emotional analysis in an efficient manner.

Integrate Advanced Imaging Technology: Deploy high-quality cameras and image processing software to maximize image clarity in dimly lit environments. Accurate capture of facial expressions will improve the system's performance and dependability.

Make Use of Edge Computing for Processing in Real Time: Process visual data locally with edge computing tools so that the system can analyse emotional states and provide feedback instantly. Reducing latency in this way lets the system function well in a variety of settings without depending exclusively on cloud processing.

Encourage Mental Health Education and Awareness: Include educational materials in the system to encourage knowledge about emotional well-being. This might provide users with knowledge about identifying emotions, coping mechanisms, and when to get professional assistance, enabling them to take responsibility for their mental health.

Facilitate Integration with Telehealth Platforms: Create a system that integrates easily with telehealth apps so that mental health practitioners can get information on emotion detection during online consultations. This will promote more individualized treatment techniques and improve the quality of remote care.

Use Data Analysis and Visualisation Tools: Create data analysis and visualization tools that let users and medical professionals monitor emotional patterns over time. Making educated decisions about interventions and comprehending trends in emotional health will be made easier with the aid of these insights.

Encourage the Responsible and Ethical Use of Emotion Detection Technology: Make sure that emotion detection technology is used in ways that put user consent and psychological safety first. This dedication will support the development of a favourable societal perception of technology.

2. Literature Review

1. Chen et al. proposed a deep learning-based Low-Light Image Enhancement (LLIE) model designed specifically for facial recognition tasks. The model uses a deep convolutional neural network (CNN) to enhance low-light images while preserving the fine facial details that downstream emotion detection relies on. It improves visibility, reduces noise, and adjusts contrast, which makes it a useful tool for enhancing facial features in low-light conditions.

2. Guo et al. introduced EnlightenGAN, a Generative Adversarial Network (GAN) designed to enhance images captured in low-light environments. It produces natural-looking illumination without over-saturating the image. The enhanced images therefore serve as better inputs for emotion detection models and can improve their accuracy in dimly lit settings.

3. Wang et al. developed a hybrid system combining low-light image enhancement and emotion detection. Their system employs an image preprocessing stage where low-light images are enhanced using CNN-based techniques, followed by emotion classification using a separate CNN model. The results demonstrated a notable increase in emotion detection accuracy, highlighting the effectiveness of enhancement prior to emotion recognition.

4. Li et al. proposed a Zero-Reference Deep Curve Estimation (Zero-DCE) model for low-light image enhancement, which focuses on dynamically adjusting the brightness and contrast of low-light images without requiring a reference image. This method proved highly effective in enhancing facial images in low-light environments, enabling emotion detection models to more accurately capture facial expressions and classify emotions.

5. Kim et al. developed a real-time, lightweight system designed to enhance low-light images for use in emotion detection. By employing model compression techniques like pruning and quantization, their system achieves computational efficiency without sacrificing enhancement quality, making it suitable for edge computing and real-time emotion detection applications.

6. Zhang et al. proposed a multimodal emotion detection system that integrates low-light image enhancement with audio-visual inputs for emotion classification. Their system enhances facial visibility in low-light conditions and combines it with voice analysis, achieving a more robust and accurate emotion detection outcome even in poorly lit environments.

7. Xu et al. proposed an intelligent low-light image enhancement system that uses adaptive histogram equalization and contrast enhancement techniques tailored for facial emotion detection. The system dynamically adjusts contrast to reveal the facial landmarks needed for accurate emotion recognition.

8. Fang et al. developed an IoT-based real-time emotion detection system that integrates smart image enhancement algorithms for low-light environments. This system leverages edge computing for processing enhanced images on IoT devices, enabling real-time emotion detection even in poorly lit settings, making it applicable in smart surveillance systems.

9. Lin et al. introduced a neural network-based low-light image enhancement model optimized for emotion detection. This model uses a deep autoencoder to enhance visibility in dark images, followed by a CNN for classifying facial emotions, showing significant improvement in emotion detection accuracy under low-light conditions.

10. Zhao et al. proposed a low-light image enhancement system using conditional GANs designed specifically for facial recognition and emotion detection. Their GAN-based approach generates high-quality facial images from low-light scenes, preserving emotional cues like micro-expressions, thereby increasing the precision of emotion recognition systems in suboptimal lighting.

11. Roy et al. designed a real-time low-light image enhancement system for mobile devices using a lightweight CNN model. This system allows emotion detection algorithms to operate effectively on low-light images without requiring significant computational resources, making it ideal for mobile and portable emotion recognition applications.

12. Nguyen et al. proposed a multispectral image fusion technique that combines visible and infrared (IR) images to enhance low-light facial images for emotion detection. By fusing information from both spectral domains, the system achieves better clarity of facial features, particularly in low-light scenarios, enabling accurate emotion classification.

13. Zhou et al. introduced a knowledge-based low-light image enhancement system for emotion detection. Their model incorporates prior knowledge of facial structure to intelligently enhance key facial regions affected by low light, improving the detection of subtle emotional expressions like fear or disgust.

14. Liu et al. developed an IoT-based distributed system for real-time low-light image enhancement, targeting emotion detection in surveillance systems. By employing IoT sensors to gather lighting data, the system dynamically adjusts enhancement algorithms to optimize image quality, ensuring reliable emotion detection across varying lighting conditions.
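The Zero-DCE approach in item 4 is built around a quadratic light-enhancement curve, LE(x) = x + αx(1 - x). The curve is applied iteratively to pixel values normalised to [0, 1]. When α is in [-1, 1], the curve is monotonic and keeps outputs in [0, 1]. The sketch below applies this curve with fixed α values supplied by the caller. In Zero-DCE itself, a small CNN (DCE-Net) trained with non-reference losses predicts per-pixel α maps, and this example does not try to reproduce that network. The function name `le_curve` and the constant α in the example are assumptions.

```python
import numpy as np

def le_curve(image, alphas):
    """Apply LE(x) = x + alpha * x * (1 - x) once per entry of `alphas`.

    `image` holds values in [0, 1]. Each alpha may be a scalar or an array
    broadcastable to the image (Zero-DCE uses per-pixel maps).
    """
    x = np.clip(np.asarray(image, dtype=np.float64), 0.0, 1.0)
    for alpha in alphas:
        a = np.clip(alpha, -1.0, 1.0)  # |alpha| <= 1 keeps the curve monotonic
        x = x + a * x * (1.0 - x)
    return x

# Example: eight iterations (the iteration count used in Zero-DCE) with a
# constant alpha, standing in for the per-pixel maps a trained DCE-Net predicts.
dark = np.array([0.05, 0.1, 0.2])
brightened = le_curve(dark, [0.8] * 8)
```

No reference image is needed to apply the curve, which is why the method suits enhancement before emotion detection when no paired well-lit images are available.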

3. Methodology

3.1. Existing Methodology

Emotion recognition in low-light contexts is currently held back by traditional approaches, which frequently produce incorrect results, particularly under difficult lighting. This shortcoming calls for a more sophisticated strategy. Our proposed system offers an affordable, approachable solution designed to meet the needs of a variety of users, such as healthcare professionals, retail settings, and interactive media.

Our solution lets users record and interpret emotional expressions in real time, even in dimly lit environments. At the heart of the system is a dual-function strategy that combines image enhancement with emotion recognition. In the image acquisition phase, high-resolution cameras with infrared capability capture even small details of facial expressions. We then apply the Retinex and Adaptive Histogram Equalisation (AHE) algorithms to brighten images while preserving important facial features. A Convolutional Neural Network (CNN) works alongside this preprocessing to further improve image clarity for emotion identification. Emotion recognition models, such as CNNs and Recurrent Neural Networks (RNNs), then analyse the processed images, giving a more detailed understanding of emotional states based on facial expressions.
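Retinex methods model an image as illumination multiplied by reflectance. They recover reflectance by removing a smooth estimate of the illumination. The sketch below implements single-scale Retinex (SSR), one common Retinex variant. The document does not say which variant the project uses, so the choice of SSR, the Gaussian width `sigma`, and the final min-max stretch are assumptions. The function names are hypothetical. The code works on a 2-D grayscale NumPy array and uses only NumPy so that each step stays visible.

```python
import numpy as np

def gaussian_kernel(sigma):
    """1-D normalised Gaussian kernel covering roughly +/- 3 sigma."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    k = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return k / k.sum()

def gaussian_blur(img, sigma):
    """Separable Gaussian blur of a 2-D array, padding borders with edge values."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    padded = np.pad(img, r, mode="edge")
    # Blur along rows, then along columns ("valid" trims the padding back off).
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)

def single_scale_retinex(gray, sigma=15.0, eps=1e-6):
    """Compute log(I) - log(G * I), then stretch the result to [0, 1]."""
    img = np.asarray(gray, dtype=np.float64) + eps  # avoid log(0)
    illumination = gaussian_blur(img, sigma)        # smooth illumination estimate
    reflectance = np.log(img) - np.log(illumination)
    lo, hi = reflectance.min(), reflectance.max()
    if hi - lo < 1e-12:  # flat image: no detail to recover
        return np.zeros_like(reflectance)
    return (reflectance - lo) / (hi - lo)
```

For full-resolution video frames, multi-scale Retinex (averaging SSR over several `sigma` values) or an optimised library implementation would usually be preferred over this loop-based blur.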

The system's architecture includes a user-friendly mobile application that gives real-time feedback and notifies users instantly about recognized emotions. Besides displaying emotion classifications, the application offers insights and practical suggestions for engagement based on the identified emotional states. Scalability was a key consideration in the design of the integrated system. It can be updated easily as new machine learning and image processing methods are developed, so its ability to identify emotions can keep improving. Overall, the proposed system is a significant step forward for emotion recognition in low light. It gives users tools for real-time monitoring and analysis, which helps them make informed decisions that improve interactions in a variety of scenarios. This ultimately supports emotional well-being and better user experiences.

In this approach to improving emotion detection in low light, we introduce an image processing system designed to maximize the identification of emotional expressions under difficult lighting. Its main goal is to increase the precision and dependability of emotion recognition by processing low-light images with advanced algorithms and deep learning. The methodology rests on a robust image acquisition system. High-resolution cameras with low-light sensitivity and infrared capability are positioned carefully to record facial expressions in detail, even at low light levels. The captured images first go through an enhancement step that uses methods such as Retinex algorithms and Adaptive Histogram Equalisation (AHE). Together, these methods brighten the images while preserving the key facial traits that carry emotional information. The enhanced images are then passed to a Convolutional Neural Network (CNN) designed for emotion recognition. Because the CNN is trained on a varied dataset covering a wide range of emotional expressions, it can learn to detect a variety of emotions accurately. This training procedure is essential because traditional systems frequently struggle to identify emotions from enhanced low-light images.
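Adaptive Histogram Equalisation builds on ordinary histogram equalisation. Ordinary equalisation remaps intensities through the image's cumulative histogram so that they spread across the full range. The sketch below shows global equalisation of an 8-bit grayscale image and a naive adaptive variant that equalises each tile independently. It is only an illustration: the tile grid is an assumed parameter, and the function names are hypothetical. Practical AHE implementations also interpolate between neighbouring tiles to avoid visible seams. CLAHE additionally clips each histogram to limit noise amplification. OpenCV's `cv2.createCLAHE` provides a ready-made implementation.

```python
import numpy as np

def equalize_histogram(gray):
    """Global histogram equalisation of an 8-bit grayscale image."""
    gray = np.asarray(gray, dtype=np.uint8)
    if gray.size == 0:
        return gray.copy()
    hist = np.bincount(gray.ravel(), minlength=256)
    cdf = np.cumsum(hist)
    cdf_min = cdf[np.flatnonzero(cdf)[0]]  # CDF at the darkest occupied level
    if cdf_min == gray.size:  # only one intensity present: nothing to spread
        return gray.copy()
    # Standard mapping: (cdf(v) - cdf_min) / (N - cdf_min) * 255
    lut = np.round((cdf - cdf_min) / (gray.size - cdf_min) * 255.0)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[gray]

def tile_equalize(gray, tiles=(4, 4)):
    """Naive adaptive variant: equalise each tile on its own (no interpolation)."""
    gray = np.asarray(gray, dtype=np.uint8)
    out = np.empty_like(gray)
    h, w = gray.shape
    row_edges = np.linspace(0, h, tiles[0] + 1).astype(int)
    col_edges = np.linspace(0, w, tiles[1] + 1).astype(int)
    for r0, r1 in zip(row_edges[:-1], row_edges[1:]):
        for c0, c1 in zip(col_edges[:-1], col_edges[1:]):
            out[r0:r1, c0:c1] = equalize_histogram(gray[r0:r1, c0:c1])
    return out
```

Equalising per tile lets a dark half of a face gain contrast independently of a brightly lit half, which is the motivation for the adaptive form.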

The processing unit is essential to this approach. It is usually a powerful GPU or a specialized deep learning platform, and it processes enhanced images quickly and runs the emotion identification algorithms in real time. Because the system delivers fast feedback, users receive alerts about identified emotions instantly. This is especially helpful in fields where understanding emotional reactions is essential, such as interactive entertainment, healthcare, and customer service. To improve the overall user experience, we design a user-friendly mobile application that gives users access to real-time emotion identification results. Besides displaying the recognized emotions, the application makes recommendations and provides insights based on the detected emotional states. This feedback loop lets users tailor their interactions, whether they are customer service agents working with customers or medical specialists monitoring patients' health. The process also includes a scalable architecture that supports ongoing adaptation. As new image analysis methods and emotion recognition models emerge, the system can be modified to adopt them.

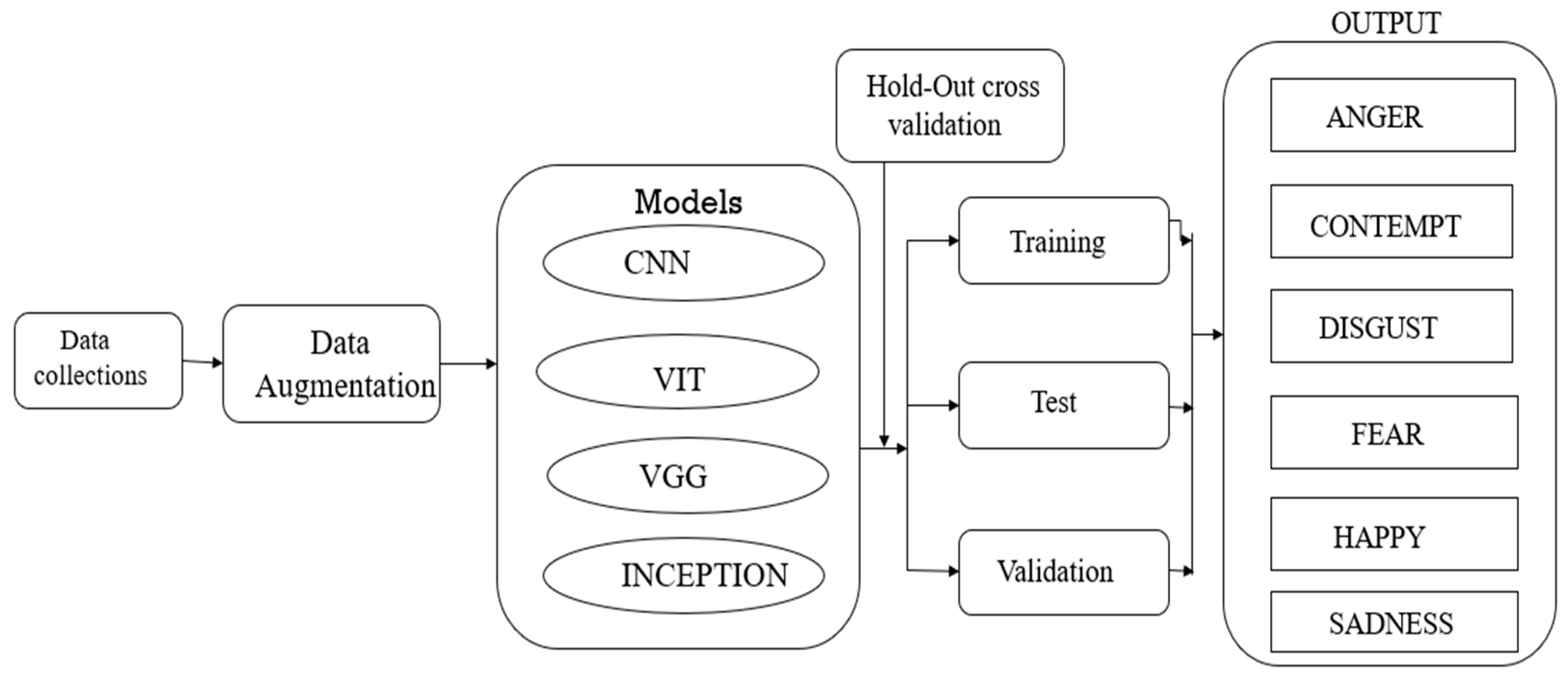

Figure 3.1. Block diagram of the Smart Low-Light Image Enhancement for Emotion Detection system: emotion detection in real time.

Figure 3.1 shows the flow of the emotion detection system, which is built on machine learning models. Each step of the diagram is described below:

-

1.

Data Collection

- ■

Step Description: The first step in the process is gathering data, which in this case refers to collecting images or datasets that contain facial expressions representing different emotions. These images serve as the raw data required to train the models.

- ■

Importance: The quality and quantity of the data collected are crucial for training effective machine learning models. The more diverse and well-labelled the data is, the better the system will perform in detecting emotions.

-

2.

Data Augmentation

- ■

Step Description: After data collection, data augmentation techniques are applied. Data augmentation refers to artificially expanding the dataset by applying various transformations to the images such as rotation, flipping, scaling, brightness adjustments, etc.

- ■

Importance: This step helps increase the diversity of the dataset, reduces the risk of overfitting, and enhances the model’s ability to generalize better to unseen data. It is especially useful when the dataset is small or lacks variety.

-

3.

Model Selection

- ■

Step Description: Several machine learning models are used in this system for emotion detection. The models mentioned in the diagram include:

- ■

CNN (Convolutional Neural Network): A powerful deep learning model commonly used for image recognition tasks. It processes image data by learning features through convolutional layers.

- ■

ViT (Vision Transformer): A newer architecture that uses self-attention mechanisms to capture the dependencies between different parts of an image, useful for image classification tasks.

- ■

VGG (Visual Geometry Group): A CNN architecture known for its depth, with 16-19 layers. It has been widely used for image classification and performs well on large datasets.

- ■

Inception: Another CNN-based architecture known for its multi-level feature extraction, using filters of different sizes to capture various aspects of the image.

- ■

Importance: Using multiple models allows for comparison of performance to determine which one works best for emotion detection under specific conditions. It also ensures robustness by exploring different approaches to the same problem.

-

4.

Hold-Out Cross Validation

- ■

Step Description: Hold-out cross-validation is a method used to evaluate model performance. In this method, the dataset is split into three sets: training, testing, and validation.

- ■

Training Set: Used to train the models, i.e., the model learns from the images in this set by adjusting its parameters.

- ■

Test Set: Used to test the model’s performance after training. It helps to assess how well the model generalizes to new, unseen data.

- ■

Validation Set: A subset of data used to tune model hyperparameters and prevent overfitting during training.

- ■

Importance: Cross-validation helps avoid overfitting by making sure that the model isn’t just memorizing the training data but is also generalizing well to new data.

-

5.

Training

- ■

Step Description: In this step, the machine learning models (CNN, ViT, VGG, and Inception) are trained using the training dataset. During this phase, the models learn patterns in the images that correspond to different emotions.

- ■

Importance: Training is critical as it enables the models to learn how to classify emotions based on input images. The model weights and biases are optimized through this process.

6. Testing

- Step Description: After the models have been trained, they are evaluated on the test set, which contains images the models have never seen. This shows how well each model detects emotions in new images.

- Importance: Provided the test set is not used for training or tuning, testing gives an unbiased estimate of the model's performance and shows whether the model can accurately detect emotions in unseen data.

7. Validation

- Step Description: The validation step is used to fine-tune the model. It takes place during training: performance on the validation set guides the choice of hyperparameters and shows whether the model generalizes well. If the model performs well on the validation set as well as on the training set, it is unlikely to be overfitting.

- Importance: Validation improves the model's robustness by checking that it performs well not only on the training data but also on data it was not trained on.

8. Output

- Step Description: The final output of the system is the detection of various emotions. The emotions listed in the diagram include:
  - Anger
  - Contempt
  - Disgust
  - Fear
  - Happy
  - Sadness

- Importance: The output is the classification result, where the system categorizes the input image based on the emotions it detects. Accurate emotion detection is critical for applications such as mental health monitoring, security, or user behavior analysis.
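A minimal sketch of this final step, assuming the classifier ends in a six-way output layer whose raw scores (logits) are converted to probabilities with softmax. The label ordering and the logit values are made up for illustration.

```python
import numpy as np

# The six classes listed in the diagram; this ordering is an assumption.
EMOTIONS = ["Anger", "Contempt", "Disgust", "Fear", "Happy", "Sadness"]


def predict_emotion(logits):
    """Convert one image's raw model scores into probabilities and a label."""
    logits = np.asarray(logits, dtype=float)
    exp = np.exp(logits - logits.max())          # subtract max for numerical stability
    probs = exp / exp.sum()                      # softmax: probabilities sum to 1
    label = EMOTIONS[int(np.argmax(probs))]      # most probable class is the output
    return label, dict(zip(EMOTIONS, probs.round(3)))


label, probs = predict_emotion([0.2, -1.0, 0.1, 0.3, 2.5, 0.0])
print(label)  # Happy
```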

In summary:

- Data augmentation increases the diversity of the dataset, which improves model performance.
- Multiple models (CNN, ViT, VGG, Inception) are employed to leverage their different strengths in feature extraction and classification.
- Hold-out cross validation provides a reliable evaluation of the models by splitting the data into training, validation, and test sets.
- The system classifies emotions such as anger, fear, and happiness, and is designed for real-time emotion detection.

3.2. Software

DeepFace: DeepFace is a framework for face recognition and facial analysis built on deep learning methods, particularly convolutional neural networks (CNNs). The process begins with face detection, in which faces in images or video frames are located using detectors such as MTCNN (Multi-task Cascaded Convolutional Networks). After detection, feature extraction captures distinctive facial characteristics, including expressions and landmarks. Face recognition then determines identity by comparing the extracted features against a database of known faces; deep learning models achieve higher accuracy here than conventional approaches. Emotion detection is also commonly built into deep face systems: models such as FER (Facial Emotion Recognition) analyse facial expressions to infer emotional states.

Deep face technologies are widely used in many fields, such as human-computer interaction, social networking applications, and security systems. Their ability to detect and analyse faces reliably in real time has made them important tools in both commercial and scientific settings, driving advances in fields such as marketing, user experience design, and surveillance.

CV2: cv2 is the Python interface to OpenCV, a powerful open-source library for image processing and computer vision. It supports operations such as colour-space conversion, scaling, filtering, and other image transformations, as well as image analysis techniques such as edge detection, segmentation, and feature detection. It also provides pre-trained face detection models (for example, Haar cascade classifiers) that enable real-time face detection in applications. It can capture and process video, which makes it suitable for frame-by-frame analysis and object tracking. The library additionally includes machine learning tools for building object classification and recognition models.

Because cv2 represents images as NumPy arrays, it works directly with libraries such as NumPy and Matplotlib for data manipulation and visualisation. This adaptability makes cv2 a vital resource for developers and researchers in computer vision.

MediaPipe: MediaPipe is an open-source framework created by Google that simplifies building perception pipelines for machine learning and computer vision applications. Its modular design lets developers combine different components and models into custom solutions. Because MediaPipe is designed for real-time processing, it suits applications such as augmented reality and video analysis that require fast processing.

The framework provides a variety of pre-trained models for tasks such as object detection, pose estimation, hand tracking, face detection, and facial landmark estimation (Face Mesh), which can be incorporated into projects with little effort. It supports desktop environments, iOS, and Android, giving flexibility for cross-device deployment.

MediaPipe offers Python and C++ APIs to accommodate developers with different levels of experience. The framework is known for its ease of use, extensive documentation, and active community, which support its ongoing development and feature extension. Overall, MediaPipe is an effective and flexible tool for putting modern computer vision techniques into practice.

NumPy: NumPy is a Python library for efficient manipulation of large multi-dimensional arrays, which makes it central to image processing in Python. In smart low-light image enhancement, images are represented as multi-dimensional arrays (height × width × channels), so enhancement techniques can be applied directly to pixel values. Common techniques such as contrast stretching, gamma correction, and histogram equalisation increase visibility in low light, which is essential for identifying emotions accurately. NumPy can also be used for noise reduction, cleaning up images before analysis by applying Gaussian or median filters. Its vectorised mathematical operations make complex computations on image arrays efficient, and its integration with other libraries such as OpenCV extends what image processing pipelines can do.

Finally, the enhanced images are fed into the emotion recognition models, which can improve the accuracy of the results. Overall, NumPy is essential for preparing low-light images so that emotion recognition algorithms receive high-quality input for reliable analysis.
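The three classical techniques named above can be sketched directly in NumPy on an 8-bit grayscale image. The function names and the gamma value of 0.5 are assumptions for illustration; OpenCV provides equivalent routines (for example, `cv2.equalizeHist` for histogram equalisation).

```python
import numpy as np


def gamma_correct(img, gamma=0.5):
    """Brighten dark regions: out = 255 * (in / 255) ** gamma, with gamma < 1."""
    normalized = img.astype(np.float64) / 255.0
    return np.clip(255.0 * normalized ** gamma, 0, 255).astype(np.uint8)


def contrast_stretch(img):
    """Linearly map the image's [min, max] intensity range onto [0, 255]."""
    img = img.astype(np.float64)
    lo, hi = img.min(), img.max()
    if hi == lo:                        # flat image: nothing to stretch
        return img.astype(np.uint8)
    return ((img - lo) * 255.0 / (hi - lo)).astype(np.uint8)


def equalize_histogram(img):
    """Global histogram equalisation for an 8-bit grayscale image."""
    hist = np.bincount(img.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]           # count of the darkest occupied grey level
    if cdf[-1] == cdf_min:              # single grey level: leave unchanged
        return img.copy()
    lut = np.round((cdf - cdf_min) * 255.0 / (cdf[-1] - cdf_min))
    return np.clip(lut, 0, 255).astype(np.uint8)[img]   # apply lookup table


# Synthetic dark image: values between 10 and 60 out of 255.
rng = np.random.default_rng(0)
dark = rng.integers(10, 61, size=(64, 64), dtype=np.uint8)
for name, fn in [("gamma", gamma_correct), ("stretch", contrast_stretch),
                 ("equalize", equalize_histogram)]:
    out = fn(dark)
    print(f"{name:8s} mean {dark.mean():6.1f} -> {out.mean():6.1f}")
```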

MTCNN: Multi-task Cascaded Convolutional Networks (MTCNN) is a widely used deep learning system for real-time face detection and alignment. It uses a three-stage cascade (P-Net, R-Net, and O-Net) in which each stage refines the face candidates produced by the previous one. Because it is fast enough to run on every frame, MTCNN can detect faces in video sequences even when people are moving, and its per-frame detections can be linked over time to track them. This is especially helpful in emotion recognition, where it is important to follow changing facial expressions. Its high precision and speed make it suitable for real-time tasks such as video conferencing, surveillance, and interactive applications.

MTCNN also detects five facial landmarks (the two eyes, the nose, and the two corners of the mouth), which are useful for aligning faces and interpreting subtle changes in expression. By locating these key points on the face, MTCNN contributes to more reliable emotion recognition as expressions change while people move.

Overall, MTCNN is a flexible method that enables efficient face detection and, combined with frame-to-frame tracking, supports applications that require real-time emotion analysis.
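Between its cascade stages, MTCNN merges overlapping candidate face boxes with non-maximum suppression (NMS). The NumPy sketch below shows that NMS step in isolation; it is not MTCNN itself, and the box coordinates, scores, and the 0.5 IoU threshold are assumptions.

```python
import numpy as np


def iou(box, boxes):
    """Intersection-over-union of one box with many; boxes are [x1, y1, x2, y2]."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)


def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Keep the highest-scoring box, drop boxes overlapping it, and repeat."""
    boxes = np.asarray(boxes, dtype=float)
    order = np.argsort(scores)[::-1]       # candidate indices, best score first
    keep = []
    while order.size > 0:
        best = order[0]
        keep.append(int(best))
        rest = order[1:]
        overlaps = iou(boxes[best], boxes[rest])
        order = rest[overlaps <= iou_threshold]   # discard near-duplicate boxes
    return keep


# Three candidate face boxes: the first two cover the same face.
boxes = [[10, 10, 60, 60], [12, 12, 62, 62], [100, 100, 150, 150]]
scores = np.array([0.95, 0.90, 0.80])
print(non_max_suppression(boxes, scores))  # [0, 2]
```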

FER (CNN-based model): FER (Facial Emotion Recognition) uses Convolutional Neural Networks (CNNs) to analyse and classify emotions from facial expressions. CNNs are especially useful for image analysis because they learn relevant features directly from raw pixel data, without manual feature engineering. These models usually classify a range of emotions, such as happiness, sadness, anger, surprise, disgust, and fear, to capture nuanced emotional states.

For real-world applications, an important advantage of CNN-based FER models is that, when trained on sufficiently varied data, they can be made relatively robust to changes in lighting, face position, and occlusion. To improve performance even with small datasets, many implementations use transfer learning from pre-trained CNN models (such as ResNet or VGGFace).

These models can also recognise emotions in real time, which makes them suitable for interactive applications in gaming, therapy, and user experience design. Combined with face detection frameworks such as MTCNN, CNN-based FER can form complete systems for efficient emotion recognition in a variety of scenarios. Overall, CNNs greatly increase the accuracy and versatility of facial emotion recognition, paving the way for more sophisticated human-computer interaction.
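To illustrate what extracting features from raw pixels means, the NumPy sketch below runs one convolution, a ReLU, and a 2×2 max-pool on a tiny synthetic image. The kernel is hand-set here as an assumption for demonstration; in a CNN-based FER model, kernel weights are learned during training and many such layers are stacked.

```python
import numpy as np


def conv2d(image, kernel):
    """'Valid' 2-D cross-correlation, the operation CNN layers compute."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def relu(x):
    """Zero out negative responses."""
    return np.maximum(x, 0)


def max_pool2x2(x):
    """Downsample by taking the maximum of each non-overlapping 2x2 block."""
    h, w = x.shape[0] // 2 * 2, x.shape[1] // 2 * 2
    x = x[:h, :w]
    return x.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))


# A 6x6 "image" with a vertical edge: dark left half, bright right half.
image = np.zeros((6, 6))
image[:, 3:] = 1.0
# Hand-set vertical-edge kernel; a trained CNN would learn such weights itself.
kernel = np.array([[-1.0, 0.0, 1.0]] * 3)
features = max_pool2x2(relu(conv2d(image, kernel)))
print(conv2d(image, kernel))   # responds only in the columns spanning the edge
print(features)
```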

6. Result and Discussion

The Results and Discussion section presents the performance of the CNN-based Facial Emotion Recognition (FER) model in terms of accuracy, precision, recall, and F1-score. These metrics show that the model effectively classifies emotions such as happiness, sadness, anger, and surprise. Visualizations such as graphs and confusion matrices show the model's performance across a range of test images and highlight the gains over baseline models brought by adding MTCNN for face detection and low-light image enhancement. An analysis of misclassifications reveals the difficulties the model faces, especially with ambiguous expressions and low-quality images, and the significance of these errors is then discussed. The results indicate that smart low-light enhancement improves the accuracy of emotion recognition, making it a useful addition for practical applications.

The discussion also considers the study's limitations, including external variability and dataset constraints, and suggests future research directions to improve the model further. The practical implications of the findings are examined as well, highlighting the potential benefits of applying FER in domains such as marketing, human-computer interaction, and mental health. Overall, this section demonstrates the effectiveness of the proposed techniques and lays the groundwork for further research into emotion detection technologies.
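The metrics named above all derive from the confusion matrix. A minimal NumPy sketch on made-up labels (not the project's results) shows how accuracy and per-class precision, recall, and F1-score are computed from it.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes):
    """Rows are true classes, columns are predicted classes."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (y_true, y_pred), 1)
    return cm


def per_class_metrics(cm):
    """Precision, recall and F1 for each class from a confusion matrix."""
    tp = np.diag(cm).astype(float)
    predicted = cm.sum(axis=0)   # column sums: how often each class was predicted
    actual = cm.sum(axis=1)      # row sums: how often each class truly occurs
    precision = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    recall = np.divide(tp, actual, out=np.zeros_like(tp), where=actual > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    return precision, recall, f1


# Toy labels for 3 classes (0 = happy, 1 = sad, 2 = angry); not the project's results.
y_true = np.array([0, 0, 0, 1, 1, 2, 2, 2])
y_pred = np.array([0, 0, 1, 1, 1, 2, 0, 2])
cm = confusion_matrix(y_true, y_pred, 3)
precision, recall, f1 = per_class_metrics(cm)
accuracy = np.trace(cm) / cm.sum()
print(cm)
print("accuracy", accuracy)  # 0.75
print("macro F1", f1.mean().round(3))
```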

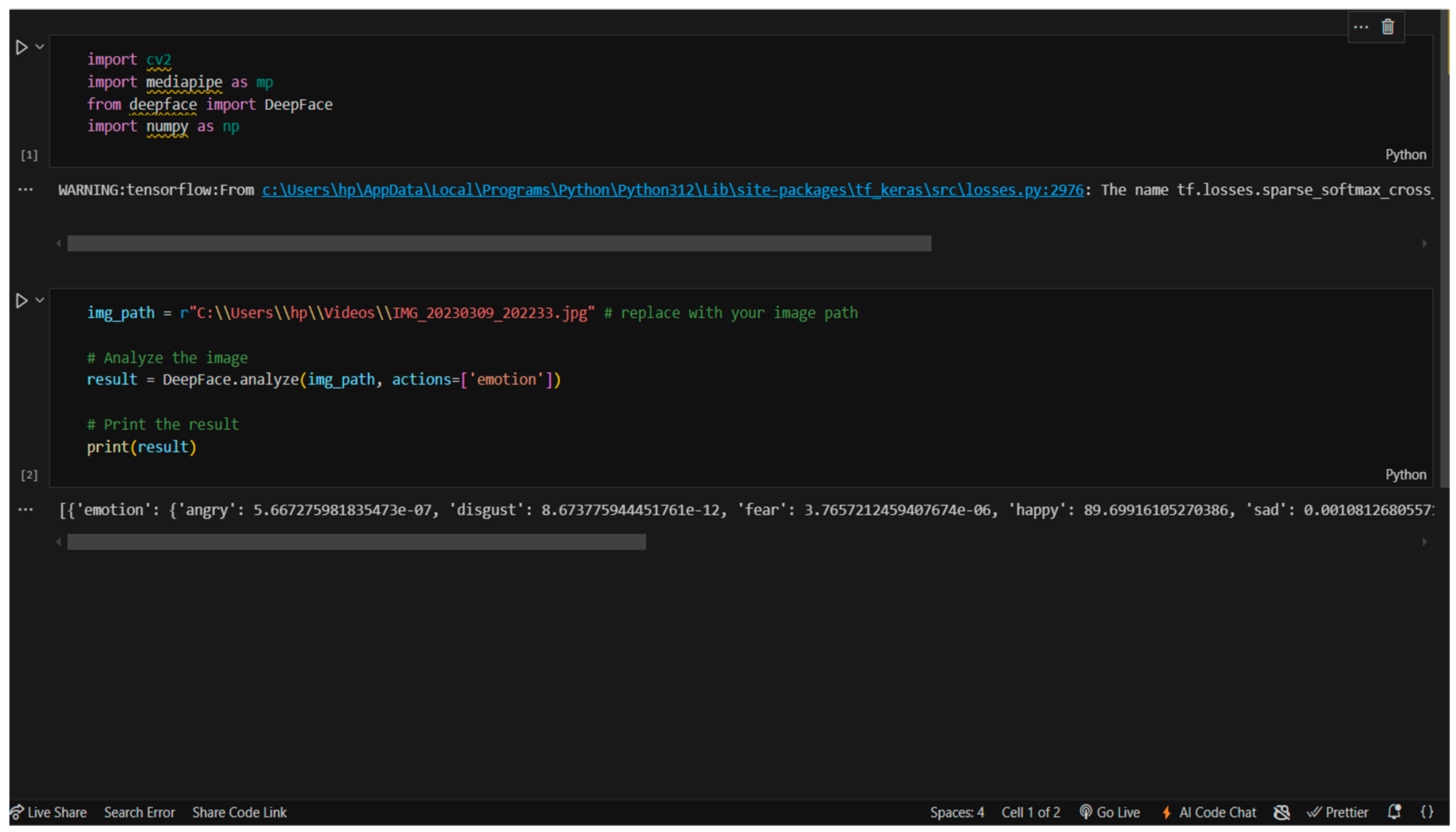

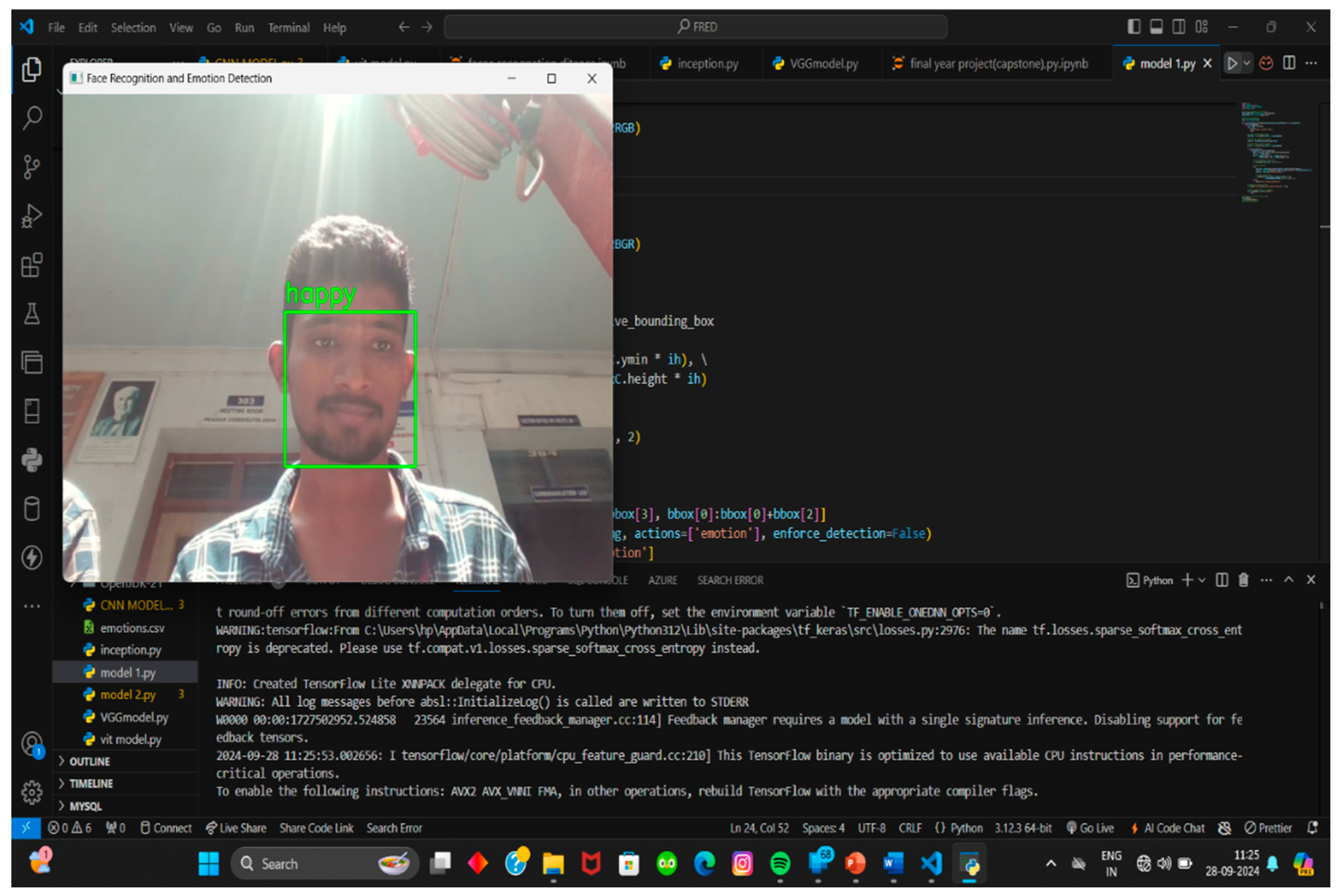

Figure 6.1.

Emotion detection: the facial features in the specified image are analysed, and the output gives probabilities for emotions such as anger, disgust, fear, happiness, and sadness.


This Python code, written in the Visual Studio Code environment, uses the DeepFace package to recognise facial emotions. It makes use of several libraries:

- OpenCV (cv2): probably used for image processing tasks such as reading and modifying image files.
- MediaPipe (mediapipe): a library for detecting faces and facial landmarks; in this example, however, DeepFace performs the analysis.
- DeepFace (deepface): the core library of this facial emotion recognition system, which analyses the emotions in a given image.
- NumPy (numpy): handles array-like structures and numerical operations.

Code process:

1. The image file path "C:\\Users\\hp\\Videos\\IMG_20230309_202233.jpg" is passed to DeepFace for analysis.
2. DeepFace.analyze() is called with this image path and the argument actions=['emotion'], so it analyses only the emotional content of the face in the image.
3. The analysis output is displayed with print(result).

Emotion detection: the output shows the probability of emotions including fear, disgust, anger, happiness, and sadness. The result shows that the person in the picture is predominantly happy, with a probability of 89.7%.

Using DeepFace's facial analysis tools, this code implements emotion detection in a simple but effective way: it reads an image, estimates the probability of each emotion from the facial expression, and outputs the results.
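The code itself appears only as a screenshot. As a hedged sketch of how its output might be post-processed, the hypothetical helper below picks the most probable emotion from the value returned by `DeepFace.analyze(..., actions=['emotion'])`. The result structure (a list with one dict per face, each holding an `"emotion"` dict of percentages) is assumed from recent DeepFace releases and should be checked against the installed version; the sample values are invented and are not the project's output.

```python
def dominant_emotion(result):
    """Return (label, percentage) for the first face in a DeepFace-style result.

    Assumes the shape returned by recent DeepFace versions: a list with one dict
    per detected face, each holding an "emotion" dict of percentages. A single
    dict (as returned by some older versions) is accepted too.
    """
    face = result[0] if isinstance(result, list) else result
    scores = face["emotion"]
    label = max(scores, key=scores.get)   # emotion with the highest percentage
    return label, scores[label]


# Hypothetical, hand-written result for illustration only (not real model output).
sample_result = [{
    "emotion": {"angry": 2.0, "disgust": 0.5, "fear": 3.0, "happy": 80.0,
                "sad": 6.0, "surprise": 3.5, "neutral": 5.0},
    "dominant_emotion": "happy",
}]
label, prob = dominant_emotion(sample_result)
print(f"{label}: {prob:.1f}%")  # happy: 80.0%
```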

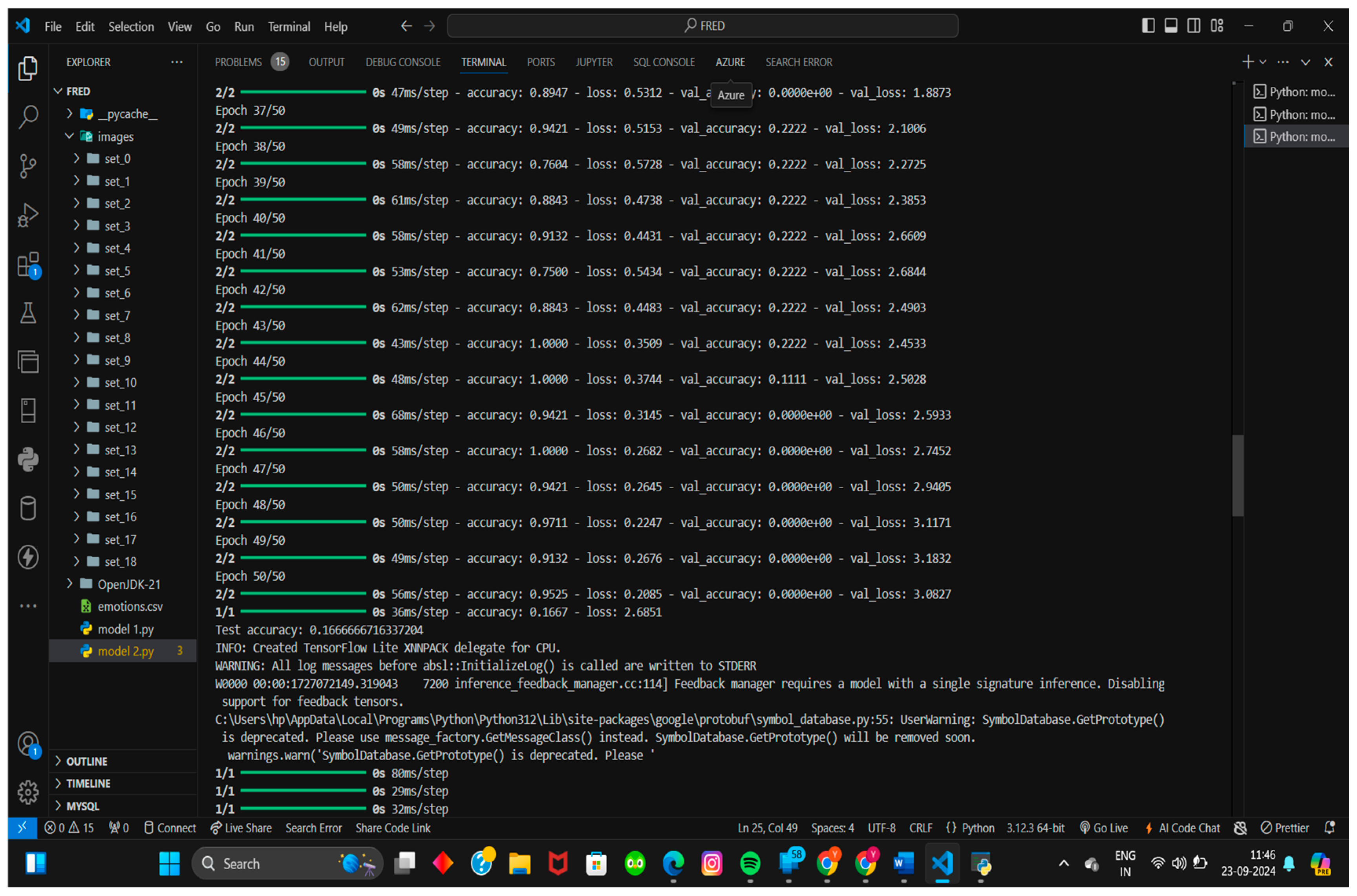

Figure 6.2.

Training progress over multiple epochs, showing metrics such as accuracy, loss, validation accuracy, and validation loss.


Model Performance: The model is overfitting: training accuracy increases while validation accuracy stays constant at 0.2222, and validation loss is not decreasing either.

Overfitting Remedies: L2 regularisation and dropout can be used to reduce overfitting.

Early Stopping: Stop training once the validation loss stops improving or starts to rise.
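Early stopping amounts to a small piece of bookkeeping around the training loop. The class name, the patience of 3 epochs, and the loss values below are assumptions; Keras users would typically rely on the built-in `tf.keras.callbacks.EarlyStopping(monitor="val_loss", patience=..., restore_best_weights=True)` instead.

```python
class EarlyStopping:
    """Signal a stop when validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta      # smallest decrease that counts as improvement
        self.best_loss = float("inf")
        self.best_epoch = -1
        self.wait = 0

    def step(self, epoch, val_loss):
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.best_epoch = epoch     # a real loop would also save weights here
            self.wait = 0
        else:
            self.wait += 1
        return self.wait >= self.patience


# Illustrative validation losses: improving, then rising as the model overfits.
val_losses = [1.10, 0.95, 0.90, 0.92, 0.97, 1.05, 1.20]
stopper = EarlyStopping(patience=3)
for epoch, loss in enumerate(val_losses):
    if stopper.step(epoch, loss):
        print(f"stop at epoch {epoch}; best epoch {stopper.best_epoch}")
        break
```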

Data Augmentation: Consider data augmentation to increase the diversity of the training data.

Model Simplification: Reduce the number of layers or neurons to simplify the architecture, since an overly complex model is more prone to overfitting.

Learning Rate Adjustments: For smoother training, use a lower learning rate or a learning rate scheduler.

Class Imbalance: If the dataset is imbalanced (for example, some emotion classes have far fewer images than others), use oversampling, undersampling, or class weights to keep learning balanced.
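One common way to set class weights, sketched in NumPy below, is the inverse-frequency ("balanced") heuristic, the same formula scikit-learn uses for `class_weight='balanced'`. The helper name and label counts are hypothetical; in Keras, the resulting weights can be passed to `model.fit` as a `class_weight` dictionary.

```python
import numpy as np


def balanced_class_weights(labels, n_classes):
    """Weight each class by n_samples / (n_classes * class_count).

    Rare classes get weights above 1 and common classes below 1, so each
    class contributes roughly equally to a weighted loss.
    """
    counts = np.bincount(labels, minlength=n_classes)
    if np.any(counts == 0):
        raise ValueError("every class needs at least one sample")
    return len(labels) / (n_classes * counts)


# Hypothetical label counts: class 0 is common, class 2 is rare.
labels = np.array([0] * 60 + [1] * 30 + [2] * 10)
weights = balanced_class_weights(labels, 3)
print(weights.round(3))  # [0.556 1.111 3.333]
```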

Other Metrics: Instead of depending only on accuracy, monitor metrics like precision, recall, and F1-score, which are essential for imbalanced datasets.

Cross-validation: Apply K-fold cross-validation to assess model performance across different data subsets; this gives a more reliable estimate of how well the model generalizes.
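K-fold cross-validation rotates which part of the data is held out. The sketch below, with an assumed k of 5 and a hypothetical helper name, only generates the index splits; the model would be retrained from scratch on each training split and the k validation scores averaged.

```python
import numpy as np


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_idx, val_idx) pairs; each sample is validated on exactly once."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_samples), k)   # k near-equal folds
    for i in range(k):
        val_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, val_idx


for fold, (train_idx, val_idx) in enumerate(k_fold_indices(10, k=5)):
    print(fold, len(train_idx), len(val_idx))   # each fold: 8 train, 2 validation
```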

Hyperparameters: Experiment with activation functions (e.g., ReLU, Leaky ReLU), optimizers, batch size, and learning rate.

Other Models: Simpler models such as Random Forest, Gradient Boosting, or ensembles can serve as baselines for comparison, for example when trained on extracted facial features rather than raw pixels.

The purpose of smart low-light image enhancement for emotion detection (Figure 6.3) is to increase the accuracy of emotion recognition in dark or poorly lit settings. Low light levels often produce low-quality images, which makes it hard for traditional emotion detection systems to pick up crucial facial features. To address this, the system uses image enhancement methods such as gamma correction, histogram equalization, or Retinex-based algorithms to boost contrast and brightness so that expressions are more visible. Besides brightening, noise reduction methods such as Gaussian or median filtering remove noise while preserving important facial features. This preprocessing is essential because low-light images are often noisy, and noise can mislead emotion recognition systems.
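Median filtering, one of the noise reduction methods mentioned, can be sketched in NumPy. It removes isolated noisy pixels while keeping edges sharper than a Gaussian blur would. The 3×3 window and reflective edge padding are assumptions; OpenCV offers the same operation as `cv2.medianBlur`.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def median_filter(img, size=3):
    """Replace each pixel with the median of its size x size neighbourhood.

    Edges are handled by reflecting the image (an assumed choice).
    """
    pad = size // 2
    padded = np.pad(img, pad, mode="reflect")
    windows = sliding_window_view(padded, (size, size))   # shape (H, W, size, size)
    return np.median(windows, axis=(-2, -1)).astype(img.dtype)


# A flat dark patch with one bright "salt" pixel, like sensor noise in low light.
img = np.full((5, 5), 30, dtype=np.uint8)
img[2, 2] = 255
print(median_filter(img)[2, 2])  # 30
```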

Figure 6.3.

Real-Time Monitoring data collected at scheduled time intervals.


Following enhancement, faces are detected and located using face detection techniques such as Haar cascades or more sophisticated deep learning-based detectors like Multi-task Cascaded Convolutional Networks (MTCNN). Before the face is passed to the emotion detection model, this stage ensures that it is correctly framed and aligned.

Emotion recognition is commonly performed with Convolutional Neural Networks (CNNs) trained on datasets of labeled facial expressions. Using the features extracted from the enhanced images, the CNN model classifies a range of emotions, such as happiness, sadness, anger, or surprise. For real-time applications in particular, these CNN models must be efficient and tuned for both speed and accuracy. Because real-time performance is crucial for many use cases, the system is designed to process and enhance images quickly without sacrificing recognition accuracy. Applications that need accurate emotion tracking across a range of lighting conditions include human-computer interaction, low-light surveillance, mental health monitoring, and entertainment.

In conclusion, by resolving lighting issues, this technique improves the overall performance of emotion detection systems, enabling more accurate real-time emotion recognition in low-light conditions and allowing deployment in a variety of real-world scenarios.