Submitted: 29 October 2024

Posted: 31 October 2024

Abstract

Keywords:

0. Introduction

1. Basic Theory

1.1. Unscented Kalman Filter

1.1.1. Definition

1.1.2. Characterization of Applications in Information Processing

- (1) Advantages:

- No Jacobian matrix required: the UKF does not need to compute Jacobian matrices, which simplifies implementation of the algorithm.

- Suitable for highly nonlinear systems: by propagating sigma points through the nonlinear functions, the UKF can capture the nonlinear properties of the system more accurately.

- Less prone to divergence: compared with the EKF, the UKF is less susceptible to instability caused by poorly approximated nonlinear functions, which improves the robustness of the filter.

- Not limited to Gaussian distributions: the UKF makes no special assumption about the shape of the state distribution, so it is more flexible when dealing with non-Gaussian distributions.

- (2) Disadvantages:

- High computational cost: compared with the standard Kalman filter, the UKF is relatively expensive to compute, especially for high-dimensional state spaces.

- Sensitive to initial conditions: an inaccurate initial estimate may degrade the performance of the filter.

- Not suitable for all nonlinear systems: although the UKF works well for most nonlinear systems, it may not achieve good results for extremely nonlinear or very noisy systems.
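The sigma-point idea behind these points can be illustrated with a minimal sketch of the unscented transform. This is not the authors' implementation: the function names, the scaling parameter `kappa`, and the polar-to-Cartesian test function are assumptions chosen for illustration. The sketch draws 2n+1 sigma points for an n-dimensional state, pushes them through a nonlinear function without any Jacobian, and recombines them into a mean and covariance.

```python
import math

def cholesky(a):
    """Lower-triangular factor L with L L^T = a (a symmetric positive definite)."""
    n = len(a)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                low[i][j] = math.sqrt(a[i][i] - s)
            else:
                low[i][j] = (a[i][j] - s) / low[j][j]
    return low

def sigma_points(mean, cov, kappa=1.0):
    """Return 2n+1 sigma points and their weights (kappa is an assumed tuning value)."""
    n = len(mean)
    scale = n + kappa
    root = cholesky([[scale * c for c in row] for row in cov])
    points = [list(mean)]
    weights = [kappa / scale]
    for j in range(n):
        column = [root[i][j] for i in range(n)]
        points.append([m + c for m, c in zip(mean, column)])
        points.append([m - c for m, c in zip(mean, column)])
        weights += [0.5 / scale, 0.5 / scale]
    return points, weights

def unscented_transform(f, mean, cov, kappa=1.0):
    """Propagate a mean and covariance through f using sigma points only."""
    points, weights = sigma_points(mean, cov, kappa)
    ys = [f(p) for p in points]
    m = len(ys[0])
    y_mean = [sum(w * y[i] for w, y in zip(weights, ys)) for i in range(m)]
    y_cov = [
        [sum(w * (y[i] - y_mean[i]) * (y[j] - y_mean[j])
             for w, y in zip(weights, ys)) for j in range(m)]
        for i in range(m)
    ]
    return y_mean, y_cov

def polar_to_cartesian(x):
    """Hypothetical nonlinear measurement: (range, bearing) -> (x, y)."""
    r, theta = x
    return [r * math.cos(theta), r * math.sin(theta)]

mean = [1.0, math.pi / 2]                    # range 1, bearing 90 degrees
cov = [[0.02 ** 2, 0.0], [0.0, 0.3 ** 2]]    # small range noise, large bearing noise
y_mean, y_cov = unscented_transform(polar_to_cartesian, mean, cov)
print(y_mean, y_cov)
```

With a large bearing uncertainty, the transformed mean in the y direction falls below 1, an effect that a first-order linearization around the mean would miss. The cost of 2n+1 function evaluations per step is also where the higher computational load in high-dimensional state spaces comes from.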

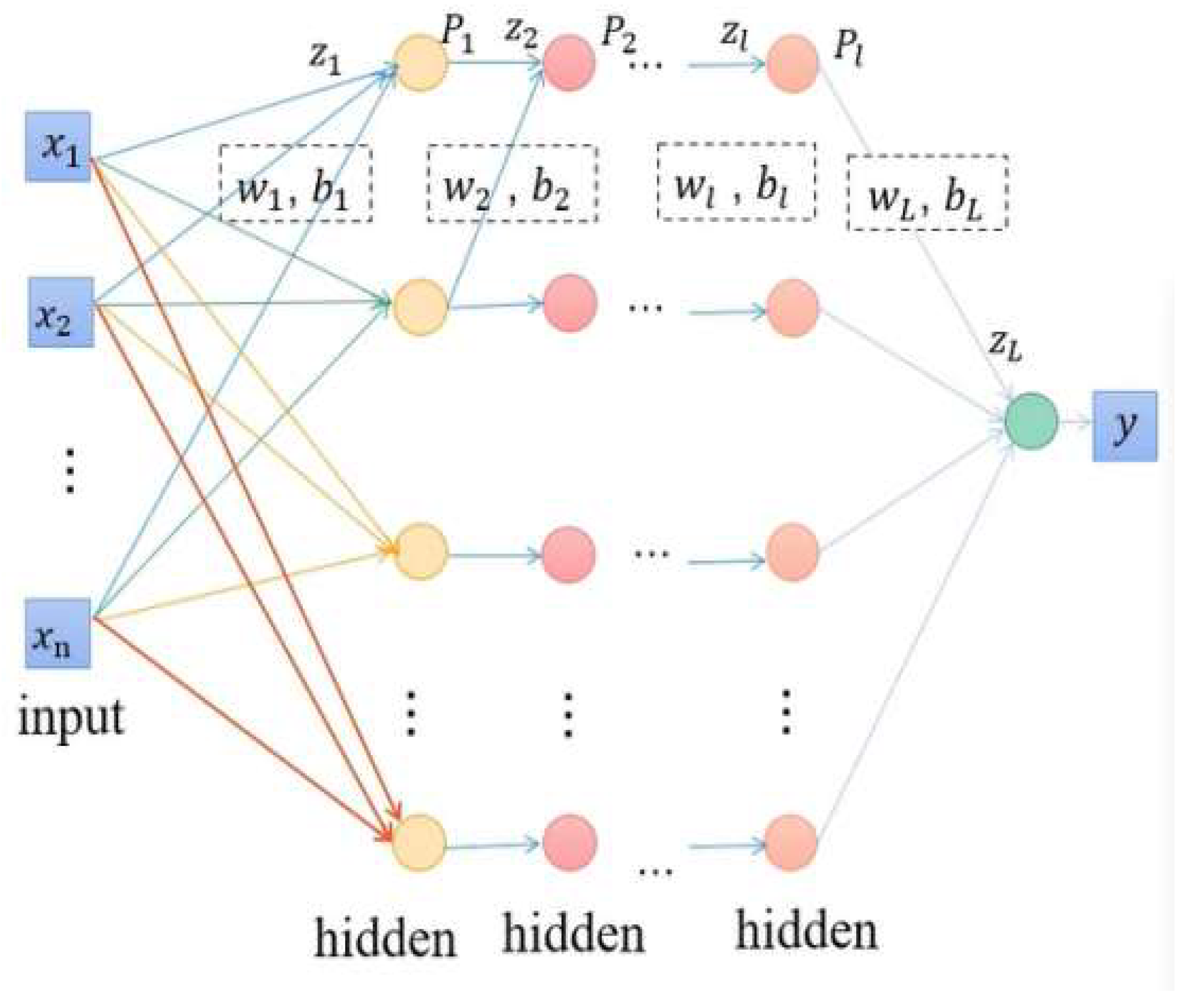

1.2. BP Neural Network

1.2.1. Definition

- (1) Forward propagation

- (2) Gradient descent
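The two steps named above can be sketched for a small network as follows. This is a minimal illustration under stated assumptions, not the network used in the paper: the single tanh hidden layer, the layer size, the learning rate, and the sine-fitting task are all hypothetical choices. Forward propagation computes the output from the current weights, and gradient descent moves each weight against the back-propagated gradient of the mean squared error.

```python
import math
import random

def forward(x, params):
    """Forward propagation: scalar input -> tanh hidden layer -> linear output."""
    w1, b1, w2, b2 = params
    hidden = [math.tanh(w * x + b) for w, b in zip(w1, b1)]
    output = sum(w * h for w, h in zip(w2, hidden)) + b2
    return hidden, output

def mse_loss(data, params):
    """Mean squared error of the network over (input, target) pairs."""
    return sum((forward(x, params)[1] - t) ** 2 for x, t in data) / len(data)

def train_step(data, params, lr):
    """One full-batch gradient-descent step; gradients come from backpropagation."""
    w1, b1, w2, b2 = params
    n_hidden = len(w1)
    g_w1 = [0.0] * n_hidden
    g_b1 = [0.0] * n_hidden
    g_w2 = [0.0] * n_hidden
    g_b2 = 0.0
    for x, target in data:
        hidden, output = forward(x, params)
        d_out = 2.0 * (output - target) / len(data)   # dLoss/dOutput
        g_b2 += d_out
        for j in range(n_hidden):
            g_w2[j] += d_out * hidden[j]
            # Chain rule through tanh: d tanh(z)/dz = 1 - tanh(z)^2
            d_pre = d_out * w2[j] * (1.0 - hidden[j] ** 2)
            g_w1[j] += d_pre * x
            g_b1[j] += d_pre
    return (
        [w - lr * g for w, g in zip(w1, g_w1)],
        [b - lr * g for b, g in zip(b1, g_b1)],
        [w - lr * g for w, g in zip(w2, g_w2)],
        b2 - lr * g_b2,
    )

random.seed(0)                      # fixed seed: results depend on the initial weights
n_hidden = 8                        # assumed hidden-layer size
init_params = (
    [random.uniform(-1, 1) for _ in range(n_hidden)],
    [random.uniform(-1, 1) for _ in range(n_hidden)],
    [random.uniform(-1, 1) for _ in range(n_hidden)],
    0.0,
)
data = [(k / 5.0, math.sin(k / 5.0)) for k in range(-15, 16)]  # toy regression task

params = init_params
losses = []
for _ in range(1000):
    losses.append(mse_loss(data, params))
    params = train_step(data, params, lr=0.01)
print(losses[0], losses[-1])
```

Changing the seed changes the starting weights and therefore the loss curve, which is a simple way to observe the sensitivity to initial parameters discussed for BP networks.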

1.2.2. Characterization of Applications in Information Processing

- (1) Advantages:

- Strong nonlinear modeling ability: the BP neural network can model nonlinear relationships and can approximate arbitrarily complex function mappings.

- Strong learning and inference ability: the backpropagation algorithm trains the model on data, improving its prediction accuracy.

- Applicable to a variety of tasks: it can be applied to classification, regression, clustering, and other machine learning tasks.

- Can handle large amounts of data: it is applicable to large-scale datasets and can be trained and used for prediction in a relatively short time.

- (2) Disadvantages:

- Prone to local optima: training depends on the choice of initial parameters, so the network can easily get stuck in a local optimum and fail to converge to the global optimum.

- Long training time: training usually requires a large number of iterations.

- Sensitive to initial parameters and data preprocessing: results depend strongly on the quality of the initial parameters and of the preprocessing, and different choices may lead to different results.

- Not suitable for all nonlinear systems: for extremely nonlinear or very noisy systems, BP neural networks may also fail to achieve good results.

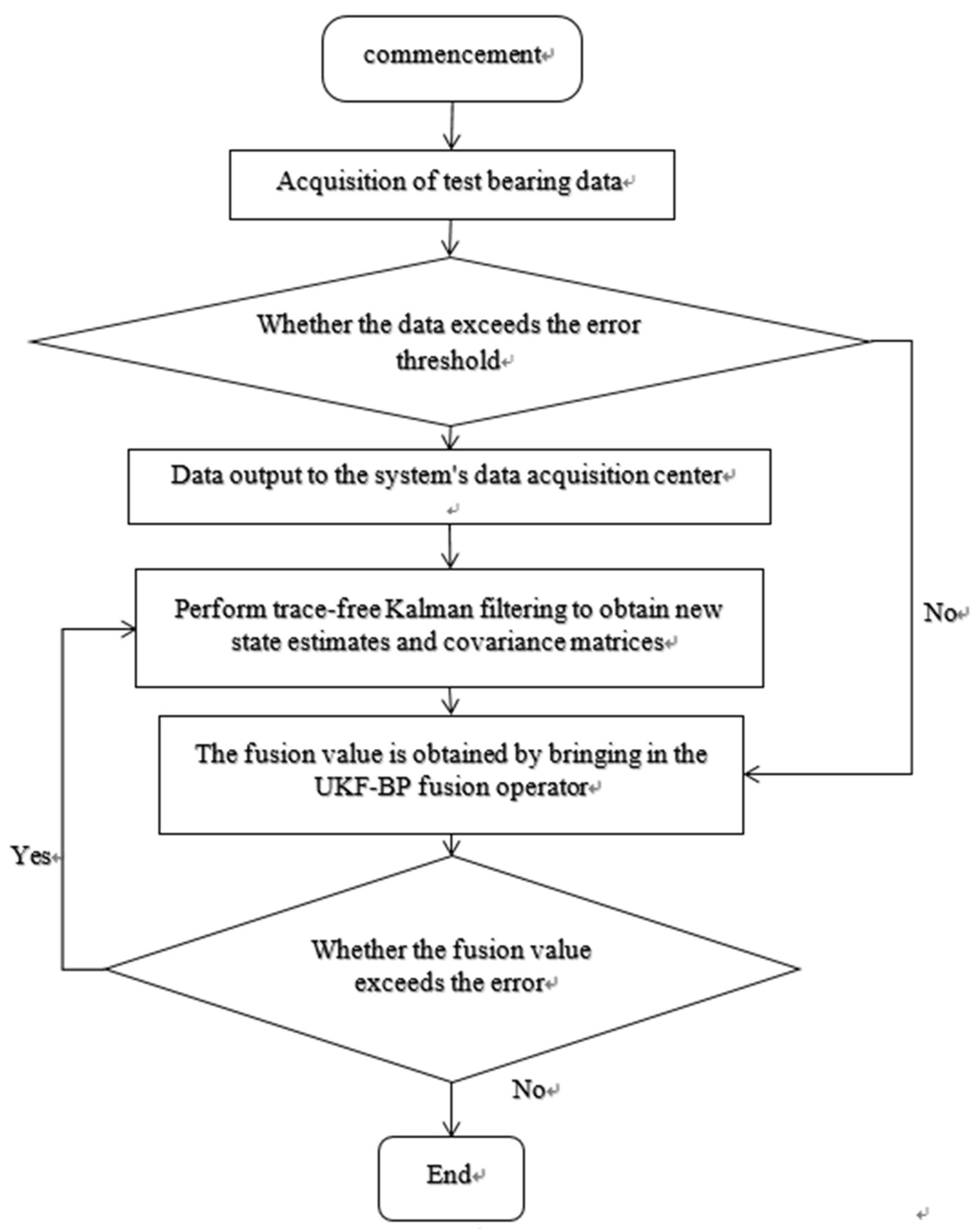

2. Information Data Processing Method Based on UKF-BPNN

2.1. Algorithmic Model

2.2. Implementation Steps

3. Examples of Application

3.1. Experimental Platform Establishment

3.2. Experimental Steps

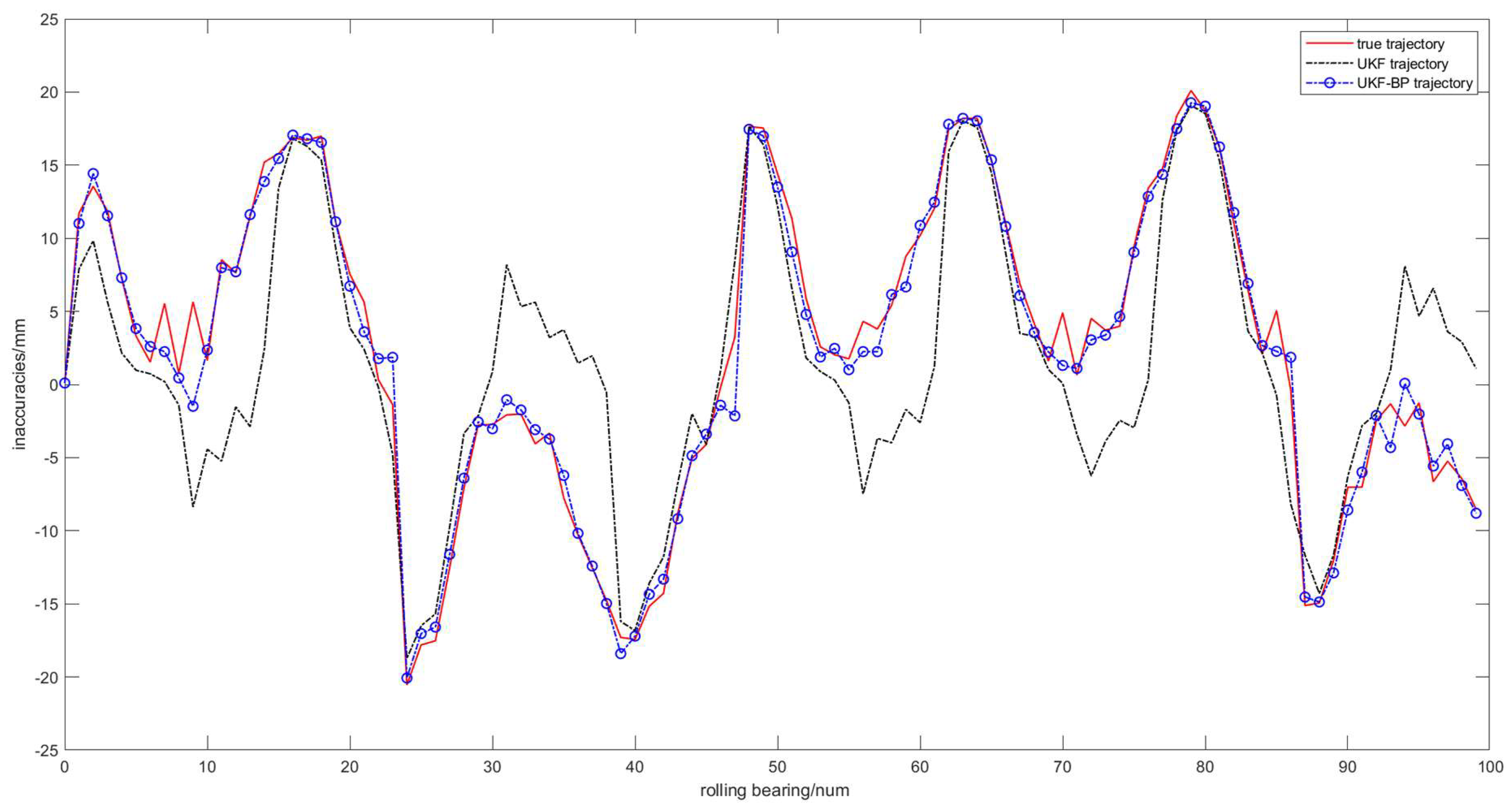

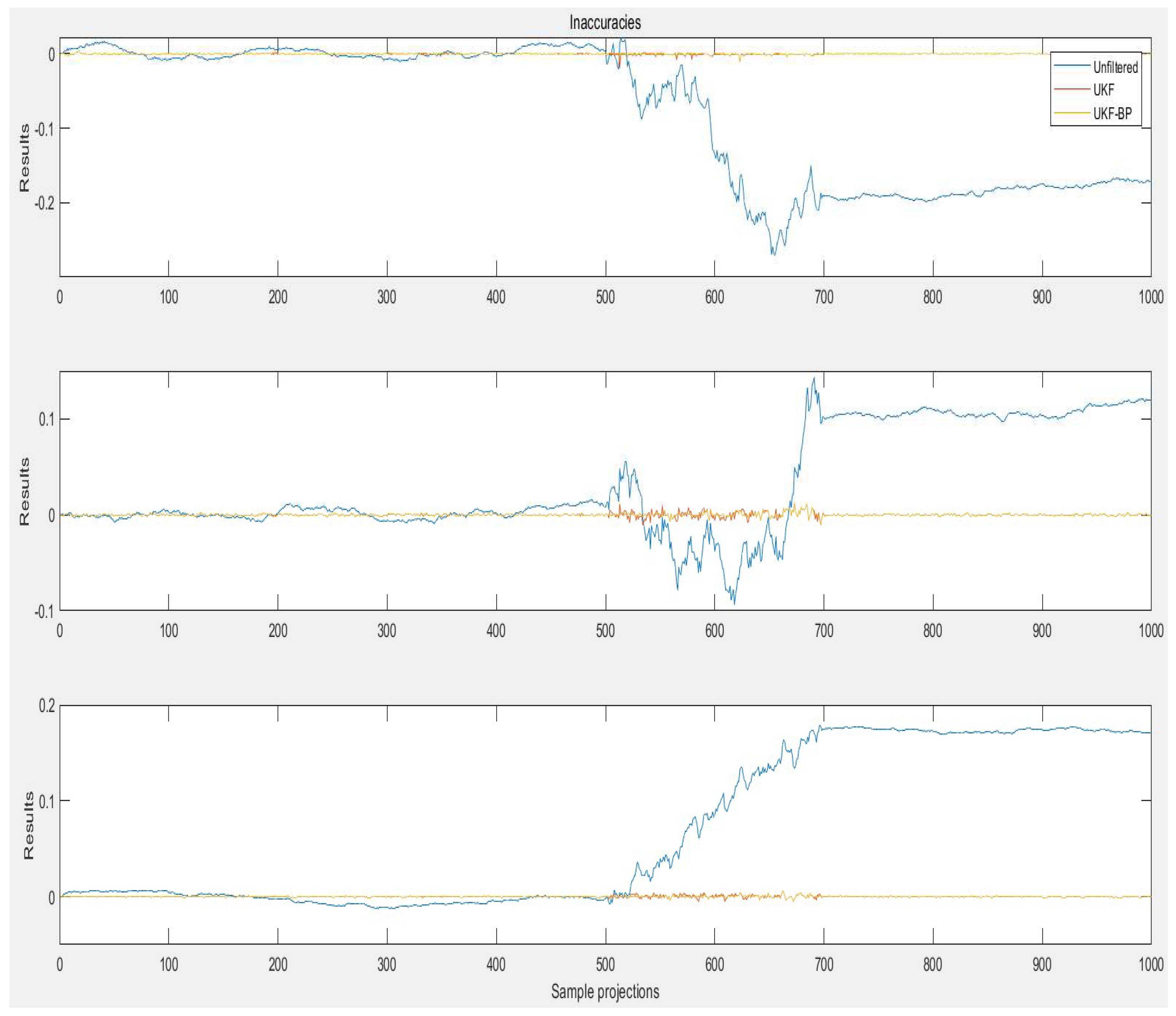

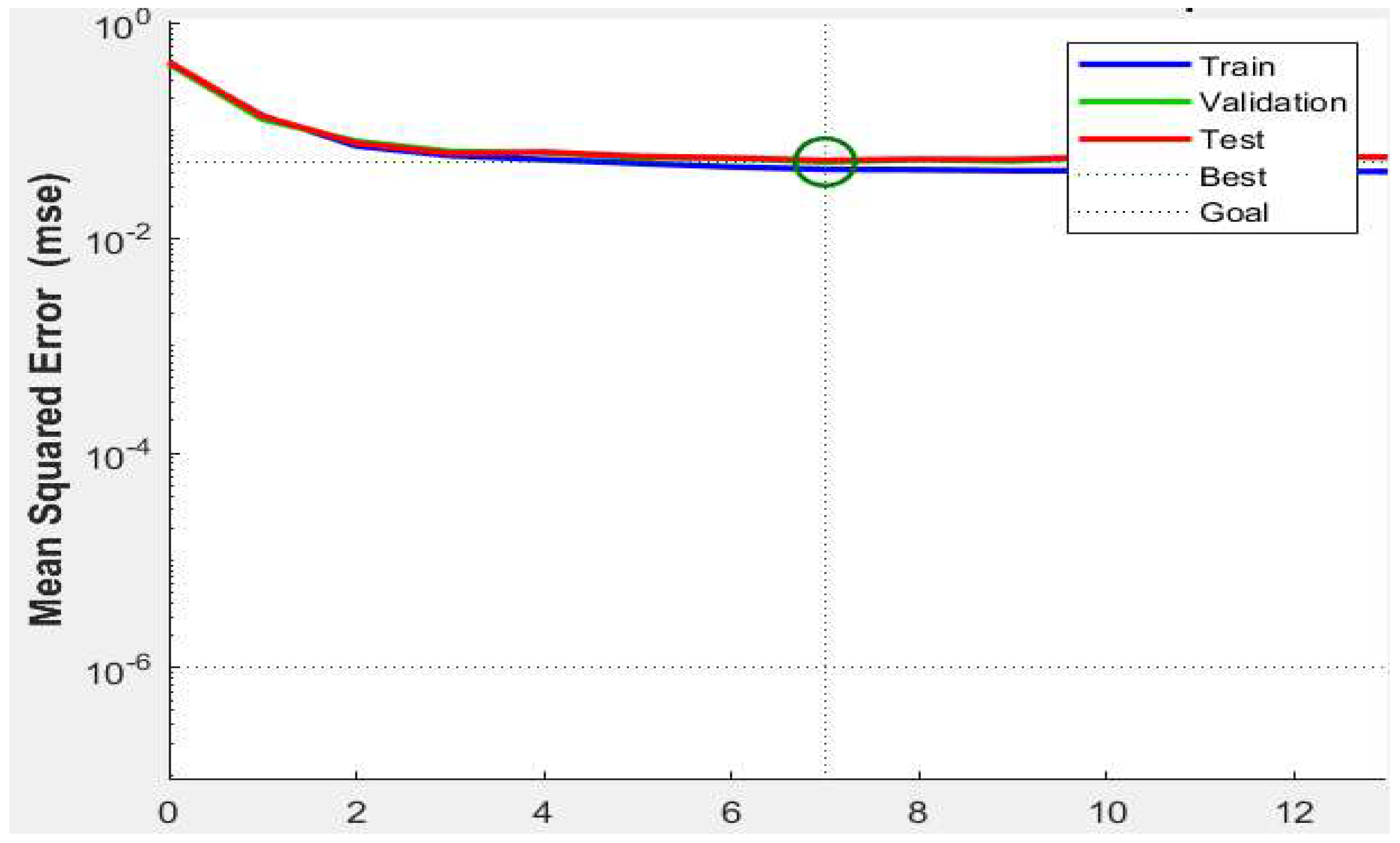

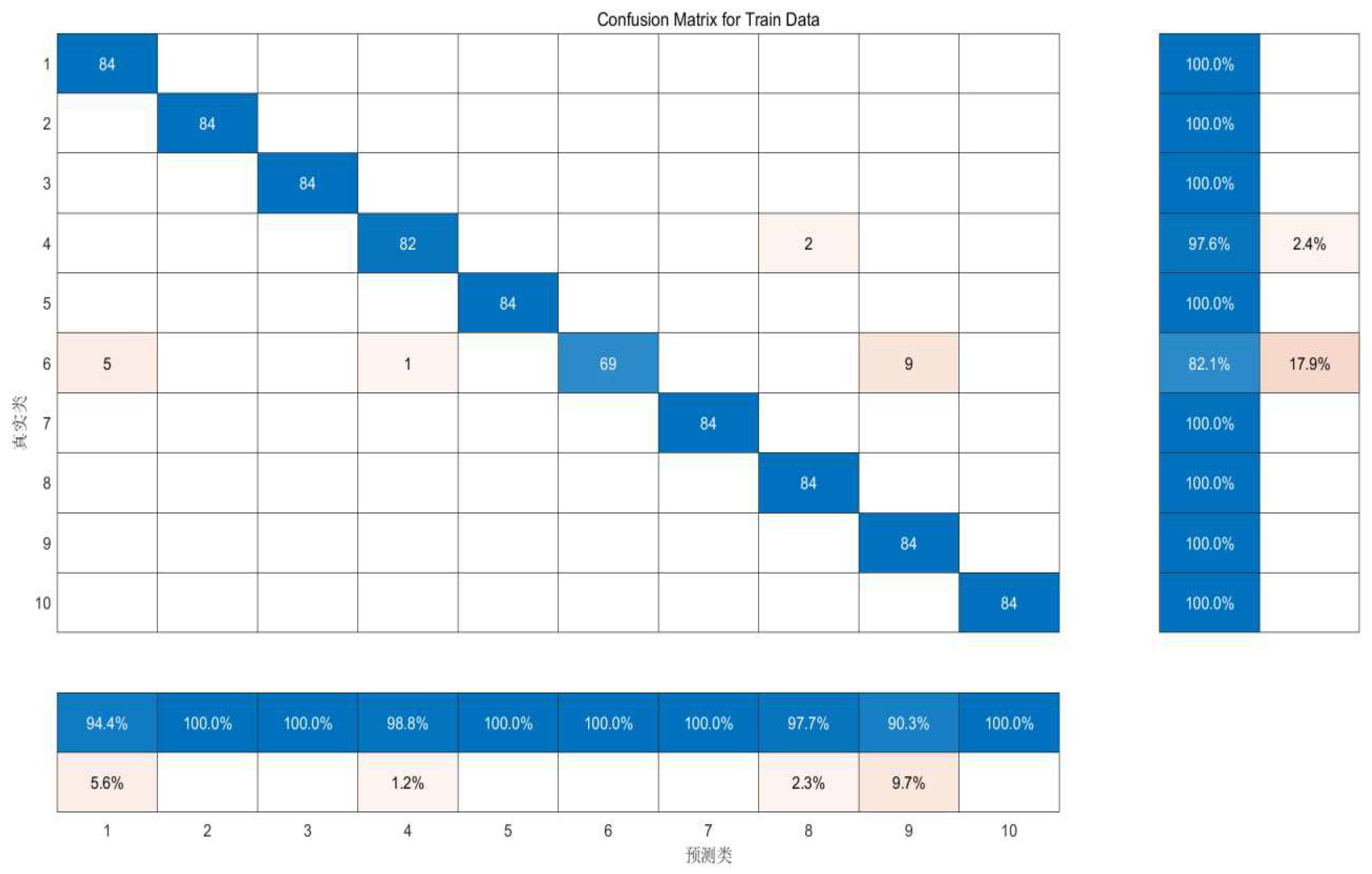

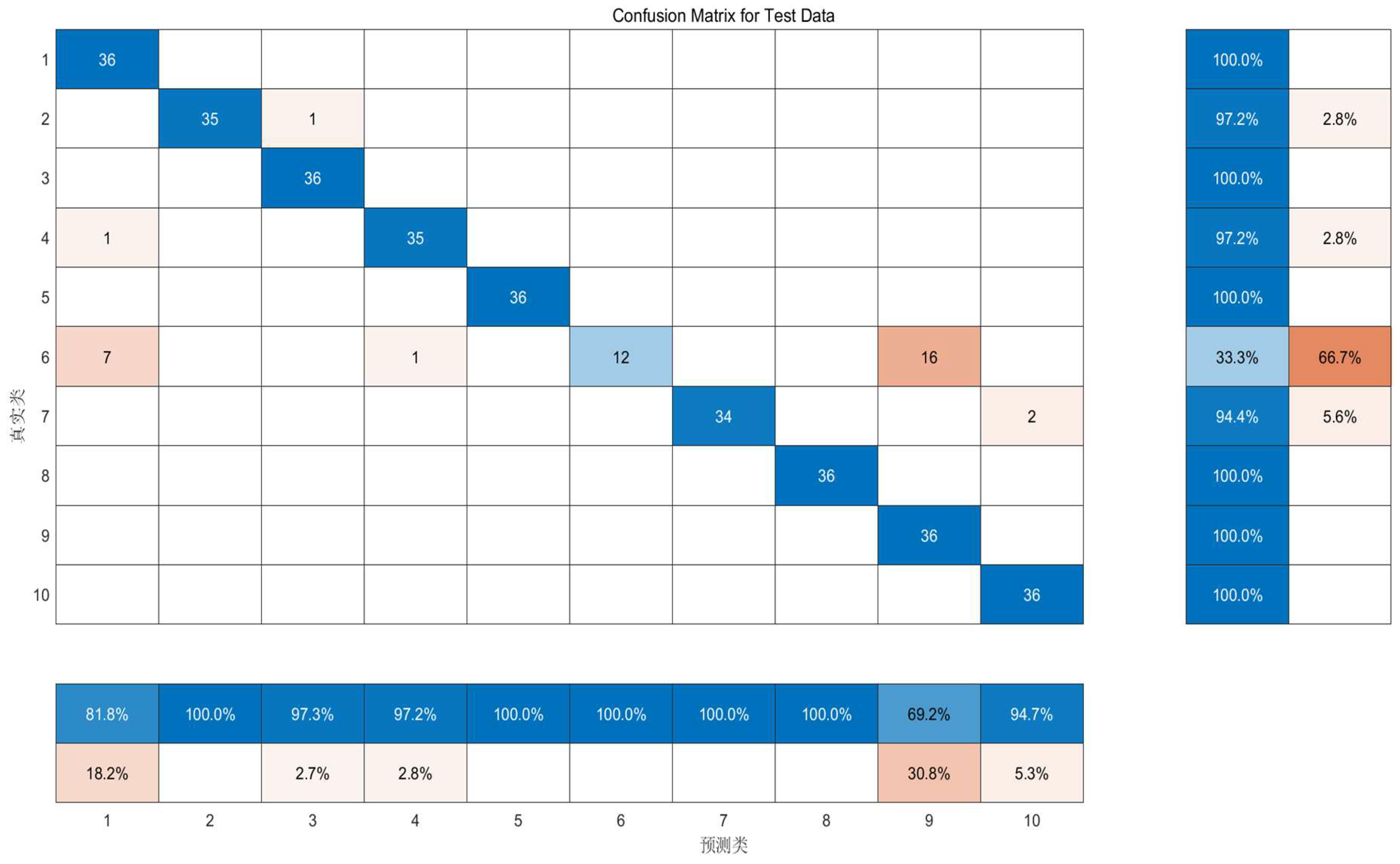

3.3. Information Data Processing Results and Analysis

4. Conclusion

Acknowledgments

References

- Wu, X. Analysis of modern mechanical design and manufacturing process and precision machining technology [J]. China Equipment Engineering 2023, 520, 109–111.

- Zhou, Y.; Hu, J.; Zhao, Y.; et al. Vehicle target tracking based on Kalman filter improved compressed perception algorithm [J]. Journal of Hunan University (Natural Science Edition) 2023, 50, 11–21.

- Yang, Z.; Li, Y.; Wu, G. Research on denoising technique of IMU sensor based on traceless Kalman filter and wavelet analysis [J]. Modern Electronic Technology 2024, 47, 53–59.

- Xie, K.; Qin, P. Research on the extrapolation algorithm of gun position detection radar based on Doppler information [J]. Journal of Ballistics 2018, 30, 53-58+76.

- ZHANG Yu, YAO Yao, LIU Rui, et al. Joint estimation method of SOC/SOP for all-vanadium liquid-flow battery based on adaptive traceless Kalman filtering and economic model predictive control [J/OL]. Energy Storage Science and Technology, 1-13 [2024-07-31].

- Zhang, C.; Wang, L.; Kong, X. A hemispherical resonant gyro temperature modeling compensation method based on KPCA and data processing combined method neural network [J]. Journal of Zhejiang University (Engineering Edition) 2024, 58, 1336–1345.

- DONG Yi-Han, ZENG Sen, ZHOU Xiang. Optimization of BP neural network based on genetic algorithm for RC column skeleton curve prediction [J]. China Water Transportation, 2024, 24(07): 34-36. Journal, 2024, 45(08): 94-99+106.

- Yanqiang Duan. Coal rock identification error analysis based on BP neural network optimization algorithm [J]. Energy and Energy Conservation, 2024, 4-7.

- Nie, J.L.; Qin, Y.; Liu, F. Adaptive UKF-based BP neural network and its application in elevation fitting [J]. Surveying and Mapping Science 2007, 120-122+208.

- T. Haarnoja, A. Ajay, S. Levine, and P. Abbeel, “Backprop KF: Learning discriminative deterministic state estimators,” in Advances in Neural Information Processing Systems, 2016, pp. 4376–4384.

- C.-F. Teng and Y.-L. Chen, “Syndrome enabled unsupervised learning for neural network based polar decoder and jointly optimized blind equalizer,” IEEE Trans. Emerg. Sel. Topics Circuits Syst., 2020.

- Revach, G.; Shlezinger, N.; Ni, X.; Escoriza, A.L.; van Sloun, R.J.; Eldar, Y.C. KalmanNet: Neural network aided Kalman filtering for partially known dynamics. IEEE Trans. Signal Process. 2022, 70, 1532–1547.

- X. Ni, G. Revach, N. Shlezinger, R. J. van Sloun, and Y. C. Eldar, “RTSNET: Deep learning aided Kalman smoothing,” in Proc. IEEE ICASSP, 2022.

- Shlezinger, N.; Eldar, Y.C.; Boyd, S.P. Model-based deep learning: On the intersection of deep learning and optimization. arXiv 2022, arXiv:2205.02640.

- M. B. Matthews. A state-space approach to adaptive nonlinear filtering using recurrent neural networks. In Proceedings IASTED Internat. Symp. Artificial Intelligence Application and Neural Networks, pages 197–200, 1990.

- Puskorius, G.; Feldkamp, L. Decoupled Extended Kalman Filter Training of Feedforward Layered Networks. IJCNN 1991, 1, 771–777.

- Deng, S.; Chang, H.; Qian, D.; Wang, F.; Hua, L.; Jiang, S. Nonlinear dynamic model of ball bearings with elastohydrodynamic lubrication and cage whirl motion, influences of structural sizes, and materials of cage. Nonlinear Dyn. 2022, 110, 2129–2163.

- Bovet, C.; Linares, J.-M.; Zamponi, L.; Mermoz, E. Multibody modeling of non-planar ball bearings. Mechanics & Industry 2013, 14, 335–345.

- Xiu C, Su X, Pan X. Improved target tracking algorithm based on Camshift. In: 2018 Chinese control and decision conference (CCDC), Shenyang, China, 2018, pp.4449–4454. Piscataway, NJ: IEEE.

- Hsia K, Lien SF, Su JP. Moving target tracking based on CamShift approach and kalman filter. Int J Appl Math Inf Sci 2013; 7: 193–200.

- Qu, S.; Yang, H. Multi-target detection and tracking of video sequence based on kalman_BP neural network. Infrared Laser Eng 2013, 42, 2553–2560.

- ReganChai. BP Kalman Fusion Prediction. Online Referencing. https://github.com/ReganChai/BPKalman-FusionPrediction (accessed 21 April 2018).

| Algorithm | MAE | MSE |
| --- | --- | --- |
| UKF | 0.6325 | 0.0693 |
| BPNN | 0.4843 | 0.0773 |
| UKF-BPNN | 0.1132 | 0.0512 |
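For readers unfamiliar with the two error measures in the table, the sketch below shows how MAE and MSE are commonly computed from a sequence of true and estimated values. The function names and the sample values are hypothetical and are not taken from the experiment; the source does not state the units or any normalization applied to its figures.

```python
def mae(y_true, y_pred):
    """Mean absolute error: average of |true - estimate|."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def mse(y_true, y_pred):
    """Mean squared error: average of (true - estimate)^2."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

# Hypothetical values for illustration only (not experimental data).
y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.1, 1.8, 3.3, 3.6]

print(mae(y_true, y_pred))  # about 0.25
print(mse(y_true, y_pred))  # about 0.075
```

When both measures are computed on the same error sequence, the MSE is never smaller than the square of the MAE, which is a useful sanity check when comparing the two columns.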

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).