Submitted:

16 October 2024

Posted:

17 October 2024

Abstract

Keywords:

1. Introduction

- in security and defense, timely UAV detection helps prevent dangerous activities such as espionage, smuggling, and terrorist attacks;

- with regard to privacy, drone identification helps protect individuals and organizations from unauthorized monitoring;

- in airspace safety, timely detection of suspicious UAVs helps prevent mid-air collisions.

2. Understanding LiDAR: Technology, Principles, and Classifications

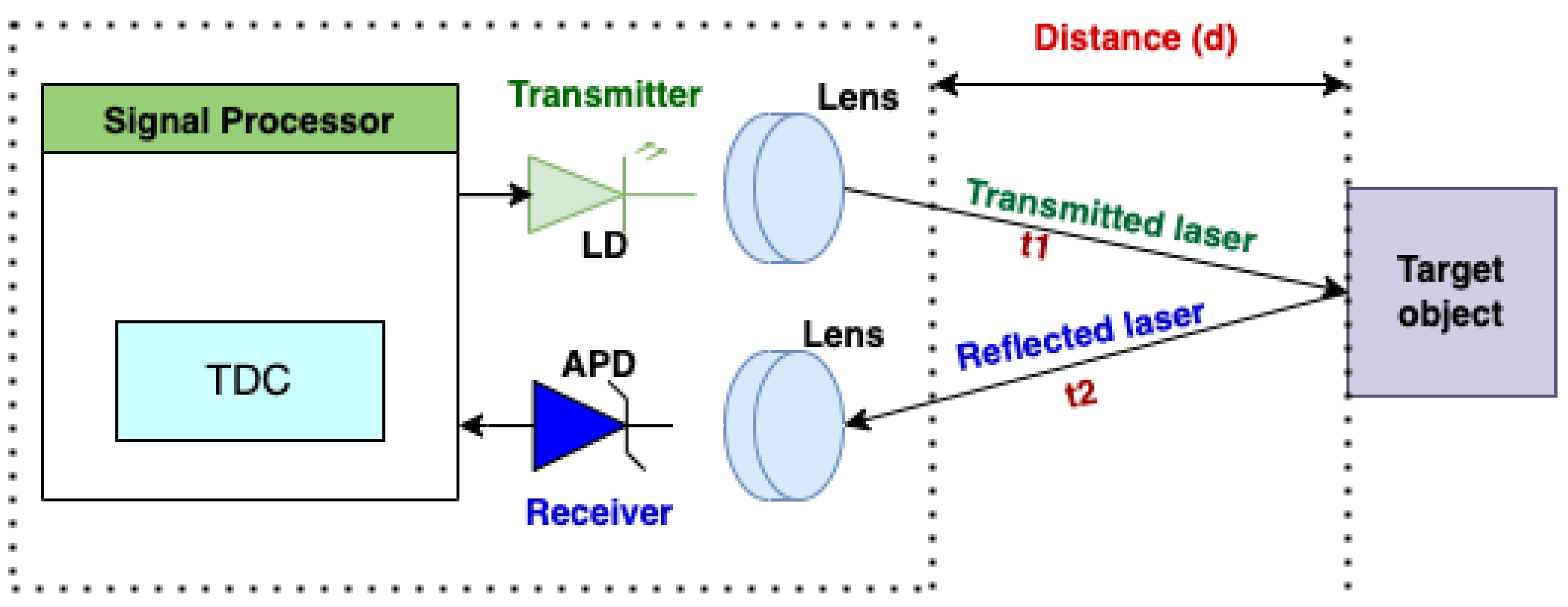

2.1. Key Components and Operational Principles of LiDAR systems

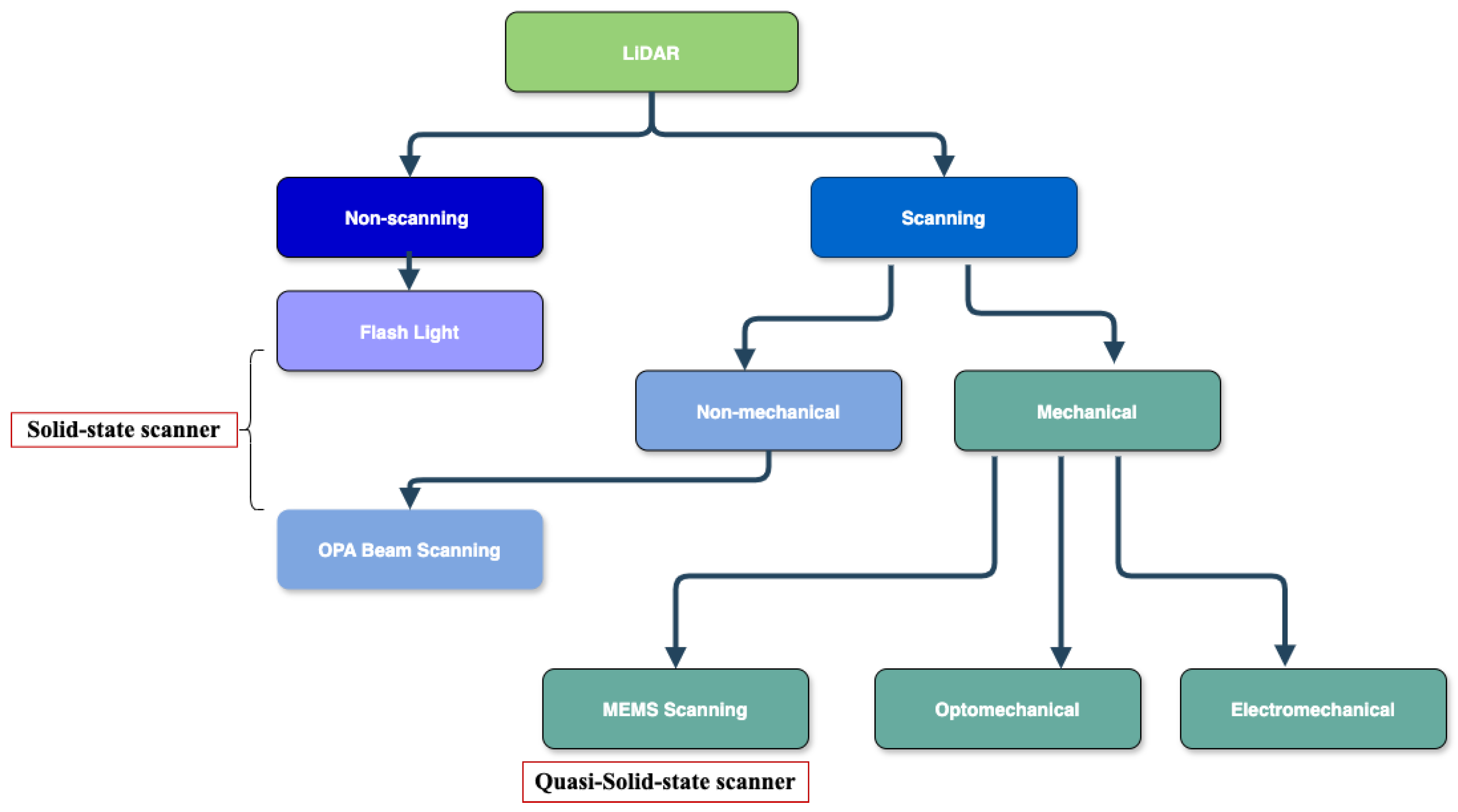

2.2. Types of LiDAR Sensors: A Comprehensive Classification

3. Deep Learning Approaches in LiDAR Data Processing

3.1. Overview of Deep Learning Techniques

4. State-of-the-Art Approaches in LiDAR-Based Drone Detection

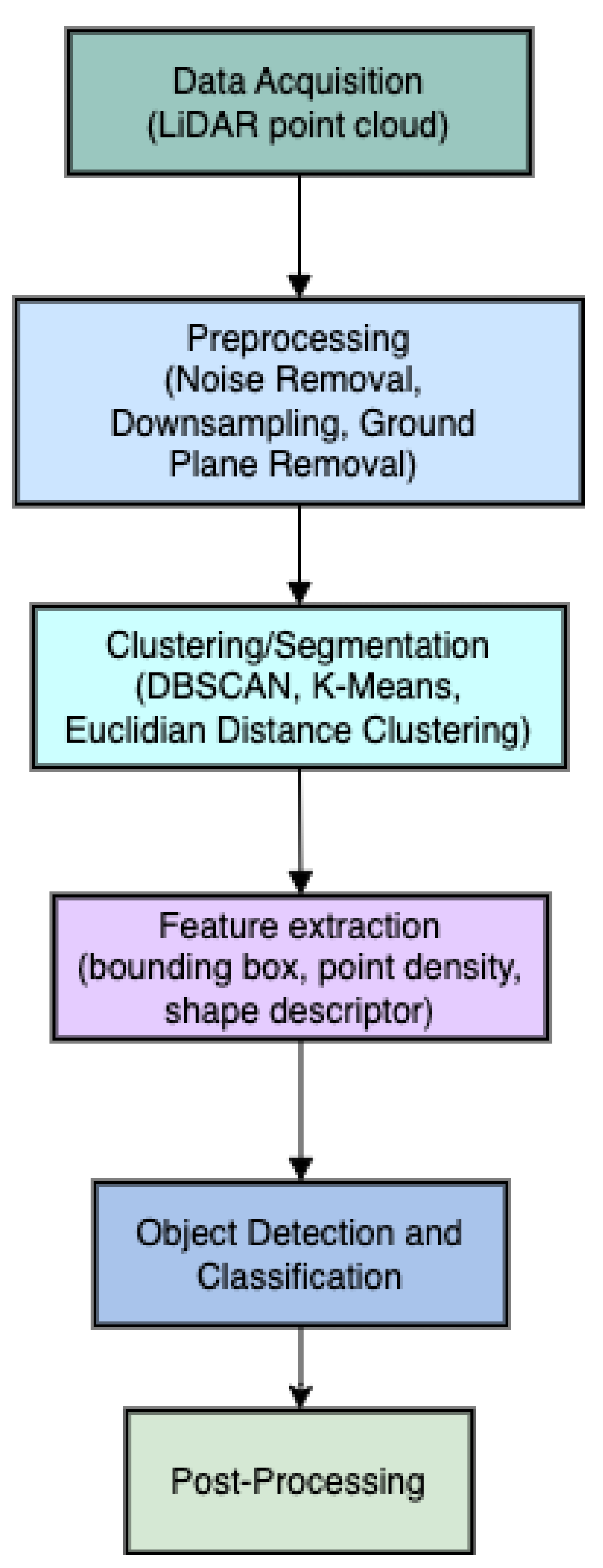

4.1. Clustering-Based Approaches in LiDAR-Based Drone Detection

4.2. ML and DL Approaches in LiDAR-Based Drone Detection

4.3. Multimodal Sensor Fusion for Enhanced UAV Detection: Leveraging LiDAR and Complementary Technologies

4.3.1. Integrating LiDAR for Robust UAV Detection in GNSS-Enabled Environments

5. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ADAS | Advanced driving assistance systems |
| APE | Absolute pose error |
| AGV | Automated Guided Vehicle |
| AI | Artificial Intelligence |
| AP | Average precision |
| APD | Avalanche photodiode |
| API | Application Programming Interface |
| ATSP | Asymmetric traveling salesman problem |
| BEV | Bird’s-Eye View |
| BiLSTM | Bidirectional Long Short-Term Memory |
| BVLOS | Beyond Visual Line of Sight |
| CBRDD | Clustering algorithm based on relative distance and density |
| CLSTM | Convolutional Long Short-Term Memory |
| CNN | Convolutional Neural Network |
| CRNN | Convolutional recurrent neural network |
| DBSCAN | Density-Based Spatial Clustering of Applications with Noise |
| DGCNN | Dynamic Graph Convolutional Neural Network |
| DNN | Deep neural network |
| EDC | Euclidean Distance Clustering |
| EIG | Environmental information gain |
| FAA | Federal Aviation Administration |
| FAEP | Fast Autonomous Exploration Planner |
| FMCW | Frequency-modulated continuous wave |
| FDR | False discovery rate |
| FNR | False negative rate |
| FoV | Field of View |
| FUEL | Fast UAV Exploration |
| GAN | Generative Adversarial Network |
| GCN | Graph Convolutional Network |
| GNN | Graph neural network |
| GRU | Gated Recurrent Unit |
| HAR | Human activity recognition |
| IoU | Intersection over Union |
| IEEE | Institute of Electrical and Electronics Engineers |
| IMU | Inertial Measurement Unit |
| ITS | Intelligent Transport System |
| LAEA | LiDAR-assisted Exploration Algorithm |
| LD | Laser diode |
| LiDAR | Light detection and ranging |
| LoS | Line-of-Sight |
| LSTM | Long short-term memory |
| MLP | Multi-Layer Perceptron |
| MOCAP | Motion capture |
| MOT | Multi-object tracking |
| MRS | Mobile robot system |
| MVF | Multi-view fusion |
| NIR | Near-infrared ray |
| OPA | Optical Phased Array |
| PIC | Photonic integrated circuit |
| RCS | Radar cross section |
| RGB-D | Red, Green, Blue plus Depth |
| R-LVIO | Resilient LiDAR-Visual-Inertial Odometry |
| RMSE | Root Mean Squared Error |
| ROI | Region of interest |
| SECOND | Sparsely Embedded Convolutional Detection |
| SNR | Signal-to-noise ratio |
| SPAD | Single Photon Avalanche Diode |
| TCP/IP | Transmission Control Protocol/Internet Protocol |
| TDC | Time-to-digital converter |
| ToF | Time of Flight |

References

- Drones and Airplanes: A Growing Threat to Aviation Safety. Available online: https://www.skysafe.io/blog/drones-and-airplanes-a-growing-threat-to-aviation-safety (accessed on 30 March 2024).

- Seidaliyeva, U.; Ilipbayeva, L.; Taissariyeva, K.; Smailov, N.; Matson, E.T. Advances and Challenges in Drone Detection and Classification Techniques: A State-of-the-Art Review. Sensors 2024, 24, 125.

- Drone Incident Review: First Half of 2023. Available online: https://d-fendsolutions.com/blog/drone-incident-review-first-half-2023/ (accessed on 8 August 2023).

- Yan, J.; Hu, H.; Gong, J.; Kong, D.; Li, D. Exploring Radar Micro-Doppler Signatures for Recognition of Drone Types. Drones 2023, 7, 280.

- Rudys, S.; Laučys, A.; Ragulis, P.; Aleksiejūnas, R.; Stankevičius, K.; Kinka, M.; Razgūnas, M.; Bručas, D.; Udris, D.; Pomarnacki, R. Hostile UAV Detection and Neutralization Using a UAV System. Drones 2022, 6, 250.

- Brighente, A.; Ciattaglia, G.; Gambi; Peruzzi, G.; Pozzebon, A.; Spinsante, S. Radar-Based Autonomous Identification of Propellers Type for Malicious Drone Detection. In Proceedings of the 2024 IEEE Sensors Applications Symposium (SAS), Naples, Italy, 2024, pp. 1–6.

- Alam, S.S.; Chakma, A.; Rahman, M.H.; Bin Mofidul, R.; Alam, M.M.; Utama, I.B.K.Y.; Jang, Y.M. RF-Enabled Deep-Learning-Assisted Drone Detection and Identification: An End-to-End Approach. Sensors 2023, 23, 4202.

- Yousaf, J.; Zia, H.; Alhalabi, M.; Yaghi, M.; Basmaji, T.; Shehhi, E.A.; Gad, A.; Alkhedher, M.; Ghazal, M. Drone and Controller Detection and Localization: Trends and Challenges. Appl. Sci. 2022, 12, 12612.

- Aouladhadj, D.; Kpre, E.; Deniau, V.; Kharchouf, A.; Gransart, C.; Gaquière, C. Drone Detection and Tracking Using RF Identification Signals. Sensors 2023, 23, 7650.

- Lofù, D.; Di Gennaro, P.; Tedeschi, P.; Di Noia, T.; Di Sciascio, E. URANUS: Radio Frequency Tracking, Classification and Identification of Unmanned Aircraft Vehicles. IEEE Open Journal of Vehicular Technology 2023, 4, 921–935.

- Casabianca, P.; Zhang, Y. Acoustic-Based UAV Detection Using Late Fusion of Deep Neural Networks. Drones 2021, 5, 54.

- Tejera-Berengue, D.; Zhu-Zhou, F.; Utrilla-Manso, M.; Gil-Pita, R.; Rosa-Zurera, M. Analysis of Distance and Environmental Impact on UAV Acoustic Detection. Electronics 2024, 13, 643.

- Utebayeva, D.; Ilipbayeva, L.; Matson, E.T. Practical Study of Recurrent Neural Networks for Efficient Real-Time Drone Sound Detection: A Review. Drones 2023, 7, 26.

- Salman, S.; Mir, J.; Farooq, M.T.; Malik, A.N.; Haleemdeen, R. Machine Learning Inspired Efficient Audio Drone Detection using Acoustic Features. In Proceedings of the 2021 International Bhurban Conference on Applied Sciences and Technologies (IBCAST), Islamabad, Pakistan, 2021, pp. 335–339.

- Sun, Y.; Zhi, X.; Han, H.; Jiang, S.; Shi, T.; Gong, J.; Zhang, W. Enhancing UAV Detection in Surveillance Camera Videos through Spatiotemporal Information and Optical Flow. Sensors 2023, 23, 6037.

- Seidaliyeva, U.; Akhmetov, D.; Ilipbayeva, L.; Matson, E.T. Real-Time and Accurate Drone Detection in a Video with a Static Background. Sensors 2020, 20, 3856.

- Samadzadegan, F.; Dadrass Javan, F.; Ashtari Mahini, F.; Gholamshahi, M. Detection and Recognition of Drones Based on a Deep Convolutional Neural Network Using Visible Imagery. Aerospace 2022, 9, 31.

- Jamil, S.; Fawad; Rahman, M.; Ullah, A.; Badnava, S.; Forsat, M.; Mirjavadi, S.S. Malicious UAV Detection Using Integrated Audio and Visual Features for Public Safety Applications. Sensors 2020, 20, 3923.

- Kim, J.; et al. Deep Learning Based Malicious Drone Detection Using Acoustic and Image Data. In Proceedings of the 2022 Sixth IEEE International Conference on Robotic Computing (IRC), Italy, 2022, pp. 91–92.

- Aledhari, M.; Razzak, R.; Parizi, R.M.; Srivastava, G. Sensor Fusion for Drone Detection. In Proceedings of the 2021 IEEE 93rd Vehicular Technology Conference (VTC2021-Spring), Helsinki, Finland, 2021, pp. 1–7.

- Xie, W.; Wan, Y.; Wu, G.; Li, Y.; Zhou, F.; Wu, Q. A RF-Visual Directional Fusion Framework for Precise UAV Positioning. IEEE Internet of Things Journal.

- Ki, M.; Cha, J.; Lyu, H. Detect and avoid system based on multi sensor fusion for UAV. In Proceedings of the 2018 International Conference on Information and Communication Technology Convergence (ICTC), Jeju, Korea (South), 2018, pp. 1107–1109.

- Mehta, V.; Dadboud, F.; Bolic, M.; Mantegh, I. A Deep Learning Approach for Drone Detection and Classification Using Radar and Camera Sensor Fusion. In Proceedings of the 2023 IEEE Sensors Applications Symposium (SAS), Ottawa, ON, Canada, 2023, pp. 1–6.

- Li, N.; Ho, C.; Xue, J.; Lim, L.; Chen, G.; Fu, Y.H.; Lee, L. A Progress Review on Solid-State LiDAR and Nanophotonics-Based LiDAR Sensors. Laser and Photonics Reviews 2022.

- Behroozpour, B.; Sandborn, P.; Wu, M.; Boser, B. Lidar System Architectures and Circuits. IEEE Commun. Mag. 2017, 55, 135–142.

- Alaba, S.Y.; Ball, J.E. A Survey on Deep-Learning-Based LiDAR 3D Object Detection for Autonomous Driving. Sensors 2022, 22, 9577.

- Lee, S.; Lee, D.; Choi, P.; Park, D. Accuracy–Power Controllable LiDAR Sensor System with 3D Object Recognition for Autonomous Vehicle. Sensors 2020, 20, 5706.

- Chen, C.; Guo, J.; Wu, H.; Li, Y.; Shi, B. Performance Comparison of Filtering Algorithms for High-Density Airborne LiDAR Point Clouds over Complex LandScapes. Remote Sens. 2021, 13, 2663.

- Feneyrou, P.; Leviandier, L.; Minet, J.; Pillet, G.; Martin, A.; Dolfi, D.; Schlotterbeck, J.P.; Rondeau, P.; Lacondemine, X.; Rieu, A.; et al. Frequency-modulated multifunction lidar for anemometry, range finding, and velocimetry—1. Theory and signal processing. Appl. Opt. 2017, 56, 9663–9675.

- Wang, D.; Watkins, C.; Xie, H. MEMS Mirrors for LiDAR: A Review. Micromachines 2020, 11, 456.

- Lin, C.-H.; Zhang, H.-S.; Lin, C.-P.; Su, G.-D.J. Design and Realization of Wide Field-of-View 3D MEMS LiDAR. IEEE Sensors Journal 2022, 22, 115–120.

- Berens, F.; Reischl, M.; Elser, S. Generation of synthetic Point Clouds for MEMS LiDAR Sensor. TechRxiv, 21 April.

- Haider, A.; Cho, Y.; Pigniczki, M.; Köhler, M.H.; Haas, L.; Kastner, L.; Fink, M.; Schardt, M.; Cichy, Y.; Koyama, S.; et al. Performance Evaluation of MEMS-Based Automotive LiDAR Sensor and Its Simulation Model as per ASTM E3125-17 Standard. Sensors 2023, 23, 3113.

- Yoo, H.W.; Druml, N.; Brunner, D.; et al. MEMS-based lidar for autonomous driving. Elektrotech. Inftech. 2018, 135, 408–415.

- Li, L.; Xing, K.; Zhao, M.; Wang, B.; Chen, J.; Zhuang, P. Optical–Mechanical Integration Analysis and Validation of LiDAR Integrated Systems with a Small Field of View and High Repetition Frequency. Photonics 2024, 11, 179–10.

- Raj, T.; Hashim, F.H.; Huddin, A.B.; Ibrahim, M.F.; Hussain, A. A Survey on LiDAR Scanning Mechanisms. Electronics 2020, 9, 741.

- Zheng, H.; Han, Y.; Qiu, L.; Zong, Y.; Li, J.; Zhou, Y.; He, Y.; Liu, J.; Wang, G.; Chen, H.; et al. Long-Range Imaging LiDAR with Multiple Denoising Technologies. Appl. Sci. 2024, 14, 3414.

- Wang, Zh.; Menenti, M. Challenges and Opportunities in Lidar Remote Sensing. Frontiers in Remote Sensing 2021, 2.

- Yi, Y.; Wu, D.; Kakdarvishi, V.; Yu, B.; Zhuang, Y.; Khalilian, A. Photonic Integrated Circuits for an Optical Phased Array. Photonics 2024, 11, 243.

- Fu, Y.; Chen, B.; Yue, W.; Tao, M.; Zhao, H.; Li, Y.; Li, X.; Qu, H.; Li, X.; Hu, X.; Song, J. Target-adaptive optical phased array lidar. Photonics Research 2024, 12, 904.

- Tontini, A.; Gasparini, L.; Perenzoni, M. Numerical Model of SPAD-Based Direct Time-of-Flight Flash LIDAR CMOS Image Sensors. Sensors 2020, 20, 5203.

- Xia, Z.Q. Flash LiDAR single photon imaging over 50 km. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 2023, 48, 1601–1606.

- Assaf, E.H.; von Einem, C.; Cadena, C.; Siegwart, R.; Tschopp, F. High-Precision Low-Cost Gimballing Platform for Long-Range Railway Obstacle Detection. Sensors 2022, 22, 474.

- Athavale, R.; et al. Low cost solution for 3D mapping of environment using 1D LIDAR for autonomous navigation. IOP Conf. Ser. Mater. Sci. Eng. 2019, 561, 012104.

- Dogru, S.; Marques, L. Drone Detection Using Sparse Lidar Measurements. IEEE Robotics and Automation Letters 2022, 7, 3062–3069.

- Hou, X.; Pan, Z.; Lu, L.; Wu, Y.; Hu, J.; Lyu, Y.; Zhao, C. LAEA: A 2D LiDAR-Assisted UAV Exploration Algorithm for Unknown Environments. Drones 2024, 8, 128.

- Gonz, A.; Torres, F.

- Mihálik, M.; Hruboš, M.; Vestenický, P.; Holečko, P.; Nemec, D.; Malobický, B.; Mihálik, J. A Method for Detecting Dynamic Objects Using 2D LiDAR Based on Scan Matching. Appl. Sci. 2022, 12, 5641.

- Fagundes, L.A., Jr.; Caldeira, A.G.; Quemelli, M.B.; Martins, F.N.; Brandão, A.S. Analytical Formalism for Data Representation and Object Detection with 2D LiDAR: Application in Mobile Robotics. Sensors 2024, 24, 2284.

- Tasnim, A.A.; Kuantama, E.; Han, R.; Dawes, J.; Mildren, R.; Nguyen, P. Towards Robust Lidar-based 3D Detection and Tracking of UAVs. In Proceedings of the DroNet ’23: Ninth Workshop on Micro Aerial Vehicle Networks, Systems, and Applications, Helsinki, Finland, 18 June 2023.

- Cho, M.; Kim, E. 3D LiDAR Multi-Object Tracking with Short-Term and Long-Term Multi-Level Associations. Remote Sens. 2023, 15, 5486.

- Peng, H.; Huang, D. Small Object Detection with lightweight PointNet Based on Attention Mechanisms. J. Phys.: Conf. Ser. 2024, 2829.

- Nong, X.; Bai, W.; Liu, G. Airborne LiDAR point cloud classification using PointNet++ network with full neighborhood features. PLoS ONE 2023, 18, e0280346.

- Ye, M.; Xu, S.; Cao, T. HVNet: Hybrid Voxel Network for LiDAR Based 3D Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2020, pp. 1631–1640.

- Chen, Y.; Liu, J.; Zhang, X.; Qi, X.; Jia, J. VoxelNeXt: Fully Sparse VoxelNet for 3D Object Detection and Tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2023, pp. 21674–21683.

- Milioto, A.; Vizzo, I.; Behley, J.; Stachniss, C. RangeNet++: Fast and Accurate LiDAR Semantic Segmentation. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 2019, pp. 4213–4220.

- Alnaggar, Y.; Afifi, M.; Amer, K.; ElHelw, M. Multi Projection Fusion for Real-Time Semantic Segmentation of 3D LiDAR Point Clouds. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), 2021, pp. 1800–1809.

- Chen, J.; Lei, B.; Song, Q.; Ying, H.; Chen, Z.; Wu, J. A Hierarchical Graph Network for 3D Object Detection on Point Clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2020, pp. 392–401.

- Liu, X.; Zhang, B.; Liu, N. The Graph Neural Network Detector Based on Neighbor Feature Alignment Mechanism in LIDAR Point Clouds. Machines 2023, 11, 116.

- Lis, K.; Kryjak, T. PointPillars Backbone Type Selection for Fast and Accurate LiDAR Object Detection. In: Chmielewski, L.J., Orłowski, A. (eds) Computer Vision and Graphics. ICCVG 2022. Lecture Notes in Networks and Systems, 2023, vol. 598. Springer, Cham.

- Manduhu, M.; Dow, A.; Trslic, P.; Dooly, G.; Blanck, B.; Riordan, J. Airborne Sense and Detect of Drones using LiDAR and adapted PointPillars DNN. arXiv 2023.

- Zheng, L.; Zhang, P.; Tan, J.; Li, F. The Obstacle Detection Method of UAV Based on 2D Lidar. IEEE Access 2019, 7, 163437–163448.

- Xiao, J.; Pisutsin, P.; Tsao, C.W.; Feroskhan, M. Clustering-based Learning for UAV Tracking and Pose Estimation. arXiv preprint, arXiv:2405.16867.

- Wu, D.; Liang, Z.; Chen, G. Deep learning for LiDAR-only and LiDAR-fusion 3D perception: a survey. Intell. Robot. 2022, 2, 105–129.

- Ding, Z.; Sun, Y.; Xu, S.; Pan, Y.; Peng, Y.; Mao, Z. Recent Advances and Perspectives in Deep Learning Techniques for 3D Point Cloud Data Processing. Robotics 2023, 12, 100.

- Alaba, S.Y.; Ball, J.E. A Survey on Deep-Learning-Based LiDAR 3D Object Detection for Autonomous Driving. Sensors 2022, 22, 9577.

- Sun, Z.; Li, Z.; Liu, Y. An Improved Lidar Data Segmentation Algorithm Based on Euclidean Clustering. In Proceedings of the 11th International Conference on Modelling, Identification and Control (ICMIC2019). Lecture Notes in Electrical Engineering, 2019, vol. 582. Springer, Singapore.

- Dow, A.; et al. Intelligent Detection and Filtering of Swarm Noise from Drone Acquired LiDAR Data using PointPillars. In Proceedings of the OCEANS 2023 - Limerick, Limerick, Ireland, 2023, pp. 1–6.

- Bouazizi, M.; Lorite Mora, A.; Ohtsuki, T. A 2D-Lidar-Equipped Unmanned Robot-Based Approach for Indoor Human Activity Detection. Sensors 2023, 23, 2534.

- Park, C.; Lee, S.; Kim, H.; Lee, D. Aerial Object Detection and Tracking based on Fusion of Vision and Lidar Sensors using Kalman Filter for UAV. International Journal of Advanced Smart Convergence 2020, 9, 232–238.

- Ma, Z.; Yao, W.; Niu, Y.; et al. UAV low-altitude obstacle detection based on the fusion of LiDAR and camera. Auton. Intell. Syst. 2021, 1, 12.

- Hammer, M.; Borgmann, B.; Hebel, M.; Arens, M. A multi-sensorial approach for the protection of operational vehicles by detection and classification of small flying objects. In Proceedings of the Electro-Optical Remote Sensing XIV, online, 2020.

- Deng, T.; Zhou, Y.; Wu, W.; Li, M.; Huang, J.; Liu, Sh.; Song, Y.; Zuo, H.; Wang, Y.; Yue, Y.; Wang, H.; Chen, W. Multi-Modal UAV Detection, Classification and Tracking Algorithm – Technical Report for CVPR 2024 UG2 Challenge. arXiv 2024, 10.48550/arXiv.2405.16464.

- Sier, H.; Yu, X.; Catalano, I.; Queralta, J.P.; Zou, Z.; Westerlund, T. UAV Tracking with Lidar as a Camera Sensor in GNSS-Denied Environments. In Proceedings of the 2023 International Conference on Localization and GNSS (ICL-GNSS), Castellón, Spain, 2023, pp. 1–7.

- Catalano, I.; Yu, X.; Queralta, J.P. Towards Robust UAV Tracking in GNSS-Denied Environments: A Multi-LiDAR Multi-UAV Dataset. In Proceedings of the 2023 IEEE International Conference on Robotics and Biomimetics (ROBIO), Koh Samui, Thailand, 2023, pp. 1–7.

- Zhang, B.; Shao, X.; Wang, Y.; Sun, G.; Yao, W. R-LVIO: Resilient LiDAR-Visual-Inertial Odometry for UAVs in GNSS-denied Environment. Drones 2024, 8, 487.

| LiDAR sensor type | Description | Field of View (FoV) | Scanning mechanism | Use cases | Advantages | Limitations |
|---|---|---|---|---|---|---|
| MEMS LiDAR [30,31,32,33,34] | uses moving micro-mirror plates to steer the laser beam in free space while the rest of the system’s components remain motionless | moderate, depends on mirror steering angle | quasi-solid-state scanning (a combination of solid-state LiDAR and mechanical scanning) | autonomous vehicles; drones and robotics; medical imaging; space exploration; mobile devices | accurate steering with minimal moving components; superior in terms of size, resolution, scanning speed, and cost | limited range and FoV; sensitivity to vibrations and environmental factors |
| Optomechanical LiDAR [35,36,37] | uses mechanical/moving components (mirrors, prisms or entire sensor heads) to steer the laser beam and scan the environment | wide FoV (up to 360°) | rotating and oscillating mirror, spinning prism | remote sensing, self-driving cars, aerial surveying and mapping, robotics, security | long range, high accuracy and resolution, wide FoV, fast scanning | bulky and heavy, high cost and power consumption |
| Electromechanical LiDAR [38] | uses electrically controlled motors or actuators to move mechanical parts that steer the laser beam in various directions | wide FoV (up to 360°) | mirror, prism or entire sensor head | autonomous vehicles, remote sensing, atmospheric studies, surveying and mapping | enhanced scanning patterns, wide FoV, moderate cost, long range, high precision and accuracy | high power consumption, limited durability, bulky |
| OPA LiDAR [39,40] | employs optical phased arrays (OPAs) to steer the laser beam without any moving components | flexible FoV, electronically controlled, can be narrow or wide | solid-state (non-mechanical) beam scanning mechanism | autonomous vehicles; high-precision sensing; compact 3D mapping systems | no moving parts; rapid beam steering; compact size; energy efficiency | limited steering range and beam quality; high manufacturing costs |
| Flash LiDAR [41,42] | employs a broad laser beam and a large photodetector array to gather 3D data in a single shot | wide FoV (up to 120° horizontally, 90° vertically) | no scanning mechanism | terrestrial and space applications; advanced driving assistance systems (ADAS) | no moving parts; instantaneous capture; real-time 3D imaging | limited range; lower resolution; sensitive to light and weather conditions |

| DL approach | Data representation | Main techniques | Strengths | Limitations | Examples |
|---|---|---|---|---|---|
| Point-based [52,53] | point clouds | directly processes point clouds and captures features using shared MLPs | direct processing of raw point clouds; efficient for sparse data; avoids voxelization and quantization artifacts | computationally expensive due to large-scale and irregular point clouds | PointNet, PointNet++ |
| Voxel-based [54,55] | voxel grids | converts the sparse and irregular point cloud into a volumetric 3D grid of voxels | well-structured representation; compatible with 3D CNNs; suitable for capturing global context | high memory usage and computational cost due to voxelization; loss of precision in 3D space due to quantization; loss of detail in sparse data regions | VoxelNet, SECOND |
| Projection-based [56,57] | plane (image), spherical, cylindrical, BEV projection | projects the 3D point cloud onto a 2D plane | efficient processing using 2D CNNs | loss of spatial features due to 3D-to-2D projection | RangeNet++, BEV, PIXOR, SqueezeSeg |
| Graph-based [58,59] | adjacency matrix, feature matrices, graph Laplacian | models point clouds as a graph, where each point is regarded as a node and edges represent the interactions between them | effective for dealing with sparse, non-uniform point clouds; enables both local and global context-aware detection; ideal for capturing spatial relationships between points | high computational complexity due to large point clouds | GNN, DGCNN |
| Hybrid approach [60,61] | combination of raw point clouds, voxels, projections etc. | combines several methods to improve the accuracy of 3D object detection, segmentation, and classification tasks | improved object localization and segmentation accuracy, flexibility | high memory and computational resources, complex architecture | PointPillars, MVF |
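To make the voxel-based and projection-based rows of the table more concrete, the following is a minimal illustrative sketch in plain Python. It is not taken from any of the cited methods: the function names (`voxelize`, `voxel_centroids`, `bev_occupancy`), the voxel and cell sizes, and the sample points are all assumptions chosen for illustration. It shows how a point cloud can be quantized into a voxel grid and how it can be flattened into a bird's-eye-view (BEV) occupancy grid, which is where the quantization and 3D-to-2D information losses listed in the table come from.

```python
from collections import defaultdict
from math import floor


def voxelize(points, voxel_size):
    """Group (x, y, z) points by the integer index of the voxel containing them."""
    voxels = defaultdict(list)
    for x, y, z in points:
        key = (floor(x / voxel_size), floor(y / voxel_size), floor(z / voxel_size))
        voxels[key].append((x, y, z))
    return dict(voxels)


def voxel_centroids(voxels):
    """Summarize each occupied voxel by the centroid of its points.

    Individual point positions inside a voxel are lost at this step,
    which is the quantization effect mentioned in the table.
    """
    centroids = {}
    for key, pts in voxels.items():
        n = len(pts)
        centroids[key] = tuple(sum(p[i] for p in pts) / n for i in range(3))
    return centroids


def bev_occupancy(points, cell_size, x_range, y_range):
    """Project points onto the ground plane and count hits per 2D cell.

    The z coordinate is discarded, so height information is lost.
    Points outside the given ranges are ignored.
    """
    nx = int(round((x_range[1] - x_range[0]) / cell_size))
    ny = int(round((y_range[1] - y_range[0]) / cell_size))
    grid = [[0] * ny for _ in range(nx)]
    for x, y, _z in points:
        if x_range[0] <= x < x_range[1] and y_range[0] <= y < y_range[1]:
            # min() guards against floating-point rounding at the upper edge
            i = min(int((x - x_range[0]) / cell_size), nx - 1)
            j = min(int((y - y_range[0]) / cell_size), ny - 1)
            grid[i][j] += 1
    return grid


if __name__ == "__main__":
    # Hypothetical sample cloud: two nearby points, one farther away, one elevated.
    cloud = [(0.1, 0.1, 0.1), (0.2, 0.3, 0.4), (1.5, 0.2, 0.1), (-0.5, 0.0, 2.2)]
    occupied = voxelize(cloud, voxel_size=1.0)
    print(len(occupied), "occupied voxels")
    print(voxel_centroids(occupied))
    print(bev_occupancy(cloud, cell_size=1.0, x_range=(-1.0, 2.0), y_range=(0.0, 1.0)))
```

In this sketch only occupied voxels are stored (a dictionary rather than a dense 3D array), which is one common way to limit the memory cost that the table attributes to voxel grids; a real detector would feed such a grid into a 3D or 2D CNN rather than stopping at the counts.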

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).