1. Introduction

Criminal acts deprive humanity of peace and safety. Societies counter such acts in several ways, including social norms, religion, and man-made laws. Criminologists study crime and deviant behavior to improve countermeasures against crime, and applying these studies can help fight it. There are also preventive regulations and corrective actions taken after a crime occurs. Corrective actions usually aim to ensure that the criminal is prosecuted and that justice is served. Typically, the process of investigating who committed a crime and the story behind it has three stages: (1) identifying and securing the crime scene, where evidence is collected and forensic photographs are taken; (2) analysis, in which lab specialists analyze the collected evidence, witnesses are questioned, and suspects are interrogated; and (3) drawing conclusions and apprehending the primary suspects for prosecution and trial. In this study, we examine the possibility of implementing robotic applications in the crime investigation chain, specifically in the interrogation of suspects, and the productivity of such an implementation.

Moreover, a robotic application in crime investigation has already been implemented by UK police [8]. However, one of the current challenges for robotics is human interaction, and this type of implementation requires a high level of human-robot interaction. There are many active and passive interrogation techniques; some fall within the law and ethics of interrogation, while others violate them. Passive interrogation interactions will require natural language recognition and machine vision. In addition, active interrogation interactions will require speaking and physical contact.

2. Literature Review

We surveyed related work on multiple topics concerning our problem, and each topic will be reflected in our proposed system. The surveyed topics are as follows: implementation of interrogation techniques, quantification of witness/interrogator reliability, lie detection, bluffing recognition, emotion recognition, and intent recognition from physical contact.

2.1. Interrogation Techniques

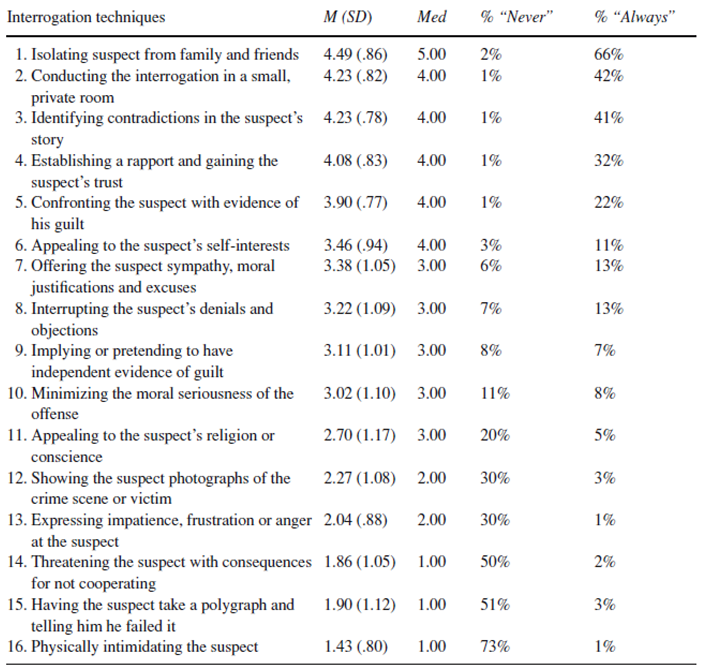

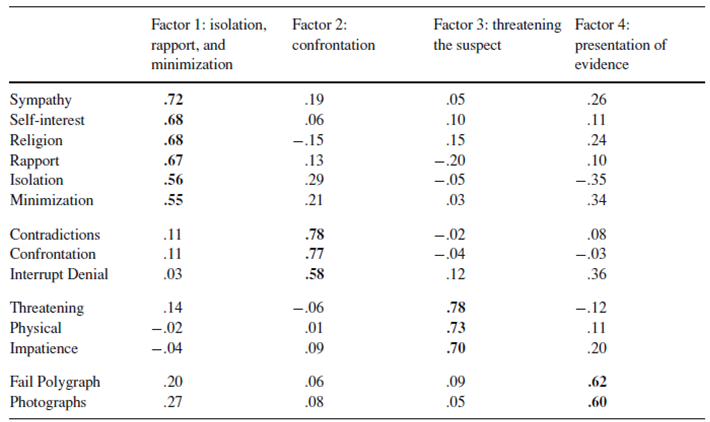

Interrogation is an extraordinarily interactive and complex procedure. This complexity stems from the complexity of humans and the variety of personalities, psychological states, norms, and ideologies, among other factors. However, interrogators have several techniques for various situations and mentalities. Kassin et al. [1] surveyed 631 police investigators, gathered several statistics, and analyzed the interrogation techniques used, as shown in Table 1. Further analyses identified correlations between various attributes during interrogation, as shown in Table 2.

Furthermore, Mann et al. [3] studied a particular interrogation technique: they examined the effect of a second interrogator's presence and interaction. They concluded that a second interrogator has a positive effect on interrogation and deception detection. Thus, using a robot as a second interviewer could have a positive effect if applied correctly.

2.2. Witness/Interrogator Reliability Quantifying

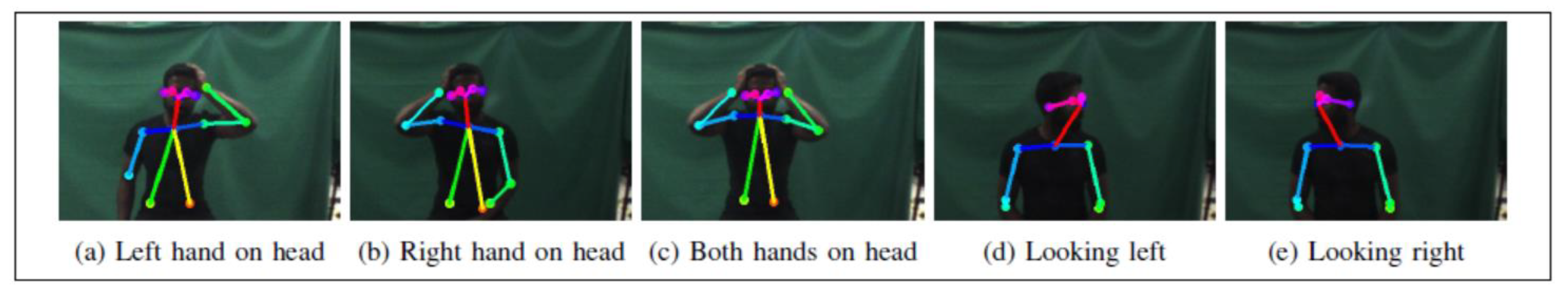

The witness's trustworthiness can also influence the result of any investigation. For example, Surendran and Wagner show that a robot interacting with a person can detect and understand that person's level of trustworthiness based on surface cues [19]. An example is shown in Figure 1.

Another motivation for having a robot in the interrogation process is to standardize the performance level across interrogations. One paper, based on a study of 49 investigators, examined three factors that decrease interrogation quality. The first is time pressure in cases where information is needed quickly, which can produce poor judgment and restrict decision making. The second is the heavy workload on investigators, which can reduce the effort they put into any investigation. The third is the work environment and how it influences the quality of work of individual investigators [20].

2.3. Lying Detection

One paper introduced a humanoid robot that uses machine learning for lie detection. It showed that a robot could detect deception from the additional cognitive load incurred when someone is lying compared with telling the truth, since lying requires constructing a coherent and plausible story. This cognitive load can be linked to eye blinking and pupil dilation, which are classified as oculomotor patterns. The machine learning output is based on this higher cognitive cost, so deception results in a higher score [5].
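The cognitive-load idea above can be illustrated with a small scoring sketch. This is not the classifier used in [5], which is learned from data; it is a minimal hand-weighted example that assumes blink rate and pupil diameter are measured during a neutral baseline and again during an answer, and that any deviation from the baseline counts toward the score. The function names, the equal weights, and the units are all hypothetical.

```python
from statistics import mean

# Hypothetical weights chosen for illustration only; a trained model would
# learn how much each oculomotor feature contributes.
BLINK_WEIGHT = 0.5
PUPIL_WEIGHT = 0.5


def relative_deviation(baseline, observed):
    """Absolute fractional change of the observed mean from the baseline mean."""
    base = mean(baseline)
    if base == 0:
        raise ValueError("baseline mean must be non-zero")
    return abs(mean(observed) - base) / base


def cognitive_load_score(baseline_blinks, answer_blinks,
                         baseline_pupil_mm, answer_pupil_mm):
    """Weighted sum of the deviations in blink rate and pupil diameter.

    Assumption: a larger deviation from the neutral baseline indicates a
    higher cognitive load, regardless of the direction of the change.
    """
    blink_dev = relative_deviation(baseline_blinks, answer_blinks)
    pupil_dev = relative_deviation(baseline_pupil_mm, answer_pupil_mm)
    return BLINK_WEIGHT * blink_dev + PUPIL_WEIGHT * pupil_dev


if __name__ == "__main__":
    # Blink counts per minute and pupil diameters in millimetres (made-up values).
    score = cognitive_load_score([20, 20], [10, 10], [4.0, 4.0], [5.0, 5.0])
    print(f"cognitive load score: {score:.3f}")
```

In a real system the score would only be meaningful relative to a per-person baseline, which is why the sketch takes baseline samples as explicit inputs.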

Another paper used the same technique with two kinds of investigation: human-to-human and human-to-robot. In the experiment, young participants witnessed a crime in a video in which the victim was a member of their family. The outcome showed that the data obtained with the human investigator was better than the data obtained with the robot investigator. However, the accuracy achieved with the robot data is promising and leaves room for improvement [4].

2.4. Bluffing Recognition

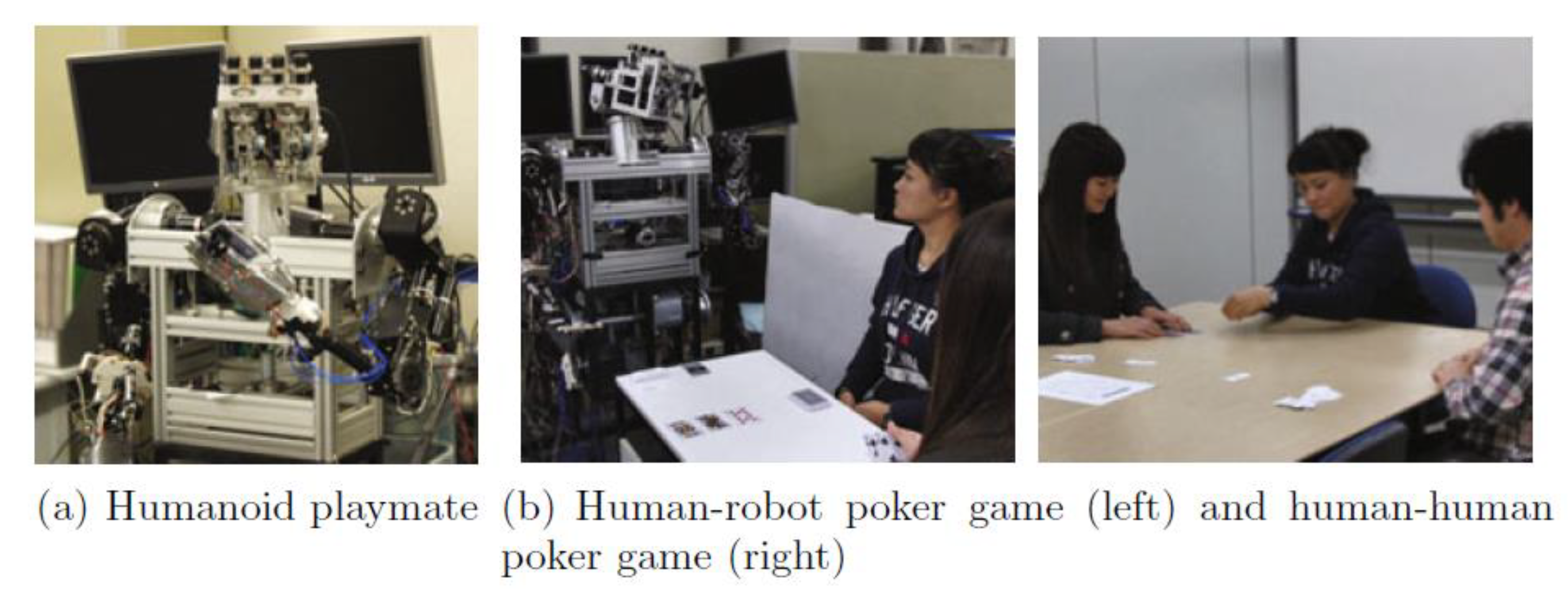

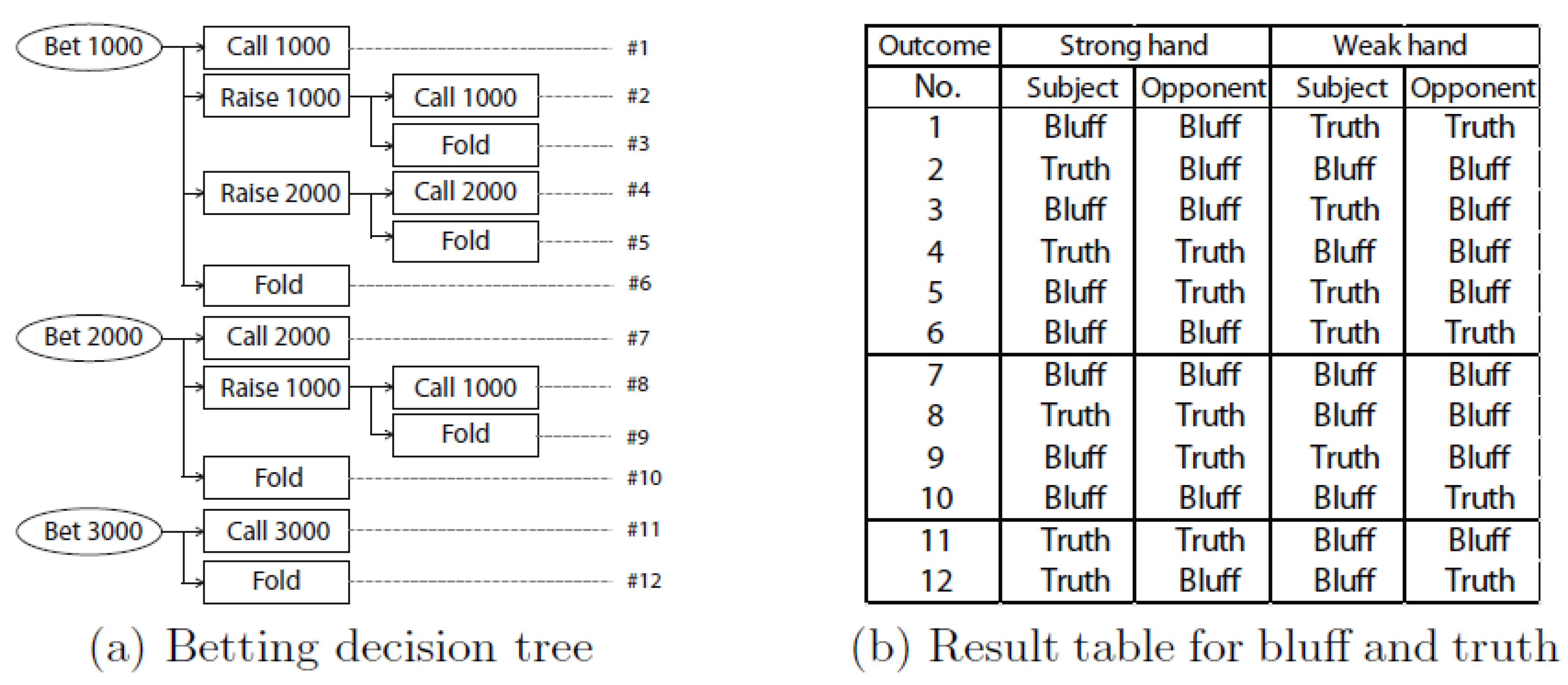

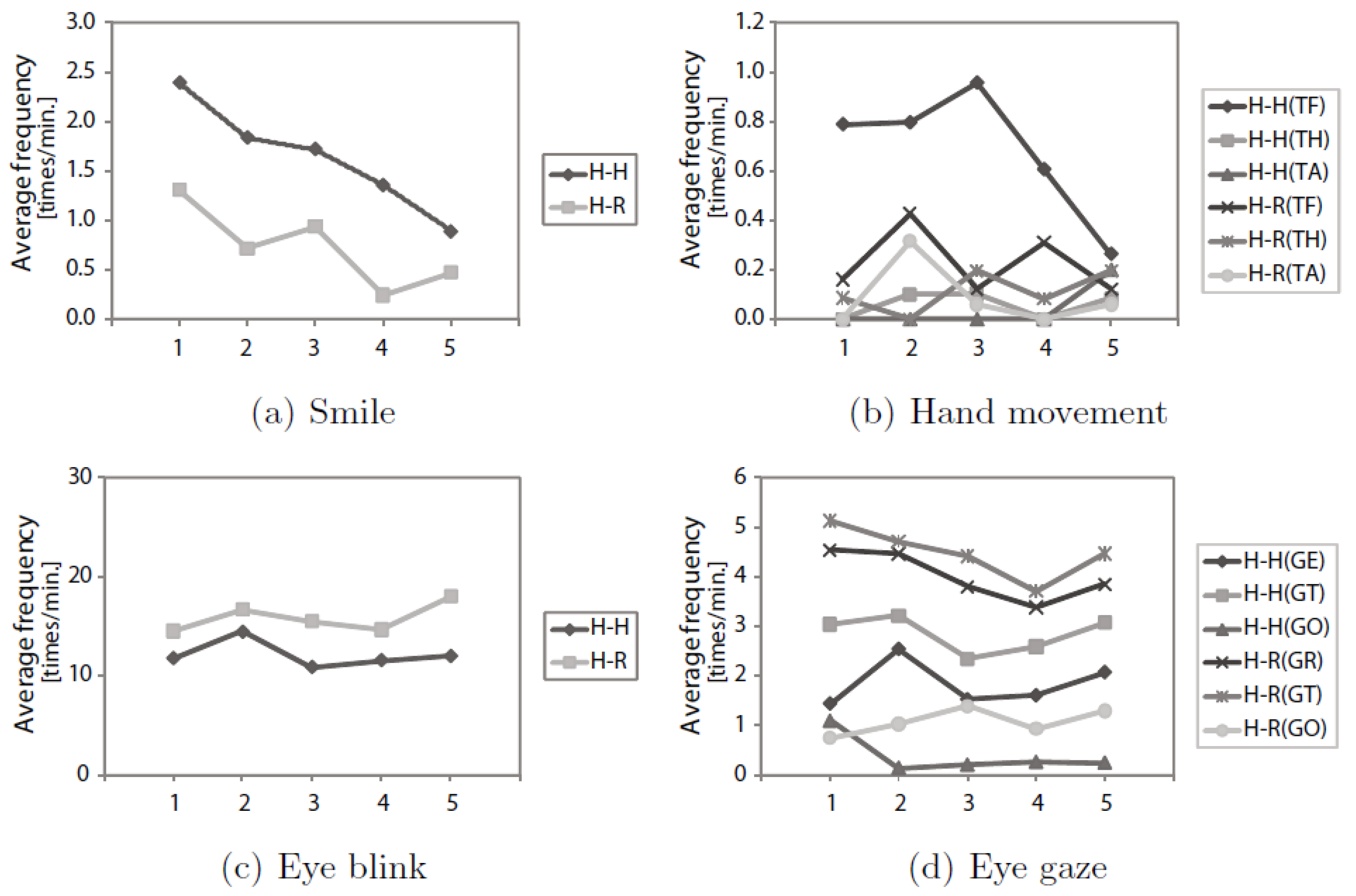

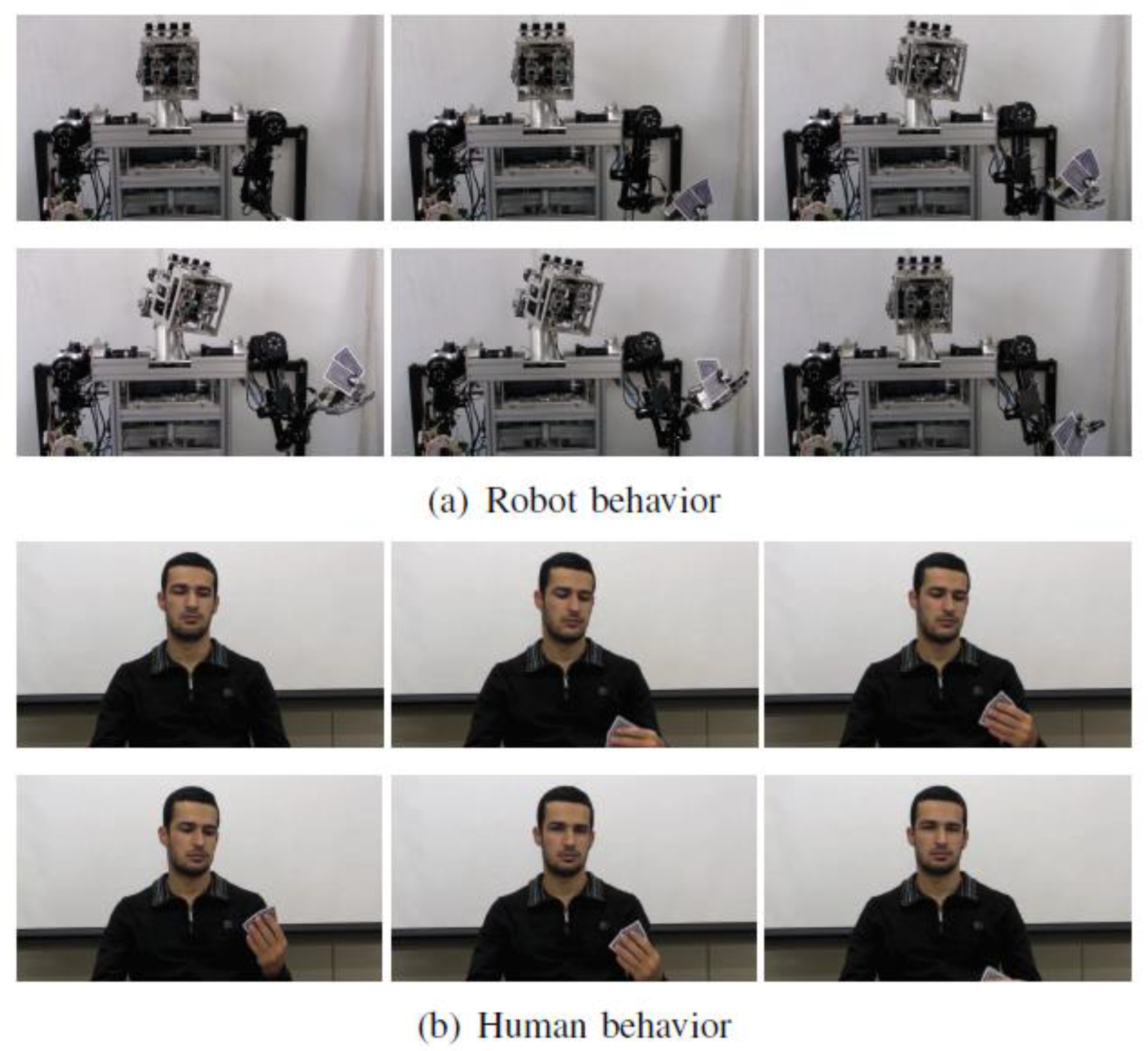

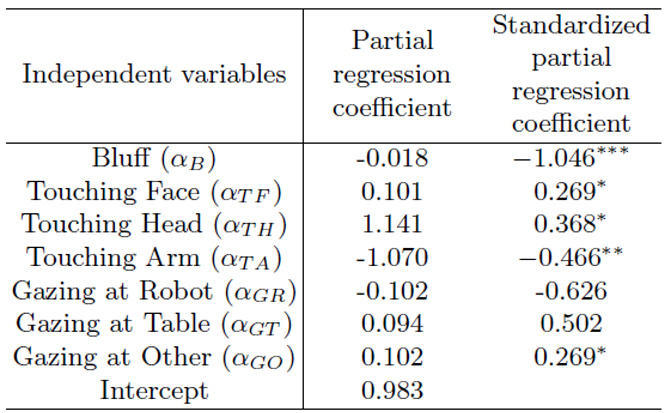

Some mind games require a high level of emotional intelligence and cognition to differentiate between states of mind, psychological backgrounds, and stress levels in order to interpret the actions taken by other parties. These skills are valuable for detectives when questioning witnesses and suspects. Furthermore, understanding such behaviors will advance the field and technology of human-robot interaction. For example, Kim and Suzuki [14] analyzed multiple human-human and human-robot poker games in a controlled environment, as shown in Figure 3. They built a truth table based on players' decisions, as shown in Figure 4. As shown in Figure 5 and Table 3, they also analyzed the associated behaviors so that the robot can tell whether the human is bluffing and how strong the human's hand is.

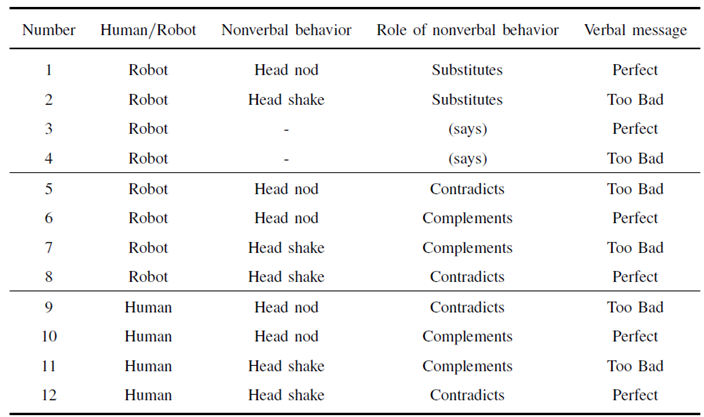

In another paper [13], they explore how humans interpret robot behaviors. The robot exhibits intentionality by performing nonverbal actions, some of which are bluffs, as shown in Table 4. The setup is shown in Figure 6. The experiment showed how a robot's verbal and nonverbal communication conveys a specific intention to humans. This outcome can be useful in active interrogation applications.

2.5. Emotion Recognition

Emotion plays an essential role in any social conversation between humans. Therefore, giving a robot the ability to recognize emotions is essential in the HRI field. Li et al. used a convolutional neural network (CNN) and a long short-term memory (LSTM) network for emotion recognition. The model used several data sets of images of people showing different emotions: happiness, anger, surprise, fear, disgust, and sadness. Transfer learning was used to further improve the model's recognition success rate. In the experiment, the success rate increased from 58.62% to 90.51%; applying this model in a robot can improve HRI for any robot application [17].

Related research identified a relation between Action Units and facial expressions. For example, affection and happiness came with a cheek raiser and a lip corner puller. The authors suggested using supervised neural networks based on the Facial Action Coding System (FACS). Their system can identify basic emotions from 17 Action Units captured by a robot in real time, with a 78.6% accuracy rate [18]. Emotional expression adds value to the interrogation process, as it helps determine whether the answer to each question is a lie or the truth.
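To make the link between Action Units and emotions concrete, the following is a minimal rule-based sketch. It is not the supervised neural network from [18]; it only matches a set of active Action Units against fixed prototypes. The happiness prototype (AU6 cheek raiser, AU12 lip corner puller) follows the pairing mentioned above, while the sadness and surprise prototypes are commonly cited simplifications and should be treated as assumptions. The function name and the `min_overlap` parameter are hypothetical.

```python
# FACS Action Unit numbers: 1 inner brow raiser, 2 outer brow raiser,
# 4 brow lowerer, 5 upper lid raiser, 6 cheek raiser, 12 lip corner puller,
# 15 lip corner depressor, 26 jaw drop.
# Only the happiness entry is taken from the text; the rest are assumptions.
EMOTION_PROTOTYPES = {
    "happiness": {6, 12},
    "sadness": {1, 4, 15},
    "surprise": {1, 2, 5, 26},
}


def match_emotion(active_aus, min_overlap=1.0):
    """Return the emotion whose prototype is best covered by the active AUs.

    The score is the fraction of a prototype's AUs that are active. None is
    returned when the best score is below `min_overlap`.
    """
    active = set(active_aus)
    best_emotion, best_score = None, 0.0
    for emotion, prototype in EMOTION_PROTOTYPES.items():
        score = len(active & prototype) / len(prototype)
        if score > best_score:
            best_emotion, best_score = emotion, score
    return best_emotion if best_score >= min_overlap else None


if __name__ == "__main__":
    print(match_emotion([6, 12]))                  # full happiness prototype
    print(match_emotion([1, 4], min_overlap=0.5))  # partial sadness prototype
```

A learned classifier replaces these hand-written prototypes with weights fitted to labeled data, but the input and output have the same shape: a set of detected Action Units in, one emotion label out.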

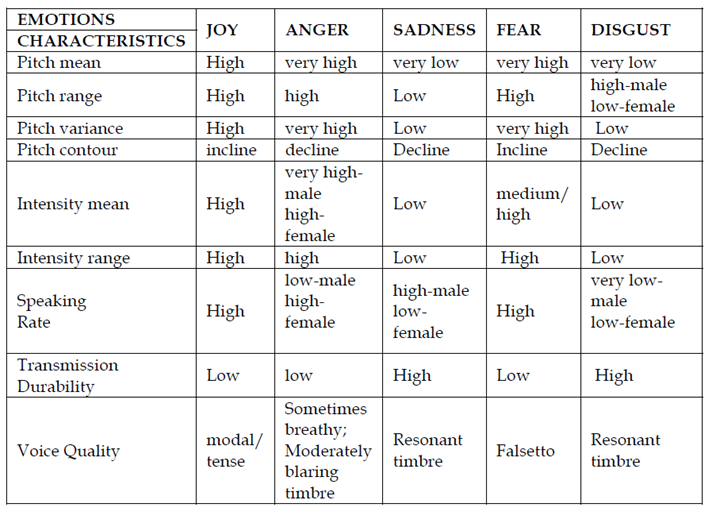

In addition to machine vision techniques for emotion detection, recognizing a person's emotions from their voice is a natural human skill. Furthermore, a robust interrogation system must integrate all possible media of information exchange (multi-modal HRI communication). For example, S. Ramakrishnan [15] analyzed and tested various speech emotion recognition (SER) databases and found promising results. The acoustic characteristics of emotions are shown in Table 5.
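As an illustration of what an acoustic characteristic looks like in practice, the sketch below computes two common low-level speech descriptors for one frame of audio samples: root-mean-square energy and zero-crossing rate. These are generic features chosen for illustration and are not claimed to be the ones listed in Table 5 or used in [15]; the function names are hypothetical.

```python
import math


def rms_energy(frame):
    """Root-mean-square amplitude of one frame of audio samples."""
    if not frame:
        raise ValueError("frame must not be empty")
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def zero_crossing_rate(frame):
    """Fraction of adjacent sample pairs whose signs differ."""
    if len(frame) < 2:
        raise ValueError("frame needs at least two samples")
    crossings = sum(
        1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0)
    )
    return crossings / (len(frame) - 1)


if __name__ == "__main__":
    # A made-up frame; real input would come from a microphone buffer.
    frame = [0.2, -0.1, 0.4, 0.3, -0.5, -0.2]
    print(rms_energy(frame), zero_crossing_rate(frame))
```

Features like these would typically be computed per frame and passed to a classifier trained on an SER database.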

2.6. Intent Recognition from Physical Contact

In addition to visual and vocal communication in HRI applications, De Carli et al. [16] experimented with physical-contact communication in a cooperative HRI environment, as shown in Figure 10. By measuring the applied force vectors, the robot infers the human's intent regarding where to move or how to orient.
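The idea of reading intent from a measured force vector can be sketched as follows. This is a simplified illustration, not the method of [16]: it assumes a three-axis force reading in newtons and treats any force below a hypothetical dead-band threshold as noise rather than intent. The function name and the 2 N default are assumptions.

```python
import math


def intended_direction(force_xyz, deadband_n=2.0):
    """Return a unit vector along the applied force, or None inside the dead band.

    force_xyz: (fx, fy, fz) force reading in newtons.
    deadband_n: hypothetical threshold below which the reading is ignored.
    """
    fx, fy, fz = force_xyz
    magnitude = math.sqrt(fx * fx + fy * fy + fz * fz)
    if magnitude < deadband_n:
        return None
    return (fx / magnitude, fy / magnitude, fz / magnitude)


if __name__ == "__main__":
    print(intended_direction((0.5, 0.0, 0.0)))  # too weak, treated as noise
    print(intended_direction((3.0, 4.0, 0.0)))  # push in the x-y plane
```

A full 6-axis sensor also reports torques, which could be handled the same way to infer the intended change in orientation.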

2.7. Staged Setup

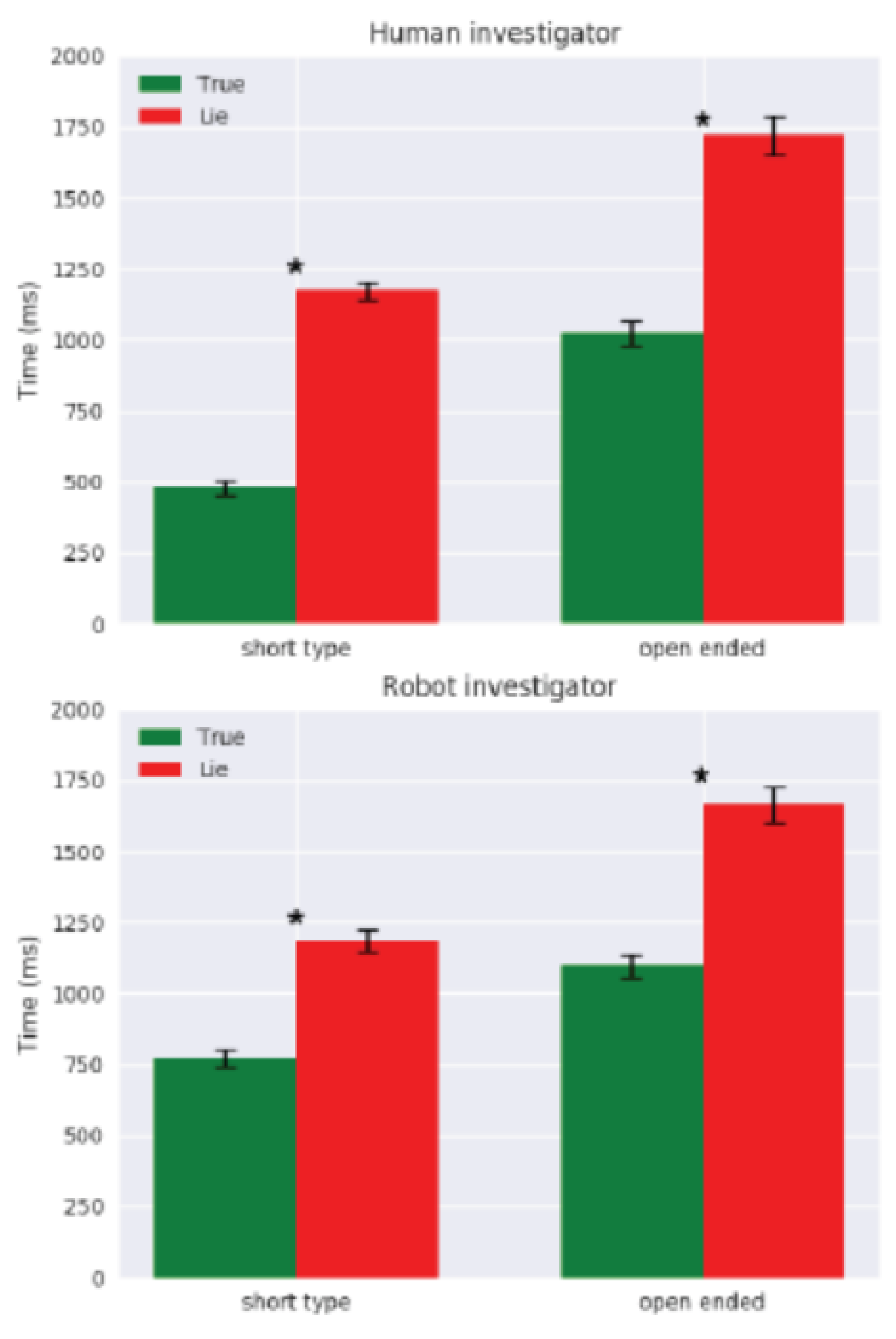

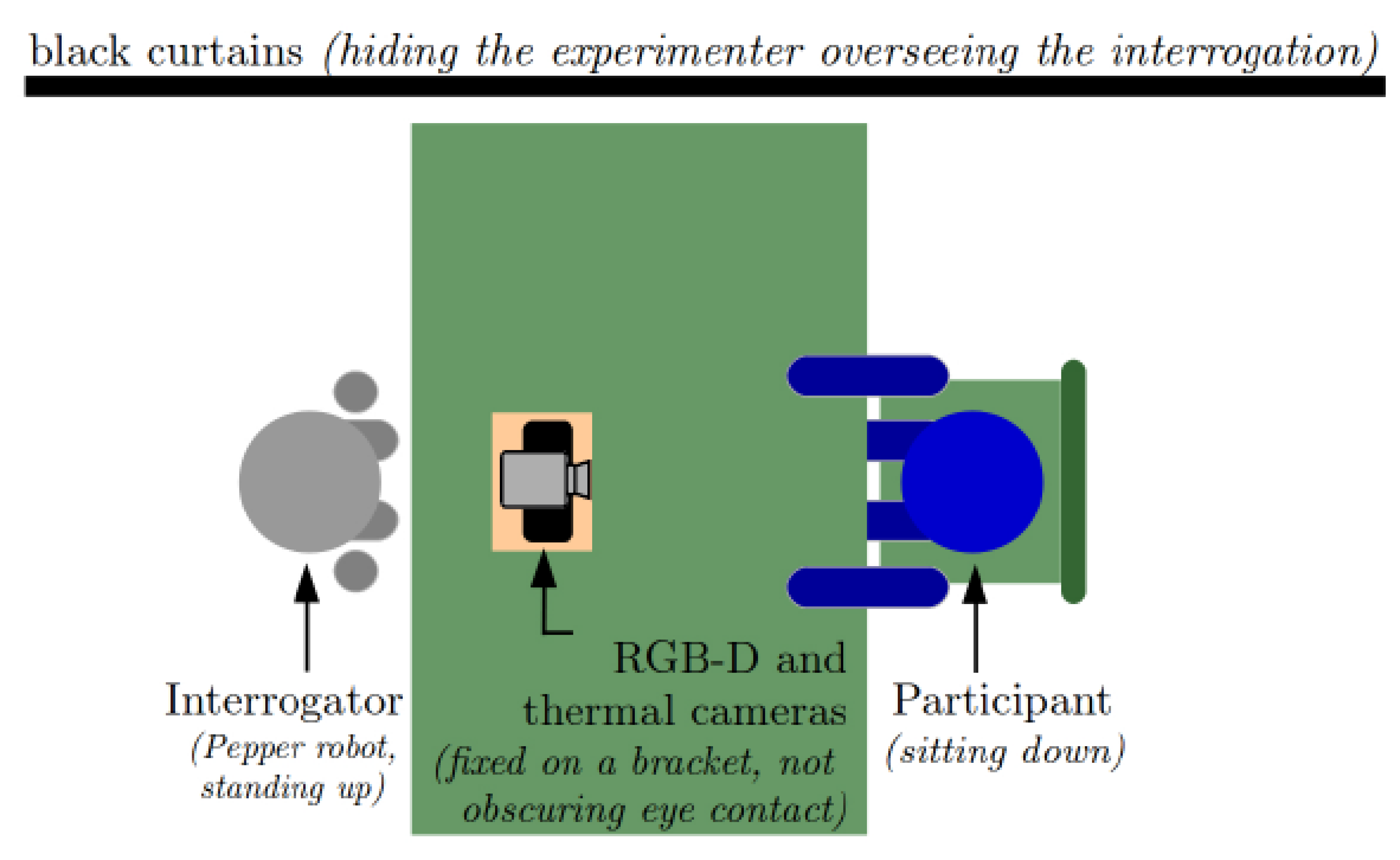

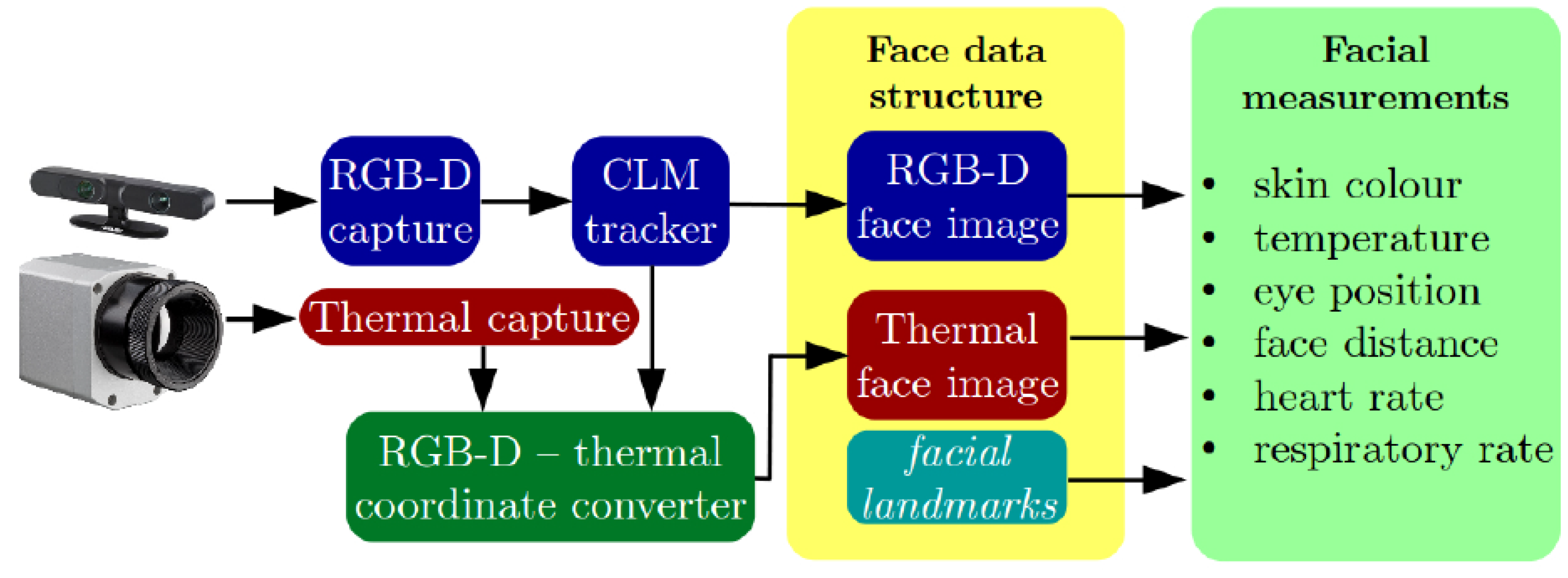

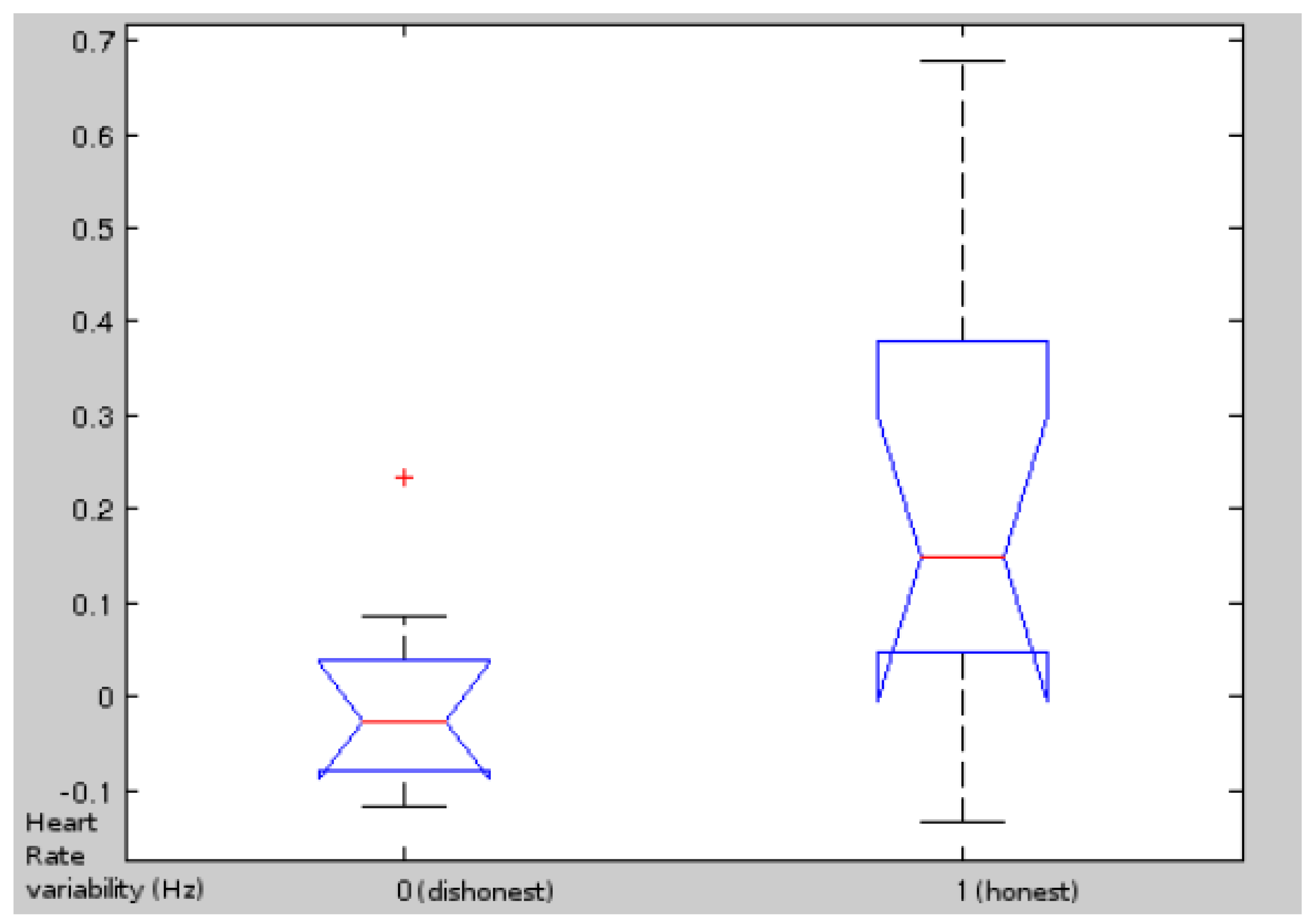

In another example, Iacob and Tapus [9] evaluated several staged interrogations in a human-robot environment, as shown in Figure 7. The software setup is shown in Figure 8. They use noninvasive techniques, such as thermal and RGB-D imaging, to detect whether a person is deceitful or honest based on an evaluation of their physiological state. The experiment was repeated with female participants, male participants, and participants with high neuroticism. The final results were promising, as shown in Figure 9.

Figure 7. This figure illustrates how the user and the robot interact through a shared load. The user manipulates one end of a long, slender load connected to the robot's end-effector by a 6-axis force/torque sensor. The robot used is a CRS A460 6-DOF.

Figure 8. Interrogation experimental setup.

Figure 9. Software architecture.

Figure 10. Female participants: heart rate variability.

3. Problem Statement

The criminal investigation process is susceptible to error. It is believed that investigations are best followed by an interrogation process to identify the criminal. Interrogation techniques and procedures vary, and many investigators share theirs with their peers. This variation causes two significant problems:

(1) time consumption and

(2) the quality of information extracted from the suspect can vary significantly due to different techniques and procedures.

Police departments worldwide are always looking for better ways to find out the truth from criminals. However, manual investigation is time-consuming. Moreover, it might not be efficient enough, because some criminals might have been arrested multiple times, while others might already have eluded police officers many times.

Criminal interrogation is a crucial stage that leads to identifying the criminal in any case. In most cases, the interrogation quality depends on the experience of the interrogator. A solution is needed to improve investigators' chances of arresting the criminal or finding out the truth. The main objective of this proposal is to enhance the effectiveness of criminal investigation by offering a standardized process. Moreover, this solution can be utilized in many organizations, such as airports, universities, health institutions, or even the private sector. The solution follows a specific standard that makes the process more efficient and effective.

4. System Design

A robotic system is a multi-modal system composed of mechanical, electrical, control, and software systems. Therefore, in our proposed solution, we discuss the environmental setup needed for optimum performance as well as the robotic system design. Furthermore, with highly interactive robots, HRI studies and human psychology are gaining importance in the robotics field. Hence, we discuss how human studies are incorporated into our suggested design.

4.1. Environment Setup

To begin with, each person has unique personality traits and behavior, which makes our mission challenging and complex. We therefore suggest a pre-processing stage for the interrogation in which the robot asks questions drawn from the work of psychologists and human-studies scientists. The results of this questionnaire help identify the suspect's personality. After analyzing the answers, the system can decide which questioning method is best suited to that personality, which makes the rest of the mission clearer to implement.

Before getting into the robot design, we discuss the design of the environment and the limitations of the implementation. First, we suggest conducting the questioning in a two-interrogator scenario, since this has proved effective [3]. Moreover, a specific interrogation technique will be selected based on the analyzed human traits, as shown in Table 1.

4.2. Robot Design

For the robot design, we consider constructing a high-level interaction robot with multi-modal communication for information exchange. Therefore, we discuss the desired hardware and software properties.

4.2.1. Hardware Properties

We consider constructing a humanoid robot focusing on functionality more than the appearance of the robot hardware. Therefore, we will discuss the frame, sensors, and actuators needed for robot construction.

Frame Design

The robot is intended for indoor use in a specific area. Therefore, we designed it as a wheeled robot, which is simpler than a legged one. In addition, the frame needs to be rigid, reliable, and about the size of an average human. Moreover, the frame must be well suited to mounting sensors and actuators.

Sensors

To build a robot capable of emotion detection, heartbeat detection, body language recognition, voice recognition, speech processing, speaking, motion, and physical interaction, we need a set of sensors to fulfill these requirements. For example, we need depth cameras for 3D modeling of the suspect to recognize their body language, as well as a set of RGB-D cameras and thermal cameras for emotion detection. In addition, we need millimeter-wave radar sensors or IR sensors for heartbeat detection.

We need IMU, proximity sensors, ultrasonic sensors, encoders, and thermal sensors for motion and physical interaction.

Actuators

Since our suggested design is a wheeled humanoid robot, we need a set of actuators for motion and physical interaction. First of all, we need loudspeakers to enable robot speech. As for motion, we suggest a four-wheel differential drive system with a set of industrial motors on the base, and manipulator joints for multiple-axis motion and maneuverability.
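For the differential drive base, the standard kinematic relation between a commanded body velocity and the wheel speeds can be sketched as follows. This is a generic textbook model under a no-slip assumption, not a controller design from this proposal; on a four-wheel differential base, both wheels on one side would be driven at the same speed. The function name, parameter names, and example dimensions are hypothetical.

```python
def wheel_speeds(linear_mps, angular_radps, track_width_m, wheel_radius_m):
    """Convert a body velocity command into left and right wheel speeds.

    linear_mps: forward speed of the base in metres per second.
    angular_radps: turn rate in radians per second (positive = counterclockwise).
    track_width_m: distance between the left and right wheels.
    wheel_radius_m: wheel radius.
    Returns (left, right) wheel angular speeds in radians per second.
    """
    if wheel_radius_m <= 0 or track_width_m <= 0:
        raise ValueError("dimensions must be positive")
    v_left = linear_mps - angular_radps * track_width_m / 2.0
    v_right = linear_mps + angular_radps * track_width_m / 2.0
    return v_left / wheel_radius_m, v_right / wheel_radius_m


if __name__ == "__main__":
    # Made-up dimensions: 0.5 m track width, 0.1 m wheel radius.
    print(wheel_speeds(1.0, 0.0, 0.5, 0.1))  # straight line, equal speeds
    print(wheel_speeds(0.0, 2.0, 0.5, 0.1))  # turn in place, opposite speeds
```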

4.2.2. Software Properties

Having discussed the brawn, what remains is to design the brain of the robot. Thus, we discuss computer vision and voice recognition algorithms for the robot.

Computer Vision

We suggest using the OpenCV library with TensorFlow and deep learning techniques to recognize body language, emotions, and gestures, and improving the model with a neural network for optimum performance.

Voice Recognition

As in the study by S. Ramakrishnan [15], we plan to use various speech emotion recognition (SER) databases to recognize the acoustic characteristics of emotions.
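Since the design calls for multi-modal communication, the vision and speech channels each produce their own emotion estimate, and these need to be combined somehow. One simple option, shown here only as a sketch, is weighted late fusion of per-modality probabilities. The proposal does not specify a fusion method, so the approach, the function name, and the example weights are assumptions.

```python
def fuse_emotions(modal_probs, weights):
    """Weighted average of per-modality emotion probabilities.

    modal_probs: {modality: {emotion: probability}}.
    weights: {modality: non-negative weight}; normalized over the
             modalities that are present in modal_probs.
    Returns {emotion: fused probability}.
    """
    total_weight = sum(weights[m] for m in modal_probs)
    if total_weight <= 0:
        raise ValueError("total weight must be positive")
    fused = {}
    for modality, probs in modal_probs.items():
        share = weights[modality] / total_weight
        for emotion, p in probs.items():
            fused[emotion] = fused.get(emotion, 0.0) + share * p
    return fused


if __name__ == "__main__":
    # Made-up classifier outputs and weights.
    fused = fuse_emotions(
        {"face": {"happy": 0.7, "sad": 0.3}, "voice": {"happy": 0.4, "sad": 0.6}},
        {"face": 3.0, "voice": 1.0},
    )
    print(max(fused, key=fused.get), fused)
```

Normalizing over the modalities that are present lets the same function be used when one channel is unavailable, for example when the face is occluded.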

5. Performance Evaluation

5.1. Performance Measures

After implementation, we plan to set performance measures for the robot and build upon them to keep improving our system.

5.1.1. Interaction Time

We quantify this by the length of the interaction between the human and the robot. If the interaction time is limited for any reason, performance is reduced.

5.1.2. Cognitive Workload

We quantify this by the system's capability to handle variables and a continuous environment. If a certain scenario limits the robot's capability, performance is reduced.

5.1.3. Responsiveness

We quantify this by the speed with which the system analyzes, quantifies, and decides. If the robot is slow at any stage, performance is reduced.
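Responsiveness can be measured per stage by timing each step of the processing pipeline. The sketch below is one minimal way to do this with the Python standard library; the stage names and the stand-in stage functions are hypothetical placeholders for the robot's actual analysis and decision modules.

```python
import time


def time_stages(stages, payload):
    """Run the stages in order and record the wall-clock time of each.

    stages: list of (name, function) pairs; each function takes the output
            of the previous stage and returns its own output.
    Returns (final_output, {name: seconds}).
    """
    timings = {}
    for name, stage in stages:
        start = time.perf_counter()
        payload = stage(payload)
        timings[name] = time.perf_counter() - start
    return payload, timings


if __name__ == "__main__":
    # Stand-in stages; real ones would wrap vision, speech, and decision code.
    pipeline = [
        ("analyze", lambda frame: len(frame)),
        ("quantify", lambda n: n / 10.0),
        ("decide", lambda score: score > 0.5),
    ]
    result, timings = time_stages(pipeline, "sensor frame placeholder")
    slowest = max(timings, key=timings.get)
    print(result, slowest, timings)
```

Recording a time per stage rather than a single total makes it possible to see which stage is slowing the robot down.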

5.2. Experimental/Simulation Design

After building the prototype, we need experimental data to analyze and improve upon. Therefore, we suggest the following scenarios for the experiments:

- Experimental staged questioning in malls.
- Experimental staged questioning in schools.
- Experimental staged questioning in hospitals.
- Experimental staged questioning between two of our robots.
- Experimental staged questioning in police stations.

The data will then be analyzed, and human studies experts will set the benchmark for error and metrics calculations.

6. Conclusion

Criminal investigation is a fun yet unforgiving job. It requires a lot of determination, patience, and skill. Criminal interrogation is done manually, without the use of any special equipment or gadgets. Criminal investigators are often required to interrogate more than one suspect at once. The closer the investigator gets to the truth, the better the results will be. However, it has been observed that most investigators do not perform well in their cases and, as a result, fail to identify the criminal. Their inability to extract the truth from a suspect is due not to a lack of general experience but to the process they followed in their interrogation. The solution we propose will contribute to improving criminal investigation.

References

1. Kassin, S. M., Leo, R. A., Meissner, C. A., Richman, K. D., Colwell, L. H., Leach, A.-M., & La Fon, D. (2007). Police interviewing and interrogation: A self-report survey of police practices and beliefs. Law and Human Behavior, 31(4), 381–400.

2. Leo, R. A., & Drizin, S. A. (n.d.). The three errors: Pathways to false confession and wrongful conviction. Police Interrogations and False Confessions: Current Research, Practice, and Policy Recommendations, 9–30.

3. Mann, S., Vrij, A., Shaw, D. J., Leal, S., Ewens, S., Hillman, J., Granhag, P. A., & Fisher, R. P. (2012). Two heads are better than one? How to effectively use two interviewers to elicit cues to deception. Legal and Criminological Psychology, 18(2), 324–340.

4. Aroyo, A. M., Gonzalez-Billandon, J., Tonelli, A., Sciutti, A., Gori, M., Sandini, G., & Rea, F. (2018). Can a Humanoid Robot Spot a Liar? 2018 IEEE-RAS 18th International Conference on Humanoid Robots (Humanoids).

5. Gonzalez-Billandon, J., Aroyo, A. M., Tonelli, A., Pasquali, D., Sciutti, A., Gori, M., Sandini, G., & Rea, F. (2019). Can a Robot Catch You Lying? A Machine Learning System to Detect Lies During Interactions. Frontiers in Robotics and AI, 6.

6. Weitzenfeld, A., & Dominey, P. F. (2007). Cognitive Robotics: Command, Interrogation and Teaching in Robot Coaching. In: Lakemeyer, G., Sklar, E., Sorrenti, D. G., & Takahashi, T. (eds.), RoboCup 2006: Robot Soccer World Cup X. Lecture Notes in Computer Science, vol. 4434. Springer, Berlin, Heidelberg.

7. Dominey, P. F., et al. (2005). Robot command, interrogation and teaching via social interaction. 5th IEEE-RAS International Conference on Humanoid Robots, 475–480.

8. Revell, T. (2017). Robot detective gets on the case. New Scientist, 234(3125), 8.

9. Iacob, D.-O., & Tapus, A. (2018). First Attempts in Deception Detection in HRI by using Thermal and RGB-D cameras. RO-MAN 2018, Aug 2018, Nanjing, China. hal-01840122.

10. Calo, R., Froomkin, A., & Kerr, I. (2016). Robot Law.

11. Chaiken, J. M., Greenwood, P. W., & Petersilia, J. R. (1976). The Criminal Investigation Process: A Summary Report. Santa Monica, CA: RAND Corporation. https://www.rand.org/pubs/papers/P5628-1.html.

12. Gehl, R., & Plecas, D. (2017). Introduction to Criminal Investigation: Processes, Practices and Thinking. Open Textbook Library.

13. Kim, M.-G., & Suzuki, K. (2012). On the evaluation of interpreted robot intentions in human-robot poker game. 2012 12th IEEE-RAS International Conference on Humanoid Robots (Humanoids 2012).

14. Kim, M.-G., & Suzuki, K. (2011). Analysis of Bluffing Behavior in Human-Humanoid Poker Game. Social Robotics, 183–192.

15. Ramakrishnan, S. (2012). Recognition of Emotion from Speech: A Review. Speech Enhancement, Modeling and Recognition: Algorithms and Applications.

16. De Carli, D., Hohert, E., Parker, C. A., Zoghbi, S., Leonard, S., Croft, E., & Bicchi, A. (2009). Measuring intent in human-robot cooperative manipulation. 2009 IEEE International Workshop on Haptic Audio Visual Environments and Games.

17. Li, T.-H. S., Kuo, P.-H., Tsai, T.-N., & Luan, P.-C. (2019). CNN and LSTM Based Facial Expression Analysis Model for a Humanoid Robot. IEEE Access, 7, 93998–94011.

18. Zhang, L., Jiang, M., Farid, D., & Hossain, M. A. (2013). Intelligent facial emotion recognition and semantic-based topic detection for a humanoid robot. Expert Systems with Applications, 40(13), 5160–5168.

19. Surendran, V., & Wagner, A. R. (2019). Your Robot is Watching: Using Surface Cues to Evaluate the Trustworthiness of Human Actions. 2019 28th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN).

20. Ask, K., & Granhag, P. A. (2007). Motivational Bias in Criminal Investigators' Judgments of Witness Reliability. Journal of Applied Social Psychology, 37(3), 561–591.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).