1. Introduction

Smart transportation has recently emerged as the optimal solution to address the mobility needs of people [1,2,3]. By leveraging modern technology, such as wireless communication, cloud-fog-edge computing, and object detection, smart transportation aims to simplify processes, enhance safety, reduce costs, and improve overall efficiency in transportation systems [4,5,6]. For example, smart transportation can offer optimized route suggestions, easy parking reservations, accident prevention, traffic control, and support for autonomous driving [4,5,6]. Among the modern technologies that enable smart transportation, object detection techniques play the most important role, as identifying and tracking specific objects is crucial for various purposes, such as traffic monitoring [7], collision avoidance [8], pedestrian safety [7], parking assistance [7], infrastructure monitoring [7], and public transport security [9].

Specifically, several object detection techniques exist, such as pioneer detection (e.g., histogram of oriented gradients (HOG) [10] and the deformable part model (DPM) detector [11]), two-stage detection (e.g., region-based convolutional neural network (R-CNN), spatial pyramid pooling network (SPP-net) [12], Fast R-CNN [13], and RepPoints [14]), transformer-based detection (e.g., detection transformer (DETR) [15], segmentation transformer (SETR) [16], and transformer-based set prediction (TSP) [17]), and one-stage detection (e.g., region proposal network (RPN) [18], single-shot detector (SSD) [19], You Only Look Once (YOLO) [20], RetinaNet [21], and CornerNet [22]). In smart transportation applications, real-time processing is essential to guarantee the safety, accuracy, and efficiency of operating vehicles. For example, in a self-driving car within a smart transportation system, a real-time object detector is required to quickly and accurately detect speed limit signs, pavement, pedestrian zone signs, and other vehicles on the street, allowing the car to take the appropriate subsequent actions in real time. Therefore, one-stage detection is the most suitable for real-time smart transportation applications due to its fast detection speed and high accuracy [23,24]. Furthermore, among recently developed one-stage detection models, YOLO is considered the leading representative.

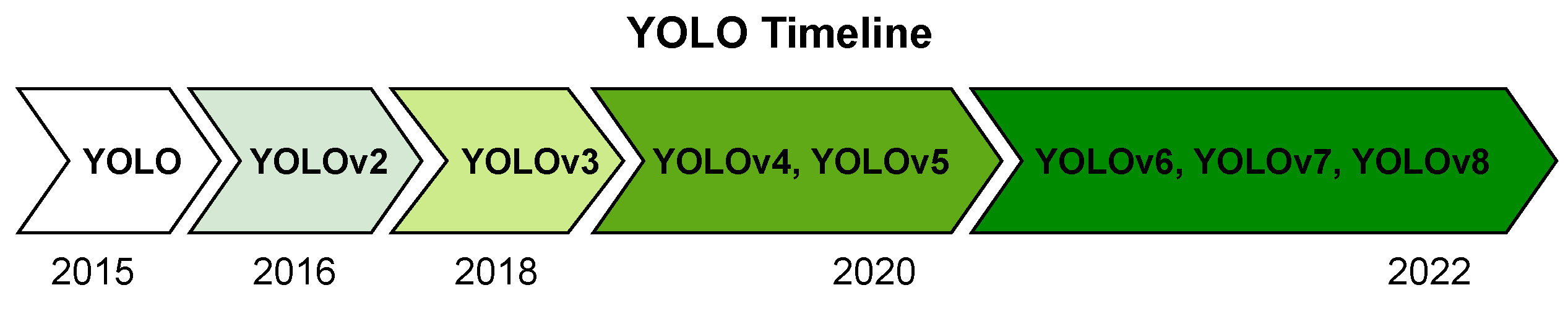

First launched in 2015, the YOLO [

20] real-time object detection model has been developed through the years with multiple versions (i.e., YOLOv2 [

25], YOLOv3 [

26], YOLOv4 [

27], YOLOv5 [

28], YOLOv6 [

29], YOLOv7 [

30], and YOLOv8 [

31]) as depicted in

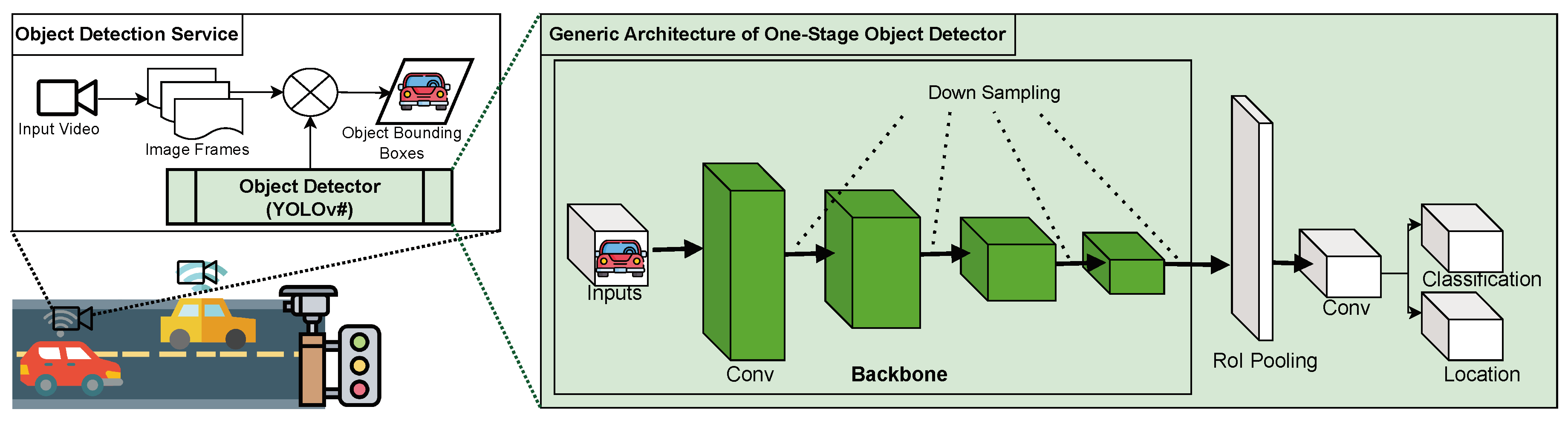

Figure 1. Specifically, YOLO takes a different approach compared with traditional object detection models. Instead of dividing the image into regions and applying classification and localization separately, YOLO performs both tasks in a single pass through its network [

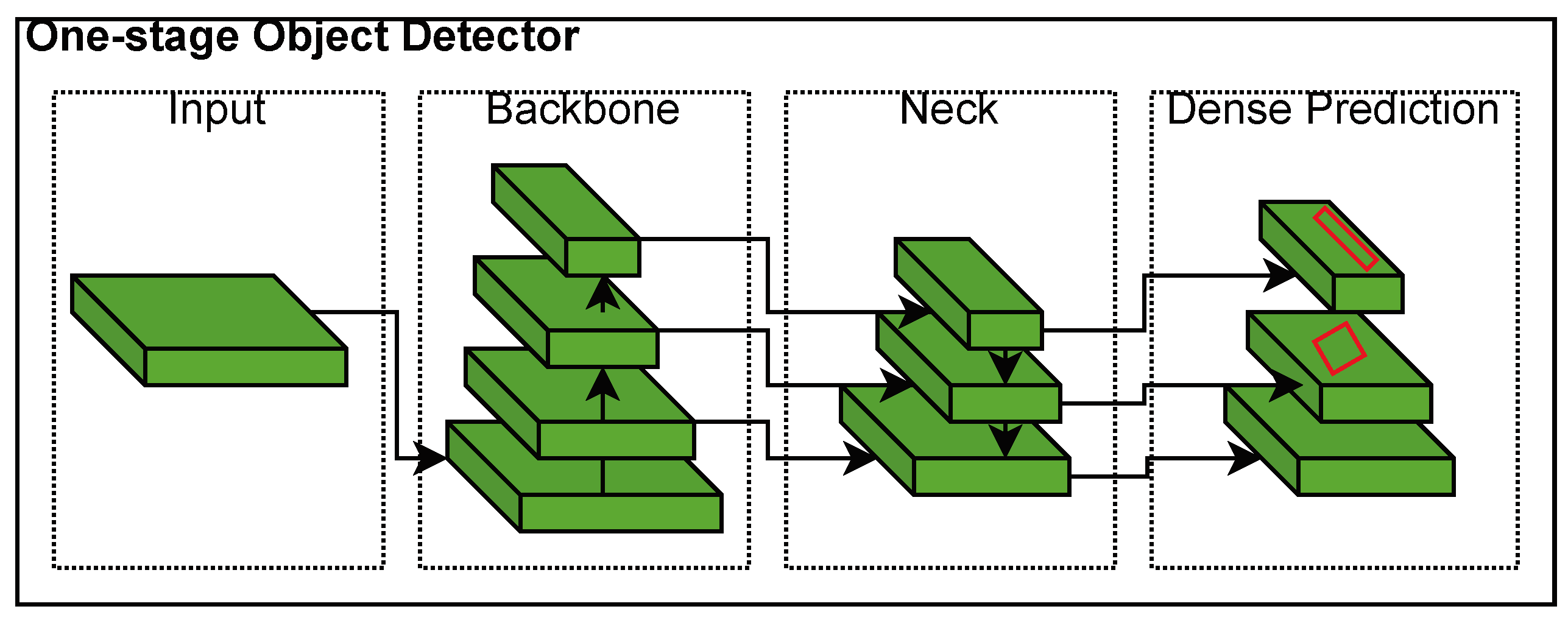

24]. It predicts bounding boxes and class probabilities directly on a grid-based output. By doing so, YOLO and its variants have significantly contributed to object detection. Moreover, YOLO models aim to improve themselves, becoming more efficient, accurate, and scalable for real-world applications [

32,

33,

34]. However, YOLO models differ in their network backbone, neck, head, loss functions, and other features, making it crucial to select the optimal models for smart transportation applications by evaluating and analyzing their performance.
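To illustrate the grid-based output mentioned above, the following minimal sketch maps a normalized box center to the grid cell that contains it. It assumes the 7 x 7 grid of the original YOLO formulation; later versions predict on several grids at different scales, so the function name `responsible_cell` and the default `grid_size` are illustrative assumptions rather than part of any specific YOLO implementation.

```python
def responsible_cell(cx, cy, grid_size=7):
    """Return (row, col) of the grid cell that contains a box center.

    cx, cy are the box-center coordinates normalized to [0, 1].
    grid_size=7 is an assumption taken from the original YOLO design.
    """
    if not (0.0 <= cx <= 1.0 and 0.0 <= cy <= 1.0):
        raise ValueError("center coordinates must be normalized to [0, 1]")
    # Clamp so that a center lying exactly on the right/bottom border
    # still falls into the last cell instead of an out-of-range index.
    col = min(int(cx * grid_size), grid_size - 1)
    row = min(int(cy * grid_size), grid_size - 1)
    return row, col


if __name__ == "__main__":
    print(responsible_cell(0.5, 0.5))
```

In this simplified view, each cell predicts the boxes and class probabilities for objects whose centers fall inside it, which is what allows detection in a single forward pass.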

Previous studies have investigated object detection models. Nazir et al. [35] present an overview of YOLO variants, including YOLOv2, YOLOv3, YOLOv4, YOLOv5, YOLOv6, and YOLOv7. In addition, Sozzi et al. [36] present a comparative evaluation of the accuracy and speed of various versions of YOLO object detection algorithms, such as YOLOv3, YOLOv3-tiny, YOLOv4, YOLOv4-tiny, YOLOv5x, and YOLOv5s, for grape-yield estimation. Our study is inspired by these works regarding the performance comparison and architectural analysis of various YOLO models. In contrast, this article specifically focuses on the performance of YOLO models on transportation data relevant to autonomous driving in smart transportation. Notably, we evaluate YOLO performance across various datasets, object classes, and intersection-over-union (IoU) thresholds.

In this article, we conduct an empirical evaluation and analysis of multiple YOLO models, from YOLOv3 to YOLOv8, to observe their performance and determine the optimal models for smart transportation, with a focus on road transportation. To achieve this, we first analyze and evaluate the accuracy (i.e., average precision (AP)) of multiple YOLO models on road transportation data using multiple datasets (i.e., the COCO and PASCAL VOC datasets). Second, we evaluate the performance of the YOLO models on multiple object classes within the datasets, specifically focusing on those relevant to road transportation. Third, multiple IoU thresholds are considered in the performance measurement and analysis. By doing so, we make six observations related to the following three aspects:

Datasets: Two observations on datasets are obtained from the evaluation and analysis. First, the performance of YOLO models trained and evaluated on different datasets can be disparate. In particular, YOLO models perform better on PASCAL VOC than on COCO. Second, YOLOv5 and YOLOv8 are the dominant models on both datasets. The performance disparity of YOLO models between the two datasets can be attributed to various factors, including differences in object classes, dataset sizes, object characteristics, architectural design, optimization techniques, and dataset biases. These factors affect model performance on each dataset, leading to variations in AP scores.

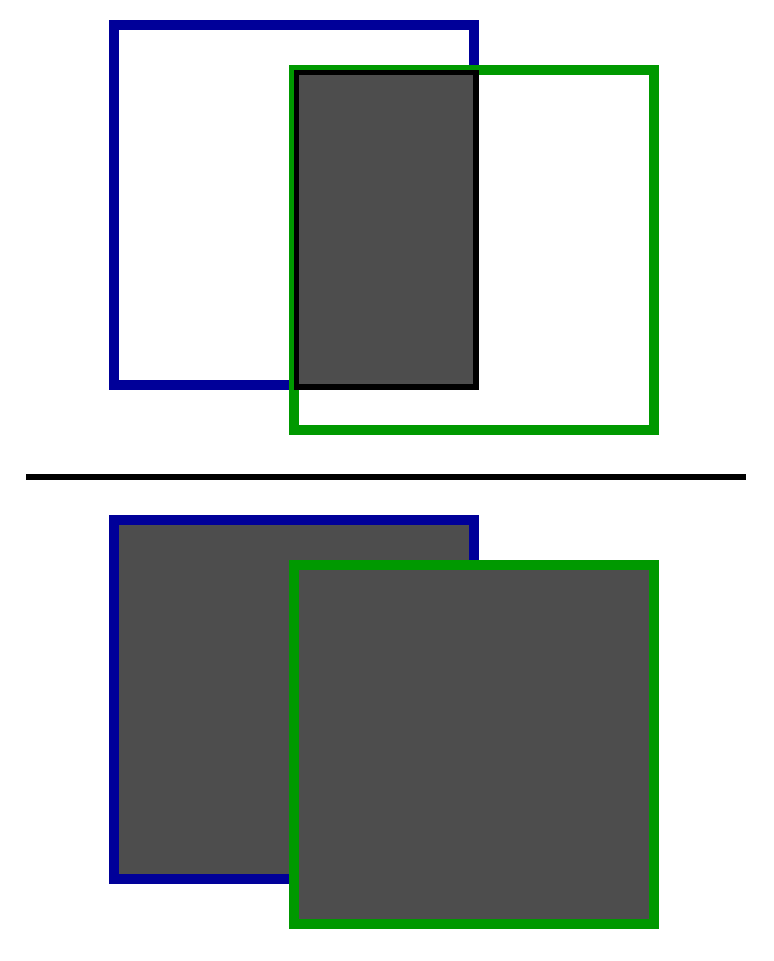

IoU thresholds: The observation on IoU thresholds indicates that as the IoU threshold increases, the mean AP (mAP) of a YOLO model decreases. YOLOv5 and YOLOv8 perform better than other YOLO models across various IoU thresholds. The first observation is related to the essence of the IoU threshold, where a higher threshold demands a higher standard for accurate predictions. For the second observation, YOLOv5 and YOLOv8 outperform other models, because of their bag of freebies (BoF) and bag of specials (BoS) techniques, such as backbone, neck, head architectures, loss functions, anchor-based mechanisms, modules for enhancing the receptive field, data augmentation strategies, feature integration, and activation functions.

Class of objects: Based on the observation of object classes, the performance of YOLO models can fluctuate or stabilize depending on the quality of the datasets and the characteristics of the objects within them. Similar to the above observations, YOLOv5 and YOLOv8 outperform the other models on most object classes in the two datasets. For the first observation, the differences in the AP values of YOLO models across object classes come from factors such as object class imbalance and class-specific characteristics. For example, objects with smaller sizes or complex shapes can pose challenges for detection, resulting in lower AP values for those object classes. For the second observation, YOLOv5 and YOLOv8 maintain their lead because of their BoF and BoS techniques, as analyzed in the observations related to IoU thresholds.

The rest of the article is organized as follows. Section 2 provides the background and motivation. Section 3 describes the evaluation and analysis of multiple YOLO versions in smart transportation. Section 4 discusses related work. Finally, we conclude our work in Section 5.

3. Evaluation and Result Analysis

In this article, the performance of YOLO models is extensively evaluated using two benchmark datasets (i.e., COCO and PASCAL VOC) and several evaluation metric setups (i.e., IoU thresholds). Specifically, we evaluate YOLO models on the COCO and PASCAL VOC datasets, which cover a wide range of object categories in road transportation. The mAP evaluation metric is used to measure the accuracy of the YOLO models across different datasets, object classes, and IoU thresholds.
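As a minimal sketch of how an AP value can be obtained for one object class at one IoU threshold, the function below integrates the precision-recall curve of ranked detections. It assumes the all-point interpolation variant and takes, as hypothetical inputs, the confidence scores, a flag per detection indicating whether it matched a ground-truth box at the chosen IoU threshold, and the number of ground-truth boxes. The evaluation tooling used in the experiments may differ in detail (for instance, COCO-style evaluation samples recall at fixed points).

```python
def average_precision(scores, is_true_positive, num_ground_truth):
    """All-point interpolated AP for one class at one IoU threshold.

    scores, is_true_positive: one entry per detection (hypothetical inputs).
    num_ground_truth: number of labeled objects of this class.
    """
    if num_ground_truth == 0:
        return 0.0
    # Rank detections from most to least confident.
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:
        if is_true_positive[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_ground_truth)
        precisions.append(tp / (tp + fp))
    # Replace each precision by the maximum precision at any higher recall.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    # Sum the area under the resulting step-shaped curve.
    ap, previous_recall = 0.0, 0.0
    for recall, precision in zip(recalls, precisions):
        ap += (recall - previous_recall) * precision
        previous_recall = recall
    return ap


if __name__ == "__main__":
    print(average_precision([0.9, 0.8, 0.7], [True, False, True], 2))
```

The mAP is then the mean of these per-class AP values over the object classes under consideration.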

3.1. Experimental Setup

We use a server equipped with a 32-core Intel Xeon Gold CPU and 64 GB of DRAM, running Ubuntu 18.04.5 with Linux kernel 4.15.0, for training and evaluation. We use COCO2017 and PASCAL VOC as the training and evaluation datasets. In this article, we focus on object detection in road transportation and only consider the object classes related to road transportation in the two datasets. The COCO2017 dataset has 80 classes, but only the ten road transportation-related classes are used in the experiments: bicycle, car, motorcycle, bus, train, truck, traffic light, stop sign, parking meter, and person. We use 5000 images for validation, and the number of labels per object class varies from 60 to 10777.

For the PASCAL VOC dataset, we use 11530 images covering 20 object classes for training. In validation, we use 3422 images with six road transportation-related object classes (i.e., bicycle, bus, car, motorbike, person, and train). The number of labels per class varies from 142 to 7835. A summary of the datasets’ usage in the experiments is presented in Table 3.
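The class selection described above can be sketched as a simple filter over annotation records. The class names are those listed in the text; the record layout (a dictionary with a `category` key holding the class name) is a simplified, hypothetical stand-in for the real annotation formats, which store this information differently.

```python
from collections import Counter

# Road transportation-related classes named in the text.
COCO_ROAD_CLASSES = {
    "bicycle", "car", "motorcycle", "bus", "train", "truck",
    "traffic light", "stop sign", "parking meter", "person",
}
VOC_ROAD_CLASSES = {"bicycle", "bus", "car", "motorbike", "person", "train"}


def filter_road_annotations(annotations, keep):
    """Keep only annotations whose class name is in `keep`.

    `annotations` is a list of dicts with a hypothetical "category" key.
    """
    return [a for a in annotations if a["category"] in keep]


def labels_per_class(annotations):
    """Count how many labels each class has after filtering."""
    return Counter(a["category"] for a in annotations)


if __name__ == "__main__":
    sample = [
        {"category": "car"}, {"category": "dog"},
        {"category": "car"}, {"category": "stop sign"},
    ]
    kept = filter_road_annotations(sample, COCO_ROAD_CLASSES)
    print(labels_per_class(kept))
```

Counting labels per class in this way is what yields ranges such as those reported for the validation sets.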

3.2. Evaluation Overview

We present the experiment results, comparing the performance of YOLO models in terms of the datasets (e.g., COCO and PASCAL VOC), accuracy (e.g., mAP scores at various IoU thresholds), and object classes.

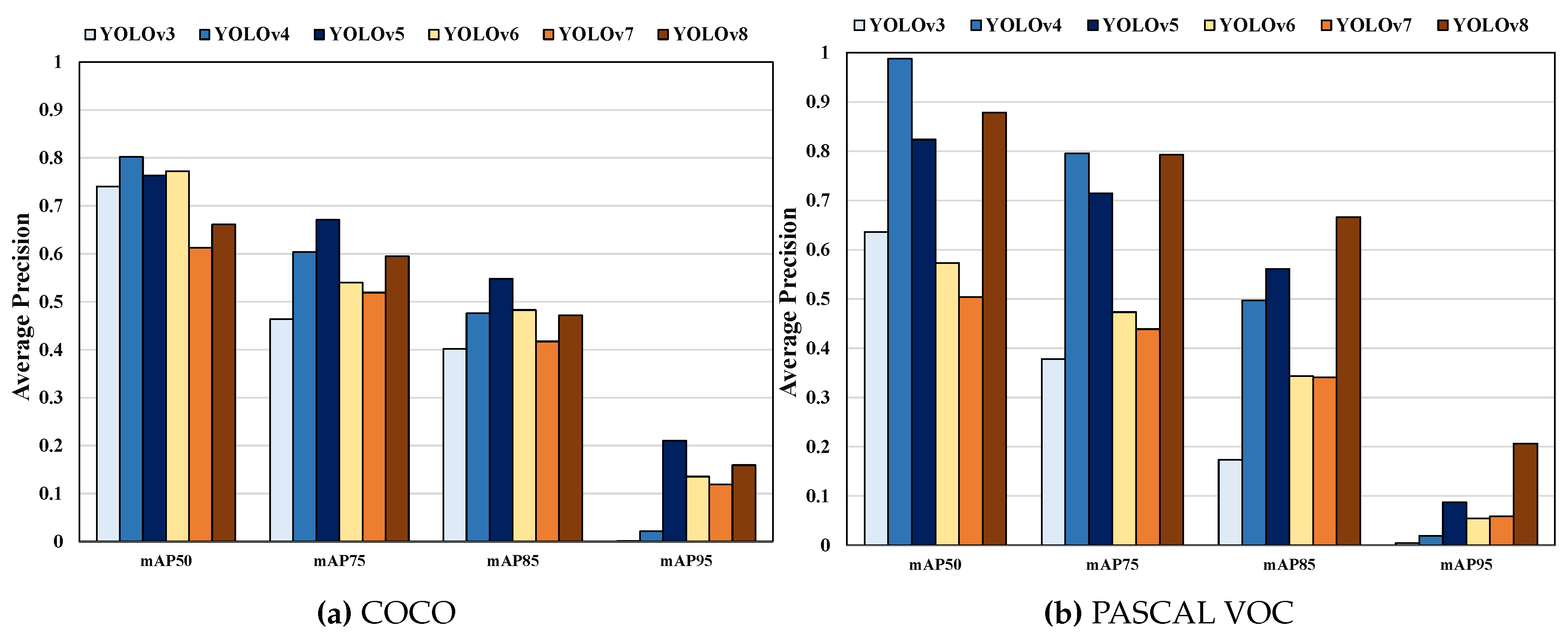

Figure 5a,b illustrate the AP results of YOLO models on COCO and PASCAL VOC datasets, respectively.

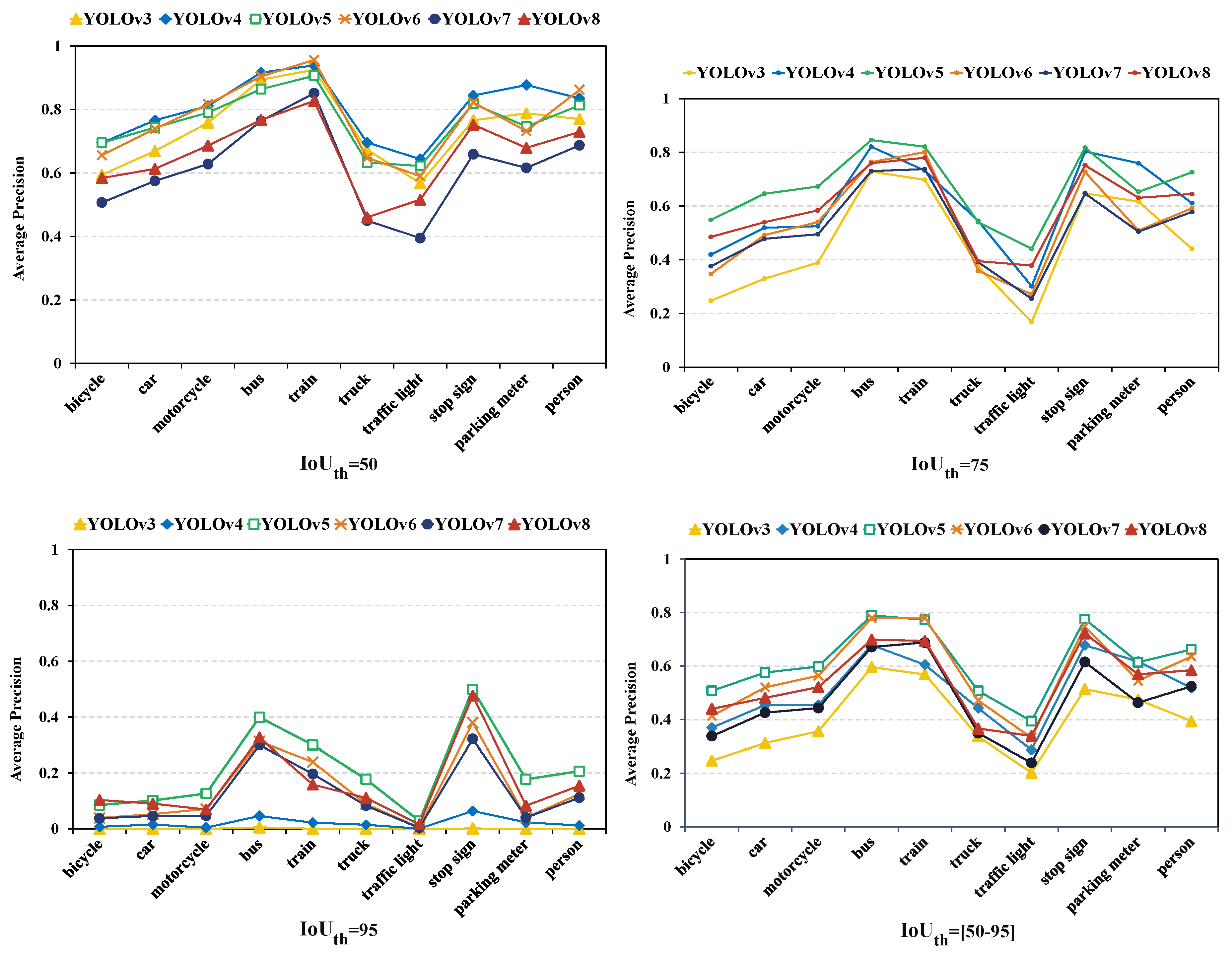

Figure 6 and

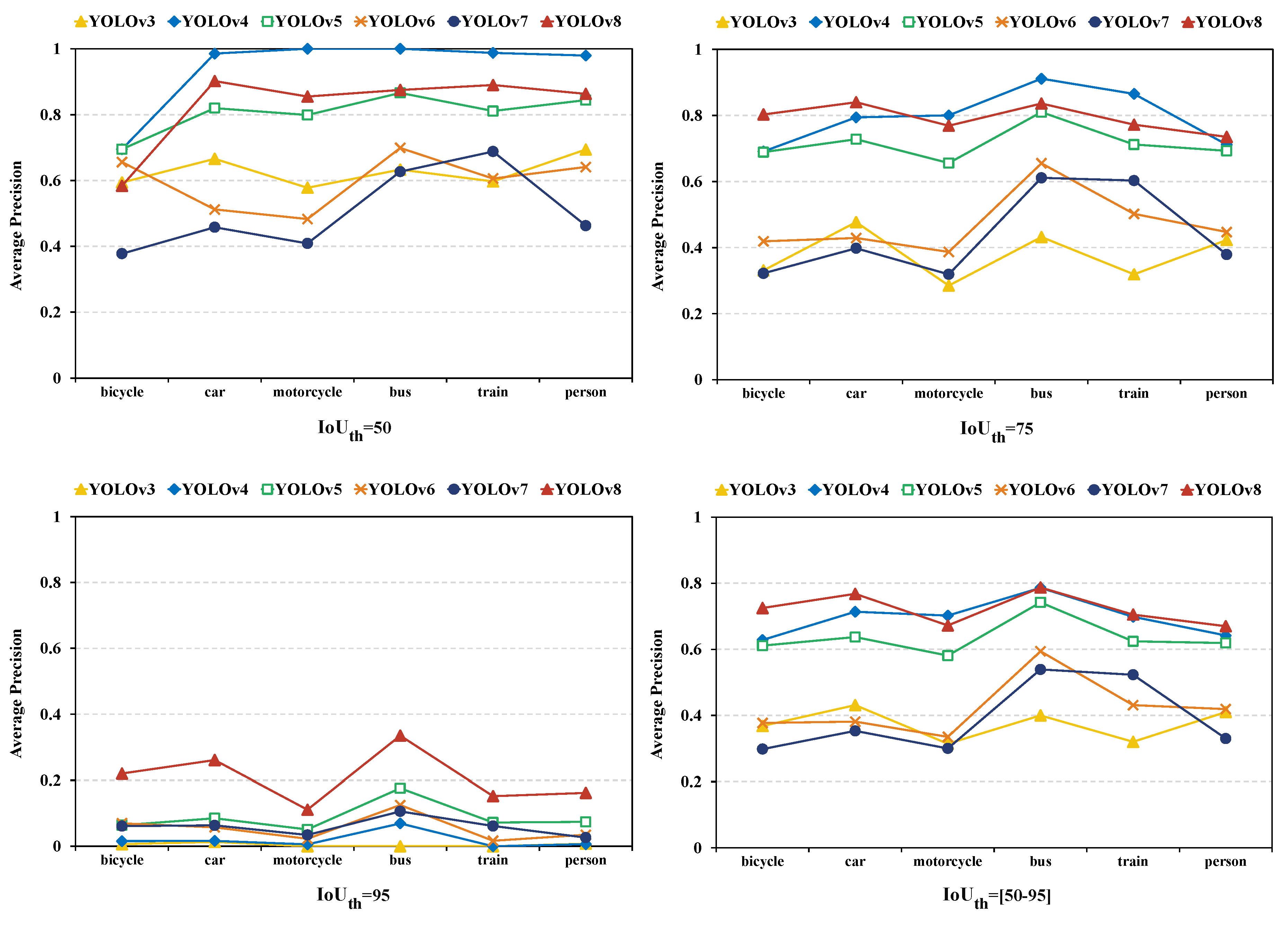

Figure 7 indicate how the IoU thresholds affect the AP of object classes in COCO and PASCAL VOC datasets, respectively.

Table 4,

Table 5,

Table 6 and

Table 7 show the AP at the IoU threshold 50 and the average AP (e.g., average AP at all IoU thresholds) of YOLO models on specific object classes in COCO and PASCAL VOC datasets, respectively.
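The two quantities reported in the tables can be sketched as follows: the per-class average AP over IoU thresholds and the mAP over classes at each threshold. The sketch assumes that "all IoU thresholds" means the four thresholds used in this article (50, 75, 85, and 95); the input layout and all numeric values are hypothetical and serve only to show the arithmetic.

```python
IOU_THRESHOLDS = (50, 75, 85, 95)  # assumption: the four thresholds in the text


def summarize(ap_by_class):
    """Aggregate per-class, per-threshold AP values.

    ap_by_class: {class_name: {threshold: AP}} (hypothetical layout).
    Returns (average AP per class, mAP per threshold).
    """
    average_ap = {
        name: sum(ap[t] for t in IOU_THRESHOLDS) / len(IOU_THRESHOLDS)
        for name, ap in ap_by_class.items()
    }
    map_at_threshold = {
        t: sum(ap[t] for ap in ap_by_class.values()) / len(ap_by_class)
        for t in IOU_THRESHOLDS
    }
    return average_ap, map_at_threshold


if __name__ == "__main__":
    # Made-up AP values, not results from the experiments.
    example = {
        "car": {50: 0.8, 75: 0.6, 85: 0.4, 95: 0.2},
        "bus": {50: 0.6, 75: 0.4, 85: 0.2, 95: 0.0},
    }
    print(summarize(example))
```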

3.3. Experimental Results and Observations

This section examines the performance of YOLO from three perspectives: datasets, IoU thresholds, and object classes. Based on the performance analysis, we provide empirical observations on the performance of various YOLO models when applied to road transportation datasets (i.e., COCO and PASCAL VOC).

3.3.1. Dataset

Figure 5a,b present the AP of six YOLO models at four IoU thresholds (i.e., 50, 75, 85, and 95) on the COCO and PASCAL VOC datasets, respectively. As depicted in the figures, YOLO models achieve higher mAP on the PASCAL VOC dataset than on the COCO dataset across the IoU thresholds.

For example, the dominant YOLO models (i.e., YOLOv4, YOLOv5, and YOLOv8) trained on the COCO dataset, shown in Figure 5a, reach a lower AP range at each of the four IoU thresholds than the same models on the PASCAL VOC dataset, shown in Figure 5b.

YOLO models perform better on the PASCAL VOC dataset than on the COCO dataset because PASCAL VOC contains fewer object classes and a less diverse range of object sizes. Additionally, the PASCAL VOC dataset is specifically designed for object detection tasks, whereas the COCO dataset covers a broader range of computer vision tasks, including object detection, instance segmentation, and more. Hence, the objects in PASCAL VOC images tend to be better suited to the training and validation processes of object detection tasks. Thus, according to the dataset variety, we make the following observation related to the YOLO performance.

In addition, in Figure 5, we can observe that YOLOv5 and YOLOv8 are the dominant models across the IoU thresholds. On the COCO dataset, YOLOv5 outperforms the other models at most IoU thresholds, followed by YOLOv8. YOLOv4 and YOLOv6 achieve high performance at the low IoU threshold of 50, but they cannot maintain it at the other IoU thresholds as YOLOv5 and YOLOv8 do. Therefore, we cannot consider YOLOv4 and YOLOv6 as dominant YOLO models on the COCO dataset. On the PASCAL VOC dataset, YOLOv8 outperforms the other models at the higher IoU thresholds, followed by YOLOv5. YOLOv4 achieves high performance at the low IoU threshold of 50 but has the lowest performance at a higher IoU threshold. Therefore, we cannot consider it a dominant YOLO model on the PASCAL VOC dataset.

Furthermore, YOLOv5 outperforms other models because it uses the CSPDarknet53 backbone, PANet for effective multi-scale feature integration, the auto-anchor technique for bounding box adjustment, and an improved loss function. Additionally, YOLOv8 demonstrates superior performance due to its use of the modified CSPDarknet53 backbone, PAN feature fusion, anchor-free detection, class loss optimization, complete IoU (CIoU) loss for precise bounding boxes, and distribution focal loss to enhance accuracy across diverse object classes. Therefore, we make the following observation related to the datasets.

Observation 2: The YOLOv5 model is dominant on the COCO dataset, and YOLOv8 is dominant on the PASCAL VOC dataset.
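To make the complete IoU (CIoU) term mentioned in the analysis above more concrete, the sketch below computes it for two axis-aligned boxes, following the commonly published definition: the IoU is penalized by the normalized distance between box centers and by an aspect-ratio consistency term. This is an illustrative scalar version, not the implementation used by any particular YOLO release; boxes are assumed to be given as `(x1, y1, x2, y2)` with positive width and height.

```python
import math


def ciou(box_a, box_b):
    """Complete IoU between two boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    wa, ha = ax2 - ax1, ay2 - ay1
    wb, hb = bx2 - bx1, by2 - by1

    # Plain IoU: overlap area divided by union area.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    iou = inter / (wa * ha + wb * hb - inter)

    # Squared distance between the two box centers.
    rho2 = ((ax1 + ax2) / 2 - (bx1 + bx2) / 2) ** 2 \
        + ((ay1 + ay2) / 2 - (by1 + by2) / 2) ** 2
    # Squared diagonal of the smallest box enclosing both boxes.
    c2 = (max(ax2, bx2) - min(ax1, bx1)) ** 2 \
        + (max(ay2, by2) - min(ay1, by1)) ** 2

    # Aspect-ratio consistency term and its trade-off weight.
    v = (4 / math.pi ** 2) * (math.atan(wb / hb) - math.atan(wa / ha)) ** 2
    alpha = v / ((1 - iou) + v) if v > 0 else 0.0

    return iou - rho2 / c2 - alpha * v


if __name__ == "__main__":
    print(ciou((0, 0, 2, 2), (1, 1, 3, 3)))
```

The corresponding loss is `1 - ciou(prediction, target)`. Unlike a loss based on plain IoU, it still varies with the center distance when the boxes do not overlap at all.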

3.3.2. Intersection over Union (IoU) Thresholds

Next, we conduct experiments using various IoU thresholds to observe how YOLO models perform on the two datasets. In detail, four different IoU thresholds (i.e., 50, 75, 85, and 95) are set up in the experiment to measure the performance of the YOLO models using mAP. Figure 5a and Figure 5b present the mAP for six YOLO models on the COCO and PASCAL VOC datasets, respectively.
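The role of the IoU threshold can be illustrated with a small sketch that computes the IoU of predicted and ground-truth boxes and counts how many predictions qualify as correct at each threshold. The thresholds are written as fractions (0.50 for 50, and so on); the boxes are made-up values chosen only to show the effect, and treating a prediction as correct when its IoU is greater than or equal to the threshold is an assumption about the matching rule.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    return inter / (area_a + area_b - inter)


def count_correct(pairs, threshold):
    """Count (prediction, ground truth) pairs whose IoU meets the threshold."""
    return sum(1 for pred, truth in pairs if iou(pred, truth) >= threshold)


if __name__ == "__main__":
    truth = (0, 0, 100, 100)
    # Hypothetical predictions with progressively worse localization.
    predictions = [(0, 0, 100, 100), (0, 0, 100, 90), (0, 0, 100, 80),
                   (0, 0, 100, 60), (0, 0, 100, 40)]
    pairs = [(p, truth) for p in predictions]
    for threshold in (0.50, 0.75, 0.85, 0.95):
        print(threshold, count_correct(pairs, threshold))
```

Because the set of predictions accepted at a higher threshold is always a subset of those accepted at a lower one, the count of correct predictions can only stay the same or shrink as the threshold rises.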

As shown in the two figures, as the IoU threshold increases (e.g., from 50 to 95), the mAP of all YOLO models tends to decrease. This finding follows from Equations (5) and (6): a higher IoU threshold sets a higher standard for a good prediction, so fewer predictions are considered correct. Hence, we make the first observation related to the IoU threshold as follows.

Also in Figure 5, we can observe that YOLOv5 and YOLOv8 outperform the rest of the models at most of the IoU thresholds. For example, on the COCO dataset, YOLOv5 and YOLOv8 obtain the highest mAP at three of the four IoU thresholds. On the PASCAL VOC dataset, YOLOv5 and YOLOv8 outperform the other models at two IoU thresholds (85 and 95).

This better performance can be attributed to various architectural improvements and optimizations introduced in YOLOv5 and YOLOv8, such as CSPDarknet53, PANet, the binary cross-entropy with logits loss function, and the CIoU loss. The architectural design of each YOLO model plays a crucial role in its performance at various IoU thresholds. YOLOv3, YOLOv4, YOLOv5, YOLOv6, YOLOv7, and YOLOv8 have distinct network structures, layer configurations, and feature fusion mechanisms. These architectural variances affect the models’ ability to handle object detection tasks at varying IoU thresholds. For example, some models may prioritize precision at higher IoU thresholds, whereas others may focus on recall at lower IoU thresholds. Hence, we provide a second observation on the best YOLO models when varying the IoU threshold.

3.3.3. Class of Objects

Figure 6 and Figure 7 illustrate the performance of various object classes in the two datasets. As depicted in Figure 6, the performance of YOLO models on object classes in the COCO dataset fluctuates: the traffic light and bicycle classes perform the worst for all YOLO models, with the traffic light class scoring even lower than the bicycle class (e.g., with YOLOv7 and YOLOv3). Considering the same YOLO models at the same IoU thresholds, other object classes achieve substantially higher AP, demonstrating a large performance gap between object classes on the same dataset.

In contrast, the performance of YOLO models on object classes in the PASCAL VOC dataset remains more stable than in the COCO dataset, as presented in Figure 7, because the objects in PASCAL VOC are better suited to object detection than those in COCO. The fluctuating performance on the COCO object classes and the stable performance on the PASCAL VOC object classes indicate that the data quality of object classes can influence the performance of YOLO models. Thus, the first observation related to the object classes is provided below.

Table 4 and Table 5 detail the YOLO performance at the IoU threshold of 50 and the average YOLO performance over the range of IoU thresholds on the COCO dataset, respectively. The performance on the PASCAL VOC dataset is presented in Table 6 and Table 7. As shown in Table 4 and Table 6, at the low IoU threshold of 50, YOLOv4 outperforms the other YOLO models on both datasets. For example, on the COCO dataset (Table 4), YOLOv4 achieves the highest performance on six object classes (i.e., bicycle, car, bus, truck, traffic light, and parking meter). On the PASCAL VOC dataset, Table 6 shows that YOLOv4 is dominant as well. However, when the AP is averaged over the IoU thresholds, as displayed in Table 5 and Table 7, the dominant models are YOLOv5 and YOLOv8 on the COCO and PASCAL VOC datasets, respectively. For example, Table 5 shows the object classes on which YOLOv5 leads on the COCO dataset, whereas Table 7 shows those on which YOLOv8 leads on the PASCAL VOC dataset. Thus, YOLOv5 and YOLOv8 outperform the other models for most object classes in the two datasets, leading to the following observation.

Observation 6: Both YOLOv5 and YOLOv8 outperform the other models on most of the object classes.
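The per-class comparison behind this observation amounts to tallying, for each object class, which model attains the highest AP. A minimal sketch follows; the table layout is hypothetical and the AP values are made up for illustration, so they do not reproduce Tables 4 to 7.

```python
from collections import Counter


def count_class_wins(ap_table):
    """Count how many object classes each model leads.

    ap_table: {class_name: {model_name: AP}} (hypothetical layout).
    Ties are resolved in favor of the model listed first for that class.
    """
    wins = Counter()
    for scores in ap_table.values():
        best_model = max(scores, key=scores.get)
        wins[best_model] += 1
    return wins


if __name__ == "__main__":
    # Made-up AP values, not results from the experiments.
    example = {
        "car": {"YOLOv4": 0.61, "YOLOv5": 0.66, "YOLOv8": 0.64},
        "bus": {"YOLOv4": 0.70, "YOLOv5": 0.72, "YOLOv8": 0.75},
        "person": {"YOLOv4": 0.58, "YOLOv5": 0.63, "YOLOv8": 0.60},
    }
    print(count_class_wins(example).most_common())
```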

The following section summarizes the overall performance results, demonstrating that YOLOv4, YOLOv5, and YOLOv8 outperform the other YOLO models on the two datasets.

3.4. Summary

In summary, the experimental results confirm the anticipated effects of datasets, object classes, and IoU thresholds on the performance of YOLO models. Specifically, first, YOLO models trained and validated on different datasets can perform disparately. Second, the higher the IoU threshold, the lower the YOLO performance. Last, YOLO performance across object classes fluctuates or stabilizes depending on the dataset quality.

Moreover, the experimental results show that YOLOv5 and YOLOv8 consistently outperform the other models across the IoU thresholds ranging from 50 to 95 on the COCO and PASCAL VOC datasets. Additionally, at the low IoU threshold of 50, YOLOv4 exhibits the best performance on both datasets. These results suggest that YOLOv5 and YOLOv8 are promising choices for object detection tasks in the road transportation scenario, due to their strong performance across a suitable range of IoU thresholds (i.e., from 50 to 95).

4. Related Work

Several studies have focused on evaluating the performance of YOLO variants in object detection. For example, Liule et al. [63] experiment on three versions: YOLOv3, YOLOv4, and YOLOv5. They evaluate and analyze the performance of the three YOLO variants regarding accuracy and inference speed by training and predicting on the PASCAL VOC dataset. The present study aligns with their research in evaluating and analyzing the performance of YOLO variants on PASCAL VOC. In contrast, we evaluate and analyze more YOLO variants, including the latest one (YOLOv8). Further, we consider their performance on various datasets (COCO and PASCAL VOC) and IoU thresholds, whereas [63] does not consider such diverse factors.

Nazir et al. [35] provide a review of the performance of YOLO variants (YOLO versions 1 to 5). Like [63], they study the accuracy and inference speed of various YOLO models, but on various datasets (i.e., the COCO, KITTI, and SUN-RGBD datasets). The present work aligns with this research regarding evaluating the performance of YOLO variants on various datasets. In contrast, the present study provides a performance evaluation of YOLO variants on various datasets and contributes an empirical analysis of the performance evaluation. Furthermore, this article considers YOLO performance at various IoU thresholds, whereas [35] does not.

Sozzi et al. [36] present a comparative evaluation of the accuracy and inference speed of various versions of YOLO object detection algorithms, such as YOLOv3, YOLOv3-tiny, YOLOv4, YOLOv4-tiny, YOLOv5x, and YOLOv5s, for grape-yield estimation. The current study aligns with this work regarding providing a performance evaluation of YOLO variants in object detection tasks. However, while [36] focuses on object detection tasks in smart farming, the current study focuses on smart transportation. Therefore, the datasets used in the experiments are different. Moreover, the current study considers more variants of YOLO than [36], as well as more datasets and more IoU thresholds.

Finally, this study empirically evaluates and analyzes various YOLO variants considering multiple datasets, object classes in the datasets, and IoU thresholds. Moreover, it considers the scenario of smart transportation (i.e., road transportation), which is novel and imperative among object detection studies in smart transportation.