Submitted:

13 September 2024

Posted:

14 September 2024


Abstract

Keywords:

1. Introduction

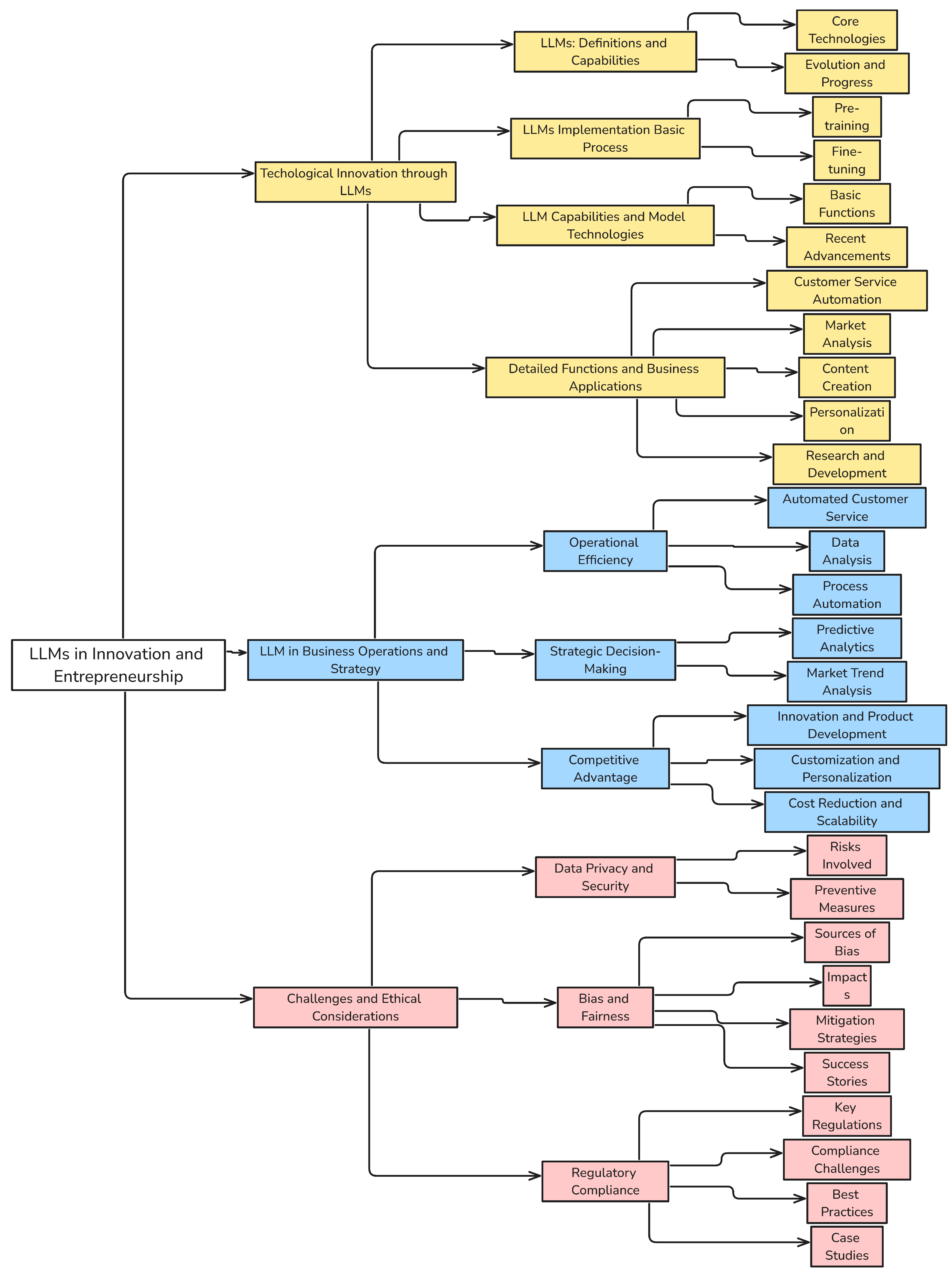

2. Technological Innovation through LLMs

2.1. LLMs: Definitions and Capabilities

2.2. LLMs Implementation Basic Process

Pre-training Stage.

Fine-tuning Stage.

2.3. LLM Capabilities and Model Technologies

Basic Functions.

Recent Advancements.

2.4. Detailed Functions and Business Applications

- Personalization. Tailoring user experiences on digital platforms to each user's behavior and preferences [89]. This can increase customer loyalty and drive higher sales through personalized recommendations and communications.
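As a rough illustration of the personalization loop described above (behavior data feeding a preference profile, which in turn drives tailored recommendations and messages), the following Python sketch ranks unseen catalog items by how well their tags match a user's interaction history and then assembles a prompt that a platform could send to an LLM to draft a personalized message. All names (`Item`, `build_profile`, `recommend`, `personalized_prompt`), the tag-counting heuristic, the sample data, and the prompt wording are hypothetical assumptions for illustration. The sketch does not call any LLM API and is not the method of the cited work.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    """Hypothetical catalog entry; tags stand in for whatever features a platform tracks."""
    item_id: str
    title: str
    tags: frozenset[str]


def build_profile(history: list[Item]) -> Counter:
    """Count how often each tag occurs among the items the user interacted with."""
    profile: Counter = Counter()
    for item in history:
        profile.update(item.tags)
    return profile


def score(item: Item, profile: Counter) -> int:
    """Sum the user's tag counts over the tags this item carries (assumed heuristic)."""
    return sum(profile[tag] for tag in item.tags)


def recommend(history: list[Item], catalog: list[Item], k: int = 2) -> list[Item]:
    """Return the top-k unseen items; sorted() is stable, so ties keep catalog order."""
    seen = {item.item_id for item in history}
    profile = build_profile(history)
    candidates = [item for item in catalog if item.item_id not in seen]
    return sorted(candidates, key=lambda item: score(item, profile), reverse=True)[:k]


def personalized_prompt(user_name: str, history: list[Item], picks: list[Item]) -> str:
    """Build a prompt for an LLM; the template is a hypothetical example, not a standard."""
    recent = ", ".join(item.title for item in history[-3:])
    offers = "\n".join(f"- {item.title}" for item in picks)
    return (
        f"Write a friendly two-sentence message to {user_name}, "
        f"who recently viewed: {recent}.\n"
        f"Recommend the following products:\n{offers}"
    )


# Usage with made-up sample data.
history = [
    Item("a1", "Trail running shoes", frozenset({"running", "outdoor"})),
    Item("a2", "Hydration vest", frozenset({"running", "outdoor"})),
]
catalog = [
    Item("a1", "Trail running shoes", frozenset({"running", "outdoor"})),
    Item("b1", "Office chair", frozenset({"furniture"})),
    Item("b2", "GPS sports watch", frozenset({"running", "electronics"})),
    Item("b3", "Camping tent", frozenset({"outdoor"})),
]
picks = recommend(history, catalog)
prompt = personalized_prompt("Dana", history, picks)
print([item.item_id for item in picks])  # ['b2', 'b3']
print(prompt)
```

The split between a cheap, explainable ranking step and an LLM-generated message is one plausible design: the ranking decides what to recommend, while the language model only handles phrasing. In a production system, the tag counts would likely be replaced by learned embeddings or a dedicated recommender.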

3. LLMs in Business Operations and Strategy

3.1. Operational Efficiency

Automated Customer Service.

Data Analysis.

Process Automation.

3.2. Strategic Decision-Making

Predictive Analytics with LLMs.

Market Trend Analysis.

3.3. Competitive Advantage

Innovation and Product Development.

Advanced Language Models in Industry.

Emerging LLMs Showcasing Advanced Capabilities.

Customization and Personalization.

Cost Reduction and Scalability.

4. Challenges and Ethical Considerations

4.1. Data Privacy and Security

4.2. Bias and Fairness

4.3. Regulatory Compliance

5. Conclusion

References

- Bengio, Y.; Ducharme, R.; Vincent, P. A neural probabilistic language model. Advances in Neural Information Processing Systems 2000, 13. [Google Scholar]

- Collobert, R.; Weston, J.; Bottou, L.; Karlen, M.; Kavukcuoglu, K.; Kuksa, P. Natural language processing (almost) from scratch. Journal of Machine Learning Research 2011, 12, 2493–2537. [Google Scholar]

- Pinker, S. The language instinct: How the mind creates language; Penguin UK, 2003. [Google Scholar]

- Hauser, M.D.; Chomsky, N.; Fitch, W.T. The faculty of language: what is it, who has it, and how did it evolve? Science 2002, 298, 1569–1579. [Google Scholar] [CrossRef]

- Turing, A.M. Computing machinery and intelligence; Springer, 2009. [Google Scholar]

- Stolcke, A.; others. SRILM – an extensible language modeling toolkit. Interspeech, 2002.

- Gao, J.; Lin, C.Y. Introduction to the special issue on statistical language modeling, 2004. [CrossRef]

- Rosenfeld, R. Two decades of statistical language modeling: Where do we go from here? Proceedings of the IEEE 2000, 88, 1270–1278. [Google Scholar] [CrossRef]

- Liu, X.; Croft, W.B. Statistical language modeling for information retrieval. Annu. Rev. Inf. Sci. Technol. 2005, 39, 1–31. [Google Scholar] [CrossRef]

- Thede, S.M.; Harper, M. A second-order hidden Markov model for part-of-speech tagging. Proceedings of the 37th annual meeting of the Association for Computational Linguistics, 1999, pp. 175–182.

- Bahl, L.R.; Brown, P.F.; De Souza, P.V.; Mercer, R.L. A tree-based statistical language model for natural language speech recognition. IEEE Transactions on Acoustics, Speech, and Signal Processing 1989, 37, 1001–1008. [Google Scholar] [CrossRef]

- Yao, Y.; Duan, J.; Xu, K.; Cai, Y.; Sun, Z.; Zhang, Y. A survey on large language model (LLM) security and privacy: The good, the bad, and the ugly. High-Confidence Computing 2024, p. 100211. [CrossRef]

- Wang, Z.; Wang, P.; Liu, K.; Wang, P.; Fu, Y.; Lu, C.T.; Aggarwal, C.C.; Pei, J.; Zhou, Y. A Comprehensive Survey on Data Augmentation. arXiv 2024, arXiv:2405.09591. [Google Scholar]

- Kalyan, K.S. A survey of GPT-3 family large language models including ChatGPT and GPT-4. Natural Language Processing Journal 2023, 100048. [Google Scholar] [CrossRef]

- Zhao, W.X.; Zhou, K.; Li, J.; Tang, T.; Wang, X.; Hou, Y.; Min, Y.; Zhang, B.; Zhang, J.; Dong, Z.; others. A survey of large language models. arXiv 2023, arXiv:2303.18223. [Google Scholar]

- Chang, Y.; Wang, X.; Wang, J.; Wu, Y.; Yang, L.; Zhu, K.; Chen, H.; Yi, X.; Wang, C.; Wang, Y.; others. A survey on evaluation of large language models. ACM Transactions on Intelligent Systems and Technology 2024, 15, 1–45. [Google Scholar] [CrossRef]

- Zhang, X.; Zhang, J.; Rekabdar, B.; Zhou, Y.; Wang, P.; Liu, K. Dynamic and Adaptive Feature Generation with LLM. arXiv 2024, arXiv:2406.03505. [Google Scholar]

- Minaee, S.; Mikolov, T.; Nikzad, N.; Chenaghlu, M.; Socher, R.; Amatriain, X.; Gao, J. Large language models: A survey. arXiv 2024, arXiv:2402.06196. [Google Scholar]

- Zhao, H.; Chen, H.; Yang, F.; Liu, N.; Deng, H.; Cai, H.; Wang, S.; Yin, D.; Du, M. Explainability for large language models: A survey. ACM Transactions on Intelligent Systems and Technology 2024, 15, 1–38. [Google Scholar] [CrossRef]

- Vaswani, A. Attention is all you need. Advances in Neural Information Processing Systems 2017. [Google Scholar]

- Huang, K.; Mo, F.; Li, H.; Li, Y.; Zhang, Y.; Yi, W.; Mao, Y.; Liu, J.; Xu, Y.; Xu, J.; others. A Survey on Large Language Models with Multilingualism: Recent Advances and New Frontiers. arXiv 2024, arXiv:2405.10936. [Google Scholar]

- Su, Z.; Zhou, Y.; Mo, F.; Simonsen, J.G. Language modeling using tensor trains. arXiv 2024, arXiv:2405.04590. [Google Scholar]

- Wang, C.; Li, M.; Smola, A.J. Language models with transformers. arXiv 2019, arXiv:1904.09408. [Google Scholar]

- Sun, Y.; Dong, L.; Huang, S.; Ma, S.; Xia, Y.; Xue, J.; Wang, J.; Wei, F. Retentive network: A successor to transformer for large language models. arXiv 2023, arXiv:2307.08621. [Google Scholar]

- Wang, J.; Mo, F.; Ma, W.; Sun, P.; Zhang, M.; Nie, J.Y. A User-Centric Benchmark for Evaluating Large Language Models. arXiv 2024, arXiv:2404.13940. [Google Scholar]

- Mao, K.; Dou, Z.; Mo, F.; Hou, J.; Chen, H.; Qian, H. Large language models know your contextual search intent: A prompting framework for conversational search. arXiv 2023, arXiv:2303.06573. [Google Scholar]

- Dai, Z.; Yang, Z.; Yang, Y.; Carbonell, J.; Le, Q.V.; Salakhutdinov, R. Transformer-XL: Attentive language models beyond a fixed-length context. arXiv 2019, arXiv:1901.02860. [Google Scholar]

- Thirunavukarasu, A.J.; Ting, D.S.J.; Elangovan, K.; Gutierrez, L.; Tan, T.F.; Ting, D.S.W. Large language models in medicine. Nature Medicine 2023, 29, 1930–1940. [Google Scholar] [CrossRef] [PubMed]

- Meyer, J.G.; Urbanowicz, R.J.; Martin, P.C.; O’Connor, K.; Li, R.; Peng, P.C.; Bright, T.J.; Tatonetti, N.; Won, K.J.; Gonzalez-Hernandez, G.; others. ChatGPT and large language models in academia: opportunities and challenges. BioData Mining 2023, 16, 20. [Google Scholar] [CrossRef]

- Goldberg, A. AI in Finance: Leveraging Large Language Models for Enhanced Decision-Making and Risk Management. Social Science Journal for Advanced Research 2024, 4, 33–40. [Google Scholar]

- Li, H.; Gao, H.; Wu, C.; Vasarhelyi, M.A. Extracting financial data from unstructured sources: Leveraging large language models. Journal of Information Systems 2023, 1–22. [Google Scholar] [CrossRef]

- Birhane, A.; Kasirzadeh, A.; Leslie, D.; Wachter, S. Science in the age of large language models. Nature Reviews Physics 2023, 5, 277–280. [Google Scholar] [CrossRef]

- Liu, Y.; Han, T.; Ma, S.; Zhang, J.; Yang, Y.; Tian, J.; He, H.; Li, A.; He, M.; Liu, Z.; others. Summary of ChatGPT-related research and perspective towards the future of large language models. Meta-Radiology 2023, 100017. [Google Scholar] [CrossRef]

- Zhang, X.; Wang, Z.; Jiang, L.; Gao, W.; Wang, P.; Liu, K. TFWT: Tabular Feature Weighting with Transformer. arXiv 2024, arXiv:2405.08403. [Google Scholar]

- Abd-Alrazaq, A.; AlSaad, R.; Alhuwail, D.; Ahmed, A.; Healy, P.M.; Latifi, S.; Aziz, S.; Damseh, R.; Alrazak, S.A.; Sheikh, J.; others. Large language models in medical education: opportunities, challenges, and future directions. JMIR Medical Education 2023, 9, e48291. [Google Scholar] [CrossRef]

- Zeng, J.; Chen, B.; Deng, Y.; Chen, W.; Mao, Y.; Li, J. Fine-tuning of financial Large Language Model and application at edge device. Proceedings of the 3rd International Conference on Computer, Artificial Intelligence and Control Engineering, 2024, pp. 42–47.

- Zhao, H.; Liu, Z.; Wu, Z.; Li, Y.; Yang, T.; Shu, P.; Xu, S.; Dai, H.; Zhao, L.; Mai, G.; others. Revolutionizing finance with LLMs: An overview of applications and insights. arXiv 2024, arXiv:2401.11641. [Google Scholar]

- Hadi, M.U.; Al Tashi, Q.; Shah, A.; Qureshi, R.; Muneer, A.; Irfan, M.; Zafar, A.; Shaikh, M.B.; Akhtar, N.; Wu, J.; others. Large language models: a comprehensive survey of its applications, challenges, limitations, and future prospects. Authorea Preprints 2024.

- Li, X.V.; Passino, F.S. FinDKG: Dynamic Knowledge Graphs with Large Language Models for Detecting Global Trends in Financial Markets. arXiv 2024, arXiv:2407.10909. [Google Scholar]

- Carlini, N.; Tramer, F.; Wallace, E.; Jagielski, M.; Herbert-Voss, A.; Lee, K.; Roberts, A.; Brown, T.; Song, D.; Erlingsson, U.; others. Extracting training data from large language models. 30th USENIX Security Symposium (USENIX Security 21), 2021, pp. 2633–2650.

- Naveed, H.; Khan, A.U.; Qiu, S.; Saqib, M.; Anwar, S.; Usman, M.; Akhtar, N.; Barnes, N.; Mian, A. A comprehensive overview of large language models. arXiv 2023, arXiv:2307.06435. [Google Scholar]

- Shi, W.; Ajith, A.; Xia, M.; Huang, Y.; Liu, D.; Blevins, T.; Chen, D.; Zettlemoyer, L. Detecting pretraining data from large language models. arXiv 2023, arXiv:2310.16789. [Google Scholar]

- Korbak, T.; Shi, K.; Chen, A.; Bhalerao, R.V.; Buckley, C.; Phang, J.; Bowman, S.R.; Perez, E. Pretraining language models with human preferences. International Conference on Machine Learning. PMLR, 2023, pp. 17506–17533.

- Gururangan, S.; Marasović, A.; Swayamdipta, S.; Lo, K.; Beltagy, I.; Downey, D.; Smith, N.A. Don’t stop pretraining: Adapt language models to domains and tasks. arXiv 2020, arXiv:2004.10964. [Google Scholar]

- Petroni, F.; Rocktäschel, T.; Lewis, P.; Bakhtin, A.; Wu, Y.; Miller, A.H.; Riedel, S. Language models as knowledge bases? arXiv 2019, arXiv:1909.01066. [Google Scholar]

- Bert, C.W.; Malik, M. Differential quadrature method in computational mechanics: a review 1996.

- Chowdhery, A.; Narang, S.; Devlin, J.; Bosma, M.; Mishra, G.; Roberts, A.; Barham, P.; Chung, H.W.; Sutton, C.; Gehrmann, S.; others. PaLM: Scaling language modeling with pathways. Journal of Machine Learning Research 2023, 24, 1–113. [Google Scholar] [CrossRef]

- Zhang, S.; Dong, L.; Li, X.; Zhang, S.; Sun, X.; Wang, S.; Li, J.; Hu, R.; Zhang, T.; Wu, F.; others. Instruction tuning for large language models: A survey. arXiv 2023, arXiv:2308.10792. [Google Scholar]

- Wang, Y.; Zhong, W.; Li, L.; Mi, F.; Zeng, X.; Huang, W.; Shang, L.; Jiang, X.; Liu, Q. Aligning large language models with human: A survey. arXiv 2023, arXiv:2307.12966. [Google Scholar]

- Zhang, J.; Wang, X.; Jin, Y.; Chen, C.; Zhang, X.; Liu, K. Prototypical Reward Network for Data-Efficient RLHF. arXiv 2024, arXiv:2406.06606. [Google Scholar]

- Min, B.; Ross, H.; Sulem, E.; Veyseh, A.P.B.; Nguyen, T.H.; Sainz, O.; Agirre, E.; Heintz, I.; Roth, D. Recent advances in natural language processing via large pre-trained language models: A survey. ACM Computing Surveys 2023, 56, 1–40. [Google Scholar] [CrossRef]

- Mahowald, K.; Ivanova, A.A.; Blank, I.A.; Kanwisher, N.; Tenenbaum, J.B.; Fedorenko, E. Dissociating language and thought in large language models. Trends in Cognitive Sciences 2024. [Google Scholar] [CrossRef]

- Meier, J.; Rao, R.; Verkuil, R.; Liu, J.; Sercu, T.; Rives, A. Language models enable zero-shot prediction of the effects of mutations on protein function. Advances in Neural Information Processing Systems 2021, 34, 29287–29303. [Google Scholar]

- Madani, A.; Krause, B.; Greene, E.R.; Subramanian, S.; Mohr, B.P.; Holton, J.M.; Olmos, J.L.; Xiong, C.; Sun, Z.Z.; Socher, R.; others. Large language models generate functional protein sequences across diverse families. Nature Biotechnology 2023, 41, 1099–1106. [Google Scholar] [CrossRef]

- Rafailov, R.; Sharma, A.; Mitchell, E.; Manning, C.D.; Ermon, S.; Finn, C. Direct preference optimization: Your language model is secretly a reward model. Advances in Neural Information Processing Systems 2024, 36. [Google Scholar]

- OpenAI. GPT-3.5 Turbo Fine-Tuning and API Updates. https://openai.com/index/gpt-3-5-turbo-fine-tuning-and-api-updates/, 2023. Accessed: 2024-05-20.

- Huang, W.; Abbeel, P.; Pathak, D.; Mordatch, I. Language models as zero-shot planners: Extracting actionable knowledge for embodied agents. International Conference on Machine Learning. PMLR, 2022, pp. 9118–9147.

- Zhang, S.; Chen, Z.; Shen, Y.; Ding, M.; Tenenbaum, J.B.; Gan, C. Planning with large language models for code generation. arXiv 2023, arXiv:2303.05510. [Google Scholar]

- Zhang, J.; Wang, X.; Ren, W.; Jiang, L.; Wang, D.; Liu, K. RATT: A Thought Structure for Coherent and Correct LLM Reasoning. arXiv 2024, arXiv:2406.02746. [Google Scholar]

- Zhao, P.; Zhang, H.; Yu, Q.; Wang, Z.; Geng, Y.; Fu, F.; Yang, L.; Zhang, W.; Cui, B. Retrieval-augmented generation for ai-generated content: A survey. arXiv 2024, arXiv:2402.19473. [Google Scholar]

- Renze, M.; Guven, E. Self-Reflection in LLM Agents: Effects on Problem-Solving Performance. arXiv 2024, arXiv:2405.06682. [Google Scholar]

- Noor, A.K.; Burton, W.S.; Bert, C.W. Computational models for sandwich panels and shells 1996. [CrossRef]

- Adoma, A.F.; Henry, N.M.; Chen, W. Comparative analyses of BERT, RoBERTa, DistilBERT, and XLNet for text-based emotion recognition. 2020 17th International Computer Conference on Wavelet Active Media Technology and Information Processing (ICCWAMTIP). IEEE, 2020, pp. 117–121.

- Raffel, C.; Shazeer, N.; Roberts, A.; Lee, K.; Narang, S.; Matena, M.; Zhou, Y.; Li, W.; Liu, P.J. Exploring the limits of transfer learning with a unified text-to-text transformer. Journal of Machine Learning Research 2020, 21, 1–67. [Google Scholar]

- Guo, M.; Ainslie, J.; Uthus, D.; Ontanon, S.; Ni, J.; Sung, Y.H.; Yang, Y. LongT5: Efficient text-to-text transformer for long sequences. arXiv 2021, arXiv:2112.07916. [Google Scholar]

- Mastropaolo, A.; Scalabrino, S.; Cooper, N.; Palacio, D.N.; Poshyvanyk, D.; Oliveto, R.; Bavota, G. Studying the usage of text-to-text transfer transformer to support code-related tasks. 2021 IEEE/ACM 43rd International Conference on Software Engineering (ICSE). IEEE, 2021, pp. 336–347.

- Li, Z.; Guan, B.; Wei, Y.; Zhou, Y.; Zhang, J.; Xu, J. Mapping new realities: Ground truth image creation with pix2pix image-to-image translation. arXiv 2024, arXiv:2404.19265. [Google Scholar]

- Zhang, B.; Yang, H.; Zhou, T.; Ali Babar, M.; Liu, X.Y. Enhancing financial sentiment analysis via retrieval augmented large language models. Proceedings of the fourth ACM international conference on AI in finance, 2023, pp. 349–356.

- Ding, H.; Li, Y.; Wang, J.; Chen, H. Large Language Model Agent in Financial Trading: A Survey. arXiv 2024, arXiv:2408.06361. [Google Scholar]

- Xue, L. mT5: A massively multilingual pre-trained text-to-text transformer. arXiv 2020, arXiv:2010.11934. [Google Scholar] [CrossRef]

- Wang, L.; Zhang, X.; Li, K.; Zhang, S. Semi-supervised learning for k-dependence Bayesian classifiers. Applied Intelligence 2022, 1–19. [Google Scholar] [CrossRef]

- Wang, L.; Zhang, S.; Mammadov, M.; Li, K.; Zhang, X.; Wu, S. Semi-supervised weighting for averaged one-dependence estimators. Applied Intelligence 2022, 1–17. [Google Scholar] [CrossRef]

- Yuan, Y.; Huang, Y.; Ma, Y.; Li, X.; Li, Z.; Shi, Y.; Zhou, H. Rhyme-aware Chinese lyric generator based on GPT. arXiv 2024, arXiv:2408.10130. [Google Scholar]

- Zhang, J.; Cao, J.; Chang, J.; Li, X.; Liu, H.; Li, Z. Research on the Application of Computer Vision Based on Deep Learning in Autonomous Driving Technology. arXiv 2024, arXiv:2406.00490. [Google Scholar]

- Xie, Q.; Han, W.; Zhang, X.; Lai, Y.; Peng, M.; Lopez-Lira, A.; Huang, J. Pixiu: A large language model, instruction data and evaluation benchmark for finance. arXiv 2023, arXiv:2306.05443. [Google Scholar]

- Kang, H.; Liu, X.Y. Deficiency of large language models in finance: An empirical examination of hallucination. I Can’t Believe It’s Not Better Workshop: Failure Modes in the Age of Foundation Models, 2023.

- Robles-Serrano, S.; Rios-Perez, J.; Sanchez-Torres, G. Integration of Large Language Models in Mobile Applications for Statutory Auditing and Finance. Prospectiva (1692-8261) 2024, 22. [Google Scholar]

- Xie, Q.; Huang, J.; Li, D.; Chen, Z.; Xiang, R.; Xiao, M.; Yu, Y.; Somasundaram, V.; Yang, K.; Yuan, C.; others. FinNLP-AgentScen-2024 Shared Task: Financial Challenges in Large Language Models-FinLLMs. Proceedings of the Eighth Financial Technology and Natural Language Processing and the 1st Agent AI for Scenario Planning, 2024, pp. 119–126.

- Wu, S.; Irsoy, O.; Lu, S.; Dabravolski, V.; Dredze, M.; Gehrmann, S.; Kambadur, P.; Rosenberg, D.; Mann, G. BloombergGPT: A large language model for finance. arXiv 2023, arXiv:2303.17564. [Google Scholar]

- Li, Y.; Wang, S.; Ding, H.; Chen, H. Large language models in finance: A survey. Proceedings of the fourth ACM international conference on AI in finance, 2023, pp. 374–382.

- Yang, Y.; Uy, M.C.S.; Huang, A. FinBERT: A pretrained language model for financial communications. arXiv 2020, arXiv:2006.08097. [Google Scholar]

- Huang, A.H.; Wang, H.; Yang, Y. FinBERT: A large language model for extracting information from financial text. Contemporary Accounting Research 2023, 40, 806–841. [Google Scholar] [CrossRef]

- Park, T. Enhancing Anomaly Detection in Financial Markets with an LLM-based Multi-Agent Framework. arXiv 2024, arXiv:2403.19735. [Google Scholar]

- Kim, D.; Lee, D.; Park, J.; Oh, S.; Kwon, S.; Lee, I.; Choi, D. KB-BERT: Training and Application of Korean Pre-trained Language Model in Financial Domain. Journal of Intelligence and Information Systems 2022, 28, 191–206. [Google Scholar]

- Krause, D. Large language models and generative AI in finance: an analysis of ChatGPT, Bard, and Bing AI. July 15, 2023.

- Liu, X.Y.; Wang, G.; Yang, H.; Zha, D. FinGPT: Democratizing internet-scale data for financial large language models. arXiv 2023, arXiv:2307.10485. [Google Scholar]

- Mo, F.; Qu, C.; Mao, K.; Zhu, T.; Su, Z.; Huang, K.; Nie, J.Y. History-Aware Conversational Dense Retrieval. arXiv 2024, arXiv:2401.16659. [Google Scholar]

- Zhang, C.; Liu, X.; Jin, M.; Zhang, Z.; Li, L.; Wang, Z.; Hua, W.; Shu, D.; Zhu, S.; Jin, X.; others. When AI Meets Finance (StockAgent): Large Language Model-based Stock Trading in Simulated Real-world Environments. arXiv 2024, arXiv:2407.18957. [Google Scholar]

- Krumdick, M.; Koncel-Kedziorski, R.; Lai, V.; Reddy, V.; Lovering, C.; Tanner, C. BizBench: A Quantitative Reasoning Benchmark for Business and Finance. Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), 2024, pp. 8309–8332.

- Wang, L.; Ma, C.; Feng, X.; Zhang, Z.; Yang, H.; Zhang, J.; Chen, Z.; Tang, J.; Chen, X.; Lin, Y.; others. A survey on large language model based autonomous agents. Frontiers of Computer Science 2024, 18, 186345. [Google Scholar] [CrossRef]

- Peng, C.; Yang, X.; Chen, A.; Smith, K.E.; PourNejatian, N.; Costa, A.B.; Martin, C.; Flores, M.G.; Zhang, Y.; Magoc, T.; others. A study of generative large language model for medical research and healthcare. npj Digital Medicine 2023, 6, 210. [Google Scholar] [CrossRef]

- Obschonka, M.; Audretsch, D.B. Artificial intelligence and big data in entrepreneurship: a new era has begun. Small Business Economics 2020, 55, 529–539. [Google Scholar] [CrossRef]

- Weber, M.; Beutter, M.; Weking, J.; Böhm, M.; Krcmar, H. AI startup business models: Key characteristics and directions for entrepreneurship research. Business & Information Systems Engineering 2022, 64, 91–109. [Google Scholar]

- Hu, T.; Zhu, W.; Yan, Y. Artificial intelligence aspect of transportation analysis using large scale systems. Proceedings of the 2023 6th Artificial Intelligence and Cloud Computing Conference, 2023, pp. 54–59.

- Zhu, W. Optimizing distributed networking with big data scheduling and cloud computing. International Conference on Cloud Computing, Internet of Things, and Computer Applications (CICA 2022). SPIE, 2022, Vol. 12303, pp. 23–28.

- Yan, Y. Influencing Factors of Housing Price in New York-analysis: Based on Excel Multi-regression Model 2022.

- Khang, A.; Jadhav, B.; Dave, T. Enhancing Financial Services. Synergy of AI and Fintech in the Digital Gig Economy 2024, p. 147.

- Crosman, P. Banking 2025: The rise of the invisible bank. American Banker 2019. [Google Scholar]

- Wendlinger, B. The challenge of FinTech from the perspective of German incumbent banks: an exploratory study investigating industry trends and considering the future of banking. PhD thesis, 2022.

- Kang, Y.; Xu, Y.; Chen, C.P.; Li, G.; Cheng, Z. 6: Simultaneous Tracking, Tagging and Mapping for Augmented Reality. SID Symposium Digest of Technical Papers. Wiley Online Library, 2021, Vol. 52, pp. 31–33. [CrossRef]

- Zhu, W.; Hu, T. Twitter Sentiment analysis of covid vaccines. 2021 5th International Conference on Artificial Intelligence and Virtual Reality (AIVR), 2021, pp. 118–122.

- Zhang, X.; Zhang, J.; Mo, F.; Chen, Y.; Liu, K. TIFG: Text-Informed Feature Generation with Large Language Models. arXiv 2024, arXiv:2406.11177. [Google Scholar]

- Mo, F.; Mao, K.; Zhu, Y.; Wu, Y.; Huang, K.; Nie, J.Y. ConvGQR: generative query reformulation for conversational search. arXiv 2023, arXiv:2305.15645. [Google Scholar]

- Mao, K.; Deng, C.; Chen, H.; Mo, F.; Liu, Z.; Sakai, T.; Dou, Z. ChatRetriever: Adapting Large Language Models for Generalized and Robust Conversational Dense Retrieval. arXiv 2024, arXiv:2404.13556. [Google Scholar]

- Zhu, Y.; Honnet, C.; Kang, Y.; Zhu, J.; Zheng, A.J.; Heinz, K.; Tang, G.; Musk, L.; Wessely, M.; Mueller, S. Demonstration of ChromoCloth: Re-Programmable Multi-Color Textures through Flexible and Portable Light Source. Adjunct Proceedings of the 36th Annual ACM Symposium on User Interface Software and Technology, 2023, pp. 1–3.

- Yang, X.; Kang, Y.; Yang, X. Retargeting destinations of passive props for enhancing haptic feedback in virtual reality. 2022 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW). IEEE, 2022, pp. 618–619.

- Kang, Y.; Zhang, Z.; Zhao, M.; Yang, X.; Yang, X. Tie Memories to E-souvenirs: Hybrid Tangible AR Souvenirs in the Museum. Adjunct Proceedings of the 35th Annual ACM Symposium on User Interface Software and Technology, 2022, pp. 1–3.

- Mo, F.; Nie, J.Y.; Huang, K.; Mao, K.; Zhu, Y.; Li, P.; Liu, Y. Learning to relate to previous turns in conversational search. Proceedings of the 29th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, 2023, pp. 1722–1732.

- Mo, F.; Yi, B.; Mao, K.; Qu, C.; Huang, K.; Nie, J.Y. ConvSDG: Session data generation for conversational search. Companion Proceedings of the ACM on Web Conference 2024, 2024, pp. 1634–1642. [Google Scholar]

- Grohs, M.; Abb, L.; Elsayed, N.; Rehse, J.R. Large language models can accomplish business process management tasks. International Conference on Business Process Management. Springer, 2023, pp. 453–465.

- Bellan, P.; Dragoni, M.; Ghidini, C. Extracting business process entities and relations from text using pre-trained language models and in-context learning. International Conference on Enterprise Design, Operations, and Computing. Springer, 2022, pp. 182–199.

- George, A.S.; George, A.H. A review of ChatGPT AI’s impact on several business sectors. Partners Universal International Innovation Journal 2023, 1, 9–23. [Google Scholar]

- Sha, H.; Mu, Y.; Jiang, Y.; Chen, L.; Xu, C.; Luo, P.; Li, S.E.; Tomizuka, M.; Zhan, W.; Ding, M. LanguageMPC: Large language models as decision makers for autonomous driving. arXiv 2023, arXiv:2310.03026. [Google Scholar]

- Li, S.; Puig, X.; Paxton, C.; Du, Y.; Wang, C.; Fan, L.; Chen, T.; Huang, D.A.; Akyürek, E.; Anandkumar, A.; others. Pre-trained language models for interactive decision-making. Advances in Neural Information Processing Systems 2022, 35, 31199–31212. [Google Scholar]

- Song, Y.; Arora, P.; Singh, R.; Varadharajan, S.T.; Haynes, M.; Starner, T. Going Blank Comfortably: Positioning Monocular Head-Worn Displays When They are Inactive. Proceedings of the 2023 International Symposium on Wearable Computers; ACM: Cancun, Quintana Roo Mexico, 2023; pp. 114–118. [Google Scholar] [CrossRef]

- Ni, H.; Meng, S.; Chen, X.; Zhao, Z.; Chen, A.; Li, P.; Zhang, S.; Yin, Q.; Wang, Y.; Chan, Y. Harnessing Earnings Reports for Stock Predictions: A QLoRA-Enhanced LLM Approach. arXiv 2024, arXiv:2408.06634. [Google Scholar]

- Chen, L.; Zhang, Y.; Ren, S.; Zhao, H.; Cai, Z.; Wang, Y.; Wang, P.; Liu, T.; Chang, B. Towards end-to-end embodied decision making via multi-modal large language model: Explorations with GPT4-Vision and beyond. arXiv 2023, arXiv:2310.02071. [Google Scholar]

- Zhou, Y.; Zeng, Z.; Chen, A.; Zhou, X.; Ni, H.; Zhang, S.; Li, P.; Liu, L.; Zheng, M.; Chen, X. Evaluating Modern Approaches in 3D Scene Reconstruction: NeRF vs Gaussian-Based Methods. arXiv 2024, arXiv:2408.04268. [Google Scholar]

- Yao, S.; Yu, D.; Zhao, J.; Shafran, I.; Griffiths, T.; Cao, Y.; Narasimhan, K. Tree of thoughts: Deliberate problem solving with large language models. Advances in Neural Information Processing Systems 2024, 36. [Google Scholar]

- Rao, A.; Kim, J.; Kamineni, M.; Pang, M.; Lie, W.; Succi, M.D. Evaluating ChatGPT as an adjunct for radiologic decision-making. medRxiv 2023.

- Shen, Y.; Heacock, L.; Elias, J.; Hentel, K.D.; Reig, B.; Shih, G.; Moy, L. ChatGPT and other large language models are double-edged swords, 2023. [CrossRef]

- Song, Y.; Arora, P.; Varadharajan, S.T.; Singh, R.; Haynes, M.; Starner, T. Looking From a Different Angle: Placing Head-Worn Displays Near the Nose. Proceedings of the Augmented Humans International Conference 2024; ACM: Melbourne VIC Australia, 2024; pp. 28–45. [Google Scholar] [CrossRef]

- Yao, S.; Zhao, J.; Yu, D.; Du, N.; Shafran, I.; Narasimhan, K.; Cao, Y. ReAct: Synergizing reasoning and acting in language models. arXiv 2022, arXiv:2210.03629. [Google Scholar]

- Chowdhury, R.; Bouatta, N.; Biswas, S.; Floristean, C.; Kharkar, A.; Roy, K.; Rochereau, C.; Ahdritz, G.; Zhang, J.; Church, G.M.; others. Single-sequence protein structure prediction using a language model and deep learning. Nature Biotechnology 2022, 40, 1617–1623. [Google Scholar] [CrossRef]

- Christian, H.; Suhartono, D.; Chowanda, A.; Zamli, K.Z. Text based personality prediction from multiple social media data sources using pre-trained language model and model averaging. Journal of Big Data 2021, 8, 68. [Google Scholar] [CrossRef]

- Lin, Z.; Akin, H.; Rao, R.; Hie, B.; Zhu, Z.; Lu, W.; dos Santos Costa, A.; Fazel-Zarandi, M.; Sercu, T.; Candido, S.; others. Language models of protein sequences at the scale of evolution enable accurate structure prediction. BioRxiv 2022, 2022, 500902. [Google Scholar]

- Guu, K.; Lee, K.; Tung, Z.; Pasupat, P.; Chang, M. Retrieval augmented language model pre-training. International conference on machine learning. PMLR, 2020, pp. 3929–3938.

- Eloundou, T.; Manning, S.; Mishkin, P.; Rock, D. GPTs are GPTs: An early look at the labor market impact potential of large language models. arXiv 2023, arXiv:2303.10130. [Google Scholar]

- Horton, J.J. Large language models as simulated economic agents: What can we learn from homo silicus? Technical report, National Bureau of Economic Research, 2023.

- Zhang, H.; Hua, F.; Xu, C.; Kong, H.; Zuo, R.; Guo, J. Unveiling the Potential of Sentiment: Can Large Language Models Predict Chinese Stock Price Movements? arXiv 2023, arXiv:2306.14222. [Google Scholar]

- Tang, X.; Lei, N.; Dong, M.; Ma, D. Stock Price Prediction Based on Natural Language Processing. Complexity 2022, 2022, 9031900. [Google Scholar] [CrossRef]

- Muhammad, T.; Aftab, A.B.; Ibrahim, M.; Ahsan, M.M.; Muhu, M.M.; Khan, S.I.; Alam, M.S. Transformer-based deep learning model for stock price prediction: A case study on Bangladesh stock market. International Journal of Computational Intelligence and Applications 2023, 22, 2350013. [Google Scholar] [CrossRef]

- Fan, X.; Tao, C.; Zhao, J. Advanced Stock Price Prediction with xLSTM-Based Models: Improving Long-Term Forecasting. Preprints 2024. [Google Scholar]

- Yan, C. Predict lightning location and movement with atmospherical electrical field instrument. 2019 IEEE 10th Annual Information Technology, Electronics and Mobile Communication Conference (IEMCON). IEEE, 2019, pp. 0535–0537.

- Gu, W.; Zhong, Y.; Li, S.; Wei, C.; Dong, L.; Wang, Z.; Yan, C. Predicting stock prices with FinBERT-LSTM: Integrating news sentiment analysis. arXiv 2024, arXiv:2407.16150. [Google Scholar]

- Xing, Y.; Yan, C.; Xie, C.C. Predicting NVIDIA’s Next-Day Stock Price: A Comparative Analysis of LSTM, MLP, ARIMA, and ARIMA-GARCH Models. arXiv 2024, arXiv:2405.08284. [Google Scholar]

- Shoeybi, M.; Patwary, M.; Puri, R.; LeGresley, P.; Casper, J.; Catanzaro, B. Megatron-LM: Training multi-billion parameter language models using model parallelism. arXiv 2019, arXiv:1909.08053. [Google Scholar]

- Fan, X.; Tao, C. Towards Resilient and Efficient LLMs: A Comparative Study of Efficiency, Performance, and Adversarial Robustness. arXiv 2024, arXiv:2408.04585. [Google Scholar]

- Lieber, O.; Lenz, B.; Bata, H.; Cohen, G.; Osin, J.; Dalmedigos, I.; Safahi, E.; Meirom, S.; Belinkov, Y.; Shalev-Shwartz, S.; others. Jamba: A hybrid transformer-mamba language model. arXiv 2024, arXiv:2403.19887. [Google Scholar]

- Liu, A.; Feng, B.; Wang, B.; Wang, B.; Liu, B.; Zhao, C.; Dengr, C.; Ruan, C.; Dai, D.; Guo, D.; others. DeepSeek-V2: A Strong, Economical, and Efficient Mixture-of-Experts Language Model. arXiv 2024, arXiv:2405.04434. [Google Scholar]

- Chen, J.; Liu, Z.; Huang, X.; Wu, C.; Liu, Q.; Jiang, G.; Pu, Y.; Lei, Y.; Chen, X.; Wang, X.; others. When large language models meet personalization: Perspectives of challenges and opportunities. World Wide Web 2024, 27, 42. [Google Scholar] [CrossRef]

- Wu, J.; Antonova, R.; Kan, A.; Lepert, M.; Zeng, A.; Song, S.; Bohg, J.; Rusinkiewicz, S.; Funkhouser, T. Tidybot: Personalized robot assistance with large language models. Autonomous Robots 2023, 47, 1087–1102. [Google Scholar] [CrossRef]

- Salemi, A.; Mysore, S.; Bendersky, M.; Zamani, H. LaMP: When large language models meet personalization. arXiv 2023, arXiv:2304.11406. [Google Scholar]

- Geng, S.; Liu, S.; Fu, Z.; Ge, Y.; Zhang, Y. Recommendation as language processing (RLP): A unified pretrain, personalized prompt & predict paradigm (P5). Proceedings of the 16th ACM Conference on Recommender Systems, 2022, pp. 299–315.

- Li, L.; Zhang, Y.; Chen, L. Personalized transformer for explainable recommendation. arXiv 2021, arXiv:2105.11601. [Google Scholar]

- Zheng, Y.; Zhang, R.; Huang, M.; Mao, X. A pre-training based personalized dialogue generation model with persona-sparse data. Proceedings of the AAAI Conference on Artificial Intelligence, 2020, Vol. 34, pp. 9693–9700. [CrossRef]

- Nasr, M.; Carlini, N.; Hayase, J.; Jagielski, M.; Cooper, A.F.; Ippolito, D.; Choquette-Choo, C.A.; Wallace, E.; Tramèr, F.; Lee, K. Scalable extraction of training data from (production) language models. arXiv 2023, arXiv:2311.17035. [Google Scholar]

- Choromanski, K.; Likhosherstov, V.; Dohan, D.; Song, X.; Gane, A.; Sarlos, T.; Hawkins, P.; Davis, J.; Belanger, D.; Colwell, L.; others. Masked language modeling for proteins via linearly scalable long-context transformers. arXiv 2020, arXiv:2006.03555. [Google Scholar]

- Peris, C.; Dupuy, C.; Majmudar, J.; Parikh, R.; Smaili, S.; Zemel, R.; Gupta, R. Privacy in the time of language models. Proceedings of the sixteenth ACM international conference on web search and data mining, 2023, pp. 1291–1292.

- Pan, X.; Zhang, M.; Ji, S.; Yang, M. Privacy risks of general-purpose language models. 2020 IEEE Symposium on Security and Privacy (SP). IEEE, 2020, pp. 1314–1331.

- Huang, Y.; Song, Z.; Chen, D.; Li, K.; Arora, S. TextHide: Tackling data privacy in language understanding tasks. Findings of the Association for Computational Linguistics: EMNLP 2020, 2020, pp. 1368–1382.

- Kandpal, N.; Wallace, E.; Raffel, C. Deduplicating training data mitigates privacy risks in language models. International Conference on Machine Learning. PMLR, 2022, pp. 10697–10707.

- Das, B.C.; Amini, M.H.; Wu, Y. Security and privacy challenges of large language models: A survey. arXiv 2024, arXiv:2402.00888.

- Shi, W.; Cui, A.; Li, E.; Jia, R.; Yu, Z. Selective differential privacy for language modeling. arXiv 2021, arXiv:2108.12944.

- Raeini, M. Privacy-preserving large language models (PPLLMs). Available at SSRN 4512071, 2023.

- Sommestad, T.; Ekstedt, M.; Holm, H. The cyber security modeling language: A tool for assessing the vulnerability of enterprise system architectures. IEEE Systems Journal 2012, 7, 363–373.

- Geren, C.; Board, A.; Dagher, G.G.; Andersen, T.; Zhuang, J. Blockchain for large language model security and safety: A holistic survey. arXiv 2024, arXiv:2407.20181.

- Chen, H.; Mo, F.; Wang, Y.; Chen, C.; Nie, J.Y.; Wang, C.; Cui, J. A customized text sanitization mechanism with differential privacy. arXiv 2022, arXiv:2207.01193.

- Huang, K.; Huang, D.; Liu, Z.; Mo, F. A joint multiple criteria model in transfer learning for cross-domain Chinese word segmentation. Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), 2020, pp. 3873–3882.

- Mao, K.; Qian, H.; Mo, F.; Dou, Z.; Liu, B.; Cheng, X.; Cao, Z. Learning denoised and interpretable session representation for conversational search. Proceedings of the ACM Web Conference 2023, 2023, pp. 3193–3202.

- Huang, K.; Xiao, K.; Mo, F.; Jin, B.; Liu, Z.; Huang, D. Domain-aware word segmentation for Chinese language: A document-level context-aware model. Transactions on Asian and Low-Resource Language Information Processing 2021, 21, 1–16.

- Zhang, L.; Wu, Y.; Mo, F.; Nie, J.Y.; Agrawal, A. MoqaGPT: Zero-Shot Multi-modal Open-domain Question Answering with Large Language Model. arXiv 2023, arXiv:2310.13265.

- Wang, P.; Fu, Y.; Zhou, Y.; Liu, K.; Li, X.; Hua, K.A. Exploiting Mutual Information for Substructure-aware Graph Representation Learning. IJCAI, 2020, pp. 3415–3421.

- Gallegos, I.O.; Rossi, R.A.; Barrow, J.; Tanjim, M.M.; Kim, S.; Dernoncourt, F.; Yu, T.; Zhang, R.; Ahmed, N.K. Bias and fairness in large language models: A survey. Computational Linguistics 2024, pp. 1–79.

- Liang, P.P.; Wu, C.; Morency, L.P.; Salakhutdinov, R. Towards understanding and mitigating social biases in language models. International Conference on Machine Learning. PMLR, 2021, pp. 6565–6576.

- Delobelle, P.; Tokpo, E.K.; Calders, T.; Berendt, B. Measuring fairness with biased rulers: A survey on quantifying biases in pretrained language models. arXiv 2021, arXiv:2112.07447.

- Xu, Y.; Hu, L.; Zhao, J.; Qiu, Z.; Ye, Y.; Gu, H. A Survey on Multilingual Large Language Models: Corpora, Alignment, and Bias. arXiv 2024, arXiv:2404.00929.

- Navigli, R.; Conia, S.; Ross, B. Biases in large language models: origins, inventory, and discussion. ACM Journal of Data and Information Quality 2023, 15, 1–21.

- Garrido-Muñoz, I.; Montejo-Ráez, A.; Martínez-Santiago, F.; Ureña-López, L.A. A survey on bias in deep NLP. Applied Sciences 2021, 11, 3184.

- Wu, L.; Zheng, Z.; Qiu, Z.; Wang, H.; Gu, H.; Shen, T.; Qin, C.; Zhu, C.; Zhu, H.; Liu, Q.; et al. A survey on large language models for recommendation. World Wide Web 2024, 27, 60.

- Lin, L.; Wang, L.; Guo, J.; Wong, K.F. Investigating Bias in LLM-Based Bias Detection: Disparities between LLMs and Human Perception. arXiv 2024, arXiv:2403.14896.

- Rubinstein, I.S.; Good, N. Privacy by design: A counterfactual analysis of Google and Facebook privacy incidents. Berkeley Tech. L.J. 2013, 28, 1333.

- Yan, B.; Li, K.; Xu, M.; Dong, Y.; Zhang, Y.; Ren, Z.; Cheng, X. On protecting the data privacy of large language models (LLMs): A survey. arXiv 2024, arXiv:2403.05156.

- Voigt, P.; Von dem Bussche, A. The EU General Data Protection Regulation (GDPR): A Practical Guide, 1st ed.; Springer International Publishing: Cham, Switzerland, 2017.

- Goldman, E. An introduction to the California Consumer Privacy Act (CCPA). Santa Clara Univ. Legal Studies Research Paper 2020.

- Song, Y. Deep Learning Applications in the Medical Image Recognition. American Journal of Computer Science and Technology 2019, 2, 22–26.

- Ni, H.; Meng, S.; Geng, X.; Li, P.; Li, Z.; Chen, X.; Wang, X.; Zhang, S. Time Series Modeling for Heart Rate Prediction: From ARIMA to Transformers. arXiv 2024, arXiv:2406.12199.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).