1. Introduction

The manufacturing industry has undergone a major transformation in recent years, driven by the increasing need for automation. Implementing automation in manufacturing enhances the efficiency and precision of the process and improves quality control [1]. As industries strive to maintain consistent product quality, the problem of inconsistent shape arising during the manufacturing process needs to be solved. Psarommatis et al. [2] present a literature review of methods to minimize product defects in industrial production lines, based on 280 articles published from 1987 to 2018. The study identified four strategies: detection, repair, prediction, and prevention. The essence revolves around the reduction of deficiencies within the end product and across its components and of the energy consumption in the production process, among many other indicators [3]. Detection was the most commonly used strategy, followed by quality assessment after the product was fully fabricated.

Over recent decades, manufacturing has transformed from digital to intelligent manufacturing [4]. The paper in [4] categorizes this transformation into three stages, i.e., digital manufacturing, digital-networked manufacturing, and new-generation intelligent manufacturing. It emphasizes integrating new-generation AI technology with advanced manufacturing technology as the core driving force of the new industrial revolution. The paper also introduces the concept of Human-Cyber-Physical Systems (HCPS) as the leading technology in intelligent manufacturing.

Artificial Intelligence (AI) improves on conventional methods by enabling consistent, effective, efficient, and reliable processes and end products. A human-dependent manufacturing process typically faces problems such as inconsistency, inaccuracy due to human error, human fatigue, and the absence of an expert. Such issues can be addressed by incorporating AI, especially Machine Learning (ML) and Deep Learning (DL) methods [5]. Grinding and chamfering are particularly important steps in the manufacturing process. These methods belong to the surface finishing process and enhance product quality. Automating grinding and chamfering tasks is becoming more significant due to requirements for exactness and efficiency. Robot-driven grinding and polishing systems with visual recognition abilities increase accuracy while significantly reducing the time taken for manual work. Adding machine learning to these processes makes real-time adjustments possible. For example, tracking when the grinding wheel needs dressing improves performance and lessens waste. In an automated grinding and chamfering process with machine vision based AI, the main task is to detect the edge to be processed. Examples of edge detection using non-AI and AI methods are presented in the following paragraphs.

An example application of an image processing method based on the wavelet transform for edge detection is presented in [6]. The paper presents an image edge detection algorithm that utilizes multi-sensor data fusion to enhance defect detection in metal components. The edge detection accuracy of the method is improved by integrating data from ultrasonic, eddy current, and magnetic flux leakage sensors using the wavelet transform. It is also demonstrated that the proposed method with fused-sensor data outperforms single-sensor data [6].

An example application of AI (Machine Learning) for edge detection is presented in [7]. Yang et al. [7] used a Support Vector Machine (SVM) to identify defects in logistics packaging boxes. The authors developed an image acquisition protocol and a strategy to address the defects commonly found in packaging boxes used in logistics settings. The first phase required constructing a denoising template and utilizing Laplace sharpening to improve the image quality of packaging boxes. Next, they used an enhanced morphological approach and an algorithm based on grey morphological edge detection to eliminate noise from the box images. Finally, packaging box features were extracted and transformed for quality classification using the scale-invariant feature transform technique and SVM classifiers. The results indicated a 91.2% likelihood of successfully detecting two major defect categories in logistics packaging boxes: surface and edge faults. Although the SVM method performed satisfactorily in edge detection and classification, it required denoising, sharpening, and feature extraction steps, which make it impractical and unreliable for software-to-hardware implementation and deployment.
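The idea behind grey-scale morphological edge detection mentioned above can be illustrated with a minimal sketch. It is not the implementation of Yang et al. [7]; the 3×3 square structuring element, the toy image, and the function names are assumptions chosen for illustration. The morphological gradient (dilation minus erosion) is large wherever the intensity changes abruptly, i.e., at edges.

```python
def neighbourhood(img, r, c):
    """Return the pixel values in the 3x3 window around (r, c), clipped at the borders."""
    rows, cols = len(img), len(img[0])
    return [
        img[rr][cc]
        for rr in range(max(0, r - 1), min(rows, r + 2))
        for cc in range(max(0, c - 1), min(cols, c + 2))
    ]


def morphological_gradient(img):
    """Grey-scale dilation minus erosion with an (assumed) 3x3 square structuring element."""
    out = []
    for r in range(len(img)):
        row = []
        for c in range(len(img[0])):
            window = neighbourhood(img, r, c)
            # Dilation is the local maximum, erosion the local minimum.
            row.append(max(window) - min(window))
        out.append(row)
    return out


# Toy 4x6 image (hypothetical): dark region (0) on the left, bright region (9) on the right.
image = [[0, 0, 0, 9, 9, 9] for _ in range(4)]
edges = morphological_gradient(image)
```

In this toy case the gradient is non-zero only in the two columns adjacent to the intensity step, which is where a vertical edge would be reported.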

An example application of AI (Deep Learning) for edge detection is presented in [8], [9], and [10]. Canny-Net, a neural network adaptation of the classic Canny edge detector, is presented in [8]. It is intended to resolve frequent artifacts in CT scans, including scatter, complete absorption, and beam hardening, especially when metal components are present. Compared with the traditional Canny edge detector, Canny-Net shows an 11% rise in F1 score on test images, indicating a considerable performance improvement. Notably, the network is computationally efficient and versatile for a range of applications due to its lightweight design and minimal set of trainable parameters. The study presented in [9] contributes a new approach to identifying mechanical defects in high-voltage circuit breakers by integrating advanced edge detection with DL. High-resolution images of circuit breakers undergo contour detection, binarization, and morphological processing. These images are then processed for feature extraction and defect identification by a DL platform based on TensorFlow and a Convolutional Neural Network (CNN). It is shown that this method is more accurate and has better feature extraction than classical CNN models for detecting defects including plastic deformation, metal loss, and corrosion. The method offers a safe, non-destructive way of identifying mechanical faults in high-voltage circuit breakers, improving maintenance and thus minimizing failures. The convolution method in deep learning is particularly effective and prevalent in edge detection; its integration with various techniques, such as lighting adjustments or horizontal and vertical augmentation, yields substantial results [10]. The implementation of machine vision in the experiments by González et al. for edge detection and deburring significantly enhanced chamfer quality with precision and efficiency [11].

Robot manipulators have been useful in manufacturing processes because they are excellent at performing and completing multiple tasks. Recent advancements in combining DL with robot manipulators have opened a new research direction with potential applications in practice. Studies in this area have gained much attention for improving robot manipulator performance in intelligent manufacturing. Deep reinforcement learning algorithms have been integrated into robot manufacturing and have shown good results in helping robot manipulators with grasping and object manipulation [12]. That work shows that manipulators grasp, handle, and manipulate objects better and in relatively less time [12]. Using advanced deep reinforcement learning algorithms, researchers have improved the adaptation speed, accuracy, and overall performance of robot manipulators. This progress opens possibilities for creating smarter and more effective robots in future research [13], [14], [15]. Furthermore, algorithms have been developed to control robot manipulators with vision through deep reinforcement learning. They help robots pick up objects autonomously based on visual input and demonstrate how incorporating DL can improve object handling during the robot’s movement [16].

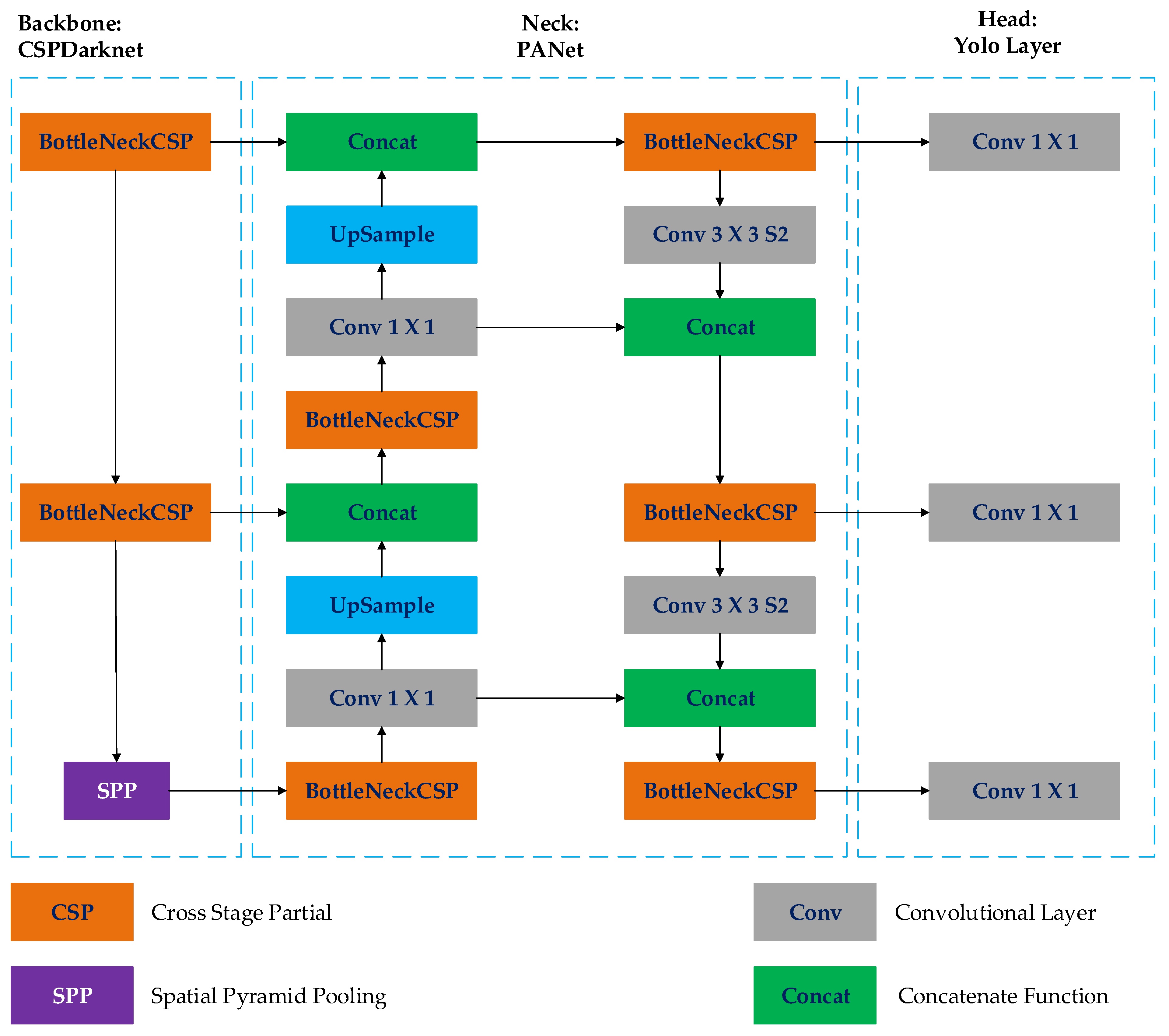

YOLOv5 is a powerful tool for image recognition and object detection [17]. Aein et al. [18] devised a technique to assess the integrity of metal surfaces. The authors employed the YOLO object detection network to detect faults on metal surfaces, resulting in an integrated inspection system that can distinguish between different types of defects and identify them immediately. The solution was implemented and evaluated on a Jetson Nano platform with a dataset from Northeastern University. The investigation yielded a mean Average Precision (mAP) of 71% across six different fault types, with a processing rate of 29 FPS.

Li et al. [19] employed the YOLO algorithm to create a method for real-time identification of surface flaws in steel strips. The authors improved the YOLO network by making it fully convolutional, with a structure of 27 convolutional layers. The first 25 layers gather useful information about surface defect features on steel strips, while the final two layers predict defect types and bounding boxes. Their approach offers an end-to-end solution for detecting steel-strip surface flaws and also predicts the size and location of defective regions. The network obtained a 99% detection rate at 83 frames per second (FPS).

Xu et al. [20] developed a novel modification of the YOLO network to enhance metal surface flaw identification. Their strategy centred on creating a new scale feature layer to extract subtle features associated with tiny flaws on metal surfaces. This design combined the 11th layer of the Darknet-53 architecture with deep neural network features. The k-means++ technique significantly lowered the sensitivity to the initial cluster. The study yielded an average detection performance of 75.1% and a processing speed of 83 frames per second.

A number of studies have reported the benefit of YOLO in hardware implementations using the NVIDIA Jetson Nano [21], [22]. The NVIDIA Jetson Nano, notable for its computational capabilities, facilitates the integration of AI models into devices [23]. It is a low-power device that can perform real-time inference, which can be assessed in terms of frame rate. For instance, implementations of YOLOv3-tiny show that system-level approaches can achieve frame rates of approximately 30 FPS, which is crucial for surveillance and monitoring purposes [24], [25]. The YOLO algorithm and architecture benefit object detection in machine vision because objects in an image are determined in a single pass, which reduces the computational work several times over compared with multi-step methods [26]. This results in high efficiency, particularly when deployed on the NVIDIA Jetson Nano, which, despite its limited computing power compared with other Graphics Processing Units (GPUs), can deliver satisfactory performance with optimized models [27].

Integrating robot manipulators with machine vision technologies has revolutionized various industrial applications [28]. In certain industries, automated manufacturing with machine vision technologies can reduce workers’ risks while performing repetitive and potentially hazardous tasks [29]. A method has been presented that uses the YOLO Convolutional Neural Network to analyze video streams from a camera on a robotic arm, recognize objects, and allow the user to interact with them through Human Machine Interaction (HMI), which helps individuals with disabilities [30]. The method involves using the Niryo-One robotic arm equipped with a USB HD camera and modifying the YOLO algorithm to enhance its functionality for robotic applications, showing that the robotic arm can detect and deliver objects with high accuracy and in a timely manner [31]. The present study proposes an industrial simulation: a practical robotic application that uses a robot manipulator and DL to perform both grinding and chamfering simultaneously. Incorporating robotic manipulators and DL-based computer vision systems to carry out grinding and chamfering tasks automatically is an essential aspect of this research. This study utilizes a robot manipulator, specifically the Mitsubishi Electric Melfa RV-2F-1D1-S15, to execute precise grinding and chamfering tasks in a laboratory experiment. The study aims to prove that machine vision technology can be integrated with the robot manipulator, since this integration is expected to increase the precision and efficiency of the grinding and chamfering process.

Table 1.

Acronyms and abbreviations.

| Acronym |

Abbreviation |

| AI |

Artificial intelligence |

| AP |

Average Precision |

| AUC |

Area Under the Curve |

| CAD |

Computer Aided Design |

| CM |

Confusion Matrix |

| CNN |

Convolutional Neural Network |

| COCO |

Common Objects in COntext |

| CSPNet |

Cross-Stage Partial Network |

| CT |

Computed Tomography |

| CVAT |

Computer Vision Annotation Tools |

| DL |

Deep Learning |

| DOF |

Degree of Freedom |

|

F1 |

Precision-recall score |

| FLOPS |

Floating-point Operations Per Second |

| FPS |

Frames per second |

| FPN |

Feature Pyramid Networks |

| FN |

False Negative |

| FP |

False Positive |

| GPU |

Graphics Processing Unit |

| HCPS |

Human-Cyber-Physical Systems |

| HMI |

Human Machine Interaction |

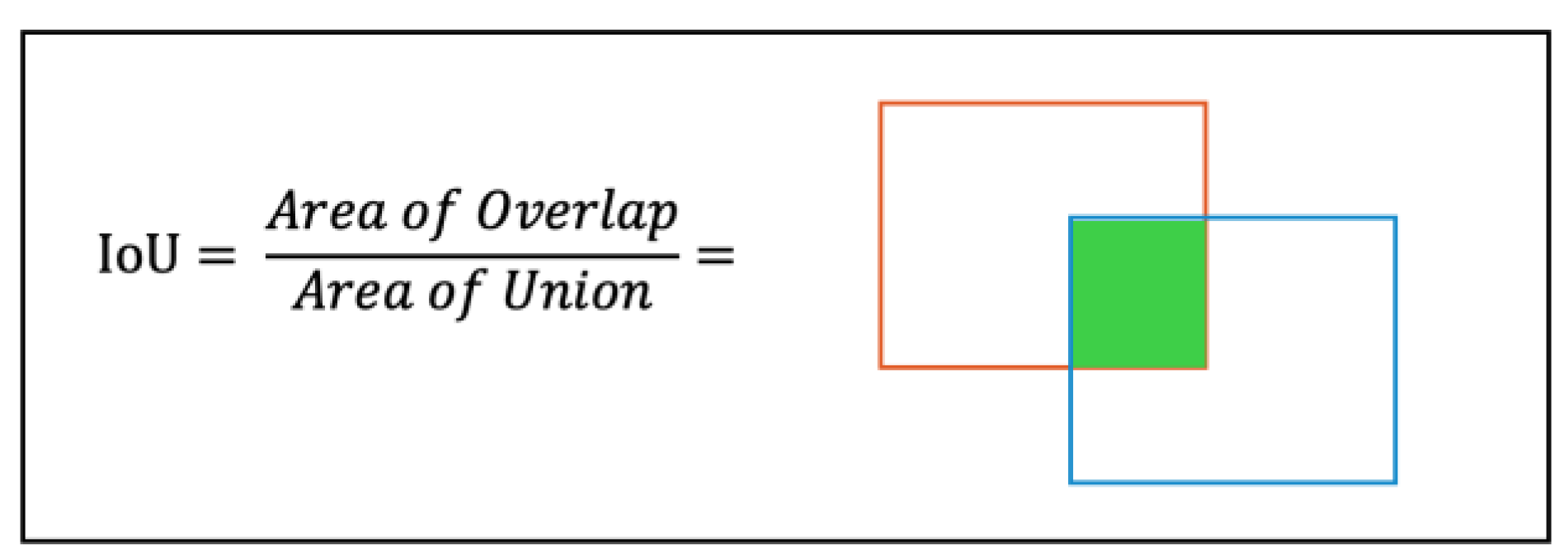

| IoU |

Intersection over Union |

| mAP |

Mean Average Precision |

| ML |

Machine Learning |

| PANet |

Pyramid Aggregation Network |

| ROC |

Receiver Operating Characteristic |

| RPN |

Region Proposal Network |

| SGD |

Stochastic Gradient Descent |

| SPP |

Spatial Pyramid Pooling |

| SSD |

Single Shot Detector |

| SVM |

Support Vector Machine |

| TN |

True Negative |

| TP |

True Positive |

| VGG |

Visual Geometric Group |

| VOC |

Visual Object Classes |

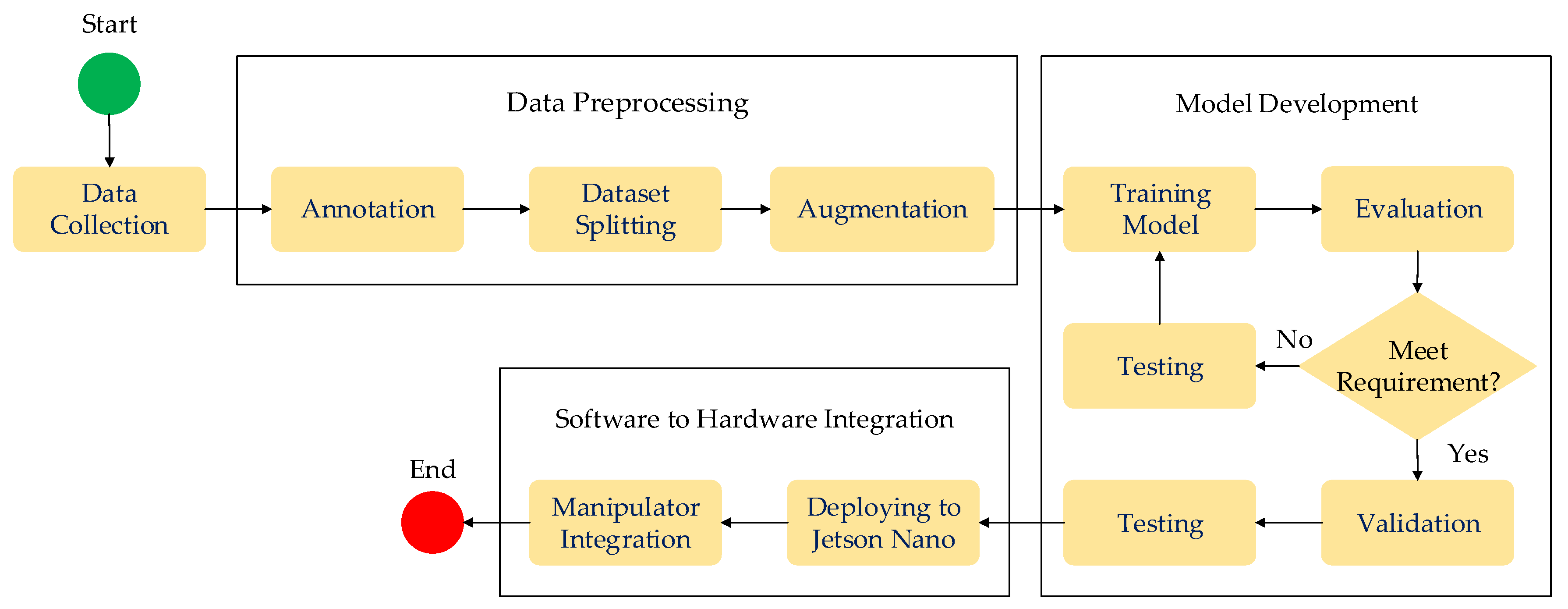

4. Results and Discussion

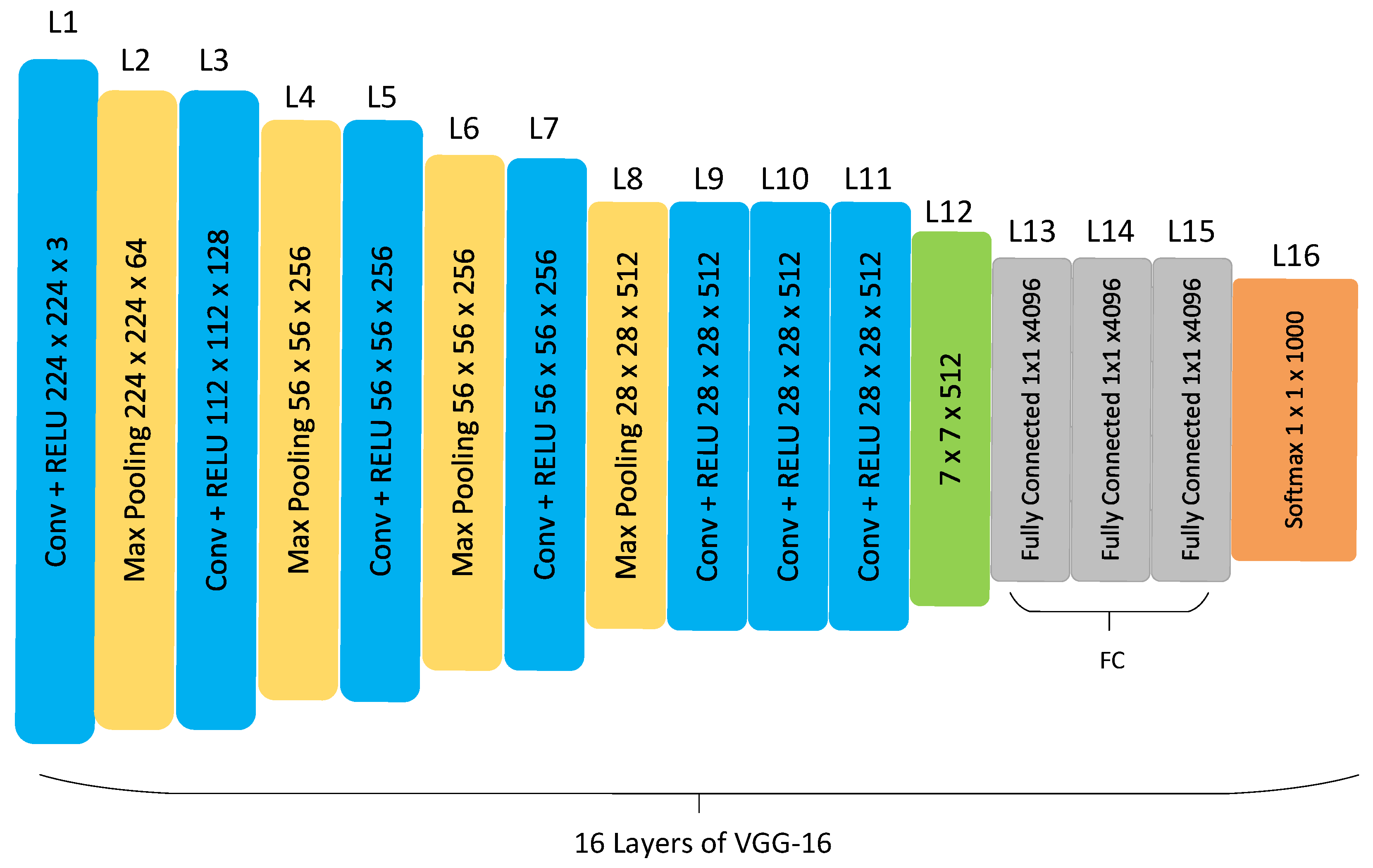

In automated manufacturing, a reliable machine vision-based DL method is important. This section presents the results and discussion of the DL methods used in the machine vision system to predict and classify the sharp, chamfer, and burr edges of the metal workpiece.

4.1. Model Performance

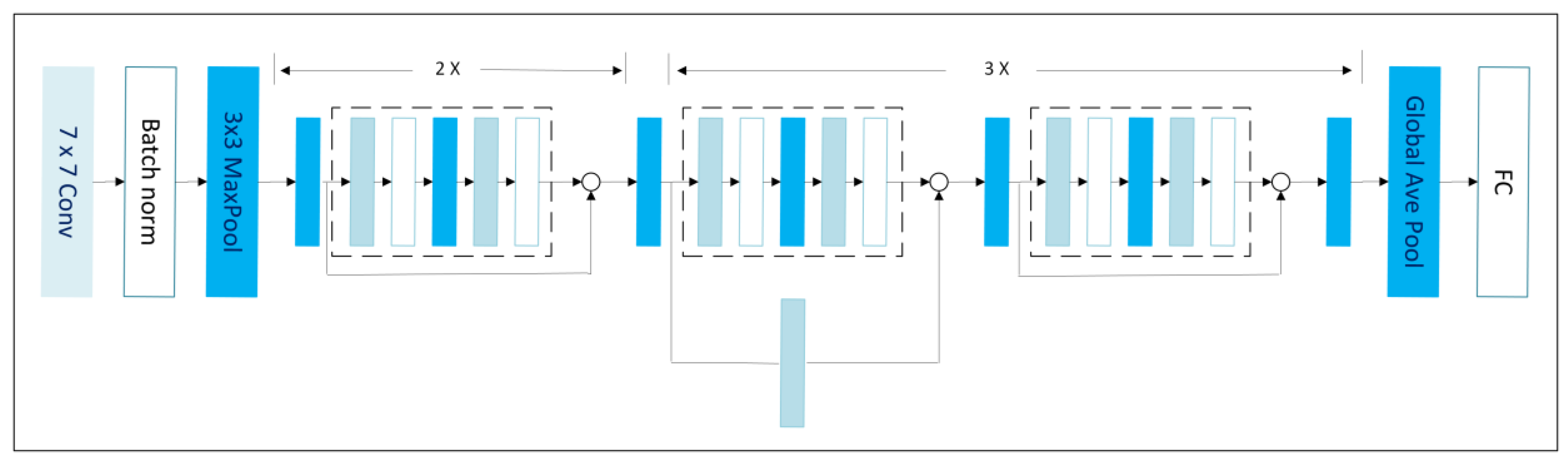

Table 6 summarizes the performance of the models. As evident from Table 6, YOLOv5 demonstrates superior performance across all metrics, followed by VGG-16 and then ResNet.

4.2. Mean Average Precision (mAP)

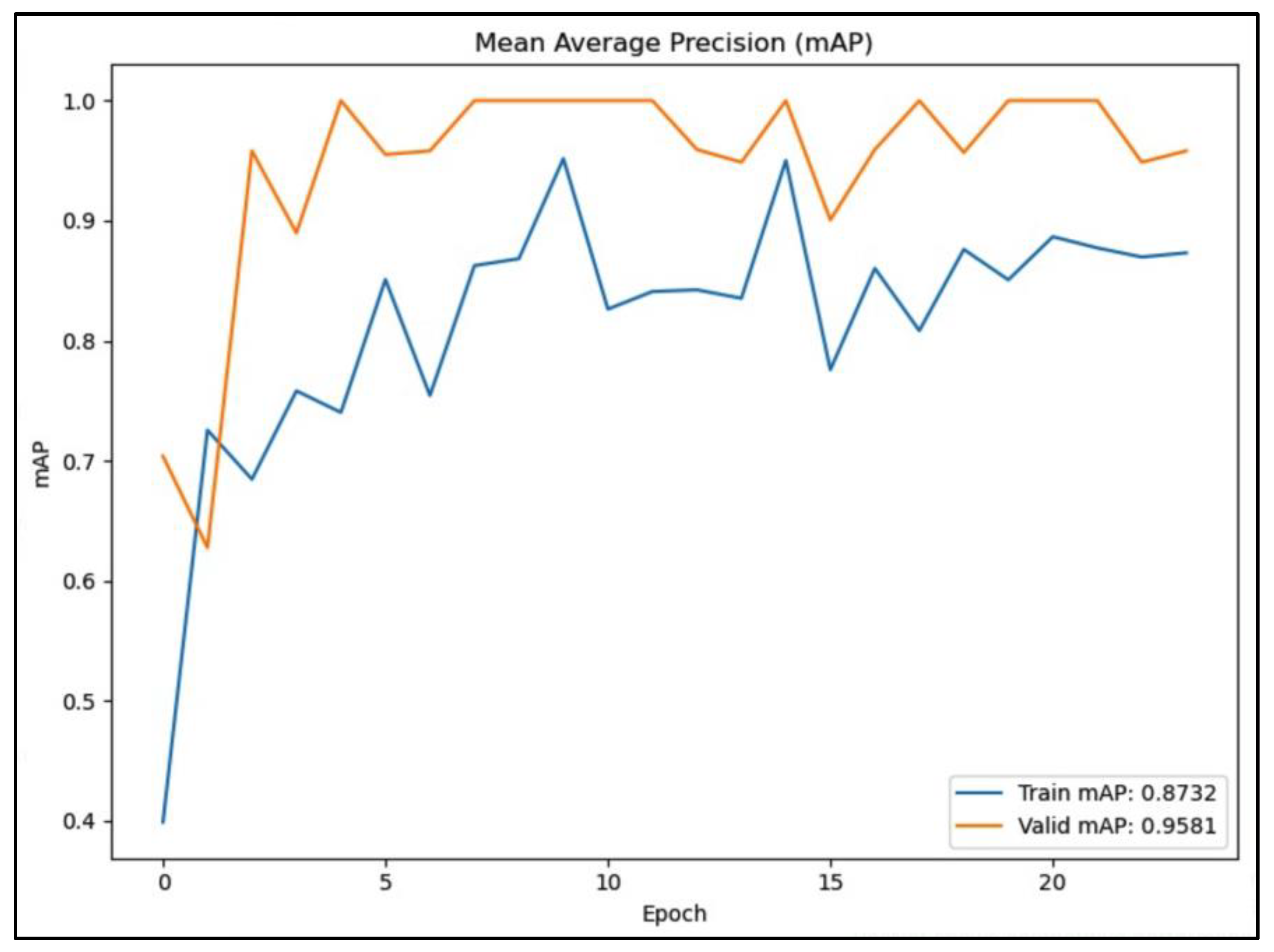

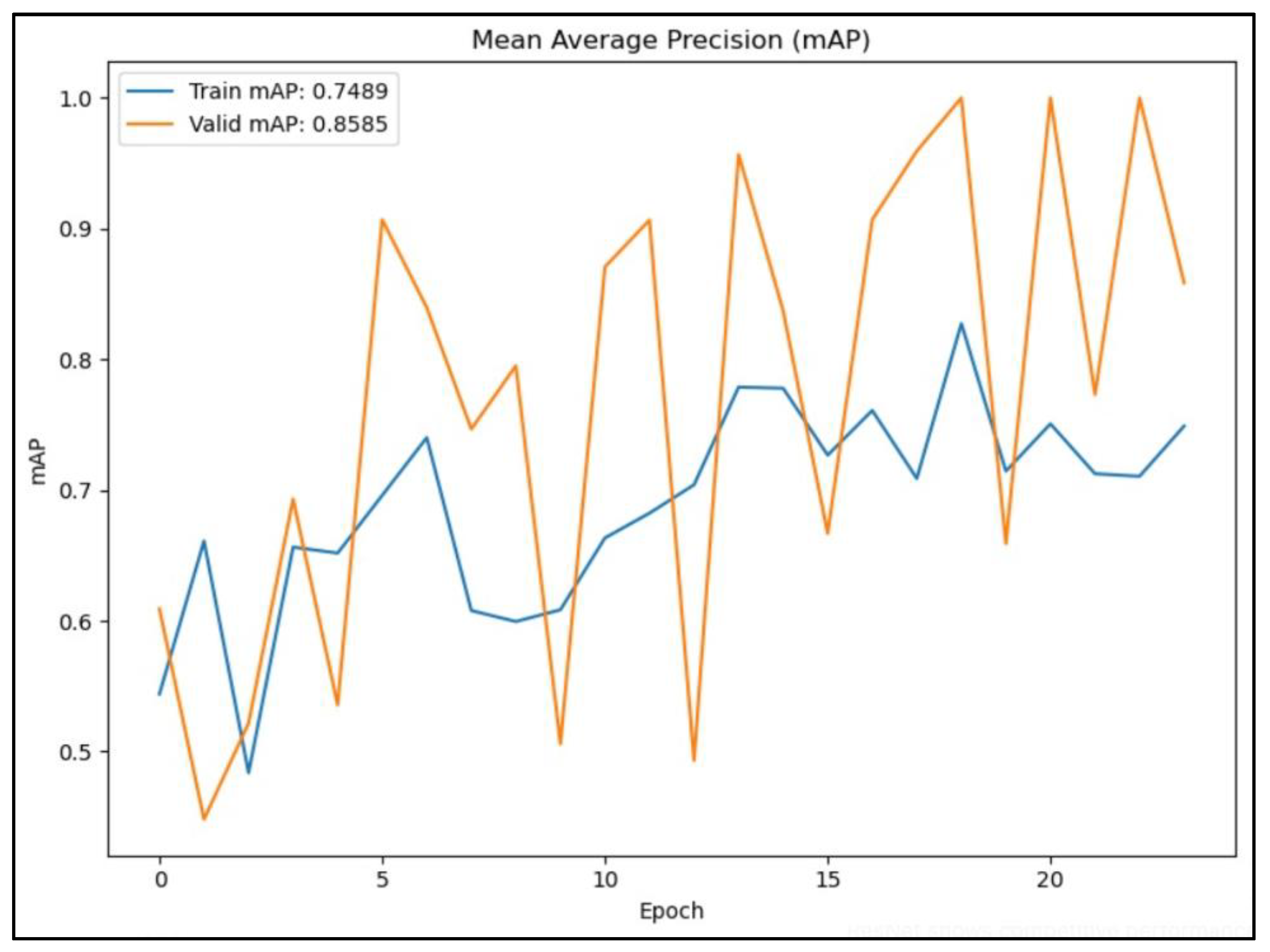

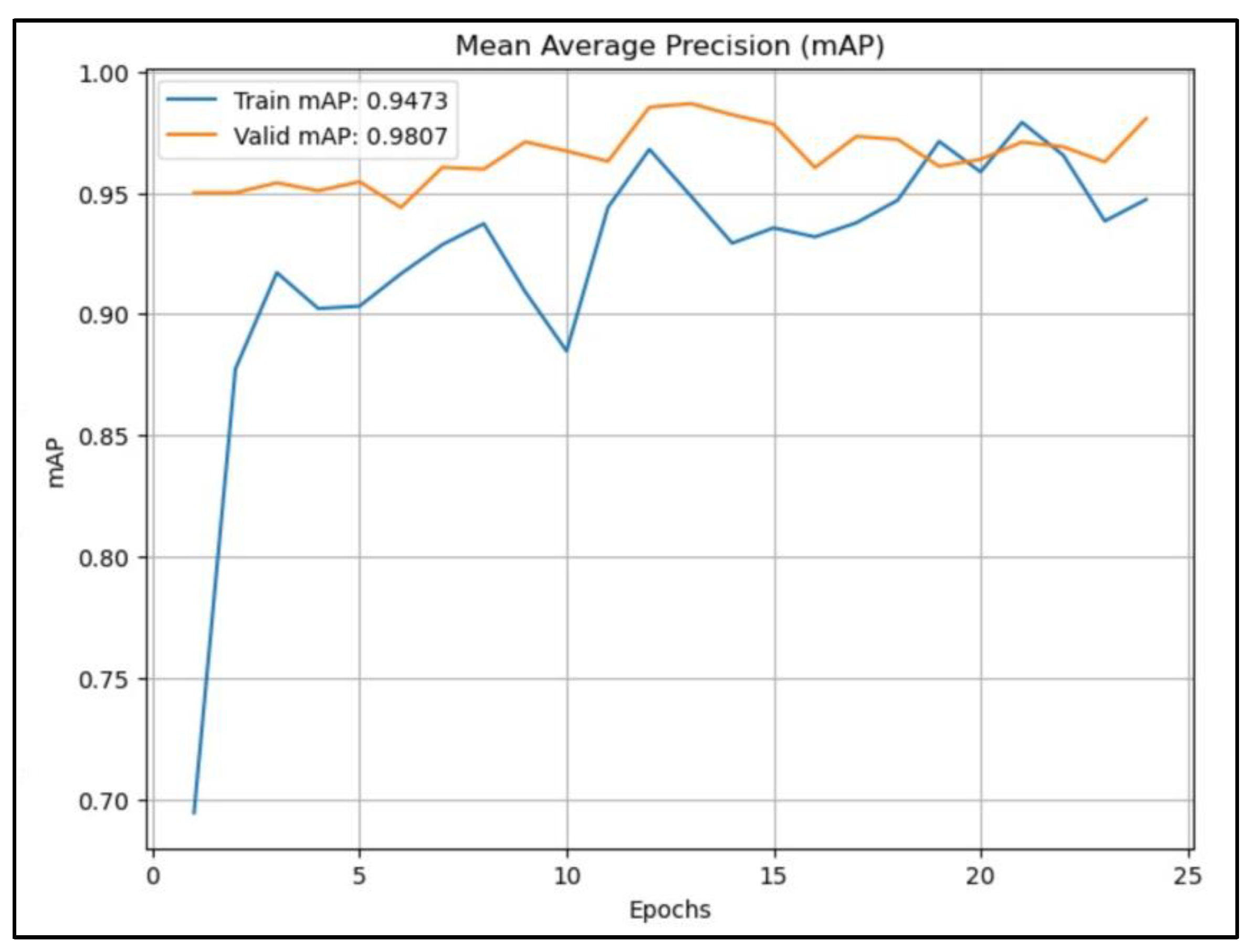

Figure 8, Figure 9 and Figure 10 illustrate the mAP scores for each model across different IoU thresholds.

Figure 8 shows that the validation mAP (solid orange line) is consistently higher than the training mAP (solid blue line), indicating good generalization to unseen data. The validation mAP stabilizes around 0.9581, suggesting robust model performance.

Figure 9 shows that the validation mAP is consistently higher than the training mAP throughout the epochs, indicating that the model performs better on the validation set. However, small fluctuations in the validation mAP suggest some variability in model performance.

Figure 10 shows that the validation mAP is consistently higher than the training mAP, indicating good generalization of the model. As the epochs progress, both training and validation mAP values improve, with the validation mAP reaching approximately 0.9807, suggesting strong performance on the validation dataset.

In general, the mAP curves in Figure 8, Figure 9 and Figure 10 show that the YOLOv5 model consistently outperforms the VGG-16 and ResNet models across various IoU thresholds, indicating its robust object detection capabilities. In addition, VGG-16 shows competitive performance, especially at lower IoU thresholds, while ResNet lags behind but still provides respectable results.
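Because the mAP values above are reported with respect to IoU thresholds, a short sketch of how IoU is computed may be useful. The box format `(x_min, y_min, x_max, y_max)`, the default threshold of 0.5, and the example coordinates are assumptions for illustration and are not taken from the experiments.

```python
def iou(box_a, box_b):
    """Intersection over Union of two axis-aligned boxes (x_min, y_min, x_max, y_max)."""
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    # Width and height of the overlap are clamped at zero for disjoint boxes.
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(pred_box, gt_box, threshold=0.5):
    """A prediction counts as a true positive when its IoU reaches the threshold."""
    return iou(pred_box, gt_box) >= threshold


# Hypothetical ground-truth and predicted boxes overlapping by half their width.
ground_truth = (0, 0, 10, 10)
prediction = (5, 0, 15, 10)
overlap = iou(ground_truth, prediction)
```

Here the intersection is 50 and the union is 150, so the IoU is 1/3: the prediction would be accepted at a threshold of 0.3 but rejected at 0.5, which is why mAP generally falls as the IoU threshold rises.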

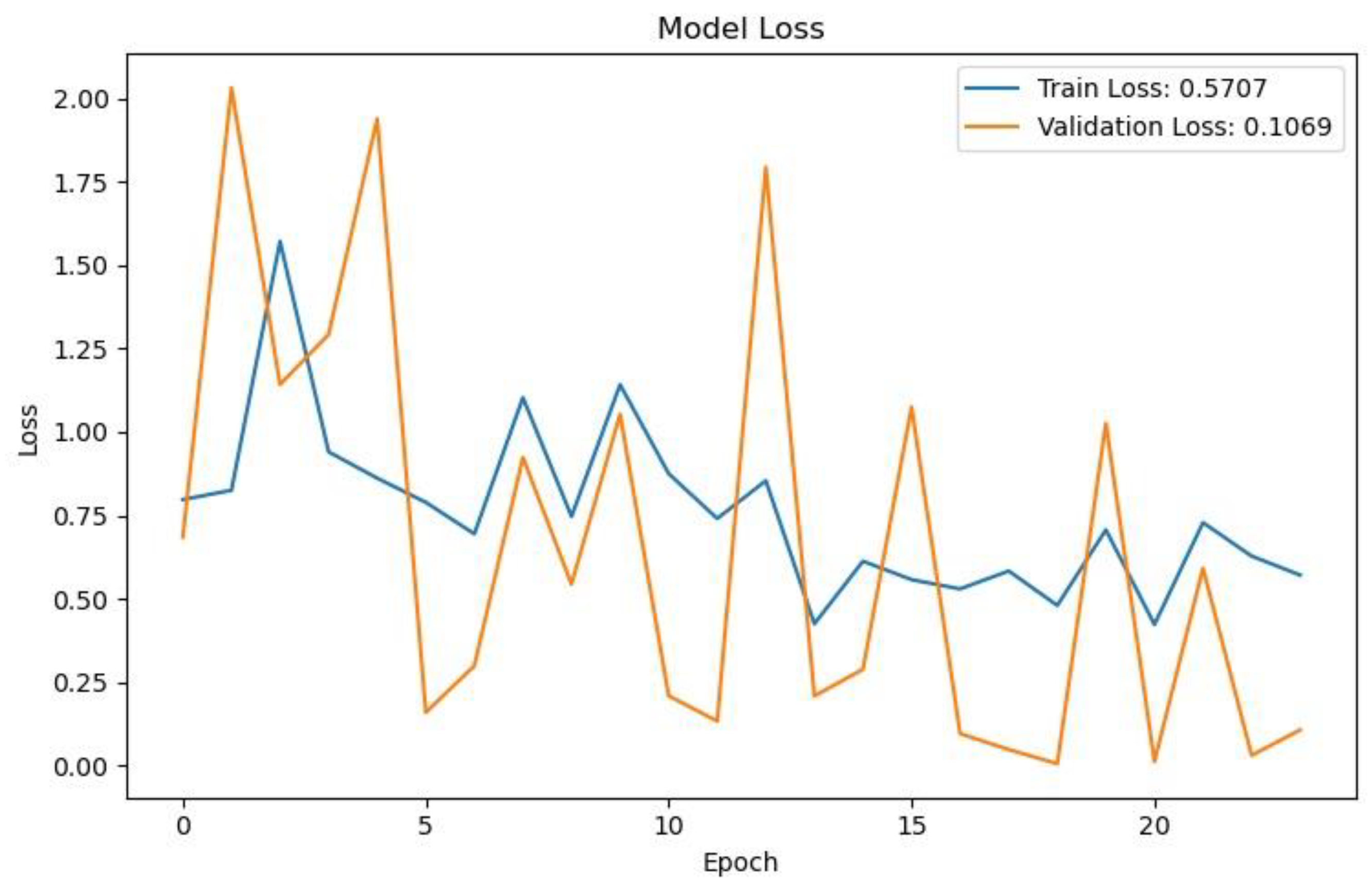

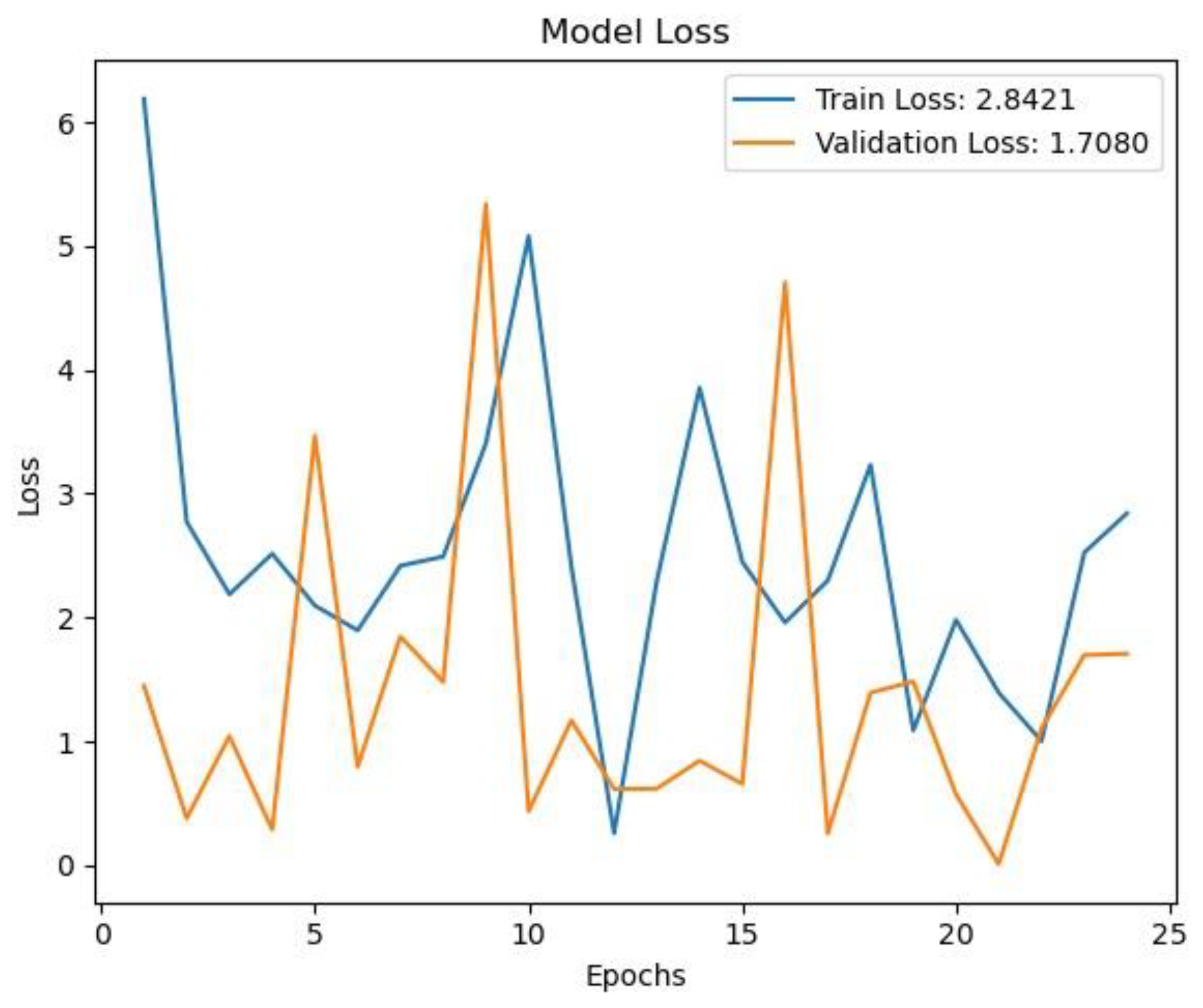

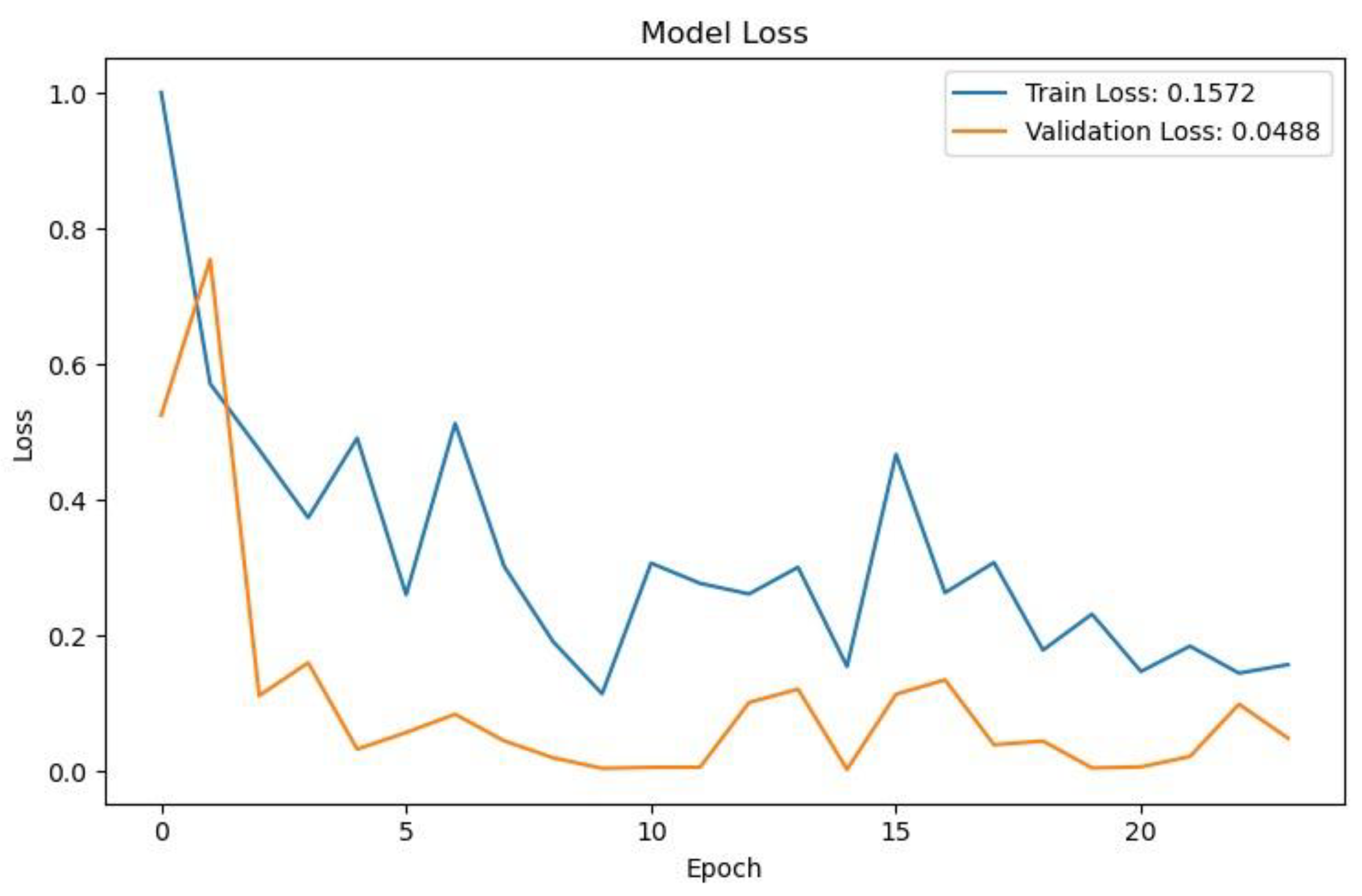

4.3. Loss Model

Figure 11, Figure 12 and Figure 13 present the loss curves for each model in training and validation. The loss figure indicates that both training and validation losses decrease over time, suggesting effective learning. However, the validation loss experiences significant fluctuations, which may point to potential overfitting.

The loss figure indicates that the validation loss (orange line) generally trends lower than the training loss (blue line), which suggests that the model is not overfitting the training data. The loss graph indicates that both training and validation losses decrease significantly during the initial epochs, suggesting effective learning. Over time, the validation loss stabilizes at a lower level, approximately 0.0488, while the training loss shows more fluctuation. The loss curves in Figure 11, Figure 12 and Figure 13 demonstrate that all three models converge well, with YOLOv5 showing the fastest convergence and the lowest final loss, followed closely by VGG-16 and then ResNet.

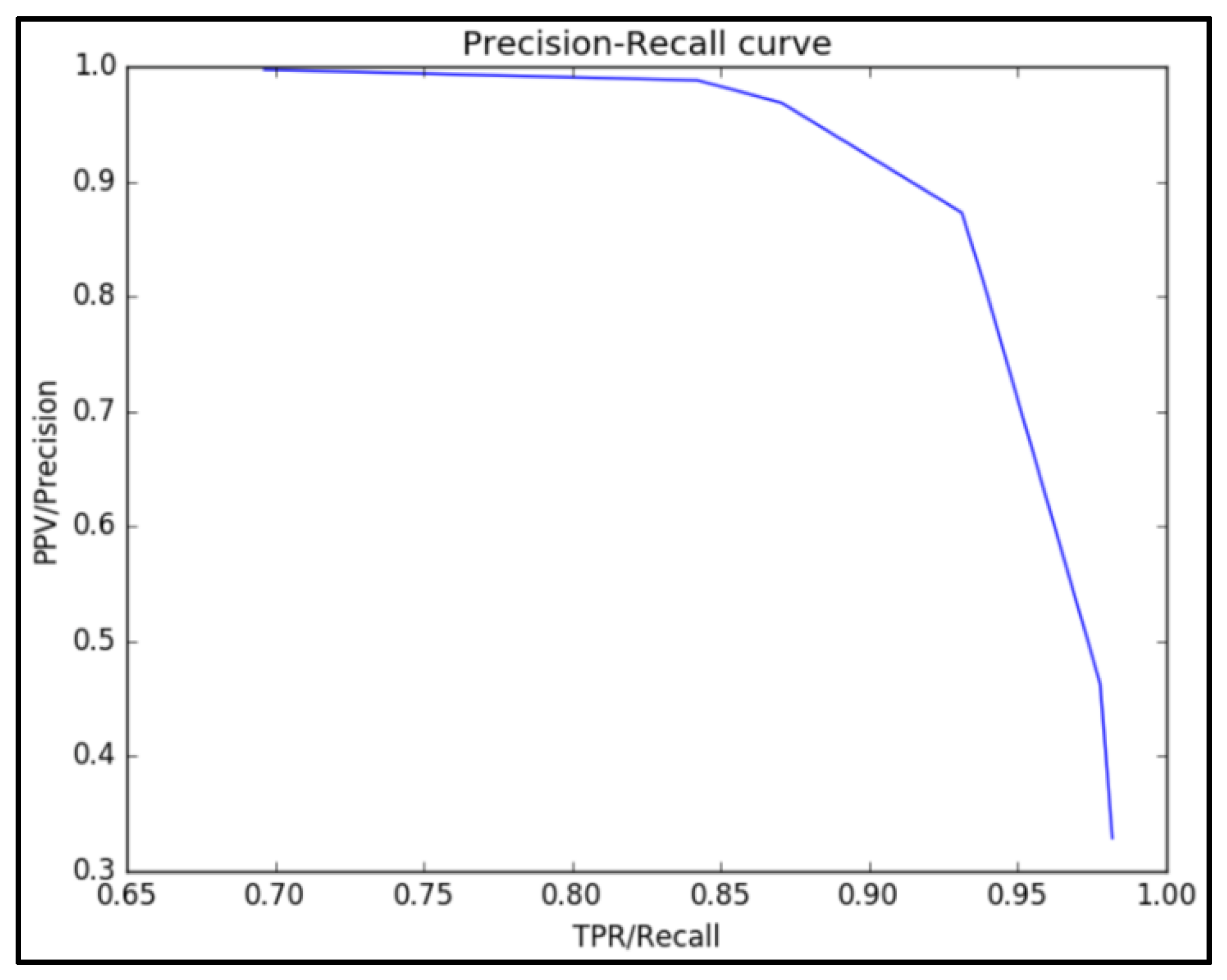

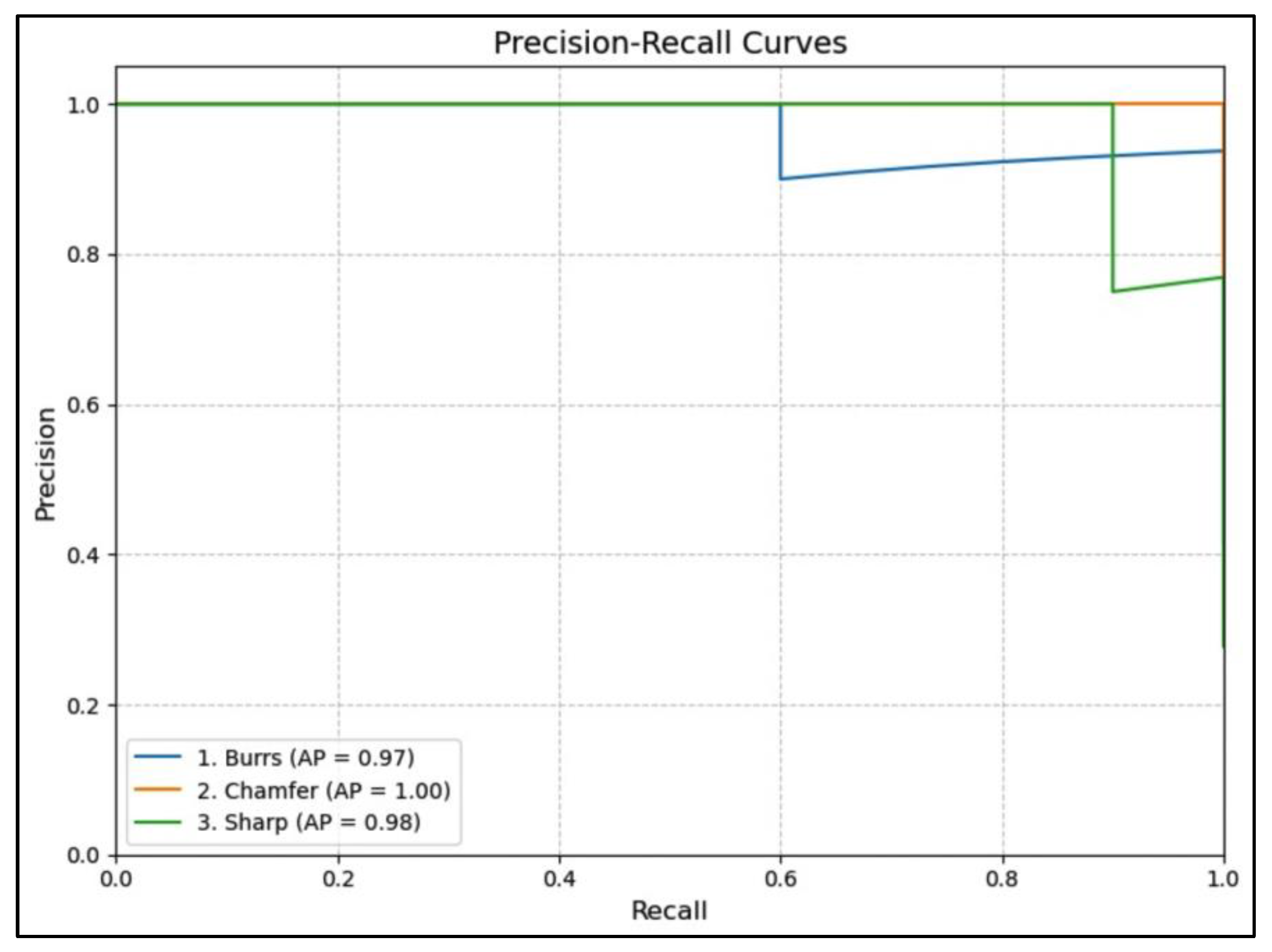

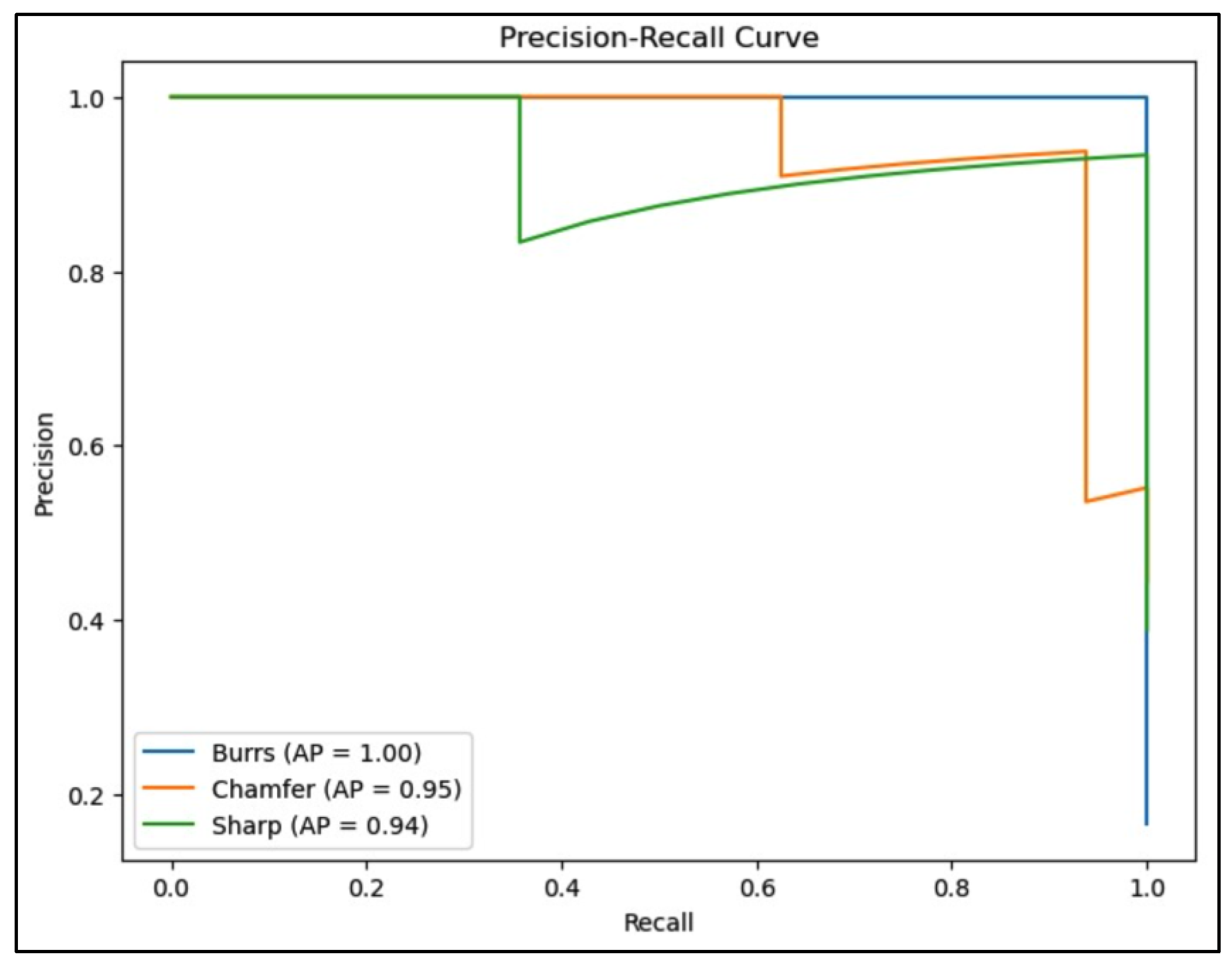

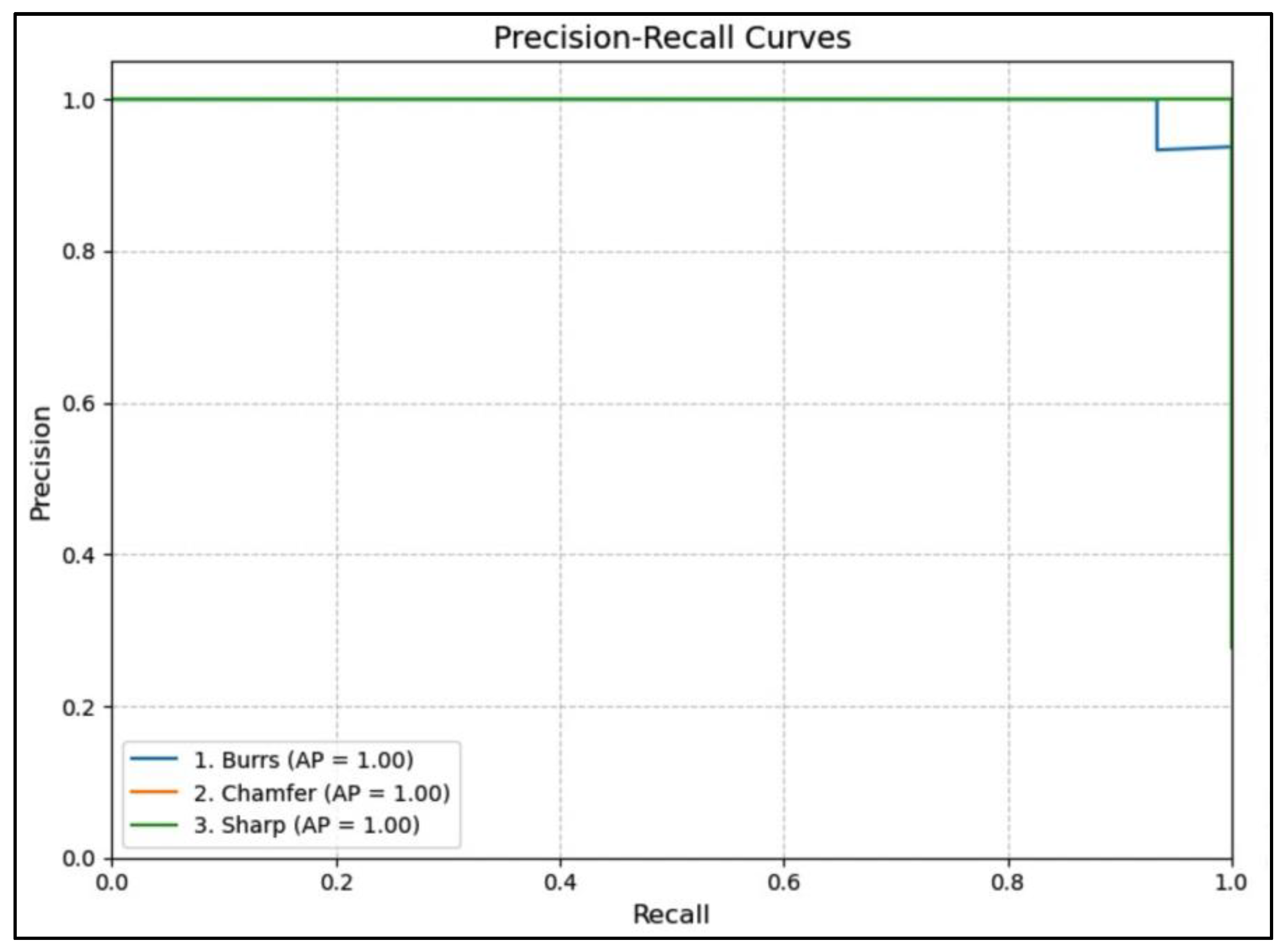

4.4. Precision-Recall Curve

Figure 14, Figure 15 and Figure 16 show the precision-recall curves for each model across the three defect classes.

Figure 14 presents the precision-recall curve of VGG-16. It shows high performance across all classes, with Chamfer achieving an average precision of 1.00, indicating perfect precision at every recall level. Burrs and Sharp also demonstrate strong precision, with average precisions of 0.97 and 0.98, respectively, highlighting the model’s robust ability to distinguish between these classes.

The precision-recall curve of ResNet, shown in Figure 15, illustrates that the model achieves excellent performance across all classes, with the “Burrs” class achieving perfect precision and recall (AP = 1.00). The “Chamfer” and “Sharp” classes also demonstrate high average precision scores of 0.95 and 0.94, respectively, indicating strong model reliability and consistency in distinguishing these classes.

The precision-recall curve of YOLOv5, presented in Figure 16, demonstrates perfect performance across all classes, with each achieving an average precision (AP) of 1.00. This indicates that the model consistently maintains high precision and recall, effectively distinguishing between the classes. The precision-recall curves in Figure 14, Figure 15 and Figure 16 provide insights into the trade-off between precision and recall for each model. YOLOv5 maintains high precision even at higher recall values across all defect classes, indicating its strong performance in both correctly identifying defects and minimizing false positives.
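To make the average precision (AP) values quoted above more concrete, the following sketch computes a non-interpolated AP from ranked predictions. The text does not state which AP variant was used in the experiments, so this formulation, the function name, and the example scores are assumptions for illustration only.

```python
def average_precision(scores, labels):
    """Non-interpolated AP: mean of the precision values at each true-positive rank.

    scores: confidence of each prediction for one class.
    labels: 1 if the corresponding prediction is correct, otherwise 0.
    """
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    total_positives = sum(labels)
    if total_positives == 0:
        return 0.0
    true_positives = 0
    precision_sum = 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label:
            true_positives += 1
            # Precision among the top-`rank` predictions.
            precision_sum += true_positives / rank
    return precision_sum / total_positives


# Hypothetical predictions: one false positive ranked second.
ap_mixed = average_precision([0.9, 0.8, 0.7, 0.6], [1, 0, 1, 1])
# Hypothetical perfect ranking: every correct prediction outranks every wrong one.
ap_perfect = average_precision([0.9, 0.8, 0.3, 0.2], [1, 1, 0, 0])
```

An AP of 1.00, as reported for several classes, corresponds to the second case: every correct prediction is ranked above every incorrect one, so precision stays at 1 for all recall levels.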

4.5. Class-wise Performance

To provide a more detailed analysis, Table 7 presents the precision, recall, and F1-score for each metal edge class across the three models. Table 7 shows that YOLOv5 consistently outperforms the other models across all defect classes. Notably, all models show slightly better performance in detecting “Sharp” defects compared to “Burrs” and “Chamfer”, suggesting that sharp edges may have more distinctive features for the models to learn.

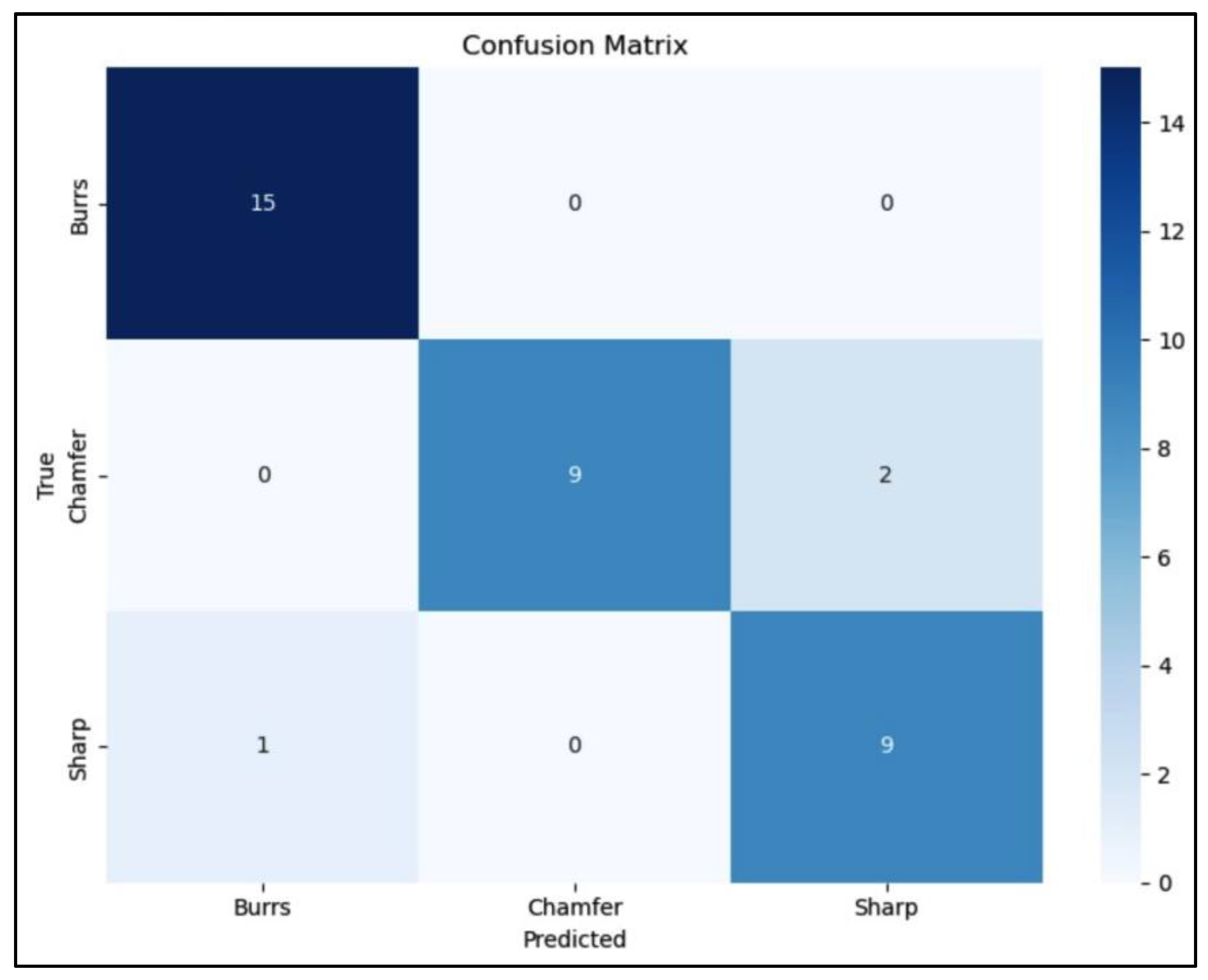

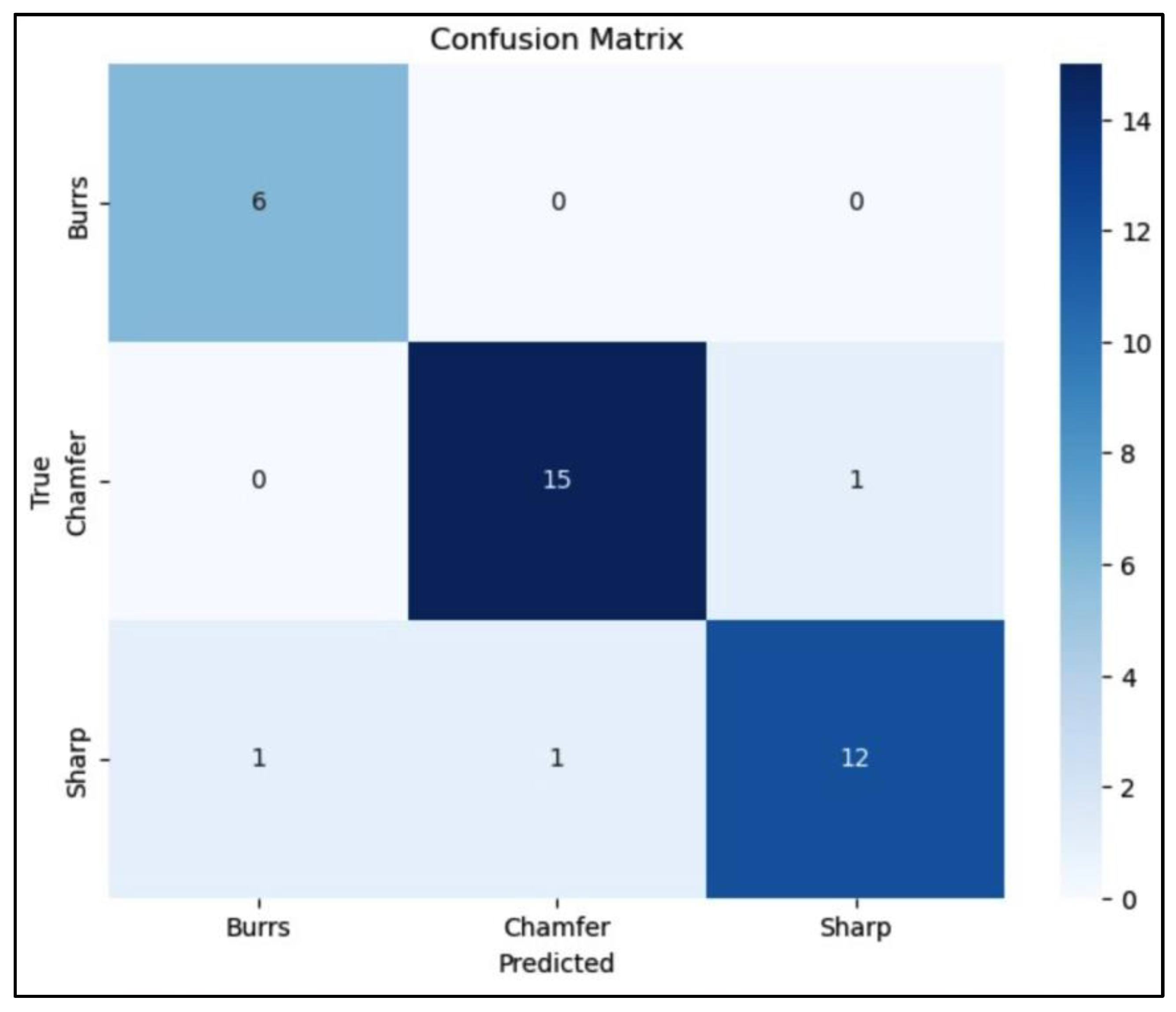

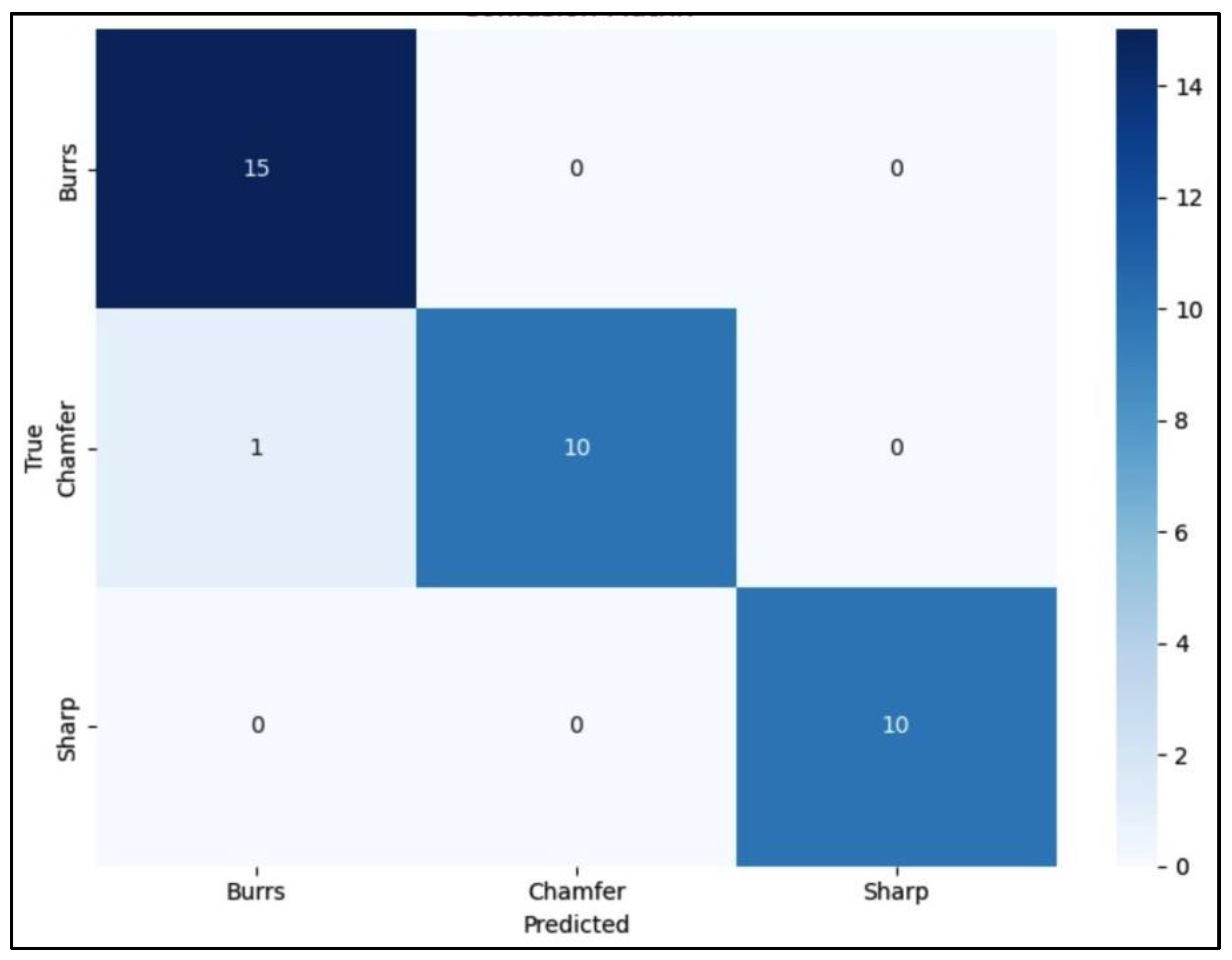

4.6. Confusion Matrix

A confusion matrix (CM) is a tool for visualizing the classification performance of a DL model. It displays a matrix of actual versus predicted labels, showing how accurately the model predicts the class of the given data. The CM analysis of the three DL models for edge detection and classification reveals their performance across the different categories, as presented in Figure 17, Figure 18 and Figure 19. The confusion matrix in Figure 17 shows that the model performs well, with good classification of the “Burrs” class and only minor misclassifications in the “Chamfer” and “Sharp” classes. Specifically, there are two instances of “Chamfer” misclassified as “Sharp,” and one “Sharp” misclassified as “Burrs,” indicating areas for potential improvement. The confusion matrix in Figure 18 indicates that the model performs well: the “Chamfer” class shows the highest accuracy with 15 correct predictions, while minor misclassifications occur in the “Sharp” class, suggesting slight areas for improvement. The confusion matrix in Figure 19 shows that the model accurately predicts most classes, with “Burrs” and “Sharp” achieving perfect classification. However, there is a slight misclassification for “Chamfer,” where one instance is incorrectly predicted as “Burrs.”
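The relationship between a confusion matrix and the per-class precision, recall, and F1-score can be sketched as follows. The matrix below is hypothetical: its off-diagonal entries mimic the kind of misclassification pattern described above, but the diagonal counts are invented for illustration and are not the values from the figures. Rows are assumed to be actual classes and columns predicted classes.

```python
def per_class_metrics(cm, labels):
    """Compute precision, recall, and F1 per class from a square confusion matrix.

    cm[i][j] is the number of samples of actual class i predicted as class j.
    """
    metrics = {}
    for i, label in enumerate(labels):
        tp = cm[i][i]
        fn = sum(cm[i]) - tp                    # actual class i, predicted otherwise
        fp = sum(row[i] for row in cm) - tp     # predicted class i, actually otherwise
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        metrics[label] = {"precision": precision, "recall": recall, "f1": f1}
    return metrics


labels = ["Burrs", "Chamfer", "Sharp"]
# Hypothetical counts (rows: actual, columns: predicted).
confusion = [
    [15, 0, 0],   # Burrs: all correct
    [0, 13, 2],   # Chamfer: two predicted as Sharp
    [1, 0, 14],   # Sharp: one predicted as Burrs
]
results = per_class_metrics(confusion, labels)
```

With these assumed counts, “Burrs” has perfect recall but a precision of 15/16 because one “Sharp” sample is assigned to it, which shows how a single off-diagonal entry affects two classes at once.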

The confusion matrices in Figure 17, Figure 18 and Figure 19 reveal that YOLOv5 has the fewest misclassifications among the three models. VGG-16 shows a higher tendency to confuse Burrs with Chamfer, while ResNet and YOLOv5 demonstrate more balanced performance across all classes. The comparative analysis of YOLOv5, VGG-16, and ResNet for metal defect detection reveals interesting insights, especially considering the constraints of limited dataset availability. YOLOv5 demonstrates superior performance across all metrics, as evidenced by its higher mAP scores, faster convergence, and better precision-recall trade-off. This exceptional performance can be attributed to YOLOv5’s architecture, which is specifically designed for efficient object detection. Its ability to process images in a single forward pass allows for effective feature extraction and localization, resulting in higher accuracy and mAP scores even with limited training data. This makes YOLOv5 particularly well-suited for real-time defect detection in a robotic arm setup, where both speed and accuracy are crucial.

Surprisingly, VGG-16, despite being the oldest architecture among the three, outperforms ResNet in our specific use case. This unexpected result might be due to VGG-16’s simpler architecture, which could be more effective at learning from a limited dataset. The shallower network may be less prone to overfitting when training data is scarce, allowing it to generalize better to unseen examples. ResNet, with its deep architecture and residual connections, shows the lowest performance among the three models in this specific scenario. This outcome is contrary to expectations, as ResNet typically excels in various computer vision tasks. The underperformance might be attributed to the limited dataset, which may not provide sufficient examples for ResNet to fully leverage its deep architecture and learn the complex features it’s capable of extracting.

The consistently high performance across all defect classes for all models indicates that our dataset, although limited, is well-balanced. This suggests that the chosen models are capable of learning distinctive features for each defect type, even with constrained data. The slightly higher performance in detecting “Sharp” defects across all models implies that these defects may have more pronounced visual characteristics, making them easier to identify even with limited training examples. It’s worth noting that the image augmentation techniques employed have likely played a crucial role in maximizing the utility of the limited dataset, helping to artificially expand it and improve the models’ ability to generalize.

For future work, the plan to expand the defect types from three to five categories is a promising direction. This expansion will increase the complexity of the classification task and provide a more comprehensive defect detection system. Continuing to refine and expand augmentation techniques will be crucial in managing the increased complexity, especially if dataset limitations persist. In conclusion, this study demonstrates the effectiveness of modern object detection architectures, particularly YOLOv5, in handling metal defect detection tasks even with limited data. It also highlights the importance of choosing the right model architecture based on dataset characteristics and the potential benefits of simpler models like VGG-16 in data-constrained scenarios.

5. Testing and Integration of YOLOv5, Jetson Nano, and Mitsubishi RV-2F-1D1-S15 Robot Manipulator

The verification studies were conducted with the developed AI system in a real-life environment. For this purpose, the device running the DL algorithm was connected to a high-precision robot manipulator, the Mitsubishi Electric Melfa RV-2F-1D1-S15, which has a repeatability of ±0.02 mm and 6 DOF. Such connections between different hardware platforms are common in practice and allow activities to be optimized satisfactorily [1]. The robot manipulator was available at the Faculty of Electrical Engineering, Opole University of Technology, Poland. Prior to the integration, the manipulator was programmed with its default CAD software, which is specific to and compatible with the Mitsubishi Electric Melfa RV-2F-1D1-S15. This CAD software consists of a CAD visualization and syntax-based programming for the movement of the robot manipulator. Once a movement has been programmed, it can be previewed in the CAD visualization to check whether it is as expected. The CAD software can create a sequential robot manipulator movement for automated grinding and chamfering when it is connected to the machine vision system. The CAD robot manipulator program consisted of three different actions to accommodate the three possible inputs from the NVIDIA Jetson Nano. A detailed explanation of the three actions is provided in the last paragraph of this section.

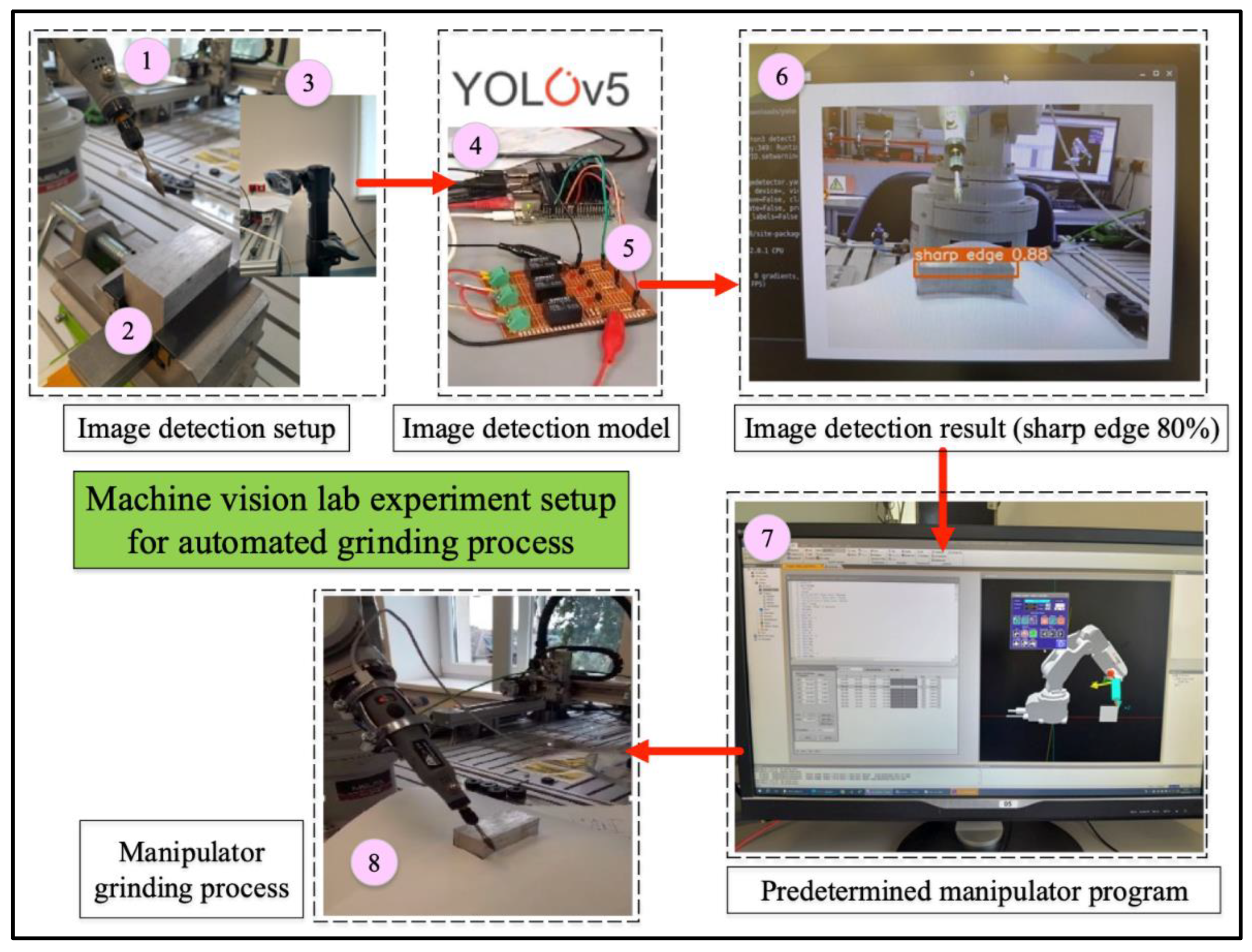

A schematic diagram of the real-time machine vision system is presented in Figure 12. The machine vision system consists of a PC for monitoring the image detection result, an NVIDIA Jetson Nano microprocessor, an electrical board that connects the NVIDIA Jetson Nano to the PLC input of the manipulator, and the robot manipulator. Due to the differing voltage levels of the devices, a special translation board was prepared. This board allows the work of the robot to be managed easily and effectively based on the signals from the DL system.

Figure 12.

An example of a cycle process of the machine vision lab experiment for automated chamfering process integrating YOLOv5, Jetson Nano microprocessor, and Mitsubishi manipulator.

The described experimental setup is based on the integration of the Mitsubishi Melfa RV-2F-1D1-S15 manipulator and the YOLOv5 program running on an NVIDIA Jetson Nano. The overarching objective is to enable automated chamfering of the metal workpiece based on the detection of sharp edges, chamfer edges, or burrs on its surface. The process commences with the Jetson Nano, on which the YOLOv5 program runs. The program performs real-time object detection using the input from the connected camera. This camera serves as the sensory input, capturing images of the metal workpiece’s surface. Upon image acquisition, the YOLOv5 program processes the images to detect and classify three edge classes, i.e., sharp edge, chamfer edge, and burr edge of the metal workpiece.
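The step from raw detections to a single class decision can be sketched as below. This is a minimal illustration, not the program used in the study: the `(label, confidence)` tuple format, the `min_confidence` threshold of 0.5, and the function name are assumptions. The 0.88 confidence for the sharp edge matches the detection probability reported for the lab experiment; the other value is invented.

```python
def select_detection(detections, min_confidence=0.5):
    """Return the (label, confidence) pair with the highest confidence.

    Detections below `min_confidence` are ignored; None is returned when
    nothing is confident enough, so that no action is triggered.
    """
    candidates = [d for d in detections if d[1] >= min_confidence]
    if not candidates:
        return None
    return max(candidates, key=lambda d: d[1])


# Hypothetical detector output for one camera frame.
frame_detections = [("sharp", 0.88), ("chamfer", 0.07)]
decision = select_detection(frame_detections)
```

Returning `None` for low-confidence frames is a design choice in this sketch: it keeps the manipulator idle rather than acting on an uncertain classification.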

Upon successful detection, the YOLOv5 program initiates the subsequent action. In this instance, it generates one of a set of distinct class commands (sharp edge, chamfer edge, burr edge) in a signal format. The Mitsubishi Melfa RV-2F-1D1-S15 robot manipulator receives this command signal as its input and is prepared to treat signals from the machine vision system as commands. A detailed explanation of the communication between the NVIDIA Jetson Nano and the Mitsubishi Melfa RV-2F-1D1-S15 robot manipulator is presented in description No. 5 of Figure 12. Depending on the type of signal received, which indicates the detected edge class, the robot manipulator interprets the signal and initiates the appropriate corrective action. The robot manipulator action is based on the predetermined CAD program, which was explained in the first paragraph of this section. The robot has three different actions to accommodate the three possible edge detection outputs of the real-time machine vision method. For example, if a sharp edge is detected, the robot manipulator is programmed to perform 5 passes of the grinding and chamfering process. The three actions based on the machine vision output are detailed in Table 6. A simulation of the successful real-time machine vision lab experiment is presented in the following YouTube link: https://www.youtube.com/watch?v=Qe6DPEanpjg&t=3s.

Table 6.

Action of robot manipulator based on NVIDIA Jetson Nano prediction output.

| Edge Image Detection (input from Jetson Nano) | Number of Passes for Grinding and Chamfering (robot manipulator action according to the Jetson Nano input) |
|---|---|
| Sharp | 5 |
| Chamfer | 3 |
| Burrs | 10 |
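The mapping in Table 6 amounts to a simple lookup from the detected edge class to the number of passes. The sketch below expresses it in Python purely for illustration; in the study the actions are part of the predetermined manipulator program, and the names `PASSES_BY_EDGE_CLASS` and `passes_for` are hypothetical.

```python
# Number of grinding and chamfering passes per detected edge class (values from Table 6).
PASSES_BY_EDGE_CLASS = {
    "Sharp": 5,
    "Chamfer": 3,
    "Burrs": 10,
}


def passes_for(edge_class):
    """Look up the number of passes for a detected edge class."""
    try:
        return PASSES_BY_EDGE_CLASS[edge_class]
    except KeyError:
        # Unknown classes are rejected rather than mapped to a default action.
        raise ValueError(f"unknown edge class: {edge_class!r}") from None


sharp_passes = passes_for("Sharp")
```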

The Mitsubishi Electric Melfa RV-2F-1D1-S15 manipulator was connected to the customized grinding equipment. The end effector was connected to the portable grinder.

A selected metal workpiece with a sharp type was attached to the clamp close to the manipulator for testing of real-time machine vision method.

A Logitech camera with 720p and 30 fps was set up to capture the tested metal workpiece with a sharp-end feature.

-

NVIDIA Jetson Nano with embedded YOLOv5 model was connected to a PC and camera. The following is a detail of the embedded system:

To begin engagement with it. It is necessary to get the most recent operating system (OS) image known as Jetpack SDK. This software package encompasses the Linux Driver Package (L4T), which consists of the Linux operating system, as well as CUDA-X accelerated libraries and APIs. These components are specifically designed to facilitate Deep Learning, Computer Vision, Accelerated Computing, and Multimedia tasks. The website [

45] provides comprehensive documentation, including all necessary materials and step-by-step instructions for utilizing the product. In this project, Ubuntu 20.04 has been utilized, encompassing the most recent iterations of CUDA, cuDNN, and TensorRT, which will be discussed in subsequent sections [

46].

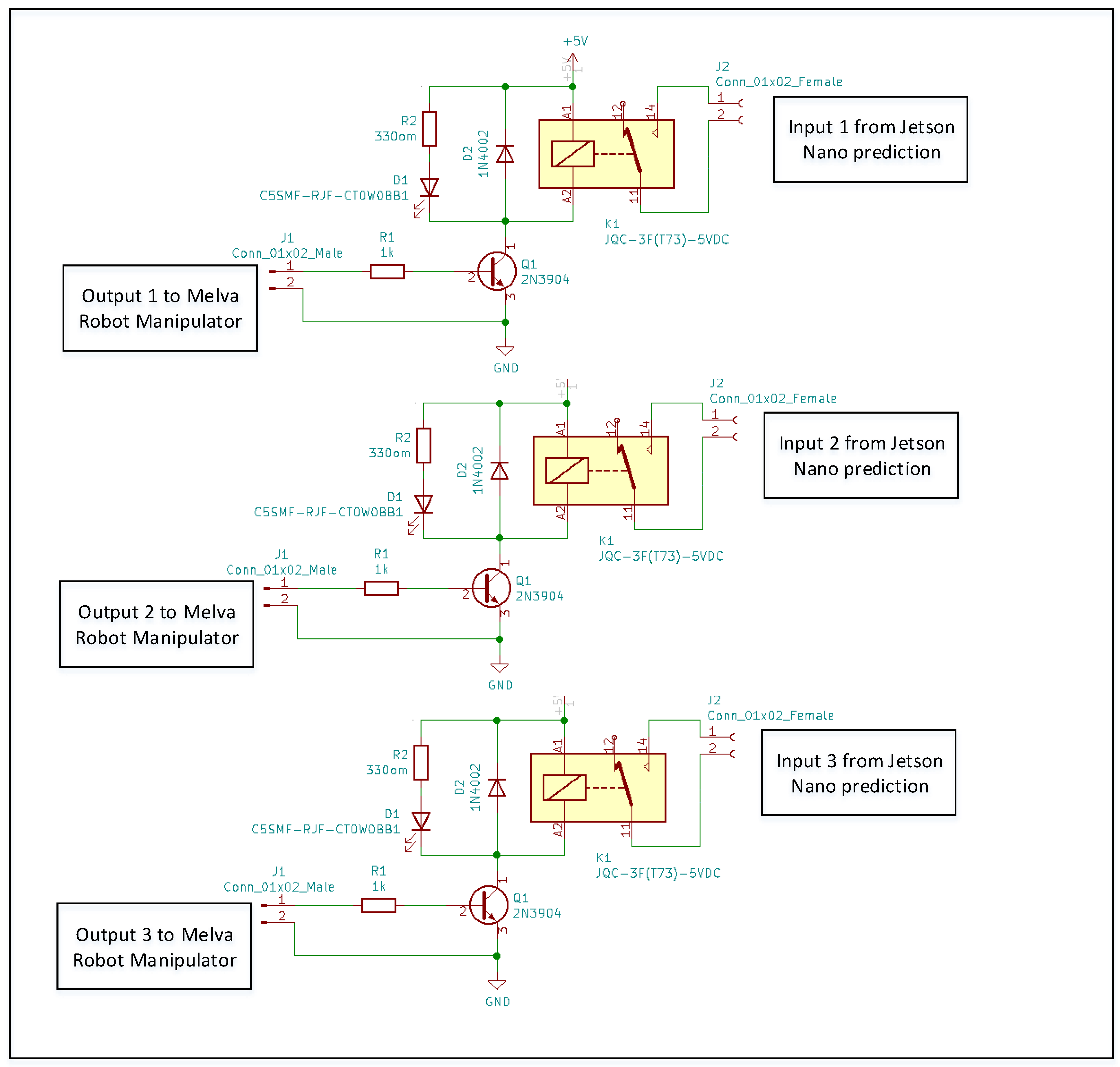

A customized electrical board was designed as communication hardware between Jetson Nano and the robot manipulator. The communication was done through GPIO (General Purpose Input/Output) pins on the Jetson Nano device. This circuit board translates the output from GPIO inputs to the PLC controller of the manipulator. A detail of electrical circuit board in presented in

Figure 13. The board has three inputs and three outputs. The inputs were from the Jetson Nano machine vision image detection result represented in GPIO input and the outputs for the Mitsubishi Electric Melfa RV-2F-1D1-S15 robot manipulator actuator. For example, if the NVIDIA Jetson Nano detect a sharp email, it will goes to GPIO input 1 and go through to output 1 in Mitsubishi Electric Melfa RV-2F-1D1-S15 robot manipulator.

An image detection of the metal workpiece is shown. It was successfully detected as a sharp edge with a probability of 88%.

The manipulator has been programmed initially as an action of the image detection from the Jetson Nano.

The manipulator does the grinding process according to the result of the Jetson Nano that has been interpreted by the customized electrical circuit and the predetermined manipulator program.
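The signalling between the Jetson Nano and the translation board can be sketched as one GPIO line per edge class, with only the line of the detected class driven high. This is an assumption consistent with the three-input, three-output board described above, not a description of the actual firmware: the pin numbers are hypothetical, and the hardware write is passed in as a function so that the logic can be exercised without a Jetson device.

```python
# Hypothetical pin numbers; the study does not state which GPIO pins were used.
PIN_BY_EDGE_CLASS = {"Sharp": 11, "Chamfer": 13, "Burrs": 15}


def pin_levels(edge_class):
    """Return {pin: level} with only the pin of the detected class set to True."""
    if edge_class not in PIN_BY_EDGE_CLASS:
        raise ValueError(f"unknown edge class: {edge_class!r}")
    return {pin: label == edge_class for label, pin in PIN_BY_EDGE_CLASS.items()}


def send_detection(edge_class, write_pin):
    """Drive the three output lines through `write_pin(pin, level)`.

    `write_pin` is supplied by the caller; on real hardware it would wrap
    the GPIO library's output call.
    """
    for pin, level in pin_levels(edge_class).items():
        write_pin(pin, level)


# Demonstration with a dictionary standing in for the hardware.
written = {}
send_detection("Sharp", written.__setitem__)
```

On the Jetson Nano, `write_pin` could wrap `GPIO.output` from the `Jetson.GPIO` package after the pins have been configured with `GPIO.setmode(GPIO.BOARD)` and `GPIO.setup(pin, GPIO.OUT)`; the voltage translation to the PLC inputs is then handled by the board itself.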

Figure 13.

A customized electronic circuit board.

7. Conclusions

Three DL methods (VGG-16, ResNet, and YOLOv5) with potential application in machine vision technologies have been studied and examined. These vision algorithms were used to detect and classify three different edge conditions of metal workpieces, i.e., sharp edge, chamfer edge, and burr edge, with satisfactory results. The integration of the selected DL method, i.e., the YOLOv5 model embedded in an NVIDIA Jetson Nano microprocessor, with the Mitsubishi Electric Melfa RV-2F-1D1-S15 manipulator has also been presented. The embedded system and the integrated system were demonstrated in a lab experiment as a potential machine vision approach for automated manufacturing processes. For real application in practice or industry, the YOLOv5-based machine vision system would be integrated with the robot manipulator as the actuator acting on the predicted outcome, as demonstrated in this study.

The integration shows that the YOLOv5 model provides efficient real-time image detection, identifying the considered edge conditions and classifying them correctly. The stability of this system also improves the efficiency and reliability of the automated system, making it suitable for industrial applications, especially in manufacturing.

This study contributes to the development of a real-time machine vision system based on YOLOv5 and the NVIDIA Jetson Nano in a lab experimental study. The machine vision system was successfully integrated with a Mitsubishi Electric Melfa RV-2F-1D1-S15 robot manipulator to perform automated grinding and chamfering.

Future work of the study is as follows:

The present study used YOLOv5 as the embedded machine vision algorithm; YOLOv8 will be evaluated in a further study.

Three different edge conditions were used in the present study. As there are a number of other edge conditions in practice, such as cracks and chips, future studies will use more than three edge conditions.

A similar workpiece size was maintained in the present study. In the future, different sizes (length, height, and weight) will be considered for the real-time machine vision system.

The Mitsubishi Electric Melfa RV-2F-1D1-S15 robot manipulator, which is equipped with a chamfering tool attached to the tooltip, serves as the medium for automatic intervention. The present study has successfully delivered an experimental study. In the future, in the event of the presence of sharp edges or burrs, the manipulator will employ the chamfering tool to modify the surface of the metal workpiece, repeating the process until the edge of the metal workpiece becomes appropriately blunted, thereby effectively correcting the defect.

Author Contributions

Conceptualization W.C.; methodology, A.W.K. and W.C.; software, A.W.K. and P.M.; validation, A.W.K. and W.C.; formal analysis, A.W.K., P.M. and W.C.; investigation, W.C.; resources, P.M., G.B., M.B. and W.C.; data curation, A.W.K., P.M., G.B. and M.B.; writing—original draft preparation, A.W.K., P.M. and W.C.; writing—review and editing, A.W.K., P.M., N.A.A. and W.C.; visualization, A.W.K., P.M. and W.C.; supervision, H.Y., G.K. and W.C.; project administration, G.K. and W.C.; funding acquisition, G.K. and W.C.