Submitted:

03 September 2024

Posted:

04 September 2024


Abstract

Keywords:

1. Introduction

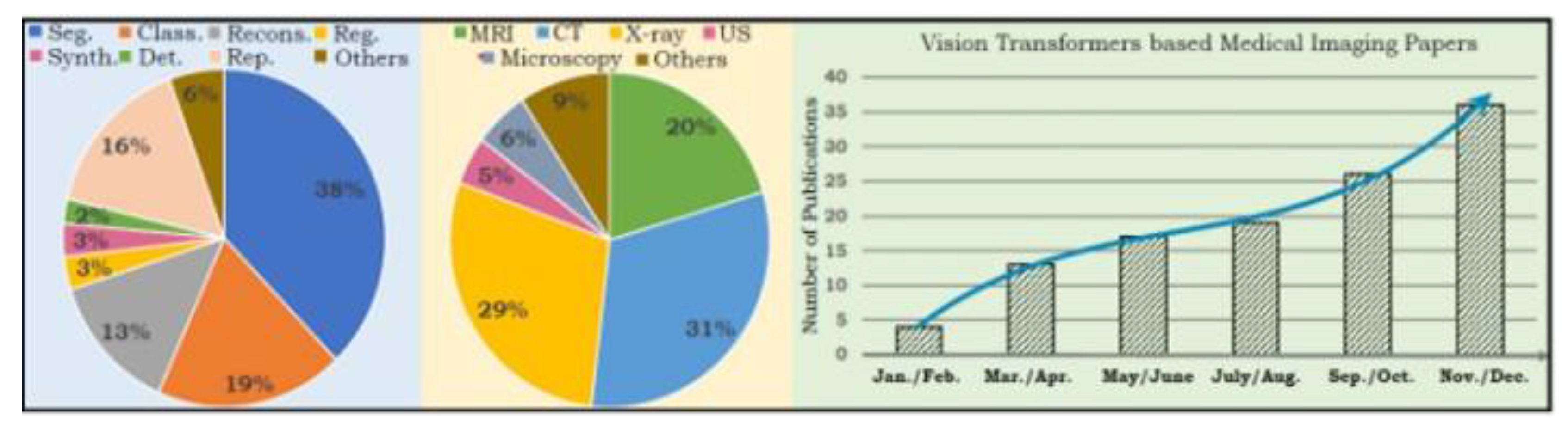

2. Literature Review

3. Transformer

3.1. ViT: Challenging CNNs and RNNs in Image Classification

3.2. Main Components of a Vision Transformer

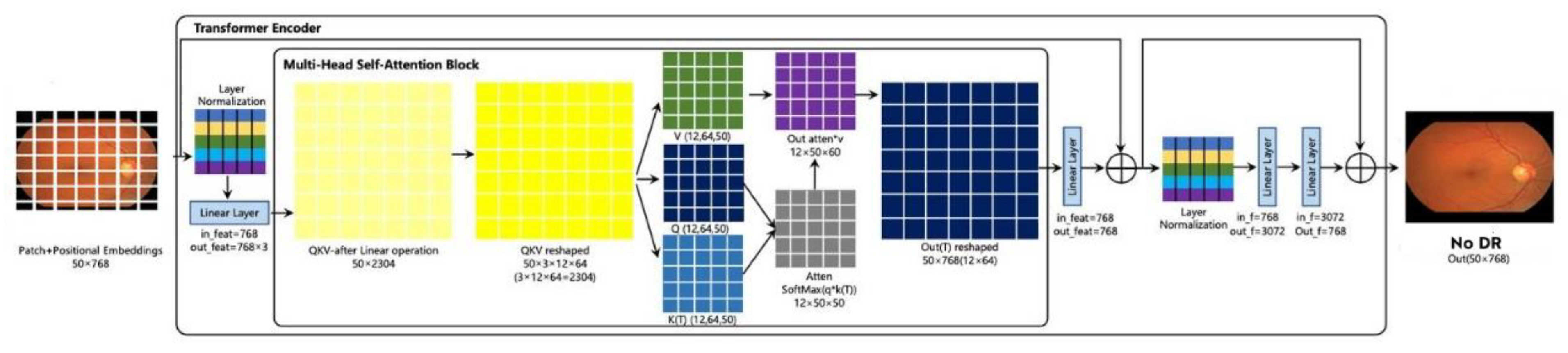

3.2.1. Transformer Encoder

3.2.2. Patch Embedding
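To illustrate patch embedding, the minimal NumPy sketch below splits an $H \times W \times C$ image into non-overlapping $P \times P$ patches, flattens each patch, and applies one shared linear projection, as in the original ViT. The function name `patch_embed`, the 224 × 224 input, $P = 16$ and the random projection weights are illustrative assumptions standing in for the learned layer, not settings reported in this work.

```python
import numpy as np

def patch_embed(image, patch_size, weight, bias):
    """Split an (H, W, C) image into non-overlapping patches and project each linearly.

    weight: (patch_size * patch_size * C, D), bias: (D,).
    Returns an array of shape (num_patches, D), one row per patch token.
    """
    h, w, c = image.shape
    if h % patch_size or w % patch_size:
        raise ValueError("image height and width must be divisible by patch_size")
    gh, gw = h // patch_size, w // patch_size
    # (gh, P, gw, P, C) -> (gh, gw, P, P, C) -> (gh * gw, P * P * C)
    patches = (
        image.reshape(gh, patch_size, gw, patch_size, c)
        .transpose(0, 2, 1, 3, 4)
        .reshape(gh * gw, patch_size * patch_size * c)
    )
    # The same linear projection E is applied to every flattened patch
    return patches @ weight + bias

rng = np.random.default_rng(0)
image = rng.random((224, 224, 3))                             # stand-in for a fundus image
weight = rng.normal(scale=0.02, size=(16 * 16 * 3, 64))       # hypothetical projection to D = 64
bias = np.zeros(64)
tokens = patch_embed(image, 16, weight, bias)
print(tokens.shape)  # (196, 64)
```

For a 224 × 224 × 3 input and $P = 16$ this gives $N = (224/16)^2 = 196$ tokens, each projected from $16 \cdot 16 \cdot 3 = 768$ raw values.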

3.2.3. Position Encoding
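The text does not state whether the position encodings are learned (as in the original ViT) or fixed. As an illustration only, the sketch below computes the fixed sinusoidal encodings of the original Transformer, $PE_{(pos,2i)} = \sin(pos/10000^{2i/D})$ and $PE_{(pos,2i+1)} = \cos(pos/10000^{2i/D})$, which are added element-wise to the patch embeddings. The sequence length of 197 (196 patches plus a class token) and $D = 64$ are assumptions.

```python
import numpy as np

def sinusoidal_position_encoding(num_positions, dim):
    """Fixed sine/cosine position encodings of shape (num_positions, dim); dim must be even."""
    if dim % 2:
        raise ValueError("dim must be even")
    positions = np.arange(num_positions)[:, None]             # (N, 1)
    freqs = 1.0 / (10000 ** (np.arange(0, dim, 2) / dim))     # (dim / 2,) one frequency per pair
    angles = positions * freqs                                # (N, dim / 2)
    pe = np.zeros((num_positions, dim))
    pe[:, 0::2] = np.sin(angles)                              # even dimensions
    pe[:, 1::2] = np.cos(angles)                              # odd dimensions
    return pe

pe = sinusoidal_position_encoding(197, 64)   # 196 patch tokens + 1 class token (assumed)
print(pe.shape)  # (197, 64)
```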

3.2.4. Attention Mechanism

3.2.5. Self-Attention

3.2.6. Multi-Head Self-Attention Mechanism
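Self-attention and its multi-head form can be sketched in NumPy as follows. Each head computes $\mathrm{softmax}(QK^\top/\sqrt{d_k})\,V$ on its own slice of the projected queries, keys and values; the head outputs are then concatenated and projected by $W^O$. The weight matrices are random placeholders for learned parameters, and the function names, $D = 64$ and 8 heads are illustrative assumptions; in practice a framework layer such as PyTorch's `torch.nn.MultiheadAttention` would normally be used.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)      # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(q, k, v):
    """softmax(Q K^T / sqrt(d_k)) V for inputs of shape (..., N, d_k)."""
    d_k = q.shape[-1]
    scores = q @ np.swapaxes(k, -1, -2) / np.sqrt(d_k)   # (..., N, N) pairwise similarities
    weights = softmax(scores, axis=-1)                     # each row sums to 1
    return weights @ v, weights

def multi_head_self_attention(x, w_q, w_k, w_v, w_o, num_heads):
    """x: (N, D) token embeddings; w_q, w_k, w_v, w_o: (D, D). Returns (N, D)."""
    n, d = x.shape
    if d % num_heads:
        raise ValueError("D must be divisible by num_heads")
    d_head = d // num_heads

    def split_heads(t):
        # (N, D) -> (heads, N, d_head)
        return t.reshape(n, num_heads, d_head).transpose(1, 0, 2)

    q, k, v = split_heads(x @ w_q), split_heads(x @ w_k), split_heads(x @ w_v)
    heads, _ = scaled_dot_product_attention(q, k, v)     # (heads, N, d_head)
    concat = heads.transpose(1, 0, 2).reshape(n, d)      # concatenate the heads
    return concat @ w_o                                   # output projection W^O

rng = np.random.default_rng(0)
x = rng.normal(size=(197, 64))                            # hypothetical token sequence
w_q, w_k, w_v, w_o = (rng.normal(scale=0.1, size=(64, 64)) for _ in range(4))
out = multi_head_self_attention(x, w_q, w_k, w_v, w_o, num_heads=8)
print(out.shape)  # (197, 64)
```

With `num_heads=1` the function reduces to a single scaled dot-product attention followed by the output projection.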

3.3. Compact Convolutional Transformer

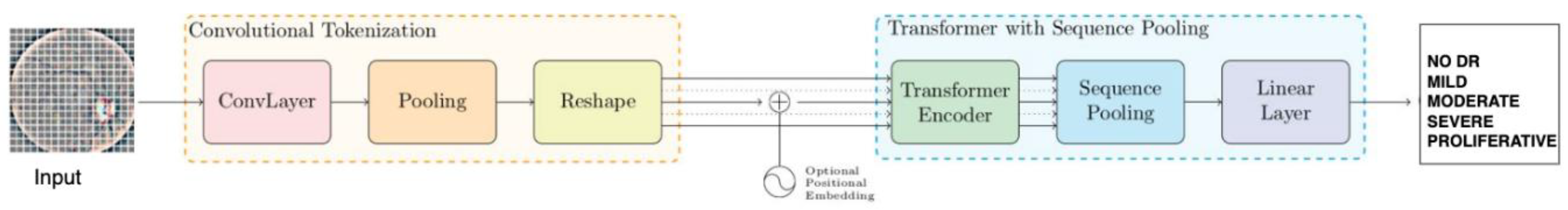

3.3.1. Convolutional Tokenization

3.3.2. Transformer Encoder

3.3.3. Sequence Pooling

3.3.4. Classification Tasks
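Sequence pooling, as introduced with compact transformers in "Escaping the big data paradigm with compact transformers", replaces the class token with an attention-weighted average of the encoder's output tokens, $z = \mathrm{softmax}(g(x_L)^\top)\,x_L$, where $g$ is a linear layer that scores each token. A minimal NumPy sketch of this step followed by a linear classification head over the five DR grades is shown below; the token count, $D = 128$ and all weights are hypothetical placeholders for learned parameters.

```python
import numpy as np

def sequence_pool(tokens, w_g, b_g=0.0):
    """Attention-based sequence pooling.

    tokens: (N, D) encoder output; w_g: (D,) weights of the scoring layer g.
    Returns one (D,) vector: a softmax-weighted average of the N tokens.
    """
    scores = tokens @ w_g + b_g                 # (N,) one importance score per token
    scores = scores - scores.max()              # numerical stability
    attn = np.exp(scores) / np.exp(scores).sum()
    return attn @ tokens                        # (D,)

def classify(pooled, w_cls, b_cls):
    """Linear classification head: one logit per class."""
    return pooled @ w_cls + b_cls

CLASSES = ["No DR", "Mild", "Moderate", "Severe", "Proliferative DR"]
rng = np.random.default_rng(0)
tokens = rng.normal(size=(64, 128))             # hypothetical encoder output: 64 tokens, D = 128
pooled = sequence_pool(tokens, rng.normal(size=128))
logits = classify(pooled, rng.normal(size=(128, len(CLASSES))), np.zeros(len(CLASSES)))
print(CLASSES[int(np.argmax(logits))])
```

When all scores are equal (for example `w_g` set to zeros) the pooling collapses to a plain mean over tokens, which shows that the learned scores are what let the model emphasise informative tokens.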

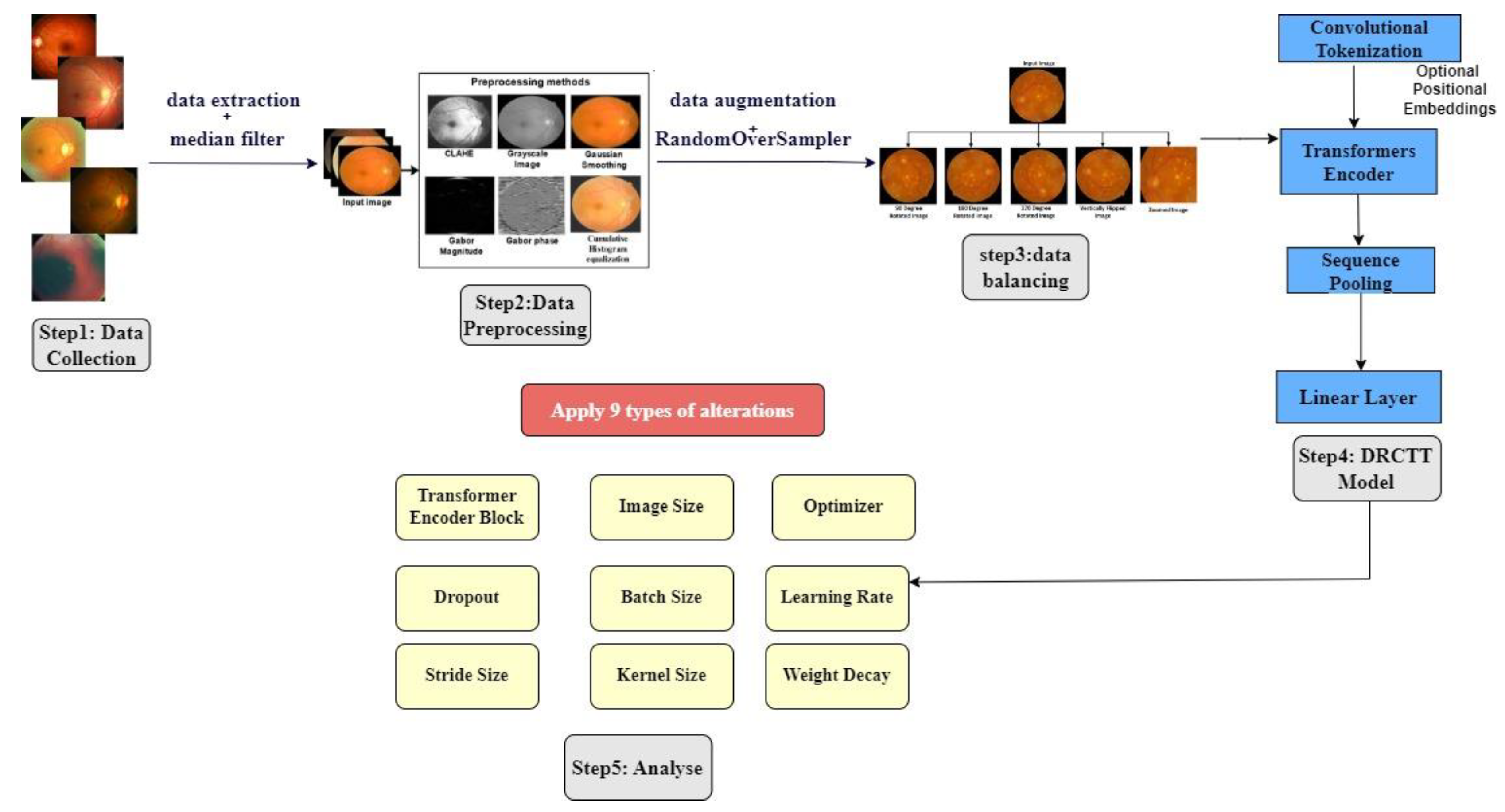

4. Work Done

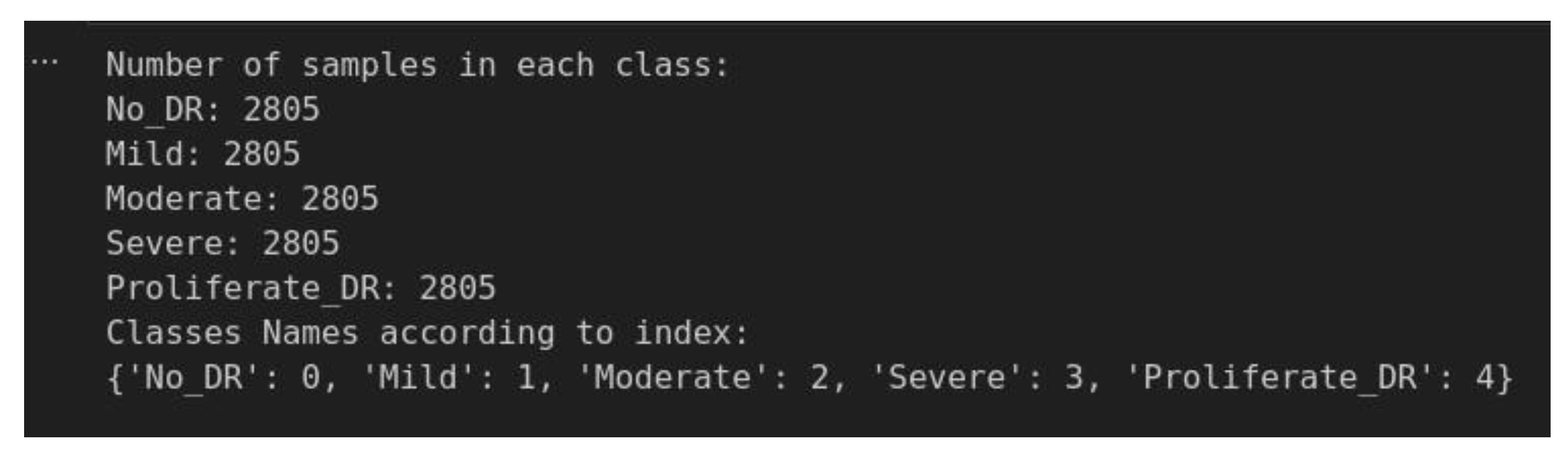

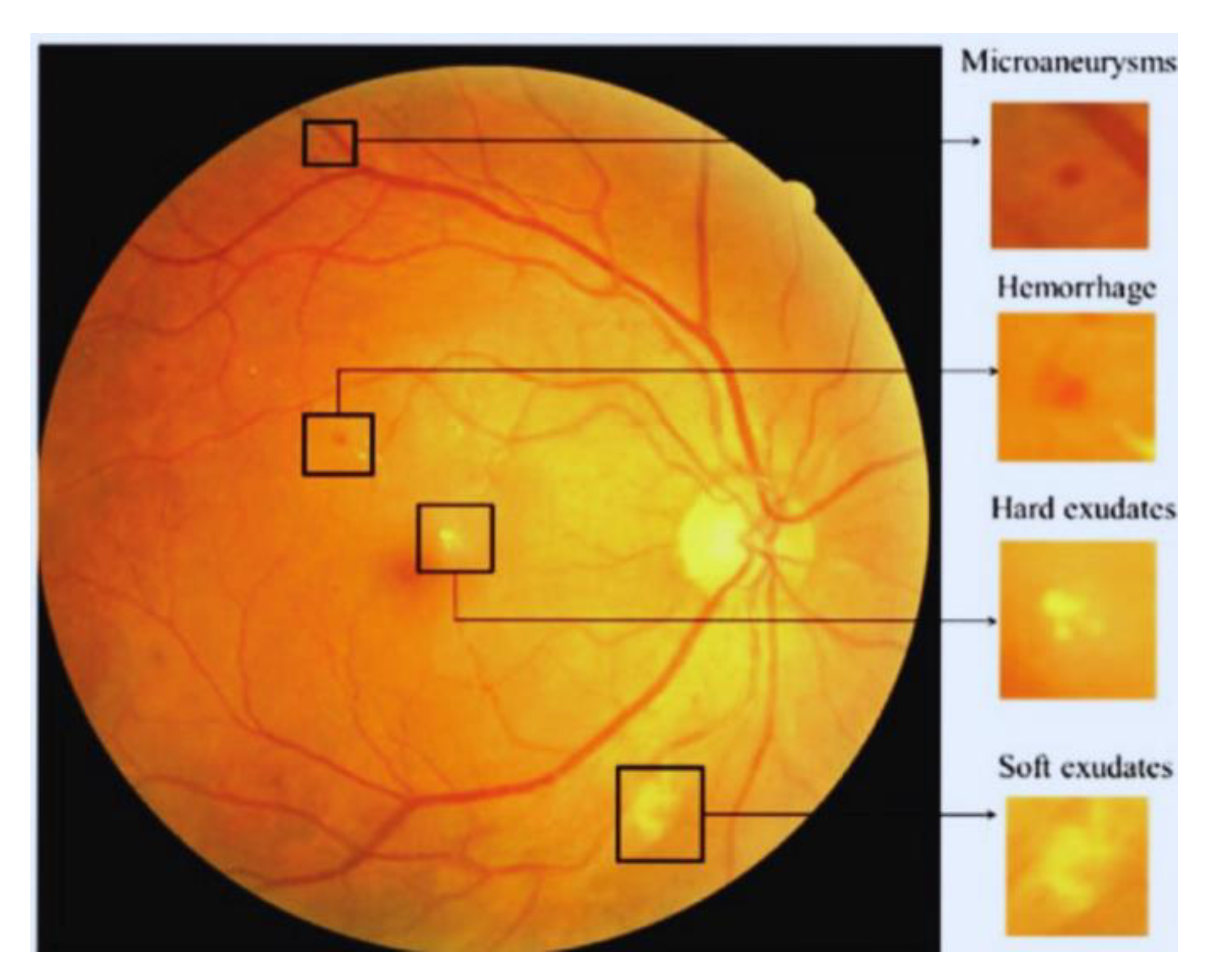

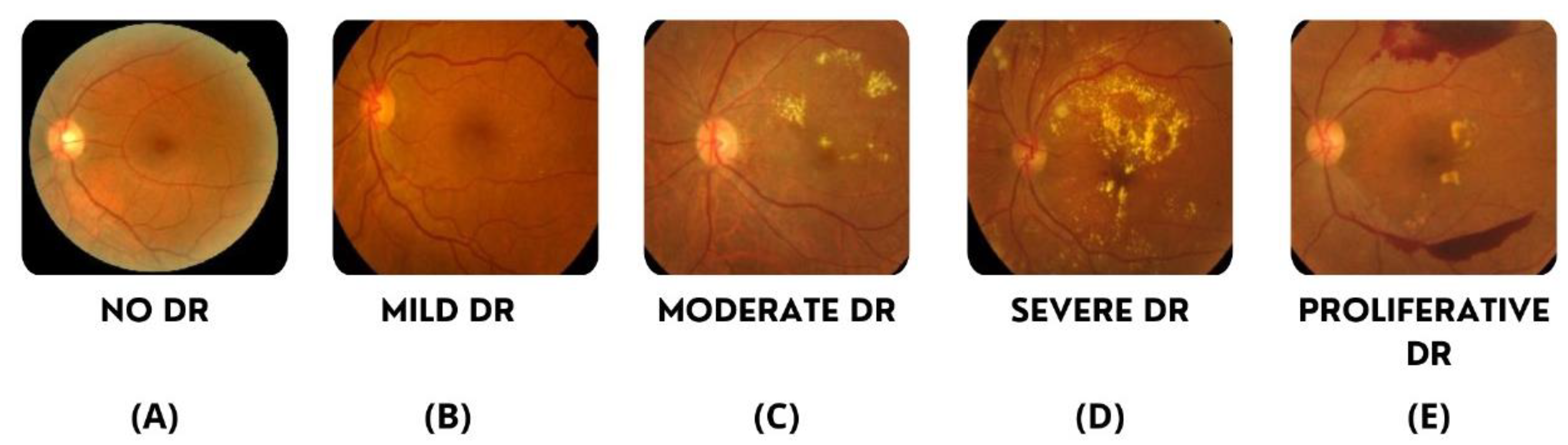

4.1. Data Understanding

4.2. Image Preprocessing

4.2.1. Feature Extraction

4.2.2. Noise Reduction

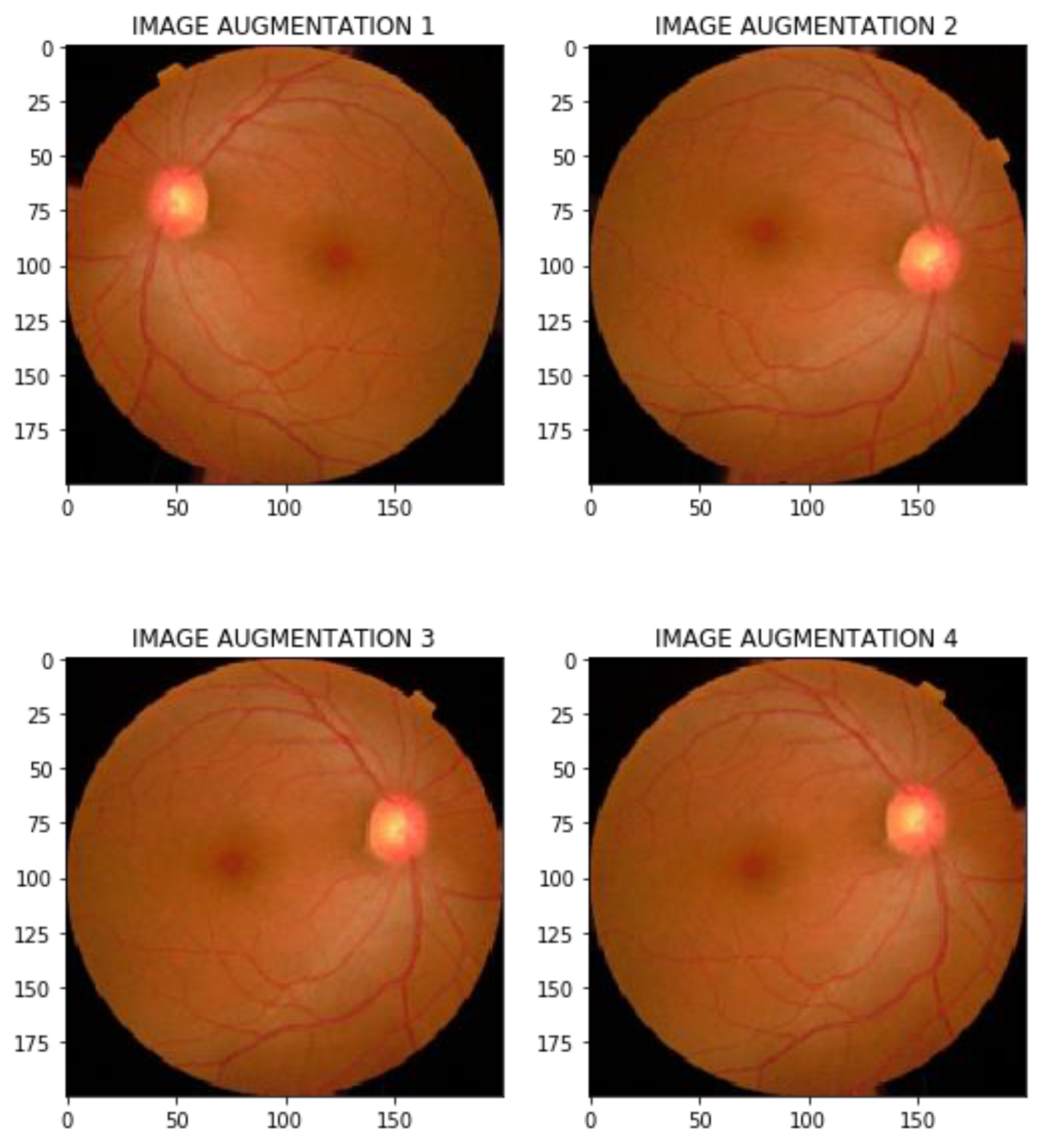

4.2.3. Data Augmentation
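The specific augmentations are not listed here. The NumPy sketch below assumes a typical set for fundus images (random horizontal and vertical flips, rotations by multiples of 90 degrees, and a mild brightness change) applied to an image normalised to $[0, 1]$; the function name and parameter ranges are illustrative assumptions.

```python
import numpy as np

def augment(image, rng):
    """Return a randomly flipped, rotated and brightness-scaled copy of an (H, W, C) image in [0, 1]."""
    out = image
    if rng.random() < 0.5:
        out = out[:, ::-1]                                        # horizontal flip
    if rng.random() < 0.5:
        out = out[::-1, :]                                        # vertical flip
    out = np.rot90(out, k=int(rng.integers(4)), axes=(0, 1))      # rotate by 0, 90, 180 or 270 degrees
    out = out * rng.uniform(0.9, 1.1)                             # mild brightness change
    return np.clip(out, 0.0, 1.0)                                 # keep pixel values in range

rng = np.random.default_rng(0)
image = rng.random((224, 224, 3))                  # stand-in for a normalised fundus image
batch = [augment(image, rng) for _ in range(4)]    # four augmented views of one image
```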

4.2.4. Data Balancing
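The balancing method is not specified in the text. As one possible approach, the sketch below applies plain random oversampling to the training-set class counts reported in the class-distribution table, duplicating minority-class samples until each class matches the majority class (No DR, 2192 images). Oversampling of this kind would normally be applied to the training split only, so that duplicated images cannot leak into the test set. The function name and placeholder sample IDs are hypothetical.

```python
import random
from collections import Counter

def random_oversample(samples, labels, seed=0):
    """Randomly duplicate minority-class samples until every class has as many
    samples as the largest class. Returns new (samples, labels) lists."""
    rng = random.Random(seed)
    by_class = {}
    for sample, label in zip(samples, labels):
        by_class.setdefault(label, []).append(sample)
    target = max(len(items) for items in by_class.values())
    out_samples, out_labels = [], []
    for label, items in by_class.items():
        # draw (target - len(items)) extra copies with replacement
        extra = [rng.choice(items) for _ in range(target - len(items))]
        out_samples.extend(items + extra)
        out_labels.extend([label] * target)
    return out_samples, out_labels

# Training-set class counts from the class-distribution table
train_counts = {"No DR": 2192, "Mild": 592, "Moderate": 1518,
                "Proliferative DR": 472, "Severe": 284}
labels = [c for c, n in train_counts.items() for _ in range(n)]
samples = list(range(len(labels)))          # placeholder sample IDs
bal_samples, bal_labels = random_oversample(samples, labels)
print(Counter(bal_labels))                  # every class now has 2192 samples
```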

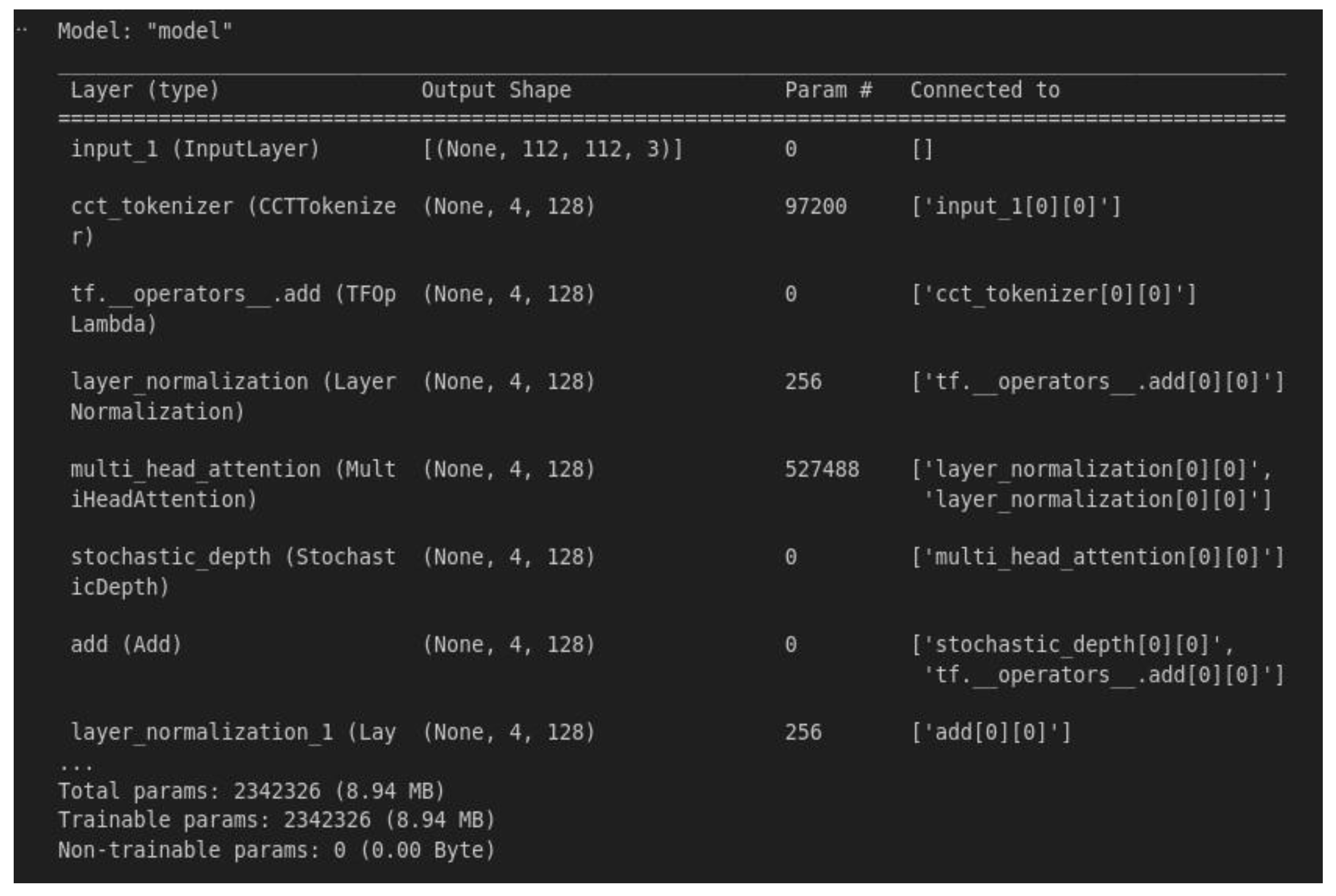

4.3. Model Building

4. Results and Discussion

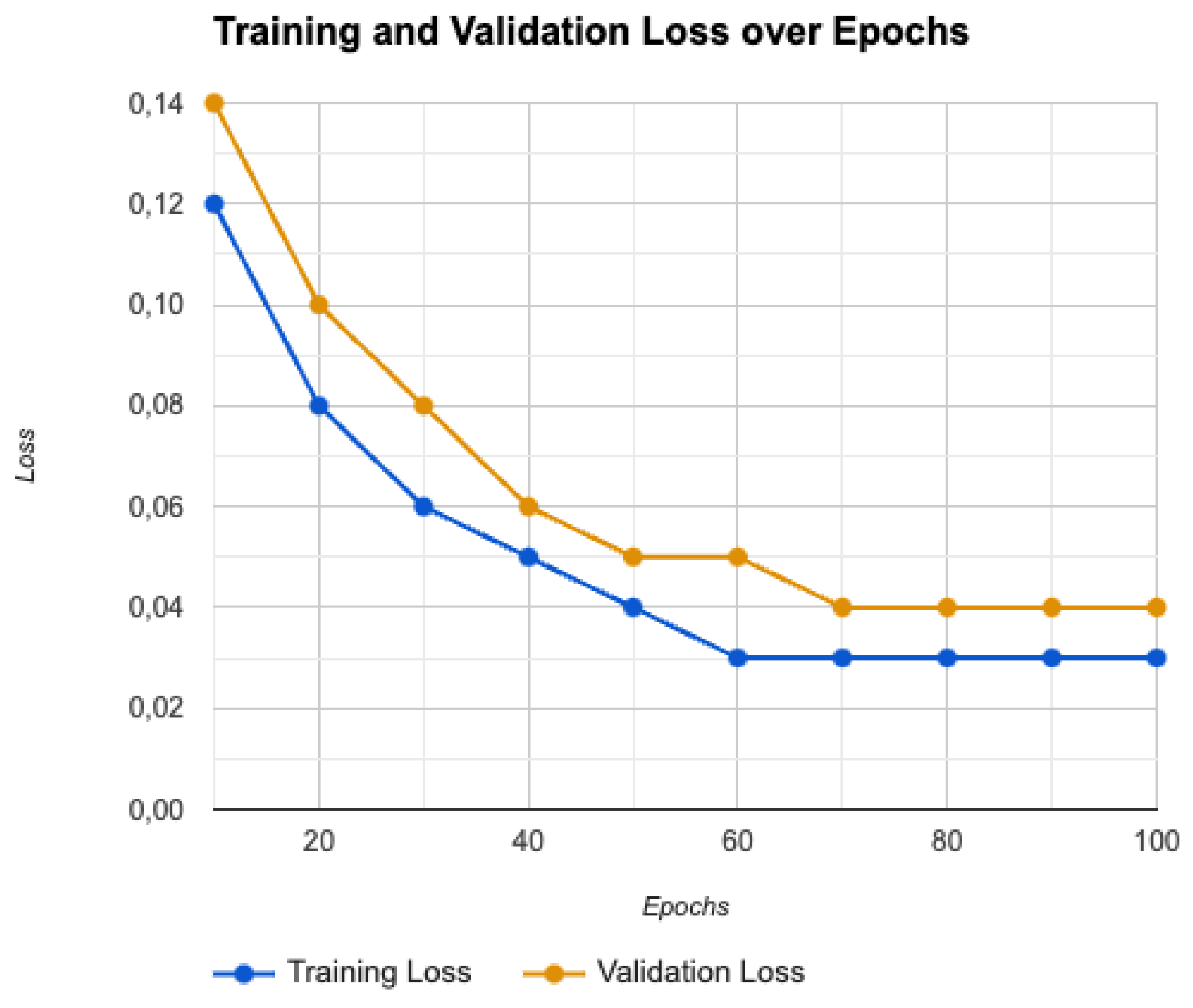

4.1. Training and Validation Performance

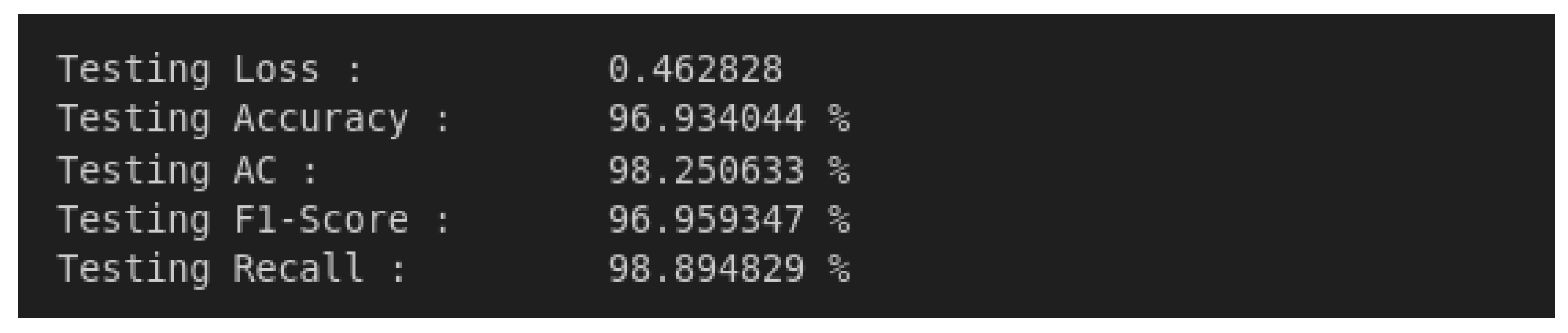

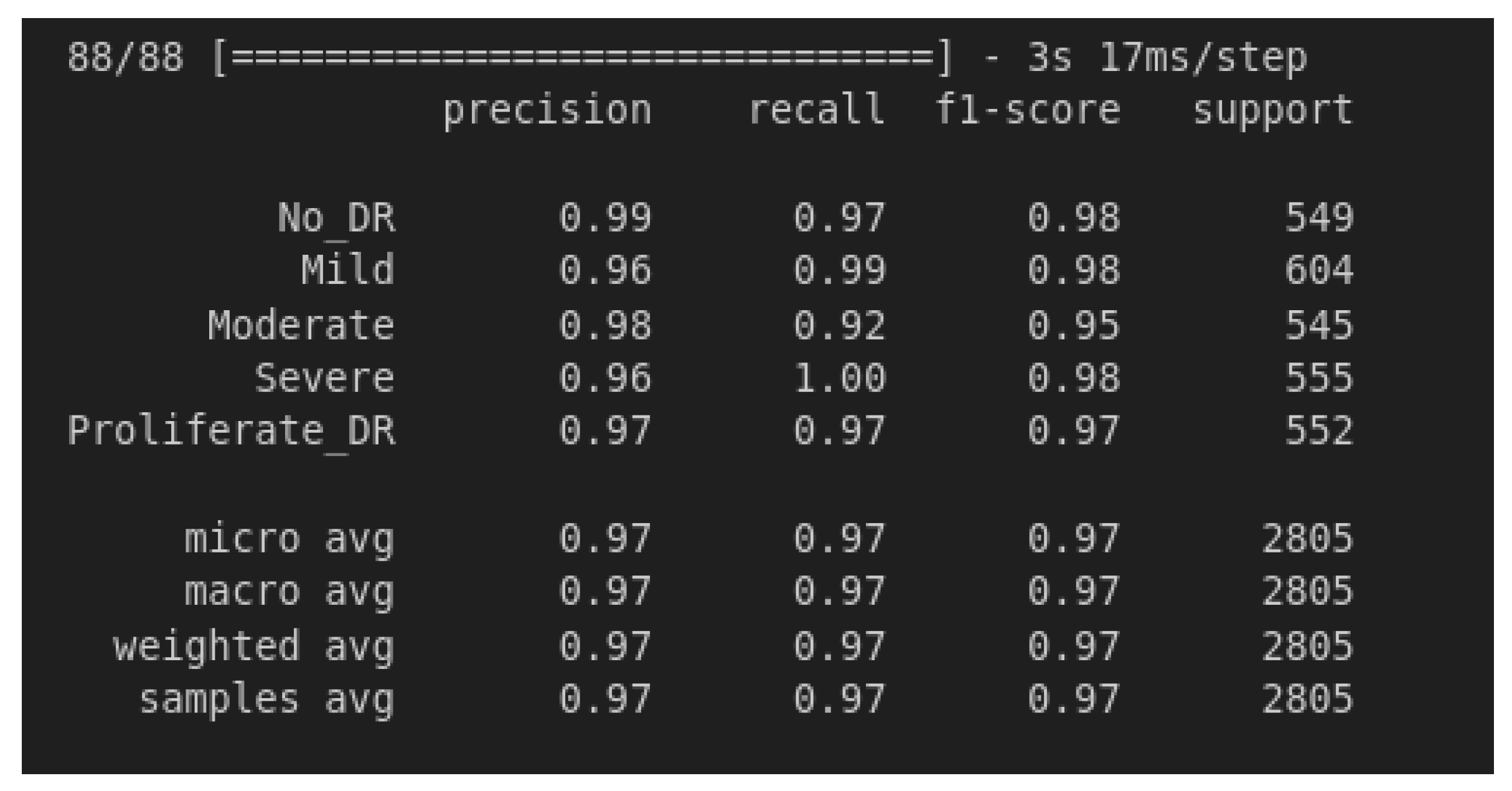

4.2. Model Testing and Metrics

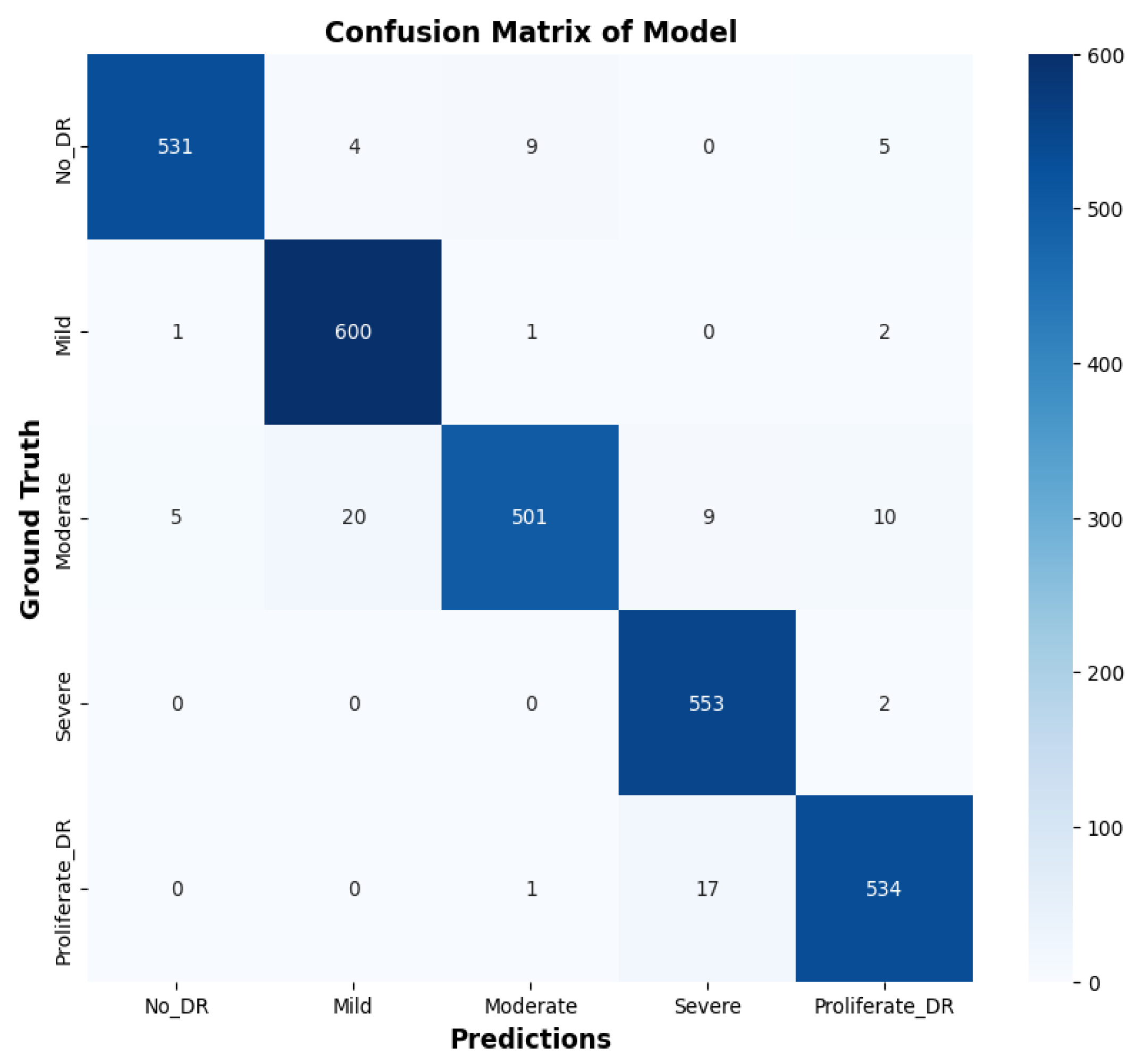

4.3. Confusion Matrix Analysis

- True Positives (TP): Positive cases correctly classified as positive.

- True Negatives (TN): Negative cases correctly classified as negative.

- False Positives (FP): Negative cases incorrectly classified as positive.

- False Negatives (FN): Positive cases incorrectly classified as negative.
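In a multi-class setting such as the five DR grades, these four counts are obtained for each class by treating it as "positive" and all other classes as "negative" (one-vs-rest). The pure-Python sketch below derives them from a confusion matrix whose rows are true classes and whose columns are predicted classes, and computes precision, recall and F1 from them. The 3 × 3 matrix is a hypothetical toy example, not data from this study.

```python
def per_class_counts(cm):
    """cm[i][j] = number of samples of true class i predicted as class j.
    Returns one (TP, FP, FN, TN) tuple per class, using one-vs-rest."""
    k = len(cm)
    total = sum(sum(row) for row in cm)
    counts = []
    for c in range(k):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                          # true class c, predicted as another class
        fp = sum(cm[r][c] for r in range(k)) - tp     # predicted as c, true class differs
        tn = total - tp - fn - fp                     # everything else
        counts.append((tp, fp, fn, tn))
    return counts

def precision_recall_f1(tp, fp, fn):
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

# Hypothetical 3-class confusion matrix (rows = true class, columns = predicted class)
cm = [[5, 1, 0],
      [2, 6, 1],
      [0, 0, 4]]
for c, (tp, fp, fn, tn) in enumerate(per_class_counts(cm)):
    p, r, f1 = precision_recall_f1(tp, fp, fn)
    print(f"class {c}: TP={tp} FP={fp} FN={fn} TN={tn} "
          f"precision={p:.3f} recall={r:.3f} F1={f1:.3f}")
# first line printed: class 0: TP=5 FP=2 FN=1 TN=11 precision=0.714 recall=0.833 F1=0.769
```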

4.4. Training and Validation Loss

4.5. Advanced Optimization Strategies

4.5.1. Optimizer

- $\theta_t$ represents the parameters at time step $t$,

- $\eta$ is the learning rate,

- $\hat{m}_t$ and $\hat{v}_t$ are the bias-corrected first and second moment estimates,

- $\epsilon$ is a small constant for numerical stability,

- $\lambda = 0.01$ is the weight decay coefficient.
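These quantities match an AdamW-style update (Adam with decoupled weight decay). Assuming that optimizer, one step is $m_t = \beta_1 m_{t-1} + (1-\beta_1) g_t$, $v_t = \beta_2 v_{t-1} + (1-\beta_2) g_t^2$, $\hat{m}_t = m_t/(1-\beta_1^t)$, $\hat{v}_t = v_t/(1-\beta_2^t)$ and $\theta_t = \theta_{t-1} - \eta\,\big(\hat{m}_t/(\sqrt{\hat{v}_t}+\epsilon) + \lambda\,\theta_{t-1}\big)$. In the NumPy sketch below only $\lambda = 0.01$ comes from the text; $\eta = 10^{-3}$, $\beta_1 = 0.9$, $\beta_2 = 0.999$ and $\epsilon = 10^{-8}$ are assumed common defaults, and the toy objective is hypothetical.

```python
import numpy as np

def adamw_step(theta, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999,
               eps=1e-8, weight_decay=0.01):
    """One AdamW update (Adam with decoupled weight decay); t starts at 1."""
    m = beta1 * m + (1 - beta1) * grad            # first moment estimate
    v = beta2 * v + (1 - beta2) * grad ** 2       # second moment estimate
    m_hat = m / (1 - beta1 ** t)                  # bias corrections
    v_hat = v / (1 - beta2 ** t)
    # Decoupled decay: lambda * theta is added to the step directly,
    # instead of being folded into the gradient as in L2-regularised Adam.
    theta = theta - lr * (m_hat / (np.sqrt(v_hat) + eps) + weight_decay * theta)
    return theta, m, v

# Toy problem: minimise f(theta) = theta^2, whose gradient is 2 * theta
theta = np.array([1.0])
m = np.zeros_like(theta)
v = np.zeros_like(theta)
for t in range(1, 501):
    theta, m, v = adamw_step(theta, 2 * theta, m, v, t, lr=1e-2)
print(theta)
```

Keeping the decay term outside the adaptive ratio means every weight shrinks by the same factor $\eta\lambda$ per step, independent of its gradient history.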

4.5.2. Cost Function

- $N$ is the number of classes,

- $y_i$ is the ground truth label for class $i$,

- $\hat{y}_i$ is the predicted probability for class $i$,

- $\gamma$ is the focusing parameter that adjusts the rate at which easy examples are down-weighted.
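Taken together, these terms describe a categorical focal loss, $\mathrm{FL} = -\sum_{i=1}^{N} y_i\,(1-\hat{y}_i)^{\gamma}\log \hat{y}_i$, where $y_i$ is the one-hot label and $\hat{y}_i$ the predicted probability; setting $\gamma = 0$ recovers ordinary categorical cross-entropy. The NumPy sketch below omits the optional class-weighting factor $\alpha$ and uses $\gamma = 2$, the value reported to work well in the original focal loss paper; the value used in this work is not stated, so this is an assumption.

```python
import numpy as np

def categorical_focal_loss(y_true, y_prob, gamma=2.0, eps=1e-7):
    """Mean categorical focal loss over a batch.

    y_true: (B, N) one-hot labels; y_prob: (B, N) predicted class probabilities.
    gamma = 0 gives plain categorical cross-entropy.
    """
    p = np.clip(y_prob, eps, 1.0 - eps)                     # avoid log(0)
    per_class = -y_true * (1.0 - p) ** gamma * np.log(p)    # only the true class contributes
    return float(per_class.sum(axis=1).mean())

y_true = np.array([[0, 1, 0, 0, 0]])                        # one-hot label for class index 1
y_prob = np.array([[0.1, 0.6, 0.1, 0.1, 0.1]])              # predicted probabilities
ce = categorical_focal_loss(y_true, y_prob, gamma=0.0)      # cross-entropy: -log(0.6)
fl = categorical_focal_loss(y_true, y_prob, gamma=2.0)      # scaled by (1 - 0.6)^2 = 0.16
print(round(ce, 4), round(fl, 4))  # 0.5108 0.0817
```

A confidently correct prediction ($\hat{y}_i$ close to 1) has its loss scaled towards zero, so training concentrates on hard, misclassified images.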

4.5.3. Learning Rate Adjustment
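The adjustment rule is not described here. One common choice, sketched below in plain Python under that assumption, multiplies the learning rate by a fixed factor whenever the validation loss has not improved for a given number of epochs; it is similar in spirit to the `ReduceLROnPlateau` schedulers in Keras and PyTorch. The class name and the `factor`, `patience` and `min_lr` values are hypothetical.

```python
class ReduceOnPlateau:
    """Multiply the learning rate by `factor` when the validation loss
    has not improved for `patience` consecutive epochs."""

    def __init__(self, lr, factor=0.5, patience=3, min_lr=1e-6):
        self.lr, self.factor, self.patience, self.min_lr = lr, factor, patience, min_lr
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        if val_loss < self.best:          # improvement: remember it and reset the counter
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
            if self.bad_epochs >= self.patience:
                self.lr = max(self.lr * self.factor, self.min_lr)
                self.bad_epochs = 0       # wait another `patience` epochs before reducing again
        return self.lr

sched = ReduceOnPlateau(1e-3, factor=0.5, patience=2)
val_losses = [1.0, 0.9, 0.95, 0.96, 0.97, 0.8]     # hypothetical validation losses
lrs = [sched.step(loss) for loss in val_losses]
print(lrs)
```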

4.5.4. Regularization Techniques

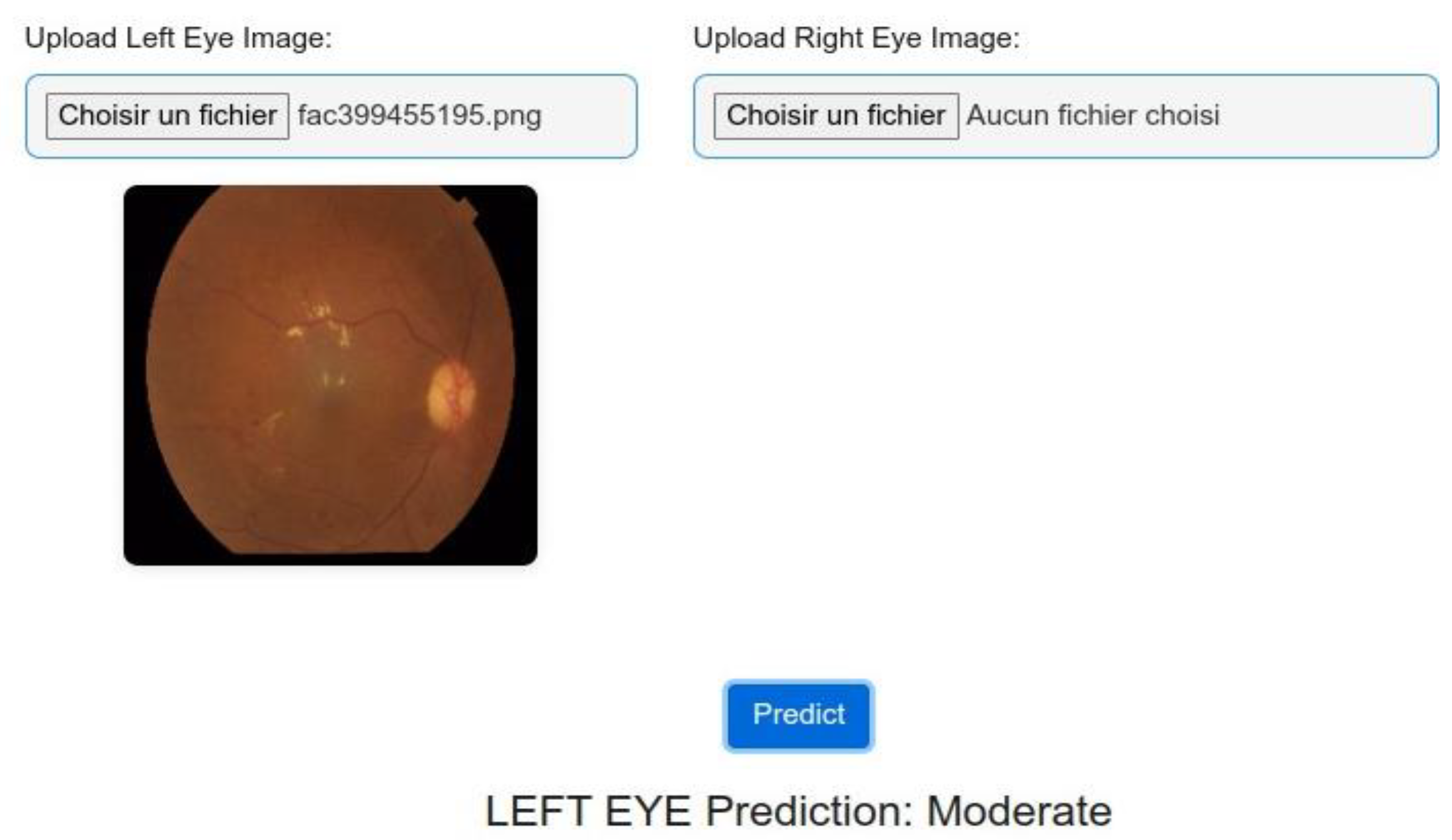

4.6. Results Overview

4.7. Comparative Study of Results

5. Conclusions

6. Future Research

Additional Perspective

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Bidwai, P., Gite, S., Pahuja, K., & Kotecha, K. (2022). A Systematic Literature Review on Diabetic Retinopathy Using an Artificial Intelligence Approach. Big Data and Cognitive Computing, 6, 152.

- Subramanian, S., Mishra, S., Patil, S., Shaw, K., & Aghajari, E. (2022). Machine Learning Styles for Diabetic Retinopathy Detection: A Review and Bibliometric Analysis. Big Data and Cognitive Computing, 6, 154.

- S. et al. (2023). Transformers in medical imaging: A survey. Medical Image Analysis.

- Sheikh, S., & Qidwai, U. (2020). Using MobileNetV2 to Classify the Severity of Diabetic Retinopathy. International Journal of Simulation: Systems, Science & Technology, 21(2).

- Gao, J., Leung, C., & Miao, C. (2019). Diabetic Retinopathy Classification Using an Efficient Convolutional Neural Network. In 2019 IEEE International Conference on Agents (ICA).

- Wang, S., Wang, X., Hu, Y., Shen, Y., Yang, Z., Gan, M., & Lei, B. (2020). Diabetic retinopathy diagnosis using multichannel generative adversarial network with semisupervision. IEEE Transactions on Automation Science and Engineering, 18(2), 574-585.

- Touati, M., Nana, L., & Benzarti, F. (2023). A Deep Learning Model for Diabetic Retinopathy Classification. In: Motahhir, S., Bossoufi, B. (eds) Digital Technologies and Applications. ICDTA 2023. Lecture Notes in Networks and Systems, vol 669. Springer, Cham.

- Yaqoob, M. K., Ali, S. F., Bilal, M., Hanif, M. S., & Al-Saggaf, U. M. (2021). ResNet based deep features and random forest classifier for diabetic retinopathy detection. Sensors, 21(11), 3883.

- Dharmana, M. M., & Aiswarya, M. S. (2020, July). Pre-diagnosis of Diabetic Retinopathy using Blob Detection. In 2020 Second International Conference on Inventive Research in Computing Applications (ICIRCA) (pp. 98-101). IEEE.

- Toledo-Cortés, S., De La Pava, M., Perdomo, O., & González, F. A. (2020, October). Hybrid Deep Learning Gaussian Process for Diabetic Retinopathy Diagnosis and Uncertainty Quantification. In International Workshop on Ophthalmic Medical Image Analysis (pp. 206-215). Springer, Cham.

- Wang, Z., Yin, Y., Shi, J., Fang, W., Li, H., & Wang, X. (2017). Zoom-in-net: Deep mining lesions for diabetic retinopathy detection. In Medical Image Computing and Computer Assisted Intervention - MICCAI 2017: 20th International Conference, Quebec City, QC, Canada, September 11-13, 2017, Proceedings, Part III (pp. 267-275). Springer International Publishing.

- Vo, H. H., & Verma, A. (2016, December). New deep neural nets for fine-grained diabetic retinopathy recognition on hybrid color space. In 2016 IEEE International Symposium on Multimedia (ISM) (pp. 209-215). IEEE.

- Touati, M., Nana, L., & Benzarti, F. (2024). Enhancing diabetic retinopathy classification: A fusion of ResNet50 with attention mechanism. In 10th International Conference on Control, Decision and Information Technologies (CoDIT).

- Vani, K. S., Praneeth, P., Kommareddy, V., Kumar, P. R., Sarath, M., Hussain, S., & Ravikiran, P. (2024). An Enhancing Diabetic Retinopathy Classification and Segmentation based on TaNet. Nano Biomedicine & Engineering, 16(1).

- Alwakid, G., Gouda, W., Humayun, M., & Jhanjhi, N. Z. (2023). Enhancing diabetic retinopathy classification using deep learning. Digital Health, 9, 20552076231203676.

- Al-Hammuri, K., Gebali, F., Kanan, A., & Chelvan, I. T. (2023). Vision transformer architecture and applications in digital health: a tutorial and survey. Visual Computing for Industry, Biomedicine, and Art, 6(1), 14.

- Nazih, W., Aseeri, A. O., Atallah, O. Y., & El-Sappagh, S. (2023). Vision transformer model for predicting the severity of diabetic retinopathy in fundus photography-based retina images. IEEE Access, 11, 117546-117561.

- Khan, I. U., Raiaan, M. A. K., Fatema, K., Azam, S., Rashid, R. U., Mukta, S. H., ... & De Boer, F. (2023). A computer-aided diagnostic system to identify diabetic retinopathy, utilizing a modified compact convolutional transformer and low-resolution images to reduce computation time. Biomedicines, 11(6), 1566.

- Berbar, M. (2022). Features extraction using encoded local binary pattern for detection and grading diabetic retinopathy.

- Bashir, I., Sajid, M. Z., Kalsoom, R., Ali Khan, N., Qureshi, I., Abbas, F., & Abbas, Q. (2023). RDS-DR: An Improved Deep Learning Model for Classifying Severity Levels of Diabetic Retinopathy. Diagnostics, 13(19), 3116.

- Y. R. et al. (2022). Diabetic Retinopathy Classification Using CNN and Hybrid Deep Convolutional Neural Networks.

- N. G. et al. (2023). Evaluation of artificial intelligence techniques in disease diagnosis and prediction. https://link.springer.com/article/10.1007/s44163-023-00049-5?fromPaywallRec=true

- Y. Y. et al. (2023). Vision transformer with masked autoencoders for referable diabetic retinopathy classification based on large-size retina image.

- Karthikeyan, N. S. R. (2021). Diabetic Retinopathy Detection using CNN, Transformer and MLP based Architectures. https://ieeexplore.ieee.org/abstract/document/9651024

- J. W. et al. (2021). Vision Transformer-based recognition of diabetic retinopathy grade.

- A. D. et al. (2020). An image is worth 16x16 words: Transformers for image recognition at scale.

- A.-H. et al. (2023). Vision transformer architecture and applications in digital health: a tutorial and survey.

- I. et al. (2022). Recent advances in vision transformer: A survey and outlook of recent work.

- H. et al. (2021). Escaping the big data paradigm with compact transformers. https://www.researchgate.net/publication/350834450_Escaping_the_Big_Data_Paradigm_with_Compact_Transformers

| Aspect | CNNs | RNNs | ViTs |
|---|---|---|---|
| Architecture | Convolutional layers | Sequential recurrent layers | Transformer encoder with self-attention |
| Data Processing | Local patterns, spatial hierarchies | Sequential information | Dependencies, global integration |
| Feature Learning | Local features, sequential learning | Global features, entire sequence | Local integration into patches, global integration |
| Receptive Field | Local | Local (sequential) | Global |
| Feature Engineering | More manual, learns from data | More manual, learns from data | Less manual, learns from data |
| Scalability | Average | Low (sequential processing) | High (parallel processing) |

| Class | Training set | Test set |
|---|---|---|
| No DR | 2192 | 549 |
| Mild | 592 | 148 |
| Moderate | 1518 | 380 |
| Proliferative DR | 472 | 118 |
| Severe | 284 | 72 |

| Metric | No DR | Mild | Moderate | Severe | Proliferative DR | Micro Avg | Macro Avg | Weighted Avg | Samples Avg |
|---|---|---|---|---|---|---|---|---|---|
| Precision | 0.99 | 0.96 | 0.98 | 0.96 | 0.97 | 0.97 | 0.97 | 0.97 | 0.97 |
| Recall | 0.97 | 0.99 | 0.92 | 1.00 | 0.97 | 0.97 | 0.97 | 0.97 | 0.97 |
| F1-Score | 0.98 | 0.98 | 0.95 | 0.98 | 0.97 | 0.97 | 0.97 | 0.97 | 0.97 |
| Support | 549 | 604 | 545 | 555 | 552 | 2805 | 2805 | 2805 | 2805 |
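As a consistency check, the macro and weighted averages can be recomputed from the per-class values: the macro average is the unweighted mean over the five classes, while the weighted average weights each class by its support. The sketch below uses the rounded per-class recall values (0.97, 0.99, 0.92, 1.00, 0.97) and supports from the metrics table, so it can only reproduce the reported averages to two decimals.

```python
# Per-class recall and support from the metrics table
# (order: No DR, Mild, Moderate, Severe, Proliferative DR).
recall = [0.97, 0.99, 0.92, 1.00, 0.97]
support = [549, 604, 545, 555, 552]

macro_recall = sum(recall) / len(recall)                                      # unweighted mean
weighted_recall = sum(r * s for r, s in zip(recall, support)) / sum(support)  # support-weighted mean

print(f"macro recall    = {macro_recall:.4f}")     # 0.9700
print(f"weighted recall = {weighted_recall:.4f}")  # 0.9705
```

Both values round to the 0.97 reported in the table. Because the supports are nearly equal (545 to 604), the macro and weighted averages differ only in the fourth decimal.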

| Authors | Method | Performance | Our Model |
|---|---|---|---|
| Sheikh & Qidwai (2020) | Transfer learning of MobileNetV2 | 90.8% DR, 92.3% RDR | Likely superior, F1-score: 0.97 |
| Gao et al. (2019) | DL/Efficient CNN | Accuracy: 90.5% | Better |
| Yaqoob et al. (2021) | ResNet-50 with a Random Forest classifier | 96% on Messidor-2, 75.09% on EyePACS | Better than EyePACS, comparable to Messidor-2 |
| Dharmana & Aiswarya (2020) | Blob detection and Naïve Bayes | Accuracy: 83% | Significantly better |
| Wang, J. et al. (2020) | Deep Convolutional Neural Networks | Kappa: 0.8083 | Likely superior based on F1-score |
| Toledo-Cortés et al. (2020) | Deep Learning/DLGP-DR, Inception-V3 | Sensitivity: 93.23%, Specificity: 91.73%, AUC: 0.9769 | Comparable, slightly better F1-score |
| Wang, S. et al. (2020) | Deep Learning/GAN discriminative model | Accuracy: EyePACS 86.13%, Messidor 84.23%, Messidor-2 80.46% | Superior performance across metrics |
| Touati et al. (2023) | Xception pretrained model | Training accuracy: 94%, Test accuracy: 89%, F1-score: 0.94 | Better F1-score: 0.97 |
| Toledo-Cortés et al. (2020) | Deep Learning/DLGP-DR, Inception-V3 | Sensitivity: 93.23%, Specificity: 91.73%, AUC: 0.9769 | Better in sensitivity and specificity |
| Wang, Z. et al. (2017) | Deep Learning/CNN + Attention Network | AUC: 0.921, Accuracy: 0.905 (normal/abnormal) | Likely superior based on metrics |
| Khan et al. (2023) | Compact Convolutional Transformer | Accuracy: 90.17% | Significantly better, likely 97% accuracy |
| Berbar (2022) | Residual-Dense System | 97% in classifying DR severity | Comparable or slightly better |
| Nazih et al. (2023) | ViT CNN | F1-score: 0.825, Accuracy: 0.825, Balanced accuracy: 0.826, AUC: 0.964, Precision: 0.825, Recall: 0.825, Specificity: 0.956 | Significantly better, F1-score: 0.97 |
| Bashir et al. (2023) | Residual Block + CNN | Accuracy: 97.5% | Comparable, accuracy likely around 97% |
| Yasashvini R. et al. (2022) | CNN, hybrid CNN with ResNet, and hybrid CNN with DenseNet | Accuracy: 96.22%, 93.18%, and 75.61%, respectively | Better |
| Yaoming Yang et al. (2024) | Vision Transformer (ViT) combined with Masked Autoencoders (MAE) | Accuracy: 93.42%, AUC: 0.9853, Sensitivity: 0.973, Specificity: 0.9539 | Slightly better F1-score |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).