Submitted: 12 July 2024

Posted: 15 July 2024


Abstract

Keywords:

1. Introduction

2. Background

2.1. Literature Review

3. Technical Approaches

3.1. Data Collection

3.2. Environmental Setup

3.3. Model Architecture

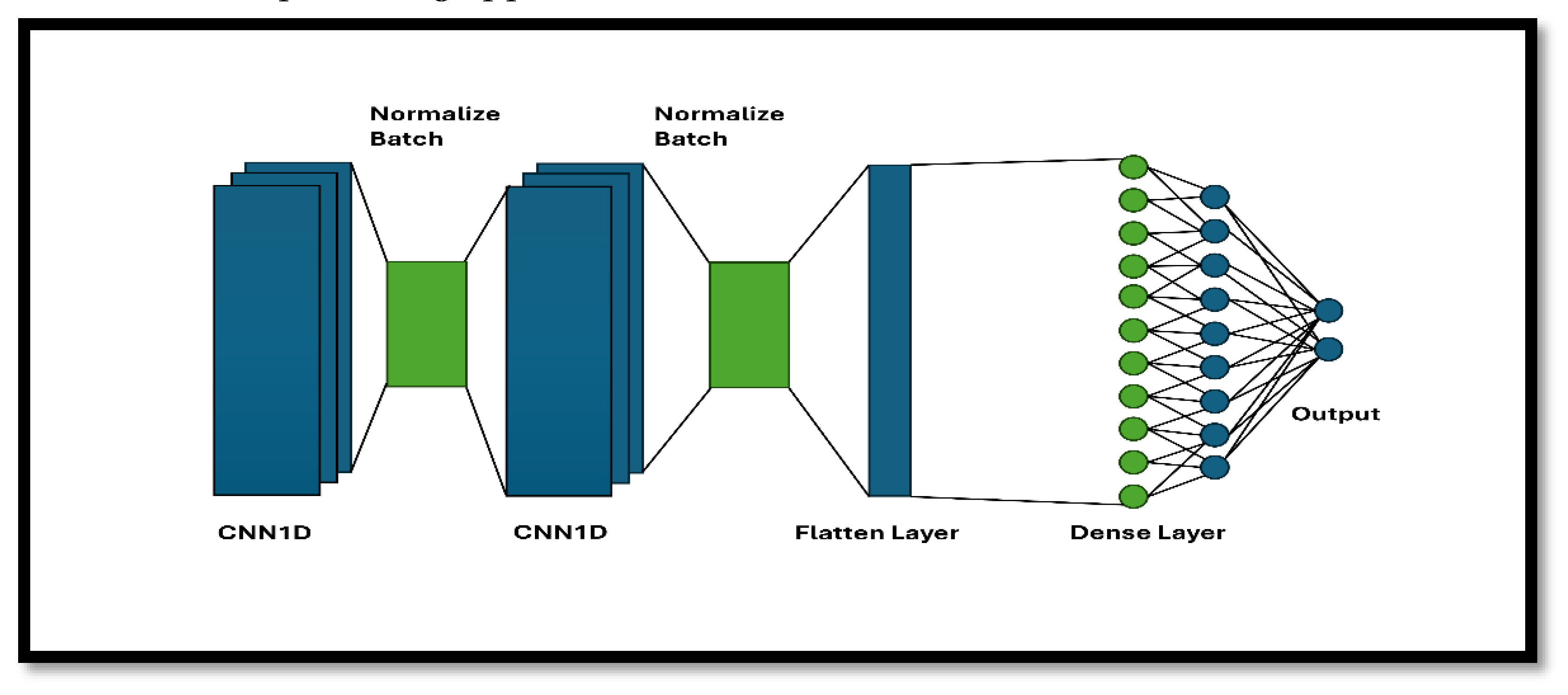

- CNN1D Model Architecture:

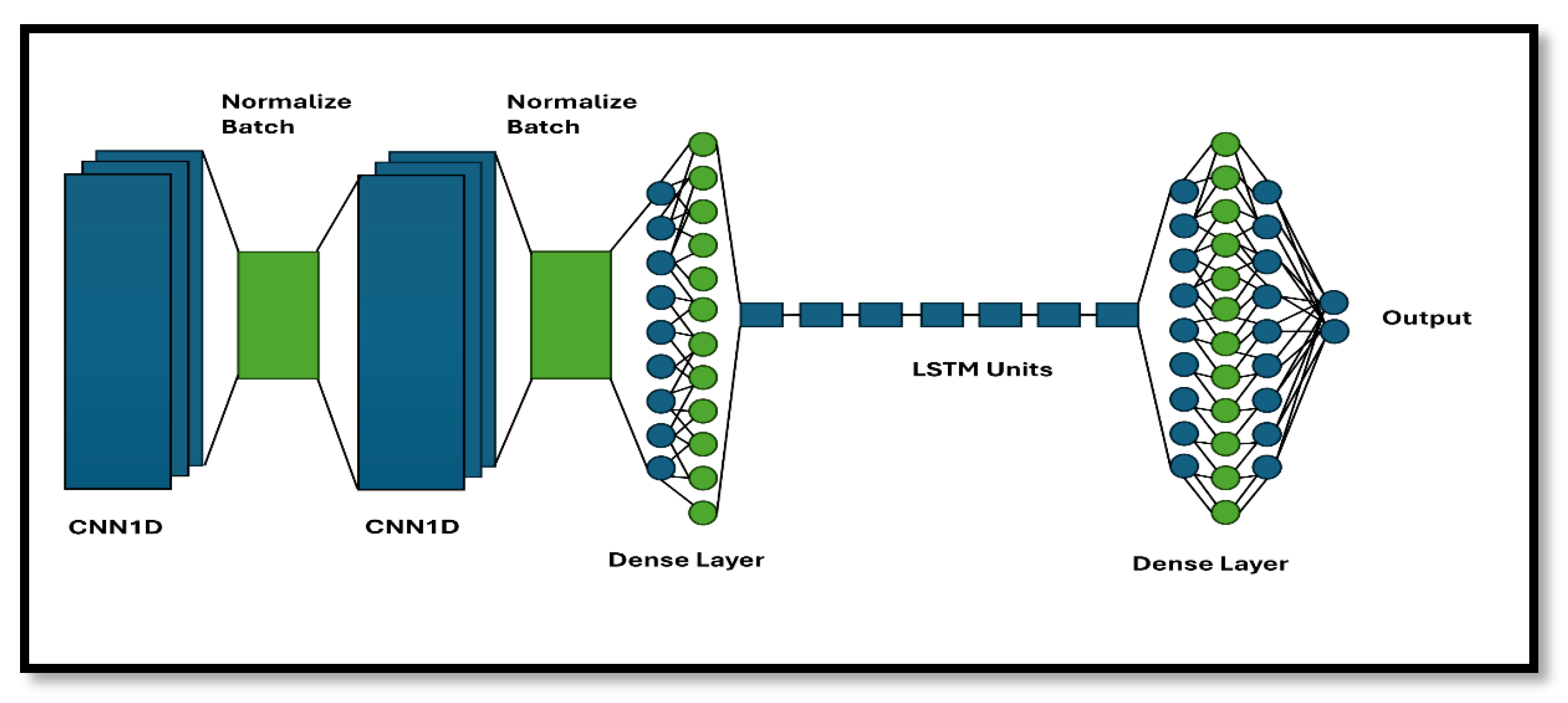

- CNN1D with LSTM Model Architecture:

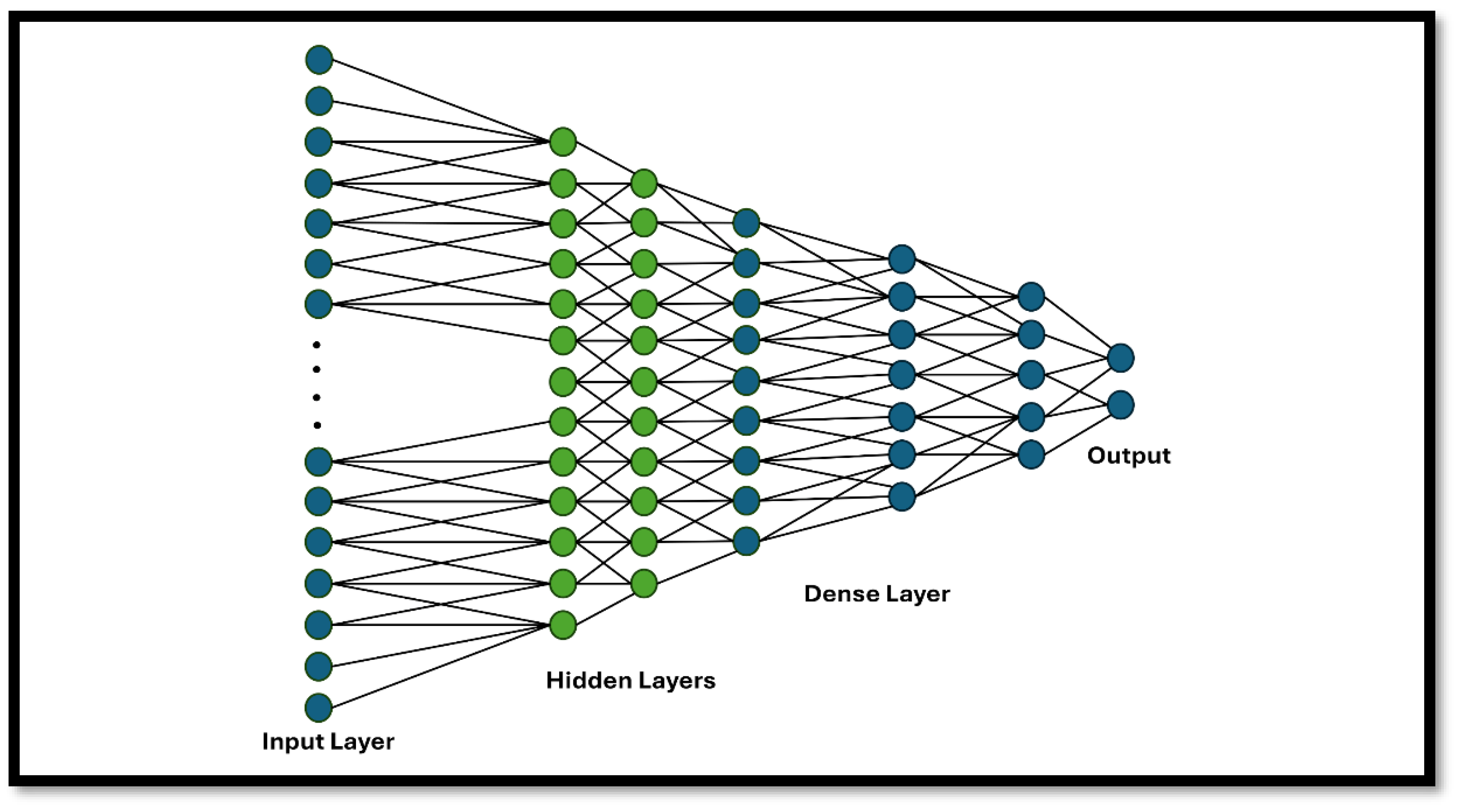

- Neural Networks Model Architecture:
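The architecture diagrams above are not reproduced in text, so the exact layer configuration cannot be recovered here. As a rough illustration of what the convolutional stage of a CNN1D model computes, the following is a minimal pure-Python sketch. It assumes the input is a flat numeric feature vector. The function names, the toy input, and the filter weights are hypothetical and are not the authors' model. In the CNN1D + LSTM variant, the feature maps produced by layers like these would typically be passed to an LSTM layer before the final classifier.

```python
from typing import List


def conv1d(x: List[float], kernel: List[float], bias: float = 0.0, stride: int = 1) -> List[float]:
    """'Valid' 1D convolution (no padding), computed as cross-correlation,
    which is what deep-learning Conv1D layers actually evaluate."""
    k = len(kernel)
    if k == 0 or k > len(x) or stride < 1:
        raise ValueError("need 0 < len(kernel) <= len(x) and stride >= 1")
    out = []
    for start in range(0, len(x) - k + 1, stride):
        window = x[start:start + k]
        # Dot product of the filter with the current window, plus bias.
        out.append(sum(w * v for w, v in zip(kernel, window)) + bias)
    return out


def relu(values: List[float]) -> List[float]:
    # Element-wise ReLU activation.
    return [max(0.0, v) for v in values]


def max_pool1d(values: List[float], size: int = 2) -> List[float]:
    # Non-overlapping max pooling; an incomplete trailing window is dropped.
    return [max(values[i:i + size]) for i in range(0, len(values) - size + 1, size)]


if __name__ == "__main__":
    # Toy input standing in for a (hypothetical) numeric PE feature vector.
    features = [1.0, 3.0, 2.0, 5.0, 4.0]
    kernel = [1.0, -1.0]  # hypothetical learned filter weights
    feature_map = relu(conv1d(features, kernel))
    print(feature_map)              # [0.0, 1.0, 0.0, 1.0]
    print(max_pool1d(feature_map))  # [1.0, 1.0]
```

A real model would learn many such filters in parallel using a framework such as TensorFlow/Keras or PyTorch. The explicit loop is only meant to show the sliding-window arithmetic.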

4. Experimental Results

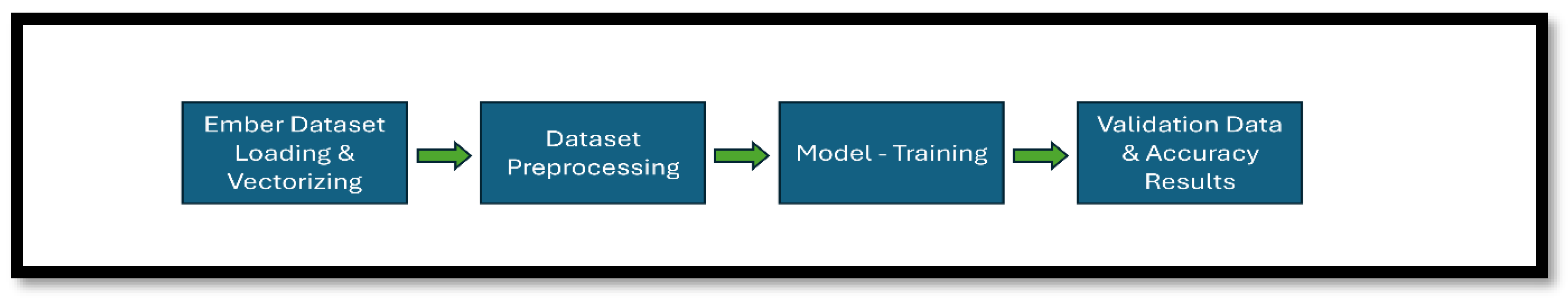

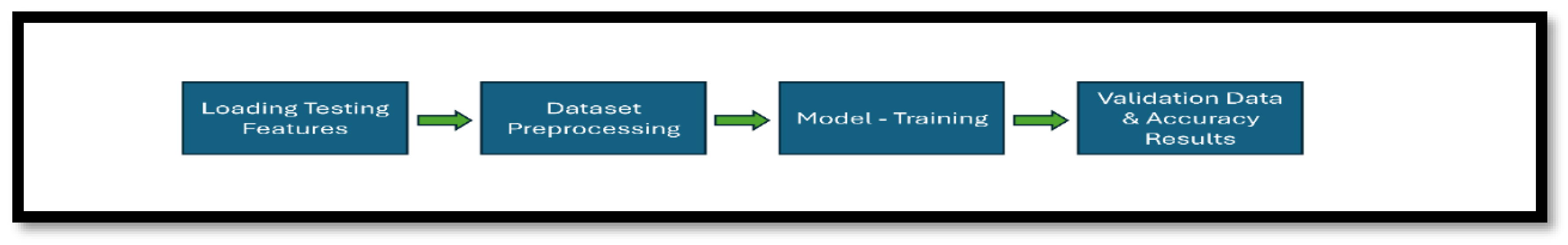

4.1. Implementation

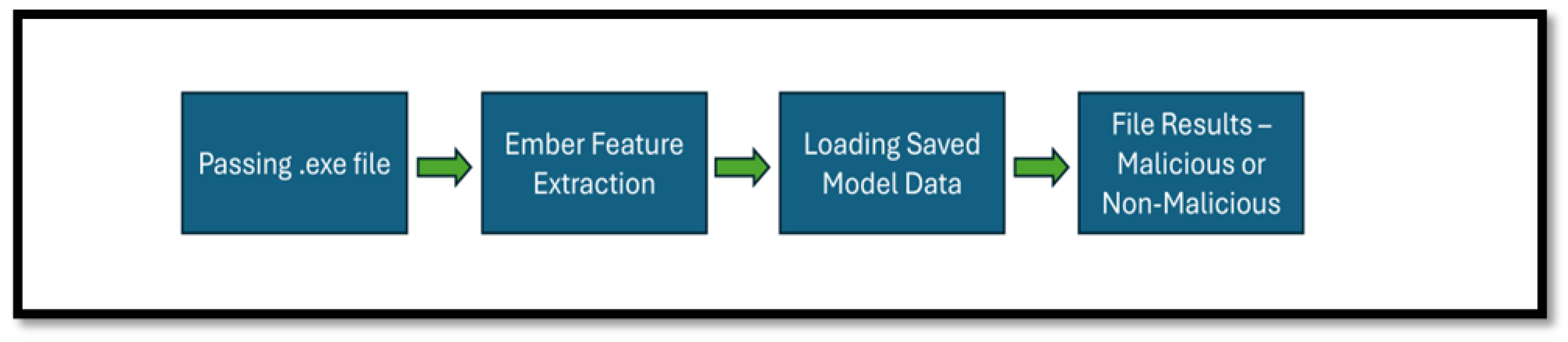

- Making predictions with the model using the raw properties extracted from the PE file (see Appendix B, Figure A3).
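This section does not list the exact raw properties the model uses. As a hedged illustration of what extracting raw properties from a PE file can look like, the sketch below reads a few header fields with Python's standard `struct` module, following the published PE layout: the `MZ` DOS header, the `e_lfanew` pointer at offset `0x3C`, the `PE\0\0` signature, and the 20-byte COFF file header. The function name and the choice of fields are assumptions for illustration. A full feature extractor would parse far more of the file, typically with a dedicated library such as `pefile`.

```python
import struct
from typing import Dict


def extract_pe_header_fields(data: bytes) -> Dict[str, int]:
    """Read a few raw COFF/optional-header fields from the bytes of a PE file.
    Hypothetical helper for illustration; not the authors' feature extractor."""
    if len(data) < 0x40 or data[:2] != b"MZ":
        raise ValueError("not a PE file: missing 'MZ' DOS signature")
    # e_lfanew, at offset 0x3C of the DOS header, gives the offset of "PE\0\0".
    (pe_offset,) = struct.unpack_from("<I", data, 0x3C)
    if data[pe_offset:pe_offset + 4] != b"PE\x00\x00":
        raise ValueError("not a PE file: missing 'PE\\0\\0' signature")
    # The 20-byte COFF file header immediately follows the 4-byte signature:
    # Machine, NumberOfSections, TimeDateStamp, PointerToSymbolTable,
    # NumberOfSymbols, SizeOfOptionalHeader, Characteristics.
    (machine, num_sections, timestamp, _symtab_ptr, _num_symbols,
     opt_header_size, characteristics) = struct.unpack_from("<HHIIIHH", data, pe_offset + 4)
    fields = {
        "machine": machine,
        "number_of_sections": num_sections,
        "time_date_stamp": timestamp,
        "size_of_optional_header": opt_header_size,
        "characteristics": characteristics,
    }
    if opt_header_size >= 2:
        # First field of the optional header: 0x10B = PE32, 0x20B = PE32+.
        fields["optional_header_magic"] = struct.unpack_from("<H", data, pe_offset + 24)[0]
    return fields
```

For a file on disk, `extract_pe_header_fields(open(path, "rb").read())` returns a dictionary of integers. These values would then need to be vectorized the same way as the training features before being passed to the model.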

4.2. Results

4.3. Improvement Techniques

- Hardware Optimization: Hardware accelerators such as GPUs and TPUs can dramatically cut training times. For example, the CNN1D model trained in 35 min 27 s on the CPU but in only 1 min 14 s on the TPU.

- Algorithm Optimization: Fine-tuning hyperparameters, optimizing network designs, and using advanced optimization techniques can all increase model performance.

- Data Augmentation: Increasing the diversity and volume of the training data, for example by adding modified variants of existing samples, can improve model generalization and accuracy.

- Ensemble Learning: Combining predictions from several models, or from variants of the same model, may increase overall performance and robustness.

- Transfer Learning: Using models or features pre-trained on large datasets can speed up training and increase performance, particularly when training data is limited.

- Device-Specific Model Development: Separate models should be developed for the Raspberry Pi, CPU, GPU, and TPU, and their performance should remain consistent across all platforms.
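The text does not specify how ensemble predictions would be combined. One common option is soft voting, in which the malware probabilities from several models are averaged, optionally with weights. The sketch below assumes each model is a callable returning P(malware) in [0, 1]. The names `soft_vote` and `label` and the 0.5 threshold are illustrative assumptions, not part of the reported system.

```python
from typing import Callable, Optional, Sequence

# A "model" here is any callable mapping a feature vector to P(malware) in [0, 1].
Model = Callable[[Sequence[float]], float]


def soft_vote(models: Sequence[Model], features: Sequence[float],
              weights: Optional[Sequence[float]] = None) -> float:
    """Weighted average of the models' malware probabilities (soft voting)."""
    if not models:
        raise ValueError("need at least one model")
    if weights is None:
        weights = [1.0] * len(models)
    if len(weights) != len(models) or sum(weights) <= 0:
        raise ValueError("weights must match the models and sum to a positive value")
    total = sum(w * model(features) for w, model in zip(weights, models))
    return total / sum(weights)


def label(probability: float, threshold: float = 0.5) -> str:
    # Threshold the ensemble probability into a final verdict (assumed 0.5 cut-off).
    return "malware" if probability >= threshold else "benign"
```

With three stand-in models returning 0.9, 0.6 and 0.3, `soft_vote` gives about 0.6, and `label` returns `"malware"`. Weights let a more reliable model, for example one with higher validation accuracy, carry more influence in the vote.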

4.4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

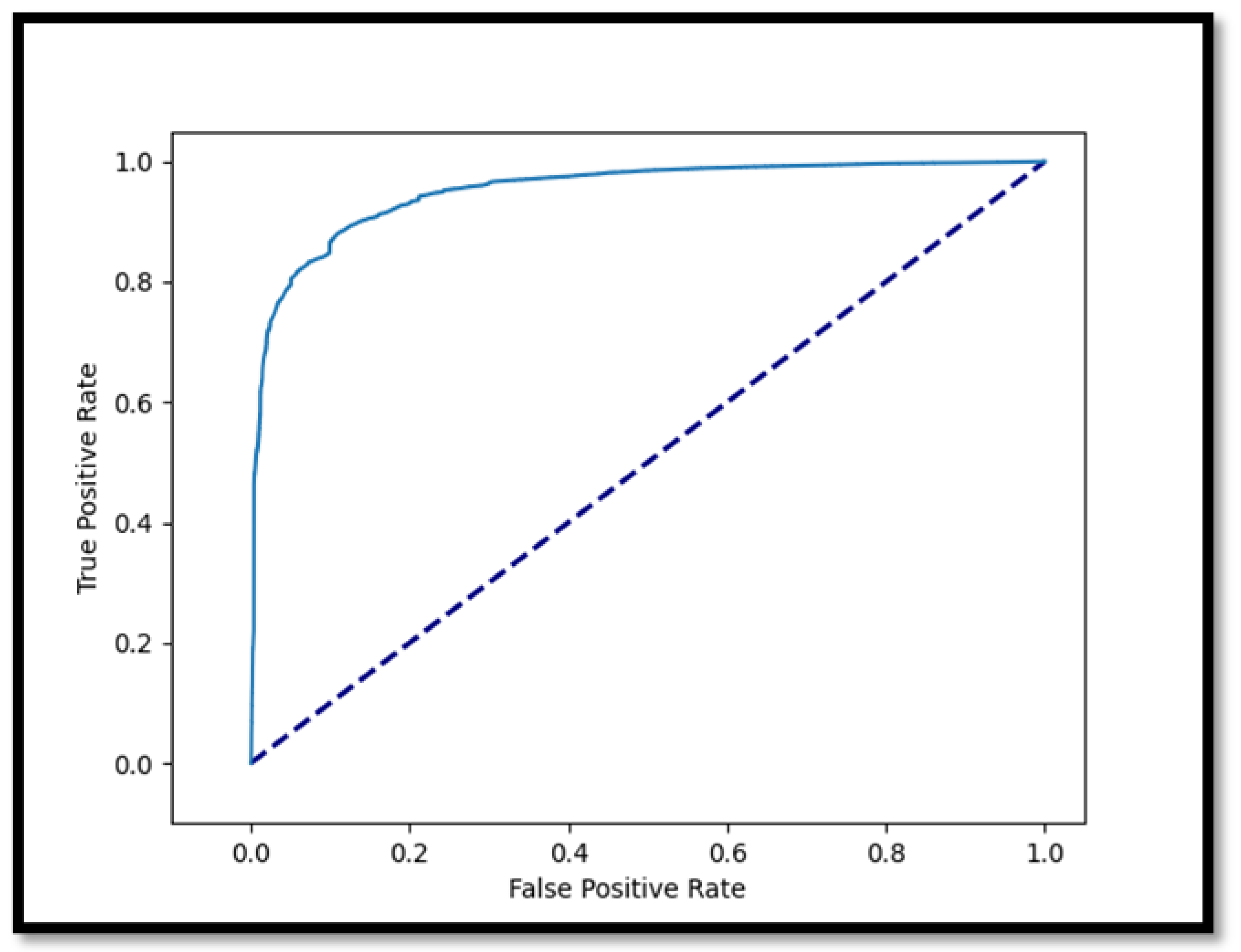

Appendix A

- ROC Curve of CNN1D:

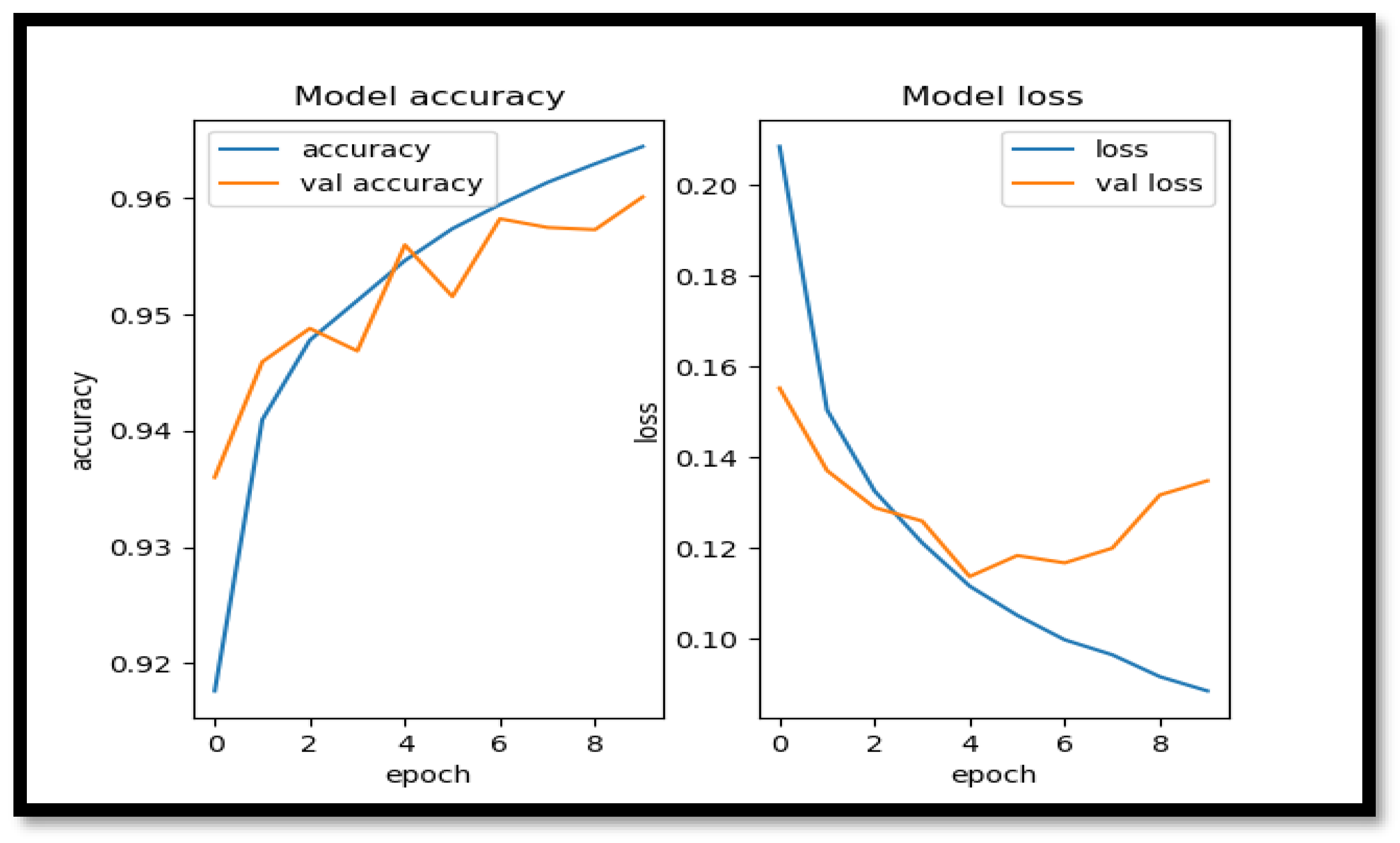

- Accuracy Results of CNN1D:

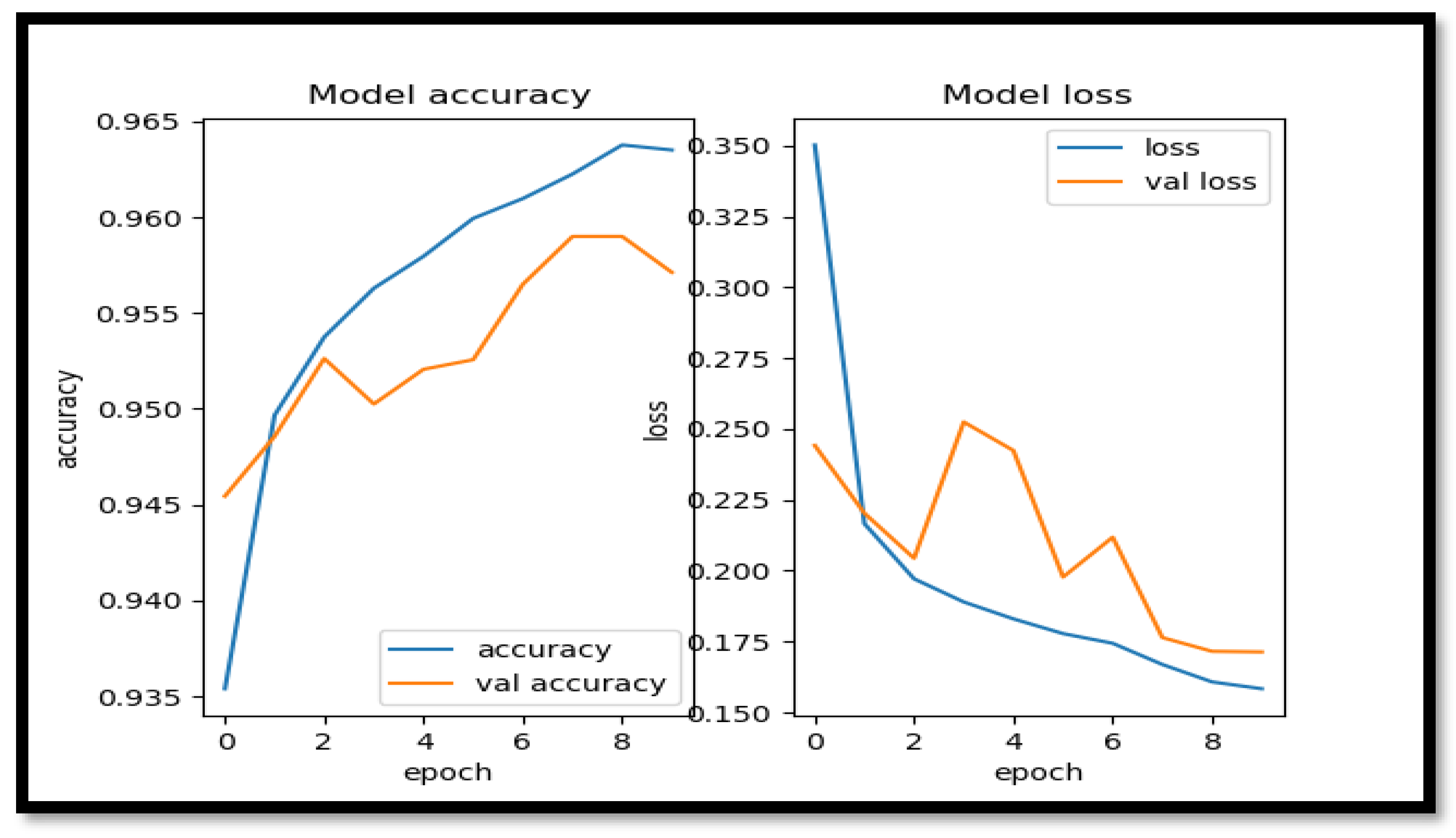

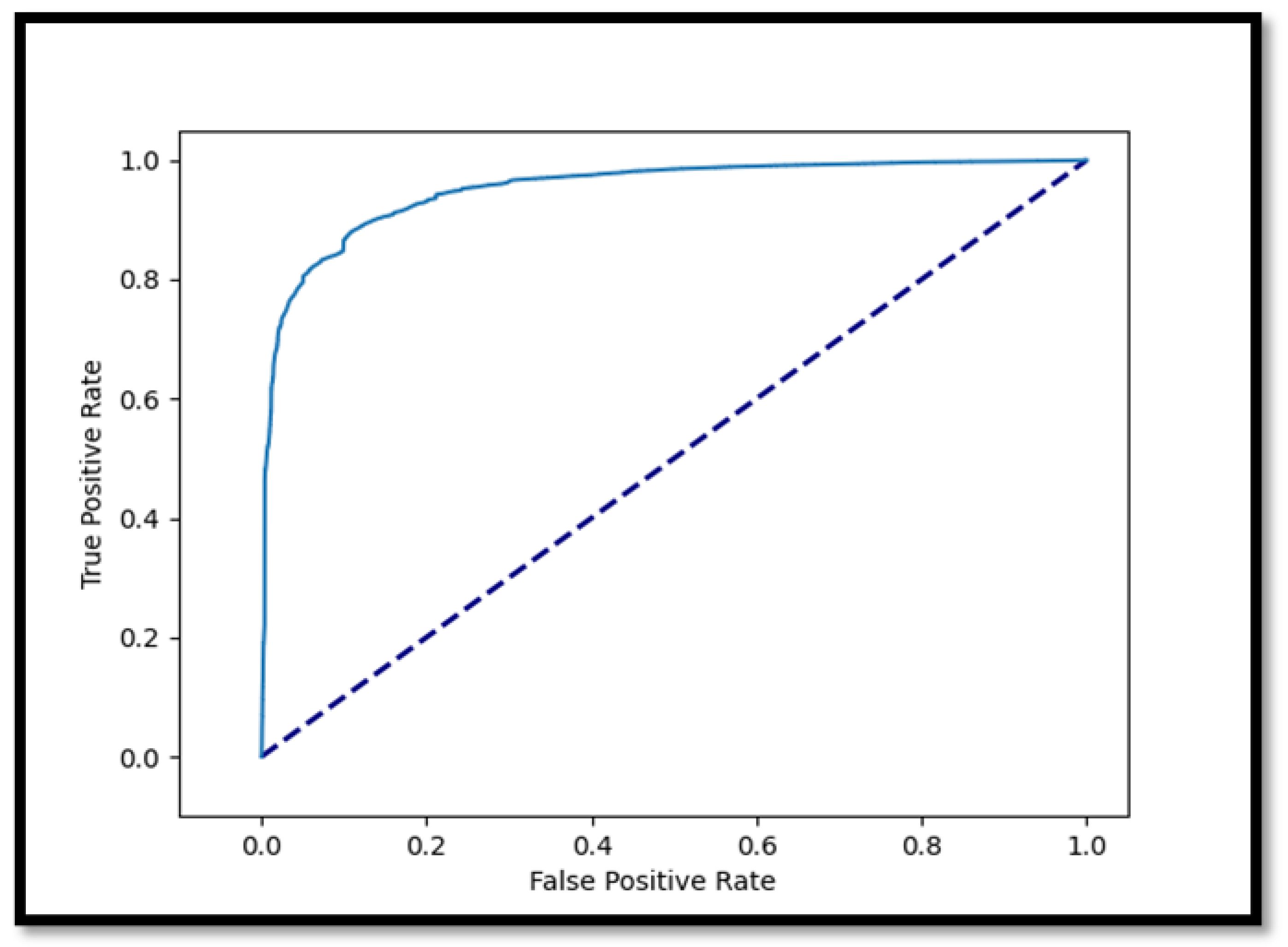

- ROC Curve of CNN1D-LSTM:

- Accuracy Results of CNN1D-LSTM:

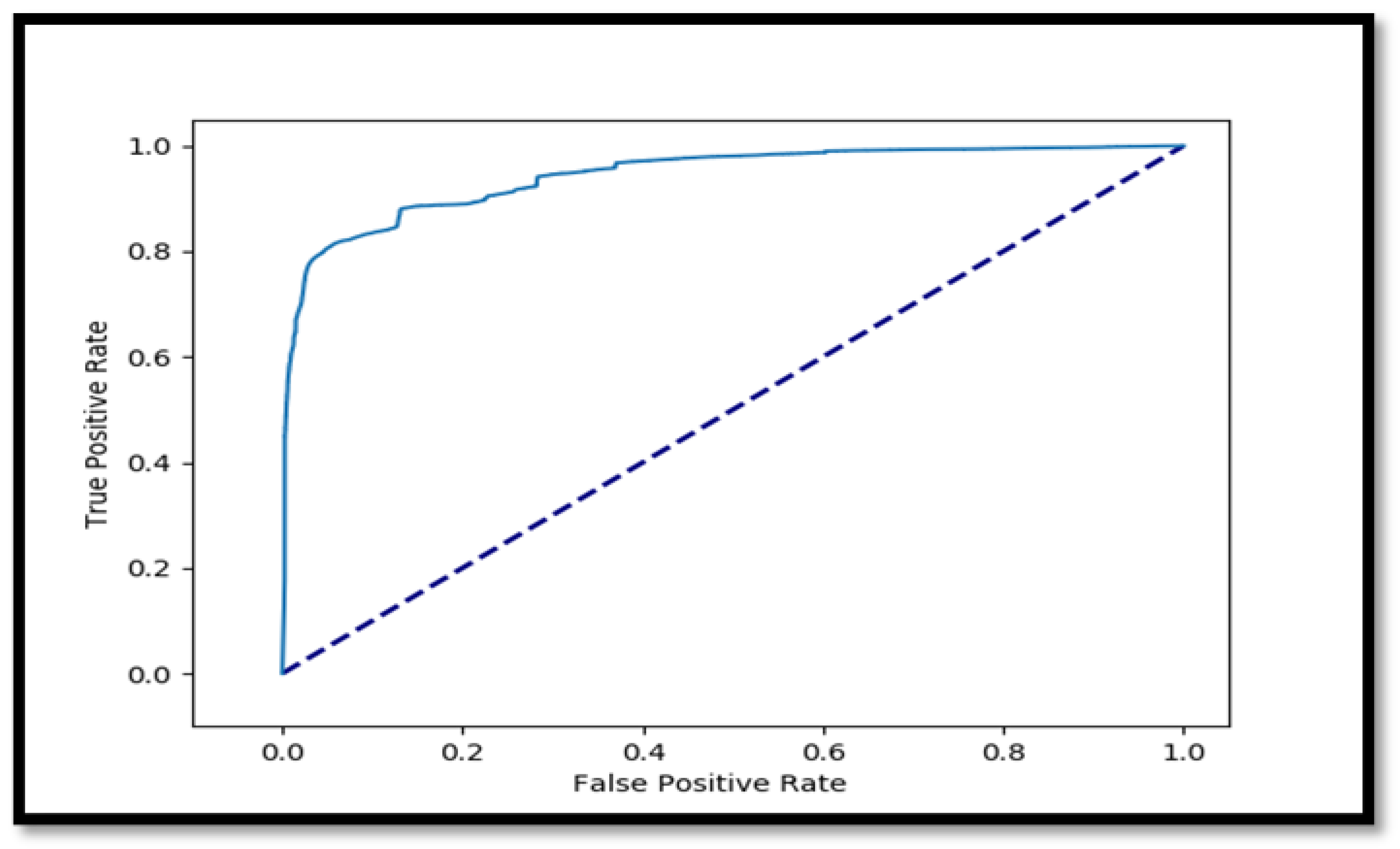

- ROC Curve of NN:

- Accuracy Results of NN:
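Appendix A reports ROC curves for each model. For reference, the sketch below shows how ROC points and the area under the curve are commonly computed from a classifier's scores by sweeping the decision threshold. The labels (1 = malware, otherwise benign) and scores in the usage note are toy values, not results from this study. In practice, `sklearn.metrics.roc_curve` and `sklearn.metrics.roc_auc_score` provide equivalent and more efficient implementations.

```python
from typing import List, Sequence, Tuple


def roc_points(labels: Sequence[int], scores: Sequence[float]) -> List[Tuple[float, float]]:
    """(FPR, TPR) pairs obtained by sweeping the threshold over every distinct score.
    A sample counts as predicted-malware when its score >= the threshold."""
    positives = sum(1 for y in labels if y == 1)
    negatives = len(labels) - positives
    if positives == 0 or negatives == 0:
        raise ValueError("need both positive (1) and negative labels")
    points = [(0.0, 0.0)]
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(labels, scores) if y == 1 and s >= threshold)
        fp = sum(1 for y, s in zip(labels, scores) if y != 1 and s >= threshold)
        points.append((fp / negatives, tp / positives))
    return points


def auc_trapezoid(points: Sequence[Tuple[float, float]]) -> float:
    # Area under the piecewise-linear ROC curve (trapezoidal rule).
    return sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(points, points[1:]))
```

For labels `[1, 1, 0, 0]` and scores `[0.9, 0.4, 0.6, 0.1]`, `roc_points` yields `[(0.0, 0.0), (0.0, 0.5), (0.5, 0.5), (0.5, 1.0), (1.0, 1.0)]` and `auc_trapezoid` gives 0.75.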

Appendix B


| Model | CPU | GPU | TPU | Raspberry Pi | Epochs | Batch Size |
|---|---|---|---|---|---|---|
| NN | 3 min 24 s | 2 min 39 s | 1 min 17 s | 4 min 36 s | 10 | 64 |
| CNN1D | 35 min 27 s | 4 min 26 s | 1 min 14 s | 42 min 2 s | 10 | 64 |
| CNN1D + LSTM | 21 min 1 s | 2 min 27 s | 1 min 25 s | 29 min 20 s | 10 | 1000 |
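The speed-ups implied by the training-time table can be checked with a few lines of arithmetic. The sketch below converts each entry to seconds and divides the CPU time by the time on another device. The dictionary simply transcribes the table, and the helper names are illustrative.

```python
from typing import Dict, Tuple

# Training times transcribed from the table above, as (minutes, seconds).
TRAINING_TIMES: Dict[str, Dict[str, Tuple[int, int]]] = {
    "NN": {"CPU": (3, 24), "GPU": (2, 39), "TPU": (1, 17), "Raspberry Pi": (4, 36)},
    "CNN1D": {"CPU": (35, 27), "GPU": (4, 26), "TPU": (1, 14), "Raspberry Pi": (42, 2)},
    "CNN1D + LSTM": {"CPU": (21, 1), "GPU": (2, 27), "TPU": (1, 25), "Raspberry Pi": (29, 20)},
}


def to_seconds(minutes: int, seconds: int) -> int:
    return minutes * 60 + seconds


def speedup(model: str, device: str, baseline: str = "CPU") -> float:
    """How many times faster `device` trained `model` than `baseline` did."""
    times = TRAINING_TIMES[model]
    return to_seconds(*times[baseline]) / to_seconds(*times[device])


if __name__ == "__main__":
    for model in TRAINING_TIMES:
        for device in ("GPU", "TPU", "Raspberry Pi"):
            print(f"{model:>13} on {device:<12}: {speedup(model, device):5.2f}x vs CPU")
```

For example, CNN1D trained in 2127 s on the CPU and 74 s on the TPU, a speed-up of about 28.7×. The NN gained only about 2.65× (204 s vs 77 s). Values below 1, which all Raspberry Pi entries produce, indicate slower training than on the CPU. Because the CNN1D + LSTM runs used a batch size of 1000 rather than 64, training times are not directly comparable across models.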

| Device | Model | Accuracy | Validation Accuracy | Testing Accuracy (exe files) |
|---|---|---|---|---|
| CPU | NN | 99.25% | 98.82% | 0.9% |
| | CNN1D | 98.89% | 98.52% | 0.8% |
| | CNN1D + LSTM | 98.77% | 98.56% | 0.8% |
| GPU | NN | 99.87% | 98.79% | 0.9% |
| | CNN1D | 95.94% | 95.54% | 0.7% |
| | CNN1D + LSTM | 98.86% | 98.61% | 0.8% |
| TPU | NN | 99.91% | 98.12% | 0.9% |
| | CNN1D | 98.89% | 98.59% | 0.8% |
| | CNN1D + LSTM | 98.81% | 98.56% | 0.7% |
| Raspberry Pi | NN | 97.29% | 97.02% | 0.6% |
| | CNN1D | 96.95% | 95.21% | 0.5% |
| | CNN1D + LSTM | 96.14% | 95.23% | 0.6% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).