Submitted: 17 June 2024

Posted: 18 June 2024


Abstract

Keywords:

1. Introduction

1.1. An Initial Overview of AI Techniques for Digitalisation

- (1) One-dimensional inputs: sensor data from devices such as accelerometers, gyroscopes, and temperature sensors; audio data, including speech, music, and other recordings; and other time-series data.

- (2) Two-dimensional inputs: image data, including photographs, drawings, and spectrograms. Image data can be analysed using computer vision (CV) techniques, which process and analyse visual data. A sequence of images, such as a video, can be considered multi-dimensional data.

- (3) Three-dimensional inputs: point cloud data and 3D scans of objects or environments, such as buildings, landscapes, and industrial equipment. Point cloud data can be analysed using algorithms designed specifically for 3D point clouds. As highlighted in the PointNet work [3], the points of a cloud lie in Euclidean space and are unordered, which sets point clouds clearly apart from the pixel arrays of 2D images and the voxel arrays of volumetric grids: changing the order in which the points are fed to a model does not change the point cloud itself. Another popular 3D representation is RGB-D data, which combines RGB colour data with depth data (D). Depending on the sensing method (structured light or time-of-flight), the camera measures the distance from the viewpoint at which the RGB information is collected to the surfaces of the object.

- (4) Multi-dimensional inputs: text data, such as articles and reviews, can be described by many features, such as word frequency, word length, and syntactic structure. Text data can be analysed using natural language processing (NLP) techniques, which process and analyse natural-language data.
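The order-invariance of point clouds noted above can be illustrated with a minimal NumPy sketch of the symmetric-function idea used by PointNet [3]: the same small function is applied to every point and the per-point results are aggregated by max pooling, so shuffling the input leaves the global feature unchanged. The single 16-unit layer, the random untrained weights, and the names `W`, `b`, and `global_feature` are illustrative assumptions, not PointNet's actual architecture.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical weights of a tiny shared per-point layer mapping (x, y, z) to 16 features.
W = rng.normal(size=(3, 16))
b = rng.normal(size=16)

def global_feature(points: np.ndarray) -> np.ndarray:
    """Order-independent descriptor of an (N, 3) point set.

    The same layer is applied to every point (shared weights); a max over the
    points, which is a symmetric function, then aggregates them into one vector.
    """
    per_point = np.maximum(points @ W + b, 0.0)  # shared layer + ReLU, shape (N, 16)
    return per_point.max(axis=0)                 # max pooling over points, shape (16,)

cloud = rng.normal(size=(1024, 3))               # synthetic stand-in for a scanned point cloud
shuffled = cloud[rng.permutation(len(cloud))]    # same points, different feeding order
print(np.allclose(global_feature(cloud), global_feature(shuffled)))  # True
```

Any symmetric aggregation (sum, mean, max) would give the same invariance; max pooling is the choice made in PointNet.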

1.2. Digitalisation in Architectural Scenarios: Data Collection, Fusion, and Processes

- (1) Architectural elements are large and complex: elements such as pillars and walls cannot be captured in a single close-range photograph or scan. Datasets describing such large elements therefore require more computing resources for storage and processing.

- (2) Unavoidable noise and shadows: an architectural space is not an ideal space of simple geometric shapes but one of volumetric variation. Moreover, it is usually occupied by items that cast shadows (occluded areas) in 3D. Full-coverage digitalisation is therefore costly and time-consuming.

- (3) Objects of interest: objects related to architectural elements, components, structures, and joints exhibit geometric features at multiple scales. Some objects, such as walls, require global information, whereas others, such as cracks and disintegration, need a millimetre-scale representation of the geometry. Highly decorated architectural elements, especially in cultural heritage, are among the most challenging objects because they are easily confused with less important components or facilities.

- (4) Recurrent monitoring in architectural spaces: architectural scenarios are closely tied to human activity; from daily residential use to large-scale production, the functional typology of architectural spaces changes over time. Moreover, architecture is constantly threatened by environmental issues, anthropogenic damage, and continuous interventions. Data acquisition is therefore a recurring need for monitoring, and the acquired data can be used for object tracking and for detecting translation, deformation, twisting, chromatic alteration, etc.
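Detecting deformation between monitoring campaigns is often approached by comparing point clouds acquired at successive epochs. The following minimal sketch uses a plain cloud-to-cloud nearest-neighbour distance, one common option among several and not a method prescribed in this review; it assumes both epochs are already co-registered in the same coordinate system, and the 1 cm threshold, the synthetic wall patch, and the function names are hypothetical.

```python
import numpy as np

def cloud_to_cloud_distance(reference: np.ndarray, compared: np.ndarray) -> np.ndarray:
    """For each point in `compared` (M, 3), the distance to its nearest point in `reference` (N, 3).

    Brute force, O(N * M) memory and time: fine for a toy example only.
    """
    diff = compared[:, None, :] - reference[None, :, :]   # (M, N, 3) pairwise differences
    return np.linalg.norm(diff, axis=2).min(axis=1)

def flag_changes(reference: np.ndarray, compared: np.ndarray, threshold: float) -> np.ndarray:
    """Boolean mask of points in `compared` lying farther than `threshold` from `reference`."""
    return cloud_to_cloud_distance(reference, compared) > threshold

# Toy example: a flat 1 m x 1 m 'wall' surveyed twice (10 cm grid);
# in the second epoch one corner bulges out of plane by 5 cm.
xs, zs = np.meshgrid(np.linspace(0, 1, 11), np.linspace(0, 1, 11))
epoch1 = np.column_stack([xs.ravel(), np.zeros(xs.size), zs.ravel()])
epoch2 = epoch1.copy()
bulge = (epoch2[:, 0] > 0.75) & (epoch2[:, 2] > 0.75)   # 3 x 3 corner of the grid
epoch2[bulge, 1] += 0.05

print(flag_changes(epoch1, epoch2, threshold=0.01).sum())  # 9
```

For survey-sized clouds the brute-force distance would normally be replaced by a spatial index such as a k-d tree, and a nearest-neighbour distance can underestimate movements that are parallel to the surface.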

1.3. Data Types and AI Methods

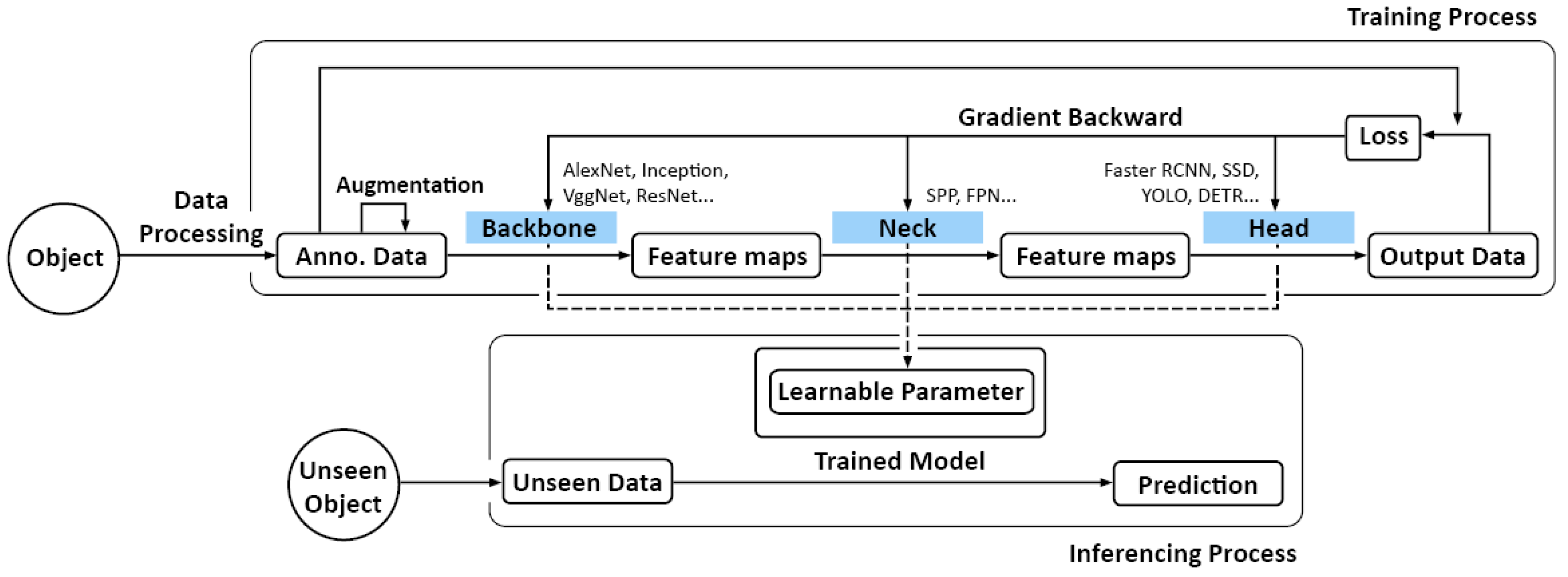

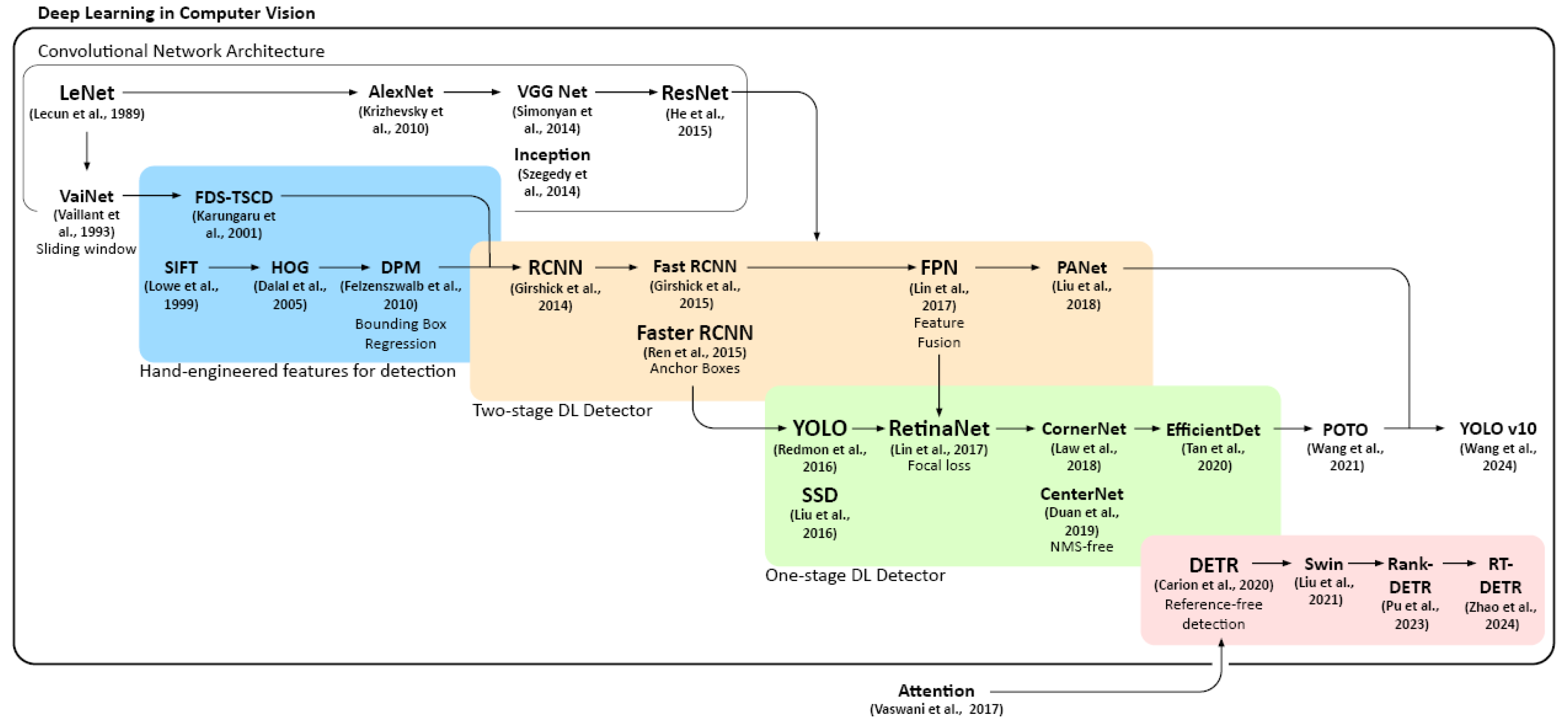

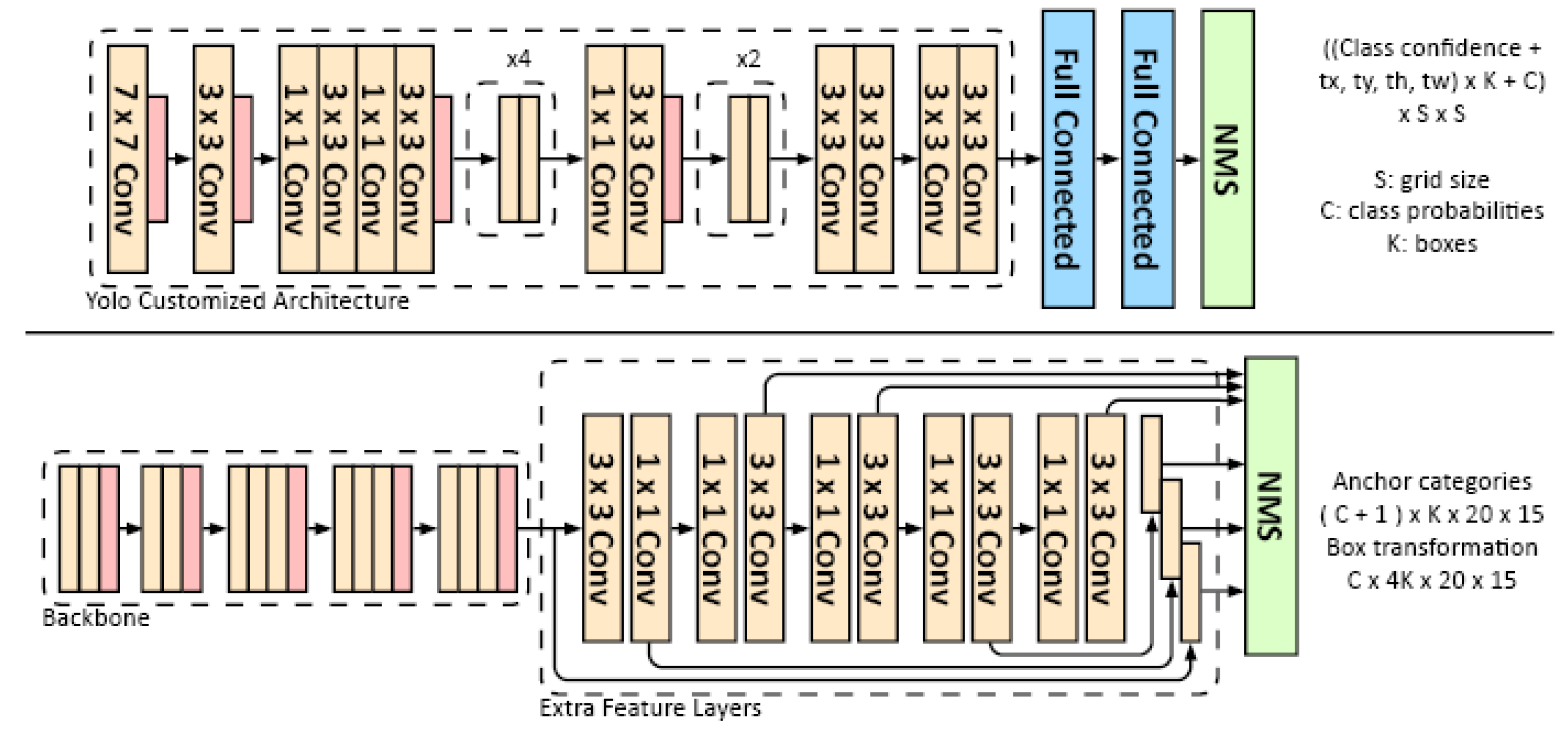

1.3.1. 2D Data

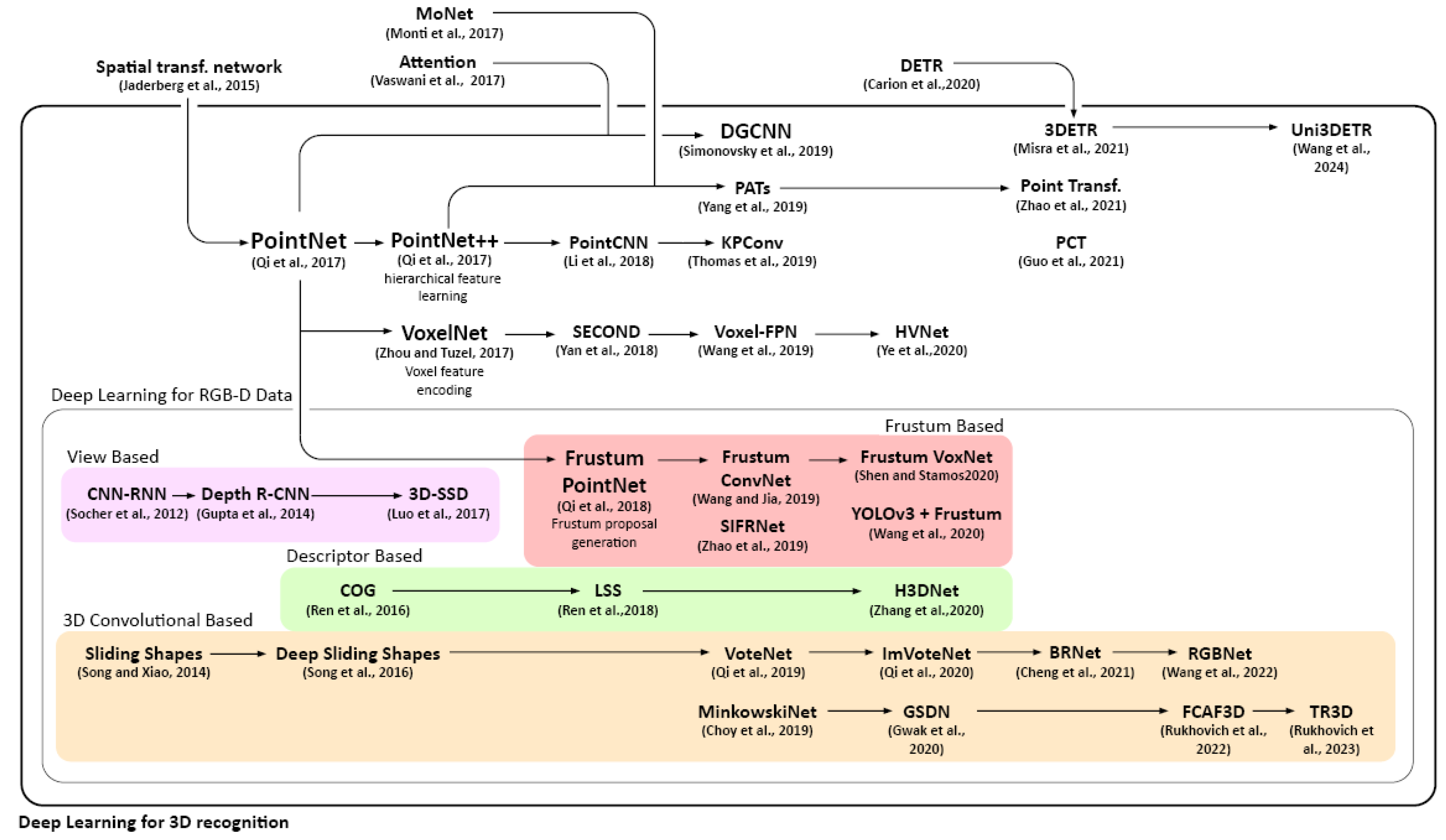

1.3.2. 3D Data

1.3.3. RGB-D Data

- (1) View-based: some researchers exploit RGB-D data by treating the depth map as an additional channel [58]. Others process the 3D data as front-view images [59,60] or project the 3D information to a bird's-eye view [61]. Depth R-CNN [62], inspired by the 2D object detection model R-CNN, introduced a geocentric embedding to make better use of depth maps. Similarly, 3D-SSD [63] is a 3D generalisation of the SSD framework.

- (2) Frustum-based (2D-driven 3D): Frustum-PointNet [64] extends PointNet to RGB-D data. It extracts the 3D frustum enclosing an object by projecting the 2D bounding box produced by an image detector into 3D space. Within this trimmed 3D region, segmentation and box regression are then performed consecutively using variants of PointNet. Some of PointNet's limitations, in local-feature handling, rotation sensitivity, and feature extraction, have been addressed using hierarchical network architectures and graph convolutional networks. Frustum-PointNet was a breakthrough at the time and inspired later models such as YOLOv3 & F-PointNet [65] and Frustum VoxNet [66].

- (3)

- (4) Convolution-based: Sliding Shapes [5] slides a 3D window over the scene to detect objects directly in 3D. It was later extended in Deep Sliding Shapes [69], which introduced a 3D region proposal network to speed up computation. Qi et al. presented VoteNet [70], which detects objects directly in the point cloud. It addresses the challenge that the centroid of a 3D object can lie far from its surfaces by applying Hough voting.
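The first step of the frustum-based pipeline, lifting a 2D detection into 3D, can be sketched with NumPy as follows. It assumes a calibrated pinhole camera with intrinsics `fx`, `fy`, `cx`, `cy` and a depth map in metres registered to the RGB image; the function names and the toy values are illustrative, and Frustum-PointNet's subsequent PointNet-based segmentation and box regression are not shown.

```python
import numpy as np

def depth_to_points(depth: np.ndarray, fx: float, fy: float, cx: float, cy: float) -> np.ndarray:
    """Back-project an (H, W) depth map into camera-frame 3D points (H, W, 3), pinhole model."""
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))   # u: column index, v: row index
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return np.stack([x, y, depth], axis=-1)

def frustum_points(depth, box, fx, fy, cx, cy):
    """3D points whose pixels fall inside a 2D box (u_min, v_min, u_max, v_max), max bounds exclusive.

    Because the box is cut in image space, the returned points fill a frustum that
    starts at the camera centre and widens with depth.
    """
    u_min, v_min, u_max, v_max = box
    pts = depth_to_points(depth, fx, fy, cx, cy)[v_min:v_max, u_min:u_max].reshape(-1, 3)
    return pts[pts[:, 2] > 0]                        # discard pixels without a valid depth reading

depth = np.full((4, 6), 2.0)   # toy 4 x 6 depth map, 2 m everywhere
depth[0, 0] = 0.0              # one missing depth reading
pts = frustum_points(depth, box=(0, 0, 2, 2), fx=1.0, fy=1.0, cx=0.0, cy=0.0)
print(pts.shape)  # (3, 3): four pixels in the box, one without depth
```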
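The voting idea behind VoteNet can be illustrated with a deliberately simplified sketch: every observed surface point casts a vote for its object centre, and nearby votes are grouped into centre proposals, so centres are recovered even though no observed point lies at them. In VoteNet the offsets are regressed by a network and votes are grouped with learned sampling and grouping layers; here the offsets are given directly (plus small noise), votes are grouped greedily, and the `radius` value and the name `cluster_votes` are hypothetical.

```python
import numpy as np

def cluster_votes(seeds: np.ndarray, offsets: np.ndarray, radius: float = 0.3) -> np.ndarray:
    """Cast one vote per surface point (seed + offset) and group nearby votes into centres.

    seeds:   (N, 3) points sampled on observed surfaces.
    offsets: (N, 3) vectors from each seed towards its object centre (assumed given here).
    radius:  grouping radius in the data's units (hypothetical value).
    """
    votes = seeds + offsets
    unassigned = np.ones(len(votes), dtype=bool)
    centres = []
    while unassigned.any():
        first = np.flatnonzero(unassigned)[0]                 # next ungrouped vote starts a cluster
        near = np.linalg.norm(votes - votes[first], axis=1) < radius
        members = unassigned & near
        centres.append(votes[members].mean(axis=0))           # proposed object centre
        unassigned &= ~members
    return np.array(centres)

# Surface points of two objects centred at (0, 0, 0) and (5, 0, 0); none lies at a centre.
seeds = np.array([[1.0, 0, 0], [-1.0, 0, 0], [0, 1.0, 0], [6.0, 0, 0], [4.0, 0, 0]])
true_centres = np.array([[0.0, 0, 0]] * 3 + [[5.0, 0, 0]] * 2)
noise = np.random.default_rng(0).normal(scale=0.02, size=seeds.shape)
centres = cluster_votes(seeds, true_centres - seeds + noise)
print(centres)  # two proposals, close to (0, 0, 0) and (5, 0, 0)
```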

2. AI Applications in Architectural Scenarios

2.1. 2D Applications

2.2. 3D Application

3. Conclusion

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-Time End-to-End Object Detection. arXiv 2024.

- Wang, Y.; Sun, Y.; Liu, Z.; Sarma, S.E.; Bronstein, M.M.; Solomon, J.M. Dynamic Graph CNN for Learning on Point Clouds. ACM Trans. Graph. 2019, 38, 146:1–146:12.

- Charles, R.Q.; Su, H.; Kaichun, M.; Guibas, L.J. PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); IEEE: Honolulu, HI, 2017; pp. 77–85.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. arXiv 2020.

- Song, S.; Xiao, J. Sliding Shapes for 3D Object Detection in Depth Images. In Proceedings of the Computer Vision—ECCV 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer International Publishing: Cham, 2014; pp. 634–651.

- LeCun, Y.; Boser, B.; Denker, J.; Henderson, D.; Howard, R.; Hubbard, W.; Jackel, L. Handwritten Digit Recognition with a Back-Propagation Network. In Proceedings of the NIPS; 1989.

- Fassi, F.; Campanella, C. From Daguerreotypes to Digital Automatic Photogrammetry. Applications and Limits for The Built Heritage Project. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, XLII-5-W1, 313–319.

- Achille, C.; Fassi, F.; Fregonese, L. 4 Years history: From 2D to BIM for CH: The Main Spire on Milan Cathedral. In Proceedings of the 2012 18th International Conference on Virtual Systems and Multimedia; 2012; pp. 377–382.

- Perfetti, L.; Polari, C.; Fassi, F. Fisheye Photogrammetry: Tests and Methodologies for The Survey of Narrow Spaces. In The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences; Copernicus GmbH, 2017; Vol. XLII-2-W3, pp. 573–580.

- Patrucco, G.; Gómez, A.; Adineh, A.; Rahrig, M.; Lerma, J.L. 3D Data Fusion for Historical Analyses of Heritage Buildings Using Thermal Images: The Palacio de Colomina as a Case Study. Remote Sens. 2022, 14, 5699.

- Raimundo, J.; Lopez-Cuervo Medina, S.; Aguirre de Mata, J.; Prieto, J.F. Multisensor Data Fusion by Means of Voxelization: Application to a Construction Element of Historic Heritage. Remote Sens. 2022, 14, 4172.

- Lehmann, E.H.; Vontobel, P.; Deschler-Erb, E.; Soares, M. Non-Invasive Studies of Objects From Cultural Heritage. Nucl. Instrum. Methods Phys. Res. Sect. Accel. Spectrometers Detect. Assoc. Equip. 2005, 542, 68–75.

- Zollhöfer, M.; Stotko, P.; Görlitz, A.; Theobalt, C.; Nießner, M.; Klein, R.; Kolb, A. State of the Art on 3D Reconstruction with RGB-D Cameras. Comput. Graph. Forum 2018, 37, 625–652.

- Wang, Y.; Wang, C.; Long, P.; Gu, Y.; Li, W. Recent Advances in 3D Object Detection Based on RGB-D: A Survey. Displays 2021, 70, 102077.

- Adamopoulos, E.; Rinaudo, F. Close-Range Sensing and Data Fusion for Built Heritage Inspection and Monitoring—A Review. Remote Sens. 2021, 13, 3936.

- Orbán, Z.; Gutermann, M. Assessment of Masonry Arch Railway Bridges Using Non-Destructive In-Situ Testing Methods. Eng. Struct. 2009, 31, 2287–2298.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2015.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going Deeper with Convolutions. arXiv 2014.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. arXiv 2015.

- Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. arXiv 2015.

- Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Fei-Fei, L. ImageNet: A Large-Scale Hierarchical Image Database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition; 2009; pp. 248–255.

- Everingham, M.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes (VOC) Challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

- Lin, T.-Y.; Maire, M.; Belongie, S.; Bourdev, L.; Girshick, R.; Hays, J.; Perona, P.; Ramanan, D.; Zitnick, C.L.; Dollár, P. Microsoft COCO: Common Objects in Context. arXiv 2015.

- Caesar, H.; Uijlings, J.; Ferrari, V. COCO-Stuff: Thing and Stuff Classes in Context. arXiv 2018.

- Vaillant, R.; Monrocq, C.; Le Cun, Y. An Original Approach for The Localization of Objects In Images. In Proceedings of the 1993 Third International Conference on Artificial Neural Networks; 1993; pp. 26–30.

- Dalal, N.; Triggs, B. Histograms of Oriented Gradients for Human Detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05); 2005; Vol. 1, pp. 886–893.

- Felzenszwalb, P.F.; Girshick, R.B.; McAllester, D.; Ramanan, D. Object Detection with Discriminatively Trained Part-Based Models. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1627–1645.

- Lowe, D.G. Object Recognition from Local Scale-Invariant Features. In Proceedings of the Seventh IEEE International Conference on Computer Vision; 1999; Vol. 2, pp. 1150–1157.

- Girshick, R. Fast R-CNN. arXiv 2015.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. arXiv 2016.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. arXiv 2016.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. 2016; Vol. 9905, pp. 21–37.

- Law, H.; Deng, J. CornerNet: Detecting Objects as Paired Keypoints. 2019.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer using Shifted Windows. arXiv 2021.

- Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs Beat YOLOs on Real-time Object Detection. arXiv 2024.

- Pu, Y.; Liang, W.; Hao, Y.; Yuan, Y.; Yang, Y.; Zhang, C.; Hu, H.; Huang, G. Rank-DETR for High Quality Object Detection. arXiv 2023.

- Huang, J.; Rathod, V.; Sun, C.; Zhu, M.; Korattikara, A.; Fathi, A.; Fischer, I.; Wojna, Z.; Song, Y.; Guadarrama, S.; et al. Speed/accuracy trade-offs for modern convolutional object detectors. arXiv 2017.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. arXiv 2018.

- Grilli, E.; Menna, F.; Remondino, F. A Review of Point Clouds Segmentation and Classification Algorithms. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, XLII-2/W3, 339–344.

- Liu, W.; Sun, J.; Li, W.; Hu, T.; Wang, P. Deep Learning on Point Clouds and Its Application: A Survey. Sensors 2019, 19, 4188.

- Li, Y.; Bu, R.; Sun, M.; Wu, W.; Di, X.; Chen, B. PointCNN: Convolution On Χ-Transformed Points. arXiv 2018.

- Thomas, H.; Qi, C.R.; Deschaud, J.-E.; Marcotegui, B.; Goulette, F.; Guibas, L.J. KPConv: Flexible and Deformable Convolution for Point Clouds. arXiv 2019.

- Yang, J.; Zhang, Q.; Ni, B.; Li, L.; Liu, J.; Zhou, M.; Tian, Q. Modeling Point Clouds with Self-Attention and Gumbel Subset Sampling. arXiv 2019.

- Guo, M.-H.; Cai, J.-X.; Liu, Z.-N.; Mu, T.-J.; Martin, R.R.; Hu, S.-M. PCT: Point cloud transformer. Comput. Vis. Media 2021, 7, 187–199.

- Zhao, H.; Jiang, L.; Jia, J.; Torr, P.; Koltun, V. Point Transformer. arXiv 2021.

- Misra, I.; Girdhar, R.; Joulin, A. An End-to-End Transformer Model for 3D Object Detection. 2021; pp. 2906–2917.

- Wang, Z.; Li, Y.; Chen, X.; Zhao, H.; Wang, S. Uni3DETR: Unified 3D Detection Transformer. arXiv 2023.

- Zhou, Y.; Tuzel, O. VoxelNet: End-to-End Learning for Point Cloud Based 3D Object Detection. arXiv 2017.

- Yan, Y.; Mao, Y.; Li, B. SECOND: Sparsely Embedded Convolutional Detection. Sensors 2018, 18, 3337.

- Wang, B.; An, J.; Cao, J. Voxel-FPN: Multi-Scale Voxel Feature Aggregation in 3D Object Detection from Point Clouds. arXiv 2019.

- Ye, M.; Xu, S.; Cao, T. HVNet: Hybrid Voxel Network for LiDAR Based 3D Object Detection. arXiv 2020.

- Hirschmuller, H. Stereo Processing by Semiglobal Matching and Mutual Information. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 328–341.

- Chen, W.; Shang, G.; Ji, A.; Zhou, C.; Wang, X.; Xu, C.; Li, Z.; Hu, K. An Overview on Visual SLAM: From Tradition to Semantic. Remote Sens. 2022, 14, 3010.

- Mayer, N.; Ilg, E.; Häusser, P.; Fischer, P.; Cremers, D.; Dosovitskiy, A.; Brox, T. A Large Dataset to Train Convolutional Networks for Disparity, Optical Flow, and Scene Flow Estimation. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2016; pp. 4040–4048.

- Saxena, A.; Sun, M.; Ng, A.Y. Make3D: Learning 3D Scene Structure from a Single Still Image. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 31, 824–840.

- Liu, F.; Shen, C.; Lin, G.; Reid, I. Learning Depth from Single Monocular Images Using Deep Convolutional Neural Fields. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 2024–2039.

- Xie, J.; Girshick, R.; Farhadi, A. Deep3D: Fully Automatic 2D-to-3D Video Conversion with Deep Convolutional Neural Networks. arXiv 2016.

- Socher, R.; Huval, B.; Bhat, B.; Manning, C.; Ng, A. Convolutional-Recursive Deep Learning for 3D Object Classification. NIPS 2012, 1.

- Mousavian, A.; Anguelov, D.; Flynn, J.; Kosecka, J. 3D Bounding Box Estimation Using Deep Learning and Geometry. arXiv 2017.

- Chen, X.; Kundu, K.; Zhang, Z.; Ma, H.; Fidler, S.; Urtasun, R. Monocular 3D Object Detection for Autonomous Driving. 2016; pp. 2147–2156.

- Chen, X.; Ma, H.; Wan, J.; Li, B.; Xia, T. Multi-View 3D Object Detection Network for Autonomous Driving. arXiv 2017.

- Gupta, S.; Girshick, R.; Arbeláez, P.; Malik, J. Learning Rich Features from RGB-D Images for Object Detection and Segmentation. In Proceedings of the Computer Vision—ECCV 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer International Publishing: Cham, 2014; pp. 345–360.

- Luo, Q.; Ma, H.; Tang, L.; Wang, Y.; Xiong, R. 3D-SSD: Learning Hierarchical Features From RGB-D Images for Amodal 3D Object Detection. Neurocomputing 2020, 378, 364–374.

- Qi, C.R.; Liu, W.; Wu, C.; Su, H.; Guibas, L.J. Frustum PointNets for 3D Object Detection from RGB-D Data. arXiv 2018.

- Wang, Y.; Xu, S.; Zell, A. Real-time 3D Object Detection from Point Clouds using an RGB-D Camera. 2024; pp. 407–414.

- Shen, X.; Stamos, I. Frustum VoxNet for 3D object detection from RGB-D or Depth images. 2020; pp. 1698–1706.

- Ren, Z.; Sudderth, E.B. Three-Dimensional Object Detection and Layout Prediction Using Clouds of Oriented Gradients. 2016; pp. 1525–1533.

- Ren, Z.; Sudderth, E.B. 3D Object Detection with Latent Support Surfaces. 2018; pp. 937–946.

- Song, S.; Xiao, J. Deep Sliding Shapes for Amodal 3D Object Detection in RGB-D Images. 2016; pp. 808–816.

- Qi, C.R.; Litany, O.; He, K.; Guibas, L.J. Deep Hough Voting for 3D Object Detection in Point Clouds. 2019; pp. 9277–9286.

- Hatır, M.E.; İnce, İ.; Korkanç, M. Intelligent Detection of Deterioration In Cultural Stone Heritage. J. Build. Eng. 2021, 44, 102690.

- Mishra, M.; Barman, T.; Ramana, G.V. Artificial Intelligence-Based Visual Inspection System for Structural Health Monitoring of Cultural Heritage. J. Civ. Struct. Health Monit. 2022.

- Liu, C.; ME Sepasgozar, S.; Shirowzhan, S.; Mohammadi, G. Applications of Object Detection in Modular Construction Based on a Comparative Evaluation of Deep Learning Algorithms. Constr. Innov. 2021, 22, 141–159.

- Verges-Belmin, V.; ISCS. For Illustrated Glossary on Stone Deterioration Patterns. ICOMOS, 2008; ISBN 978-2-918086-00-0.

- Kwon, D.; Yu, J. Automatic Damage Detection of Stone Cultural Property Based on Deep Learning Algorithm. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-2/W15, 639–643.

- Wang, N.; Zhao, X.; Zhao, P.; Zhang, Y.; Zou, Z.; Ou, J. Automatic Damage Detection of Historic Masonry Buildings Based on Mobile Deep Learning. Autom. Constr. 2019, 103, 53–66.

- Idjaton, K.; Desquesnes, X.; Treuillet, S.; Brunetaud, X. Transformers with YOLO Network for Damage Detection in Limestone Wall Images. In Proceedings of the Image Analysis and Processing; ICIAP 2022 Workshops, Mazzeo, P.L., Frontoni, E., Sclaroff, S., Distante, C., Eds.; Springer International Publishing: Cham, 2022; pp. 302–313.

- Karimi, N.; Valibeig, N.; Rabiee, H.R. Deterioration Detection in Historical Buildings with Different Materials Based on Novel Deep Learning Methods with Focusing on Isfahan Historical Bridges. Int. J. Archit. Herit. 2023, 0, 1–13.

- Guerrieri, M.; Parla, G. Flexible and Stone Pavements Distress Detection and Measurement by Deep Learning and Low-Cost Detection Devices. Eng. Fail. Anal. 2022, 141, 106714.

- Tatzel, L.; Tamimi, O.A.; Haueise, T.; Puente León, F. Image-Based Modelling and Visualisation of The Relationship Between Laser-Cut Edge And Process Parameters. Opt. Laser Technol. 2021, 141, 107028.

- Bach, S.; Binder, A.; Montavon, G.; Klauschen, F.; Müller, K.-R.; Samek, W. On Pixel-Wise Explanations for Non-Linear Classifier Decisions by Layer-Wise Relevance Propagation. PLOS ONE 2015, 10, e0130140.

- Pathak, R.; Saini, A.; Wadhwa, A.; Sharma, H.; Sangwan, D. An Object Detection Approach for Detecting Damages in Heritage Sites Using 3-D Point Clouds And 2-D Visual Data. J. Cult. Herit. 2021, 48, 74–82.

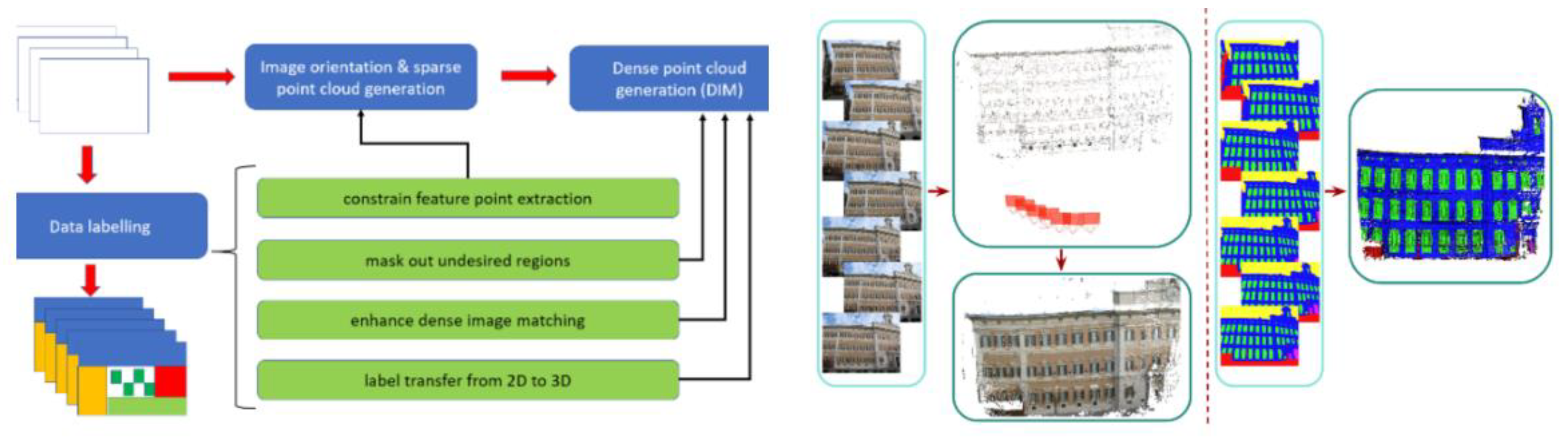

- Grilli, E.; Dininno, D.; Petrucci, G.; Remondino, F. From 2D to 3D Supervised Segmentation and Classification for Cultural Heritage Applications. In The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences; Copernicus GmbH, 2018; Vol. XLII-2, pp. 399–406.

- Grilli, E.; Remondino, F. Classification of 3D Digital Heritage. Remote Sens. 2019, 11, 847.

- Lowe, D.G. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Bay, H.; Ess, A.; Tuytelaars, T.; Van Gool, L. Speeded-Up Robust Features (SURF). Comput. Vis. Image Underst. 2008, 110, 346–359.

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An Efficient Alternative to SIFT Or SURF. In Proceedings of the 2011 International Conference on Computer Vision; 2011; pp. 2564–2571.

- DeTone, D.; Malisiewicz, T.; Rabinovich, A. SuperPoint: Self-Supervised Interest Point Detection and Description. 2018; pp. 224–236.

- Dusmanu, M.; Rocco, I.; Pajdla, T.; Pollefeys, M.; Sivic, J.; Torii, A.; Sattler, T. D2-Net: A Trainable CNN for Joint Description and Detection of Local Features. 2019; pp. 8092–8101.

- Revaud, J.; De Souza, C.; Humenberger, M.; Weinzaepfel, P. R2D2: Reliable and Repeatable Detector and Descriptor. In Advances in Neural Information Processing Systems; Curran Associates, Inc., 2019; Vol. 32.

- Stathopoulou, E.-K.; Remondino, F. Semantic Photogrammetry—Boosting Image-Based 3d Reconstruction with Semantic Labeling. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-2/W9, 685–690.

- Stathopoulou, E.-K.; Remondino, F. Multi-View Stereo with Semantic Priors. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-2-W15, 1135–1140.

- Stathopoulou, E.K.; Battisti, R.; Cernea, D.; Remondino, F.; Georgopoulos, A. Semantically Derived Geometric Constraints for MVS Reconstruction of Textureless Areas. Remote Sens. 2021, 13, 1053.

- Liu, Z.; Brigham, R.; Long, E.R.; Wilson, L.; Frost, A.; Orr, S.A.; Grau-Bové, J. Semantic Segmentation and Photogrammetry of Crowdsourced Images to Monitor Historic Facades. Herit. Sci. 2022, 10, 27.

- Civera, J.; Gálvez-López, D.; Riazuelo, L.; Tardós, J.D.; Montiel, J.M.M. Towards Semantic SLAM Using a Monocular Camera. In Proceedings of the 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems; 2011; pp. 1277–1284.

- Yu, C.; Liu, Z.; Liu, X.-J.; Xie, F.; Yang, Y.; Wei, Q.; Fei, Q. DS-SLAM: A Semantic Visual SLAM towards Dynamic Environments. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS); 2018; pp. 1168–1174.

- Truong, P.H.; You, S.; Ji, S. Object Detection-based Semantic Map Building for A Semantic Visual SLAM System. In Proceedings of the 2020 20th International Conference on Control, Automation and Systems (ICCAS); 2020; pp. 1198–1201.

- Sun, Z.; Xu, Y.; Hoegner, L.; Stilla, U. Classification of MLS Point Clouds in Urban Scenes Using Detrended Geometric Features From Supervoxel-Based Local Contexts. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, IV-2, 271–278.

- Grilli, E.; Özdemir, E.; Remondino, F. Application of Machine and Deep Learning Strategies for The Classification of Heritage Point Clouds. In The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences; Copernicus GmbH, 2019; Vol. XLII-4-W18, pp. 447–454.

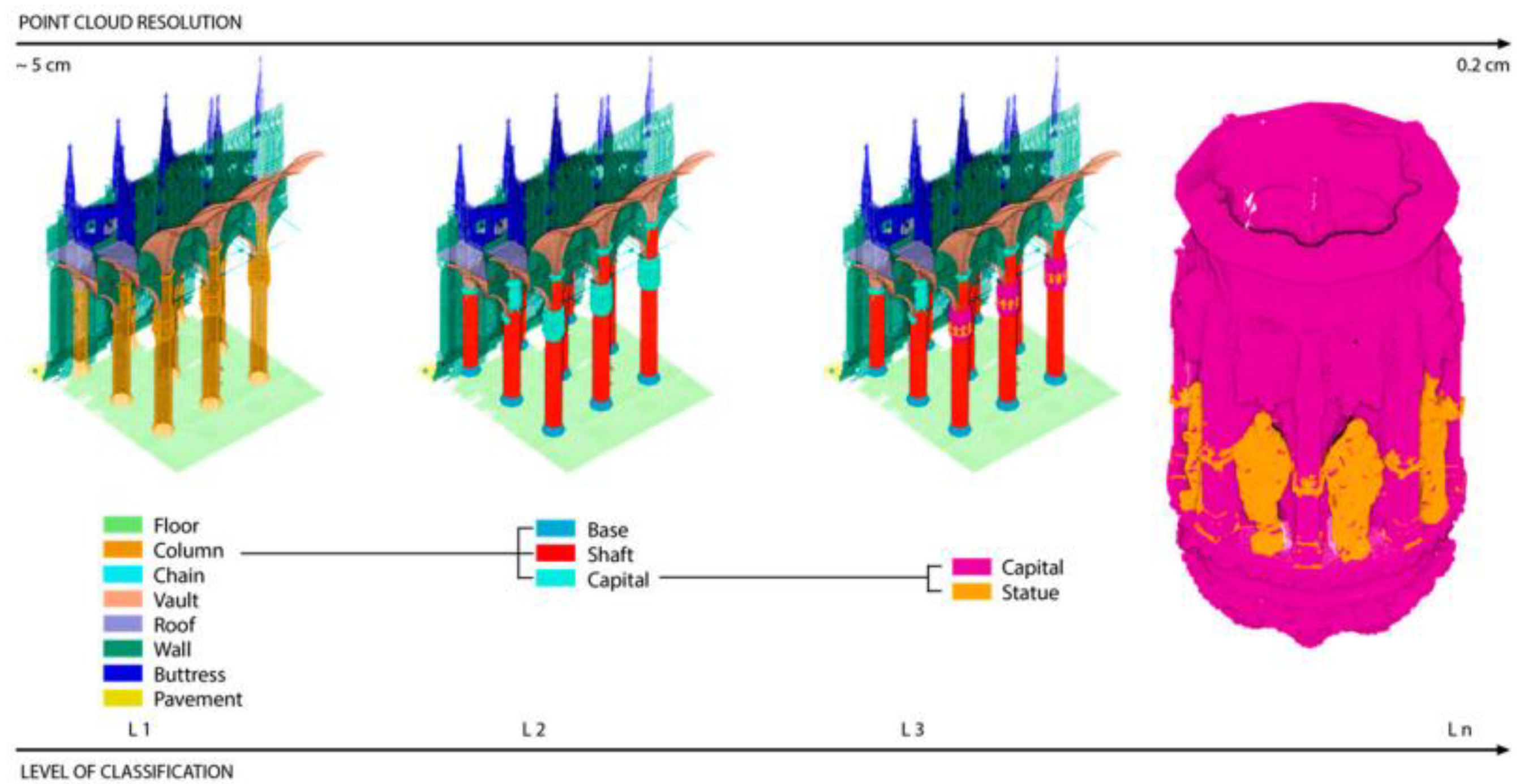

- Teruggi, S.; Grilli, E.; Russo, M.; Fassi, F.; Remondino, F. A Hierarchical Machine Learning Approach for Multi-Level and Multi-Resolution 3D Point Cloud Classification. Remote Sens. 2020, 12, 2598.

- Zhang, K.; Teruggi, S.; Fassi, F. Machine Learning Methods for Unesco Chinese Heritage: Complexity and Comparisons. In The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences; Copernicus GmbH, 2022; Vol. XLVI-2-W1-2022, pp. 543–550.

- Zhang, K.; Teruggi, S.; Ding, Y.; Fassi, F. A Multilevel Multiresolution Machine Learning Classification Approach: A Generalization Test on Chinese Heritage Architecture. Heritage 2022, 5, 3970–3992.

- Grilli, E.; Remondino, F. Machine Learning Generalisation across Different 3D Architectural Heritage. ISPRS Int. J. Geo-Inf. 2020, 9, 379.

- Cao, Y.; Teruggi, S.; Fassi, F.; Scaioni, M. A Comprehensive Understanding of Machine Learning and Deep Learning Methods for 3D Architectural Cultural Heritage Point Cloud Semantic Segmentation. In Proceedings of the Geomatics for Green and Digital Transition; Borgogno-Mondino, E., Zamperlin, P., Eds.; Springer International Publishing: Cham, 2022; pp. 329–341.

- Malinverni, E.S.; Pierdicca, R.; Paolanti, M.; Martini, M.; Morbidoni, C.; Matrone, F.; Lingua, A. Deep Learning for Semantic Segmentation of 3D Point Cloud. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-2/W15, 735–742.

- Sural, S.; Qian, G.; Pramanik, S. Segmentation and Histogram Generation Using the HSV Color Space for Image Retrieval. In Proceedings of the International Conference on Image Processing; 2002; Vol. 2, p. II–II.

- Pierdicca, R.; Paolanti, M.; Matrone, F.; Martini, M.; Morbidoni, C.; Malinverni, E.S.; Frontoni, E.; Lingua, A.M. Point Cloud Semantic Segmentation Using a Deep Learning Framework for Cultural Heritage. Remote Sens. 2020, 12, 1005.

- Matrone, F.; Grilli, E.; Martini, M.; Paolanti, M.; Pierdicca, R.; Remondino, F. Comparing Machine and Deep Learning Methods for Large 3D Heritage Semantic Segmentation. ISPRS Int. J. Geo-Inf. 2020, 9, 535.

- Matrone, F.; Lingua, A.; Pierdicca, R.; Malinverni, E.S.; Paolanti, M.; Grilli, E.; Remondino, F.; Murtiyoso, A.; Landes, T. A Benchmark for Large-Scale Heritage Point Cloud Semantic Segmentation. In The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences; Copernicus GmbH, 2020; Vol. XLIII-B2-2020, pp. 1419–1426.

- Cao, Y.; Scaioni, M. A Pre-Training Method for 3d Building Point Cloud Semantic Segmentation. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, V-2–2022, 219–226.

- Cao, Y.; Scaioni, M. 3DLEB-Net: Label-Efficient Deep Learning-Based Semantic Segmentation of Building Point Clouds at LoD3 Level. Appl. Sci. 2021, 11, 8996.

- Cao, Y.; Previtali, M.; Scaioni, M. Understanding 3d Point Cloud Deep Neural Networks by Visualization Techniques. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, XLIII-B2-2020, 651–657.

- Cao, Y.; Scaioni, M. Label-Efficient Deep Learning-Based Semantic Segmentation of Building Point Clouds at Lod3 Level. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2021, XLIII-B2-2021, 449–456.

- Armeni, I.; Sax, S.; Zamir, A.R.; Savarese, S. Joint 2D-3D-Semantic Data for Indoor Scene Understanding. arXiv 2017.

- Ma, J.W.; Czerniawski, T.; Leite, F. Semantic Segmentation of Point Clouds of Building Interiors with Deep Learning: Augmenting Training Datasets with Synthetic BIM-Based Point Clouds. Autom. Constr. 2020, 113, 103144.

- Landrieu, L.; Simonovsky, M. Large-Scale Point Cloud Semantic Segmentation with Superpoint Graphs. 2018; pp. 4558–4567.

- Li, G.; Muller, M.; Thabet, A.; Ghanem, B. DeepGCNs: Can GCNs Go As Deep As CNNs? 2019; pp. 9267–9276.

- Hu, Q.; Yang, B.; Xie, L.; Rosa, S.; Guo, Y.; Wang, Z.; Trigoni, N.; Markham, A. RandLA-Net: Efficient Semantic Segmentation of Large-Scale Point Clouds. 2020; pp. 11108–11117.

| Authors | Scene | Data Type | Labels | Trainset | Methods | Evaluation | 3D |
|---|---|---|---|---|---|---|---|
| Hatır et al., 2021 | Stone heritage | Photo | 9 | Subset from site | Mask R-CNN | mAP 0.98 | - |
| Mishra et al., 2022 | Heritage exterior | Photo | 4 | Subset from site | YOLOv5 | mAP 0.93 | - |
| | | | | | Faster R-CNN | mAP 0.85 | |
| Liu et al., 2022 | Construction field | Photo | 3 separately | Subset from dataset | Faster R-CNN | mAP 0.90, 0.87, 0.74 | - |
| | | | | | SSD | mAP 0.88, 0.69, 0.53 | |
| Kwon et al., 2019 | Stone | Photo | 4 separately | Subset from dataset | Faster R-CNN (backbone: Inception) | Avg. conf. 0.95 | - |
| Wang et al., 2019 | Masonry facade | Orthophoto | 2 | Subset from dataset | Faster R-CNN (backbone: ResNet-101) | mAP 0.95 | - |
| Idjaton et al., 2022 | Stone facade | Photo | 1 | Subset from site | YOLOv5 & attention | mAP 0.79 | - |
| | | | | | YOLOv5x | mAP 0.74 | |
| Karimi et al., 2023 | Bridge defect | Photo | 7 | Individual case | Inception-ResNet-v2 | Accu. 0.96 | - |
| Guerrieri et al., 2022 | Stone pavement | Photo | 4 | Subset from site | YOLOv3 | Accu. 0.91-0.97 | - |
| Zhang et al., 2024 | Pathology | Photo | 12 | Subset from dataset | ResNet | Accu. 0.72 | - |
| | | | | | YOLOv5 | mAP@0.5 0.34 | + |
| Pathak et al., 2021 | Masonry heritage | Photo & rendered | 2 | Individual case | Faster R-CNN NAS | mAP 0.39 | + |
| | | | | | Faster R-CNN ResNet-FPN | mAP 0.58 | |
| Grilli et al., 2018 | Heritage exterior | Orthophoto | 7 | Subset from site | Random Forest etc. | Accu. 0.44-0.69 | + |
| Grilli et al., 2019 | Heritage interior | Panorama | 1 | Subset from site | K-means clustering | Accu. 0.91 | + |

| Authors | Scene | Trainset | Methods | Feature | Dataset size (points) | Labels | Evaluation |
|---|---|---|---|---|---|---|---|
| Sun et al., 2018 | Urban | Subset from site | RF on supervoxels | Covariance features | - | 8 | Ov. Acc. 0.92 |
| Grilli et al., 2019 | Heritage exterior | Subset from site | RF | Covariance features | 2.2, 1.1 million | 10, 14 | Avg. F1 0.92, 0.82 |
| Teruggi et al., 2020 | Heritage complex | Subset from site | RF | Covariance features | 3.6 billion | 25 | F1 0.94-0.99 |
| Zhang et al., 2022 | Heritage complex | Subset from related site | RF | Covariance features | 354.2 million | 18 | F1 0.92-0.97 |
| | | | | | 24.2 million | 18 | F1 0.92-0.99 |
| | | | | | 156 million | 19 | F1 0.90-0.99 |
| Grilli et al., 2020 | Heritage exterior | Individual case | RF | Covariance features | 6-14 million | 9 | Acc. 0.78-0.98 |
| Cao et al., 2022 | ArCH | Subset from dataset | RF & DGCNN | Covariance features and none | 14, 10, 17 million | 9 | Ov. Acc. 0.62-0.98 |
| Malinverni et al., 2019 | ArCH | Subset from dataset | PointNet++ | - | 25-569 million | 4 | Wgt. Avg. F1 0.31 |
| Pierdicca et al., 2020 | ArCH | Subset from dataset | Modified DGCNN | HSV + normals | Avg. 114 million | 10 | Mean Acc. 0.74 |
| Matrone et al., 2020 | ArCH | Subset from dataset | Modified DGCNN | Covariance features | Avg. 114 million | 10 | Wgt. F1 0.82-0.91 |
| Cao et al., 2022 | ArCH | S3DIS (interior) | PointNet | - | Avg. 114 million | 10 | mIoU 0.31 |
| | | | KPConv | | | | mIoU 0.65 |
| | | | DGCNN | | | | mIoU 0.47 |
| Cao et al., 2020 | ArCH | Subset from dataset | 3DLEB-Net | - | Avg. 114 million | 10 | Ov. Acc. 0.67-0.77 |
| Ma et al., 2020 | S3DIS | Synthetic data from dataset | DGCNN | - | 695+ million | 12 | Avg. Prec. 0.53-0.65 |
| Landrieu and Simonovsky, 2018 | S3DIS | Subset from dataset | Superpoint Graph + GCNN | - | 695 million | 12 | Ov. Acc. 0.86 |
| Li et al., 2019 | S3DIS | Subset from dataset | ResGCN-28 | - | 695 million | 12 | Ov. Acc. 0.86 |
| Hu et al., 2020 | S3DIS | Subset from dataset | RandLA-Net | - | 695 million | 12 | Ov. Acc. 0.88 |
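The tables above mix several scores (Ov. Acc., Mean Acc., Wgt. F1, mIoU), which are not directly comparable. As a reminder of how they relate, the following NumPy sketch computes common definitions of these scores from a per-class confusion matrix. Individual studies may define them slightly differently (for example, which classes are averaged or how empty classes are handled), so the function and the made-up 2-class matrix are illustrative and do not reproduce any cited evaluation.

```python
import numpy as np

def segmentation_metrics(confusion: np.ndarray) -> dict:
    """Common scores from a K x K confusion matrix (rows: ground truth, columns: prediction)."""
    tp = np.diag(confusion).astype(float)
    fp = confusion.sum(axis=0) - tp          # predicted as class k but belonging to another class
    fn = confusion.sum(axis=1) - tp          # points of class k predicted as another class
    support = confusion.sum(axis=1)          # ground-truth points per class
    precision = tp / np.maximum(tp + fp, 1)  # guard against classes that are never predicted
    recall = tp / np.maximum(tp + fn, 1)
    f1 = 2 * precision * recall / np.maximum(precision + recall, 1e-12)
    iou = tp / np.maximum(tp + fp + fn, 1)
    return {
        "overall_accuracy": tp.sum() / confusion.sum(),
        "mean_accuracy": recall.mean(),                     # mean of per-class recall
        "weighted_f1": (f1 * support).sum() / support.sum(),
        "mIoU": iou.mean(),
    }

m = segmentation_metrics(np.array([[50, 10], [5, 35]]))    # hypothetical 2-class result
print({k: round(float(v), 3) for k, v in m.items()})
# {'overall_accuracy': 0.85, 'mean_accuracy': 0.854, 'weighted_f1': 0.851, 'mIoU': 0.735}
```

Overall accuracy is dominated by frequent classes, whereas mIoU and mean accuracy weight every class equally, which is one reason the same model can score very differently across the reported metrics.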

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).