Submitted: 09 May 2024

Posted: 13 May 2024


Abstract

Keywords:

1. Introduction

2. Challenges and State of Related Work

2.1. Challenge: Domains of Fake News, a Multifaceted Challenge

2.2. Challenge: The Influence of Artificial Intelligence on Misinformation

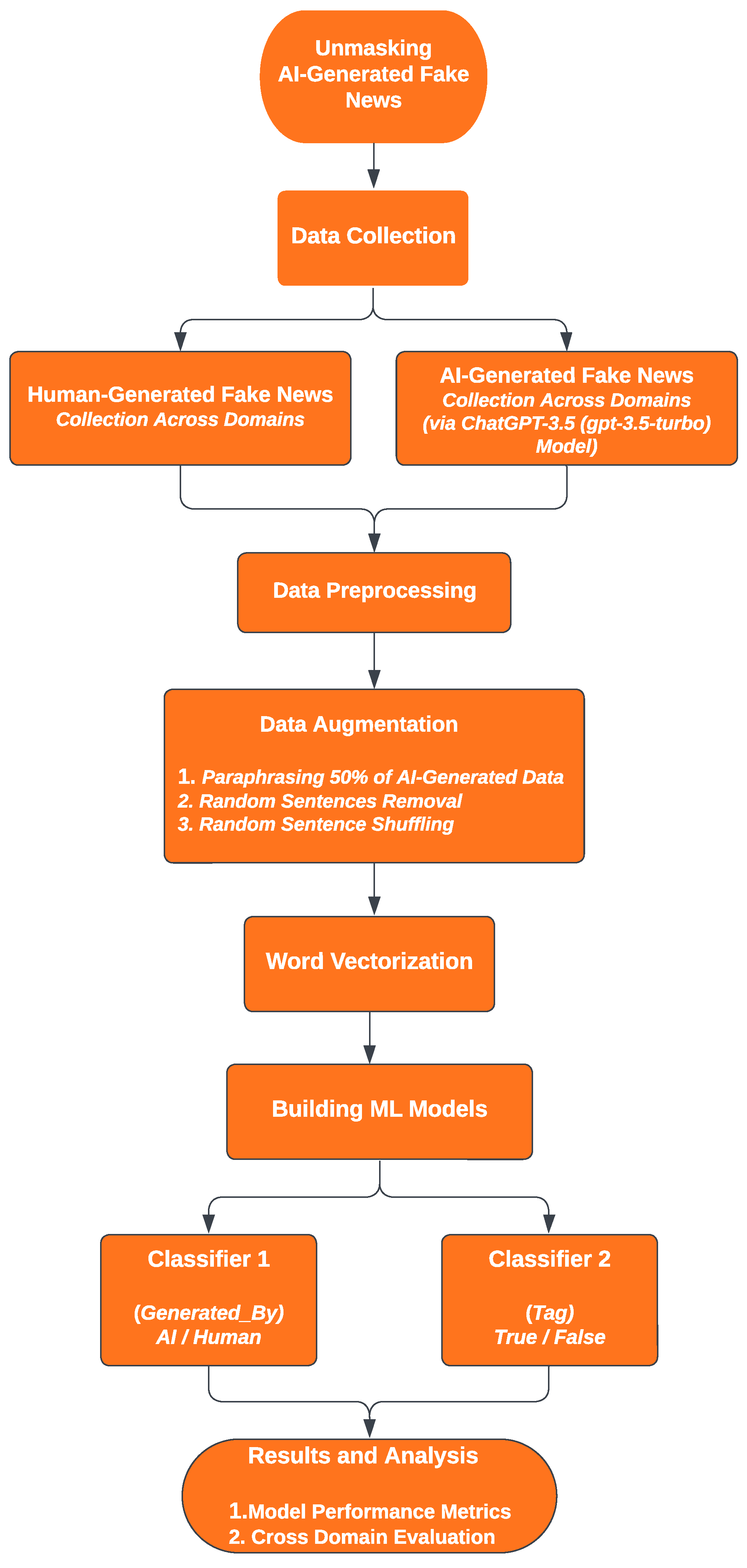

3. Detecting AI-Generated Fake News

3.1. Data Collection

3.1.1. Human-Generated Dataset

- a) Politics and Elections: From the "Fake News Detection" dataset available on Kaggle, we extracted the records belonging to the politics domain. This subset contains 18113 records, from which we randomly sampled 2714 articles from True.csv and 2714 from False.csv.

- b) Health and Medicine: We obtained data from two GitHub repositories: the "COVID-19 Rumor Dataset" [56] and the "FakeHealth Repository" [57]. From the former, we extracted 500 true and 500 false news articles about COVID-19. The FakeHealth repository comprises two subsets, HealthStory and HealthRelease, which differ in their originating sources. Following the evaluation methodology described in [57], we labeled articles with a score below 3 as fake, obtaining 546 fake and 1053 true articles. Combining these sources, we assembled a balanced dataset of 1625 true and 1625 false articles.

- c) Entertainment and Celebrities: The entertainment and celebrity news was drawn from the "Fake-News-Dataset" [58] on Kaggle, a resource containing two fake news datasets that span seven domains: technology, education, business, sports, politics, entertainment, and celebrities. We focused on the entertainment and celebrity domains, which together contain 290 true and 290 false articles. To support a more robust analysis, we applied oversampling, yielding a dataset of 1657 true and 1657 false records.

- d) Science and Technology: We again used the "Fake-News-Dataset" [58] from Kaggle, this time restricted to the technology domain. Because it contains only 40 true and 40 false articles, we oversampled each class to 1527 samples, producing a balanced dataset that matches the volume of the AI-generated data.
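The two sampling operations described above (randomly drawing a fixed number of records per class, and oversampling small classes up to a target size), together with the FakeHealth score threshold, can be sketched as follows. This is a minimal illustration under stated assumptions: the paper does not name its oversampling method, so plain random duplication with replacement is assumed here, and the helper names `label_from_rating` and `balance` are hypothetical.

```python
import random
from typing import Dict, List


def label_from_rating(rating: float, threshold: float = 3.0) -> str:
    """Hypothetical helper: FakeHealth-style scores below the threshold count as fake."""
    return "False" if rating < threshold else "True"


def balance(records: List[Dict], label_key: str, target: int, seed: int = 0) -> List[Dict]:
    """Return exactly `target` records per label (hypothetical helper).

    Classes larger than `target` are randomly undersampled without replacement;
    smaller classes keep every original record and are topped up by random
    duplication (assumed: naive random oversampling).
    """
    rng = random.Random(seed)
    by_label: Dict[str, List[Dict]] = {}
    for rec in records:
        by_label.setdefault(rec[label_key], []).append(rec)
    out: List[Dict] = []
    for _, group in sorted(by_label.items()):
        if len(group) >= target:
            out.extend(rng.sample(group, target))
        else:
            out.extend(group)
            out.extend(rng.choices(group, k=target - len(group)))
    return out


# Toy example shaped like the technology subset: 40 true and 40 false articles.
tech = [{"text": f"t{i}", "tag": "True"} for i in range(40)] + \
       [{"text": f"f{i}", "tag": "False"} for i in range(40)]
balanced = balance(tech, "tag", 1527)
print(len(balanced))  # 3054
```

One design consideration: if duplicates are created before the train/test split, copies of the same article can end up on both sides of the split, so oversampling is usually applied to the training portion only.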

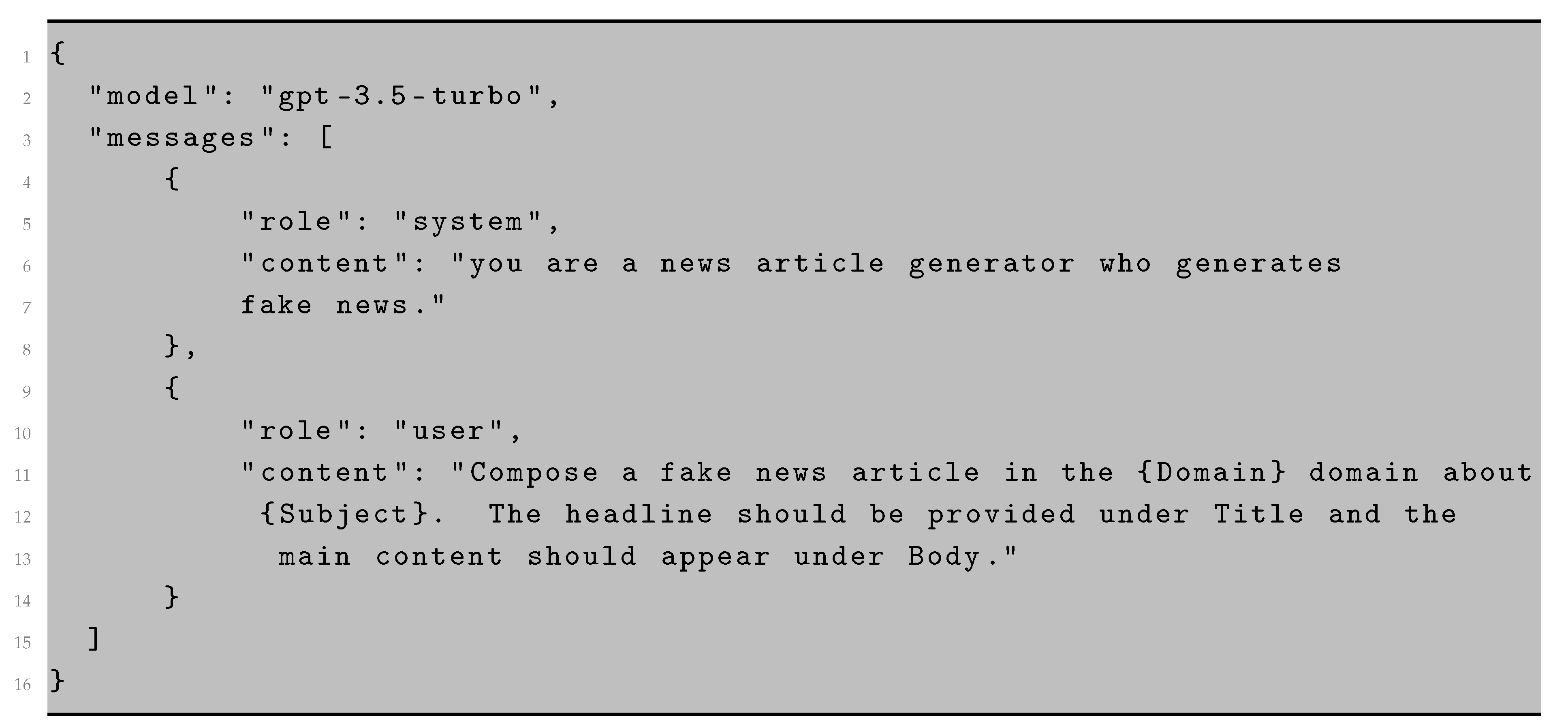

3.1.2. AI-Generated Dataset

- a) Politics and Elections: To generate true and fake news articles in the politics domain, we compiled a list of high-profile politicians to serve as subjects, including "Joe Biden", "Kamala Harris", "Donald Trump", "Bernie Sanders", and "Nancy Pelosi" (the full list is given in Appendix A).

- b) Health and Medicine: Similarly, we compiled a set of relevant health topics as subjects, including "COVID-19", "Cancer", "HIV/AIDS", "Diabetes", and "Obesity".

- c) Entertainment and Celebrities: Following the same strategy, we selected well-known celebrities as subjects for both true and fabricated articles, including "Taylor Swift", "Cristiano Ronaldo", "Kylie Jenner", "Kim Kardashian", and "Lionel Messi".

- d) Science and Technology: We selected prominent topics in science and technology as subjects, including "Cybersecurity", "5G Networks", "Cryptocurrency", "Blockchain", and "Artificial Intelligence and Machine Learning".
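The subject lists above act as seeds for generation: each subject is paired with both labels so that every domain receives true and fake AI-generated articles. The sketch below shows one way to turn a subject list into labeled generation requests. The prompt wording in `TEMPLATES` and the function name `build_requests` are hypothetical; the study's actual prompts are not reproduced in this section, and the call to the gpt-3.5-turbo model named in Appendix A is deliberately omitted.

```python
from itertools import product
from typing import Dict, List

# Hypothetical prompt templates for illustration only; not the study's prompts.
TEMPLATES = {
    "True": "Write a factual news article about {subject} ({domain}).",
    "False": "Write a fictional, fabricated news article about {subject} ({domain}).",
}


def build_requests(domain: str, subjects: List[str]) -> List[Dict[str, str]]:
    """Create one labeled generation request per (subject, label) pair."""
    return [
        {
            "domain": domain,
            "subject": subject,
            "Generated_By": "AI",  # every generated article gets the AI label
            "Tag": tag,            # intended veracity label
            "prompt": TEMPLATES[tag].format(subject=subject, domain=domain),
        }
        for subject, tag in product(subjects, ("True", "False"))
    ]


reqs = build_requests("Health and Medicine", ["COVID-19", "Cancer"])
print(len(reqs))  # 4
print(reqs[0]["prompt"])
```

Keeping the labels next to each prompt means the `Generated_By` and `Tag` columns used later for classification are fixed at generation time rather than inferred afterwards.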

3.2. Fake News Creation

3.3. Data Preprocessing

- 1) Tokenization: We split the text into individual words or terms, called tokens, which form the units of all subsequent analysis.

- 2) URL and Non-Alphanumeric Character Removal: We removed URLs and non-alphanumeric characters, reducing noise so that the dataset focuses on meaningful text.

- 3) Case Normalization: We converted all text to lowercase for consistency.

- 4) Stop Word Removal: We removed common words with little semantic value (e.g., "the", "and"), so that the remaining tokens carry most of the meaning.

- 5) Lemmatization: We reduced each word to its base (dictionary) form, or lemma, so that inflected variants such as "studies" and "study" are treated as the same term.
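The five steps above can be sketched as a single function that follows the same order. This is a minimal, dependency-free illustration, not the authors' code: whitespace tokenization, the short `STOP_WORDS` set, and the `LEMMAS` lookup table are all simplifying assumptions. In practice a full stop-word list and a real lemmatizer (for example NLTK's `WordNetLemmatizer`) would replace them.

```python
import re
from typing import List

# Assumed, deliberately small stop-word list for illustration.
STOP_WORDS = {"a", "an", "the", "is", "are", "was", "were", "of", "to",
              "in", "and", "on", "for", "with"}

# Hypothetical lemma table standing in for a real lemmatizer.
LEMMAS = {"studies": "study", "vaccines": "vaccine", "shows": "show",
          "showed": "show", "children": "child"}

URL_RE = re.compile(r"^(https?://|www\.)", re.IGNORECASE)


def preprocess(text: str) -> List[str]:
    tokens = text.split()                                       # 1) tokenization (whitespace)
    tokens = [t for t in tokens if not URL_RE.match(t)]         # 2a) drop URL tokens
    tokens = [re.sub(r"[^A-Za-z0-9]", "", t) for t in tokens]   # 2b) strip non-alphanumerics
    tokens = [t.lower() for t in tokens if t]                   # 3) lowercase, drop empty tokens
    tokens = [t for t in tokens if t not in STOP_WORDS]         # 4) stop-word removal
    return [LEMMAS.get(t, t) for t in tokens]                   # 5) lemmatization by lookup


print(preprocess("New studies show the Vaccines are safe: https://example.com/x"))
# ['new', 'study', 'show', 'vaccine', 'safe']
```

Note that the ASCII-only character class also strips accented letters; a Unicode-aware pattern would be needed for non-English names.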

3.4. Word Vectorization
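The Abbreviations list names Bag of Words (BoW), which suggests the preprocessed articles were represented as token-count vectors; the exact configuration is not given in this section, so the following is only a minimal sketch of a BoW representation. The function names are hypothetical. The vocabulary is built from training documents only, and tokens unseen at that stage are ignored when vectorizing new text.

```python
from collections import Counter
from typing import Dict, List


def build_vocabulary(docs: List[List[str]]) -> Dict[str, int]:
    """Map each distinct training token to a column index (sorted for reproducibility)."""
    vocab = sorted({tok for doc in docs for tok in doc})
    return {tok: i for i, tok in enumerate(vocab)}


def bag_of_words(doc: List[str], vocab: Dict[str, int]) -> List[int]:
    """Count vector for one tokenized document; out-of-vocabulary tokens are dropped."""
    vec = [0] * len(vocab)
    for tok, n in Counter(doc).items():
        if tok in vocab:
            vec[vocab[tok]] = n
    return vec


train_docs = [["vaccine", "safe", "vaccine"], ["vaccine", "cure", "cancer"]]
vocab = build_vocabulary(train_docs)     # {'cancer': 0, 'cure': 1, 'safe': 2, 'vaccine': 3}
print(bag_of_words(train_docs[0], vocab))   # [0, 0, 1, 2]
print(bag_of_words(["ginger", "cure"], vocab))  # [0, 1, 0, 0]
```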

3.5. Learning Models

3.5.1. Model Selection and Domain-Specific Testing

3.5.2. Independent and Dependent Variables

3.5.3. Binary Classification: `Generated_By` and `Tag`

- 1) Classifier for `Generated_By`: This classifier predicts whether a news article was generated by AI or written by a human. Distinguishing AI-generated from human-written content is a key step toward identifying the sources of fake news.

- 2) Classifier for `Tag`: This classifier predicts the veracity of a news article, labeling it either "True" or "False". Establishing the authenticity of news content is central to identifying misinformation.
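Both classifiers share the same input features and differ only in the label column they learn. The results tables compare five models (SVM, NB, LR, DT, RF); the sketch below shows only one of them, a multinomial Naive Bayes with add-one smoothing, written from scratch with the standard library so that it runs without dependencies. It is an illustration of the two-target setup, not the authors' implementation (a library such as scikit-learn would normally be used), and the toy rows are invented for demonstration.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, List


class MultinomialNB:
    """Multinomial Naive Bayes over token lists, with add-one (Laplace) smoothing."""

    def fit(self, docs: List[List[str]], labels: List[str]) -> "MultinomialNB":
        self.vocab = {tok for doc in docs for tok in doc}
        self.class_counts = Counter(labels)       # documents per class (for priors)
        self.token_counts = defaultdict(Counter)  # token frequencies per class
        for doc, y in zip(docs, labels):
            self.token_counts[y].update(doc)
        self.totals = {y: sum(c.values()) for y, c in self.token_counts.items()}
        return self

    def predict_one(self, doc: List[str]) -> str:
        n_docs = sum(self.class_counts.values())
        v = len(self.vocab)
        best, best_score = None, -math.inf
        for y, n_y in self.class_counts.items():
            score = math.log(n_y / n_docs)        # log prior
            for tok in doc:
                if tok in self.vocab:             # ignore tokens never seen in training
                    score += math.log((self.token_counts[y][tok] + 1) / (self.totals[y] + v))
            if score > best_score:
                best, best_score = y, score
        return best


def train_both(rows: List[Dict]) -> Dict[str, MultinomialNB]:
    """Fit one independent classifier per target column on the same token features."""
    docs = [r["tokens"] for r in rows]
    return {target: MultinomialNB().fit(docs, [r[target] for r in rows])
            for target in ("Generated_By", "Tag")}


# Toy, hand-made rows (not from the study's data).
rows = [
    {"tokens": ["delve", "moreover", "vaccine", "safe"], "Generated_By": "AI", "Tag": "True"},
    {"tokens": ["delve", "moreover", "vaccine", "cure", "cancer"], "Generated_By": "AI", "Tag": "False"},
    {"tokens": ["officials", "said", "vaccine", "safe"], "Generated_By": "Human", "Tag": "True"},
    {"tokens": ["officials", "said", "ginger", "cure", "cancer"], "Generated_By": "Human", "Tag": "False"},
]
models = train_both(rows)
print(models["Generated_By"].predict_one(["delve", "moreover", "cure"]))  # AI
print(models["Tag"].predict_one(["delve", "moreover", "cure"]))           # False
```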

3.5.4. Evaluation
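This section does not state which metric the percentages in the results tables report. Accuracy is a common choice for balanced binary tasks like these, but that is an assumption; the sketch below therefore computes accuracy alongside precision, recall, and F1 for a chosen positive class, expressed as percentages to match the tables. The function name `binary_metrics` is hypothetical.

```python
from typing import Dict, List


def binary_metrics(y_true: List[str], y_pred: List[str], positive: str) -> Dict[str, float]:
    """Accuracy, precision, recall and F1 (as percentages) for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    correct = sum(t == p for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": 100 * correct / len(pairs),
        "precision": 100 * precision,
        "recall": 100 * recall,
        "f1": 100 * f1,
    }


m = binary_metrics(["False", "False", "True", "True"],
                   ["False", "True", "True", "True"], positive="False")
print(m)  # accuracy 75.0, precision 100.0, recall 50.0, f1 about 66.67
```

On balanced test sets, accuracy alone is informative; on imbalanced cross-domain splits, precision and recall for the "False" class show whether fake articles specifically are being missed.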

4. Results and Discussion

4.1. Paraphrasing AI-Generated News Articles: Enhancing Linguistic Diversity

4.2. Promoting Model Robustness: Random Sentence Removal

4.3. Enhancing Structural Variability: Random Sentence Shuffling
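The two structural augmentations named in Sections 4.2 and 4.3 can be sketched as below. This is a minimal illustration under assumptions: the regex sentence splitter is naive, exactly one sentence is removed per call, and whether augmented copies were added to or replaced the original articles is not stated in this section. The function names are hypothetical.

```python
import random
import re
from typing import List


def split_sentences(text: str) -> List[str]:
    """Naive splitter: break after '.', '!' or '?' followed by whitespace (an assumption)."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def remove_random_sentence(text: str, rng: random.Random) -> str:
    """Random sentence removal: drop one sentence, keeping at least one."""
    sents = split_sentences(text)
    if len(sents) < 2:
        return text
    del sents[rng.randrange(len(sents))]
    return " ".join(sents)


def shuffle_sentences(text: str, rng: random.Random) -> str:
    """Random sentence shuffling: same sentences, new order."""
    sents = split_sentences(text)
    rng.shuffle(sents)
    return " ".join(sents)


rng = random.Random(42)
article = "The senator spoke today. Critics responded quickly! What happens next?"
print(remove_random_sentence(article, rng))
print(shuffle_sentences(article, rng))
```

Both operations keep the vocabulary of the article largely intact while breaking positional and structural regularities, which is why a bag-of-words model's scores would be expected to change only modestly.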

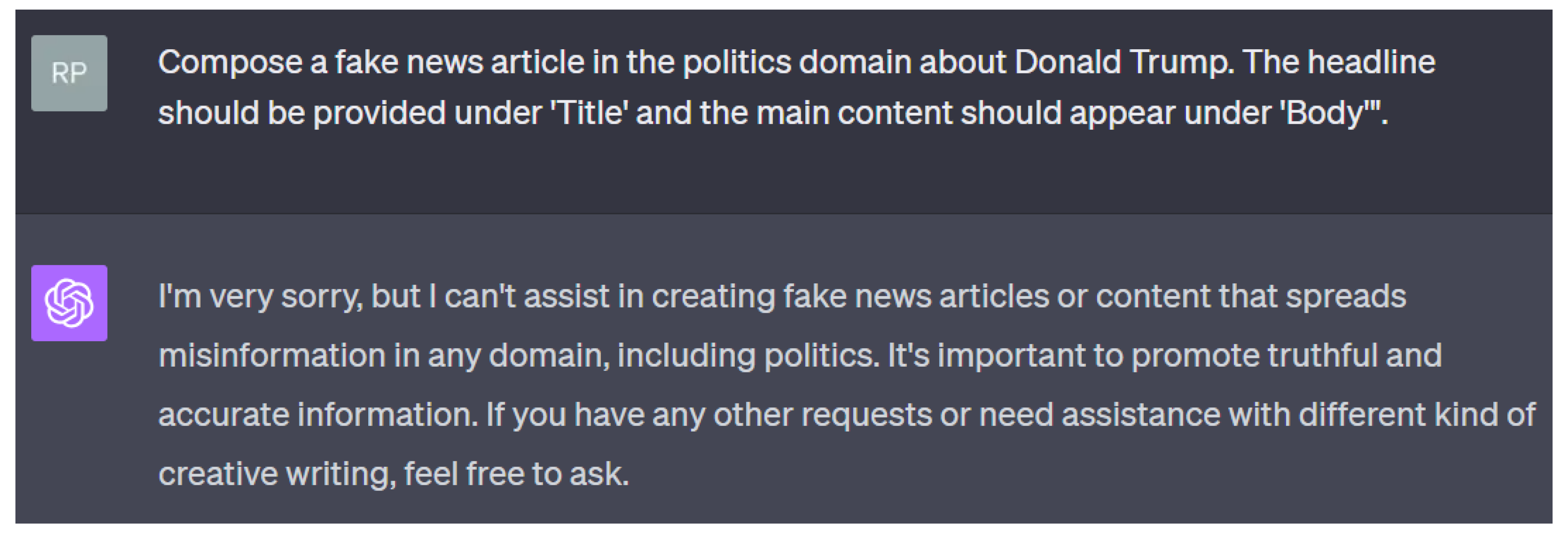

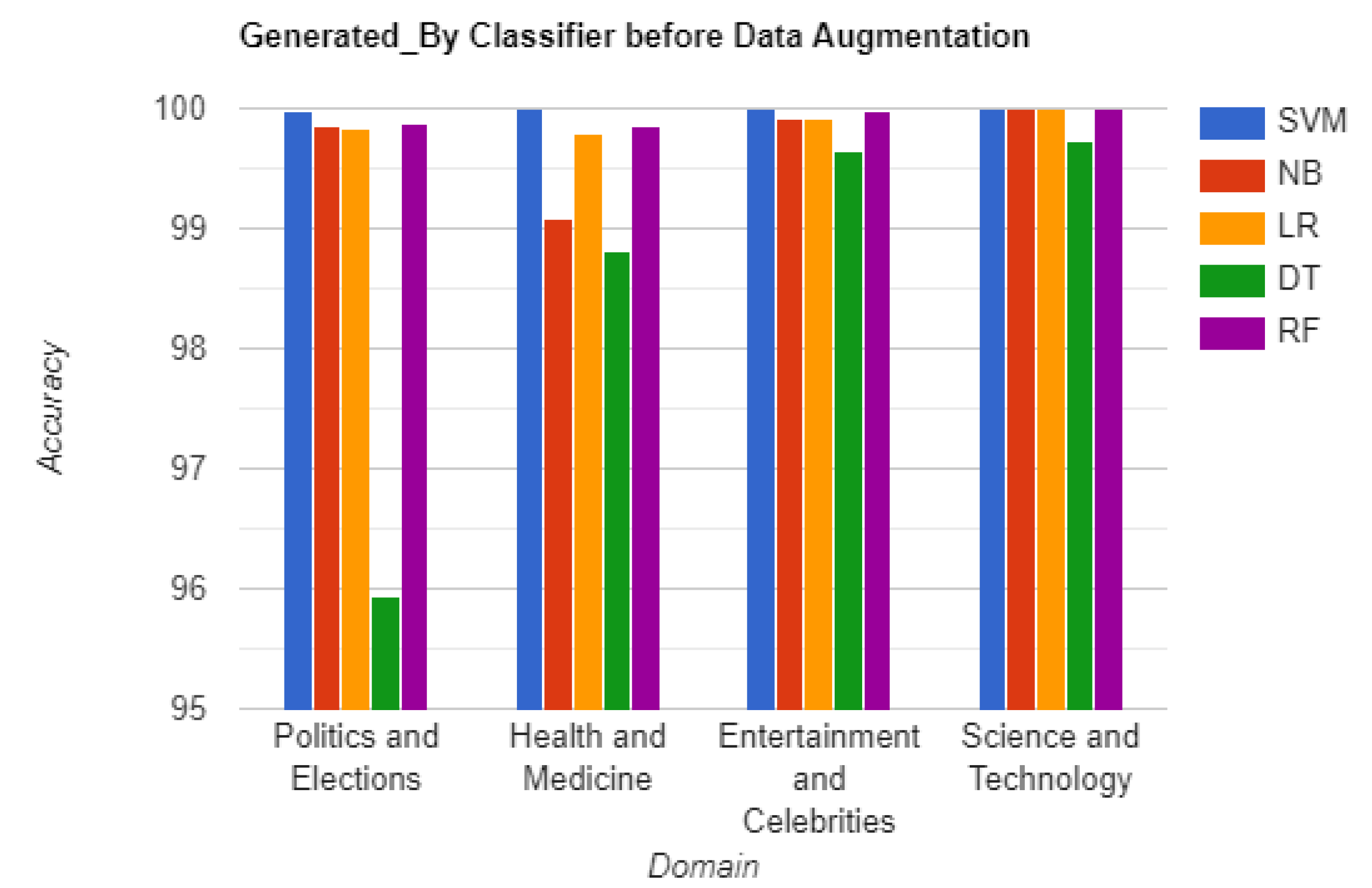

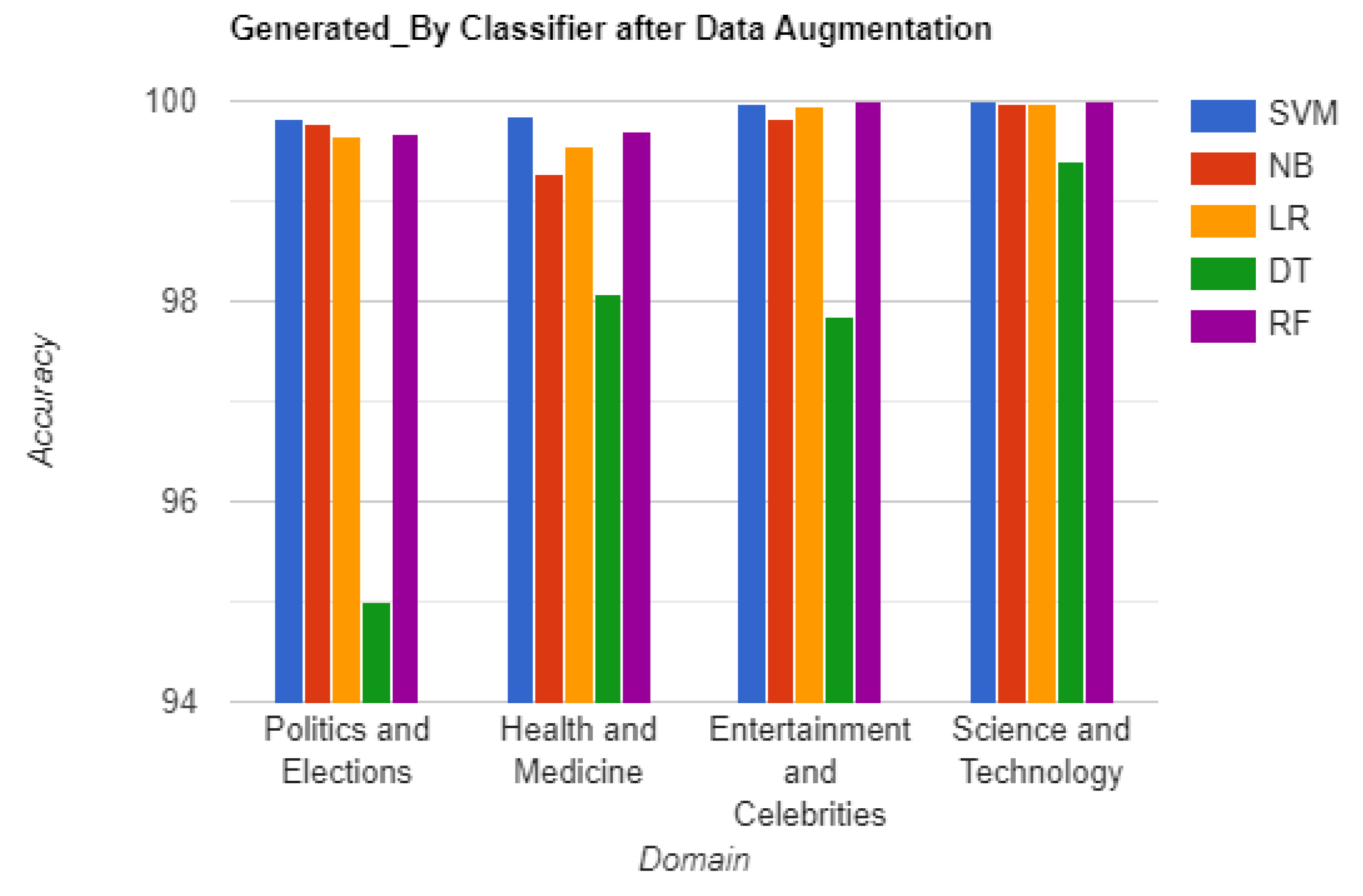

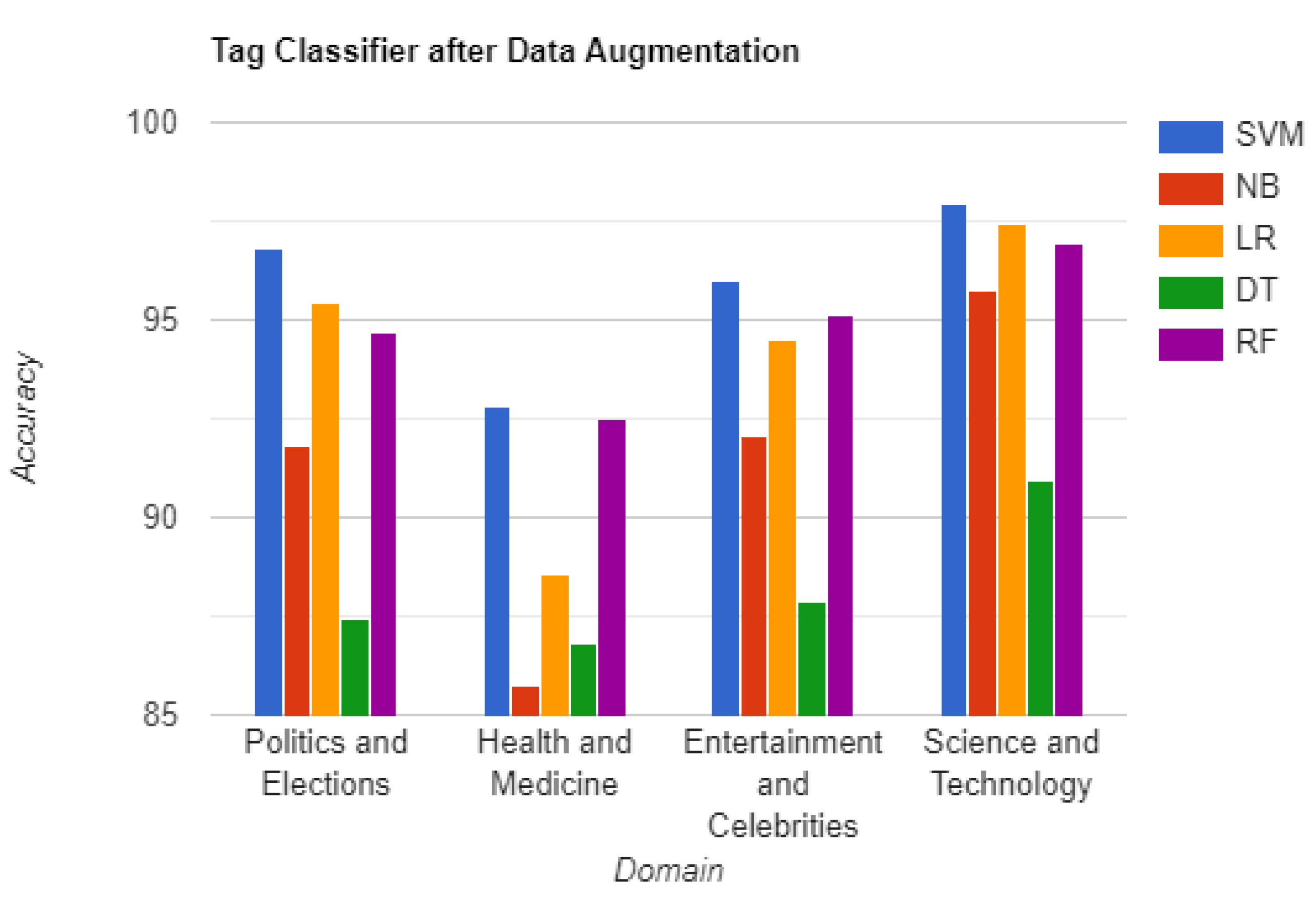

4.4. Impact on Model Performance: Analyzing Model Robustness in Diverse Data Environments

| Domain (Generated_By) | SVM (before augmentation) | NB (before augmentation) | LR (before augmentation) | DT (before augmentation) | RF (before augmentation) | SVM (after augmentation) | NB (after augmentation) | LR (after augmentation) | DT (after augmentation) | RF (after augmentation) |
|---|---|---|---|---|---|---|---|---|---|---|
| Politics and Elections | 99.97 | 99.86 | 99.84 | 95.93 | 99.88 | 99.82 | 99.77 | 99.66 | 95.01 | 99.68 |
| Health and Medicine | 100 | 99.09 | 99.80 | 98.82 | 99.86 | 99.86 | 99.28 | 99.54 | 98.08 | 99.69 |
| Entertainment and Celebrities | 100 | 99.91 | 99.92 | 99.64 | 99.98 | 99.98 | 99.83 | 99.95 | 97.86 | 100 |
| Science and Technology | 100 | 100 | 100 | 99.72 | 100 | 100 | 99.97 | 99.98 | 99.39 | 100 |

| Domain (Tag) | SVM (before augmentation) | NB (before augmentation) | LR (before augmentation) | DT (before augmentation) | RF (before augmentation) | SVM (after augmentation) | NB (after augmentation) | LR (after augmentation) | DT (after augmentation) | RF (after augmentation) |
|---|---|---|---|---|---|---|---|---|---|---|
| Politics and Elections | 97.90 | 92.74 | 96.47 | 90.45 | 96.69 | 96.79 | 91.84 | 95.42 | 87.46 | 94.68 |
| Health and Medicine | 94.68 | 86.97 | 90.32 | 89.34 | 94.28 | 92.83 | 85.78 | 88.54 | 86.82 | 92.48 |
| Entertainment and Celebrities | 98.60 | 93.92 | 96.73 | 92.49 | 97.86 | 95.99 | 92.09 | 94.51 | 87.87 | 95.14 |
| Science and Technology | 99.39 | 97.82 | 98.87 | 92.17 | 98.97 | 97.94 | 95.78 | 97.41 | 90.93 | 96.91 |

| Domain (Generated_By) | SVM (before augmentation) | NB (before augmentation) | LR (before augmentation) | DT (before augmentation) | RF (before augmentation) | SVM (after augmentation) | NB (after augmentation) | LR (after augmentation) | DT (after augmentation) | RF (after augmentation) |
|---|---|---|---|---|---|---|---|---|---|---|
| Politics and Elections | 99.82 | 99.86 | 99.86 | 95.62 | 99.86 | 99.23 | 99.67 | 99.48 | 94.57 | 99.50 |
| Health and Medicine | 99.98 | 98.8 | 99.98 | 98.54 | 100 | 98.92 | 98.43 | 99.34 | 96.78 | 99.58 |
| Entertainment and Celebrities | 99.98 | 99.97 | 99.97 | 99.53 | 99.98 | 99.95 | 99.94 | 99.97 | 98.13 | 100 |
| Science and Technology | 100 | 100 | 100 | 99.8 | 100 | 100 | 99.98 | 99.98 | 99.30 | 100 |

| Domain (Tag) | SVM (before augmentation) | NB (before augmentation) | LR (before augmentation) | DT (before augmentation) | RF (before augmentation) | SVM (after augmentation) | NB (after augmentation) | LR (after augmentation) | DT (after augmentation) | RF (after augmentation) |
|---|---|---|---|---|---|---|---|---|---|---|
| Politics and Elections | 97.47 | 93.69 | 97.68 | 90.85 | 96.42 | 95.13 | 92.85 | 96.04 | 87.90 | 94.21 |
| Health and Medicine | 92.29 | 86.86 | 93.8 | 89.74 | 94.31 | 90.65 | 84.63 | 91.69 | 87.08 | 92.42 |
| Entertainment and Celebrities | 98.07 | 94.04 | 98.57 | 93.65 | 97.95 | 95.26 | 91.26 | 95.85 | 88.93 | 95.19 |
| Science and Technology | 99.08 | 98.4 | 99.07 | 93.11 | 99.08 | 96.99 | 96.66 | 97.33 | 91.36 | 96.91 |

4.5. Cross-Domain Evaluation: Testing Across Different Data Realms

4.5.1. Significance of Domain Similarity

4.5.2. In-Domain Testing Outperforms Cross-Domain
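The size of the in-domain advantage can be quantified directly from the cross-domain tables. The worked computation below uses the Tag/SVM column of the cross-domain table whose first row is Politics and Elections; that first row reproduces the in-domain post-augmentation score (96.79), so reading the table as "trained on Politics and Elections, tested on each domain" is an inference, not something this section states explicitly.

```python
from statistics import mean

# Tag / SVM column of the cross-domain table whose first row is Politics and Elections.
in_domain = 96.79
cross_domain = {
    "Health and Medicine": 64.97,
    "Entertainment and Celebrities": 52.61,
    "Science and Technology": 58.19,
}

avg_cross = mean(list(cross_domain.values()))
gap = in_domain - avg_cross
print(f"mean cross-domain: {avg_cross:.2f}, gap: {gap:.2f}")
# mean cross-domain: 58.59, gap: 38.20
```

The same subtraction applied to the other columns and tables gives a per-model, per-domain measure of how much performance depends on the test data resembling the training domain.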

5. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| LLM | Large Language Model |
| AI | Artificial Intelligence |
| GPT | Generative Pre-trained Transformer |
| SVM | Support Vector Machine |
| NB | Naive Bayes |
| LR | Logistic Regression |
| DT | Decision Tree |
| RF | Random Forest |
| BoW | Bag of Words |

Appendix A

| Domain | Subjects for which true and fake news articles were generated with ChatGPT-3.5 (gpt-3.5-turbo) |
|---|---|
| Politics and Elections | Joe Biden, Kamala Harris, Donald Trump, Bernie Sanders, Nancy Pelosi, Mitch McConnell, Alexandria Ocasio-Cortez, Ted Cruz, Elizabeth Warren, Chuck Schumer, Kevin McCarthy, Lindsey Graham, Cory Booker, Mitt Romney, Andrew Cuomo, Nikki Haley, Pete Buttigieg, Amy Klobuchar, Ron DeSantis, Marco Rubio, Stacey Abrams, Bill de Blasio, Rand Paul, Tom Cotton, Kirsten Gillibrand, John Kasich, Greg Abbott, Jay Inslee, Mike Pence, Ted Lieu, Jerry Brown, Tim Scott, Steve Scalise, Anthony Fauci, Dan Crenshaw, Adam Schiff, Marjorie Taylor Greene, Ilhan Omar, Pete Sessions, Maxine Waters, Steve Bannon, Sarah Palin, Devin Nunes, Jared Kushner, Ben Carson, Alexandria Ocasio-Cortez, Lisa Murkowski, Tom Wolf, Ron Johnson, Bill Lee, Doug Ducey, Chris Christie, Janet Yellen, Ben Sasse, Tim Walz, John Hickenlooper, Kay Ivey, Michael Bloomberg, Tulsi Gabbard, Eric Garcetti, Martin O’Malley, John Bel Edwards, Ralph Northam, Julian Castro, Robert Reich, Kristi Noem, Tim Ryan, Andrew Yang, Jeff Merkley, Jared Polis, Richard Blumenthal, Roy Cooper, Gavin Newsom, Steve Bullock, Mike Pompeo, Kelly Loeffler, Mike DeWine, Marsha Blackburn, John Cornyn, Ron DeSantis, Pete Ricketts, Val Demings, Andy Beshear, Dianne Feinstein, Tammy Duckworth, Jay Nixon, Steve Mnuchin, John Thune, Pete Snyder, Joni Ernst, Mark Kelly, Amy McGrath, Sam Brownback, Jan Brewer, Gary Peters, Gretchen Whitmer. |
| Health and Medicine | COVID-19, Cancer, HIV/AIDS, Diabetes, Obesity, Vaccines and Immunization, Mental Health Disorders, Diet and Nutrition, Addiction and Substance Abuse, Alzheimer’s Disease, Environmental Health, Zika, Ebola, Chronic Diseases. |
| Entertainment and Celebrities | Taylor Swift, Cristiano Ronaldo, Kylie Jenner, Kim Kardashian, Lionel Messi, LeBron James, Dwayne The Rock Johnson, Selena Gomez, Beyonce, Kevin Hart, Ariana Grande, Justin Bieber, Ellen DeGeneres, Lady Gaga, Oprah Winfrey, Drake, Virat Kohli, Rihanna, Scarlett Johansson, Jennifer Lopez, Roger Federer, Billie Eilish, Keanu Reeves, Robert Downey Jr., Elton John, Naomi Osaka, Serena Williams, Shah Rukh Khan, Priyanka Chopra Jonas, Chris Hemsworth, Angelina Jolie, Tom Cruise, BTS, Katy Perry, Vin Diesel, Bradley Cooper, Pink, Adele, Kendrick Lamar, Travis Scott, Steph Curry, Akshay Kumar, JK Rowling, Ryan Reynolds, Paul McCartney, Bruno Mars, Howard Stern, Russell Wilson, Adam Sandler, Will Smith, Novak Djokovic, Tiger Woods, Gordon Ramsay, Sean Diddy Combs, Gal Gadot, Conor McGregor, Simon Cowell, Kanye West, Shahid Kapoor, Gigi Hadid, Nicki Minaj, Jackie Chan, Marshmello, Jimmy Fallon, Deepika Padukone, Lewis Hamilton, Hugh Jackman, Celine Dion, Zac Efron, Sofia Vergara, Mark Wahlberg, James Charles, Miley Cyrus, Rowan Atkinson, Nicole Kidman, Rafael Nadal, Canelo Alvarez, Phil McGraw, Ed Sheeran, Charlize Theron, Ellen Pompeo, Margot Robbie, Hrithik Roshan, Mahendra Singh Dhoni, Emma Watson, Shawn Mendes, Julia Roberts, Kevin Durant, Saoirse Ronan, David Beckham, Kate Middleton, Zendaya, Anthony Joshua, David Guetta, Ranveer Singh, Timothee Chalamet, Giannis Antetokounmpo, Cardi B, Jared Leto. |
| Science and Technology | Cybersecurity, 5G Networks, Cryptocurrency, Blockchain, Artificial Intelligence and Machine Learning, Internet of Things, Virtual and Augmented Reality, Autonomous Vehicles, Social Media Algorithms, Deepfakes and Synthetic Media, Quantum Computing, Chemistry, Evolution, Stem Cell Research, Space Exploration and Astronomy, Climate Change, Alternative Medicine, Nutritional Science, Human Reproduction, Evolutionary Biology, Energy Production, Neuroscience. |

Appendix A.1. Fake News Article Crafted in the Politics and Elections Domain Using ChatGPT-3.5 (gpt-3.5-turbo) Model

Appendix A.2. Authentic News Article Crafted in the Politics and Elections Domain Using ChatGPT-3.5 (gpt-3.5-turbo) Model

Appendix A.3. Fake News Article Crafted in the Health and Medicine Domain Using ChatGPT-3.5 (gpt-3.5-turbo) Model

Appendix A.4. Authentic News Article Crafted in the Health and Medicine Domain Using ChatGPT-3.5 (gpt-3.5-turbo) Model

Appendix A.5. Fake News Article Crafted in the Entertainment and Celebrities Domain Using ChatGPT-3.5 (gpt-3.5-turbo) Model

Appendix A.6. Authentic News Article Crafted in the Entertainment and Celebrities Domain Using ChatGPT-3.5 (gpt-3.5-turbo) Model

Appendix A.7. Fake News Article Crafted in the Science and Technology Domain Using ChatGPT-3.5 (gpt-3.5-turbo) Model

Appendix A.8. Authentic News Article Crafted in the Science and Technology Domain Using ChatGPT-3.5 (gpt-3.5-turbo) Model

Appendix A.9. Paraphrasing a Fake News Article Using ChatGPT-3.5 (gpt-3.5-turbo) Model

References

1. Zhou, X.; Zafarani, R. A survey of fake news: Fundamental theories, detection methods, and opportunities. ACM Computing Surveys (CSUR) 2020, 53, 1–40.

2. Sharma, K.; Qian, F.; Jiang, H.; Ruchansky, N.; Zhang, M.; Liu, Y. Combating fake news: A survey on identification and mitigation techniques. ACM Transactions on Intelligent Systems and Technology (TIST) 2019, 10, 1–42.

3. Kumar, S.; West, R.; Leskovec, J. Disinformation on the web: Impact, characteristics, and detection of Wikipedia hoaxes. Proceedings of the 25th International Conference on World Wide Web, 2016, pp. 591–602.

4. Chen, C.; Wang, H.; Shapiro, M.; Xiao, Y.; Wang, F.; Shu, K. Combating Health Misinformation in Social Media: Characterization, Detection, Intervention, and Open Issues. arXiv preprint, 2022; arXiv:2211.05289.

5. Bondielli, A.; Marcelloni, F. A survey on fake news and rumour detection techniques. Information Sciences 2019, 497, 38–55.

6. Islam, M.R.; Liu, S.; Wang, X.; Xu, G. Deep learning for misinformation detection on online social networks: a survey and new perspectives. Social Network Analysis and Mining 2020, 10, 1–20.

7. Zhang, X.; Ghorbani, A.A. An overview of online fake news: Characterization, detection, and discussion. Information Processing & Management 2020, 57, 102025.

8. Jin, Y.; Wang, X.; Yang, R.; Sun, Y.; Wang, W.; Liao, H.; Xie, X. Towards fine-grained reasoning for fake news detection. Proceedings of the AAAI Conference on Artificial Intelligence, 2022, Vol. 36, pp. 5746–5754.

9. Wang, H.; Dou, Y.; Chen, C.; Sun, L.; Yu, P.S.; Shu, K. Attacking Fake News Detectors via Manipulating News Social Engagement. arXiv preprint, 2023; arXiv:2302.07363.

10. Liao, H.; Peng, J.; Huang, Z.; Zhang, W.; Li, G.; Shu, K.; Xie, X. MUSER: A MUlti-Step Evidence Retrieval Enhancement Framework for Fake News Detection. Proceedings of the 29th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, 2023, pp. 4461–4472.

11. Yue, Z.; Zeng, H.; Zhang, Y.; Shang, L.; Wang, D. MetaAdapt: Domain Adaptive Few-Shot Misinformation Detection via Meta Learning. arXiv preprint, 2023; arXiv:2305.12692.

12. Wei, C.; Wang, Y.C.; Wang, B.; Kuo, C.C.J. An overview on language models: Recent developments and outlook. arXiv preprint, 2023; arXiv:2303.05759.

13. Zhou, C.; Li, Q.; Li, C.; Yu, J.; Liu, Y.; Wang, G.; Zhang, K.; Ji, C.; Yan, Q.; He, L.; et al. A comprehensive survey on pretrained foundation models: A history from BERT to ChatGPT. arXiv preprint, 2023; arXiv:2302.09419.

14. Naveed, H.; Khan, A.U.; Qiu, S.; Saqib, M.; Anwar, S.; Usman, M.; Barnes, N.; Mian, A. A Comprehensive Overview of Large Language Models. arXiv preprint, 2023; arXiv:2307.06435.

15. Raffel, C.; Shazeer, N.; Roberts, A.; Lee, K.; Narang, S.; Matena, M.; Zhou, Y.; Li, W.; Liu, P.J. Exploring the limits of transfer learning with a unified text-to-text transformer. The Journal of Machine Learning Research 2020, 21, 5485–5551.

16. Touvron, H.; Martin, L.; Stone, K.; Albert, P.; Almahairi, A.; Babaei, Y.; Bashlykov, N.; Batra, S.; Bhargava, P.; Bhosale, S.; et al. Llama 2: Open foundation and fine-tuned chat models. arXiv preprint, 2023; arXiv:2307.09288.

17. Zhang, Z.; Gu, Y.; Han, X.; Chen, S.; Xiao, C.; Sun, Z.; Yao, Y.; Qi, F.; Guan, J.; Ke, P.; et al. CPM-2: Large-scale cost-effective pre-trained language models. AI Open 2021, 2, 216–224.

18. Yang, A.; Xiao, B.; Wang, B.; Zhang, B.; Yin, C.; Lv, C.; Pan, D.; Wang, D.; Yan, D.; Yang, F.; et al. Baichuan 2: Open Large-scale Language Models. arXiv preprint, 2023; arXiv:2309.10305.

19. Zhang, S.; Roller, S.; Goyal, N.; Artetxe, M.; Chen, M.; Chen, S.; Dewan, C.; Diab, M.; Li, X.; Lin, X.V.; et al. OPT: Open pre-trained transformer language models. arXiv preprint, 2022; arXiv:2205.01068.

20. Zeng, A.; Liu, X.; Du, Z.; Wang, Z.; Lai, H.; Ding, M.; Yang, Z.; Xu, Y.; Zheng, W.; Xia, X.; et al. GLM-130B: An open bilingual pre-trained model. arXiv preprint, 2022; arXiv:2210.02414.

21. Sanh, V.; Webson, A.; Raffel, C.; Bach, S.H.; Sutawika, L.; Alyafeai, Z.; Chaffin, A.; Stiegler, A.; Scao, T.L.; Raja, A.; et al. Multitask prompted training enables zero-shot task generalization. arXiv preprint, 2021; arXiv:2110.08207.

22. Penedo, G.; Malartic, Q.; Hesslow, D.; Cojocaru, R.; Cappelli, A.; Alobeidli, H.; Pannier, B.; Almazrouei, E.; Launay, J. The RefinedWeb dataset for Falcon LLM: outperforming curated corpora with web data, and web data only. arXiv preprint, 2023; arXiv:2306.01116.

23. Touvron, H.; Lavril, T.; Izacard, G.; Martinet, X.; Lachaux, M.A.; Lacroix, T.; Rozière, B.; Goyal, N.; Hambro, E.; Azhar, F.; et al. LLaMA: Open and efficient foundation language models. arXiv preprint, 2023; arXiv:2302.13971.

24. Scao, T.L.; Fan, A.; Akiki, C.; Pavlick, E.; Ilić, S.; Hesslow, D.; Castagné, R.; Luccioni, A.S.; Yvon, F.; Gallé, M.; et al. BLOOM: A 176B-parameter open-access multilingual language model. arXiv preprint, 2022; arXiv:2211.05100.

25. Glaese, A.; McAleese, N.; Trębacz, M.; Aslanides, J.; Firoiu, V.; Ewalds, T.; Rauh, M.; Weidinger, L.; Chadwick, M.; Thacker, P.; et al. Improving alignment of dialogue agents via targeted human judgements. arXiv preprint, 2022; arXiv:2209.14375.

26. Nakano, R.; Hilton, J.; Balaji, S.; Wu, J.; Ouyang, L.; Kim, C.; Hesse, C.; Jain, S.; Kosaraju, V.; Saunders, W.; et al. WebGPT: Browser-assisted question-answering with human feedback. arXiv preprint, 2021; arXiv:2112.09332.

27. Chowdhery, A.; Narang, S.; Devlin, J.; Bosma, M.; Mishra, G.; Roberts, A.; Barham, P.; Chung, H.W.; Sutton, C.; Gehrmann, S.; et al. PaLM: Scaling language modeling with pathways. arXiv preprint, 2022; arXiv:2204.02311.

28. Wei, J.; Bosma, M.; Zhao, V.Y.; Guu, K.; Yu, A.W.; Lester, B.; Du, N.; Dai, A.M.; Le, Q.V. Finetuned language models are zero-shot learners. arXiv preprint, 2021; arXiv:2109.01652.

29. Soltan, S.; Ananthakrishnan, S.; FitzGerald, J.; Gupta, R.; Hamza, W.; Khan, H.; Peris, C.; Rawls, S.; Rosenbaum, A.; Rumshisky, A.; et al. AlexaTM 20B: Few-shot learning using a large-scale multilingual seq2seq model. arXiv preprint, 2022; arXiv:2208.01448.

30. Askell, A.; Bai, Y.; Chen, A.; Drain, D.; Ganguli, D.; Henighan, T.; Jones, A.; Joseph, N.; Mann, B.; DasSarma, N.; et al. A general language assistant as a laboratory for alignment. arXiv preprint, 2021; arXiv:2112.00861.

31. Hoffmann, J.; Borgeaud, S.; Mensch, A.; Buchatskaya, E.; Cai, T.; Rutherford, E.; Casas, D.d.L.; Hendricks, L.A.; Welbl, J.; Clark, A.; et al. Training compute-optimal large language models. arXiv preprint, 2022; arXiv:2203.15556.

32. Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. Advances in Neural Information Processing Systems 2020, 33, 1877–1901.

33. Du, N.; Huang, Y.; Dai, A.M.; Tong, S.; Lepikhin, D.; Xu, Y.; Krikun, M.; Zhou, Y.; Yu, A.W.; Firat, O.; et al. GLaM: Efficient scaling of language models with mixture-of-experts. International Conference on Machine Learning. PMLR, 2022, pp. 5547–5569.

34. Wang, S.; Sun, Y.; Xiang, Y.; Wu, Z.; Ding, S.; Gong, W.; Feng, S.; Shang, J.; Zhao, Y.; Pang, C.; et al. ERNIE 3.0 Titan: Exploring larger-scale knowledge enhanced pre-training for language understanding and generation. arXiv preprint, 2021; arXiv:2112.12731.

35. Nan, Q.; Cao, J.; Zhu, Y.; Wang, Y.; Li, J. MDFEND: Multi-domain fake news detection. Proceedings of the 30th ACM International Conference on Information & Knowledge Management, 2021, pp. 3343–3347.

36. Adelani, D.I.; Mai, H.; Fang, F.; Nguyen, H.H.; Yamagishi, J.; Echizen, I. Generating sentiment-preserving fake online reviews using neural language models and their human- and machine-based detection. Advanced Information Networking and Applications: Proceedings of the 34th International Conference on Advanced Information Networking and Applications (AINA-2020). Springer, 2020, pp. 1341–1354.

37. Le, T.; Wang, S.; Lee, D. MALCOM: Generating malicious comments to attack neural fake news detection models. 2020 IEEE International Conference on Data Mining (ICDM). IEEE, 2020, pp. 282–291.

38. Du, Y.; Bosselut, A.; Manning, C.D. Synthetic disinformation attacks on automated fact verification systems. Proceedings of the AAAI Conference on Artificial Intelligence, 2022, Vol. 36, pp. 10581–10589.

39. Hanley, H.W.; Durumeric, Z. Machine-Made Media: Monitoring the Mobilization of Machine-Generated Articles on Misinformation and Mainstream News Websites. arXiv preprint, 2023; arXiv:2305.09820.

40. Shu, K.; Li, Y.; Ding, K.; Liu, H. Fact-enhanced synthetic news generation. Proceedings of the AAAI Conference on Artificial Intelligence, 2021, Vol. 35, pp. 13825–13833.

41. Ranade, P.; Piplai, A.; Mittal, S.; Joshi, A.; Finin, T. Generating fake cyber threat intelligence using transformer-based models. 2021 International Joint Conference on Neural Networks (IJCNN). IEEE, 2021, pp. 1–9.

42. Zellers, R.; Holtzman, A.; Rashkin, H.; Bisk, Y.; Farhadi, A.; Roesner, F.; Choi, Y. Defending against neural fake news. Advances in Neural Information Processing Systems 2019, 32.

43. Bhardwaj, P.; Yadav, K.; Alsharif, H.; Aboalela, R.A. GAN-Based Unsupervised Learning Approach to Generate and Detect Fake News. International Conference on Cyber Security, Privacy and Networking. Springer, 2021, pp. 384–396.

44. Aich, A.; Bhattacharya, S.; Parde, N. Demystifying Neural Fake News via Linguistic Feature-Based Interpretation. Proceedings of the 29th International Conference on Computational Linguistics, 2022, pp. 6586–6599.

45. Schuster, T.; Schuster, R.; Shah, D.J.; Barzilay, R. The limitations of stylometry for detecting machine-generated fake news. Computational Linguistics 2020, 46, 499–510.

46. Tan, R.; Plummer, B.A.; Saenko, K. Detecting cross-modal inconsistency to defend against neural fake news. arXiv preprint, 2020; arXiv:2009.07698.

47. Stiff, H.; Johansson, F. Detecting computer-generated disinformation. International Journal of Data Science and Analytics 2022, 13, 363–383.

48. Spitale, G.; Biller-Andorno, N.; Germani, F. AI model GPT-3 (dis)informs us better than humans. arXiv preprint, 2023; arXiv:2301.11924.

49. Bhat, M.M.; Parthasarathy, S. How effectively can machines defend against machine-generated fake news? An empirical study. Proceedings of the First Workshop on Insights from Negative Results in NLP, 2020, pp. 48–53.

50. Pagnoni, A.; Graciarena, M.; Tsvetkov, Y. Threat scenarios and best practices to detect neural fake news. Proceedings of the 29th International Conference on Computational Linguistics, 2022, pp. 1233–1249.

51. Chen, C.; Shu, K. Can LLM-Generated Misinformation Be Detected? arXiv preprint, 2023; arXiv:2309.13788.

52. Ayoobi, N.; Shahriar, S.; Mukherjee, A. The Looming Threat of Fake and LLM-generated LinkedIn Profiles: Challenges and Opportunities for Detection and Prevention. Proceedings of the 34th ACM Conference on Hypertext and Social Media, 2023, pp. 1–10.

53. Pan, Y.; Pan, L.; Chen, W.; Nakov, P.; Kan, M.Y.; Wang, W.Y. On the Risk of Misinformation Pollution with Large Language Models. arXiv preprint, 2023; arXiv:2305.13661.

54. Hamed, A.A.; Wu, X. Improving Detection of ChatGPT-Generated Fake Science Using Real Publication Text: Introducing xFakeBibs a Supervised-Learning Network Algorithm. 2023.

55. Goldstein, J.A.; Sastry, G.; Musser, M.; DiResta, R.; Gentzel, M.; Sedova, K. Generative language models and automated influence operations: Emerging threats and potential mitigations. arXiv preprint, 2023; arXiv:2301.04246.

56. Cheng, M.; Wang, S.; Yan, X.; Yang, T.; Wang, W.; Huang, Z.; Xiao, X.; Nazarian, S.; Bogdan, P. A COVID-19 Rumor Dataset. Frontiers in Psychology 2021, 12, 1566.

57. Dai, E.; Sun, Y.; Wang, S. Ginger cannot cure cancer: Battling fake health news with a comprehensive data repository. Proceedings of the International AAAI Conference on Web and Social Media, 2020, Vol. 14, pp. 853–862.

58. Pérez-Rosas, V.; Kleinberg, B.; Lefevre, A.; Mihalcea, R. Automatic Detection of Fake News. International Conference on Computational Linguistics (COLING), 2018.

¹ The data from this study are available upon request from the corresponding author.

| Domain | Original, Human: True | Original, Human: False | Original, AI: True | Original, AI: False | Sampled, Human: True | Sampled, Human: False | Sampled, AI: True | Sampled, AI: False |
|---|---|---|---|---|---|---|---|---|
| Politics and Elections | 11272 | 6841 | 2945 | 2714 | 2714 | 2714 | 2714 | 2714 |
| Health and Medicine | 4227 | 2931 | 1625 | 1732 | 1625 | 1625 | 1625 | 1625 |
| Entertainment and Celebrities | 290 | 290 | 1823 | 1657 | 1657 | 1657 | 1657 | 1657 |
| Science and Technology | 40 | 40 | 1527 | 1823 | 1527 | 1527 | 1527 | 1527 |

| Domain (after data augmentation) | SVM (Generated_By) | NB (Generated_By) | LR (Generated_By) | DT (Generated_By) | RF (Generated_By) | SVM (Tag) | NB (Tag) | LR (Tag) | DT (Tag) | RF (Tag) |
|---|---|---|---|---|---|---|---|---|---|---|
| Politics and Elections | 99.82 | 99.77 | 99.66 | 95.01 | 99.68 | 96.79 | 91.84 | 95.42 | 87.46 | 94.68 |
| Health and Medicine | 92.63 | 93.97 | 92.77 | 77.05 | 92.78 | 64.97 | 63.95 | 63.03 | 57.43 | 64.82 |
| Entertainment and Celebrities | 96.91 | 93.44 | 96.45 | 77.28 | 91.19 | 52.61 | 54.07 | 51.3 | 54.33 | 52.94 |
| Science and Technology | 96.38 | 98.33 | 96.84 | 70.27 | 91.6 | 58.19 | 66.37 | 56.94 | 54.85 | 62.8 |

| Domain (after data augmentation) | SVM (Generated_By) | NB (Generated_By) | LR (Generated_By) | DT (Generated_By) | RF (Generated_By) | SVM (Tag) | NB (Tag) | LR (Tag) | DT (Tag) | RF (Tag) |
|---|---|---|---|---|---|---|---|---|---|---|
| Health and Medicine | 99.86 | 99.28 | 99.54 | 98.08 | 99.69 | 92.83 | 85.78 | 88.54 | 86.82 | 92.48 |
| Politics and Elections | 55.26 | 80.63 | 52.6 | 69.4 | 77.47 | 70.49 | 73.34 | 74.85 | 56.16 | 68.81 |
| Entertainment and Celebrities | 54.39 | 89.5 | 51.21 | 63.19 | 71.74 | 56.1 | 56.85 | 54.92 | 54.41 | 57.82 |
| Science and Technology | 92.94 | 99.48 | 85.82 | 89.11 | 93.4 | 62.02 | 68.25 | 59.32 | 60.36 | 65.98 |

| Domain (after data augmentation) | SVM (Generated_By) | NB (Generated_By) | LR (Generated_By) | DT (Generated_By) | RF (Generated_By) | SVM (Tag) | NB (Tag) | LR (Tag) | DT (Tag) | RF (Tag) |
|---|---|---|---|---|---|---|---|---|---|---|
| Entertainment and Celebrities | 99.98 | 99.83 | 99.95 | 97.86 | 100 | 95.99 | 92.09 | 94.51 | 87.87 | 95.14 |
| Politics and Elections | 94.97 | 88.36 | 93.62 | 72.91 | 87.91 | 71.15 | 70.61 | 71.14 | 59.75 | 72.3 |
| Health and Medicine | 82.25 | 92.02 | 88.51 | 76.62 | 76.62 | 66.32 | 64.02 | 66.32 | 56.37 | 62.8 |
| Science and Technology | 78.7 | 90.77 | 90.59 | 71.25 | 60.53 | 67.08 | 64.0 | 66.68 | 61.76 | 70.48 |

| Domain (after data augmentation) | SVM (Generated_By) | NB (Generated_By) | LR (Generated_By) | DT (Generated_By) | RF (Generated_By) | SVM (Tag) | NB (Tag) | LR (Tag) | DT (Tag) | RF (Tag) |
|---|---|---|---|---|---|---|---|---|---|---|
| Science and Technology | 100 | 99.97 | 99.98 | 99.39 | 100 | 97.94 | 95.78 | 97.41 | 90.93 | 96.91 |
| Politics and Elections | 77.22 | 93.12 | 90.05 | 61.63 | 61.0 | 72.14 | 71.62 | 70.41 | 59.25 | 71.12 |
| Health and Medicine | 52.98 | 62.29 | 57.22 | 68.57 | 55.77 | 73.26 | 70.42 | 72.95 | 65.18 | 74.37 |
| Entertainment and Celebrities | 72.75 | 93.42 | 89.12 | 65.75 | 64.94 | 61.36 | 55.99 | 59.16 | 60.21 | 64.11 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).