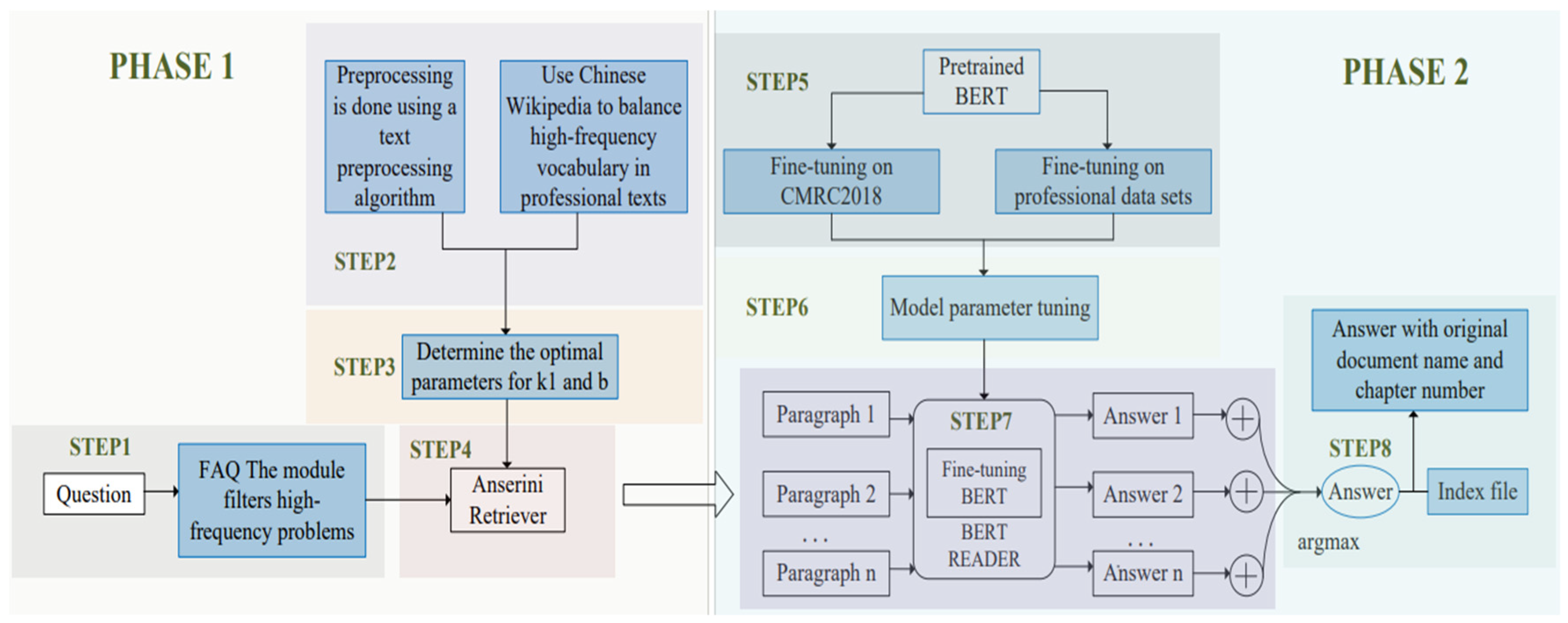

3.1. Algorithm description

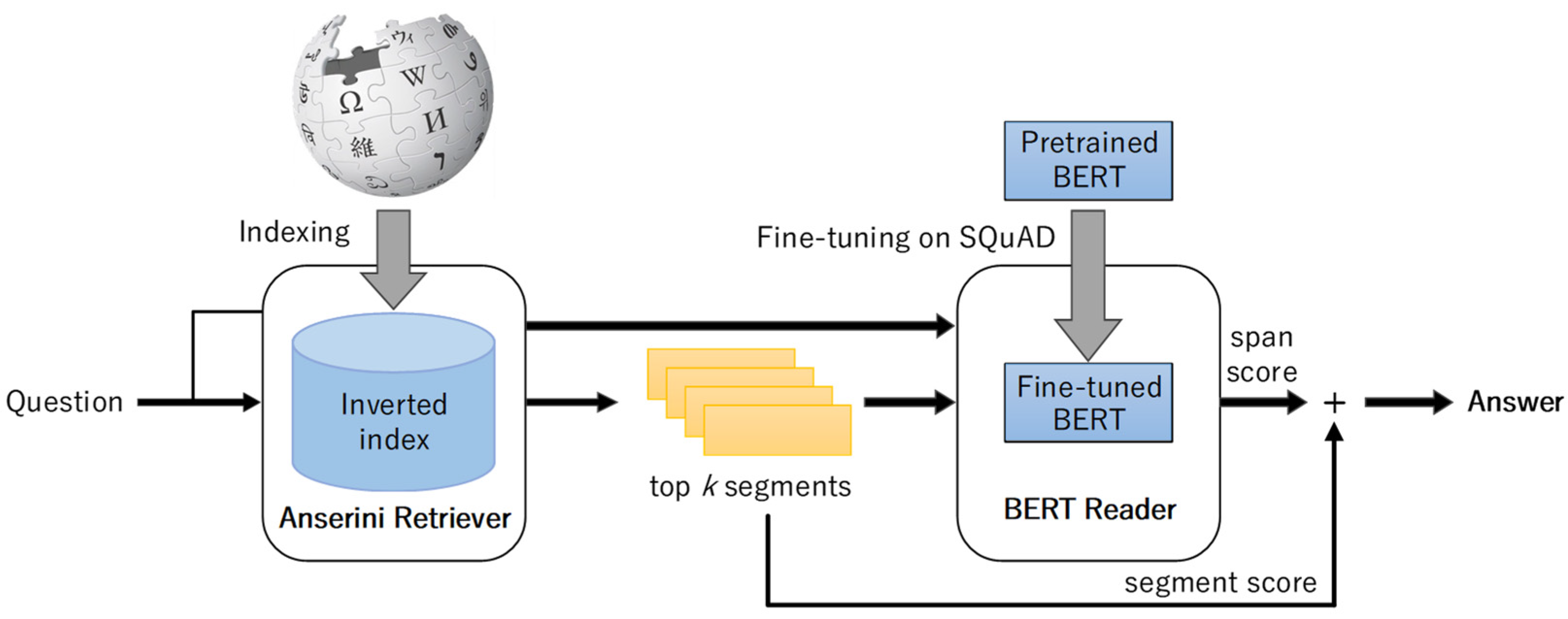

The improved BERTserini algorithm presented in this paper can be divided into two stages, as illustrated in the flowchart in Figure 5.

(1) Phase 1: Text Segmentation Stage

The first stage, text segmentation, comprises two key components. (1) Question preprocessing: the FAQ module intercepts high-frequency questions in advance. If the FAQ module cannot provide an answer that matches the user's query, the query is passed on to paragraph extraction, where Anserini retrieval rapidly extracts the paragraphs most relevant to the query from multi-document long text. (2) Document preprocessing: because keywords overlap heavily across power regulation documents, this paper proposes a multi-document long-text preprocessing method for regulation texts that segments them accurately and supports retrieving and tracing the source chapter of each answer.

STEP1 The FAQ module filters out high-frequency questions

The FAQ module preprocesses questions by intercepting high-frequency questions before they reach retrieval. To do so, it requires a preset question library containing a comprehensive collection of manually curated question-answer pairs from the target documents. The module finds the library question most similar to the user's query and returns the answer paired with that question.

The FAQ module uses ElasticSearch, an open-source distributed search and analytics engine, to match user queries against the preset question library. ElasticSearch is built on Lucene, an open-source full-text search library released by the Apache Software Foundation, and uses Lucene's BM25 text similarity algorithm. BM25 scores the similarity between the user query and each question in the library from the words they share, weighting each shared word by its term frequency and inverse document frequency and normalizing for text length, as shown in (3).
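For readers unfamiliar with BM25, the following is a minimal Python sketch of the standard Okapi BM25 formula over pre-tokenized text. It is an illustration, not ElasticSearch's exact implementation; the function name, the toy token lists, and the defaults k1 = 1.2 and b = 0.75 (the commonly used values) are assumptions. The same two parameters, k1 and b, are the ones tuned for Anserini in STEP3.

```python
import math
from collections import Counter


def bm25_scores(query_terms, docs, k1=1.2, b=0.75):
    """Score each tokenized document in `docs` against `query_terms` with Okapi BM25.

    Tokenization (e.g. Chinese word segmentation) is assumed to be done already.
    The IDF variant log(1 + (N - n + 0.5) / (n + 0.5)) keeps scores non-negative.
    """
    n_docs = len(docs)
    avgdl = sum(len(d) for d in docs) / n_docs
    # Document frequency: number of documents that contain each term.
    df = Counter(term for d in docs for term in set(d))
    scores = []
    for doc in docs:
        tf = Counter(doc)
        score = 0.0
        for term in query_terms:
            if tf[term] == 0:
                continue  # only overlapping words contribute
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            # k1 controls term-frequency saturation, b controls length normalization.
            norm = tf[term] + k1 * (1 - b + b * len(doc) / avgdl)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores
```

For example, `bm25_scores(["outage", "procedure"], library)` over a hypothetical library of three tokenized questions returns one score per question, and the highest-scoring question is the FAQ match.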

If the BM25 score returned by ElasticSearch exceeds a predetermined threshold, the FAQ module directly returns the preset answer of the matched question. If the score falls below the threshold, no answer is returned and the question is passed on to the subsequent steps.
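The threshold rule can be sketched as follows, assuming the search response has ElasticSearch's usual JSON shape (`hits.hits[i]._score` and `hits.hits[i]._source`). The `answer` field name in `_source` and the function name are hypothetical, and the paper does not state the threshold value.

```python
def faq_answer(es_response, threshold):
    """Return the preset answer of the best-matching FAQ question, or None.

    `es_response` follows ElasticSearch's search-response layout; the
    "answer" field stored in _source is a hypothetical index mapping.
    """
    hits = es_response.get("hits", {}).get("hits", [])
    if not hits:
        return None  # nothing similar in the library: go on to STEP2
    best = max(hits, key=lambda hit: hit["_score"])
    if best["_score"] > threshold:
        return best["_source"]["answer"]  # confident match: answer directly
    return None  # score too low: hand the query to paragraph retrieval
```

Because BM25 scores are not normalized, a suitable threshold depends on the size and wording of the question library and has to be chosen empirically.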

STEP2 Text preprocessing and document index generation

This step involves two tasks. First, because keywords recur at a high rate across regulations, applying Anserini directly to paragraph retrieval may give high-frequency words excessive weight. Chinese Wikipedia text is therefore indexed together with the rules and regulations to counteract the undue influence of these high-frequency words. Second, a novel multi-document long-text preprocessing algorithm is proposed. It accurately segments the regulation text, retains the chapter position of each paragraph, and generates the index file. The specific steps are:

1. Convert documents in PDF or DOCX format to plain text (TXT).
2. Remove irrelevant content such as headers, footers, and page numbers.
3. Use regular expressions to extract title numbers (for example, 3.3.1) from the text and associate each title number with its body text.
4. Use rules to filter out content that is unsuitable for machine reading comprehension, such as tables and figures.
5. Use Anserini to segment each titled paragraph into words and index it.
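The cleaning, title-number matching, and filtering steps above can be sketched with a few regular expressions. This is a minimal sketch under assumed formatting rules (title lines start with a dotted number such as 3.3.1, page numbers sit alone on a line, table rows contain tabs or pipes); the patterns, the function names, and the `doc#chapter` id scheme are hypothetical. The output uses the one-object-per-line `id`/`contents` JSON layout that Anserini's JsonCollection reads, so each indexed paragraph carries its document name and chapter number.

```python
import json
import re

# Hypothetical layout rules; real regulation files will need their own patterns.
TITLE_RE = re.compile(r"^(\d+(?:\.\d+)*)\s+(.+)$")  # e.g. "3.3.1 Approval"
PAGE_NUM_RE = re.compile(r"^-?\s*\d+\s*-?$")         # e.g. "12" or "- 12 -"
TABLE_RE = re.compile(r"[\t|]")                      # tab- or pipe-separated rows


def segment_regulation(lines, doc_name, header_footer=frozenset()):
    """Group plain-text lines into paragraphs tagged with document and chapter."""
    paragraphs = []
    for raw in lines:
        line = raw.strip()
        # Drop blank lines, known running headers/footers and bare page numbers.
        if not line or line in header_footer or PAGE_NUM_RE.match(line):
            continue
        # Drop lines that look like table rows (unsuitable for reading comprehension).
        if TABLE_RE.search(line):
            continue
        match = TITLE_RE.match(line)
        if match:
            # A title number opens a new paragraph belonging to that chapter.
            paragraphs.append({"doc": doc_name, "chapter": match.group(1),
                               "title": match.group(2), "body": []})
        elif paragraphs:
            # Body text is attached to the most recent chapter.
            paragraphs[-1]["body"].append(line)
    # Keep only chapters that still contain body text.
    return [{**p, "body": "\n".join(p["body"])} for p in paragraphs if p["body"]]


def to_jsonl(paragraphs):
    """Serialize paragraphs as id/contents JSON lines for Anserini's JsonCollection."""
    return "\n".join(
        json.dumps({"id": f"{p['doc']}#{p['chapter']}",
                    "contents": p["title"] + "\n" + p["body"]},
                   ensure_ascii=False)  # keep Chinese characters readable
        for p in paragraphs
    )
```

Encoding the chapter number in the document id means that any paragraph Anserini later retrieves can be traced straight back to its source document and chapter.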

STEP3 Determine the two parameters k1 and b

The k1 and b parameters of the Anserini module are selected empirically. Starting from 0.1, each parameter is increased in steps of 0.05 across its value range, and every combination of k1 and b is tested. The best pair is chosen according to the accuracy of the second-stage Bert reading comprehension module.
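This search is an exhaustive grid search. The sketch below assumes ranges of [0.1, 2.0] for k1 and [0.1, 1.0] for b, since the paper only refers to "their respective value ranges". `evaluate` is a hypothetical callback that runs the full pipeline on a development set with the given k1 and b and returns the Bert module's accuracy.

```python
def grid(start, stop, step):
    """Inclusive list start, start + step, ..., stop, built from integer
    multiples of step so that floating-point drift cannot skip the end point."""
    count = int(round((stop - start) / step))
    return [round(start + i * step, 4) for i in range(count + 1)]


def search_k1_b(evaluate, k1_range=(0.1, 2.0), b_range=(0.1, 1.0), step=0.05):
    """Try every (k1, b) pair on the grid and return (best_score, k1, b)."""
    best = None
    for k1 in grid(*k1_range, step):
        for b in grid(*b_range, step):
            score = evaluate(k1, b)  # e.g. EM of the reader with this retriever setting
            if best is None or score > best[0]:
                best = (score, k1, b)
    return best
```

With these assumed ranges the grid has 39 × 19 = 741 pairs, each requiring a full retrieval-plus-reading run, so a small development set keeps the search affordable.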

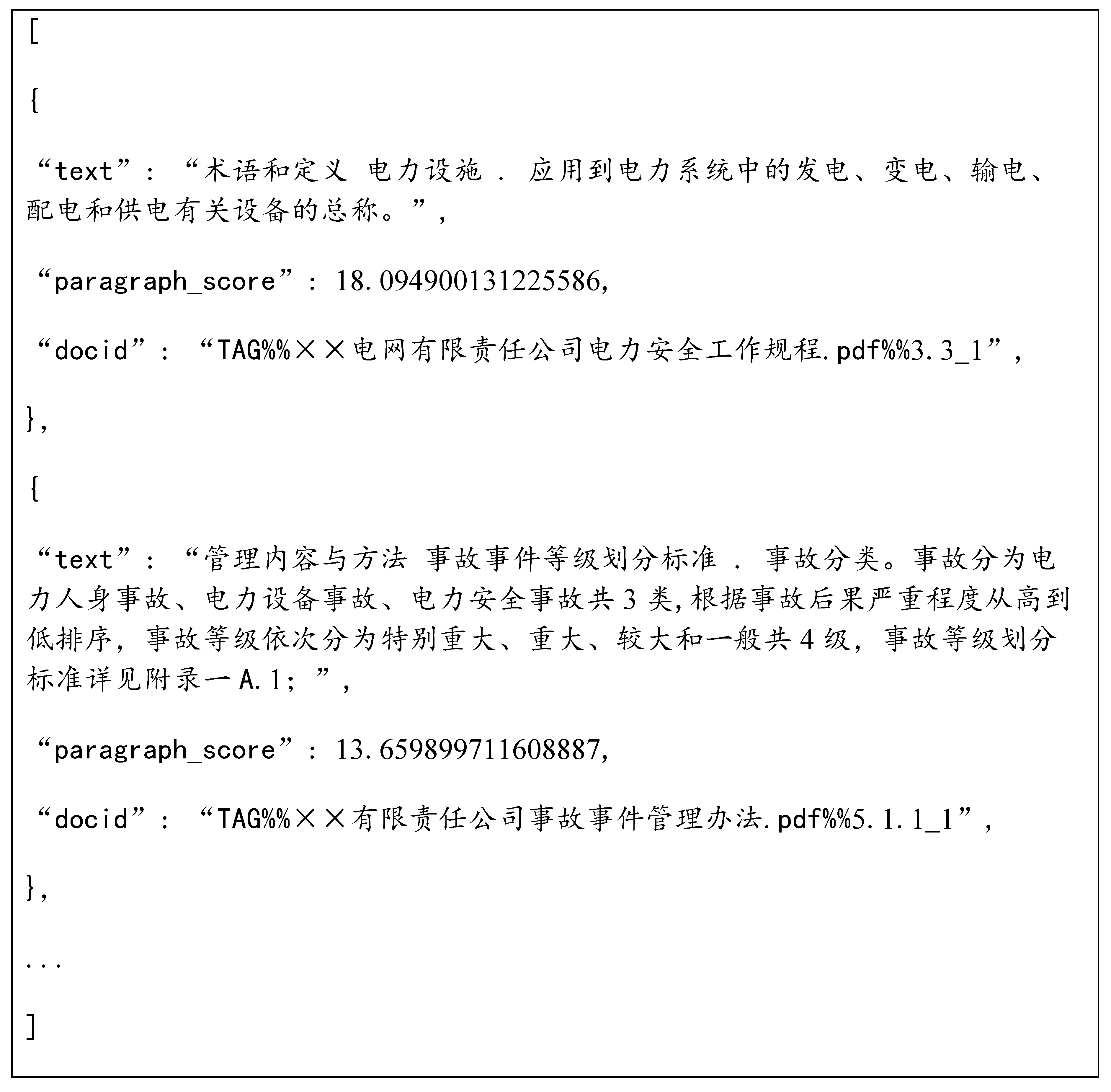

STEP4 Extract paragraphs and generate paragraph scores

Given the user's question, Anserini filters out unrelated paragraphs in the preprocessed documents, matches the question against the indexed paragraphs, and selects the top N paragraphs most relevant to it. Each selected paragraph is assigned its BM25 score, which is reused as the paragraph score in STEP8.
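Anserini is commonly driven from Python through Pyserini, whose `LuceneSearcher` provides `set_bm25(k1, b)` and `search(query, k)`. The helper below is a sketch that works with any object offering those two methods; its name is hypothetical, and using document ids that encode the source document and chapter is an assumption, not a detail from the paper.

```python
def retrieve_top_n(searcher, question, n, k1, b):
    """Return the top-n (docid, bm25_score) pairs for a question.

    `searcher` is assumed to behave like pyserini.search.lucene.LuceneSearcher:
    set_bm25(k1, b) selects the BM25 parameters, and search(query, k=n) returns
    hits ordered by score, each with .docid and .score attributes.
    """
    searcher.set_bm25(k1, b)  # parameters chosen in STEP3
    hits = searcher.search(question, k=n)
    # Keep each paragraph's BM25 score for the final weighted score.
    return [(hit.docid, hit.score) for hit in hits]
```

With Pyserini installed and an index built, a call might look like `retrieve_top_n(LuceneSearcher("path/to/index"), question, 10, k1, b)`, where the index path and N = 10 are placeholders.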

(2) Phase 2: Answer Generation and Source Retrieval Stage

The second stage is answer generation and source retrieval. After two rounds of fine-tuning and the tuning of key parameters, the model can extract accurate answers to the given question from the N paragraphs. Using the index file, the system also outputs the chapter of the original document in which each answer appears.

STEP5 Select the appropriate Chinese Bert model and fine-tune it

In this research, the Chinese-Bert-WWM-EXT Base model is chosen as the foundational model. It is first fine-tuned on the Chinese machine reading comprehension dataset CMRC2018, and then fine-tuned a second time on a domain-specific dataset built from training exam questions on the rules and regulations.
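For the second fine-tuning round, each exam question has to be turned into an extractive question answering example in the same layout as CMRC2018. The sketch below assumes each exam item provides a question, its answer, and the regulation paragraph containing the answer. It writes a flattened SQuAD-style record, the layout used, for example, by the Hugging Face copies of SQuAD and CMRC2018. The function name and sample values are illustrative.

```python
def to_squad_example(qid, question, answer, context):
    """Build a SQuAD-style span-extraction record, or None if the answer
    does not occur verbatim in the context paragraph."""
    start = context.find(answer)  # character offset of the first occurrence
    if start < 0:
        # An extractive reader such as Bert can only learn spans of the context.
        return None
    return {
        "id": qid,
        "question": question,
        "context": context,
        "answers": {"text": [answer], "answer_start": [start]},
    }
```

Items whose answers are not verbatim spans (for example, multiple-choice letters) would need their answer text mapped back to the regulation paragraph before conversion.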

STEP6 Algorithm parameter tuning

Based on the structure and characteristics of regulatory documents, the following five key parameters of the improved BERTserini algorithm are optimized:

- paragraph_threshold: the paragraph threshold. Paragraphs whose Anserini score falls below this limit are excluded, saving computation.
- phrase_threshold: the answer threshold. Answers whose Bert reader score falls below this limit are excluded.
- remove_title: whether to remove paragraph titles. If True (y = True, n = False), paragraph titles are ignored when the Bert reader performs reading comprehension.
- max_answer_length: the maximum length of an answer that the Bert reader may extract during reading comprehension.
- mu: the score weight used to combine the Bert reader's answer score with the Anserini extractor's paragraph score into the final answer score.
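The five parameters can be grouped into a single configuration object. The sketch below shows how two of them, paragraph_threshold and remove_title, would shape the reader's input. The default values, the class and function names, and the paragraph dictionary layout are hypothetical and are not the tuned values from the paper.

```python
from dataclasses import dataclass


@dataclass
class BertseriniParams:
    # Placeholder defaults; the tuned values come from the experiments.
    paragraph_threshold: float = 0.0
    phrase_threshold: float = 0.0
    remove_title: bool = True
    max_answer_length: int = 50
    mu: float = 0.5


def reader_inputs(paragraphs, params):
    """Prepare retrieved paragraphs for the Bert reader.

    Each paragraph is a dict with "title", "body" and "score" (its BM25 score).
    Returns (text, score) pairs for the paragraphs that pass the threshold.
    """
    kept = []
    for p in paragraphs:
        if p["score"] < params.paragraph_threshold:
            continue  # skip low-scoring paragraphs to save reader computation
        # With remove_title, the reader sees only the body text.
        text = p["body"] if params.remove_title else p["title"] + "\n" + p["body"]
        kept.append((text, p["score"]))
    return kept
```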

STEP7 Extract the answers and give a reading comprehension score

Bert extracts exact answers to the question from the N paragraphs retrieved by Anserini. For each candidate answer, the sum of the start-position and end-position logits predicted by the model is used as the answer score of the Bert reading comprehension module. It can be expressed by the following equation:
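The span scoring can be sketched as follows, assuming the reader has already produced per-token start and end logits for a paragraph. Each span (i, j) with i ≤ j and a length of at most max_answer_length tokens scores start_logits[i] + end_logits[j], and spans below phrase_threshold are dropped. This simplified sketch omits the masking of question and special tokens and the mapping from tokens back to characters that a full reader needs; the function name is hypothetical.

```python
def best_spans(start_logits, end_logits, max_answer_length, phrase_threshold, top_k=5):
    """Return up to top_k (score, i, j) answer spans, best first.

    A span covers tokens i..j (inclusive); its score is the sum of the start
    logit at i and the end logit at j.
    """
    n = len(start_logits)
    spans = []
    for i in range(n):
        # The end position may not precede the start or exceed the length limit.
        for j in range(i, min(n, i + max_answer_length)):
            score = start_logits[i] + end_logits[j]
            if score >= phrase_threshold:  # phrase_threshold filters weak answers
                spans.append((score, i, j))
    spans.sort(reverse=True)
    return spans[:top_k]
```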

STEP8 Score the candidate answers with a weighted overall score, rank them, and output the highest-scoring answer together with its original document name and chapter.

Use the following equation to calculate the overall weighted score of the answer:

The final score of each answer is calculated with the formula above, which weights the BM25 paragraph score returned by the Anserini extractor against the answer score returned by Bert. The answers are sorted by final score and the highest-scoring answer is output, together with the original document name and chapter information looked up in the index file.
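As an illustration of this ranking, the sketch below assumes the linear interpolation used in the original BERTserini, final = (1 - mu) · anserini_score + mu · bert_score; the improved algorithm's own equation may differ. The candidate dictionary layout and the function name are hypothetical.

```python
def rank_answers(candidates, mu):
    """Return candidates sorted by a weighted final score, best first.

    Each candidate is a dict with "text", "bert_score", "anserini_score",
    "doc" and "chapter"; the input dicts are not modified.
    """
    scored = [
        # Assumed BERTserini-style interpolation of paragraph and answer scores.
        {**c, "final_score": (1 - mu) * c["anserini_score"] + mu * c["bert_score"]}
        for c in candidates
    ]
    return sorted(scored, key=lambda c: c["final_score"], reverse=True)
```

The first element of the result supplies the answer text along with its `doc` and `chapter`. Because BM25 scores and logits lie on different scales, mu decides which signal dominates, which is why it is among the parameters tuned in STEP6.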

3.2. Main innovations

(1) A multi-document long-text preprocessing method that handles rules and regulations texts and supports answer provenance retrieval

This paper proposes a multi-document long-text preprocessing method that effectively processes rules and regulations texts and supports answer provenance retrieval, providing a technical path for building intelligent question answering systems in specialized professional fields. This innovation corresponds to STEP2. The method divides the rules and regulations into chapters while preserving the original document name and chapter number of each paragraph. To address the excessive frequency of certain proper nouns, the method adds text from Chinese Wikipedia to balance term frequencies: with a larger corpus, the relative frequency of a given proper noun is reduced, which mitigates its influence on the model. This preprocessing improves the results of the subsequent reading comprehension module, and each answer can be traced to its location in the original document, including its chapter.

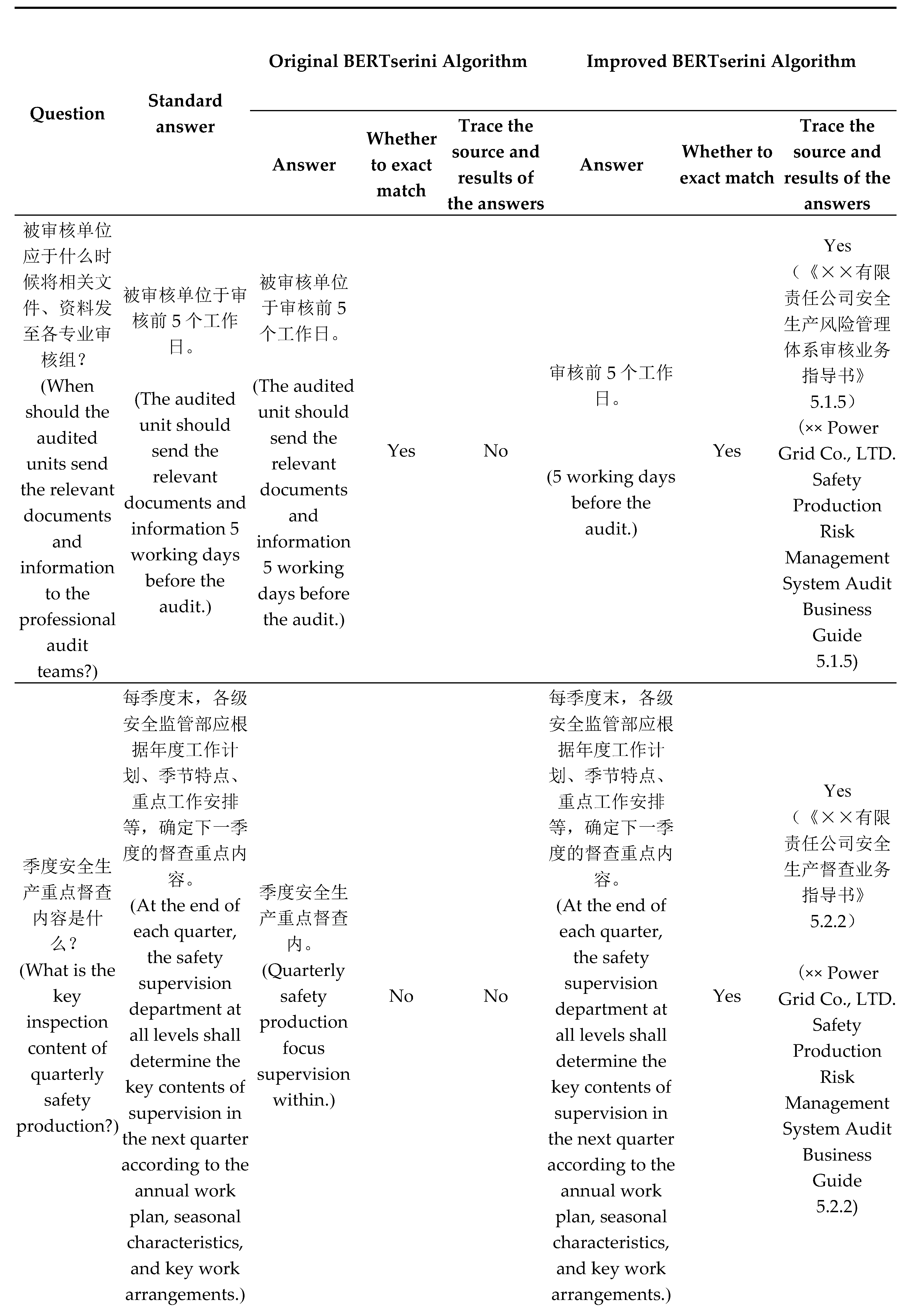

(2) Determination of the optimal parameters of Anserini and the improved BERTserini algorithm

① Determination of the optimal parameters of Anserini. The optimal parameters of Anserini are determined in STEP3: every combination of k1 and b on the search grid is tried experimentally, and the best pair is selected according to how well the subsequent reading comprehension module answers the questions. The tuned parameters improve the performance of the intelligent question answering system and its exact match (EM) score.

② Determination of the optimal parameters of the improved BERTserini algorithm. The optimal parameters of the improved BERTserini algorithm are determined in STEP6, where five important parameters are optimized according to the structure and characteristics of regulation documents. As a result, the algorithm uses reasonable thresholds for generating candidate answers when the Bert reading comprehension module performs reading comprehension, excludes paragraph titles from answer generation, and applies the best overall score weight, details that together contribute to high-quality answers.

(3) Multi-dataset fine-tuning of the Bert reading comprehension model

This innovation corresponds to STEP5. The Bert model is first fine-tuned on CMRC2018 and then fine-tuned a second time on existing exam questions about the rules and regulations. Making full use of datasets from different domains improves the accuracy and generalization ability of the model and yields better results in the question answering system. The method also reduces the time and labor cost of manually writing question-answer pairs for model training, and it significantly improves Bert's reading comprehension of rules and regulations.

(4) Clever use of the FAQ module

This innovation corresponds to STEP1. Existing training and test questions on the rules and regulations are reused as the question-answer pairs that the FAQ module needs to intercept high-frequency questions in advance. This yields a low-cost FAQ module that answers high-frequency questions more efficiently and also raises the exact match (EM) rate of the intelligent question answering system.