0. Introduction

With continuing advances in science and technology, monitoring equipment is becoming increasingly information-driven and intelligent, and unmanned platforms are widely used in environmental monitoring. For such platforms, automatic target recognition is the key technology for situational awareness. At present, UAV platforms lack autonomy during environmental monitoring and rely heavily on communication links and ground terminals for information exchange [1]. Realizing autonomous detection and identification of aerial targets is therefore the key to improving the autonomous situational awareness of UAVs [19]. Environmental information (mainly target images) is collected by the UAV's airborne image sensor, and the acquired images and videos are preprocessed by the airborne computing platform, so that image feature extraction and target detection are carried out on the UAV platform itself, providing accurate support for further decision-making [20].

However, current Slimmable target recognition models have not fully solved the problem of running deep learning algorithms independently on onboard computing devices, so true onboard detection remains difficult even when the algorithms themselves perform well [22]. To overcome the limited computational capability of unmanned aerial vehicle (UAV) platforms, it is important to enrich the methods for aerial target recognition and detection by introducing Slimmable neural networks and incorporating the Squeeze and Excitation (SE) and Swin-Transformer mechanisms. These enhancements strengthen the model's adaptability to changes in perspective and scale, giving it stronger recognition capability [23]. Comparative experiments on the publicly available VisDrone19 dataset confirm that both the SE-YOLOv5s and ST-YOLOv5s models outperform the YOLOv5s model [24]. Furthermore, experiments on actual aerial data were conducted to validate the detection and recognition performance of the models on low-altitude UAV aerial imagery. The main contributions of this paper are as follows:

The Squeeze and Excitation (SE) attention module [2] is introduced into the backbone network.

The Swin Transformer and Transformer modules are integrated into the Neck network of YOLOv5s and combined with the C3 module, allowing accurate object localization in high-density scenes.

Experimental validation on the VisDrone19 dataset demonstrates improved model accuracy, indicating that the enhanced model has stronger autonomous object recognition capability.

1. Related Work

Before deep learning techniques were applied to object detection and recognition, traditional algorithms relied primarily on image-based features [25]. These methods combined manually designed image features, sliding window approaches, and the Support Vector Machine (SVM) classification algorithm. Features were extracted manually for object recognition, and regression algorithms were then used for object localization to complete the detection and recognition task. Representative features included the Histogram of Oriented Gradients (HOG) [3], the Scale Invariant Feature Transform (SIFT) [5], and Haar-like features [6].

However, traditional object detection methods face several challenges in practical applications. Firstly, these methods rely on manually designed features, and the performance of the detection algorithm is solely dependent on the developer’s expertise, making it difficult to leverage the advantages of large-scale data. Secondly, the sliding window approach used in traditional detection algorithms involves exhaustive traversal of all possible object positions, resulting in high algorithm redundancy, computational complexity, and space complexity. As a result, traditional algorithms struggle to meet the requirements for high accuracy and real-time performance in practical applications. Furthermore, traditional detection algorithms only exhibit good detection performance for specific classes of objects.
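To make the cost of exhaustive traversal concrete, the following minimal sketch counts how many window positions a sliding-window detector has to classify. The image size, window size, stride, and scale factors are hypothetical values chosen only for illustration; they do not come from any specific detector discussed here.

```python
def count_sliding_windows(image_w, image_h, window_w, window_h, stride):
    """Number of window positions visited by an exhaustive sliding-window scan."""
    if window_w > image_w or window_h > image_h:
        return 0
    positions_x = (image_w - window_w) // stride + 1
    positions_y = (image_h - window_h) // stride + 1
    return positions_x * positions_y


def count_multiscale_windows(image_w, image_h, window_w, window_h, stride, scales):
    """Total positions when the window is rescaled by every factor in `scales`."""
    total = 0
    for scale in scales:
        total += count_sliding_windows(
            image_w, image_h, int(window_w * scale), int(window_h * scale), stride
        )
    return total


if __name__ == "__main__":
    # Hypothetical setting: 640x480 image, 64x128 window, stride of 8 pixels.
    print(count_sliding_windows(640, 480, 64, 128, 8))
    print(count_multiscale_windows(640, 480, 64, 128, 8, [1.0, 1.5, 2.0]))
```

Even in this small setting, every one of the several thousand windows requires its own feature extraction and classification, which illustrates the redundancy described above.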

Currently, deep learning-based object detection algorithms can be categorized into two main types based on their network structures: the first type is the two-stage deep learning-based object detection algorithm [25], and the second type is the end-to-end deep learning-based object detection algorithm, also known as the one-stage object detection algorithm [26]. In comparison, two-stage object detection algorithms generally achieve higher accuracy than one-stage object detection algorithms. However, one-stage object detection algorithms often have higher recognition efficiency due to their structural characteristics.

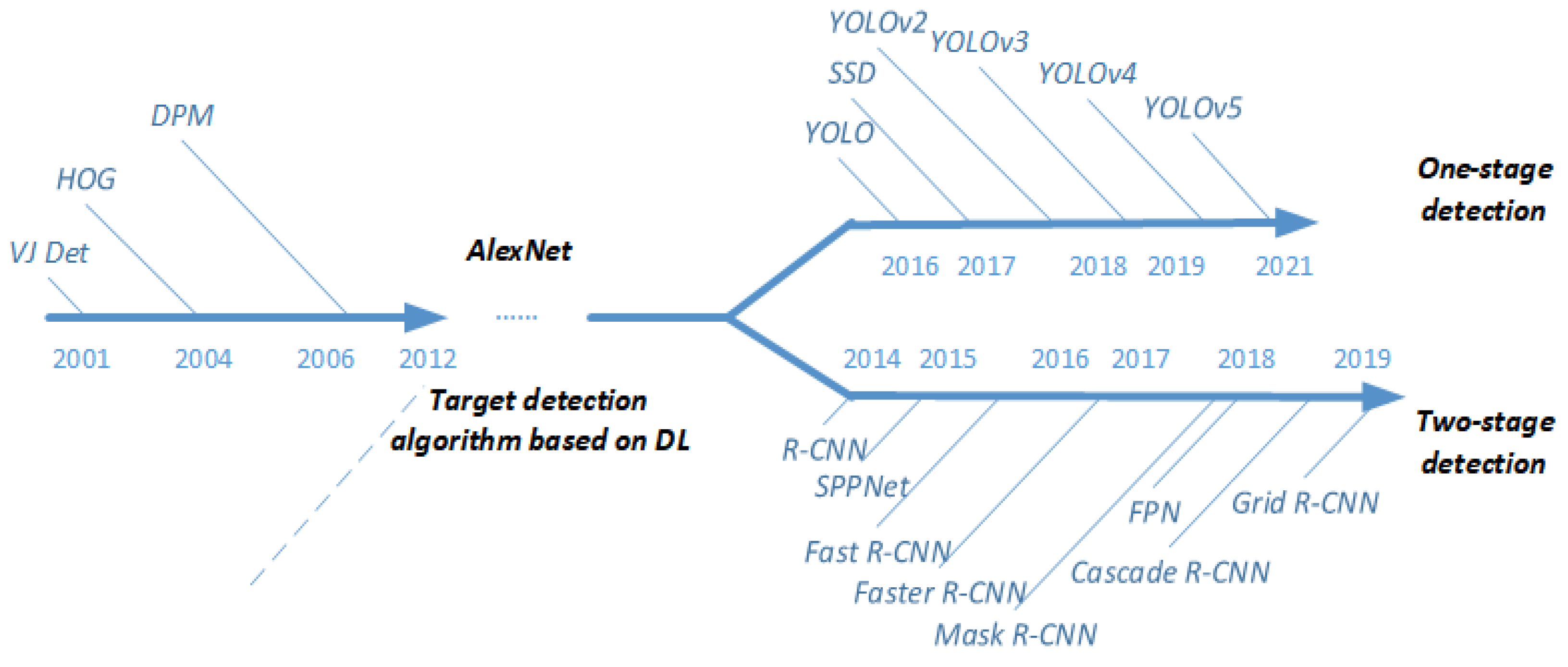

Figure 1 illustrates the development roadmap of object recognition algorithms in recent years.

The two-stage algorithm. Two-stage algorithms, also known as region-based object detection algorithms, proceed in steps. First, a large number of candidate regions are selected from the input image. Then, convolutional neural networks (CNNs) extract features from each candidate region. Finally, a classifier determines the category of each candidate region. In 2014, Girshick proposed R-CNN [8], which established the framework for two-stage object detection algorithms and was the first to combine deep learning techniques with traditional candidate region generation algorithms. However, R-CNN has clear drawbacks: it generates approximately 2000 candidate regions from the image, and each candidate region undergoes CNN computation before being classified by an SVM, so detection is slow even with GPU acceleration. In 2015, He et al. addressed the limitations of R-CNN by proposing a new network architecture called SPP-Net [9]. SPP-Net computes the feature map only once for the entire image and then pools features within arbitrary sub-image regions to generate fixed-length feature representations. SPP-Net accelerated R-CNN testing by 10 to 100 times and reduced training time by a factor of three. In the same year, Girshick integrated the idea of SPP-Net into a new training algorithm called Fast R-CNN [10], which overcame the drawbacks of R-CNN and SPP-Net while improving training speed and accuracy. Both R-CNN and Fast R-CNN use traditional image processing algorithms to generate candidate regions from the original image, leading to high algorithmic complexity. To address this limitation, Ren et al. introduced Faster R-CNN [28], which employs a Region Proposal Network (RPN) to generate candidate regions and thus delegates the selection process to the neural network. This method significantly reduces the search time for candidate regions and achieves a detection accuracy of 75.9% on the PASCAL VOC test set. Subsequently, numerous improvements to Faster R-CNN have emerged. Dai designed Region-based Fully Convolutional Networks (R-FCN) [2], and Ren demonstrated the importance of carefully designed deep networks for object classification and rebuilt Faster R-CNN using the latest ResNet backbone network [11].
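The fixed-length pooling idea behind SPP-Net can be sketched as follows. This is a simplified, single-channel illustration in plain Python, assuming max pooling over a pyramid of 1×1, 2×2, and 4×4 bins; the function name and the pyramid levels are illustrative choices, not the exact configuration of the original network.

```python
def spatial_pyramid_pool(feature_map, levels=(1, 2, 4)):
    """Max-pool one channel (a 2D list) into a fixed-length vector.

    For each pyramid level n, the map is divided into n x n bins and the
    maximum of every bin is kept, so the output length is sum(n * n)
    regardless of the input height and width.
    """
    h = len(feature_map)
    w = len(feature_map[0])
    pooled = []
    for n in levels:
        for i in range(n):
            r0 = (i * h) // n
            r1 = max(r0 + 1, ((i + 1) * h + n - 1) // n)  # ceiling division
            for j in range(n):
                c0 = (j * w) // n
                c1 = max(c0 + 1, ((j + 1) * w + n - 1) // n)
                pooled.append(
                    max(feature_map[r][c] for r in range(r0, r1) for c in range(c0, c1))
                )
    return pooled


if __name__ == "__main__":
    small = [[r * 4 + c for c in range(4)] for r in range(4)]
    large = [[(r * 7 + c) % 5 for c in range(7)] for r in range(5)]
    # Both regions yield vectors of the same length (1 + 4 + 16 = 21).
    print(len(spatial_pyramid_pool(small)), len(spatial_pyramid_pool(large)))
```

Because the output length depends only on the pyramid levels, regions of any size can be fed to the same fully connected classifier, which is what allows the feature map to be computed once per image.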

The one-stage algorithm. The main characteristic of two-stage algorithms is that they divide the detection process into two stages: region proposal and detection. Many researchers have improved the structure of such algorithms by pruning redundant parts, but the two-stage design itself limits their speed. Researchers have therefore studied end-to-end one-stage algorithms, in which the image is simply fed into the neural network and the positions and categories of the objects are obtained directly at the output. In 2016, Redmon et al. proposed the YOLO (You Only Look Once) algorithm. It divides the image into a grid of 7×7 cells, and each cell uses a deep CNN to predict rectangular bounding boxes containing objects together with their class probabilities. Each bounding box carries five values: the coordinates of its center, its width, its height, and the confidence score of the object. YOLO integrates object detection and recognition and avoids the separate region proposal step, which gives it a fast detection speed of up to 45 frames per second. YOLO also supports the detection of non-natural images. However, compared with previous detection algorithms, YOLO may produce more localization errors. Subsequently, Redmon drew on the idea of Faster R-CNN and introduced the anchor mechanism, using K-means clustering to compute better anchor templates from the training set; the improved version is called YOLO9000. In 2018, Redmon further enhanced the algorithm and proposed YOLOv3, which uses a new multi-scale Darknet53 network architecture to improve the feature extraction capability of the model. In 2020, Bochkovskiy et al. continued to optimize the network model and built YOLOv4. By adopting the Cross-Stage Partial Network (CSPNet) idea and designing the CSPDarkNet backbone network, they effectively improved the transmission of feature information in the convolutional neural network. The YOLOv4 model also incorporates a spatial pyramid pooling module in the neck network to enhance its recognition performance.
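The grid-based prediction of the original YOLO can be illustrated with a short sketch. The 7×7 grid and the five values per box come from the description above; the number of boxes per cell (2) and the number of classes (20) are assumptions taken from the commonly cited PASCAL VOC configuration of the first YOLO version, and the function names are hypothetical.

```python
def yolo_output_size(grid=7, boxes_per_cell=2, num_classes=20):
    """Number of values predicted for one image: S * S * (B * 5 + C)."""
    return grid * grid * (boxes_per_cell * 5 + num_classes)


def encode_box(cx, cy, w, h, grid=7):
    """Assign a normalized box (centre, width, height in [0, 1]) to a grid cell.

    Returns (row, col, x_offset, y_offset, w, h), where the offsets give the
    position of the box centre inside the responsible cell.
    """
    col = min(int(cx * grid), grid - 1)  # clamp so that cx == 1.0 stays in the grid
    row = min(int(cy * grid), grid - 1)
    return row, col, cx * grid - col, cy * grid - row, w, h


if __name__ == "__main__":
    print(yolo_output_size())
    print(encode_box(0.5, 0.5, 0.2, 0.3))
```

Only the cell that contains the box centre is responsible for predicting that object, which is why densely packed small targets are difficult for a coarse grid.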

YOLOv5 is an improved algorithm based on YOLOv4 that offers better accuracy and detection speed. Compared with YOLOv4, YOLOv5 reduces model size and inference time while improving detection accuracy. It trains faster and generalizes better, making it suitable for real-time object detection. To make YOLOv5 applicable to a wider range of practical problems, its authors designed four models of different sizes: YOLOv5s, YOLOv5m, YOLOv5l, and YOLOv5x [29]. Among them, YOLOv5x is the largest network and offers the highest detection accuracy, but its detection speed is slower, so it is better suited to environments with more powerful hardware. The performance comparison of the four models is shown in Table 1, where mAP represents the mean average precision measured on the COCO dataset.

By comparing the results, it was observed that although YOLOv5s exhibits a slight decrease in algorithmic accuracy, its detection speed increases significantly. Additionally, it has the smallest parameter size, making it more easily deployable on computationally limited onboard computing devices. Therefore, this study focuses on improving YOLOv5s to achieve better detection performance in scenarios such as small and densely packed objects in unmanned aerial imagery.

3. Experiment and analysis

This chapter describes the experimental design, the experimental process, and the data analysis in detail. It first introduces the purpose of the experiments, the equipment and materials, and the methods and procedures, and then records, organizes, and visualizes the experimental data. Finally, the experimental results are analyzed in depth and conclusions are drawn, supporting the reliability of the research and providing a foundation for the subsequent discussion.

3.1. Experimental preparation

This section introduces the data, the platform equipment, the evaluation metrics, and the related preparation work, with the aim of ensuring that the experiments run smoothly and yield accurate, reliable data. It first lists the experimental data and its specifications, and then describes the preparation before the experiments, including the laboratory environment and the equipment specifications, so that the experimental results are accurate and repeatable.

3.1.1. Introduction of data

The dataset used in this study is the VisDrone19 aerial imagery dataset [13], which was collected and annotated by the AISKYEYE team at Tianjin University. It consists of 8,599 aerial images in total: 6,471 in the training set, 1,580 in the testing set, and 548 in the validation set.

Table 2 provides an overview of the basic statistics of the dataset.

The images in the VisDrone19 dataset were collected in 14 cities in China and their surrounding areas. Their resolutions range from 480 × 360 pixels to 2000 × 1500 pixels [17]. The dataset covers object categories including people, pedestrians, cars, trucks, vans, buses, tricycles, motorcycles, and bicycles, with a wide range of image scales and varying levels of object density. It also includes aerial images captured in different urban settings in both daytime and nighttime scenes.

3.1.2. Experimental platform

This experiment evaluates the object detection and recognition performance of the YOLOv5s network model under different improvement strategies using the VisDrone19 dataset. The dataset contains a large volume of image data, so training the model takes considerable time and requires a certain level of computational resources. In this experiment, both a personal laptop and the AutoDL cloud server platform were used for training and testing the network model. The training environment details are presented in Table 3.

The test environment of the experiment is shown in Table 4.

3.1.3. Evaluation Metrics

The evaluation metrics fall into two groups. First, the accuracy of the algorithm is assessed using precision (P) and recall (R). Precision is the proportion of predicted positive instances that are correct, while recall is the proportion of all positive instances that the algorithm identifies. Second, the real-time performance of the algorithm is evaluated. The space complexity of the model is assessed by its number of parameters (Params), its time complexity by the number of floating-point operations, expressed in billions (GFLOPs), and its detection speed by frames per second (FPS).

Evaluating the accuracy of target detection and recognition differs from evaluating label prediction in ordinary machine learning, because it must also be decided whether a prediction box is accurate. For this purpose, a threshold on the Intersection over Union (IoU) is introduced. IoU is the ratio of the intersection area to the union area of the annotation box $B_{gt}$ and the prediction box $B_{p}$, and both precision and recall are computed on the basis of IoU. The formulas are as follows:

$$\mathrm{IoU} = \frac{\mathrm{area}(B_{p} \cap B_{gt})}{\mathrm{area}(B_{p} \cup B_{gt})}, \qquad P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}$$

Here, TP (True Positives) means that the prediction box is consistent with the annotation box, that is, their IoU reaches the chosen threshold; FP (False Positives) means that the detection box is placed on the wrong object; and FN (False Negatives) means that a target is not detected. In addition, the Average Precision (AP) and the mean Average Precision (mAP) are commonly used. mAP is obtained by computing the AP of each category and then averaging over the $N$ categories:

$$AP = \int_{0}^{1} P(R)\,dR, \qquad mAP = \frac{1}{N}\sum_{i=1}^{N} AP_{i}$$
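The IoU-based matching behind precision and recall can be sketched in a few lines. This is a simplified illustration for one class in one image, assuming boxes in `(x1, y1, x2, y2)` format, greedy one-to-one matching in order of confidence, and an IoU threshold of 0.5; these conventions are assumptions for the example rather than the exact evaluation protocol used in the experiments. A missed target is counted as a false negative (FN).

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(predictions, ground_truths, iou_threshold=0.5):
    """Precision and recall for one class.

    predictions: list of (confidence, box); ground_truths: list of boxes.
    Each ground-truth box can be matched by at most one prediction.
    """
    matched = set()
    tp = 0
    for _, box in sorted(predictions, key=lambda p: p[0], reverse=True):
        best_iou, best_idx = 0.0, None
        for idx, gt in enumerate(ground_truths):
            if idx in matched:
                continue
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_iou, best_idx = overlap, idx
        if best_idx is not None and best_iou >= iou_threshold:
            matched.add(best_idx)
            tp += 1
    fp = len(predictions) - tp      # detections with no matching target
    fn = len(ground_truths) - tp    # targets that were not detected
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def mean_average_precision(ap_per_class):
    """mAP as the plain average of the per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)
```

In a full evaluation the matching is accumulated over the whole dataset and AP is obtained from the resulting precision-recall curve; the sketch only shows the per-image counting step.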

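To evaluate the real-time performance of the algorithm, the parameter count, computational cost, and detection speed of the network model must be calculated. The parameter count is the sum of the parameters of each layer. For a convolutional layer, it is determined by the convolution kernel size ($K_h$, $K_w$), the number of input channels ($C_{in}$), and the number of output channels ($C_{out}$):

$$\mathrm{Params} = K_h \times K_w \times C_{in} \times C_{out}$$

The computational cost is expressed in GFLOPs, where one GFLOP (also written BFLOP) is one billion floating-point operations. For a convolutional layer whose output feature map has size $H_{out} \times W_{out}$, it is commonly estimated as:

$$\mathrm{GFLOPs} = \frac{2 \times K_h \times K_w \times C_{in} \times C_{out} \times H_{out} \times W_{out}}{10^{9}}$$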
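As a rough illustration of how these quantities are obtained for a single convolutional layer, the sketch below counts parameters from the kernel size and channel numbers and estimates the operation count from the output feature-map size. The convention of counting one multiply-add as two floating-point operations, the optional bias term, and the layer dimensions in the example are all assumptions made for illustration.

```python
def conv_params(k_h, k_w, c_in, c_out, bias=False):
    """Parameter count of one convolutional layer."""
    params = k_h * k_w * c_in * c_out
    if bias:
        params += c_out  # one bias value per output channel
    return params


def conv_gflops(k_h, k_w, c_in, c_out, h_out, w_out):
    """Operation count in billions, counting a multiply-add as 2 operations."""
    return 2 * k_h * k_w * c_in * c_out * h_out * w_out / 1e9


if __name__ == "__main__":
    # Hypothetical layer: 3x3 kernel, 64 -> 128 channels, 80x80 output map.
    print(conv_params(3, 3, 64, 128))
    print(conv_gflops(3, 3, 64, 128, 80, 80))
```

Summing these values over all layers gives the totals for a whole network; tools that report Params and GFLOPs typically do this automatically.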

The detection speed is measured by FPS, that is, the number of pictures that can be detected per second. The higher the FPS, the faster the detection speed of the algorithm.
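The relationship between per-image inference time and FPS can be sketched as follows. The helper names are hypothetical, and `measure_fps` assumes a generic `detect` callable standing in for a real detector; a short warm-up is included because the first calls of a model are often slower.

```python
import time


def fps_from_latency(latency_ms):
    """Frames per second for a given per-image inference time in milliseconds."""
    return 1000.0 / latency_ms


def measure_fps(detect, images, warmup=2):
    """Time `detect` over `images` and return the achieved frames per second."""
    for image in images[:warmup]:
        detect(image)  # warm-up calls are not timed
    start = time.perf_counter()
    for image in images:
        detect(image)
    elapsed = time.perf_counter() - start
    return len(images) / elapsed if elapsed > 0 else float("inf")


if __name__ == "__main__":
    for latency in (12, 16, 17):
        print(latency, round(fps_from_latency(latency), 1))
```

For example, an inference time of 16 ms per image corresponds to 62.5 FPS.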

3.2. Model Training and Evaluation Based on VisDrone19 Aerial Data

To keep the ablation experiments comparable, all models were trained with the same parameters. The network input size is 640 × 640 pixels, the batch size is 8, the number of epochs is 300, and the initial learning rate is 0.01 (the default). The built-in data augmentation of YOLOv5s and a cosine annealing learning-rate decay schedule are used during optimization.
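A cosine annealing schedule of the kind mentioned above can be sketched as follows. The initial learning rate (0.01) and the number of epochs (300) follow the training settings; the final learning rate `lr_final` is a hypothetical value chosen for illustration, and the exact schedule implemented in YOLOv5 may differ in detail.

```python
import math


def cosine_annealing_lr(epoch, total_epochs=300, lr_initial=0.01, lr_final=0.0001):
    """Learning rate that decays from lr_initial to lr_final along a half cosine."""
    cosine = 0.5 * (1.0 + math.cos(math.pi * epoch / total_epochs))
    return lr_final + (lr_initial - lr_final) * cosine


if __name__ == "__main__":
    for epoch in (0, 75, 150, 225, 300):
        print(epoch, cosine_annealing_lr(epoch))
```

The rate changes slowly at the start and end of training and fastest in the middle, which tends to give a smoother convergence than a step decay.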

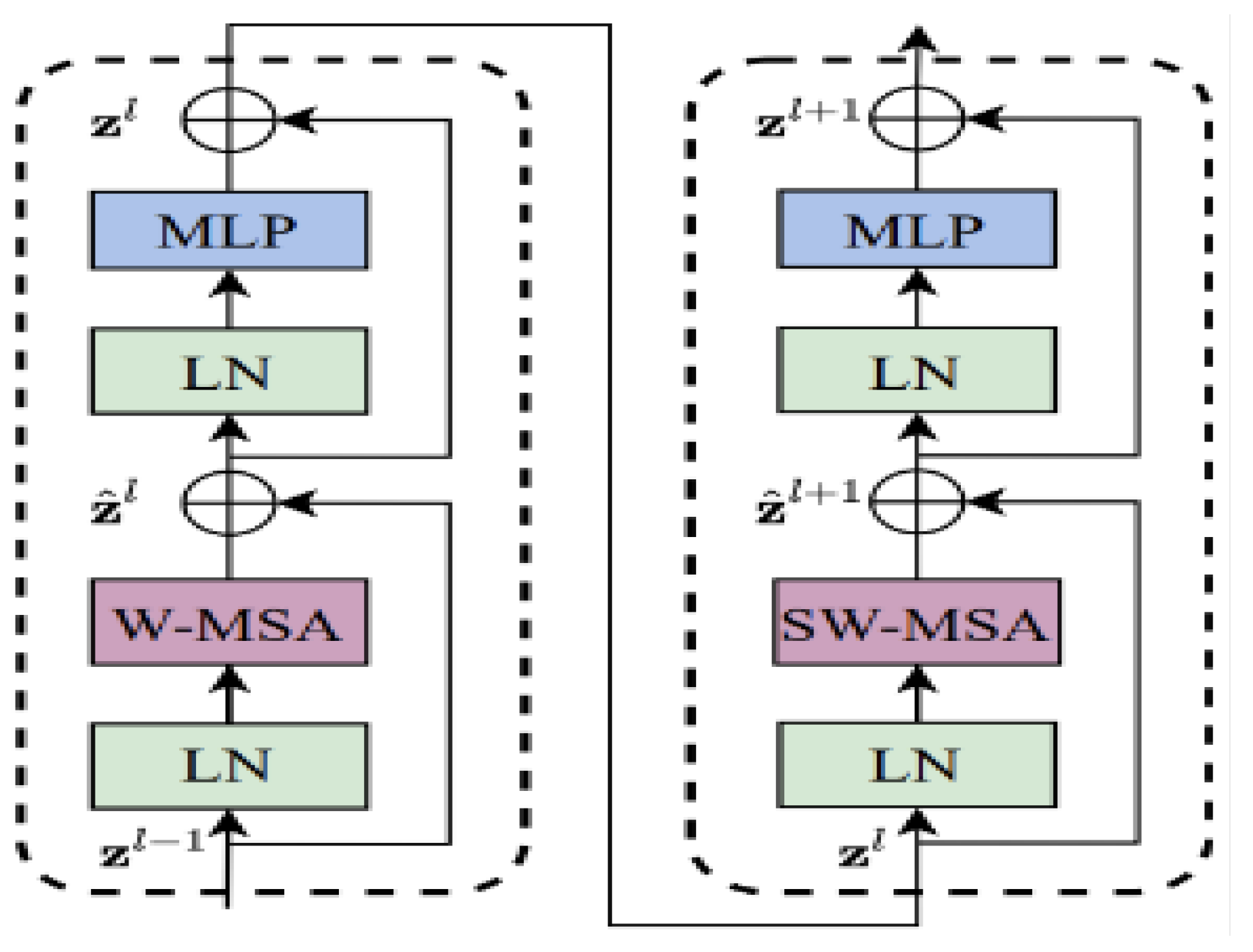

To analyze how introducing the SE attention mechanism and improving the network with Swin-Transformer affect aerial target detection, the SE-YOLOv5s and ST-YOLOv5s network models were trained on VisDrone19. The convergence curves of model training are shown in Figure 8.

The convergence curves of precision, mAP and recall of YOLOv5s, SE-YOLOv5s and ST-YOLOv5s on the validation set show that the three models require a similar amount of training and all converge within about 100 epochs. Plotting mAP, Precision and Recall over the course of training shows that SE-YOLOv5s and ST-YOLOv5s clearly improve on YOLOv5s in mAP and Recall. This is because the SE module can enhance the features of small targets, while the multi-head attention mechanism and feature pyramid structure of Swin-Transformer make it well suited to aerial target detection. Aerial images often contain many types of small targets, and Swin-Transformer can detect and identify these different types of small targets.
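The window-based attention of Swin-Transformer can be illustrated with a small sketch of its window partitioning. This follows the standard Swin design, in which attention is computed inside non-overlapping windows and the windows are cyclically shifted in alternating layers; that design is not spelled out in the text here, so the sketch should be read as background under that assumption. Tokens are represented by integer indices, and the grid is assumed to be divisible by the window size.

```python
def roll_grid(grid, shift):
    """Cyclically shift a 2D grid up and to the left by `shift` positions."""
    h, w = len(grid), len(grid[0])
    return [[grid[(r + shift) % h][(c + shift) % w] for c in range(w)] for r in range(h)]


def window_partition(grid, window):
    """Split a 2D grid into non-overlapping window x window blocks (flattened)."""
    h, w = len(grid), len(grid[0])
    windows = []
    for r0 in range(0, h, window):
        for c0 in range(0, w, window):
            windows.append(
                [grid[r][c] for r in range(r0, r0 + window) for c in range(c0, c0 + window)]
            )
    return windows


if __name__ == "__main__":
    tokens = [[r * 4 + c for c in range(4)] for r in range(4)]
    print(window_partition(tokens, 2))                 # regular windows
    print(window_partition(roll_grid(tokens, 1), 2))   # shifted windows
```

Attention is restricted to the tokens inside each window, which keeps the cost low; the shifted partition groups tokens that were in different windows in the previous layer, so information can spread across window boundaries.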

The experimental results of the algorithm models on the VisDrone19 test set, including a comparison of their accuracy, are shown in Table 5.

The experimental results show that adding the attention mechanism and the Transformer module to YOLOv5s improves the mAP of target detection to some extent. The improvement is most pronounced for SE-YOLOv5s, whose mAP rises by 2.1 percentage points. In terms of precision, SE-YOLOv5s also improves more than ST-YOLOv5s, with an increase of 4.3 percentage points. The recall rates of the three algorithms are close.
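The gains quoted above can be checked directly from the values reported in Table 5. The short sketch below only reproduces that arithmetic; the dictionary layout and function name are illustrative.

```python
# Accuracy metrics (%) as reported in Table 5.
TABLE_5 = {
    "YOLOv5s": {"mAP": 45.7, "precision": 55.8, "recall": 46.6},
    "SE-YOLOv5s": {"mAP": 47.8, "precision": 60.1, "recall": 46.6},
    "ST-YOLOv5s": {"mAP": 47.6, "precision": 57.1, "recall": 46.0},
}


def gain_over_baseline(model, metric, baseline="YOLOv5s"):
    """Difference in percentage points between a model and the baseline."""
    return round(TABLE_5[model][metric] - TABLE_5[baseline][metric], 1)


if __name__ == "__main__":
    for name in ("SE-YOLOv5s", "ST-YOLOv5s"):
        print(name, {m: gain_over_baseline(name, m) for m in ("mAP", "precision", "recall")})
```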

As can be seen from Table 6, after the SELayer module is added to SE-YOLOv5s, the weight of each channel is computed dynamically through the Squeeze and Excitation operations, and the features relevant to the target task are enhanced. This effectively improves the accuracy of the algorithm, but at the cost of slower inference: relative to YOLOv5s, SE-YOLOv5s and ST-YOLOv5s lose 20.8 FPS and 24.5 FPS, respectively. However, the parameter count and model size do not grow excessively, so compared with the more complex YOLOv5 models, the improved models still meet the Slimmable goal. The model parameters of the neural networks on the VisDrone19 test set are listed in Table 6.

Compared with the YOLOv5s model, the SE-YOLOv5s and ST-YOLOv5s models have lower FPS: their theoretical model size and computational cost are similar, but inference takes slightly longer.
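The Squeeze and Excitation operations can be sketched in plain Python as follows. This is a minimal illustration of the general SE idea (global average pooling, two fully connected layers, sigmoid gating), not the SELayer implementation used in the experiments; the weight matrices `w_reduce` and `w_expand` are hypothetical stand-ins for learned parameters, and bias terms are omitted.

```python
import math


def se_block(feature_map, w_reduce, w_expand):
    """Apply Squeeze-and-Excitation to a feature map.

    feature_map: list of C channels, each an H x W list of floats.
    w_reduce:    (C / r) x C weight matrix of the first fully connected layer.
    w_expand:    C x (C / r) weight matrix of the second fully connected layer.
    Returns the rescaled feature map and the per-channel gates.
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    squeezed = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature_map]
    # Excitation: FC -> ReLU -> FC -> sigmoid gives one weight per channel.
    hidden = [max(0.0, sum(w * s for w, s in zip(row, squeezed))) for row in w_reduce]
    gates = [
        1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
        for row in w_expand
    ]
    # Scale: every channel is multiplied by its own weight.
    scaled = [[[v * g for v in row] for row in ch] for ch, g in zip(feature_map, gates)]
    return scaled, gates


if __name__ == "__main__":
    fmap = [[[1.0, 1.0], [1.0, 1.0]], [[3.0, 3.0], [3.0, 3.0]]]
    scaled, gates = se_block(fmap, w_reduce=[[0.5, 0.5]], w_expand=[[0.0], [1.0]])
    print(gates)
```

The extra pooling, fully connected layers, and per-channel multiplication add few parameters but introduce additional sequential steps, which is consistent with a small change in model size alongside a drop in FPS.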

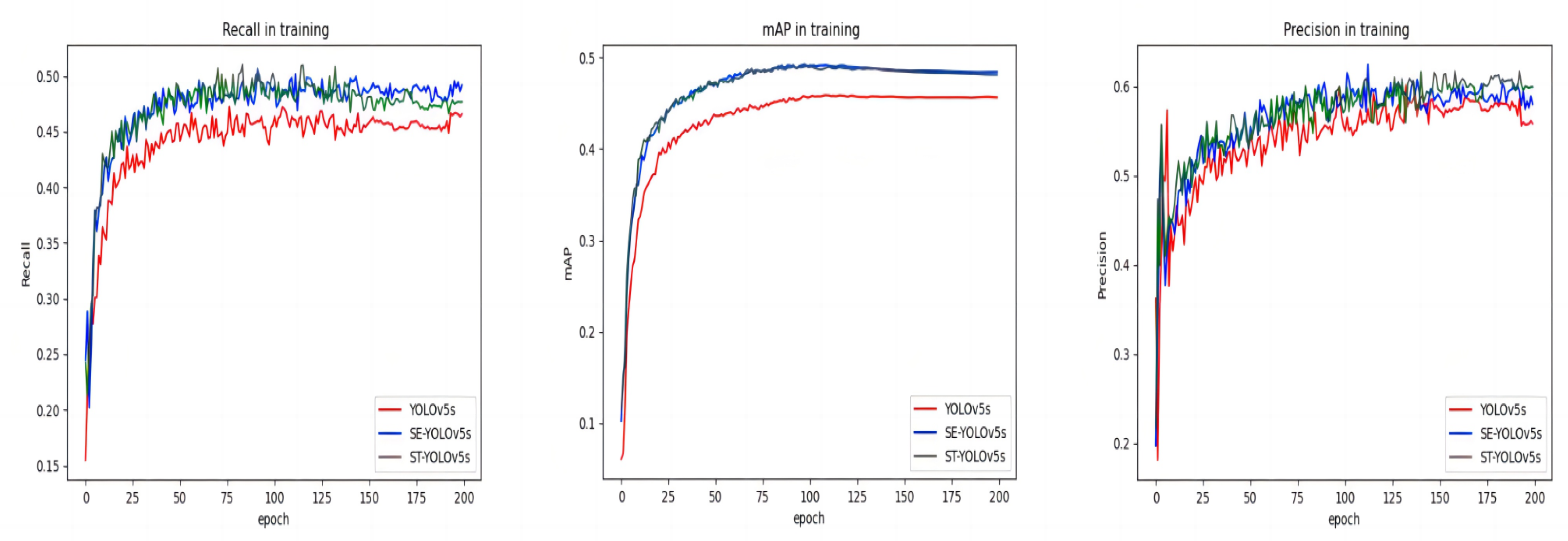

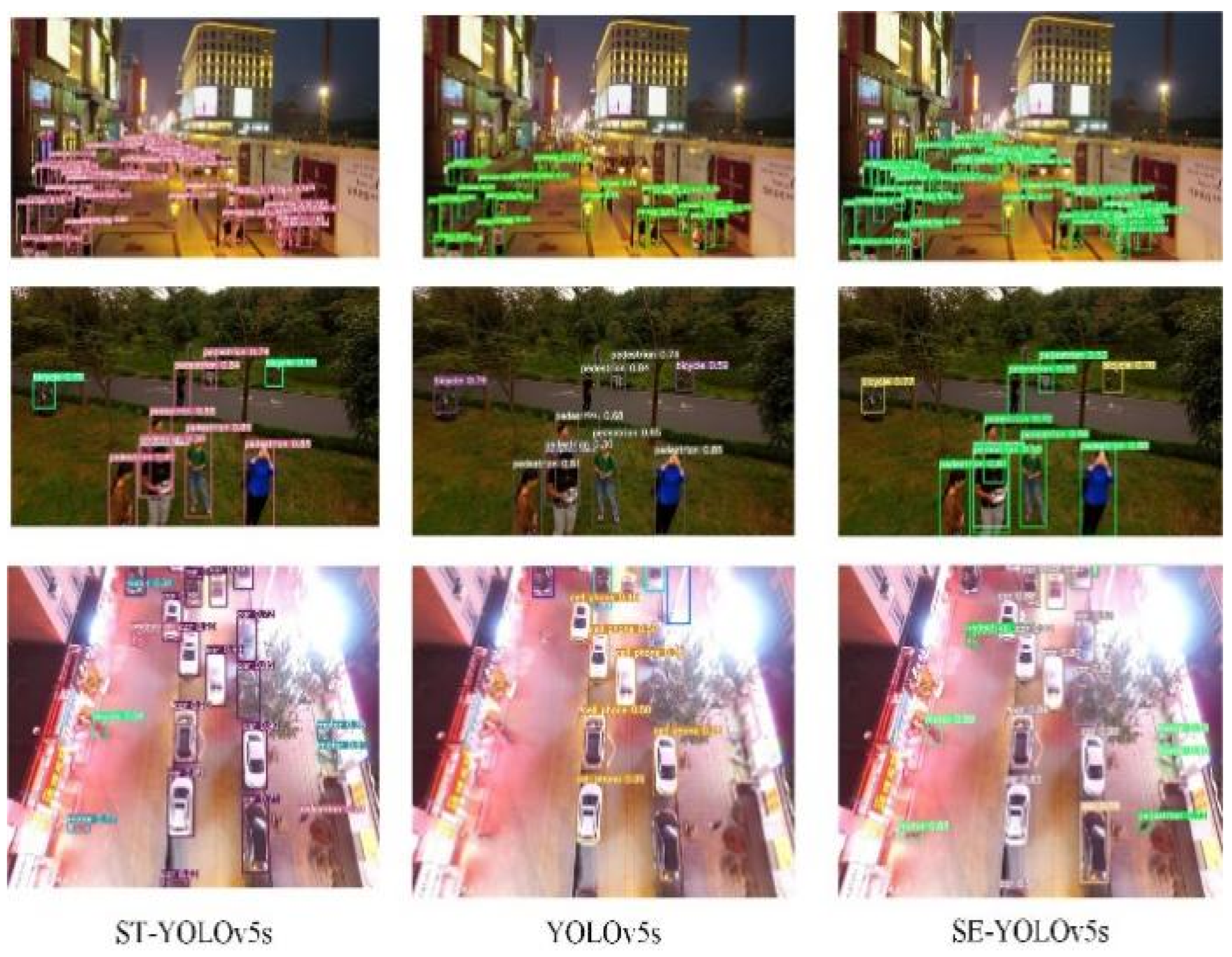

A typical example of detection by the different algorithms on the VisDrone dataset is shown in Figure 9. Compared with the baseline YOLOv5s, the two improved algorithms have a higher detection rate for small targets; YOLOv5s fails to detect the vehicles in the service area. However, the false detection rate of ST-YOLOv5s is higher than that of SE-YOLOv5s: as Figure 9 shows, ST-YOLOv5s recognizes the gas station as a Truck. The example also shows that the size of a target in an aerial image is closely related to the flight altitude. When the UAV flies at a certain height, the captured image contains more information and individual targets become harder to observe. For example, detecting the Car at the gas station in Figure 9 requires focusing on a smaller field of view, so the neural network needs a good receptive field. Comparing the detection results of the three algorithms, SE-YOLOv5s performs better than the other two on distant small targets that occupy a small field of view, which suggests that SE-YOLOv5s has a better receptive field than the other two networks.

Typical examples of the different algorithms detecting and identifying targets in images taken from various perspectives in the VisDrone dataset are shown in Figure 9. Comparing the images taken from different perspectives shows that the features available for a target in an aerial image are closely related to the viewing angle. When the drone flies directly above the targets, most targets in the captured image show global features, but some show only local features. For example, objects at the edge of the image are difficult to observe in full because they are partially occluded. The neural network therefore needs to extract multi-scale features and identify targets from local features.

As can be seen from Figure 9, YOLOv5s failed to detect two kinds of targets, namely bicycles and motorcycles, while the detection results of ST-YOLOv5s and SE-YOLOv5s were similar for the image shot at a head-up angle. In the image shot from an overhead angle, SE-YOLOv5s failed to detect the target at the edge of the image because of occlusion. Compared with SE-YOLOv5s, ST-YOLOv5s can detect more objects that are at the image edge and partially occluded, because it extracts not only global features but also local features.

Figure 1.

This is a flow chart showing the development of target detection and recognition algorithms. It started with VJ Det algorithm in 2001 and ended with YOLOv5 algorithm in 2021. Later, it is divided into the one-stage target detection and recognition algorithm and two-stage target detection and recognition algorithm.

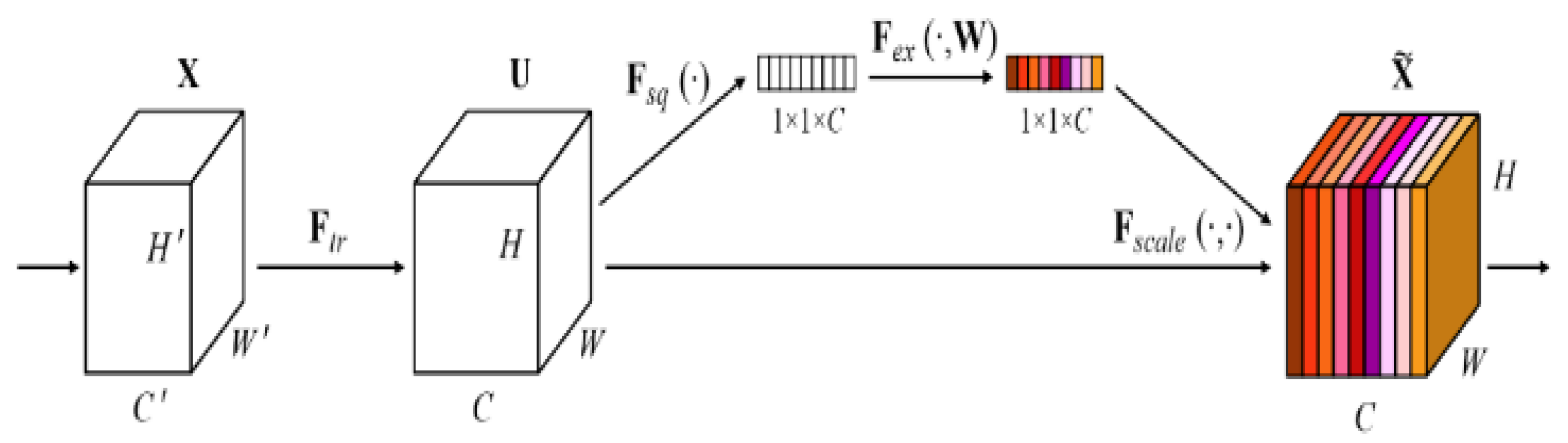

Figure 2.

This is a diagram showing the experimental principle and structure of SE model. The structure of SE model mainly includes two parts: Squeeze and Excitation. The working principle is to enhance the performance of the model by strengthening the channel features of the input feature map.

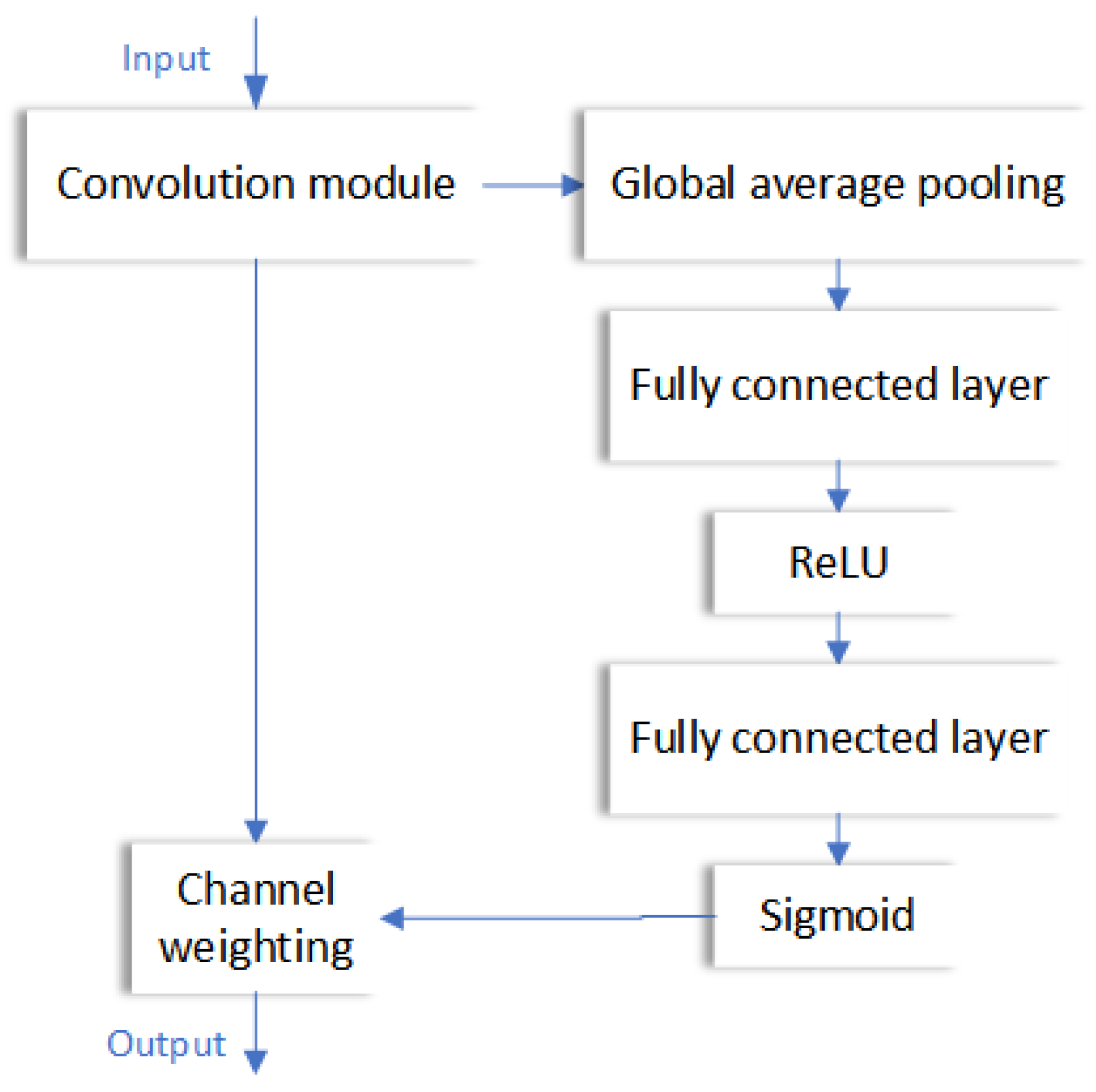

Figure 3.

This is a workflow diagram representing the SENet model. By introducing SE module to enhance the perception of features, the performance of the model is improved, which is mainly used in image classification tasks.

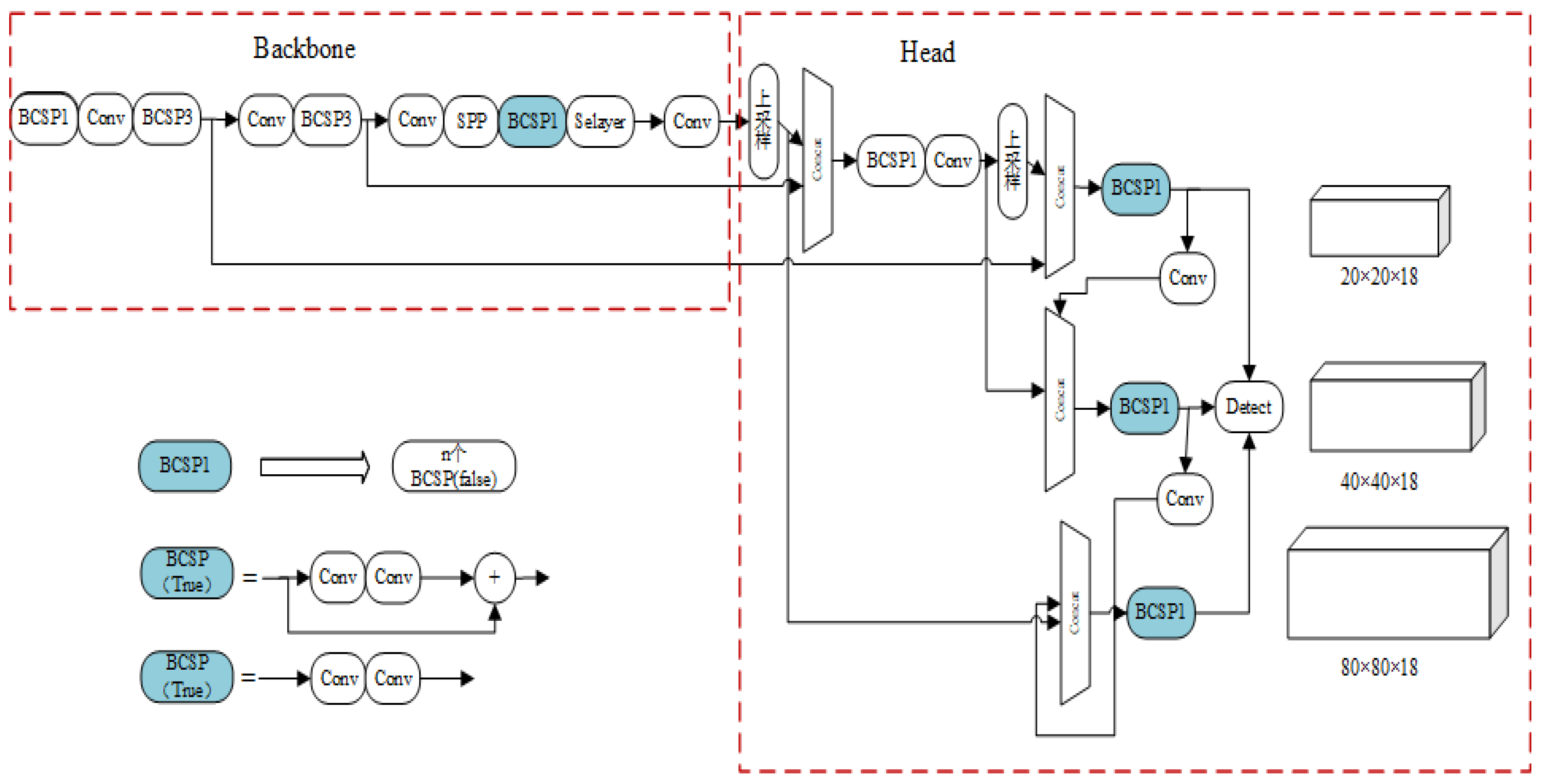

Figure 4.

Workflow and schematic diagram of the improved SE-YOLOv5s model. Introducing the SE-Layer module improves the performance of target detection and recognition: it increases the attention of the model so that it focuses more on the key areas of the image.

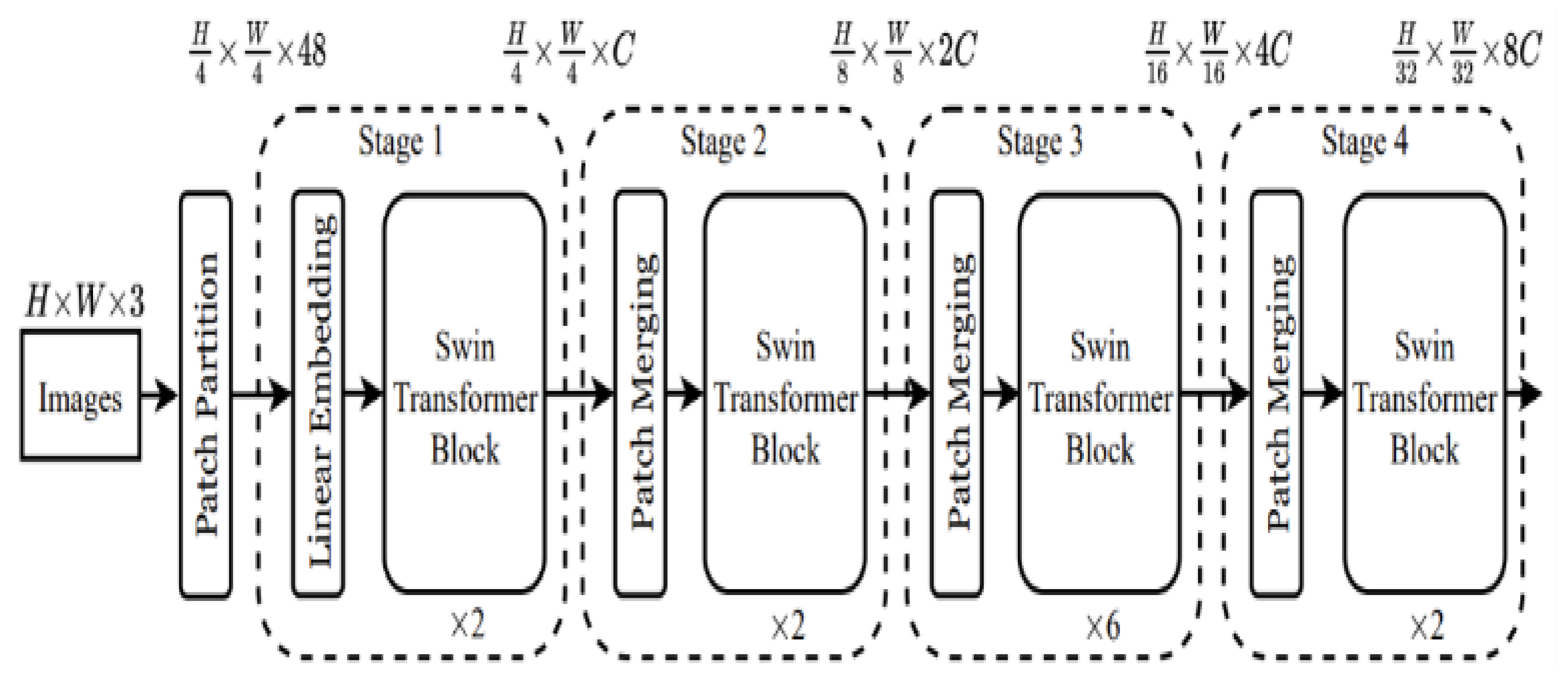

Figure 5.

This is a schematic representation of the structure and workflow of Swin Transformer Tiny. Through multiple Transformer modules and multi-level feature extraction and transformation mechanism, the deep-level feature extraction of the input image is realized.

Figure 6.

This is a schematic representation of the structure and workflow of Swin Transformer Tiny. Through multiple Transformer modules and multi-level feature extraction and transformation mechanism, the deep-level feature extraction of the input image is realized.

Figure 7.

Structure and workflow diagram of the improved ST-YOLOv5s. The structure of ST-YOLOv5s includes a Swin-T model, which uses hierarchical Transformer modules to extract features at different scales. Its shifted-window strategy helps capture global context information in the image, while the multi-head attention mechanism allows task-related information to be learned in separate representation subspaces.

Figure 8.

Training convergence curves of YOLOv5s, SE-YOLOv5s and ST-YOLOv5s. Recall, mAP and Precision are used to evaluate the performance of the models. The results show that the improved SE-YOLOv5s and ST-YOLOv5s models perform better than the unimproved YOLOv5s model.

Figure 9.

The experimental results of YOLOv5s, SE-YOLOv5s and ST-YOLOv5s on VisDrone. In the picture, we can see the target classification and the corresponding probability obtained from target detection and recognition.

Figure 10.

The experimental results of YOLOv5s, SE-YOLOv5s and ST-YOLOv5s on VisDrone. In the picture, we can see the target classification and the corresponding probability obtained from target detection and recognition.

Table 1.

Performance comparison of YOLOv5 models of different sizes. YOLOv5x achieves the highest mAP, but it also has the largest GFLOPs and parameter size and the lowest FPS; YOLOv5s is the smallest and fastest of the four models.

| Model | mAP (%) | GFLOPs (Billion) | FPS | Params (MB) |
| --- | --- | --- | --- | --- |
| YOLOv5s | 44.3 | 16.4 | 113 | 7.07 |
| YOLOv5m | 48.2 | 50.4 | 65 | 21.07 |
| YOLOv5l | 50.4 | 114.3 | 28 | 46.68 |
| YOLOv5x | 51.3 | 140.2 | 25 | 89.0 |

Table 2.

Overview of the VisDrone19 dataset [18]. VisDrone19 is a UAV aerial imagery dataset collected by the AISKYEYE team of the Machine Learning and Data Mining Laboratory of Tianjin University.

| Data Information | Basic Situation |
| --- | --- |
| Partition of data | 6471 training images, 1580 test images, 548 validation images |
| Data scene | Cities, villages, highways |
| Target type | 23 categories of vehicles, pedestrians and bicycles |
| Resolution | 1024 × 1024 pixels |
| Data source | Drone shooting |

Table 3.

Equipment and software configuration of the training environment.

| Parameter | Configuration |
| --- | --- |
| GPU model | NVIDIA GeForce RTX 3090 |
| CPU model | Intel(R) Xeon(R) Gold 6330 |
| System memory (RAM) | 30 GB |
| GPU memory | 24 GB |
| Deep learning framework | PyTorch 1.9.0 |
| CUDA | CUDA 11.1 |
| Programming environment | Python 3.8 |

Table 4.

Equipment and software configuration of the off-line test environment.

| Parameter | Configuration |
| --- | --- |
| GPU model | NVIDIA GeForce RTX 3060 |
| CPU model | Intel(R) Core(TM) i9-12900H |
| System memory (RAM) | 16 GB |
| GPU memory | 6 GB |
| Deep learning framework | PyTorch 1.12.0 |
| CUDA | CUDA 11.6 |
| Programming environment | Python 3.8 |

Table 5.

Detection accuracy of YOLOv5s, SE-YOLOv5s and ST-YOLOv5s on the VisDrone19 test set. Recall, mAP and Precision are used to evaluate the performance of the models. The results show that the improved SE-YOLOv5s and ST-YOLOv5s models achieve higher mAP and Precision than the unimproved YOLOv5s model.

| Algorithms | mAP (%) | Precision (%) | Recall (%) |
| --- | --- | --- | --- |
| YOLOv5s | 45.7 | 55.8 | 46.6 |
| SE-YOLOv5s | 47.8 | 60.1 | 46.6 |
| ST-YOLOv5s | 47.6 | 57.1 | 46.0 |

Table 6.

Efficiency comparison of YOLOv5s, SE-YOLOv5s and ST-YOLOv5s. Five indicators (Time, FPS, Params, GFLOPs and Scale) are used to evaluate the real-time performance and size of the models.

| Algorithms | Time (ms) | FPS | Params | GFLOPs | Scale (MB) |
| --- | --- | --- | --- | --- | --- |
| YOLOv5s | 12 | 83.3 | 7396135 | 16.8 | 15.2 |
| SE-YOLOv5s | 16 | 62.5 | 7078183 | 16.4 | 14.5 |
| ST-YOLOv5s | 17 | 58.8 | 7122891 | 17.0 | 14.6 |