Submitted: 30 October 2023

Posted: 03 November 2023


Abstract

Keywords:

1. Introduction

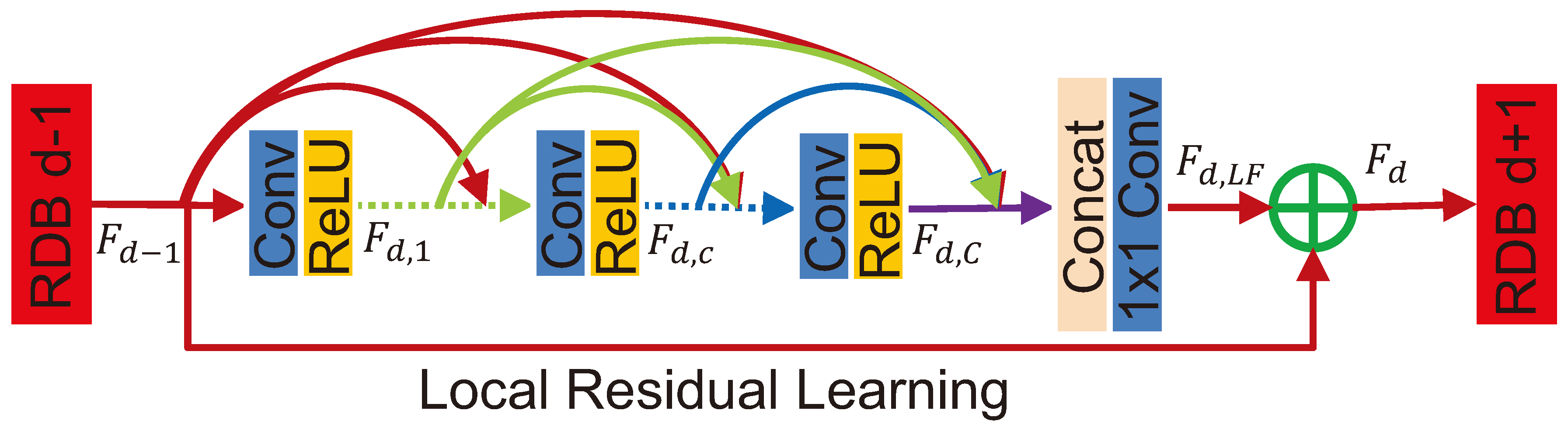

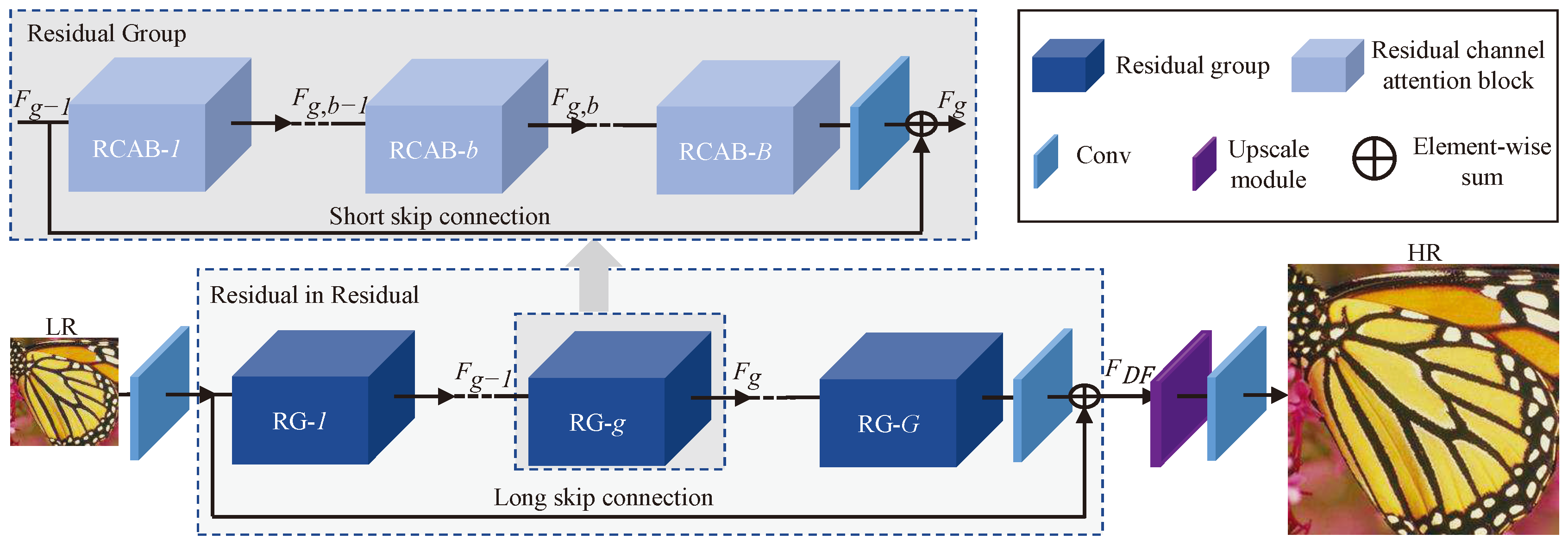

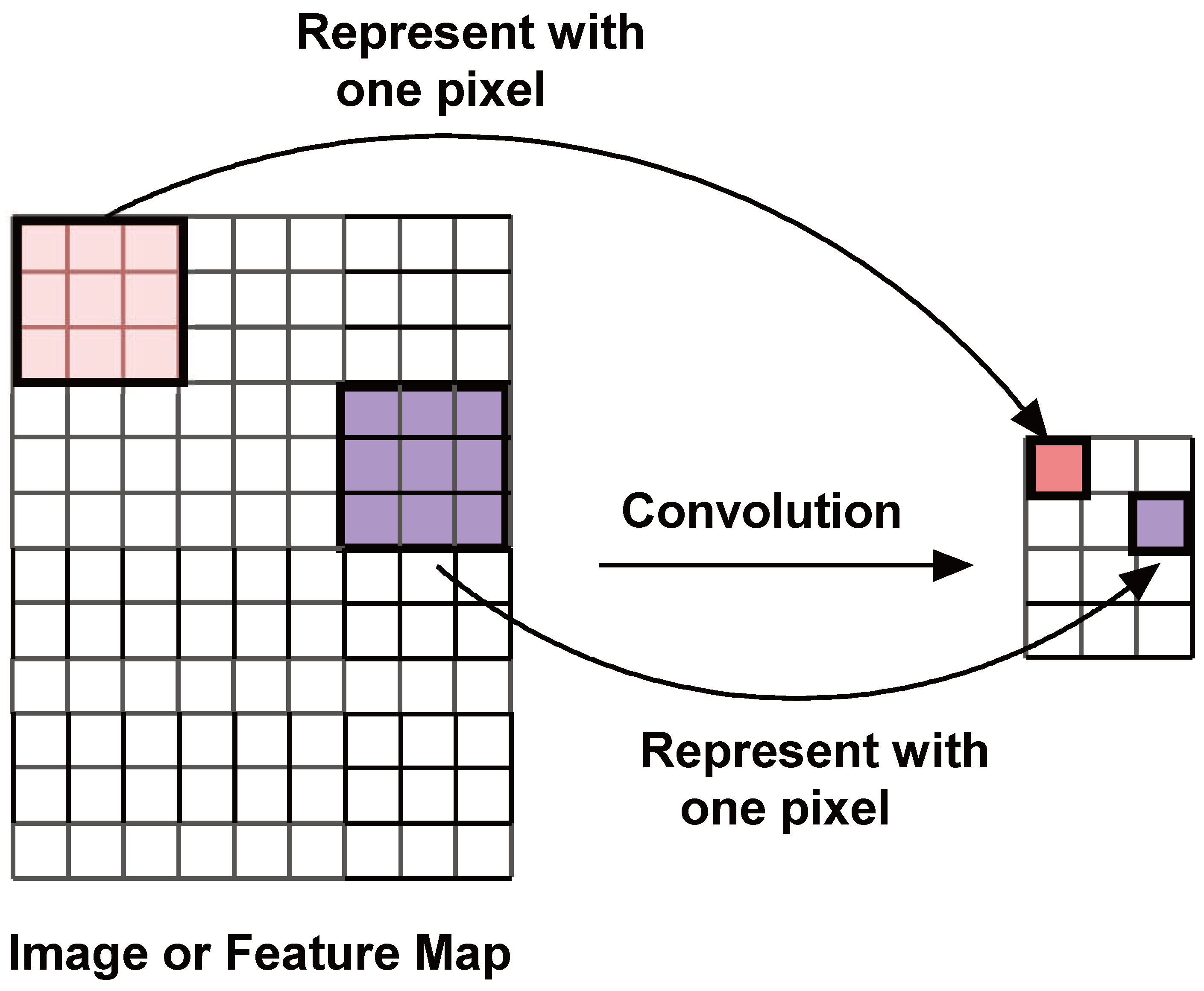

2. Related Work

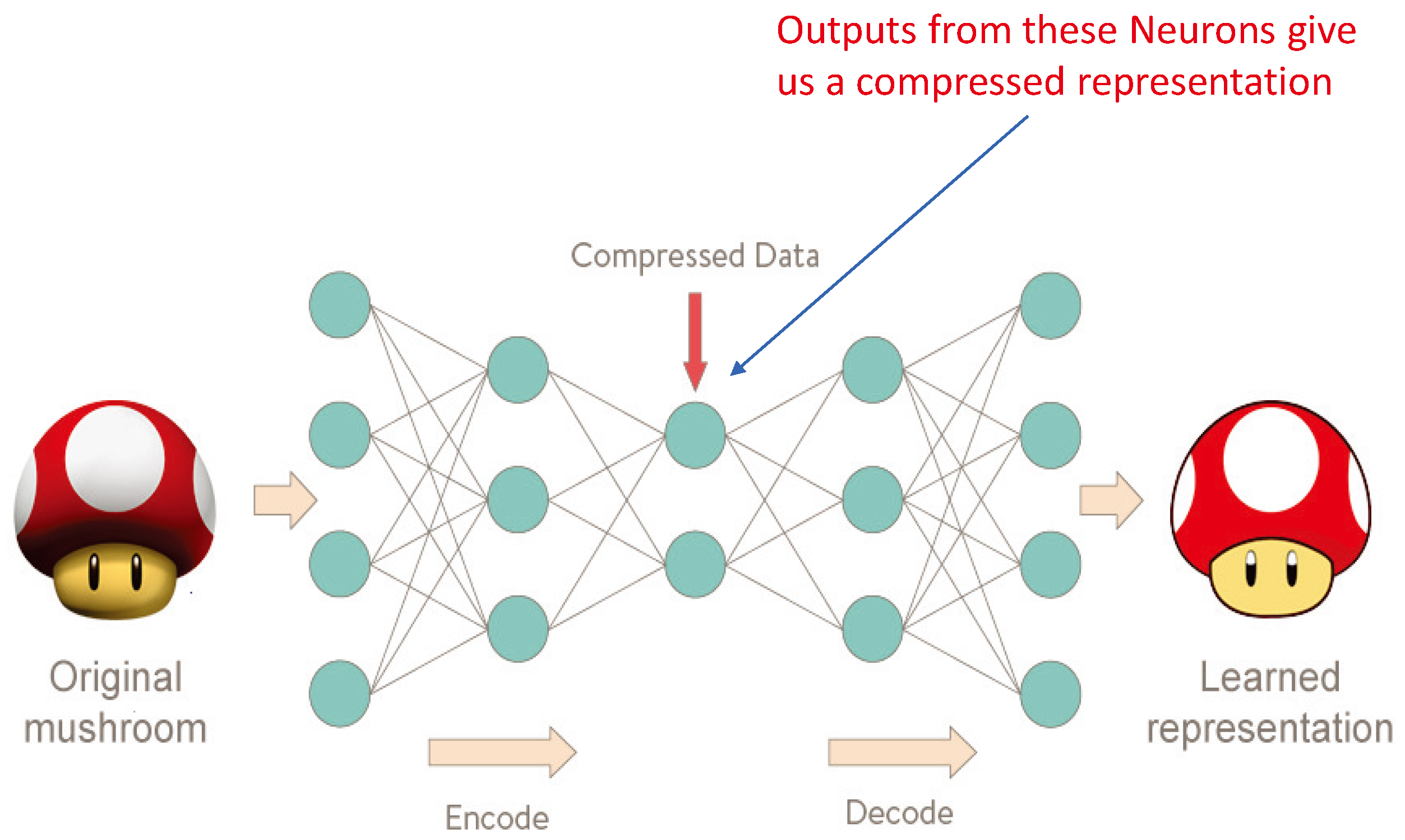

3. Autoencoders

4. Problem Statement and the Model

5. Proposed Method

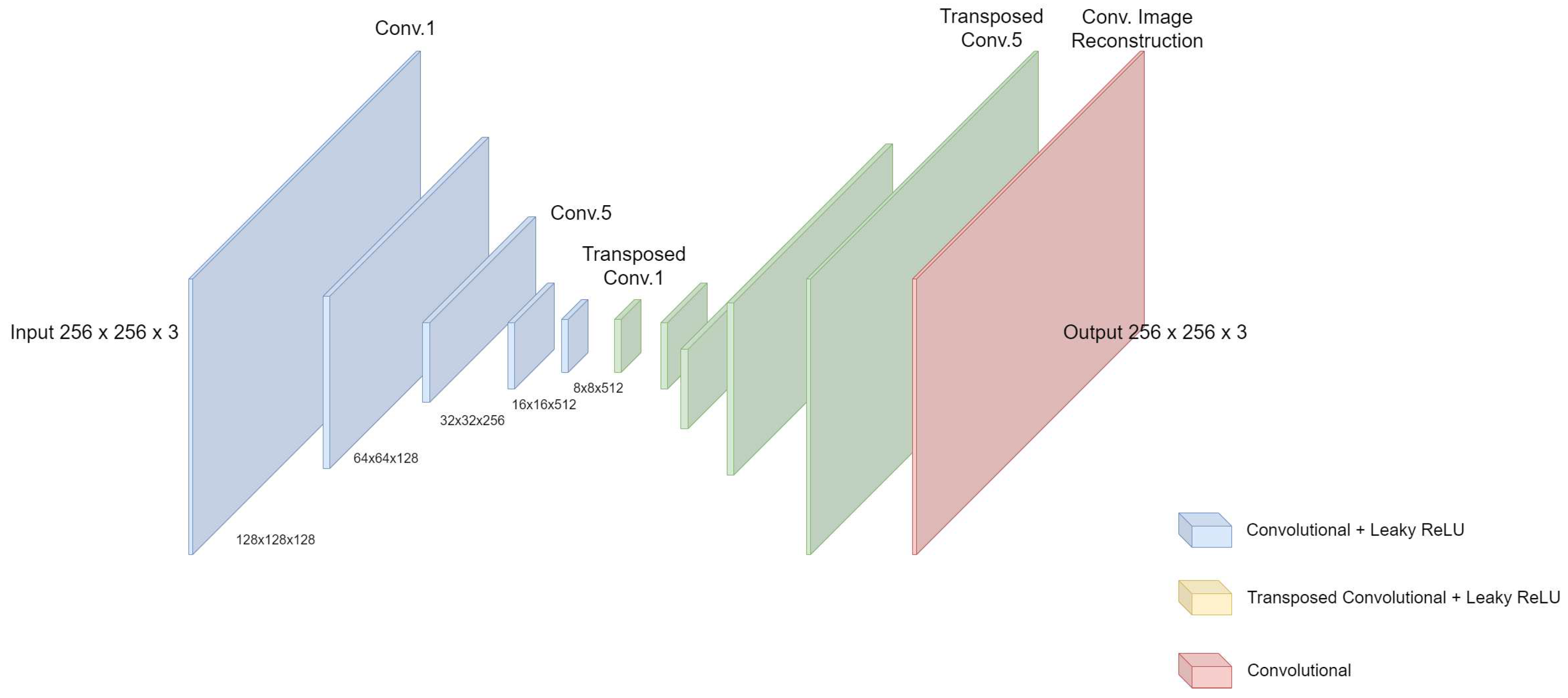

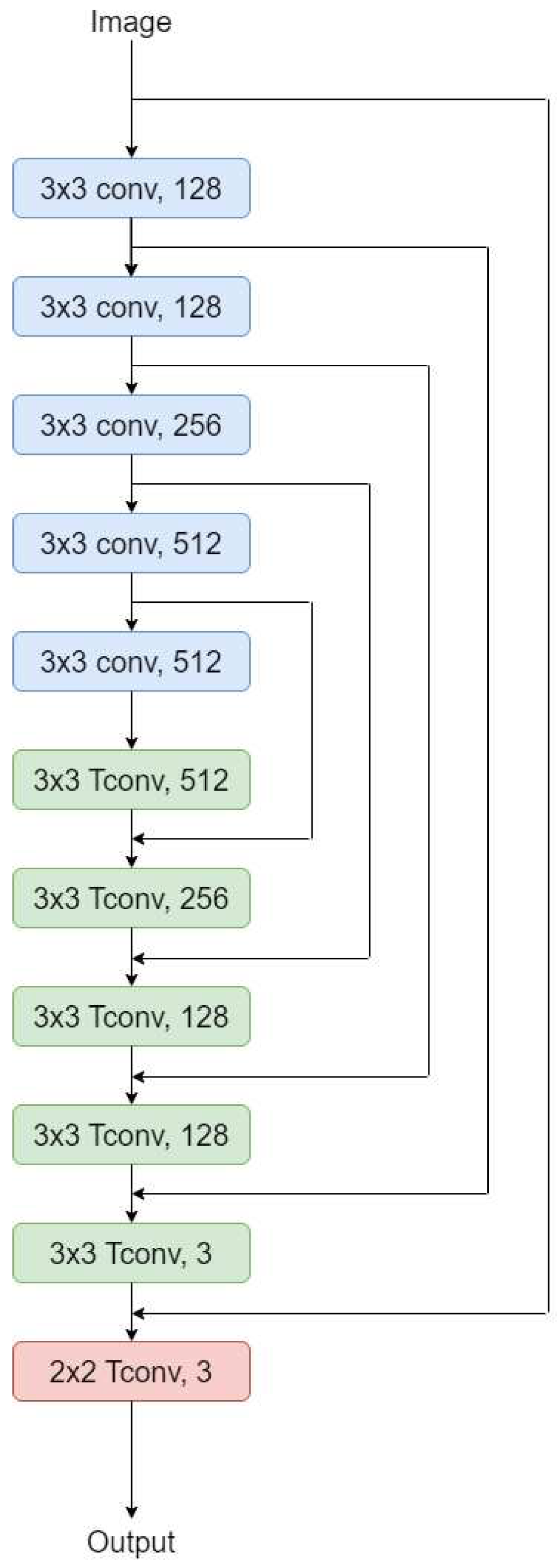

5.1. Proposed Network

5.2. Training

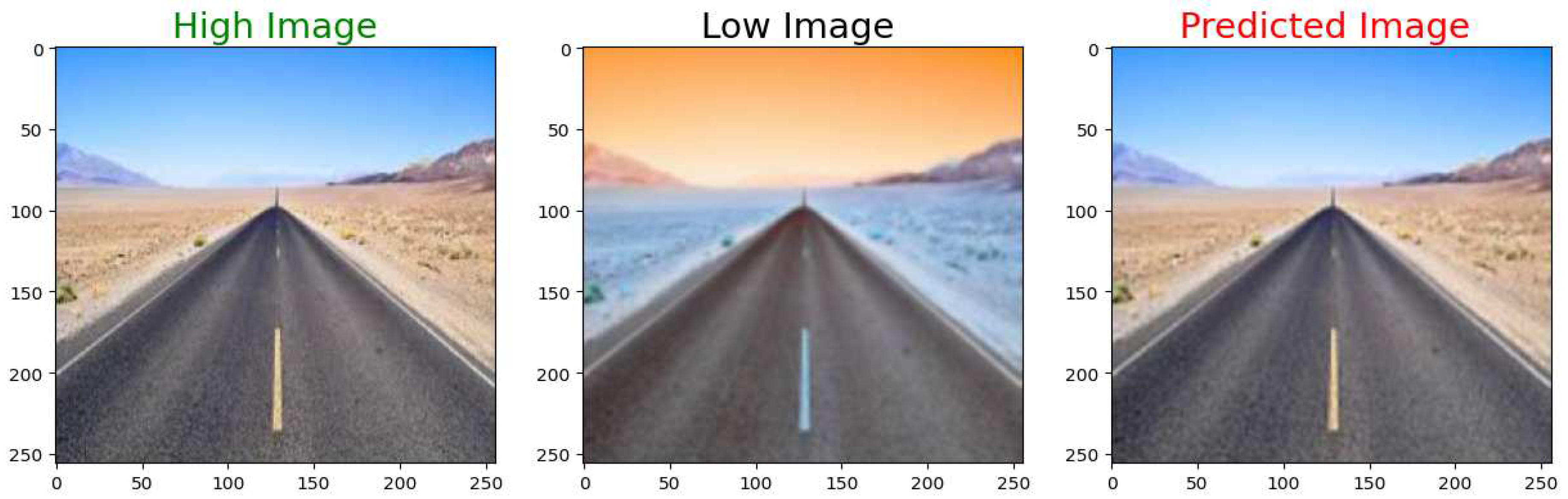

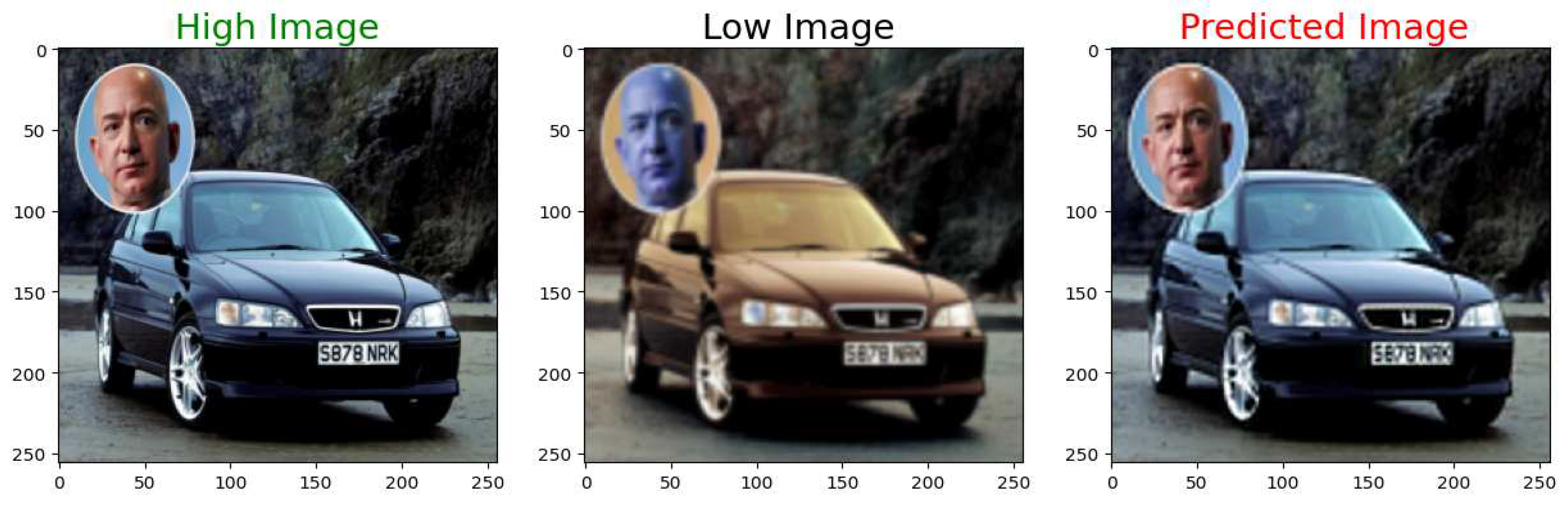

6. Experiments and Results

6.1. Training Data

6.2. Training Parameters

6.3. Model and Performance Trade-off

7. Conclusions

Data Availability Statement

- https://www.kaggle.com/datasets/adityachandrasekhar/image-super-resolution

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| SISR | Single Image Super-Resolution |
| CNN | Convolutional Neural Network |
| SNR | Signal-to-Noise Ratio |
| PSNR | Peak Signal-to-Noise Ratio |
| SSIM | Structural Similarity Index |

Appendix A

    ###################################
    #### Importing the Libraries #####
    ###################################
    import numpy as np
    import tensorflow as tf
    import keras
    import cv2
    from keras.models import Sequential
    from tensorflow.keras.utils import img_to_array
    from skimage.metrics import structural_similarity as ssim
    import math
    import os
    from tqdm import tqdm
    import re
    import matplotlib.pyplot as plt
    from google.colab import drive

    drive.mount('/content/gdrive')

    ################################
    ##### Managing the Files #####
    ################################
    def sorted_alphanumeric(data):
        # natural sort, so that '2.jpg' comes before '10.jpg'
        convert = lambda text: int(text) if text.isdigit() else text.lower()
        alphanum_key = lambda key: [convert(c) for c in re.split('([0-9]+)', key)]
        return sorted(data, key=alphanum_key)

    # defining the size of the image
    SIZE = 256

    high_img = []
    #os.makedirs('gdrive/MyDrive/TMU/Neural_Networks/Project/kaggle/Raw Data/high_res', exist_ok=True)
    path = 'gdrive/MyDrive/TMU/Neural_Networks/Project/kaggle/Raw Data/high_res'
    files = os.listdir(path)
    files = sorted_alphanumeric(files)
    for i in tqdm(files):
        if i == '855.jpg':
            break
        else:
            img = cv2.imread(path + '/' + i, 1)
            # open cv reads images in BGR format so we have to convert it to RGB
            img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
            # resizing image and scaling pixel values to [0, 1]
            img = cv2.resize(img, (SIZE, SIZE))
            img = img.astype('float32') / 255.0
            high_img.append(img_to_array(img))

    low_img = []
    path = 'gdrive/MyDrive/TMU/Neural_Networks/Project/kaggle/Raw Data/low_res'
    files = os.listdir(path)
    files = sorted_alphanumeric(files)
    for i in tqdm(files):
        if i == '855.jpg':
            break
        else:
            img = cv2.imread(path + '/' + i, 1)
            # convert BGR to RGB so the inputs match the high-resolution targets
            img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
            # resizing image and scaling pixel values to [0, 1]
            img = cv2.resize(img, (SIZE, SIZE))
            img = img.astype('float32') / 255.0
            low_img.append(img_to_array(img))
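The `sorted_alphanumeric` helper matters because `os.listdir` returns names in arbitrary order, and the two loops pair high- and low-resolution images purely by position and stop at `855.jpg`. A plain string sort would place `10.jpg` before `2.jpg`. The standalone check below uses only the standard library, and its file names are hypothetical examples rather than the dataset listing:

```python
import re

def sorted_alphanumeric(data):
    # Split each name into digit and non-digit runs; digit runs compare as integers.
    convert = lambda text: int(text) if text.isdigit() else text.lower()
    alphanum_key = lambda key: [convert(c) for c in re.split('([0-9]+)', key)]
    return sorted(data, key=alphanum_key)

# Hypothetical file names, for illustration only.
names = ['10.jpg', '2.jpg', '1.jpg', '855.jpg', '100.jpg']
print(sorted(names))               # ['1.jpg', '10.jpg', '100.jpg', '2.jpg', '855.jpg']
print(sorted_alphanumeric(names))  # ['1.jpg', '2.jpg', '10.jpg', '100.jpg', '855.jpg']
```

Because both folders are sorted the same way, the i-th low-resolution image lines up with the i-th high-resolution image, assuming both folders use matching file names.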

    ##################################
    ##### Visualizing the Data #####
    ##################################
    for i in range(4):
        # pick a random image pair from the loaded data
        a = np.random.randint(0, len(high_img))
        plt.figure(figsize=(10, 10))
        plt.subplot(1, 2, 1)
        plt.title('High Resolution Image', color='green', fontsize=20)
        plt.imshow(high_img[a])
        plt.axis('off')
        plt.subplot(1, 2, 2)
        plt.title('Low Resolution Image', color='black', fontsize=20)
        plt.imshow(low_img[a])
        plt.axis('off')
        plt.show()

    ################################
    ##### Reshaping the Data #####
    ################################
    # first 700 pairs for training
    train_high_image = high_img[:700]
    train_low_image = low_img[:700]
    train_high_image = np.reshape(train_high_image,
                                  (len(train_high_image), SIZE, SIZE, 3))
    train_low_image = np.reshape(train_low_image,
                                 (len(train_low_image), SIZE, SIZE, 3))

    # next 130 pairs for validation
    validation_high_image = high_img[700:830]
    validation_low_image = low_img[700:830]
    validation_high_image = np.reshape(validation_high_image,
                                       (len(validation_high_image), SIZE, SIZE, 3))
    validation_low_image = np.reshape(validation_low_image,
                                      (len(validation_low_image), SIZE, SIZE, 3))

    # remaining pairs for testing
    test_high_image = high_img[830:]
    test_low_image = low_img[830:]
    test_high_image = np.reshape(test_high_image,
                                 (len(test_high_image), SIZE, SIZE, 3))
    test_low_image = np.reshape(test_low_image,
                                (len(test_low_image), SIZE, SIZE, 3))

    print("Shape of training images:", train_high_image.shape)
    print("Shape of test images:", test_high_image.shape)
    print("Shape of validation images:", validation_high_image.shape)
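The reshaping step converts each Python list of `(SIZE, SIZE, 3)` arrays into a single 4-D array of shape `(N, SIZE, SIZE, 3)`, which is what Keras expects. The NumPy-only sketch below uses tiny stand-in arrays in place of the real images. It checks that `np.stack` produces the same array as the `np.reshape` calls above and counts how many images land in each slice. The hypothetical helper `split_sizes` assumes 855 loaded pairs, a figure inferred from the loading loop stopping at `855.jpg`.

```python
import numpy as np

SIZE = 4  # tiny stand-in for the 256-pixel images used in the appendix

# Hypothetical list of per-image arrays, each of shape (SIZE, SIZE, 3).
images = [np.full((SIZE, SIZE, 3), k, dtype=np.float32) for k in range(10)]

stacked = np.stack(images)  # adds a leading batch axis: (10, SIZE, SIZE, 3)
reshaped = np.reshape(images, (len(images), SIZE, SIZE, 3))
print(stacked.shape, np.array_equal(stacked, reshaped))  # (10, 4, 4, 3) True

def split_sizes(n, train_end=700, val_end=830):
    # Sizes of images[:700], images[700:830] and images[830:] for n images.
    train = min(n, train_end)
    val = max(0, min(n, val_end) - train_end)
    test = max(0, n - val_end)
    return train, val, test

print(split_sizes(855))  # (700, 130, 25), assuming 855 loaded pairs
```

Under that assumption, 25 test pairs remain. The evaluation loop later in the appendix uses nine of them (indices 1 to 9).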

    ###############################
    ##### Defining the Model #####
    ###############################
    from keras import layers

    def down(filters, kernel_size, apply_batch_normalization=True):
        # encoder block: a stride-2 convolution halves height and width
        downsample = tf.keras.models.Sequential()
        downsample.add(layers.Conv2D(filters, kernel_size, padding='same',
                                     strides=2))
        if apply_batch_normalization:
            downsample.add(layers.BatchNormalization())
        downsample.add(keras.layers.LeakyReLU())
        return downsample

    def up(filters, kernel_size, dropout=False):
        # decoder block: a stride-2 transposed convolution doubles height and width
        upsample = tf.keras.models.Sequential()
        upsample.add(layers.Conv2DTranspose(filters, kernel_size,
                                            padding='same', strides=2))
        if dropout:
            # Sequential has no dropout() method; add a Dropout layer instead
            upsample.add(layers.Dropout(0.2))
        upsample.add(keras.layers.LeakyReLU())
        return upsample

    def model():
        inputs = layers.Input(shape=[SIZE, SIZE, 3])
        # downsampling: 256 -> 128 -> 64 -> 32 -> 16 -> 8
        d1 = down(128, (3, 3), False)(inputs)
        d2 = down(128, (3, 3), False)(d1)
        d3 = down(256, (3, 3), False)(d2)
        d4 = down(512, (3, 3), False)(d3)
        d5 = down(512, (3, 3), False)(d4)
        # upsampling, concatenating each output with the encoder
        # feature map (or the input) of the same spatial size
        u1 = up(512, (3, 3), False)(d5)
        u1 = layers.concatenate([u1, d4])
        u2 = up(256, (3, 3), False)(u1)
        u2 = layers.concatenate([u2, d3])
        u3 = up(128, (3, 3), False)(u2)
        u3 = layers.concatenate([u3, d2])
        u4 = up(128, (3, 3), False)(u3)
        u4 = layers.concatenate([u4, d1])
        u5 = up(3, (3, 3), False)(u4)
        u5 = layers.concatenate([u5, inputs])
        output = layers.Conv2D(3, (2, 2), strides=1, padding='same')(u5)
        return tf.keras.Model(inputs=inputs, outputs=output)

    model = model()
    model.summary()
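The network resembles a U-Net-style encoder-decoder. Five stride-2 `down` blocks reduce the resolution from 256 to 8. Five stride-2 `up` blocks then restore it, and each upsampled tensor is concatenated with the encoder output of the same size, or with the input image at the last step. The sketch below is pure Python and does not build the Keras model. It traces the spatial size and channel count at each stage, assuming that `padding='same'` with stride 2 gives `ceil(n / 2)` for `Conv2D` and `2 * n` for `Conv2DTranspose`. The function name `trace_shapes` is hypothetical.

```python
import math

def trace_shapes(size=256):
    # Filter counts of the five `down` and five `up` blocks in the appendix model.
    down_filters = [128, 128, 256, 512, 512]
    up_filters = [512, 256, 128, 128, 3]

    shapes = {'input': (size, size, 3)}
    skips = [shapes['input']]
    h = size
    for k, f in enumerate(down_filters, start=1):
        h = math.ceil(h / 2)          # Conv2D, strides=2, padding='same'
        shapes[f'd{k}'] = (h, h, f)
        skips.append(shapes[f'd{k}'])

    # Skip partners in the order the model concatenates them: d4, d3, d2, d1, input.
    partners = skips[-2::-1]
    for k, (f, skip) in enumerate(zip(up_filters, partners), start=1):
        h = h * 2                     # Conv2DTranspose, strides=2, padding='same'
        if (h, h) != skip[:2]:
            raise ValueError('skip connection sizes do not match')
        shapes[f'u{k}'] = (h, h, f + skip[2])  # channels add up on concatenation
    shapes['output'] = (h, h, 3)      # final Conv2D with 3 filters, stride 1
    return shapes

for name, shape in trace_shapes().items():
    print(name, shape)
```

The trace confirms that the output keeps the 256 x 256 x 3 input shape. This is why the model maps a resized low-resolution image to an image of the same size, rather than enlarging it.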

    ################################
    ##### Compiling the Model #####
    ################################
    model.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=0.001),
                  loss='mean_absolute_error',
                  # accuracy says little about image quality;
                  # PSNR, MSE and SSIM are computed separately below
                  metrics=['acc'])

    ###############################
    ##### Fitting the Model ######
    ###############################
    model.fit(train_low_image, train_high_image, epochs=20, batch_size=1,
              validation_data=(validation_low_image, validation_high_image))
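The model is trained with the `'mean_absolute_error'` loss. For equally shaped arrays, this is the mean absolute difference over every pixel and colour channel. With `batch_size = 1`, the weights are updated once per training image, which gives 700 steps per epoch under the 700-image split. The NumPy sketch below computes the same quantity on hypothetical arrays. It illustrates the formula only and is not the Keras implementation.

```python
import numpy as np

def mean_absolute_error(y_true, y_pred):
    # Average absolute difference over all pixels and colour channels.
    return float(np.mean(np.abs(np.asarray(y_true) - np.asarray(y_pred))))

y_true = np.zeros((1, 2, 2, 3), dtype=np.float32)        # hypothetical target batch
y_pred = np.full((1, 2, 2, 3), 0.25, dtype=np.float32)   # hypothetical prediction
print(mean_absolute_error(y_true, y_pred))  # 0.25

steps_per_epoch = 700 // 1   # training images / batch_size
print(steps_per_epoch)       # 700
```

Compared with a squared-error loss, the absolute error penalises large pixel deviations less strongly.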

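    #################################
    ##### Evaluating the Model #####
    #################################
    def psnr(target, ref):
        # note: the images are scaled to [0, 1] but the peak value used here is 255,
        # so the result is about 48.13 dB (20*log10(255)) higher than a PSNR
        # computed with a peak value of 1.0
        target_data = target.astype(float)
        ref_data = ref.astype(float)
        diff = ref_data - target_data
        diff = diff.flatten('C')
        rmse = math.sqrt(np.mean(diff ** 2.))
        return 20 * math.log10(255. / rmse)

    def mse(target, ref):
        # sum of squared errors divided by height * width,
        # so the three colour channels are summed rather than averaged
        err = np.sum((target.astype(float) - ref.astype(float)) ** 2)
        err /= float(target.shape[0] * target.shape[1])
        return err

    def compare_images(target, ref):
        scores = []
        scores.append(psnr(target, ref))
        scores.append(mse(target, ref))
        # channel_axis replaces the deprecated multichannel=True argument;
        # older scikit-image releases inferred the data range from the float
        # dtype, so SSIM values may differ slightly from those reported
        scores.append(ssim(target, ref, channel_axis=-1, data_range=1.0))
        return scores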
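The `psnr` function above uses a peak value of 255 even though the images were scaled to [0, 1]. Its output is therefore the conventional PSNR for [0, 1] data plus a constant 20·log10(255) ≈ 48.13 dB. This helps when reading the PSNR columns in the result tables, which fall roughly between 70 and 85 dB. The NumPy-only sketch below uses a hypothetical noisy image pair to show the offset. With scikit-image, `skimage.metrics.peak_signal_noise_ratio` with `data_range=1.0` would give the [0, 1] convention directly.

```python
import math
import numpy as np

def psnr(target, ref, peak):
    # PSNR = 20 * log10(peak / RMSE), with RMSE over all pixels and channels.
    diff = np.asarray(ref, dtype=float) - np.asarray(target, dtype=float)
    rmse = math.sqrt(np.mean(diff ** 2))
    return 20 * math.log10(peak / rmse)

rng = np.random.default_rng(0)
ref = rng.random((8, 8, 3))  # hypothetical image in [0, 1]
target = np.clip(ref + 0.05 * rng.standard_normal(ref.shape), 0.0, 1.0)

p255 = psnr(target, ref, peak=255.0)  # convention used in the appendix code
p1 = psnr(target, ref, peak=1.0)      # peak matching the [0, 1] data range
print(round(p255 - p1, 2))            # 48.13
```

The offset is independent of the images: it is log10 applied to the ratio of the two peak values, so relative comparisons between runs are unaffected.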

    ####################################
    ##### Visualizing Predictions #####
    ####################################

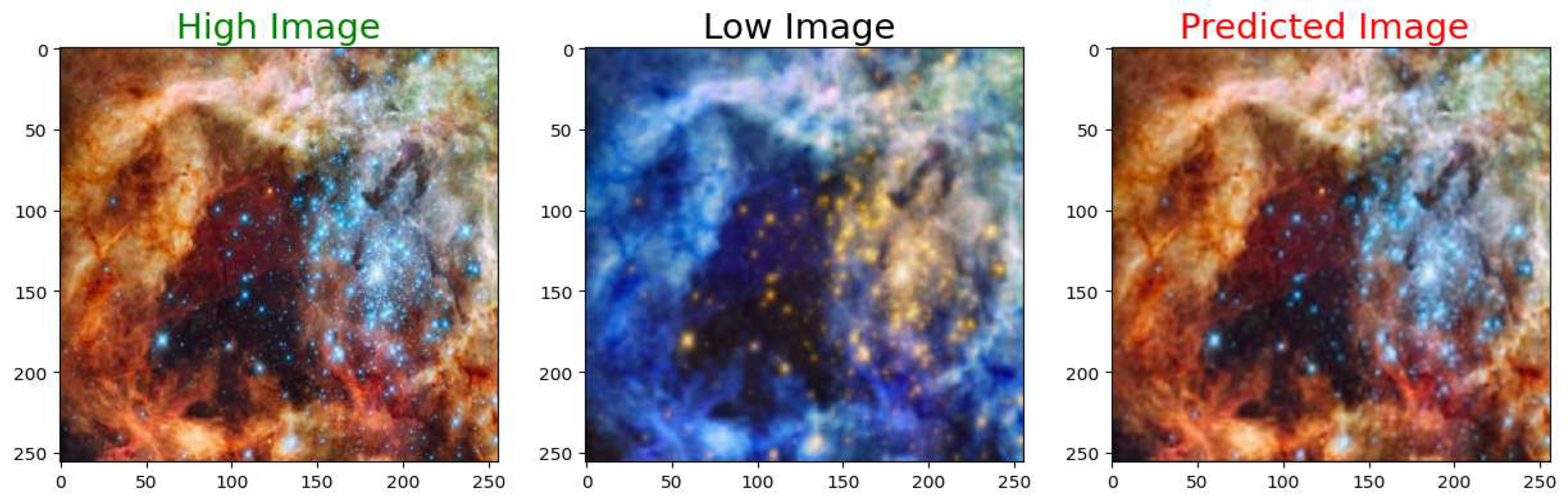

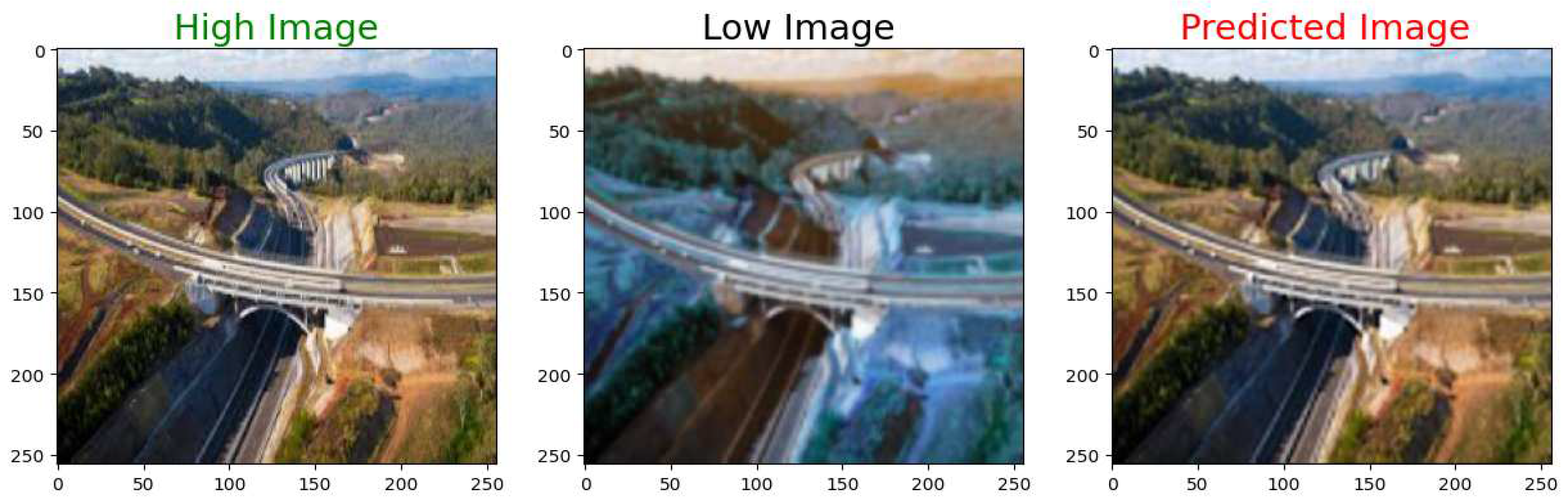

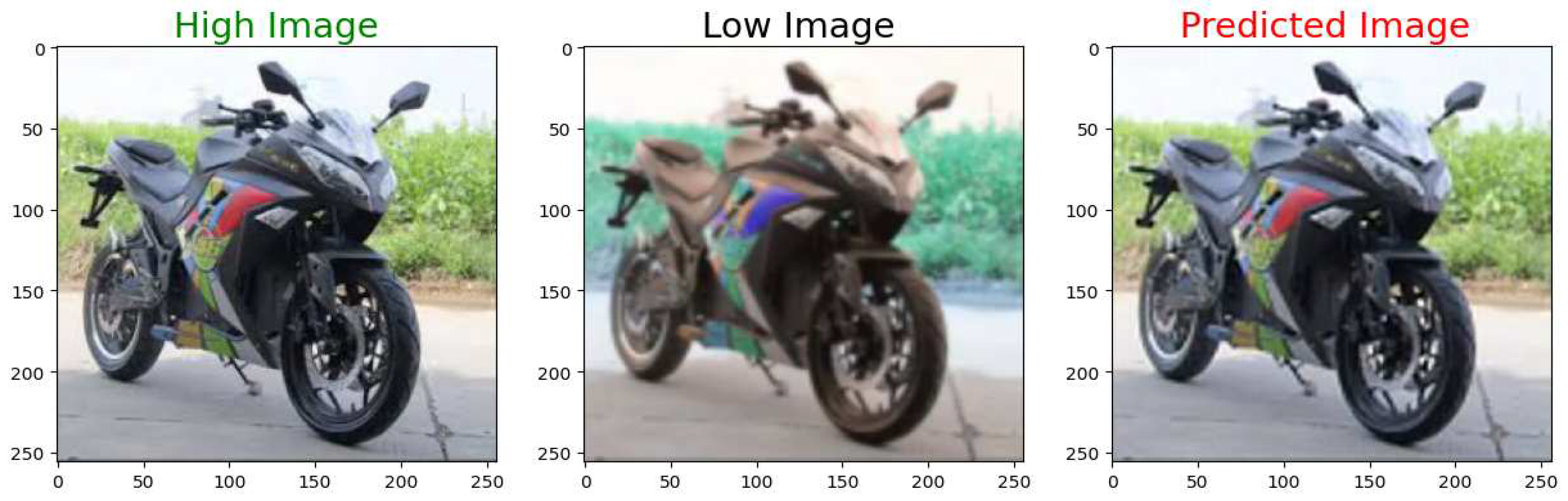

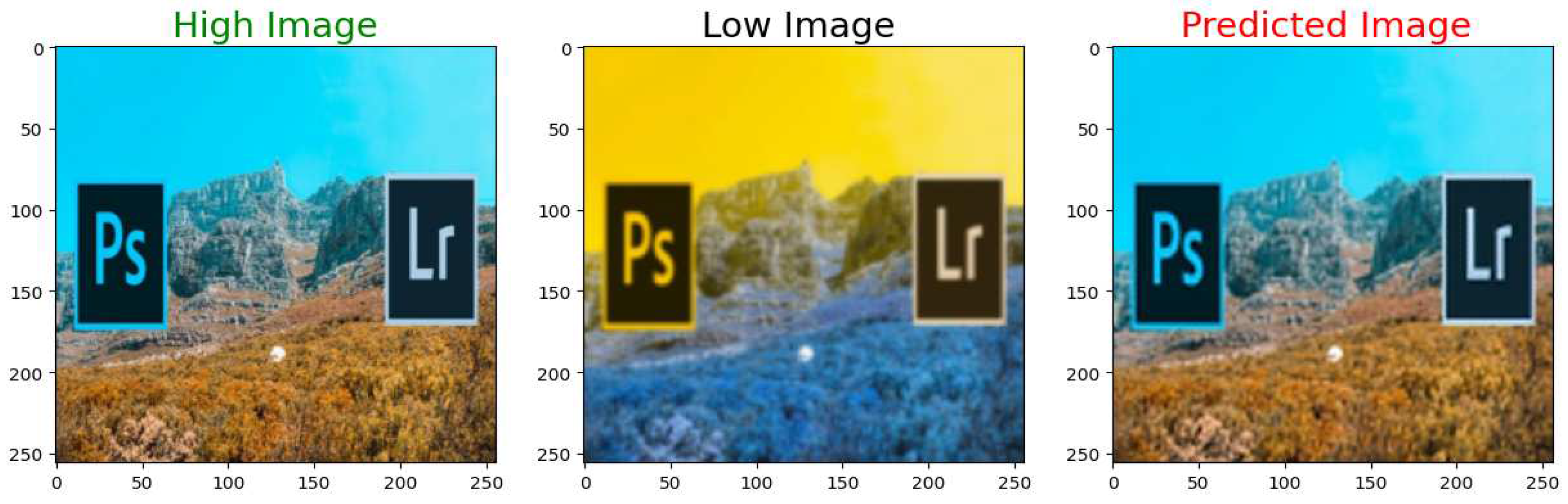

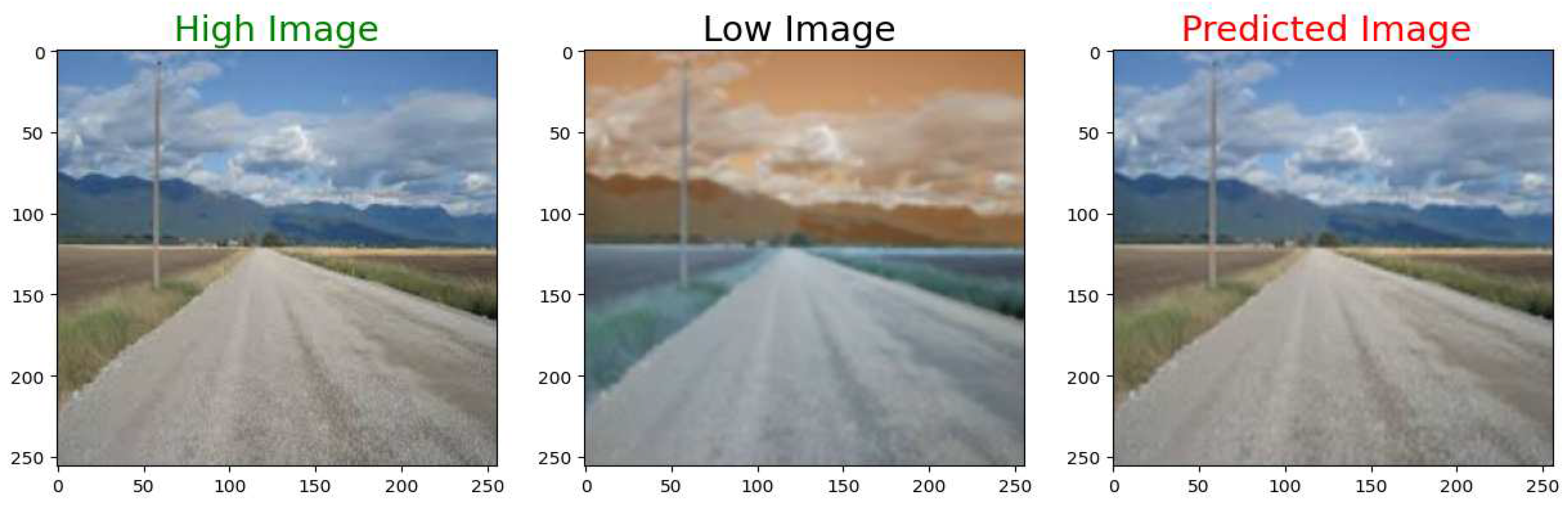

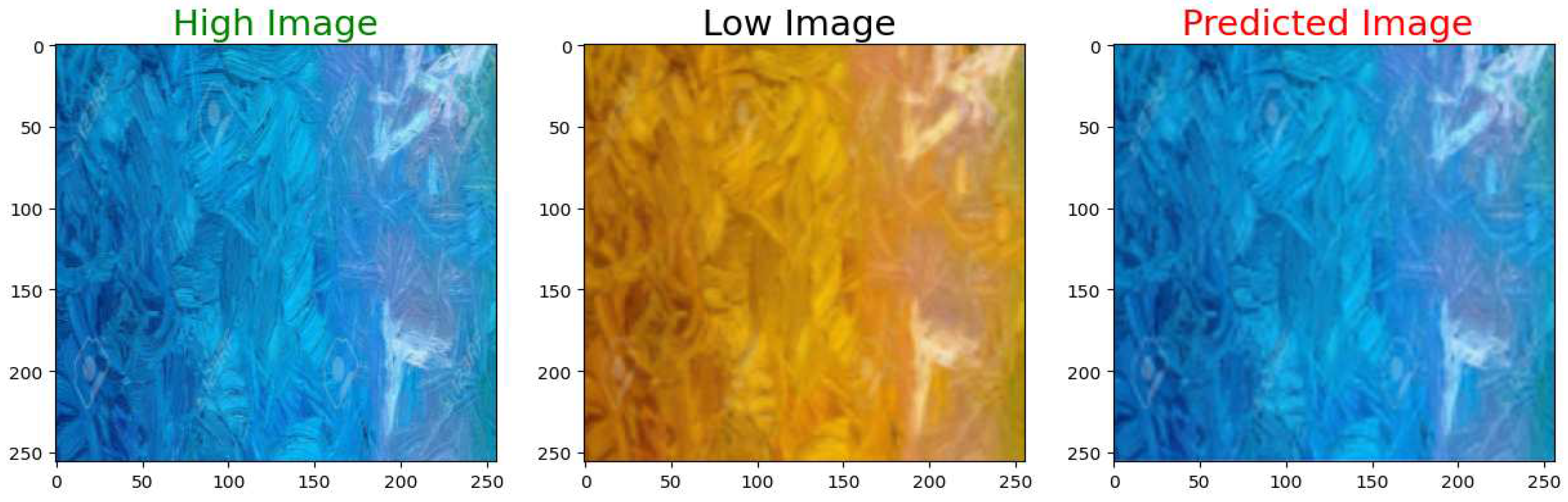

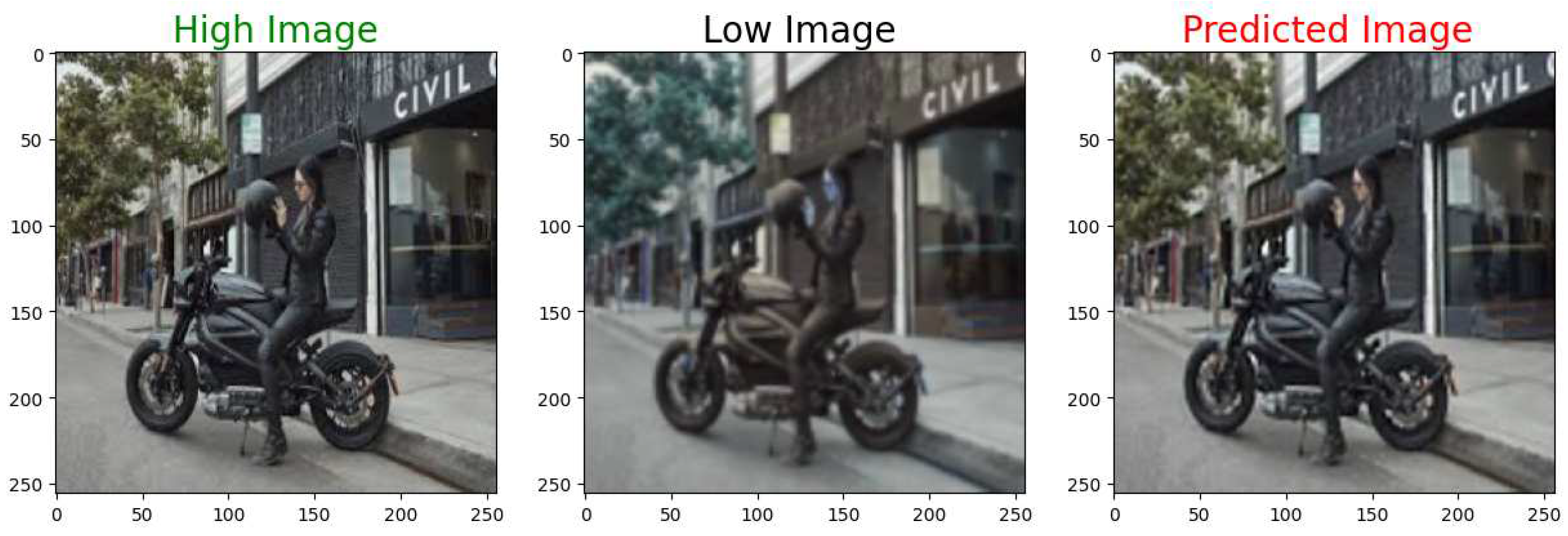

    def plot_images(high, low, predicted):
        # show target, input and prediction side by side
        plt.figure(figsize=(15, 15))
        plt.subplot(1, 3, 1)
        plt.title('High Image', color='green', fontsize=20)
        plt.imshow(high)
        plt.subplot(1, 3, 2)
        plt.title('Low Image', color='black', fontsize=20)
        plt.imshow(low)
        plt.subplot(1, 3, 3)
        plt.title('Predicted Image', color='red', fontsize=20)
        plt.imshow(predicted)
        plt.show()

    scores = []
    for i in range(1, 10):
        # predict test image i, clip to [0, 1] and drop the batch axis
        predicted = np.clip(model.predict(test_low_image[i].reshape(1, SIZE, SIZE, 3)),
                            0.0, 1.0).reshape(SIZE, SIZE, 3)
        scores.append(compare_images(predicted, test_high_image[i]))
        plot_images(test_high_image[i], test_low_image[i], predicted)

    #########################################
    ##### Saving results and the model #####
    #########################################
    import pandas as pd

    df = pd.DataFrame(scores)
    df.columns = ['PSNR', 'MSE', 'SSIM']
    df.to_csv('3_3_11_20_0.001.csv', index=False)
    model.save("final_model.h5")
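The script writes one row of PSNR, MSE and SSIM per evaluated test image to a CSV file (here `3_3_11_20_0.001.csv`). To compare training configurations, the per-image rows can be reduced to mean scores. The sketch below uses only the standard library, and the helper name `summarize_scores` is hypothetical. The demo writes a temporary file with made-up values and does not read the reported results.

```python
import csv
import os
import statistics
import tempfile

def summarize_scores(csv_path):
    # Mean of each metric column in a PSNR/MSE/SSIM results file.
    with open(csv_path, newline='') as f:
        rows = list(csv.DictReader(f))
    return {col: statistics.mean(float(r[col]) for r in rows)
            for col in ('PSNR', 'MSE', 'SSIM')}

# Demo with made-up rows, not the paper's results.
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, 'scores.csv')
    with open(path, 'w', newline='') as f:
        writer = csv.writer(f)
        writer.writerow(['PSNR', 'MSE', 'SSIM'])
        writer.writerow([70.0, 0.02, 0.80])
        writer.writerow([80.0, 0.01, 0.90])
    summary = summarize_scores(path)

print(summary)
```

Reporting the mean alongside the per-image rows makes it easier to compare the tables that follow, each of which lists nine test images.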

References

- Keys, R. Cubic convolution interpolation for digital image processing. IEEE Transactions on Acoustics, Speech, and Signal Processing 1981, 29, 1153–1160. [Google Scholar] [CrossRef]

- Duchon, C.E. Lanczos filtering in one and two dimensions. Journal of Applied Meteorology 1979, 18, 1016–1022. [Google Scholar] [CrossRef]

- Dai, S.; Han, M.; Xu, W.; Wu, Y.; Gong, Y.; Katsaggelos, A.K. Softcuts: a soft edge smoothness prior for color image super-resolution. IEEE Transactions on Image Processing 2009, 18, 969–981. [Google Scholar] [PubMed]

- Sun, J.; Xu, Z.; Shum, H.Y. Image super-resolution using gradient profile prior. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. IEEE, 2008, pp. 1–8.

- Yan, Q.; Xu, Y.; Yang, X.; Nguyen, T.Q. Single image super-resolution based on gradient profile sharpness. IEEE Transactions on Image Processing 2015, 24, 3187–3202. [Google Scholar] [PubMed]

- Marquina, A.; Osher, S.J. Image super-resolution by TV-regularization and Bregman iteration. Journal of Scientific Computing 2008, 37, 367–382. [Google Scholar] [CrossRef]

- A. Krizhevsky, I.S.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Communications of the ACM 2017, 60, 84–90. [Google Scholar] [CrossRef]

- Hinton, G.E.; Zimel, R.S. Autoencoders, Minimum Description Length and Helmholtz Free Energy. Advances in Neural Information Processing Systems 6 (NIPS 1993). ACM, 1993, pp. 600–605.

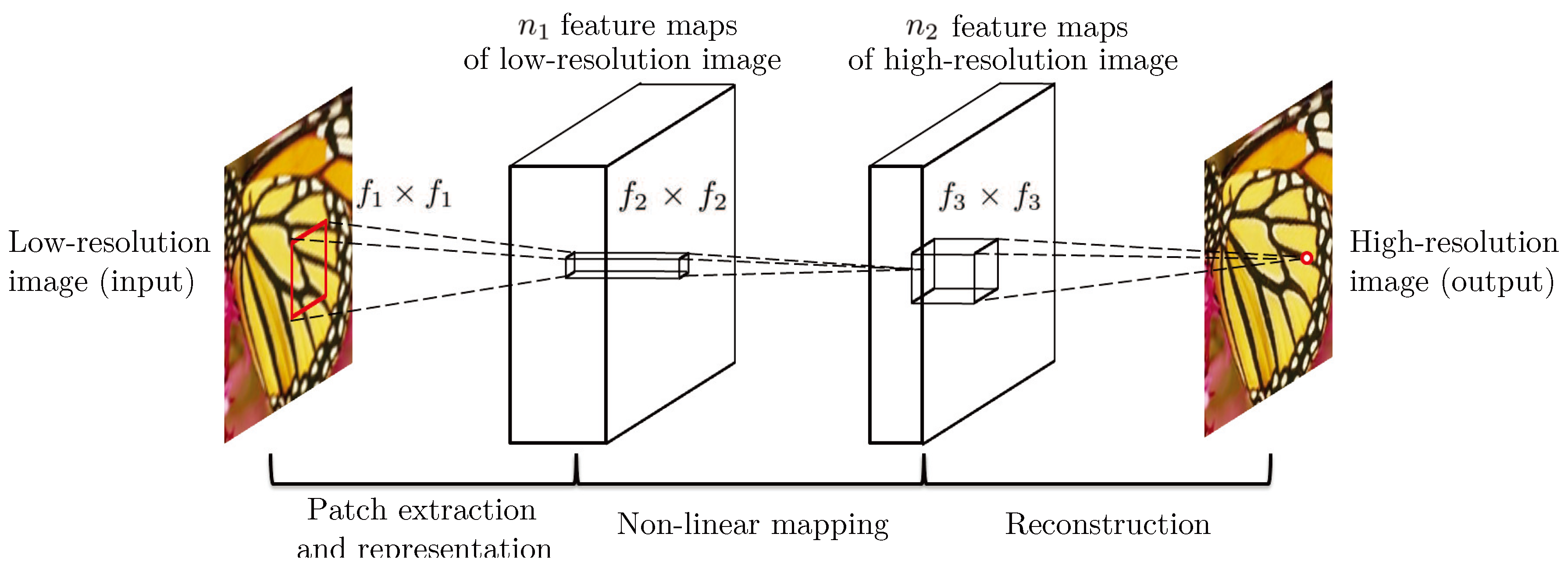

- Dong, C.; Loy, C.C.; He, K.; Tang, X. Image super-resolution using deep convolutional networks. IEEE transactions on pattern analysis and machine intelligence 2015, 38, 295–307. [Google Scholar] [CrossRef] [PubMed]

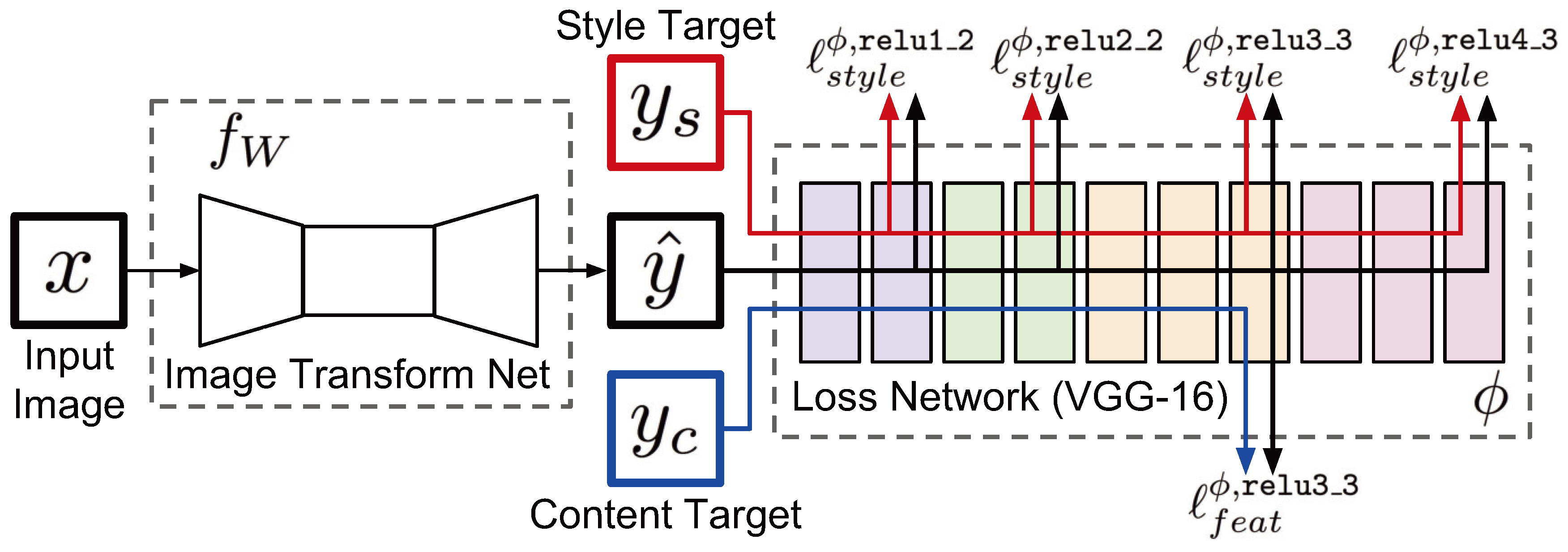

- Johnson, J.; Alahi, A.; Fei-Fei, L. Perceptual losses for real-time style transfer and super-resolution. European Conference on Computer Vision. Springer, 2016, pp. 694–711.

- Wang, Z.; Bovik, A.C. Mean squared error: love it or leave it? a new look at signal fidelity measures. IEEE Signal Processing Magazine 2009, 26, 98–117. [Google Scholar] [CrossRef]

- Kundu, D.; Evans, B.L. Full-reference visual quality assessment for synthetic images: A subjective study. Proc. IEEE Int. Conf. on Image Processing. IEEE, 2015, pp. 3046–3050.

- Kim, J.; Lee, J.K.; Lee, K.M. Accurate Image Super-Resolution Using Very Deep Convolutional Networks. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. IEEE, 2016, pp. 1646–1654.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2016, pp. 770–778.

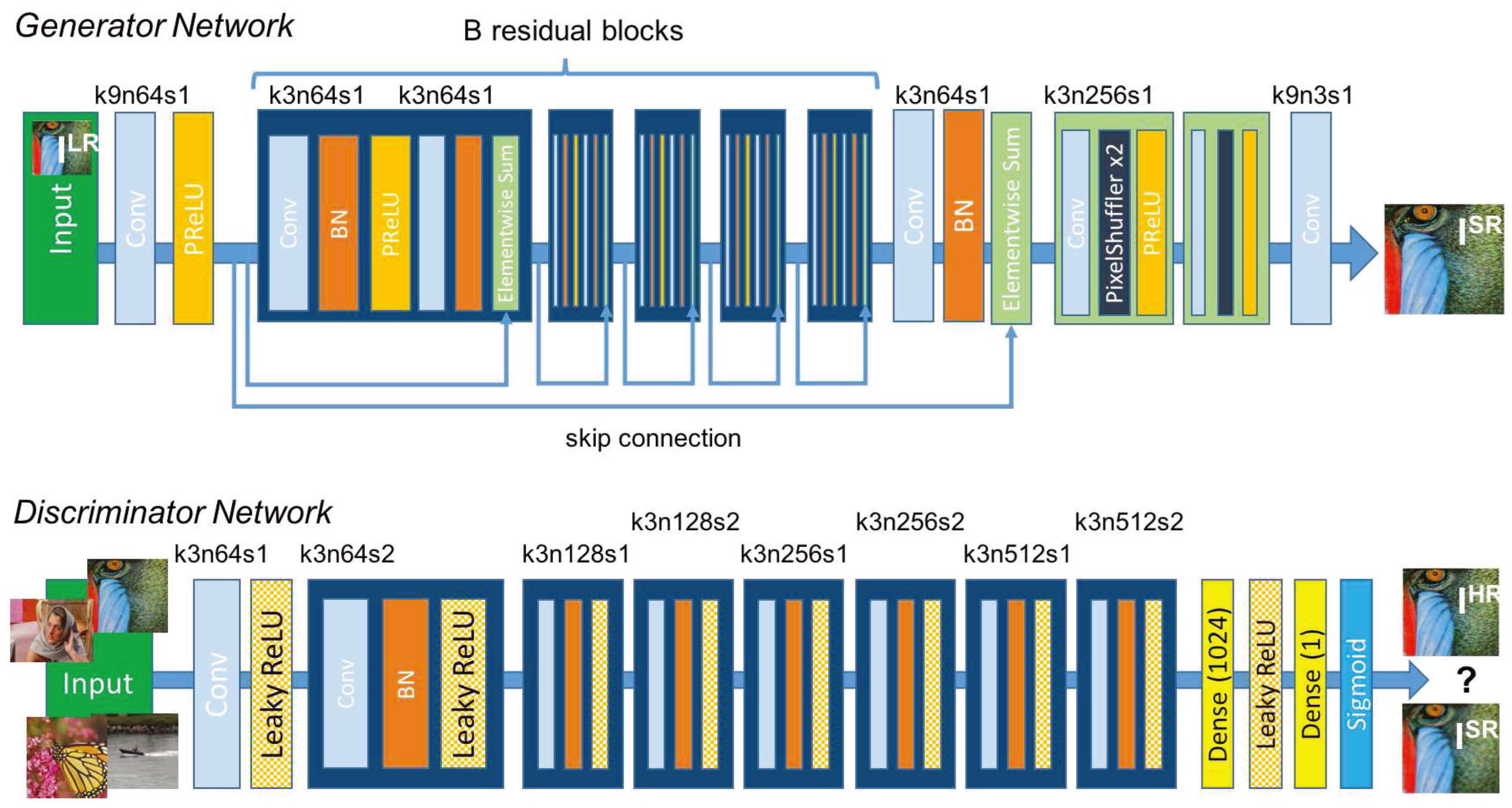

- Ledig, C.; Theis, L.; Huszár, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z.; others. Photo-realistic single image super-resolution using a generative adversarial network. IEEE Transactions on Pattern Analysis and Machine Intelligence 2017, 39, 777–791.

- Lim, B.; Son, S.; Kim, H.; Nah, S.; Lee, K.M. Enhanced deep residual networks for single image super-resolution. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops. IEEE, 2017, pp. 136–144.

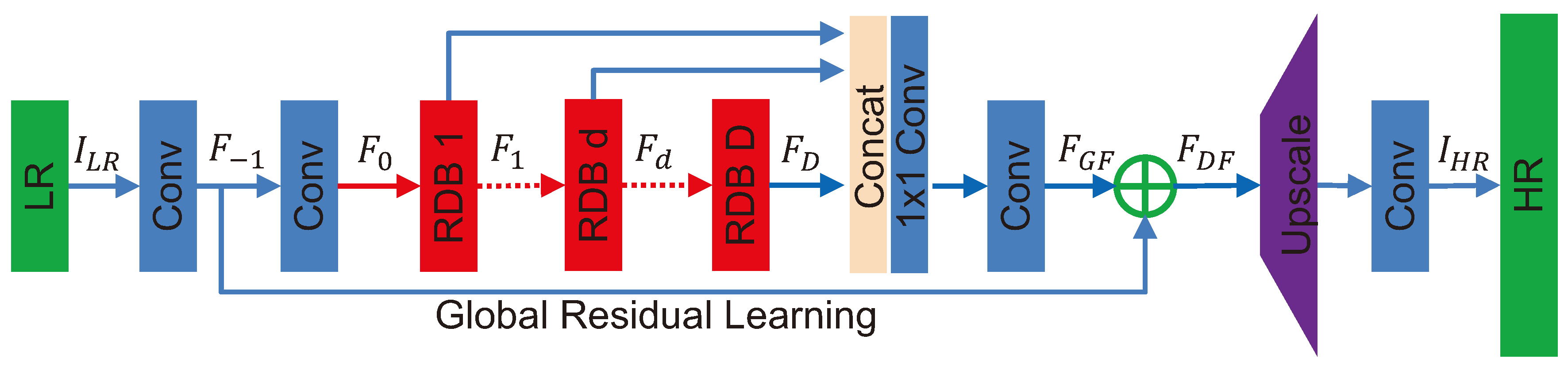

- Zhang, Y.; Li, K.; Li, K.; Wang, L.; Zhong, B.; Fu, Y. Residual dense network for image super-resolution. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, 2018, pp. 39–48.

- Zhang, Y.; Tian, Y.; Kong, Y.; Zhong, B.; Fu, Y. Image super-resolution using very deep residual channel attention networks. Proceedings of the European Conference on Computer Vision, 2018, pp. 286–301.

- Ledig, C.; Theis, L.; Huszár, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z.; Shi, W. Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017, pp. 105–114.

- Yang, W.; Zhang, X.; Tian, Y.; Wang, W.; Xue, J.H.; Liao, Q. Deep Learning for Single Image Super-Resolution: A Brief Review. IEEE Signal Processing Magazine 2019, 36, 110–117. [Google Scholar] [CrossRef]

| PSNR | MSE | SSIM |
|---|---|---|
| 73.02 | 0.01 | 0.90 |
| 75.69 | 0.01 | 0.88 |
| 75.63 | 0.01 | 0.90 |
| 75.85 | 0.01 | 0.92 |
| 73.51 | 0.01 | 0.83 |
| 82.34 | 0.001 | 0.96 |
| 79.64 | 0.002 | 0.93 |
| 77.38 | 0.003 | 0.86 |
| 74.59 | 0.01 | 0.90 |

| PSNR | MSE | SSIM |
|---|---|---|
| 70.51 | 0.02 | 0.81 |
| 72.50 | 0.01 | 0.78 |
| 72.50 | 0.01 | 0.81 |
| 71.95 | 0.01 | 0.84 |
| 69.78 | 0.02 | 0.68 |
| 77.90 | 0.003 | 0.93 |
| 75.25 | 0.01 | 0.88 |
| 72.75 | 0.01 | 0.76 |
| 71.75 | 0.01 | 0.81 |

| PSNR | MSE | SSIM |
|---|---|---|
| 73.98 | 0.01 | 0.92 |
| 75.34 | 0.01 | 0.89 |
| 76.07 | 0.004 | 0.92 |
| 75.41 | 0.01 | 0.94 |
| 73.16 | 0.01 | 0.86 |
| 79.86 | 0.002 | 0.97 |
| 77.54 | 0.003 | 0.94 |
| 75.36 | 0.01 | 0.83 |
| 75.09 | 0.01 | 0.92 |

| PSNR | MSE | SSIM |
|---|---|---|
| 74.47 | 0.007 | 0.93 |
| 77.24 | 0.004 | 0.92 |
| 77.35 | 0.004 | 0.94 |
| 78.09 | 0.003 | 0.95 |
| 75.02 | 0.006 | 0.89 |
| 85.10 | 0.001 | 0.98 |
| 81.87 | 0.001 | 0.95 |
| 79.05 | 0.002 | 0.90 |
| 76.05 | 0.005 | 0.93 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).