4.1. Experimental Setup

The proposed method was evaluated on four benchmark facial expression databases: three collected in laboratory environments, namely Cohn-Kanade Plus (CK+) [27], Oulu-CASIA [28], and MMI [29], and one collected in the wild, FER2013 [30]. A 10-fold cross-validation strategy was employed for the small and imbalanced databases (CK+, MMI, and Oulu-CASIA): in each fold, 90% of a database served as the training set and the remaining 10% as the testing set. Because FER2013 is a large-scale database, training and evaluation used its predefined splits. Sample images from these databases are shown in Fig. 6, and the number of images per emotion in each database is given in Table 1.
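The 10-fold protocol can be sketched as follows, under the assumption that folds are formed at the subject level so that it matches the subject-independent scenario used in these experiments. The function name and the `subject_ids` input are hypothetical, and the fold sizes are only approximately 90%/10% when subjects contribute different numbers of images.

```python
import random
from collections import defaultdict


def subject_independent_folds(subject_ids, n_folds=10, seed=0):
    """Yield (train_idx, test_idx) pairs in which no subject is on both sides.

    subject_ids[i] is the (hypothetical) subject label of sample i.
    """
    # Group sample indices by subject.
    by_subject = defaultdict(list)
    for idx, subject in enumerate(subject_ids):
        by_subject[subject].append(idx)

    # Shuffle the subjects once, then deal them round-robin into the folds.
    subjects = sorted(by_subject)
    random.Random(seed).shuffle(subjects)
    folds = [subjects[k::n_folds] for k in range(n_folds)]

    for held_out in folds:
        held_out_set = set(held_out)
        test_idx = [i for s in held_out for i in by_subject[s]]
        train_idx = [i for s in subjects if s not in held_out_set
                     for i in by_subject[s]]
        yield train_idx, test_idx
```

Each sample appears in exactly one test fold, and the reported accuracy would be the mean over the ten folds.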

To minimize variations in face scale and in-plane rotation, faces were detected and aligned in the original images using Haar cascade detection from the OpenCV library [42]. The aligned facial images were resized to 64×64 pixels, and intensity equalization was applied to enhance their contrast. Data augmentation was used to compensate for the limited number of training images in the FER problem: each facial image was flipped, and both the image and its flipped copy were rotated by -15°, -10°, -5°, 5°, 10°, and 15°. The training sets were thus enlarged 14-fold, comprising the original, the flipped, six rotated, and six rotated-and-flipped images. The rotated facial images are shown in Fig. 7.

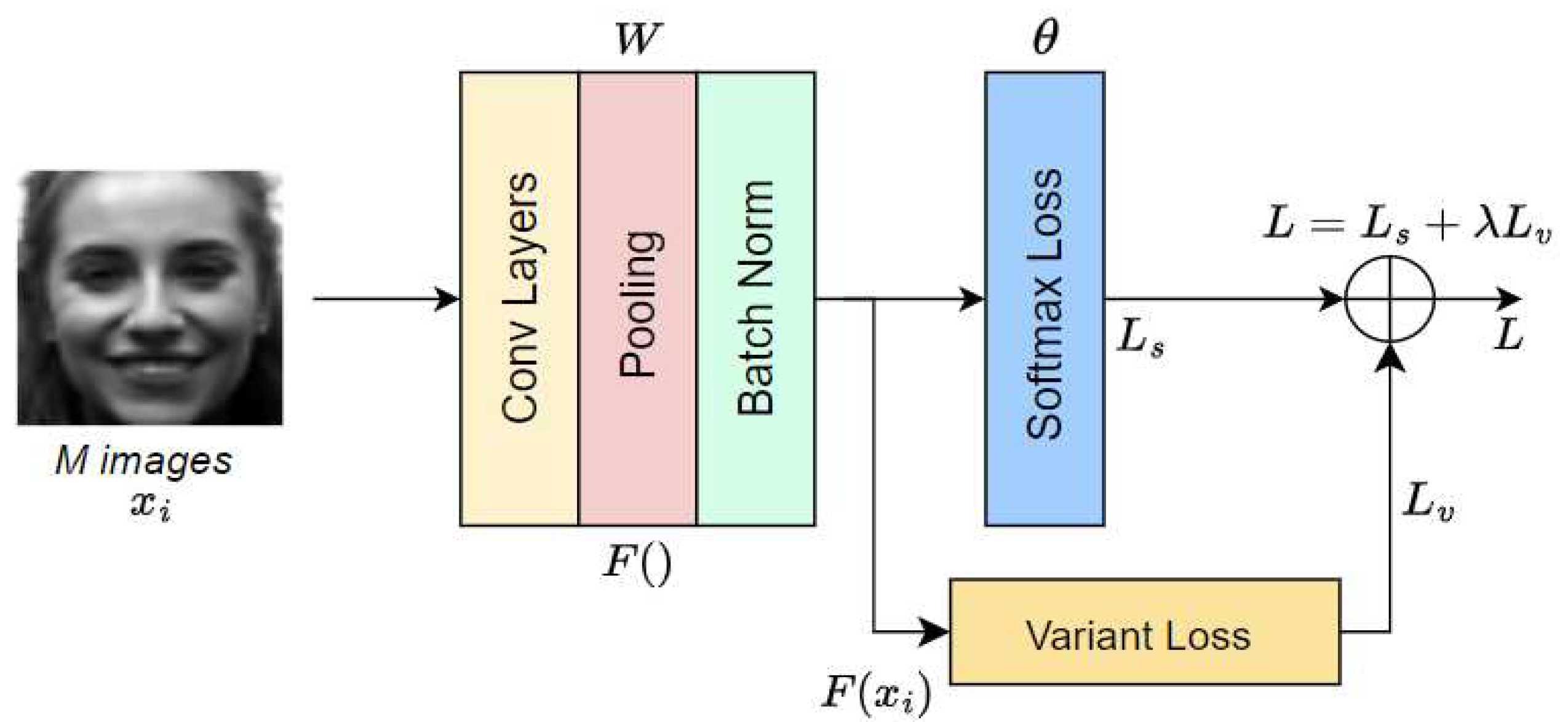
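The 14-fold figure follows from two flip states times seven rotation states (unrotated plus six angles). A minimal sketch that enumerates the variants is given below; the function name and the `(flipped, angle)` representation are illustrative assumptions, and the actual image transforms are left out.

```python
ANGLES = (-15, -10, -5, 5, 10, 15)  # rotation angles in degrees, from the text


def augmentation_plan(angles=ANGLES):
    """Return (flipped, angle) pairs describing every variant of one image."""
    plan = []
    for flipped in (False, True):
        plan.append((flipped, 0))                   # unrotated original or flipped copy
        plan.extend((flipped, a) for a in angles)   # its six rotated copies
    return plan


plan = augmentation_plan()
print(len(plan))  # 14 variants per training image
```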

The proposed loss function was compared with the softmax, center [26], range [31], and marginal [32] losses using the same CNN architectures to demonstrate its effectiveness. The experiments were conducted in a subject-independent scenario. The CNNs were trained with a batch size of 64 using standard SGD to optimize the loss functions. The hyper-parameter

was used to balance the softmax and variant losses.

and

were utilized to balance among these losses in the variant loss, and

controlled the learning rate of the class center

. All of these factors affect the performance of our model. In this experiment, the values

=0.001,

=0.4,

=0.6,

=

=10e-3 were empirically selected for the proposed loss. For the center, marginal, and range losses,

was set to 0.001. The proposed method was implemented in the PyTorch framework on a machine with an Intel® Xeon® E5-2620 v2 CPU (2.10 GHz × 12), 48 GB of RAM, and an NVIDIA RTX 3090 GPU.

4.2. Experimental Results

1) Results on Cohn-Kanade Plus (CK+) database: CK+ is a representative laboratory-controlled database for FER. It comprises 593 image sequences collected from 123 participants. Of these, 327 sequences from 118 subjects are labeled with one of seven emotions: anger, contempt, disgust, fear, happiness, sadness, and surprise. Each image sequence starts with a neutral face and ends with the peak emotion. To obtain more data, the last three frames of each labeled sequence were extracted and assigned the sequence label, yielding a database of 981 experimental images. The images were primarily grayscale and digitized to a resolution of 640×490 or 640×480 pixels.
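The frame selection described here amounts to slicing the tail of each sequence, and the image count follows as 327 × 3 = 981. The sketch below is illustrative only: the function names are hypothetical, and frames are assumed to be stored in temporal order.

```python
def peak_frames(sequence, n_peak=3):
    """Return the last n_peak frames of a neutral-to-peak sequence."""
    if len(sequence) < n_peak:
        raise ValueError("sequence is shorter than the number of peak frames")
    return sequence[-n_peak:]


def build_dataset(labelled_sequences, n_peak=3):
    """Pair every selected peak frame with the label of its sequence."""
    return [(frame, label)
            for frames, label in labelled_sequences
            for frame in peak_frames(frames, n_peak)]
```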

The average recognition accuracy for each combination of loss function and CNN architecture is listed in Table 2. The proposed loss function was more accurate than the others for all six CNN architectures. For a given loss function, ResNet achieved the highest accuracy, followed by MobileNetV3, ResNeSt, InceptionNet, AlexNet, and DenseNet. Overall, the proposed loss achieved an average recognition accuracy of 94.89% for the seven expressions using ResNet.

Table 3 presents the confusion matrix of ResNet optimized with the proposed loss function. The contempt, disgust, happiness, and surprise labels were recognized with high accuracy: happiness was the highest at 99.5%, followed by surprise, disgust, and contempt at 98.4%, 97.7%, and 93.4%, respectively. The accuracies for anger, fear, and sadness were lower because these expressions are visually similar.
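The per-emotion percentages in a confusion matrix are the diagonal counts divided by the row totals. The sketch below assumes that rows are true labels and columns are predictions, and it uses a made-up three-class matrix rather than the values in Table 3.

```python
def per_class_accuracy(confusion):
    """Diagonal count over row total, for each true class."""
    return [row[i] / sum(row) if sum(row) else 0.0
            for i, row in enumerate(confusion)]


def overall_accuracy(confusion):
    """Correctly classified samples over all samples."""
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    total = sum(sum(row) for row in confusion)
    return correct / total


# Hypothetical counts for three classes (not taken from the paper).
toy = [[8, 2, 0],
       [1, 9, 0],
       [0, 0, 10]]
print(per_class_accuracy(toy))  # [0.8, 0.9, 1.0]
print(overall_accuracy(toy))    # 0.9
```

On imbalanced databases the mean of the per-class accuracies and the overall accuracy can differ, so it matters which of the two an "average recognition accuracy" refers to.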

2) Results on Oulu-CASIA database: The Oulu-CASIA database includes 2,880 image sequences obtained from 80 participants using a visible light (VIS) imaging system under normal illumination conditions. Each image sequence is labeled with one of six emotions: anger, disgust, fear, happiness, sadness, and surprise. As in the CK+ database, each image sequence starts with a neutral face and ends with the peak emotion. For each image sequence, the last three frames were taken as the peak frames of the labeled expression. The sequences were recorded at 25 fps with an image resolution of 320×240 pixels.

The average recognition accuracy of the methods is listed in Table 4. The performance of the proposed loss function was comparable to that of the previous ones. Specifically, the proposed loss achieved an average recognition accuracy of 77.61% for the six expressions using the ResNet architecture.

Table 5 presents the confusion matrix of ResNet trained with the proposed loss function. Happiness and surprise were recognized most accurately, at 92.1% and 84.0%, respectively. The accuracies for anger, disgust, fear, and sadness were lower, at 66.2%, 70.5%, 76.3%, and 76.5%, respectively.

3) Results on MMI database: The laboratory-controlled MMI database comprises 312 image sequences collected from 30 participants, of which 213 are labeled with one of six facial expressions: anger, disgust, fear, happiness, sadness, and surprise. Moreover, 208 sequences from 30 participants were captured in frontal view. The spatial resolution was 720×576 pixels, and the videos were recorded at 24 fps. Unlike the CK+ and Oulu-CASIA databases, the MMI image sequences follow an onset-apex-offset pattern: each sequence starts with a neutral expression, peaks near the middle, and returns to a neutral expression. The location of the peak expression frame is not provided. Furthermore, the MMI database presents challenging conditions, particularly large interpersonal variations. The three middle frames of each image sequence were therefore chosen as the peak expression frames for the subject-independent cross-validation.
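Because the apex position is not annotated, the peak frames are approximated by the centre of each sequence. A minimal sketch of that selection follows; the function name is hypothetical, and rounding toward the start for even-length sequences is an assumption, since the text does not specify it.

```python
def middle_frames(sequence, n_peak=3):
    """Return the n_peak frames centred on the middle of the sequence."""
    if len(sequence) < n_peak:
        raise ValueError("sequence is shorter than the number of peak frames")
    start = (len(sequence) - n_peak) // 2  # rounds toward the start if uneven
    return sequence[start:start + n_peak]


print(middle_frames(list(range(9))))  # [3, 4, 5]
```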

Table 6 lists the average recognition accuracy of the methods. The proposed loss function outperformed all the other loss functions. Specifically, it achieved an average recognition accuracy of 67.43% for the six expressions using the MobileNetV3 architecture.

Table 7 presents the confusion matrix of MobileNetV3 optimized with the proposed loss function. The accuracy was below 80.0% for all emotions except happiness and surprise, which reached 89.7% and 81.3%, respectively. This may be due to the number of images in each class. For example, fear had the fewest labeled samples and the lowest accuracy, at 31.0%. Anger, disgust, and sadness showed a similar tendency.

4) Results on FER2013 database: FER2013 is a large-scale, unconstrained database collected automatically using the Google image search API. It includes 35,887 images with a relatively low resolution of 48×48 pixels, each labeled with one of seven emotions: anger, disgust, fear, happiness, sadness, surprise, and neutral. The training set comprises 28,709 examples, the public test set comprises 3,589 examples, and the remaining 3,589 images form the private test set.
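The predefined splits can be recovered when loading the data. The sketch below assumes the commonly distributed CSV layout of FER2013, with columns `emotion`, `pixels` (2,304 space-separated grayscale values), and `Usage` (`Training`, `PublicTest`, or `PrivateTest`); that layout and the function name are assumptions rather than details given in this paper.

```python
import csv
import io

SIDE = 48  # FER2013 images are 48x48 pixels


def parse_fer2013(csv_text):
    """Group (image, label) pairs by the value of the Usage column."""
    splits = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        values = [int(v) for v in row["pixels"].split()]
        if len(values) != SIDE * SIDE:
            raise ValueError("expected 48*48 pixel values per row")
        # Reshape the flat pixel list into 48 rows of 48 values.
        image = [values[r * SIDE:(r + 1) * SIDE] for r in range(SIDE)]
        splits.setdefault(row["Usage"], []).append((image, int(row["emotion"])))
    return splits
```

With the full file, the three groups should contain 28,709, 3,589, and 3,589 examples, which sum to 35,887.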

Table 8 lists the average recognition accuracy of all the methods. The proposed loss function was more accurate than the others for all CNN architectures except AlexNet. It achieved a peak average recognition accuracy of 61.05% for the seven expressions using the ResNeSt architecture.

The confusion matrix of ResNeSt trained with the proposed loss function is presented in Table 9. Happiness had the highest accuracy at 80.7%, followed by surprise at 77.4%. The other emotions obtained relatively low accuracies.